1. Introduction

The changing climate has resulted in an increase in forest damage globally, with extreme weather events, wildfires, and insect disturbances being the main contributing factors [1, 2, 3]. One of the most destructive forest pests is the European spruce bark beetle (Ips typographus L.), which has benefitted from the warming climate: because bark beetle development is temperature dependent, warmer conditions allow additional generations to develop during a single growing season [4, 5]. This bark beetle has already caused vast tree mortality in central Europe, southern Sweden, and Russia, and large-scale tree mortality events are increasingly witnessed at higher latitudes [3]. Extensive tree mortality causes great economic, ecological, and social losses [6] that can be mitigated by means of forest management and the monitoring of forest health [7].

To effectively monitor and manage forest pests, the accurate and timely detection of damaged trees is essential. Traditional methods of detecting bark beetle damage have involved in situ visual inspections, which are time consuming, inefficient, and challenging for large forest areas [

8,

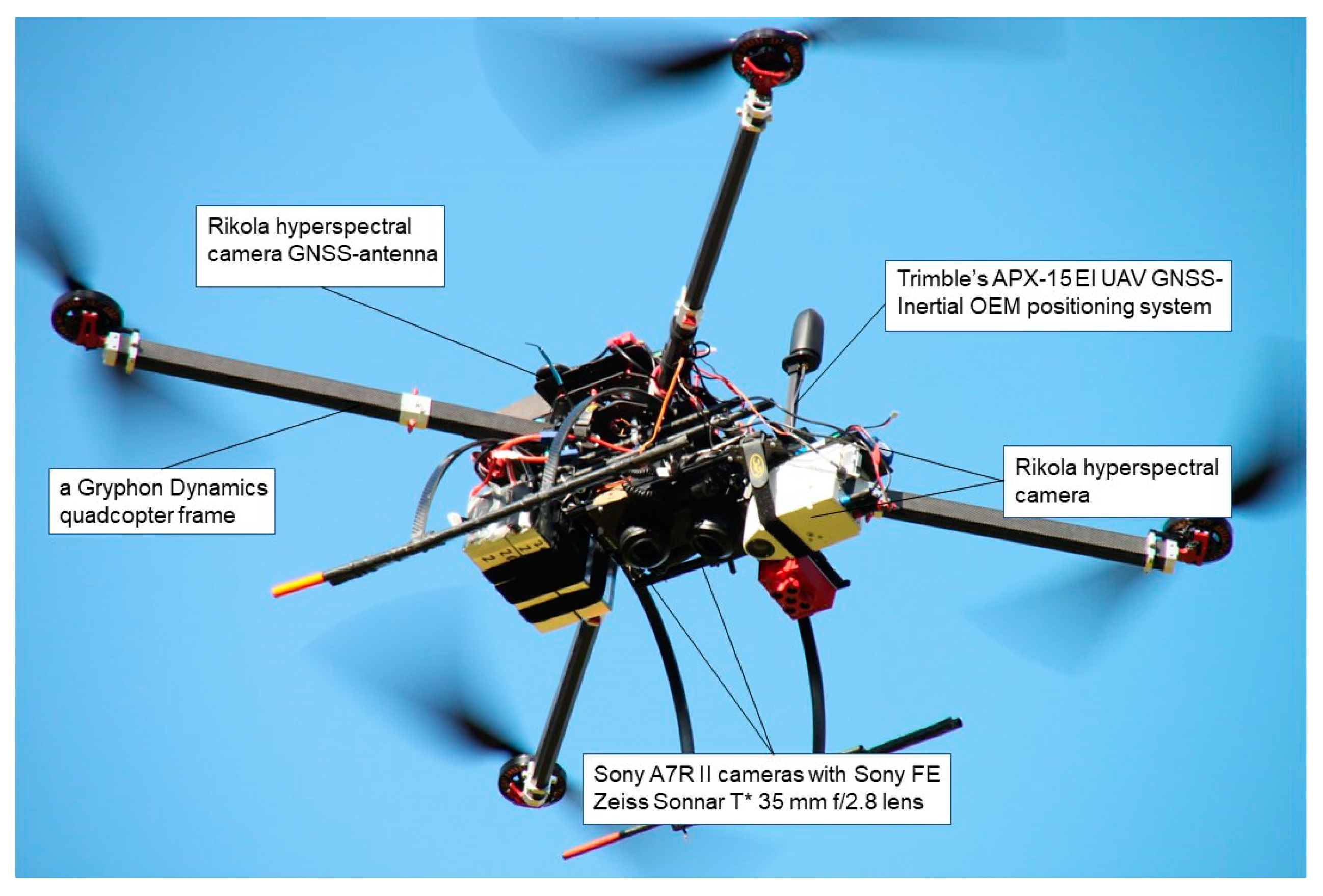

9]. Remote sensing technologies have emerged as a promising alternative, offering the potential for the timely and accurate identification of affected trees over large areas. Unoccupied aircraft systems (UASs) can promptly acquire the high-resolution imagery data of forests, making them an attractive tool for monitoring and estimating forest health [

10,

11].

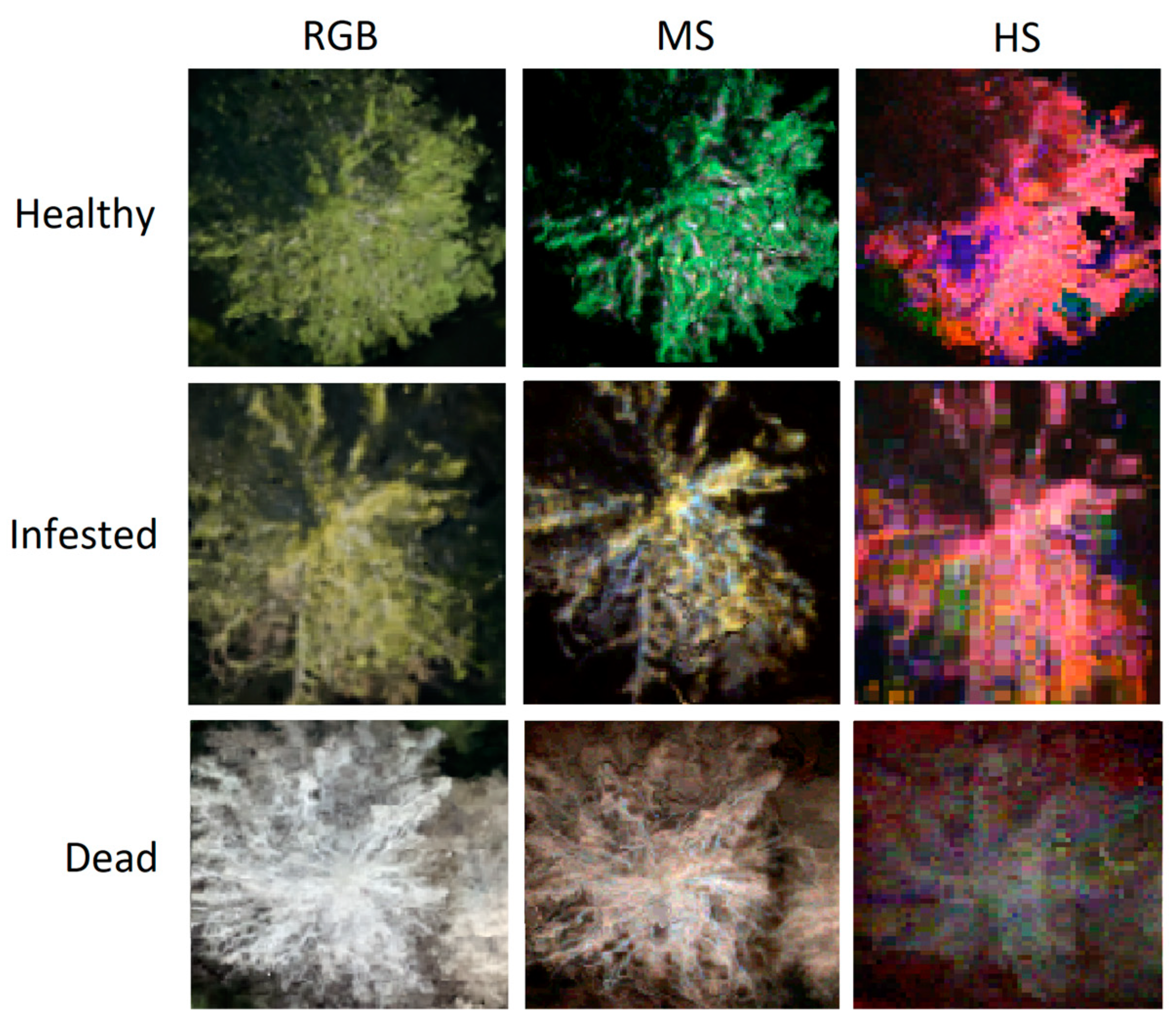

The methods for detecting bark beetle infestations from UAS images are usually based on crown discoloration symptoms that occur when the water and nutrient transport of the tree is disturbed and tree health begins to decline. The color of the crown gradually changes from green to yellow and then to reddish brown, and finally to gray when the tree dies [9]. These color symptoms can be detected from UAS images taken of the tree crowns. A great challenge in the detection of bark beetle infestations is the identification of the early stages of infestation. The first visible signs of bark beetle infestations include entrance holes, thin resinous flows on the trunk of the tree, and boring dust below the entrance hole; however, these symptoms are challenging to detect from above the tree canopies [12]. Other trunk symptoms include bark flaking and shedding. The early stage of infestation, when the first trunk symptoms are already visible but the crown is still green, is referred to as the “green attack” phase [13].

Red–green–blue (RGB) [14, 15] and multispectral (MS) cameras [13, 16, 17, 18, 19, 20] have been the most frequently used technologies in recent UAS studies on forest insect pests and diseases. Hyperspectral (HS) cameras have been used in only a few studies, either from UASs [21, 22] or from manned platforms [9, 21]. HS cameras, which are characterized by a high radiometric resolution and a wide spectral range, are needed to enable a more rapid detection of infested trees [22].

A variety of computational approaches have been employed to detect tree stress from remote sensing images [18]. Thresholding spectral indices derived from different band combinations, such as the Normalized Difference Vegetation Index (NDVI) and Chlorophyll Vegetation Index (CVI), is a commonly used approach [9, 13, 16, 23]. Other research efforts have employed machine learning algorithms, such as Random Forests (RFs) and Deep Neural Networks (DNNs), to classify healthy and stressed trees [14, 15, 19, 20].
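
As an illustration of the index-thresholding approach mentioned above, the following minimal sketch computes NDVI from near-infrared and red reflectances and flags low values. The reflectance values and the threshold of 0.6 are hypothetical placeholders chosen for the example; they are not taken from any of the cited studies.

```python
import numpy as np

def ndvi(nir, red, eps=1e-9):
    """Normalized Difference Vegetation Index: (NIR - Red) / (NIR + Red)."""
    nir = np.asarray(nir, dtype=float)
    red = np.asarray(red, dtype=float)
    # eps guards against division by zero for dark pixels.
    return (nir - red) / (nir + red + eps)

def flag_stressed(nir, red, threshold=0.6):
    """Flag crowns whose NDVI falls below a threshold.

    The default threshold is an assumed value for illustration only.
    """
    return ndvi(nir, red) < threshold

# Hypothetical mean crown reflectances for three trees.
nir = np.array([0.50, 0.40, 0.20])
red = np.array([0.05, 0.08, 0.15])
print(np.round(ndvi(nir, red), 3))
print(flag_stressed(nir, red))
```

In practice, a threshold of this kind would need to be calibrated against reference data for the sensor and season in question.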

A study comparing three different convolutional neural network (CNN) architectures and RFs for bark beetle symptom classifications from MS images showed that the CNNs outperformed RFs; the accuracies were 0.94 and 0.86 for infested and healthy spruces, respectively [19]. Studies using multitemporal MS images collected at early and later stages of bark beetle infestations showed that the classification of the different health stages was more accurate at the end of the summer season [16, 20]. Recently, the state-of-the-art single-stage DNN architecture You Only Look Once (YOLO) [24], applied to RGB images, provided high accuracies in the detection and classification of infested trees. A mean average precision of 0.94 was obtained in a study conducted in Bulgaria [14], whereas the F-scores were 0.90, 0.79, and 0.98 for healthy, infested, and dead trees, respectively, in a study conducted in Finland [15].

Despite the increasing research efforts, there is still a need for more precise and efficient methods to monitor forest health. RGB UAS imagery is not sufficient for the detection of the early infestation stage, as the green attack trees cannot be detected from the visible crown discoloration symptoms [14]. For the early detection of bark beetle infestations, it may be advantageous to use MS and HS images that contain richer spectral information [11]. For example, the wavelength regions affected by leaf water content have been found to be sensitive to changes during the early stages of a bark beetle infestation [25]. MS and HS imaging capture more spectral bands than RGB imaging, and at a higher spectral resolution. These bands include wavelengths that have been shown to offer insights into cellular-level changes affecting plant health, and MS and HS imaging can therefore be a useful tool for bark beetle infestation detection [26, 27]. The limited use of advanced sensor technologies, such as HS cameras and LiDARs, has been considered a shortcoming in the present research, and it is expected that more detailed data would improve the performance of analytics [9, 11, 22]. Furthermore, the scientific literature lacks a thorough investigation of the performance of different DNN architectures in connection with different imaging techniques.

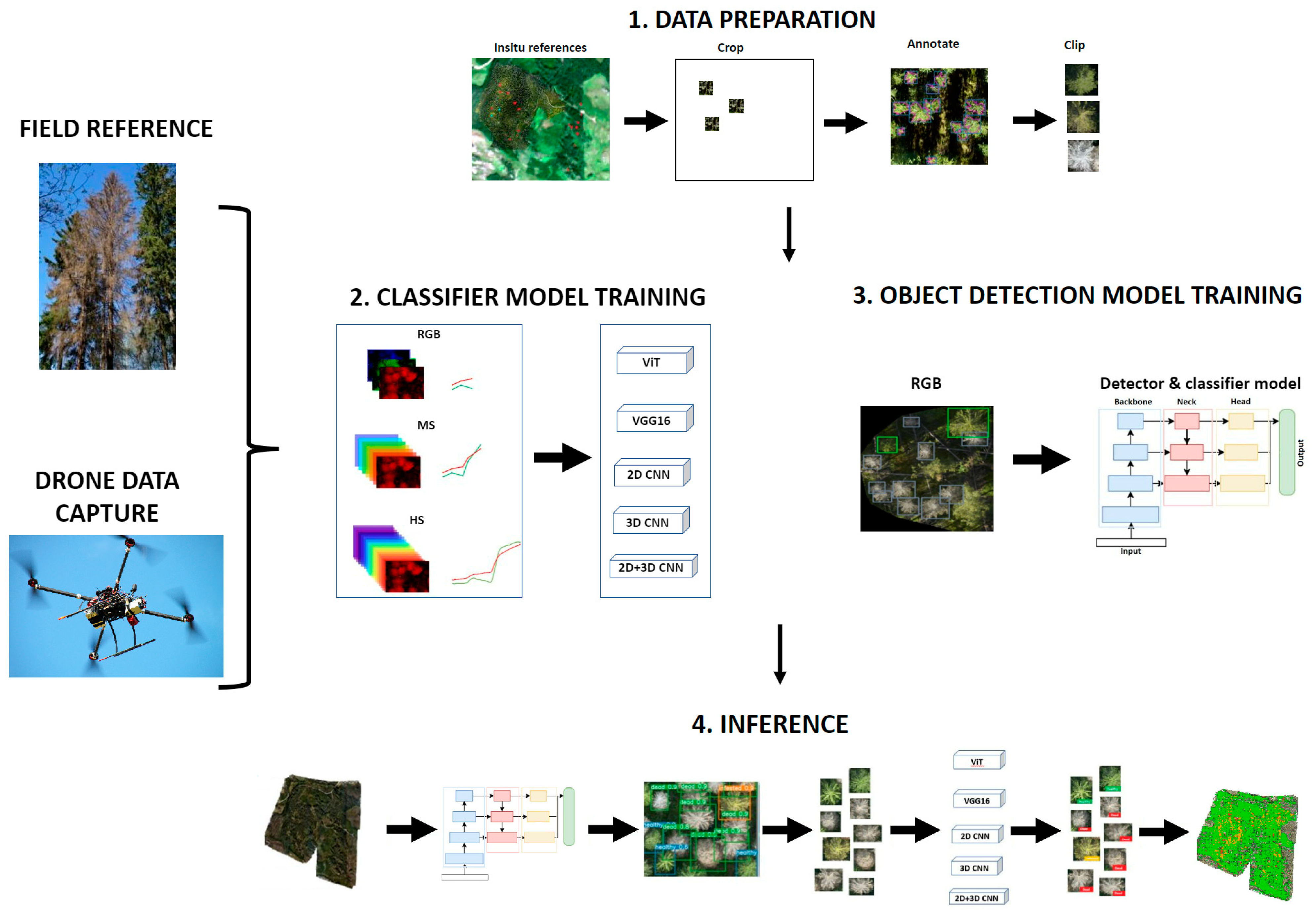

The overall objective of this study is to develop and assess deep learning-based methods for tree health analysis at the individual tree level utilizing high spatial and spectral resolution image data collected by a UAS. The study compares the effectiveness of different DNNs for identifying bark beetle damage on spruce trees using UAS RGB, MS, and HS images. The specific research questions are as follows: (i) Does the use of MS and HS images result in enhanced classification results compared to RGB images when using deep learning techniques? (ii) Which network structures work best for RGB, MS, and HS images? (iii) How does data augmentation impact the performance of the investigated classifiers? (iv) What kind of performance level is achieved by using the single-stage detection and classification network YOLO alone or by integrating YOLO with a separate image classification network?

Our results highlight the potential of DNNs for the accurate and efficient detection of bark beetle damage and provide insights into the strengths and limitations of different network architectures for this task. The study also shows that careful consideration must be given to data availability and model selection. Ultimately, this research has the potential to inform and improve the timely management of bark beetle infestations, contributing to more sustainable and resilient forest ecosystems.

3. Results

3.1. Classification Results

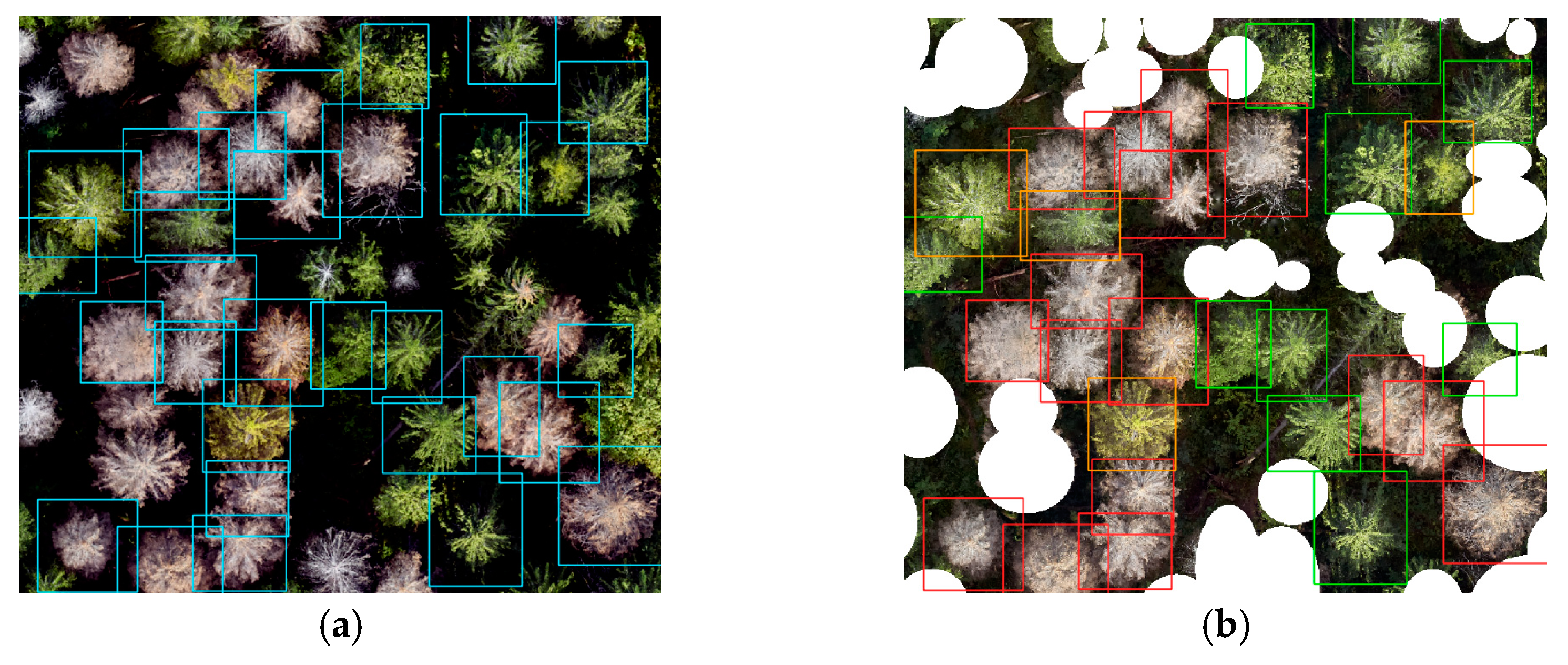

Overall, different datasets and classifiers provided relatively high and even classification accuracies for the healthy and dead spruce classes, while the accuracies were poorer for the infested class (Table 9). The healthy-class F1-scores were 0.76–0.90, with an average of 0.82. Correspondingly, the dead-class F1-scores were 0.85–0.98, with an average of 0.93. The infested-class F1-scores were 0.46–0.74, with an average of 0.60.

When comparing the F1-scores of the infested class using different RGB and MS models, VGG16 and 2D-CNN outperformed the ViT model, despite ViT achieving a slightly higher overall accuracy than VGG16 and 2D-CNN for the RGB images. ViT's good overall accuracy can be attributed to its effective classification of healthy and dead trees. For the infested class, VGG16 provided the best classification results with the RGB data, and 2D-CNN provided the best classification results with the MS data. However, among all the models and datasets (including MS and HS), the ViT model trained on the RGB dataset yielded the best results for the dead class, achieving an F1-score of 0.98.

For the HS dataset, 3D-CNN 3 and 4 produced 5–8% better F1-scores for the infested class than the 3D-CNN 1 and 2 models. All HS 3D-CNN models outperformed the HS VGG16, ViT, and 2D-CNN models. The HS 2D-3D CNN 2 model provided the best results for the infested class, with an F1-score of 0.742. The 2D-3D CNN 1 and 3 models produced the poorest performances for the infested class of all HS models.

The best F1-scores for the infested class were 0.589, 0.722, and 0.742 for the RGB, MS, and HS models, respectively; thus, the HS models provided the best classification performance for the infested trees. The overall accuracies were 0.813, 0.901, and 0.829, respectively, for the corresponding models. The MS models thus provided a better overall accuracy than the HS models. This was due to the better results for the healthy trees in the MS models; the healthy class dominated the accuracy results because it had the largest number of samples in the test data.
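
To make the relationship between per-class F1-scores and overall accuracy concrete, the sketch below computes both from a confusion matrix. The counts are hypothetical and chosen only to show how a large healthy class can keep the overall accuracy high while the infested-class F1-score remains low; they are not the values of this study.

```python
import numpy as np

def per_class_f1(cm):
    """Per-class F1 from a confusion matrix (rows = reference, columns = predicted)."""
    cm = np.asarray(cm, dtype=float)
    tp = np.diag(cm)
    precision = tp / np.maximum(cm.sum(axis=0), 1e-12)
    recall = tp / np.maximum(cm.sum(axis=1), 1e-12)
    return 2 * precision * recall / np.maximum(precision + recall, 1e-12)

def overall_accuracy(cm):
    """Fraction of all samples on the diagonal of the confusion matrix."""
    cm = np.asarray(cm, dtype=float)
    return np.trace(cm) / cm.sum()

# Hypothetical counts; class order: healthy, infested, dead.
cm = [[90, 8, 2],
      [10, 10, 0],
      [0, 0, 10]]

f1 = per_class_f1(cm)
acc = overall_accuracy(cm)
print(np.round(f1, 3), round(float(acc), 3))
```

With these assumed counts, 100 of the 130 test samples are healthy, so the overall accuracy mostly reflects how well the healthy class is classified.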

3.2. Impact of Data Augmentation

An analysis of the impact of different augmentations identified the best-performing data augmentation method, which combined random rotation, padding, and random perspective shift transforms. These transforms showed the greatest potential for enhancing the performance of the models and were therefore retained in the augmentation process.
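
A minimal sketch of such an augmentation step is given below, under simplifying assumptions: rotation is restricted to multiples of 90 degrees, padding uses zeros, and the random perspective shift is omitted because it requires an image-warping routine. The function names and the 32-pixel target size are hypothetical and do not describe the implementation used in this study.

```python
import numpy as np

def pad_to_square(img, size):
    """Zero-pad an (H, W, C) crown image symmetrically to (size, size, C)."""
    h, w = img.shape[:2]
    if h > size or w > size:
        raise ValueError("image is larger than the target size")
    top = (size - h) // 2
    left = (size - w) // 2
    pad = ((top, size - h - top), (left, size - w - left), (0, 0))
    return np.pad(img, pad, mode="constant")

def augment(img, size, rng):
    """Pad the crop, then rotate all bands together by a random multiple of 90 degrees."""
    padded = pad_to_square(img, size)
    k = int(rng.integers(0, 4))
    return np.rot90(padded, k=k, axes=(0, 1))

rng = np.random.default_rng(0)
crown = np.ones((20, 30, 5))  # hypothetical 5-band MS crown crop
out = augment(crown, 32, rng)
print(out.shape)
```

Because the spatial transform is applied to every band simultaneously, the same routine can be used for RGB, MS, and HS crops.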

The best classifier models were all trained on the augmented dataset. Data augmentation improved the results in most cases (Table 10). The greatest improvements of 2.0–4.0% were obtained for the infested class with the RGB and HS models. In the case of MS models, the data augmentation reduced the F1-scores by approximately 4.0% for the healthy and infested classes, while the dead-class F1-score stayed practically the same.

The confusion matrices of the best-performing models showed that the greatest misclassification appeared between the healthy and infested trees (Table 11). The HS model assigned 85% of the infested spruce trees correctly to the infested class, whereas 35% and 50% of them were misclassified to the healthy class by the MS and RGB models, respectively. Practically all dead spruce trees were classified to the dead class; however, 15–20% of the healthy spruce trees were classified as infested or dead.
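
Percentages of this kind are obtained by normalizing each row of a confusion matrix by the number of reference trees in that class. The following short sketch illustrates the calculation with hypothetical counts, which are not those of Table 11.

```python
import numpy as np

def row_percentages(cm):
    """Express each row (reference class) of a confusion matrix as percentages."""
    cm = np.asarray(cm, dtype=float)
    totals = cm.sum(axis=1, keepdims=True)
    # Avoid division by zero for classes without reference samples.
    return 100.0 * cm / np.where(totals == 0, 1.0, totals)

# Hypothetical counts; rows and columns ordered healthy, infested, dead.
cm = [[80, 15, 5],
      [3, 17, 0],
      [0, 0, 10]]

pct = row_percentages(cm)
print(np.round(pct, 1))
```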

3.3. Integrating YOLO and Classifiers

The YOLO model provided F1-scores of 0.706, 0.580, and 0.815 for the healthy, infested, and dead classes, respectively (Table 12). When the trees detected by YOLO were classified further using the VGG, 2D-CNN, and 2D-3D-CNN 2 classifiers, the classification accuracies improved markedly. For example, the infested class results improved by 5%, 20%, and 26% with the RGB, MS, and HS models, respectively. The 2D-CNN model used here was trained without augmentation, as data augmentation led to a decrease in the model’s performance. The VGG and 2D-3D-CNN 2 models were trained with augmentations. The YOLO tree-detection results differed slightly from the visually identified reference trees, and therefore the classification results differed from the initial classification results (Table 9 and Table 10).
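
The two-stage idea described above can be sketched as follows: a detector proposes tree boxes, each box is cropped from the image, and a separate classifier assigns the health class. The `detector` and `classifier` callables and their interfaces are hypothetical stand-ins for YOLO and the classification networks. The sketch also assumes that the boxes and the classified image share the same pixel grid, which for MS and HS data would require co-registered imagery.

```python
import numpy as np

def detect_then_classify(image, detector, classifier):
    """Detect trees, crop each box, and label the crop with a separate classifier.

    detector(image) -> iterable of (x0, y0, x1, y1) pixel boxes (assumed interface)
    classifier(crop) -> class label (assumed interface)
    """
    h, w = image.shape[:2]
    results = []
    for x0, y0, x1, y1 in detector(image):
        # Clip the box to the image and skip empty boxes.
        x0, x1 = max(0, int(x0)), min(w, int(x1))
        y0, y1 = max(0, int(y0)), min(h, int(y1))
        if x1 <= x0 or y1 <= y0:
            continue
        crop = image[y0:y1, x0:x1]
        results.append(((x0, y0, x1, y1), classifier(crop)))
    return results

# Toy stand-ins used only to exercise the pipeline.
def toy_detector(image):
    return [(0, 0, 4, 4), (4, 4, 12, 12), (9, 9, 9, 20)]

def toy_classifier(crop):
    return "dead" if crop.mean() < 0.5 else "healthy"

image = np.zeros((10, 10, 3))
image[:4, :4] = 1.0
results = detect_then_classify(image, toy_detector, toy_classifier)
print(results)
```

Keeping detection and classification separate in this way allows the classifier to be exchanged per image type without retraining the detector.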

4. Discussion

4.1. Assessment of Classification Models for Different Datasets

An extensive evaluation of different neural network models was conducted on RGB, MS, and HS images captured by UASs. The findings reveal that the VGG16 model outperforms other networks when applied to RGB images. For the MS images, the three-layer 2D-CNN model showed the most promising performance. Meanwhile, the most effective model for HS images was the 2D-3D-CNN 2 model. The study also found that data augmentation improved the classification results in most cases. The findings of the study further indicate that MS and HS images exhibit superior performances compared to the RGB images for the task of tree health classification.

The HS 2D-3D-CNN 2 model stood out as the top performer, regarding the infested class, with an overall accuracy of 0.863 and F1-scores of 0.880, 0.759, and 0.928 for healthy, infested, and dead trees, respectively. The next-best results for the infested class were obtained with the 2D-CNN model trained on MS images, with an overall accuracy of 0.901 and F1-scores of 0.911, 0.722, and 0.895 for healthy, infested, and dead trees, respectively. The RGB images provided the poorest results for the infested class; the VGG16 model achieved an overall accuracy of 0.848 and F1-scores of 0.866, 0.591, and 0.905 for healthy, infested, and dead trees, respectively. The superiority of MS and HS images was further supported by the fact that the RGB dataset consisted of a larger number of data samples compared to the MS and HS datasets, providing it with an advantage in the learning process. Despite the limitations imposed by smaller sample sizes, the models trained on MS and HS images outperformed the RGB-trained model.

The 2D-3D-CNN 2 model performed well on HS images due to its use of 3D convolutions, which captured spatial, spectral, and joint spatial–spectral features efficiently. Using only 2D convolutions led to interband information loss as they could not fully exploit the structural characteristics of high-dimensional data, such as HS images. Combining 2D and 3D convolutions reduced the trainable parameters and computational complexity compared to using 3D convolutions alone, explaining the network’s superior performance over the 3D-CNN networks. The suboptimal performance of the other 2D-3D-CNN models can be attributed to specific factors. The 2D-3D-CNN 1 model may lack the depth to capture intricate data patterns effectively. The 2D-3D-CNN 3 model’s performance might be negatively impacted by batch normalization, which, given the small dataset size, could overly regularize the model and hinder generalization. In contrast, 2D convolution-based networks work well on MS and RGB images due to their lower dimensionality (5 channels for MS). The simple 2D-CNN model outperformed VGG16 for MS images, possibly because of the scarcity of the MS data, causing VGG16 to overfit and reduce the generalization capacity.

The findings from the model comparison align with the existing literature. Notably, the success of the 2D-3D-CNN 2 model on HS images corroborates the previous research conducted by various authors [34, 35, 36]. These authors demonstrated the effectiveness of 3D-CNNs on HS images; however, they acknowledged the high complexity of such models, which require a large number of trainable parameters and extensive data for training purposes. In contrast, 2D-3D convolutional networks were found to be better suited for cases with limited data, as they significantly reduce the number of parameters and data requirements. The most complex 3D-CNNs in this study demanded several hundred million trainable parameters, while 2D-3D-CNNs required only around 10–20 million trainable parameters.
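
The difference in parameter counts can be illustrated with a back-of-the-envelope calculation. The sketch below uses hypothetical layer sizes (40 bands, 32 × 32 pixel crops, one convolution stage, and a 256-unit dense layer) that are assumptions for illustration and not the architectures of this study. It shows that the parameter count is dominated by the first dense layer, and that folding the spectral dimension into channels with a 2D convolution shrinks the flattened feature vector.

```python
def conv3d_params(kd, kh, kw, c_in, c_out):
    """Weights plus biases of a 3D convolution layer."""
    return kd * kh * kw * c_in * c_out + c_out

def conv2d_params(kh, kw, c_in, c_out):
    """Weights plus biases of a 2D convolution layer."""
    return kh * kw * c_in * c_out + c_out

def dense_params(n_in, n_out):
    """Weights plus biases of a fully connected layer."""
    return n_in * n_out + n_out

# Hypothetical input and layer sizes ("same" padding, no pooling).
bands, size, hidden = 40, 32, 256

# 3D-only: 32 feature cubes keep the full spectral depth before flattening.
p3d = conv3d_params(3, 3, 3, 1, 32) + dense_params(bands * size * size * 32, hidden)

# 2D-3D: 8 feature cubes, spectral depth folded into channels (40 * 8 = 320),
# then a 2D convolution reduces them to 64 feature maps before flattening.
p2d3d = (conv3d_params(3, 3, 3, 1, 8)
         + conv2d_params(3, 3, bands * 8, 64)
         + dense_params(size * size * 64, hidden))

print(f"3D-only: {p3d:,}  2D-3D: {p2d3d:,}")
```

Under these assumed sizes, the 3D-only variant has roughly twenty times more trainable parameters than the 2D-3D variant, which is the same order of difference as reported above.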

The simplest and fastest model tested, 2D-CNN, proved to be the best performer for MS images. This same 2D-CNN model performed well for HS images in grass sward-quality estimations [30]. Although the model’s performance on HS images in this study was not as strong, both investigations showcased the network’s ability to identify relevant features from the multi-dimensional data. The differences in the results can be attributed to the distinct tasks and datasets used in each study. For the RGB images, the VGG16 network showed the best performance for the infested class, aligning with the previous research [32, 54, 55]. In contrast, the ViT network did not achieve comparable results for the infested class, despite the promising findings in other studies [5, 30, 56, 57].

One of the primary findings of this study is that the use of MS and HS images results in a clear improvement in the classification accuracy of bark beetle-infested trees, as compared to using RGB images. The literature on this topic presents different findings, depending on the application and network. Some studies indicate that MS and HS images enhance the performances of tree species and health classification tasks, while others present no significant improvement over RGB images [31, 58]. These outcomes can be attributed to the critical role of network selection when training models on MS and HS images. For example, a Mask R-CNN model [58] might not be optimal for extracting the features from MS images, while a 3D-CNN model [31] demonstrates great potential for HS images. In addition, the complexity of the classification task might also have an impact, i.e., the detection of different health classes in comparison to species classifications.

4.2. Complete Pipeline for Detections and Classifications

The integration of classifier networks with the YOLO object detector resulted in a framework that enabled both the detection and classification of trees with a high accuracy. The use of YOLO object detections in conjunction with the VGG16, 2D-CNN, and 2D-3D-CNN classifiers outperformed the results obtained from utilizing only the classifier module of the YOLO network. It is worth mentioning that the YOLO network was not designed to process MS and HS images; hence, the detection and classification were performed on RGB images. Despite this, the VGG16 network, which was also trained on RGB images, still exhibited a superior performance in terms of classification.

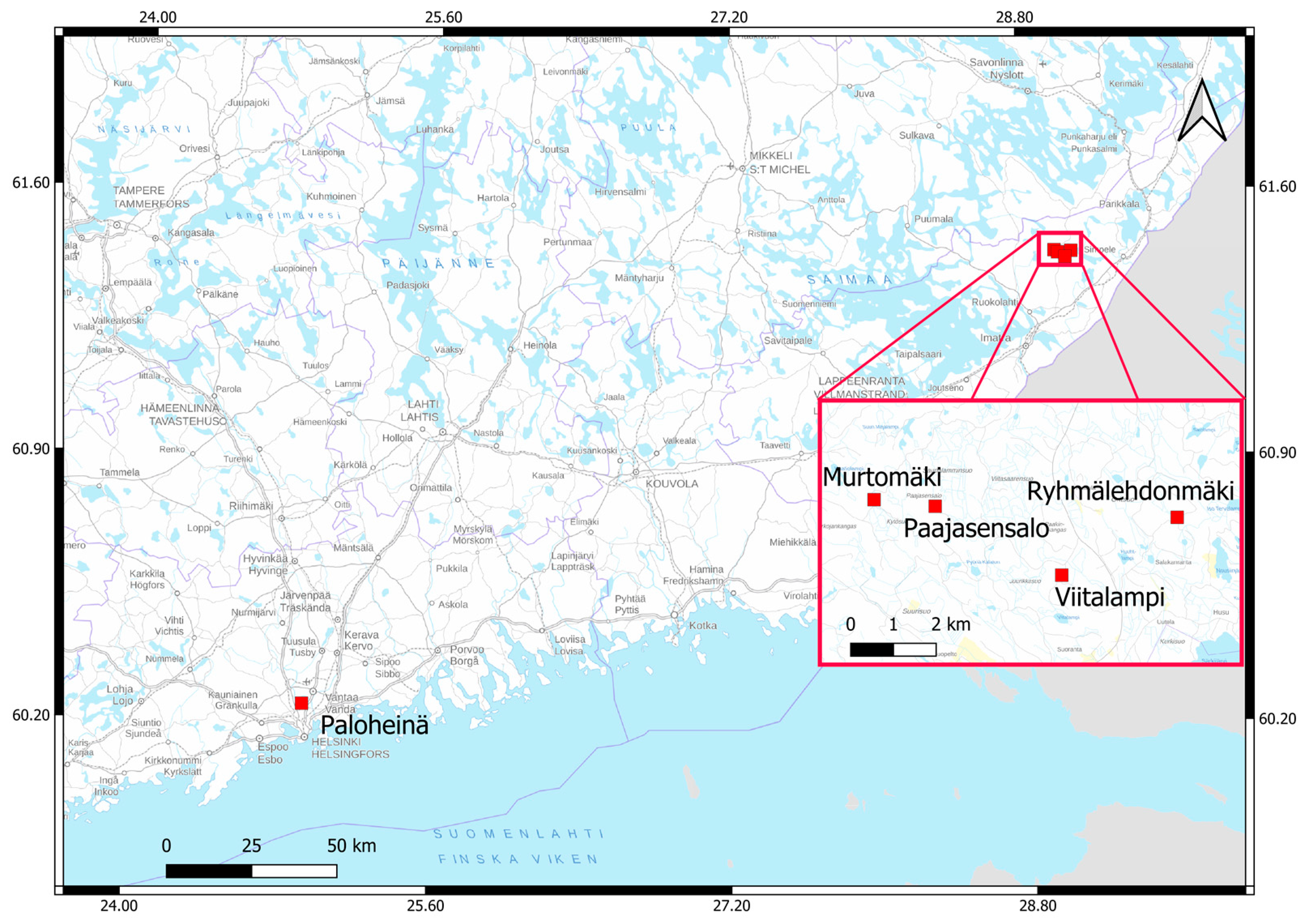

The performance of the YOLO algorithm was evaluated and compared with the results obtained in a previous study [15], where a setting very similar to that used in this study was employed to detect and classify spruce trees into healthy, infested, and dead categories. In that study, the F1-scores were 0.788, 0.578, and 0.827 for healthy, infested, and dead trees, respectively, using the data from the Paloheinä, Ruokolahti, and Lahti areas, and the same class-labeling scheme as in the current study. The current study’s F1-scores of 0.706, 0.580, and 0.815 were comparable to these results: the F1-score was lower for healthy trees, almost the same for infested trees, and slightly lower for dead trees. The slightly better performance of the YOLO algorithm in [15] can be attributed to the larger dataset used, which also included samples from an additional study area in southern Finland (city of Lahti), as well as to the use of different training and test sets. In the current study, the Lahti data were not used due to the absence of MS and HS data from the same cameras, and the training and test sets were optimized so that the same trees could be used for the datasets from all sensors. It is worth noting that the previous study [15] also reported higher F1-scores of 0.90, 0.79, and 0.98, albeit with a different labeling scheme and solely using Paloheinä data in the test set. The study acknowledges that the results obtained from the Paloheinä data may be somewhat optimistic compared to the data from the other areas. This was attributed to the specific processing techniques applied to the Paloheinä data, which enhanced the detectability of trees in this particular dataset, as well as to the better distribution of different health classes.

4.3. Further Research

The development of the proposed method involved several design choices that could affect the outcomes. Data preprocessing was an integral part of the process and included the cropping and resampling of images. These operations could impact the learning capabilities of the models under evaluation. Resampling images to very high or low spatial sizes results in either reduced resolutions or limited convolutional layer sizes. The original tree crown images were of varying sizes, which made the choice of optimal image size challenging. In the end, a compromise was reached by selecting the optimal size for the majority of the images. A further optimization of preprocessing should be considered in future developments.
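
As an illustration of the resampling step, the sketch below resizes crown crops of varying sizes to a common size with nearest-neighbor sampling. The function name and the sizes are hypothetical, and the interpolation method is an assumption made for the example; the source does not state which resampling method was used.

```python
import numpy as np

def resize_nearest(img, out_h, out_w):
    """Resample an (H, W, C) image to (out_h, out_w, C) by nearest-neighbor lookup."""
    h, w = img.shape[:2]
    # Map each output row and column back to a source index.
    rows = np.minimum((np.arange(out_h) * h) // out_h, h - 1)
    cols = np.minimum((np.arange(out_w) * w) // out_w, w - 1)
    return img[rows[:, None], cols[None, :]]

# Hypothetical crown crop with 2 bands, resampled to 8 x 3 pixels.
img = np.arange(4 * 6 * 2).reshape(4, 6, 2)
out = resize_nearest(img, 8, 3)
print(out.shape)
```

Upsampling small crowns in this way adds no new information, while downsampling large crowns discards detail, which is the compromise described above.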

We did not perform a statistical analysis to determine whether the observed differences in the F1-scores were statistically significant or fell within the margin of experimental error. To properly establish the significance of the observed variations in the results, hypothesis testing should be conducted in future studies. In addition, the splitting of data into training, validation, and test sets was found to have an impact on the results, especially because the amount of data was limited. When splitting the data, efforts were made to ensure that each set had enough samples of each class and that the training and test sets were independent. Ideally, the results should have been cross-validated with several different data splits and multiple rounds of training and testing; however, this was not feasible due to the limited amount of data. Therefore, the reproducibility of the results was not fully addressed in this study. Nevertheless, all the results were validated using independent test data.
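
One way in which the cross-validation mentioned above could be organized is a stratified k-fold split, in which each fold preserves the class proportions. The sketch below is a minimal illustration with hypothetical class counts; it does not describe a procedure carried out in this study.

```python
import numpy as np

def stratified_folds(labels, k, seed=0):
    """Return k lists of test indices with class proportions roughly preserved."""
    labels = np.asarray(labels)
    rng = np.random.default_rng(seed)
    folds = [[] for _ in range(k)]
    for cls in np.unique(labels):
        idx = np.flatnonzero(labels == cls)
        rng.shuffle(idx)
        # Deal the shuffled samples of this class to the folds in turn.
        for i, sample in enumerate(idx):
            folds[i % k].append(int(sample))
    return [sorted(fold) for fold in folds]

# Hypothetical class counts for illustration.
labels = ["healthy"] * 12 + ["infested"] * 6 + ["dead"] * 3
folds = stratified_folds(labels, k=3)
for fold in folds:
    print(len(fold), sorted({labels[i] for i in fold}))
```

Each fold would serve once as the test set while the remaining folds are used for training and validation, and the spread of the per-fold F1-scores would give an indication of the variability caused by the data split.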

The limited nature of the data could also impact the comparability of the models that were tested on various image types. The number of samples in each dataset varied, with the RGB dataset having the largest number of samples in each class. The MS dataset contained a slightly lower number of samples of infested trees and a higher number of healthy tree samples than the HS dataset. This disparity could be the primary reason why the MS 2D-CNN model exhibited a lower F1-score for infested trees and a higher F1-score for healthy trees, compared to the HS 2D-3D-CNN model. To overcome the challenges caused by the insufficient data, future research should focus on collecting a more extensive reference dataset, enabling a more accurate detection of infested trees.

The characteristics of the applied camera technology can impact the results of an empirical study. The RGB and MS cameras used in this study represent widely applied camera technologies in UAS remote sensing; thus, the obtained results can be considered representative. The HS camera sampled spectral signatures with a relatively high spectral resolution (average of 8.98 nm) and FWHM (average of 6.9 nm) in the visible to near-infrared spectral range (500–900 nm). It is worth recognizing that state-of-the-art technology offers enhanced performance figures, for example, a spectral resolution of 2.6 nm and an FWHM of 5.5 nm in the spectral range of 400–1000 nm, as well as an extension of the spectral range beyond visible and near-infrared to the short-wave-infrared region [59]. Furthermore, this study does not apply spectral-band selection or handcrafted spectral features or indices that are commonly used in the context of classical machine learning approaches [13, 20, 59]. Instead, we considered that the DNN models could find the relevant features from the spectral signatures. Further studies should evaluate whether enhancing camera and spectral qualities, as well as band selection, would be beneficial for assessing tree health.

4.4. Contributions and Outlook

The literature on the classification of bark beetle-infested trees is limited, and to the best of our knowledge, there is no systematic study comparing the performances of RGB, MS, and HS images in this type of tree health assessment task. Furthermore, prior to this study, the use of different network structures for each specific data type had not been investigated in this field. The important contribution of this study is the comprehensive evaluation of several types of deep learning architectures and various remote sensing camera types. The presented results thus provide new information and offer a basis for the further exploration of the use of MS and HS images in detecting bark beetle disturbances using deep learning techniques, as well as for other image classification tasks in the field of remote sensing.

The fundamental need behind this research is the development of methods for managing bark beetle outbreaks. For example, in Finland, the outbreaks typically appear in scattered individual trees [15], and therefore high-resolution datasets are required. UAS-based techniques provide an effective tool to rapidly detect new infestations at the individual tree level. Our results show that dead trees can be reliably detected, even using RGB images. The analysis of multitemporal RGB or MS datasets can be used to detect, understand, and predict the spread of outbreaks. Effective methods for detecting green attacks are still absent from the scientific literature, and HS images, in particular, are expected to be a potential tool for performing this task [9]. Our research will continue to develop strategies for bark beetle management, particularly in terms of developing efficient machine learning and inference pipelines, increasing the datasets for model training, studying the performance of state-of-the-art visible- to shortwave-infrared HS imaging techniques, using novel beyond-visual-line-of-sight drone techniques that enable larger area surveys, as well as developing strategies for green attack detection. Efficient monitoring tools offer knowledge-based decision support to the authorities, politicians, forest owners, and other stakeholders for performing optimized forest management actions in cases where bark beetle outbreaks continue to spread. This will help to minimize the negative social, economic, and environmental impacts of bark beetle disturbances.

5. Conclusions

The important contribution of this study is the comprehensive evaluation of several types of deep learning architectures with UAS-acquired RGB, multispectral (MS), and hyperspectral (HS) images to assess the health of individual spruce trees in a test area suffering from a bark beetle outbreak. The presented results thus provide new information and offer a basis for the further exploration of the use of MS and HS images for detecting bark beetle disturbances using deep learning techniques, as well as for other image classification tasks in the field of remote sensing.

The results show that MS and HS images can achieve superior classification outcomes compared to RGB images and identify the most appropriate network structures for each image type. High-accuracy results were obtained for the classifications of healthy and dead trees with all tested camera types, whereas the infested class was often mixed with the healthy class due to the smaller spectral difference between these two classes. Considering the best-performing models with different cameras, the best results for the infested class were obtained using the 2D-3D-CNN 2 model trained on an augmented set of HS images, the next-best results were obtained with the 2D-CNN model trained on an unaugmented set of MS images, and the VGG16 model for augmented RGB images had the lowest performance. The study demonstrated that data augmentation may be used to balance the training class sizes and slightly improve the classification performance, particularly when complex networks with millions of parameters were employed. Additionally, the outcomes reveal that integrating separate classifier networks with the YOLO object detector achieves improved results compared to the YOLO classifier.

The findings of this study offer a basis for the further development of the use of MS and HS images in detecting bark beetle disturbances using deep learning techniques, as well as for other image classification tasks in the field of remote sensing. With the ongoing developments, these technologies have the potential to play a crucial role in forest management efforts to combat large-scale bark beetle outbreaks by providing timely information on the health status of trees.