Abstract

Knowledge of tree size is of great importance for the precision management of a hazelnut orchard. In fact, it has been shown that site-specific crop management allows for the best possible management and efficiency of the use of inputs. Generally, measurements of tree parameters are carried out using manual techniques that are time-consuming, labor-intensive and not very precise. The aim of this study was to propose, evaluate and validate a simple and innovative procedure using images acquired by an unmanned aerial vehicle (UAV) for canopy characterization in an intensive hazelnut orchard. The parameters considered were the radius (Rc), the height of the canopy (hc), the height of the tree (htree) and of the trunk (htrunk). Two different methods were used for the assessment of the canopy volume using the UAV images. The performance of the method was evaluated by comparing manual and UAV data using the Pearson correlation coefficient and root mean square error (RMSE). High correlation values were obtained for Rc, hc and htree while a very low correlation was obtained for htrunk. The method proposed for the volume calculation was promising.

1. Introduction

The European hazelnut tree (Corylus avellana L.) is distributed in the temperate zone of the northern hemisphere but is spreading rapidly in new areas such as Chile, South Africa, and Australia. Its total cultivated area in the world is about 660,000 ha, with an average world annual production of about 865,000 t (in-shell hazelnuts), showing an increasing trend of geographical expansion driven by strong demand from the confectionery industry [1,2]. Hazelnut traditionally grows as a multi-stemmed bush with a planting density of between 250 and 550 trees/ha [3,4]. By contrast, new hazelnut orchards are designed with higher planting densities of up to 1700 trees/ha; drip or sub-irrigation; a free vase training system; and a single trunk, allowing for mechanical cultivation. Traditional orchard management methods have a low degree of informatization, leading to various problems, including low production efficiency, excessive water/fertilizer/pesticide use and severe pollution. Modern orchard management, in turn, will be empowered by informatization, which will give growers the opportunity to make decisions based on facts and help them to reproduce good practices [5,6].

It is known that the development of the tree canopy affects both the quality and yield of fruits [7,8,9]; therefore, to achieve high hazelnut production, manipulation and management of the tree canopy are essential. Due to the increasing price of agrochemicals, agricultural diesel, water and labor, there is a need for efficient precision farming that applies the appropriate timing and amount of fertilizer, pesticides, and irrigation water for hazelnut orchard management [7].

Precision agriculture, especially for fruit tree crops, is an environmental and economic management strategy that uses information and communication technology to acquire data supporting decisions in climate change [10,11,12]. The preliminary step of precision farming is receiving as much growth data on the crop as possible, which depends on accurately describing the crop’s morphological and structural characteristics, including canopy width, height, area and volume [7,13]. Among these, canopy width is essential for precision spraying and machine harvesting, while the canopy projection area is important for determining tree growth and water requirements during the growing season [7]. Moreover, geometrical canopy characteristics and tree vigor are crucial traits in phenotyping studies to assess the cultivar’s suitability to be cultivated in specific growing systems, as well as for evaluating pruning practices, irrigation and fertilization, and spraying [5,14].

With the development of sensor technology, nondestructive large-area orchard canopy measurement can be realized using unmanned aerial vehicles (UAVs) and visual imaging technology [5,8]. A UAV is a powered, aerial vehicle, without any human operator, that can fly autonomously or be controlled remotely with various payloads. Furthermore, due to their advantages in terms of flexible data acquisition and high spatial resolution, UAVs are quickly evolving and provide a powerful technical approach that is rapid and nondestructive, for many applications in precision agriculture, including crop-state mapping, crop-yield prediction, disease detection, and weed management [15].

A UAV with low-altitude remote sensing has advantages such as good mobility, easy construction, and high resolution for obtaining images. These UAVs are easily accessible and provide accurate data. Furthermore, they are cost-effective, easy to deploy anywhere and can produce real-time spatial images compared with other traditional remote sensing (RS) platforms. However, the use of UAVs in precision agriculture faces critical challenges such as payload, the sensors used in the UAV, the cost of the UAV, flight duration, data analytics, environmental conditions, and other requirements. Cost is the main challenge to UAV use due to the various sensors, mounting parts, technology-based applications and software needed for data analytics. Weather conditions, such as rain, snowfall, clouds, and fog, are another factor that limits UAV activities and the sensing process. A recent study [14] showed that, in comparison to other types of remote sensing platforms, such as satellites, manned aircraft and ground-based platforms, UAVs offer higher flexibility, adaptability and accuracy, with easy deployment and operability [16]. Another limitation of multi-rotor UAVs is battery duration, although this allows UAVs to be small- to medium-sized [6]. Several studies have been conducted on the use of UAV sensing technology in various types of orchards [17], including olive [14,18], peach [7], almond [19], apple [20], mango [21], grape [22,23], cherry [24] and pine [25] orchards, with promising results. In the last decade, research on hazelnuts has focused on the improvement of field management and the quality of products; however, techniques are lagging behind advanced agronomic practices [1,6,26].

From the first studies before the new century [27] up to the very recent H2020 Panteon project, various authors have tried their hand at modern data collection techniques [28,29,30]. These studies mainly focused on using satellite remote sensing images for the investigation of the land characteristics of hazelnut orchards. However, the resolution of satellite images is often not sufficient to highlight spatial variability, because different types of soil tillage and canopy management practices can invalidate the canopy vigor data, as has already been reported for other tree crops [19].

Despite this, research works involving the use of environmental remote sensing systems are very few: the review of Zhang et al. [17] reports no published paper on the use of UAVs in Corylus avellana L. Altieri et al. [6] recently defined a rapid procedure to calculate the canopy area and leaf area index (LAI) of young hazelnut trees using NDVI (normalized difference vegetation index) and CHM (canopy height model) values derived from UAV images. However, they did not report geometric information such as canopy width.

Hazelnut has particular characteristics, namely canopies with irregular and complex shapes and a high leaf area index (LAI) [6], that challenge its 3D characterization. Therefore, it is important to improve the characterization of this tree crop. In addition, in recent years this species has shown an increasing trend of geographical expansion driven by strong demand from the confectionery industry, with the plantation of modern, large, mechanically manageable, irrigated, high-density orchards. This has made it necessary to extend the principles of precision farming to Corylus avellana L. Overall, there is still a lack of methodological information on how to use UAV data to make accurate measurements of the geometrical characteristics and the volume of the hazelnut canopy. There is also a lack of assessment of the time and labor associated with efforts to enhance accuracy with the UAV photogrammetry method. Accordingly, the general aim of this paper was to give researchers and growers an innovative and simple method for the detailed and complete characterization of the hazelnut canopy by UAV photogrammetry.

2. Materials and Methods

2.1. Study Site Description and Tree Sampling

The research was carried out during the growing season of 2022 in the experimental orchard of the Department of Agricultural, Food, and Environmental Sciences of the University of Perugia, located in central Italy (42°58′22.82″N, 12°24′13.02″E) on an orchard planted in 2017 with three densities:

- (A) 625 trees ha−1, spaced 4 m between rows and 4 m on the row, used as a control treatment (the common density used by farmers);

- (B) 1250 trees ha−1, spaced 4 m × 2 m;

- (C) 2500 trees ha−1, spaced 4 m × 1 m.

All trees, grafted on no-suckering rootstock, were trained as single trunks with four main branches each.

The orchard is composed of six rows, each containing 43 trees divided into the three densities of the plantation; on the same row, the first 25 plants are spaced 1 m from each other, the next 12 are spaced 2 m and the last 6 are placed 4 m apart. Specifically, the orchard is made up of trees belonging to two of the main Italian hazelnut varieties: Tonda di Giffoni in the first three rows and Tonda Francescana® in the next three rows. In this paper, the trees studied belonged only to the hazelnut variety Tonda Francescana®.
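The relation between spacing and planting density can be checked with a few lines of arithmetic. The sketch below is only an illustration: the function name `trees_per_hectare` and the dictionary names are hypothetical, and a rectangular planting layout is assumed.

```python
def trees_per_hectare(row_spacing_m: float, tree_spacing_m: float) -> float:
    """Planting density of a rectangular layout, in trees per 10,000 m^2."""
    return 10_000.0 / (row_spacing_m * tree_spacing_m)


# Spacings (between rows, along the row) of the three treatments in the text.
layouts = {"A": (4.0, 4.0), "B": (4.0, 2.0), "C": (4.0, 1.0)}
densities = {name: trees_per_hectare(*spacing) for name, spacing in layouts.items()}

# Trees in one row, keyed by their spacing along the row (m), as described above.
trees_per_row = {1.0: 25, 2.0: 12, 4.0: 6}
total_per_row = sum(trees_per_row.values())

print(densities)      # densities of treatments A, B and C
print(total_per_row)  # trees in one row
```

The computed densities match the 625, 1250 and 2500 trees ha−1 reported for treatments (A), (B) and (C), and the three groups of trees add up to the 43 trees per row.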

A previous study showed that hazelnut canopy characterization by UAV was unsuitable for the third plant density reported above, namely 2500 trees ha−1 [31]. For this reason, later research was carried out only on the first two plant densities of the hazelnut orchard.

2.2. Manual Measurements

Manual measurements were carried out on 36 trees, 12 per row, of which 6 had a density of 1250 trees ha−1 and the other 6 a density of 625 trees ha−1. Since the studied trees are replicated in three rows, 18 trees were measured for each of the two tree densities.

Manual measurements were used to characterize hazelnut canopy parameters such as plant height and canopy volume. Specifically, a measuring tape was used to measure the plant height, canopy height, width, and thickness. The tree height, trunk and canopy height, and canopy width and thickness were measured by a trained operator standing in front of the plant, collecting a single measurement per parameter; each manually measured size parameter therefore represents the mean value of the canopy, derived from a single measure. In detail, the canopy’s height was calculated as the difference between the plant’s height and the trunk’s height, while the width and thickness of the canopy were measured as the average distance between the sides: the width is the average distance between the north and south sides of the canopy, whereas the thickness is measured between the west and east sides. The width and thickness of the canopy were used to calculate the average radius of the canopy area, assimilated to a regular circle; this radius, together with the height of the canopy, was used to calculate the canopy volume, which was assimilated to a simple cylinder according to [32], as if the plant shape were a regular geometric solid.
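The manual calculation described above can be sketched as follows. The text does not state how the radius is derived from the width and thickness, so the sketch assumes that the radius is half the mean of the two diameters; the function names and the input values are hypothetical and serve only as an illustration.

```python
import math


def mean_radius(width_m: float, thickness_m: float) -> float:
    # Assumption (not stated in the text): the radius is half the mean of the
    # two measured diameters (north-south width and west-east thickness).
    return (width_m + thickness_m) / 4.0


def canopy_height(tree_height_m: float, trunk_height_m: float) -> float:
    # Canopy height = plant height minus trunk height, as described in the text.
    return tree_height_m - trunk_height_m


def cylinder_volume(radius_m: float, height_m: float) -> float:
    # Canopy assimilated to a simple cylinder: V = pi * r^2 * h.
    return math.pi * radius_m ** 2 * height_m


# Hypothetical measurements for a single tree (not taken from Table S1).
r = mean_radius(width_m=2.0, thickness_m=1.6)
h = canopy_height(tree_height_m=2.5, trunk_height_m=0.6)
v = cylinder_volume(r, h)
print(f"r = {r:.2f} m, h = {h:.2f} m, V = {v:.2f} m^3")
```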

2.3. Acquisition of UAV Images

The acquisition was made using a DJI (Shenzhen, China) Phantom 4 (P4) Multispectral UAV [33]. The P4 Multispectral is a high-precision drone capable of multispectral imaging functions. The imaging system contains six cameras with 1/2.9-inch CMOS (complementary metal–oxide semiconductor) sensors, including a red-green-blue (RGB) camera and a multispectral camera array containing five cameras for multispectral imaging, covering the following bands: blue (B): 450 nm ± 16 nm; green (G): 560 nm ± 16 nm; red (R): 650 nm ± 16 nm; red edge (RE): 730 nm ± 16 nm and near-infrared (NIR): 840 nm ± 26 nm. The spectral sunlight sensor on the top of the aircraft detects solar irradiance in real time for image compensation, maximizing the accuracy of the collected multispectral data. The P4 Multispectral uses a global shutter to avoid distortions that might be present when using a rolling shutter.

The P4 Multispectral aircraft has a built-in DJI Onboard D-RTK, providing high-precision data for centimeter-level positioning when used with a Network RTK service. The P4 Multispectral has a maximum take-off weight of 1487 g, and a maximum speed of 31 mph or 36 mph (based on the flight mode). The controllable range of the pitch angle is −90° to +30°. The app DJI GSPro, installed on an Apple iPad, was used for the design and execution of the UAV flight. The flight plan was designed as a polygon grid with a flight altitude of 10 m, an overlap of the flight path of 75% in both the side and heading directions, a velocity of 1.5–2 m/s and the capture mode “Hover & Capture at Point”. The ground resolution (GSD, ground sample distance) was set to 0.5 cm/pixel.
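The link between flight altitude, ground resolution and overlap can be illustrated with a short calculation. The constants below are not given in this paper: they are assumptions taken from the manufacturer's published specifications for the P4 Multispectral (GSD = H/18.9 cm/pixel and a 1600 × 1300 pixel image), and all function names are hypothetical.

```python
# Assumptions (manufacturer specifications, not stated in this paper):
GSD_DIVISOR = 18.9        # GSD [cm/pixel] = altitude [m] / 18.9
IMAGE_PX = (1600, 1300)   # image size in pixels (long side, short side)


def gsd_cm_per_px(altitude_m: float) -> float:
    """Ground sample distance at a given flight altitude."""
    return altitude_m / GSD_DIVISOR


def footprint_m(altitude_m: float) -> tuple:
    """Ground footprint (long side, short side) of a single nadir image."""
    gsd_m = gsd_cm_per_px(altitude_m) / 100.0
    return IMAGE_PX[0] * gsd_m, IMAGE_PX[1] * gsd_m


def spacing_m(footprint_side_m: float, overlap: float) -> float:
    """Distance between adjacent images (or flight lines) for a given overlap."""
    return footprint_side_m * (1.0 - overlap)


gsd = gsd_cm_per_px(10.0)                       # flight altitude used in the survey
long_side, short_side = footprint_m(10.0)
step_long = spacing_m(long_side, overlap=0.75)
step_short = spacing_m(short_side, overlap=0.75)
print(f"GSD = {gsd:.2f} cm/pixel")
print(f"footprint = {long_side:.2f} m x {short_side:.2f} m")
print(f"image spacing at 75% overlap = {step_long:.2f} m / {step_short:.2f} m")
```

Under these assumptions, a 10 m flight altitude gives a GSD of roughly 0.5 cm/pixel, in line with the value reported above.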

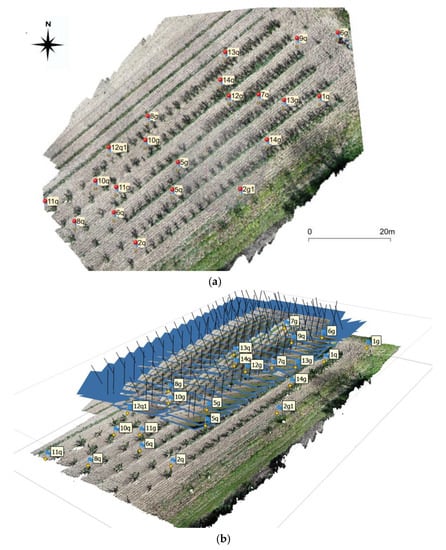

Ground control points (GCPs) were used to ensure the accuracy of the subsequent point cloud information. The coordinates of the GCPs were measured using network real-time kinematic (NRTK) positioning, so that the position of the receiver points was obtained from the global navigation satellite system (GNSS) in real time with centimeter precision. Check points (CPs), i.e., points of known coordinates not used for the point cloud model but only for position control, were also placed in the field. The positions of the GCPs and CPs are reported in Figure 1a.

Figure 1.

(a) Distribution of the control points in the survey area; and (b) control points and frames on the point cloud.

The relative and absolute orientation of the frames was determined by aerial triangulation with a bundle adjustment algorithm. The tie points of the frames were automatically identified using an image-matching algorithm. The GCPs, whose coordinates were known from the GNSS survey, were manually identified on the frames, and collimated one by one [34].

2.4. Point Cloud Reconstruction

Agisoft (St. Petersburg, Russia) Metashape software [35] was used to process the UAV images. The software automatically converts hundreds of images into a point cloud and is widely used for ground and aerial photogrammetry and remote sensing [36,37,38,39]. The red-green-blue (RGB) images collected by the UAV were used to create the 3D point cloud (Figure 1b). The detailed procedure used to obtain the point cloud is described in [36]. Briefly, the calibration procedure was first applied to calculate the camera calibration parameters, and the obtained parameters were imported into Agisoft Metashape before processing. Metashape was used to find matching points between overlapping images, estimate the camera position, and build a sparse and dense point cloud model [36]. As described above, 10 GCPs (Figure 1a,b) were used for orienting the images during the optimisation and for georeferencing the photogrammetric model and point cloud in the global datum WGS 84. An orthophoto of the study area was extracted (Figure 1a).

2.5. Recognition of Hazelnut Trees

For the identification of every single tree, two procedures were tested:

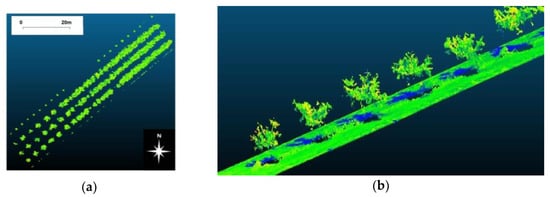

- 1. The 3D point cloud was divided into two point clouds, a “canopy” point cloud and a “ground” point cloud, using the classification procedure of Agisoft Metashape. Each point cloud was exported separately to the open-source software Cloud Compare (Paris, France). Figure 2a shows the “canopy” point cloud exported to Cloud Compare. The obtained 3D point cloud is affected by noise, especially in the lower part of the canopy; thus, the filters available in Cloud Compare for automatic noise removal were applied. The “canopy” and “ground” point clouds were then rasterised, resulting in two Digital Surface Models (DSMs), DSMcanopy and DSMground, respectively, with a resolution of 0.01 m × 0.01 m. No interpolation was performed to fill empty areas of the DSMcanopy, because the holes could represent essential data for the evaluation of the penetration of light through the canopy; instead, a weighted average interpolation was applied to the DSMground to fill some holes in the original cloud. Finally, the two DSMs were imported into QGis (Figure 2b) to carry out the analysis described below.

Figure 2. (a) The “canopy” point cloud on Cloud Compare; and (b) the “canopy” and ”ground” point clouds on Cloud Compare.


The DSMcanopy in the GIS environment was elaborated to obtain the canopy radius and height.

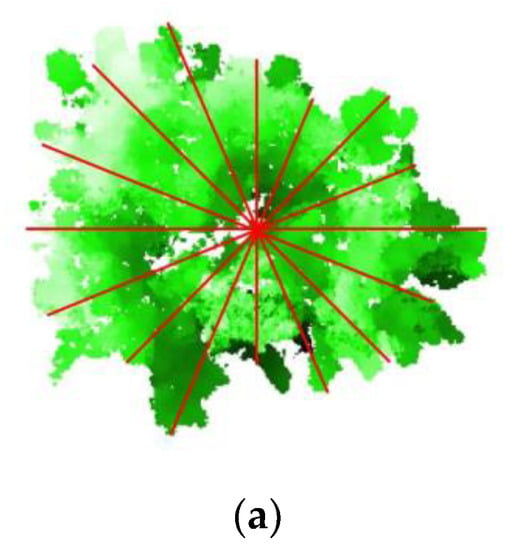

The radius Rc was calculated as the mean of the width of the canopy evaluated on eight different directions with an inclination of 22°30′ (Figure 3a).

Figure 3.

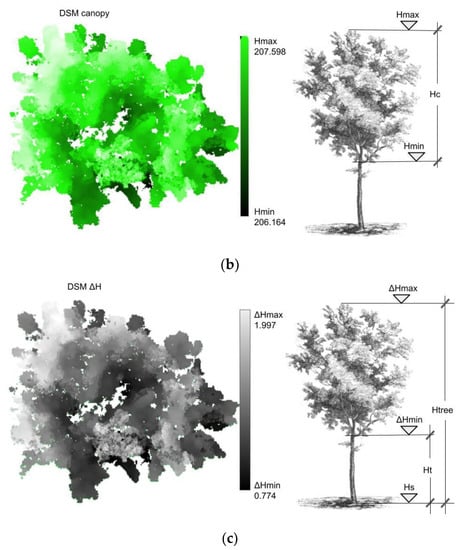

(a) Width of the canopy evaluated on eight different directions with an inclination of 22°30′; (b) evaluation of the height of the canopy; and (c) evaluation of the height of the trunk and of the tree.
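The directional width measurement can be sketched on a rasterised canopy mask. This is only an illustration under stated assumptions: the mask is a list of rows of booleans on a square grid and is not empty, the eight directions are taken as 0°, 22°30′, ..., 157°30′ through the centroid of the canopy, and the radius is taken as half of the mean width (the text reports the mean width but not this last step). All function names are hypothetical, and the measurements in the paper were made in the GIS environment rather than with this code.

```python
import math


def centroid(mask):
    """Centroid (x, y) of the canopy pixels, in pixel units (pixel centres)."""
    pts = [(c + 0.5, r + 0.5) for r, row in enumerate(mask)
           for c, inside in enumerate(row) if inside]
    n = len(pts)  # assumes at least one canopy pixel
    return sum(x for x, _ in pts) / n, sum(y for _, y in pts) / n


def width_along(mask, cx, cy, angle_deg, cell_m, step=0.5):
    """Extent of the mask along a line through (cx, cy), in metres."""
    rows, cols = len(mask), len(mask[0])
    dx = math.cos(math.radians(angle_deg))
    dy = math.sin(math.radians(angle_deg))
    reach = math.hypot(rows, cols)  # long enough to cross the whole raster
    hits = []
    t = -reach
    while t <= reach:  # sample the line every `step` pixels
        c, r = math.floor(cx + t * dx), math.floor(cy + t * dy)
        if 0 <= r < rows and 0 <= c < cols and mask[r][c]:
            hits.append(t)
        t += step
    return (hits[-1] - hits[0]) * cell_m if hits else 0.0


def canopy_radius(mask, cell_m=0.01, n_dirs=8, step_deg=22.5):
    """Half of the mean canopy width over n_dirs directions (assumption)."""
    cx, cy = centroid(mask)
    widths = [width_along(mask, cx, cy, i * step_deg, cell_m)
              for i in range(n_dirs)]
    return sum(widths) / len(widths) / 2.0


# Synthetic check: a disc with a radius of 30 pixels (0.30 m at 0.01 m/pixel).
size, r_px = 101, 30
disc = [[(c - 50) ** 2 + (r - 50) ** 2 <= r_px ** 2 for c in range(size)]
        for r in range(size)]
rc = canopy_radius(disc)
print(f"Rc = {rc:.3f} m")
```

The sampled extent differs from the true width by up to about one pixel at each end, so the result should be read with a tolerance of the order of the raster resolution.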

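The height of the canopy hc was obtained as the difference between the maximum and the minimum elevation of the canopy raster file (Figure 3b):

$$h_c = H_{\max} - H_{\min} \quad (1)$$

where Hmax and Hmin are the maximum and minimum elevations of the DSMcanopy.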

The height of the tree was evaluated from a raster in which each pixel value is the elevation difference ΔH between the DSMcanopy and the DSMground (Figure 3c). Thus, the height of the tree, htree is:

$$h_{tree} = \Delta H_{\max} \quad (2)$$

while the height of the trunk, htrunk is:

$$h_{trunk} = \Delta H_{\min} \quad (3)$$

For the evaluation of the surface of the canopy on the ground, DSMcanopy was vectorised in QGis using the command “from raster to vector”. In this way, the polygon of the canopy was obtained, as well as, automatically, geometrical attributes, such as the surface.
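The raster operations described in this subsection can be sketched on small arrays. The sketch assumes that the two DSMs are co-registered on the same grid, that empty canopy cells are stored as `None`, and that the trunk height is the minimum of ΔH over the canopy cells (an assumption of this sketch). The projected surface is obtained here by counting cells, which is a simplification of the vectorisation carried out in QGis. All names are hypothetical.

```python
def canopy_metrics(dsm_canopy, dsm_ground, cell_m=0.01):
    """Heights (m) and projected surface (m^2) of one tree from two DSMs.

    dsm_canopy: rows of elevations, with None where there is no canopy.
    dsm_ground: rows of ground elevations on the same grid (no gaps).
    """
    cells = [(r, c, z) for r, row in enumerate(dsm_canopy)
             for c, z in enumerate(row) if z is not None]
    elevations = [z for _, _, z in cells]
    # Canopy height: maximum minus minimum elevation of the canopy raster.
    h_c = max(elevations) - min(elevations)
    # Elevation difference between canopy and ground, cell by cell.
    delta_h = [z - dsm_ground[r][c] for r, c, z in cells]
    return {
        "h_c": h_c,
        "h_tree": max(delta_h),
        "h_trunk": min(delta_h),  # assumption: lowest canopy cell above ground
        "surface": len(cells) * cell_m ** 2,
    }


# Hypothetical 3 x 3 example with a flat ground at 100.0 m.
dsm_canopy = [[None, 101.2, None],
              [100.6, 102.5, 100.8],
              [None, 101.0, None]]
dsm_ground = [[100.0] * 3 for _ in range(3)]
metrics = canopy_metrics(dsm_canopy, dsm_ground)
print(metrics)
```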

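- 2. The orthophoto obtained from the elaboration of the multispectral images was used, in a GIS environment, to obtain the NDVI map using the formula:

  $$\mathrm{NDVI} = \frac{\mathrm{NIR} - \mathrm{R}}{\mathrm{NIR} + \mathrm{R}} \quad (4)$$

  where NIR and R are the reflectance values in the near-infrared and red bands, respectively.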

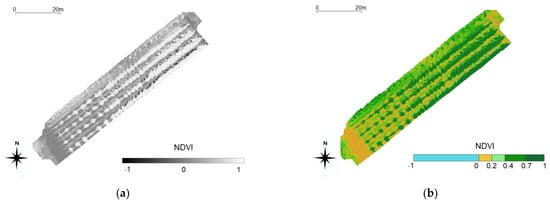

Figure 4.

(a) Greyscale NDVI map obtained by UAV survey; and (b) classified NDVI map obtained by UAV survey.

The NDVI map was evaluated fully automatically, classifying the pixels into five classes (Figure 4b): presence of water (NDVI < 0), no vegetation or bare soil (0 < NDVI < 0.2), sparse vegetation (0.2 < NDVI < 0.4), moderate vegetation (0.4 < NDVI < 0.7) and dense vegetation (NDVI > 0.7).
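The NDVI calculation and the five-class scheme above can be sketched per pixel as follows. The class limits are those given in the text; assigning a value that falls exactly on a limit to the upper class, and returning 0 when both bands are zero, are conventions chosen for this sketch. The function names and reflectance values are hypothetical.

```python
def ndvi(nir: float, red: float) -> float:
    """Normalized difference vegetation index from NIR and red reflectance."""
    total = nir + red
    # Convention for this sketch: return 0.0 where the index is undefined.
    return (nir - red) / total if total != 0 else 0.0


def classify(value: float) -> str:
    """Five classes from the text; limit values go to the upper class."""
    if value < 0.0:
        return "presence of water"
    if value < 0.2:
        return "no vegetation"
    if value < 0.4:
        return "sparse vegetation"
    if value < 0.7:
        return "moderate vegetation"
    return "dense vegetation"


# Hypothetical (NIR, red) reflectance pairs.
samples = [(0.10, 0.20), (0.30, 0.25), (0.30, 0.15), (0.50, 0.10), (0.60, 0.05)]
classes = [classify(ndvi(nir, red)) for nir, red in samples]
for (nir, red), name in zip(samples, classes):
    print(f"NIR={nir:.2f} R={red:.2f} NDVI={ndvi(nir, red):+.2f} -> {name}")
```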

2.6. Assessment of the Canopy Volume

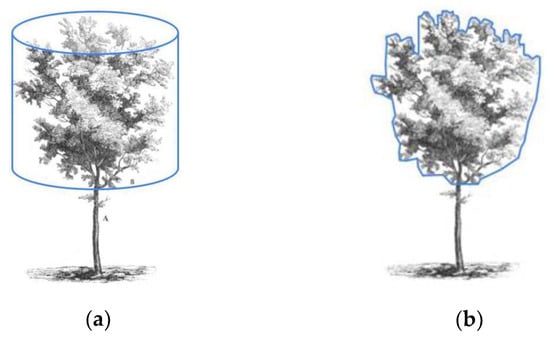

The assessment of the canopy volume was made by simulating the manual method (method 1) to test the UAV technology compared to the manual measurements and in automatic mode in GIS environment (method 2). In particular:

- Method 1: the canopy was assimilated to a cylinder (Figure 5a), and the volume was assessed as follows:

  $$V_{1} = \pi R_c^{2} h_c \quad (5)$$

Figure 5. (a) Volume of the canopy assimilated to a cylinder (method 1); and (b) volume of the canopy evaluated considering the shape of the canopy obtained from the 3D point cloud (method 2).


- Method 2: the volume was evaluated considering the shape of the canopy obtained from the 3D point cloud (Figure 5b). From the raster file of the canopy, DSMcanopy, the volume was obtained in a GIS environment by evaluating the volume between the DSM and a horizontal plane passing through the lowest point of the same.
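Method 2 can be sketched as a sum of pixel columns between the canopy surface and the horizontal plane through its lowest point. The sketch assumes a DSM stored as rows of elevations with `None` in the empty cells; the function name and the example values are hypothetical, and the actual computation in the paper was carried out with GIS tools.

```python
def volume_from_dsm(dsm_canopy, cell_m=0.01):
    """Volume (m^3) between the canopy DSM and the plane through its lowest point."""
    elevations = [z for row in dsm_canopy for z in row if z is not None]
    if not elevations:
        return 0.0
    z_min = min(elevations)
    # Each cell contributes a column of height (z - z_min) and base cell_m^2.
    return sum(z - z_min for z in elevations) * cell_m ** 2


# Hypothetical 2 x 2 raster with 0.5 m cells and one empty cell.
dsm = [[None, 2.0],
       [1.0, 3.0]]
v2 = volume_from_dsm(dsm, cell_m=0.5)

# For comparison: a prism with the same footprint and the full canopy height.
n_cells = 3
prism = n_cells * 0.5 ** 2 * (3.0 - 1.0)
print(v2, prism)
```

Since no column can be taller than the canopy height hc, the volume obtained this way cannot exceed the projected surface multiplied by hc.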

2.7. Evaluation of Model Accuracy

Two indicators were used to evaluate the accuracy of the model in this study: the Pearson correlation coefficient (R) and the root mean square error (RMSE). R is a measure of linear correlation between two sets of data and has a value between −1 (a perfect negative correlation) and 1 (a perfect positive correlation), where 0 indicates no correlation. The RMSE represents the deviation of the predicted value from the actual value, i.e., the closer the calculated value of the volumetric algorithm is to the true value of the manual measurement, the higher the prediction accuracy of the model. The RMSE is calculated as follows:

$$\mathrm{RMSE} = \sqrt{\frac{1}{N}\sum_{i=1}^{N}\left(Y_i - X_i\right)^{2}} \quad (6)$$

where Yi is the sample predicted value (in this case corresponding to UAV data), Xi is the sample actual value (corresponding to manual measurements) and N is the number of samples.
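Both indicators can be computed with the standard library alone. The sketch below uses the usual sample formula for the Pearson coefficient, which the text does not spell out; the function names and the data are hypothetical and are not taken from Table S1.

```python
import math


def pearson_r(x, y):
    """Pearson correlation coefficient between two equally long sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    s_xy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    s_xx = sum((a - mean_x) ** 2 for a in x)
    s_yy = sum((b - mean_y) ** 2 for b in y)
    return s_xy / math.sqrt(s_xx * s_yy)


def rmse(predicted, actual):
    """Root mean square error of predicted (Y_i) against actual (X_i) values."""
    n = len(predicted)
    return math.sqrt(sum((y - x) ** 2 for y, x in zip(predicted, actual)) / n)


# Hypothetical tree heights (m): manual values and UAV values with a constant offset.
manual = [1.6, 2.0, 2.3, 2.7]
uav = [value + 0.1 for value in manual]

r = pearson_r(uav, manual)
error = rmse(uav, manual)
print(f"R = {r:.3f}, RMSE = {error:.3f} m")
```

In this example the constant offset leaves R at 1 while the RMSE equals the offset, which is why the two indicators are reported together.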

3. Results

3.1. Assessing Errors of the Point Cloud Reconstruction

For the UAV survey 23 points of known location, including 10 GCPs and 13 CPs, were used. The total error was evaluated by both GCPs and CPs and was lower than 0.02 m (Total Error Control Points: 0.0173 m; Check Points: 0.0193 m).

Table 1 shows the GCP/CP locations, the root mean square error for the X, Y, and Z coordinates (Error-m) and the root mean square error for the X, Y coordinates (Error-pixel) for the GCPs and CPs, averaged over all the images. The total error is the average over all the GCP/CP locations.

Table 1.

GCPs and CPs locations and error estimates.

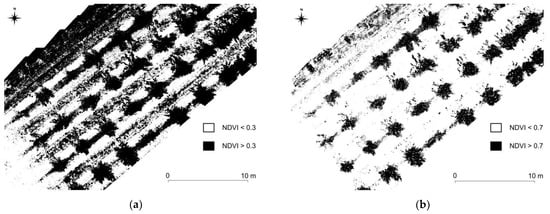

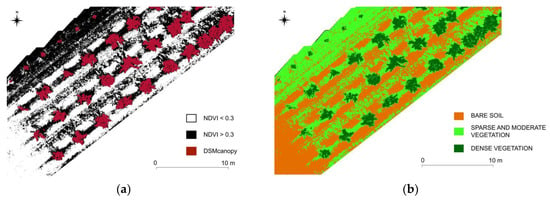

3.2. Recognition of Hazelnut Trees

Figure 6a gives the NDVI map obtained using a threshold NDVI = 0.3. The black pixels corresponding to NDVI > 0.3 represent vegetation; the white pixels corresponding to NDVI < 0.3 represent no vegetation. From this map (Figure 6a), it is not possible to extract the single tree canopy due to the presence of sparse vegetation on the ground. To exclude the sparse vegetation from the analysis, the threshold was increased to NDVI = 0.7 (Figure 6b).

Figure 6.

(a) NDVI map classified using the threshold NDVI = 0.3; and (b) NDVI map classified using the threshold NDVI = 0.7.

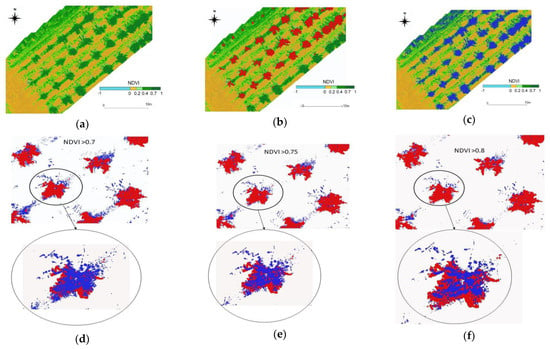

The DSM of the canopy was overlaid on the NDVI map (Figure 7a,b), but the vegetation present on the ground between the trees did not allow unique identification of the single canopy. For this reason, the NDVI map was filtered to keep values higher than 0.7, highlighted in blue (Figure 7c). The further overlap between the NDVI map (>0.7) and the DSMcanopy (Figure 7d) showed that some pixels with vegetation do not belong to the canopy. The same analysis was repeated for NDVI thresholds of 0.75 (Figure 7e) and 0.8 (Figure 7f). Filtering by increasing values produced an important reduction in the number of pixels related to the vegetation on the ground, but also a significant increase in empty areas inside the canopy. To solve this problem, the altimetric information derived from the point cloud and the NDVI map (with threshold NDVI = 0.3) were combined “pixel to pixel” in a GIS environment (Figure 8a,b).

Figure 7.

(a) NDVI map reclassified; (b) overlap NDVI map re-classified and DSMcanopy (red); (c) NDVI map with blue values representing an NDVI > 0.7; (d) overlap NDVI map (blue) with values > 0.7 and DSMcanopy (red) and detail of one tree; (e) overlap NDVI map (blue) with values > 0.75 and DSMcanopy (red) and detail of one tree; and (f) overlap NDVI map (blue) with values > 0.8 and DSMcanopy (red) and detail of one tree.

Figure 8.

(a) Overlap between the NDVI map (NDVI = 0.3) and DSMcanopy (red); and (b) intersection between NDVI map and DSMcanopy.

From the intersection between the NDVI map and the DSMcanopy, the pixels that represent vegetation but have an elevation less than the DSMcanopy were excluded. In this way, only the tree canopy was identified.
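The “pixel to pixel” combination can be sketched as a logical intersection of two co-registered rasters. The sketch assumes that both rasters share the same grid, that missing values are stored as `None`, and that a pixel is kept when its NDVI exceeds the threshold and it also belongs to the DSMcanopy. The function name and the example values are hypothetical.

```python
def canopy_mask(ndvi_map, dsm_canopy, ndvi_threshold=0.3):
    """True where a pixel is vegetation (NDVI) and belongs to the canopy DSM."""
    return [
        [
            value is not None and value > ndvi_threshold and z is not None
            for value, z in zip(ndvi_row, dsm_row)
        ]
        for ndvi_row, dsm_row in zip(ndvi_map, dsm_canopy)
    ]


# Hypothetical 2 x 3 rasters: grass on the ground has a high NDVI but no canopy elevation.
ndvi_map = [[0.8, 0.5, 0.1],
            [0.9, 0.35, None]]
dsm_canopy = [[101.0, None, 100.9],
              [102.0, 101.5, 101.0]]
mask = canopy_mask(ndvi_map, dsm_canopy)
print(mask)
```

In the example, the second pixel of the first row has an NDVI above the threshold but no canopy elevation, so it is treated as ground vegetation and excluded.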

3.3. Comparison between Geometrical Characteristics Obtained from Manual and UAV Methods in the Two Tree Densities

Table S1 reports the parameters (Rc, hc, htree and htrunk) obtained from the UAV survey and manual measurements. In the second column, the number of trees surveyed is indicated. Each tree was identified by a code X_YY, where X is the row and YY is the plant number.

From the UAV surveys, as shown in Table S1, for Rc the minimum value obtained was 0.34 m (tree 6_43) and the maximum value was 1.15 m (tree 5_26); for hc the minimum value obtained was 0.78 m (tree 6_43) and the maximum value was 2.42 m (tree 5_29); for htree the minimum value obtained was 1.54 m (tree 6_43) and the maximum value was 2.95 m (tree 5_29); for htrunk the minimum value obtained was 0.41 m (tree 4_41) and the maximum value was 0.89 m (tree 4_38).

From manual surveys, as shown in Table S1, for Rc the minimum value obtained was 0.63 m (tree 6_43) and the maximum value was 1.19 m (tree 5_39); for hc the minimum value obtained was 0.90 m (tree 6_43) and the maximum value was 2.20 m (tree 4_37); for htree the minimum value obtained was 1.60 m (tree 6_43) and the maximum value was 2.70 m (tree 4_37); for htrunk the minimum value obtained was 0.20 m (tree 6_38) and the maximum value was 0.85 m (tree 5_33).

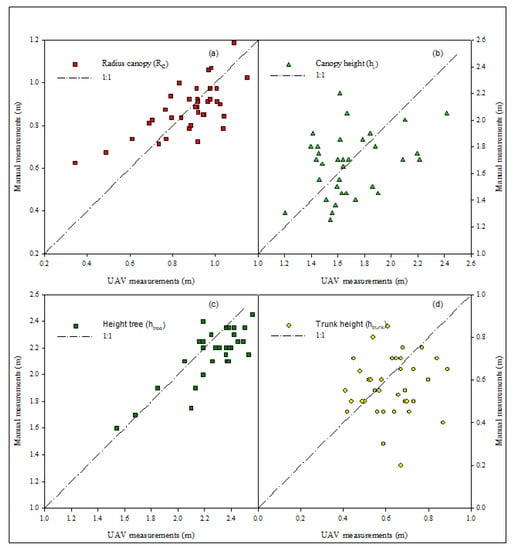

The Pearson correlation coefficient was used to evaluate the correlation between the two methods (Table 2). A very low Pearson correlation coefficient indicated no correlation for htrunk (R = 0.07), while good results were obtained for hc, Rc and htree (R = 0.544, R = 0.694 and R = 0.728, respectively).

Table 2.

Values of Pearson correlation coefficient (R) and root mean square error (RMSE) for the geometric characteristics.

The data obtained from two methods are shown in Figure 9a–c. The black dotted line represents the ratio 1:1. All the Rc data obtained by the UAV survey were lower than the manual measurements for hc and htree. For htrunk it is not possible to define a clear trend. The characteristics that showed more variation were hc and htrunk.

Figure 9.

The black dotted line represents a 1:1 ratio; (a) comparison of Rc measurements from UAV and manual; (b) comparison of hc measurements from UAV and manual; (c) comparison of htree measurements from UAV and manual; and (d) comparison of htrunk measurements from UAV and manual.

The htrunk data, reported in Table S1, showed that, for manual measurements, the measured values are the same for many trees. Against this, the htrunk data derived from the UAV survey for the same trees varied from 0.41 m (4_41) to 0.69 m (6_28).

To complete the analysis, the root mean square error (RMSE) for all the geometrical characteristics was also evaluated (Table 2). Lower RMSE values were obtained for Rc, htrunk, htree (0.115 m, 0.184 m and 0.230 m), while for hc a higher value was obtained (0.282 m).

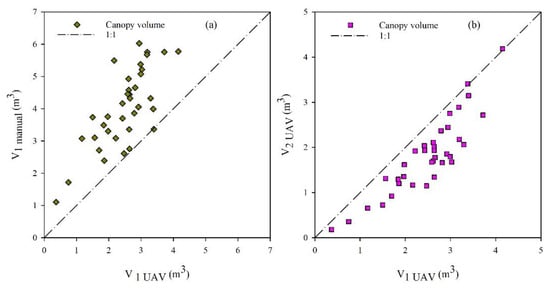

3.4. Comparison between the Volume Obtained by UAV and Manual Surveys

To validate the UAV method, the canopy volumes obtained by applying Equation (5) to the UAV measurements (V1,UAV) and to the manual measurements (V1,manual) were compared. A good Pearson correlation coefficient (R = 0.752) and an RMSE of 1.130 m³ were obtained. As shown in Figure 10a, the UAV data were lower than the manually acquired data.

Figure 10.

The black dotted line represents a 1:1 ratio; (a) comparison of the canopy volume calculated from UAV using the method 1 V1,UAV and the manual measurements V1,manual; and (b) comparison of the canopy volume calculated from UAV using method 1 V1,UAV and method 2 V2,UAV.

In Figure 10b, the canopy volume values obtained from UAV using Equation (5) (V1,UAV) and from DSMcanopy (V2,UAV) were compared. As expected, the data calculated using method 2 were systematically lower than those obtained with method 1, which assimilates the canopy to a cylinder.

3.5. Comparison between Geometrical Characteristics and Canopy Volumes between the Tree Densities

The comparison between UAV and manual measurements for geometrical characteristics allows an analysis of the data for different tree densities, showing that for 625 trees/ha, the Pearson correlation coefficient was very high for htree (R = 0.841) and high for Rc (R = 0.765) and hc (R = 0.508), but low and negative for htrunk (R = −0.117); low values were obtained for RMSE, i.e., RMSE = 0.130 m, RMSE = 0.281 m, RMSE = 0.186 m, RMSE = 0.208 m for Rc, hc, htree, and htrunk respectively. For 1250 trees/ha, the Pearson correlation coefficient was lower for all the characteristics Rc, hc, htree, and htrunk (R = 0.545, R = 0.348, R = 0.340, R = 0.245); the RMSE was low for Rc (RMSE = 0.099 m) and not high for the other parameters, i.e., RMSE = 0.283 m, RMSE = 0.267 m, RMSE = 0.155 m for hc, htree, and htrunk, respectively.

The canopy volumes calculated from UAV and manual measurements were also analysed by planting density. In this case, a high Pearson correlation coefficient (R = 0.805) was found for a density of 625 trees/ha and a moderate one (R = 0.610) for a density of 1250 trees/ha. The RMSE was 1.630 m³ for a density of 625 trees/ha and 1.836 m³ for a density of 1250 trees/ha.

4. Discussion

This study has presented a UAV image analysis method for accurate and efficient determination of canopy size and volume of hazelnut trees and evaluated its accuracy against field measurements. The correlation values were always positive, indicating a fair general agreement among the geometric characteristics in the description of the structure of the canopy. The geometrical parameter most correlated with the manual one was htree (R = 0.841) followed by Rc (R = 0.765) and hc (R = 0.508), in agreement with what was measured by [5]. These results are better than those obtained for blueberry bushes, where the canopy size derived from imagery only achieved a moderate correlation with manual measurements, mainly due to the occlusion of the canopy by the bush canopy [42]. The UAV method allows for reaching a high level of detail for every hazelnut tree reconstruction, in agreement with what was obtained for almond trees [20].

According to the results reported by [42] for Citrus reticulata, trees were easily obscured by the branches and leaves of adjacent trees during UAV tilt photogrammetry, resulting in the occlusion of the lower part of the trees. This situation can explain the lack of correlation for the parameter htrunk reported between UAV and manual measurements in this study. This problem could be overcome by using, e.g., a multiparameter sensor mounted on an agricultural tractor, as already performed in intensive olive orchards by [43].

The reconstruction of the experimental site in a 3D model confirmed the suitability of using UAVs for monitoring the canopy characteristics of hazelnut orchards. The elevation information obtained from the point cloud allowed for better recognition of the canopy characteristics, excluding pixels representing the ground and incorrectly classified as belonging to the canopy from the NDVI map. These results agree with those of [18], who reported that the use of the NDVI index for tree canopy delineation in olives was not effective. Although adult olive trees could be classified, tree canopy delineation was not possible because of the differing shape of the canopy, the mix with other types of vegetation and/or even the trees’ shadows. In this paper, contrary to [6], the altimetric information of the canopy (DSMcanopy) was available because the point cloud was filtered into two point clouds, one for the canopy and one for the ground. In cases where it is not possible to know the difference between the canopy and the ground (for example, for extensive or heterogeneous slope areas), the intersections between the complete DSM (canopy + ground) and the NDVI map must be filtered using an elevation threshold (for example, the mean or minimum height of the trunk).

In agreement with [44], UAV photogrammetry made it possible to obtain suitable images of the trees (in this case, hazelnuts) quickly and conveniently. This research is evidence that, to successfully determine the canopy from an NDVI map, the soil below adult hazelnut trees should be completely free of grass to avoid interference. Regarding the determination of the canopy volume of trees with high plantation densities, the UAV method was affected by the planting density, with less accuracy as the number of trees increased and the distance between trees decreased. These results agree with those obtained for other species, such as olive trees [8], where the vegetation’s high density negatively affected the canopy volume estimation.

Volume mapping also creates the opportunity to design site-specific treatments adapted to the needs of the trees according to their size; in vineyards, applying such treatments with variable-rate sprayers has saved up to 58% of the application volume [45].

Photogrammetric reconstruction of crop canopies could be used to estimate canopy characteristics accurately. For example, DSMcanopy could serve as a base map in which the evident heterogeneity in canopy vigor is quantified with the proposed algorithm.

Numerous studies, such as [46], have highlighted the importance of monitoring crop variability by applying precision agriculture principles. However, while much progress has been made on crops such as vines and olives, many aspects of hazelnut cultivation remain to be investigated. This work, together with [6,31], could represent a first step towards improved knowledge of hazelnut cultivation.

In this paper, geometric characteristics were determined for two planting situations. Intensive plantings have become widespread in recent years as a way to save on agricultural inputs, including water and fertilizers. Determining the relationship between light, temperature, water, and vegetative development is a challenge for the future. Different planting densities can affect the light, temperature, heat, and enzyme activity of assimilated metabolism at different positions in the canopy [47,48]. In addition, dense planting seems to increase the size of the trees. Studies on other crops (such as olives, cotton, and maize) have also reported that higher planting densities can reduce water evaporation and increase water consumption in the field [48,49]. Moreover, a reasonably high planting density could affect the fruit quality [9]. Thus, in the future, the irrigation rate could be investigated in relation to higher planting density and the growth of the canopy.

5. Conclusions

This study revealed that the hazelnut canopy size and volume derived by the UAV method were close to those obtained via manual delineation and field measurement. The UAV method could replace field measurement to achieve significant labour savings. Moreover, using the UAV method, a large amount of information can be extracted (tree height; volume, thickness, and width of the canopy), whereas traditional on-ground observation of the same field would require much more time and effort.

In addition, this study showed high correlations between the UAV and manual measurements of the main plant characteristics, such as canopy volume.
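The agreement between the two sets of measurements was assessed with the Pearson correlation coefficient and the root mean square error (RMSE). The following minimal sketch shows how both statistics can be computed for paired measurements; the function names and the sample values are hypothetical and are not data from the study.

```python
import numpy as np


def pearson_r(x, y):
    """Pearson correlation coefficient between two paired samples."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    xd = x - x.mean()
    yd = y - y.mean()
    return float((xd * yd).sum() / np.sqrt((xd ** 2).sum() * (yd ** 2).sum()))


def rmse(x, y):
    """Root mean square error between two paired samples."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    return float(np.sqrt(np.mean((x - y) ** 2)))


# Made-up paired tree heights in metres (manual vs. UAV), for illustration only.
manual = [3.10, 2.85, 3.40, 2.60, 3.05]
uav = [3.02, 2.90, 3.31, 2.55, 3.12]
print(f"r = {pearson_r(manual, uav):.3f}, RMSE = {rmse(manual, uav):.3f} m")
```

The two statistics are complementary: a high correlation shows that the UAV values track the manual ones, while the RMSE expresses the typical size of the disagreement in the units of the measurement.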

Finally, the present study has developed two methods for calculating the volume of a hazelnut canopy. In the first method, the canopy is approximated as a cylinder, and the resulting volumes are well correlated with the manual ones. In the second, the actual shape of the canopy is taken into account; this method therefore provides more precise information for the management of the tree, e.g., the intensity of annual pruning or vegetative growth.
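The difference between the two approaches can be illustrated with a short sketch. It is written under assumptions that should be read as such: the cylinder is taken to have radius Rc and height hc, and the shape-based estimate is taken to be the sum of per-pixel canopy thickness multiplied by the pixel area. The exact formulation used in the study may differ, and the function names and sample values are hypothetical.

```python
import math

import numpy as np


def cylinder_volume(rc, hc):
    """Canopy volume (m^3) when the canopy is approximated as a cylinder
    of radius rc (m) and height hc (m)."""
    return math.pi * rc ** 2 * hc


def raster_volume(thickness, pixel_size):
    """Canopy volume (m^3) from a raster of per-pixel canopy thickness (m).

    Each pixel contributes thickness * pixel_size**2. Negative values are
    clipped to zero and NaN (non-canopy) pixels are ignored.
    """
    thickness = np.clip(np.asarray(thickness, dtype=float), 0.0, None)
    return float(np.nansum(thickness) * pixel_size ** 2)


# Made-up example: a 2 x 2 thickness raster with 0.5 m pixels.
thickness = [[1.0, 2.0],
             [0.0, np.nan]]
print(cylinder_volume(1.0, 2.0))
print(raster_volume(thickness, 0.5))
```

The cylinder needs only two numbers per tree, whereas the raster sum follows the actual canopy surface and therefore responds to local changes such as pruning on one side of the tree.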

The results presented here could benefit growers and the scientific community working on management practices in hazelnut orchards. For example, such methods can be crucial when deciding on variable-rate applications of chemicals or irrigation.

Supplementary Materials

The following supporting information can be downloaded from: https://www.mdpi.com/article/10.3390/rs15020541/s1, Table S1: Values of geometric characteristics (Rc, hc, htree and htrunk) obtained from the UAV survey and from manual measurements; Table S2: Canopy volume obtained from UAV survey and manual measurements.

Author Contributions

Conceptualisation, A.V. and D.F.; methodology, A.V. and D.F.; software, A.V. and R.B.; validation, A.V., D.F. and R.B.; formal analysis, A.V. and D.F.; investigation, A.V., C.T. and D.F.; resources, A.V. and D.F.; data curation, A.V., C.T., R.B., D.F.; writing—original draft preparation, A.V. and D.F.; writing—review and editing, A.V. and D.F.; visualisation, A.V. and D.F.; supervision, A.V. and D.F.; project administration, A.V. and D.F.; funding acquisition, A.V. and D.F. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by “Ricerca di Base 2020, grant code VINRICBASE2020”.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Portarena, S.; Gavrichkova, O.; Brugnoli, E.; Battistelli, A.; Proietti, S.; Moscatello, S.; Famiani, F.; Tombesi, S.; Zadra, C.; Farinelli, D. Carbon allocation strategies and water uptake in young grafted and own-rooted hazelnut (Corylus avellana L.) cultivars. Tree Physiol. 2022, 42, 939–957.

- Pacchiarelli, A.; Priori, S.; Chiti, T.; Silvestri, C.; Cristofori, V. Carbon Sequestration of Hazelnut Orchards in Central Italy. SSRN 2022, 333, 107955.

- Beyhan, N.; Marangoz, D. An investigation of the relationship between reproductive growth and yield loss in hazelnut. Sci. Hortic. 2007, 113, 208–215.

- Fideghelli, C.; De Salvador, F.R. World hazelnut situation and perspective. Acta Hortic. 2009, 845, 39–52.

- Sun, G.; Wang, X.; Ding, Y.; Lu, W.; Sun, Y. Remote Measurement of Apple Orchard Canopy Information Using Unmanned Aerial Vehicle Photogrammetry. Agronomy 2019, 9, 774.

- Altieri, G.; Maffia, A.; Pastore, V.; Amato, M.; Celano, G. Use of high-resolution multispectral UAVs to calculate projected ground area in Corylus avellana L. tree orchard. Sensors 2022, 22, 7103.

- Mu, Y.; Fujii, Y.; Takata, D.; Zheng, B.Y.; Noshita, K.; Honda, K.; Ninomiya, S.; Guo, W. Characterization of peach tree crown by using high-resolution images from an unmanned aerial vehicle. Hortic. Res. 2018, 5, 74.

- Anifantis, A.S.; Camposeo, S.; Vivaldi, G.A.; Santoro, F.; Pascuzzi, S. Comparison of UAV photogrammetry and 3D modeling techniques with other currently used methods for estimation of the tree row volume of a super-high-density olive orchard. Agriculture 2019, 9, 233.

- Pannico, A.; Cirillo, C.; Giaccone, M.; Scognamiglio, P.; Romano, R.; Caporaso, N.; Sacchi, R.; Basile, B. Fruit position within the canopy affects kernel lipid composition of hazelnuts. J. Sci. Food Agric. 2017, 97, 4790–4799.

- Dewi, C.; Chen, R.-C. Decision making based on IoT data collection for precision agriculture. Stud. Comput. Intell. 2019, 830, 31–42.

- Vergni, L.; Vinci, A.; Todisco, F. Effectiveness of the new standardized deficit distance index and other meteorological indices in the assessment of agricultural drought impacts in central Italy. J. Hydrol. 2021, 603, 126986.

- Park, S.; Ryu, D.; Fuentes, S.; Chung, H.; O’Connell, M.; Kim, J. Mapping Very-High-Resolution Evapotranspiration from Unmanned Aerial Vehicle (UAV) Imagery. ISPRS Int. J. Geo-Inf. 2021, 10, 211.

- Narvaez, F.Y.; Reina, G.; Torres-Torriti, M.; Kantor, G.; Cheein, F.A. A Survey of Ranging and Imaging Techniques for Precision Agriculture Phenotyping. IEEE ASME Trans. Mechatron. 2017, 22, 2428–2439.

- Caruso, G.; Palai, G.; D’Onofrio, C.; Marra, F.P.; Gucci, R.; Caruso, T. Detecting biophysical and geometrical characteristics of the canopy of three olive cultivars in hedgerow planting systems using an UAV and VIS-NIR cameras. Acta Hortic. 2021, 1314, 269–274.

- Liu, J.; Xiang, J.; Jin, Y.; Liu, R.; Yan, J.; Wang, L. Boost Precision Agriculture with Unmanned Aerial Vehicle Remote Sensing and Edge Intelligence: A Survey. Remote Sens. 2021, 13, 4387.

- Velusamy, P.; Rajendran, S.; Mahendran, R.K.; Naseer, S.; Shafiq, M.; Choi, J.-G. Unmanned Aerial Vehicles (UAV) in Precision Agriculture: Applications and Challenges. Energies 2022, 15, 217.

- Zhang, C.; Valente, J.; Kooistra, L.; Guo, L.; Wang, W. Orchard management with small unmanned aerial vehicles: A survey of sensing and analysis approaches. Precis. Agric. 2021, 22, 2007–2052.

- Stateras, D.; Kalivas, D. Assessment of Olive Tree Canopy Characteristics and Yield Forecast Model Using High Resolution UAV Imagery. Agriculture 2020, 10, 385.

- Torres-Sánchez, J.; de Castro, A.I.; Peña, J.M.; Jiménez-Brenes, F.M.; Arquero, O.; Lovera, M.; López-Granados, F. Mapping the 3D structure of almond trees using UAV acquired photogrammetric point clouds and object-based image analysis. Biosyst. Eng. 2018, 176, 172–184.

- Hobart, M.; Pflanz, M.; Weltzien, C.; Schirrmann, M. Growth Height Determination of Tree Walls for Precise Monitoring in Apple Fruit Production Using UAV Photogrammetry. Remote Sens. 2020, 12, 1656.

- Ishida, T.; Kurihara, J.; Viray, F.A.; Namuco, S.B.; Paringit, E.C.; Perez, G.J.; Takahashi, Y.; Marciano, J.J., Jr. A novel approach for vegetation classification using UAV-based hyperspectral imaging. Comput. Electron. Agric. 2018, 144, 80–85.

- Comba, L.; Biglia, A.; Ricauda Aimonino, D.; Gay, P. Unsupervised detection of vineyards by 3D point-cloud UAV photogrammetry for precision agriculture. Comput. Electron. Agric. 2018, 155, 84–95.

- Pagliai, A.; Ammoniaci, M.; Sarri, D.; Lisci, R.; Perria, R.; Vieri, M.; D’Arcangelo, M.; Storchi, P.; Kartsiotis, S.-P. Comparison of Aerial and Ground 3D Point Clouds for Canopy Size Assessment in Precision Viticulture. Remote Sens. 2022, 14, 1145.

- Blanco, V.; Blaya Ros, P.J.; Castillo, C.; Soto, F.; Torres, R.; Domingo, R. Potential of UAS-Based Remote Sensing for Estimating Tree Water Status and Yield in Sweet Cherry Trees. Remote Sens. 2020, 12, 2359.

- Gallardo-Salazar, J.L.; Pompa-García, M. Detecting individual tree attributes and multispectral indices using unmanned aerial vehicles: Applications in a pine clonal orchard. Remote Sens. 2020, 12, 4144.

- Farinelli, D.; Luciani, E.; Villa, F.; Manzo, A.; Tombesi, S. First selection of non-suckering rootstocks for hazelnut cultivars. Acta Hortic. 2022, 1346, 699–708.

- Franco, S. Use of remote sensing to evaluate the spatial distribution of hazelnut cultivation: Results of a study performed in an Italian production area. In Proceedings of the IV International Symposium on Hazelnut, Ordu, Turkey, 30 July 1996; Volume 445, pp. 381–398.

- Reis, S.; Taşdemir, K. Identification of hazelnut fields using spectral and Gabor textural features. ISPRS J. Photogramm. Remote Sens. 2011, 66, 652–661.

- Sener, M.; Altintas, B.; Kurc, H.C. Planning and controlling of hazelnut production areas with the remote sensing techniques. J. Nat. Sci. 2013, 16, 16–23.

- Raparelli, E.; Lolletti, D. Research, innovation and development on Corylus avellana through the bibliometric approach. Int. J. Fruit Sci. 2020, 20 (Suppl. 3), S1280–S1296.

- Vinci, A.; Traini, C.; Farinelli, D.; Brigante, R. Assessment of the geometrical characteristics of hazelnut intensive orchard by an Unmanned Aerial Vehicle (UAV). In Proceedings of the 2022 IEEE Workshop on Metrology for Agriculture and Forestry (MetroAgriFor), Perugia, Italy, 3–5 November 2022; pp. 218–222.

- Farinelli, D.; Boco, M.; Tombesi, A. Influence of canopy density on fruit growth and flower formation. Acta Hortic. 2005, 686, 247–252.

- DJI P4 Multispectral User Manual v1.4. 2020. Available online: https://dl.djicdn.com/downloads/p4-multispectral/20190927/P4_Multispectral_User_Manual_v1.0_EN.pdf (accessed on 10 November 2022).

- Brigante, R.; Cencetti, C.; De Rosa, P.; Fredduzzi, A.; Radicioni, F.; Stoppini, A. Use of aerial multispectral images for spatial analysis of flooded riverbed-alluvial plain systems: The case study of the Paglia River (Central Italy). Geomat. Nat. Hazards Risk 2017, 8, 1126–1143.

- Agisoft LLC. Agisoft Metashape User Manual, Professional edition, version 1.6; Agisoft LLC: Saint Petersburg, Russia, 2020; p. 166. Available online: https://www.agisoft.com/pdf/metashape-pro_1_6_en.pdf (accessed on 22 January 2021).

- Vinci, A.; Todisco, F.; Brigante, R.; Mannocchi, F.; Radicioni, F. A smartphone camera for the structure from motion reconstruction for measuring soil surface variations and soil loss due to erosion. Hydrol. Res. 2017, 48, 673–685.

- Vinci, A.; Todisco, F.; Vergni, L.; Torri, D. A comparative evaluation of random roughness indices by rainfall simulator and photogrammetry. Catena 2020, 188, 104468.

- Vergni, L.; Vinci, A.; Todisco, F.; Santaga, F.S.; Vizzari, M. Comparing Sentinel-1, Sentinel-2, and Landsat-8 data in the early recognition of irrigated areas in central Italy. J. Agric. Eng. 2021, 52, 1265.

- Vergni, L.; Todisco, F.; Vinci, A. Setup and calibration of the rainfall simulator of the Masse experimental station for soil erosion studies. Catena 2018, 167, 448–455.

- Baiocchi, V.; Brigante, R.; Dominici, D.; Milone, M.V.; Mormile, M.; Radicioni, F. Automatic three-dimensional features extraction: The case study of L’Aquila for collapse identification after April 06, 2009 earthquake. Eur. J. Remote Sens. 2014, 47, 413–435.

- Brigante, R.; Radicioni, F. Use of multispectral sensors with high spatial resolution for territorial and environmental analysis. Geogr. Tech. 2014, 9, 9–20.

- Patrick, A.; Li, C. High Throughput Phenotyping of Blueberry Bush Morphological Traits Using Unmanned Aerial Systems. Remote Sens. 2017, 9, 1250.

- Assirelli, A.; Romano, E.; Bisaglia, C.; Lodolini, E.M.; Neri, D.; Brambilla, M. Canopy index evaluation for precision management in an intensive olive orchard. Sustainability 2021, 13, 8266.

- Qi, Y.; Dong, X.; Chen, P.; Lee, K.-H.; Lan, Y.; Lu, X.; Jia, R.; Deng, J.; Zhang, Y. Canopy Volume Extraction of Citrus reticulate Blanco cv. Shatangju Trees Using UAV Image-Based Point Cloud Deep Learning. Remote Sens. 2021, 13, 3437.

- Llorens Calveras, J.; Gil, E.; Llop Casamada, J.; Escolà, A. Variable rate dosing in precision viticulture: Use of electronic devices to improve application efficiency. Crop Prot. 2010, 29, 239–248.

- Comba, L.; Biglia, A.; Aimonino, D.R.; Barge, P.; Tortia, C.; Gay, P. 2D and 3D data fusion for crop monitoring in precision agriculture. In Proceedings of the 2019 IEEE International Workshop on Metrology for Agriculture and Forestry (MetroAgriFor), Portici, Italy, 24–26 October 2019; pp. 62–67.

- Lou, S.W.; Zhao, Q.; Gao, Y.G.; Zhang, J.S. The effect of different density to canopy microclimate and quality of cotton. Cotton Sci. 2010, 22, 260–266.

- Kaggwa-Asiimwe, R.; Andrade-Sanchez, P.; Wang, G. Plant architecture influences growth and yield response of upland cotton to population density. Field Crops Res. 2013, 145, 52–59.

- Antonietta, M.; Fanello, D.D.; Acciaresi, H.A.; Guiamet, J.J. Senescence and yield responses to plant density in stay green and earlier-senescing maize hybrids from Argentina. Field Crops Res. 2014, 155, 111–119.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).