1. Introduction

Light Detection and Ranging (LiDAR) sensors have been commercialized alongside other growing remote sensing technologies and now span all major scales and platforms: ground-based terrestrial laser scanners (TLS), vehicle-based mobile laser scanners (MLS) including hand-held devices, unmanned aerial system (UAS) laser scanners, manned airborne laser scanners (ALS), and spaceborne land elevation satellites. These platforms capture three-dimensional (3D) objects with centimeter- to millimeter-level accuracy [1,2,3,4]. Compared with the other major platforms, UAS are highly customizable and can carry several payloads during a single mission, such as LiDAR and red-green-blue (RGB) optical sensors, the latter commonly used for digital aerial photogrammetry (DAP) [5,6,7]. UAS-DAP provides multispectral information for LiDAR point clouds, as most LiDAR sensors operate in the infrared portion of the electromagnetic spectrum with a single channel (e.g., 1064 nm) [8]. Additionally, UAS-DAP is capable of generating point clouds through structure-from-motion (SfM) techniques [9]. Compared with UAS-LiDAR, UAS-DAP point clouds can only measure the top of the canopy; therefore, significant information loss occurs below the canopy. In addition, optical sensors are highly sensitive to light conditions, shadow, and occlusion effects [10]. Occlusion is one of the most fundamental problems with remotely sensed data. Its mechanisms are classified as absolute, geometric, and turbid occlusions [11]. Absolute occlusion is caused by solid objects, for example, rooftops, tree trunks, and branches. Geometric occlusion is caused by directional blocking due to the nadir or off-nadir positioning of imaging/scanning sensors. Finally, turbid occlusion is the least relevant in this context; it is caused by the medium through which light travels and, because these mechanisms are weather-dependent, it can be avoided. LiDAR sensors mainly suffer from absolute occlusion (e.g., tree trunks and branches); because of their laser penetration capabilities, they overcome geometric and turbid occlusions and therefore provide a better 3D volumetric representation (e.g., height, canopy dimensions, gaps, and biomass of trees and crops) than UAS-DAP [11,12,13].

Additionally, compared with manned ALS, UAS-LiDAR delivers far denser point clouds for low-cost, small-area projects with easy operation and faster post-processing, as deliverables are available within a few hours of the survey. Moreover, UAS-LiDAR offers unprecedented repeatability compared with expensive wide-area ALS surveys [5]. A UAS-LiDAR system integrated with Global Navigation Satellite System (GNSS) or Real-Time Kinematic (RTK) positioning and an Inertial Measurement Unit (IMU) transmits geolocated laser pulses and records the backscattered signal either as a full waveform or, more commonly, as a three-dimensional (3D) cloud of discrete laser measurements known as a point cloud [14]. UAS-LiDAR point clouds are geolocated with precise horizontal coordinates (x, y) and elevation (z) above mean sea level (MSL), with an average centimeter-level accuracy [4,5,15]. The strength of the backscattered LiDAR signal, referred to as intensity, is also provided in most LiDAR data. Laser returns from bare earth are referred to as ground points, and those off the terrain (OT), e.g., trees, plants, and buildings, are called non-ground points [16]. Nevertheless, point clouds generated by processing GNSS or RTK, IMU, and LiDAR datasets carry no prior discrimination between ground and non-ground points. To use point clouds for accuracy assessment or to produce Digital Elevation Models (DEM), Canopy Height Models (CHM), and Crop Surface Models (CSM), highly accurate ground point classification is the most important step in LiDAR data processing [15]. Manual quality control (QC) and ground classification have been found to be the most accurate, yet they are slow and labor-intensive. With the advent of UAS-LiDAR, more and more point clouds are available, and the demand for ground point classification is ever-increasing.
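To make concrete why ground classification precedes products such as CHM and CSM, the following minimal Python sketch normalizes non-ground points to height above ground once labels are available. It is an illustration only and not the processing chain used in this study: the function name, the `"ground"` label string, the 1 m cell size, and the use of the mean ground elevation per cell are all assumptions made for the example.

```python
from collections import defaultdict


def height_above_ground(points, labels, cell=1.0):
    """Return the height above local ground for every non-ground point.

    points: list of (x, y, z) tuples in a projected coordinate system.
    labels: list of strings, "ground" or anything else (non-ground).
    cell:   grid cell size in the same units as x and y (assumed value).

    The local ground level is taken as the mean z of the ground points
    falling in the same grid cell; None is returned for a non-ground
    point whose cell contains no ground point.
    """
    ground_sum = defaultdict(lambda: [0.0, 0])  # cell -> [sum of z, count]
    for (x, y, z), label in zip(points, labels):
        if label == "ground":
            key = (int(x // cell), int(y // cell))
            ground_sum[key][0] += z
            ground_sum[key][1] += 1

    heights = []
    for (x, y, z), label in zip(points, labels):
        if label == "ground":
            continue
        key = (int(x // cell), int(y // cell))
        if key in ground_sum:
            total, count = ground_sum[key]
            heights.append(z - total / count)
        else:
            heights.append(None)  # no ground reference in this cell
    return heights
```

Any ground point mislabeled as vegetation, or vice versa, shifts the local ground level in this calculation, which is one way classification errors propagate into plant height estimates.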

1.1. Related Work

For ground classification, elevation (z) is widely used information that accounts for anything elevated above the ground and therefore forms the basis of ground classification algorithms [17]. Based on point elevation (z), the algorithms fall into four classes [18]: segmentation/clustering [19], morphology [20], Triangulated Irregular Network (TIN) [21], and interpolation [22]. Over the last two decades, ALS has been extensively adopted in the earth sciences, particularly for investigating topography and forestry [23,24]. In forestry and topography, most research was dedicated to separating ground points from non-ground points to develop DEM, CHM, and CSM at state or national scales [24]. Compared with traditional remote sensing methods and optical imagery, ALS offers unprecedented topographic detail and precision over complex terrain. Nevertheless, the ALS point density, expressed in points per square meter (pts/m²), varies greatly with the sensor’s operational parameters such as flying altitude, pulse repetition rate (PRR), and pulse penetration rate (PPR) [25]. In a typical ALS survey, point density ranges from 1 to 15 pts/m², and the latest ALS systems can achieve a maximum of 204 pts/m² [26]. Because ALS has been widely adopted for decades, almost all ground classification algorithms were developed using low-density, evenly distributed point clouds with an average of 2 pts/m² [27]. Compared with ALS, UAS-LiDAR point densities have increased several-fold in recent times, e.g., reaching 1630 pts/m² with an average of 335 pts/m² [12]. Furthermore, UAS-LiDAR sensors are classified by cost into high-end, high-accuracy sensors and affordable, low-cost sensors of lower accuracy, which deliver point clouds of varying precision for objects with complex geometries [28]. Consequently, UAS-LiDAR data contain richer geometric information than low-density ALS data [5,29].
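The point densities quoted above are counts of returns per unit of horizontal area. As a rough illustration of how such figures can be derived from a point cloud, the sketch below bins points into square cells and reports the mean and maximum density over occupied cells. The function name, the 1 m cell size, and the choice to ignore empty cells are assumptions for the example; the cited studies may have computed their densities differently.

```python
def point_density(points, cell=1.0):
    """Return (mean, maximum) point density in pts/m^2 over occupied cells.

    points: iterable of (x, y, ...) tuples with x and y in meters.
    cell:   edge length of the square grid cell in meters (assumed value).
    """
    counts = {}
    for x, y, *_ in points:
        key = (int(x // cell), int(y // cell))
        counts[key] = counts.get(key, 0) + 1
    if not counts:
        raise ValueError("point cloud is empty")

    area = cell * cell  # m^2 per cell
    densities = [n / area for n in counts.values()]
    return sum(densities) / len(densities), max(densities)
```

For example, `point_density([(0.1, 0.1, 0), (0.5, 0.5, 0), (0.9, 0.2, 0), (1.5, 0.5, 0)])` places three points in one cell and one in the next, giving a mean of 2.0 and a maximum of 3.0 pts/m².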

Several recent studies have applied traditional ground classification algorithms to UAS-based point clouds. Zhou et al. (2022) used UAS-DAP and UAS-LiDAR point clouds to classify ground and non-ground points with the CSF algorithm in urban environments [10]. Moudrý et al. (2019) classified ground and non-ground points of a post-mining site using Riegl LMS-Q780 full-waveform airborne LiDAR and UAS-DAP point clouds with the ArcGIS 10.4.1 software application (ESRI, Redlands, CA, USA) [30]. In another study, Brede et al. (2019) estimated tree volumes using UAS-LiDAR in forest environments, where the ground points were manually labeled using the CloudCompare 2.10 software application (http://cloudcompare.org/ (accessed on 4 January 2023)) [31]. In mangrove forest monitoring, Navarro et al. (2020) used the PMF algorithm to classify ground points of UAS-DAP point clouds [32]. Zeybek and Şanlıoğlu (2019) used open-source and commercial software packages to assess ground filtering methods using UAS-DAP point clouds in forested landslide-monitoring areas [33]. These studies highlight several important findings. First, although a wide variety of ground classification algorithms is available, most authors used a single algorithm without justifying their choice [10,30]. Some authors preferred a manual approach over an algorithm-based approach to ensure high accuracy of the classified ground points [31]. Zeybek and Şanlıoğlu’s (2019) study, by contrast, was based on an inter-comparison of four different ground classification algorithms, but it is relevant to UAS-DAP ground point classification rather than to UAS-LiDAR point clouds [32]. In addition, their research did not evaluate the transferability of ground classification algorithms to other test sites. Finally, a comparison of traditional ground classification algorithms with modern deep learning methods is lacking in these studies [30,31,32,33].

In agricultural environments, a rigorous evaluation of ground classification using UAS-LiDAR point clouds is still pending. Yet the accurate classification of UAS-LiDAR ground points is an important processing step for monitoring plant height, stem size, crown area, and crown density during the growth stages. UAS flights are frequent throughout the growing season, resulting in massive point clouds. Moreover, across the UAS-LiDAR surveys of a growing season, the overall crop structure and point densities do not remain the same. Hence, the automated and precise classification of ground points in UAS-LiDAR data is in great demand among stakeholders in agricultural domains. In the past, the difficulty of ground point classification was considered a major issue compromising the accuracy assessment of grassland structural traits [12,34].

1.2. Contributions

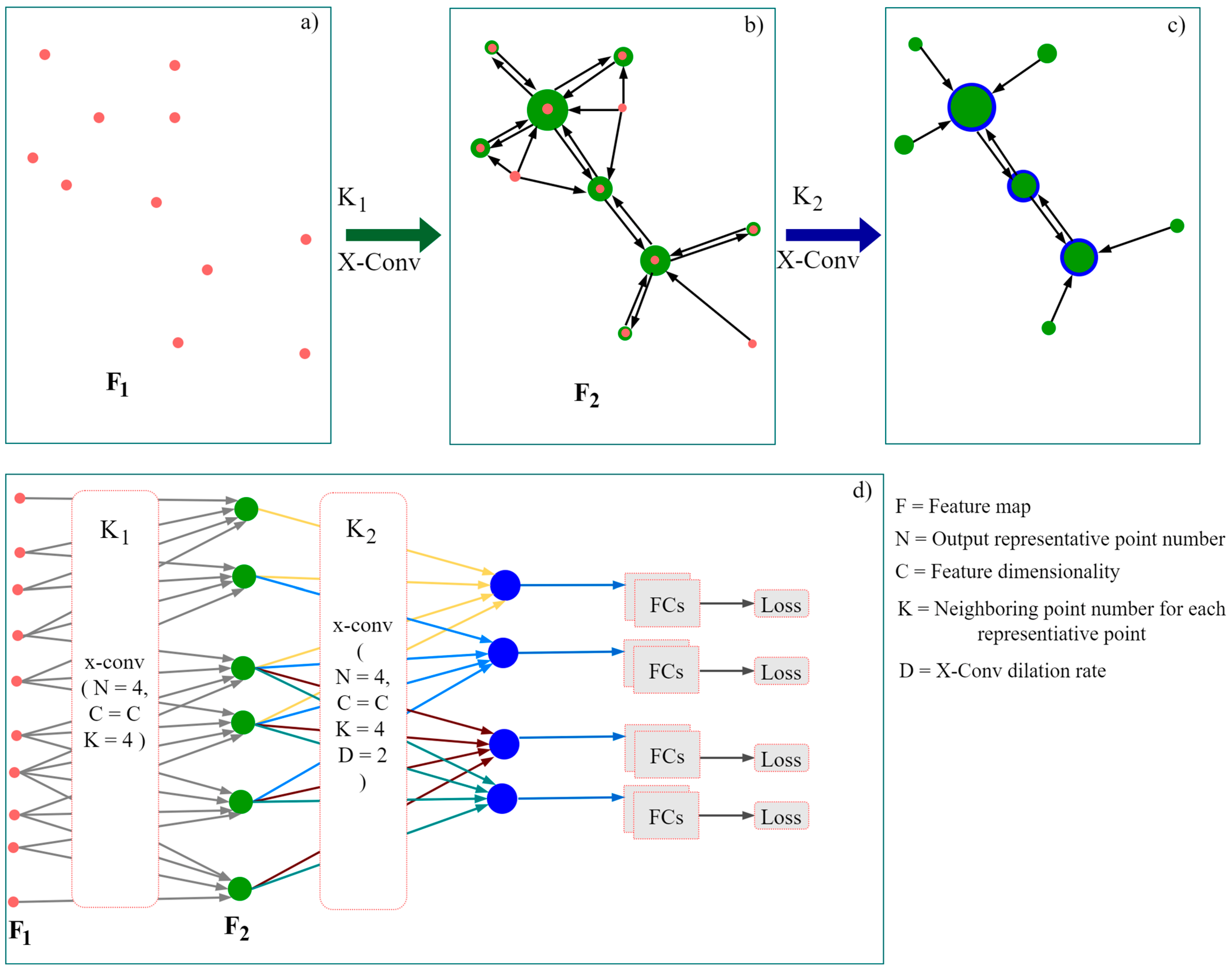

To the best of our knowledge, rigorous scientific investigations of ground point classification using UAS-LiDAR point clouds in agricultural environments have not yet been conducted. The overarching goal of the present study is to reach a robust and automated solution for ground classification using UAS-LiDAR point clouds acquired in agricultural fields. Our objective is to assess the performance of the frequently used ground filter algorithms [17,20,22,35], namely (a) Cloth Simulation Filter (CSF), (b) Progressive Morphological Filter (PMF), and (c) Multiscale Curvature Classification (MCC), all available in the open-source R programming environment [36], using an approach similar to that of Zeybek and Şanlıoğlu (2019) [32]. Additionally, the ground classification algorithms implemented in Environmental Systems Research Institute (ESRI) ArcGIS need to be assessed because ArcGIS is a software package frequently used in academia and industry [17,27]. Furthermore, deep learning (DL) has proven to be robust in point cloud segmentation and classification using convolutional neural networks (CNNs) [37,38]. In the context of ground classification, we aim to use the PointCNN model [39] as a modern alternative to traditional algorithms for classifying ground points in UAS-LiDAR point clouds [16,40]. Finally, we aim to assess the transferability potential of the traditional ground classification algorithms along with DL methods, which extends the approach proposed by Zeybek and Şanlıoğlu (2019) [32].

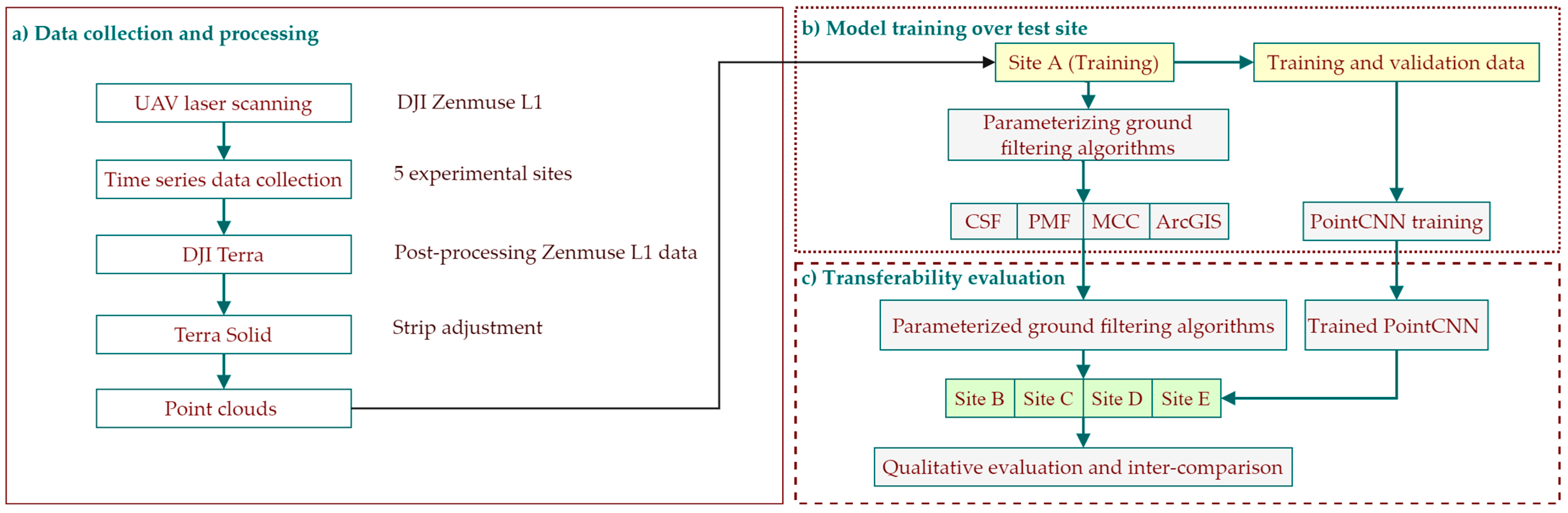

The rest of the paper is structured as follows. The study design, along with the description of the representative agricultural plots, data collection, and post-processing, is explained in Section 2. The methodology, covering the ground filtering algorithms, the PointCNN framework, and the mathematical formulation of the error metrics, is described in Section 3. Section 4 is dedicated to the assessment of the results through qualitative and quantitative analyses, and Section 5 discusses the results. Finally, Section 6 concludes the study by highlighting the significant findings.

5. Discussion

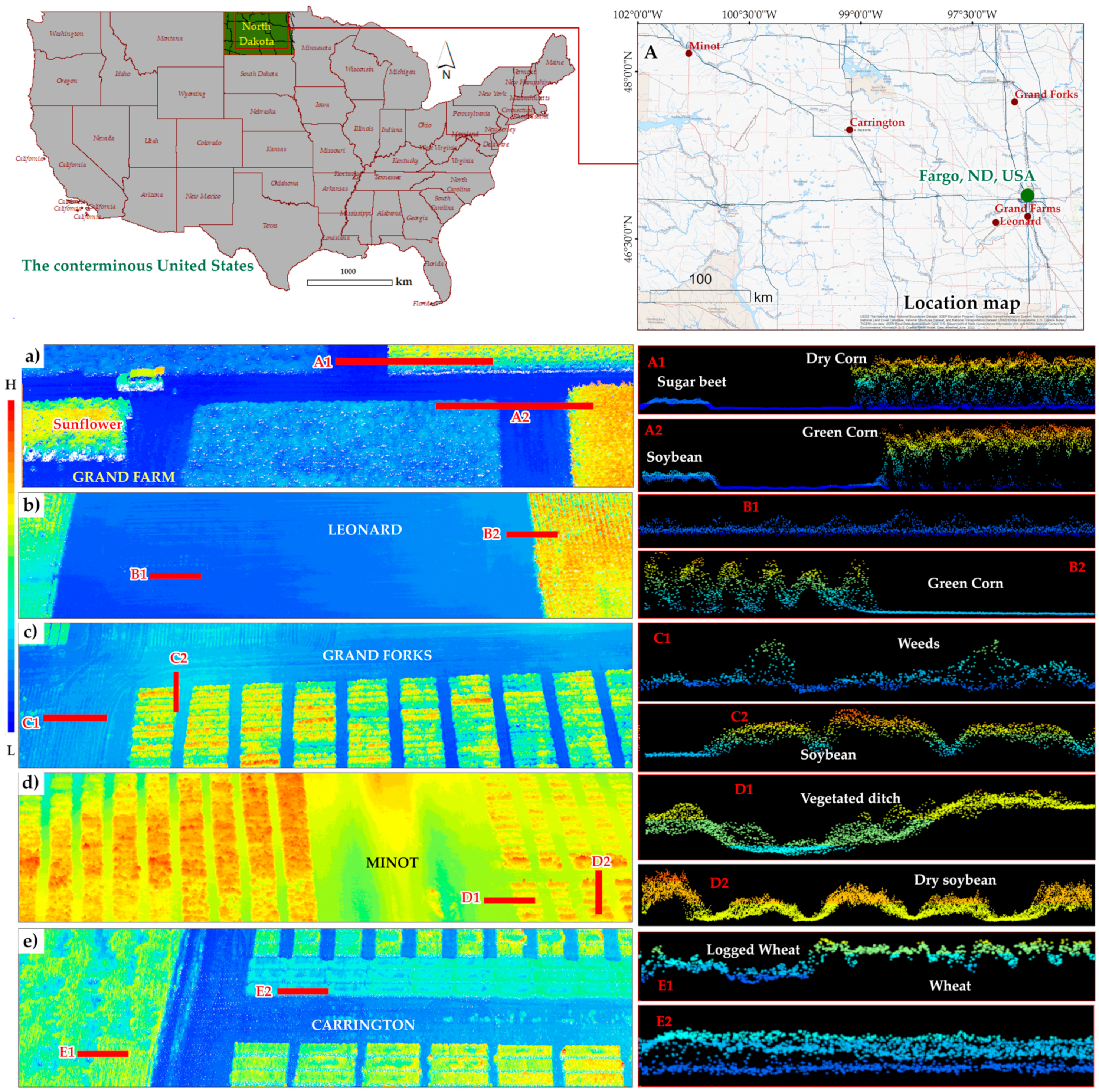

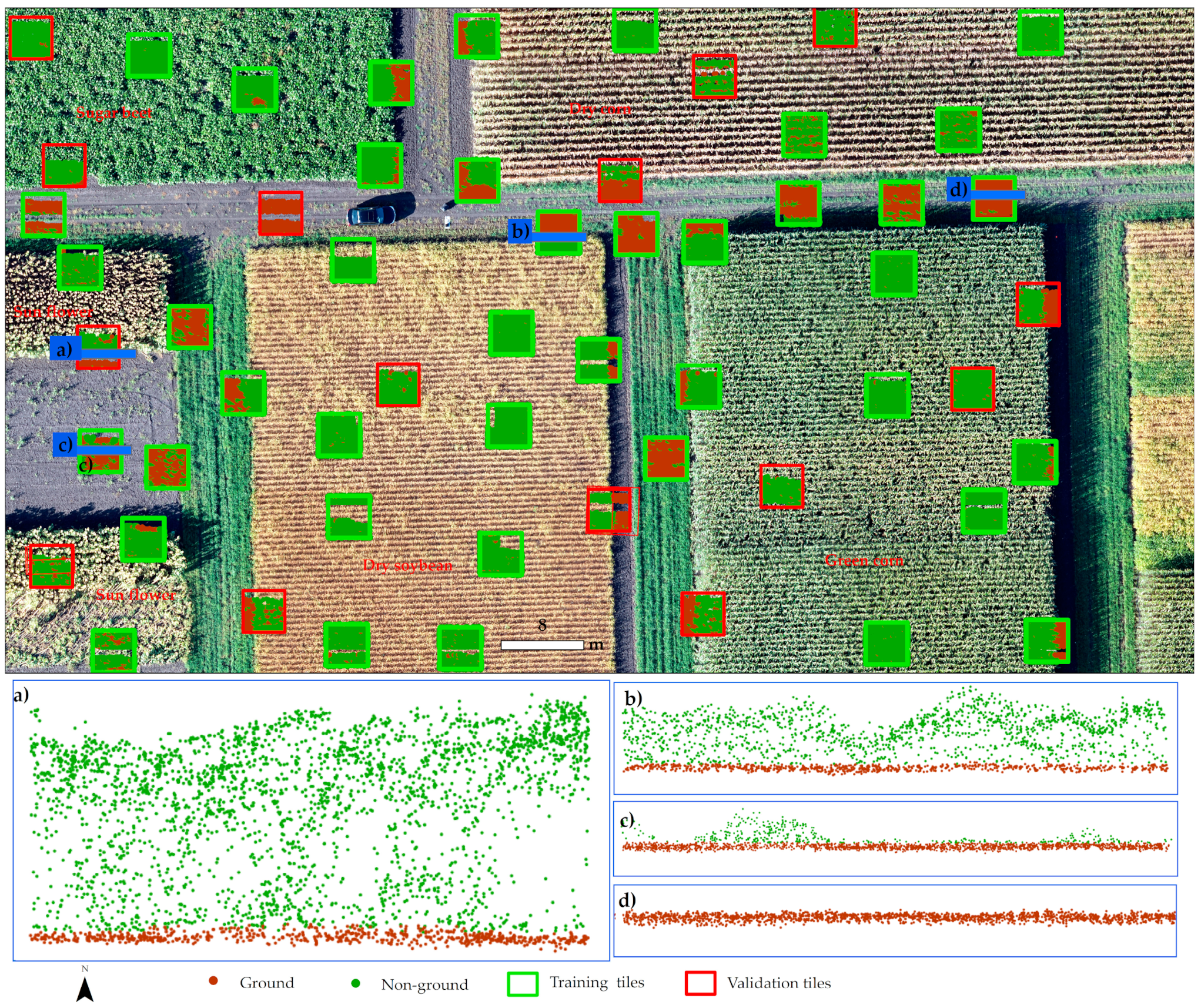

This section discusses the overall performance of the ground classification algorithms and the PointCNN model, shedding light on several important aspects of the methods in relation to the quality of the UAS-LiDAR data acquired under different crop environments (Figure 2).

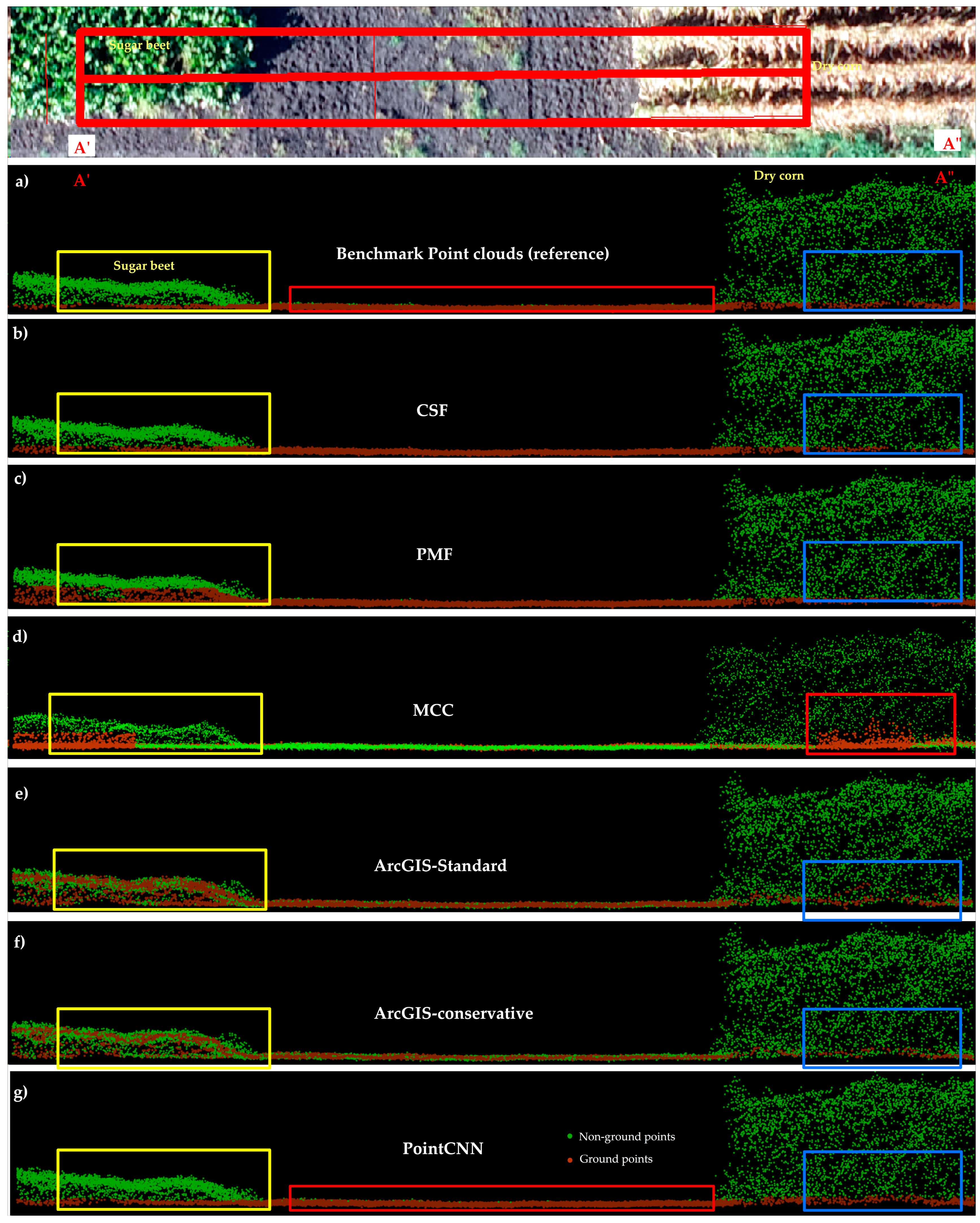

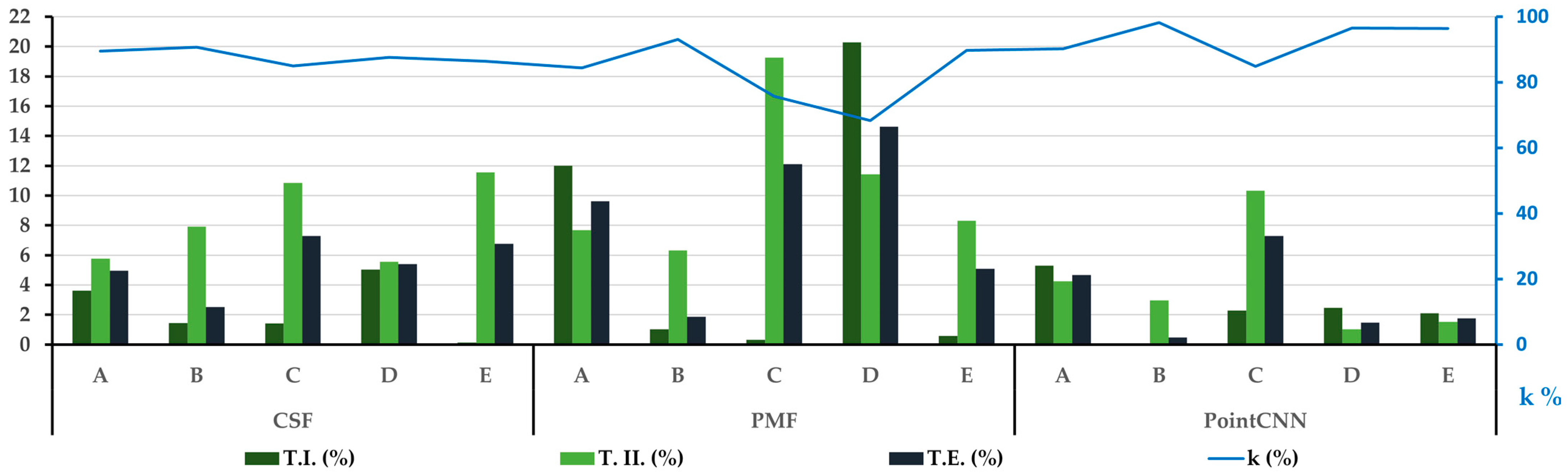

Figure 11 and Table 7 show that, overall, PointCNN outperforms the traditional algorithms in the classification and transferability of ground points across the five representative agricultural sites. However, the quantitative results for the ground classification algorithms and PointCNN, expressed by the error metrics T.I., T.II., T.E., and k, were found to be significantly different for training Site A, as given in Table 4, Table 5 and Table 6, compared with test sites B, D, and E, given in Table 7.
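For readers who want to reproduce metrics of this kind, the sketch below computes Type I error, Type II error, total error, and Cohen’s kappa from reference and predicted ground labels. It assumes that T.I., T.II., T.E., and k follow the definitions commonly used in the ground filtering literature (Type I: ground points rejected as non-ground; Type II: non-ground points accepted as ground); the formulation in Section 3 is not reproduced here, so the function name and these definitions should be read as assumptions rather than as the exact equations of this study.

```python
def ground_error_metrics(reference, predicted):
    """Return (type_i, type_ii, total_error, kappa) for binary ground labels.

    reference, predicted: equal-length lists of booleans, True = ground.
    Assumed definitions (not taken from this paper's Section 3):
      a = ground kept as ground        b = ground rejected (Type I)
      c = non-ground accepted (Type II)  d = non-ground kept as non-ground
    """
    if len(reference) != len(predicted) or len(reference) == 0:
        raise ValueError("label lists must be non-empty and of equal length")

    a = b = c = d = 0
    for ref, pred in zip(reference, predicted):
        if ref and pred:
            a += 1
        elif ref and not pred:
            b += 1
        elif not ref and pred:
            c += 1
        else:
            d += 1
    if a + b == 0 or c + d == 0:
        raise ValueError("reference must contain both ground and non-ground")

    n = a + b + c + d
    type_i = b / (a + b)        # omission of ground points
    type_ii = c / (c + d)       # commission of non-ground points
    total_error = (b + c) / n

    observed = (a + d) / n      # observed agreement
    expected = ((a + b) * (a + c) + (c + d) * (b + d)) / (n * n)  # chance agreement
    kappa = (observed - expected) / (1 - expected)
    return type_i, type_ii, total_error, kappa
```

With 100 reference ground points of which 20 are rejected, and 100 reference non-ground points of which 10 are accepted as ground, the function returns a Type I error of 0.2, a Type II error of 0.1, a total error of 0.15, and a kappa of 0.7.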

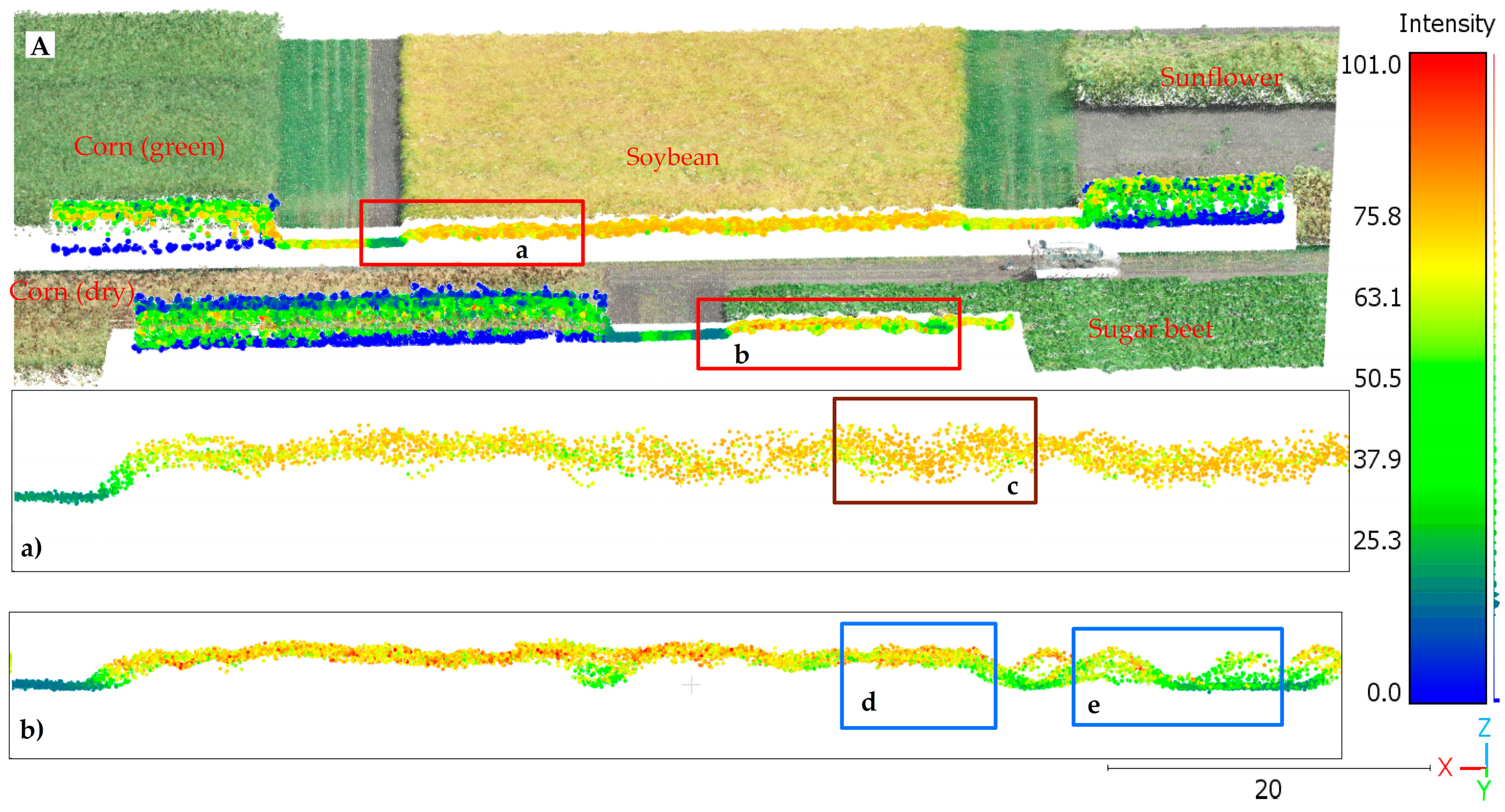

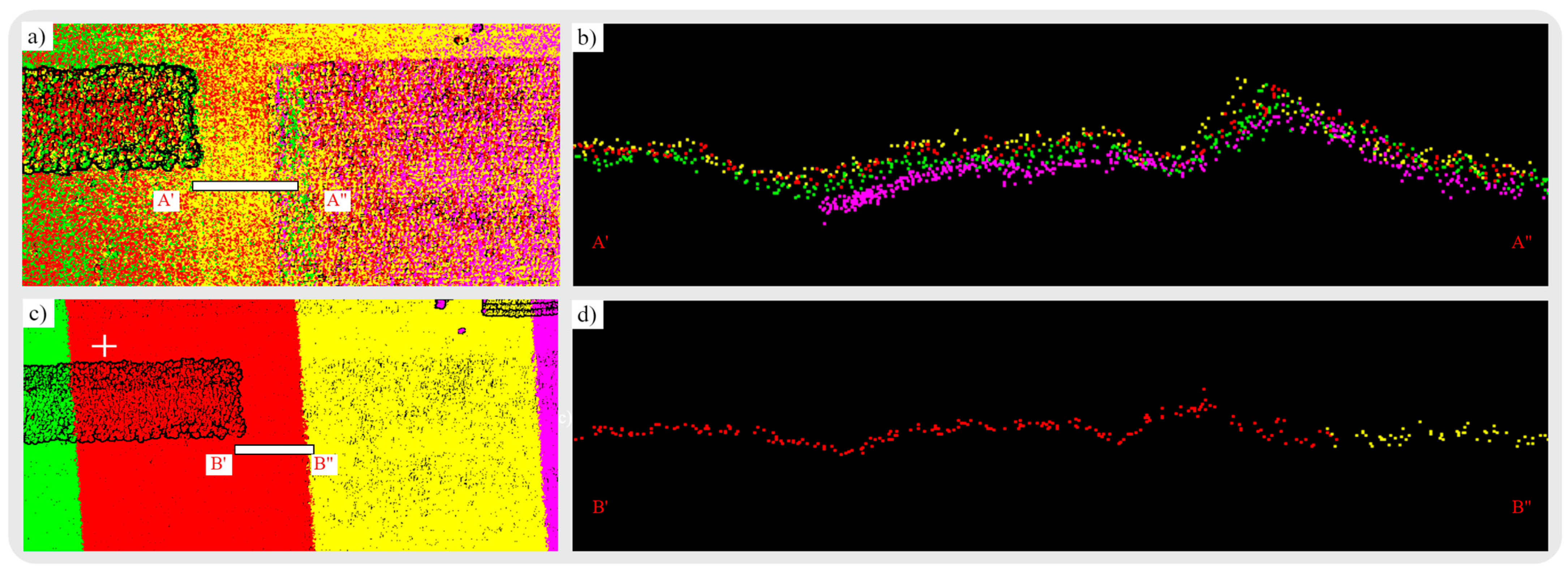

We found that all methods depend on how coherently ground and non-ground features are represented in the UAS-LiDAR data. For illustration, training Site A presents a more diverse crop environment with five different crops (see Section 2.1.1), and dry soybean produces a complex backscattered UAS-LiDAR signal, as shown in Figure 12c. Past studies showed that low-cost UAS-LiDAR sensors suffer from point localization uncertainty over complex surfaces [5,29]. Such a complex backscattered signal makes it challenging to differentiate ground from non-ground points over certain crop environments (Figure 12c, brown box). The problem is associated with sensor capabilities, however, and high-end, high-cost UAS-LiDAR sensors (e.g., http://www.riegl.com/products/unmanned-scanning/riegl-vux-1uav (accessed on 4 January 2023)) therefore offer a better solution [5]. The crops whose point clouds present reasonable patterns, i.e., spatially local correlation [39], were classified correctly (Figure 10 and Figure 12d,e). Because of the dry soybean in Site A (Figure 12c), the overall accuracy of CSF, PMF, and PointCNN is lower for Site A than for test sites B, C, D, and E (see Figure 11 and Table 7).

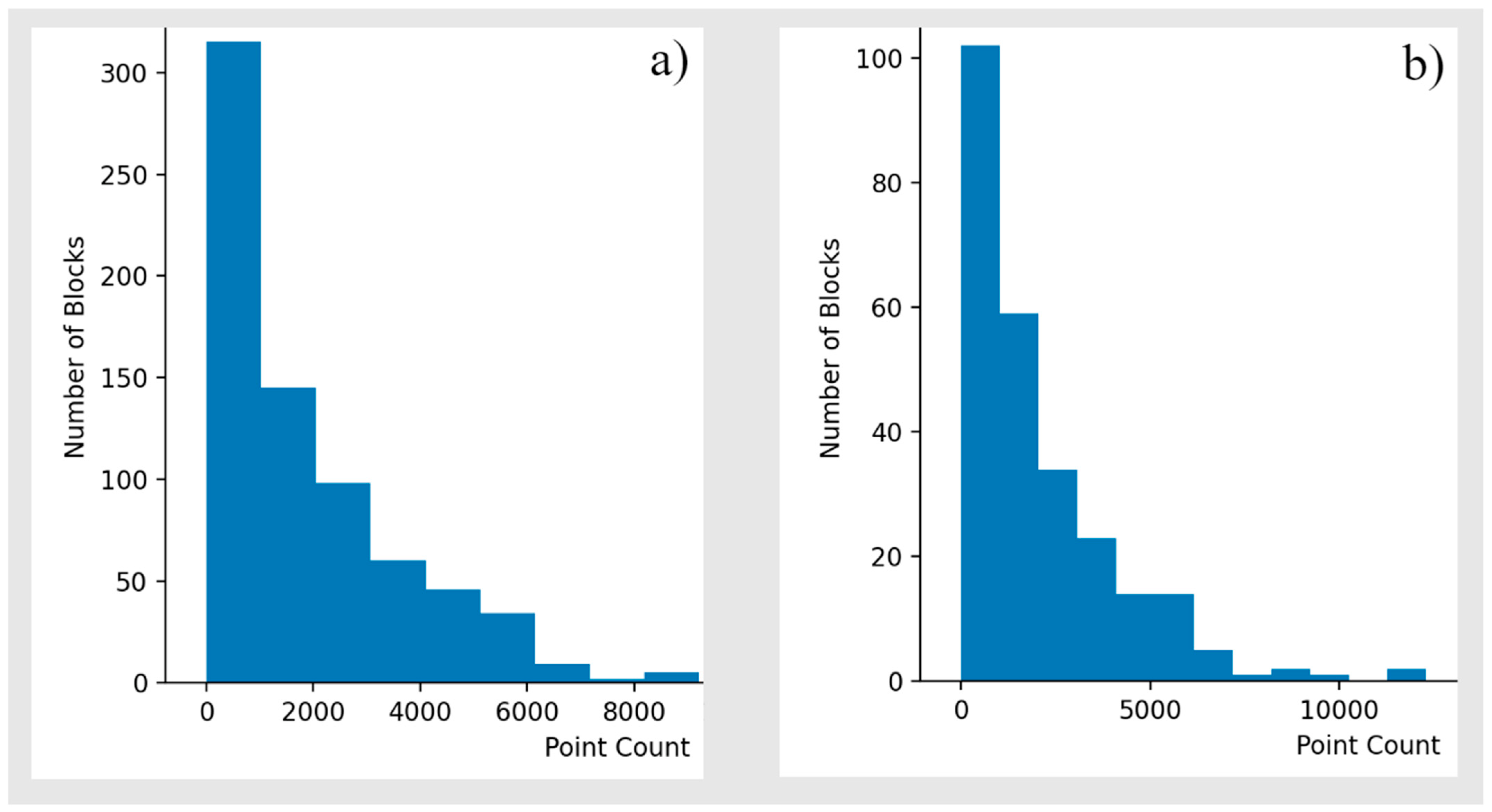

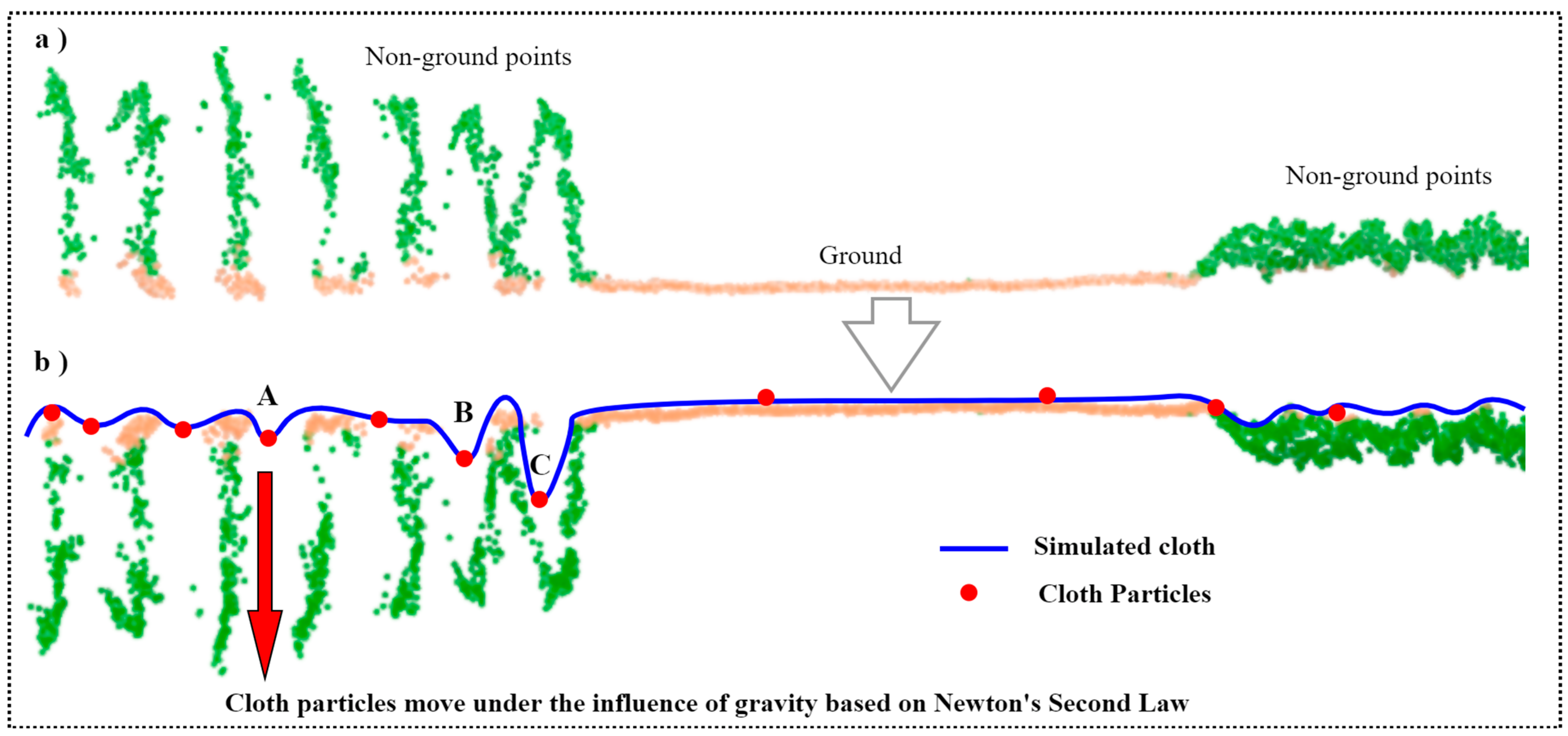

Ground classification algorithms are based on simulating the overall surface from point patches (e.g., CSF) or interpolating the minimum and maximum or mean values from point patches (e.g., PMF, MCC) with several default parameters [

18,

49]. The complex point cloud patches of dry soybean (

Figure 12a–c) have proven to be challenging for the ground classification algorithms as data captured by ZENMUSE L1 (

Table 6 and

Table 7) are of low quality due to point localization problems [

5,

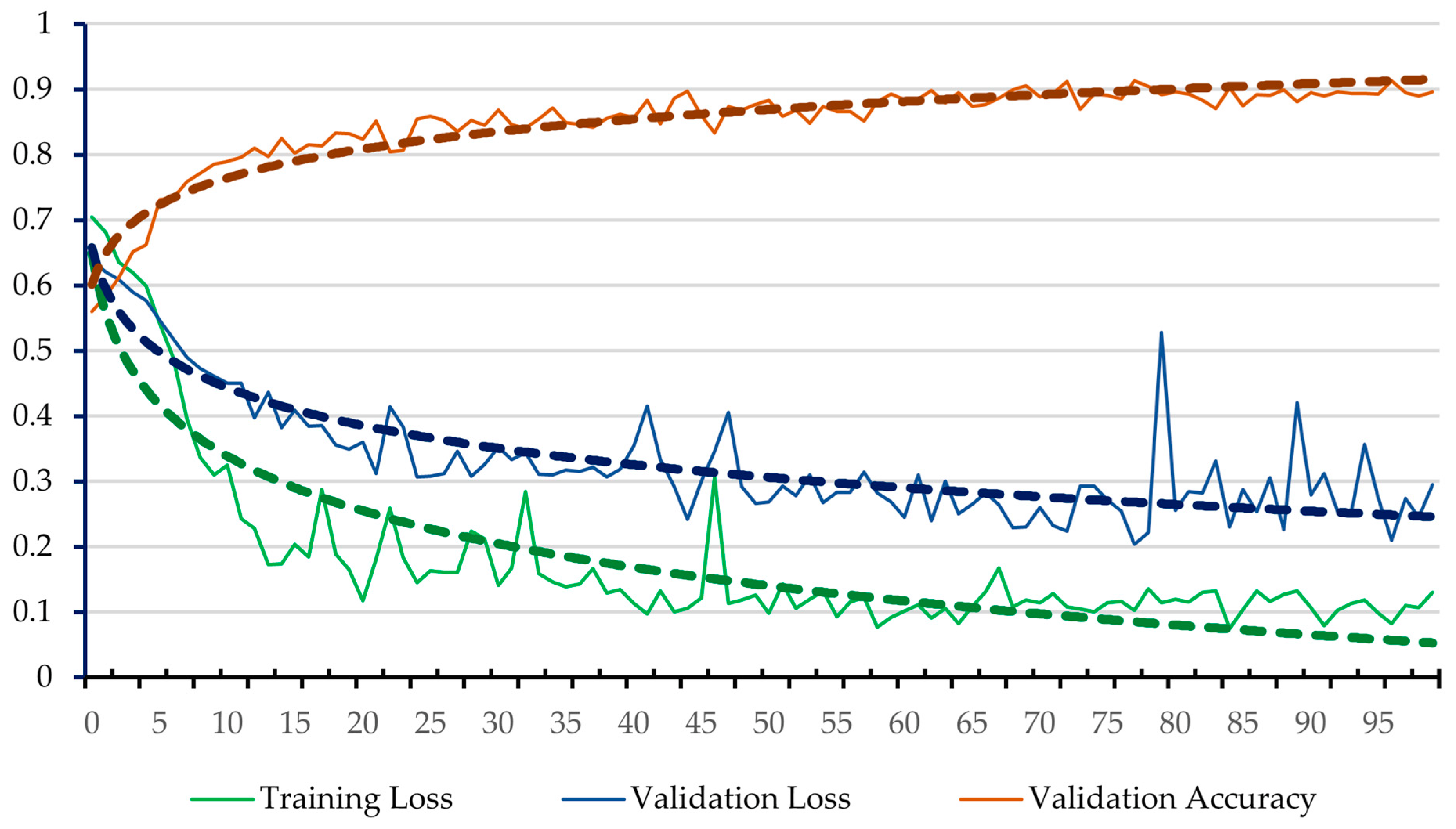

28]. The 10% validation loss with overall 90% validation accuracy of PointCNN (

Figure 8) is caused by the dry soybean of Site A (

Figure 12). Compared with PMF, the CSF provides more control over the simulated surface by adjusting default parameters such as GR, RI, dt, and the total number of iterations, therefore resulting in better accuracy given the fact that it is a simulation-based method more advanced compared with PMF, which is an old-fashioned interpolation based method [

35].

Figure 12. (A) Colored DJI L1 point clouds (Site A) with 3D transects of intensity information: (a) transect representative of soybean and (b) of sugar beet; (c) complex backscattered UAS-LiDAR point clouds of dry soybean; (d) sugar beet with only non-ground returns from the top of the canopy; and (e) sugar beet with canopy and ground returns. Three-dimensional transects (a–e) are of the same horizontal extent for visualization purposes, with no vertical scale.

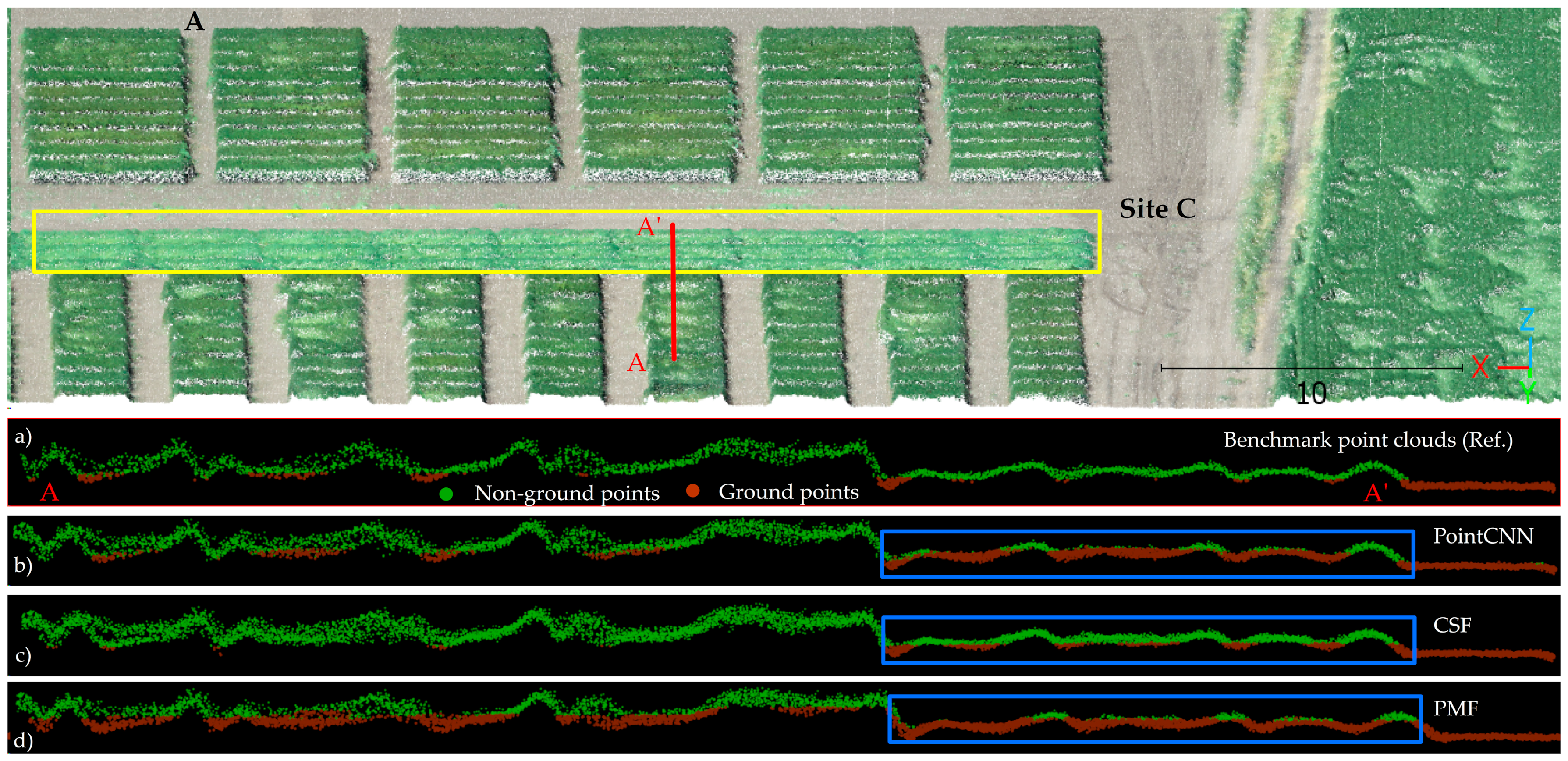

The transferability assessment over the test sites yields lower error metrics and a consistent kappa coefficient for PointCNN compared with the traditional ground classification algorithms. The exception is Site C, the only test site where the PointCNN performance is slightly lower than that of CSF, as depicted in Figure 11 and Table 7. The reasons for this relatively lower performance at Site C are examined below.

Unlike the CSF algorithm, PointCNN treats low-lying flat crops as ground points (Figure 13b–c). The CSF with a cloth rigidness value of three puts more restrictive conditions on the simulated surface, resulting in better segregation of low-lying vegetation from the ground (Figure 5 and Figure 13c) [35]. As a result, CSF performs slightly better than PointCNN over a significant portion of Site C, enclosed by the yellow box in Figure 13A, which has a predominantly unique crop environment. The crop environment in this yellow box (Figure 13A) closely resembles a flat surface; therefore, PointCNN treats those points as ground points.

Figure 13 and Figure 14, as exemplary test site cases, illustrate that the remaining test sites (B and E) also comprised growing crops with a higher chlorophyll content than training Site A (Figure 12). The LiDAR backscatter signal is stronger for the test sites because healthy vegetation tends to reflect more in the infrared portion of the electromagnetic spectrum, in which LiDAR sensors generally operate [62]. In addition, UAS-LiDAR has a much smaller footprint than ALS systems; almost the entire LiDAR backscatter therefore returned either from the top of the crop (e.g., leaves) or from the ground, so that ground and non-ground points were captured with better separation over the test sites [5]. In contrast, dry crops with low chlorophyll content, particularly low-lying crops such as the dry soybean in Site A (Figure 12a), produced a complex backscattered signal, making it challenging for the ground classification algorithms and PointCNN to segregate ground from non-ground points at certain positions (Figure 12c).

Figure 13.

(A) Colored DJI L1 point clouds (Site C), 3D transects (AA′) of ground and non-ground points: (a) Transect representative of pulse-crop with benchmark point clouds. Ground and non-ground points are filtered by (b) PointCNN, (c) CSF, and (d) PMF. AA′ transects (a–d) are of the same extent (horizontal) for visualization purposes with no vertical scale.

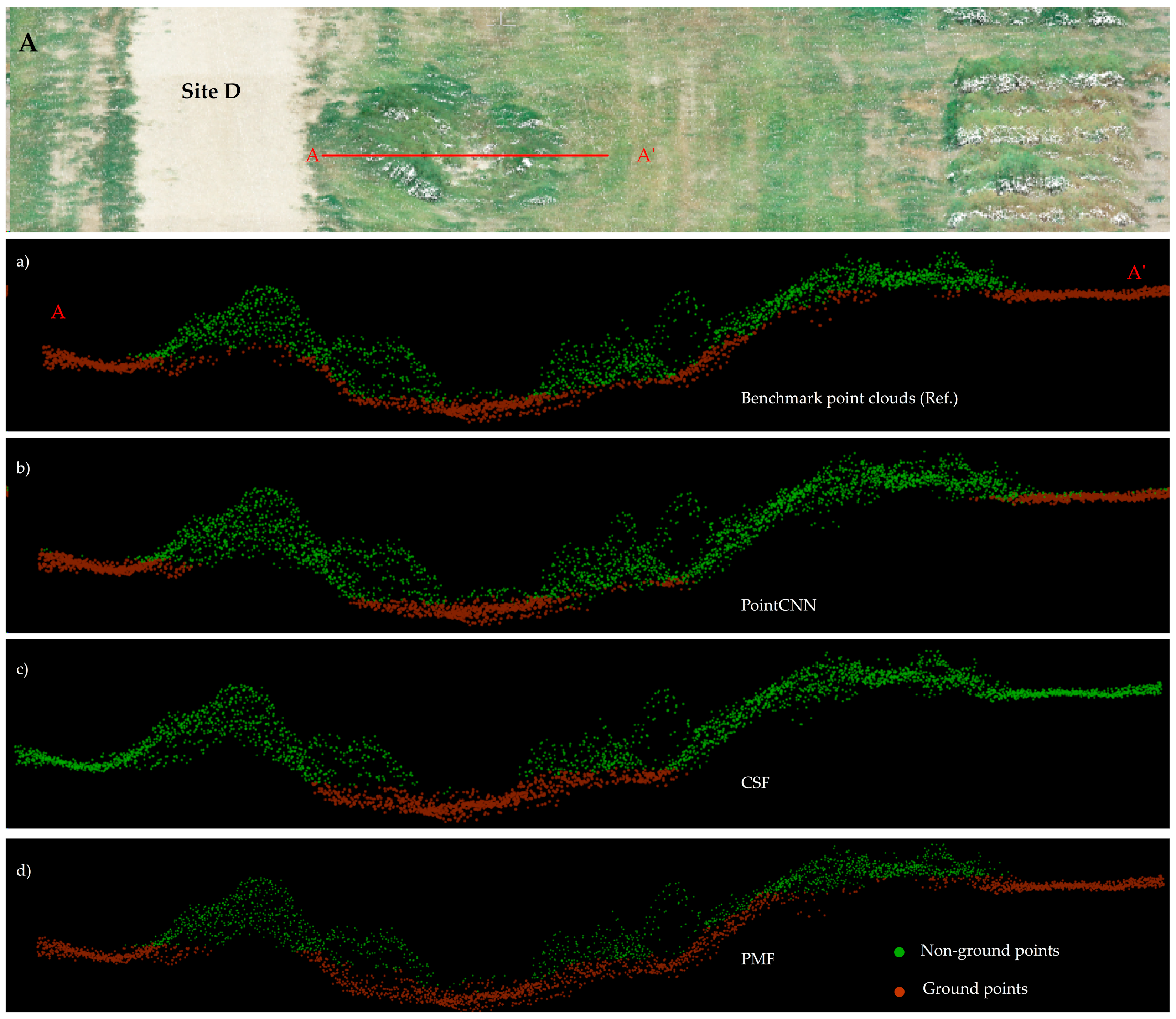

One of the objectives of the present investigation is to assess the potential of PointCNN and the ground classification algorithms to classify ground points in the ditches of the undulating terrain of Site D (Section 2.1.4). Figure 14 shows the ground point classification results of PointCNN, CSF, and PMF as an example case. PointCNN and CSF fail to classify ground points along the sloped surfaces of the ditch (Figure 14b–c). The overall performance of PMF for Site D was found to be poor (Figure 11, Table 7). Along the slope surface, however, PMF ground classification is better than that of CSF and PointCNN (Figure 14d).

The PMF algorithm filters ground points based on the minimum elevation differences between a minimum surface and a maximum surface generated from the point clouds; therefore, undulating topography does not affect its performance (Section 3.1.2) [20]. CSF, however, relies on a simulated cloth whose rigidness value of three puts more tension on the simulated surface; consequently, only ground points from the bottom of the ditch are extracted, because the cloth simulated over the inverted point cloud does not take slope into account (Section 3.1.1) [51]. For CSF, the ground point classification accuracy can be improved by enabling the slope parameter available in the CSF algorithm [35]. As for DL, PointCNN was trained with point clouds representative of flat terrain (Figure 2a); therefore, it performs poorly along the ditches (Figure 14b) located in the agricultural fields of test Site D. PointCNN performance over undulating terrain is a subject for further investigation and is beyond the scope of the present study. Regarding ground point classification at the early growth stages of Site E, the qualitative analysis in Section 4.1 and the quantitative analysis in Section 4.2 showed that PointCNN is robust in separating ground points from crops at early growth stages as well as from grass and weeds, as already demonstrated in Figure 11 and Table 7.
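To give a feel for the morphological idea behind PMF, the following simplified sketch applies a progressive opening (erosion followed by dilation) to a one-dimensional elevation profile and flags cells that rise above the opened surface by more than a window-dependent threshold. It is not the implementation evaluated in this study: it works on a regular 1D profile rather than a point cloud, and the function name, window sizes, slope, and threshold values are assumed for illustration only.

```python
def _erode(z, half):
    # Minimum within a sliding window of 2 * half + 1 cells (truncated at edges).
    return [min(z[max(0, i - half): i + half + 1]) for i in range(len(z))]


def _dilate(z, half):
    # Maximum within a sliding window of 2 * half + 1 cells (truncated at edges).
    return [max(z[max(0, i - half): i + half + 1]) for i in range(len(z))]


def pmf_profile(z, half_windows=(1, 2, 4), slope=0.3, cell=1.0,
                dh0=0.2, dh_max=2.0):
    """Flag each cell of a 1D elevation profile as ground (True) or not.

    z:            list of elevations on a regular profile (meters).
    half_windows: increasing half-widths of the opening window (assumed).
    slope, cell:  assumed terrain slope and cell size used to scale the
                  elevation threshold as the window grows.
    dh0, dh_max:  assumed initial and maximum elevation thresholds (meters).
    """
    surface = list(z)
    is_ground = [True] * len(z)
    prev_half = None
    for half in half_windows:
        opened = _dilate(_erode(surface, half), half)  # morphological opening
        if prev_half is None:
            threshold = dh0
        else:
            # Threshold grows with the increase in window width (2 cells per half step).
            threshold = min(dh_max, slope * 2 * (half - prev_half) * cell + dh0)
        for i in range(len(z)):
            if surface[i] - opened[i] > threshold:
                is_ground[i] = False
        surface = opened
        prev_half = half
    return is_ground
```

On the toy profile `[0, 0, 0, 1.5, 1.6, 0, 0, 0, 0, 0]`, the two elevated cells are flagged as non-ground, whereas a gentle ditch such as `[0, 0, -0.1, -0.2, -0.3, -0.2, -0.1, 0, 0]` remains entirely ground with these assumed settings, because its elevation differences stay below the threshold at every window size.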

Figure 14. (A) Colored DJI L1 point clouds (Site D) with 3D transects (AA′) of ground and non-ground points: (a) 3D transect representative of a ditch with benchmark point clouds. Ground and non-ground points filtered by (b) PointCNN, (c) CSF, and (d) PMF. AA′ transects (a–d) are of the same horizontal extent for visualization purposes, with no vertical scale.

In terms of classification and transferability, measured by the error metrics T.I., T.II., and T.E. and the k coefficient, the present study reveals that PointCNN outperforms the CSF, PMF, MCC, and ArcGIS ground classification algorithms. Fundamentally, the performance of the ground classification algorithms depends on user-defined parameters, which proved to be sensitive to UAS-LiDAR data and terrain characteristics, in particular low-lying vegetation [63]. In contrast, the DL framework of PointCNN is a data-driven approach that is sensitive to training data quality and to the overall feature representation in UAS-LiDAR point clouds, and it is therefore little affected by different and diverse crop environments [64].

6. Conclusions

Automated bare earth (ground) point classification is an important and commonly required step in LiDAR point cloud processing. In the past two decades, substantial progress has been made, and many ground classification algorithms have been developed and investigated, generally focusing on forest and topographic research using ALS point clouds. In contrast to this mature body of research in the forestry and topographic domains, recently emerged UAS-LiDAR sensors have not yet been investigated for ground classification, particularly in the precision agriculture domain. In this paper, we presented the first evaluation of the frequently used ground classification algorithms (CSF, PMF, and MCC) and PointCNN using UAS-LiDAR data in an agricultural landscape.

The present study addressed two aspects of ground classification algorithms. First, the default user parameters provided by the algorithms’ authors were examined and were found to produce higher omission and commission errors for time-series UAS-LiDAR data. Furthermore, our investigation revealed that sequential adjustment of the algorithms’ default parameters gave better overall results for the CSF and PMF algorithms; therefore, optimization of default parameters is important for new sensors and data. Second, we assessed the transferability potential of the ground classification algorithms with optimized parameters, compared with the deep learning framework of PointCNN, over four test sites, each with a unique crop environment, in North Dakota, USA. Our investigation showed that the ground classification algorithms are transferable once their default parameters are optimized, as tested in this study, with reasonable overall error metrics and kappa coefficients over four representative agricultural plots. Nevertheless, PointCNN was found to be more robust in both respects, i.e., overall accuracy and transferability, as it showed the lowest error metrics and consistent kappa coefficients compared with the traditional ground classification algorithms.

From the experimental results and the discussion of UAS-LiDAR ground point classification, several key conclusions can be drawn. First, using the ground classification algorithms requires parameter optimization through sequential adjustment of the default parameter values. The algorithm parameters presented in this study can be used to derive better ground classification results from UAS-LiDAR data. Second, compared with traditional ground classification algorithms, deep learning methods are robust, with proven accuracy and efficiency, provided that high-quality, accurately labeled data are available. In conjunction with PointCNN supervised classification, the unsupervised ground classification algorithms can be used to process the raw point clouds as a first step toward producing quality labeled data with some manual intervention.