Abstract

Irrigation is one of the key agricultural management practices of crop cultivation worldwide, and irrigation practice is traceable on satellite images. Most irrigated area mapping methods have been developed from time series of NDVI or backscatter coefficient within the growing season. However, winter irrigation outside the growing season is also dominant in north China. This kind of irrigation aims to increase soil moisture to cope with spring drought and to reduce wind erosion in spring. This study developed a remote sensing-based classification approach to identify irrigated fields outside the growing season with the Random Forest (RF) algorithm. For each of the seven scenes of GF-1 satellite data, four spectral bands and all Normalized Difference Vegetation Index (NDVI)-like indices computed from any two of these four bands were used as input features, both to build a separate RF model for each scene and to apply that model for the classification. The results showed that the mean of the highest out-of-bag accuracies of the seven RF models was 94.9% and the mean of the averaged out-of-bag accuracies in the plateau was 94.1%; across the seven classified outputs, the overall accuracy ranged from 86.8 to 92.5%, Kappa from 84.0 to 91.0%, and F1-Score from 82.1 to 90.1%. These results showed that the classification was neither overperforming nor underperforming, as the accuracies of all classified images were lower than the model accuracies. This study also found that irrigation started as early as November; the irrigated fields increased, and irrigation was then suspended in December and January due to freezing conditions. Newly irrigated fields were found again in March and April, when the temperature rose above zero degrees. 
The area of irrigated fields in the study area increased over time, with 98.6, 166.9, 208.0, 292.8, 538.0, 623.1, and 653.8 km2 on the seven dates from December to April, accounting for 6.1%, 10.4%, 12.9%, 18.2%, 33.4%, 38.7%, and 40.6% of the total irrigable land in the study area, respectively. The results showed that the method developed in this study performed well. The satellite images showed that 40.6% of the irrigable fields had already been irrigated before the sowing season; with this information, irrigation authorities can improve their water supply capacity throughout the year. This study may complement the traditional approach of retrieving irrigation maps only in the growing season with remote sensing images for a large area.

1. Introduction

Irrigation is one of the key agricultural management practices of crop cultivation in the world [1,2,3,4]. Irrigation reduces the adverse effects of drought, increases crop yield, and ultimately helps maintain the profitability of agricultural production. Irrigation consumes large amounts of water, and efficient water use management therefore requires timely irrigation information over large regions [5]. Information on irrigated cropland, irrigation events, and irrigation water amounts is important for supporting sustainable water resource management. Studies on hydrology [6], water availability and water use [7], and their interaction with agricultural production and food security [8] all require accurate information on the location and extent of irrigated croplands. Detailed knowledge of the timing and amounts of water used for irrigation over large areas [3] is also important for various studies and applications.

Irrigation practice is traceable on satellite images [9]. A few global irrigation maps such as the Global Map of Irrigated Areas (GMIAs) [10] and the Global Irrigated Area Map (GIAM) [11] have become available. Recently, Wu [12] retrieved a 30-m resolution global maximum irrigation extent (GMIE) using the Normalized Difference Vegetation Index (NDVI) and NDVI deviation (NDVIdev) thresholds in the dry and driest months. Zajac [13] derived the European Irrigation Map for the year 2010 (EIM2010) underpinned by the agricultural census data. Siddiqui [14] developed irrigated area maps for Asia and Africa regions using canonical correlation analysis and time lagged regression at 250 m resolution for the year 2000 and 2010. Zhang [15] produced annual 500-m irrigated cropland maps across China for 2000–2019, using a two-step strategy that integrated statistics, remote sensing, and existing irrigation products into a hybrid irrigation dataset. Zhao [16] developed crop class based irrigated area maps for India using net sown area and extent of irrigated crops from the census and land use land cover data at 500 m spatial resolution for the year 2005. Ambika [17] developed annual irrigated area maps at a spatial resolution of 250 m for the period of 2000–2015 using data from the Moderate Resolution Imaging Spectroradiometer (MODIS) and 56 m high-resolution land use land cover (LULC) information in India. Gumma [18] mapped irrigated agricultural areas for Ghana using remote-sensing methods and protocols with a fusion of 30 m and 250 m spatial resolution remote-sensing data. Xie [19] mapped the extent of irrigated croplands across the conterminous U.S. (CONUS) for each year in the period of 1997–2017 at 30 m resolution, using the generated samples along with remote sensing features and environmental variables to train county-stratified random forest classifiers annually.

Most of the above-mentioned irrigated area mapping methods were based on time series of NDVI at a relatively low resolution of 250–1000 m. Disaggregating statistical data onto a grid is another way to generate irrigation maps. For example, the European irrigation map (EIM) [20] was created by disaggregating regional-level statistics on irrigated cropland areas into a 100 × 100 m grid, using a land cover map and constrained by the Global Map of Irrigated Areas (GMIAs) [10]. Remote sensing-based classification is also an effective way to produce irrigated crop maps. Salmon [21] used supervised classification of remote sensing, climate, and agricultural inventory data to generate a global map of irrigated, rain-fed, and paddy croplands. Lu [22] used a pixel-based random forest to map irrigated areas from two scenes of GF-1 satellite images at 16 m in an irrigated district of China during the winter-spring irrigation period of 2018. Magidi [23] developed a cultivated areas dataset with the Google Earth Engine (GEE) and further used the NDVI to distinguish between irrigated and rainfed areas. A large variety of classification methods at different scales and with various levels of accuracy can be found in the literature [24,25,26,27,28,29,30,31]. Many cloud-based and open-source classification applications and tools have been developed recently [32,33]. However, cloud contamination and the revisit time of optical satellites are major limitations to accurately identifying the irrigation signature on the imagery. SAR imagery is less affected by cloud and has the advantage of supporting long time series for detecting the irrigation signature. A number of studies [34,35,36] used time series of SAR images to detect irrigation events. The fusion of optical and SAR time series images for classification has also progressed well in recent years [37,38,39], reducing the impact of cloud on the optical images. 
One study [40] assessed the value of satellite soil moisture for estimating irrigation timing and water amounts.

All the above-mentioned studies were designed to identify the irrigation signature mainly in the growing season, as the crop develops. However, irrigation also happens outside the growing season for various reasons, such as sufficient water supply, cheaper water prices, lower energy prices, and manpower availability. This kind of irrigation practice deserves more attention, as winter irrigation is dominant in the study region. Therefore, this study aimed to develop a method to identify the irrigated fields and to help irrigation authorities know the irrigation situation before the growing season arrives, so that they can improve their water supply capacity throughout the year and crop production may be stably maintained. In the study area, a great number of fields were found to be already irrigated in winter and early spring, with large volumes of irrigation water applied even though the fields were bare soil. This practice aims to keep enough soil moisture for sowing at the beginning of the growing season in spring, to avoid competition for irrigation water, and to prepare for spring drought. This case also complements the work of researchers who are developing irrigation maps within the growing season for large areas or at the global level.

2. Study Area and Data

2.1. Study Area

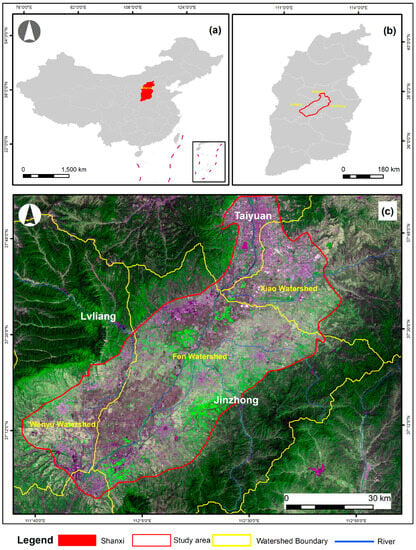

The study area is located in the midstream of the Fen River, at the center of Shanxi province in north China (Figure 1). This region, also known as the Jinzhong basin, spans approximately 150 km in length and 30–40 km in width, covering a total area of approximately 5000 km2. Three prominent rivers—Fen River, Wenyu River, and Xiao River—grace the landscape. The practice of irrigation has deep historical roots here, spanning over a millennium. The irrigation domain of the Fen River covers 1046.1 km2 of arable land, benefiting three cities and a vast agricultural community of a million farmers [41]. The Wenyu River, a tributary of the Fen River, irrigates an area of 341.8 km2 of arable land [42]. Similarly, the Xiao River irrigates an area of 221.7 km2 of arable land [43]. These irrigation facilities remain integral, with flooding irrigation still prevailing through the irrigating channels that nourish the fields. The study area holds prominence as a key grain production hub within Shanxi province, significantly contributing to regional food security. Its agricultural landscape is diverse, incorporating staple crops such as maize, sorghum, and winter wheat. Orchards, vegetable greenhouses, and other crop fields further enrich its agricultural mosaic. The conventional growing season extends from May to September, yet this area supports winter wheat cultivation throughout the winter months. Planting commences from early to mid-October. After the winter wheat harvest, short-lived crops are sown to evade early autumn frost. Presently, a few fields are dedicated to winter wheat cultivation, while most fields remain fallow during winter. This has led to the application of winter irrigation to these fallow fields. Summer witnesses the cultivation of maize in most fields, a crop that particularly benefits from winter irrigation. 
Climatically, the study area falls within the temperate continental seasonal climate zone, experiencing distinct seasons—spring, summer, autumn, and winter. Notably, winter and spring receive less rainfall compared to the pronounced rainy season during summer.

Figure 1.

The location of the study area: (a) shows the Shanxi province in China, (b) shows the study area in Shanxi province, and (c) shows the study area illustrated on the GF-1 image on 29 April 2023, respectively.

2.2. Satellite Data and Processing

GF, the abbreviation of Gaofen (Chinese for "high resolution"), is one of the key Earth observation programs in China. As the first satellite of the Chinese High Resolution Earth Observation System, the GF-1 satellite was successfully launched on 26 April 2013 [44]. GF-1 carries four multispectral wide field of view (WFV) cameras, which together provide a mosaic coverage spanning 800 km at 16 m spatial resolution with a 4-day revisit frequency [45]. One limitation in comparison with other high resolution satellite imagery is that WFV has only the four bands listed in Table 1. The L1B GF-1 WFV data used in this study were collected from the National Satellite Meteorological Center, China. After a visual check of all images, those with less than 25% cloud coverage were selected and processed. The FLAASH approach was used to perform the atmospheric correction [46], and the RPC Orthorectification approach [46] was used to perform the geometric correction of the L1B data. Because GF-1 had relatively large geometric errors, a 10 m Sentinel-2B image acquired on 17 October 2022 was used as the reference image to co-register all GF-1 images with the image chip matching method. The co-registration results for all GF-1 images will be reported in another article in preparation. Images from the same day were mosaicked and clipped to the study area. The available images are listed in Table 2. Due to partial cloud contamination, the satellite data from November and February were removed, leaving seven valid dates. To make the data compatible with other high resolution satellite data, such as Sentinel-2 and Landsat 8/9, the spatial resolution of GF-1 WFV in this study was set to 15 m instead of the nominal 16 m.

Table 1.

Band Specification and Spatial Resolution of GF-1 WFV.

Table 2.

The used GF-1 WFV data.

2.3. Field Data and Training Samples

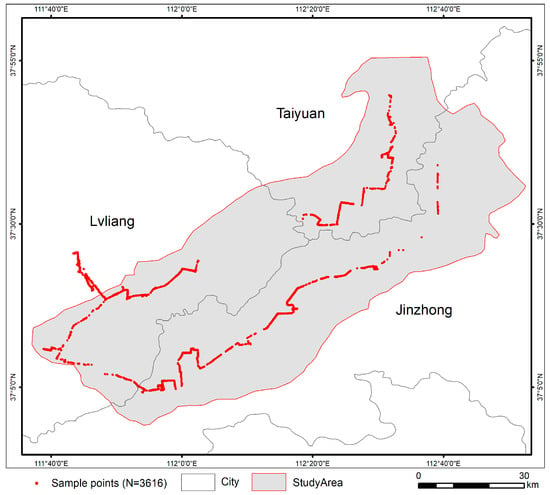

A 3-day field campaign was carried out on 24–26 February 2023. During the campaign, georeferenced pictures were taken with a GPS camera along the roads, following predefined itineraries in the study area. After the campaign, the land cover classes and their longitude and latitude coordinates were retrieved by visually screening the pictures with a tool developed for photo data interpretation [47,48,49]. At the time of the campaign, irrigated fields were partially frozen and waterlogged, which also made them easy to identify on the satellite images. The final output of this process was a formatted file gathering all GPS points with the corresponding classes, class codes, author, roadside (left or right), collection dates and times, and picture file names. In total, 3616 ground truth pictures were valid and spatially referenced. The sample points were distributed over the study area as shown in Figure 2.

Figure 2.

The distribution of field samples in the study area.

The field samples include built-up, water body, tree, orchard, irrigated field, bare land, winter wheat, greenhouse, and others. These samples are point-based ground truth and are not evenly distributed over the study area. They were therefore used as a reference for collecting more, and better distributed, training and validation samples by visually interpreting the satellite images. The final samples were randomly separated into two groups with a ratio of 70% to 30%: 70% of the samples were used to build the classification model and perform the classification, while 30% were used to validate the classified images. Following our previous experience [47,48,49], the distance between samples was taken into account in the separation process. When two samples were too close to each other, all pixels in the adjacent area were assigned together to either the training or the validation set. For instance, all pixel-level samples taken in one field represent a single class, so they are better treated as one large sample. The distance threshold in this study was set to 900 m. This step avoids strong spatial correlation among samples. Table 3 describes all classes identified for the final classification. Table 4 lists the number of samples and the proportion of each class at the 15 m level. Irrigation 1 represents fields that were waterlogged or frozen in winter after large-volume flood irrigation. Irrigation 2 represents fields with high soil moisture but without surface water. The two conditions reflect a difference in the amount of water in the fields; Irrigation 1 means there was excess water in the field. Because a large volume of water was applied and evaporation is weak in winter, no classification samples for Irrigation 2 were identified on 17 December, 4 January, and 25 January. On the other dates, both irrigation conditions could be identified.

Table 3.

Land cover types and their brief descriptions.

Table 4.

The number of training samples and the proportion for each class.
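The distance-aware separation described in Section 2.3 can be illustrated with a minimal sketch. It is not the tool used in this study: it assumes that sample coordinates are given in a projected system in metres, that samples closer than the 900 m threshold are chained into one group (single linkage), and that whole groups are then assigned at random to training until roughly 70% of the samples is reached. The function names `group_by_distance` and `split_groups` and the example coordinates are hypothetical.

```python
import math
import random

def group_by_distance(points, threshold_m=900.0):
    """Union-find grouping: samples closer than threshold_m share one group."""
    parent = list(range(len(points)))

    def find(i):
        # Follow parent links to the group root, compressing the path.
        while parent[i] != i:
            parent[i] = parent[parent[i]]
            i = parent[i]
        return i

    for i in range(len(points)):
        for j in range(i + 1, len(points)):
            if math.dist(points[i], points[j]) < threshold_m:
                parent[find(i)] = find(j)

    groups = {}
    for i in range(len(points)):
        groups.setdefault(find(i), []).append(i)
    return list(groups.values())

def split_groups(groups, train_fraction=0.7, seed=0):
    """Assign whole groups to training until about train_fraction is reached."""
    rng = random.Random(seed)
    shuffled = groups[:]
    rng.shuffle(shuffled)
    total = sum(len(g) for g in shuffled)
    train, valid = [], []
    for g in shuffled:
        target = train if len(train) < train_fraction * total else valid
        target.extend(g)
    return sorted(train), sorted(valid)

# Hypothetical sample coordinates (metres); not data from this study.
points = [(0, 0), (100, 0), (5000, 0), (5200, 0), (20000, 0)]
groups = group_by_distance(points)
train_idx, valid_idx = split_groups(groups)
```

Because groups are never split, two samples from the same field cannot end up on opposite sides of the training and validation division; the achieved ratio is only approximately 70% to 30% when groups differ in size.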

3. Methodology

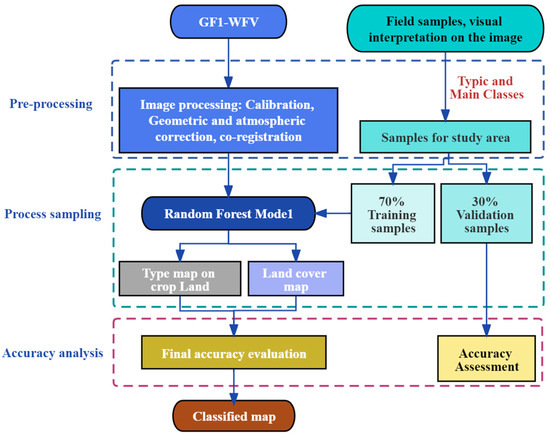

3.1. Classification Flowchart

Figure 3 presents the flowchart for this study. The GF-1 WFV satellite data from November 2022 to April 2023 were collected and processed. The main processing steps were calibration, geometric correction, and atmospheric correction. The calibration coefficients are available from the web portal [50], and the DN values in the images were converted to reflectance in the calibration step. The RPC Orthorectification approach [46] was applied for the geometric correction of all GF-1 L1B data. Thereafter, the FLAASH approach [46] was used to perform the atmospheric correction. To make all GF-1 images match each other geometrically, all images were co-registered to one 10 m Sentinel-2 scene acquired on 17 October 2022. Then, the co-registered images were mosaicked by acquisition date and clipped to the study area.

Figure 3.

The flowchart for this study.

During the same period, the field data were collected. In general, these field data alone were not sufficient for the classification in terms of spatial distribution and number. To obtain spatially well-distributed training samples, the geotagged photos were linked with the satellite images to help skilled interpreters visually identify more samples on the images. The classification samples for each image were separated into training and validation sets at a ratio of 70% to 30%.

In the next step, a Random Forest classifier was used to classify each image with its corresponding training samples. Each classified image was checked against the validation samples using the confusion matrix, and visually using expert knowledge. If the classification accuracy was not acceptable, the training samples were tuned to improve the classification quality: guided by the F1-Score of each class, samples were added in further locations or poor-quality samples were removed until the result was acceptable. Once the accuracy was acceptable, the final classified map was output and the final accuracy was reported. Because irrigation changes over time, the training and validation samples were collected separately for each image. The classification was carried out image by image rather than on the time series [51,52]. When all images had been classified, the final maps and statistics were produced for further analysis.

3.2. Classifier Algorithm

Supervised classification algorithms are widely used at present. In supervised classification, the training samples must be associated with the input images, and the final class of each pixel is decided by the classifier. Many studies [33,47,51,52] have shown that Random Forest (RF) often outperforms other supervised classifiers, e.g., Maximum Likelihood (ML) and Support Vector Machine (SVM). RF has become a popular classifier in recent years because it is robust and easy to apply, and only a few parameters need to be set and tuned. Therefore, RF was selected for this study. RF is a supervised machine learning algorithm built as an ensemble of decision trees. It can handle high-dimensional and redundant input satellite data and has no preference for particular satellite data. When running RF, attention must be paid to overfitting of the classification model. Details of the RF algorithm can be found in the literature [53,54,55,56,57]. RF has only two key parameters: the number of features and the number of trees. RF uses a given number of randomly selected features to build the model. Using more features does not necessarily yield a higher accuracy; the highest accuracy may be reached with only a limited number of features, and a larger number of features increases computing time. In this study, the number of features was set to the square root of the number of input bands of the image. The accuracy reaches a plateau after a certain number of trees, so there is no need to set a very high number. After testing, the number of trees was set to 100. Adding more input features may increase the accuracy of the classified image. Any two spectral bands can be used to calculate an NDVI-like index, following our previous study [49]. Therefore, in this study, all possible NDVI-like indices were calculated and added to the four spectral bands as the input features for the final classification.
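The construction of the input features can be sketched as follows. Four bands give six band pairs, so each pixel has 4 + 6 = 10 input features. The sketch assumes that every index takes the usual normalized-difference form (b − a)/(b + a) with the longer-wavelength band as b, which reproduces the classic NDVI for the red and near-infrared pair; the exact ordering and naming used in [49] may differ, and the function names and reflectance values below are hypothetical.

```python
from itertools import combinations

BANDS = ("blue", "green", "red", "nir")  # the four GF-1 WFV bands

def nd_index(a, b, eps=1e-12):
    """Normalized difference (b - a) / (b + a); 0.0 if the sum is near zero."""
    total = a + b
    return (b - a) / total if abs(total) > eps else 0.0

def build_features(pixel):
    """Return the 4 band reflectances plus the 6 pairwise NDVI-like indices."""
    features = {name: pixel[name] for name in BANDS}
    for first, second in combinations(BANDS, 2):
        # Assumed convention: 'second' is the longer-wavelength band.
        features[f"nd_{second}_{first}"] = nd_index(pixel[first], pixel[second])
    return features

# Hypothetical surface reflectances for one pixel; not data from this study.
pixel = {"blue": 0.05, "green": 0.08, "red": 0.06, "nir": 0.30}
features = build_features(pixel)
ndvi = features["nd_nir_red"]  # classic NDVI for this pixel
```

In an actual workflow the same arithmetic would be applied to whole image arrays rather than to single pixels, and the resulting ten layers would be stacked as the input of the RF classifier.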

3.3. Validation Methods

The confusion matrix (error matrix) is commonly used to quantitatively evaluate the accuracy of a classified image. The overall accuracy (OA), Kappa, and F1-Scores can be calculated from the confusion matrix. This study used these indices to evaluate the accuracy. The formulas for computing OA, Kappa, and F1-Scores can be found in the literature [47,48,49].

In this study, each classified image was validated separately with its own independent validation samples. The statistics were computed by counting the number of pixels in each class.
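For reference, the three indices can be computed from a confusion matrix as in the sketch below. It uses the standard textbook definitions (OA as the diagonal share, Cohen's Kappa from observed and chance agreement, and the per-class F1 as the harmonic mean of precision and recall), which are assumed to match those in [47,48,49]. The function name `accuracy_metrics` and the 2 × 2 matrix are hypothetical and are not results of this study.

```python
def accuracy_metrics(cm):
    """cm[i][j]: validation pixels of reference class i labelled as class j."""
    n = len(cm)
    total = sum(sum(row) for row in cm)
    diagonal = sum(cm[i][i] for i in range(n))
    row_sums = [sum(cm[i]) for i in range(n)]
    col_sums = [sum(cm[i][j] for i in range(n)) for j in range(n)]

    # Overall accuracy: share of correctly classified pixels.
    oa = diagonal / total
    # Chance agreement expected from the row and column totals.
    expected = sum(r * c for r, c in zip(row_sums, col_sums)) / total ** 2
    kappa = (oa - expected) / (1 - expected)

    # Per-class F1 = 2*TP / (2*TP + FP + FN) = 2*TP / (row total + column total).
    f1 = []
    for k in range(n):
        denom = row_sums[k] + col_sums[k]
        f1.append(2 * cm[k][k] / denom if denom else 0.0)
    return oa, kappa, f1

# Hypothetical two-class confusion matrix (pixel counts).
cm = [[50, 5],
      [10, 35]]
oa, kappa, f1 = accuracy_metrics(cm)
```

For this toy matrix the overall accuracy is 0.85 and Kappa is about 0.69, which illustrates why Kappa is typically lower than OA for the same classification.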

4. Results and Analysis

4.1. The Classification Model Accuracy Analysis

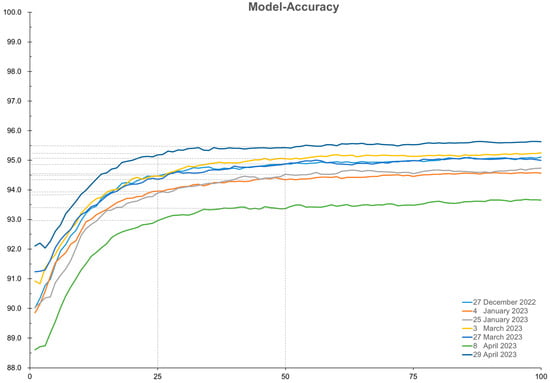

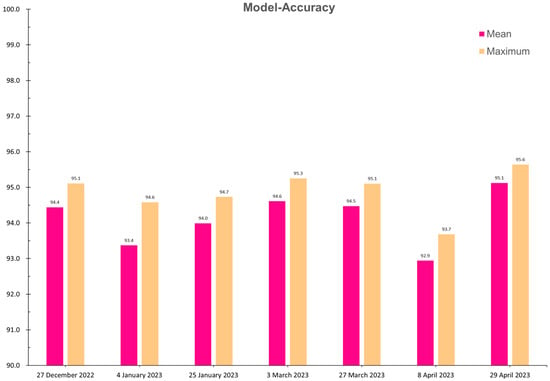

The accuracy of the classification model determines the upper bound of the accuracy that the classification may reasonably reach: the higher the model accuracy, the higher the classification accuracy may be. Figure 4 shows the out-of-bag accuracies of all Random Forest models. The out-of-bag accuracy increases with the number of trees and reaches a plateau after about 30 trees. According to Figure 4, setting the number of trees to 100 was therefore reasonable in this study. There were slight differences among the models, but they were within a range of about 2%, which is quite small. Figure 5 shows the mean and maximum model accuracy within the plateau, taken here as 50 to 100 trees. Averaged over the seven models, the highest accuracy was 94.9% and the mean accuracy was 94.1%. These values show that all models were good and acceptable.

Figure 4.

The accuracies of models corresponding to each image.

Figure 5.

The maximum and mean accuracies of models corresponding to each image in the plateau.
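The plateau statistics shown in Figure 5 amount to taking the mean and the maximum of the out-of-bag accuracy curve over the 50 to 100 tree range. The sketch below illustrates this under the assumption that the curve is stored as a list whose k-th entry is the accuracy with k trees. The curve is synthetic and purely illustrative; it is not the out-of-bag data of this study, and `plateau_stats` is a hypothetical helper name.

```python
def plateau_stats(oob_accuracy, start=50, end=100):
    """Mean and maximum OOB accuracy for tree counts in [start, end].

    oob_accuracy[k - 1] is assumed to hold the accuracy obtained with k trees.
    """
    window = oob_accuracy[start - 1:end]
    return sum(window) / len(window), max(window)

# Synthetic saturating curve for 1..100 trees (illustrative only).
oob = [0.80 + 0.14 * (1 - 0.9 ** k) for k in range(1, 101)]
mean_acc, max_acc = plateau_stats(oob)
```

Repeating this for each of the seven models and averaging the two values across models gives summary figures of the kind reported in Section 4.1.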

4.2. The Classified Images and Accuracies

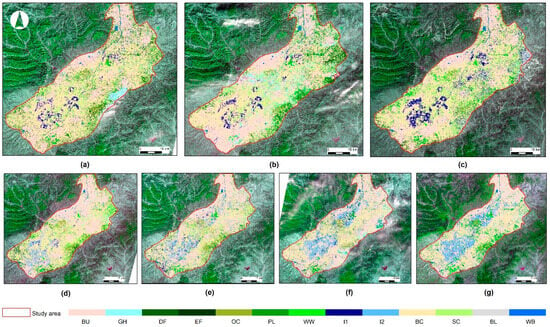

GF-1 WFV has only four bands, namely blue, green, red, and near infrared. These bands are the basic features that can be used for the classification. In addition, following our previous study [49], an NDVI-like index was calculated from every pair of the four spectral bands and added to the input features. Figure 6 shows the classified images for all seven dates. The accuracies are listed in Table 5.

Figure 6.

The classified images: (a) on 27 December 2022, (b) on 4 January 2023, (c) on 25 January 2023, (d) on 3 March 2023, (e) on 27 March 2023, (f) on 8 April 2023, (g) on 29 April 2023, respectively.

Table 5.

Accuracies for all dates.

According to Table 5, in terms of overall classification performance, the OA of the seven classifications was between 86.8 and 92.5%, Kappa between 84.0 and 91.0%, and F1-Score between 82.1 and 90.1%. Based on a visual check of all classified images and on these overall accuracy indicators, the classifications were judged to have performed well. As irrigation is the focus of this study, two irrigation conditions were identified in the training phase. Irrigation 1 represented fields with surface water or ice, and Irrigation 2 represented fields without surface water but with high soil moisture. The F1-Scores for Irrigation 1 on 17 December, 4 January, and 25 January were very high. On the other four dates, both irrigation conditions were classified and the F1-Scores did not stay at the same level: those for Irrigation 1 decreased slightly, and those for Irrigation 2 varied widely, from 72.7 to 95.8%. This shows that the two types could still be separated.

4.3. The Irrigated Area Analysis in Watersheds

According to the time series of satellite images, irrigation was already being carried out in early November, when some fields were irrigated; unfortunately, no valid GF-1 image covering the entire study area was available for this period. As winter set in and the temperature gradually dropped below zero, the irrigated fields became covered by ice. The irrigated fields increased, and irrigation was then suspended in December and January. Ice started to melt in late February, and newly irrigated fields were found again in March and April, once irrigation could be applied again. The largest irrigated area was identified in late April, because sowing takes place in May and the fields must dry before sowing. Based on the classified images, the irrigated area on each date was calculated for each watershed. Table 6 lists the statistics of irrigation conditions on the seven dates for the three watersheds in the study area.

Table 6.

The irrigation area for 3 watersheds (Unit: km2).

The Fen River irrigation area is the largest in the study area. The irrigated fields identified in the frozen winter season accounted for 13.7% of its total irrigable land, and the irrigated fields before sowing increased to 43.9%. The irrigable land of the Wenyu River, a tributary of the Fen River, ranks second. Its irrigated fields in winter reached 11.0% of the total irrigable land, and the irrigated fields before sowing increased to 35.6%. The Xiao River irrigation area is the smallest. The irrigated fields identified in the frozen winter season accounted for 12.3% of its total irrigable land, and the irrigated fields before sowing increased to 33.0%.
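The area arithmetic behind these figures can be checked with a short sketch. It assumes that the total irrigable land equals the sum of the three irrigation domains given in Section 2.1 (1046.1 + 341.8 + 221.7 = 1609.6 km2), an assumption that reproduces the study-area shares reported for the seven dates, and that each classified pixel covers 15 m × 15 m. The function names are hypothetical.

```python
PIXEL_SIZE_M = 15.0  # pixel size used for the GF-1 WFV images in this study

# Irrigable land per irrigation domain (km2), as given in Section 2.1.
IRRIGABLE_KM2 = {"Fen": 1046.1, "Wenyu": 341.8, "Xiao": 221.7}

def pixels_to_km2(n_pixels, pixel_size_m=PIXEL_SIZE_M):
    """Convert a pixel count of one class into an area in km2."""
    return n_pixels * pixel_size_m ** 2 / 1e6

def share_percent(area_km2, total_km2):
    """Share of the irrigable land, in percent."""
    return 100.0 * area_km2 / total_km2

# Assumed total: the sum of the three domains (about 1609.6 km2).
total_irrigable = sum(IRRIGABLE_KM2.values())

# Irrigated areas on the seven dates (km2), as reported in this study.
reported_areas = [98.6, 166.9, 208.0, 292.8, 538.0, 623.1, 653.8]
shares = [round(share_percent(a, total_irrigable), 1) for a in reported_areas]
```

With these inputs the computed shares are 6.1, 10.4, 12.9, 18.2, 33.4, 38.7, and 40.6%, and one million 15 m pixels correspond to 225 km2.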

5. Discussion

5.1. The Challenges of Identifying Irrigation Outside the Growing Season

Our purpose was to determine how many fields, and which ones, had been irrigated before the sowing season in May. Many irrigated fields could be retrieved by the classification because the training samples could be visually identified. This case study achieved its original research purpose and may complement the existing methods of mapping irrigated fields in the growing season.

However, it is sometimes not possible to make the training samples fully inclusive. Shallow surface water or soil water in some irrigated fields evaporates over time, and the water in the fields gradually disappears as the air temperature rises in spring. Because of the long interval between two satellite images, such dried-up irrigated fields become difficult to distinguish from other classes. These irrigated fields will be omitted from the classified results, as no training samples represent this scenario.

This study identified the irrigated fields, but it did not determine on which day irrigation was applied or how much water was applied. Both questions should be addressed in future research.

5.2. The Consideration in This Irrigation Mapping

In this study, only seven GF-1 scenes outside the growing season were valid, which reflects the real capacity of GF-1 alone for identifying irrigated fields. Optical satellite images are prone to cloud contamination. The more satellite image sources are available, the better the results will be. Ideally, if daily high-quality satellite images were available, new irrigation events could be identified in time. In this sense, integrating other high resolution satellite data, such as Sentinel-1/2 and Landsat 8/9, should improve this study considerably.

In this study area, farmers irrigate the bare arable land as soon as winter comes. It is easier to visually identify irrigated fields on bare land than on vegetated fields. Two irrigation scenarios were distinguished. Irrigation 1 represents fields that are waterlogged or frozen in winter after large-volume flood irrigation. Irrigation 2 represents fields with high soil moisture but without surface water. Due to the cold temperature and low evaporation in winter, no classification samples for Irrigation 2 were identified on the images of 17 December, 4 January, and 25 January; all irrigation samples represented Irrigation 1, as the irrigated fields were frozen on these dates. On the other four dates, both irrigation conditions could be identified.

5.3. The Winter Irrigation Impact on Ecosystem

Winter irrigation is a cultivation management practice in the region intended to increase crop yield in the following year. Irrigation outside the growing season has the advantage of protecting the ecosystem: wet soil at the field surface may help reduce wind erosion when strong winds occur in spring. However, the large volume of water applied also has some adverse ecological effects on the farming system. Sowing in spring 2023 had to be postponed due to wet soil in the fields. Surface water was still found on some fields on the image of 29 April 2023, and these fields could not be sown in time. Therefore, the economical and minimum amount of water to apply to the fields also needs further investigation. Soil salinization is another adverse effect induced by irrigation: a large volume of water speeds up evaporation in spring and brings salt from deep soil back to the field surface. These effects of out-of-season irrigation on the ecological system are worth further investigation in the near future.

6. Conclusions

This study explored a remote sensing-based classification approach to identify irrigated fields outside the growing season in the winter of 2022 to 2023. The proposed approach took four spectral bands and all NDVI-like indices computed from any two of these four bands of GF-1 satellite data as the input features of the Random Forest algorithm. Regarding the two key parameters of RF, the number of features was set to the square root of the number of input bands of the image, while the number of trees was set to 100. The classification samples for each image were obtained by visual interpretation with the support of collected field data and then separated into training and validation sets at a ratio of 70% to 30%. Finally, the irrigated fields in the Jinzhong basin of Shanxi province, China, were retrieved over time from each of the seven valid GF-1 scenes.

The results show that the method developed in this study performed well, and neither overperformance nor underperformance was found, as the accuracies of the classified images were neither higher nor far lower than those of the models. The validation showed that the mean of the highest out-of-bag accuracies of the seven RF models was 94.9% and the mean of the averaged out-of-bag accuracies in the plateau was 94.1%; across the seven classified outputs, the overall accuracy was in the range of 86.8–92.5%, Kappa in the range of 84.0–91.0%, and F1-Score in the range of 82.1–90.1%. The lowest OA was 86.8%, compared with a model accuracy of 92.9%, and the highest OA was 92.5%, compared with a model accuracy of 94.4%. The F1-Scores for Irrigation 1 on 17 December, 4 January, and 25 January were very high, in the range of 92.2–97.2%. On the other four dates, the F1-Scores for Irrigation 1 decreased slightly, to the range of 86.0–91.7%, and the F1-Scores for Irrigation 2 varied widely, from 72.7 to 95.8%.

The study also found that irrigation in the study area started in early November, although only a few fields were irrigated at that time. The number of irrigated fields increased and then stalled in December and January, when the irrigated fields were covered by ice and low temperatures made irrigation impossible. Irrigation resumed as the temperature rose in late February and expanded dramatically in March and April. The largest irrigated area was identified in late April, because sowing takes place in May and the fields must dry out before sowing. The area of irrigated fields in the study area increased over time, with sizes of 98.6, 166.9, 208.0, 292.8, 538.0, 623.1, and 653.8 km² from December to April, accounting for 6.1%, 10.4%, 12.9%, 18.2%, 33.4%, 38.7%, and 40.6% of the total irrigatable land in the study area, respectively.
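The reported areas and shares can be cross-checked with simple arithmetic. The sketch below takes the seven area and percentage values from the text and derives two quantities that are not stated in the text and should be read as back-of-the-envelope estimates: the total irrigatable area implied by each pair, and the newly irrigated area between consecutive image dates.

```python
# Irrigated area (km^2) and share of irrigatable land (%) for the seven
# image dates, in chronological order, as reported in the text.
areas_km2 = [98.6, 166.9, 208.0, 292.8, 538.0, 623.1, 653.8]
shares_pct = [6.1, 10.4, 12.9, 18.2, 33.4, 38.7, 40.6]

# Total irrigatable area implied by each area/share pair (derived, not reported).
implied_totals = [area / (share / 100.0) for area, share in zip(areas_km2, shares_pct)]
mean_total = sum(implied_totals) / len(implied_totals)

# Newly irrigated area between consecutive image dates.
increments = [
    round(later - earlier, 1)
    for earlier, later in zip(areas_km2, areas_km2[1:])
]

# Index of the interval with the largest expansion (0 = first to second image).
largest_step = max(range(len(increments)), key=increments.__getitem__)
```

All seven pairs imply a total irrigatable area of roughly 1610 km², so the reported shares are mutually consistent. The largest single increase falls between the fourth and fifth images, in line with the sharp expansion described above.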

This case study shows that there is another window, outside the growing season, for mapping irrigated fields with the Random Forest classification algorithm. This knowledge may complement the traditional practice of retrieving irrigation maps from remote sensing images only in the growing season for a large area. The study also found that too much water was applied in the study area and that a few wet fields could not be sown in time. The positive and adverse effects of out-of-season irrigation on the ecological system are worth further investigation in the near future in order to support sustainable water resources management in the region. If a dense and evenly distributed time series of valid satellite images becomes available, the irrigated fields over time may be well identified with the proposed approach. This would provide the water resource authority with more frequent and better irrigation information, with which it may evaluate excess water usage and its ecological consequences.

Author Contributions

Conceptualization, J.F., Q.S. and P.D.; methodology, J.F., Q.S. and Z.Q.; software, J.F. and C.Z.; validation, J.L., W.Z., R.P. and Y.S.; data processing, J.L. and Y.L.; writing, Q.S. and J.F.; comments, Z.Q. and P.D. All authors have read and agreed to the published version of the manuscript.

Funding

The authors are grateful for the financial support of the National Key Research and Development Program (2017YFB0504105) and the ESA project (Dragon 5 58944).

Data Availability Statement

Some or all data, models, or code that support the findings of this study are available from the corresponding author upon reasonable request.

Acknowledgments

The authors are grateful for the valuable comments from anonymous reviewers.

Conflicts of Interest

The authors declare no conflict of interest.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).