A Review on UAV-Based Applications for Plant Disease Detection and Monitoring

Abstract

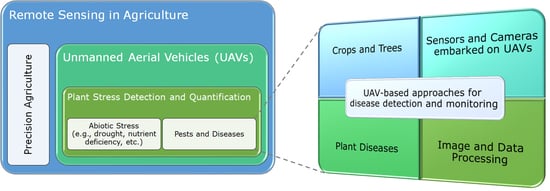

1. Introduction

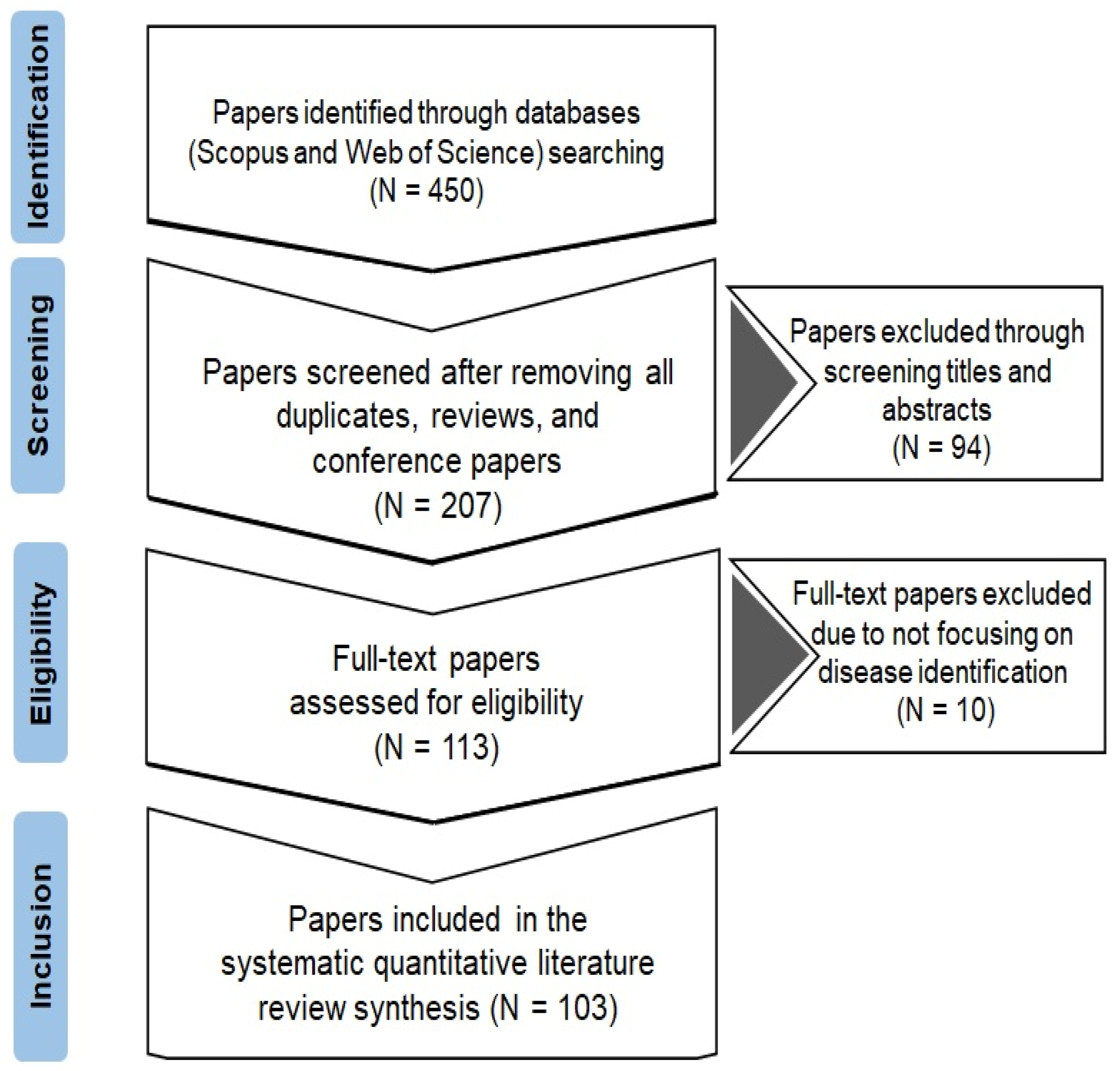

2. Methods

3. Results

3.1. Increased Research Interest in Recent Years

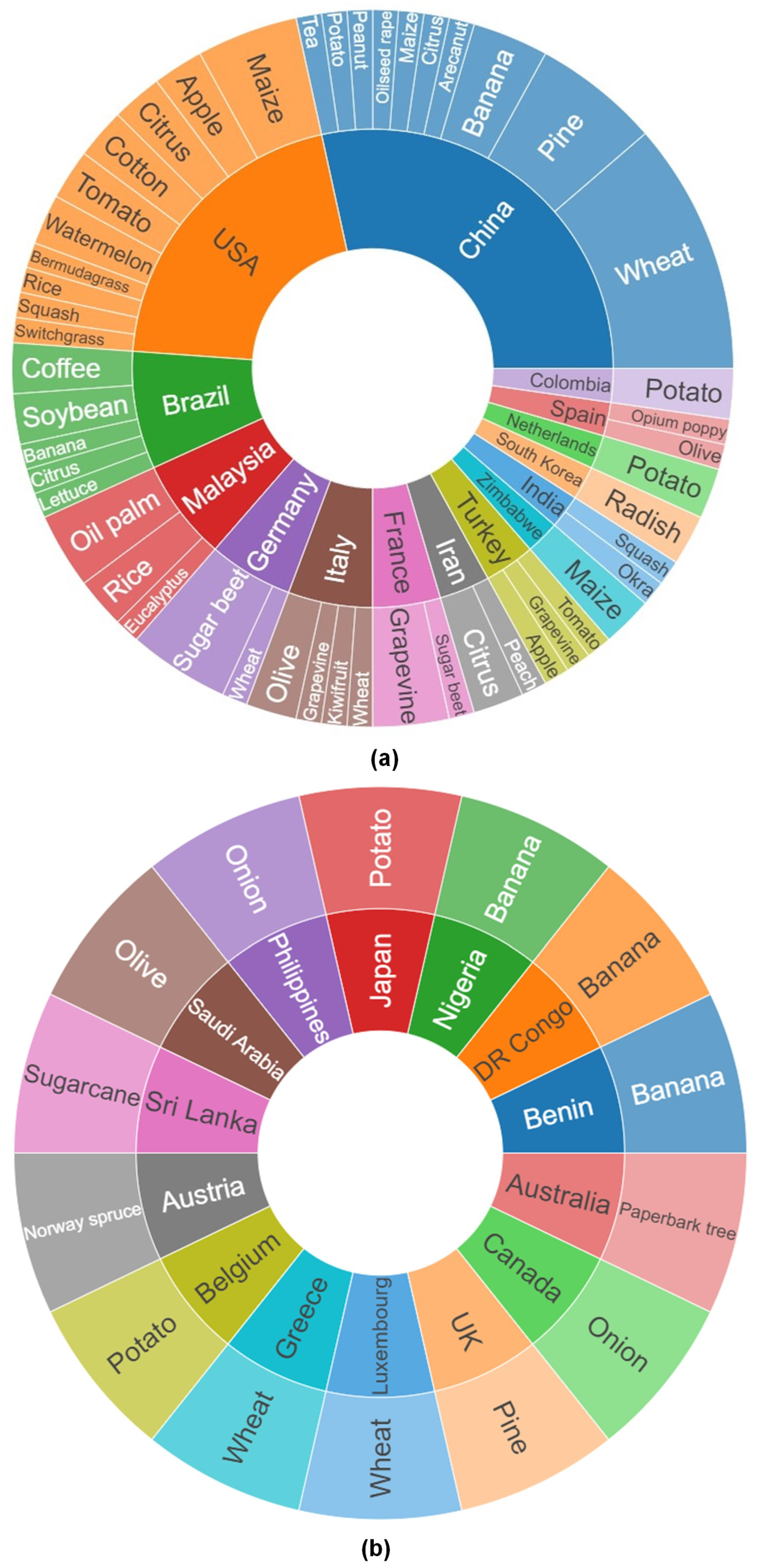

3.2. Plants of Interest Found in the Reviewed Research Articles

3.3. Diseases and Groups of Pathogens Investigated

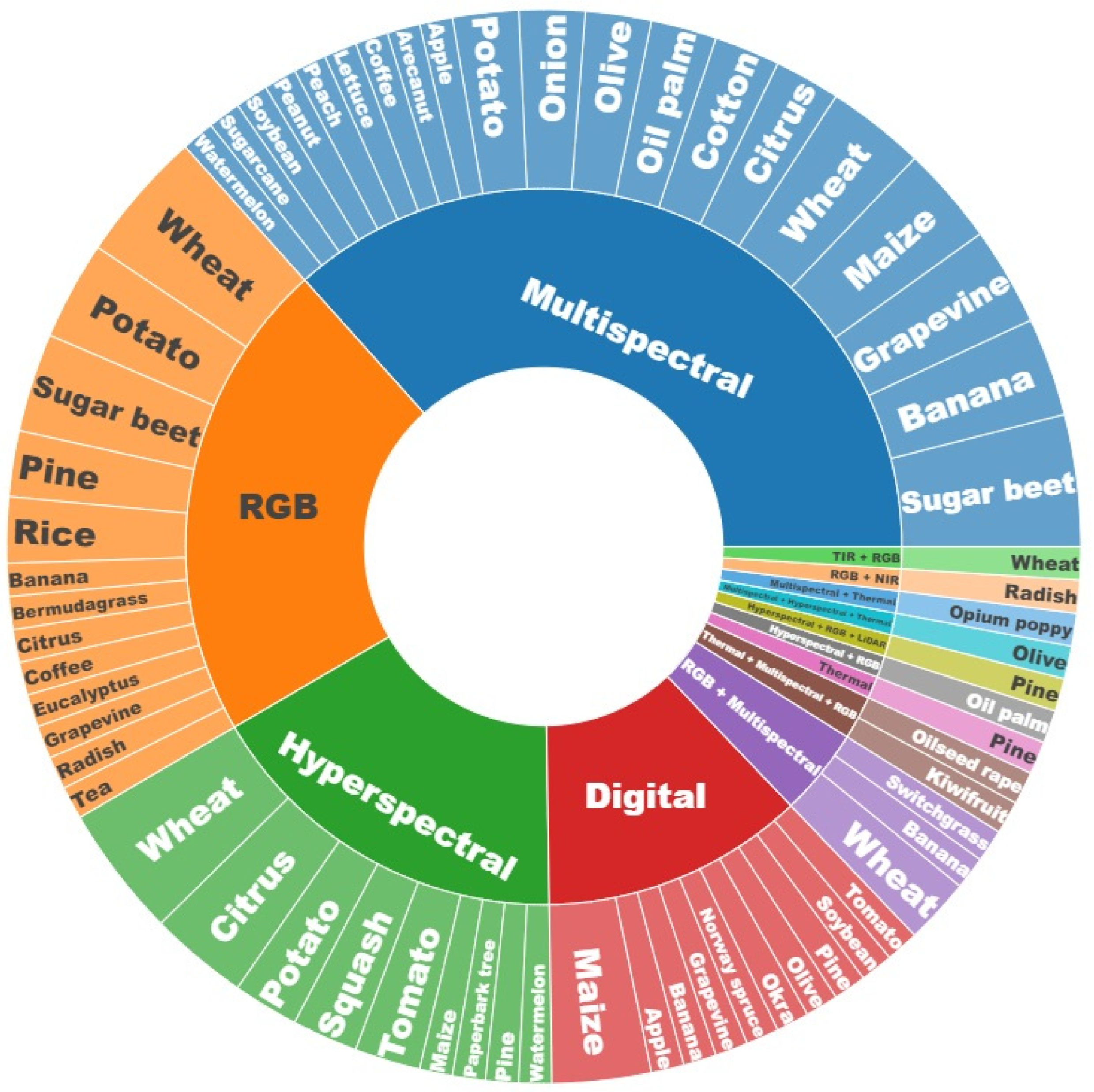

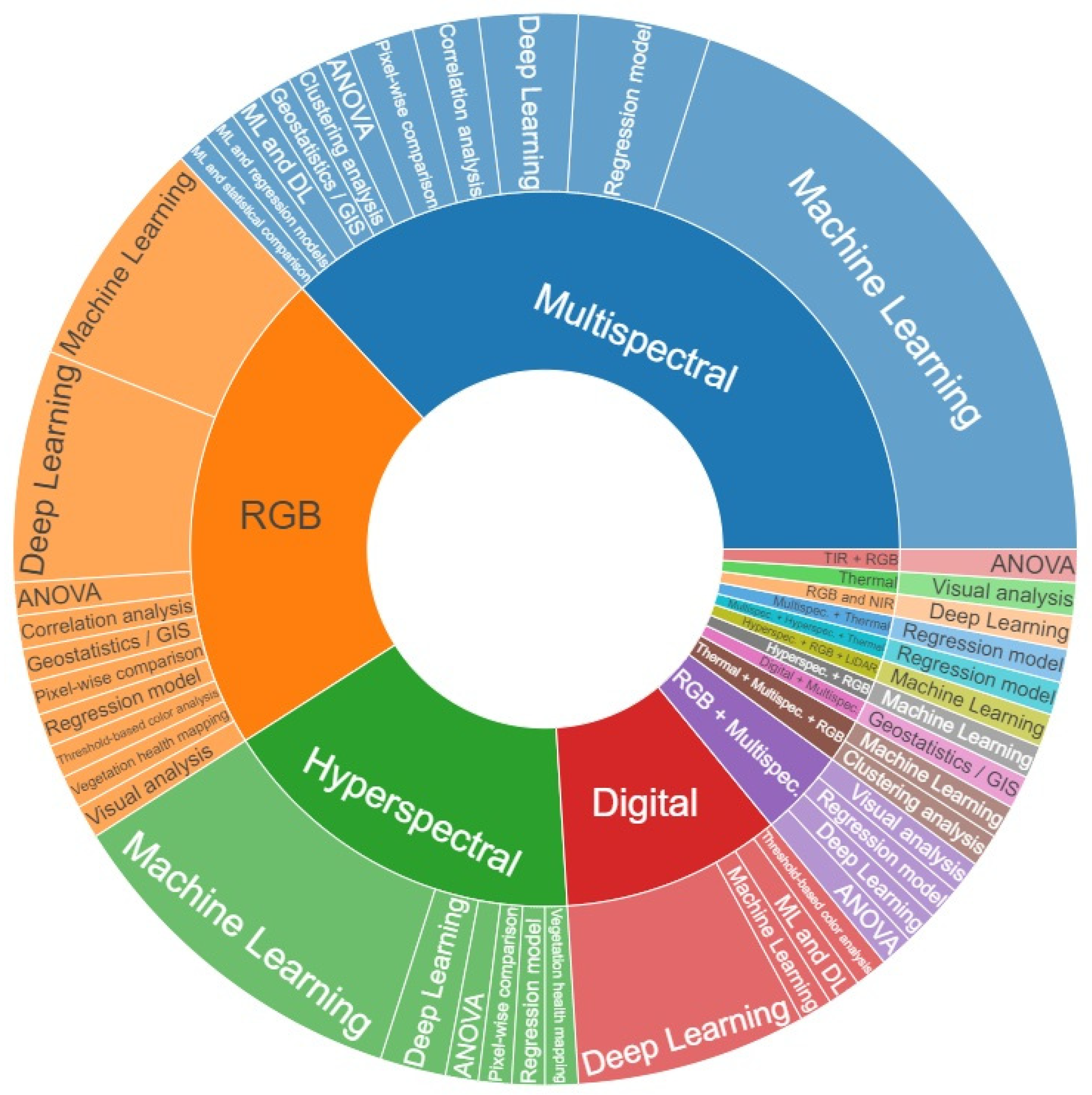

3.4. Sensors Used for the Detection and Monitoring of Plant Diseases

3.5. Methods Used for Image Processing and Data Analysis

3.6. Increasing Popularity of Machine Learning-Based Approaches

4. Discussion

4.1. Promising Means for Improving Plant Disease Management

4.2. Addressing the Potential Ethical Implications and Privacy Concerns

4.3. The Way Forward

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Ristaino, J.B.; Anderson, P.K.; Bebber, D.P.; Brauman, K.A.; Cunniffe, N.J.; Fedoroff, N.V.; Finegold, C.; Garrett, K.A.; Gilligan, C.A.; Jones, C.M.; et al. The persistent threat of emerging plant disease pandemics to global food security. Proc. Natl. Acad. Sci. USA 2021, 118, e2022239118. [Google Scholar] [CrossRef]

- Chaloner, T.M.; Gurr, S.J.; Bebber, D.P. Plant pathogen infection risk tracks global crop yields under climate change. Nat. Clim. Chang. 2021, 11, 710–715. [Google Scholar] [CrossRef]

- FAO. New Standards to Curb the Global Spread of Plant Pests and Diseases; The Food and Agriculture Organization of the United Nations (FAO): Rome, Italy, 2019; Available online: https://www.fao.org/news/story/en/item/1187738/icode/ (accessed on 16 January 2023).

- Savary, S.; Willocquet, L.; Pethybridge, S.J.; Esker, P.; McRoberts, N.; Nelson, A. The global burden of pathogens and pests on major food crops. Nat. Ecol. Evol. 2019, 3, 430–439. [Google Scholar] [CrossRef] [PubMed]

- Chakraborty, S.; Newton, A.C. Climate change, plant diseases and food security: An overview. Plant Pathol. 2011, 60, 2–14. [Google Scholar] [CrossRef]

- Gilbert, G.S. Evolutionary ecology of plant diseases in natural ecosystems. Annu. Rev. Phytopathol. 2002, 40, 13–43. [Google Scholar] [CrossRef] [PubMed]

- Bennett, J.W.; Klich, M. Mycotoxins. In Encyclopedia of Microbiology, 3rd ed.; Schaechter, M., Ed.; Academic Press: Oxford, UK, 2009; pp. 559–565. [Google Scholar]

- Cao, S.; Luo, H.; Jin, M.A.; Jin, S.; Duan, X.; Zhou, Y.; Chen, W.; Liu, T.; Jia, Q.; Zhang, B.; et al. Intercropping influenced the occurrence of stripe rust and powdery mildew in wheat. Crop Prot. 2015, 70, 40–46. [Google Scholar] [CrossRef]

- Verreet, J.A.; Klink, H.; Hoffmann, G.M. Regional monitoring for disease prediction and optimization of plant protection measures: The IPM wheat model. Plant Dis. 2000, 84, 816–826. [Google Scholar] [CrossRef]

- Jones, R.A.C.; Naidu, R.A. Global dimensions of plant virus diseases: Current status and future perspectives. Annu. Rev. Virol. 2019, 6, 387–409. [Google Scholar] [CrossRef]

- Moran, M.S.; Inoue, Y.; Barnes, E.M. Opportunities and limitations for image-based remote sensing in precision crop management. Remote Sens. Environ. 1997, 61, 319–346. [Google Scholar] [CrossRef]

- Seelan, S.K.; Laguette, S.; Casady, G.M.; Seielstad, G.A. Remote sensing applications for precision agriculture: A learning community approach. Remote Sens. Environ. 2003, 88, 157–169. [Google Scholar] [CrossRef]

- Barbedo, J.G.A. A review on the use of unmanned aerial vehicles and imaging sensors for monitoring and assessing plant stresses. Drones 2019, 3, 40. [Google Scholar] [CrossRef]

- Mahlein, A.-K.; Oerke, E.-C.; Steiner, U.; Dehne, H.-W. Recent advances in sensing plant diseases for precision crop protection. Eur. J. Plant Pathol. 2012, 133, 197–209. [Google Scholar] [CrossRef]

- Bock, C.H.; Poole, G.H.; Parker, P.E.; Gottwald, T.R. Plant disease severity estimated visually, by digital photography and image analysis, and by hyperspectral imaging. Crit. Rev. Plant Sci. 2010, 29, 59–107. [Google Scholar] [CrossRef]

- Chang, C.Y.; Zhou, R.; Kira, O.; Marri, S.; Skovira, J.; Gu, L.; Sun, Y. An Unmanned Aerial System (UAS) for concurrent measurements of solar-induced chlorophyll fluorescence and hyperspectral reflectance toward improving crop monitoring. Agric. For. Meteorol. 2020, 294, 108145. [Google Scholar] [CrossRef]

- Neupane, K.; Baysal-Gurel, F. Automatic identification and monitoring of plant diseases using unmanned aerial vehicles: A review. Remote Sens. 2021, 13, 3841. [Google Scholar] [CrossRef]

- Boursianis, A.D.; Papadopoulou, M.S.; Diamantoulakis, P.; Liopa-Tsaalidi, A.; Barouchas, P.; Salahas, G.; Karagiannidis, G.; Wan, S.; Goudos, S.K. Internet of Things (IoT) and agricultural unmanned aerial vehicles (UAVs) in smart farming: A comprehensive review. Internet Things 2022, 18, 100187. [Google Scholar] [CrossRef]

- Panday, U.S.; Pratihast, A.K.; Aryal, J.; Kayastha, R.B. A review on drone-based data solutions for cereal crops. Drones 2020, 4, 41. [Google Scholar] [CrossRef]

- Feng, L.; Chen, S.; Zhang, C.; Zhang, Y.; He, Y. A comprehensive review on recent applications of unmanned aerial vehicle remote sensing with various sensors for high-throughput plant phenotyping. Comput. Electron. Agric. 2021, 182, 106033. [Google Scholar] [CrossRef]

- Mahlein, A.-K. Plant disease detection by imaging sensors-Parallels and specific demands for precision agriculture and plant phenotyping. Plant Dis. 2015, 100, 241–251. [Google Scholar] [CrossRef]

- Maes, W.H.; Steppe, K. Perspectives for remote sensing with unmanned aerial vehicles in precision agriculture. Trends Plant Sci. 2019, 24, 152–164. [Google Scholar] [CrossRef]

- Barbedo, J.G.A. Factors influencing the use of deep learning for plant disease recognition. Biosyst. Eng. 2018, 172, 84–91. [Google Scholar] [CrossRef]

- Barbedo, J.G.A. A review on the main challenges in automatic plant disease identification based on visible range images. Biosyst. Eng. 2016, 144, 52–60. [Google Scholar] [CrossRef]

- Bouguettaya, A.; Zarzour, H.; Kechida, A.; Taberkit, A.M. A survey on deep learning-based identification of plant and crop diseases from UAV-based aerial images. Cluster Comp. 2022, 26, 1297–1317. [Google Scholar] [CrossRef] [PubMed]

- Shahi, T.B.; Xu, C.-Y.; Neupane, A.; Guo, W. Recent advances in crop disease detection using UAV and deep learning techniques. Remote Sens. 2023, 15, 2450. [Google Scholar] [CrossRef]

- Kuswidiyanto, L.W.; Noh, H.H.; Han, X.Z. Plant disease diagnosis using deep learning based on aerial hyperspectral images: A review. Remote Sens. 2022, 14, 6031. [Google Scholar] [CrossRef]

- Pickering, C.; Byrne, J. The benefits of publishing systematic quantitative literature reviews for PhD candidates and other early-career researchers. High. Educ. Res. Dev. 2014, 33, 534–548. [Google Scholar] [CrossRef]

- Pickering, C.; Grignon, J.; Steven, R.; Guitart, D.; Byrne, J. Publishing not perishing: How research students transition from novice to knowledgeable using systematic quantitative literature reviews. Stud. High. Educ. 2015, 40, 1756–1769. [Google Scholar] [CrossRef]

- Moher, D.; Liberati, A.; Tetzlaff, J.; Altman, D.G. Preferred reporting items for systematic reviews and meta-analyses: The PRISMA statement. BMJ 2009, 339, b2535. [Google Scholar] [CrossRef]

- R Core Team. R: A Language and Environment for Statistical Computing; R Foundation for Statistical Computing: Vienna, Austria, 2023. [Google Scholar]

- Calderón, R.; Navas-Cortés, J.A.; Lucena, C.; Zarco-Tejada, P.J. High-resolution airborne hyperspectral and thermal imagery for early detection of Verticillium wilt of olive using fluorescence, temperature and narrow-band spectral indices. Remote Sens. Environ. 2013, 139, 231–245. [Google Scholar] [CrossRef]

- Messina, G.; Modica, G. Applications of UAV thermal imagery in precision agriculture: State of the art and future research outlook. Remote Sens. 2020, 12, 1491. [Google Scholar] [CrossRef]

- Sandino, J.; Pegg, G.; Gonzalez, F.; Smith, G. Aerial mapping of forests affected by pathogens using UAVs, hyperspectral sensors, and artificial intelligence. Sensors 2018, 18, 944. [Google Scholar] [CrossRef]

- Chivasa, W.; Mutanga, O.; Biradar, C. UAV-based multispectral phenotyping for disease resistance to accelerate crop improvement under changing climate conditions. Remote Sens. 2020, 12, 2445. [Google Scholar] [CrossRef]

- Gomez Selvaraj, M.; Vergara, A.; Montenegro, F.; Alonso Ruiz, H.; Safari, N.; Raymaekers, D.; Ocimati, W.; Ntamwira, J.; Tits, L.; Omondi, A.B.; et al. Detection of banana plants and their major diseases through aerial images and machine learning methods: A case study in DR Congo and Republic of Benin. ISPRS J. Photogramm. Remote Sens. 2020, 169, 110–124. [Google Scholar] [CrossRef]

- Chivasa, W.; Mutanga, O.; Burgueño, J. UAV-based high-throughput phenotyping to increase prediction and selection accuracy in maize varieties under artificial MSV inoculation. Comput. Electron. Agric. 2021, 184, 106128. [Google Scholar] [CrossRef]

- Alabi, T.R.; Adewopo, J.; Duke, O.P.; Kumar, P.L. Banana mapping in heterogenous smallholder farming systems using high-resolution remote sensing imagery and machine learning models with implications for banana bunchy top disease surveillance. Remote Sens. 2022, 14, 5206. [Google Scholar] [CrossRef]

- Rejeb, A.; Abdollahi, A.; Rejeb, K.; Treiblmaier, H. Drones in agriculture: A review and bibliometric analysis. Comput. Electron. Agric. 2022, 198, 107017. [Google Scholar] [CrossRef]

- Hughes, D.P.; Salathé, M. An open access repository of images on plant health to enable the development of mobile disease diagnostics. arXiv 2016, arXiv:1511.08060. [Google Scholar]

- FAOSTAT. Crops and Livestock Products; The Food and Agriculture Organization of the United Nations (FAO): Rome, Italy, 2023; Available online: https://www.fao.org/faostat/en/#data/QCL (accessed on 14 August 2023).

- Heidarian Dehkordi, R.; El Jarroudi, M.; Kouadio, L.; Meersmans, J.; Beyer, M. Monitoring wheat leaf rust and stripe rust in winter wheat using high-resolution UAV-based Red-Green-Blue imagery. Remote Sens. 2020, 12, 3696. [Google Scholar] [CrossRef]

- Su, J.Y.; Liu, C.J.; Coombes, M.; Hu, X.P.; Wang, C.H.; Xu, X.M.; Li, Q.D.; Guo, L.; Chen, W.H. Wheat yellow rust monitoring by learning from multispectral UAV aerial imagery. Comput. Electron. Agric. 2018, 155, 157–166. [Google Scholar] [CrossRef]

- Bohnenkamp, D.; Behmann, J.; Mahlein, A.K. In-field detection of yellow rust in wheat on the ground canopy and UAV scale. Remote Sens. 2019, 11, 2495. [Google Scholar] [CrossRef]

- Su, J.Y.; Liu, C.J.; Hu, X.P.; Xu, X.M.; Guo, L.; Chen, W.H. Spatio-temporal monitoring of wheat yellow rust using UAV multispectral imagery. Comput. Electron. Agric. 2019, 167, 105035. [Google Scholar] [CrossRef]

- Zhang, X.; Han, L.; Dong, Y.; Shi, Y.; Huang, W.; Han, L.; González-Moreno, P.; Ma, H.; Ye, H.; Sobeih, T. A deep learning-based approach for automated yellow rust disease detection from high-resolution hyperspectral UAV images. Remote Sens. 2019, 11, 1554. [Google Scholar] [CrossRef]

- Guo, A.T.; Huang, W.J.; Dong, Y.Y.; Ye, H.C.; Ma, H.Q.; Liu, B.; Wu, W.B.; Ren, Y.; Ruan, C.; Geng, Y. Wheat yellow rust detection using UAV-based hyperspectral technology. Remote Sens. 2021, 13, 123. [Google Scholar] [CrossRef]

- Pan, Q.; Gao, M.; Wu, P.; Yan, J.; Li, S. A deep-learning-based approach for wheat yellow rust disease recognition from unmanned aerial vehicle images. Sensors 2021, 21, 6540. [Google Scholar] [CrossRef]

- Su, J.Y.; Yi, D.W.; Su, B.F.; Mi, Z.W.; Liu, C.J.; Hu, X.P.; Xu, X.M.; Guo, L.; Chen, W.H. Aerial visual perception in smart farming: Field study of wheat yellow rust monitoring. IEEE Trans. Indus. Inform. 2021, 17, 2242–2249. [Google Scholar] [CrossRef]

- Deng, J.; Zhou, H.R.; Lv, X.; Yang, L.J.; Shang, J.L.; Sun, Q.Y.; Zheng, X.; Zhou, C.Y.; Zhao, B.Q.; Wu, J.C.; et al. Applying convolutional neural networks for detecting wheat stripe rust transmission centers under complex field conditions using RGB-based high spatial resolution images from UAVs. Comput. Electron. Agric. 2022, 200, 107211. [Google Scholar] [CrossRef]

- Zhang, T.; Yang, Z.; Xu, Z.; Li, J. Wheat yellow rust severity detection by efficient DF-Unet and UAV multispectral imagery. IEEE Sens. J. 2022, 22, 9057–9068. [Google Scholar] [CrossRef]

- Liu, W.; Cao, X.R.; Fan, J.R.; Wang, Z.H.; Yan, Z.Y.; Luo, Y.; West, J.S.; Xu, X.M.; Zhou, Y.L. Detecting wheat powdery mildew and predicting grain yield using unmanned aerial photography. Plant Dis. 2018, 102, 1981–1988. [Google Scholar] [CrossRef]

- Vagelas, I.; Cavalaris, C.; Karapetsi, L.; Koukidis, C.; Servis, D.; Madesis, P. Protective effects of Systiva® seed treatment fungicide for the control of winter wheat foliar diseases caused at early stages due to climate change. Agronomy 2022, 12, 2000. [Google Scholar] [CrossRef]

- Francesconi, S.; Harfouche, A.; Maesano, M.; Balestra, G.M. UAV-based thermal, RGB imaging and gene expression analysis allowed detection of Fusarium head blight and gave new insights into the physiological responses to the disease in durum wheat. Front. Plant Sci. 2021, 12, 628575. [Google Scholar] [CrossRef]

- Zhang, H.S.; Huang, L.S.; Huang, W.J.; Dong, Y.Y.; Weng, S.Z.; Zhao, J.L.; Ma, H.Q.; Liu, L.Y. Detection of wheat Fusarium head blight using UAV-based spectral and image feature fusion. Front. Plant Sci. 2022, 13, 4427. [Google Scholar] [CrossRef] [PubMed]

- Van De Vijver, R.; Mertens, K.; Heungens, K.; Nuyttens, D.; Wieme, J.; Maes, W.H.; Van Beek, J.; Somers, B.; Saeys, W. Ultra-high-resolution UAV-based detection of Alternaria solani infections in potato fields. Remote Sens. 2022, 14, 6232. [Google Scholar] [CrossRef]

- Sugiura, R.; Tsuda, S.; Tamiya, S.; Itoh, A.; Nishiwaki, K.; Murakami, N.; Shibuya, Y.; Hirafuji, M.; Nuske, S. Field phenotyping system for the assessment of potato late blight resistance using RGB imagery from an unmanned aerial vehicle. Biosyst. Eng. 2016, 148, 1–10. [Google Scholar] [CrossRef]

- Duarte-Carvajalino, J.M.; Alzate, D.F.; Ramirez, A.A.; Santa-Sepulveda, J.D.; Fajardo-Rojas, A.E.; Soto-Suárez, M. Evaluating late blight severity in potato crops using unmanned aerial vehicles and machine learning algorithms. Remote Sens. 2018, 10, 1513. [Google Scholar] [CrossRef]

- Franceschini, M.H.D.; Bartholomeus, H.; van Apeldoorn, D.F.; Suomalainen, J.; Kooistra, L. Feasibility of unmanned aerial vehicle optical imagery for early detection and severity assessment of late blight in Potato. Remote Sens. 2019, 11, 224. [Google Scholar] [CrossRef]

- Shi, Y.; Han, L.X.; Kleerekoper, A.; Chang, S.; Hu, T.L. Novel CropdocNet model for automated potato late blight disease detection from unmanned aerial vehicle-based hyperspectral imagery. Remote Sens. 2022, 14, 396. [Google Scholar] [CrossRef]

- Siebring, J.; Valente, J.; Franceschini, M.H.D.; Kamp, J.; Kooistra, L. Object-based image analysis applied to low altitude aerial imagery for potato plant trait retrieval and pathogen detection. Sensors 2019, 19, 5477. [Google Scholar] [CrossRef]

- León-Rueda, W.A.; León, C.; Caro, S.G.; Ramírez-Gil, J.G. Identification of diseases and physiological disorders in potato via multispectral drone imagery using machine learning tools. Trop. Plant Pathol. 2022, 47, 152–167. [Google Scholar] [CrossRef]

- Kalischuk, M.; Paret, M.L.; Freeman, J.H.; Raj, D.; Da Silva, S.; Eubanks, S.; Wiggins, D.J.; Lollar, M.; Marois, J.J.; Mellinger, H.C.; et al. An improved crop scouting technique incorporating unmanned aerial vehicle-assisted multispectral crop imaging into conventional scouting practice for gummy stem blight in watermelon. Plant Dis. 2019, 103, 1642–1650. [Google Scholar] [CrossRef]

- Prasad, A.; Mehta, N.; Horak, M.; Bae, W.D. A two-step machine learning approach for crop disease detection using GAN and UAV technology. Remote Sens. 2022, 14, 4765. [Google Scholar] [CrossRef]

- Yağ, İ.; Altan, A. Artificial intelligence-based robust hybrid algorithm design and implementation for real-time detection of plant diseases in agricultural environments. Biology 2022, 11, 1732. [Google Scholar] [CrossRef]

- Xiao, D.Q.; Pan, Y.Q.; Feng, J.Z.; Yin, J.J.; Liu, Y.F.; He, L. Remote sensing detection algorithm for apple fire blight based on UAV multispectral image. Comput. Electron. Agric. 2022, 199, 107137. [Google Scholar] [CrossRef]

- Lei, S.H.; Luo, J.B.; Tao, X.J.; Qiu, Z.X. Remote sensing detecting of yellow leaf disease of arecanut based on UAV multisource sensors. Remote Sens. 2021, 13, 4562. [Google Scholar] [CrossRef]

- Calou, V.B.C.; Teixeira, A.d.S.; Moreira, L.C.J.; Lima, C.S.; de Oliveira, J.B.; de Oliveira, M.R.R. The use of UAVs in monitoring yellow sigatoka in banana. Biosyst. Eng. 2020, 193, 115–125. [Google Scholar] [CrossRef]

- Ye, H.C.; Huang, W.J.; Huang, S.Y.; Cui, B.; Dong, Y.Y.; Guo, A.T.; Ren, Y.; Jin, Y. Recognition of banana fusarium wilt based on UAV remote sensing. Remote Sens. 2020, 12, 938. [Google Scholar] [CrossRef]

- Ye, H.C.; Huang, W.J.; Huang, S.Y.; Cui, B.; Dong, Y.Y.; Guo, A.T.; Ren, Y.; Jin, Y. Identification of banana fusarium wilt using supervised classification algorithms with UAV-based multi-spectral imagery. Int. J. Agric. Biol. Eng. 2020, 13, 136–142. [Google Scholar] [CrossRef]

- Zhang, S.M.; Li, X.H.; Ba, Y.X.; Lyu, X.G.; Zhang, M.Q.; Li, M.Z. Banana fusarium wilt disease detection by supervised and unsupervised methods from UAV-based multispectral imagery. Remote Sens. 2022, 14, 1231. [Google Scholar] [CrossRef]

- Booth, J.C.; Sullivan, D.; Askew, S.A.; Kochersberger, K.; McCall, D.S. Investigating targeted spring dead spot management via aerial mapping and precision-guided fungicide applications. Crop Sci. 2021, 61, 3134–3144. [Google Scholar] [CrossRef]

- Abdulridha, J.; Batuman, O.; Ampatzidis, Y. UAV-based remote sensing technique to detect citrus canker disease utilizing hyperspectral imaging and machine learning. Remote Sens. 2019, 11, 1373. [Google Scholar] [CrossRef]

- DadrasJavan, F.; Samadzadegan, F.; Seyed Pourazar, S.H.; Fazeli, H. UAV-based multispectral imagery for fast citrus greening detection. J. Plant Dis. Prot. 2019, 126, 307–318. [Google Scholar] [CrossRef]

- Pourazar, H.; Samadzadegan, F.; Javan, F.D. Aerial multispectral imagery for plant disease detection: Radiometric calibration necessity assessment. Eur. J. Remote Sens. 2019, 52, 17–31. [Google Scholar] [CrossRef]

- Deng, X.L.; Zhu, Z.H.; Yang, J.C.; Zheng, Z.; Huang, Z.X.; Yin, X.B.; Wei, S.J.; Lan, Y.B. Detection of Citrus Huanglongbing based on multi-input neural network model of UAV hyperspectral remote sensing. Remote Sens. 2020, 12, 2678. [Google Scholar] [CrossRef]

- Garza, B.N.; Ancona, V.; Enciso, J.; Perotto-Baldivieso, H.L.; Kunta, M.; Simpson, C. Quantifying citrus tree health using true color UAV images. Remote Sens. 2020, 12, 170. [Google Scholar] [CrossRef]

- Moriya, É.A.S.; Imai, N.N.; Tommaselli, A.M.G.; Berveglieri, A.; Santos, G.H.; Soares, M.A.; Marino, M.; Reis, T.T. Detection and mapping of trees infected with citrus gummosis using UAV hyperspectral data. Comput. Electron. Agric. 2021, 188, 106298. [Google Scholar] [CrossRef]

- Marin, D.B.; Ferraz, G.A.E.S.; Santana, L.S.; Barbosa, B.D.S.; Barata, R.A.P.; Osco, L.P.; Ramos, A.P.M.; Guimarães, P.H.S. Detecting coffee leaf rust with UAV-based vegetation indices and decision tree machine learning models. Comput. Electron. Agric. 2021, 190, 106476. [Google Scholar] [CrossRef]

- Soares, A.D.S.; Vieira, B.S.; Bezerra, T.A.; Martins, G.D.; Siquieroli, A.C.S. Early detection of coffee leaf rust caused by Hemileia vastatrix using multispectral images. Agronomy 2022, 12, 2911. [Google Scholar] [CrossRef]

- Wang, T.Y.; Thomasson, J.A.; Isakeit, T.; Yang, C.H.; Nichols, R.L. A plant-by-plant method to identify and treat cotton root rot based on UAV remote sensing. Remote Sens. 2020, 12, 2453. [Google Scholar] [CrossRef]

- Wang, T.Y.; Thomasson, J.A.; Yang, C.H.; Isakeit, T.; Nichols, R.L. Automatic classification of cotton root rot disease based on UAV remote sensing. Remote Sens. 2020, 12, 1310. [Google Scholar] [CrossRef]

- Megat Mohamed Nazir, M.N.; Terhem, R.; Norhisham, A.R.; Mohd Razali, S.; Meder, R. Early monitoring of health status of plantation-grown eucalyptus pellita at large spatial scale via visible spectrum imaging of canopy foliage using unmanned aerial vehicles. Forests 2021, 12, 1393. [Google Scholar] [CrossRef]

- di Gennaro, S.F.; Battiston, E.; di Marco, S.; Facini, O.; Matese, A.; Nocentini, M.; Palliotti, A.; Mugnai, L. Unmanned Aerial Vehicle (UAV)-based remote sensing to monitor grapevine leaf stripe disease within a vineyard affected by esca complex. Phytopathol. Mediterr. 2016, 55, 262–275. [Google Scholar] [CrossRef]

- Kerkech, M.; Hafiane, A.; Canals, R. Deep leaning approach with colorimetric spaces and vegetation indices for vine diseases detection in UAV images. Comput. Electron. Agric. 2018, 155, 237–243. [Google Scholar] [CrossRef]

- Kerkech, M.; Hafiane, A.; Canals, R. VddNet: Vine disease detection network based on multispectral images and depth map. Remote Sens. 2020, 12, 3305. [Google Scholar] [CrossRef]

- Dwivedi, R.; Dey, S.; Chakraborty, C.; Tiwari, S. Grape disease detection network based on multi-task learning and attention features. IEEE Sens. J. 2021, 21, 17573–17580. [Google Scholar] [CrossRef]

- Albetis, J.; Duthoit, S.; Guttler, F.; Jacquin, A.; Goulard, M.; Poilvé, H.; Féret, J.B.; Dedieu, G. Detection of Flavescence dorée grapevine disease using Unmanned Aerial Vehicle (UAV) multispectral imagery. Remote Sens. 2017, 9, 308. [Google Scholar] [CrossRef]

- Savian, F.; Martini, M.; Ermacora, P.; Paulus, S.; Mahlein, A.K. Prediction of the kiwifruit decline syndrome in diseased orchards by remote sensing. Remote Sens. 2020, 12, 2194. [Google Scholar] [CrossRef]

- Carmo, G.J.D.; Castoldi, R.; Martins, G.D.; Jacinto, A.C.P.; Tebaldi, N.D.; Charlo, H.C.D.; Zampiroli, R. Detection of lesions in lettuce caused by Pectobacterium carotovorum subsp. carotovorum by supervised classification using multispectral images. Can. J. Remote Sens. 2022, 48, 144–157. [Google Scholar] [CrossRef]

- Stewart, E.L.; Wiesner-Hanks, T.; Kaczmar, N.; DeChant, C.; Wu, H.; Lipson, H.; Nelson, R.J.; Gore, M.A. Quantitative phenotyping of northern leaf blight in uav images using deep learning. Remote Sens. 2019, 11, 2209. [Google Scholar] [CrossRef]

- Wiesner-Hanks, T.; Wu, H.; Stewart, E.L.; DeChant, C.; Kaczmar, N.; Lipson, H.; Gore, M.A.; Nelson, R.J. Millimeter-level plant disease detection from aerial photographs via deep learning and crowdsourced data. Front. Plant Sci. 2019, 10, 1550. [Google Scholar] [CrossRef]

- Wu, H.; Wiesner-Hanks, T.; Stewart, E.L.; DeChant, C.; Kaczmar, N.; Gore, M.A.; Nelson, R.J.; Lipson, H. Autonomous detection of plant disease symptoms directly from aerial imagery. Plant Phenome J. 2019, 2, 1–9. [Google Scholar] [CrossRef]

- Gao, J.M.; Ding, M.L.; Sun, Q.Y.; Dong, J.Y.; Wang, H.Y.; Ma, Z.H. Classification of southern corn rust severity based on leaf-level hyperspectral data collected under solar illumination. Remote Sens. 2022, 14, 2551. [Google Scholar] [CrossRef]

- Oh, S.; Lee, D.Y.; Gongora-Canul, C.; Ashapure, A.; Carpenter, J.; Cruz, A.P.; Fernandez-Campos, M.; Lane, B.Z.; Telenko, D.E.P.; Jung, J.; et al. Tar spot disease quantification using unmanned aircraft systems (UAS) data. Remote Sens. 2021, 13, 2567. [Google Scholar] [CrossRef]

- Ganthaler, A.; Losso, A.; Mayr, S. Using image analysis for quantitative assessment of needle bladder rust disease of Norway spruce. Plant Pathol. 2018, 67, 1122–1130. [Google Scholar] [CrossRef]

- Izzuddin, M.A.; Hamzah, A.; Nisfariza, M.N.; Idris, A.S. Analysis of multispectral imagery from unmanned aerial vehicle (UAV) using object-based image analysis for detection of ganoderma disease in oil palm. J. Oil Palm Res. 2020, 32, 497–508. [Google Scholar] [CrossRef]

- Ahmadi, P.; Mansor, S.; Farjad, B.; Ghaderpour, E. Unmanned aerial vehicle (UAV)-based remote sensing for early-stage detection of Ganoderma. Remote Sens. 2022, 14, 1239. [Google Scholar] [CrossRef]

- Kurihara, J.; Koo, V.C.; Guey, C.W.; Lee, Y.P.; Abidin, H. Early detection of basal stem rot disease in oil palm tree using unmanned aerial vehicle-based hyperspectral imaging. Remote Sens. 2022, 14, 799. [Google Scholar] [CrossRef]

- Cao, F.; Liu, F.; Guo, H.; Kong, W.W.; Zhang, C.; He, Y. Fast detection of Sclerotinia sclerotiorum on oilseed rape leaves using low-altitude remote sensing technology. Sensors 2018, 18, 4464. [Google Scholar] [CrossRef] [PubMed]

- Rangarajan, A.K.; Balu, E.J.; Boligala, M.S.; Jagannath, A.; Ranganathan, B.N. A low-cost UAV for detection of Cercospora leaf spot in okra using deep convolutional neural network. Multimed. Tools Appl. 2022, 81, 21565–21589. [Google Scholar] [CrossRef]

- Di Nisio, A.; Adamo, F.; Acciani, G.; Attivissimo, F. Fast detection of olive trees affected by Xylella fastidiosa from UAVs using multispectral imaging. Sensors 2020, 20, 4915. [Google Scholar] [CrossRef]

- Castrignanò, A.; Belmonte, A.; Antelmi, I.; Quarto, R.; Quarto, F.; Shaddad, S.; Sion, V.; Muolo, M.R.; Ranieri, N.A.; Gadaleta, G.; et al. A geostatistical fusion approach using UAV data for probabilistic estimation of Xylella fastidiosa subsp. pauca infection in olive trees. Sci. Total Environ. 2021, 752, 141814. [Google Scholar] [CrossRef]

- Ksibi, A.; Ayadi, M.; Soufiene, B.O.; Jamjoom, M.M.; Ullah, Z. MobiRes-Net: A hybrid deep learning model for detecting and classifying olive leaf diseases. Appl. Sci. 2022, 12, 10278. [Google Scholar] [CrossRef]

- Alberto, R.T.; Rivera, J.C.E.; Biagtan, A.R.; Isip, M.F. Extraction of onion fields infected by anthracnose-twister disease in selected municipalities of Nueva Ecija using UAV imageries. Spat. Inf. Res. 2020, 28, 383–389. [Google Scholar] [CrossRef]

- McDonald, M.R.; Tayviah, C.S.; Gossen, B.D. Human vs. Machine, the eyes have it. Assessment of Stemphylium leaf blight on onion using aerial photographs from an NIR camera. Remote Sens. 2022, 14, 293. [Google Scholar] [CrossRef]

- Calderón, R.; Montes-Borrego, M.; Landa, B.B.; Navas-Cortés, J.A.; Zarco-Tejada, P.J. Detection of downy mildew of opium poppy using high-resolution multi-spectral and thermal imagery acquired with an unmanned aerial vehicle. Precision Agric. 2014, 15, 639–661. [Google Scholar] [CrossRef]

- Bagheri, N. Application of aerial remote sensing technology for detection of fire blight infected pear trees. Comput. Electron. Agric. 2020, 168, 105147. [Google Scholar] [CrossRef]

- Chen, T.; Yang, W.; Zhang, H.; Zhu, B.; Zeng, R.; Wang, X.; Wang, S.; Wang, L.; Qi, H.; Lan, Y.; et al. Early detection of bacterial wilt in peanut plants through leaf-level hyperspectral and unmanned aerial vehicle data. Comput. Electron. Agric. 2020, 177, 105708. [Google Scholar] [CrossRef]

- Li, F.D.; Liu, Z.Y.; Shen, W.X.; Wang, Y.; Wang, Y.L.; Ge, C.K.; Sun, F.G.; Lan, P. A remote sensing and airborne edge-computing based detection system for pine wilt disease. IEEE Access 2021, 9, 66346–66360. [Google Scholar] [CrossRef]

- Wu, B.Z.; Liang, A.J.; Zhang, H.F.; Zhu, T.F.; Zou, Z.Y.; Yang, D.M.; Tang, W.Y.; Li, J.; Su, J. Application of conventional UAV-based high-throughput object detection to the early diagnosis of pine wilt disease by deep learning. For. Ecol. Manag. 2021, 486, 118986. [Google Scholar] [CrossRef]

- Yu, R.; Ren, L.L.; Luo, Y.Q. Early detection of pine wilt disease in Pinus tabuliformis in North China using a field portable spectrometer and UAV-based hyperspectral imagery. For. Ecosyst. 2021, 8, 44. [Google Scholar] [CrossRef]

- Liang, D.; Liu, W.; Zhao, L.; Zong, S.; Luo, Y. An improved convolutional neural network for plant disease detection using unmanned aerial vehicle images. Nat. Environ. Pollut. Technol. 2022, 21, 899–908. [Google Scholar] [CrossRef]

- Yu, R.; Huo, L.N.; Huang, H.G.; Yuan, Y.; Gao, B.T.; Liu, Y.J.; Yu, L.F.; Li, H.A.; Yang, L.Y.; Ren, L.L.; et al. Early detection of pine wilt disease tree candidates using time-series of spectral signatures. Front. Plant Sci. 2022, 13, 1000093. [Google Scholar] [CrossRef]

- Smigaj, M.; Gaulton, R.; Suárez, J.C.; Barr, S.L. Canopy temperature from an Unmanned Aerial Vehicle as an indicator of tree stress associated with red band needle blight severity. For. Ecol. Manag. 2019, 433, 699–708. [Google Scholar] [CrossRef]

- Dang, L.M.; Hassan, S.I.; Suhyeon, I.; Sangaiah, A.K.; Mehmood, I.; Rho, S.; Seo, S.; Moon, H. UAV based wilt detection system via convolutional neural networks. Sustain. Comput.-Infor. 2020, 28, 100250. [Google Scholar] [CrossRef]

- Dang, L.M.; Wang, H.; Li, Y.; Min, K.; Kwak, J.T.; Lee, O.N.; Park, H.; Moon, H. Fusarium wilt of radish detection using RGB and near infrared images from unmanned aerial vehicles. Remote Sens. 2020, 12, 2863. [Google Scholar] [CrossRef]

- Zhang, D.; Zhou, X.; Zhang, J.; Lan, Y.; Xu, C.; Liang, D. Detection of rice sheath blight using an unmanned aerial system with high-resolution color and multispectral imaging. PLoS ONE 2018, 13, e0187470. [Google Scholar] [CrossRef] [PubMed]

- Kharim, M.N.A.; Wayayok, A.; Fikri Abdullah, A.; Rashid Mohamed Shariff, A.; Mohd Husin, E.; Razif Mahadi, M. Predictive zoning of pest and disease infestations in rice field based on UAV aerial imagery. Egypt. J. Remote Sens. Space Sci. 2022, 25, 831–840. [Google Scholar] [CrossRef]

- Tetila, E.C.; Machado, B.B.; Menezes, G.K.; Da Silva Oliveira, A., Jr.; Alvarez, M.; Amorim, W.P.; De Souza Belete, N.A.; Da Silva, G.G.; Pistori, H. Automatic recognition of soybean leaf diseases using UAV images and deep convolutional neural networks. IEEE Geosci. Remote Sens. 2020, 17, 903–907. [Google Scholar] [CrossRef]

- Castelao Tetila, E.; Brandoli Machado, B.; Belete, N.A.D.S.; Guimaraes, D.A.; Pistori, H. Identification of soybean foliar diseases using unmanned aerial vehicle images. IEEE Geosci. Remote Sens. 2017, 14, 2190–2194. [Google Scholar] [CrossRef]

- Babu, R.G.; Chellaswamy, C. Different stages of disease detection in squash plant based on machine learning. J. Biosci. 2022, 47, 9. [Google Scholar] [CrossRef] [PubMed]

- Jay, S.; Comar, A.; Benicio, R.; Beauvois, J.; Dutartre, D.; Daubige, G.; Li, W.; Labrosse, J.; Thomas, S.; Henry, N.; et al. Scoring Cercospora leaf spot on sugar beet: Comparison of UGV and UAV phenotyping systems. Plant Phenomics 2020, 2020, 9452123. [Google Scholar] [CrossRef]

- Rao Pittu, V.S. Image processing system integrated multicopter for diseased area and disease recognition in agricultural farms. Int. J. Control Autom. 2020, 13, 219–230. [Google Scholar]

- Rao Pittu, V.S.; Gorantla, S.R. Diseased area recognition and pesticide spraying in farming lands by multicopters and image processing system. J. Eur. Syst. Autom. 2020, 53, 123–130. [Google Scholar] [CrossRef]

- Görlich, F.; Marks, E.; Mahlein, A.K.; König, K.; Lottes, P.; Stachniss, C. UAV-based classification of Cercospora leaf spot using RGB images. Drones 2021, 5, 34. [Google Scholar] [CrossRef]

- Gunder, M.; Yamati, F.R.I.; Kierdorf, J.; Roscher, R.; Mahlein, A.K.; Bauckhage, C. Agricultural plant cataloging and establishment of a data framework from UAV-based crop images by computer vision. Gigascience 2022, 11, giac054. [Google Scholar] [CrossRef]

- Ispizua Yamati, F.R.; Barreto, A.; Günder, M.; Bauckhage, C.; Mahlein, A.K. Sensing the occurrence and dynamics of Cercospora leaf spot disease using UAV-supported image data and deep learning. Zuckerindustrie 2022, 147, 79–86.

- Joalland, S.; Screpanti, C.; Varella, H.V.; Reuther, M.; Schwind, M.; Lang, C.; Walter, A.; Liebisch, F. Aerial and ground based sensing of tolerance to beet cyst nematode in sugar beet. Remote Sens. 2018, 10, 787.

- Narmilan, A.; Gonzalez, F.; Salgadoe, A.S.A.; Powell, K. Detection of white leaf disease in sugarcane using machine learning techniques over UAV multispectral images. Drones 2022, 6, 230.

- Xu, Y.P.; Shrestha, V.; Piasecki, C.; Wolfe, B.; Hamilton, L.; Millwood, R.J.; Mazarei, M.; Stewart, C.N. Sustainability trait modeling of field-grown switchgrass (Panicum virgatum) using UAV-based imagery. Plants 2021, 10, 2726.

- Zhao, X.H.; Zhang, J.C.; Tang, A.L.; Yu, Y.F.; Yan, L.J.; Chen, D.M.; Yuan, L. The stress detection and segmentation strategy in tea plant at canopy level. Front. Plant Sci. 2022, 13, 9054.

- Yamamoto, K.; Togami, T.; Yamaguchi, N. Super-resolution of plant disease images for the acceleration of image-based phenotyping and vigor diagnosis in agriculture. Sensors 2017, 17, 2557.

- Abdulridha, J.; Ampatzidis, Y.; Kakarla, S.C.; Roberts, P. Detection of target spot and bacterial spot diseases in tomato using UAV-based and benchtop-based hyperspectral imaging techniques. Precis. Agric. 2020, 21, 955–978.

- Abdulridha, J.; Ampatzidis, Y.; Qureshi, J.; Roberts, P. Laboratory and UAV-based identification and classification of tomato yellow leaf curl, bacterial spot, and target spot diseases in tomato utilizing hyperspectral imaging and machine learning. Remote Sens. 2020, 12, 2732.

- Abdulridha, J.; Ampatzidis, Y.; Qureshi, J.; Roberts, P. Identification and classification of downy mildew severity stages in watermelon utilizing aerial and ground remote sensing and machine learning. Front. Plant Sci. 2022, 13, 791018.

- Lu, J.; Tan, L.; Jiang, H. Review on convolutional neural network (CNN) applied to plant leaf disease classification. Agriculture 2021, 11, 707.

- Frankelius, P.; Norrman, C.; Johansen, K. Agricultural Innovation and the Role of Institutions: Lessons from the Game of Drones. J. Agric. Environ. Ethics 2019, 32, 681–707.

- Ayamga, M.; Tekinerdogan, B.; Kassahun, A. Exploring the challenges posed by regulations for the use of drones in agriculture in the African context. Land 2021, 10, 164.

- Jeanneret, C.; Rambaldi, G. Drone Governance: A Scan of Policies, Laws and Regulations Governing the Use of Unmanned Aerial Vehicles (UAVs) in 79 ACP Countries; CTA Working Paper; 16/12; CTA: Wageningen, The Netherlands, 2016; Available online: https://hdl.handle.net/10568/90121 (accessed on 14 August 2023).

- Maimaitijiang, M.; Sagan, V.; Sidike, P.; Hartling, S.; Esposito, F.; Fritschi, F.B. Soybean yield prediction from UAV using multimodal data fusion and deep learning. Remote Sens. Environ. 2020, 237, 111599.

- Maimaitijiang, M.; Sagan, V.; Sidike, P.; Daloye, A.M.; Erkbol, H.; Fritschi, F.B. Crop monitoring using satellite/UAV data fusion and machine learning. Remote Sens. 2020, 12, 1357.

- Alvarez-Vanhard, E.; Corpetti, T.; Houet, T. UAV & satellite synergies for optical remote sensing applications: A literature review. Sci. Remote Sens. 2021, 3, 100019.

- Cardoso, R.M.; Pereira, T.S.; Facure, M.H.M.; dos Santos, D.M.; Mercante, L.A.; Mattoso, L.H.C.; Correa, D.S. Current progress in plant pathogen detection enabled by nanomaterials-based (bio)sensors. Sens. Actuators Rep. 2022, 4, 100068.

| Region | Number of Articles |
|---|---|
| Asia | 43 |
| Europe | 23 |
| North America | 19 |
| South America | 9 |
| Africa | 4 |
| Oceania | 1 |

| Plant | Disease | Related Reviewed Study |
|---|---|---|
| Apple tree | Cedar rust | [64,65] |
| | Scab | [64] |
| | Fire blight | [66] |
| Areca palm | Yellow leaf disease | [67] |
| Banana | Yellow sigatoka | [68] |
| | Xanthomonas wilt of banana | [36] |
| | Banana bunchy top virus | [36,38] |
| | Fusarium wilt | [69,70,71] |
| Bermudagrass | Spring dead spot | [72] |
| Citrus | Citrus canker | [73] |
| | Citrus huanglongbing disease | [74,75,76,77] |
| | Phytophthora foot rot | [77] |
| | Citrus gummosis disease | [78] |
| Coffee | Coffee leaf rust | [79,80] |
| Cotton | Cotton root rot | [81,82] |
| Eucalyptus | Various leaf diseases | [83] |
| Grapevine | Grapevine leaf stripe | [84,85,86,87] |
| | Flavescence dorée phytoplasma | [88] |
| | Black rot | [65,87] |
| | Isariopsis leaf spot | [86,87] |
| Kiwifruit | Kiwifruit decline | [89] |
| Lettuce | Soft rot | [90] |
| Maize | Northern leaf blight | [91,92,93] |
| | Southern leaf blight | [94] |
| | Maize streak virus disease | [35,37] |
| | Tar spot | [95] |
| Norway spruce | Needle bladder rust | [96] |
| Oil palm | Basal stem rot | [97,98,99] |
| Oilseed rape | Sclerotinia | [100] |
| Okra | Cercospora leaf spot | [101] |
| Olive tree | Verticillium wilt | [32] |
| | Xylella fastidiosa | [102,103] |
| | Peacock spot | [104] |
| Onion | Anthracnose-twister | [105] |
| | Stemphylium leaf blight | [106] |
| Opium poppy | Downy mildew | [107] |
| Paperbark tree | Myrtle rust | [34] |
| Peach tree | Fire blight | [108] |
| Peanut | Bacterial wilt | [109] |
| Pine tree | Pine wilt disease | [110,111,112,113,114] |
| | Red band needle blight | [115] |
| Potato | Potato late blight | [57,58,59,60] |
| | Potato early blight | [56] |
| | Potato virus Y | [61] |
| | Vascular wilt | [62] |
| | Soft rot | [61] |
| Radish | Fusarium wilt | [116,117] |
| Rice | Sheath blight | [118] |
| | Bacterial leaf blight | [119] |
| | Bacterial panicle blight | [119] |
| Soybean | Target spot | [120,121] |
| | Powdery mildew | [120,121] |
| Squash | Powdery mildew | [122] |
| Sugar beet | Cercospora leaf spot | [123,124,125,126,127,128] |
| | Anthracnose | [124,125] |
| | Alternaria leaf spot | [124,125] |
| | Beet cyst nematode | [129] |
| Sugarcane | White leaf phytoplasma | [130] |
| Switchgrass | Rust disease | [131] |
| Tea | Anthracnose | [132] |
| Tomato | Bacterial spot | [133,134,135] |
| | Early blight | [133] |
| | Late blight | [133] |
| | Septoria leaf spot | [133] |
| | Tomato mosaic virus | [133] |
| | Leaf mold | [133] |
| | Target leaf spot | [133,134,135] |
| | Tomato yellow leaf curl virus | [133,135] |
| Watermelon | Gummy stem blight | [63] |
| | Anthracnose | [63] |
| | Fusarium wilt | [63] |
| | Phytophthora fruit rot | [63] |
| | Alternaria leaf spot | [63] |
| | Cucurbit leaf crumple | [63] |
| | Downy mildew | [136] |
| Wheat | Yellow rust | [42,43,44,45,46,47,48,49,50,51] |
| | Leaf rust | [42] |
| | Septoria leaf spot | [53] |
| | Powdery mildew | [52] |
| | Tan spot | [53] |
| | Fusarium head blight | [54,55] |

| Method ¹ | Articles per year, 2013–2022 (non-empty years only, in chronological order) | Total |
|---|---|---|
| ANOVA | 1, 1, 3 | 5 |
| Clustering analysis | 2 | 2 |
| Correlation analysis | 2, 1 | 3 |
| Geostatistics/GIS | 1, 1, 1 | 3 |
| Machine learning (ML) ² | 2, 3, 5, 15, 6, 12 | 43 |
| ML/Deep learning ³ | 1, 1, 4, 4, 3, 10 | 23 |
| ML and Deep learning | 1, 1 | 2 |
| ML and regression models | 1 | 1 |
| ML and statistical comparison | 1 | 1 |
| Pixel-wise comparison | 3, 1 | 4 |
| Regression models | 1, 1, 2, 1, 2, 2 | 9 |
| Threshold-based colour analysis | 1, 1 | 2 |
| Vegetation health mapping | 2 | 2 |
| Visual analysis | 1, 1, 1 | 3 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Kouadio, L.; El Jarroudi, M.; Belabess, Z.; Laasli, S.-E.; Roni, M.Z.K.; Amine, I.D.I.; Mokhtari, N.; Mokrini, F.; Junk, J.; Lahlali, R. A Review on UAV-Based Applications for Plant Disease Detection and Monitoring. Remote Sens. 2023, 15, 4273. https://doi.org/10.3390/rs15174273
