Abstract

Integrating multimodal remote sensing data can improve the mapping accuracy of individual trees. Yet one nontrivial issue that previous studies have generally overlooked is the spatial mismatch of individual trees between remote sensing datasets, especially datasets acquired with different imaging modalities. Offsets between the same tree in different datasets, even after each dataset has been geometrically corrected, can lead to substantial inaccuracies in applications. In this study, we propose a novel approach to match individual trees between aerial photographs and airborne LiDAR data. We first leveraged the maximum overlap of tree crowns in a local area to determine the correct and optimal offset vector, and then used this offset vector to rectify the mismatch in individual tree positions. We compared our approach with a commonly used automatic image registration method, using pairing rate (the percentage of correctly paired trees) and matching accuracy (the degree of overlap between correctly paired trees) to measure effectiveness. We evaluated performance across six typical landscapes: broadleaved forest, coniferous forest, mixed forest, roadside trees, garden trees, and parkland trees. Compared to the conventional method, the average pairing rate of individual trees across all six landscapes increased from 91.13% to 100.00% (p = 0.045, t-test), and the average matching accuracy increased from 0.692 ± 0.175 (standard deviation) to 0.861 ± 0.152 (p = 0.017, t-test). Our study demonstrates that the proposed tree-oriented matching approach significantly improves the registration accuracy of individual trees between aerial photographs and airborne LiDAR data.

1. Introduction

Information on individual trees is essential for forest management, biodiversity conservation, and assessment of ecosystem services [1,2,3]. Meanwhile, as a meaningful unit of analysis at a fine scale [4], individual tree level information is crucial for accurate estimation of essential biodiversity variables (EBVs) [5,6,7], such as biomass [8,9,10], structural and chemical properties [11,12], as well as functional diversity [13,14]. However, traditional tree inventory tends to be carried out at the plot or stand level [15,16,17,18] since obtaining individual tree level information over large areas is usually impractical or impossible via ground survey methods due to extremely high cost and labor-intensive fieldwork [19,20,21].

To complement in situ measurements, remote sensing has been commonly used to study individual trees at local or landscape levels over the past two decades [22,23,24]. A critical step before studying individual trees with remote sensing data is delineating individual tree crowns [22,25,26]. High spatial resolution optical remote sensing data (e.g., satellite images, aerial photographs, and drone images) have proven potential for effectively segmenting individual trees [3,23,27,28,29,30,31,32,33,34,35,36,37,38,39]. However, segmenting individual trees in optical images often results in over- or under-segmentation, caused by spectral heterogeneity, noisy pixels (intensity variation), shadows, and the observation and illumination angles during image acquisition [30,31,33,40].

Light detection and ranging (LiDAR) can capture three-dimensional (3D) structural information of trees [41,42,43,44]. With the rapid advancement of LiDAR sensors and the growing availability of LiDAR data, LiDAR has gradually become an indispensable active remote sensing technique for delineating individual trees [45,46,47,48]. Small-footprint airborne [49,50,51,52] and drone (UAV)-based LiDAR data [53,54,55] are the primary LiDAR sources widely used to segment individual trees. Unlike optical sensors, LiDAR is not affected by illumination artifacts such as the shading of shorter trees by their taller neighbors [25,56,57]. For that reason, some existing LiDAR-based individual tree segmentation algorithms have effectively resolved the problem of under-segmentation [58,59,60]. However, these algorithms often detect multiple peaks within a single tree crown, resulting in varying degrees of over-segmentation [61,62]. To address this problem, recent studies have attempted to improve the accuracy of individual tree delineation by integrating optical and LiDAR data [63,64]. These studies have shown that fusing spectral features (from optical images) and structural features (from LiDAR data) can considerably improve the accuracy of delineating individual trees. Consequently, the fusion of multimodal remote sensing data for delineating individual trees has attracted increasing attention in recent years.

Local mismatches (misalignments) between images are often overlooked when multimodal remote sensing data are integrated (e.g., fusing optical and LiDAR data) to identify individual trees [65,66]. Nevertheless, local mismatches, referred to as registration noise or error [67,68], still occur because of differences in acquisition conditions and in the imaging principles of sensors of different modalities [69,70]. Moreover, images of different modalities are more likely to exhibit complex nonlinear deformation and displacement of ground objects relative to each other [71,72,73]. Positional discrepancies between multimodal datasets significantly affect subsequent applications through error propagation [74,75,76,77]. Area-based and feature-based matching are the two approaches commonly used to align remotely sensed images [78].

Area-based matching compares the intensities of corresponding image areas and searches for the best similarity within a template window, but the classic methods are not robust enough for multimodal images with pronounced radiometric differences [79,80]. Although variants of normalized cross-correlation (NCC), an area-based method, such as automatic registration of remote sensing images (ARRSI) and histograms of orientated phase congruency (HOPC), were proposed to enable the NCC framework to register images with nonlinear radiometric differences [81,82], they are limited, respectively, by the assumption of an invariant intensity mapping in the matching process and by specific geometric and radiometric constraints [81,83]. For feature-based matching, the most common method is to identify tie points between the reference image and the candidate image, either by manual selection or by automatic techniques [84]. Manual selection, however, is labor intensive, time consuming, and prone to subjective errors, and is therefore suitable only for small areas or for medium- and low-resolution remote sensing imagery with spatially consistent offsets. Among automatic approaches, the scale-invariant feature transform (SIFT) [85] and its variants [86,87,88,89] are the most commonly used algorithms [90,91]. These algorithms detect corresponding points based on local image features, which makes them difficult or even impossible to apply to multimodal images because of nonlinear radiometric differences [73,92].

In recent years, researchers have attempted to register multimodal images using deep learning [93]. Two main strategies have been explored [72]. The first is deep iterative registration, in which neural networks compute advanced similarity metrics between image pairs to guide iterative optimization [94]; examples include stacked autoencoders [95,96,97], convolutional neural networks (CNNs) [98,99,100], and reinforcement learning [101]. The second is deep transformation estimation, which directly predicts geometric transformation parameters or deformation fields and can be supervised or unsupervised depending on the training strategy [102,103,104,105]. Supervised methods require ground-truth data and use various architectures and loss functions for training. Unsupervised methods build their loss functions from traditional similarity metrics and regularization terms, and use spatial transformer networks to learn transformations end to end, which allows them to adapt to multimodal images. Deep learning-based methods have achieved remarkable progress in remote sensing image registration, but they also face challenges in practicality and generalizability [106]: they require substantial labeled data and computational resources for training and inference. Conversely, traditional approaches, known for their efficiency and simplicity, struggle to match the high accuracy of deep learning models. Therefore, given these trade-offs, it is critical to further refine and expedite image registration, especially for multimodal images.

In this study, we propose a novel tree-oriented approach to match individual trees between aerial photographs and airborne LiDAR data. We first leverage the maximum overlap of tree crowns in a local area to determine the correct and optimal offset vector, and then use this offset vector to rectify the mismatch in individual tree positions. We use pairing rate (the percentage of correctly paired trees) and matching accuracy (the degree of overlap between correctly paired trees) to assess the effectiveness of the proposed approach in matching individual trees and to compare it with a commonly used automatic image registration method.

2. Methods

2.1. Proposed Tree-Oriented Matching Approach

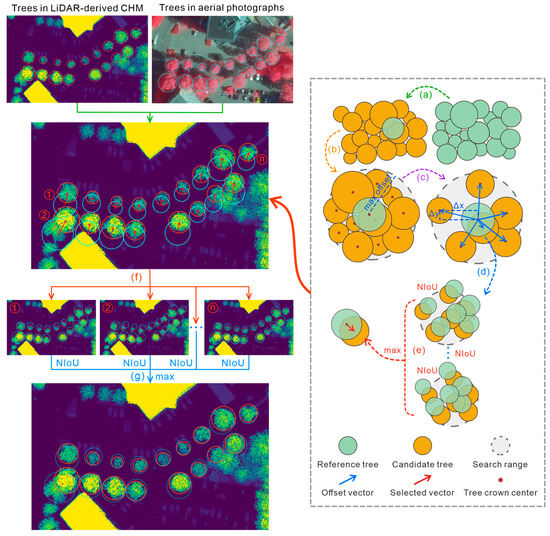

In object-based image analysis (OBIA) [107,110], pixels are first grouped into objects based on either spectral similarity or external variables (such as ownership or geological unit), and these objects serve as new units of analysis, which is a more effective and meaningful way to study targets than using pixels directly [107,108,109]. Delineated individual tree boundaries fit the concept of OBIA, and some structural traits (e.g., tree height, crown size, and diameter at breast height) may be more easily retrieved at the individual tree level [111,112]. Therefore, we propose an individual tree-oriented matching approach to improve the matching accuracy of individual trees between aerial photographs and airborne LiDAR-derived canopy height models (CHMs). The flow diagram of the approach is shown in Figure 1, and each step is described in detail in Algorithm 1.

| Algorithm 1: Pseudocode of the proposed tree-oriented matching approach. |
| --- |
| Procedure: matching individual trees |
| Initialize: minimum and maximum crown area ratio: min_car, max_car; maximum offset: max_offset |
| Input: canopy height models (CHMs) # reference dataset; aerial photographs (ARPs) # candidate dataset |
| # Step 1: Mark individual trees with bounding boxes (delineated manually in this study). CHM_trees <- mark_trees(CHMs); ARP_trees <- mark_trees(ARPs); correct_offset_vectors <- [] |
| # Step 2: Take each tree in the CHMs in turn as the reference tree (Figure 1a). for reference_tree in CHM_trees: search_center <- reference_tree.center |
| # Step 3 (inside the loop): Keep only the trees whose centers lie within max_offset of the reference tree (Figure 1b). candidate_trees <- ARP_trees.within_radius(search_center, max_offset); reference_trees <- CHM_trees.within_radius(search_center, max_offset) |
| # Step 4 (inside the loop): Remove candidate trees whose crown area is much larger or smaller than that of the reference tree (Figure 1c). candidate_trees <- candidate_trees.filter(min_car, max_car, reference_tree.crown_area) |
| # Step 5 (inside the loop): For each candidate tree, compute the offset vector to the reference tree and shift all candidate trees by it (Figure 1d). candidate_rectified_trees <- []; for candidate_tree in candidate_trees: offset_vector <- reference_tree.center - candidate_tree.center; candidate_rectified_trees.append((offset_vector, candidate_trees.rectify_location(offset_vector))) |
| # Step 6 (inside the loop): Keep, as the correct offset vector of the current reference tree, the one whose shifted candidate trees maximize the sum of NIoU with the reference trees (Figure 1e). correct_offset_vectors.append(argmax over (offset_vector, shifted_trees) in candidate_rectified_trees of sum(NIoU(reference_trees, shifted_trees))) |
| # Step 7: Shift the trees in the aerial photographs by each correct offset vector (Figure 1f). ARP_rectified_trees <- []; for correct_offset_vector in correct_offset_vectors: ARP_rectified_trees.append((correct_offset_vector, ARP_trees.rectify_location(correct_offset_vector))) |
| # Step 8: Select the final offset vector, i.e., the one that maximizes the sum of NIoU between the trees in the CHMs and the shifted trees in the aerial photographs (Figure 1g). final_offset_vector <- argmax over (offset_vector, shifted_trees) in ARP_rectified_trees of sum(NIoU(CHM_trees, shifted_trees)) |

Figure 1.

The flow diagram of the proposed tree-oriented matching approach.
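Algorithm 1 can be made concrete with a short, runnable Python sketch. It is a minimal illustration under stated assumptions, not the authors' implementation: trees are axis-aligned bounding boxes in map coordinates, NIoU follows the verbal definition in Section 3.8 (IoU multiplied by the larger-to-smaller area ratio), the "sum of NIoU" between two sets of trees is assumed to credit each reference tree with its best NIoU against any candidate, and the argmax in Steps 6 and 8 is taken over the tested offset vectors. The names `Box`, `niou`, `total_niou`, `within_radius`, and `match_trees` are hypothetical helpers introduced for illustration.

```python
import math
from dataclasses import dataclass

# Hypothetical helpers illustrating Algorithm 1; not the authors' code.


@dataclass(frozen=True)
class Box:
    """Axis-aligned crown bounding box in map units (e.g., metres)."""
    xmin: float
    ymin: float
    xmax: float
    ymax: float

    @property
    def area(self) -> float:
        return (self.xmax - self.xmin) * (self.ymax - self.ymin)

    @property
    def center(self) -> tuple[float, float]:
        return ((self.xmin + self.xmax) / 2, (self.ymin + self.ymax) / 2)

    def shifted(self, dx: float, dy: float) -> "Box":
        return Box(self.xmin + dx, self.ymin + dy, self.xmax + dx, self.ymax + dy)


def niou(a: Box, b: Box) -> float:
    """IoU multiplied by the larger-to-smaller area ratio; always within [0, 1]."""
    iw = max(0.0, min(a.xmax, b.xmax) - max(a.xmin, b.xmin))
    ih = max(0.0, min(a.ymax, b.ymax) - max(a.ymin, b.ymin))
    inter = iw * ih
    if inter == 0.0:
        return 0.0
    iou = inter / (a.area + b.area - inter)
    return iou * max(a.area, b.area) / min(a.area, b.area)


def total_niou(refs: list[Box], cands: list[Box]) -> float:
    """Assumed aggregation: each reference tree contributes its best NIoU."""
    return sum(max((niou(r, c) for c in cands), default=0.0) for r in refs)


def within_radius(trees: list[Box], center: tuple[float, float], radius: float) -> list[Box]:
    return [t for t in trees if math.dist(t.center, center) <= radius]


def match_trees(chm_trees, arp_trees, min_car, max_car, max_offset):
    """Return the final (dx, dy) that moves aerial-photograph trees onto CHM trees."""
    correct_offsets = []
    for ref in chm_trees:                                           # Step 2
        cands = within_radius(arp_trees, ref.center, max_offset)    # Step 3
        refs = within_radius(chm_trees, ref.center, max_offset)
        cands = [c for c in cands                                   # Step 4
                 if min_car <= c.area / ref.area <= max_car]
        best, best_score = None, -1.0
        for cand in cands:                                          # Step 5
            dx = ref.center[0] - cand.center[0]
            dy = ref.center[1] - cand.center[1]
            score = total_niou(refs, [c.shifted(dx, dy) for c in cands])
            if score > best_score:                                  # Step 6
                best, best_score = (dx, dy), score
        if best is not None:
            correct_offsets.append(best)
    # Steps 7-8: shift all aerial-photograph trees by each correct offset and keep
    # the best one; returning (0, 0) when no offset was found is an assumption.
    return max(correct_offsets,
               key=lambda o: total_niou(chm_trees, [t.shifted(*o) for t in arp_trees]),
               default=(0.0, 0.0))


if __name__ == "__main__":
    chm = [Box(0, 0, 4, 4), Box(6, 0, 9, 3), Box(0, 6, 5, 11)]
    arp = [t.shifted(1.5, -0.5) for t in chm]      # simulated local displacement
    print(match_trees(chm, arp, min_car=0.5, max_car=2.0, max_offset=3.0))  # (-1.5, 0.5)
```

In the usage example, the aerial-photograph crowns are the CHM crowns displaced by (1.5, −0.5) m, and the sketch recovers the offset (−1.5, 0.5) m that moves them back. For simplicity it uses every CHM tree as a reference tree; Section 3.10 examines how many reference trees are actually needed.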

2.2. Automatic Image Registration Workflow in ENVI

For comparison with a traditional method, we also used the Automatic Image Registration Workflow module in the mainstream commercial software ENVI (version 5.6.3 (c) 2022 L3Harris Geospatial Solutions, Inc., Manila, Philippines) to geometrically align the two images [113]. We chose the mutual information matching method (developed for images of different modalities) to automatically generate tie points and kept the other parameters at their defaults. After the tie points were generated, we removed obviously erroneous ones and then registered the warp image using the remaining points. Finally, we delineated bounding boxes of individual trees in the registered images to compare the effectiveness of both methods.
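The mutual information criterion chosen above can be illustrated with a NumPy sketch of its textbook definition, computed from the joint intensity histogram of two co-registered image patches. ENVI's internal implementation (binning, sampling, search strategy) is not described in this study, so this is an illustration only; the function name `mutual_information` and the default of 32 bins are hypothetical choices.

```python
import numpy as np


def mutual_information(a: np.ndarray, b: np.ndarray, bins: int = 32) -> float:
    """Mutual information (nats) between two equally sized, co-registered patches.

    Hypothetical helper showing the textbook definition; not ENVI's implementation.
    """
    joint, _, _ = np.histogram2d(a.ravel(), b.ravel(), bins=bins)
    pxy = joint / joint.sum()                  # joint probability of intensity pairs
    px = pxy.sum(axis=1, keepdims=True)        # marginal distribution of a
    py = pxy.sum(axis=0, keepdims=True)        # marginal distribution of b
    expected = px * py                         # joint probability if independent
    nz = pxy > 0                               # empty bins contribute 0 (0 * log 0 = 0)
    return float(np.sum(pxy[nz] * np.log(pxy[nz] / expected[nz])))


if __name__ == "__main__":
    patch = np.arange(64, dtype=float).reshape(8, 8)
    print(mutual_information(patch, patch))             # log(32): two values per bin
    print(mutual_information(patch, 255 - patch ** 2))  # nonlinear mapping: still > 0
    print(mutual_information(patch, np.zeros((8, 8))))  # constant image: 0.0
```

Because mutual information measures how predictable one image's intensities are from the other's, rather than whether the intensities are similar, it remains high under a consistent but nonlinear intensity mapping, which is why it is favored for multimodal image pairs.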

3. Experiments

3.1. Experimental Site

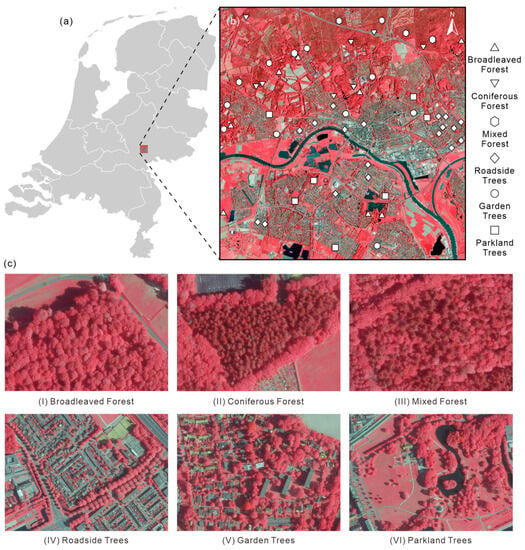

The experimental site is Arnhem, the Netherlands (Figure 2). Arnhem is a city and municipality in the central part of the Netherlands that contains many parks and forests. The northern corner of the municipality is part of the Veluwe, the largest forest area in the Netherlands. Following the definitions of the Food and Agriculture Organization of the United Nations (FAO) [114] and the CORINE land cover classification scheme [115], we divided the forest landscapes in the study area into broadleaved forest (>75% cover of broadleaved trees), coniferous forest (>75% cover of coniferous trees), and mixed forest (both coniferous and broadleaved trees account for more than 25% of cover). Furthermore, because groups of individual trees in the city differ in layout, density, species richness, and context, we also chose three urban landscape types: roadside trees, garden trees, and parkland trees. Specifically, roadside trees regularly line roads and are often of the same species, with similar height and crown size within a local area (4–16 trees per 100 m of linear feature [116]); garden trees, planted in gardens in front of or behind houses or other buildings, are often of various species and vary in height and crown size (but number > 25 trees ha−1 [117]); and parkland trees, planted according to the layout of the parkland, are species-rich and usually consist of large, widely spaced trees separated by grass areas (>18 trees ha−1). Samples of individual trees were chosen from these six landscapes to represent the diversity and complexity of tree distributions across different levels of tree cover, so as to assess the effectiveness and robustness of our proposed approach.
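The three forest classes follow simple cover thresholds. As a minimal sketch, assuming a stand is described by its broadleaved and coniferous tree cover (a hypothetical input format), the rule can be written as follows; the function name `forest_type` is hypothetical, and assigning exact 25%/75% splits to mixed forest is an assumption, since the definitions above leave that boundary open.

```python
def forest_type(broadleaved_cover: float, coniferous_cover: float) -> str:
    """Classify a forest stand from its broadleaved and coniferous tree cover.

    Hypothetical helper: >75% broadleaved -> broadleaved forest,
    >75% coniferous -> coniferous forest, otherwise mixed forest.
    """
    total = broadleaved_cover + coniferous_cover
    if total <= 0:
        raise ValueError("stand has no tree cover")
    broadleaved_share = broadleaved_cover / total
    if broadleaved_share > 0.75:
        return "broadleaved forest"
    if broadleaved_share < 0.25:          # i.e., coniferous share above 75%
        return "coniferous forest"
    return "mixed forest"                 # exact 25/75 splits land here (assumption)


print(forest_type(0.5, 0.5))  # mixed forest
```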

Figure 2.

Location of the experimental site and the distribution of sample trees within the six typical landscape areas. (a) The location of the city of Arnhem in the Netherlands, (b) the distribution of sample trees selected in the typical landscape areas, and (c) sample landscapes from the aerial photographs of the study area.

3.2. Aerial Photographs

Since 2012, the Dutch government has conducted country-wide aerial photography campaigns twice a year, once in winter (leaf-off season) and once in summer (leaf-on season). The summer aerial photographs have four bands (red, green, blue, and infrared) and a spatial resolution of 25 cm, whereas the winter aerial photographs have a spatial resolution of 8 cm but only three bands (red, green, and blue). Both datasets are openly accessible through a web map service (WMS) (opendata.beeldmateriaal.nl). The aerial photographs used in this study were captured in summer 2020, for two reasons. First, their higher image clarity and stability help us delineate tree canopies (the comparison is shown in Figure S1). Second, because the LiDAR data were acquired in 2018 (Section 3.3), using photographs from a different year better simulates real-world applications, in which data from different sensors, mounted on different platforms, are often acquired on different dates.

3.3. Airborne LiDAR Data

The airborne LiDAR point cloud data were obtained from the third national airborne LiDAR campaign of the Netherlands (Actueel Hoogtebestand Nederland 3, AHN3), which is openly accessible via the online repository PDOK (app.pdok.nl/ahn3-downloadpage). The AHN3 data of our study area were acquired during the leaf-on season in 2018 (ahn.nl/kwaliteitsbeschrijving). The average point density of AHN3 is between 6 and 10 points m−2, and the point density over individual trees can reach approximately 40 points m−2 owing to the penetration capability of LiDAR.

3.4. Generation of Canopy Height Models from Airborne LiDAR Data

Canopy height models (CHMs) derived from LiDAR point cloud data are commonly used to delineate the boundaries of individual tree crowns [49,118,119]; a CHM is obtained by subtracting a digital terrain model (DTM) from a digital surface model (DSM) [120,121,122]. To match the spatial resolution of the aerial photographs, we processed the AHN3 LiDAR point cloud to generate CHMs with a spatial resolution of 25 cm. To obtain CHMs with clearer tree crown boundaries, we applied the following processing chain. First, the points classified as “unclassified” were extracted from the original AHN3 data and normalized to eliminate the influence of topographic relief on their elevation values: the elevation of the nearest ground point was subtracted from the elevation of each point. Then, points with a normalized elevation below 25% of the mean elevation of the clipped point cloud were eliminated, and outliers were removed [123,124]. Finally, the CHMs were generated with inverse distance weighting (IDW), a widely used deterministic spatial interpolation method that relies on the first law of geography [125,126,127]. All of the above processing was implemented in the software LiDAR360 (version 4.1).
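The normalization, filtering, and interpolation steps can be sketched with NumPy. This is not LiDAR360's implementation: the point arrays (x, y, z columns, with ground points and "unclassified" points already separated), the brute-force nearest-ground search, the IDW power of 2, and the 1 m search radius are assumptions chosen for illustration, outlier removal is omitted, and the function names are hypothetical.

```python
import numpy as np

# Hypothetical helpers sketching the CHM processing chain; not LiDAR360's code.


def normalize_heights(points: np.ndarray, ground: np.ndarray) -> np.ndarray:
    """Subtract the elevation of the nearest ground point (in x, y) from each point.

    Both arrays have shape (n, 3) with columns x, y, z. The brute-force search is
    fine for a small clip; a k-d tree would be used for large point clouds.
    """
    d2 = ((points[:, None, :2] - ground[None, :, :2]) ** 2).sum(axis=2)
    nearest = d2.argmin(axis=1)
    normalized = points.astype(float)          # copy so the input is untouched
    normalized[:, 2] -= ground[nearest, 2]
    return normalized


def drop_low_points(points: np.ndarray, fraction: float = 0.25) -> np.ndarray:
    """Remove points whose normalized height is below `fraction` of the mean height."""
    return points[points[:, 2] >= fraction * points[:, 2].mean()]


def idw_chm(points, xmin, ymin, ncols, nrows, cell=0.25, power=2.0, radius=1.0):
    """Rasterize heights by inverse distance weighting at cell centres.

    Row 0 is the northern edge; cells with no point within `radius` are NaN.
    """
    chm = np.full((nrows, ncols), np.nan)
    for r in range(nrows):
        for c in range(ncols):
            cx = xmin + (c + 0.5) * cell
            cy = ymin + (nrows - r - 0.5) * cell
            d = np.hypot(points[:, 0] - cx, points[:, 1] - cy)
            near = d <= radius
            if not near.any():
                continue
            if np.any(d[near] == 0):           # a point lies exactly on the centre
                chm[r, c] = points[near][d[near] == 0, 2].mean()
            else:
                w = 1.0 / d[near] ** power
                chm[r, c] = np.sum(w * points[near, 2]) / w.sum()
    return chm
```

The 25 cm default cell size mirrors the resolution of the summer aerial photographs; the double loop keeps the interpolation readable rather than fast.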

3.5. Delineating Individual Tree Crowns from Aerial Photographs and CHMs

In this study, we used manually delineated individual trees as samples to verify and test our proposed approach, which avoids the omission and commission errors of automatic tree detection algorithms. To improve the reliability of the crown boundaries delineated in the aerial photographs and CHMs, the higher-resolution (8 cm) aerial photographs and the LiDAR point cloud, with its 3D structural information, were used as reference images. To ensure the representativeness of the samples, we selected individual trees evenly in space and in number across the landscape types. Specifically, we first delineated the boundaries of individual trees in the aerial photographs and then delineated the boundaries of the same trees in the CHMs, guided by the boundaries sketched in the aerial photographs. The number of individual trees sketched in each landscape and the area of each landscape are shown in Table 1.

Table 1.

The number of individual trees manually delineated from aerial photographs and LiDAR-derived CHMs, together with the corresponding number of sample plots and total area, for each landscape.

The number of delineated individual trees differs slightly between the aerial photographs and the LiDAR-derived CHMs, with more tree crowns sketched in the CHMs. To validate the proposed approach, we chose trees that could be delineated in both the aerial photograph and the CHM, and assigned the same ID number to the same tree in both datasets by editing the vectors in QGIS, thereby establishing which tree pairs match correctly. In other words, a pair of trees is considered correctly matched when both trees have the same ID number. The percentage of correctly matched trees is used as the pairing rate of our proposed approach (Section 3.9).

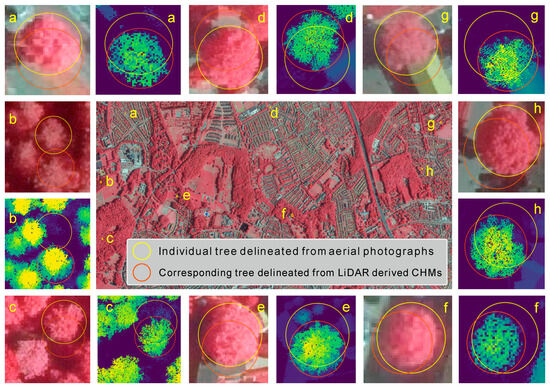

3.6. Visualization of Individual Tree Mismatches between Aerial Photographs and CHMs

Figure 3 shows examples of spatial mismatches of individual trees in the manually delineated datasets, caused by local displacement between the aerial photographs and the CHMs. These mismatches vary spatially in both distance and direction and therefore cannot be corrected directly by traditional global geometric correction of images [69,128,129,130].

Figure 3.

Examples of the spatial mismatches between individual trees resulting from local displacement between aerial photographs and the canopy height models (CHMs) generated from the airborne LiDAR point cloud data in the study area. Yellow and red circles with the same letter have the same geographical location.

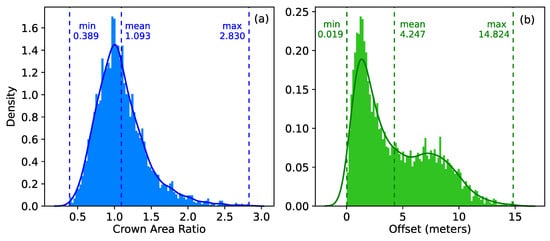

3.7. Statistical Description on Mismatch of Individual Trees

We used the crown area ratio and the offset to characterize the mismatch of individual trees between the aerial photographs and the CHMs (the airborne LiDAR point cloud data were treated as the reference data in this study) (Figure 4). The crown area ratio is defined as the crown area of an individual tree in the aerial photographs divided by the crown area of the corresponding tree in the CHMs. The offset is defined as the distance between the centers of two matching trees in the aerial photographs and the CHMs. We also summarized the original offsets of individual trees in each landscape (Figure S2).
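Both mismatch statistics are straightforward once each tree is represented by a bounding box, as in Algorithm 1. A minimal sketch, assuming boxes given as hypothetical (xmin, ymin, xmax, ymax) tuples in metres, with the CHM tree as the reference; the helper names are illustrative only:

```python
import math

# Hypothetical box layout: (xmin, ymin, xmax, ymax) in map units.
Box = tuple[float, float, float, float]


def area(b: Box) -> float:
    return (b[2] - b[0]) * (b[3] - b[1])


def center(b: Box) -> tuple[float, float]:
    return ((b[0] + b[2]) / 2, (b[1] + b[3]) / 2)


def crown_area_ratio(arp: Box, chm: Box) -> float:
    """Crown area in the aerial photograph divided by crown area in the CHM."""
    return area(arp) / area(chm)


def offset(arp: Box, chm: Box) -> float:
    """Euclidean distance between the two crown centres (same units as the boxes)."""
    return math.dist(center(arp), center(chm))


print(crown_area_ratio((0, 0, 6, 6), (0, 0, 4, 4)))  # 2.25
print(offset((3, 4, 7, 8), (0, 0, 4, 4)))            # 5.0
```

A ratio above 1 means the crown appears larger in the aerial photograph than in the CHM.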

Figure 4.

Statistical description of the mismatch of individual trees between aerial photographs and CHMs before offset rectification. (a) Density histograms of the ratio of the crown area of individual trees in aerial photographs to that of the corresponding trees in CHMs, and (b) density histograms of the offset between the centers of two matching trees from the aerial photographs and CHMs, respectively. The bars, vertical dotted lines, and curves represent the density, mean, and density distribution curve of the corresponding metrics, respectively.

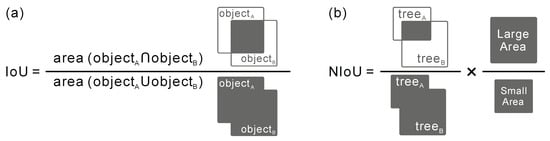

3.8. Normalized Intersection over Union

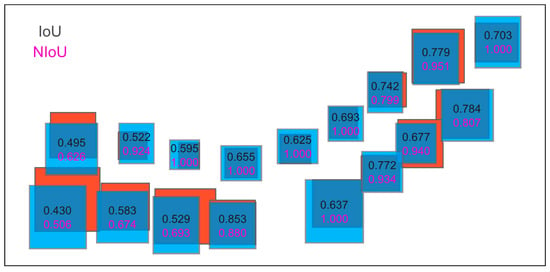

The intersection over union (IoU, Figure 5a) is commonly used to evaluate the performance of an object detector on a specific dataset [131,132,133]. In object detection and segmentation, IoU quantifies the degree of overlap between the target bounding box and the predicted bounding box [134,135,136]: the larger the overlapping area, the greater the IoU. In most object-matching applications, the bounding boxes being compared are of the same size. In our study, however, the bounding box of a tree in the aerial photograph and that of the corresponding tree in the CHMs differed in size for most tree pairs (Figure 4a), which biases matching accuracy evaluated with IoU: even when the smaller crown lies entirely inside the larger one, IoU stays below 1. In response, we proposed a normalized intersection over union (NIoU) that eliminates the impact of area differences on IoU by multiplying IoU by the ratio of the area of the larger tree to that of the smaller one (Figure 5b). NIoU ranges from 0 to 1; the closer the value is to 1, the higher the degree of overlap between the two trees. We computed IoU and NIoU for an individual roadside tree sample to show how the two metrics differ in measuring the overlap between trees in both datasets (Figure 6). In this study, NIoU served two functions: (1) selecting the correct or optimal offset vector, both when determining the correct offset of a particular tree and when determining the final offset of the trees in a region; and (2) evaluating the matching accuracy after rectifying the offsets of individual trees in the two images.

Figure 5.

The formula and schematic diagram of intersection over union (IoU (a)) and normalized intersection over union (NIoU (b)).

Figure 6.

Comparison of IoU and NIoU in measuring overlap between trees. The numbers over the bounding boxes represent the corresponding values.
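The verbal definition of NIoU can be written out as follows, which also shows why its value cannot exceed 1. Here A and B denote the crown (bounding box) areas of a tree in the CHM and in the aerial photograph, and I the area of their intersection; these symbols are introduced for illustration, and Figure 5 remains the reference for the exact formula.

$$
\mathrm{IoU} = \frac{I}{A + B - I}, \qquad
\mathrm{NIoU} = \mathrm{IoU} \cdot \frac{\max(A, B)}{\min(A, B)}
$$

Because the intersection cannot exceed the smaller area and the union cannot be smaller than the larger area,

$$
I \le \min(A, B), \quad A + B - I \ge \max(A, B)
\;\Longrightarrow\;
\mathrm{IoU} \le \frac{\min(A, B)}{\max(A, B)}
\;\Longrightarrow\;
0 \le \mathrm{NIoU} \le 1 .
$$

NIoU equals 1 exactly when the smaller crown lies entirely within the larger one. For example, a 4 m² box lying inside a 16 m² box gives IoU = 4/16 = 0.25, whereas NIoU = 0.25 × 16/4 = 1.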

3.9. Accuracy Assessment

In our preliminary experiment, we found that some trees were assigned to non-matching trees when NIoU was calculated, especially in coniferous forest (Figure 3b). This occurs because the algorithm treats the neighboring candidate tree with the maximum NIoU relative to the reference tree as its match. Therefore, we first used the pairing rate (the percentage of correctly paired trees) to identify trees that were not in fact paired with their true counterparts (Equation (1)):

$$\text{Pairing rate} = \frac{N_{\text{same\_ID}}}{N} \times 100\% \tag{1}$$

where N_same_ID represents the number of tree pairs with the same ID number when the NIoU of individual trees between the two datasets is calculated, and N is the total number of tree pairs. We then define the matching accuracy (Equation (2)) as the mean NIoU of the remaining, correctly paired tree pairs, which measures the degree of overlap between correctly paired trees:

$$\text{Matching accuracy} = \frac{\sum \text{NIoU}}{N_{\text{same\_ID}}} \tag{2}$$

where ΣNIoU is the sum of NIoU over the correctly paired tree pairs. In addition, density histograms with density curves of the NIoU of matching tree pairs were used to compare the results before and after offset rectification more intuitively.
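Equations (1) and (2) reduce to a few lines of Python. In this sketch, each evaluated tree pair is assumed to be a (reference ID, matched candidate ID, NIoU) tuple, a hypothetical layout, and the matching accuracy averages NIoU over the correctly paired trees only; the function names are illustrative.

```python
def pairing_rate(pairs: list[tuple[int, int, float]]) -> float:
    """Equation (1): percentage of pairs whose two trees share the same ID."""
    same = sum(1 for ref_id, cand_id, _ in pairs if ref_id == cand_id)
    return 100.0 * same / len(pairs)


def matching_accuracy(pairs: list[tuple[int, int, float]]) -> float:
    """Equation (2): mean NIoU over the correctly paired trees only."""
    values = [v for ref_id, cand_id, v in pairs if ref_id == cand_id]
    return sum(values) / len(values) if values else float("nan")


pairs = [(1, 1, 0.75), (2, 2, 0.5), (3, 4, 0.9), (4, 4, 1.0)]  # tree 3 mismatched
print(pairing_rate(pairs), matching_accuracy(pairs))  # 75.0 0.75
```

The mismatched pair (3, 4) lowers the pairing rate but is excluded from the matching accuracy, which is why the two indices are reported separately.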

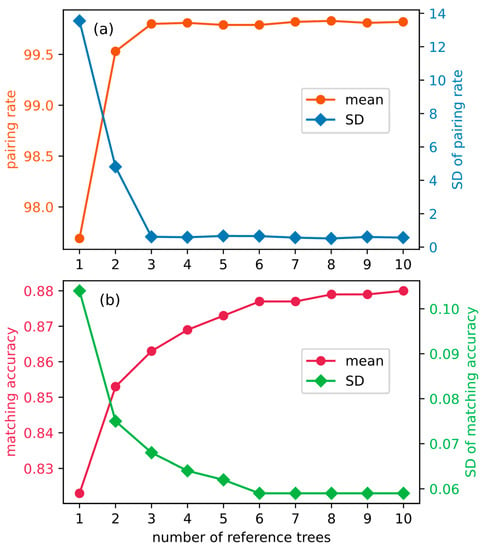

3.10. Parameter Tuning

The key parameter of our approach is the number of reference trees used to calculate, select, and determine the final offset vector. Hence, we tested the pairing rate and matching accuracy as the number of reference trees increased from one to ten. For each tested number, we randomly selected that many trees from the CHMs as reference trees, calculated their correct offset vectors, and then chose the final offset vector to rectify the trees in the aerial photographs (the core steps are the same as those of our proposed approach in Section 2.1). We repeated these steps with 20 different random seeds for all samples in the six landscapes (Figure 2b) and calculated the mean pairing rate and matching accuracy and their standard deviations.
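The tuning protocol can be sketched as follows. The `evaluate` callback is hypothetical and stands in for one run of the matching approach with a given set of reference trees, returning a score such as the pairing rate; the study does not state whether the reported SD is the sample or population standard deviation, so the sketch assumes the sample SD.

```python
import random
import statistics


def tune_reference_count(chm_trees, evaluate, counts=range(1, 11), seeds=range(20)):
    """Average a score over random draws of n reference trees, for each n in counts.

    `evaluate(reference_trees)` is a hypothetical callback that runs the matching
    approach and returns a score. Returns {n: (mean, sample standard deviation)}.
    """
    results = {}
    for n in counts:
        scores = []
        for seed in seeds:
            rng = random.Random(seed)           # one seeded draw per repetition
            refs = rng.sample(chm_trees, n)     # n distinct reference trees
            scores.append(evaluate(refs))
        results[n] = (statistics.mean(scores), statistics.stdev(scores))
    return results
```

Seeding each repetition makes the experiment reproducible: rerunning with the same trees and seeds yields identical means and SDs.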

4. Results

4.1. Parameter Tuning

As shown in Figure 7a, the pairing rate approaches saturation when the number of reference trees reaches three, and its standard deviation (SD) no longer changes significantly thereafter. The matching accuracy and its SD, by contrast, only stabilize once the number of reference trees reaches about eight (Figure 7b). Therefore, following the “maximum principle”, we set the number of reference trees to eight when applying our proposed method to areas that contain more reference trees than are needed for selecting the correct offset vectors.

Figure 7.

The evaluation indices and their standard deviation (SD) of individual trees using our proposed matching approach as the number of reference trees increases. (a) pairing rate; (b) matching accuracy.

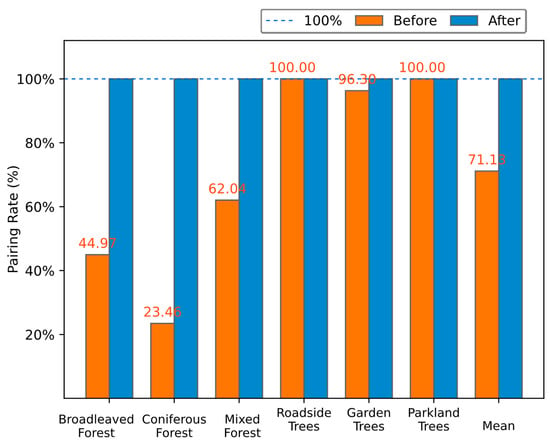

4.2. Pairing Rate after Using Our Proposed Approach

Figure 8 presents the pairing rate of individual trees in all six landscapes before and after offset rectification and shows that all trees were correctly paired after rectification with our proposed approach. The mean pairing rate across the six landscapes increased from 71.13% (SD = ±29.83) to 100.00% (SD = 0). Coniferous forest showed the largest improvement, an increase of 76.54 percentage points, followed by broadleaved forest (55.03 percentage points), mixed forest (37.96 percentage points), and garden trees (3.70 percentage points), whereas the tree pairs in the roadside and parkland landscapes were all correctly paired even before offset rectification.

Figure 8.

The pairing rate of the individual trees in all six landscapes before and after offset rectification.

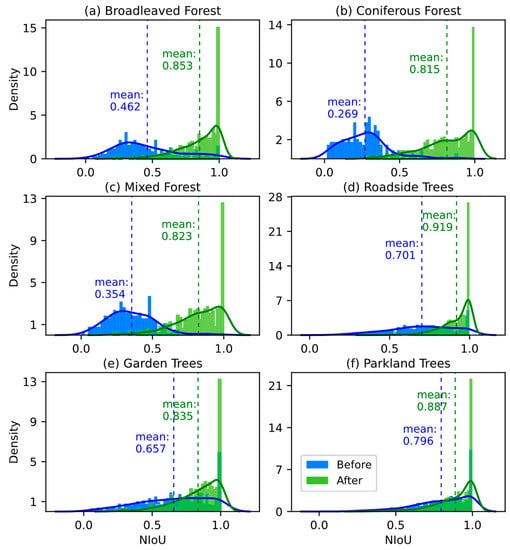

4.3. Matching Accuracy after Using Our Proposed Approach

Figure 9 details the density of NIoU calculated from matching tree pairs in all six landscapes before and after offset rectification. In all six landscapes, the matching accuracy (mean NIoU) improved significantly (p < 0.001, ANOVA); coniferous forest showed the greatest improvement, an increase of 0.546, followed by mixed forest (0.469), broadleaved forest (0.391), roadside trees (0.218), garden trees (0.178), and parkland trees (0.091). Before offset rectification, the highest matching accuracy was found in parkland trees (0.796); after rectification, it was found in roadside trees (0.919), the only landscape with a matching accuracy greater than 0.9. Before our approach was applied, the mean matching accuracy of the urban landscapes (Figure 9d–f) was 0.718, much higher than that of the forest landscapes (0.362). After offset rectification, the six landscapes ranked by matching accuracy in descending order were roadside trees (0.919), parkland trees (0.887), broadleaved forest (0.853), garden trees (0.835), mixed forest (0.823), and coniferous forest (0.815). Overall, the mean matching accuracy of all six landscapes rose significantly from 0.642 ± 0.264 (SD) to 0.861 ± 0.152 (SD).

Figure 9.

The overlaid density histograms with density curves of NIoU before and after offset rectification. The bars and curves represent the density of NIoU and its distribution curve, respectively, while the vertical dashed line is the mean NIoU (i.e., matching accuracy). Blue elements denote results before offset rectification, and green elements denote results after rectification.

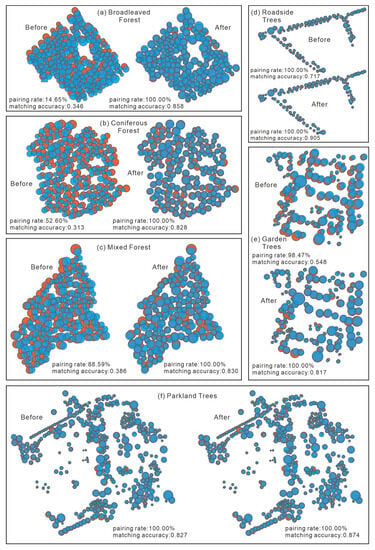

4.4. Visualization of Pairing Rate and Matching Accuracy before and after Rectification

We selected a representative sample from each landscape to visually illustrate the pairing and matching of individual trees before and after offset rectification (Figure 10). As Figure 10 shows, the pairing rate of individual trees in all six landscapes reached 100% after the proposed matching approach was applied, whereas before rectification the pairing rate was 14.65%, 52.60%, and 88.59% in the broadleaved, coniferous, and mixed forest samples, respectively (Figure 10a–c). Meanwhile, the mean matching accuracy increased to 0.839. For the roadside, garden, and parkland trees, although the pairing rate did not increase substantially, the matching accuracy of the roadside and garden trees improved considerably (Figure 10d,e), and that of the parkland trees improved slightly (Figure 10f).

Figure 10.

Visualization of the pairing and matching of individual trees in all six landscapes before and after offset rectification.

4.5. Comparison of Results Using Image Registration with Our Proposed Approach

We compared the pairing rate and matching accuracy of individual trees after registering the images with the ENVI automatic registration module against those obtained with our proposed approach. As shown in Table 2, our approach achieves a mean pairing rate of 100.00%, whereas the conventional image registration method leaves 8.87% of the trees mismatched (a mean pairing rate of 91.13%). Our approach also achieves a higher matching accuracy with smaller standard deviations. Note that in the urban landscapes (roadside, garden, and parkland trees), the matching accuracy declined to some degree even though the pairing rate increased. Paired t-tests comparing the evaluation indices of the traditional method and our proposed approach yielded p-values of 0.045 for pairing rate and 0.017 for matching accuracy.

Table 2.

Pairing rate, matching accuracy, and standard deviation (SD) of matching accuracy for each landscape using the ENVI image registration module and our proposed approach.

5. Discussion

5.1. Influence of Registration Noise on Pairing Rate

Results of this study show that the pairing rate of individual trees in the broadleaved, coniferous, and mixed forest classes was clearly lower than that of roadside, garden, and parkland trees (Figure 8). This suggests that registration noise has a greater influence on the forest classes, which might be caused by the quantity and quality of the ground control points available for geo-correcting the aerial photographs. Compared with roadside, garden, and parkland trees, it is more challenging to locate highly precise control points for fine registration in forested areas (Figure 2), which leads to larger offsets between individual trees in the two datasets. In general, the lower the tree density, the higher the matching accuracy, and vice versa [137]. This problem is not unique to this study, as tie points are generally lacking in areas that are difficult to survey (e.g., mountains, grasslands, and forests) [138]. Only a few trees in the garden class could not be paired, probably because of the larger variance in canopy and tree height resulting from the higher abundance of tree species (Figure 2c(V)).

5.2. Effectiveness of the Proposed Approach

The improvement in pairing rate was more prominent in coniferous forest, probably because coniferous trees typically have larger spaces between adjacent trees than broadleaved trees [25,139,140]. As shown in Figure 3b, in coniferous forests a reference tree was prone to being compared (via NIoU) with a non-matching tree once the offset exceeded the crown diameter, especially in high-density stands. In comparison, broadleaved and mixed forest had relatively better pairing rates. Moreover, the improvement in matching accuracy was more evident in the forest landscapes than in the urban landscapes. Before offset rectification, the matching accuracy of individual trees in forest was much lower than that of roadside, garden, and parkland trees. After offset rectification, the matching accuracy leveled off at its highest level in all six landscapes, demonstrating that our proposed matching approach improves matching accuracy regardless of landscape type (see Figure 9).

5.3. Matching Accuracy in Different Landscapes

As with the pairing rate, the most dramatic improvement in matching accuracy was for coniferous forest. Besides the greater offsets in forests, the smaller crowns of coniferous trees yield smaller NIoU values for a given offset, as expected from the NIoU formula (Figure 5). Consequently, among the forest classes, broadleaved forest had the highest matching accuracy, followed by mixed forest and then coniferous forest. Among roadside, garden, and parkland trees, roadside trees showed both the largest increase and the highest value of matching accuracy after offset rectification. Several factors may have contributed. Most roadside trees were broadleaved, usually of the same species, and of similar age (i.e., similar tree height and crown size) [141,142,143]. The regular layout of roadside trees also made the offset simple and spatially consistent [144,145], reducing the local registration noise for roadside trees.
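The effect of offset and crown size on overlap can be illustrated with idealized circular crowns. The sketch below uses the ordinary IoU of two circles as a stand-in for NIoU (the NIoU formula of Figure 5 is not reproduced in this section, so this substitution is an assumption), and `circle_iou` is a hypothetical helper. For equal crowns, the IoU depends only on the ratio of offset to radius, so the same offset costs a small conifer crown more overlap than a large broadleaved one, and the IoU drops to zero once the offset reaches the crown diameter.

```python
import math


def circle_iou(r1: float, r2: float, d: float) -> float:
    """IoU of two circular crowns with radii r1, r2 whose centres are d apart."""
    if d >= r1 + r2:
        inter = 0.0  # crowns do not touch
    elif d <= abs(r1 - r2):
        inter = math.pi * min(r1, r2) ** 2  # one crown lies inside the other
    else:
        # Standard circle-circle intersection (lens) area; clamp acos
        # arguments against floating-point drift near tangency.
        c1 = max(-1.0, min(1.0, (d * d + r1 * r1 - r2 * r2) / (2 * d * r1)))
        c2 = max(-1.0, min(1.0, (d * d + r2 * r2 - r1 * r1) / (2 * d * r2)))
        kite = 0.5 * math.sqrt((-d + r1 + r2) * (d + r1 - r2)
                               * (d - r1 + r2) * (d + r1 + r2))
        inter = r1 * r1 * math.acos(c1) + r2 * r2 * math.acos(c2) - kite
    union = math.pi * (r1 * r1 + r2 * r2) - inter
    return inter / union


# Illustrative radii (not measured values): same 1 m offset, two crown sizes.
print(circle_iou(2.0, 2.0, 1.0), circle_iou(5.0, 5.0, 1.0))
```

Here a 1 m offset between two crowns of radius 2 m gives a lower IoU than the same offset between crowns of radius 5 m, which mirrors the reasoning about coniferous versus broadleaved crowns.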

The landscape with the best matching accuracy differed before and after offset rectification. Before the offsets between individual trees in the two datasets were rectified, the best matching accuracy was observed for parkland trees. One possible reason is that the design and context of parklands provide richer features for the geographic registration of aerial photographs, and parklands have large, widely spaced trees separated by grass areas [146,147]. Moreover, although garden trees benefited from highly accurate ground control points, owing to their (mostly) solitary and isolated nature and to surroundings of roads and buildings, their matching results were less satisfactory than expected. This probably stems from the species-rich garden areas, where trees vary in height and crown size [148,149,150,151], which complicates the magnitude and direction of the offsets between different trees.

5.4. Analyzing the Results Using Conventional Image Registration Approach

Compared with the results before image registration, ENVI registration improved the matching accuracy in the forest landscapes (broadleaved, coniferous, and mixed forest) but reduced it to some degree in the urban landscapes (roadside, garden, and parkland trees) (Table 2). The pairing rate of the corresponding landscapes followed a similar trend. This was mainly caused by the unevenly distributed, low-precision, and insufficient control points (CPs) found by the automatic ENVI procedure. Because we verified our method on small landscape patches, it was difficult to find sufficient suitable CPs to align the images with high accuracy [152,153,154,155]. Roadside-tree patches, for instance, are typically long and narrow, so unevenly distributed CPs can easily change the shape of the image being registered and severely distort its edges. Therefore, although many methods have been proposed for multimodal image registration, the uneven distribution and limited number of CPs mean that they are usually better suited to large-scale images [156,157] than to correcting local registration noise.
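The edge distortion described above for long, narrow patches can be illustrated with a one-dimensional toy example. As an assumption (the warping model used by the ENVI module in this study is not specified in this section), suppose a second-order polynomial warp is fitted to three control points clustered within the first 200 m of a hypothetical 1000 m roadside strip, the true mapping is a constant 3 m shift, and only the middle CP carries a 0.5 m location error. Three points determine a quadratic exactly, so the fit reduces to Lagrange interpolation; `lagrange_eval`, `true_position`, and `warp_error` are hypothetical helpers.

```python
def lagrange_eval(xs: list[float], ys: list[float], x: float) -> float:
    """Evaluate the polynomial interpolating (xs, ys) at x (Lagrange form)."""
    total = 0.0
    for i, (xi, yi) in enumerate(zip(xs, ys)):
        term = yi
        for j, xj in enumerate(xs):
            if j != i:
                term *= (x - xj) / (xi - xj)
        total += term
    return total


def true_position(x: float) -> float:
    """Hypothetical true mapping: a constant 3 m shift along the strip."""
    return x + 3.0


# Control points clustered at one end of a 1000 m strip (hypothetical values).
cp_x = [0.0, 100.0, 200.0]
cp_noise = [0.0, 0.5, 0.0]  # only the middle CP is mislocated, by 0.5 m
cp_y = [true_position(x) + e for x, e in zip(cp_x, cp_noise)]


def warp_error(x: float) -> float:
    """Error of the fitted quadratic warp relative to the true mapping."""
    return lagrange_eval(cp_x, cp_y, x) - true_position(x)


for x in (150.0, 500.0, 1000.0):
    print(f"x = {x:6.0f} m  error = {warp_error(x):7.3f} m")
```

Between the CPs the error stays below the 0.5 m CP error (0.375 m at 150 m), but it grows to -7.5 m at 500 m and -40 m at the far end of the strip, a simple analogue of the edge distortion observed for narrow roadside patches.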

In our study, the different modalities of aerial photographs and CHMs make it even more difficult to find high-precision CPs. First, pixel values in the two images have different physical meanings; e.g., individual trees with high optical reflectance do not always correspond to high values in LiDAR height products, and vice versa. Second, different viewpoints result in different shapes for the same objects; e.g., a feature that appears as an area in aerial photographs may appear as a line in CHMs, and a line may appear as a point. In addition, the characteristics of ground features change over time. Moreover, pixel values in CHMs are not always true measured heights: some are interpolated from adjacent values where points at the corresponding location in the LiDAR point cloud are missing or insufficient [158,159,160]. Therefore, finding evenly distributed, high-quality CPs between multimodal images requires substantial manual intervention, which is usually impractical for large areas and for fine-grained objects. In conclusion, our proposed matching approach is more robust across different area sizes.

5.5. Choosing a Suitable Threshold for Specific Applications

In the object detection domain, a predicted bounding box is widely considered to be correctly detected if its IoU with the ground-truth bounding box is greater than 0.5 [1,133,134]. In this study, we used NIoU to determine whether a candidate tree was correctly matched with the reference tree, and we found that the widely accepted NIoU threshold of 0.5 was not always optimal. Other factors, such as stand density and the crown size of individual trees, should also be considered. For instance, for roadside trees, where there was sufficient space between adjacent trees, we found that an NIoU threshold of around 0.4 or lower was appropriate. In short, the NIoU threshold should be chosen for each specific application, as also recommended by [161]. Finally, in theory, the smaller the local area within which individual trees are matched, the higher the matching accuracy. However, the amount of computation also increases substantially, which should not be overlooked for large areas.
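The sensitivity of both evaluation indices to the threshold can be made explicit with a small sketch. It assumes (the exact bookkeeping is not spelled out in this section) that each reference tree has one NIoU value with its matched candidate, that a pair counts as correct when the NIoU is strictly greater than the threshold, and that matching accuracy is the mean NIoU of the correctly paired trees. `pairing_metrics` and the NIoU values are hypothetical.

```python
from typing import Sequence


def pairing_metrics(niou: Sequence[float],
                    threshold: float = 0.5) -> tuple[float, float]:
    """Return (pairing rate in %, mean NIoU of correctly paired trees).

    A reference tree counts as correctly paired when the NIoU with its
    candidate is strictly greater than `threshold`. If no tree is paired,
    the matching accuracy is reported as 0.0.
    """
    if len(niou) == 0:
        raise ValueError("need at least one reference tree")
    paired = [v for v in niou if v > threshold]
    rate = 100.0 * len(paired) / len(niou)
    accuracy = sum(paired) / len(paired) if paired else 0.0
    return rate, accuracy


# Hypothetical NIoU values for four reference trees in a sparse roadside patch.
values = [0.9, 0.8, 0.45, 0.3]
for thr in (0.5, 0.4):
    rate, acc = pairing_metrics(values, thr)
    print(f"threshold {thr}: pairing rate {rate:.1f}%, matching accuracy {acc:.3f}")
```

With these values, lowering the threshold from 0.5 to 0.4 raises the pairing rate from 50% to 75% but lowers the mean matching accuracy, because the newly accepted pair has a comparatively low overlap; this trade-off is why the threshold should be tuned per application.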

5.6. Possible Challenges and Improvements in Practical Application

First, one apparent challenge comes from the quality of individual tree products. Unlike the manually delineated trees used in this study, trees extracted automatically by algorithms are unavoidably affected by over- and under-segmentation, which can easily leave some trees without a corresponding tree in the other dataset. However, rapid advances in deep learning promise to reduce the errors caused by these phenomena. Another possible challenge may arise in plantation forests, where the offset vector might be wrongly chosen because the spatial layout of trees is similar throughout the stand. In this case, expanding the range of candidate trees can reduce the risk of selecting the wrong offset vector. Moreover, the proposed approach can be improved with slight modifications. If a more accurate, or even tree-specific, offset vector is needed for each tree, the set of trees used to determine the optimal offset vector can be narrowed down. However, this would require a large amount of computation and is not suitable for large-scale use, such as at national or continental scales.
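The offset-selection idea, and the role of the candidate range, can be sketched on a toy raster. This is a simplified illustration under stated assumptions, not the authors' implementation: crowns are rasterized as discs on an integer grid, candidate offset vectors run from one reference tree in the aerial photograph to every CHM tree within a search radius, and the chosen offset is the one that maximizes the total crown overlap in the local window. All function names and coordinates are hypothetical.

```python
Cell = tuple[int, int]
Tree = tuple[int, int, int]  # (centre x, centre y, crown radius) in grid cells


def disc(cx: int, cy: int, r: int) -> set[Cell]:
    """Rasterize a circular crown into the set of grid cells it covers."""
    return {(x, y)
            for x in range(cx - r, cx + r + 1)
            for y in range(cy - r, cy + r + 1)
            if (x - cx) ** 2 + (y - cy) ** 2 <= r * r}


def crown_cells(trees: list[Tree]) -> set[Cell]:
    """Union of all crown cells in a local window."""
    cells: set[Cell] = set()
    for cx, cy, r in trees:
        cells |= disc(cx, cy, r)
    return cells


def best_offset(photo: list[Tree], chm: list[Tree], ref: int,
                search_radius: float) -> tuple[Cell, int]:
    """Return the candidate offset with maximal total crown overlap, and that overlap."""
    rx, ry, _ = photo[ref]
    # Candidate offsets: from the reference tree to each nearby CHM tree.
    candidates = [(bx - rx, by - ry) for bx, by, _ in chm
                  if ((bx - rx) ** 2 + (by - ry) ** 2) ** 0.5 <= search_radius]
    if not candidates:
        raise ValueError("no candidate trees within the search radius")
    photo_cells, chm_cells = crown_cells(photo), crown_cells(chm)

    def overlap(offset: Cell) -> int:
        dx, dy = offset
        return len({(x + dx, y + dy) for x, y in photo_cells} & chm_cells)

    best = max(candidates, key=overlap)
    return best, overlap(best)


photo_trees = [(0, 0, 2), (10, 0, 3), (0, 12, 2)]
chm_trees = [(x + 3, y - 2, r) for x, y, r in photo_trees]  # true shift (3, -2)
print(best_offset(photo_trees, chm_trees, ref=0, search_radius=15))
```

In this toy layout the true shift of (3, -2) cells wins with an overlap of 55 cells, because it aligns every crown, while the two wrong candidates align only a single crown each. In a regular plantation several candidates could tie or nearly tie, and a search radius that excludes the true partner cannot recover the correct offset at all, which is why widening the candidate set helps.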

6. Conclusions

In this study, we proposed a novel tree-oriented matching algorithm that improves the pairing rate and matching accuracy of individual trees derived from aerial photographs and airborne LiDAR point cloud data. Our results demonstrated that the proposed approach effectively increases the pairing rate and matching accuracy of individual trees manually delineated from aerial photographs and LiDAR-derived CHMs. Compared to the traditional registration method, the average pairing rate of individual trees across all six landscapes increased from 91.13% to 100.00% (p = 0.045, t-test), indicating that our approach resolved the tree pairing problem for the manually delineated trees examined here. Meanwhile, the average matching accuracy increased from 0.692 ± 0.175 (standard deviation) to 0.861 ± 0.152 (p = 0.017, t-test), demonstrating the effectiveness of the proposed approach in matching individual trees between multimodal images.

Supplementary Materials

The following supporting information can be downloaded at: https://www.mdpi.com/article/10.3390/rs15174128/s1. Figure S1: Aerial photographs acquired in 2018 (a) and 2020 (b). Figure S2: The original offsets of individual trees between aerial photographs and CHMs derived from airborne LiDAR data in six landscapes.

Author Contributions

Conceptualization, methodology, programming, validation, visualization, writing—original draft preparation, Y.X.; formal analysis, investigation, Y.X. and T.W.; supervision, T.W. and A.K.S.; writing—review and editing, T.W., A.K.S. and T.W.G.; funding acquisition, Y.X. and A.K.S. All authors have read and agreed to the published version of the manuscript.

Funding

The China Scholarship Council (202008440522) and the ITC Research Fund co-funded this research. The work was also supported by the European Union’s Horizon 2020 research and innovation program (834709), funded by the European Research Council (ERC).

Conflicts of Interest

The authors declare no conflict of interest.

References

- Weinstein, B.G.; Marconi, S.; Bohlman, S.A.; Zare, A.; Singh, A.; Graves, S.J.; White, E.P. A remote sensing derived data set of 100 million individual tree crowns for the National Ecological Observatory Network. eLife 2021, 10, e62922.

- Walther, G.-R.; Post, E.; Convey, P.; Menzel, A.; Parmesan, C.; Beebee, T.J.C.; Fromentin, J.-M.; Hoegh-Guldberg, O.; Bairlein, F. Ecological responses to recent climate change. Nature 2002, 416, 389–395.

- Lassalle, G.; Ferreira, M.P.; La Rosa, L.E.C.; de Souza Filho, C.R. Deep learning-based individual tree crown delineation in mangrove forests using very-high-resolution satellite imagery. ISPRS J. Photogramm. Remote Sens. 2022, 189, 220–235.

- Zheng, Z.; Zeng, Y.; Schuman, M.C.; Jiang, H.; Schmid, B.; Schaepman, M.E.; Morsdorf, F. Individual tree-based vs pixel-based approaches to mapping forest functional traits and diversity by remote sensing. Int. J. Appl. Earth Obs. Geoinf. 2022, 114, 103074.

- Skidmore, A.K.; Coops, N.C.; Neinavaz, E.; Ali, A.; Schaepman, M.E.; Paganini, M.; Kissling, W.D.; Vihervaara, P.; Darvishzadeh, R.; Feilhauer, H.; et al. Priority list of biodiversity metrics to observe from space. Nat. Ecol. Evol. 2021, 5, 896–906.

- Helfenstein, I.S.; Schneider, F.D.; Schaepman, M.E.; Morsdorf, F. Assessing biodiversity from space: Impact of spatial and spectral resolution on trait-based functional diversity. Remote Sens. Environ. 2022, 275, 113024.

- Pettorelli, N.; Wegmann, M.; Skidmore, A.; Mücher, S.; Dawson, T.P.; Fernandez, M.; Lucas, R.; Schaepman, M.E.; Wang, T.; O’Connor, B.; et al. Framing the concept of satellite remote sensing essential biodiversity variables: Challenges and future directions. Remote Sens. Ecol. Conserv. 2016, 2, 122–131.

- Bortolot, Z.J.; Wynne, R.H. Estimating forest biomass using small footprint LiDAR data: An individual tree-based approach that incorporates training data. ISPRS J. Photogramm. Remote Sens. 2005, 59, 342–360.

- Jeronimo, S.M.A.; Kane, V.R.; Churchill, D.J.; McGaughey, R.J.; Franklin, J.F. Applying LiDAR Individual Tree Detection to Management of Structurally Diverse Forest Landscapes. J. For. 2018, 116, 336–346.

- Uzoh, F.C.C.; Oliver, W.W. Individual tree diameter increment model for managed even-aged stands of ponderosa pine throughout the western United States using a multilevel linear mixed effects model. For. Ecol. Manage. 2008, 256, 438–445.

- Ferreira, M.P.; Féret, J.-B.; Grau, E.; Gastellu-Etchegorry, J.-P.; Amaral, C.H.D.; Shimabukuro, Y.E.; de Souza Filho, C.R. Retrieving structural and chemical properties of individual tree crowns in a highly diverse tropical forest with 3D radiative transfer modeling and imaging spectroscopy. Remote Sens. Environ. 2018, 211, 276–291.

- Weinhold, A.; Döll, S.; Liu, M.; Schedl, A.; Pöschl, Y.; Xu, X.; Neumann, S.; van Dam, N.M. Tree species richness differentially affects the chemical composition of leaves, roots and root exudates in four subtropical tree species. J. Ecol. 2022, 110, 97–116.

- Zheng, Z.; Zeng, Y.; Schneider, F.D.; Zhao, Y.; Zhao, D.; Schmid, B.; Schaepman, M.E.; Morsdorf, F. Mapping functional diversity using individual tree-based morphological and physiological traits in a subtropical forest. Remote Sens. Environ. 2021, 252, 112170.

- Schneider, F.D.; Morsdorf, F.; Schmid, B.; Petchey, O.L.; Hueni, A.; Schimel, D.S.; Schaepman, M.E. Mapping functional diversity from remotely sensed morphological and physiological forest traits. Nat. Commun. 2017, 8, 1441.

- Köhl, M.; Magnussen, S.; Marchetti, M. Sampling Methods, Remote Sensing and GIS Multiresource Forest Inventory; Springer: Berlin/Heidelberg, Germany, 2006; Volume 2.

- Nielsen, A.B.; Östberg, J.; Delshammar, T. Review of urban tree inventory methods used to collect data at single-tree level. Arboric. Urban For. 2014, 40, 96–111.

- Saarinen, N.; Vastaranta, M.; Kankare, V.; Tanhuanpää, T.; Holopainen, M.; Hyyppä, J.; Hyyppä, H. Urban-Tree-Attribute Update Using Multisource Single-Tree Inventory. Forests 2014, 5, 1032–1052.

- Wallace, L.; Sun, Q.; Hally, B.; Hillman, S.; Both, A.; Hurley, J.; Saldias, D.S.M. Linking urban tree inventories to remote sensing data for individual tree mapping. Urban For. Urban Greening 2021, 61, 127106.

- Alonzo, M.; Bookhagen, B.; Roberts, D.A. Urban tree species mapping using hyperspectral and lidar data fusion. Remote Sens. Environ. 2014, 148, 70–83.

- Myeong, S.; Nowak, D.J.; Duggin, M.J. A temporal analysis of urban forest carbon storage using remote sensing. Remote Sens. Environ. 2006, 101, 277–282.

- Lumnitz, S.; Devisscher, T.; Mayaud, J.R.; Radic, V.; Coops, N.C.; Griess, V.C. Mapping trees along urban street networks with deep learning and street-level imagery. ISPRS J. Photogramm. Remote Sens. 2021, 175, 144–157.

- Ke, Y.; Quackenbush, L.J. A review of methods for automatic individual tree-crown detection and delineation from passive remote sensing. Int. J. Remote Sens. 2011, 32, 4725–4747.

- Brandtberg, T.; Walter, F. Automated delineation of individual tree crowns in high spatial resolution aerial images by multiple-scale analysis. Mach. Vis. Appl. 1998, 11, 64–73.

- Jurado, J.M.; López, A.; Pádua, L.; Sousa, J.J. Remote sensing image fusion on 3D scenarios: A review of applications for agriculture and forestry. Int. J. Appl. Earth Obs. Geoinf. 2022, 112, 102856.

- Dalponte, M.; Ørka, H.O.; Ene, L.T.; Gobakken, T.; Næsset, E. Tree crown delineation and tree species classification in boreal forests using hyperspectral and ALS data. Remote Sens. Environ. 2014, 140, 306–317.

- Kattenborn, T.; Leitloff, J.; Schiefer, F.; Hinz, S. Review on Convolutional Neural Networks (CNN) in vegetation remote sensing. ISPRS J. Photogramm. Remote Sens. 2021, 173, 24–49.

- Gougeon, F.A. A Crown-Following Approach to the Automatic Delineation of Individual Tree Crowns in High Spatial Resolution Aerial Images. Can. J. Remote Sens. 1995, 21, 274–284.

- Yang, J.; He, Y.; Caspersen, J. A multi-band watershed segmentation method for individual tree crown delineation from high resolution multispectral aerial image. In Proceedings of the 2014 IEEE Geoscience and Remote Sensing Symposium, Quebec City, QC, Canada, 13–18 July 2014.

- Erikson, M. Segmentation of individual tree crowns in colour aerial photographs using region growing supported by fuzzy rules. Can. J. For. Res. 2003, 33, 1557–1563.

- Wagner, F.H.; Ferreira, M.P.; Sanchez, A.; Hirye, M.C.M.; Zortea, M.; Gloor, E.; Phillips, O.L.; de Souza Filho, C.R.; Shimabukuro, Y.E.; Aragão, L.E.O.C. Individual tree crown delineation in a highly diverse tropical forest using very high resolution satellite images. ISPRS J. Photogramm. Remote Sens. 2018, 145, 362–377.

- Tong, F.; Tong, H.; Mishra, R.; Zhang, Y. Delineation of Individual Tree Crowns Using High Spatial Resolution Multispectral WorldView-3 Satellite Imagery. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2021, 14, 7751–7761.

- Gomes, M.F.; Maillard, P.; Deng, H. Individual tree crown detection in sub-meter satellite imagery using Marked Point Processes and a geometrical-optical model. Remote Sens. Environ. 2018, 211, 184–195.

- Huang, H.; Li, X.; Chen, C. Individual Tree Crown Detection and Delineation From Very-High-Resolution UAV Images Based on Bias Field and Marker-Controlled Watershed Segmentation Algorithms. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2018, 11, 2253–2262.

- Miraki, M.; Sohrabi, H.; Fatehi, P.; Kneubuehler, M. Individual tree crown delineation from high-resolution UAV images in broadleaf forest. Ecol. Inform. 2021, 61, 101207.

- Safonova, A.; Hamad, Y.; Dmitriev, E.; Georgiev, G.; Trenkin, V.; Georgieva, M.; Dimitrov, S.; Iliev, M. Individual Tree Crown Delineation for the Species Classification and Assessment of Vital Status of Forest Stands from UAV Images. Drones 2021, 5, 77–94.

- Xi, X.; Xia, K.; Yang, Y.; Du, X.; Feng, H. Evaluation of dimensionality reduction methods for individual tree crown delineation using instance segmentation network and UAV multispectral imagery in urban forest. Comput. Electron. Agric. 2021, 191, 106506.

- Lei, L.; Yin, T.; Chai, G.; Li, Y.; Wang, Y.; Jia, X.; Zhang, X. A novel algorithm of individual tree crowns segmentation considering three-dimensional canopy attributes using UAV oblique photos. Int. J. Appl. Earth Obs. Geoinf. 2022, 112, 102893.

- Xu, X.; Iuricich, F.; Calders, K.; Armston, J.; De Floriani, L. Topology-based individual tree segmentation for automated processing of terrestrial laser scanning point clouds. Int. J. Appl. Earth Obs. Geoinf. 2023, 116, 103145.

- Moradi, F.; Javan, F.D.; Samadzadegan, F. Potential evaluation of visible-thermal UAV image fusion for individual tree detection based on convolutional neural network. Int. J. Appl. Earth Obs. Geoinf. 2022, 113, 103011.

- Leckie, D.; Gougeon, F.; Hill, D.; Quinn, R.; Armstrong, L.; Shreenan, R. Combined high-density lidar and multispectral imagery for individual tree crown analysis. Can. J. Remote Sens. 2003, 29, 633–649.

- Galvincio, J.D.; Popescu, S.C. Measuring individual tree height and crown diameter for mangrove trees with airborne LiDAR data. Int. J. Adv. Eng. Manag. Sci. 2016, 2, 239456.

- Tang, H.; Dubayah, R.; Swatantran, A.; Hofton, M.; Sheldon, S.; Clark, D.B.; Blair, B. Retrieval of vertical LAI profiles over tropical rain forests using waveform lidar at La Selva, Costa Rica. Remote Sens. Environ. 2012, 124, 242–250.

- Shi, Y.; Skidmore, A.K.; Wang, T.; Holzwarth, S.; Heiden, U.; Pinnel, N.; Zhu, X.; Heurich, M. Tree species classification using plant functional traits from LiDAR and hyperspectral data. Int. J. Appl. Earth Obs. Geoinf. 2018, 73, 207–219.

- Khosravipour, A.; Skidmore, A.K.; Isenburg, M. Generating spike-free digital surface models using LiDAR raw point clouds: A new approach for forestry applications. Int. J. Appl. Earth Obs. Geoinf. 2016, 52, 104–114.

- Sankey, T.; Donager, J.; McVay, J.; Sankey, J.B. UAV lidar and hyperspectral fusion for forest monitoring in the southwestern USA. Remote Sens. Environ. 2017, 195, 30–43.

- Holmgren, J.; Persson, Å.; Söderman, U. Species identification of individual trees by combining high resolution LiDAR data with multi-spectral images. Int. J. Remote Sens. 2008, 29, 1537–1552.

- Zhen, Z.; Quackenbush, L.J.; Zhang, L. Trends in Automatic Individual Tree Crown Detection and Delineation—Evolution of LiDAR Data. Remote Sens. 2016, 8, 333.

- Li, H.; Hu, B.; Li, Q.; Jing, L. CNN-Based Individual Tree Species Classification Using High-Resolution Satellite Imagery and Airborne LiDAR Data. Forests 2021, 12, 1697–1718.

- Wu, B.; Yu, B.; Wu, Q.; Huang, Y.; Chen, Z.; Wu, J. Individual tree crown delineation using localized contour tree method and airborne LiDAR data in coniferous forests. Int. J. Appl. Earth Obs. Geoinf. 2016, 52, 82–94.

- Koch, B.; Heyder, U.; Weinacker, H. Detection of individual tree crowns in airborne lidar data. Photogramm. Eng. Remote Sens. 2006, 72, 357–363.

- Wan Mohd Jaafar, W.S.; Woodhouse, I.H.; Silva, C.A.; Omar, H.; Maulud, K.N.A.; Hudak, A.T.; Klauberg, C.; Cardil, A.; Mohan, M. Improving Individual Tree Crown Delineation and Attributes Estimation of Tropical Forests Using Airborne LiDAR Data. Forests 2018, 9, 759–781.

- Hamraz, H.; Contreras, M.A.; Zhang, J. A robust approach for tree segmentation in deciduous forests using small-footprint airborne LiDAR data. Int. J. Appl. Earth Obs. Geoinf. 2016, 52, 532–541.

- Torresan, C.; Carotenuto, F.; Chiavetta, U.; Miglietta, F.; Zaldei, A.; Gioli, B. Individual Tree Crown Segmentation in Two-Layered Dense Mixed Forests from UAV LiDAR Data. Drones 2020, 4, 10–29.

- Mohan, M.; Leite, R.V.; Broadbent, E.N.; Jaafar, W.S.W.M.; Srinivasan, S.; Bajaj, S.; Corte, A.P.D.; Amaral, C.H.D.; Gopan, G.; Saad, S.N.M.; et al. Individual tree detection using UAV-lidar and UAV-SfM data: A tutorial for beginners. Open Geosci. 2021, 13, 1028–1039.

- Rudge, M.L.M.; Levick, S.R.; Bartolo, R.E.; Erskine, P.D. Modelling the diameter distribution of savanna trees with drone-based LiDAR. Remote Sens. 2021, 13, 1266–1283.

- Allouis, T.; Durrieu, S.; Véga, C.; Couteron, P. Stem Volume and Above-Ground Biomass Estimation of Individual Pine Trees From LiDAR Data: Contribution of Full-Waveform Signals. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2013, 6, 924–934.

- Shi, Y.; Wang, T.; Skidmore, A.K.; Heurich, M. Improving LiDAR-based tree species mapping in Central European mixed forests using multi-temporal digital aerial colour-infrared photographs. Int. J. Appl. Earth Obs. Geoinf. 2020, 84, 101970.

- Yang, J.; Kang, Z.; Cheng, S.; Yang, Z.; Akwensi, P.H. An Individual Tree Segmentation Method Based on Watershed Algorithm and Three-Dimensional Spatial Distribution Analysis From Airborne LiDAR Point Clouds. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2020, 13, 1055–1067.

- Reitberger, J.; Schnörr, C.; Krzystek, P.; Stilla, U. 3D segmentation of single trees exploiting full waveform LIDAR data. ISPRS J. Photogramm. Remote Sens. 2009, 64, 561–574.

- Lee, J.; Cai, X.; Lellmann, J.; Dalponte, M.; Malhi, Y.; Butt, N.; Morecroft, M.; Schönlieb, C.B.; Coomes, D.A. Individual Tree Species Classification From Airborne Multisensor Imagery Using Robust PCA. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2016, 9, 2554–2567.

- Hui, Z.; Cheng, P.; Yang, B.; Zhou, G. Multi-level self-adaptive individual tree detection for coniferous forest using airborne LiDAR. Int. J. Appl. Earth Obs. Geoinf. 2022, 114, 103028.

- Beese, L.; Dalponte, M.; Asner, G.P.; Coomes, D.A.; Jucker, T. Using repeat airborne LiDAR to map the growth of individual oil palms in Malaysian Borneo during the 2015–16 El Niño. Int. J. Appl. Earth Obs. Geoinf. 2022, 115, 103117.

- Qin, H.; Zhou, W.; Yao, Y.; Wang, W. Individual tree segmentation and tree species classification in subtropical broadleaf forests using UAV-based LiDAR, hyperspectral, and ultrahigh-resolution RGB data. Remote Sens. Environ. 2022, 280, 113143.

- Harikumar, A.; D’Odorico, P.; Ensminger, I. Combining Spectral, Spatial-Contextual, and Structural Information in Multispectral UAV Data for Spruce Crown Delineation. Remote Sens. 2022, 14, 2044–2062.

- Zhang, C.; Qiu, F. Mapping individual tree species in an urban forest using airborne lidar data and hyperspectral imagery. Photogramm. Eng. Remote Sens. 2012, 78, 1079–1087.

- Huang, R.; Zheng, S.; Hu, K. Registration of Aerial Optical Images with LiDAR Data Using the Closest Point Principle and Collinearity Equations. Sensors 2018, 18, 1770–1790.

- Bovolo, F.; Bruzzone, L.; Marchesi, S. Analysis and Adaptive Estimation of the Registration Noise Distribution in Multitemporal VHR Images. IEEE Trans. Geosci. Remote Sens. 2009, 47, 2658–2671.

- Wu, W.; Shao, Z.; Huang, X.; Teng, J.; Guo, S.; Li, D. Quantifying the sensitivity of SAR and optical images three-level fusions in land cover classification to registration errors. Int. J. Appl. Earth Obs. Geoinf. 2022, 112, 102868.

- Han, Y.; Bovolo, F.; Bruzzone, L. An Approach to Fine Coregistration Between Very High Resolution Multispectral Images Based on Registration Noise Distribution. IEEE Trans. Geosci. Remote Sens. 2015, 53, 6650–6662.

- Han, Y. Fine geometric alignment of very high resolution optical images using registration noise and quadtree structure. In Proceedings of the 2017 IEEE International Geoscience and Remote Sensing Symposium (IGARSS), Fort Worth, TX, USA, 23–28 July 2017.

- Lee, J.; Cai, X.; Schönlieb, C.; Coomes, D.A. Nonparametric Image Registration of Airborne LiDAR, Hyperspectral and Photographic Imagery of Wooded Landscapes. IEEE Trans. Geosci. Remote Sens. 2015, 53, 6073–6084.

- Jiang, X.; Ma, J.; Xiao, G.; Shao, Z.; Guo, X. A review of multimodal image matching: Methods and applications. Inf. Fusion 2021, 73, 22–71.

- Xu, X.; Li, X.; Liu, X.; Shen, H.; Shi, Q. Multimodal registration of remotely sensed images based on Jeffrey’s divergence. ISPRS J. Photogramm. Remote Sens. 2016, 122, 97–115.

- Näsi, R.; Honkavaara, E.; Blomqvist, M.; Lyytikäinen-Saarenmaa, P.; Hakala, T.; Viljanen, N.; Kantola, T.; Holopainen, M. Remote sensing of bark beetle damage in urban forests at individual tree level using a novel hyperspectral camera from UAV and aircraft. Urban For. Urban Greening 2018, 30, 72–83. [Google Scholar] [CrossRef]

- Duncanson, L.; Dubayah, R. Monitoring individual tree-based change with airborne lidar. Ecol. Evol. 2018, 8, 5079–5089. [Google Scholar] [CrossRef]

- Vastaranta, M.; Holopainen, M.; Yu, X.; Hyyppä, J.; Mäkinen, A.; Rasinmäki, J.; Melkas, T.; Kaartinen, H.; Hyyppä, H. Effects of Individual Tree Detection Error Sources on Forest Management Planning Calculations. Remote Sens. 2011, 3, 1614–1626. [Google Scholar] [CrossRef]

- Mäyrä, J.; Keski-Saari, S.; Kivinen, S.; Tanhuanpää, T.; Hurskainen, P.; Kullberg, P.; Poikolainen, L.; Viinikka, A.; Tuominen, S.; Kumpula, T.; et al. Tree species classification from airborne hyperspectral and LiDAR data using 3D convolutional neural networks. Remote Sens. Environ. 2021, 256, 112322. [Google Scholar] [CrossRef]

- Palenichka, R.M.; Zaremba, M.B. Automatic Extraction of Control Points for the Registration of Optical Satellite and LiDAR Images. IEEE Trans. Geosci. Remote Sens. 2010, 48, 2864–2879. [Google Scholar] [CrossRef]

- Li, L.; Han, L.; Ding, M.; Cao, H.; Hu, H. A deep learning semantic template matching framework for remote sensing image registration. ISPRS J. Photogramm. Remote Sens. 2021, 181, 205–217. [Google Scholar] [CrossRef]

- Mustaffar, M.; Mitchell, H.L. Improving area-based matching by using surface gradients in the pixel co-ordinate transformation. ISPRS J. Photogramm. Remote Sens. 2001, 56, 42–52. [Google Scholar] [CrossRef]

- Ye, Y.; Shen, L. Hopc: A novel similarity metric based on geometric structural properties for multi-modal remote sensing image matching. ISPRS Ann. Photogramm. Remote Sens. Spatial Inf. Sci. 2016, 3, 9. [Google Scholar] [CrossRef]

- Ye, Y.; Shan, J.; Bruzzone, L.; Shen, L. Robust registration of multimodal remote sensing images based on structural similarity. IEEE Trans. Geosci. Remote Sens. 2017, 55, 2941–2958.

- Wong, A.; Clausi, D.A. ARRSI: Automatic Registration of Remote-Sensing Images. IEEE Trans. Geosci. Remote Sens. 2007, 45, 1483–1493.

- Govindu, V.M.; Chellappa, R. Feature-based image to image registration. In Image Registration for Remote Sensing; Le Moigne, J., Netanyahu, N.S., Eastman, R.D., Eds.; Cambridge University Press: Cambridge, UK, 2011; pp. 215–239.

- Lowe, D.G. Object recognition from local scale-invariant features. In Proceedings of the Seventh IEEE International Conference on Computer Vision, Kerkyra, Greece, 20–27 September 1999.

- Paul, S.; Pati, U.C. Remote Sensing Optical Image Registration Using Modified Uniform Robust SIFT. IEEE Geosci. Remote Sens. Lett. 2016, 13, 1300–1304.

- Sedaghat, A.; Mohammadi, N. Uniform competency-based local feature extraction for remote sensing images. ISPRS J. Photogramm. Remote Sens. 2018, 135, 142–157.

- Goncalves, H.; Corte-Real, L.; Goncalves, J.A. Automatic Image Registration Through Image Segmentation and SIFT. IEEE Trans. Geosci. Remote Sens. 2011, 49, 2589–2600.

- Kupfer, B.; Netanyahu, N.S.; Shimshoni, I. An Efficient SIFT-Based Mode-Seeking Algorithm for Sub-Pixel Registration of Remotely Sensed Images. IEEE Geosci. Remote Sens. Lett. 2015, 12, 379–383.

- Hasan, M.; Jia, X.; Robles-Kelly, A.; Zhou, J.; Pickering, M.R. Multi-spectral remote sensing image registration via spatial relationship analysis on SIFT keypoints. In Proceedings of the 2010 IEEE International Geoscience and Remote Sensing Symposium, Honolulu, HI, USA, 25–30 July 2010.

- Turner, D.; Lucieer, A.; Malenovský, Z.; King, D.H.; Robinson, S.A. Spatial Co-Registration of Ultra-High Resolution Visible, Multispectral and Thermal Images Acquired with a Micro-UAV over Antarctic Moss Beds. Remote Sens. 2014, 6, 4003–4024.

- Wong, A.; Orchard, J. Efficient FFT-accelerated approach to invariant optical–LIDAR registration. IEEE Trans. Geosci. Remote Sens. 2008, 46, 3917–3925.

- Dusmanu, M.; Rocco, I.; Pajdla, T.; Pollefeys, M.; Sivic, J.; Torii, A.; Sattler, T. D2-Net: A trainable CNN for joint description and detection of local features. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 16–20 June 2019; pp. 8092–8101.

- Blendowski, M.; Heinrich, M.P. Combining MRF-based deformable registration and deep binary 3D-CNN descriptors for large lung motion estimation in COPD patients. Int. J. Comput. Assist. Radiol. Surg. 2019, 14, 43–52.

- Cheng, X.; Zhang, L.; Zheng, Y. Deep similarity learning for multimodal medical images. Comput. Methods Biomech. Biomed. Eng. Imaging Vis. 2018, 6, 248–252.

- Wu, W.; Hu, S.; Shen, J.; Ma, L.; Han, J. Sensitization of 21% Cr Ferritic Stainless Steel Weld Joints Fabricated With/Without Austenitic Steel Foil as Interlayer. J. Mater. Eng. Perform. 2015, 24, 1505–1515.

- Wu, G.; Kim, M.; Wang, Q.; Gao, Y.; Liao, S.; Shen, D. Unsupervised Deep Feature Learning for Deformable Registration of MR Brain Images. In Medical Image Computing and Computer-Assisted Intervention—MICCAI 2013; Springer: Berlin/Heidelberg, Germany, 2013.

- Simonovsky, M.; Gutiérrez-Becker, B.; Mateus, D.; Navab, N.; Komodakis, N. A Deep Metric for Multimodal Registration. In Medical Image Computing and Computer-Assisted Intervention—MICCAI 2016; Springer International Publishing: Cham, Switzerland, 2016.

- Han, X.; Leung, T.; Jia, Y.; Sukthankar, R.; Berg, A.C. MatchNet: Unifying feature and metric learning for patch-based matching. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 7–12 June 2015; pp. 3279–3286.

- Zagoruyko, S.; Komodakis, N. Learning to compare image patches via convolutional neural networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 7–12 June 2015; pp. 4353–4361.

- Liao, R.; Miao, S.; de Tournemire, P.; Grbic, S.; Kamen, A.; Mansi, T.; Comaniciu, D. An artificial agent for robust image registration. In Proceedings of the Thirty-First AAAI Conference on Artificial Intelligence, San Francisco, CA, USA, 4–9 February 2017.

- Haskins, G.; Kruger, U.; Yan, P. Deep learning in medical image registration: A survey. Mach. Vis. Appl. 2020, 31, 8.

- Zhang, J. Inverse-consistent deep networks for unsupervised deformable image registration. arXiv 2018, arXiv:1809.03443.

- Li, H.; Fan, Y. Non-rigid image registration using fully convolutional networks with deep self-supervision. arXiv 2017, arXiv:1709.00799.

- De Vos, B.D.; Berendsen, F.F.; Viergever, M.A.; Sokooti, H.; Staring, M.; Išgum, I. A deep learning framework for unsupervised affine and deformable image registration. Med. Image Anal. 2019, 52, 128–143.

- Zhu, X.X.; Tuia, D.; Mou, L.; Xia, G.-S.; Zhang, L.; Xu, F.; Fraundorfer, F. Deep learning in remote sensing: A comprehensive review and list of resources. IEEE Geosci. Remote Sens. Mag. 2017, 5, 8–36.

- Blaschke, T. Object based image analysis for remote sensing. ISPRS J. Photogramm. Remote Sens. 2010, 65, 2–16.

- Hossain, M.D.; Chen, D. Segmentation for Object-Based Image Analysis (OBIA): A review of algorithms and challenges from remote sensing perspective. ISPRS J. Photogramm. Remote Sens. 2019, 150, 115–134.

- Kotaridis, I.; Lazaridou, M. Remote sensing image segmentation advances: A meta-analysis. ISPRS J. Photogramm. Remote Sens. 2021, 173, 309–322.

- Blaschke, T.; Hay, G.J.; Kelly, M.; Lang, S.; Hofmann, P.; Addink, E.; Feitosa, R.Q.; van der Meer, F.; van der Werff, H.; van Coillie, F.; et al. Geographic Object-Based Image Analysis—Towards a new paradigm. ISPRS J. Photogramm. Remote Sens. 2014, 87, 180–191.

- Chandler, C.J.; van der Heijden, G.M.F.; Boyd, D.S.; Cutler, M.E.J.; Costa, H.; Nilus, R.; Foody, G.M. Remote sensing liana infestation in an aseasonal tropical forest: Addressing mismatch in spatial units of analyses. Remote Sens. Ecol. Conserv. 2021, 7, 397–410.

- Ma, K.; Chen, Z.; Fu, L.; Tian, W.; Jiang, F.; Yi, J.; Du, Z.; Sun, H. Performance and Sensitivity of Individual Tree Segmentation Methods for UAV-LiDAR in Multiple Forest Types. Remote Sens. 2022, 14, 298–317.

- Jin, X. ENVI Automated Image Registration Solutions; Internal Report; Harris Corporation: Melbourne, FL, USA, 2017; p. 26.

- FAO. Global Forest Resources Assessment 2020, Terms and Definitions; FAO: Rome, Italy, 2020.

- Kosztra, B.; Büttner, G.; Hazeu, G.; Arnold, S. Updated CLC Illustrated Nomenclature Guidelines; European Environment Agency: Vienna, Austria, 2017; pp. 1–124.

- Smart, N.; Eisenman, T.S.; Karvonen, A. Street Tree Density and Distribution: An International Analysis of Five Capital Cities. Front. Ecol. Evol. 2020, 8, 562646.

- Crowther, T.W.; Glick, H.B.; Covey, K.R.; Bettigole, C.; Maynard, D.S.; Thomas, S.M.; Smith, J.R.; Hintler, G.; Duguid, M.C.; Amatulli, G.; et al. Mapping tree density at a global scale. Nature 2015, 525, 201–205.

- Fekete, A.; Cserep, M. Tree segmentation and change detection of large urban areas based on airborne LiDAR. Comput. Geosci. 2021, 156, 104900.

- Mücher, C.A.; Roupioz, L.; Kramer, H.; Bogers, M.M.B.; Jongman, R.H.G.; Lucas, R.M.; Kosmidou, V.E.; Petrou, Z.; Manakos, I.; Padoa-Schioppa, E.; et al. Synergy of airborne LiDAR and Worldview-2 satellite imagery for land cover and habitat mapping: A BIO_SOS-EODHaM case study for the Netherlands. Int. J. Appl. Earth Obs. Geoinf. 2015, 37, 48–55.

- Hyyppä, J.; Hyyppä, H.; Leckie, D.; Gougeon, F.; Yu, X.; Maltamo, M. Review of methods of small-footprint airborne laser scanning for extracting forest inventory data in boreal forests. Int. J. Remote Sens. 2008, 29, 1339–1366.

- Khosravipour, A.; Skidmore, A.K.; Wang, T.; Isenburg, M.; Khoshelham, K. Effect of slope on treetop detection using a LiDAR Canopy Height Model. ISPRS J. Photogramm. Remote Sens. 2015, 104, 44–52.

- Alexander, C.; Korstjens, A.H.; Hill, R.A. Influence of micro-topography and crown characteristics on tree height estimations in tropical forests based on LiDAR canopy height models. Int. J. Appl. Earth Obs. Geoinf. 2018, 65, 105–113.

- Chen, Q.; Wang, X.; Hang, M.; Li, J. Research on the improvement of single tree segmentation algorithm based on airborne LiDAR point cloud. Open Geosci. 2021, 13, 705–716.

- Wang, X.; Chan, T.O.; Liu, K.; Pan, J.; Luo, M.; Li, W.; Wei, C. A robust segmentation framework for closely packed buildings from airborne LiDAR point clouds. Int. J. Remote Sens. 2020, 41, 5147–5165.

- Tobler, W.R. A computer movie simulating urban growth in the Detroit region. Econ. Geogr. 1970, 46 (Suppl. 1), 234–240.

- Lu, G.Y.; Wong, D.W. An adaptive inverse-distance weighting spatial interpolation technique. Comput. Geosci. 2008, 34, 1044–1055.

- Feng, R.; Du, Q.; Li, X.; Shen, H. Robust registration for remote sensing images by combining and localizing feature- and area-based methods. ISPRS J. Photogramm. Remote Sens. 2019, 151, 15–26.

- Wang, S.; Quan, D.; Liang, X.; Ning, M.; Guo, Y.; Jiao, L. A deep learning framework for remote sensing image registration. ISPRS J. Photogramm. Remote Sens. 2018, 145, 148–164.

- Ye, Y.; Bruzzone, L.; Shan, J.; Bovolo, F.; Zhu, Q. Fast and Robust Matching for Multimodal Remote Sensing Image Registration. IEEE Trans. Geosci. Remote Sens. 2019, 57, 9059–9070.

- Zhu, B.; Ye, Y.; Zhou, L.; Li, Z.; Yin, G. Robust registration of aerial images and LiDAR data using spatial constraints and Gabor structural features. ISPRS J. Photogramm. Remote Sens. 2021, 181, 129–147.

- Xiao, Y.; Tian, Z.; Yu, J.; Zhang, Y.; Liu, S.; Du, S.; Lan, X. A review of object detection based on deep learning. Multimed. Tools Appl. 2020, 79, 23729–23791.

- Dhillon, A.; Verma, G.K. Convolutional neural network: A review of models, methodologies and applications to object detection. Prog. Artif. Intell. 2020, 9, 85–112.

- Wu, X.; Sahoo, D.; Hoi, S.C.H. Recent advances in deep learning for object detection. Neurocomputing 2020, 396, 39–64.

- Zhao, Z.Q.; Zheng, P.; Xu, S.T.; Wu, X. Object Detection With Deep Learning: A Review. IEEE Trans. Neural Networks Learn. Syst. 2019, 30, 3212–3232.

- Choi, K.; Lim, W.; Chang, B.; Jeong, J.; Kim, I.; Park, C.-R.; Ko, D.W. An automatic approach for tree species detection and profile estimation of urban street trees using deep learning and Google street view images. ISPRS J. Photogramm. Remote Sens. 2022, 190, 165–180.

- Liu, T.; Yao, L.; Qin, J.; Lu, N.; Jiang, H.; Zhang, F.; Zhou, C. Multi-scale attention integrated hierarchical networks for high-resolution building footprint extraction. Int. J. Appl. Earth Obs. Geoinf. 2022, 109, 102768.

- Feng, T.; Chen, S.; Feng, Z.; Shen, C.; Tian, Y. Effects of Canopy and Multi-Epoch Observations on Single-Point Positioning Errors of a GNSS in Coniferous and Broadleaved Forests. Remote Sens. 2021, 13, 2325–2339.

- Yuan, X.; Yuan, X.; Chen, J.; Wang, X. Large Aerial Image Tie Point Matching in Real and Difficult Survey Areas via Deep Learning Method. Remote Sens. 2022, 14, 3907–3924.

- Ørka, H.O.; Dalponte, M.; Gobakken, T.; Næsset, E.; Ene, L.T. Characterizing forest species composition using multiple remote sensing data sources and inventory approaches. Scand. J. For. Res. 2013, 28, 677–688.

- Hastings, J.H.; Ollinger, S.V.; Ouimette, A.P.; Sanders-DeMott, R.; Palace, M.W.; Ducey, M.J.; Sullivan, F.B.; Basler, D.; Orwig, D.A. Tree Species Traits Determine the Success of LiDAR-Based Crown Mapping in a Mixed Temperate Forest. Remote Sens. 2020, 12, 309–329.

- Jim, C.Y.; Liu, H.T. Species diversity of three major urban forest types in Guangzhou City, China. For. Ecol. Manage. 2001, 146, 99–114.

- Jim, C.Y.; Chen, W.Y. Diversity and distribution of landscape trees in the compact Asian city of Taipei. Appl. Geogr. 2009, 29, 577–587.

- Jin, E.J.; Yoon, J.H.; Bae, E.J.; Jeong, B.R.; Yong, S.H.; Choi, M.S. Particulate Matter Removal Ability of Ten Evergreen Trees Planted in Korea Urban Greening. Forests 2021, 12, 438–454.

- Źróbek-Sokolnik, A.; Dynowski, P.; Źróbek, S. Preservation and Restoration of Roadside Tree Alleys in Line with Sustainable Development Principles—Mission (Im)possible? Sustainability 2021, 13, 9635–9651.

- Bella, F. Driver perception of roadside configurations on two-lane rural roads: Effects on speed and lateral placement. Accid. Anal. Prev. 2013, 50, 251–262.

- Xu, X.; Sun, S.; Liu, W.; García, E.H.; He, L.; Cai, Q.; Xu, S.; Wang, J.; Zhu, J. The cooling and energy saving effect of landscape design parameters of urban park in summer: A case of Beijing, China. Energy Build. 2017, 149, 91–100.

- Goličnik, B.; Thompson, C.W. Emerging relationships between design and use of urban park spaces. Landscape Urban Plann. 2010, 94, 38–53.

- Belaire, J.A.; Whelan, C.J.; Minor, E.S. Having our yards and sharing them too: The collective effects of yards on native bird species in an urban landscape. Ecol. Appl. 2014, 24, 2132–2143.

- Vila-Ruiz, C.P.; Meléndez-Ackerman, E.; Santiago-Bartolomei, R.; Garcia-Montiel, D.; Lastra, L.; Figuerola, C.E.; Fumero-Caban, J. Plant species richness and abundance in residential yards across a tropical watershed: Implications for urban sustainability. Ecol. Soc. 2014, 19, 22–32.

- Avolio, M.; Blanchette, A.; Sonti, N.F.; Locke, D.H. Time Is Not Money: Income Is More Important Than Lifestage for Explaining Patterns of Residential Yard Plant Community Structure and Diversity in Baltimore. Front. Ecol. Evol. 2020, 8, 85–98.

- Kirkpatrick, J.B.; Daniels, G.D.; Davison, A. Temporal and spatial variation in garden and street trees in six eastern Australian cities. Landscape Urban Plann. 2011, 101, 244–252.