A Review of Practical AI for Remote Sensing in Earth Sciences

Abstract

1. Introduction

- Overview of successful examples of practical AI in research and real-world applications;

- Discussion of research challenges and reality gaps in the practical integration of AI with remote sensing;

- Emerging trends and advancements in practical AI techniques for remote sensing;

- Common challenges that practical AI faces in remote sensing, such as data quality, availability of training data, interpretability, and the requirement for domain expertise;

- Potential practical AI solutions and ongoing or future real-world applications.

2. Basics of AI and Remote Sensing

2.1. Brief Recap of Remote Sensing Technologies

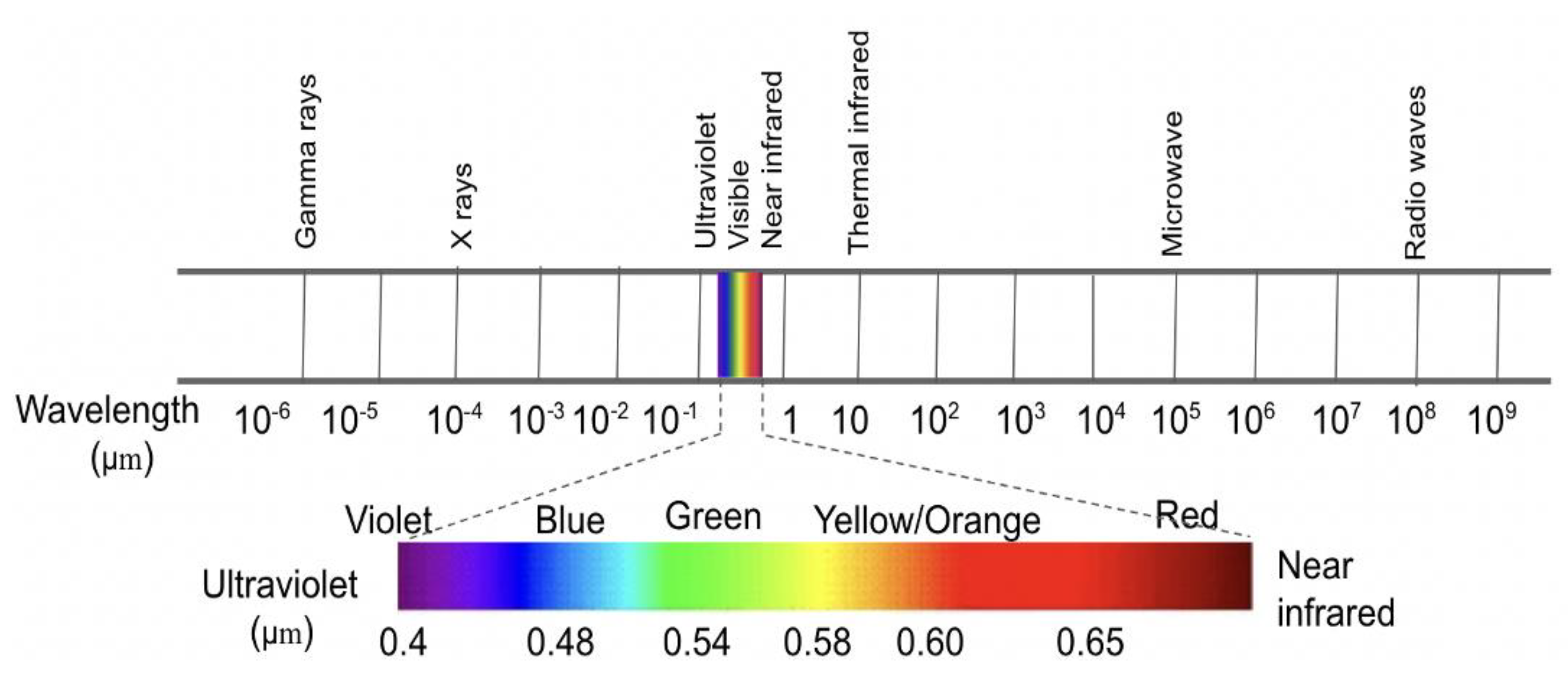

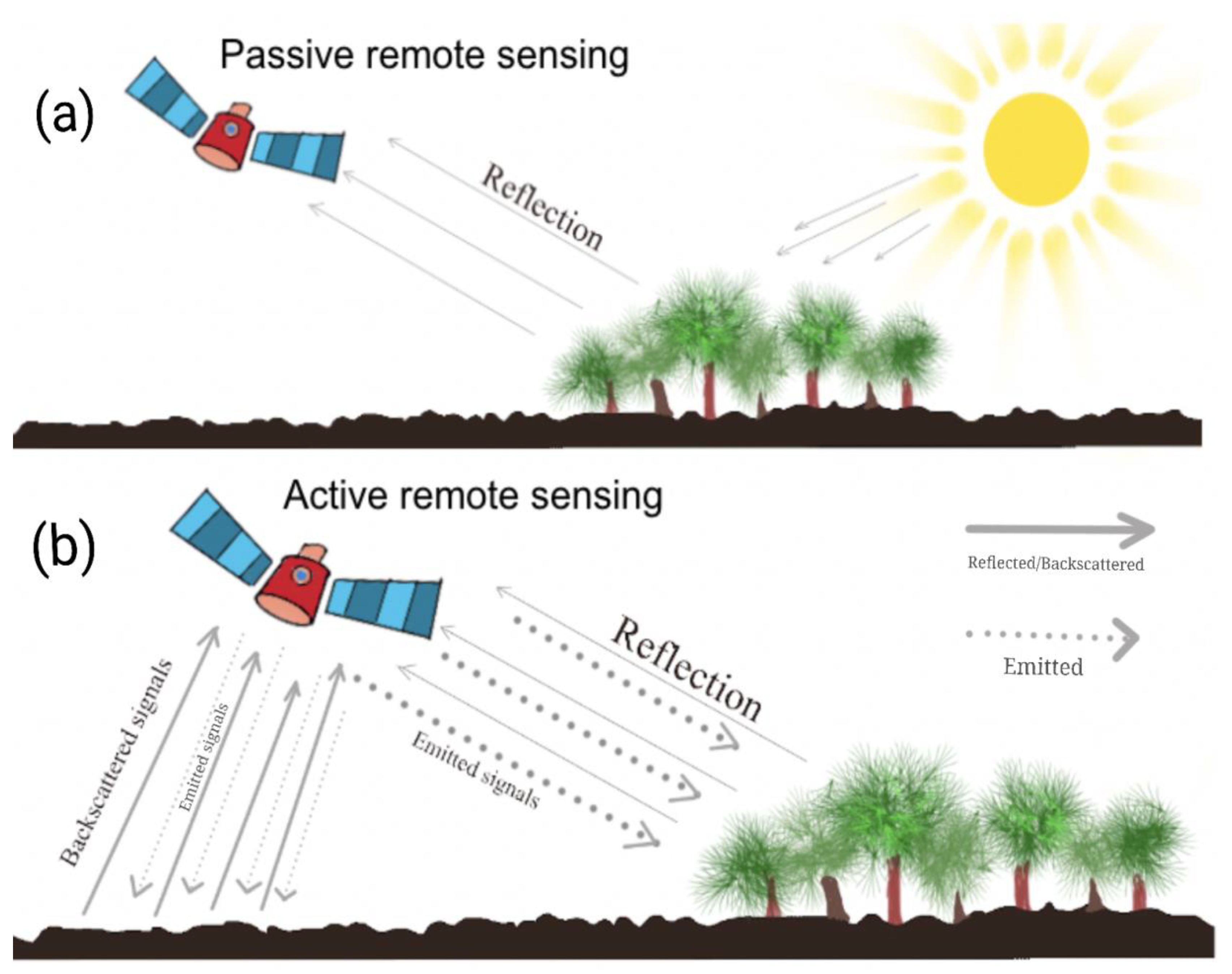

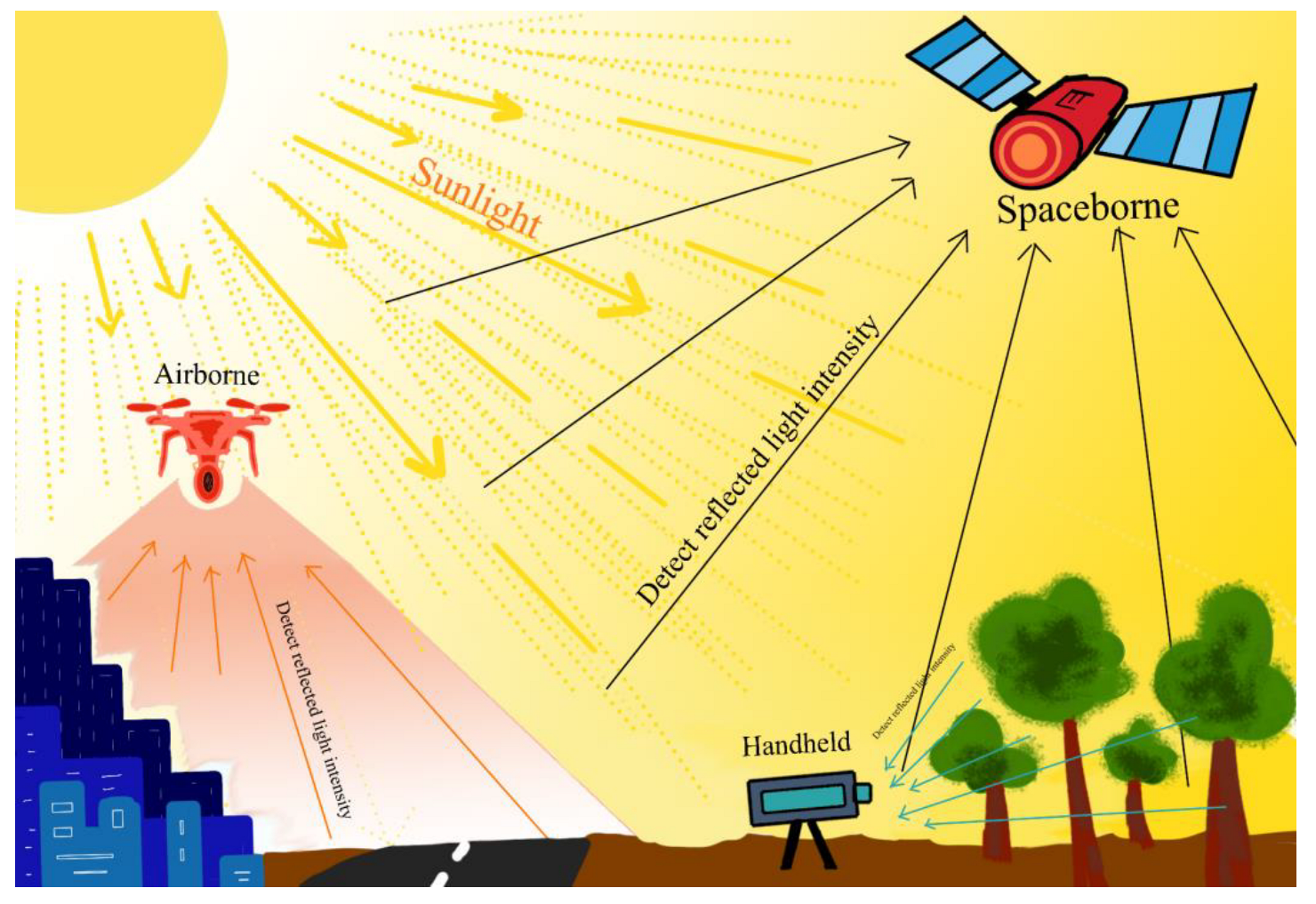

2.1.1. Optical Remote Sensing

2.1.2. Radar Remote Sensing

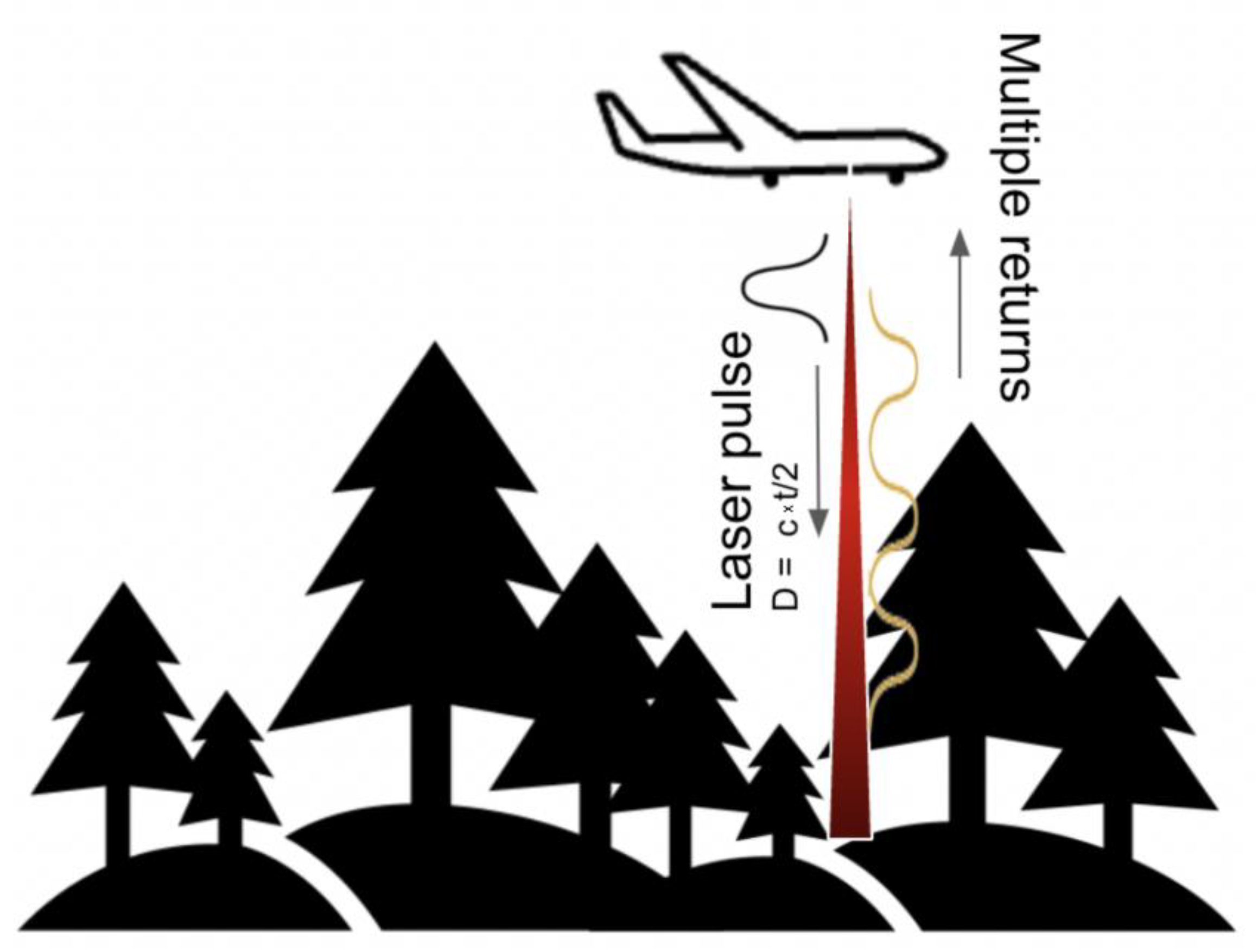

2.1.3. LiDAR

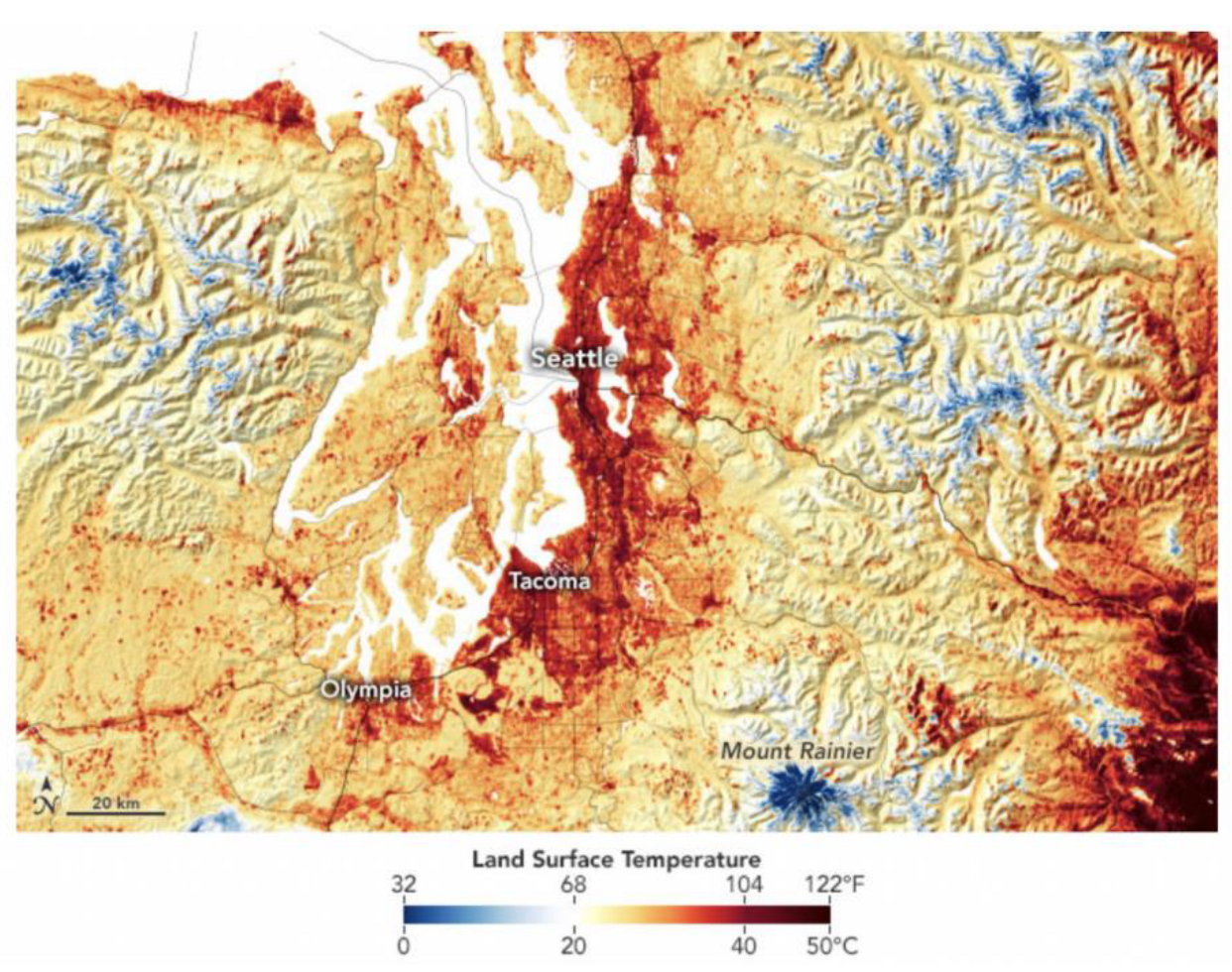

2.1.4. Thermal Remote Sensing

2.1.5. Multispectral and Hyperspectral Imaging

2.2. Key AI Techniques in Remote Sensing

2.2.1. Conventional Machine Learning in Remote Sensing

2.2.2. Deep Learning in Remote Sensing

- Deep Convolutional Neural Networks (DCNNs)

- 2.

- Deep Residual Networks (ResNets)

- 3.

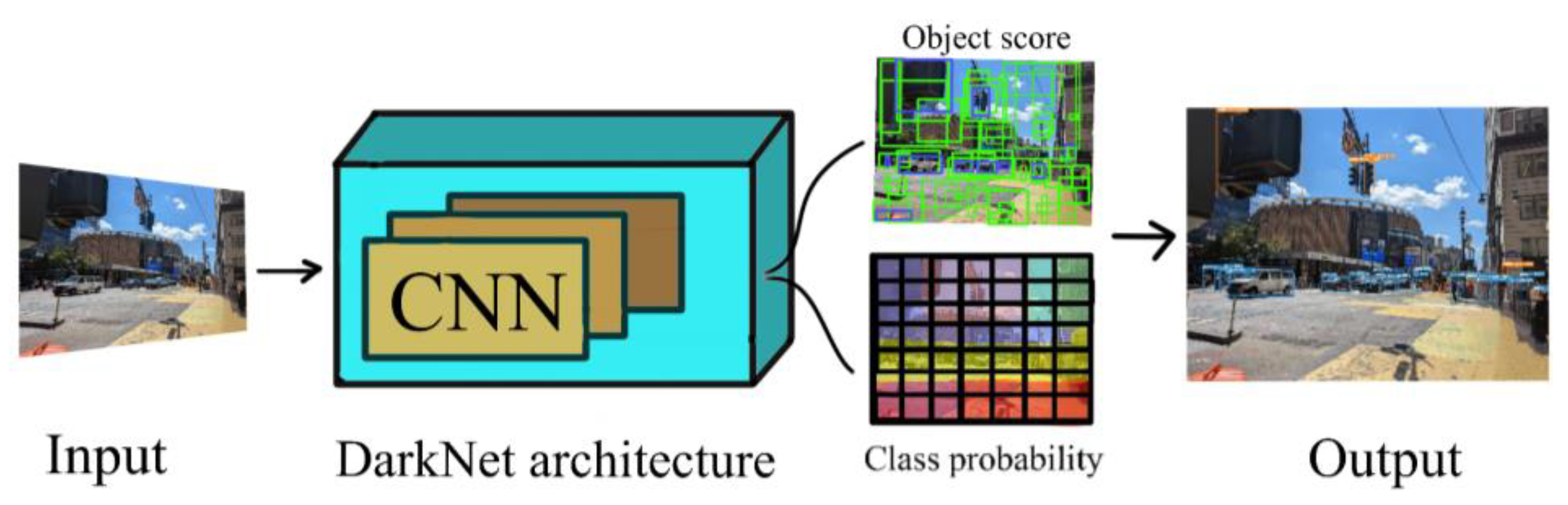

- You Only Look Once (YOLO)

- 4.

- Faster Region-Based CNN (R-CNN)

- 5.

- Self-Attention Methods

- 6.

- Long Short-Term Memory, LSTM

2.2.3. Other AI Methods in Remote Sensing

3. Current Practical Applications of AI in Remote Sensing

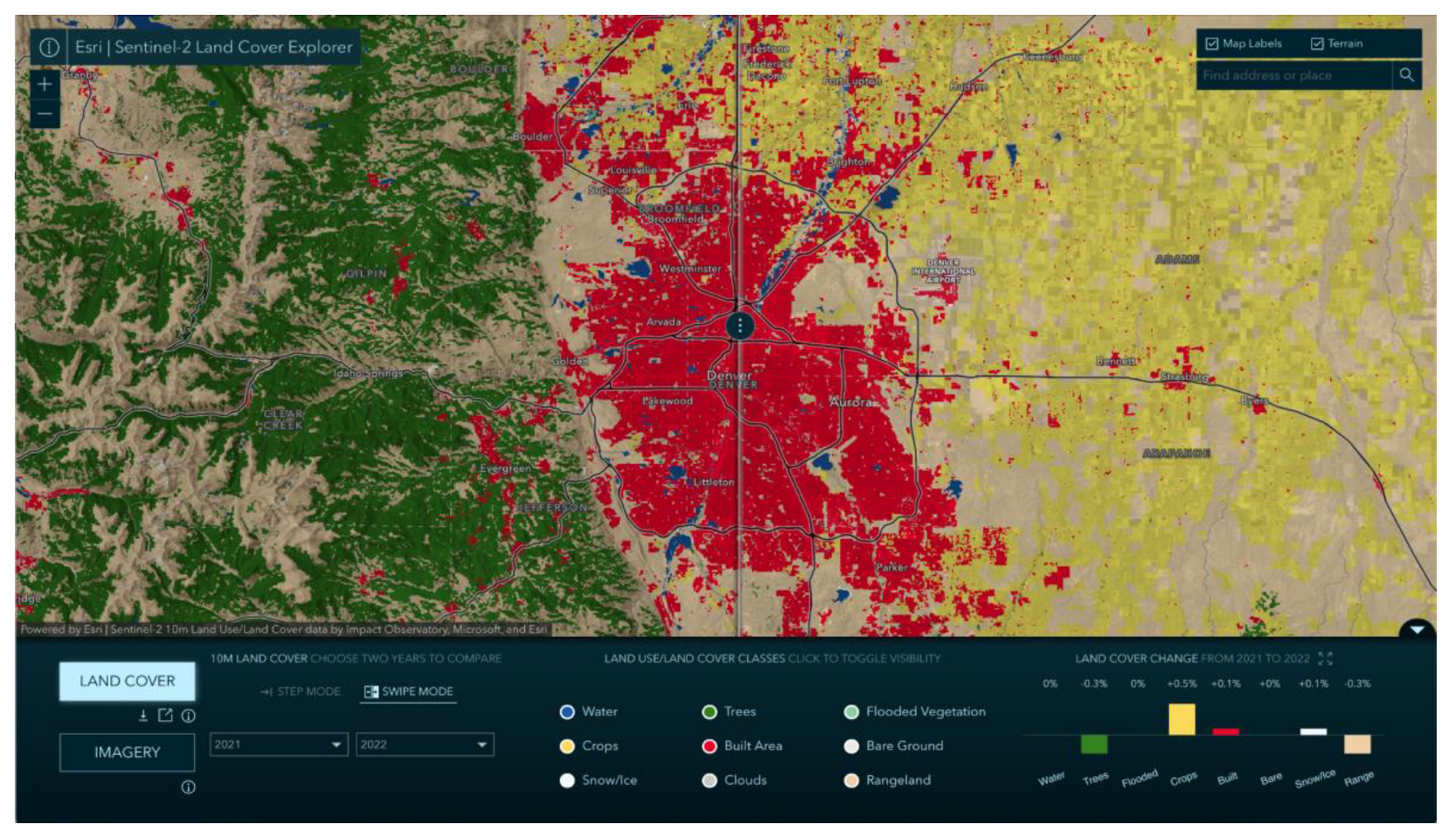

3.1. Land Cover Mapping

3.2. Earth Surface Object Detection

3.3. Multisource Data Fusion and Integration

3.4. Three-Dimensional and Invisible Object Extraction

4. Existing Challenges

4.1. Data Availability

4.2. Training Optimization

4.3. Data Quality

4.4. Uncertainty

4.5. Model Interpretability

4.6. Diversity

4.7. Integrity and Security

5. Ongoing and Future Practical AI Applications in Remote Sensing

5.1. Wildfire Detection and Management

5.2. Illegal Logging and Deforestation Monitoring

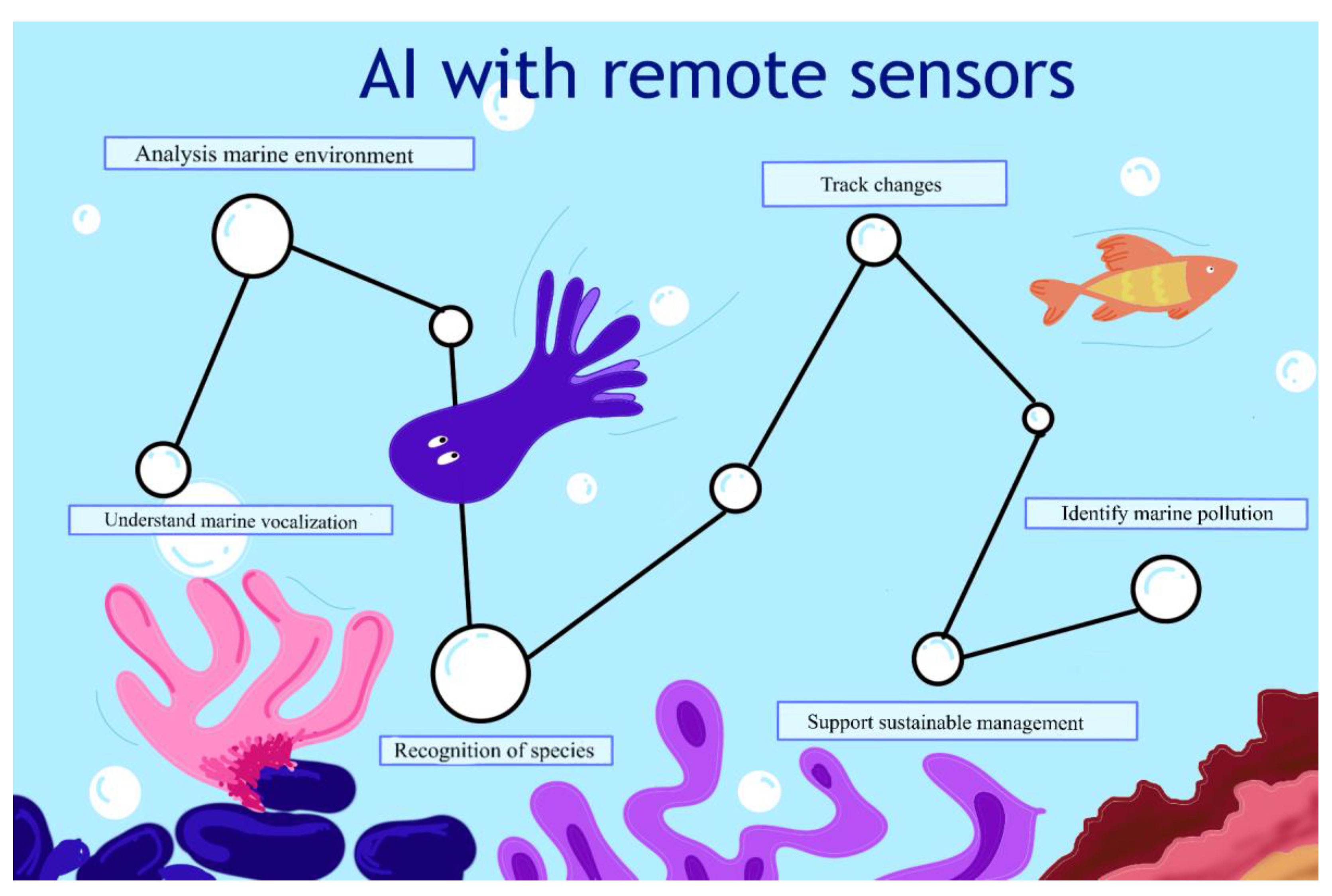

5.3. Coastal and Marine Ecosystem Monitoring

5.4. Biodiversity Conservation and Habitat Monitoring

5.5. Airborne Disease Monitoring and Forecasting

5.6. Precision Forestry

5.7. Urban Heat Island Mitigation

5.8. Precision Water Management

5.9. Disaster Resilience Planning

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest


- Alzubaidi, L.; Zhang, J.; Humaidi, A.J.; Al-Dujaili, A.; Duan, Y.; Al-Shamma, O.; Santamaría, J.; Fadhel, M.A.; Al-Amidie, M.; Farhan, L. Review of deep learning: Concepts, CNN architectures, challenges, applications, future directions. J. Big Data 2021, 8, 1–74. [Google Scholar] [CrossRef] [PubMed]

- Soydaner, D. A comparison of optimization algorithms for deep learning. Int. J. Pattern Recognit. Artif. Intell. 2020, 34, 2052013. [Google Scholar] [CrossRef]

- Sheng, V.S.; Provost, F.; Ipeirotis, P.G. Get another label? improving data quality and data mining using multiple, noisy labelers. In Proceedings of the 14th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, Las Vegas, NV, USA, 24–27 August 2008; pp. 614–622. [Google Scholar]

- Shan, J.; Aparajithan, S. Urban DEM generation from raw LiDAR data. Photogramm. Eng. Remote Sens. 2005, 71, 217–226. [Google Scholar] [CrossRef]

- Gao, F.; Hilker, T.; Zhu, X.; Anderson, M.; Masek, J.; Wang, P.; Yang, Y. Fusing Landsat and MODIS Data for Vegetation Monitoring. IEEE Geosci. Remote Sens. Mag. 2015, 3, 47–60. [Google Scholar] [CrossRef]

- Petitjean, F.; Inglada, J.; Gancarski, P. Satellite Image Time Series Analysis Under Time Warping. IEEE Trans. Geosci. Remote Sens. 2012, 50, 3081–3095. [Google Scholar] [CrossRef]

- Griffith, D.A.; Chun, Y. Spatial Autocorrelation and Uncertainty Associated with Remotely-Sensed Data. Remote Sens. 2016, 8, 535. [Google Scholar] [CrossRef]

- Miura, T.; Huete, A.; Yoshioka, H. Evaluation of sensor calibration uncertainties on vegetation indices for MODIS. IEEE Trans. Geosci. Remote Sens. 2000, 38, 1399–1409. [Google Scholar] [CrossRef]

- Güntner, A.; Stuck, J.; Werth, S.; Döll, P.; Verzano, K.; Merz, B. A global analysis of temporal and spatial variations in continental water storage. Water Resour. Res. 2007, 43, W05416. [Google Scholar] [CrossRef]

- Alvarez-Vanhard, E.; Corpetti, T.; Houet, T. UAV & satellite synergies for optical remote sensing applications: A literature review. Sci. Remote Sens. 2021, 3, 100019. [Google Scholar] [CrossRef]

- Himeur, Y.; Rimal, B.; Tiwary, A.; Amira, A. Using artificial intelligence and data fusion for environmental monitoring: A review and future perspectives. Inf. Fusion 2022, 86–87, 44–75. [Google Scholar] [CrossRef]

- von Eschenbach, W.J. Transparency and the black box problem: Why we do not trust AI. Philos. Technol. 2021, 34, 1607–1622. [Google Scholar] [CrossRef]

- Kakogeorgiou, I.; Karantzalos, K. Evaluating explainable artificial intelligence methods for multi-label deep learning classification tasks in remote sensing. Int. J. Appl. Earth Obs. Geoinf. 2021, 103, 102520. [Google Scholar] [CrossRef]

- Belle, V.; Papantonis, I. Principles and Practice of Explainable Machine Learning. Front. Big Data 2021, 4, 688969. [Google Scholar] [CrossRef] [PubMed]

- Shorten, C.; Khoshgoftaar, T.M. A survey on Image Data Augmentation for Deep Learning. J. Big Data 2019, 6, 60. [Google Scholar] [CrossRef]

- Torrey, L.; Shavlik, J. Transfer learning. In Handbook of Research on Machine Learning Applications and Trends: Algorithms, Methods, and Techniques; IGI Global: Hershey, PA, USA, 2010; pp. 242–264. [Google Scholar]

- Ganaie, M.A.; Hu, M.; Malik, A.K.; Tanveer, M.; Suganthan, P.N. Ensemble deep learning: A review. Eng. Appl. Artif. Intell. 2022, 115, 105151. [Google Scholar] [CrossRef]

- Chi, M.; Plaza, A.; Benediktsson, J.A.; Sun, Z.; Shen, J.; Zhu, Y. Big Data for Remote Sensing: Challenges and Opportunities. Proc. IEEE 2016, 104, 2207–2219. [Google Scholar] [CrossRef]

- Xie, M.; Jean, N.; Burke, M.; Lobell, D.; Ermon, S. Transfer Learning from Deep Features for Remote Sensing and Poverty Mapping. In Proceedings of the AAAI Conference on Artificial Intelligence, Phoenix, AZ, USA, 12–17 February 2016; Volume 30. [Google Scholar] [CrossRef]

- Benchaita, S.; Mccarthy, B.H. IBM and NASA Open Source Largest Geospatial AI Foundation Model on Hugging Face. IBM Newsroom. 3 August 2023. Available online: https://newsroom.ibm.com/2023-08-03-IBM-and-NASA-Open-Source-Largest-Geospatial-AI-Foundation-Model-on-Hugging-Face (accessed on 10 August 2023).

- Wang, J.; Lan, C.; Liu, C.; Ouyang, Y.; Qin, T.; Lu, W.; Chen, Y.; Zeng, W.; Yu, P. Generalizing to Unseen Domains: A Survey on Domain Generalization. IEEE Trans. Knowl. Data Eng. 2022, 35, 8052–8072. [Google Scholar] [CrossRef]

- Mehrabi, N.; Morstatter, F.; Saxena, N.; Lerman, K.; Galstyan, A. A Survey on Bias and Fairness in Machine Learning. ACM Comput. Surv. 2021, 54, 1–35. [Google Scholar] [CrossRef]

- Roselli, D.; Matthews, J.; Talagala, N. Managing bias in AI. In Proceedings of the 2019 World Wide Web Conference, New York, NY, USA, 13–17 May 2019; pp. 539–544. [Google Scholar]

- Raji, I.D.; Smart, A.; White, R.N.; Mitchell, M.; Gebru, T.; Hutchinson, B.; Smith-Loud, J.; Theron, D.; Barnes, P. Closing the AI accountability gap: Defining an end-to-end framework for internal algorithmic auditing. In Proceedings of the 2020 Conference on Fairness, Accountability, and Transparency, Barcelona, Spain, 27–30 January 2020; pp. 33–44. [Google Scholar]

- Alkhelaiwi, M.; Boulila, W.; Ahmad, J.; Koubaa, A.; Driss, M. An Efficient Approach Based on Privacy-Preserving Deep Learning for Satellite Image Classification. Remote Sens. 2021, 13, 2221. [Google Scholar] [CrossRef]

- Zhang, X.; Zhu, G.; Ma, S. Remote-sensing image encryption in hybrid domains. Opt. Commun. 2012, 285, 1736–1743. [Google Scholar] [CrossRef]

- Potkonjak, M.; Meguerdichian, S.; Wong, J.L. Trusted sensors and remote sensing. In Proceedings of the SENSORS, 2010 IEEE, Waikoloa, HI, USA, 1–4 November 2010; pp. 1104–1107. [Google Scholar] [CrossRef]

- Ismael, C.; Molina, P. Unmanned aerial systems for photogrammetry and remote sensing: A review. ISPRS J. Photogramm. Remote Sens. 2014, 92, 79–97. [Google Scholar]

- Jain, P.; Coogan, S.C.P.; Subramanian, S.G.; Crowley, M.; Taylor, S.W.; Flannigan, M.D. A review of machine learning applications in wildfire science and management. Environ. Rev. 2020, 28, 478–505. [Google Scholar] [CrossRef]

- Bouguettaya, A.; Zarzour, H.; Taberkit, A.M.; Kechida, A. A review on early wildfire detection from unmanned aerial vehicles using deep learning-based computer vision algorithms. Signal Process. 2022, 190, 108309. [Google Scholar] [CrossRef]

- Nguyen, G.; Dlugolinsky, S.; Bobák, M.; Tran, V.; García, L.; Heredia, I.; Malík, P.; Hluchý, L. Machine Learning and Deep Learning frameworks and libraries for large-scale data mining: A survey. Artif. Intell. Rev. 2019, 52, 77–124. [Google Scholar] [CrossRef]

- Amani, M.; Ghorbanian, A.; Ahmadi, S.A.; Kakooei, M.; Moghimi, A.; Mirmazloumi, S.M.; Moghaddam, S.H.A.; Mahdavi, S.; Ghahremanloo, M.; Parsian, S.; et al. Google Earth Engine Cloud Computing Platform for Remote Sensing Big Data Applications: A Comprehensive Review. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2020, 13, 5326–5350. [Google Scholar] [CrossRef]

- Garbini, S. How Geospatial AI Can Help You Comply with EU’s Deforestation Law—Customers. Picterra. 25 April 2023. Available online: https://picterra.ch/blog/how-geospatial-ai-can-help-you-comply-with-eus-deforestation-law/ (accessed on 6 July 2023).

- Mujetahid, A.; Nursaputra, M.; Soma, A.S. Monitoring Illegal Logging Using Google Earth Engine in Sulawesi Selatan Tropical Forest, Indonesia. Forests 2023, 14, 652. [Google Scholar] [CrossRef]

- González-Rivero, M.; Beijbom, O.; Rodriguez-Ramirez, A.; Bryant, D.E.; Ganase, A.; Gonzalez-Marrero, Y.; Herrera-Reveles, A.; Kennedy, E.V.; Kim, C.J.; Lopez-Marcano, S.; et al. Monitoring of Coral Reefs Using Artificial Intelligence: A Feasible and Cost-Effective Approach. Remote Sens. 2020, 12, 489. [Google Scholar] [CrossRef]

- Lou, R.; Lv, Z.; Dang, S.; Su, T.; Li, X. Application of machine learning in ocean data. Multimedia Syst. 2021, 29, 1815–1824. [Google Scholar] [CrossRef]

- Ditria, E.M.; Buelow, C.A.; Gonzalez-Rivero, M.; Connolly, R.M. Artificial intelligence and automated monitoring for assisting conservation of marine ecosystems: A perspective. Front. Mar. Sci. 2022, 9, 918104. [Google Scholar] [CrossRef]

- Shafiq, S.I. Artificial intelligence and big data science for oceanographic research in Bangladesh: Preparing for the future. J. Data Acquis. Process. 2023, 38, 418. [Google Scholar]

- Weeks, P.J.D.; Gaston, K.J. Image analysis, neural networks, and the taxonomic impediment to biodiversity studies. Biodivers. Conserv. 1997, 6, 263–274. [Google Scholar] [CrossRef]

- Silvestro, D.; Goria, S.; Sterner, T.; Antonelli, A. Improving biodiversity protection through artificial intelligence. Nat. Sustain. 2022, 5, 415–424. [Google Scholar] [CrossRef]

- Toivonen, T.; Heikinheimo, V.; Fink, C.; Hausmann, A.; Hiippala, T.; Järv, O.; Tenkanen, H.; Di Minin, E. Social media data for conservation science: A methodological overview. Biol. Conserv. 2019, 233, 298–315. [Google Scholar] [CrossRef]

- Tong, D.Q.; Gill, T.E.; Sprigg, W.A.; Van Pelt, R.S.; Baklanov, A.A.; Barker, B.M.; Bell, J.E.; Castillo, J.; Gassó, S.; Gaston, C.J.; et al. Health and Safety Effects of Airborne Soil Dust in the Americas and Beyond. Rev. Geophys. 2023, 61, e2021RG000763. [Google Scholar] [CrossRef]

- Alnuaim, A.; Ziheng, S.; Didarul, I. AI for improving ozone forecasting. In Artificial Intelligence in Earth Science; Elsevier: Amsterdam, The Netherlands, 2023; pp. 247–269. [Google Scholar]

- Bragazzi, N.L.; Dai, H.; Damiani, G.; Behzadifar, M.; Martini, M.; Wu, J. How Big Data and Artificial Intelligence Can Help Better Manage the COVID-19 Pandemic. Int. J. Environ. Res. Public Heal. 2020, 17, 3176. [Google Scholar] [CrossRef]

- Alnaim, A.; Sun, Z.; Tong, D. Evaluating Machine Learning and Remote Sensing in Monitoring NO2 Emission of Power Plants. Remote Sens. 2022, 14, 729. [Google Scholar] [CrossRef]

- Vaishya, R.; Javaid, M.; Khan, I.H.; Haleem, A. Artificial Intelligence (AI) applications for COVID-19 pandemic. Diabetes Metab. Syndr. Clin. Res. Rev. 2020, 14, 337–339. [Google Scholar] [CrossRef]

- Lim, K.; Treitz, P.; Wulder, M.; St-Onge, B.; Flood, M. LiDAR remote sensing of forest structure. Prog. Phys. Geogr. Earth Environ. 2003, 27, 88–106. [Google Scholar] [CrossRef]

- Liu, L.; Zhang, Q.; Guo, Y.; Chen, E.; Li, Z.; Li, Y.; Wang, B.; Ri, A. Mapping the Distribution and Dynamics of Coniferous Forests in Large Areas from 1985 to 2020 Combining Deep Learning and Google Earth Engine. Remote Sens. 2023, 15, 1235. [Google Scholar] [CrossRef]

- Sharma, M.K.; Mujawar, R.; Mujawar, A.; Dhayalini, K. Precision Forestry: Integration of Robotics and Sensing Technologies for Tree Measurement and Monitoring. Eur. Chem. Bull. 2023, 12, 4747–4764. [Google Scholar]

- Stereńczak, K. Precision Forestry. IDEAS NCBR—Intelligent Algorithms for Digital Economy. 13 April 2023. Available online: https://ideas-ncbr.pl/en/research/precision-forestry/ (accessed on 5 July 2023).

- Liu, W.; Quijano, K.; Crawford, M.M. YOLOv5-Tassel: Detecting Tassels in RGB UAV Imagery With Improved YOLOv5 Based on Transfer Learning. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2022, 15, 8085–8094. [Google Scholar] [CrossRef]

- Amila, J.; Ranaweera, N.; Abenayake, C.; Bandara, N.; De Silva, C. Modelling vegetation land fragmentation in urban areas of Western Province, Sri Lanka using an Artificial Intelligence-based simulation technique. PLoS ONE 2023, 18, e0275457. [Google Scholar]

- Kolokotroni, M.; Zhang, Y.; Watkins, R. The London Heat Island and building cooling design. Sol. Energy 2007, 81, 102–110. [Google Scholar] [CrossRef]

- Lyu, F.; Wang, S.; Han, S.Y.; Catlett, C.; Wang, S. An integrated cyberGIS and machine learning framework for fine-scale prediction of Urban Heat Island using satellite remote sensing and urban sensor network data. Urban Inform. 2022, 1, 1–15. [Google Scholar] [CrossRef]

- Rahman, A.; Roy, S.S.; Talukdar, S.; Shahfahad (Eds.) Advancements in Urban Environmental Studies: Application of Geospatial Technology and Artificial Intelligence in Urban Studies; Springer International Publishing: Cham, Switzerland, 2023. [Google Scholar]

- Alnaim, A.; Ziheng, S. Using Geoweaver to Make Snow Mapping Workflow FAIR. In Proceedings of the 2022 IEEE 18th International Conference on e-Science (e-Science), Salt Lake City, UT, USA, 11–14 October 2022; pp. 409–410. [Google Scholar]

- Yang, K.; John, A.; Sun, Z.; Cristea, N. Machine learning for snow cover mapping. In Artificial Intelligence in Earth Science; Elsevier: Amsterdam, The Netherlands, 2023; pp. 17–39. [Google Scholar]

- An, S.; Rui, X. A High-Precision Water Body Extraction Method Based on Improved Lightweight U-Net. Remote. Sens. 2022, 14, 4127. [Google Scholar] [CrossRef]

- Al-Bakri, J.T.; D’Urso, G.; Calera, A.; Abdalhaq, E.; Altarawneh, M.; Margane, A. Remote Sensing for Agricultural Water Management in Jordan. Remote Sens. 2022, 15, 235. [Google Scholar] [CrossRef]

- Xiang, X.; Li, Q.; Khan, S.; Khalaf, O.I. Urban water resource management for sustainable environment planning using artificial intelligence techniques. Environ. Impact Assess. Rev. 2020, 86, 106515. [Google Scholar] [CrossRef]

- Sun, A.Y.; Scanlon, B.R. How can Big Data and machine learning benefit environment and water management: A survey of methods, applications, and future directions. Environ. Res. Lett. 2019, 14, 073001. [Google Scholar] [CrossRef]

- Sun, W.; Bocchini, P.; Davison, B.D. Applications of artificial intelligence for disaster management. Nat. Hazards 2020, 103, 2631–2689. [Google Scholar] [CrossRef]

- Chapman, A. Leveraging Big Data and AI for Disaster Resilience and Recovery; Texas A&M University College of Engineering: College Station, TX, USA, 2023; Available online: https://engineering.tamu.edu/news/2023/06/leveraging-big-data-and-ai-for-disaster-resilience-and-recovery.html (accessed on 10 August 2023).

- Imran, M.; Castillo, C.; Lucas, J.; Meier, P.; Vieweg, S. AIDR: Artificial Intelligence for Disaster Response. In Proceedings of the 23rd International Conference on World Wide Web, Seoul, Republic of Korea, 7–11 April 2014; ACM: New York, NY, USA; pp. 159–162. [Google Scholar]

- Gevaert, C.M.; Carman, M.; Rosman, B.; Georgiadou, Y.; Soden, R. Fairness and accountability of AI in disaster risk management: Opportunities and challenges. Patterns 2021, 2, 100363. [Google Scholar] [CrossRef] [PubMed]

- Cao, L. AI and Data Science for Smart Emergency, Crisis and Disaster Resilience. Int. J. Data Sci. Anal. 2023, 15, 231–246. [Google Scholar] [CrossRef]

| Technique | Advantages | Limitations | Sample Applications |
|---|---|---|---|
| Optical remote sensing | captures reflected solar radiation and emitted thermal radiation for analysis within the visible and near-infrared spectrum bands; provides various sensor types for the collection of handheld, airborne, and spaceborne data; offers extensive coverage and repeated observations over time with spaceborne sensors | atmospheric conditions, sun angles, and shadows can impact data accuracy; night-time data are not available, and each acquisition is a single snapshot; clouds cannot be penetrated and can hinder data collection; high-resolution data can be costly and hard to obtain | land-use mapping and crop health assessment; vegetation monitoring; climate change monitoring |
| Radar remote sensing | operates in the microwave region, providing valuable data on distance, direction, shape, size, roughness, and dielectric properties of targets; enables accurate mapping even in challenging weather or limited visibility conditions; utilizes dual-polarization technology for enhanced forest cover mapping | data processing can be complex; lack of spectral information and limited penetration through some materials; high sensitivity to surface roughness | mapping land surfaces and monitoring weather patterns; studying ocean currents; detecting buildings, vehicles, and changes in forest cover |
| LiDAR | provides precise distance and elevation measurements of ground objects; high-resolution 3D data; penetration of vegetation; day and night operation; multiple returns from a single laser pulse and reduced atmospheric interference | data processing complexity, especially for full-waveform LiDAR systems; accuracy dependent on elevation and angle; high cost and limited availability; limited penetration through dense vegetation | creating accurate and detailed 3D maps of trees, buildings, pipelines, etc. |
| Thermal remote sensing | measures radiant flux emitted by ground objects within specific wavelength ranges; provides information on the emissivity, reflectivity, and temperature of target objects | atmospheric conditions, changes in solar illumination, and target variations can impact data accuracy | agriculture and environmental monitoring (e.g., fire detection, urban heat islands) |
| Multispectral and hyperspectral imaging | captures a broad range of wavelengths, including infrared and ultraviolet, for comprehensive data collection; hyperspectral imaging (HSI) provides valuable insights into material composition, structure, and condition | high-dimensional and noisy data in HSI pose analysis challenges; limited spectral resolution in multispectral imaging | recognition of vegetation patterns such as greenness, vitality, and biomass; studying material properties (e.g., physical and chemical alterations) |
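The LiDAR row above mentions distance measurements and multiple returns from a single laser pulse. As a minimal sketch of the underlying arithmetic, the round-trip travel time of each return can be converted to a range with d = c·t/2. The function names, the nanosecond input unit, and the nadir-looking geometry assumed for the height estimate are illustrative assumptions, not part of the reviewed systems.

```python
from typing import List

# Speed of light in vacuum (m/s); atmospheric refraction is ignored in this sketch.
SPEED_OF_LIGHT = 299_792_458.0


def return_ranges(return_times_ns: List[float]) -> List[float]:
    """Convert round-trip return times (nanoseconds) to one-way ranges (metres)."""
    # The pulse travels to the target and back, hence the division by two.
    return [SPEED_OF_LIGHT * t * 1e-9 / 2.0 for t in return_times_ns]


def first_last_height(return_times_ns: List[float]) -> float:
    """Estimate object height as the range gap between first and last return.

    Assumes a nadir-looking sensor, with the first return from the canopy top
    and the last return from the ground (hypothetical simplification).
    """
    ranges = return_ranges(return_times_ns)
    return max(ranges) - min(ranges)


if __name__ == "__main__":
    times = [2000.0, 2040.0, 2100.0]  # three returns from one pulse, in ns
    print(return_ranges(times))
    print(first_last_height(times))
```

Real processing chains also correct for scan angle, platform position, and atmospheric effects, which this sketch deliberately leaves out.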

| Technique | Advantages | Limitations | Applications |
|---|---|---|---|
| Random forest (RF) | effectively handles multi-temporal and multi-sensor remote sensing data; provides variable importance measurements for feature selection; enhances generalization and reduces computational load and redundancy; RF feature selection prioritizes informative variables by evaluating interrelationships and discriminating ability in high-dimensional remote sensing data, leading to more accurate classification results | can be sensitive to the choice of hyperparameters; does not guarantee that the selected features will be the best for all tasks | classification of remote sensing data; object detection in remote sensing |
| XGBoost | handles cases where different classes exhibit similar spectral signatures; effectively differentiates classes with subtle spectral differences, enhancing classification performance; uses hyperparameter tuning techniques to ensure optimal accuracy and prevent overfitting | hyperparameter sensitivity; prone to overfitting; slower than RF | classification of remote sensing data with high accuracy and robustness |
| DCNNs | efficiently handle intricate patterns and features in remote sensing images; learn hierarchical representations of features from convolution and pooling layers; enable accurate recognition of objects through fully connected layers with softmax activation | training DCNNs can be computationally expensive, especially for large-scale datasets; may suffer from vanishing gradients or overfitting if not properly regularized | remote sensing image recognition and classification; object detection tasks in remote sensing using a region proposal network (RPN) |
| ResNets | alleviate the degradation problem in deep learning models, allowing the training of much deeper networks; handle complex, high-dimensional, and noisy data in remote sensing | implementing very deep networks may still require significant computational resources | image recognition; object detection |
| YOLO | efficiently identifies and classifies multiple objects in large datasets of images or video frames; simultaneously processes the entire image and region proposals; uses non-maximum suppression (NMS) to remove overlapping bounding boxes and improve precision | may struggle with the detection of small objects in low-resolution images; requires careful anchor box design for accurate bounding box predictions | real-time object detection and segmentation in remote sensing images |
| Self-attention methods | capture long-range dependencies in sequences and handle spatial and spectral dependencies in remote sensing data; provide access to all elements in a sequence, enabling a comprehensive understanding of dependencies | transformer models can be memory-intensive due to their self-attention mechanism; properly tuning the number of attention heads and layers is essential for optimal performance | sequence modeling and image classification in remote sensing data; time series analysis of remote sensing data, capturing diverse pixel relationships regardless of spatial distance |
| LSTM | effectively captures long-term dependencies in sequences; overcomes the vanishing gradient problem with gate mechanisms | training LSTMs can be time-consuming, particularly for longer sequences; can struggle with capturing very long-term dependencies in sequences; may require careful tuning of hyperparameters to prevent overfitting | sequence modeling and time series analysis in remote sensing data |
| GANs | capable of handling complex, high-dimensional data distributions with limited or no annotated training data; as a data augmentation method, enhance the performance of data-reliant deep learning models | training GANs can be challenging and unstable, requiring careful hyperparameter tuning; generating high-quality, realistic images may be difficult in some cases; may suffer from mode collapse, where the generator produces limited variations in images | image-to-image translation tasks such as converting satellite images with cloud coverage into cloud-free versions using CycleGAN; enhancing the resolution of low-resolution satellite images with SRGAN and similar approaches; data augmentation and pan-sharpening |
| DRL | learns from unlabeled data to improve decision-making processes; combines reinforcement learning (RL) with deep neural networks for solving complex problems; handles redundant spectral information | requires careful design and tuning of reward functions to ensure the desired behavior; training deep neural networks in DRL can be computationally expensive and time-consuming; the exploration vs. exploitation trade-off in RL can impact the learning process and can be dependent on the sample | improving unsupervised band selection in hyperspectral image classification using DRL with DQN; image processing applications that analyze large amounts of data |
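The YOLO row above lists non-maximum suppression (NMS) as the step that removes overlapping bounding boxes. The following is a minimal sketch of greedy NMS in plain Python, assuming axis-aligned boxes given as `(x_min, y_min, x_max, y_max)` and an illustrative IoU threshold of 0.5. The function names and the threshold are assumptions for illustration, not the implementation used by any particular YOLO release.

```python
from typing import List, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max)


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def non_max_suppression(
    boxes: Sequence[Box],
    scores: Sequence[float],
    iou_threshold: float = 0.5,  # illustrative default, tune per dataset
) -> List[int]:
    """Return indices of kept boxes, highest score first."""
    # Visit boxes from most to least confident.
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep: List[int] = []
    for i in order:
        # Keep a box only if it does not overlap too much with any kept box.
        if all(iou(boxes[i], boxes[j]) <= iou_threshold for j in keep):
            keep.append(i)
    return keep


if __name__ == "__main__":
    boxes = [(0, 0, 10, 10), (1, 1, 11, 11), (20, 20, 30, 30)]
    scores = [0.9, 0.8, 0.7]
    print(non_max_suppression(boxes, scores))
```

This version is quadratic in the number of boxes and class-agnostic. Production detectors typically run a vectorized, per-class variant.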

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Janga, B.; Asamani, G.P.; Sun, Z.; Cristea, N. A Review of Practical AI for Remote Sensing in Earth Sciences. Remote Sens. 2023, 15, 4112. https://doi.org/10.3390/rs15164112
