Localization of Mobile Robots Based on Depth Camera

Abstract

1. Introduction

2. Methods

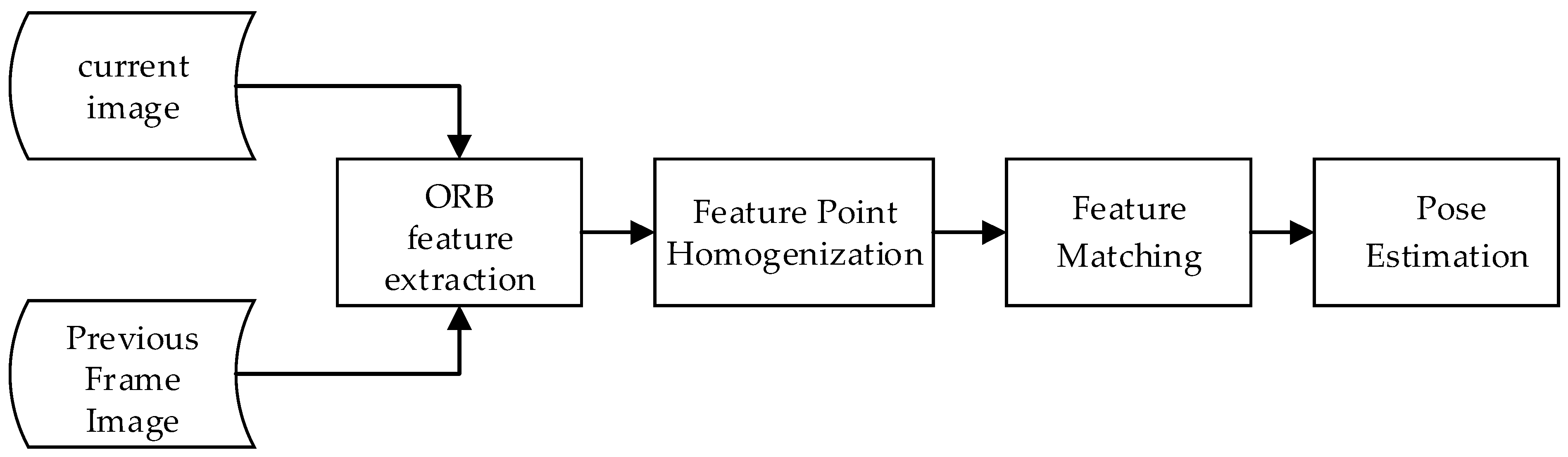

2.1. System Framework

2.2. ORB Feature Extraction

2.3. Feature Point Homogenization

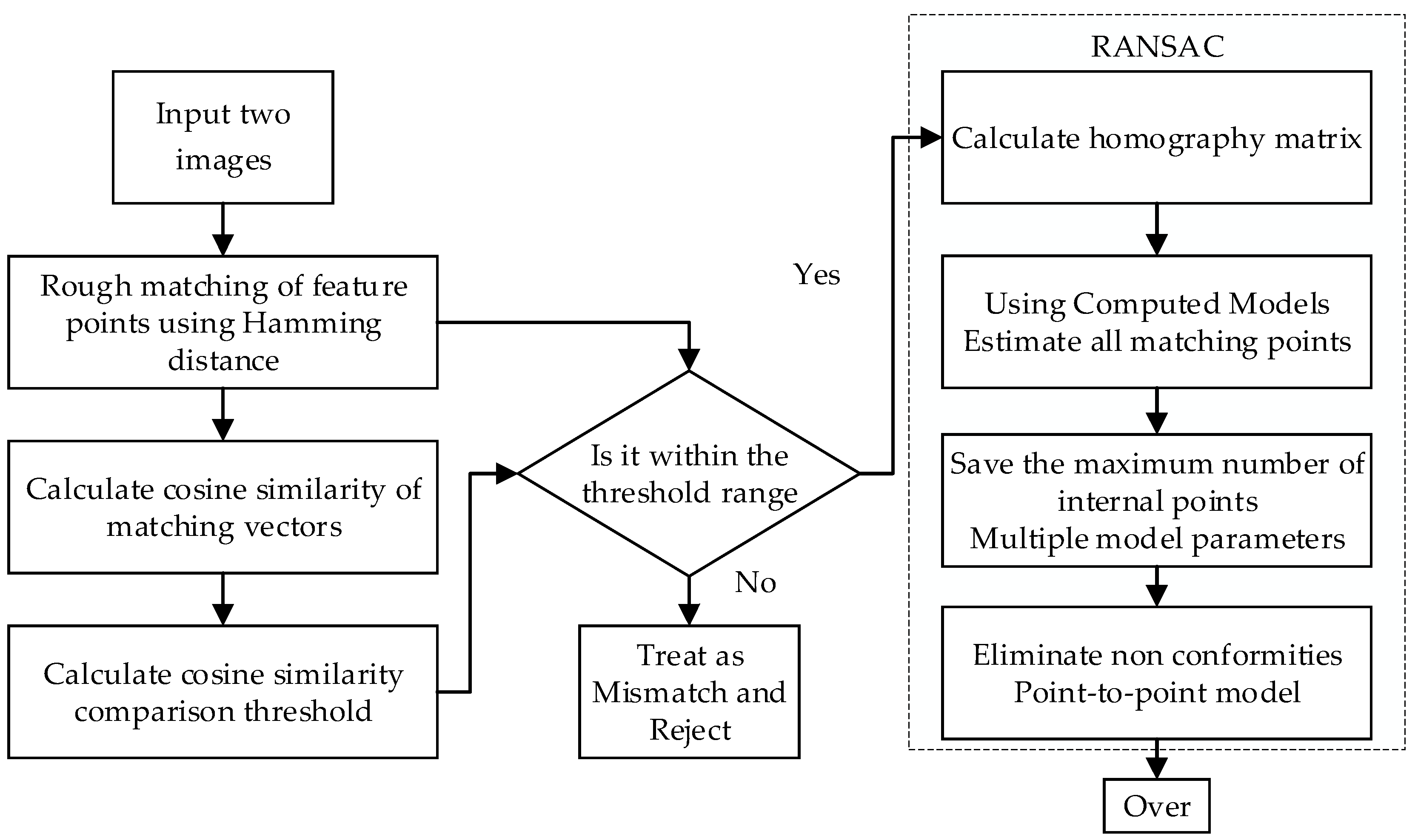

2.4. Feature Matching

2.5. Camera Pose Estimation

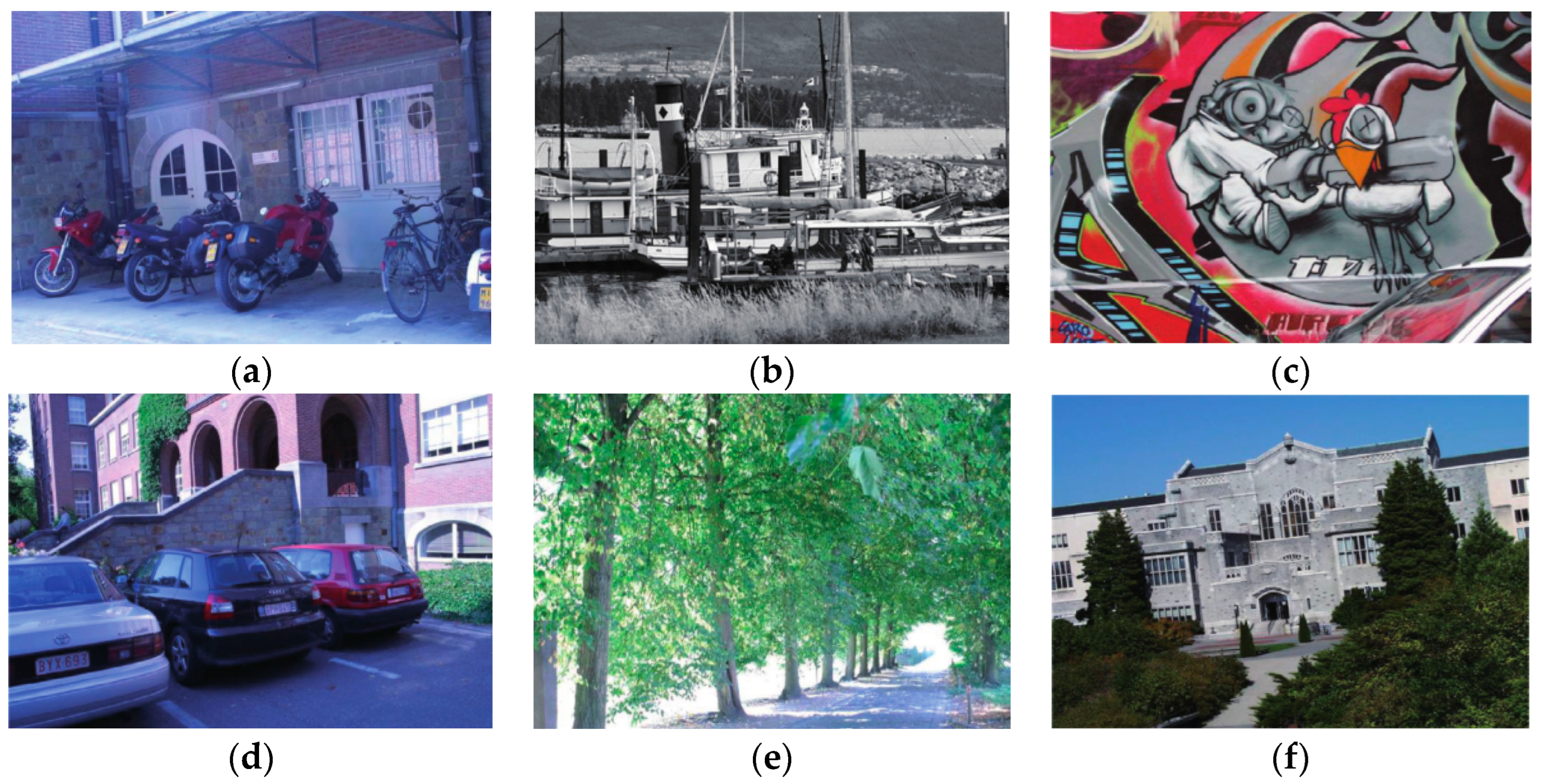

3. Experimental Environment and Datasets

4. Technical Implementation and Results

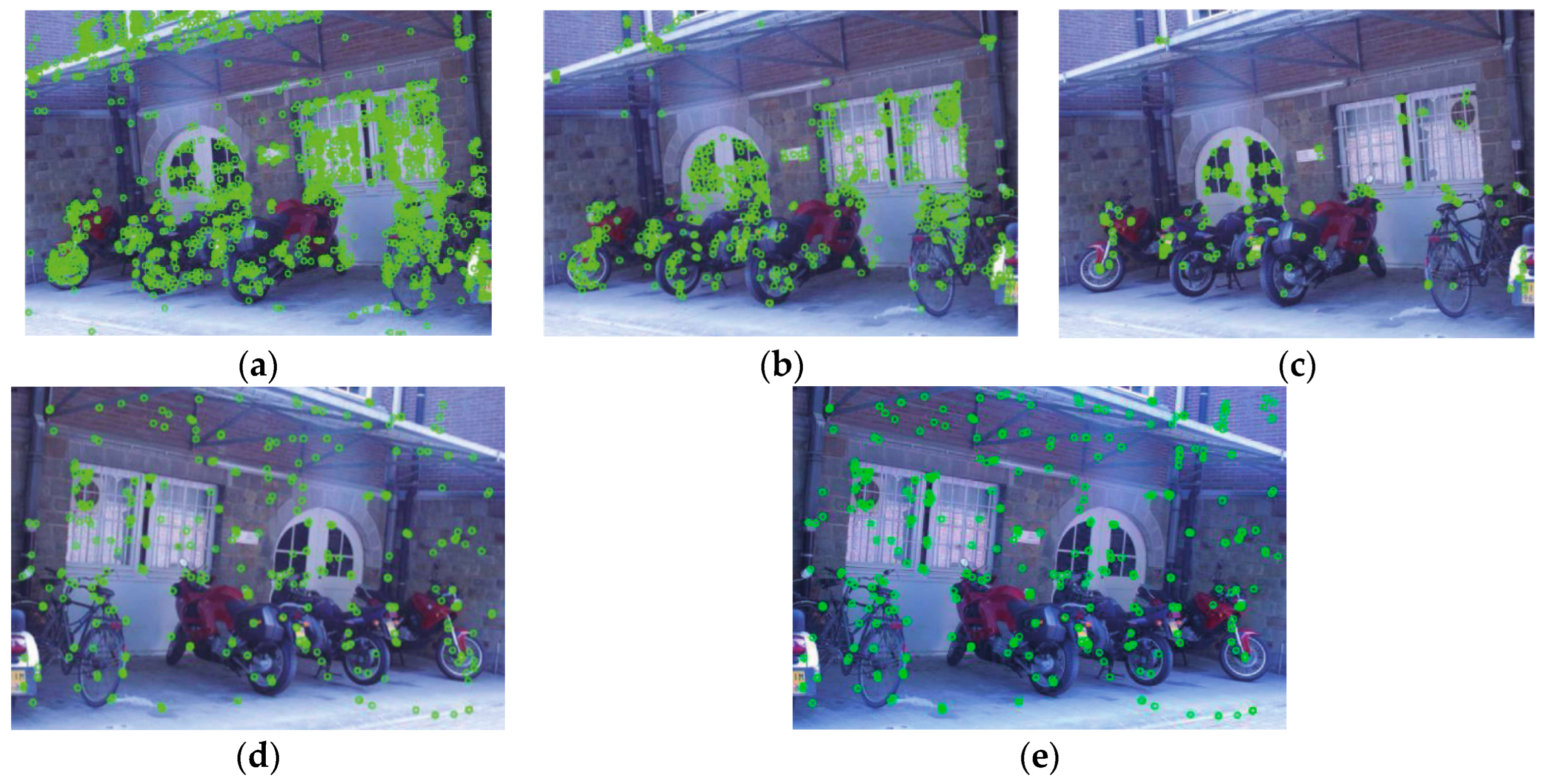

4.1. Feature Point Extraction and Homogenization

4.2. Feature Matching and Mismatching Elimination

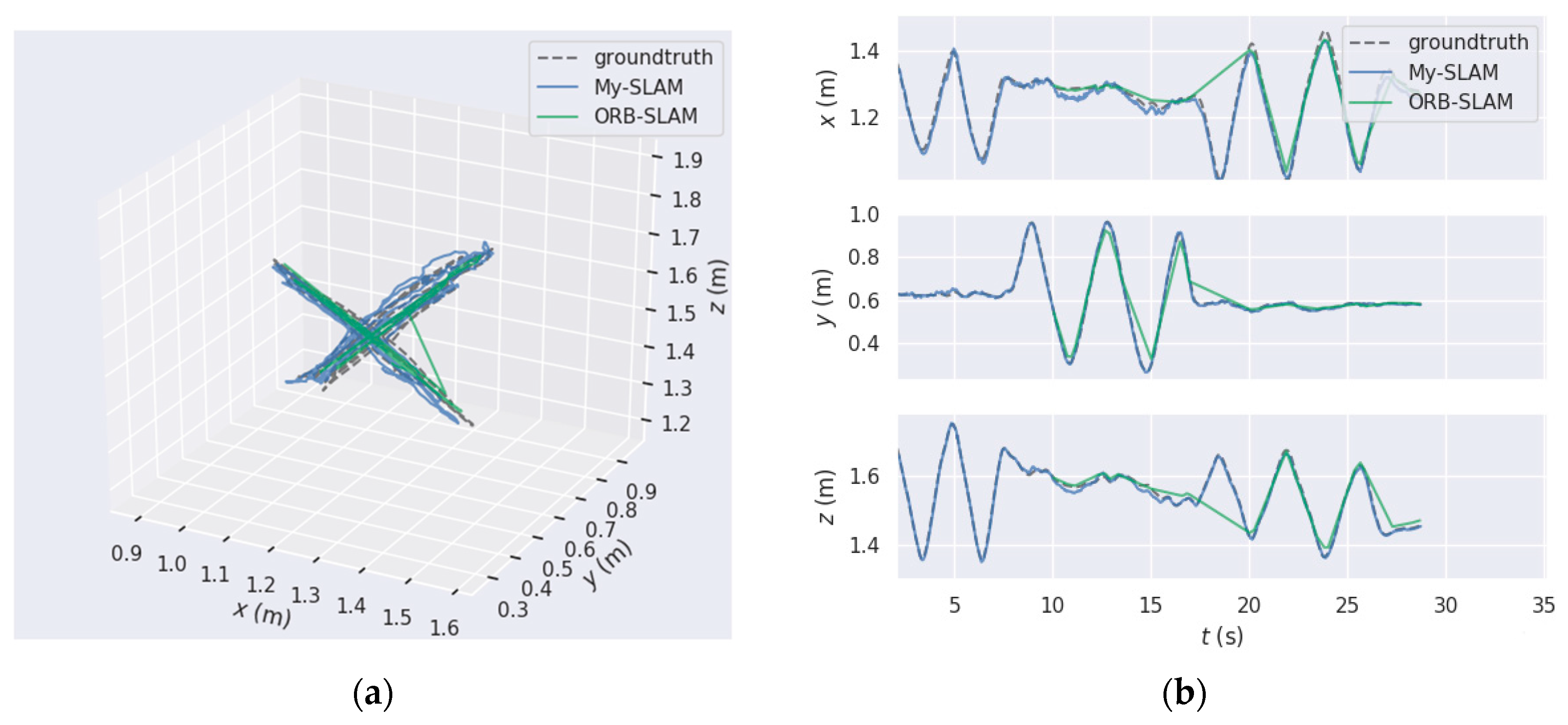

4.3. Localization and Map Construction

5. Discussion and Future Work

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

| Sequence | Trajectory Length | Number of Frames | Average Angular Velocity | Translational Speed |
|---|---|---|---|---|
| fr1/xyz | 7.11 m | 798 | 8.920 deg/s | 0.244 m/s |
| fr1/desk | 18.88 m | 2965 | 23.33 deg/s | 0.293 m/s |
| fr1/desk2 | 10.16 m | 640 | 29.31 deg/s | 0.430 m/s |

| Dataset | SIFT | SURF | ORB | MRA | This Paper |
|---|---|---|---|---|---|
| Bikes | 189.54 | 254.09 | 171.85 | 141.27 | 140.64 |
| Boat | 201.34 | 201.34 | 148.83 | 111.05 | 92.43 |
| Graf | 158.66 | 159.55 | 120.23 | 84.45 | 84.37 |
| Leuven | 234.04 | 273.04 | 175.45 | 158.66 | 168.16 |
| Trees | 206.39 | 231.24 | 146.66 | 112.15 | 113.32 |
| Ubc | 253.76 | 269.88 | 180.09 | 168.16 | 166.01 |

| Algorithm | Bikes | Boat | Graf | Leuven | Trees | Ubc |
|---|---|---|---|---|---|---|
| ORB | 58.04 | 50.95 | 39.46 | 63.90 | 73.64 | 68.66 |
| MRA | 70.55 | 64.31 | 46.45 | 86.84 | 90.43 | 85.56 |
| Our algorithm | 76.52 | 76.39 | 47.18 | 88.90 | 94.37 | 90.45 |

| Algorithm | fr1/xyz | fr1/desk2 | fr1/desk |
|---|---|---|---|
| My-SLAM | 0.0126 m | 0.0582 m | 0.0191 m |
| ORB-SLAM | 0.0139 m | 0.0752 m | 0.0223 m |

| Algorithm | fr1/xyz | fr1/desk2 | fr1/desk |
|---|---|---|---|
| My-SLAM | 0.0109 m | 0.0439 m | 0.0247 m |
| ORB-SLAM | 0.0121 m | 0.0533 m | 0.0296 m |
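
The two tables above list per-sequence error values in metres for My-SLAM and ORB-SLAM. As a minimal sketch, the relative reduction of My-SLAM against ORB-SLAM can be computed directly from those numbers. The table labels `first table` and `second table` and the helper names are illustrative assumptions; this extract does not name the error metric each table reports.

```python
# Values copied from the two My-SLAM / ORB-SLAM tables above (metres).
# The dictionary keys "first table" / "second table" are placeholders,
# since the metric each table reports is not labelled in this extract.
errors = {
    "first table": {
        "My-SLAM": {"fr1/xyz": 0.0126, "fr1/desk2": 0.0582, "fr1/desk": 0.0191},
        "ORB-SLAM": {"fr1/xyz": 0.0139, "fr1/desk2": 0.0752, "fr1/desk": 0.0223},
    },
    "second table": {
        "My-SLAM": {"fr1/xyz": 0.0109, "fr1/desk2": 0.0439, "fr1/desk": 0.0247},
        "ORB-SLAM": {"fr1/xyz": 0.0121, "fr1/desk2": 0.0533, "fr1/desk": 0.0296},
    },
}


def relative_reduction(baseline: float, candidate: float) -> float:
    """Percentage by which `candidate` is lower than `baseline`."""
    return 100.0 * (baseline - candidate) / baseline


def summarize(table: dict) -> dict:
    """Per-sequence reduction of My-SLAM relative to ORB-SLAM, in percent."""
    return {
        seq: relative_reduction(table["ORB-SLAM"][seq], table["My-SLAM"][seq])
        for seq in table["ORB-SLAM"]
    }


for name, table in errors.items():
    for seq, pct in summarize(table).items():
        print(f"{name}, {seq}: {pct:.1f}% lower than ORB-SLAM")
```

A positive percentage means My-SLAM reports a smaller error than ORB-SLAM on that sequence.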

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Yin, Z.; Wen, H.; Nie, W.; Zhou, M. Localization of Mobile Robots Based on Depth Camera. Remote Sens. 2023, 15, 4016. https://doi.org/10.3390/rs15164016
