A Spatial–Temporal Bayesian Deep Image Prior Model for Moderate Resolution Imaging Spectroradiometer Temporal Mixture Analysis

Abstract

1. Introduction

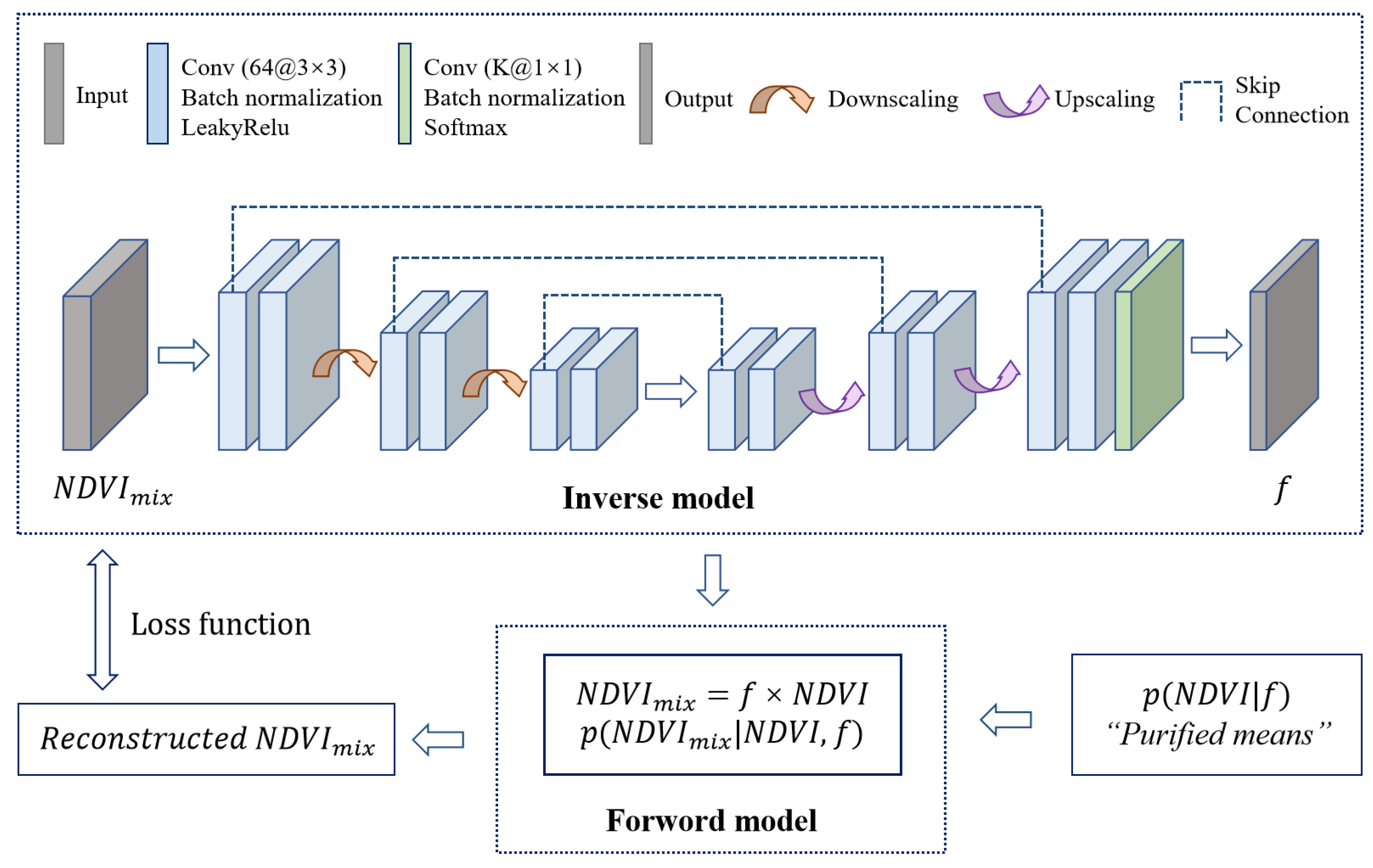

- The U-Net convolutional architecture is designed specifically to estimate crop coverage from MODIS NDVI time-series data. Through multiple downsampling and upsampling layers, the model captures the spatial and temporal context of the MODIS NDVI data, so that the abundances of the temporal endmembers (tEMs) can be estimated efficiently. In addition, the deep image prior (DIP) is used to account for spatial correlation within the abundance field.

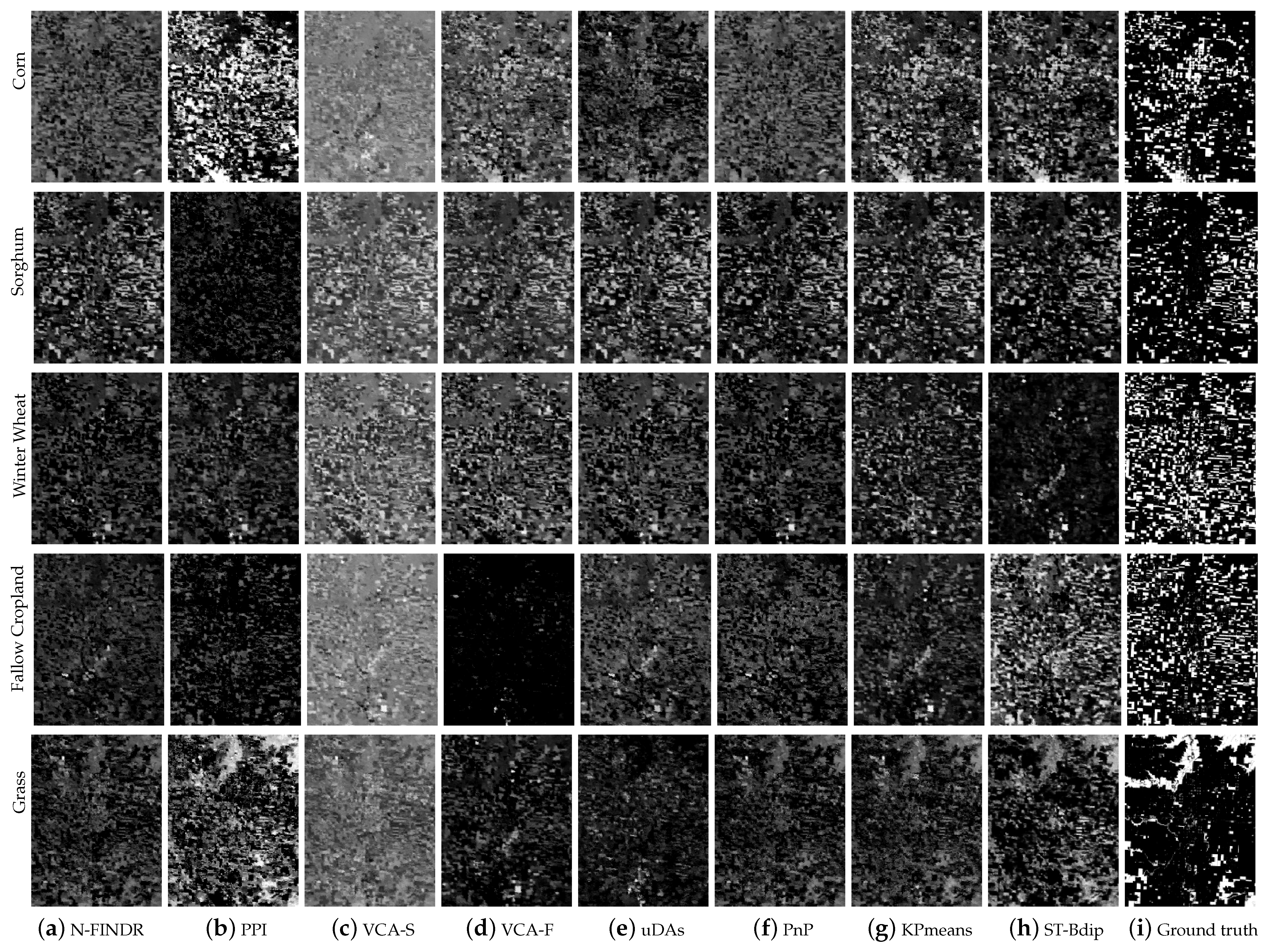

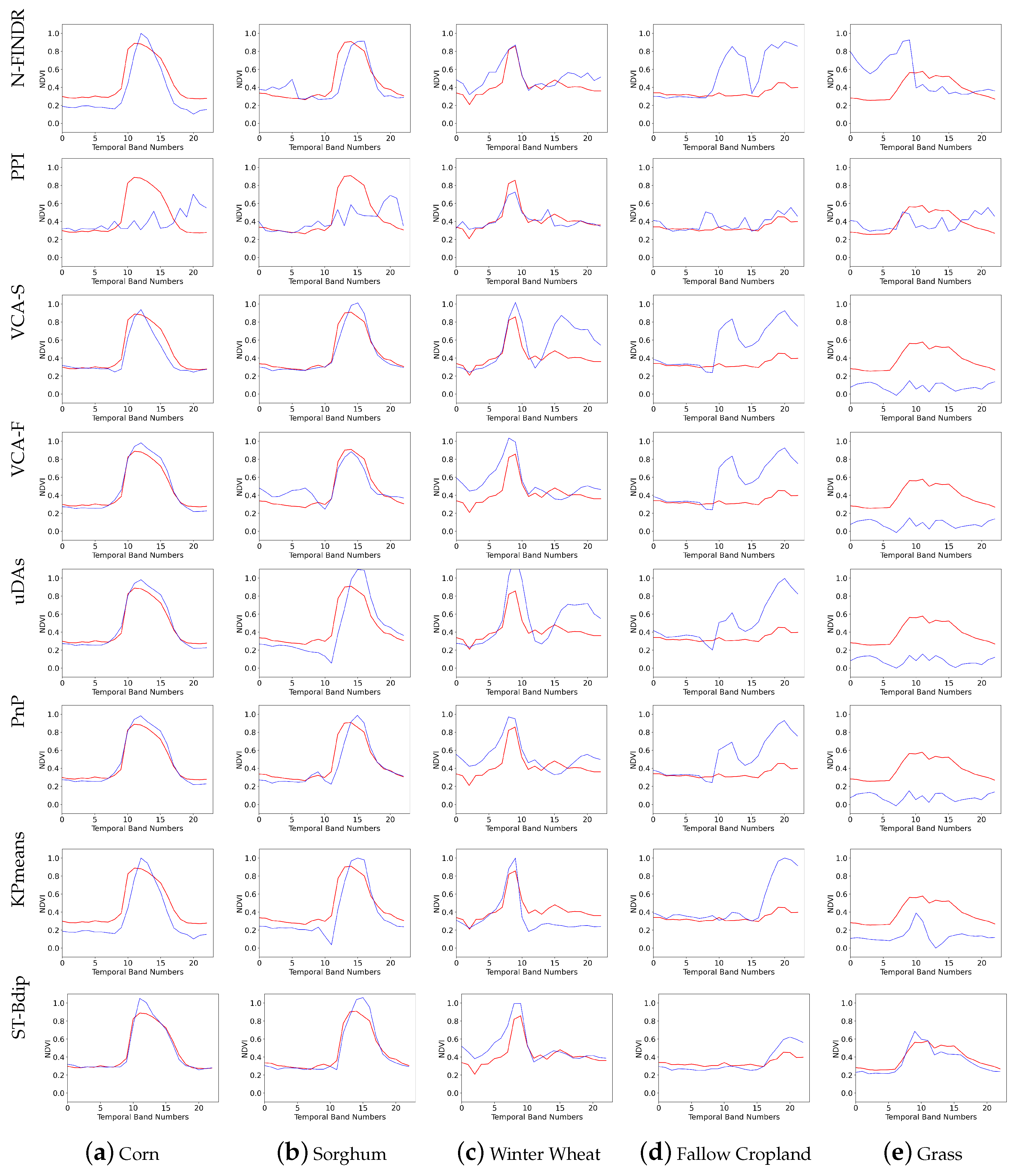

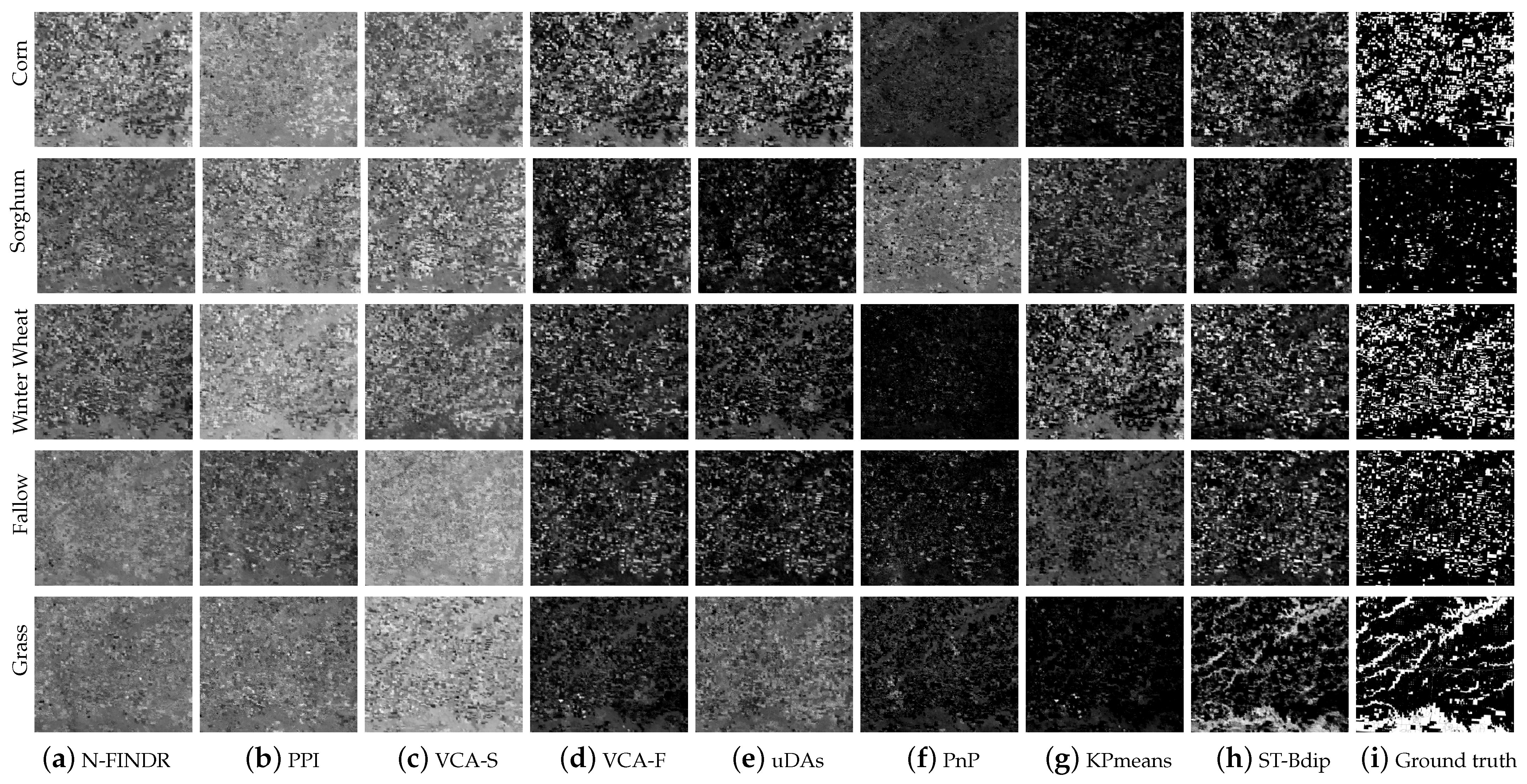

- The temporal mixture analysis (TMA) model is incorporated into the U-Net training process as the forward model, which lets the network exploit prior knowledge of the physical imaging process. Modeling the linear relationship between the tEMs and their abundances helps the U-Net better capture the information on different land cover types in the hyper-temporal images (HTIs).

- To handle the temporally varying noise in HTIs, the heterogeneous noise in NDVI data acquired in different periods is modeled by a multivariate Gaussian distribution.

- The ST-Bdip approach estimates the tEMs with the “Purified means” method, which can be interpreted as a conditional distribution of the tEMs given the estimated abundances. All of the above components are integrated into a Bayesian framework, and the expectation–maximization (EM) algorithm is used to solve the resulting maximum a posteriori (MAP) problem.

- In our experiments, the proposed ST-Bdip method is compared with traditional unmixing methods, namely N-FINDR, PPI, SUnSAL, FCLS, and KPmeans, and with deep learning (DL)-based unmixing methods, namely uDAs and PnP. Model performance is evaluated on both the extracted tEMs and the estimated abundances.
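The contributions above rest on a linear forward model and a Mahalanobis-type data term. The sketch below assumes the standard linear TMA form X ≈ MA (tEMs as the columns of M, per-pixel abundances as the columns of A) and zero-mean Gaussian noise with a temporal covariance Σ shared by all pixels. The function names, array sizes, and toy data are illustrative assumptions, not taken from the paper. At the true parameters, the average squared Mahalanobis distance should be close to the number of dates T, because each whitened residual is then a T-dimensional standard normal vector.

```python
import numpy as np


def tma_forward(M, A):
    """Linear TMA (illustrative): X_hat = M @ A.

    M : (T, K) temporal endmembers (tEMs), one NDVI curve per column.
    A : (K, N) abundances of the K tEMs for N pixels.
    Returns the (T, N) reconstructed NDVI time series.
    """
    return M @ A


def mahalanobis_loss(X, X_hat, Sigma):
    """Mean squared Mahalanobis distance between observed and reconstructed pixels.

    Sigma : (T, T) temporal noise covariance shared by all pixels (assumption).
    With an anisotropic Sigma, dates with larger noise variance are down-weighted.
    """
    R = X - X_hat                   # (T, N) residuals, one column per pixel
    Z = np.linalg.solve(Sigma, R)   # Sigma^{-1} R without forming the inverse
    return float(np.mean(np.sum(R * Z, axis=0)))


# Hypothetical toy data: 23 dates (one year of 16-day composites), 4 tEMs, 100 pixels.
rng = np.random.default_rng(0)
T, K, N = 23, 4, 100
M = rng.uniform(0.1, 0.9, size=(T, K))
A = rng.dirichlet(np.ones(K), size=N).T        # columns are nonnegative and sum to one
noise_sd = np.linspace(0.01, 0.05, T)          # assumed date-dependent noise levels
X = tma_forward(M, A) + noise_sd[:, None] * rng.standard_normal((T, N))
X_hat = tma_forward(M, A)
loss = mahalanobis_loss(X, X_hat, np.diag(noise_sd ** 2))
print(f"Mahalanobis loss at the true parameters: {loss:.2f} (about T = {T} expected)")
```

With Σ set to the identity, the loss reduces to the ordinary mean squared Euclidean residual, which is why a heterogeneous Σ is what allows noisy dates to be weighted differently.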

2. Dataset and Preprocessing

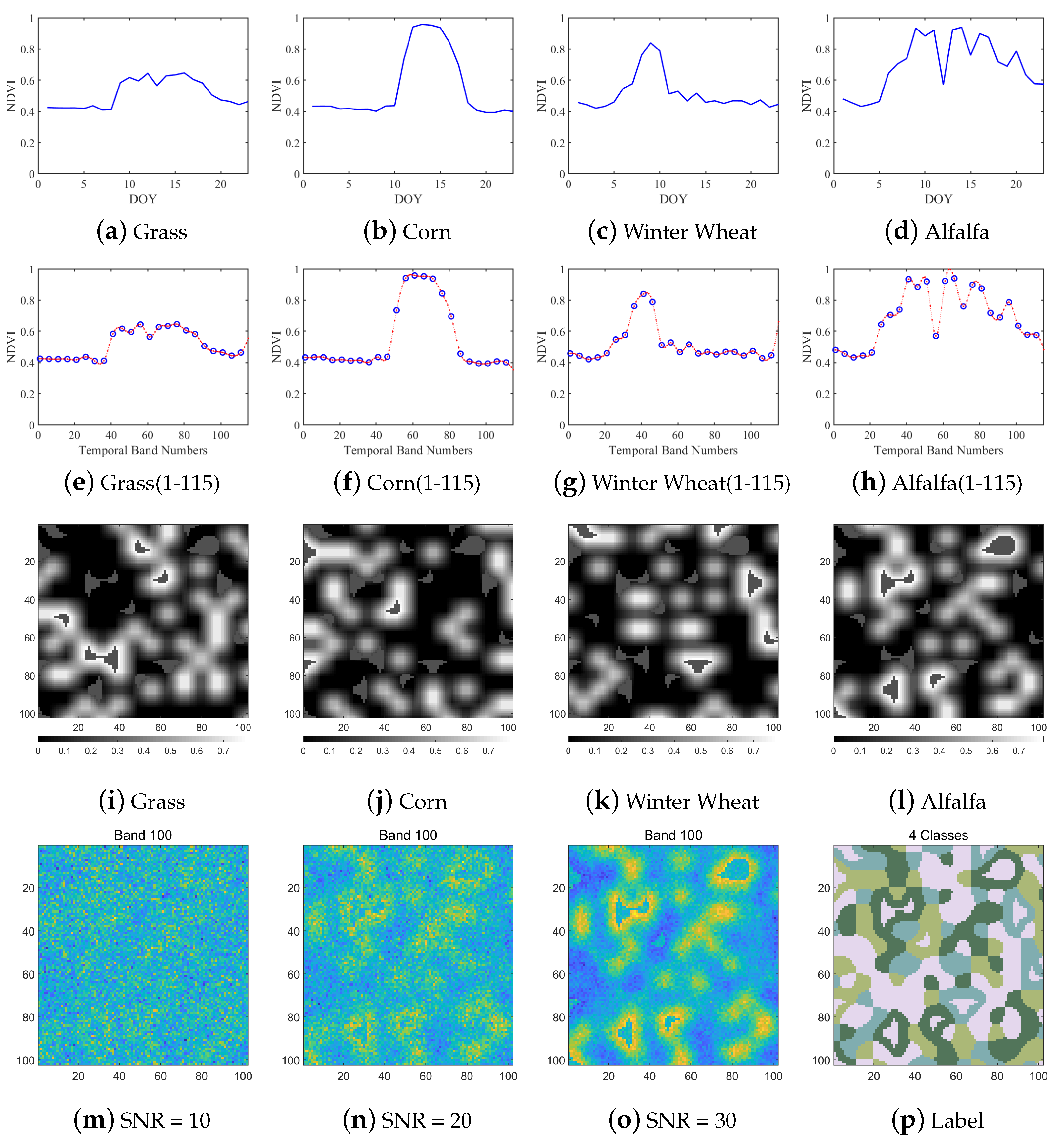

2.1. Simulated Dataset

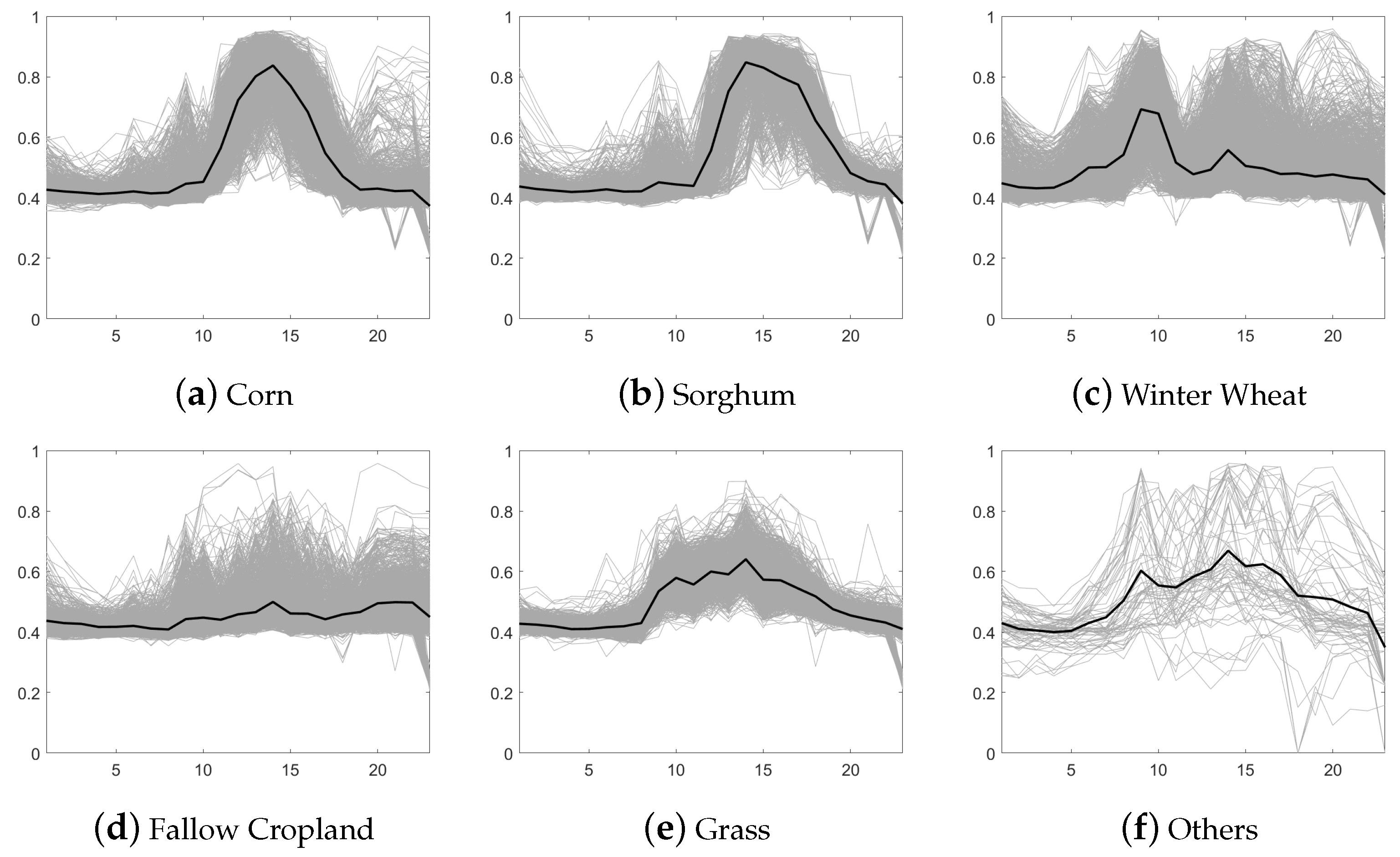
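The result tables report simulated experiments at SNR = 10, 20, and 30 with 4 tEMs, a 102 × 102 grid, and 115 bands. The generation procedure itself is not reproduced in this text; the sketch below only shows one common way to add white Gaussian noise at a target SNR, assuming the SNR is expressed in decibels as 10·log10(P_signal / P_noise). The random tEM curves and Dirichlet abundances are placeholders rather than the paper's simulated dataset, and both function names are hypothetical.

```python
import numpy as np


def add_noise_at_snr(X, snr_db, rng):
    """Return X plus white Gaussian noise scaled to the requested SNR (in dB)."""
    signal_power = np.mean(X ** 2)
    noise_power = signal_power / 10.0 ** (snr_db / 10.0)
    return X + np.sqrt(noise_power) * rng.standard_normal(X.shape)


def empirical_snr_db(X_clean, X_noisy):
    """SNR actually achieved, in dB."""
    return 10.0 * np.log10(np.mean(X_clean ** 2) / np.mean((X_noisy - X_clean) ** 2))


# Sizes from the simulated-data tables; the curves and abundances are placeholders.
rng = np.random.default_rng(42)
T, K, H, W = 115, 4, 102, 102
M = rng.uniform(0.1, 0.9, size=(T, K))           # placeholder tEM curves
A = rng.dirichlet(np.ones(K), size=H * W).T      # (K, H*W) abundances
X_clean = M @ A                                   # (T, H*W) noise-free time series
noisy = {snr: add_noise_at_snr(X_clean, snr, rng) for snr in (10, 20, 30)}
for snr, X_noisy in noisy.items():
    print(snr, round(empirical_snr_db(X_clean, X_noisy), 2))
```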

2.2. MODIS Dataset

2.2.1. Study Areas

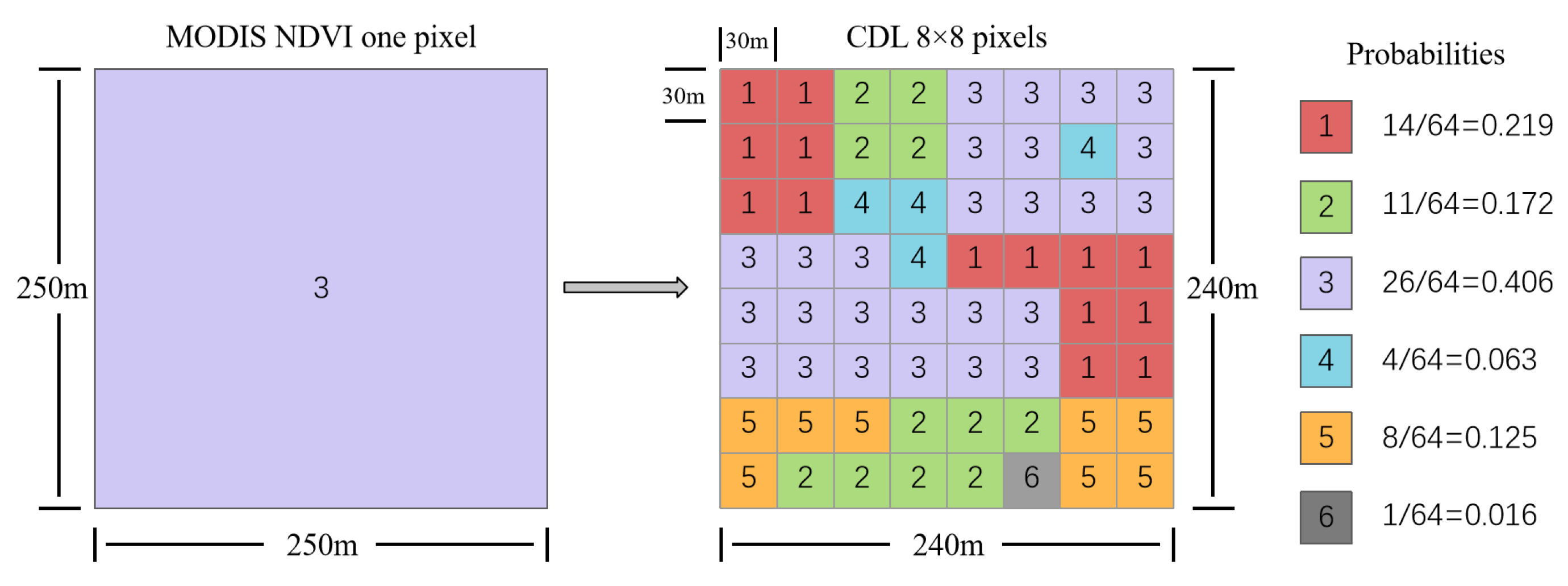

2.2.2. Data Preprocessing

2.2.3. Reference Selection

3. Methodology

3.1. Temporal Mixture Analysis

3.2. Noise Heterogeneity

3.3. ST-Bdip Framework

1. The abundances are estimated from the MODIS NDVI time-series data by an inverse model based on a U-Net with skip connections.

2. The initial tEMs are obtained with the vertex component analysis (VCA) algorithm.

3. Following the TMA model, the time-series image is reconstructed from the abundances of step 1 and the tEMs of step 2.

4. The conditional temporal distribution is represented by a multivariate Gaussian distribution with an anisotropic covariance matrix, and a Mahalanobis distance-based loss function is used for model optimization to handle the noise heterogeneity in remote sensing images. The model parameters are updated accordingly, yielding new abundance estimates.

5. The tEMs are re-estimated and refined with the “Purified means” method.

6. The tEMs and abundances are updated alternately through steps 4 and 5 over the EM iterations until convergence.
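Step 5 can be illustrated with a least-squares reading of the “Purified means” idea: for each tEM, the contributions of all other tEMs are subtracted from every pixel, and the tEM is re-estimated from these purified residuals, weighted by its abundances. This is a hedged sketch, not the authors' implementation. The update rule is the coordinate-wise least-squares solution under the linear TMA model, and `estimate_abundances` is a hypothetical placeholder for step 4 (the U-Net trained with the Mahalanobis loss), which is not reproduced here. If the noise covariance is shared by all pixels, its inverse cancels from the normal equations for a single tEM, so the same update also minimizes the Mahalanobis-weighted residual.

```python
import numpy as np


def purified_means_update(X, A, M, eps=1e-12):
    """One sweep of a purified-means style tEM update (illustrative).

    For each tEM j:
        r_i = x_i - sum_{k != j} a_ik m_k        (purified residual of pixel i)
        m_j = sum_i a_ij r_i / sum_i a_ij**2     (abundance-weighted LS fit)
    X : (T, N) time series, A : (K, N) abundances, M : (T, K) current tEMs.
    Columns are updated in order, so later columns see earlier updates.
    """
    M = M.copy()
    for j in range(M.shape[1]):
        # Remove every tEM except j from all pixels at once.
        R = X - M @ A + np.outer(M[:, j], A[j])
        w = A[j]
        M[:, j] = (R @ w) / max(float(w @ w), eps)
    return M


def alternate_updates(X, M_init, estimate_abundances, n_iter=100):
    """EM-style alternation of step 4 (abundances) and step 5 (tEMs)."""
    M = M_init
    A = None
    for _ in range(n_iter):
        A = estimate_abundances(X, M)        # step 4 (hypothetical stand-in)
        M = purified_means_update(X, A, M)   # step 5
    return A, M


# Toy check with an oracle abundance estimator: the tEMs should be recovered.
rng = np.random.default_rng(1)
T, K, N = 23, 4, 500
M_true = rng.uniform(0.1, 0.9, size=(T, K))
A_true = rng.dirichlet(np.ones(K), size=N).T
X = M_true @ A_true
M_init = M_true + 0.1 * rng.standard_normal((T, K))


def oracle_abundances(X, M):
    # Placeholder for the U-Net of step 4: simply returns the true abundances.
    return A_true


A_hat, M_hat = alternate_updates(X, M_init, oracle_abundances, n_iter=200)
print("max tEM error:", np.abs(M_hat - M_true).max())
```

Because each column update exactly minimizes the reconstruction error with the other tEMs held fixed, repeated sweeps behave like Gauss–Seidel iterations on the normal equations and converge when the abundance Gram matrix is well conditioned.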

4. Experiments and Results

4.1. Comparison Methods and Parameters

4.2. Numerical Measures

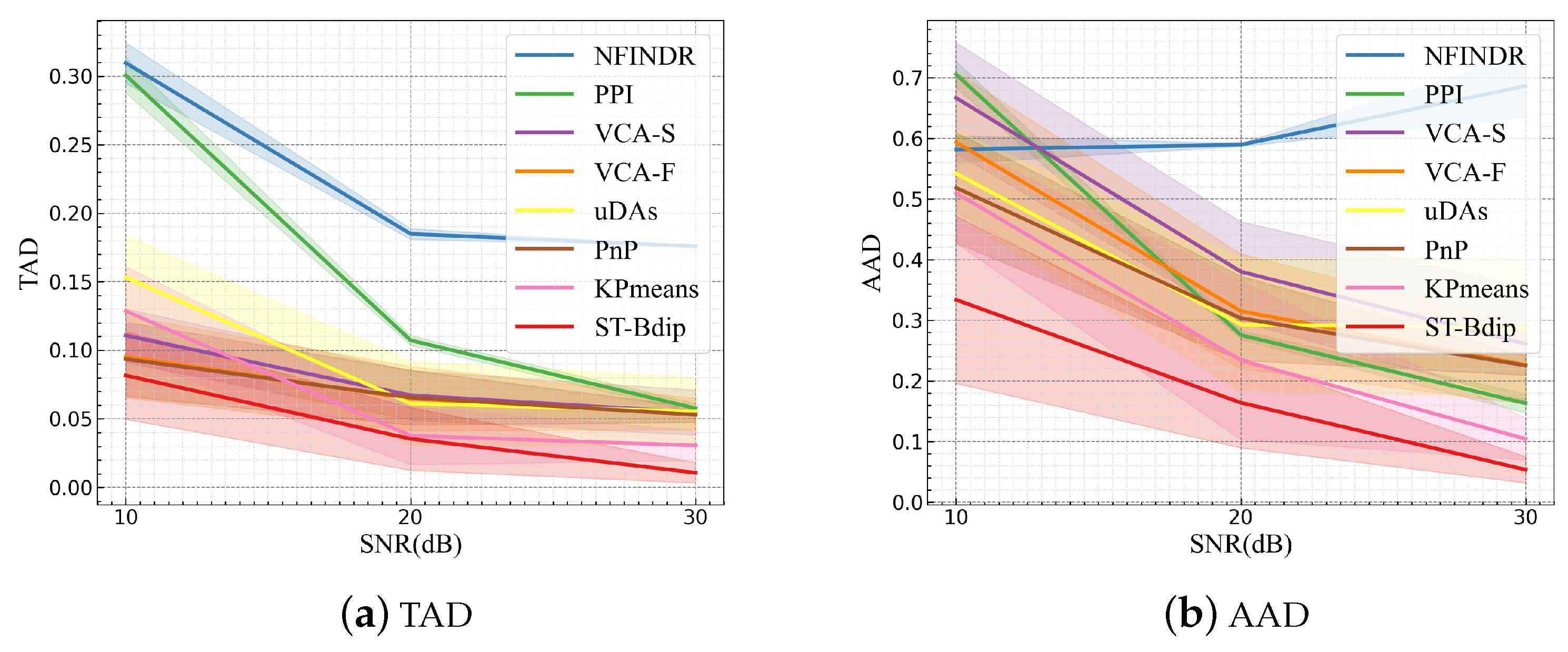

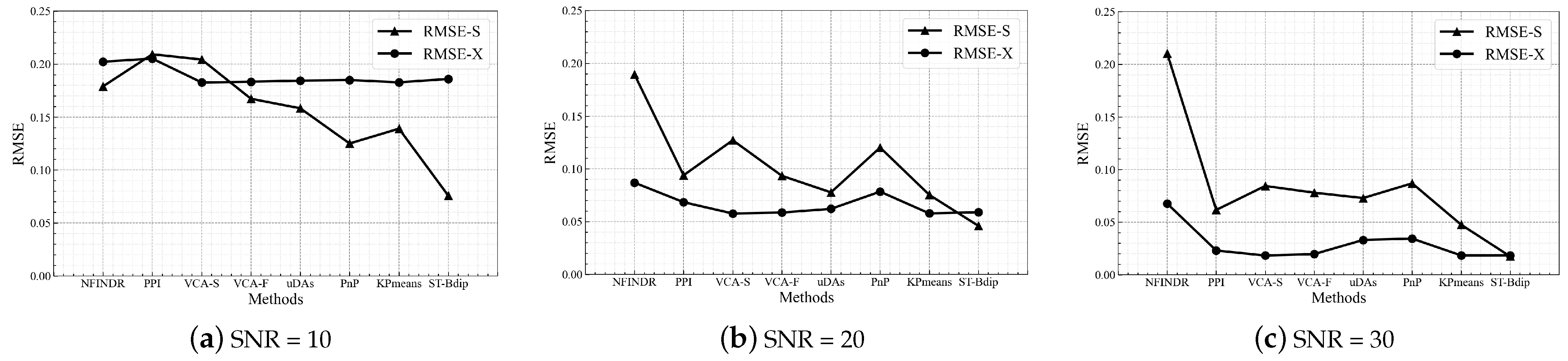
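The result tables report TAD, AAD, TID, AID, 1-SSIM, and RMSE values, but their defining equations are not recoverable from this text. The sketch below therefore assumes the usual unmixing definitions by analogy with the spectral angle distance and spectral information divergence: the mean angle (in radians) between matched columns, and a symmetric Kullback–Leibler divergence between columns normalized to sum to one. For TAD and TID the columns would be tEM curves (T × K); for AAD and AID they would be per-pixel abundance vectors (K × N). It further assumes that estimated and reference tEMs are already matched and that inputs to the divergence are nonnegative. A generic RMSE is included because the exact quantities behind RMSE-S and RMSE-X are not stated here.

```python
import numpy as np


def angle_distance(U, V):
    """Mean angle in radians between matching columns of U and V.

    TAD-style: U, V are (T, K) tEM matrices; AAD-style: (K, N) abundance maps.
    """
    num = np.sum(U * V, axis=0)
    den = np.linalg.norm(U, axis=0) * np.linalg.norm(V, axis=0)
    return float(np.mean(np.arccos(np.clip(num / den, -1.0, 1.0))))


def information_divergence(U, V, eps=1e-12):
    """Mean symmetric KL divergence between matching columns (TID/AID-style).

    Each column is normalized to sum to one; eps guards against log(0).
    Assumes nonnegative inputs.
    """
    P = U / np.sum(U, axis=0, keepdims=True) + eps
    Q = V / np.sum(V, axis=0, keepdims=True) + eps
    # (P - Q) * log(P / Q) summed over rows equals KL(P||Q) + KL(Q||P).
    return float(np.mean(np.sum((P - Q) * np.log(P / Q), axis=0)))


def rmse(U, V):
    """Root-mean-square error over all entries."""
    return float(np.sqrt(np.mean((U - V) ** 2)))


# Usage on tiny hand-made inputs: 3 dates, 2 matched tEMs.
E_ref = np.array([[0.2, 0.8], [0.6, 0.3], [0.9, 0.1]])
E_est = np.array([[0.25, 0.7], [0.55, 0.35], [0.85, 0.2]])
print(angle_distance(E_ref, E_est), information_divergence(E_ref, E_est), rmse(E_ref, E_est))
```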

4.3. Test on Simulated Dataset

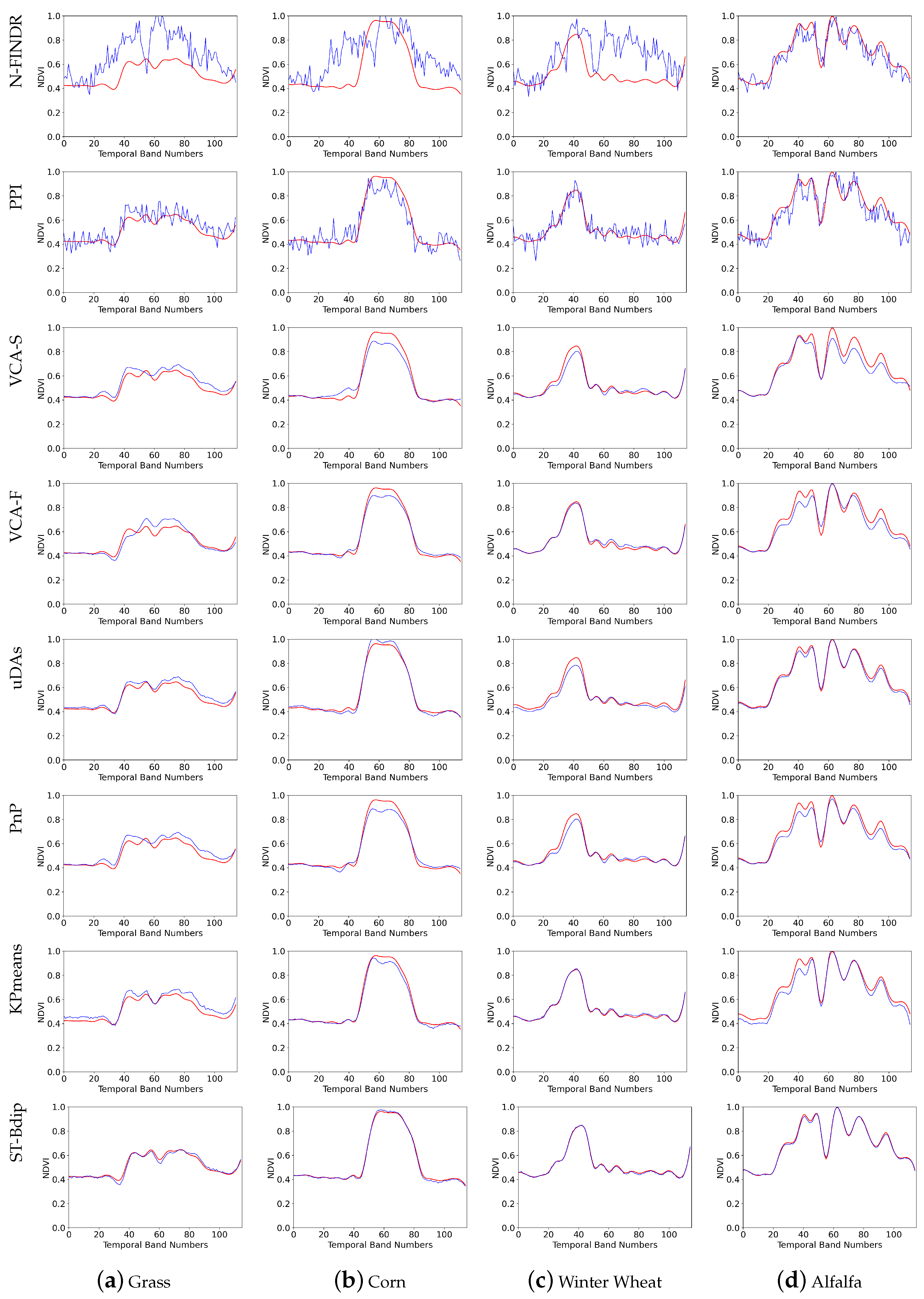

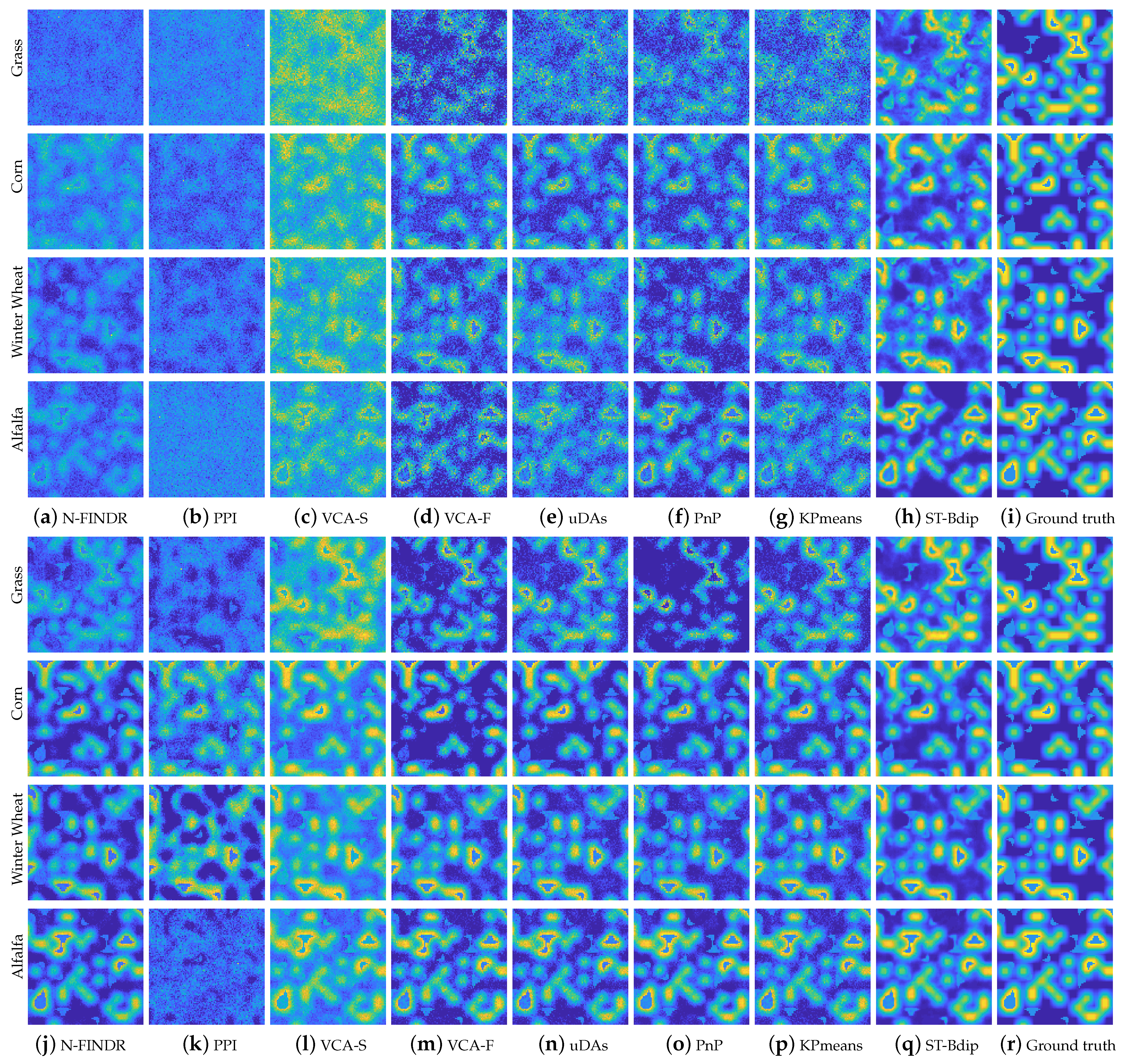

4.4. Test on MODIS NDVI Dataset

5. Discussion

5.1. Advantages of the Proposed ST-Bdip Method

5.2. Data Complexity Analysis

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Cui, J.; Zhang, X.; Luo, M. Combining Linear pixel unmixing and STARFM for spatiotemporal fusion of Gaofen-1 wide field of view imagery and MODIS imagery. Remote Sens. 2018, 10, 1047.

- Shen, M.; Tang, Y.; Chen, J.; Zhu, X.; Zheng, Y. Influences of temperature and precipitation before the growing season on spring phenology in grasslands of the central and eastern Qinghai-Tibetan Plateau. Agric. For. Meteorol. 2011, 151, 1711–1722.

- Asokan, A.; Anitha, J. Change detection techniques for remote sensing applications: A survey. Earth Sci. Inform. 2019, 12, 143–160.

- Goenaga, M.A.; Torres-Madronero, M.C.; Velez-Reyes, M.; Van Bloem, S.J.; Chinea, J.D. Unmixing analysis of a time series of Hyperion images over the Guánica dry forest in Puerto Rico. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2012, 6, 329–338.

- Wang, Q.; Ding, X.; Tong, X.; Atkinson, P.M. Spatio-temporal spectral unmixing of time-series images. Remote Sens. Environ. 2021, 259, 112407.

- Lobell, D.B.; Asner, G.P. Cropland distributions from temporal unmixing of MODIS data. Remote Sens. Environ. 2004, 93, 412–422.

- Wang, Q.; Peng, K.; Tang, Y.; Tong, X.; Atkinson, P.M. Blocks-removed spatial unmixing for downscaling MODIS images. Remote Sens. Environ. 2021, 256, 112325.

- Bioucas-Dias, J.M.; Plaza, A.; Dobigeon, N.; Parente, M.; Du, Q.; Gader, P.; Chanussot, J. Hyperspectral unmixing overview: Geometrical, statistical, and sparse regression-based approaches. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2012, 5, 354–379.

- Foody, G.M. Relating the land-cover composition of mixed pixels to artificial neural network classification output. Photogramm. Eng. Remote Sens. 1996, 62, 491–498.

- Adams, J.B.; Smith, M.O.; Johnson, P.E. Spectral mixture modeling: A new analysis of rock and soil types at the Viking Lander 1 site. J. Geophys. Res. Solid Earth 1986, 91, 8098–8112.

- Liu, J.; Cao, W. Based on linear spectral mixture model (LSMM) Unmixing remote sensing image. In Proceedings of the Third International Conference on Digital Image Processing (ICDIP 2011), Chengdu, China, 15–17 April 2011; SPIE: Paris, France, 2011; Volume 8009, pp. 353–356.

- Ma, T.; Li, R.; Svenning, J.C.; Song, X. Linear spectral unmixing using endmember coexistence rules and spatial correlation. Int. J. Remote Sens. 2018, 39, 3512–3536.

- Tao, X.; Paoletti, M.E.; Han, L.; Wu, Z.; Ren, P.; Plaza, J.; Plaza, A.; Haut, J.M. A New Deep Convolutional Network for Effective Hyperspectral Unmixing. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2022, 15, 6999–7012.

- Nascimento, J.M.; Dias, J.M. Vertex component analysis: A fast algorithm to unmix hyperspectral data. IEEE Trans. Geosci. Remote Sens. 2005, 43, 898–910.

- Winter, M.E. N-FINDR: An algorithm for fast autonomous spectral end-member determination in hyperspectral data. In Imaging Spectrometry V; SPIE: Paris, France, 1999; Volume 3753, pp. 266–275.

- Chang, C.I.; Plaza, A. A fast iterative algorithm for implementation of pixel purity index. IEEE Geosci. Remote Sens. Lett. 2006, 3, 63–67.

- Xu, L.; Li, J.; Wong, A.; Peng, J. Kp-means: A clustering algorithm of k “purified” means for hyperspectral endmember estimation. IEEE Geosci. Remote Sens. Lett. 2014, 11, 1787–1791.

- Zhao, C.; Zhao, G.; Jia, X. Hyperspectral image unmixing based on fast kernel archetypal analysis. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2016, 10, 331–346.

- Feng, X.R.; Li, H.C.; Wang, R.; Du, Q.; Jia, X.; Plaza, A.J. Hyperspectral unmixing based on nonnegative matrix factorization: A comprehensive review. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2022, 15, 4414–4436.

- Heinz, D.C. Fully constrained least squares linear spectral mixture analysis method for material quantification in hyperspectral imagery. IEEE Trans. Geosci. Remote Sens. 2001, 39, 529–545.

- Bioucas-Dias, J.M.; Figueiredo, M.A. Alternating direction algorithms for constrained sparse regression: Application to hyperspectral unmixing. In Proceedings of the 2nd Workshop on Hyperspectral Image and Signal Processing: Evolution in Remote Sensing, Reykjavik, Iceland, 14–16 June 2010; IEEE: New York, NY, USA, 2010; pp. 1–4.

- Xu, X.; Shi, Z.; Pan, B. A supervised abundance estimation method for hyperspectral unmixing. Remote Sens. Lett. 2018, 9, 383–392.

- Guo, R.; Wang, W.; Qi, H. Hyperspectral image unmixing using autoencoder cascade. In Proceedings of the 7th Workshop on Hyperspectral Image and Signal Processing: Evolution in Remote Sensing (WHISPERS), Tokyo, Japan, 2–5 June 2015; IEEE: New York, NY, USA, 2015; pp. 1–4.

- Qu, Y.; Qi, H. UDAS: An untied denoising autoencoder with sparsity for spectral unmixing. IEEE Trans. Geosci. Remote Sens. 2018, 57, 1698–1712.

- Gao, L.; Han, Z.; Hong, D.; Zhang, B.; Chanussot, J. CyCU-Net: Cycle-consistency unmixing network by learning cascaded autoencoders. IEEE Trans. Geosci. Remote Sens. 2021, 60, 1–14.

- Qi, L.; Li, J.; Wang, Y.; Lei, M.; Gao, X. Deep spectral convolution network for hyperspectral image unmixing with spectral library. Signal Process. 2020, 176, 107672.

- Zhang, X.; Sun, Y.; Zhang, J.; Wu, P.; Jiao, L. Hyperspectral unmixing via deep convolutional neural networks. IEEE Geosci. Remote Sens. Lett. 2018, 15, 1755–1759.

- Palsson, B.; Ulfarsson, M.O.; Sveinsson, J.R. Convolutional autoencoder for spectral–spatial hyperspectral unmixing. IEEE Trans. Geosci. Remote Sens. 2020, 59, 535–549.

- Yan, C.; Fan, X.; Fan, J.; Wang, N. Improved U-Net remote sensing classification algorithm based on Multi-Feature Fusion Perception. Remote Sens. 2022, 14, 1118.

- He, N.; Fang, L.; Plaza, A. Hybrid first and second order attention Unet for building segmentation in remote sensing images. Sci. China Inf. Sci. 2020, 63, 140305.

- Zhao, M.; Wang, X.; Chen, J.; Chen, W. A Plug-and-Play Priors Framework for Hyperspectral Unmixing. IEEE Trans. Geosci. Remote Sens. 2021, 60, 5501213.

- Diao, C.; Wang, L. Temporal partial unmixing of exotic salt cedar using Landsat time series. Remote Sens. Lett. 2016, 7, 466–475.

- Borsoi, R.A.; Imbiriba, T.; Bermudez, J.C.M.; Richard, C.; Chanussot, J.; Drumetz, L.; Tourneret, J.Y.; Zare, A.; Jutten, C. Spectral variability in hyperspectral data unmixing: A comprehensive review. IEEE Geosci. Remote Sens. Mag. 2021, 9, 223–270.

- Hong, D.; Gao, L.; Yao, J.; Yokoya, N.; Chanussot, J.; Heiden, U.; Zhang, B. Endmember-guided unmixing network (EGU-Net): A general deep learning framework for self-supervised hyperspectral unmixing. IEEE Trans. Neural Netw. Learn. Syst. 2021, 33, 6518–6531.

- Su, Y.; Li, J.; Plaza, A.; Marinoni, A.; Gamba, P.; Chakravortty, S. Daen: Deep autoencoder networks for hyperspectral unmixing. IEEE Trans. Geosci. Remote Sens. 2019, 57, 4309–4321.

- Chen, Y.; Song, X.; Wang, S.; Huang, J.; Mansaray, L.R. Impacts of spatial heterogeneity on crop area mapping in Canada using MODIS data. ISPRS J. Photogramm. Remote Sens. 2016, 119, 451–461.

- Marchane, A.; Jarlan, L.; Hanich, L.; Boudhar, A.; Gascoin, S.; Tavernier, A.; Filali, N.; Le Page, M.; Hagolle, O.; Berjamy, B. Assessment of daily MODIS snow cover products to monitor snow cover dynamics over the Moroccan Atlas mountain range. Remote Sens. Environ. 2015, 160, 72–86.

- Portillo-Quintero, C.; Sanchez, A.; Valbuena, C.; Gonzalez, Y.; Larreal, J. Forest cover and deforestation patterns in the Northern Andes (Lake Maracaibo Basin): A synoptic assessment using MODIS and Landsat imagery. Appl. Geogr. 2012, 35, 152–163.

- Lloyd, D. A phenological classification of terrestrial vegetation cover using shortwave vegetation index imagery. Int. J. Remote Sens. 1990, 11, 2269–2279.

- Wardlow, B.D.; Egbert, S.L.; Kastens, J.H. Analysis of time-series MODIS 250 m vegetation index data for crop classification in the US Central Great Plains. Remote Sens. Environ. 2007, 108, 290–310.

- Son, N.T.; Chen, C.F.; Chen, C.R.; Duc, H.N.; Chang, L.Y. A phenology-based classification of time-series MODIS data for rice crop monitoring in Mekong Delta, Vietnam. Remote Sens. 2013, 6, 135–156.

- Sun, C.; Li, J.; Cao, L.; Liu, Y.; Jin, S.; Zhao, B. Evaluation of Vegetation Index-Based Curve Fitting Models for Accurate Classification of Salt Marsh Vegetation Using Sentinel-2 Time-Series. Sensors 2020, 20, 5551.

- Piwowar, J.M.; Peddle, D.R.; LeDrew, E.F. Temporal mixture analysis of arctic sea ice imagery: A new approach for monitoring environmental change. Remote Sens. Environ. 1998, 63, 195–207.

- Yang, F.; Matsushita, B.; Fukushima, T.; Yang, W. Temporal mixture analysis for estimating impervious surface area from multi-temporal MODIS NDVI data in Japan. ISPRS J. Photogramm. Remote Sens. 2012, 72, 90–98.

- Li, W.; Wu, C. Phenology-based temporal mixture analysis for estimating large-scale impervious surface distributions. Int. J. Remote Sens. 2014, 35, 779–795.

- Zhuo, L.; Shi, Q.; Tao, H.; Zheng, J.; Li, Q. An improved temporal mixture analysis unmixing method for estimating impervious surface area based on MODIS and DMSP-OLS data. ISPRS J. Photogramm. Remote Sens. 2018, 142, 64–77.

- Torres-Madronero, M.C.; Velez-Reyes, M.; Van Bloem, S.J.; Chinea, J.D. Multi-temporal unmixing analysis of Hyperion images over the Guanica Dry Forest. In Proceedings of the 3rd Workshop on Hyperspectral Image and Signal Processing: Evolution in Remote Sensing (WHISPERS), Lisbon, Portugal, 6–9 June 2011; IEEE: New York, NY, USA, 2011; pp. 1–4.

- Chi, J.; Kim, H.C.; Kang, S.H. Machine learning-based temporal mixture analysis of hypertemporal Antarctic sea ice data. Remote Sens. Lett. 2016, 7, 190–199.

- Liu, Z.; Hu, Q.; Tan, J.; Zou, J. Regional scale mapping of fractional rice cropping change using a phenology-based temporal mixture analysis. Int. J. Remote Sens. 2019, 40, 2703–2716.

- Bruce, L.M.; Mathur, A.; Byrd, J.D., Jr. Denoising and wavelet-based feature extraction of MODIS multi-temporal vegetation signatures. GIScience Remote Sens. 2006, 43, 67–77.

- Hermance, J.F.; Jacob, R.W.; Bradley, B.A.; Mustard, J.F. Extracting phenological signals from multiyear AVHRR NDVI time series: Framework for applying high-order annual splines with roughness damping. IEEE Trans. Geosci. Remote Sens. 2007, 45, 3264–3276.

- Liang, S.z.; Ma, W.d.; Sui, X.y.; Yao, H.m.; Li, H.z.; Liu, T.; Hou, X.h.; Wang, M. Extracting the spatiotemporal pattern of cropping systems from NDVI time series using a combination of the spline and HANTS Algorithms: A case study for Shandong Province. Can. J. Remote Sens. 2017, 43, 1–15.

- Shou-Zhen, L.; Peng, S.; Qian-Guo, X. A comparison between the algorithms for removing cloud pixel from MODIS NDVI time series data. Remote Sens. Nat. Resour. 2011, 23, 33–36.

- Shao, Y.; Lunetta, R.S.; Wheeler, B.; Iiames, J.S.; Campbell, J.B. An evaluation of time-series smoothing algorithms for land-cover classifications using MODIS-NDVI multi-temporal data. Remote Sens. Environ. 2016, 174, 258–265.

- Zhong, L.; Hu, L.; Zhou, H.; Tao, X. Deep learning based winter wheat mapping using statistical data as ground references in Kansas and northern Texas, US. Remote Sens. Environ. 2019, 233, 111411.

- Chen, Y.; Lu, D.; Moran, E.; Batistella, M.; Dutra, L.V.; Sanches, I.D.; da Silva, R.F.B.; Huang, J.; Luiz, A.J.B.; de Oliveira, M.A.F. Mapping croplands, cropping patterns, and crop types using MODIS time-series data. Int. J. Appl. Earth Obs. Geoinf. 2018, 69, 133–147.

- Barkau, R.L. UNET: One-Dimensional Unsteady Flow through a Full Network of Open Channels. User’s Manual; Technical Report; Hydrologic Engineering Center: Davis, CA, USA, 1996.

- Ng, S.K.; Krishnan, T.; McLachlan, G.J. The EM algorithm. In Handbook of Computational Statistics: Concepts and Methods; Springer: Berlin/Heidelberg, Germany, 2012; pp. 139–172.

- Lebrun, M.; Buades, A.; Morel, J.M. A nonlocal Bayesian image denoising algorithm. SIAM J. Imaging Sci. 2013, 6, 1665–1688.

- Nascimento, J.M.; Bioucas-Dias, J.M. Nonlinear mixture model for hyperspectral unmixing. In Image and Signal Processing for Remote Sensing XV; SPIE: Paris, France, 2009; Volume 7477, pp. 157–164.

- Winter, M.E. A proof of the N-FINDR algorithm for the automated detection of endmembers in a hyperspectral image. In Algorithms and Technologies for Multispectral, Hyperspectral, and Ultraspectral Imagery X; SPIE: Paris, France, 2004; Volume 5425, pp. 31–41.

- Keleşoğlu, G.; Ertürk, A.; Erten, E. Analysis of mucilage levels build up in the sea of Marmara based on unsupervised unmixing of worldview-3 data. In Proceedings of the IEEE Mediterranean and Middle-East Geoscience and Remote Sensing Symposium (M2GARSS), Istanbul, Turkey, 7–9 March 2022; IEEE: New York, NY, USA, 2022; pp. 102–105.

- Ishidoshiro, N.; Yamaguchi, Y.; Noda, S.; Asano, Y.; Kondo, T.; Kawakami, Y.; Mitsuishi, M.; Nakamura, H. Geological mapping by combining spectral unmixing and cluster analysis for hyperspectral data. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2016, 41, 431–435.

- Lopez, A.C.C.; Mora, M.M.G.; Torres-Madroñero, M.C.; Velez-Reyes, M. Using hyperspectral unmixing for the analysis of very high spatial resolution hyperspectral imagery. In Algorithms, Technologies, and Applications for Multispectral and Hyperspectral Imaging XXIX; SPIE: Paris, France, 2023; Volume 12519, pp. 277–284.

- Martínez, P.J.; Pérez, R.M.; Plaza, A.; Aguilar, P.L.; Cantero, M.C.; Plaza, J. Endmember extraction algorithms from hyperspectral images. Ann. Geophys. 2006, 49, 93–101.

- Wang, R.; Li, H.C.; Liao, W.; Pižurica, A. Double reweighted sparse regression for hyperspectral unmixing. In Proceedings of the IEEE International Geoscience and Remote Sensing Symposium (IGARSS), Beijing, China, 10–15 July 2016; IEEE: New York, NY, USA, 2016; pp. 6986–6989.

- Horé, A.; Ziou, D. Image Quality Metrics: PSNR vs. SSIM. In Proceedings of the 20th International Conference on Pattern Recognition, Istanbul, Turkey, 23–26 August 2010; pp. 2366–2369.

- Palsson, B.; Sigurdsson, J.; Sveinsson, J.R.; Ulfarsson, M.O. Hyperspectral unmixing using a neural network autoencoder. IEEE Access 2018, 6, 25646–25656.

- Sun, W.; Yang, G.; Peng, J.; Meng, X.; He, K.; Li, W.; Li, H.C.; Du, Q. A multiscale spectral features graph fusion method for hyperspectral band selection. IEEE Trans. Geosci. Remote Sens. 2021, 60, 5513712.

- Ozdogan, M. The spatial distribution of crop types from MODIS data: Temporal unmixing using Independent Component Analysis. Remote Sens. Environ. 2010, 114, 1190–1204.

| Data | Spatial Resolution | Time Period | Projection | Download Source |
|---|---|---|---|---|
| MOD13Q1 | 250 m | 2018/01/01–2018/12/31 | Sinusoidal projection | https://ladsweb.modaps.eosdis.nasa.gov/ (accessed on 1 June 2023) |
| CDL | 30 m | 2018 | Albers Equal Area Conic projection | https://nassgeodata.gmu.edu/CropScape/ (accessed on 1 June 2023) |

SNR = 10, 4 tEMs, 102 × 102 size, 115 bands

| Method | TAD | AAD | TID | AID | 1-SSIM |
|---|---|---|---|---|---|
| N-FINDR | 0.3096 ± 0.02 | 0.5818 ± 0.03 | 0.1320 ± 0.02 | 4.5547 ± 0.36 | 0.5473 |
| PPI | 0.3006 ± 0.01 | 0.7055 ± 0.03 | 0.1101 ± 0.01 | 5.5562 ± 0.33 | 0.7019 |
| VCA-S | 0.1108 ± 0.03 | 0.6669 ± 0.18 | 0.0170 ± 0.01 | 5.1622 ± 4.00 | 0.4683 |
| VCA-F | 0.0953 ± 0.04 | 0.5936 ± 0.13 | 0.0137 ± 0.01 | 6.3974 ± 3.47 | 0.3889 |
| uDAs | 0.1531 ± 0.04 | 0.5420 ± 0.09 | 0.0355 ± 0.02 | 4.1086 ± 1.22 | 0.3990 |
| PnP | 0.0936 ± 0.04 | 0.5186 ± 0.13 | 0.0123 ± 0.01 | 4.4523 ± 1.97 | 0.2872 |
| KPmeans | 0.1286 ± 0.04 | 0.5098 ± 0.09 | 0.0241 ± 0.01 | 3.8117 ± 1.30 | 0.3343 |
| ST-Bdip | 0.0816 ± 0.04 | 0.3339 ± 0.15 | 0.0110 ± 0.01 | 2.1410 ± 1.10 | 0.1387 |

SNR = 20, 4 tEMs, 102 × 102 size, 115 bands

| Method | TAD | AAD | TID | AID | 1-SSIM |
|---|---|---|---|---|---|
| N-FINDR | 0.1849 ± 0.01 | 0.5896 ± 0.05 | 0.0404 ± 0.00 | 5.0966 ± 0.72 | 0.5711 |
| PPI | 0.1073 ± 0.01 | 0.2756 ± 0.02 | 0.0132 ± 0.00 | 1.7968 ± 0.25 | 0.1669 |
| VCA-S | 0.0672 ± 0.03 | 0.3803 ± 0.19 | 0.0056 ± 0.00 | 1.7978 ± 2.55 | 0.1965 |
| VCA-F | 0.0648 ± 0.03 | 0.3151 ± 0.10 | 0.0052 ± 0.01 | 2.0104 ± 1.23 | 0.1961 |
| uDAs | 0.0612 ± 0.04 | 0.2932 ± 0.12 | 0.0072 ± 0.01 | 1.9552 ± 1.68 | 0.1531 |
| PnP | 0.0655 ± 0.03 | 0.3035 ± 0.10 | 0.0055 ± 0.01 | 2.1320 ± 1.34 | 0.1763 |
| KPmeans | 0.0376 ± 0.02 | 0.2341 ± 0.13 | 0.0025 ± 0.00 | 1.5301 ± 1.88 | 0.1217 |
| ST-Bdip | 0.0354 ± 0.02 | 0.1640 ± 0.08 | 0.0032 ± 0.00 | 1.0483 ± 0.53 | 0.0658 |

SNR = 30, 4 tEMs, 102 × 102 size, 115 bands

| Method | TAD | AAD | TID | AID | 1-SSIM |
|---|---|---|---|---|---|
| N-FINDR | 0.1758 ± 0.00 | 0.6864 ± 0.08 | 0.0391 ± 0.00 | 9.4483 ± 2.97 | 0.4656 |
| PPI | 0.0577 ± 0.00 | 0.1632 ± 0.03 | 0.0036 ± 0.00 | 0.5543 ± 0.24 | 0.0777 |
| VCA-S | 0.0546 ± 0.02 | 0.2612 ± 0.18 | 0.0036 ± 0.00 | 1.0269 ± 2.36 | 0.1553 |
| VCA-F | 0.0537 ± 0.02 | 0.2264 ± 0.07 | 0.0036 ± 0.00 | 1.2240 ± 0.87 | 0.1064 |
| uDAs | 0.0554 ± 0.03 | 0.2874 ± 0.12 | 0.0053 ± 0.01 | 1.8998 ± 1.79 | 0.1278 |
| PnP | 0.0530 ± 0.01 | 0.2255 ± 0.05 | 0.0031 ± 0.00 | 1.2114 ± 0.57 | 0.1435 |
| KPmeans | 0.0306 ± 0.01 | 0.1044 ± 0.04 | 0.0013 ± 0.00 | 0.3667 ± 0.28 | 0.0539 |
| ST-Bdip | 0.0107 ± 0.01 | 0.0536 ± 0.02 | 0.0003 ± 0.00 | 0.2663 ± 0.21 | 0.0120 |

| Methods | TAD | AAD | TID | AID | RMSE-S | RMSE-X |
|---|---|---|---|---|---|---|
| N-FINDR | 0.2757 ± 0.05 | 0.9939 ± 0.04 | 0.3208 ± 0.01 | 15.0797 ± 0.99 | 0.2911 | 0.0576 |
| PPI | 0.3007 ± 0.06 | 1.0194 ± 0.09 | 0.9141 ± 0.44 | 16.3344 ± 2.42 | 0.2969 | 0.0671 |
| VCA-S | 0.2463 ± 0.04 | 1.0092 ± 0.09 | 0.0796 ± 0.03 | 49.9739 ± 16.47 | 0.2865 | 0.0359 |
| VCA-F | 0.2541 ± 0.05 | 0.9612 ± 0.05 | 0.0832 ± 0.03 | 14.8227 ± 1.90 | 0.3019 | 0.0473 |
| uDAs | 0.2943 ± 0.09 | 0.9452 ± 0.07 | 0.3228 ± 0.55 | 15.1977 ± 2.02 | 0.3082 | 0.0477 |
| PnP | 0.2644 ± 0.08 | 0.9668 ± 0.09 | 0.0871 ± 0.05 | 16.2128 ± 3.31 | 0.2804 | 0.0559 |
| KPmeans | 0.2557 ± 0.06 | 0.8888 ± 0.05 | 0.1128 ± 0.13 | 13.6755 ± 0.78 | 0.2606 | 0.0403 |
| ST-Bdip | 0.2300 ± 0.05 | 0.8833 ± 0.06 | 0.0710 ± 0.05 | 13.1397 ± 1.21 | 0.2382 | 0.0451 |

| Methods | TAD | AAD | TID | AID | RMSE-S | RMSE-X |
|---|---|---|---|---|---|---|
| N-FINDR | 0.3067 ± 0.02 | 1.0186 ± 0.08 | 0.1263 ± 0.01 | 15.6274 ± 2.61 | 0.3103 | 0.0795 |
| PPI | 0.3185 ± 0.02 | 1.0446 ± 0.09 | 0.1407 ± 0.02 | 17.5854 ± 8.49 | 0.3201 | 0.1177 |
| VCA-S | 0.3312 ± 0.10 | 1.0687 ± 0.13 | 0.1558 ± 0.11 | 47.1089 ± 26.49 | 0.3348 | 0.0415 |
| VCA-F | 0.3173 ± 0.07 | 0.9284 ± 0.06 | 0.1431 ± 0.06 | 14.5976 ± 1.16 | 0.2781 | 0.0543 |
| uDAs | 0.3296 ± 0.07 | 0.8975 ± 0.05 | 0.7204 ± 0.75 | 13.6823 ± 1.09 | 0.2799 | 0.0516 |
| PnP | 0.2915 ± 0.02 | 0.9298 ± 0.02 | 0.1201 ± 0.01 | 16.6188 ± 1.30 | 0.2914 | 0.0598 |
| KPmeans | 0.3878 ± 0.06 | 0.9071 ± 0.02 | 0.6087 ± 0.17 | 14.8144 ± 0.85 | 0.2986 | 0.0408 |
| ST-Bdip | 0.2612 ± 0.03 | 0.8639 ± 0.03 | 0.1016 ± 0.06 | 12.7759 ± 1.57 | 0.2420 | 0.0556 |

| TAD | SNR = 10, 4 classes | SNR = 10, 6 classes | SNR = 20, 4 classes | SNR = 20, 6 classes | SNR = 30, 4 classes | SNR = 30, 6 classes |
|---|---|---|---|---|---|---|
| N-FINDR | 0.3096 ± 0.02 | 0.3339 ± 0.01 | 0.1849 ± 0.01 | 0.1365 ± 0.01 | 0.1758 ± 0.00 | 0.0821 ± 0.00 |
| PPI | 0.3006 ± 0.01 | 0.3287 ± 0.00 | 0.1073 ± 0.01 | 0.2432 ± 0.01 | 0.0577 ± 0.00 | 0.2354 ± 0.01 |
| VCA-S | 0.1108 ± 0.03 | 0.1406 ± 0.01 | 0.0672 ± 0.03 | 0.0849 ± 0.01 | 0.0546 ± 0.02 | 0.0784 ± 0.00 |
| VCA-F | 0.0953 ± 0.04 | 0.1590 ± 0.02 | 0.0648 ± 0.03 | 0.0811 ± 0.01 | 0.0537 ± 0.02 | 0.0710 ± 0.01 |
| uDAs | 0.1531 ± 0.04 | 0.1597 ± 0.01 | 0.0612 ± 0.04 | 0.0501 ± 0.01 | 0.0554 ± 0.03 | 0.0474 ± 0.00 |
| PnP | 0.0936 ± 0.04 | 0.1361 ± 0.01 | 0.0655 ± 0.03 | 0.0851 ± 0.01 | 0.0530 ± 0.01 | 0.0685 ± 0.01 |
| KPmeans | 0.1286 ± 0.04 | 0.1235 ± 0.01 | 0.0376 ± 0.02 | 0.0482 ± 0.01 | 0.0306 ± 0.01 | 0.0539 ± 0.01 |
| ST-Bdip | 0.0816 ± 0.04 | 0.1053 ± 0.01 | 0.0354 ± 0.02 | 0.0436 ± 0.01 | 0.0107 ± 0.01 | 0.0419 ± 0.01 |

| AAD | SNR = 10, 4 classes | SNR = 10, 6 classes | SNR = 20, 4 classes | SNR = 20, 6 classes | SNR = 30, 4 classes | SNR = 30, 6 classes |
|---|---|---|---|---|---|---|
| N-FINDR | 0.5818 ± 0.03 | 0.5728 ± 0.02 | 0.5896 ± 0.05 | 0.3780 ± 0.08 | 0.6864 ± 0.08 | 0.2179 ± 0.00 |
| PPI | 0.7055 ± 0.03 | 0.8999 ± 0.01 | 0.2756 ± 0.02 | 0.9445 ± 0.03 | 0.1632 ± 0.03 | 1.1779 ± 0.13 |
| VCA-S | 0.6669 ± 0.18 | 0.8490 ± 0.05 | 0.3803 ± 0.19 | 0.4406 ± 0.04 | 0.2612 ± 0.18 | 0.3074 ± 0.04 |
| VCA-F | 0.5936 ± 0.13 | 0.6685 ± 0.04 | 0.3151 ± 0.10 | 0.3573 ± 0.07 | 0.2264 ± 0.07 | 0.2666 ± 0.03 |
| uDAs | 0.5420 ± 0.09 | 0.5728 ± 0.02 | 0.2932 ± 0.12 | 0.2834 ± 0.02 | 0.2874 ± 0.12 | 0.2111 ± 0.01 |
| PnP | 0.5186 ± 0.13 | 0.5936 ± 0.05 | 0.3035 ± 0.10 | 0.3492 ± 0.05 | 0.2255 ± 0.05 | 0.2745 ± 0.05 |
| KPmeans | 0.5098 ± 0.09 | 0.5216 ± 0.02 | 0.2341 ± 0.13 | 0.2210 ± 0.02 | 0.1044 ± 0.04 | 0.2134 ± 0.13 |
| ST-Bdip | 0.3339 ± 0.15 | 0.3727 ± 0.06 | 0.1640 ± 0.08 | 0.2089 ± 0.01 | 0.0536 ± 0.02 | 0.1394 ± 0.02 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Wang, Y.; Zhuo, R.; Xu, L.; Fang, Y. A Spatial–Temporal Bayesian Deep Image Prior Model for Moderate Resolution Imaging Spectroradiometer Temporal Mixture Analysis. Remote Sens. 2023, 15, 3782. https://doi.org/10.3390/rs15153782


Chicago/Turabian Style: Wang, Yuxian, Rongming Zhuo, Linlin Xu, and Yuan Fang. 2023. "A Spatial–Temporal Bayesian Deep Image Prior Model for Moderate Resolution Imaging Spectroradiometer Temporal Mixture Analysis" Remote Sensing 15, no. 15: 3782. https://doi.org/10.3390/rs15153782
