Robust and Reconfigurable On-Board Processing for a Hyperspectral Imaging Small Satellite

Abstract

1. Introduction

2. Background

2.1. Hyperspectral Imaging and Hyperspectral Data

2.2. Hyperspectral Data Processing

3. On-Board Processing Unit Design

3.1. Design Goals

Design Principles

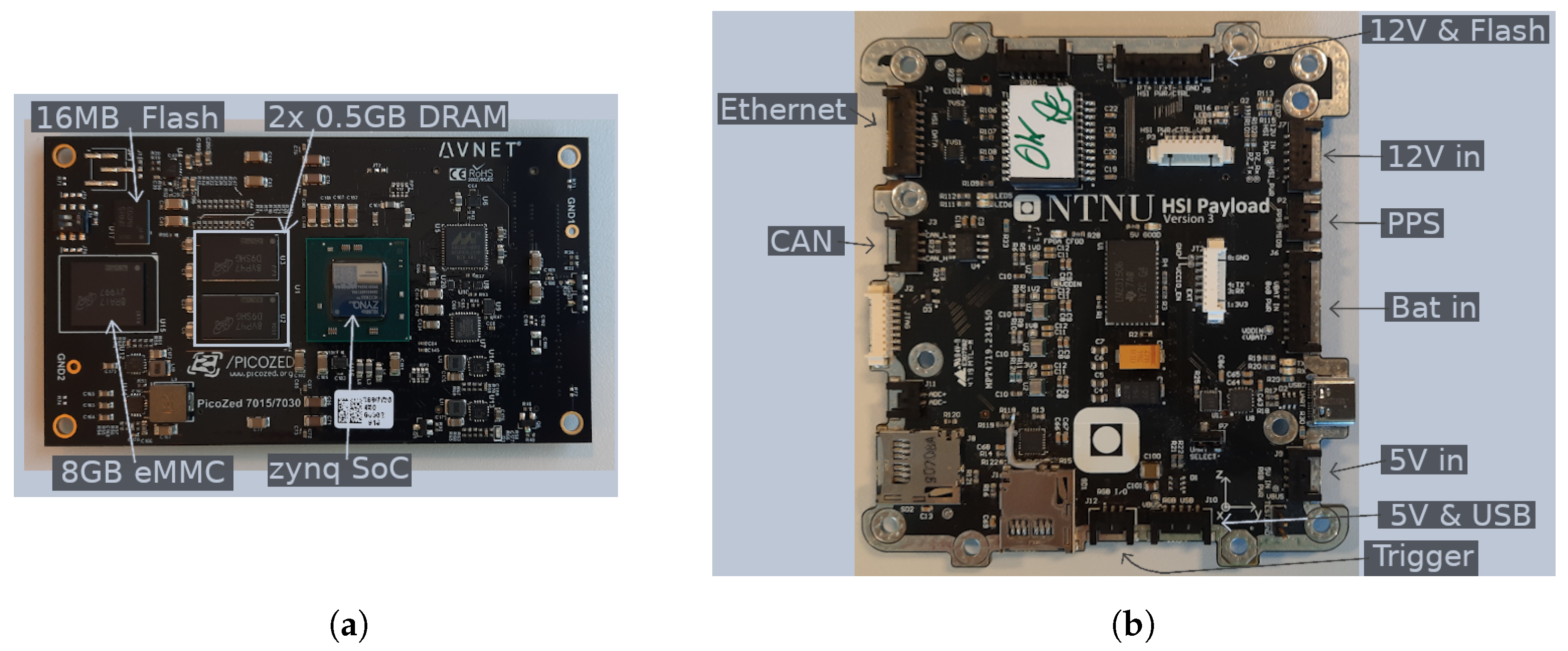

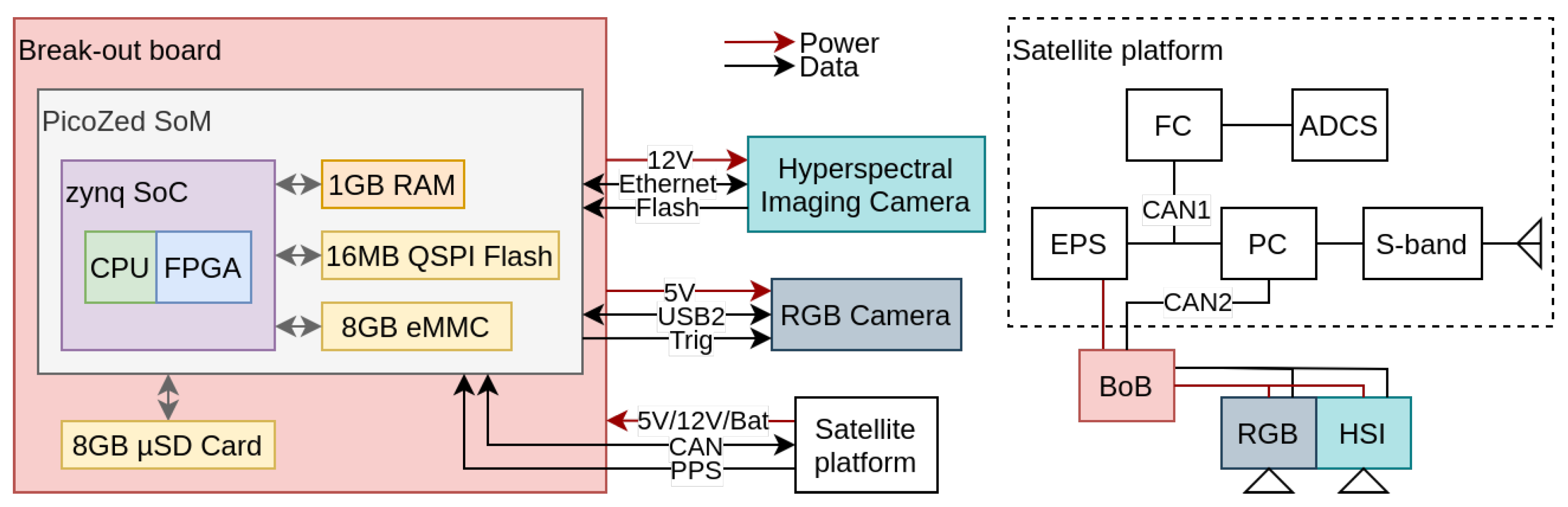

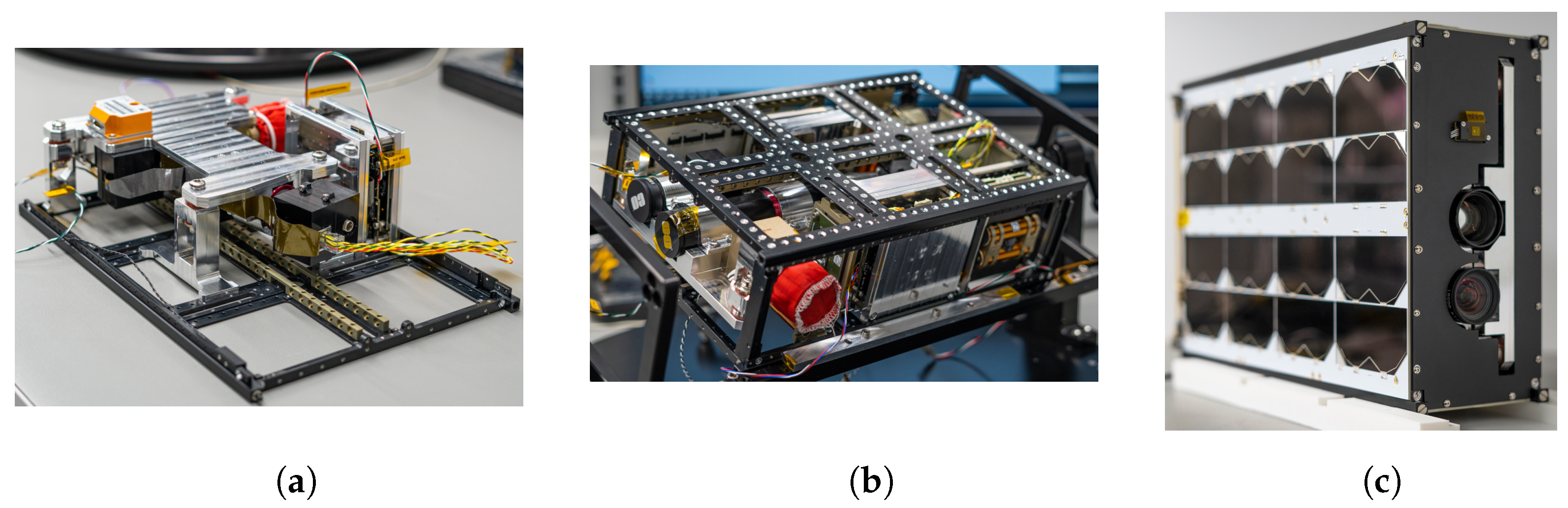

3.2. Hardware Design

3.3. Software Design

3.3.1. Booting Procedure and Operating System

3.3.2. Application Software and Payload Control

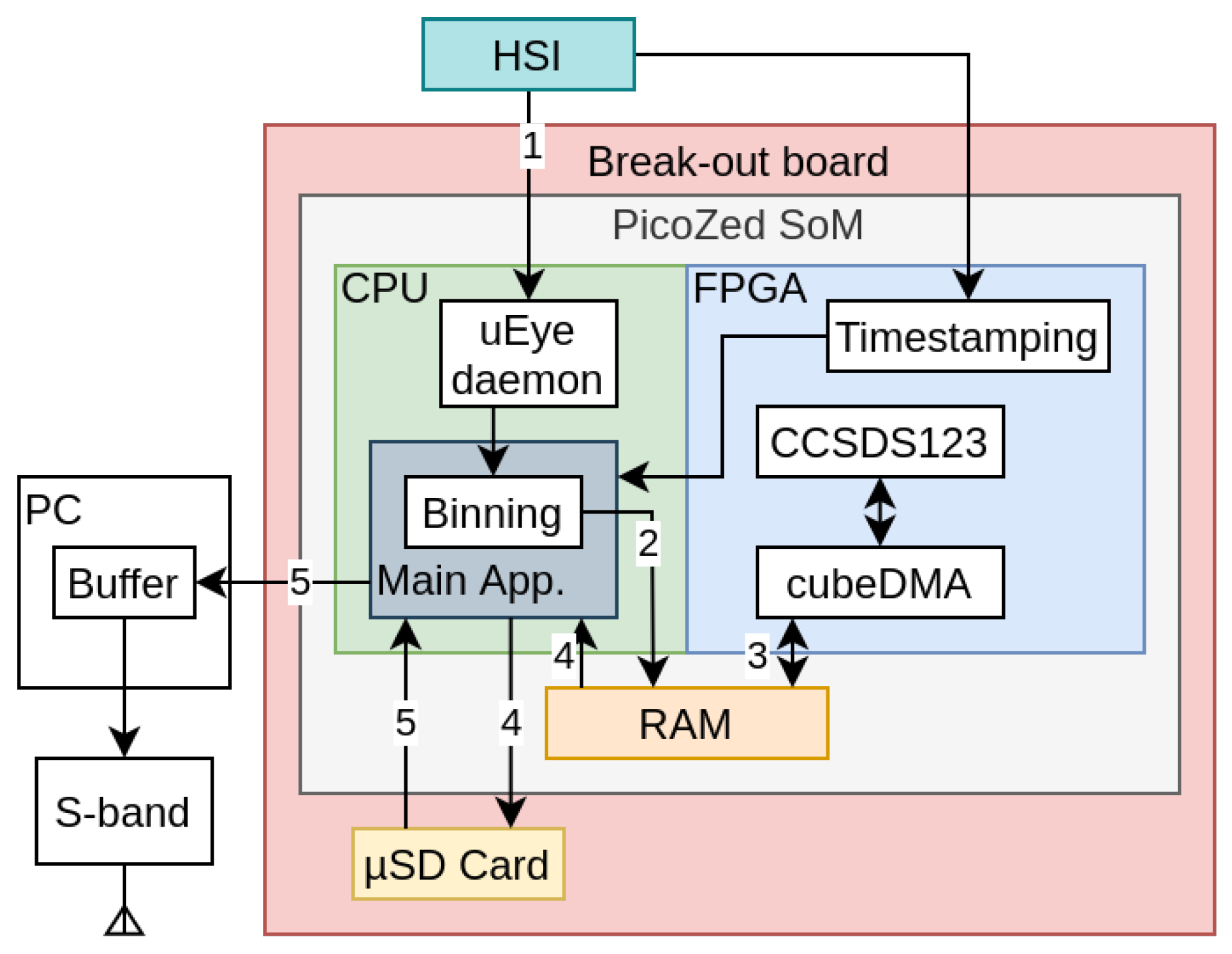

3.3.3. Hyperspectral Data Capture

3.4. On-Board Processing Pipeline

3.4.1. Minimal On-Board Image Processing Pipeline

- Parsing the capture command packet

- Allocating memory

- Starting and stopping the time-stamping module

- Opening the camera and setting parameters

- Starting and stopping recording

- Creating log files and metadata files
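The minimal capture steps listed above can be sketched as a single function. This is a minimal sketch, not the flight software. `CaptureCommand`, `parse_capture_command`, `run_minimal_capture` and the dict-based packet are hypothetical. The log list stands in for real log and metadata files, and 2 bytes per sample is assumed. The nested `try`/`finally` blocks show one way to guarantee that recording and time-stamping are stopped and that the log is written even if the camera fails mid-capture.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional


@dataclass
class CaptureCommand:
    """Hypothetical subset of the fields a capture command might carry."""
    lines: int          # line count L (frames to capture)
    height: int         # spatial pixels S
    bands: int          # spectral samples per pixel after binning
    exposure_ms: float  # exposure time e


def parse_capture_command(packet: dict) -> CaptureCommand:
    # Step 1: parse the command packet (a dict stands in for the real packet format).
    return CaptureCommand(int(packet["lines"]), int(packet["height"]),
                          int(packet["bands"]), float(packet["exposure_ms"]))


def run_minimal_capture(packet: dict, log: List[str],
                        record: Optional[Callable[[bytearray], None]] = None) -> bytearray:
    cmd = parse_capture_command(packet)
    log.append("command parsed")
    # Step 2: allocate the whole cube up front (2 bytes per sample assumed).
    buffer = bytearray(cmd.lines * cmd.height * cmd.bands * 2)
    log.append("memory allocated")
    log.append("time-stamping started")                              # Step 3 (start)
    try:
        log.append(f"camera opened, exposure {cmd.exposure_ms} ms")  # Step 4
        log.append("recording started")                              # Step 5 (start)
        try:
            if record is not None:
                record(buffer)  # frames would be written into `buffer` here
        finally:
            log.append("recording stopped")                          # Step 5 (stop)
    finally:
        log.append("time-stamping stopped")                          # Step 3 (stop)
        log.append("log and metadata files written")                 # Step 6, even on failure
    return buffer
```

Because the stop actions sit in `finally` clauses, an exception raised while recording still propagates to the caller, but only after the camera and time-stamping module have been stopped and the log has been closed out.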

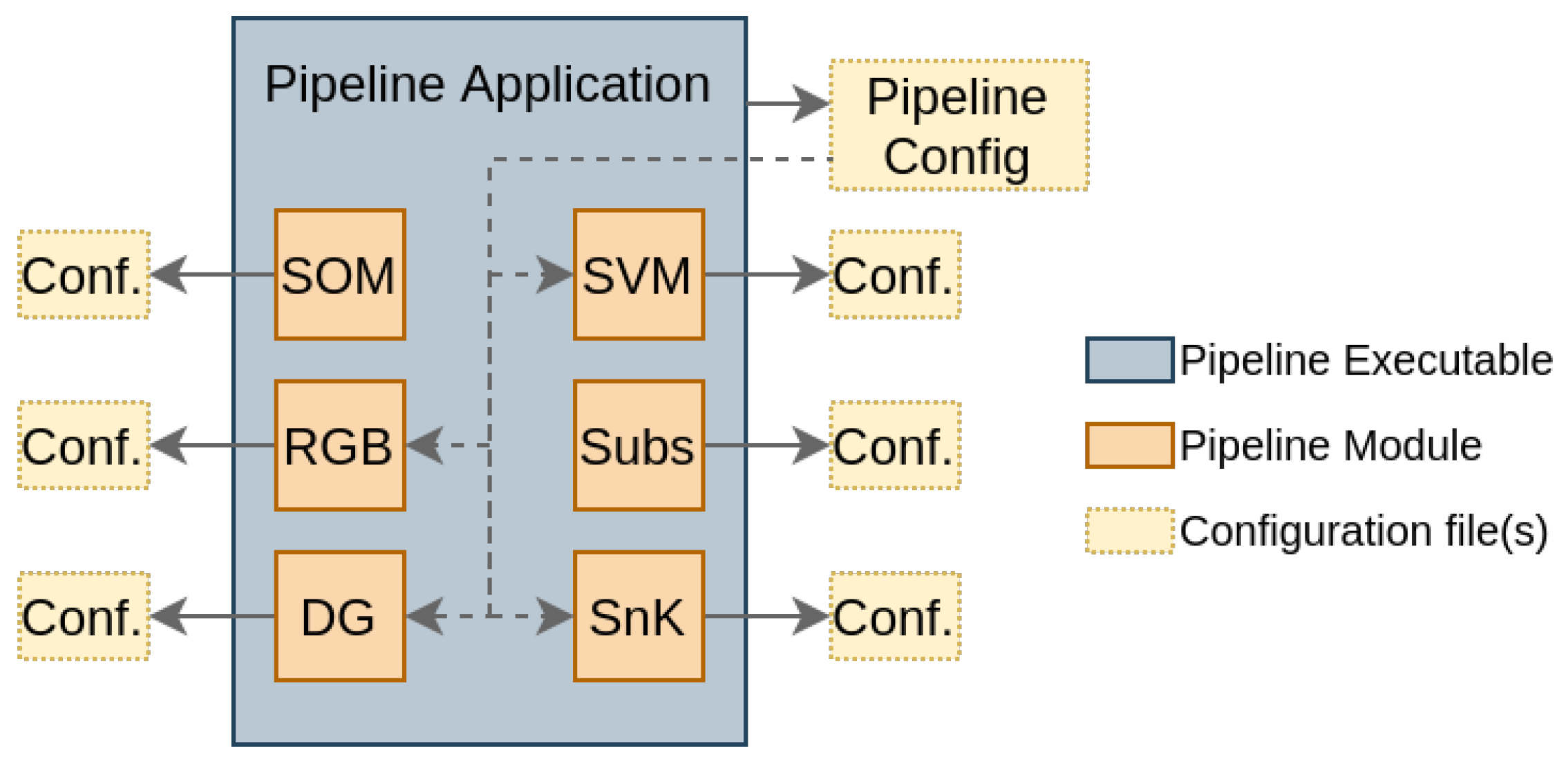

3.4.2. Reconfigurable Pipeline Framework

3.5. Software Updates

4. Testing and In-Orbit Results

4.1. Firmware Integrity Tests

Second-stage bootloader (SSBL) tests:

- Test 1: No data corrupted: system boots correctly.

- Test 2: First SSBL copy corrupted: system boots correctly.

- Test 3: First and second SSBL copies corrupted: system boots correctly.

- Test 4: All three SSBL copies corrupted: system fails to boot.
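The SSBL tests above exercise a simple rule: try the stored copies in order and boot the first one that passes an integrity check. The sketch below assumes that each copy is stored together with a CRC32 checksum. The checksum scheme, `select_ssbl`, and the data layout are assumptions for illustration. On the real hardware this selection may be carried out by the boot ROM or first-stage loader rather than by application code.

```python
import zlib
from typing import Optional, Sequence, Tuple

# Each stored copy is modelled as (image_bytes, crc32_recorded_when_written).
SsblCopy = Tuple[bytes, int]


def select_ssbl(copies: Sequence[SsblCopy]) -> Optional[int]:
    """Return the index of the first intact copy, or None if every copy is corrupted."""
    for index, (image, stored_crc) in enumerate(copies):
        if zlib.crc32(image) == stored_crc:  # integrity: detect a corrupted copy
            return index                     # redundancy: otherwise try the next copy
    return None                              # no valid copy left: boot fails


if __name__ == "__main__":
    image = b"second stage boot loader"
    good = (image, zlib.crc32(image))
    bad = (b"X" + image[1:], zlib.crc32(image))  # one corrupted byte
    for copies in ([good] * 3, [bad, good, good], [bad, bad, good], [bad] * 3):
        print(select_ssbl(copies))
```

The four cases in the `__main__` block mirror Tests 1 to 4: the selected index moves to the next copy as earlier copies are corrupted, and `None` is returned once all three copies fail.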

Boot image tests (primary image on the SD card, golden image on the eMMC):

- Test 1: No data corrupted: primary image boots correctly.

- Test 2: SD card not present: golden image boots correctly.

- Test 3: Primary image is corrupted: golden image boots correctly.

- Test 4: Five power cycles: golden image boots correctly.
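The boot image tests can likewise be read as a selection rule between the primary image on the SD card and the golden image on the eMMC. In this sketch, `BootState`, `select_boot_image`, and the `unconfirmed_boots` counter (assumed to be incremented on every boot and cleared once the application has started) are hypothetical. The threshold of five is chosen only to match Test 4, and the flight implementation may count boots differently.

```python
from dataclasses import dataclass

MAX_BOOT_ATTEMPTS = 5  # assumption, chosen to match the five-power-cycle test


@dataclass
class BootState:
    sd_card_present: bool
    primary_image_valid: bool  # result of an integrity check on the SD card image
    unconfirmed_boots: int     # hypothetical counter: +1 per boot, cleared by the running application


def select_boot_image(state: BootState) -> str:
    """Return "primary" (SD card) or "golden" (eMMC)."""
    if not state.sd_card_present:
        return "golden"
    if not state.primary_image_valid:
        return "golden"
    if state.unconfirmed_boots >= MAX_BOOT_ATTEMPTS:
        return "golden"  # the primary image keeps failing after it is loaded
    return "primary"
```

Keeping the golden image on a separate storage device follows the locality principle: a failure of the SD card alone cannot remove both boot options.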

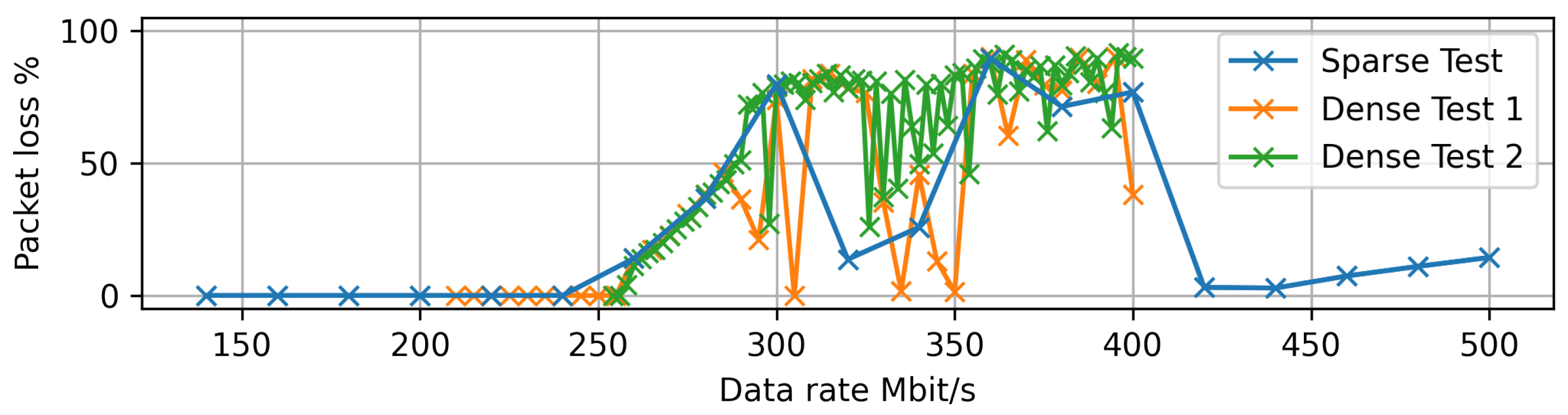

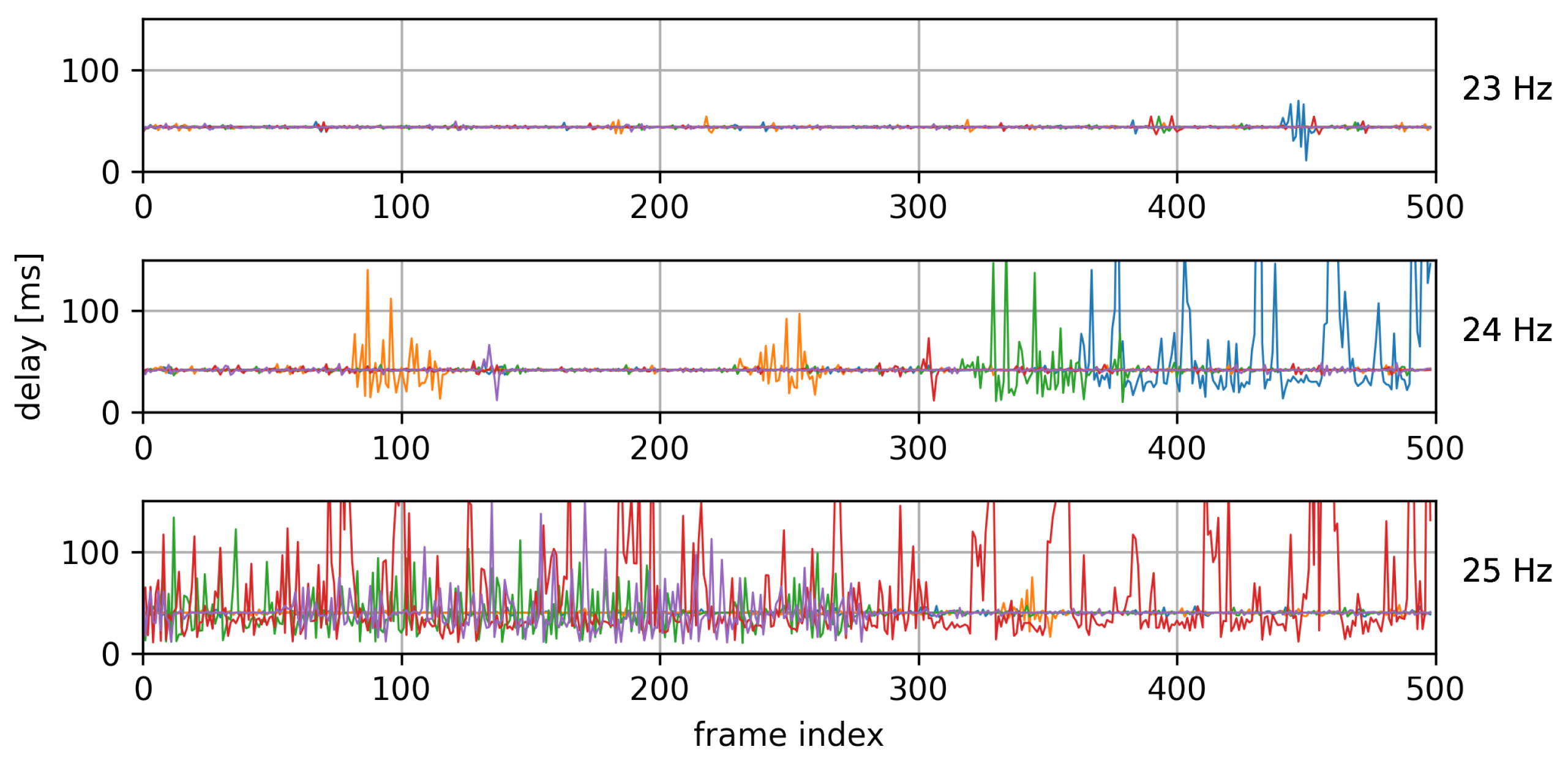

4.2. Ethernet and Data Recording

4.3. Compression

4.4. In-Orbit Results

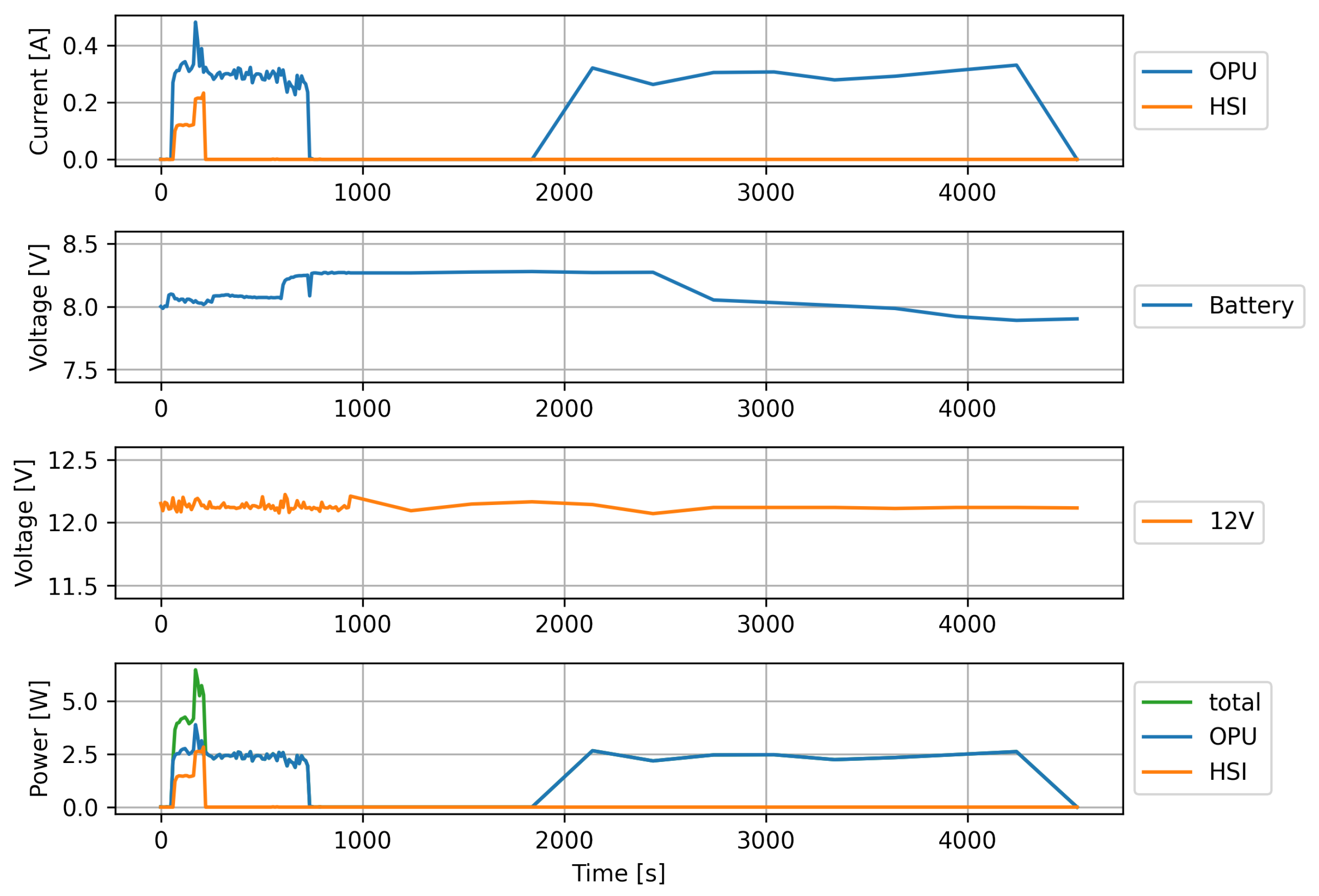

Energy Usage

1. Preparations for image recording (seconds 45 to 165).

2. Image recording (seconds 165 to 210).

3. Post-capture actions, including software compression (seconds 210 to 730).

4. Buffering the data to the PC (seconds 1990 to 4408).

5. Discussion, Future Work and Conclusions

Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Design Principle | Short Description |
|---|---|
| Integrity | Ability to detect faults |
| Redundancy | Presence of backups |
| Locality | Physical separation of backups |
| Modularity | System consisting of independent components |
| Extensibility | Ability to add functionality |
| Configurability | Ability to adjust functionality to the user's needs |
| Low latency | Fast processing so that data become available quickly |

| Name | Long Name | Description |
|---|---|---|
| CSP | Cubesat Space Protocol | Services included in the CSP library, mainly used for pings |
| FT | File Transfer | Handles file download and upload requests |
| CLI | Command Line Interface | Provides OS shell access |
| RGB | Red–Green–Blue Camera | Handles commands to control the RGB camera |
| OBIP | On-Board Image Processing | Runs on-board processing pipeline tasks |
| HSI | Hyperspectral Imager | Handles commands to control the HSI camera |
| TM | Telemetry | Collects and logs telemetry information |
| LOG | Logging | Handles logging of information and error messages; creates log files |
| Monitor | Service Monitor | Starts and stops the above tasks |
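The Monitor service in the table above is responsible for starting and stopping the other tasks. A minimal sketch of that role, assuming each task runs in its own thread and polls a shared stop event, could look as follows. `ServiceMonitor` and `telemetry_service` are hypothetical names, and the real application software is not necessarily structured this way.

```python
import threading
from typing import Callable, Dict, List

ServiceBody = Callable[[threading.Event], None]


class ServiceMonitor:
    """Hypothetical sketch: each service runs in its own thread until the stop event is set."""

    def __init__(self) -> None:
        self._bodies: Dict[str, ServiceBody] = {}
        self._threads: Dict[str, threading.Thread] = {}
        self._stop = threading.Event()

    def register(self, name: str, body: ServiceBody) -> None:
        self._bodies[name] = body

    def start_all(self) -> None:
        self._stop.clear()
        for name, body in self._bodies.items():
            thread = threading.Thread(target=body, args=(self._stop,), name=name, daemon=True)
            self._threads[name] = thread
            thread.start()

    def stop_all(self, timeout_s: float = 1.0) -> List[str]:
        """Signal every service to stop; return the names of services that did not exit in time."""
        self._stop.set()
        for thread in self._threads.values():
            thread.join(timeout_s)
        return [name for name, t in self._threads.items() if t.is_alive()]


def telemetry_service(stop: threading.Event) -> None:
    # Stand-in for a task such as TM: wake up periodically until asked to stop.
    while not stop.wait(0.05):
        pass  # collect and log telemetry here
```

Returning the names of services that failed to stop gives the monitor something concrete to log or act on, rather than blocking indefinitely on a hung task.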

| Group | Parameter | Description |
|---|---|---|
| Sizing | Line count, L | Number of lines to scan, i.e., number of frames to capture. |
| | Sensor height, S | Spatial sampling. Default is 684 pixels. |
| | Sensor width, W | Spectral sampling. Default is 1080 pixels. |
| | Binning factor | Software binning in the spectral dimension. Possible values: 1× through 18×. |
| | Sub-sampling | On-sensor subsampling in the spectral dimension. Possible values: 2×, 4×. |
| Timing and signal level | Frame rate | Rate at which a line/frame is scanned/captured. |
| | Exposure time, e | Duration over which light is collected during a line/frame scan/capture. |
| | Gain, g | |
| Flags | CCSDS123 in software | Compress HSI data using the software implementation of CCSDS123 instead of the FPGA implementation. |
| | No compression | Do not compress the HSI data; store the uncompressed data on the SD card instead. |
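The sizing parameters determine the dimensions of the captured cube: L lines, S spatial pixels, and W divided by the binning factor spectral bands. This is consistent with the capture configurations listed later. For example, 956 × 684 × 120 for the nominal configuration corresponds to W = 1080 with 9× binning. The sketch assumes that any remainder of W not divisible by the binning factor is dropped and that each sample occupies 2 bytes. On-sensor sub-sampling is left out because its interaction with binning is not specified here. The function names are hypothetical.

```python
from typing import Tuple


def cube_shape(lines: int, height: int, width: int, binning: int = 1) -> Tuple[int, int, int]:
    """Return (lines, spatial pixels, spectral bands) for a capture.

    Assumes spectral binning keeps width // binning bands and drops any remainder.
    """
    if not 1 <= binning <= 18:
        raise ValueError("binning factor must be between 1 and 18")
    return lines, height, width // binning


def cube_size_bytes(shape: Tuple[int, int, int], bytes_per_sample: int = 2) -> int:
    """Raw buffer size; 2 bytes per sample is an assumption (e.g. 12-bit data in 16-bit words)."""
    lines, height, bands = shape
    return lines * height * bands * bytes_per_sample


if __name__ == "__main__":
    nominal = cube_shape(956, 684, 1080, binning=9)
    print(nominal, cube_size_bytes(nominal))
```

For the nominal configuration this gives a 956 × 684 × 120 cube, or 156,936,960 bytes (about 157 MB) at the assumed 2 bytes per sample, which is the kind of buffer that has to be allocated before recording starts.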

| Abbreviation | Motivation |
|---|---|
| SnK | Improve data quality |
| DG | Pass target information to in situ agents |
| SOM | Package data for quick download |
| SVM | On-board data segmentation |
| Subs | If only parts of a cube are interesting |
| RGB | Preview a capture |
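The modules above are meant to be combined per capture. One way to sketch a reconfigurable pipeline is a registry of module functions plus a configuration file listing which modules to run, in which order, and with which arguments. The module names `crop_lines` and `select_bands`, the JSON layout, and the nested-list cube representation are all hypothetical stand-ins, not the actual OBIP modules or configuration format.

```python
import json
from typing import Callable, Dict, List

# A cube as nested lists: cube[line][spatial_pixel][band] (hypothetical representation).
Cube = List[List[List[int]]]


def crop_lines(cube: Cube, start: int, stop: int) -> Cube:
    """Hypothetical module: keep only lines start..stop-1 of the capture."""
    return cube[start:stop]


def select_bands(cube: Cube, bands: List[int]) -> Cube:
    """Hypothetical module: keep a subset of spectral bands, e.g. for a quick preview."""
    return [[[pixel[b] for b in bands] for pixel in line] for line in cube]


# Registry of available modules; new modules are added here without touching the runner.
MODULES: Dict[str, Callable[..., Cube]] = {
    "crop_lines": crop_lines,
    "select_bands": select_bands,
}


def build_pipeline(config_text: str) -> List[Callable[[Cube], Cube]]:
    """Turn a JSON list of {"module": name, "args": {...}} entries into ordered steps."""
    steps: List[Callable[[Cube], Cube]] = []
    for entry in json.loads(config_text):
        name = entry["module"]
        if name not in MODULES:
            raise KeyError(f"unknown pipeline module: {name}")
        func, kwargs = MODULES[name], entry.get("args", {})
        # Default arguments bind the current func/kwargs to this step.
        steps.append(lambda cube, f=func, kw=kwargs: f(cube, **kw))
    return steps


def run_pipeline(cube: Cube, steps: List[Callable[[Cube], Cube]]) -> Cube:
    for step in steps:
        cube = step(cube)
    return cube
```

Because the pipeline is rebuilt from the configuration text, changing the processing chain only requires uploading a new configuration file, as long as the referenced modules are already in the registry. Unknown module names are rejected before any data are processed.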

| SD Card | eMMC |
|---|---|
| Boot image | Boot image (golden) |
| FPGA image | FPGA image (golden) |
| HSI capture data | |
| Log and telemetry files | |
| Updated main application files | |
| Updated FPGA modules | |
| Updated pipeline application files | |
| Pipeline configuration files | |

| Frame rate [Hz] | Bad recordings / total |
|---|---|
| 21 | 0/30 |
| 22 | 0/30 |
| 23 | 0/30 |
| 24 | 3/30 |
| 25 | 29/30 |

| Mode | 1936 × 1216 [Hz] | 1080 × 1194 [Hz] | 1080 × 684 [Hz] |
|---|---|---|---|
| SIMD Bin9 | 5 | 12 | 22 |
| SIMD Bin18 | 5 | 12 | 22 |
| SIMD Bin9 Sub 2× | 9 | 20 | 36 |
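To relate the frame rates above to the camera interface, the raw pixel stream can be estimated as W × S × frame rate × bits per pixel. The sketch assumes that software (SIMD) binning is applied after the full frame is received, so the stream size does not depend on the binning factor. It also leaves out the sub-sampled mode. The bit depth is a parameter, not a value taken from the camera specification, and `MODES` and `raw_rate_mbit_s` are illustrative names.

```python
# Frame sizes (W x S) and frame rates [Hz] from the SIMD Bin9 row of the table above.
MODES = {
    "1936 x 1216": (1936, 1216, 5),
    "1080 x 1194": (1080, 1194, 12),
    "1080 x 684": (1080, 684, 22),
}


def raw_rate_mbit_s(width: int, height: int, frame_rate_hz: float, bits_per_pixel: int) -> float:
    """Raw pixel stream before binning; bits_per_pixel is an assumption, not a camera spec."""
    return width * height * frame_rate_hz * bits_per_pixel / 1e6


if __name__ == "__main__":
    for name, (w, s, fps) in MODES.items():
        print(f"{name}: {raw_rate_mbit_s(w, s, fps, 16):.1f} Mbit/s at 16 bit/pixel")
```

At an assumed 16 bits per pixel the three modes come to roughly 188, 248, and 260 Mbit/s, respectively, which can then be compared against the capacity of the Ethernet link in use.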

| Configuration Name | Dimensions (lines × spatial × spectral) | Count |
|---|---|---|
| Nominal | 956 × 684 × 120 | 895 |
| No binning | 106 × 684 × 1080 | 34 |
| Full sensor | 33 × 1216 × 1936 | 26 |
| Wider spatial | 598 × 1092 × 120 or 537 × 1216 × 120 | 88 |

| Phase | Duration [s] | OPU [W] | HSI [W] | Energy OPU & HSI [Wh] |
|---|---|---|---|---|
| 1 | 120.0 (3.87%) | 2.35 | 1.20 | 0.12 (5.58%) |
| 2 | 45.0 (1.45%) | 3.09 | 2.65 | 0.08 (3.70%) |
| 3 | 520.0 (16.76%) | 2.35 | 0.00 | 0.34 (15.61%) |
| 4 | 2418.0 (77.93%) | 2.43 | 0.00 | 1.62 (75.10%) |
| Total energy | | | | 2.16 |
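The per-phase energies in the table can be cross-checked from the mean powers and durations, since the energy of a phase is approximately E = (P_OPU + P_HSI) · t / 3600 in watt-hours. The sketch below does this arithmetic. It agrees with the tabulated values to within about 0.01 Wh per phase and gives a total of about 2.16 Wh over 3103 s. Small differences, most visible for phases 2 and 4, are expected if the tabulated energies were integrated from sampled power rather than computed from the rounded mean values.

```python
# (phase, duration [s], OPU power [W], HSI power [W]) copied from the table above.
PHASES = [
    (1, 120.0, 2.35, 1.20),
    (2, 45.0, 3.09, 2.65),
    (3, 520.0, 2.35, 0.00),
    (4, 2418.0, 2.43, 0.00),
]


def phase_energy_wh(duration_s: float, opu_w: float, hsi_w: float) -> float:
    """Energy of one phase from its mean power: E = (P_OPU + P_HSI) * t / 3600."""
    return (opu_w + hsi_w) * duration_s / 3600.0


energies = {phase: phase_energy_wh(t, p_opu, p_hsi) for phase, t, p_opu, p_hsi in PHASES}
total_wh = sum(energies.values())
total_s = sum(t for _, t, _, _ in PHASES)

if __name__ == "__main__":
    for phase, e in energies.items():
        print(f"phase {phase}: {e:.3f} Wh ({100 * e / total_wh:.1f}% of energy)")
    print(f"total: {total_wh:.2f} Wh over {total_s:.0f} s")
```

The breakdown makes clear why phase 4 dominates: its power draw is similar to that of the other phases, but it lasts far longer than the recording itself.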


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Langer, D.D.; Orlandić, M.; Bakken, S.; Birkeland, R.; Garrett, J.L.; Johansen, T.A.; Sørensen, A.J. Robust and Reconfigurable On-Board Processing for a Hyperspectral Imaging Small Satellite. Remote Sens. 2023, 15, 3756. https://doi.org/10.3390/rs15153756
