Radar Target Characterization and Deep Learning in Radar Automatic Target Recognition: A Review

Abstract

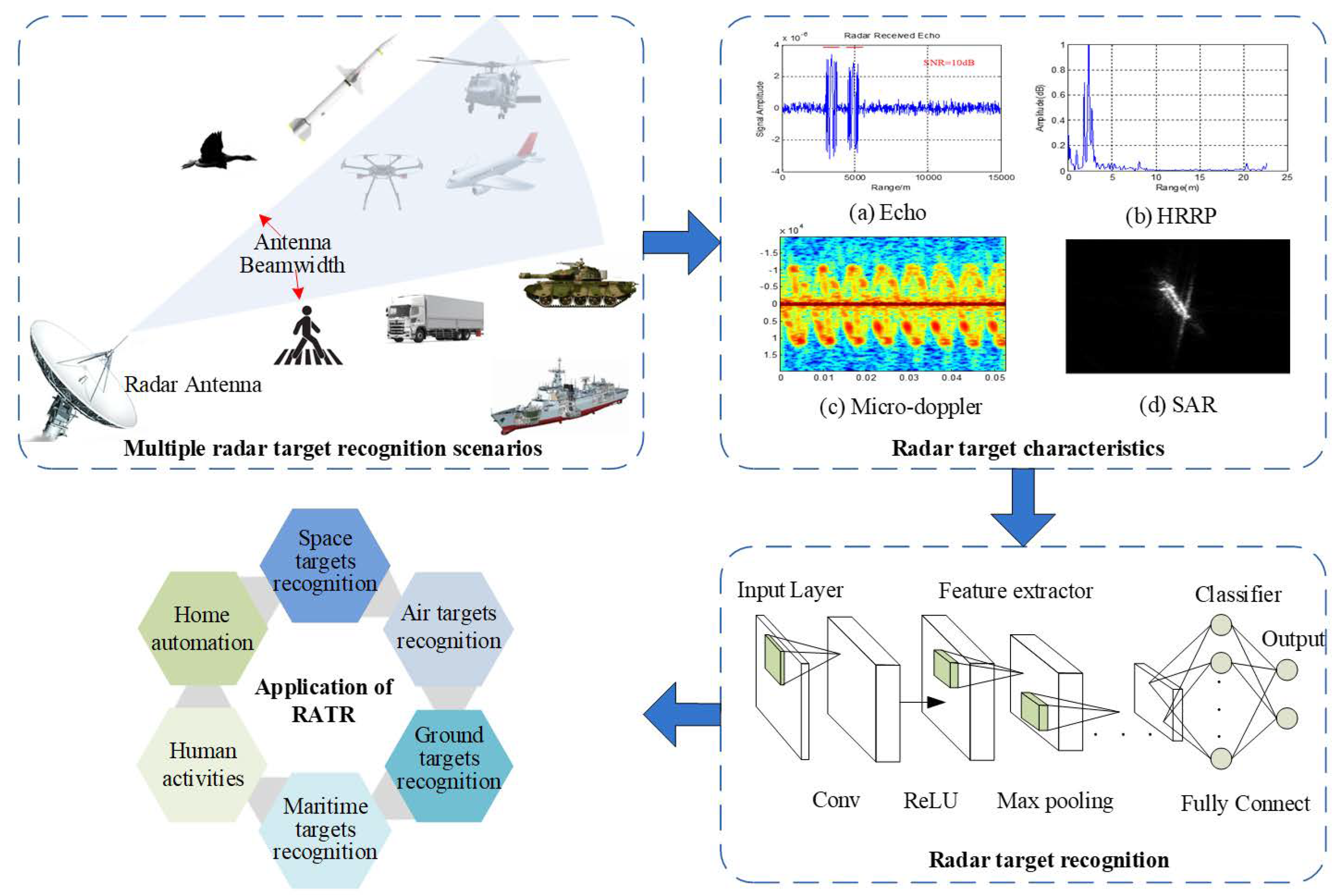

1. Introduction

2. Related Work on RATR

2.1. Radar Target Characteristics

2.1.1. Narrowband Target Characteristics of Low-Resolution Radar

- (1) Motion characteristics

- (2) Echo characteristics

- (3) RCS characteristics

- (4) Modulation spectrum characteristics

- (5) Target pole distribution characteristics

- (6) Target polarization characteristics

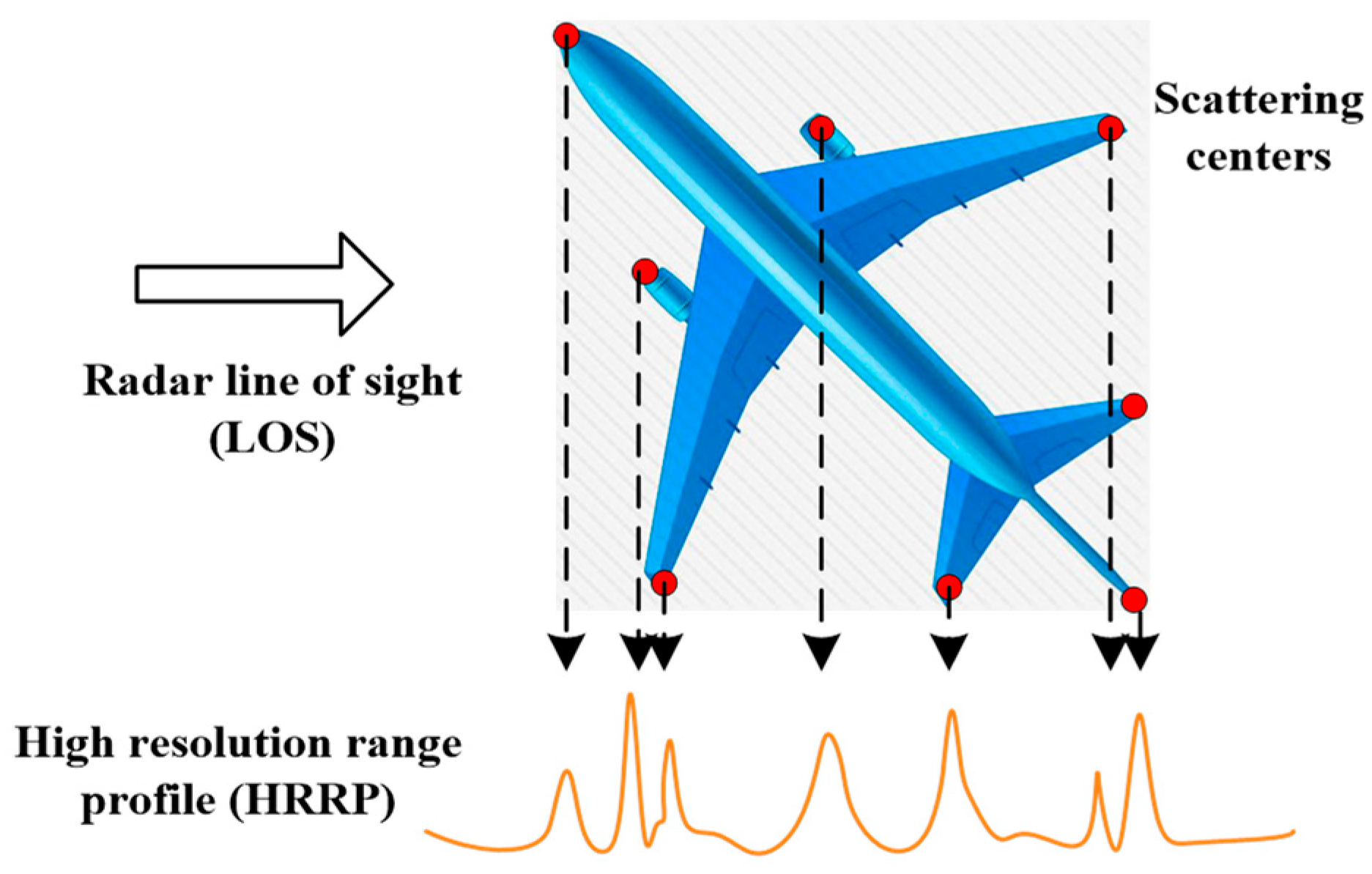

2.1.2. Wideband Target Characteristics of High-Resolution Radar

- (1)

- HRRP characteristics

- (2)

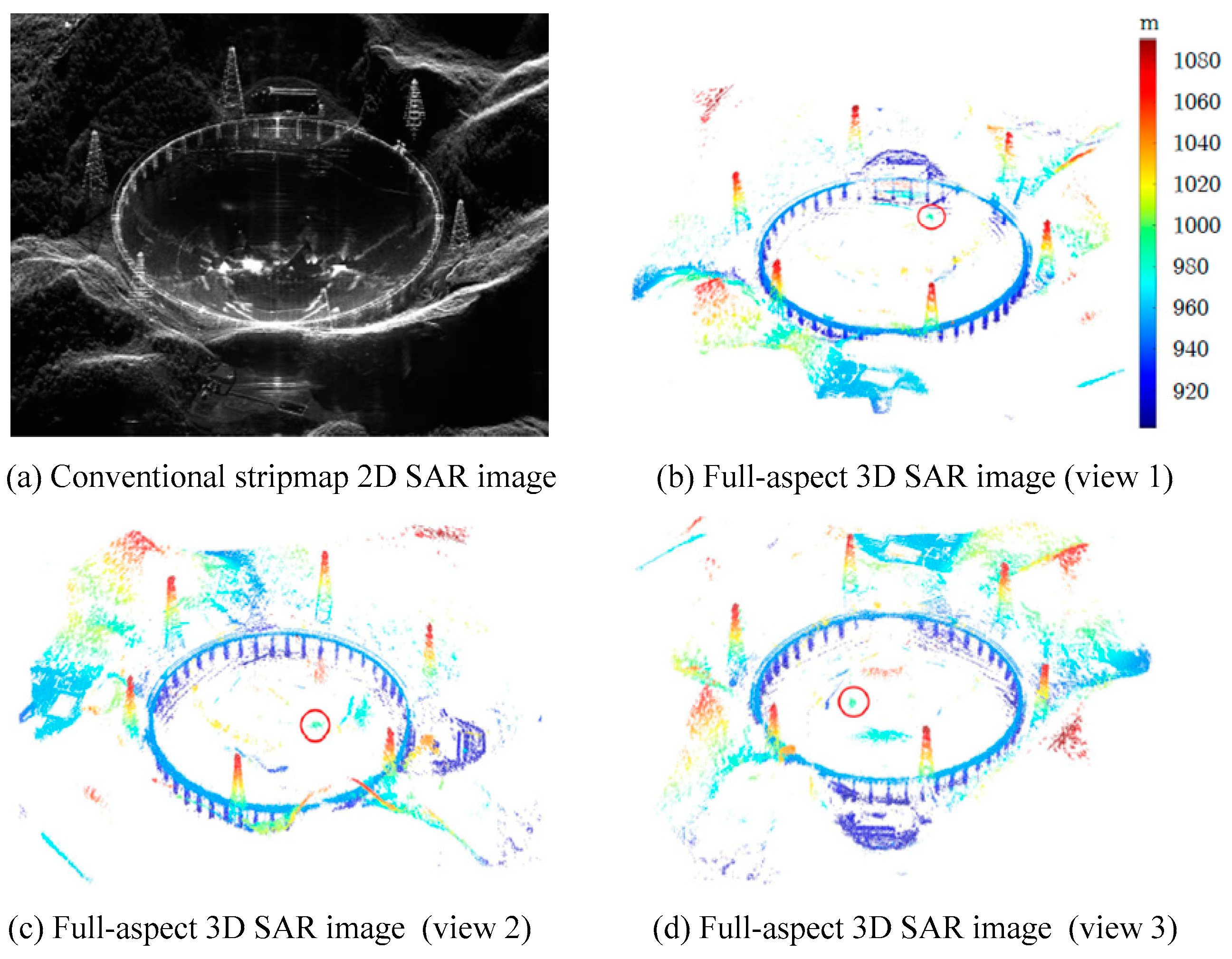

- SAR and ISAR image characteristics

- (3)

- Tomographic SAR and Interferometric SAR image characteristics

2.1.3. Radar Target Characteristics for Recognition

2.2. Traditional Methods for RATR

2.3. Deficiencies and Challenges of Traditional RATR Methods

3. Application of Deep Learning Technology in RATR

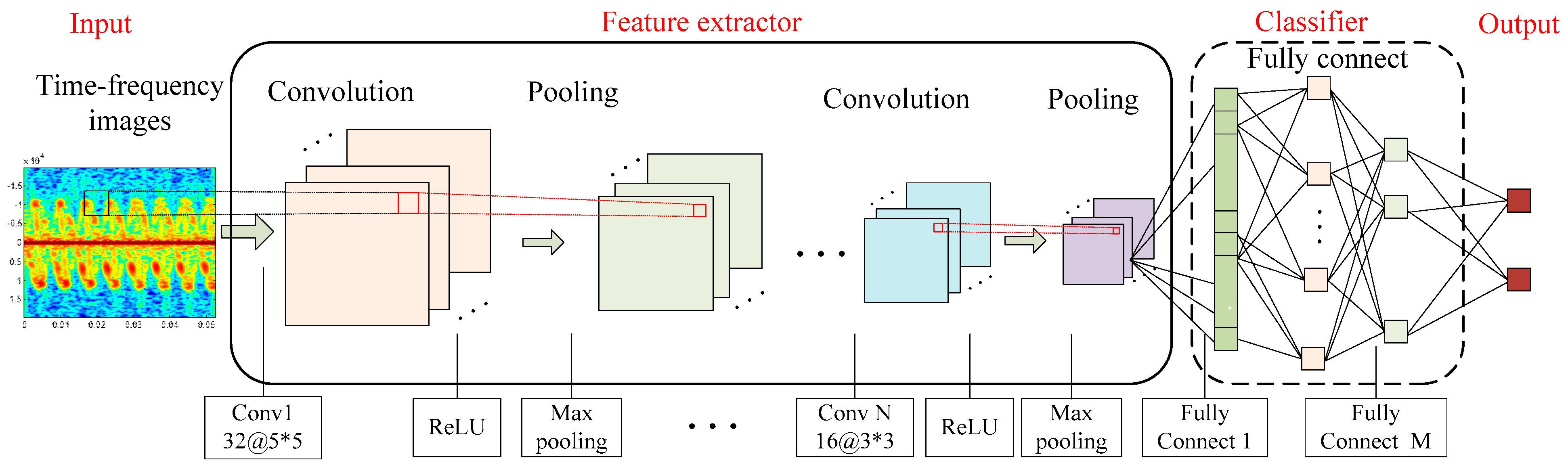

3.1. Typical Deep Learning Network Architecture in RATR

- (1)

- Convolutional neural network

- (2)

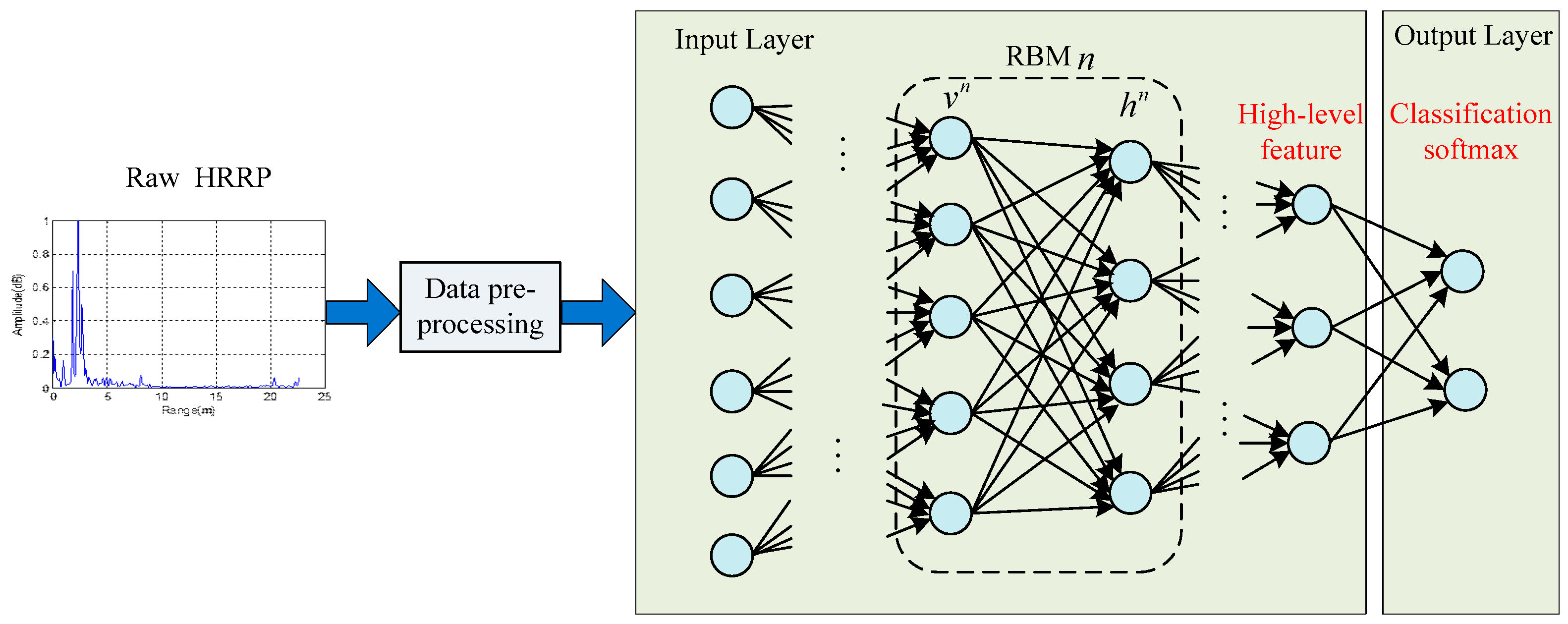

- Deep belief network

- (3)

- Recurrent neural network

- (4)

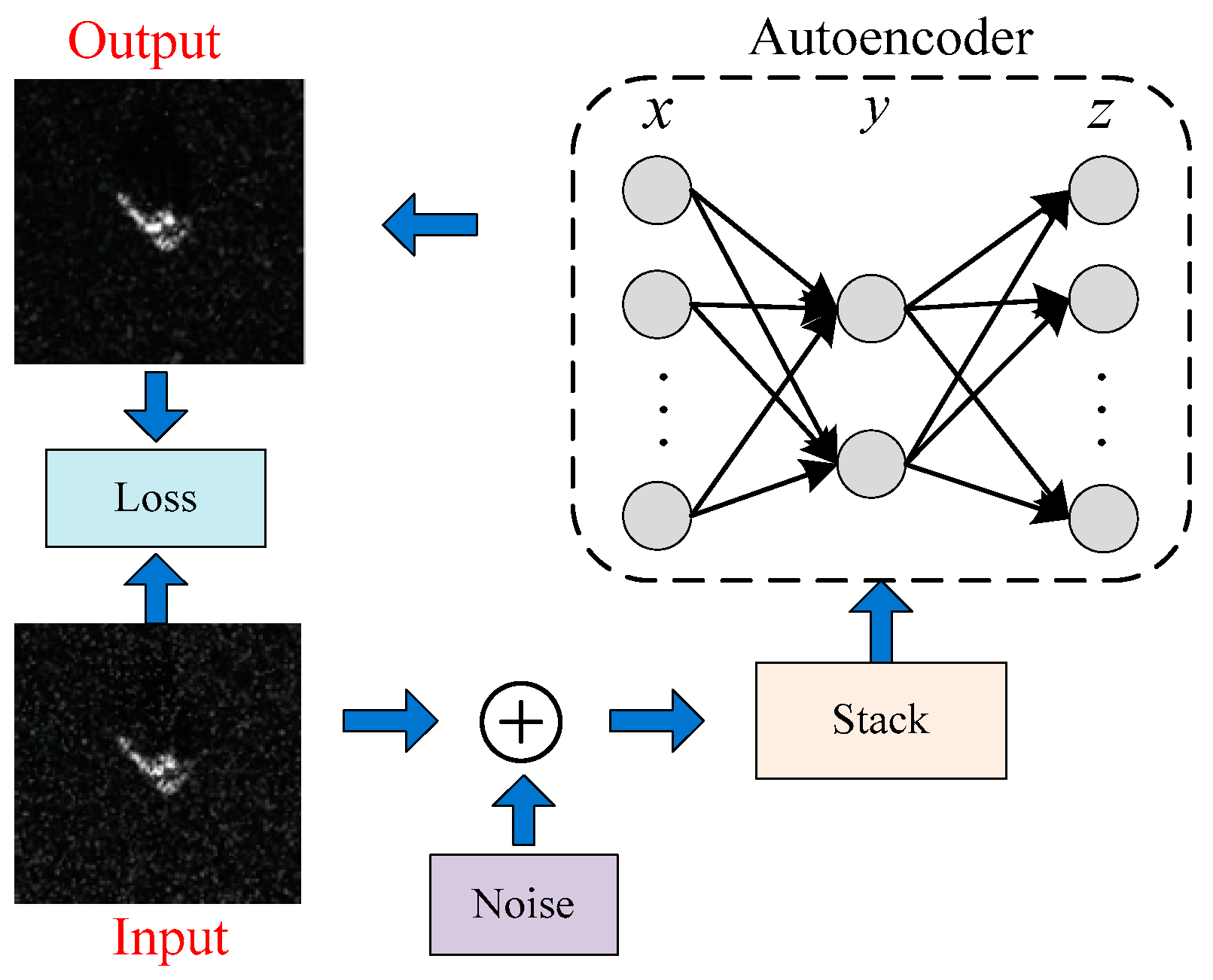

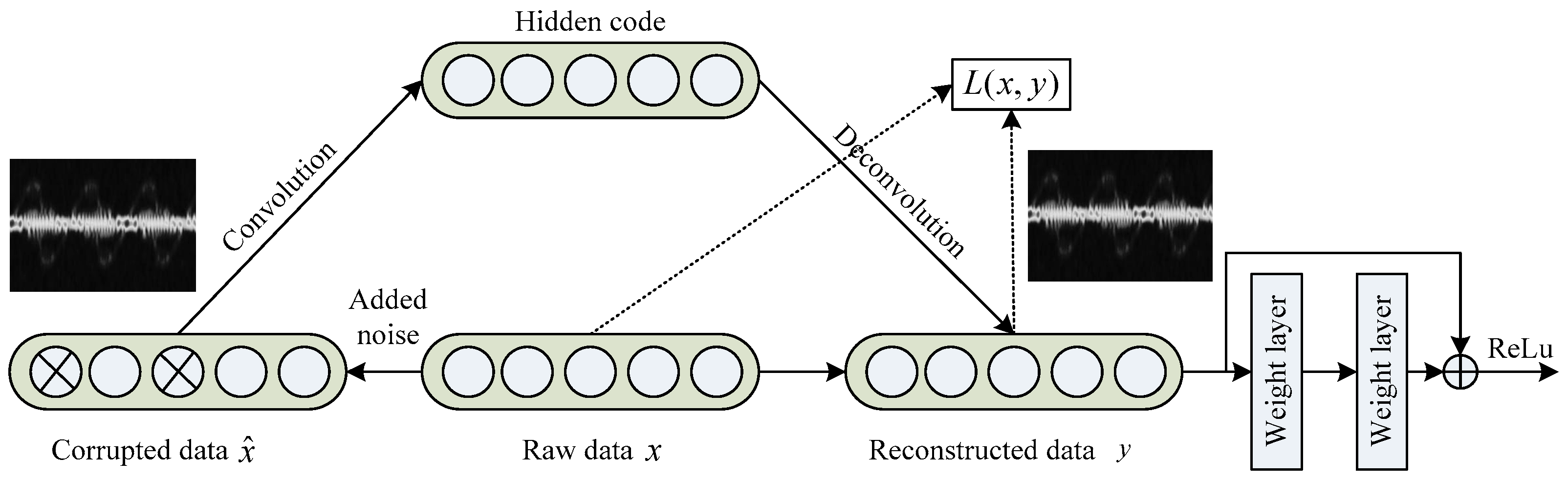

- Autoencoder

- (5)

- Multi-head attention mechanism

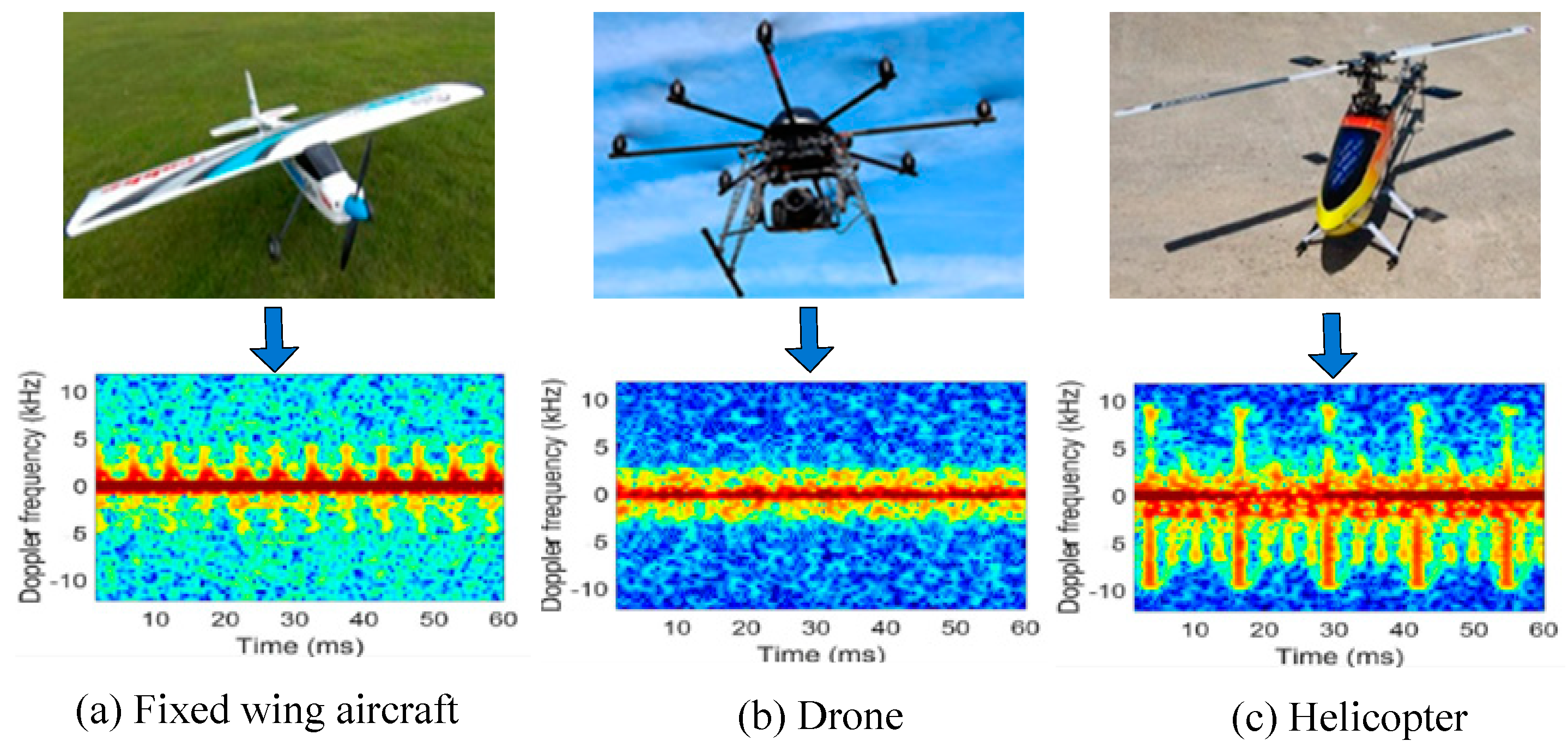

3.2. Deep Learning for RATR Based on Micro-Motion Characteristics

- (1) Recognition of space targets

- (2) Recognition of air targets

- (3) Recognition of ground targets

- (4) Recognition of sea-surface ship targets

- (5) Recognition of human activities

3.3. Deep Learning for HRRP-RATR

- (1)

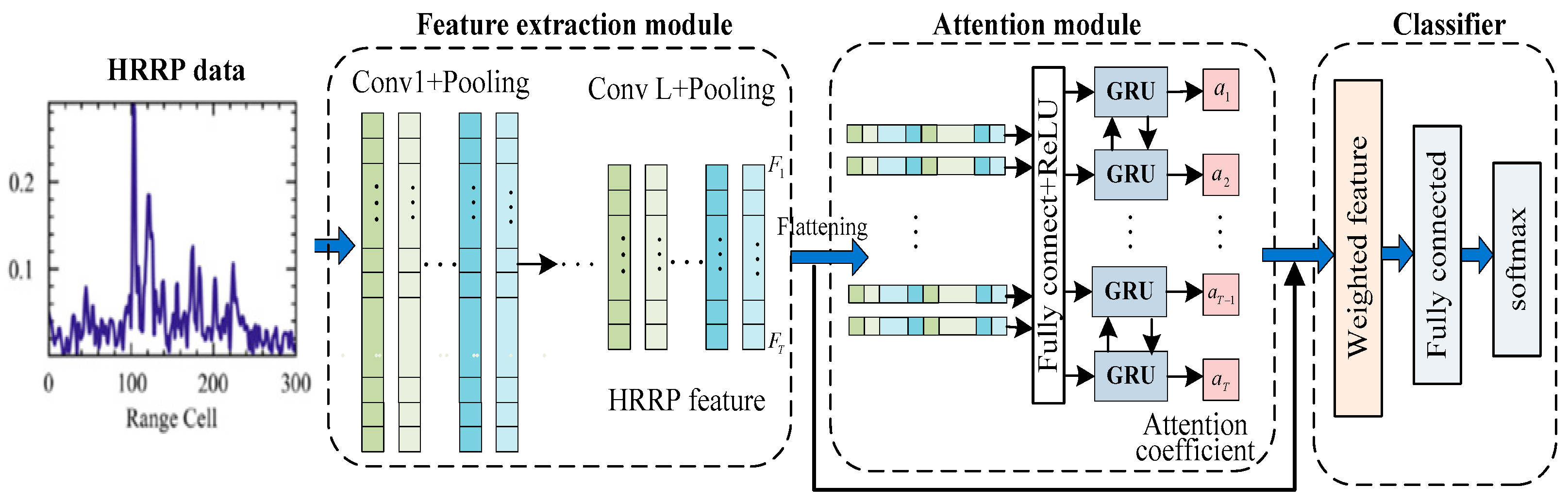

- CNN-based methods for HRRP-RATR

- (2)

- AE-based methods for HRRP-RATR

- (3)

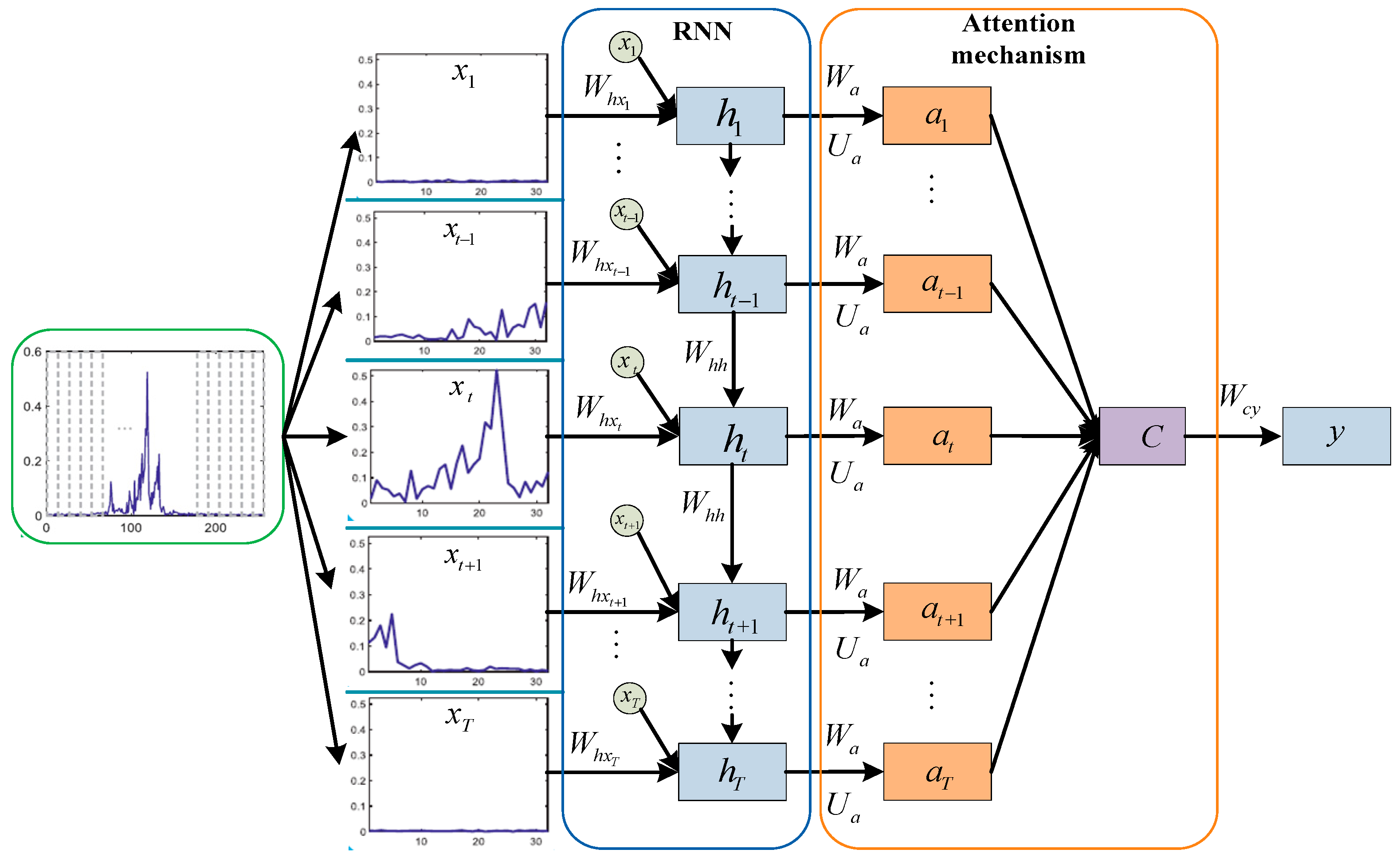

- RNN-based methods for HRRP-RATR

- (4)

- Improved deep learning methods for HRRP-RATR

- (a)

- Attention mechanism

- (b)

- Network fusion methods

- (c)

- Imbalanced and open-ended data distribution

3.4. Deep Learning for SAR-ATR

- (1)

- Semantic feature extraction and optimization methods of SAR images

- (2)

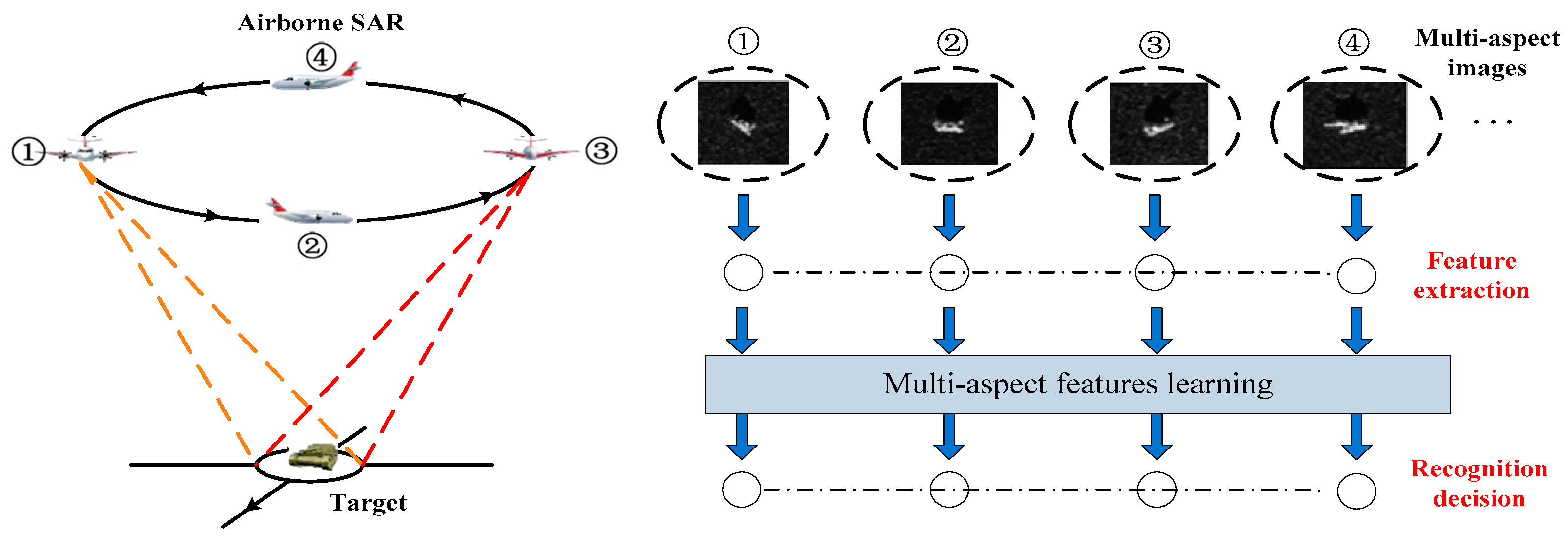

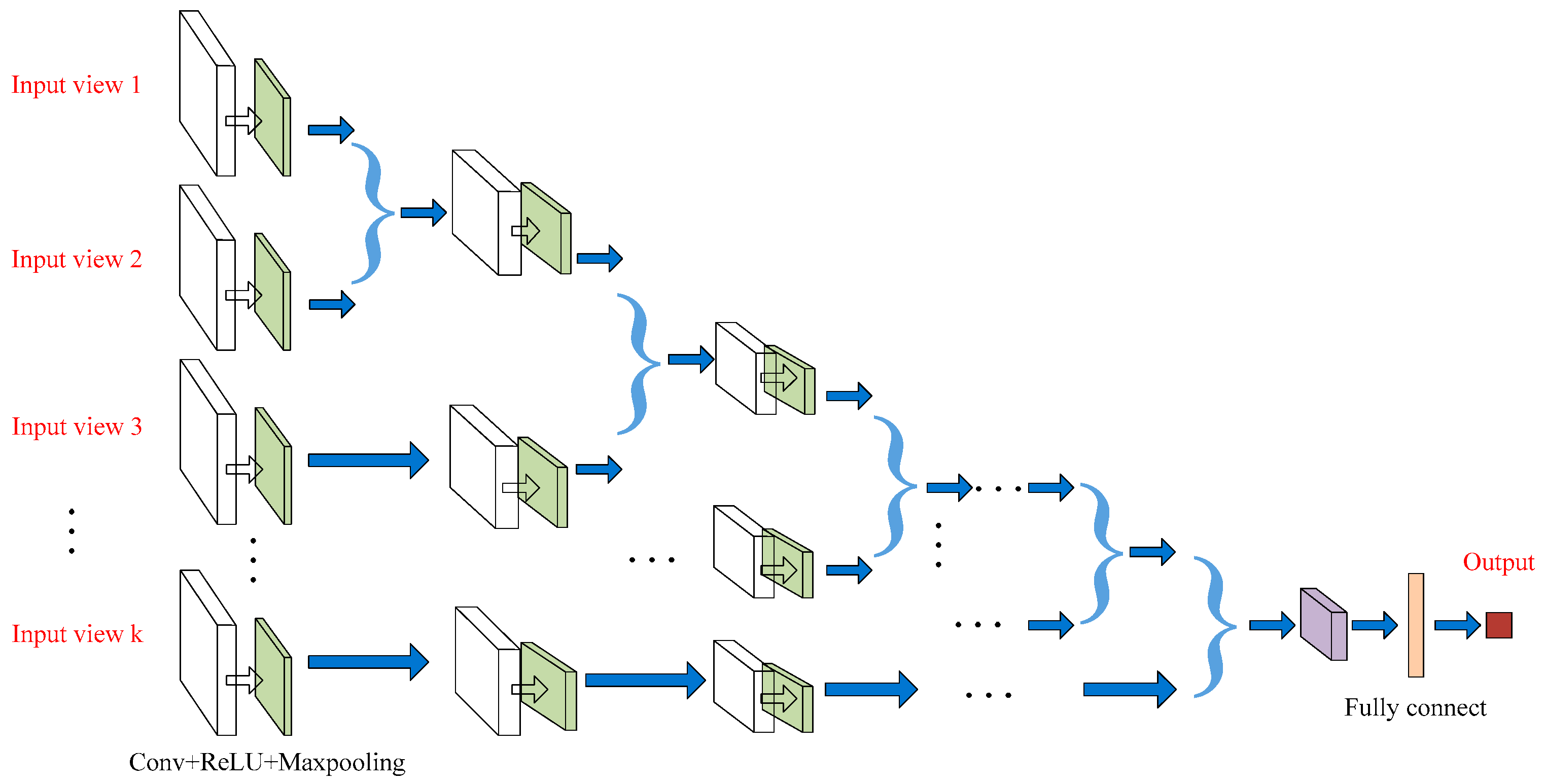

- Multi-aspect SAR-ATR methods

- (3)

- SAR-ATR methods based on small-sample dataset

- (a)

- Data augmentation

- (b)

- Generative adversarial network

- (c)

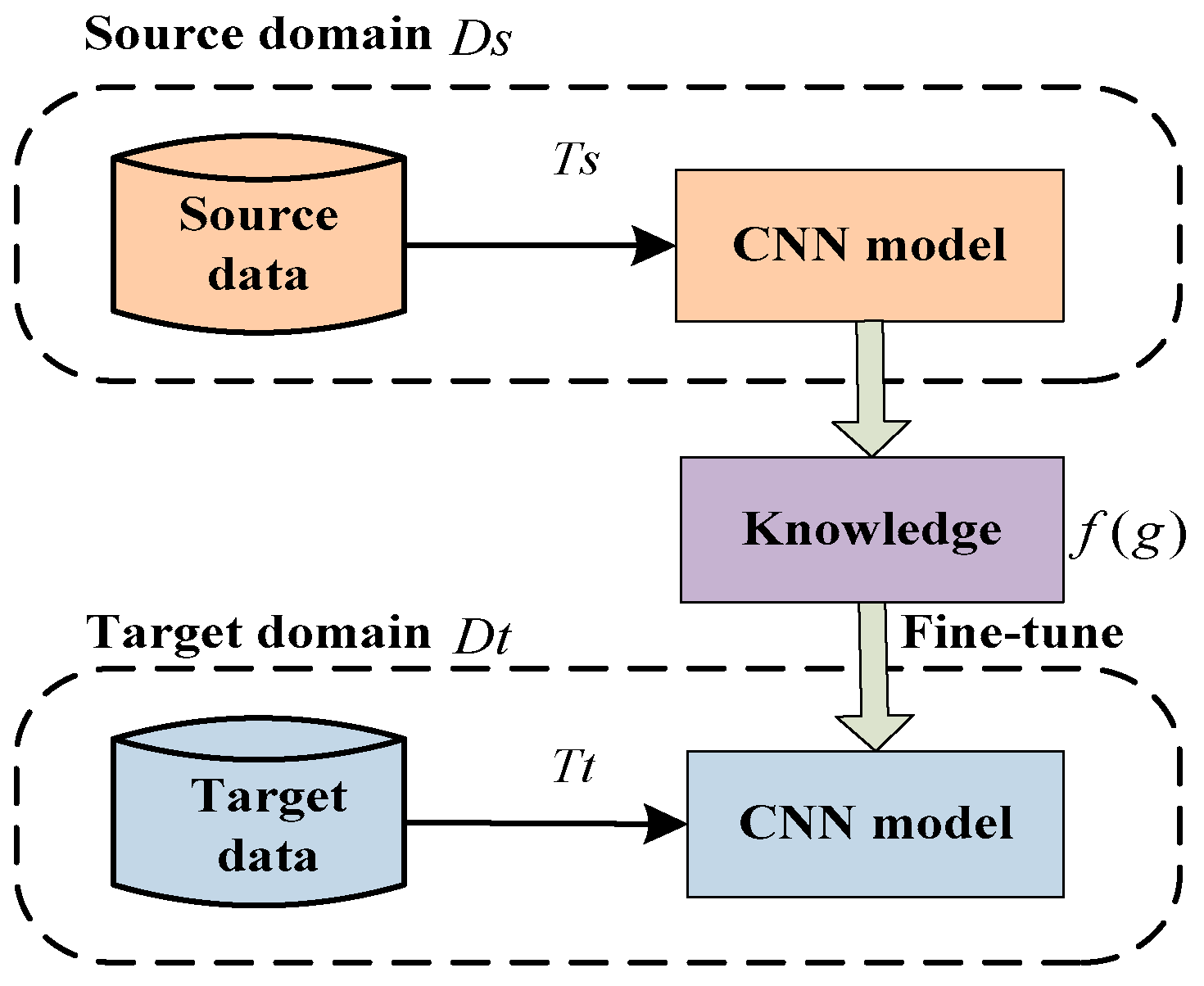

- Transfer-learning-based methods

- (d)

- Metric learning

- (4)

- SAR-ATR methods based on multi-feature fusion

3.5. Deep Learning for Other Radar-Target-Characteristic-Based RATR

3.6. Summary of Deep Learning Methods for RATR

4. Datasets for RATR

4.1. Dataset Descriptions

- (1) MSTAR dataset

- (2) OpenSARShip dataset

- (3) FUSAR_Ship dataset

4.2. Summary of Datasets for RATR

5. Challenges and Opportunities

- (1) Radar target characteristic analysis

- (2) RATR methods based on deep learning

- (3) Insufficient RATR datasets

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Skolnik, M.I. Introduction to Radar Systems; McGraw-Hill: New York, NY, USA, 1980. [Google Scholar]

- Bhanu, B. Automatic Target Recognition: State of the Art Survey. IEEE Trans. Aerosp. Electron. Syst. 1986, 22, 364–379. [Google Scholar] [CrossRef]

- Cohen, M.N. Survey of Radar-based Target Recognition Techniques. Int. Soc. Opt. Photonics 1991, 1470, 233–242. [Google Scholar]

- Tait, P. Introduction to Radar Target Recognition; Institution of Electrical Engineers: New York, NY, USA, 2005; p. 432. [Google Scholar]

- Chen, V.C. The Micro-Doppler Effect in Radar (Artech House Radar Series), 2nd ed.; Artech House: Norwood, MA, USA, 2019. [Google Scholar]

- Jacobs, S.P.; O’Sullivan, J.A. Automatic Target Recognition Using Sequences of High-resolution Radar Range Profiles. IEEE Trans. Aerosp. Electron. Syst. 2000, 36, 364–381. [Google Scholar] [CrossRef]

- El-Darymli, K.; Gill, E.; McGuire, P.; Poewr, D.; Moloney, C. Automatic Target Recognition in Synthetic Aperture Radar Imagery: A State-of-the-Art Review. IEEE Access 2016, 4, 6014–6058. [Google Scholar] [CrossRef] [Green Version]

- Pei, J.F.; Huang, Y.L.; Sun, Z.C.; Zhang, Y.; Yang, J.Y.; Yeo, T. Multiview Synthetic Aperture Radar Automatic Target Recognition Optimization: Modeling and Implementation. IEEE Trans. Geosci. Remote Sens. 2018, 56, 6425–6439. [Google Scholar] [CrossRef]

- Pastina, D.; Spina, C. Multi-feature Based Automatic Recognition of Ship Targets in ISAR. In Proceedings of the IEEE Radar Conference, Oklahoma City, OK, USA, 23–27 April 2018; IEEE Press: Rome, Italy, 2008. [Google Scholar]

- Zhang, R.; Xia, J.; Tao, X. A Novel Proximal Support Vector Machine and Its Application in Radar Target Recognition. In Proceedings of the Chinese Control Conference, Zhangjiajie, China, 26–31 July 2007; pp. 513–515. [Google Scholar]

- Yu, X.; Wang, X. Kernel Uncorrelated Neighborhood Discriminative Embedding for Radar Target Recognition. Electron. Lett. 2008, 44, 154–155. [Google Scholar] [CrossRef]

- Du, L.; Liu, H.; Bao, Z. Radar HRRP Statistical Recognition: Parametric Model and Model Selection. IEEE Trans. Signal Process. 2008, 56, 1931–1944. [Google Scholar] [CrossRef]

- Byi, M.F.; Demers, J.T.; Rietman, E.A. Using a Kernel Adatron for Object Classification with RCS Data. arXiv 2010, arXiv:1005.5337. [Google Scholar]

- Long, T.; Liang, Z.; Liu, Q. Advanced Technology of High-Resolution Radar: Target Detection, Tracking, Imaging, and Recognition. Sci. China Inf. Sci. 2019, 62, 40301. [Google Scholar] [CrossRef] [Green Version]

- Haykin, S. Cognitive Radar: A Way of the Future. IEEE Signal Process. Mag. 2006, 23, 30–40. [Google Scholar] [CrossRef]

- LeCun, Y.; Bengio, Y.; Hinton, G. Deep learning. Nature 2015, 521, 436–444. [Google Scholar] [CrossRef]

- Mason, E.; Yonel, B.; Yazici, B. Deep Learning for Radar. In Proceedings of the IEEE Radar Conference, Seattle, WA, USA, 8–12 May 2017. [Google Scholar] [CrossRef]

- Jiang, W.; Ren, Y.; Liu, Y.; Leng, J. Artificial Neural Networks and Deep Learning Techniques Applied to Radar Target Detection A Review. Electronics 2022, 11, 156. [Google Scholar] [CrossRef]

- Goodfellow, I.; Bengio, Y.; Courville, A. Deep Learning; The MIT Press: Cambridge, UK, 2016. [Google Scholar]

- Mustaqeem; Ishaq, M.; Kwon, S. A CNN-Assisted deep echo state network using multiple Time-Scale dynamic learning reservoirs for generating Short-Term solar energy forecasting. Sustain. Energy Technol. Assess. 2022, 52, 102275. [Google Scholar] [CrossRef]

- Hinton, G.E.; Osindero, S.; The, Y.W. A Fast Learning Algorithm for Deep Belief Nets. Neural Comput. 2006, 18, 1527–1554. [Google Scholar] [CrossRef]

- Ricci, R.; Balleri, A. Recognition of Humans Based on Radar Micro-Doppler Shape Spectrum Features. IET Radar Sonar Navig. 2015, 9, 1216–1223. [Google Scholar] [CrossRef]

- Jokanovic, B.; Amin, M. Fall Detection using Deep Learning in Range-Doppler Radars. IEEE Trans Trans. Aerosp. Electron. Syst. 2018, 54, 180–189. [Google Scholar] [CrossRef]

- Tang, T.; Wang, C.; Gao, M. Radar Target Recognition Based on Micro-Doppler Signatures Using Recurrent Neural Network. In Proceedings of the IEEE 4th International Conference on Electronics Technology, Chengdu, China, 7–10 May 2021. [Google Scholar] [CrossRef]

- Kim, Y.; Moon, T. Human Detection and Activity Classification based on Micro-Dopplers using Deep Convolutional of Neural Networks. IEEE Geosci. Remote Sens. Lett. 2016, 13, 2–8. [Google Scholar] [CrossRef]

- Fu, H.X.; Li, Y.; Wang, Y.C.; Peng, L. Maritime Ship Targets Recognition with Deep Learning. In Proceedings of the 37th Chinese Control Conference, Wuhan, China, 25–27 July 2018. [Google Scholar]

- Arabshahi, P.; Tillman, M. Development and Performance Analysis of a Class of Intelligent Target Recognition Algorithms. In Proceedings of the 15th IEEE International Conference on Fuzzy Systems, New Orleans, LA, USA, 11 October 1996. [Google Scholar]

- Han, J.; He, M.; Mao, Y. A New Method for Recognition for Recognizing Radar Radiating Source. In Proceedings of the International Conference on Wavelet Analysis and Pattern Recognition, Beijing, China, 2–4 November 2007. [Google Scholar]

- Zyweck, A.; Bogner, R.E. Radar Target Classification of Commercial Aircraft. IEEE Trans. Aerosp. Electron. Syst. 1996, 32, 598–606. [Google Scholar] [CrossRef]

- Lee, K.; Wang, L.; Ou, J. An Efficient Algorithm for the Radar Recognition of Ships on the Sea Surface. In Proceedings of the 2007 International Symposium of MTS/IEEE Oceans, Vancouver, BC, Canada, 29 September–4 October 2007; pp. 1–6. [Google Scholar]

- Shuley, N.; Lui, H. Sampling Procedures for Resonance Based Radar Target Identification. IEEE Trans. Antenna Propag. 2008, 5, 1487–1491. [Google Scholar]

- Bell, M.; Grubbs, R. JEM modeling and measurement for radar target identification. IEEE Trans. Aerosp. Electron. Syst. 1993, 29, 73–87. [Google Scholar] [CrossRef]

- Chen, V.; Li, F.; Ho, S. Micro-Doppler Effect in Radar Phenomenon, Model and Simulation Study. IEEE Trans. Aerosp. Electron. Syst. 2006, 42, 2–21. [Google Scholar] [CrossRef]

- Blaricum, M.L.; Mittra, R.A. Technique for Extracting the Poles and Residues of a System Directly from Its Transient Response. IEEE Trans. Antennas Propag. 1975, 23, 777–781. [Google Scholar] [CrossRef]

- Jain, V.K.; Sarkar, T.; Weiner, D. Rational modeling by Pencil-of-Function Method. IEEE Trans. Acoust. Speech Signal Process. 1983, 31, 564–573. [Google Scholar] [CrossRef]

- Hua, Y.B.; Sarkar, T.K. Generalized Pencil-of-Function Method for Extracting Poles of an EM System from Its Transient Response. IEEE Trans. Antennas Propag. 1989, 37, 229–234. [Google Scholar] [CrossRef] [Green Version]

- Chen, K.M. Radar Wave Synthesis Method: A New Radar Detection Scheme. IEEE Trans. Antennas Propag. 1981, 29, 55–565. [Google Scholar]

- Cameron, W.L.; Leung, L.K. Feature Motivated Polarization Scattering Matrix Decomposition. In Proceedings of the IEEE International Radar Conference, Arlington, VA, USA, 7–10 May 1990; pp. 549–557. [Google Scholar]

- Chamberlain, N.F. Syntactic Classification of Radar Targets Using Polarimetric Signatures. In Proceedings of the IEEE International Conference on Systems Engineering, Pittsburgh, PA, USA, 9–11 August 1990. [Google Scholar]

- Xiao, J.; Nehorai, A. Polarization Optimization for Scattering Estimation in Heavy Clutter. In Proceedings of the IEEE International Conference on Acoustics, Speech and Signal Processing, Las Vegas, NV, USA, 31 March–4 April 2008; pp. 1473–1476. [Google Scholar]

- Gao, Q.; Liu, J.; Wu, R. Novel Method for Automatic Target Recognition Based on High Resolution Range Profiles. J. Civ. Aviat. Univ. China 2002, 20, 1–4. [Google Scholar]

- Li, H.J.; Yang, S.H. Using Range Profiles as Feature Vectors to Identify Aerospace Objects. IEEE Trans. Antennas Propag. 1993, 41, 261–268. [Google Scholar] [CrossRef]

- Turnbaugh, M.A.; Bauer, K.W.; Oxley, M.E.; Miller, J.O. HRR Signature Classification Using Syntactic Pattern Recognition. In Proceedings of the IEEE Aerospace Conference, Big Sky, MT, USA, 1–8 March 2008; pp. 1–9. [Google Scholar]

- Mishra, A.K.; Mulgrew, B. Bistatic SAR ATR. IET Radar Sonar Navig. 2007, 6, 459–469. [Google Scholar] [CrossRef]

- Yang, W.; Zou, T.Y.; Dai, D.X. Supervised Land-cover Classification of TerraSAR-X Imagery over Urban Areas using Extremely Randomized Clustering Forests. In Proceedings of the 2009 Joint Urban Remote Sensing Event, Shanghai, China, 20–22 May 2009. [Google Scholar]

- Lowe, D.G. Object Recognition from Local Scale-invariant Features. In Proceedings of the Seventh IEEE International Conference on Computer Vision, Kerkyra, Greece, 20–27 September 1999. [Google Scholar]

- Dalal, N.; Triggs, B. Histograms of Oriented Gradients for Human Detection. In Proceedings of the 2005 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR05), San Diego, CA, USA, 20–25 June 2005; pp. 886–893. [Google Scholar]

- Saidi, M.; Hoeltzener, D.; Toumi, A. Automatic Recognition of ISAR Images: Target Shapes Features Extraction. In Proceedings of the 3rd International Conference on Information and Communication Technologies: From Theory to Applications, Damascus, Syria, 7–11 April 2008; pp. 1–6. [Google Scholar]

- Feng, S.; Lin, Y.; Wang, Y.; Teng, F.; Hong, W. 3D Point Cloud Reconstruction Using Inversely Mapping and Voting from Single Pass CSAR Images. Remote Sens. 2021, 13, 3534. [Google Scholar] [CrossRef]

- Li, Y.; Yin, Q.; Lin, Y.; Hong, W. Anisotropy Scattering Detection from Multiaspect Signatures of Circular Polarimetric SAR. IEEE Geosci. Remote Sens. Lett. 2018, 15, 1575–1579. [Google Scholar] [CrossRef]

- Lin, Y.; Liu, Y.; Wang, Y.; Ye, S.; Zhang, Y.; Li, Y.; Li, W.; Qu, H.; Hong, W. Frequency Domain Panoramic Imaging Algorithm for Ground-Based ArcSAR. Sensors 2020, 20, 7027. [Google Scholar] [CrossRef]

- Wang, Y.; Song, Y.; Lin, Y.; Li, Y.; Hong, W. Interferometric DEM-Assisted High Precision Imaging Method for ArcSAR. Sensors 2019, 19, 2921. [Google Scholar] [CrossRef] [Green Version]

- Zhang, H.; Lin, Y.; Teng, F.; Hong, W. A Probabilistic Approach for Stereo 3D Point Cloud Reconstruction from Airborne Single-Channel Multi-Aspect SAR Image Sequences. Remote Sens. 2022, 14, 5715. [Google Scholar] [CrossRef]

- Wang, Y.; Zhang, Q.; Lin, Y.; Zhao, Z.; Li, Y. Multi-phase-center Sidelobe Suppression Method for Circular GBSAR Based on Sparse Spectrum. IEEE Access 2020, 8, 133802–133816. [Google Scholar] [CrossRef]

- Teng, F.; Lin, Y.; Wang, Y.; Hong, W. An Anisotropic Scattering Analysis Method Based on the Statistical Properties of Multi-Angular SAR Images. Remote Sens. 2020, 12, 2152. [Google Scholar] [CrossRef]

- Lin, Y.; Zhang, L.; Wei, L.; Zhang, H.; Feng, S.; Wang, Y.; Hong, W. Research on Full-aspect Three-dimensional SAR Imaging Method for Complex Structural Facilities without Prior Model. J. Radars 2022, 11, 909–919. [Google Scholar]

- Zhou, J.; Zhao, H. Global Scattering Center Model Extraction of Radar Targets Based on Wideband Measurements. IEEE Trans. Antennas Propag. 2008, 56, 2051–2060. [Google Scholar] [CrossRef]

- Haykin, S. Neural Networks and Learning Machines; Pearson Education Inc.: Upper Saddle River, NJ, USA, 2009. [Google Scholar]

- Bi, F.; Hou, J.; Wang, Y.; Chen, J.; Wang, Y. Land Cover Classification of Multispectral Remote Sensing Images Based on Time-Spectrum Association Features and Multikernel Boosting Incremental Learning. J. Appl. Remote Sens. 2019, 13, 044510. [Google Scholar] [CrossRef]

- Hinton, G.E.; Salakhutdinov, R.R. Reducing the Dimensionality of Data with Neural Networks. Science 2006, 313, 504–507. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Mikolov, T.; Karafiat, M.; Burget, L.; Cernock, J.; Khudanpur, S. Recurrent Neural Network Based Language Model. In Proceedings of the International Speech Communication Association, Makuhari, Chiba, Japan, 6–10 September 2015. [Google Scholar]

- Vincent, P.; Larochelle, H.; Bengio, Y.; Manzago, P.A. Extracting and Composing Robust Features with Denoising Autoencoders. In Proceedings of the International Conference on Machine Learning (ICML), Helsinki, Finland, 5–9 July 2008; pp. 1096–1103. [Google Scholar]

- Wang, Y.; Feng, C.; Zhang, Y.; Ge, Q. Classification of Space Targets with Micro-motion Based on Deep CNN. In Proceedings of the IEEE 2nd International Conference on Electronic Information and Communication Technology (ICEICT), Harbin, China, 20–22 January 2019; pp. 557–561. [Google Scholar]

- Han, L.; Feng, C. Micro-Doppler-based Space Target Recognition with a One-Dimensional Parallel Network. Int. J. Antennas Propag. 2020, 2020, 8013802. [Google Scholar] [CrossRef]

- Garcia, A.J.; Aouto, A.; Lee, J.; Kim, D. CNN-32DC: An Improved Radar-based Drone Recognition System Based on Convolutional Neural Network. ICT Express 2022, 8, 606–610. [Google Scholar] [CrossRef]

- Park, J.; Park, J.-S.; Park, S.-O. Small Drone Classification with Light CNN and New Micro-Doppler Signature Extraction Method Based on A-SPC Technique. arXiv 2020, arXiv:2009.14422. [Google Scholar] [CrossRef]

- Rahman, S.; Robertson, D.A. Classification of Drones and Birds Using Convolutional Neural Networks Applied to Radar Micro-Doppler Spectrogram Images. IET Radar Sonar Navig. 2020, 14, 653–661. [Google Scholar] [CrossRef] [Green Version]

- Hanif, A.; Muaz, M. Deep Learning Based Radar Target Classification Using Micro-Doppler Features. In Proceedings of the 17th International Conference on Aerospace and Engineering (ICASE), Las Palmas de Gran Canaria, Spain, 17–22 February 2018. [Google Scholar] [CrossRef]

- Choi, B.; Oh, D. Classification of Drone Type Using Deep Convolutional Neural Networks Based on Micro-Doppler Simulation. In Proceedings of the International Symposium on Antennas and Propagation (ISAP), Busan, Republic of Korea, 23–26 October 2018; pp. 1–2. [Google Scholar]

- Vanek, S.; Gotthans, J.; Gotthans, T. Micro-Doppler Effect and Determination of Rotor Blades by Deep Neural Networks. In Proceedings of the 32nd International Conference Radio Elektronika, Kosice, Slovakia, 21–22 April 2022. [Google Scholar] [CrossRef]

- Angelov, A.; Robertson, A. Murray-Smith R, and Fioranelli F, Practical Classification of Different Moving Targets Using Automotive Radar and Deep Neural Networks. IET Radar Sonar Navig. 2018, 12, 1082–1089. [Google Scholar] [CrossRef] [Green Version]

- Wu, Q.; Gao, T.; Lai, Z.; Li, D. Hybrid SVM-CNN Classification Technique for Human-vehicle Targets in an Automotive LFMCW Radar. Sensors 2020, 20, 3504. [Google Scholar] [CrossRef]

- Zhu, L.; Zhang, S.; Wang, X.; Chen, S.; Zhao, H.; Wei, D. Multilevel Recognition of UAV-to-Ground Targets Based on Micro-Doppler Signatures and Transfer Learning of Deep Convolutional Neural Networks. IEEE Trans. Instrum. Meas. 2021, 70, 2503111. [Google Scholar] [CrossRef]

- Zhu, L.; Zhang, S.; Chen, K.; Chen, S.; Wang, X.; Wei, D.; Zhao, H. Low-SNR Recognition of UAV-to-Ground Targets Based on Micro-Doppler Signatures Using Deep Convolutional Denoising Encoders and Deep Residual Learning. IEEE Trans. Geosci. Remote Sens. 2022, 60, 5106913. [Google Scholar] [CrossRef]

- Jiang, W.; Ren, Y.; Liu, Y.; Wang, Z.; Wang, X. Recognition of Dynamic Hand Gesture Based on Mm-wave FMCW Radar Micro-doppler Signatures. In Proceedings of the IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Toronto, ON, Canada, 6–11 June 2021. [Google Scholar] [CrossRef]

- Kong, F.; Deng, J.; Fan, Z. Gesture Recognition System Based on Ultrasonic FMCW and ConvLSTM model. Measurement 2022, 190, 110743. [Google Scholar] [CrossRef]

- Wang, M.; Zhang, Y.; Cui, G. Human Motion Recognition Exploiting Radar with Stacked Recurrent Neural Network. Digit. Signal Process. 2019, 87, 125–131. [Google Scholar] [CrossRef]

- Jia, Y.; Guo, Y.; Wang, G.; Song, R.; Cui, G.; Zhong, X. Multi-frequency and Multi-domain Human Activity Recognition Based on SFCW Radar using Deep Learning. Neurocomputing 2021, 444, 274–287. [Google Scholar] [CrossRef]

- Li, X.; He, Y.; Fioranelli, F.; Jing, X.; Yarovoy, A.; Yang, Y. Human Motion Recognition with Limited Radar Micro-Doppler Signatures. IEEE Trans. Geosci. Remote Sens. 2021, 59, 6586–6599. [Google Scholar] [CrossRef]

- Chakraborty, M.; Kumawat, H.C.; Dhavale, S.V. Application of DNN for Radar Micro-Doppler Signature-based Human Suspicious Activity Recognition. Pattern Recognit. Lett. 2022, 162, 1–6. [Google Scholar] [CrossRef]

- Persico, A.R.; Clemente, C.; Gaglione, D. On Model, Algorithms, and Experiment for Micro-Doppler-based Recognition of Ballistic Targets. IEEE Trans. Aerosp. Electron. Syst. 2017, 53, 1088–1108. [Google Scholar] [CrossRef] [Green Version]

- Gao, H.; Xie, L.; Wen, S.; Kuang, Y. Micro-Doppler Signature Extraction from Ballistic Target with Micro-motions. IEEE Trans. Aerosp. Electron. Syst. 2010, 46, 1969–1982. [Google Scholar] [CrossRef]

- Huizing, A.; Heiligers, M.; Dekker, B.; Wit, J.; Cifola, L.; Harmanny, R. Deep Learning for Classification of Mini-UAVs Using Micro-Doppler Spectrograms in Cognitive Radar. IEEE Aerosp. Electron. Syst. Mag. 2019, 34, 46–56. [Google Scholar] [CrossRef]

- Zhang, Q.; Zeng, Y.; He, Y.; Luo, Y. Avian Detection and Identification with High-resolution radar. In Proceedings of the 2008 IEEE Radar Conference, Rome, Italy, 26–30 May 2008; pp. 1–6. [Google Scholar]

- Zhu, F.; Luo, Y.; Zhang, Q.; Feng, Y.; Bai, Y. ISAR Imaging for Avian Species Identification with Frequency-stepped Chirp Signals. IEEE Geosci. Remote Sens. Lett. 2010, 7, 151–155. [Google Scholar] [CrossRef]

- Amiri, R.; Shahzadi, A. Micro-Doppler Based Target Classification in Ground Surveillance Radar Systems. Digit. Signal Process. 2020, 101, 102702. [Google Scholar] [CrossRef]

- Chen, X.; Dong, Y.; Li, X.; Guan, J. Modeling of Micromotion and Analysis of Properties of Rigid Marine Targets. J. Radars 2015, 4, 630–638. [Google Scholar]

- Chen, X.; Guan, J.; Zhao, Z.; Ding, H. Micro-Doppler Signatures of Sea Surface Targets and Applications to Radar Detection. In Proceedings of the IEEE International Geoscience and Remote Sensing Symposium (IGARSS), Beijing, China, 10–15 July 2016. [Google Scholar] [CrossRef]

- Damarla, T.; Bradley, M.; Mehmood, A.; Sabatier, J. Classification of Animals and People Ultrasonic Signatures. IEEE Sens. J. 2013, 13, 1464–1472. [Google Scholar] [CrossRef]

- Shi, X.R.; Zhou, F.; Liu, L.; Bo, Z.; Zhang, Z. Textural Feature Extraction Based on Time-frequency Spectrograms of Humans and Vehicles. IET Radar Sonar Navig. 2015, 9, 1251–1259. [Google Scholar] [CrossRef]

- Amin, M.G.; Ahmad, F.; Zhang, Y.D.; Boashash, B. Human Gait Recognition with Cane Assistive Device Using Quadratic Time Frequency Distributions. IET Radar Sonar Navig. 2015, 9, 1224–1230. [Google Scholar] [CrossRef] [Green Version]

- Saho, K.; Fujimoto, M.; Masugi, M.; Chou, L.S. Gait Classification of Young Adults, Elderly Non-fallers, and Elderly Fallers Using Micro-Doppler Radar Signals: Simulation Study. IEEE Sens. J. 2017, 17, 2320–2321. [Google Scholar] [CrossRef]

- Mikhelson, I.V.; Bakhtiari, S.; Elmer, T.W., II; Sahakian, A.V. Remote Sensing of Heart Rate and Patterns of Respiration on a Stationary Subject Using 94-GHz Millimeter-wave Interferometry. IEEE Trans. Biomed. Eng. 2011, 58, 1671–1677. [Google Scholar] [CrossRef] [PubMed]

- Xu, Z.W.; Wu, Y.J.; Lu, X.Q. Time-frequency Analysis of Terahertz Radar Signal for Vital Signs Sensing Based on Radar Sensor. Int. J. Sens. Netw. 2013, 13, 241–253. [Google Scholar] [CrossRef]

- Lunden, J.; Koivunen, V. Deep Learning for HRRP-based Target Recognition in Multi-static Radar System. In Proceedings of the 2016 IEEE Radar Conference, Philadelphia, PA, USA, 2–6 May 2016; pp. 1–6. [Google Scholar]

- Guo, C.; He, Y.; Wang, H.P.; Jian, T.; Sun, S. Radar HRRP Target Recognition Based on Deep One-Dimensional Residual-Inception Network. IEEE Access 2019, 7, 9191–9204. [Google Scholar] [CrossRef]

- Guo, C.; Jian, T.; Xu, C.; He, Y.; Sun, S. Radar HRRP Target Recognition Based on Deep Multi-Scale 1D Convolutional Neural Network. J. Electron. Inf. Technol. 2019, 41, 1302–1309. [Google Scholar]

- Guo, C.; Wang, H.P.; Jian, T.; He, Y.; Zhang, X. Radar Target Recognition Based on Feature Pyramid Fusion Lightweight CNN. IEEE Access 2019, 7, 51140–51149. [Google Scholar] [CrossRef]

- Liao, K.; Si, J.; Zhu, F.; He, X. Radar HRRP Target Recognition Based on Concatenated Deep Neural Networks. IEEE Access 2018, 6, 29211–29218. [Google Scholar] [CrossRef]

- Pan, M.; Jiang, J.; Li, Z.; Cao, J.; Zhou, T. Radar HRRP recognition Based on Discriminant Deep Autoencoders with Small Training Data Size. Electron. Lett. 2016, 52, 1725–1727. [Google Scholar]

- Feng, B.; Chen, B.; Liu, H.W. Radar HRRP Target Recognition with Deep Networks. Pattern Recognit. 2017, 61, 379–393. [Google Scholar] [CrossRef]

- Pan, M.; Jiang, J.; Kong, Q.; Shi, J.; Zhou, T. Radar HRRP Target Recognition Based on t-SNE Segmentation and Discriminant Deep Belief Network. IEEE Geosci. Remote Sens. Lett. 2017, 14, 1609–1613. [Google Scholar] [CrossRef]

- Zhao, F.; Liu, Y.; Huo, K.; Zhang, S.; Zhang, Z. Radar HRRP Target Recognition Based on Stacked Autoencoder and Extreme Learning Machine. Sensors 2018, 18, 173–187. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Zhao, F.; Liu, Y.; Huo, K. A Radar Target Classification Algorithm Based on Dropout Constrained Deep Extreme Learning Machine. J. Radars 2018, 7, 613–621. [Google Scholar]

- Yu, S.H.; Xie, Y.J. Application of a Convolutional Autoencoder to Half Space Radar HRRP Recognition. In Proceedings of the 2018 International Conference on Wavelet Analysis and Pattern Recognition (ICWAPR), Chengdu, China, 15–18 July 2018. [Google Scholar]

- Xu, B.; Chen, B.; Liu, H.; Jin, L. Attention-Based Recurrent Neural Network Model for Radar High-Resolution Range Profile Target Recognition. J. Electron. Inf. Technol. 2016, 38, 2988–2995. [Google Scholar]

- Xu, B.; Chen, B.; Wan, J.W.; Liu, H.; Lin, P. Target-Aware Recurrent Attentional Network for Radar HRRP Target Recognition. Signal Process. 2018, 155, 268–280. [Google Scholar] [CrossRef]

- Xu, B.; Chen, B.; Liu, J. Radar HRRP Target Recognition by the Bidirectional LSTM Model. J. Xidian Univ. Nat. Sci. 2019, 46, 29–34. [Google Scholar]

- Du, C.; Tian, L.; Chen, B.; Zhang, L.; Chen, W.; Liu, H. Region-factorized Recurrent Attentional Network with Deep Clustering for Radar HRRP Target Recognition. Signal Process. 2021, 183, 108010. [Google Scholar] [CrossRef]

- Chen, J.; Du, L.; Guo, G.; Yin, L.; Wei, D. Target-attentional CNN for Radar Automatic Target Recognition with HRRP. Signal Process. 2022, 196, 108497. [Google Scholar] [CrossRef]

- Song, Y.; Zhou, Q.; Yang, W.; Wang, Y.; Hu, C.; Hu, X. Multi-View HRRP Generation with Aspect-Directed Attention GAN. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2022, 15, 7643–7656. [Google Scholar] [CrossRef]

- Zhang, Y.; Wu, Z.; Wei, S.; Zhang, Y. Spatial Target Recognition Based on CNN and LSTM. In Proceedings of the 12th National Academic Conference on Signal and Intelligent Information Processing and Application, Hangzhou, China, 20–22 October 2018; pp. 1–4. [Google Scholar]

- Zhang, M.; Chen, B. Wavelet Autoencoder for Radar HRRP Target Recognition with Recurrent Neural Network. In Proceedings of the International Conference on Intelligence Science and Big Data Engineering, LNCS, Lanzhou, China, 18–19 August 2018; pp. 262–275. [Google Scholar]

- Liu, Z.; Miao, Z.; Zhan, X.; Wang, J.; Gong, B.; Yu, S. Large-scale Long-tailed Recognition in an Open World. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019. [Google Scholar]

- Zhang, X.; Wang, W.; Zheng, X.; Wei, Y. A Novel Radar Target Recognition Method for Open and Imbalanced High-resolution Range Profile. Digit. Signal Process. 2021, 118, 103212. [Google Scholar] [CrossRef]

- Chen, S.; Wang, H. SAR Target Recognition based on Deep Learning. In Proceedings of the IEEE International Conference on Data Science & Advanced Analytics, Shanghai, China, 30 October–1 November 2014. [Google Scholar]

- Chen, S.; Wang, H.; Xu, F.; Jin, Y.Q. Target Classification Using the Deep Convolutional Networks for SAR Images. IEEE Trans. Geosci. Remote Sens. 2016, 54, 4806–4817. [Google Scholar] [CrossRef]

- Li, J.; Zhang, R.; Li, Y. Multiscale Convolutional Neural Network for the Detection of Built-up Areas in High Resolution SAR Images. In Proceedings of the 2016 IEEE International Geoscience and Remote Sensing Symposium (IGARSS), Beijing, China, 10–15 July 2016; pp. 910–913. [Google Scholar]

- Tian, Z.; Zhan, R.; Hu, J.; Zhang, J. Research on SAR Image Target Recognition Based on Convolutional Neural Network. J. Radars 2016, 5, 320–325. [Google Scholar]

- Wagner, S.A. SAR ATR by a Combination of Convolutional Neural Network and Support Vector Machines. IEEE Trans. Aerosp. Electron. Syst. 2016, 52, 2861–2872. [Google Scholar] [CrossRef]

- Housseini, A.E.; Toumi, A.; Khenchaf, A. Deep Learning for Target Recognition from SAR Images. In Proceedings of the Detection Systems Architectures & Technologies, Algiers, Algeria, 20–22 February 2017. [Google Scholar]

- Li, X.; Li, C.; Wang, P.; Men, Z.; Xu, H. SAR ATR Based on Dividing CNN into CAE and SNN. In Proceedings of the 2015 IEEE 5th Asia-Pacific Conference on Synthetic Aperture Radar (APSAR), Singapore, 1–4 September 2015; pp. 676–679. [Google Scholar]

- He, H.; Wang, S.; Yang, D.; Wang, S. SAR Target Recognition and Unsupervised Detection Based on Convolutional Neural Network. In Proceedings of the 2017 Chinese Automation Congress (CAC), Jinan, China, 20–22 October 2017; pp. 435–438. [Google Scholar]

- Zou, H.; Lin, Y.; Hong, W. Research on Multi-azimuth SAR Image Target Recognition using Deep Learning. Signal Process. 2018, 34, 513–522. [Google Scholar]

- Pei, J.; Huang, Y.; Huo, W.; Zhang, Y.; Yang, J.; Yeo, T. SAR Automatic Target Recognition based on Multi-view Deep Learning Framework. IEEE Trans. Geosci. Remote Sens. 2018, 56, 2196–2210. [Google Scholar] [CrossRef]

- Zhang, F.; Hu, C.; Yin, Q.; Li, W.; Li, H.; Hong, W. Multi-aspect-aware Bidirectional LSTM Networks for Synthetic Aperture Radar Target Recognition. IEEE Access 2017, 5, 26880–26891. [Google Scholar] [CrossRef]

- Zhao, P.; Liu, K.; Zou, H.; Zhen, X. Multi-stream Convolutional Neural Network for SAR Automatic Target Recognition. Remote Sens. 2018, 10, 1473. [Google Scholar] [CrossRef] [Green Version]

- Zhao, P.; Huang, L. Target Recognition Method for Multi-aspect Synthetic Aperature Radar Images Based on EfficientNet and BiGRU. J. Radars 2021, 10, 859–904. [Google Scholar]

- Furukawa, H. Deep Learning for Target Classification from SAR Imagery: Data Augmentation and Translation Invariance. IEICE Tech. Rep. 2017, 117, 13–17. [Google Scholar]

- Ding, J.; Chen, B.; Liu, H.; Huang, M. Convolutional Neural Network with Data Augmentation for SAR Target Recognition. IEEE Geosci. Remote Sens. Lett. 2016, 13, 364–368. [Google Scholar] [CrossRef]

- Odegaard, N.; Knapskog, A.O.; Cochin, C.; Louvigne, J. Classification of Ships using Real and Simulated Data in a Convolutional Neural Network. In Proceedings of the 2016 IEEE Radar Conference, Philadelphia, PA, USA, 2–6 May 2016. [Google Scholar]

- Zhang, M.; Cui, Z.; Wang, X.; Cao, Z. Data Augmentation Method of SAR Image Dataset. In Proceedings of the IGARSS 2018—2018 IEEE International Geoscience and Remote Sensing Symposium, Valencia, Spain, 22–27 July 2018. [Google Scholar]

- Zheng, C.; Jiang, X.; Liu, X. Semi-Supervised SAR ATR via Multi-Discriminator Generative Adversarial Network. IEEE Sens. J. 2019, 99, 7525–7533. [Google Scholar] [CrossRef]

- Chen, L.; Wu, H.; Cui, X.; Guo, Z.; Jia, Z. Convolution Neural Network SAR Image Target Recognition Based on Transfer Learning. Chin. Space Sci. Technol. 2018, 38, 45–51. [Google Scholar]

- Ren, S.; Suo, J.; Zhang, L. SAR Target Recognition Based on Convolution Neural Network and Transfer Learning. Electron. Opt. Control 2020, 27, 37–41. [Google Scholar]

- Wang, Z.L.; Xu, X.H.; Zhang, L. Automatic Target Recognition Based on Deep Transfer Learning of Simulated SAR Image. J. Univ. Chin. Acad. Sci. 2020, 37, 516–524. [Google Scholar]

- Huang, Z.; Pan, Z.; Lei, B. What, where, and how to transfer in SAR target recognition based on deep CNNs. IEEE Trans. Geosci. Remote Sens. 2020, 58, 2324–2336. [Google Scholar] [CrossRef] [Green Version]

- Lin, Z.; Ji, K.; Kang, M.; Leng, X.; Zou, H. Deep Convolutional Highway Unit Network for SAR Target Classification with Limited Labeled Training Data. IEEE Geosci. Remote Sens. Lett. 2017, 14, 1091–1095. [Google Scholar] [CrossRef]

- Wang, B.; Pan, Z.; Hu, Y.; Ma, W. SAR Target Recognition Based on Siamese CNN with Small Scale Dataset. Radar Sci. Technol. 2019, 17, 603–609. [Google Scholar]

- Pan, Z.X.; Bao, X.J.; Zhang, Y.T.; Wang, B.; Lei, B. Siamese Network Based Metric Learning for SAR Target Classification. In Proceedings of the IEEE International Geoscience and Remote Sensing Symposium, Yokohama, Japan, 28 July–2 August 2019; pp. 1342–1345. [Google Scholar]

- Wang, N.; Wang, Y.; Liu, H.; Zuo, Q.; He, J. Feature-Fused SAR Target Discrimination Using Multiple Convolutional Neural Networks. IEEE Geosci. Remote Sens. Lett. 2017, 14, 1695–1699.

- Wang, Y.L.; Xu, T.; Du, D.W. SAR Image Classification Method Based on Multi-Features and Convolutional Neural Network. Math. Pract. Knowl. 2020, 50, 140–147.

- Zhang, W.; Xu, Y.; Ni, J.; Shi, H. Image Target Recognition Method based on Multi-scale Block Convolutional Neural Network. J. Comput. Appl. 2016, 36, 1033–1038.

- Wu, Y. Research on SAR Target Recognition Algorithm Based on CNN; University of Electronic Science and Technology of China: Chengdu, China, 2020.

- Liu, J.; Gong, M.; Qin, K.; Zhang, P. A Deep Convolutional Coupling Network for Change Detection Based on Heterogeneous Optical and Radar Images. IEEE Trans. Neural Netw. Learn. Syst. 2016, 29, 545–559.

- Fan, T.; Liu, C.; Cui, T.J. Deep Learning of Raw Radar Echoes for Target Recognition. In Proceedings of the International Conference on Computational Electromagnetics, Chengdu, China, 26–28 March 2018.

- Iqbal, S.; Iqbal, U.; Hassan, S.A.; Saleem, S. Indoor Motion Classification Using Passive RF Sensing Incorporating Deep Learning. In Proceedings of the IEEE Vehicular Technology Conference, Porto, Portugal, 3–6 June 2018.

- Hang, H.; Meng, X.; Shen, J. RCS Recognition Method of Corner Reflectors Based on 1D-CNN. In Proceedings of the 2021 International Applied Computational Electromagnetics Society (ACES-China) Symposium, Chengdu, China, 28–31 July 2021.

- Wengrowski, E.; Purri, M.; Dana, K.; Huston, A. Deep CNNs as a Method to Classify Rotating Objects Based on Monostatic RCS. IET Radar Sonar Navig. 2019, 13, 1092–1100.

- Cao, X.; Yi, J.; Gong, Z.; Wan, X. Preliminary Study on Bistatic RCS of Measured Targets for Automatic Target Recognition. In Proceedings of the 13th International Symposium on Antennas, Propagation and EM Theory, Zhuhai, China, 1–4 December 2021.

- Yang, J.; Zhang, Z.; Mao, W. Identification and Micro-motion Parameter Estimation of Non-cooperative UAV Targets. Phys. Commun. 2021, 46, 101314.

- Ezuma, M.; Anjinappa, C.; Semkin, V.; Guvenc, I. Comparative Analysis of Radar-Cross-Section-Based UAV Recognition Techniques. IEEE Sens. J. 2022, 22, 17932–17949.

- The Sensor Data Management System, MSTAR Public Dataset [EB/OL]. Available online: https://www.sdms.afrl.af.mil/index.php?collection=mstar (accessed on 4 April 2009).

- Available online: https://opensar.sjtu.edu.cn/ (accessed on 30 May 2017).

- Huang, L.; Liu, B.; Li, B.; Guo, W.; Yu, W.; Zhang, Z.; Yu, W. OpenSARShip: A Dataset Dedicated to Sentinel-1 Ship Interpretation. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2018, 11, 195–208.

- Li, B.; Liu, B.; Huang, L.; Guo, W.; Zhang, Z.; Yu, W. OpenSARShip 2.0: A large volume dataset for deeper interpretation of ship targets in Sentinel-1 imagery. In Proceedings of the SAR in Big Data Era: Models, Methods and Applications (BIGSARDATA), Beijing, China, 13–14 November 2017.

- Hou, X.; Wei, A.; Song, Q.; Lai, J.; Wang, H.; Feng, X. FUSAR-Ship: Building a High-Resolution SAR-AIS Matchup Dataset of Gaofen-3 for Ship Detection and Recognition. Sci. China 2020, 63, 36–54.

- Szegedy, C.; Liu, W.; Jia, Y.; Sermanet, P.; Rabinovich, A. Going Deeper with Convolutions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 7–12 June 2015; pp. 1–9.

- Chen, J.; Du, L.; He, H.; Guo, Y. Convolutional Factor Analysis Model with Application to Radar Automatic Target Recognition. Pattern Recognit. 2019, 87, 140–156.

- Qosja, D.; Wagner, S.; Brüggenwirth, S. Benchmarking Convolutional Neural Network Backbones for Target Classification in SAR. In Proceedings of the IEEE Radar Conference, San Antonio, TX, USA, 1–5 May 2023.

- Copsey, K.; Webb, A. Bayesian Gamma Mixture Model Approach to Radar Target Recognition. IEEE Trans. Aerosp. Electron. Syst. 2003, 39, 1201–1217.

- Nebabin, V.G. Data Fusion: A Promising Way of Solving Radar Target Recognition Problems. JED 1996, 19, 78–80.

- Jiang, W.; Liu, Y.; Wei, Q.; Wang, W.; Ren, Y.; Wang, C. A High-resolution Radar Automatic Target Recognition Method for Small UAVs Based on Multi-feature Fusion. In Proceedings of the 2022 IEEE 25th International Conference on Computer Supported Cooperative Work in Design, Hangzhou, China, 4–6 May 2022.

- Li, Z.; Papson, S.; Narayanan, R.M. Data-Level Fusion of Multi-look Inverse Synthetic Aperture Radar Images. IEEE Trans. Geosci. Remote Sens. 2008, 46, 1394–1406.

- Hospedales, T.; Antoniou, A.; Micaelli, P.; Storkey, A. Meta-Learning in Neural Networks: A Survey. arXiv 2020, arXiv:2004.05439v1.

- Tian, L.; Chen, B.; Chen, W.; Xu, Y.; Liu, H. Domain-aware Meta Network for Radar HRRP Target Recognition with Missing Aspects. Signal Process. 2021, 187, 108167.

- Yu, X.; Liu, S.; Ren, H.; Zou, L.; Zhou, Y.; Wang, X. Transductive Prototypical Attention Network for Few-shot SAR Target Recognition. In Proceedings of the IEEE Radar Conference, San Antonio, TX, USA, 1–5 May 2023.

| Target Attribute | Commonly Used Robust Features |
|---|---|
| Motion characteristics | Target altitude, velocity, acceleration, ballistic coefficient, regional features |
| Micro-motion characteristics | Micro-motion period, spectrum distribution width, waveform entropy, instantaneous frequency, polarization scattering matrix, depolarization coefficient, maximum polarization direction angle, moment feature |
| Physical characteristics (structural feature) | Target size, radial length, scatter intensity, strong scattering centers, number of peaks, width of crest, high-order central moment, scattering center distribution, radial energy |
| Physical characteristics (image feature) | Target contour, area graph, peak feature, shadow feature, wavelet low-frequency feature, scattering center feature, texture feature, and semantic feature |

| Areas of Application | Target Classes | Methods | Radar | Accuracy | Ref. | Year |
|---|---|---|---|---|---|---|
| Space targets | Ballistic targets | AlexNet and SqueezeNet | N/A | 97.5% | [63] | 2019 |
| Space targets | Warhead and decoy | LSTM | Pulse-Doppler | 99% | [64] | 2020 |
| Air targets | Drones | CNN | FMCW | 96.86% | [65] | 2022 |
| Air targets | Three commercial small drones | Light CNN | FMCW | 97.14% | [66] | 2020 |
| Air targets | Drones and birds | CNN | FMCW | 94.4% | [67] | 2020 |
| Air targets | Fixed-wing aircraft and hexacopter | MobileNetV2 | FMCW | 99% | [68] | 2021 |
| Air targets | Drones | CNN | N/A | 93% | [69] | 2018 |
| Air targets | Helicopters with 3, 4, 6, 8 propeller blades | CNN | N/A | 95.8% | [70] | 2022 |
| Ground targets | Car, single and multiple people, and bicycle | DNNs | FMCW | 98.33% | [71] | 2018 |
| Ground targets | Pedestrians and vehicles | SVM-CNN | FMCW | 95% | [72] | 2020 |
| Ground targets | Pedestrians, wheeled and tracked vehicles | LeNet5 | CW | 95% | [73] | 2021 |
| Ground targets | Pedestrians, wheeled and tracked vehicles | DCDE + residual network | CW | >90% | [74] | 2022 |
| Human activities | Hand gestures | CNN | FMCW | 95.2% | [75] | 2021 |
| Human activities | Hand gestures | LSTM | FMCW | 85.7% | [76] | 2022 |
| Human activities | 6 human motions | LSTM | CW | 92.65% | [77] | 2019 |
| Human activities | 6 human motions | CNN + Sparse Autoencoder | SFCW | 96.42% | [78] | 2021 |
| Human activities | 6 human motions | CNN + Transfer learning | Pulse-Doppler | 96.7% | [79] | 2021 |
| Human activities | 6 suspicious actions | CNNs | CW | 98% | [80] | 2022 |

| Method Improvement | Specific Methods | Main Contributions | Dataset | Accuracy | Ref. | Year |
|---|---|---|---|---|---|---|
| Semantic feature extraction and optimization | Sparse autoencoder + CNN | Using a single layer of CNN to extract features. | MSTAR | 84.7% | [116] | 2015 |
| Semantic feature extraction and optimization | CNN without fully connected layers | Using sparsely connected convolution architecture to reduce overfitting. | MSTAR | 99% | [117] | 2016 |
| Semantic feature extraction and optimization | Multi-scale CNN | Extracting robust multi-scale and hierarchical features of built-up areas. | TerraSAR-X | 92.86% | [118] | 2016 |
| Semantic feature extraction and optimization | CNN + SVM | Using SVM to classify the feature map and introducing class separability measure into the loss function. | MSTAR | 93.76% | [119] | 2016 |
| Semantic feature extraction and optimization | CNN + SVM | Feature maps extracted by CNN are classified by SVM. | MSTAR | 99.5% | [120] | 2016 |
| Semantic feature extraction and optimization | CNN + autoencoder | Combining CNN and autoencoder to extract features of military vehicles. | MSTAR | 93% | [121] | 2017 |
| Semantic feature extraction and optimization | CNN + autoencoder | Splitting CNN into SNN and CAE to greatly reduce the learning time. | MSTAR | 98.02% | [122] | 2015 |
| Semantic feature extraction and optimization | Shallow CNN | Designing a light-level shallow CNN to classify targets. | MSTAR | 99.47% | [123] | 2017 |
| Multi-aspect | CNN | Using pseudo-color image as input to reduce the difference between targets at different azimuth angles. | MSTAR | 98.49% | [124] | 2018 |
| Multi-aspect | CNN + parallel network topology | Sufficient multi-aspect SAR images are generated and features are extracted using parallel CNN. | MSTAR | 98.52% | [125] | 2018 |
| Multi-aspect | Bidirectional LSTM | A bidirectional LSTM structure is used to explore the spacing-varying scattering feature of different aspects. | MSTAR | 99.9% | [126] | 2017 |
| Multi-aspect | Multi-stream CNN | Multi-stream CNN is used to extract the multi-view features and then combine them by the Fourier feature fusion. | MSTAR | 99.92% | [127] | 2018 |
| Multi-aspect | EfficientNet + BiGRU + island loss | Combining EfficientNet, BiGRU, and island loss to reduce azimuth sensitivity of SAR targets. | MSTAR | 100% | [128] | 2021 |
| Small-sample dataset | Data augmentation | Extending the training data by central clipping and using ResNet to extract features. | MSTAR | 99.56% | [129] | 2017 |
| Small-sample dataset | Data augmentation | Three domain-specific data augmentation operations are performed on SAR images utilizing CNN. | MSTAR | 93.16% | [130] | 2016 |
| Small-sample dataset | Data augmentation | Constructing simulated SAR images based on CAD models to fill the data gap. | N/A | N/A | [131] | 2016 |
| Small-sample dataset | GAN | Using GAN to generate SAR target slice images. | MSTAR | N/A | [132] | 2018 |
| Small-sample dataset | GAN | Combining semi-supervised CNN and dynamic multi-discriminator GAN. | MSTAR | 97.81% | [133] | 2019 |
| Small-sample dataset | Transfer learning | Transferring the pre-trained CNN model from the 3-class target recognition task to the 10-class target recognition task. | MSTAR | 99.13% | [134] | 2018 |
| Small-sample dataset | Transfer learning | Transfer learning combined with VGG16. | MSTAR | 94.4% | [135] | 2020 |
| Small-sample dataset | Transfer learning | Transferring a CReLU-based model from simulated dataset to MSTAR dataset. | MSTAR | 99.78% | [136] | 2020 |
| Small-sample dataset | Transfer learning | Transferring a CNN-based model pre-trained with MSTAR to OpenSARShip. | MSTAR, OpenSARShip | 90.75% | [137] | 2020 |
| Small-sample dataset | Metric learning | The convolutional highway unit network is adopted for training with limited SAR data. | MSTAR | 99% | [138] | 2017 |
| Small-sample dataset | Metric learning | A Siamese CNN based on deep learning and metric learning is adopted to evaluate the similarity between data. | MSTAR, OpenSARShip | 94.77% | [139] | 2019 |
| Small-sample dataset | Metric learning | The Siamese network is introduced to evaluate the probability of similarity between two samples. | MSTAR | 93.2% | [140] | 2019 |
| Multi-feature fusion | CNN | Using intensity and edge information jointly. | Self-built | 93.64% | [141] | 2017 |
| Multi-feature fusion | Canny-WTD-CNN | The edge features extracted by Canny operator fused with the wavelet features extracted by wavelet threshold denoising method as the input of CNN. | MSTAR | 99.14% | [142] | 2020 |

| Depression Angle (°) | 2S1 | BMP2 | BRDM2 | BTR60 | BTR70 | D7 | T62 | T72 | ZIL131 | ZSU234 |
|---|---|---|---|---|---|---|---|---|---|---|
| 15 | 274 | 195 | 274 | 195 | 196 | 274 | 273 | 196 | 274 | 274 |
| 17 | 299 | 233 | 298 | 255 | 233 | 299 | 299 | 232 | 299 | 299 |
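As a quick sanity check on the per-class counts tabulated above, the following minimal Python sketch sums the images at each depression angle and reports how evenly the ten classes are represented. The names `CLASSES`, `COUNTS`, and `summarize` are illustrative and do not come from the article; the numbers are copied from the table as given.

```python
# Per-class MSTAR image counts, copied from the table above.
# CLASSES, COUNTS and summarize are illustrative names (assumptions).
CLASSES = ["2S1", "BMP2", "BRDM2", "BTR60", "BTR70",
           "D7", "T62", "T72", "ZIL131", "ZSU234"]
COUNTS = {
    15: [274, 195, 274, 195, 196, 274, 273, 196, 274, 274],
    17: [299, 233, 298, 255, 233, 299, 299, 232, 299, 299],
}


def summarize(counts):
    """Return (total, smallest class share, largest class share)."""
    total = sum(counts)
    shares = [c / total for c in counts]
    return total, min(shares), max(shares)


for angle, counts in COUNTS.items():
    total, lo, hi = summarize(counts)
    print(f"{angle} deg: {total} images, class share {lo:.1%} to {hi:.1%}")
```

A summary like this makes it easy to see that the classes are only mildly imbalanced at either angle, which is useful context when comparing the accuracies reported on MSTAR.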

| Dataset | Release Year and Country | Source Satellite | Resolution | Number of Images | Image Size (pixels) |
|---|---|---|---|---|---|
| MSTAR | 1996, USA | N/A | 0.3 m × 0.3 m | 5950 | 128 × 128 |
| OpenSARShip | 2017/2019, China | Sentinel-1A | 20 m × 22 m; (2.7 m~3.5 m) × 22 m | 11,346 (V1); 34,528 (V2) | N/A |
| FUSAR-Ship | 2020, China | GF-3 | (1.7 m~1.754 m) × 1.124 m | 6252 | 512 × 512 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Jiang, W.; Wang, Y.; Li, Y.; Lin, Y.; Shen, W. Radar Target Characterization and Deep Learning in Radar Automatic Target Recognition: A Review. Remote Sens. 2023, 15, 3742. https://doi.org/10.3390/rs15153742
