Enhancing Wheat Above-Ground Biomass Estimation Using UAV RGB Images and Machine Learning: Multi-Feature Combinations, Flight Height, and Algorithm Implications

Abstract

1. Introduction

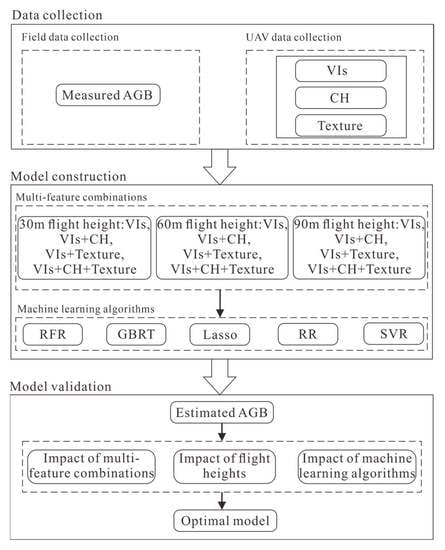

2. Materials and Methods

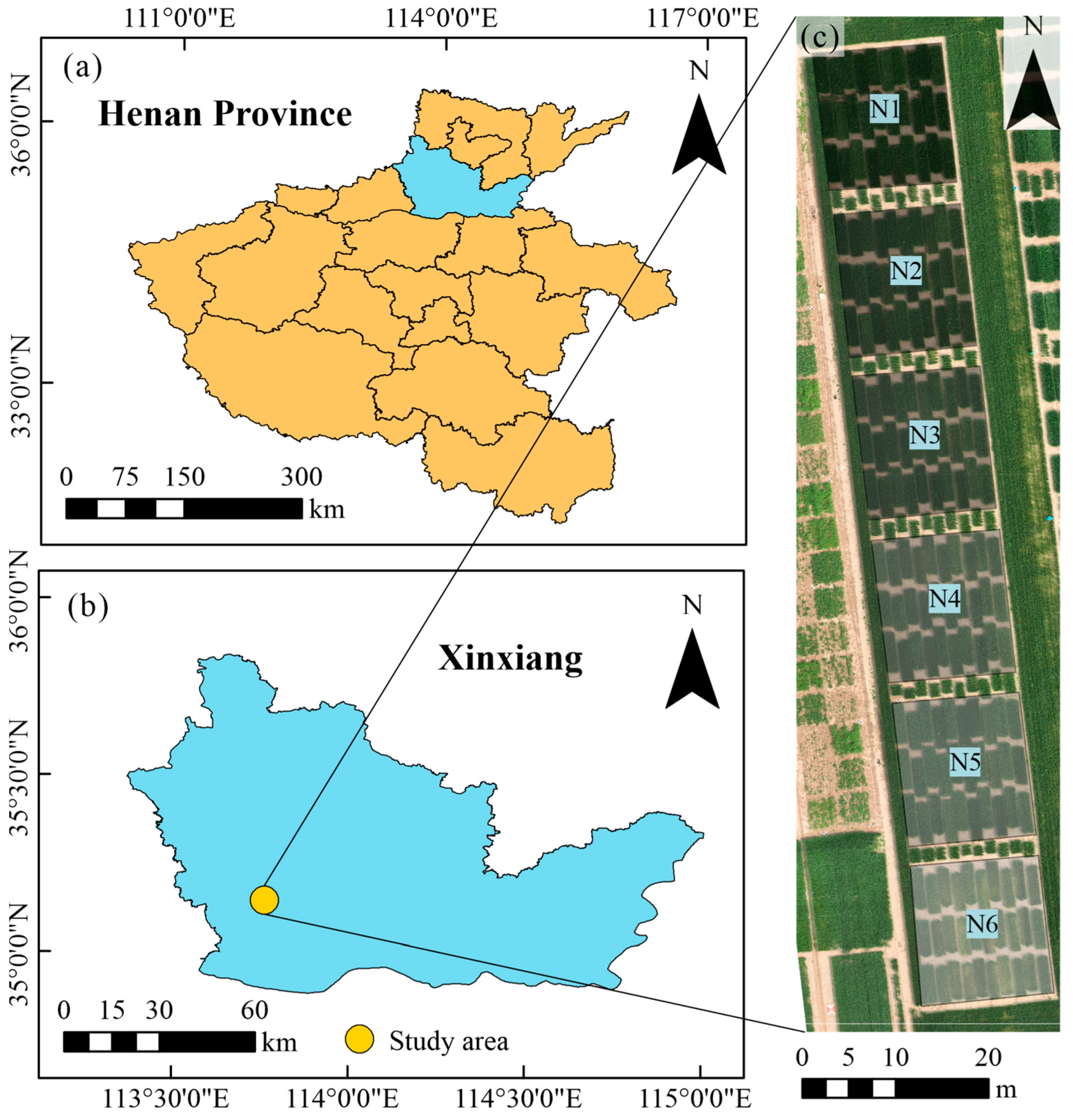

2.1. Study Area and Experimental Design

2.2. Field Data Acquisition

2.3. UAV Data Acquisition and Preprocessing

2.4. Feature Extraction

2.4.1. Spectral Feature Extraction

2.4.2. CH Extraction

2.4.3. Texture Feature Extraction

2.5. Machine Learning Algorithms

2.5.1. SVR

2.5.2. Ridge Regression

2.5.3. Least Absolute Shrinkage and Selection Operator

2.5.4. RFR

2.5.5. GBRT

2.6. Accuracy Evaluation

3. Results

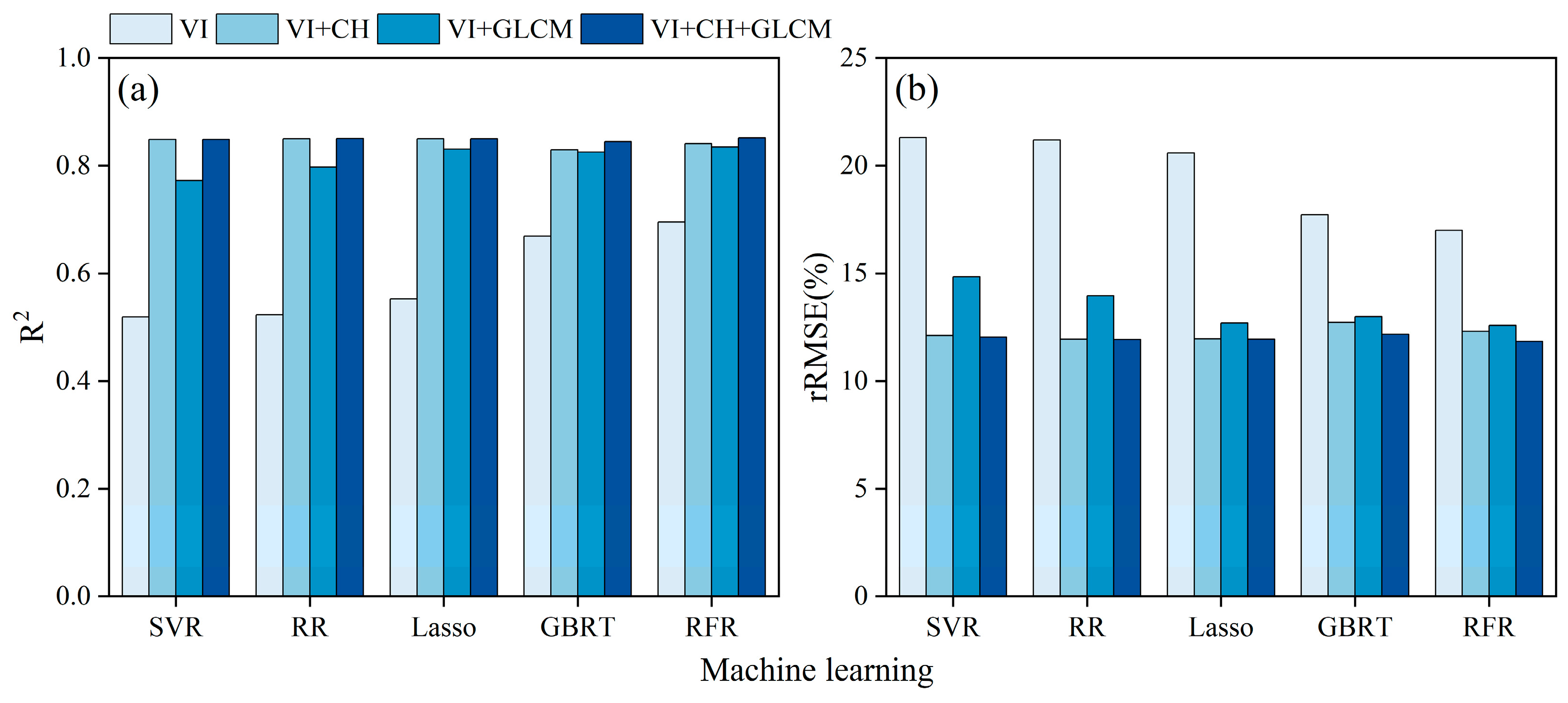

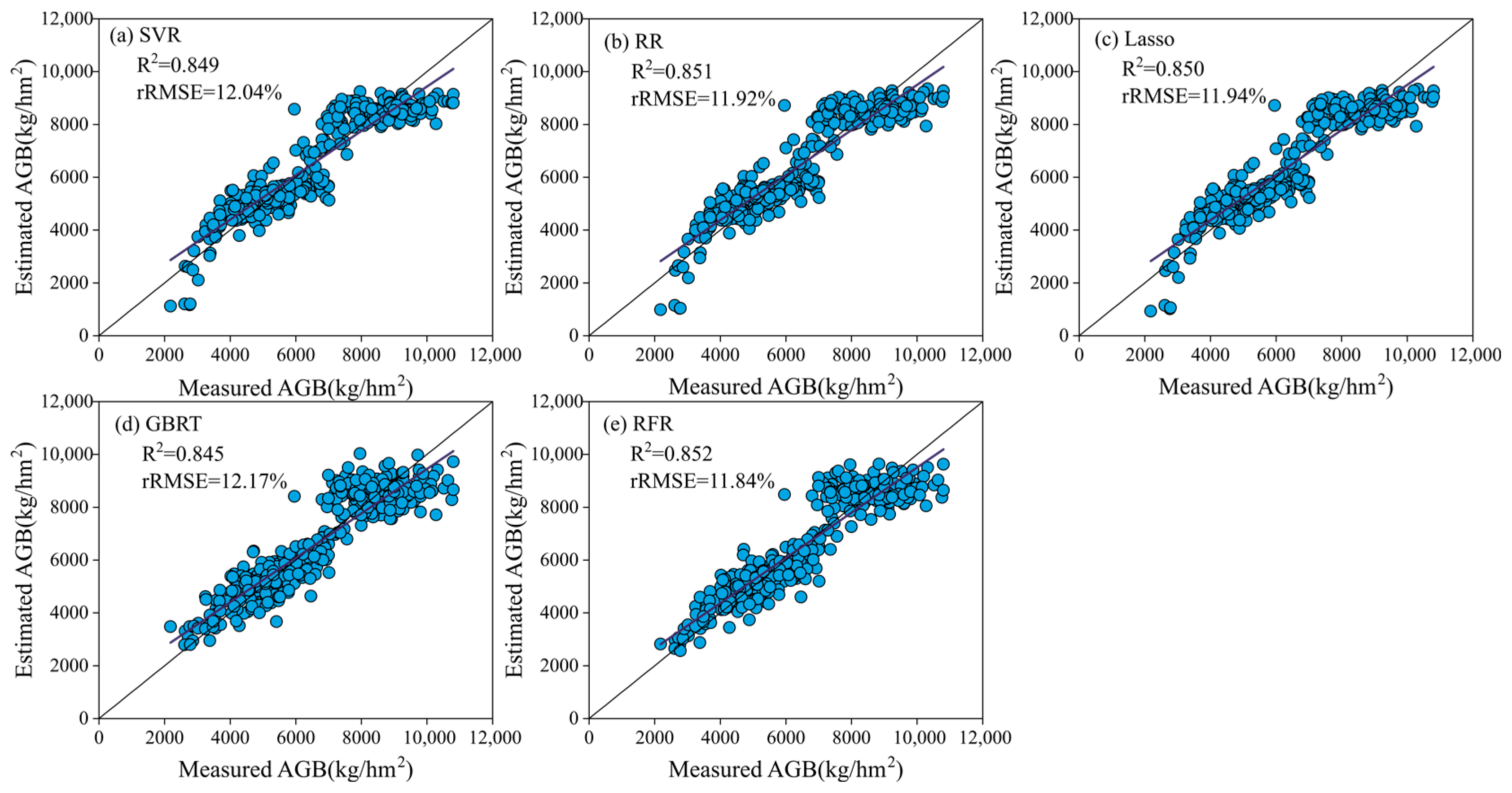

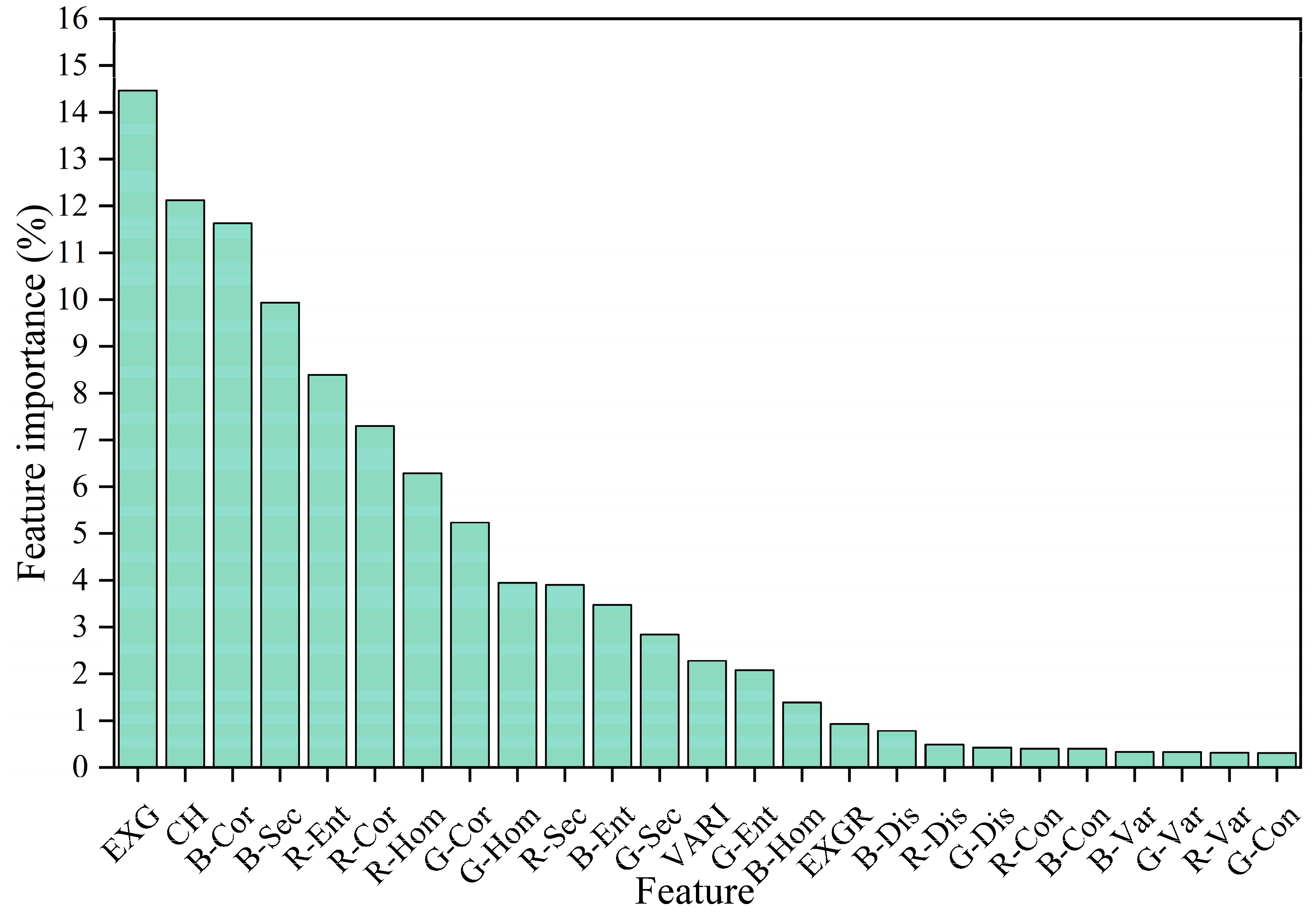

3.1. AGB Estimation by the Combination of VIs, CH, and Texture Features

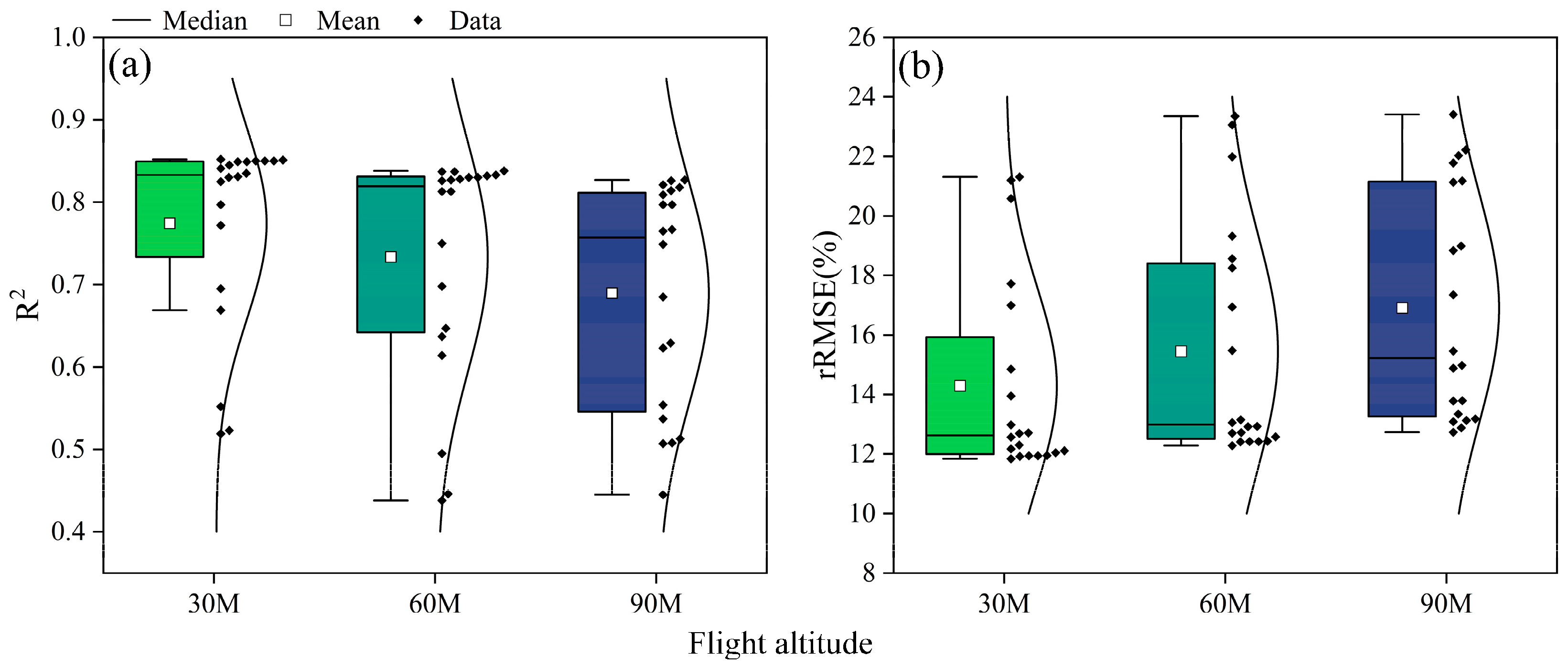

3.2. AGB Estimation at Different Flight Heights

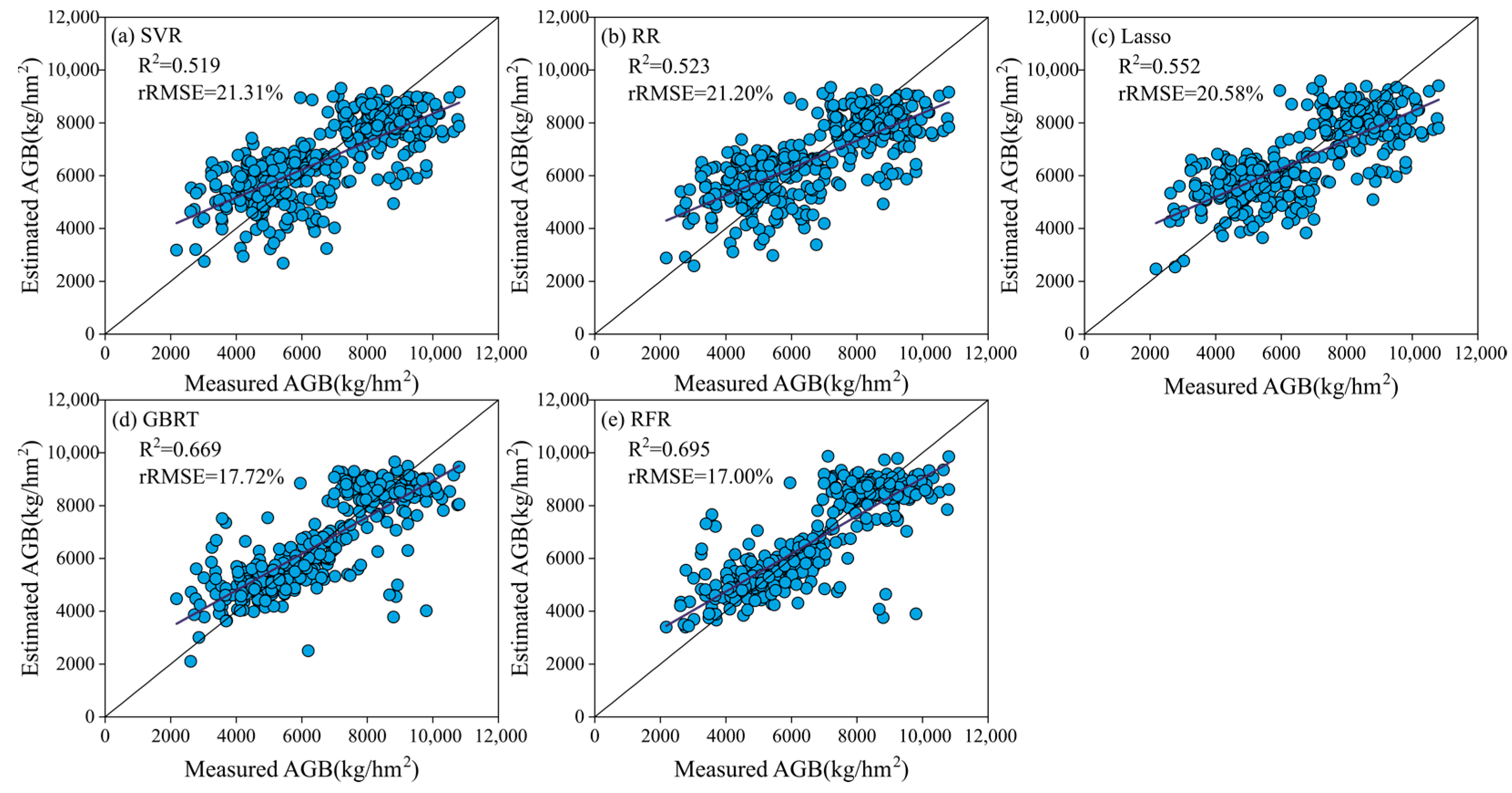

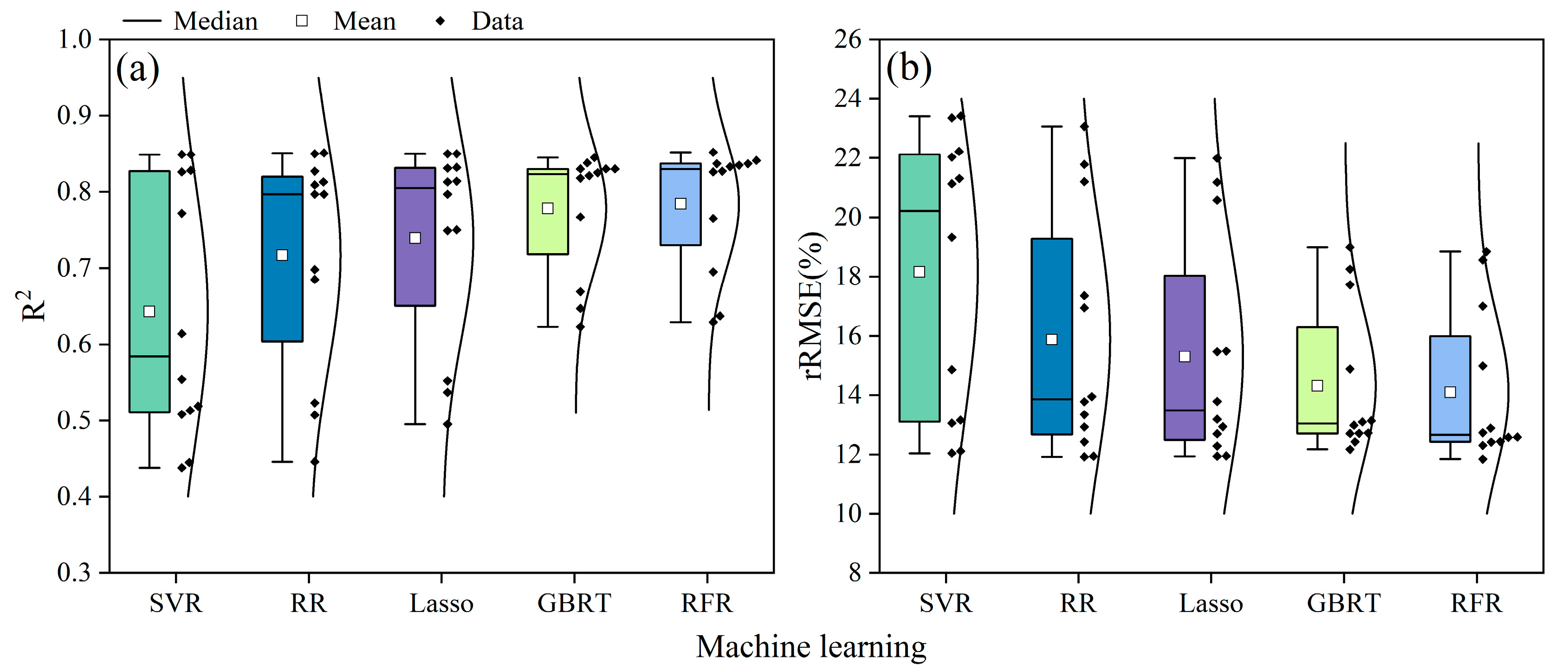

3.3. AGB Estimation Using Different Machine Learning Algorithms

4. Discussion

4.1. AGB Estimation Using VIs, CH, and Texture Feature Combination

4.2. Influence of Flight Height on Estimation Accuracy

4.3. Comparison of Different Machine Learning Algorithms

4.4. Implications and Limitations of the Study

5. Conclusions

- Combining VIs with either CH or texture features improves the accuracy of AGB estimation compared with using VIs alone. The highest accuracy for wheat AGB estimation was achieved by combining VIs, CH, and texture features (VIs + CH + texture).

- Flight height has a significant influence on the accuracy of AGB estimation. Among the heights tested (30, 60, and 90 m), a flight height of 30 m yielded the highest accuracy. However, flying at 60 or 90 m substantially reduces the acquisition cost of the mission (shorter flight time and fewer images). The choice of flight height should therefore be based on specific mission requirements.

- The selection of the machine learning algorithm is crucial for wheat AGB estimation. In this study, the RFR algorithm outperformed the other machine learning algorithms, yielding higher AGB estimation accuracy.
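The conclusions compare feature combinations and five regression algorithms (SVR, RR, Lasso, GBRT, RFR). The sketch below shows how such a comparison can be set up with scikit-learn. It is not the authors' pipeline: the data are synthetic stand-ins for plot-level VIs, CH, and texture features, the hyperparameters (`C=10.0`, the `alpha` values, 100 trees) and the 5-fold cross-validation scheme are illustrative assumptions, and GBRT is represented by scikit-learn's `GradientBoostingRegressor`. The helper names `make_synthetic_plots` and `compare_models` are hypothetical.

```python
import numpy as np
from sklearn.ensemble import GradientBoostingRegressor, RandomForestRegressor
from sklearn.linear_model import Lasso, Ridge
from sklearn.model_selection import cross_val_score
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVR


def make_synthetic_plots(n=180, seed=0):
    """Hypothetical stand-in for plot-level features (NOT the study's data)."""
    rng = np.random.default_rng(seed)
    vis = rng.normal(size=(n, 3))  # three VI-like columns
    ch = rng.normal(size=(n, 1))   # one CH-like column
    tex = rng.normal(size=(n, 2))  # two texture-like columns
    # Arbitrary target in which the CH-like column carries most of the signal.
    y = (0.5 * vis[:, 0] + 1.0 * ch[:, 0] + 0.3 * tex[:, 0]
         + rng.normal(scale=0.2, size=n))
    feature_sets = {
        "VIs": vis,
        "VIs + CH": np.hstack([vis, ch]),
        "VIs + CH + Texture": np.hstack([vis, ch, tex]),
    }
    return feature_sets, y


def compare_models(X, y, seed=0):
    """Mean 5-fold cross-validated R^2 for each of the five algorithm types."""
    models = {
        # Kernel and linear models are scale-sensitive, so standardize first.
        "SVR": make_pipeline(StandardScaler(), SVR(C=10.0)),
        "RR": make_pipeline(StandardScaler(), Ridge(alpha=1.0)),
        "Lasso": make_pipeline(StandardScaler(), Lasso(alpha=0.01)),
        "GBRT": GradientBoostingRegressor(random_state=seed),
        "RFR": RandomForestRegressor(n_estimators=100, random_state=seed),
    }
    return {name: cross_val_score(model, X, y, cv=5, scoring="r2").mean()
            for name, model in models.items()}


if __name__ == "__main__":
    feature_sets, y = make_synthetic_plots()
    for label, X in feature_sets.items():
        scores = compare_models(X, y)
        print(label, {name: round(s, 3) for name, s in scores.items()})
```

Tree ensembles (GBRT, RFR) are left unscaled because their splits are invariant to monotonic rescaling of each feature; with real data, the split into training and validation sets should follow the study design rather than plain k-fold.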

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Geng, L.; Che, T.; Ma, M.; Tan, J.; Wang, H. Corn biomass estimation by integrating remote sensing and long-term observation data based on machine learning techniques. Remote Sens. 2021, 13, 2352.

2. Li, Z.; Zhao, Y.; Taylor, J.; Gaulton, R.; Jin, X.; Song, X.; Li, Z.; Meng, Y.; Chen, P.; Feng, H.; et al. Comparison and transferability of thermal, temporal and phenological-based in-season predictions of above-ground biomass in wheat crops from proximal crop reflectance data. Remote Sens. Environ. 2022, 273, 112967.

3. Kumar, A.; Tewari, S.; Singh, H.; Kumar, P.; Kumar, N.; Bisht, S.; Devi, S.; Nidhi; Kaushal, R. Biomass accumulation and carbon stock in different agroforestry systems prevalent in the Himalayan foothills, India. Curr. Sci. 2021, 120, 1083–1088.

4. Yue, J.; Yang, G.; Tian, Q.; Feng, H.; Xu, K.; Zhou, C. Estimate of winter-wheat above-ground biomass based on UAV ultrahigh-ground-resolution image textures and vegetation indices. ISPRS-J. Photogramm. Remote Sens. 2019, 150, 226–244.

5. Han, S.; Zhao, Y.; Cheng, J.; Zhao, F.; Yang, H.; Feng, H.; Li, Z.; Ma, X.; Zhao, C.; Yang, G. Monitoring Key Wheat Growth Variables by Integrating Phenology and UAV Multispectral Imagery Data into Random Forest Model. Remote Sens. 2022, 14, 3723.

6. Jin, X.; Zarco-Tejada, P.J.; Schmidhalter, U.; Reynolds, M.P.; Hawkesford, M.J.; Varshney, R.K.; Yang, T.; Nie, C.; Li, Z.; Ming, B.; et al. High-throughput estimation of crop traits: A review of ground and aerial phenotyping platforms. IEEE Geosci. Remote Sens. Mag. 2020, 9, 200–231.

7. Liu, S.; Jin, X.; Nie, C.; Wang, S.; Yu, X.; Cheng, M.; Shao, M.; Wang, Z.; Tuohuti, N.; Bai, Y.; et al. Estimating leaf area index using unmanned aerial vehicle data: Shallow vs. deep machine learning algorithms. Plant Physiol. 2021, 187, 1551–1576.

8. Zhang, Y.; Yang, Y.; Zhang, Q.; Duan, R.; Liu, J.; Qin, Y.; Wang, X. Toward Multi-Stage Phenotyping of Soybean with Multimodal UAV Sensor Data: A Comparison of Machine Learning Approaches for Leaf Area Index Estimation. Remote Sens. 2022, 15, 7.

9. Zhu, W.; Sun, Z.; Huang, Y.; Yang, T.; Li, J.; Zhu, K.; Zhang, J.; Yang, B.; Shao, C.; Peng, J.; et al. Optimization of multi-source UAV RS agro-monitoring schemes designed for field-scale crop phenotyping. Precis. Agric. 2021, 22, 1768–1802.

10. Liu, Y.; Feng, H.; Yue, J.; Li, Z.; Yang, G.; Song, X.; Yang, X.; Zhao, Y. Remote-sensing estimation of potato above-ground biomass based on spectral and spatial features extracted from high-definition digital camera images. Comput. Electron. Agric. 2022, 198, 107089.

11. Guo, Z.-C.; Wang, T.; Liu, S.-L.; Kang, W.-P.; Chen, X.; Feng, K.; Zhang, X.-Q.; Zhi, Y. Biomass and vegetation coverage survey in the Mu Us sandy land-based on unmanned aerial vehicle RGB images. Int. J. Appl. Earth Obs. Geoinf. 2021, 94, 102239.

12. Gée, C.; Denimal, E. RGB image-derived indicators for spatial assessment of the impact of broadleaf weeds on wheat biomass. Remote Sens. 2020, 12, 2982.

13. Guo, Y.; Fu, Y.H.; Chen, S.; Bryant, C.R.; Li, X.; Senthilnath, J.; Sun, H.; Wang, S.; Wu, Z.; de Beurs, K. Integrating spectral and textural information for identifying the tasseling date of summer maize using UAV based RGB images. Int. J. Appl. Earth Obs. Geoinf. 2021, 102, 102435.

14. Duan, B.; Fang, S.; Gong, Y.; Peng, Y.; Wu, X.; Zhu, R. Remote estimation of grain yield based on UAV data in different rice cultivars under contrasting climatic zone. Field Crop. Res. 2021, 267, 108148.

15. Fu, Y.; Yang, G.; Li, Z.; Song, X.; Li, Z.; Xu, X.; Wang, P.; Zhao, C. Winter wheat nitrogen status estimation using UAV-based RGB imagery and Gaussian processes regression. Remote Sens. 2020, 12, 3778.

16. Yue, J.; Feng, H.; Jin, X.; Yuan, H.; Li, Z.; Zhou, C.; Yang, G.; Tian, Q. A comparison of crop parameters estimation using images from UAV-mounted snapshot hyperspectral sensor and high-definition digital camera. Remote Sens. 2018, 10, 1138.

17. Pipatsitee, P.; Eiumnoh, A.; Tisarum, R.; Taota, K.; Kongpugdee, S.; Sakulleerungroj, K.; Suriyan, C.-U. Above-ground vegetation indices and yield attributes of rice crop using unmanned aerial vehicle combined with ground truth measurements. Not. Bot. Horti Agrobot. Cluj-Napoca 2020, 48, 2385–2398.

18. Fei, S.; Hassan, M.A.; Xiao, Y.; Su, X.; Chen, Z.; Cheng, Q.; Duan, F.; Chen, R.; Ma, Y. UAV-based multi-sensor data fusion and machine learning algorithm for yield prediction in wheat. Precis. Agric. 2023, 24, 187–212.

19. Zhang, M.; Zhou, J.; Sudduth, K.A.; Kitchen, N.R. Estimation of maize yield and effects of variable-rate nitrogen application using UAV-based RGB imagery. Biosyst. Eng. 2020, 189, 24–35.

20. Luo, H.-X.; Dai, S.-P.; Li, M.-F.; Liu, E.-P.; Zheng, Q.; Hu, Y.-Y.; Yi, X.-P. Comparison of machine learning algorithms for mapping mango plantations based on Gaofen-1 imagery. J. Integr. Agric. 2020, 19, 2815–2828.

21. Haralick, R.M.; Shanmugam, K.; Dinstein, I.H. Textural features for image classification. IEEE Trans. Syst. Man Cybern. 1973, 610–621.

22. Qiao, L.; Gao, D.; Zhao, R.; Tang, W.; An, L.; Li, M.; Sun, H. Improving estimation of LAI dynamic by fusion of morphological and vegetation indices based on UAV imagery. Comput. Electron. Agric. 2022, 192, 106603.

23. Liang, Y.; Kou, W.; Lai, H.; Wang, J.; Wang, Q.; Xu, W.; Wang, H.; Lu, N. Improved estimation of aboveground biomass in rubber plantations by fusing spectral and textural information from UAV-based RGB imagery. Ecol. Indic. 2022, 142, 109286.

24. Zhang, X.; Zhang, K.; Sun, Y.; Zhao, Y.; Zhuang, H.; Ban, W.; Chen, Y.; Fu, E.; Chen, S.; Liu, J.; et al. Combining spectral and texture features of UAS-based multispectral images for maize leaf area index estimation. Remote Sens. 2022, 14, 331.

25. Kheir, A.M.; Ammar, K.A.; Amer, A.; Ali, M.G.; Ding, Z.; Elnashar, A. Machine learning-based cloud computing improved wheat yield simulation in arid regions. Comput. Electron. Agric. 2022, 203, 107457.

26. Yu, D.; Zha, Y.; Sun, Z.; Li, J.; Jin, X.; Zhu, W.; Bian, J.; Ma, L.; Zeng, Y.; Su, Z. Deep convolutional neural networks for estimating maize above-ground biomass using multi-source UAV images: A comparison with traditional machine learning algorithms. Precis. Agric. 2023, 24, 92–113.

27. Maimaitijiang, M.; Sagan, V.; Sidike, P.; Hartling, S.; Esposito, F.; Fritschi, F.B. Soybean yield prediction from UAV using multimodal data fusion and deep learning. Remote Sens. Environ. 2020, 237, 111599.

28. Ding, F.; Li, C.; Zhai, W.; Fei, S.; Cheng, Q.; Chen, Z. Estimation of Nitrogen Content in Winter Wheat Based on Multi-Source Data Fusion and Machine Learning. Agriculture 2022, 12, 1752.

29. Meng, S.; Zhong, Y.; Luo, C.; Hu, X.; Wang, X.; Huang, S. Optimal temporal window selection for winter wheat and rapeseed mapping with Sentinel-2 images: A case study of Zhongxiang in China. Remote Sens. 2020, 12, 226.

30. Woebbecke, D.M.; Meyer, G.E.; Von Bargen, K.; Mortensen, D.A. Color indices for weed identification under various soil, residue, and lighting conditions. Trans. ASAE 1995, 38, 259–269.

31. Meyer, G.E.; Neto, J.C. Verification of color vegetation indices for automated crop imaging applications. Comput. Electron. Agric. 2008, 63, 282–293.

32. Bendig, J.; Yu, K.; Aasen, H.; Bolten, A.; Bennertz, S.; Broscheit, J.; Gnyp, M.L.; Bareth, G. Combining UAV-based plant height from crop surface models, visible, and near infrared vegetation indices for biomass monitoring in barley. Int. J. Appl. Earth Obs. Geoinf. 2015, 39, 79–87.

33. Tibshirani, R. Regression shrinkage and selection via the lasso. J. R. Stat. Soc. Ser. B-Stat. Methodol. 1996, 58, 267–288.

34. Wang, X.; Wang, Y.; Zhou, C.; Yin, L.; Feng, X. Urban forest monitoring based on multiple features at the single tree scale by UAV. Urban For. Urban Green. 2021, 58, 126958.

35. Yang, L.; Zhang, X.; Liang, S.; Yao, Y.; Jia, K.; Jia, A. Estimating surface downward shortwave radiation over China based on the gradient boosting decision tree method. Remote Sens. 2018, 10, 185.

36. Cheng, M.; Jiao, X.; Liu, Y.; Shao, M.; Yu, X.; Bai, Y.; Wang, Z.; Wang, S.; Tuohuti, N.; Liu, S.; et al. Estimation of soil moisture content under high maize canopy coverage from UAV multimodal data and machine learning. Agric. Water Manag. 2022, 264, 107530.

37. Jin, X.; Li, Z.; Feng, H.; Ren, Z.; Li, S. Deep neural network algorithm for estimating maize biomass based on simulated Sentinel 2A vegetation indices and leaf area index. Crop J. 2020, 8, 87–97.

38. Li, W.; Niu, Z.; Chen, H.; Li, D.; Wu, M.; Zhao, W. Remote estimation of canopy height and aboveground biomass of maize using high-resolution stereo images from a low-cost unmanned aerial vehicle system. Ecol. Indic. 2016, 67, 637–648.

39. Mao, P.; Qin, L.; Hao, M.; Zhao, W.; Luo, J.; Qiu, X.; Xu, L.; Xiong, Y.; Ran, Y.; Yan, C.; et al. An improved approach to estimate above-ground volume and biomass of desert shrub communities based on UAV RGB images. Ecol. Indic. 2021, 125, 107494.

40. Li, M.; Wu, J.; Song, C.; He, Y.; Niu, B.; Fu, G.; Tarolli, P.; Tietjen, B.; Zhang, X. Temporal variability of precipitation and biomass of alpine grasslands on the northern Tibetan plateau. Remote Sens. 2019, 11, 360.

41. Zhang, C.; Huang, C.; Li, H.; Liu, Q.; Li, J.; Bridhikitti, A.; Liu, G. Effect of textural features in remote sensed data on rubber plantation extraction at different levels of spatial resolution. Forests 2020, 11, 399.

42. Li, M.; Yang, Q.; Yuan, Q.; Zhu, L. Estimation of high spatial resolution ground-level ozone concentrations based on Landsat 8 TIR bands with deep forest model. Chemosphere 2022, 301, 134817.

43. Feng, P.; Wang, B.; Liu, D.L.; Waters, C.; Xiao, D.; Shi, L.; Yu, Q. Dynamic wheat yield forecasts are improved by a hybrid approach using a biophysical model and machine learning technique. Agric. For. Meteorol. 2020, 285–286, 107922.

44. Wei, Z.; Meng, Y.; Zhang, W.; Peng, J.; Meng, L. Downscaling SMAP soil moisture estimation with gradient boosting decision tree regression over the Tibetan Plateau. Remote Sens. Environ. 2019, 225, 30–44.

45. Cheng, M.; Penuelas, J.; McCabe, M.F.; Atzberger, C.; Jiao, X.; Wu, W.; Jin, X. Combining multi-indicators with machine-learning algorithms for maize yield early prediction at the county-level in China. Agric. For. Meteorol. 2022, 323, 109057.

46. Fu, Y.; Yang, G.; Song, X.; Li, Z.; Xu, X.; Feng, H.; Zhao, C. Improved estimation of winter wheat aboveground biomass using multiscale textures extracted from UAV-based digital images and hyperspectral feature analysis. Remote Sens. 2021, 13, 581.

47. Tian, Y.; Huang, H.; Zhou, G.; Zhang, Q.; Tao, J.; Zhang, Y.; Lin, J. Aboveground mangrove biomass estimation in Beibu Gulf using machine learning and UAV remote sensing. Sci. Total Environ. 2021, 781, 146816.

48. Zha, H.; Miao, Y.; Wang, T.; Li, Y.; Zhang, J.; Sun, W.; Feng, Z.; Kusnierek, K. Improving unmanned aerial vehicle remote sensing-based rice nitrogen nutrition index prediction with machine learning. Remote Sens. 2020, 12, 215.

| Growth Stage | Sample Size | Max (kg·hm⁻²) | Min (kg·hm⁻²) | Mean (kg·hm⁻²) | SD (kg·hm⁻²) | CV (%) |
|---|---|---|---|---|---|---|
| Heading | 180 | 7012.0 | 2180.0 | 4958.7 | 1071.8 | 21.61 |
| Grain filling | 180 | 10,800.0 | 4480.0 | 8254.2 | 1280.3 | 15.51 |
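The CV column is the standard deviation expressed as a percentage of the mean, so it can be reproduced from the SD and Mean columns (for example, 1071.8 / 4958.7 × 100 ≈ 21.61% at heading). A minimal sketch of the table's summary statistics follows; `describe_agb` and `cv_percent` are hypothetical helpers, and using the sample (n − 1) standard deviation is an assumption, since the table does not state which estimator was used.

```python
import statistics


def cv_percent(sd, mean):
    """Coefficient of variation in percent: CV = SD / mean * 100."""
    return 100.0 * sd / mean


def describe_agb(samples):
    """Summary statistics in the layout of the AGB table (kg·hm⁻²).

    Assumes SD is the sample standard deviation (n - 1 denominator).
    """
    mean = statistics.mean(samples)
    sd = statistics.stdev(samples)
    return {
        "n": len(samples),
        "max": max(samples),
        "min": min(samples),
        "mean": mean,
        "sd": sd,
        "cv_percent": cv_percent(sd, mean),
    }


# Reproduce the CV column from the reported SD and mean.
print(round(cv_percent(1071.8, 4958.7), 2))  # heading
print(round(cv_percent(1280.3, 8254.2), 2))  # grain filling
```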

| VI | Formulation | Reference |
|---|---|---|
| Excess Green Index (EXG) | 2G − R − B | [30] |
| Excess Blue Index (EXB) | 1.4B − G | [15] |
| Green Leaf Index (GLI) | (2G − R − B)/(2G + R + B) | [15] |
| Visible Atmospherically Resistant Index (VARI) | (G − R)/(G + R − B) | [15] |
| Excess Green minus Red Index (EXGR) | 3G − 2.4R − B | [31] |
| Red Green Blue Vegetation Index (RGBVI) | (G² − B·R)/(G² + B·R) | [31] |
| Modified Green Red Vegetation Index (MGRVI) | (G² − R²)/(G² + R²) | [32] |
| Normalized Green Red Difference Index (NGRDI) | (G − R)/(G + R) | [15] |
| Green Red Ratio Index (GRRI) | R/G | [15] |
| Normalized Difference Index (NDI) | (R − G)/(R + G + 0.01) | [31] |
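The VI formulations in the table above can be evaluated directly from band values. The sketch below assumes `r`, `g`, and `b` are the red, green, and blue values of a pixel or a plot mean on a common scale (the normalization used in the study is not stated in this section); the function name is hypothetical, and the formulas are transcribed from the table as given.

```python
def rgb_vegetation_indices(r, g, b):
    """Evaluate the RGB VIs listed in the table for one pixel or plot mean.

    Assumes r, g, b share a common scale (e.g. normalized digital numbers).
    Ratio indices raise ZeroDivisionError if their denominator is zero.
    """
    return {
        "EXG": 2 * g - r - b,
        "EXB": 1.4 * b - g,
        "GLI": (2 * g - r - b) / (2 * g + r + b),
        "VARI": (g - r) / (g + r - b),
        "EXGR": 3 * g - 2.4 * r - b,
        "RGBVI": (g ** 2 - b * r) / (g ** 2 + b * r),
        "MGRVI": (g ** 2 - r ** 2) / (g ** 2 + r ** 2),
        "NGRDI": (g - r) / (g + r),
        "GRRI": r / g,
        "NDI": (r - g) / (r + g + 0.01),
    }


# Example: a green-dominated canopy pixel with hypothetical band values.
print(rgb_vegetation_indices(r=0.2, g=0.5, b=0.1))
```

For per-pixel maps the same expressions work unchanged on NumPy arrays, since they use only element-wise arithmetic.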

| VI | Variance Explained Ratio |
|---|---|
| EXG | 91.13% |
| VARI | 8.38% |
| EXGR | 0.49% |
| NGBDI | 3.46 × 10⁻⁵% |
| EXR | 3.16 × 10⁻⁶% |
| RGBVI | 2.23 × 10⁻⁷% |
| MGRVI | 4.19 × 10⁻⁸% |
| GLI | 1.85 × 10⁻⁹% |
| NGRDI | 3.81 × 10⁻¹⁰% |
| EXB | 8.87 × 10⁻¹⁹% |
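A variance explained ratio of this kind is the standard principal component analysis (PCA) quantity: each eigenvalue of the feature covariance matrix divided by the sum of all eigenvalues. The sketch below assumes the VIs are stacked as columns of a plots × VIs matrix; whether the study standardized the VIs before PCA is not stated here, so `standardize` is an assumed option and `explained_variance_ratio` is a hypothetical helper.

```python
import numpy as np


def explained_variance_ratio(X, standardize=True):
    """Fraction of total variance captured by each principal component.

    X: (n_samples, n_features) array, e.g. plots x VIs.
    Standardizing makes the PCA operate on the correlation matrix.
    """
    X = np.asarray(X, dtype=float)
    Xc = X - X.mean(axis=0)
    if standardize:
        Xc = Xc / Xc.std(axis=0, ddof=1)
    cov = np.cov(Xc, rowvar=False)
    eigvals = np.linalg.eigvalsh(cov)[::-1]  # eigvalsh returns ascending order
    eigvals = np.clip(eigvals, 0.0, None)    # drop tiny negative round-off
    return eigvals / eigvals.sum()


# Two almost collinear VI-like columns plus one independent column.
rng = np.random.default_rng(0)
x = rng.normal(size=200)
X = np.column_stack([x, 2 * x + 0.01 * rng.normal(size=200), rng.normal(size=200)])
print(explained_variance_ratio(X))
```

Highly correlated indices collapse onto one component, which is why a few leading ratios can dominate while the rest are vanishingly small.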

| Feature Combination | SVR R² | SVR rRMSE (%) | RR R² | RR rRMSE (%) | Lasso R² | Lasso rRMSE (%) | GBRT R² | GBRT rRMSE (%) | RFR R² | RFR rRMSE (%) |
|---|---|---|---|---|---|---|---|---|---|---|
| VIs | 0.519 | 21.31 | 0.523 | 21.20 | 0.552 | 20.58 | 0.669 | 17.72 | 0.695 | 17.00 |
| VIs + CH | 0.849 | 12.11 | 0.850 | 11.94 | 0.850 | 11.95 | 0.830 | 12.71 | 0.841 | 12.30 |
| VIs + Texture | 0.772 | 14.85 | 0.797 | 13.95 | 0.831 | 12.69 | 0.825 | 12.98 | 0.835 | 12.57 |
| VIs + CH + Texture | 0.849 | 12.04 | 0.851 | 11.92 | 0.850 | 11.94 | 0.845 | 12.17 | 0.852 | 11.84 |
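The tables report accuracy as R² and rRMSE (%). Section 2.6 is not reproduced here, so the sketch below uses the common definitions as an assumption: R² as the coefficient of determination (1 − SS_res/SS_tot; some studies instead square the Pearson correlation), and rRMSE as the root-mean-square error divided by the mean observed AGB, in percent. Function names are hypothetical.

```python
import math


def r2_score(observed, predicted):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    mean_obs = sum(observed) / len(observed)
    ss_res = sum((o - p) ** 2 for o, p in zip(observed, predicted))
    ss_tot = sum((o - mean_obs) ** 2 for o in observed)
    return 1.0 - ss_res / ss_tot


def rrmse_percent(observed, predicted):
    """Relative RMSE: RMSE divided by the mean observed value, in percent."""
    n = len(observed)
    rmse = math.sqrt(sum((o - p) ** 2 for o, p in zip(observed, predicted)) / n)
    return 100.0 * rmse / (sum(observed) / n)


# Toy AGB values in kg·hm⁻² (hypothetical, for illustration only).
obs = [4000.0, 5000.0, 6000.0]
pred = [4100.0, 4900.0, 6000.0]
print(r2_score(obs, pred), rrmse_percent(obs, pred))
```

Because rRMSE is normalized by the mean, it allows error comparison across growth stages whose AGB levels differ (for example, heading versus grain filling).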

| Flight Height | Feature Combination | SVR R² | SVR rRMSE (%) | RR R² | RR rRMSE (%) | Lasso R² | Lasso rRMSE (%) | GBRT R² | GBRT rRMSE (%) | RFR R² | RFR rRMSE (%) |
|---|---|---|---|---|---|---|---|---|---|---|---|
| 60 m | VIs | 0.438 | 23.35 | 0.446 | 23.06 | 0.495 | 21.99 | 0.647 | 18.25 | 0.637 | 18.56 |
| 60 m | VIs + CH | 0.826 | 13.15 | 0.813 | 12.92 | 0.813 | 12.93 | 0.830 | 12.70 | 0.833 | 12.58 |
| 60 m | VIs + GLCM | 0.614 | 19.32 | 0.698 | 16.94 | 0.750 | 15.48 | 0.830 | 12.72 | 0.837 | 12.43 |
| 60 m | VIs + CH + GLCM | 0.828 | 13.05 | 0.827 | 12.42 | 0.832 | 12.28 | 0.838 | 12.42 | 0.837 | 12.41 |
| 90 m | VIs | 0.445 | 23.41 | 0.507 | 21.78 | 0.537 | 21.18 | 0.623 | 18.99 | 0.629 | 18.84 |
| 90 m | VIs + CH | 0.513 | 22.22 | 0.797 | 13.78 | 0.797 | 13.79 | 0.821 | 13.09 | 0.826 | 12.88 |
| 90 m | VIs + GLCM | 0.508 | 22.03 | 0.685 | 17.35 | 0.749 | 15.46 | 0.767 | 14.88 | 0.765 | 14.98 |
| 90 m | VIs + CH + GLCM | 0.554 | 21.13 | 0.809 | 13.34 | 0.814 | 13.18 | 0.818 | 13.13 | 0.827 | 12.73 |
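The texture features above are labelled GLCM, that is, statistics of a gray-level co-occurrence matrix in the sense of Haralick et al. The quantization level, pixel offset, moving-window size, and the set of statistics retained in the study are not given in this section, so the following is only a minimal standard-library sketch assuming a one-pixel horizontal offset, a symmetric matrix, and a few common statistics (natural-log entropy). `glcm` and `glcm_stats` are hypothetical names; scikit-image's `skimage.feature.graycomatrix` and `graycoprops` offer a library route.

```python
import math
from collections import Counter


def glcm(image, dx=1, dy=0, levels=8, symmetric=True):
    """Normalized gray-level co-occurrence matrix for a single pixel offset.

    image: 2-D list of ints already quantized to 0..levels-1.
    (dx, dy) = (1, 0) pairs each pixel with its right-hand neighbour.
    """
    counts = Counter()
    rows, cols = len(image), len(image[0])
    for y in range(rows):
        for x in range(cols):
            y2, x2 = y + dy, x + dx
            if 0 <= y2 < rows and 0 <= x2 < cols:
                i, j = image[y][x], image[y2][x2]
                counts[(i, j)] += 1
                if symmetric:  # count the pair in both directions
                    counts[(j, i)] += 1
    total = sum(counts.values())
    return [[counts[(i, j)] / total for j in range(levels)]
            for i in range(levels)]


def glcm_stats(p):
    """A few Haralick-style statistics of a normalized GLCM."""
    n = len(p)
    cells = [(i, j, p[i][j]) for i in range(n) for j in range(n)]
    return {
        "mean": sum(i * v for i, _, v in cells),
        "contrast": sum((i - j) ** 2 * v for i, j, v in cells),
        "homogeneity": sum(v / (1 + (i - j) ** 2) for i, j, v in cells),
        "asm": sum(v * v for _, _, v in cells),  # angular second moment
        "entropy": -sum(v * math.log(v) for _, _, v in cells if v > 0),
    }


# The 4 x 4, four-level test image used in Haralick et al. (1973).
img = [[0, 0, 1, 1], [0, 0, 1, 1], [0, 2, 2, 2], [2, 2, 3, 3]]
print(glcm_stats(glcm(img, levels=4)))
```

In practice the statistics are computed per band (or on a grayscale image) within a moving window and then averaged over each plot.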

| Flight Height | Resolution (cm/pixel) | Flight Time | Waypoints | Flight Path Length (m) | Photo Storage |
|---|---|---|---|---|---|
| 30 m | 0.81 | 12 min 53 s | 12 | 783 | 122 |
| 60 m | 1.61 | 6 min 39 s | 7 | 404 | 31 |
| 90 m | 2.42 | 4 min 27 s | 4 | 272 | 14 |
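The resolution and photo columns of the mission table follow simple geometric scaling. For a nadir camera with fixed focal length, the ground sampling distance grows linearly with height, while each image footprint grows with height squared, so covering the same area at the same overlap needs roughly 1/h² as many images. The sketch below projects the 30 m values to other heights under those assumptions; it treats the Photo Storage column as an image count (an interpretation, not stated in the table), and `scale_from_reference` is a hypothetical helper.

```python
def scale_from_reference(h, h_ref=30.0, gsd_ref_cm=0.81, photos_ref=122):
    """Project GSD (cm/pixel) and image count from the 30 m mission.

    Assumes a nadir camera with fixed focal length and the same overlap
    and survey area at every height. Edge effects and waypoint rounding
    make real image counts deviate somewhat from the 1 / h^2 projection.
    """
    ratio = h / h_ref
    return gsd_ref_cm * ratio, photos_ref / ratio ** 2


for height in (60.0, 90.0):
    gsd, photos = scale_from_reference(height)
    print(f"{height:.0f} m: GSD ~ {gsd:.2f} cm/pixel, ~ {photos:.1f} images")
```

The projections (about 1.62 and 2.43 cm/pixel; about 30.5 and 13.6 images) are close to the tabulated 1.61 and 2.42 cm/pixel and 31 and 14 photos, which is the trade-off behind the cost savings at 60 and 90 m.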

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Zhai, W.; Li, C.; Cheng, Q.; Mao, B.; Li, Z.; Li, Y.; Ding, F.; Qin, S.; Fei, S.; Chen, Z. Enhancing Wheat Above-Ground Biomass Estimation Using UAV RGB Images and Machine Learning: Multi-Feature Combinations, Flight Height, and Algorithm Implications. Remote Sens. 2023, 15, 3653. https://doi.org/10.3390/rs15143653
