4.1. Dataset

To verify our proposed method’s performance, we used two publicly available CD datasets named LEVIR-CD and WHU-CD. The detailed information is listed as follows.

The LEVIR-CD dataset [19] is a binary CD dataset comprising 637 pairs of very-high-resolution (VHR) image patches. Each patch has a size of 1024 × 1024 pixels, with a resolution of approximately 0.5 m/pixel. These image pairs were derived from Google Earth images of Texas, spanning the years 2002 to 2018. For our experiments, we cropped non-overlapping patches of size 256 × 256 and randomly split them into three parts: 70% for training, 10% for validation, and 20% for testing. This yielded 7120/1024/2048 image pairs for train/val/test, respectively.
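The patch counts reported for LEVIR-CD can be sanity-checked with a few lines of arithmetic. The sketch below assumes 1024 × 1024 source patches and 256 × 256 crops (the crop size stated for WHU-CD); `tiles_per_image` is a hypothetical helper, not part of the authors' code.

```python
def tiles_per_image(height: int, width: int, tile: int) -> int:
    """Number of non-overlapping tile x tile crops that fit in an image."""
    return (height // tile) * (width // tile)

# Assumed sizes: 1024 x 1024 source patches, 256 x 256 crops.
per_pair = tiles_per_image(1024, 1024, 256)  # crops per image pair
total = 637 * per_pair                       # crops over the whole dataset

# Subset sizes as reported in the text.
reported = {"train": 7120, "val": 1024, "test": 2048}
assert sum(reported.values()) == total

# Number of source pairs that each reported subset corresponds to.
pairs_per_subset = {name: count // per_pair for name, count in reported.items()}
```

Under these assumptions, each subset is a whole number of source pairs (445, 64, and 128), which is roughly 70%, 10%, and 20% of the 637 pairs.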

The WHU-CD dataset [36] contains a single pair of images with a size of 32,507 × 15,354 pixels, cropped from a wider geographic area. The dataset consists of aerial images obtained in April 2012 that contain 12,796 buildings in 20.5 km² (16,077 buildings in the same area in the 2016 dataset) with 1.6-pixel accuracy. Following [37], we cropped the original image pair in a non-overlapping manner, which produced 7434 small images of size 256 × 256. We then randomly divided these images into training, validation, and test sets in proportions of 70%, 10%, and 20%, respectively, obtaining 5203/743/1488 image pairs for train, val, and test.
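The cropping and splitting procedure can be sketched as follows. This is a minimal illustration, not the authors' preprocessing code: it assumes that border pixels which do not fill a whole 256 × 256 tile are discarded and that the split fractions are floored, with the test set taking the remainder. The function names are hypothetical.

```python
import numpy as np

def crop_non_overlapping(image: np.ndarray, tile: int = 256) -> np.ndarray:
    """Split an (H, W, C) image into non-overlapping tile x tile patches.

    Border pixels that do not fill a whole tile are discarded (assumption).
    Returns an array of shape (N, tile, tile, C) in row-major patch order.
    """
    h, w, c = image.shape
    rows, cols = h // tile, w // tile
    trimmed = image[: rows * tile, : cols * tile]
    patches = trimmed.reshape(rows, tile, cols, tile, c).swapaxes(1, 2)
    return patches.reshape(rows * cols, tile, tile, c)

def split_sizes(n: int, train: float = 0.7, val: float = 0.1) -> tuple:
    """Train/val/test sizes: fractions are floored, the test set takes the rest."""
    n_train, n_val = int(n * train), int(n * val)
    return n_train, n_val, n - n_train - n_val

# Patch count for a 32,507 x 15,354 image (width x height) with 256 x 256 tiles.
rows, cols = 15354 // 256, 32507 // 256
n_patches = rows * cols
sizes = split_sizes(n_patches)
```

With these assumptions, the tile count and the three subset sizes agree with the 7434 patches and the 5203/743/1488 split reported above.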

4.5. Compared with the State-of-the-Art

In this section, we present a comprehensive comparison of our proposed model with several existing methods on two benchmark datasets, namely, LEVIR-CD and WHU-CD. The compared methods can be categorized into attention-based approaches and other efficient encoder–decoder structures. To ensure a fair and unbiased evaluation, we meticulously re-implemented all these methods and replicated their results within the same experimental environment. For each comparative method, we carefully selected a set of optimal hyperparameters that maximized the F1 score on the validation subset. This approach guarantees that all methods are fine-tuned under the same criteria, enabling a meaningful and consistent performance comparison.

The quantitative evaluation results for the two datasets are presented in

Table 1 and

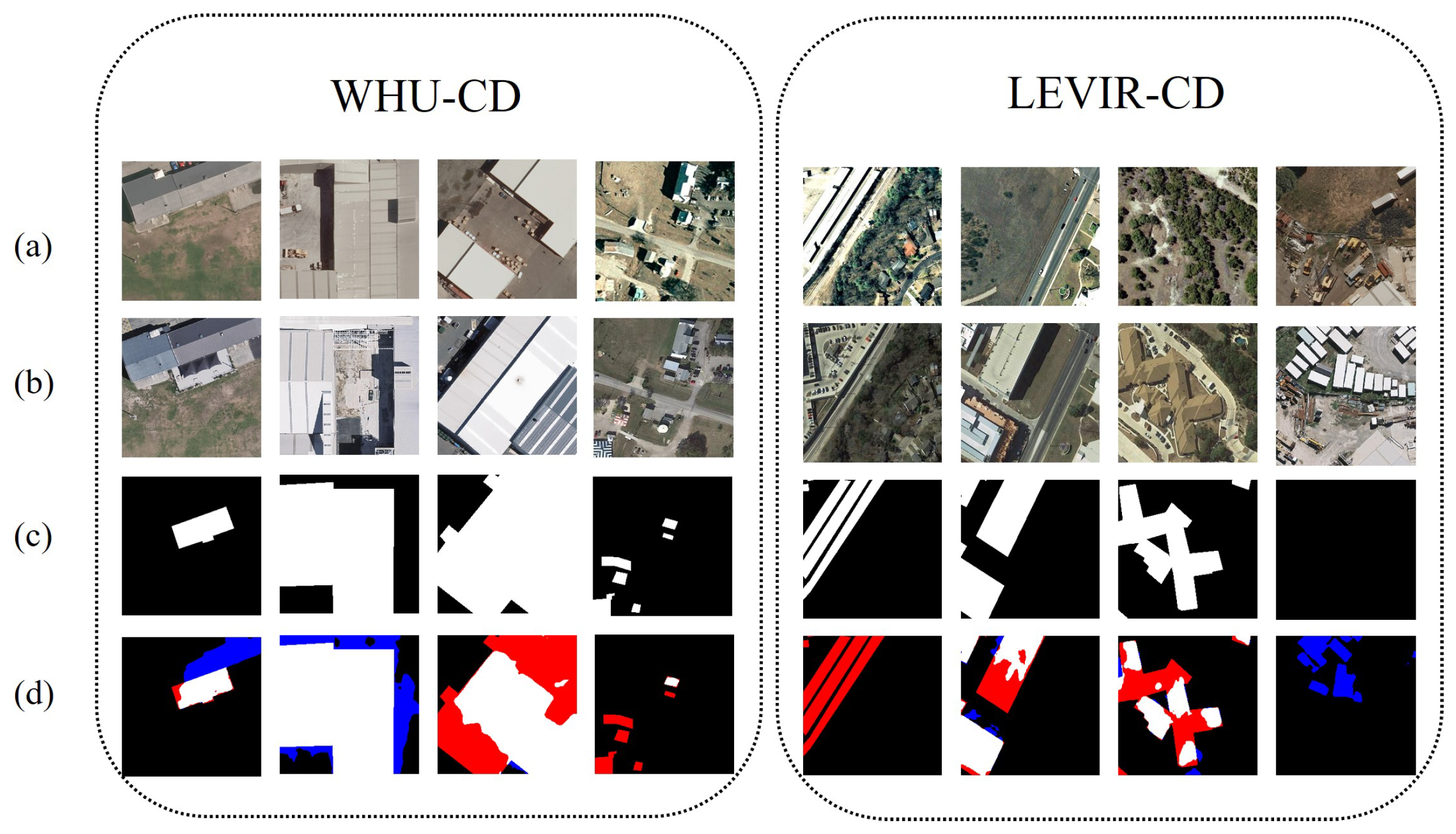

Table 2. Furthermore, the qualitative assessment of the comparative methods is visualized in

Figure 8 and

Figure 9. These figures depict true positive (TP) regions in white, false positive (FP) regions in blue, false negative (FN) regions in red, and true negative (TN) regions in black. These visualizations allow for a comprehensive comparison of the methods’ performance. To evaluate our proposed model in terms of both accuracy and model size, we compared it with a method that strikes a good balance between these factors.
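The color coding used in the qualitative figures can be reproduced with a short NumPy routine. The sketch below is illustrative only: `error_map` and `COLORS` are hypothetical names, and the inputs are assumed to be binary masks of identical shape.

```python
import numpy as np

# RGB colors following the convention described in the text.
COLORS = {
    "TP": (255, 255, 255),  # white
    "FP": (0, 0, 255),      # blue
    "FN": (255, 0, 0),      # red
    "TN": (0, 0, 0),        # black
}

def error_map(pred: np.ndarray, target: np.ndarray) -> np.ndarray:
    """Color-code a binary prediction against a binary ground-truth mask."""
    pred, target = pred.astype(bool), target.astype(bool)
    out = np.zeros(pred.shape + (3,), dtype=np.uint8)  # TN pixels stay black
    out[pred & target] = COLORS["TP"]
    out[pred & ~target] = COLORS["FP"]
    out[~pred & target] = COLORS["FN"]
    return out
```

Calling `error_map(pred, target)` on an (H, W) prediction and label returns an (H, W, 3) image that can be saved or plotted directly.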

Experimental Results on the LEVIR-CD Dataset: Although FC-EF, FC-Siam-conc, FC-Siam-diff, and CDNet have the advantageous feature of occupying smaller memory footprints, they exhibit the poorest performance in terms of , F1, and IoU metrics. On the other hand, attention-based models such as BIT and SNUNet demonstrate similar performance, but BIT achieves comparable results with only one-tenth of the parameters compared to SNUNet (4.02 M vs. 42.38 M). It is worth noting that SNUNet, incorporating self-attention mechanisms, shows enhanced accuracy over CNN-based methods, albeit with a larger model size of 18.68 M.

When comparing our proposed network with transformer-based approaches, it is evident that BIT, despite its smaller parameter count, lags behind our network by 4.74%, 6.72%, and 7.49% in terms of , F1, and IoU on the LEVIR-CD dataset. Conversely, Changeformer, which utilizes the transformer as its backbone, surpasses CNN-based and self-attention-based methods in terms of , F1, and IoU, indicating the superiority of transformer-based feature extraction for remote sensing images. However, Changeformer exhibits a significantly larger parameter size of 40.5 M, roughly four times the size of our proposed network. Ultimately, our proposed SMBCNet achieves the best overall performance with a of 0.9032, an F1 score of 0.9087, and an IoU of 0.8316. This is attributed to the ability of our method to identify pseudo-change regions from multi-temporal images. Notably, SMBCNet demonstrates its superiority despite having a moderate parameter count of 10.14 M, which is significantly smaller than pure transformer-based Changeformer (47.3 M) and CNN-based DSIFN (42.38 M) methods.
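For reference, the F1 and IoU scores discussed here are conventionally computed from pixel-level confusion counts of the change class. The sketch below uses the standard definitions; the exact averaging protocol used by the authors is not stated in this section, so `cd_metrics` is an assumption rather than their evaluation code.

```python
def cd_metrics(tp: int, fp: int, fn: int) -> dict:
    """Precision, recall, F1, and IoU of the change class from pixel counts."""
    def ratio(num: float, den: float) -> float:
        # Guard against empty denominators (e.g. no predicted change pixels).
        return num / den if den else 0.0

    precision = ratio(tp, tp + fp)
    recall = ratio(tp, tp + fn)
    f1 = ratio(2 * precision * recall, precision + recall)
    iou = ratio(tp, tp + fp + fn)
    return {"precision": precision, "recall": recall, "f1": f1, "iou": iou}

# Illustrative counts only (not taken from the paper).
scores = cd_metrics(tp=80, fp=20, fn=20)
```

When both scores are computed from the same counts, IoU = F1 / (2 − F1), so the two metrics rank methods identically and differ only in scale.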

Compared with our network, the traditional CNN methods FC-EF, FC-Siam-conc, and FC-Siam-diff have much smaller parameter counts, but their accuracy is considerably lower. In terms of F1 on the LEVIR-CD dataset, our network improves on the best-performing of the three by 4.3%. Although DTCDSCN performs better than these three methods, its large parameter count makes it difficult to use in practical applications. This indicates that purely CNN-based methods have limitations in remote sensing change detection.

Figure 8 presents a visual comparison of various CD methods. Misclassified changed pixels are prevalent in the results of all methods except ours. Specifically, the results of SNUNet, BIT, and Changeformer are missing a noticeable part of the building. In the top row of Figure 8, FC-Siam-diff, FC-EF, FC-Siam-conc, DSIFN, SNUNet, BIT, P2V-CD, and Changeformer fail to accurately localize the changed buildings, resulting in erroneous predictions. In contrast, our proposed method detects the changed buildings accurately and completely. Notably, the change maps produced by SMBCNet are visually the closest to the ground truth.

Experimental Results on the WHU-CD Dataset: Table 2 shows the performance metrics for the WHU-CD dataset. The experimental results indicate that FC-EF, FC-Siam-conc, and FC-Siam-diff perform no better than the other methods. Although DSIFN yields higher precision and recall than these three methods, its F1 score lags behind that of Changeformer, the pure transformer-based method. P2V-CD proves to be a promising solution, delivering favorable results across different datasets. Despite being the largest model on the list, DSIFN effectively prevents overfitting by utilizing pretrained encoders. It achieves a precision score of 0.9626 and the second-highest F1 score of 0.9127 on this dataset. Meanwhile, SNUNet fails to deliver competitive results, despite having more parameters than the smaller CDNet model. Notably, our proposed SMBCNet outperforms all other methods by at least 2.82% in F1 score, indicating its superior performance in detecting change between two remote sensing images.

Figure 9 presents a qualitative evaluation of the change detection methods on the WHU-CD dataset, providing a more intuitive comparison. It shows that most CD methods produce spurious changes or missed detections, especially in heavily built-up areas. For example, in row 3 of Figure 9, all methods except ours misclassify an unchanged region as changed, producing the false positives shown in blue.

Table 3 compares our proposed network with the selected method in terms of parameter count and accuracy on the LEVIR-CD dataset. Our approach improves on existing lightweight RSCD methods, which supports its effectiveness for change detection between RSIs. Its scalability and generalizability also make it a promising basis for future research in RSCD. Although SMBCNet does not have the smallest parameter count, it improves on Changeformer, which also employs a transformer-based architecture, in both accuracy and parameter count, reflecting better computational efficiency.

On the other hand, our method stands out, as it accurately identifies change objects while effectively suppressing background interference between the bi-temporal images. Our method utilizes the strong contextual-dependency-capturing ability of transformers by progressively aggregating multilevel temporal difference features in a coarse-to-fine manner. This approach results in a more refined change map for RSCD. The qualitative results indicate that our proposed method outperforms other techniques in terms of detecting change objects with better accuracy and mitigating the negative influence of background interference in the bi-temporal images.

4.6. Ablation Study

To verify the effectiveness of the components and configurations of the proposed SMBCNet, we conduct comprehensive ablation studies on two RSCD datasets.

Effectiveness of transformer backbone. To verify the effectiveness of the transformer-based encoder in our network, we conducted ablation experiments using different lightweight CNN-based backbones. The results are summarized in Table 4, which includes the parameters and accuracy for both the LEVIR-CD and WHU-CD datasets. Note that the “Params” column in Table 4 refers to the size of a single backbone, not the size of the entire model. Additionally, “TB” refers to “transformer blocks”, as illustrated in Figure 2. The table shows that our transformer-based encoder outperforms the CNN-based backbones in both model size and feature extraction capability for RSIs.

Furthermore, we combined our proposed CEM with the compared backbones to validate its effectiveness. The results show that MobileNetV2 combined with CEM achieves the highest accuracy on the LEVIR-CD dataset, while the encoder used by our network comes second. However, MobileNetV2 + CEM contains 14 M parameters, nearly double the number in our proposed encoder.

Effectiveness of MCFM. We devise MCFM to extract change information and fuse temporal features; it is highly interpretable and reflects the essential characteristics of CD. The MCFM aims to account for the diverse nature of changes in RSCD and to enhance them. It has four branches, as illustrated in Figure 5. To validate the effect of each branch on the whole network, we added the four branches in turn, and the results are shown in Table 5. Since our data augmentation includes a strategy that swaps the two images of a pair, “A + DA” is much more accurate than “A”, improving the F1 score by nearly 4.72%. After adding the last two branches, we achieve the highest accuracy, showing that each branch plays a significant role in change feature fusion. We argue that the proposed MCFM allows us to “reduce” CD to semantic segmentation, that is, to tailor an existing, powerful semantic segmentation network to solve CD.

In the course of these MCFM ablation experiments, we also tested the case of choosing only “DA”, “E”, and “D”, but the results were not optimal. This is because, in practice, “Appear” and “Disappear” changes occur randomly, so using only one of the two branches cannot achieve the best result. To simulate this and increase the robustness and generalizability of our model, we used random temporal exchange as a data augmentation technique. Overall, our experiments show that using all four branches gives the best results.
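Random temporal exchange can be sketched in a few lines. The version below is an assumption about how such an augmentation is typically implemented, not the authors' code: the two acquisition dates are swapped with probability `p`, and the binary change label is left untouched because "changed" is symmetric in time. The function name and the value `p = 0.5` are hypothetical.

```python
import random

def random_temporal_exchange(img_a, img_b, label, p=0.5, rng=random):
    """Swap the two acquisition dates with probability p.

    The binary change mask is unaffected by the swap; an "appear" change
    simply becomes a "disappear" change and vice versa.
    """
    if rng.random() < p:
        img_a, img_b = img_b, img_a
    return img_a, img_b, label
```

Applied during training, this exposes the network to both temporal orderings of every pair, which is consistent with the observation that the “A” and “DA” branches are needed together.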

As shown in Figure 10, we use heatmaps to visualize the feature maps produced by the MCFM. These heatmaps are generated by analyzing the variance of all feature maps. After applying MCFM, regions that have undergone changes exhibit increased energy, with particularly high intensity at the edges of the target. We can observe that the low-resolution feature map (e) is responsible for localizing the change area, while the high-resolution feature map (d) is responsible for making the edges of the changed object more accurate. In essence, this approach strengthens and precisely localizes the edges of the changed target, leading to improved detection performance.
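A variance-based heatmap of this kind can be computed as follows. This is a minimal sketch under the assumption that the variance is taken across the channel axis at each spatial location and then min-max normalized for display; the text does not specify these details, and `variance_heatmap` is a hypothetical name.

```python
import numpy as np

def variance_heatmap(features: np.ndarray, eps: float = 1e-8) -> np.ndarray:
    """Collapse a (C, H, W) feature tensor into an (H, W) heatmap in [0, 1].

    Assumption: variance is computed over the channel axis per pixel.
    """
    var = features.var(axis=0)
    return (var - var.min()) / (var.max() - var.min() + eps)
```

Locations where the channels disagree strongly receive values near 1, which is one plausible reading of the "increased energy" described for changed regions.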

Influence of the size of the transformer blocks. We conducted an analysis of the effect of increasing the size of the encoder on the performance and model efficiency, and

Table 6 summarizes the results for the three datasets. The

and

mentioned in Section

Figure 3 control the size of our encoder. We observed that the increasing size of the encoder does not lead to consistent improvements in performance, while

and

achieves the best performance on the LEVIR-CD dataset. However, we also observed that the accuracy of the model does not consistently improve on the LEVIR-CD dataset with the progression of

and

. We believe that this inconsistency may be due to variations in overfitting tendencies that we observed during the model training, which are directly influenced by the size of the encoder.

While the combination of and yielded the best accuracy results, it also exhibited potential drawbacks regarding real-time processing. The larger encoder sizes presented in and strike a balance between accuracy and computational cost. By selecting these parameters, we aim to achieve a reasonable level of accuracy while ensuring that the model can operate efficiently in real-time scenarios.

Influence of , the MLP decoder channel dimension. We present an analysis of the impact of the channel dimension

in the MLP decoder, as discussed in

Section 3.4. In

Table 7, we demonstrate the model’s performance and parameters as a function of this dimension. Our findings indicate that setting

yields highly competitive performance with minimal computational cost. As the value of

increases, the model’s performance improves, resulting in larger and less efficient models. It is worth noting that an excessively large value of

may lead to overfitting and a subsequent decrease in the model’s accuracy, despite an increase in the number of parameters. Based on these findings, we select

as the optimal dimension for our final SMBCNet.