Abstract

To address blurred detection boundaries, missed detection of small targets, and frequent pseudo-changes in high-resolution remote sensing image change detection, a change detection algorithm based on Siamese neural networks, Siam-FAUnet, is proposed. Siam-FAUnet performs change detection end to end. First, an improved VGG16 is used as the encoder to extract image features. Second, the atrous spatial pyramid pooling (ASPP) module enlarges the receptive field of the model so that it can make full use of the global information of the image and obtain multi-scale contextual information. Third, the flow alignment module (FAM) fuses the low-level features of the encoder into the decoder and resolves the semantic misalignment caused by directly concatenating features during fusion, so as to obtain the change region of the image. The model is trained and tested on the publicly available CDD and SZTAKI datasets. The results show that Siam-FAUnet improves on the baseline model: the F1-score increases by 4.00% on CDD and by 7.32% and 2.62% on the SZTAKI sub-datasets SZADA and TISZADOB, respectively. Compared with other state-of-the-art methods, Siam-FAUnet also achieves better evaluation metrics, indicating good detection performance.

1. Introduction

Remote sensing images have long served as a crucial conduit of information for humankind []. They are generated by capturing visual data through photographic or non-photographic methods from a distance without physical contact with the objects of interest. Remote sensing techniques enable the swift and extensive surveillance of the Earth’s surface, furnishing accurate and impartial information on terrestrial features. Therefore, remote sensing technology represents the sole means currently at our disposal to perform the rapid and real-time monitoring of the Earth’s surface over large areas [,,].

The change detection of remote sensing image is to extract and analyze the changes in the surface area, including the changes in the location, scope, and nature of the area, by combining the characteristics of ground objects, remote sensing imaging mechanism, and relevant geospatial data, using the mathematical model and image processing theory of multi-source remote sensing images of the same surface during different periods []. Currently, remote sensing image change detection is widely applied in various applications such as monitoring urban changes [], analyzing land use and coverage changes [], assessing natural disasters [], and environmental monitoring [], etc. With the development of remote sensing platforms and sensors, a large number of multi-source, multi-scale, and multi-type remote sensing images have been accumulated; however, remote sensing imaging is easily affected by external conditions, and the images may be coarse and contain a large number of mixed pixels. Super-resolution mapping (SRM) can analyze such mixed pixels and obtain mapping information at the sub-pixel level. Wang et al. [] proposed a spatial-spectral correlation (SSC) method using a mixed spatial attraction model (MSAM) based on linear Euclidean distance to obtain spatial correlation, combined with a spectral correlation method based on the nonlinear Kullback–Leibler distance (KLD). The combination of spatial correlation and spectral correlation reduces the effect of linear and nonlinear imaging conditions. Li et al. [] used the spatial distribution of fine spatial resolution images to improve the accuracy of spectral unmixing, performed spectral unmixing with coarse spatial resolution images, integrated the spatial distribution information of fine spatial resolution images into the original abundance images, and used the improved abundance values to generate sub-pixel maps of fine spatial resolution using sub-pixel mapping methods. 
The study of sub-pixel-level mapping can reduce the influence of remote sensing images by external factors. At the same time, remote sensing images also exhibit high resolution, strong timeliness, and diversity [,]. As a result, low-to-medium resolution remote sensing image change detection is no longer sufficient to meet demands. Therefore, high-resolution remote sensing image change detection has become a popular but difficult research topic in the field of remote sensing [].

In the early stages of remote sensing change detection research, manual visual interpretation methods were primarily used. However, these methods are labor-intensive, time-consuming, and have low interpretation efficiency [], making them inadequate for meeting the practical needs of production and development. Subsequently, traditional change detection methods such as the post-classification comparison method [,,,], the direct comparison analysis method [,,,], change vector analysis (CVA) method [,], and principal component analysis (PCA) method [,,,] were introduced. While these methods can quickly identify change areas, they have higher requirements for image preprocessing and often require threshold segmentation and cluster analysis, etc. The process of change detection in remote sensing images still heavily relies on manual intervention, which can lead to large errors and failure to fully utilize the rich ground object information contained in high-resolution images. This results in the low accuracy and poor performance of change detection. Therefore, the development of techniques for the machine-based, automatic determination of change areas is a critical aspect of research in this field [].

Since the introduction of AlexNet [] in 2012, deep learning has flourished and has been rapidly applied to change detection. Deep networks learn the multi-level features of images autonomously through training, without manual feature extraction, and are therefore widely applicable []. Deep learning offers powerful image processing, data analysis, and automation capabilities. Change detection is essentially a problem of classifying the pixels of an image as changed or unchanged, and deep learning can solve this type of problem effectively [,]. Unlike traditional detection methods, change detection combined with deep learning is treated as a semantic segmentation task, which eliminates the need for manual intervention and allows end-to-end detection. This approach can quickly process large amounts of data, thus improving efficiency and accuracy. With the introduction of deep learning, change detection based on remote sensing images has developed rapidly: it avoids tedious manual feature extraction and classification and provides the technical means for the intelligent and efficient processing of remote sensing images []. Currently, change detection based on deep learning can be divided into two categories according to the network structure: single-branch and multi-branch structures [].

The single-branch structure employs a single sub-network: the bi-temporal images are combined into a single input, by difference comparison or superposition, and only one core feature extraction model is needed for change detection. For example, Chang Zhenliang et al. [] proposed optimizing the DeepLabv3+ network with heterogeneous receptive fields and multi-scale feature tensors. The change detection model proposed by Zhang et al. [] uses a feature pyramid and a trapezoidal U-Net; in the encoder, the model incorporates dilated convolutions with different dilation rates to ensure sufficient receptive field coverage of objects of different sizes, so that multi-scale features can be learned effectively. However, the U-Net network may capture less context information because of the trade-off between precise localization and contextual information. Zhang Cuijun et al. [] proposed a change detection model that combines asymmetric convolution blocks and the convolutional block attention module (CBAM) to improve the U-Net network and converts change detection into a pixel-level binary classification task, thereby achieving end-to-end detection. Tian Qinglin et al. [] proposed a building change detection method based on an attention pyramid network. It adds dilated convolution and a pyramid pooling structure to the CNN in the encoder to expand the receptive field and extract multi-scale features, introduces an attention mechanism in the decoder, and uses top–down dense connections to compute the feature pyramid, fully fusing multi-level features to reduce the missed detections and indistinct boundaries that arise for objects at multiple scales. Ji Shunping et al. [] proposed the FACNN model, which uses the U-Net framework and obtains finer multi-scale information by replacing the ordinary convolutions in the encoder with dilated convolutions and by using atrous spatial pyramid pooling (ASPP). Similarly, Peng et al. [] proposed an improved U-Net++ detection model that employs a multi-side output strategy, making use of both global and fine-grained information to mitigate the problem of error propagation in change detection. Papadomanolaki et al. [] used U-Net to extract the spatial features of images and LSTM to obtain temporal features, in order to fully exploit the spatio-temporal information of high-resolution images and improve change detection performance. Wang et al. [] proposed using Faster R-CNN for the change detection of high-resolution images and designed two detection models: MFRCNN merges the bi-temporal image bands and feeds them into Faster R-CNN for detection, while SFRCNN generates difference images before detection. Their experimental results show that SFRCNN has a higher detection accuracy, whereas the MFRCNN architecture is simpler and highly automatic. However, single-branch detection models of this type, which often feed images into the network through difference comparison or superposition, can lose the high-dimensional features of the original images and produce detection errors.

The multi-branch structure in deep learning-based change detection takes two data inputs and fuses the bi-temporal features from two sub-networks to extract change information. It can be further divided into two categories: Siamese networks and pseudo-Siamese networks. The main difference between the two is that Siamese networks share weights when the two branches extract image features, while pseudo-Siamese networks do not. Among multi-branch models with shared sub-network weights, Chopra et al. [] used a Siamese network for face verification, which not only preserves the complete spatial information of the image but also requires no prior information about the categories, giving it an advantage over other networks. Zhan Y. et al. [] used a Siamese convolutional neural network with a contrastive loss function for pixel-level remote sensing image change detection: each pixel in the input image generates a 16-dimensional vector, and the similarity of the vectors generated for corresponding pixels of the two images is compared to determine, pixel by pixel, whether a change has occurred. Zhang et al. [] employed a Siamese convolutional network to extract highly representative deep features and presented a deep-supervised Siamese fully convolutional neural network (IFN) with a deep supervision strategy to supervise the model’s output, thereby enhancing the network’s discriminative ability. Similarly, Raza et al. [] used a CBAM for feature fusion and designed an efficient convolution module to improve U-Net++, thereby enhancing the model’s ability to extract fine-grained image features and improving detection performance. Wang et al. [] proposed a deep supervision network, which is based on the CBAM of the Siamese structure for change detection and can make full use of the ground feature information of the image. Similarly, Jiang et al. [] proposed a Siamese network based on feature pyramids, which uses an encoder–decoder architecture with a shared attention module at the end of the encoder to enable the model to find unique objects in other images and address the complex relationship between buildings and displacements in building change detection in remote sensing orthophotos. Shi et al. [] used a Siamese convolutional neural network (CNN) to extract image features and introduced an attention mechanism and a deep supervision module to obtain change maps with rich spatial information, effectively reducing the influence of pseudo-changes and noise caused by external factors in the detection maps. Zhang et al. [] proposed the DSIFN model, which uses a Siamese CNN to extract deep image features, uses a deeply supervised difference discrimination network for change detection, and introduces an attention mechanism in the difference discrimination network to make full use of the fine image details and complex texture features in high-resolution images. Bandara et al. [] used two transformer encoder structures to extract multilayer features and designed a lightweight multilayer perceptron decoder to fuse feature differences and predict change information; the model captures long-range contextual information to identify changes in images at both the spatial and temporal scales. Fan Wei et al. [] proposed the MDFCD model, which uses a Siamese CNN to extract deep features from multiple layers and fuses high-level and low-level features at multiple scales to make full use of the texture features and semantic information in images. Chen et al. [] proposed the SiamCRNN model for the change detection of heterogeneous data, which combines CNN and RNN to process images: spatial-spectral features are extracted using a Siamese CNN (a pseudo-Siamese CNN is used for heterogeneous data), and change information is then fully mined using an RNN. Fang et al. [] proposed the DLSF model with a hybrid Siamese structure, consisting of two branches for dual-learning feature extraction and Siamese-based change detection, respectively. Wang et al. [] proposed the DSCNH model, a Siamese convolutional neural network based on a hybrid convolutional feature module, to achieve the change detection of multi-sensor images. Additionally, Xu et al. [], Liu et al. [], Wiratama et al. [], and Touati et al. [] developed pseudo-Siamese networks, each composed of two parallel convolutional neural networks with non-shared weights, to accomplish the task of change detection. The multi-branch detection model is capable of effectively preserving the high-dimensional features of the image. However, the complexity of high-resolution image information can still lead to issues such as blurred detection boundaries, missed detection of small targets, and frequent pseudo-changes.
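The difference between Siamese and pseudo-Siamese branches described above can be illustrated with a toy example. The sketch below is only an illustration under stated assumptions: `TinyEncoder` is a hypothetical one-layer feature extractor with random weights, not any of the cited networks, and the three-value "pixel" is made up.

```python
import random


class TinyEncoder:
    """Hypothetical one-layer extractor: y_j = relu(sum_i w[j][i] * x[i])."""

    def __init__(self, in_dim, out_dim, seed):
        rng = random.Random(seed)
        self.w = [[rng.uniform(-1.0, 1.0) for _ in range(in_dim)]
                  for _ in range(out_dim)]

    def __call__(self, x):
        return [max(0.0, sum(wi * xi for wi, xi in zip(row, x)))
                for row in self.w]


def siamese_features(encoder, x_t1, x_t2):
    # Siamese: one encoder (one set of weights) processes both dates.
    return encoder(x_t1), encoder(x_t2)


def pseudo_siamese_features(encoder_a, encoder_b, x_t1, x_t2):
    # Pseudo-Siamese: each date has its own encoder with separate weights.
    return encoder_a(x_t1), encoder_b(x_t2)


def l1_distance(f1, f2):
    return sum(abs(a - b) for a, b in zip(f1, f2))


pixel = [0.2, 0.5, 0.1]            # the same (unchanged) pixel at both dates
shared = TinyEncoder(3, 4, seed=0)
other = TinyEncoder(3, 4, seed=1)

d_siamese = l1_distance(*siamese_features(shared, pixel, pixel))
d_pseudo = l1_distance(*pseudo_siamese_features(shared, other, pixel, pixel))
print(d_siamese, d_pseudo)
```

With shared weights, identical inputs always map to identical features, so the feature distance of an unchanged pixel is exactly zero. With separate weights this is generally not the case, and the two branches have to learn compatible feature spaces during training.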

Continuous exploration by researchers has resulted in the widespread application and significant development of remote sensing change detection technology in many fields. However, the diversity and variability of remote sensing image sensors, changed areas, and real-world scenarios preclude the existence of a change detection method that can be universally applied to all application scenarios, rendering change detection methods lacking in generality. After a comprehensive analysis of the current research status, the high-resolution remote sensing image change detection is faced with several challenges and shortcomings:

- Remote sensing images exhibit internal complexity and distinct characteristics in the expression of features as compared to images used in everyday settings. Remote sensing images are large-scale observations of the Earth, with original sizes that are significantly larger than those of regular images. With increasing image resolution, more detailed parts of the features can be resolved, leading to increased information content in the images. Nevertheless, the majority of research scenes are typically limited to specific scenarios with relatively low information content, thereby complicating change detection in high-resolution remote sensing images. As the multi-source heterogeneous data sources born with the continuous development of remote sensing technology have differences in imaging modes, the traditional change detection techniques cannot accurately identify the change areas, and the current methods, which mainly target the change detection between different data sources, are not universal for multimodal data application scenarios.

- The low degree of automation is a major challenge in traditional change detection methods, which require a considerable amount of manual intervention, leading to a high degree of uncertainty and a significant impact of human factors on the results. One of the current difficulties is to extract features automatically, simplify the process, and improve the level of automation. Moreover, because the datasets used in research are diverse, change detection methods that are universal and generalize well need further investigation.

- Blurred boundaries, missed detection of small targets, and pseudo-changes. High-resolution remote sensing image information is complex, and shallow information is lost when the change detection network extracts features, resulting in blurred detection boundaries and missed small targets. Further research on multi-scale information extraction is needed to improve detection performance. In addition, the imaging of remote sensing images is often susceptible to disturbances from external factors such as lighting, sensors, and human factors, which can result in pseudo-changes. Change detection, which involves comparing two remote sensing images obtained at different times, can be compromised if these external factors interfere with the imaging process, leading to inaccurate results. As a result, reducing the impact of pseudo-changes has become one of the primary challenges in remote sensing image change detection. It is critical to devise strategies to minimize their interference and exclude them from the results.

In response to the limitations of existing research, we developed a new change detection algorithm called Siam-FAUnet. It aims to address blurred detection boundaries, missed detection of small targets, and frequent pseudo-changes in the change detection of high-resolution remote sensing images; to make full use of the global information of images; and to solve the problems of shallow information loss and the semantic misalignment caused by the direct concatenation of features during feature fusion. The algorithm incorporates a Siamese structure, an atrous spatial pyramid pooling module, and a flow alignment module to extract the changed areas and features of bi-temporal remote sensing images more accurately.

The contributions of this research are highlighted as follows:

- This paper proposes a high-resolution image change detection model, called Siam-FAUnet, based on the Siamese structure. The Siamese network was first proposed by Bromley et al. [] in 1994, and it is used to extract change features to alleviate the influence of sample imbalance. Moreover, the bi-channel input of the two temporally separated remote sensing images can reduce the image distortion caused by channel overlay.

- The proposed model employs an encoder–decoder architecture, where an enhanced VGG16 is employed in the encoder section for feature extraction. To capture contextual features across multiple scales, the atrous spatial pyramid pooling (ASPP) module [] is employed. Atrous convolution with varying dilation rates is used to sample input features, followed by a concatenation of the results to enlarge the network’s receptive field, which enables the finer classification of remote sensing images. In the decoder section, the flow alignment module (FAM) [] is utilized to combine the features extracted by the encoder. FAM can learn the semantic flow between the features of different resolutions, and efficiently transfer the semantic information from coarse features to refined features with high resolution. This mitigates semantic misalignment issues caused by direct feature concatenation during feature fusion.

The Siam-FAUnet model is based on the Siamese structure, which effectively preserves the complexity of the high-dimensional image information, reduces the impact of sample imbalance on detection, and reduces image distortion. The ASPP module extracts multi-scale contextual features, which can increase the receptive field of the network, and the FAM module fuses feature information to solve the problem of semantic flow misalignment caused by the direct concatenation of features. The proposed model is based on the Siamese structure and combines ASPP and FAM modules to solve the problem of the loss of shallow information of high-dimensional image features, making full use of the global information of images to achieve multi-scale information extraction, and effectively solve the problems of blurred boundaries, missed detection of small targets, and more pseudo changes in change detection.

The chapters of the article are organized as follows:

Section 1 introduces the background and significance of remote sensing change detection research, reviews the current state of research on remote sensing image change detection, and summarizes its difficulties and shortcomings. Section 2 describes the proposed model, including its general structure, each module, and the loss function, as well as the experimental setup: the data, the environment, the evaluation metrics, and the comparison methods. Section 3 presents the experimental results qualitatively and quantitatively. Section 4 summarizes and explains the results and discusses how they compare with those of other studies. Section 5 summarizes the main content and work of the paper, analyzes its shortcomings, and provides an outlook on future research directions.

2. Materials and Methods

2.1. Structure of Change Detection Network Based on Siam-FAUnet

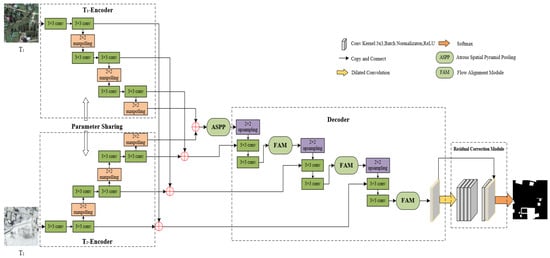

The proposed Siam-FAUnet model is illustrated in Figure 1 and comprises two parts: the prediction module and the residual correction module. The prediction module, which is composed of an encoder and a decoder, receives remote sensing images from two different times (T1 and T2). By combining atrous spatial pyramid pooling (ASPP) and the flow alignment module (FAM), the network applies multiple consecutive convolution and deconvolution operations to extract deep, multi-scale feature information and predict the changed areas. The residual correction module reduces the residual between the prediction and the ground truth, thereby enhancing the accuracy of change detection and producing more accurate binary change maps.

Figure 1. Siam-FAUnet structure diagram.

2.2. Prediction Module

To address the problem that the model’s limited receptive field cannot cover multi-scale target objects, which leads to insufficiently refined change detection results and indistinct change boundaries, a multi-branch encoder–decoder network is implemented in the prediction module. A single-branch model, which usually feeds the images into the network by difference comparison or superposition, easily loses the high-dimensional features of the original images. The Siamese structure of Siam-FAUnet preserves these high-dimensional features effectively. However, because high-resolution image information is complex, the detection results can still suffer from blurred detection boundaries, missed detection of small targets, and frequent pseudo-changes caused by the loss of shallow feature information.

The atrous spatial pyramid pooling (ASPP) and the flow alignment module (FAM) are used in the encoder–decoder structure. The ASPP module introduces dilation rates into the convolutional layer; the dilation rate defines the spacing between the input values that the kernel samples. This avoids the loss of internal data structure, spatial hierarchical information, and small-object information that downsampling by pooling causes in ordinary convolutional networks, and it yields a larger receptive field and multi-scale information, improving the detection of small-object changes. However, atrous convolution can cause a gridding effect and lose the continuity of information, and an excessively large dilation rate samples distant positions whose information is unrelated, so that detection is effective only for large objects and semantic information is lost during feature transfer. FAM learns the semantic flow between features of different resolutions and generates a semantic flow field, which effectively transfers semantic information from coarse features to high-resolution refined features and solves the semantic misalignment caused by direct concatenation during feature fusion. Combining the multi-scale contextual information obtained by the ASPP module with the feature mapping of the FAM module recovers part of the semantic information lost in the encoding stage, effectively fuses multi-scale information, and solves the problems of shallow information loss and the alignment of feature positions at different scales.
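The effect of the dilation rate on the receptive field, and the gridding effect mentioned above, can be made concrete with a small one-dimensional sketch. It is only an illustration: the kernel size of 3 and the rates 1, 6, 12, and 18 are assumptions chosen for the example, not values taken from this paper, and the helper names are hypothetical.

```python
def effective_kernel_size(kernel_size, dilation):
    """Span covered by a dilated kernel: k + (k - 1) * (d - 1)."""
    return kernel_size + (kernel_size - 1) * (dilation - 1)


def sampled_offsets(kernel_size, dilation):
    """Input offsets (relative to the centre) read by one odd-sized dilated kernel."""
    half = kernel_size // 2
    return [dilation * i for i in range(-half, half + 1)]


def dilated_conv1d(signal, kernel, dilation):
    """Same-size 1-D dilated convolution with zero padding."""
    offsets = sampled_offsets(len(kernel), dilation)
    out = []
    for centre in range(len(signal)):
        total = 0.0
        for weight, off in zip(kernel, offsets):
            idx = centre + off
            if 0 <= idx < len(signal):
                total += weight * signal[idx]
        out.append(total)
    return out


for rate in (1, 6, 12, 18):        # illustrative rates, not from the paper
    print(rate, effective_kernel_size(3, rate), sampled_offsets(3, rate))

# A single bright pixel only reaches outputs exactly `dilation` steps away;
# the positions in between are skipped, which is the gridding effect.
impulse = [0.0] * 9
impulse[4] = 1.0
response = dilated_conv1d(impulse, [1.0, 1.0, 1.0], dilation=3)
print(response)
```

A 3-tap kernel with rate 6 spans 13 input positions but still reads only 3 of them, which is why a large rate widens the receptive field at no extra cost in parameters while leaving gaps between the sampled positions.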

The encoder employs the first four blocks of VGG16 with a Siamese structure for feature extraction. Each block consists of two convolutional layers and a max pooling layer with a window size of 2 × 2 and a stride of 2. These layers are followed by a batch normalization layer and ReLU activation function. The convolution blocks use a convolution kernel size of 3 × 3.

The four encoder blocks contain 32, 64, 128, and 256 convolution kernels, respectively. Remote sensing images contain objects with varying scales and inconsistent textures and colors, so extracting semantic information with pooling layers alone may fail to capture entire objects, leading to problems such as indistinct change boundaries in the results. To mitigate this, atrous spatial pyramid pooling (ASPP) is incorporated in the last layer of the encoder to fuse features, obtain context information at varying scales within the image, and improve detection accuracy.
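As a rough check of how the four blocks shrink the feature maps, the sketch below tracks the output shape of each block. Several details are assumptions that the text above does not state: a 256 × 256 input, 3 × 3 convolutions padded so that they keep the spatial size, and one 2 × 2 pooling step with stride 2 at the end of every block. The function name `encoder_shapes` is hypothetical.

```python
def encoder_shapes(height, width, channels=(32, 64, 128, 256)):
    """Return (channels, height, width) after each encoder block.

    Assumes size-preserving 3x3 convolutions and a 2x2 max pooling
    with stride 2 at the end of every block.
    """
    shapes = []
    for c in channels:
        # Only the pooling layer changes the spatial size (it halves it).
        height, width = height // 2, width // 2
        shapes.append((c, height, width))
    return shapes


for block, shape in enumerate(encoder_shapes(256, 256), start=1):
    print(f"block {block}: {shape}")
```

Under these assumptions the ASPP module at the end of the encoder would operate on a 16 × 16 map with 256 channels, which is where enlarging the receptive field without further pooling is most useful.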

The decoder, which corresponds to the encoder, is also composed of five blocks. The first four blocks of this section are parallel to the corresponding blocks in the encoder and feature mapping is performed using the flow alignment module (FAM) to recover some of the semantic information that was lost during the encoding stage. The FAM is utilized instead of the traditional method of direct concatenation for up-sampling, which reduces the loss of semantic information during feature transfer.

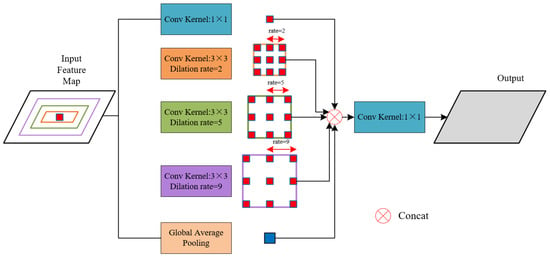

- Atrous spatial pyramid pooling (ASPP) is used to address the large variation in the scale of objects in remote sensing images and the randomness of change locations. The traditional pooling operation samples at a fixed scale, so the network cannot fully utilize the global information of the image, and the segmentation quality varies significantly between objects of different scales. The ASPP module addresses these issues, and its structure is shown in Figure 2. ASPP uses atrous convolutions with various dilation rates to sample the input features and then concatenates the results. A 1 × 1 convolutional layer then reduces the number of channels of the feature map to the desired amount. Incorporating the ASPP module enlarges the receptive field of the network, allowing it to extract features from a wider area while preserving the details of the original image. As a result, the network can classify remote sensing images more finely, leading to improved change detection performance.

Figure 2. Schematic diagram of the atrous spatial pyramid pooling (ASPP).

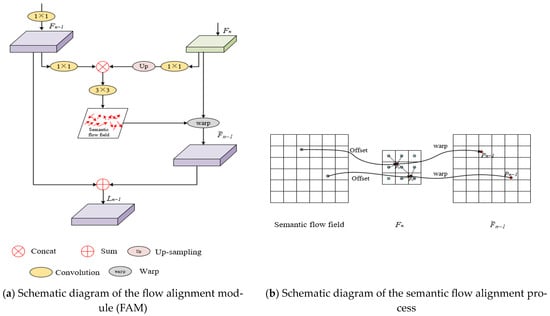

- The structure of the flow alignment module (FAM) is depicted in Figure 3, where Figure 3a illustrates the overall structure of FAM and Figure 3b shows the semantic flow alignment process. FAM takes the feature maps $F_n$ and $F_{n-1}$ from two adjacent layers, where the resolution of $F_{n-1}$ is higher than that of $F_n$. FAM first increases the resolution of $F_n$ to match that of $F_{n-1}$ through bilinear interpolation and then concatenates the two feature maps. The concatenated feature maps are then processed by a sub-network comprising two 3 × 3 convolutional layers. From the input feature maps $F_n$ and $F_{n-1}$, the FAM module thus generates a semantic flow field, which improves the up-sampling of the low-resolution features. The semantic flow field maps each point $P_{n-1}$ on the high-resolution spatial grid to a point $P_n$ at level $n$ through simple addition. A differentiable bilinear sampling mechanism then interpolates the values of the four neighboring pixels of $P_n$ (upper left, upper right, lower left, and lower right) to estimate the final output of the FAM module, $\tilde{F}_n(P_{n-1})$, as given in Formula (1):

  $$\tilde{F}_n(P_{n-1}) = \sum_{p \in N(P_n)} \omega_p F_n(p) \tag{1}$$

  where $\omega_p$ is the bilinear interpolation kernel weight, which is estimated from the distance between $p$ and $P_n$; $F_n(p)$ is the value of the low-resolution feature map at position $p$; and $N(P_n)$ denotes the four neighboring positions of $P_n$. The semantic flow field allows FAM to learn the correlation between features of different resolutions. The generated offset field, also known as the semantic flow field, effectively transfers semantic information from coarse features to high-resolution refined features, thus resolving the semantic misalignment caused by directly concatenating features during fusion.

Figure 3. Schematic diagram of the flow alignment module (FAM): (a) overall structure of FAM; (b) schematic diagram of the semantic flow alignment process.
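The flow-guided up-sampling performed by FAM can be sketched for a single-channel feature map as follows. This is a minimal illustration under stated assumptions, not the implementation used in the paper: the flow field is supplied by hand rather than predicted by the two 3 × 3 convolutions, offsets are expressed in high-resolution pixels, and dividing by the resolution ratio (`scale=2`) to convert coordinates between levels is an assumption. The function names are hypothetical.

```python
import math


def bilinear_sample(feature, y, x):
    """Interpolate a 2-D list `feature` at the real-valued position (y, x)."""
    h, w = len(feature), len(feature[0])
    # Clamp so that the four neighbours always lie inside the map.
    y = min(max(y, 0.0), h - 1.0)
    x = min(max(x, 0.0), w - 1.0)
    y0, x0 = int(math.floor(y)), int(math.floor(x))
    y1, x1 = min(y0 + 1, h - 1), min(x0 + 1, w - 1)
    dy, dx = y - y0, x - x0
    # Weights fall off linearly with distance to each neighbour and sum to 1.
    return ((1 - dy) * (1 - dx) * feature[y0][x0]
            + (1 - dy) * dx * feature[y0][x1]
            + dy * (1 - dx) * feature[y1][x0]
            + dy * dx * feature[y1][x1])


def flow_warp(low_res, flow, scale=2):
    """Up-sample `low_res` onto the high-resolution grid guided by `flow`.

    flow[i][j] = (dy, dx) is the offset, in high-resolution pixels,
    for the high-resolution grid position (i, j).
    """
    out = []
    for i, row in enumerate(flow):
        out_row = []
        for j, (dy, dx) in enumerate(row):
            # Shift the grid point by its offset (simple addition),
            # then convert to low-resolution coordinates.
            y_n, x_n = (i + dy) / scale, (j + dx) / scale
            out_row.append(bilinear_sample(low_res, y_n, x_n))
        out.append(out_row)
    return out


low = [[0.0, 2.0],
       [4.0, 6.0]]
zero_flow = [[(0.0, 0.0)] * 4 for _ in range(4)]
warped = flow_warp(low, zero_flow)           # plain bilinear up-sampling
shifted = flow_warp(low, [[(0.0, 1.0)]])     # one point moved right by 1 pixel
print(warped[1][1], shifted[0][0])
```

With a zero flow field the operation reduces to ordinary bilinear up-sampling; a non-zero offset makes each high-resolution position read from a shifted location in the coarse map, which is how the module can realign features before they are fused.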


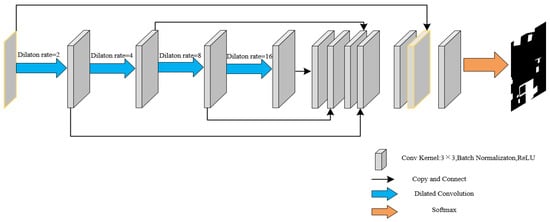

2.3. Residual Correction Module

If the detection results of the prediction module are not corrected, problems such as patch holes and unsmooth detection boundaries are likely to exist, so there is a large difference between the final predicted results and the actual changes. In order to improve the accuracy of the change detection, the proposed model includes a residual correction module that utilizes dilated convolution. This module takes in the output of the prediction module, which is the semantic information of the image, and learns the difference, also known as the residual, between this output and the ground truth during the training process. By applying this residual correction, the input image is further refined, and more accurate semantic segmentation results are obtained.

The high-resolution remote sensing image poses a significant challenge in feature extraction, as the characteristics of ground objects, such as texture, spectrum, and shape, vary greatly. Traditional methods fail to take into account the different scales of ground objects and the surrounding background, and are therefore unable to extract the global features of the image. This leads to unclear boundaries of ground objects in change detection results, incomplete detection, and other issues. To address this problem, the proposed dilated convolution residual correction module has multi-scale receptive fields, enabling the extraction of deeper image features.

The structure of the dilated convolution residual correction module is shown in Figure 4. The module has four convolutional layers with dilation rates of 2, 4, 8, and 16, and the number of dilated convolution kernels is 32. Each convolutional layer is followed by batch normalization and a ReLU activation function, and the feature maps of the different receptive fields are fused through superposition. The input of this module contains the initial prediction information, and adding the input to the obtained feature map yields more detailed change information. The final corrected change detection image is then obtained by using the Softmax function to classify changed and non-changed pixels.
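To give a sense of the multi-scale receptive fields, the spatial extent covered by a single dilated kernel can be computed as k + (k − 1)(d − 1) for kernel size k and dilation rate d. The sketch below applies this to the four dilation rates stated above. The 3 × 3 kernel size is an assumption, since the text does not state it, and the helper name `effective_kernel_size` is hypothetical.

```python
def effective_kernel_size(kernel_size, dilation):
    """Spatial extent covered by one dilated convolution kernel."""
    return kernel_size + (kernel_size - 1) * (dilation - 1)


DILATION_RATES = [2, 4, 8, 16]  # rates stated in the text
KERNEL_SIZE = 3                 # assumption: 3 x 3 kernels

extents = {d: effective_kernel_size(KERNEL_SIZE, d) for d in DILATION_RATES}
for dilation, extent in extents.items():
    print(f"dilation {dilation:2d} -> covers {extent} x {extent} pixels")
```

Under this assumption a single layer with dilation rate 16 already spans 33 × 33 pixels while using only nine weights per kernel, which is why dilated convolutions enlarge the receptive field without adding parameters.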

Figure 4.

Residual correction module structure diagram.

2.4. Loss Function

The cross-entropy (CE) loss function [] is a loss function based on empirical error minimization. Its definition is shown in Formula (2).

$$L_{CE} = -\left[ y \log y' + (1 - y) \log (1 - y') \right] \tag{2}$$

where y is the actual value of the sample, and y′ is the predicted value of the model output. Although the cross-entropy loss function can optimize the overall accuracy of model classification, when the samples of different categories in the dataset are unevenly distributed, the predictions of a network trained with this loss function are biased towards the category with more samples, while the category with fewer samples tends to be ignored. As a result, the loss value can be small even though the prediction results for the category with a small proportion of samples are poor.

The Dice loss function, which is defined in Formula (3), takes into account the proportion of the intersection of pixels of the same category in the population during the training process, rather than analyzing the overall accuracy of model classification as in the case of the cross-entropy loss function. This approach makes the model less sensitive to the uneven distribution of samples among different categories, resulting in improved prediction results for categories with small sample sizes. The variable M represents the total number of pixels, Pm is the prediction result, rm is the true value of the sample, and ε is a very small real number that is used to prevent division by zero.

In order to optimize the performance of the change detection model, this paper proposes the use of a combination of the CE loss function and the Dice loss function. The CE loss function, commonly used in image classification tasks, calculates the difference between the predicted output and the actual value of the samples. However, in remote sensing change detection, the distribution of changed and unchanged pixels is often uneven, leading to errors. The Dice loss function, on the other hand, calculates the proportion of the intersection of all pixels of the same category in the population, which is less affected by the imbalance in the sample distribution. By assigning a weight λ to the CE–Dice loss function, the model can achieve better performance in detecting changes in remote sensing images. Its definition is shown in Formula (4).

where LCE–Dice denotes the CE–Dice loss used in this research, defined as the weighted sum of the CE loss (LCE) and the Dice loss (LDice), and λ adjusts the relative weight of the two terms. Because the result depends on the value of λ, an experiment is conducted to determine its optimal value for the proposed loss function.
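The combined loss can be sketched in a few lines of plain Python. This is an illustration under stated assumptions rather than the authors' code: the binary cross-entropy is averaged over pixels, the Dice term uses one common form, 1 − (2 Σ pm rm + ε) / (Σ pm + Σ rm + ε), and λ is assumed to weight the CE term while (1 − λ) weights the Dice term. The text only states that λ balances the two terms, so the exact weighting is a guess, and the function names are hypothetical.

```python
import math


def cross_entropy_loss(y_true, y_pred, eps=1e-7):
    """Mean binary cross-entropy over all pixels."""
    total = 0.0
    for y, p in zip(y_true, y_pred):
        p = min(max(p, eps), 1.0 - eps)  # clip to avoid log(0)
        total -= y * math.log(p) + (1 - y) * math.log(1 - p)
    return total / len(y_true)


def dice_loss(y_true, y_pred, eps=1e-7):
    """1 - Dice coefficient; eps guards against division by zero."""
    intersection = sum(y * p for y, p in zip(y_true, y_pred))
    denominator = sum(y_pred) + sum(y_true)
    return 1.0 - (2.0 * intersection + eps) / (denominator + eps)


def ce_dice_loss(y_true, y_pred, lam=0.5):
    """Weighted sum of both losses.

    ASSUMPTION: lam weights the CE term and (1 - lam) weights the Dice term.
    """
    ce = cross_entropy_loss(y_true, y_pred)
    dice = dice_loss(y_true, y_pred)
    return lam * ce + (1.0 - lam) * dice


# Flattened toy label map with one changed pixel out of four.
labels = [1, 0, 0, 0]
good_prediction = [0.9, 0.1, 0.1, 0.1]
bad_prediction = [0.1, 0.1, 0.1, 0.1]  # misses the changed pixel

good_loss = ce_dice_loss(labels, good_prediction)
bad_loss = ce_dice_loss(labels, bad_prediction)
```

In the toy example the prediction that misses the single changed pixel receives the larger loss, and the Dice term penalizes it strongly even though three of four pixels are classified correctly.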

2.5. Experimental Setup

2.5.1. Datasets

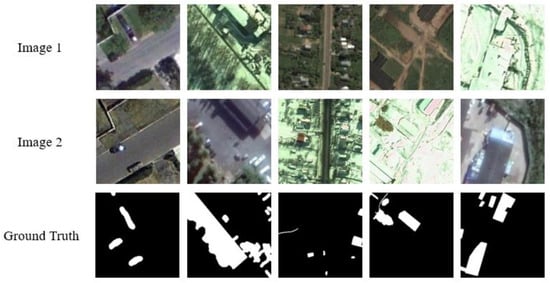

CDD: In 2018, Lebedev et al. [] created and used the Change Detection Dataset (CDD) in a published paper. The dataset contains three types of images: synthetic images without relative object movement, synthetic images with small relative object movement, and real remote sensing images that change with the seasons. The experiments use the real season-varying remote sensing images in the CDD. The dataset has a total of 10,000 image pairs for training, 3000 pairs for validation, and 3000 pairs for testing. The satellite images were retrieved from Google Earth as 3-channel RGB images with a size of 256 × 256 pixels and a ground resolution varying from 0.03 m/pixel to 1 m/pixel. The two images of each pair were usually acquired in different seasons, and the amount of variation was occasionally increased by manually adding objects. The change ground truth only considers the appearance or disappearance of objects between the two images, and does not ignore all seasonal changes. Figure 5 shows sample image pairs from the CDD. Table 1 summarizes the experimental datasets.

Figure 5.

Example of CDD.

Table 1.

Introduction of the CDD and SZTAKI dataset.

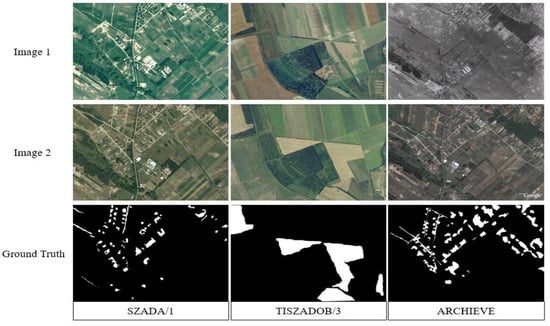

SZTAKI Dataset: a set of optical aerial image pairs provided by the Hungarian Society of Geodesy and Cartography [], including the three sub-datasets SZADA, TISZADOB, and ARCHIVE. However, due to the poor quality of the remote sensing images in the ARCHIVE dataset, most studies only use the first two sub-datasets. The SZADA dataset contains seven pairs of remote sensing images collected from 2000 to 2005. The size of the images is 952 × 640 pixels, and the resolution is 1.5 m/pixel. The TISZADOB dataset contains five pairs of remote sensing images collected from 2000 to 2007. The image size and resolution are the same as in SZADA. Therefore, in this experiment, a total of 12 remote sensing image pairs with a size of 952 × 640 pixels and a resolution of 1.5 m/pixel were used from the SZTAKI dataset. The 12 image pairs were cropped with overlap to generate image blocks of 256 × 256 pixels. Subsequently, the blocks were randomly partitioned into training, validation, and testing sets in an 8:1:1 ratio. To augment the dataset, operations such as flipping and rotating were applied to the image pairs. Figure 6 shows sample image pairs from the SZTAKI dataset (SZADA/2 denotes the second sample in the SZADA dataset and TISZADOB/3 denotes the third sample in the TISZADOB dataset). Table 1 summarizes the experimental datasets.
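The overlapping crop can be illustrated as follows. The image size (952 × 640) and block size (256 × 256) come from the text; the stride of 128 pixels (50% overlap), the reading of 952 as the width, and the choice to shift the last block back so that it ends exactly at the image border are all assumptions, since the paper does not specify them. The helper name `tile_origins` is hypothetical.

```python
def tile_origins(length, tile, stride):
    """Start offsets of tiles along one axis so that the whole axis is covered.

    The last tile is shifted back so that it ends exactly at the border.
    """
    if length < tile:
        raise ValueError("image side is smaller than the tile size")
    origins = list(range(0, length - tile + 1, stride))
    if origins[-1] != length - tile:
        origins.append(length - tile)
    return origins


WIDTH, HEIGHT = 952, 640  # image size stated in the text (width assumed first)
TILE = 256                # block size stated in the text
STRIDE = 128              # assumption: 50% overlap, not given in the text

xs = tile_origins(WIDTH, TILE, STRIDE)
ys = tile_origins(HEIGHT, TILE, STRIDE)

# Crop boxes as (left, top, right, bottom), identical for both images of a pair.
boxes = [(x, y, x + TILE, y + TILE) for y in ys for x in xs]
print(len(boxes), "blocks per image pair")
```

The same boxes would be applied to both images of a pair and to the ground-truth mask so that the blocks stay aligned. A different stride changes the number of blocks but not the procedure.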

Figure 6.

Example of the SZTAKI dataset.

2.5.2. Experimental Environment and Evaluation Criteria

To ensure the comparability of the results, all experiments in this paper are carried out on the same desktop PC. The hardware environment is an Intel(R) Core(TM) i7-3770 CPU @ 3.40 GHz (manufacturer: Intel; manufacturing address: Santa Clara, CA, USA) and an NVIDIA GeForce RTX 3070 8 GB graphics card (manufacturer: NVIDIA Corporation; manufacturing address: Santa Clara, CA, USA). The software environment is Windows 10 64-bit, PyCharm 2021.2.3 (Community Edition), Anaconda3, Python 3.6, and TensorFlow 2.0.

The Adam algorithm [] was used as the optimizer to update the network parameters and speed up the convergence of the network. Parameter updating methods include the stochastic gradient descent (SGD) algorithm [], the RMSProp algorithm [], the Adam algorithm, etc. The Adam algorithm combines the advantages of AdaGrad [] and RMSProp: it uses the first- and second-order moments of the gradient and introduces a correction factor that makes it more stable when adjusting the learning rate, which can effectively solve the problems caused by excessive gradient noise or sparsity. Careful consideration was given to the selection of the learning rate, as setting it too small may result in slow parameter updates and the risk of being trapped in a local minimum, while setting it too large may cause the loss value to become unstable and prevent the model from converging. Therefore, a learning rate of 0.0001 was chosen. Additionally, the number of training epochs was set to 50 for the CDD and 200 for the SZTAKI dataset, and the batch size was set to 4 for both datasets.
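For readers unfamiliar with the update rule, a single Adam step for one scalar parameter can be sketched as below. The learning rate of 0.0001 is the value stated above; β1 = 0.9, β2 = 0.999, and ε = 1e-8 are commonly used defaults and are assumptions here, as the paper does not report them. The toy objective f(θ) = θ² and the function name `adam_step` are purely illustrative.

```python
import math


def adam_step(theta, grad, m, v, t, lr=1e-4, beta1=0.9, beta2=0.999, eps=1e-8):
    """One Adam update for a single scalar parameter.

    lr = 1e-4 is the learning rate stated in the text; beta1, beta2 and eps
    are commonly used defaults and are assumptions here.
    """
    m = beta1 * m + (1 - beta1) * grad         # first-moment estimate
    v = beta2 * v + (1 - beta2) * grad * grad  # second-moment estimate
    m_hat = m / (1 - beta1 ** t)               # bias correction
    v_hat = v / (1 - beta2 ** t)
    theta = theta - lr * m_hat / (math.sqrt(v_hat) + eps)
    return theta, m, v


# Toy problem: minimise f(theta) = theta^2, whose gradient is 2 * theta.
theta, m, v = 1.0, 0.0, 0.0
for step in range(1, 101):
    theta, m, v = adam_step(theta, 2.0 * theta, m, v, step)
```

Because the bias-corrected ratio of the two moment estimates is close to one when gradients are consistent, each step moves the parameter by roughly the learning rate, which is why the choice of 0.0001 directly controls how fast the parameters change.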

To quantitatively evaluate the performance of neural network models in remote sensing change detection tasks, a set of standard and widely accepted evaluation metrics is employed. These include accuracy, recall, precision, and F1-score. Accuracy measures the proportion of correctly classified pixels, recall measures the proportion of truly changed pixels that are detected, precision measures the proportion of pixels detected as changed that are truly changed, and F1-score is the harmonic mean of precision and recall. A higher score in these metrics indicates better performance of the model.

$$\mathrm{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN}$$

$$\mathrm{Precision} = \frac{TP}{TP + FP}$$

$$\mathrm{Recall} = \frac{TP}{TP + FN}$$

$$F1 = \frac{2 \times \mathrm{Precision} \times \mathrm{Recall}}{\mathrm{Precision} + \mathrm{Recall}}$$

where TP, TN, FP, and FN represent the number of true positives, true negatives, false positives, and false negatives, respectively.
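The four metrics follow directly from the pixel counts. The short sketch below uses the standard definitions; the counts in the example are hypothetical and are not taken from the experiments, and the function name `change_detection_metrics` is illustrative.

```python
def change_detection_metrics(tp, tn, fp, fn):
    """Accuracy, precision, recall and F1-score from pixel counts."""
    accuracy = (tp + tn) / (tp + tn + fp + fn)
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if (precision + recall) else 0.0)
    return {"accuracy": accuracy, "precision": precision,
            "recall": recall, "f1": f1}


# Hypothetical counts for illustration only (not taken from the experiments).
scores = change_detection_metrics(tp=80, tn=900, fp=10, fn=10)
```

Because unchanged pixels usually dominate a change map, accuracy can stay high even when many changed pixels are missed, which is why precision, recall, and F1-score are reported alongside it.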

2.5.3. Comparison Methods

In this study, the proposed Siam-FAUnet change detection method is compared with several state-of-the-art methods, which include CDNet, FC-Siam-conc, FC-Siam-diff, IFN, DASNet, STANet, and SNUNet.

- CDNet [] is a fully convolutional neural network based on the idea of stacking, shrinking, and expanding.

- FC-Siam-conc [] is proposed based on the FCN model. A Siamese network is used to extract features from the two inputs of the bi-temporal remote sensing images. Finally, the features are concatenated to obtain the change information.

- FC-Siam-diff is proposed in [] and also uses the FCN as the benchmark network. The difference from FC-Siam-conc is that the semantic restoration stage connects the feature maps by taking their difference to obtain the final change map.

- IFN [] is an encoder–decoder structure, and unlike U-Net, this model employs dense skip connections and implements implicit deep supervision in the decoder structure.

- DASNet [] is proposed based on a dual attention mechanism to locate the changed areas and obtain more discriminant feature representations.

- STANet [] is proposed based on the Siamese FCN to extract the bi-temporal image feature maps and design a change detection self-attention module to update the feature maps by exploiting spatial-temporal dependencies among individual pixels at different positions and times.

- SNUNet [] is proposed based on the combination of the Siamese network and NestedUNet, and uses dense skip connections between the encoder and decoder and between the decoder and decoder.

3. Results

3.1. Loss Function

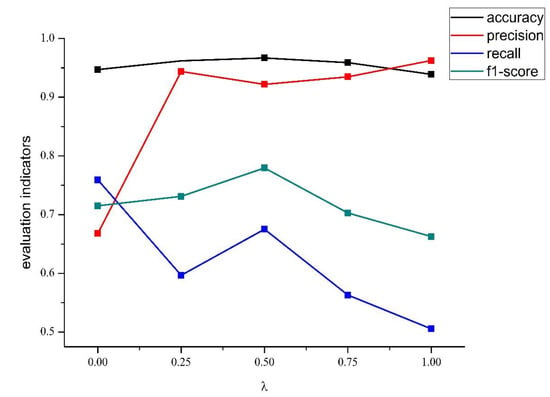

To determine the optimal value of λ in the composite loss function CE-Dice Loss, a series of experiments are conducted using different values of λ. Specifically, the network is trained using the CE-Dice Loss when λ = 0, λ = 0.25, λ = 0.5, λ = 0.75, and λ = 1 as the loss function on the SZTAKI dataset. The performance of the network is evaluated using standard evaluation criteria and the scores are recorded. The results of these experiments are illustrated in Figure 7.

Figure 7.

The influence of λ on evaluation criteria.

In Figure 7, the x axis represents the value of λ, and the y axis represents the change in the four network evaluation criteria with the change in λ. It can be observed that, when λ is equal to 0.5, the accuracy and F1-score both reach the highest value, while the precision and recall also achieve satisfactory results. Therefore, after a comprehensive analysis, it is determined that when λ is set to 0.5, the CE–Dice Loss function optimizes the performance of the Siam-FAUnet model in change detection tasks. In the subsequent experiments conducted in this paper, the value of λ for the CE–Dice Loss function is set to 0.5.

3.2. Ablation Experiments

Ablation Experiment of FAM and ASPP. In order to evaluate the effectiveness of the proposed ASPP and FAM modules, we conducted ablation experiments by comparing the performance of the Siam-FAUnet network with and without these modules, using the CDD and SZTAKI datasets. The benchmark method for these experiments is a network whose encoder consists of the first four blocks of VGG16. The results of these experiments are presented in Table 2 and Table 3. It is important to note that, to control for variables, the parameter settings for all comparison experiments remain consistent, with the exception of the inclusion or exclusion of the ASPP and FAM modules. In Table 2 and Table 3, OA represents the overall accuracy, P represents the precision, and R represents the recall.

Table 2.

The experimental data of the ablation experiment of the improved points proposed in this paper in the CDD.

Table 3.

The experimental data of the ablation experiment of the improved points proposed in this paper in the SZTAKI dataset.

Ablation Experiment on CDD. From Table 2, it can be seen that adding either ASPP or FAM alone to the benchmark network improves the change detection performance of the network on the CDD. When FAM is added alone, the F1-score improves by 1.63% compared to the benchmark network; when ASPP is added alone, the recall improves by 6.05% compared to the benchmark network. When FAM and ASPP are added together, the performance of the network is further improved compared to adding either module alone.

Ablation Experiment on SZTAKI Dataset. We conducted a fair performance comparison on SZADA/1 (the first pair of samples in the SZADA subset) and TISZADOB/3 (the third pair of samples in the TISZADOB subset). From Table 3, it can be seen that adding either ASPP or FAM alone to the benchmark network improves the change detection performance of the network on SZADA/1 and TISZADOB/3. When FAM is added alone, the F1-score improves by 1.45% and 0.51% compared with the benchmark network; when ASPP is added alone, the recall and F1-score improve significantly compared with the benchmark network, by 4.42% and 1.85% on SZADA/1 and by 2.44% and 0.89% on TISZADOB/3, respectively. When both FAM and ASPP are added, the performance of the network is further improved compared to adding either module alone.

From Table 2 and Table 3, we can see that adding FAM or ASPP alone to the benchmark network also improves the change detection performance of the network. When only FAM is added, the F1-score on the three datasets (CDD, SZADA/1, and TISZADOB/3) increases by 1.63%, 1.45%, and 0.51%, respectively. This is because FAM reduces the semantic misalignment during feature fusion so that the network can learn the changing features more accurately. When only ASPP is added to the benchmark network, the recall improves greatly, by 6.05%, 4.42%, and 2.44%, respectively. This is because ASPP performs multi-scale sampling of the same features, which to some extent improves the network’s use of ground object boundary information. When FAM and ASPP are added at the same time, the network feature extraction dimension is deepened, which increases the classification difficulty, so the precision is not significantly improved; in terms of the overall evaluation criteria, however, the performance of the network is greatly improved. Compared with the network without the ASPP and FAM modules, the accuracy increases by 1.28%, 1.84%, and 1.50%, the recall increases by 7.64%, 7.32%, and 7.04%, and the F1-score increases by 4.10%, 2.07%, and 2.62%, respectively, which indicates that FAM and ASPP together play a positive role in improving the change detection performance of the network. From the analysis of the ablation results, it can be concluded that the proposed Siam-FAUnet model performs better than the benchmark network in the remote sensing image change detection task, which verifies the effectiveness of the proposed ASPP and FAM.

3.3. Comparison Experiments

The proposed Siam-FAUnet change detection method is compared with several state-of-the-art methods, which include CDNet, FC-Siam-conc, FC-Siam-diff, IFN, DASNet, STANet, and SNUNet. These methods are based on various architectures such as a fully convolutional neural network, encoder–decoder structures, Siamese networks, dual attention mechanisms, and self-attention modules. The performance of the proposed method is evaluated and compared against these models using the CDD and SZTAKI dataset, and the results are presented in Table 4 and Table 5.

Table 4.

Comparison of the experimental results between the algorithm in this paper and classical methods on the CDD.

Table 5.

Comparison of experimental results between the algorithm in this paper and classical methods on the SZTAKI dataset.

In Table 4 and Table 5, the performance of various change detection methods is presented, with the proposed Siam-FAUnet method represented by “Ours” and where OA stands for accuracy, P stands for precision, and R stands for recall.

Comparison on CDD. Table 4 shows the results of Siam-FAUnet and the seven state-of-the-art models on the CDD. For CDNet, FC-Siam-conc, FC-Siam-diff, DASNet, STANet, and SNUNet, we re-ran the experiments in the same experimental environment as Siam-FAUnet to obtain the results. The results show that, among the compared methods, FC-Siam-conc performs the worst on the CDD. Compared with the other seven methods, our method improves the accuracy, precision, recall, and F1-score, where the F1-score improves by 8.15%, 12.08%, 10.85%, 4.28%, 2.68%, 8.61%, and 8.48%, respectively.

Comparison on SZTAKI Dataset. Table 5 shows the results of Siam-FAUnet and the seven state-of-the-art models on the SZTAKI dataset. Our method uses a total of 12 remote sensing image pairs from the two sub-datasets SZADA and TISZADOB of the SZTAKI dataset, and we conducted a fair evaluation comparison on SZADA/1 (the first pair of samples in the SZADA sub-dataset) and TISZADOB/3 (the third pair of samples in the TISZADOB sub-dataset). CDNet, FC-Siam-conc, FC-Siam-diff, IFN, DASNet, STANet, and SNUNet were re-run on the same dataset and in the same experimental environment as Siam-FAUnet. The results show that, among the compared methods, FC-Siam-conc has the lowest F1-score on SZADA/1, and FC-Siam-diff has the lowest F1-score on TISZADOB/3. The accuracy, precision, and F1-score of our method are improved compared with the other seven methods.

As Table 4 and Table 5 show, FC-Siam-conc and FC-Siam-diff obtain the lowest scores among the compared methods: FC-Siam-conc performs the worst on the CDD and has the lowest F1-score on SZADA/1, while FC-Siam-diff has the lowest F1-score on TISZADOB/3. Both FC-Siam-conc and FC-Siam-diff are based on the FCN architecture, and their performance across the four evaluation criteria is relatively similar.

The F1-scores achieved by CDNet, IFN, DASNet, STANet, and SNUNet are higher than those of FC-Siam-conc and FC-Siam-diff. IFN performs better than CDNet, STANet, and SNUNet. CDNet and STANet are composed only of contraction blocks and expansion blocks, with no skip connection between the encoder and decoder to fuse the features of different levels, so the low-level feature information is not used in the decoder, resulting in low segmentation accuracy. IFN has a multi-side output strategy, which can combine the shallow and high-resolution semantic features in the encoder and improves the performance of the model. Compared with CDNet, STANet, and SNUNet, the F1-score of IFN is higher by 3.87%, 4.33%, and 4.20% on the CDD, by 0.29%, 0.60%, and 0.19% on SZADA/1, and by 0.50%, 0.69%, and 0.14% on TISZADOB/3, respectively. DASNet performs better than IFN on the CDD and SZADA/1, but its F1-score on the SZTAKI dataset is worse than that of IFN. The Siam-FAUnet method proposed in this paper achieves good detection results on the CDD, SZADA/1, and TISZADOB/3. The F1-score reaches 94.58%, 55.50%, and 84.24%, respectively, an increase of 4.28%, 3.06%, and 4.63%, respectively, compared with IFN. The reason is that the proposed method uses a Siamese structure in the encoder to extract the feature information of the bi-temporal images separately, which reduces the pseudo changes caused by channel superposition; the FAM module can analyze the features obtained by the encoder and decoder, bringing more detailed image feature information. The ASPP module can extract and fuse information of different scales, increase the receptive field of the model, and make full use of the context information.

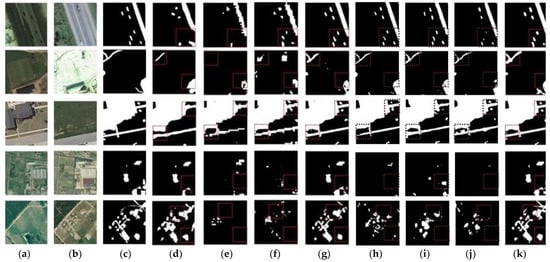

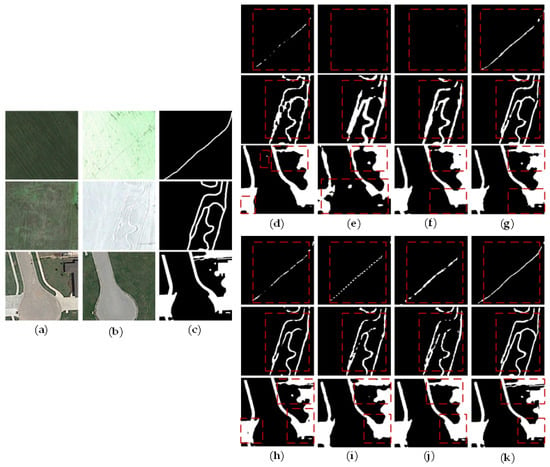

To visually evaluate the performance of the proposed method, we selected change images of cars, roads, buildings, and fields from the test set to analyze the results of the various methods. The results are illustrated in Figure 8, where the red dashed boxes highlight the comparison of the results of the different methods for boundary and small target detection:

Figure 8.

Example of change detection visualization results of different models: (a,b) are original bi-temporal images; (c) is the ground truth; (d) is the result of CDNet; (e) is the result of FC-Siam-conc; (f) is the result of FC-Siam-diff; (g) is the result of IFN; (h) is the result of DASNet; (i) is the result of STANet; (j) is the result of SNUNet; (k) is our result of Siam-FAUnet.

From Figure 8, we can see that the FC-Siam-conc and FC-Siam-diff methods have serious aliasing at the edge of the detected change area, most of the change areas are not detected, and the detected change areas are not complete. At the same time, there are many noise points, and the visual results of change detection are poor. Compared with FC-Siam-conc and FC-Siam-diff, CDNet has relatively smooth boundaries of the detected change area and less noise. However, there are missed small targets and pseudo changes in the change areas of cars, small buildings, and roads, and the interior and details are not refined enough. The IFN results are relatively clear and accurate, but there are still some problems such as holes in the change area and discontinuous change boundaries. DASNet and STANet detect the boundary of the change region smoothly, but there are holes and missed small targets in the change area, and the detected change region is incomplete. The change regions detected by SNUNet contain holes and discontinuities, most of the change regions are missed, and the detection performance for small targets is poor. The method proposed in this paper has the best visualization effect: the change boundary is smooth and clear without jaggedness, there is less noise, the change area is continuous and complete, and the visual result is closer to the ground truth. At the same time, compared with other methods, the algorithm proposed in this paper has certain advantages in the detection of small object changes, such as being able to detect changes in cars and small buildings, and the road changes are more continuous and complete, with fewer pseudo changes.

Therefore, through the quantitative and visual analysis of the experimental results, it can be concluded that the method proposed in this paper is superior for the change detection of high-resolution remote sensing images, especially for small target changes and the detection of change boundaries.

4. Discussion

In this work, we propose a remote sensing image change detection method based on the Siam-FAUnet network, using an improved VGG16 to extract image features in the encoding part, ASPP to extract multi-scale contextual features, and FAM to fuse the feature information extracted in the encoder in the decoding part. The publicly available CDD and SZTAKI datasets are used for training and testing, and the evaluation metrics of the Siam-FAUnet model are improved compared to both the baseline model and other state-of-the-art deep learning change detection methods. Meanwhile, visualization analysis verifies that the Siam-FAUnet model can effectively detect small change targets and overcome the challenges of unclear boundaries and pseudo changes in the change regions.

Comparison and findings. To evaluate the effectiveness of ASPP and FAM, ablation experiments are conducted on the CDD and SZTAKI datasets, respectively. The results demonstrate that the introduction of either ASPP or FAM enhances the change detection performance of the model. Furthermore, integrating both ASPP and FAM improves the network’s overall evaluation metrics, although deepening the feature extraction dimension increases the classification difficulty. This improvement can be attributed to the increased receptive field of the model achieved by ASPP, which allows the model to make effective use of global image information, thus avoiding information loss and obtaining multi-scale contextual information. Meanwhile, FAM fuses low-level features from the encoder to the decoder, facilitating the learning of semantic flow between the features of varying resolutions and transferring semantic information from coarse features to high-resolution refined features.

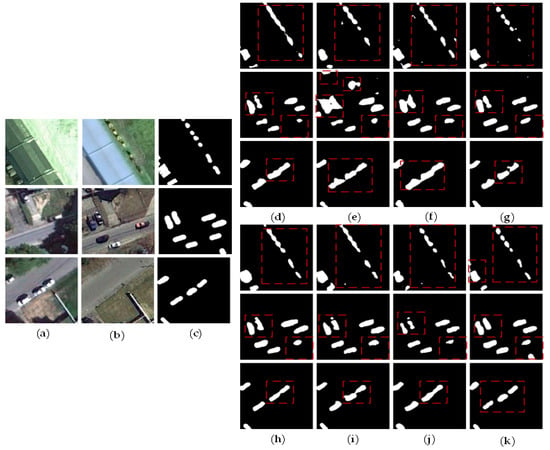

To verify the superiority of the Siam-FAUnet model in solving the problems of blurred detection boundaries, missed detection of small targets, and pseudo changes in change detection, we conduct comparative experiments using seven state-of-the-art deep learning change detection methods on the CDD and SZTAKI datasets, with the same experimental environment and parameter settings as our proposed method. Our Siam-FAUnet approach achieves superior evaluation metrics and detection results on both datasets. Compared with the CDNet [], FC-Siam-conc [], FC-Siam-diff [], IFN [], DASNet [], STANet [], and SNUNet [] models, Siam-FAUnet has the highest recall R, reaching 93.56%, 73.79%, and 95.63% on the CDD and the two SZTAKI sub-datasets (SZADA/1 and TISZADOB/3), respectively. The larger the recall, the fewer the missed predictions and the lower the miss rate, indicating that the Siam-FAUnet model is robust in reducing missed detections. As shown in Figure 9 and Figure 10, six pairs of images of changes in cars, roads, and buildings in the test set were selected to visualize the experimental results.

Figure 9.

Example of change detection visualization results of different models: (a,b) are original bi-temporal images; (c) is the ground truth; (d) is the result of CDNet; (e) is the result of FC-Siam-conc; (f) is the result of FC-Siam-diff; (g) is the result of IFN; (h) is the result of DASNet; (i) is the result of STANet; (j) is the result of SNUNet; and (k) is our result of Siam-FAUnet.

Figure 10.

Example of change detection visualization results of different models: (a,b) are original bi-temporal images; (c) is the ground truth; (d) is the result of CDNet; (e) is the result of FC-Siam-conc; (f) is the result of FC-Siam-diff; (g) is the result of IFN; (h) is the result of DASNet; (i) is the result of STANet; (j) is the result of SNUNet; and (k) is our result of Siam-FAUnet.

Figure 9 shows the visualization results of verifying the proposed model to solve the blurred detection boundary problem. From Figure 9, we can see that the change regions detected by FC-Siam-conc and FC-Siam-diff have blurred boundaries, discontinuities, noise in the detected regions, and the incomplete detection of the change regions; CDNet reduces the noise, but still has blurred boundaries, internal holes, and the incomplete detection of the detected regions. The boundaries in the change regions detected by IFN and DASNet are smooth, but there are still problems such as internal holes and discontinuous region boundaries. The boundaries in the change regions detected by STANet are jagged and the detected change regions are not complete. The change regions detected by SNUNet have blurred boundaries, are not smooth, and the detection is incomplete. Siam-FAUnet has the best visualization effect, the change boundary is smooth and clear without jaggedness, there is less noise, the road change is more continuous and complete with less pseudo-change, the change area is continuous and complete, and its visual result is closer to the ground truth.

Figure 10 shows the visualization results for validating that the proposed model solves the small target miss detection problem, and Table 6 compares the number of small targets detected by each model. From Figure 10 and Table 6, we can see that FC-Siam-conc and FC-Siam-diff perform poorly in small target detection: most of the small targets are not detected, the boundaries are blurred, and there is a lot of noise. Although CDNet, DASNet, STANet, and SNUNet reduce the noise in the detected images, they can only detect a small portion of the small targets, with blurred boundaries. IFN can detect most of the small targets, but there are still problems of blurred, unsmooth detection boundaries and noise. In contrast, the algorithm proposed in this paper has some superiority in the detection of small target changes compared with the other methods: it can detect changes such as cars and small buildings, and the change boundary is smooth and clear, with the best visualization effect.

Table 6.

Comparison of the number of small targets detected by different models.

Based on the experimental results of Siam-FAUnet and the seven other state-of-the-art methods on the CDD and SZTAKI datasets, each method achieves better detection results and evaluation metrics on the CDD than on the SZTAKI dataset. This discrepancy can be attributed to the CDD’s inherent characteristics, including a wider range of diverse feature types and a larger sample size, which provide a richer and more representative dataset for training and evaluation purposes. The seasonal differences between the before and after images of the CDD are large, and the differences before and after the changes can be clearly seen by considering the corresponding changes in the appearance or disappearance of objects between the two instances and not ignoring all seasonal changes. The SZTAKI dataset exhibits fragmented and less dense change areas, characterized by larger feature scales. The changes primarily manifest in new built-up regions, building operations, the planting of a large group of trees, fresh plough-land, and ground work before building over. However, the dataset fails to capture certain small-scale changes, necessitating the acquisition of deeper features for accurate detection. Furthermore, it is noteworthy that the detection results and evaluation metrics on the TISZADOB sub-dataset of SZTAKI are better than those on the SZADA sub-dataset. This discrepancy can be attributed to the higher prevalence of small target changes in the SZADA sub-dataset, which poses greater challenges for change detection algorithms.

5. Conclusions

This study proposed a novel approach to address the challenges associated with high-resolution remote sensing image change detection using the classical semantic segmentation method. An improved method, based on the Siam-FAUnet network, is developed and implemented. The proposed method includes an ASPP module that uses convolutions with varying dilation rates to sample the image features in parallel, thereby expanding the network’s receptive field and enabling the integration of multi-scale features. Additionally, an FAM module is incorporated to address the semantic misalignment resulting from the direct concatenation of feature maps by using a generated semantic flow field, thereby allowing the network to learn changing features more accurately. To reduce the residual between the output of the model and the ground truth, a residual correction network is added to enhance the change detection effect of the model, and the CE–Dice Loss is proposed by combining the cross-entropy loss function and the Dice loss function, with the weight λ determined experimentally. In the experiments, the proposed method and other deep learning change detection models are tested on two public high-resolution change detection datasets to verify the performance of the method in the change detection task. The qualitative and quantitative analysis of the experimental results shows that the proposed model achieves superior change detection performance. This research, however, has certain limitations. The datasets used in the experiment are not extensive enough and may not fully reflect the complexity of the task. Additionally, there is still a small amount of noise present in the change detection binary maps. To address these issues, future research will be directed toward optimizing the model for more complex scenarios and exploring the generalizability of the model.

Author Contributions

Conceptualization, Q.W. and X.Z.; methodology, Q.W., M.L. and J.Z.; validation, Q.W., S.Y. and Z.C.; formal analysis, Q.W., G.L. and J.Z.; writing—original draft preparation, Q.W., J.Z. and M.L.; writing—review and editing, M.L., G.L. and G.C. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Key Laboratory for Digital Land and Resources of Jiangxi Province, East China University of Technology, grant number DLLJ202201.

Data Availability Statement

Publicly available datasets were analyzed in this study. The CDD dataset can be found here: https://drive.google.com/file/d/1GX656JqqOyBi_Ef0w65kDGVto-nHrNs9. The SZTAKI dataset can be found here: http://mplab.sztaki.hu/remotesensing/airchange_benchmark.html.

Acknowledgments

We would like to thank the anonymous reviewers for their constructive and valuable suggestions on the earlier drafts of this manuscript.

Conflicts of Interest

The authors declare no conflict of interest.
