A New Remote Hyperspectral Imaging System Embedded on an Unmanned Aquatic Drone for the Detection and Identification of Floating Plastic Litter Using Machine Learning

Abstract

1. Introduction

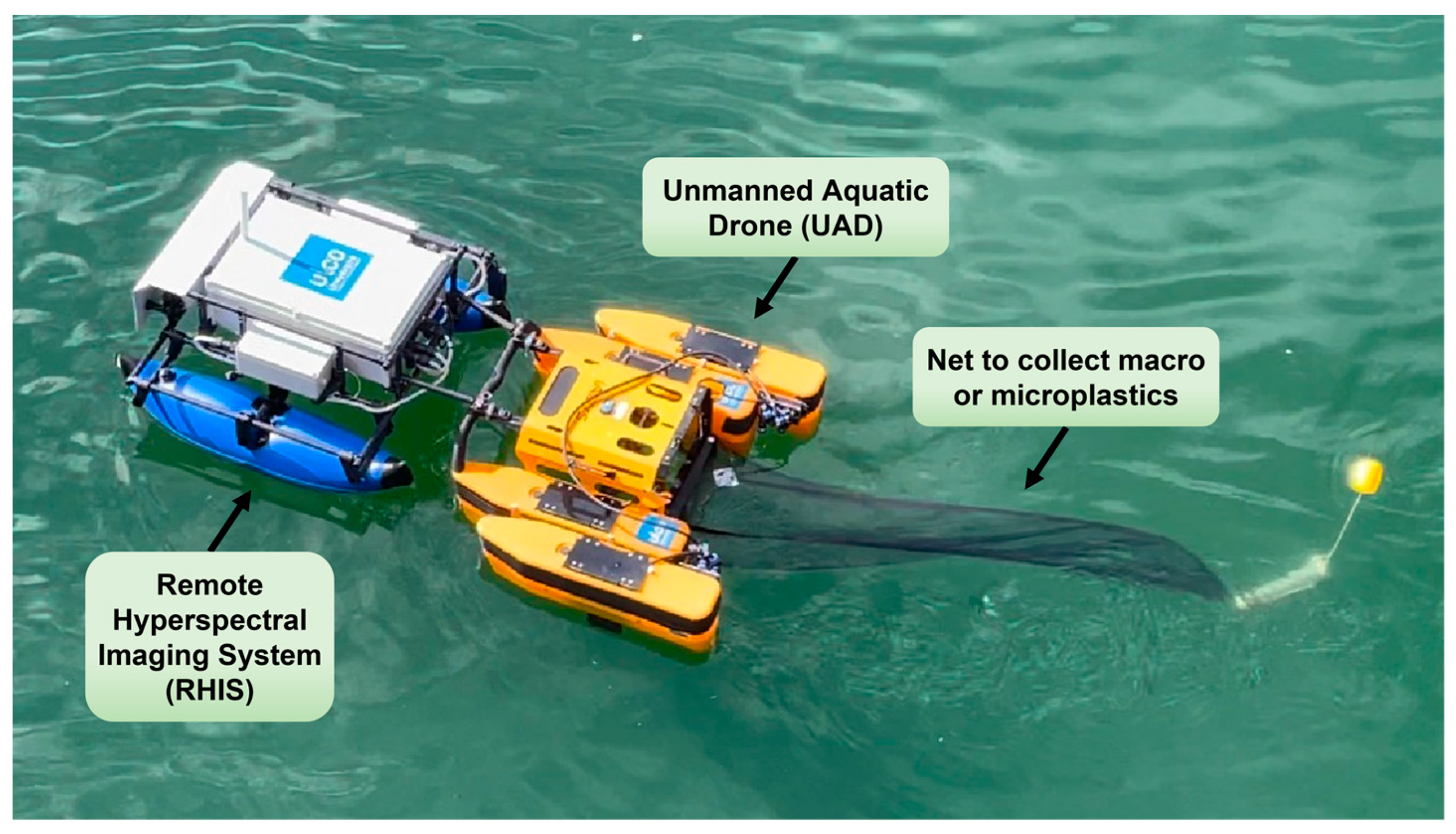

2. Materials

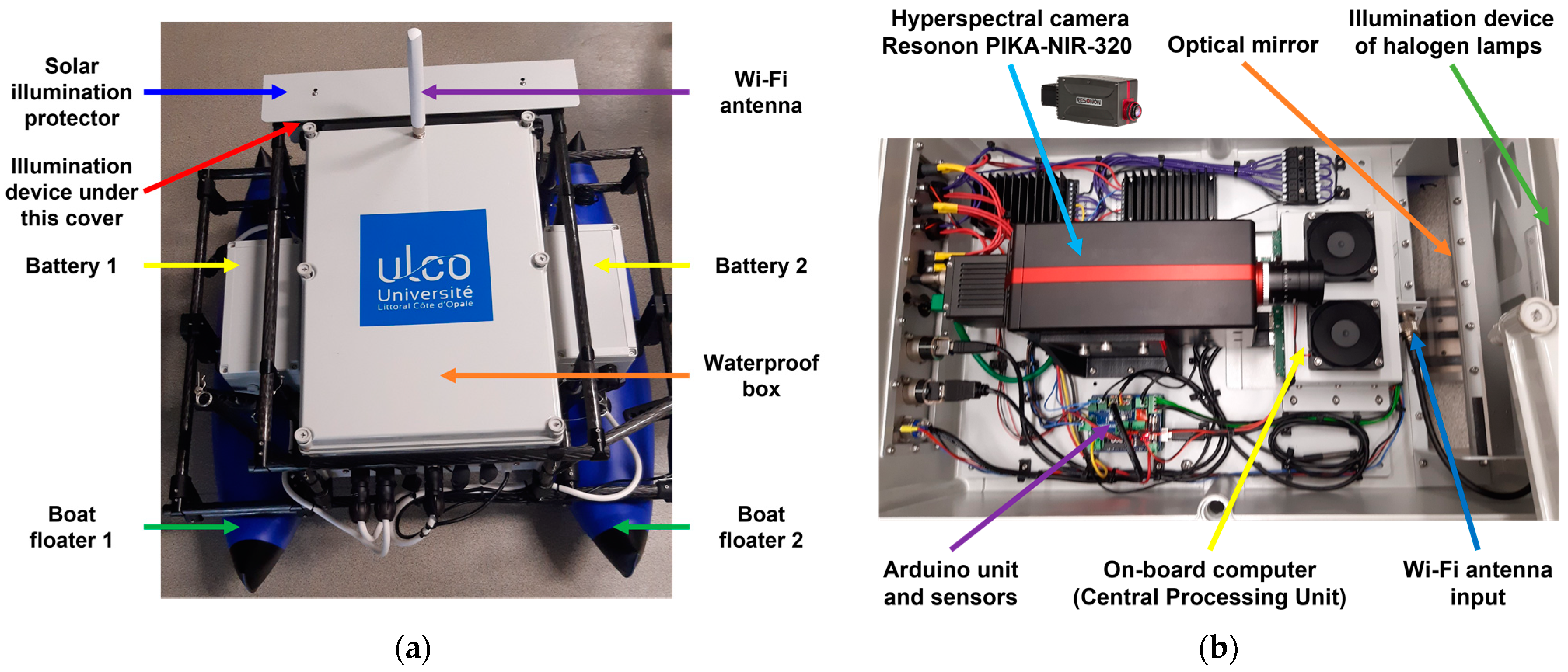

2.1. The ROV-ULCO System

- Two inflatable boat floaters;

- Two batteries (each inside a removable waterproof case);

- A halogen-lamp illumination device;

- Protection against solar illumination;

- A long-range WiFi antenna to communicate remotely;

- A waterproof box that contains the following inside components (Figure 3b):

  - A line-scan hyperspectral camera (Resonon PIKA-NIR-320) with a 12 mm focal length objective lens;

  - An optical mirror system;

  - An Arduino unit that controls two temperature sensors and a water velocity sensor via an integrated board;

  - An industrial Central Processing Unit (CPU) as the on-board computer.

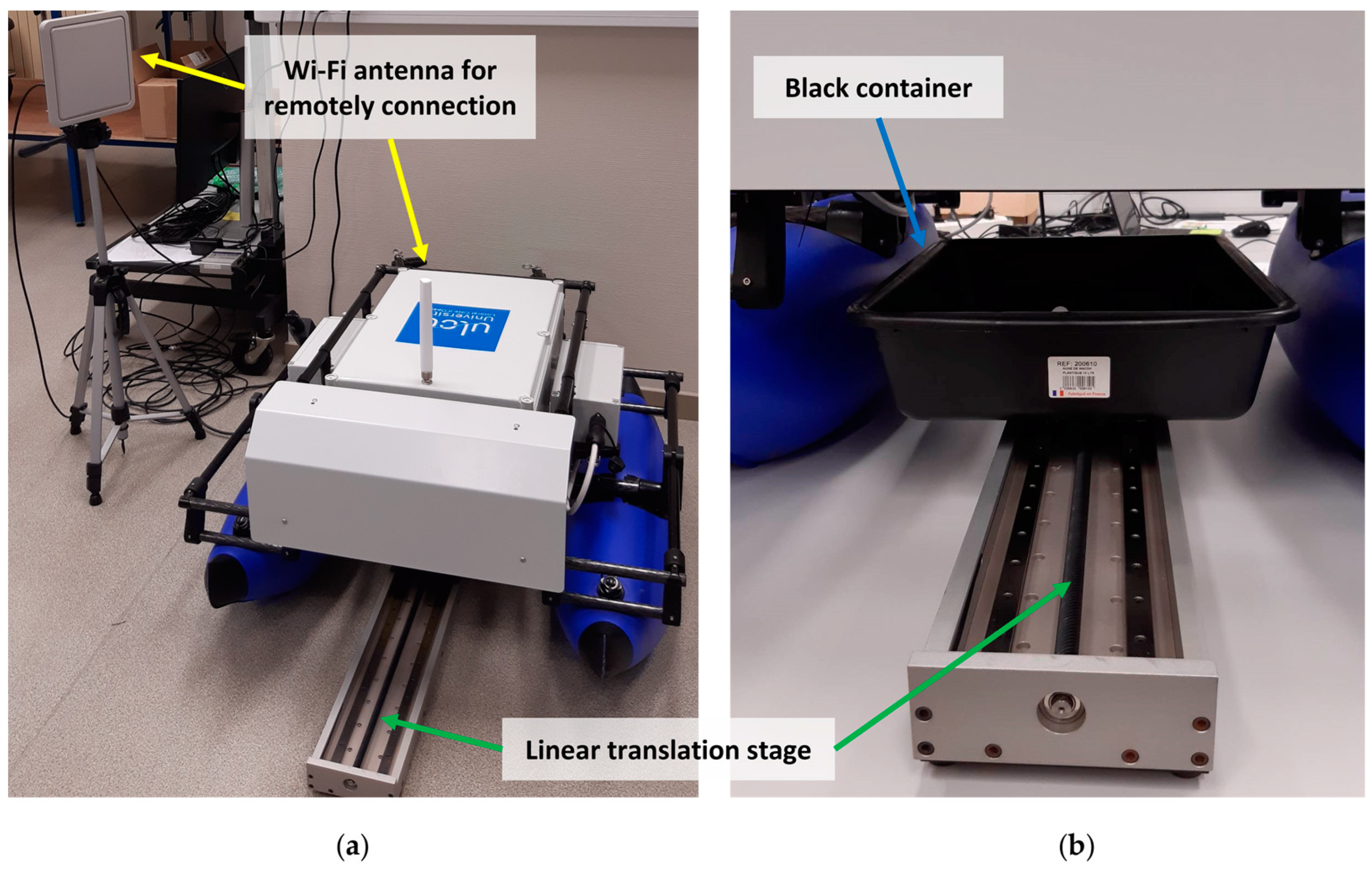

2.2. Experimental Setup

- Integration time: ti = 2.049 ms;

- Frame rate: F = 22 fps (frames per second).
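As a quick sanity check on these two settings, the frame period and the fraction of each period spent integrating follow directly from the values above. The sketch below is illustrative arithmetic only; the variable names are chosen here and do not come from the acquisition software.

```python
# Acquisition settings quoted in the list above.
integration_time_ms = 2.049   # t_i
frame_rate_fps = 22.0         # F

# Time available per line-scan frame, in milliseconds.
frame_period_ms = 1000.0 / frame_rate_fps

# Fraction of each frame period during which the sensor integrates light.
duty_cycle = integration_time_ms / frame_period_ms

print(f"frame period: {frame_period_ms:.2f} ms")
print(f"integration duty cycle: {duty_cycle:.1%}")
```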

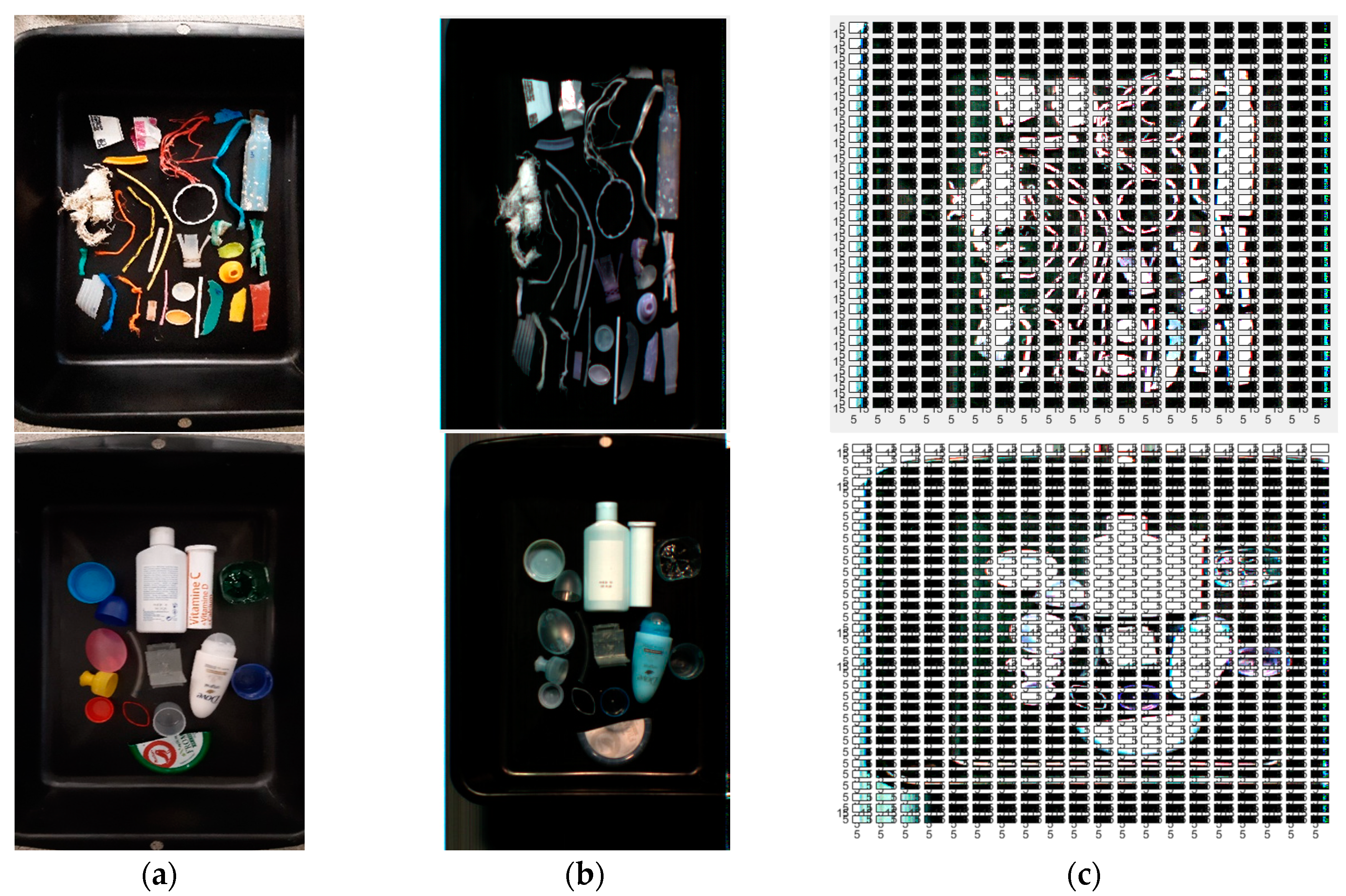

2.3. Benchmark Image Database

3. Spectral Data Analysis

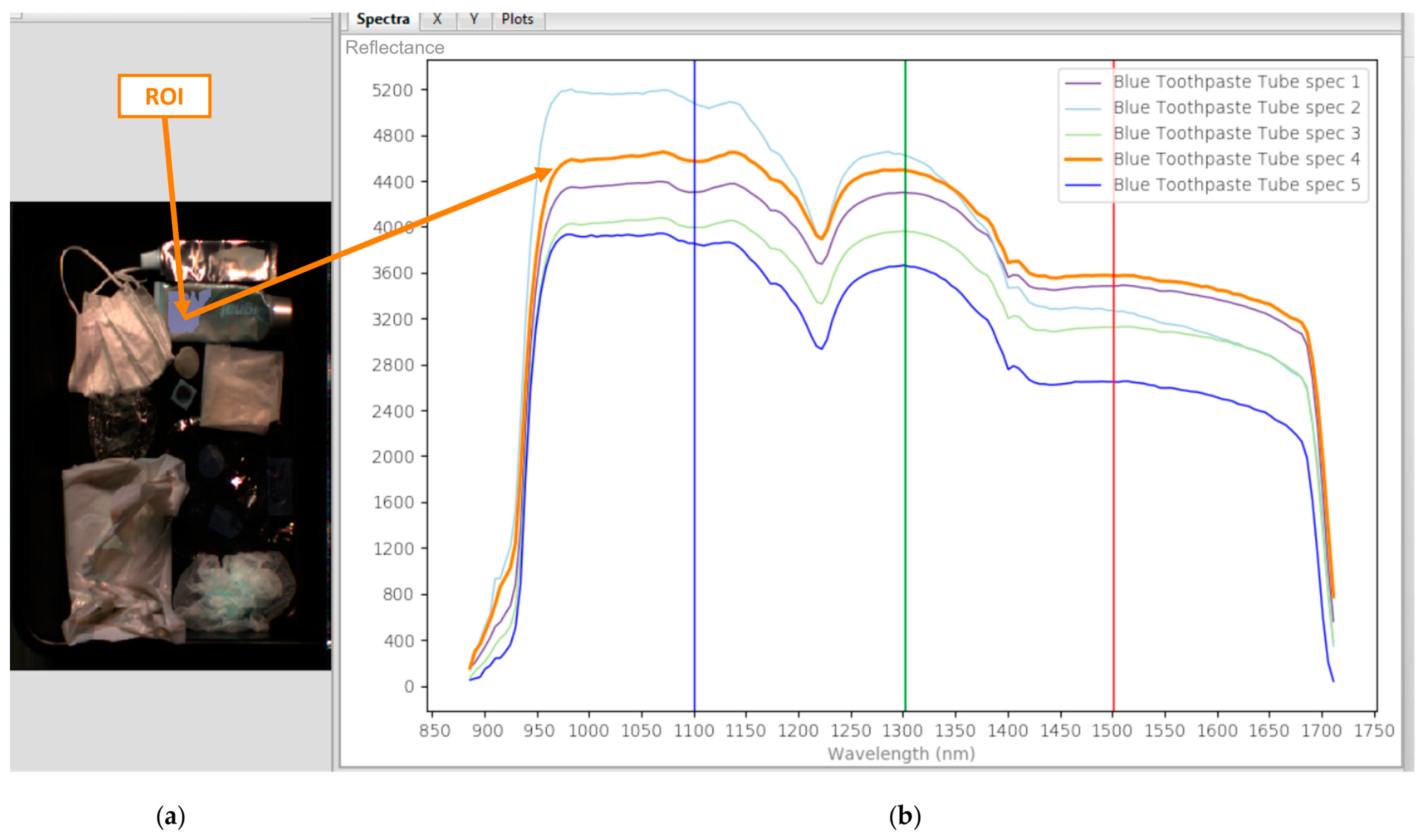

3.1. Spectral Reflectance Dataset

1. Manual selection of a Region Of Interest (ROI) of arbitrary size;

2. Computation and plotting of the hyperspectral reflectance spectrum of the selected ROI as the mean value over all pixels in the ROI;

3. Choice of another ROI of the same object present in another acquisition;

4. Return to Step 2, and repeat this process until a significant number of spectra has been computed, depending on the size of the object.
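The core of this procedure, averaging the reflectance of every pixel in an ROI to obtain one spectrum, can be sketched in a few lines. This is a minimal illustration, assuming the reflectance data are held as a NumPy array of shape (rows, columns, bands) and that ROIs are rectangular; the function name `roi_mean_spectrum`, the cube dimensions, and the ROI coordinates are hypothetical, and the cube itself is random stand-in data.

```python
import numpy as np

def roi_mean_spectrum(cube, row_slice, col_slice):
    """Return the mean reflectance spectrum over a rectangular ROI.

    cube is assumed to have shape (rows, cols, bands).
    """
    roi = cube[row_slice, col_slice, :]
    if roi.size == 0:
        raise ValueError("ROI is empty")
    # Average over both spatial axes, leaving one value per spectral band.
    return roi.reshape(-1, roi.shape[-1]).mean(axis=0)

# Synthetic stand-in for a reflectance cube (arbitrary dimensions).
rng = np.random.default_rng(0)
cube = rng.uniform(0.0, 1.0, size=(40, 50, 64))

# Two ROIs of different sizes, standing in for repeated selections
# of the same object (Steps 1 to 4 in the list above).
spectra = np.stack([
    roi_mean_spectrum(cube, slice(5, 15), slice(10, 30)),
    roi_mean_spectrum(cube, slice(20, 26), slice(12, 20)),
])
print(spectra.shape)
```

Each row of `spectra` is one mean spectrum; in the procedure above, the ROIs would come from different acquisitions rather than from a single cube.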

3.2. Comparison with the State-of-the-Art

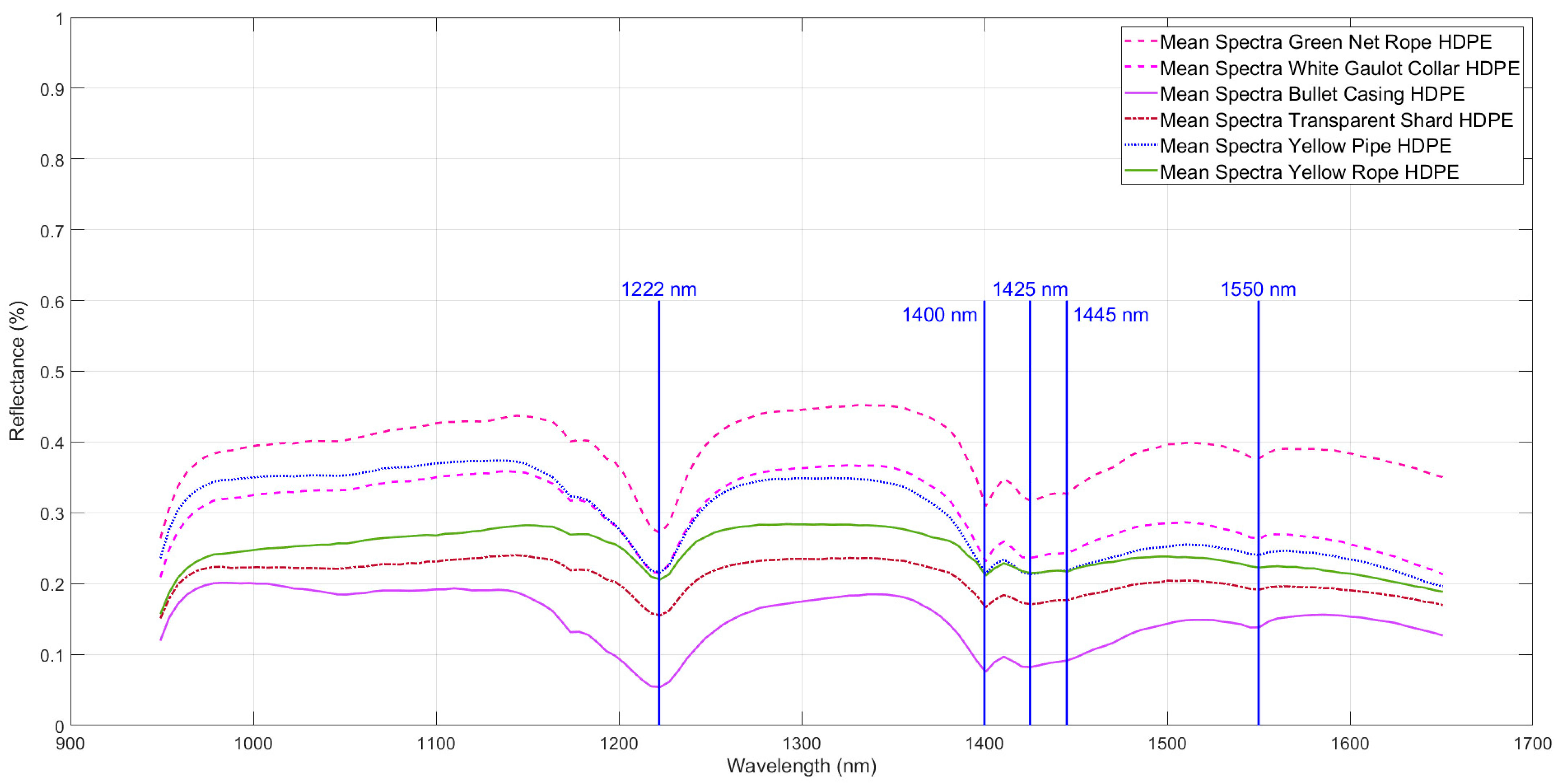

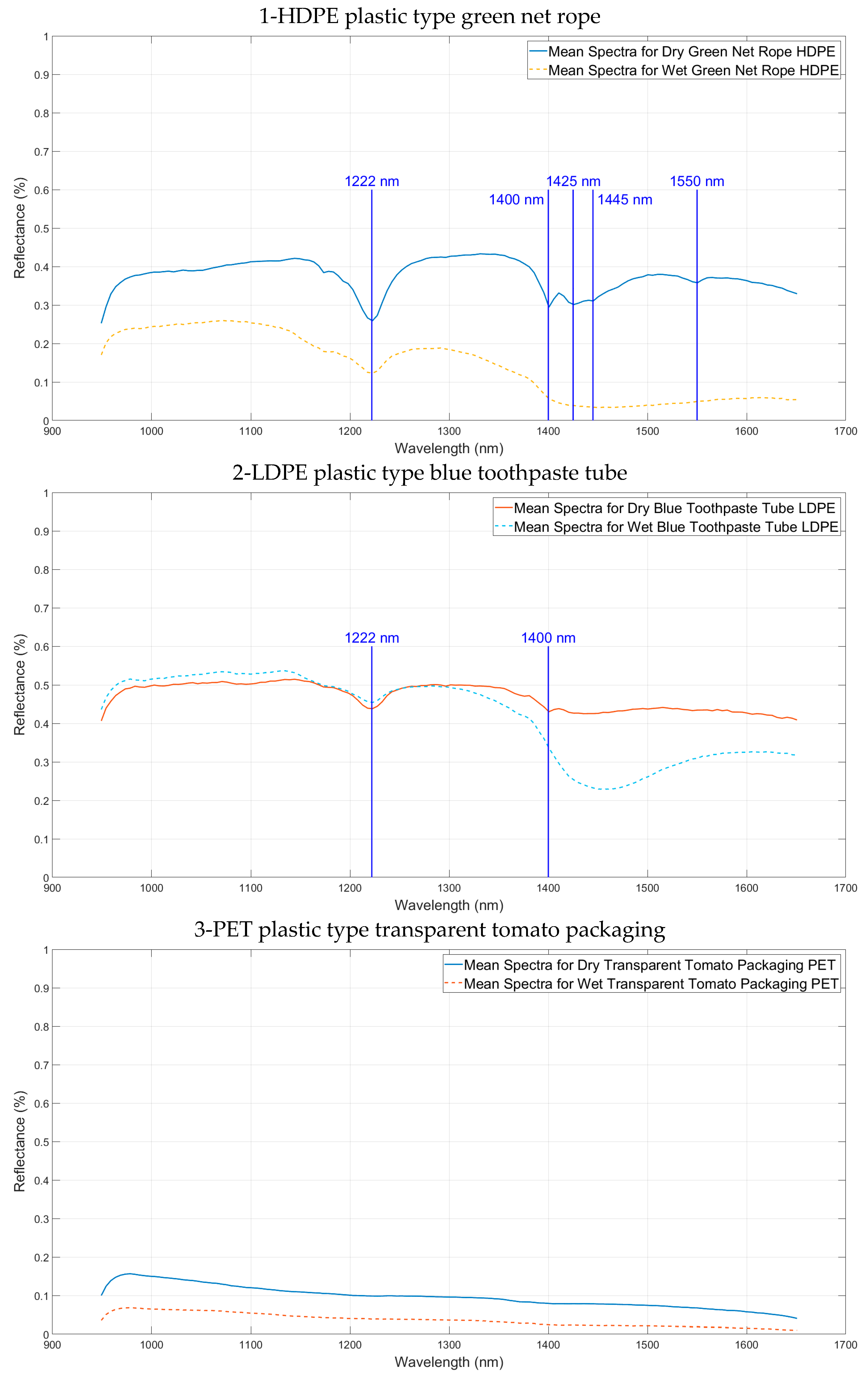

- HDPE plastic type (green net rope): The dry HDPE had five visible absorption features at wavelengths of 1222 nm, 1400 nm, 1425 nm, 1445 nm, and 1550 nm [3,8]. The most important absorption feature was at a wavelength of 1222 nm, which is in close correspondence with the work of Tasseron et al. [3]. Similar results also appear in Figure 7 for dry objects. The wet green net rope of the HDPE plastic type had an attenuated spectral reflectance. However, the main absorption feature (1222 nm) remained visible.

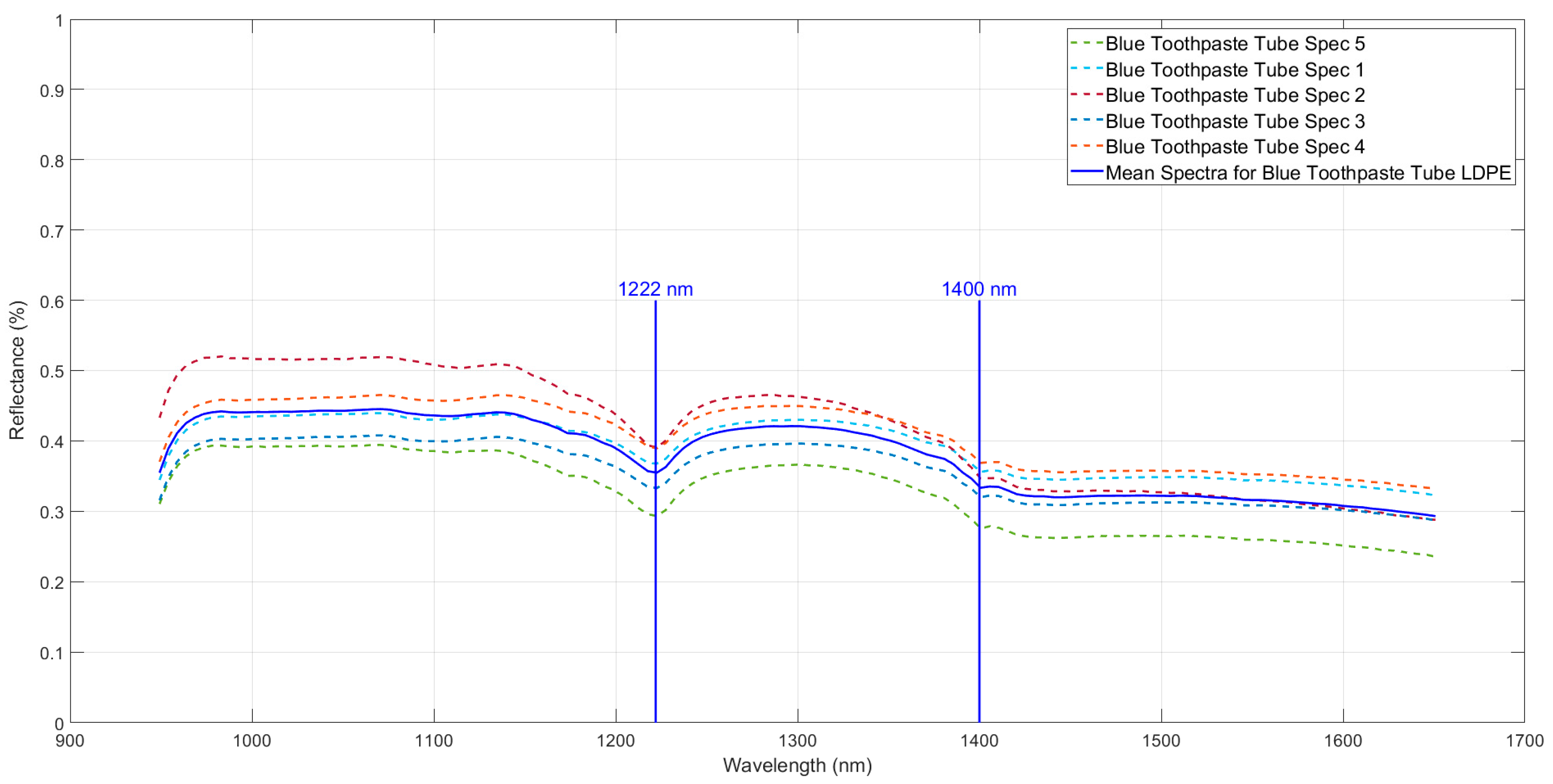

- LDPE plastic type (blue toothpaste tube): The dry LDPE plastic had two absorption features at wavelengths of 1222 nm and 1400 nm, in close correspondence with Tasseron et al. [3]. Similar results also appear in Figure 6 for dry objects. The reflectance spectrum of the wet blue toothpaste tube of LDPE plastic was also attenuated. The two main absorption features of polyethylene plastics (HDPE and LDPE) found in this study were centered on wavelengths of 1222 nm and 1400 nm, which is very similar to the absorption features described by Tasseron et al. [3].

- PET plastic type (transparent tomato packaging): The dry PET spectral reflectance decreased with increasing wavelength. The wet semi-transparent packaging of the PET plastic type had an attenuated reflectance spectrum. The spectral shape of the transparent PET type found by Tasseron et al. [3] was similar to the spectral shape found in this study. No absorption feature could be highlighted for this type of plastic, whose reflectance was further reduced by the transparency of the object, which reflected only a small amount of light.

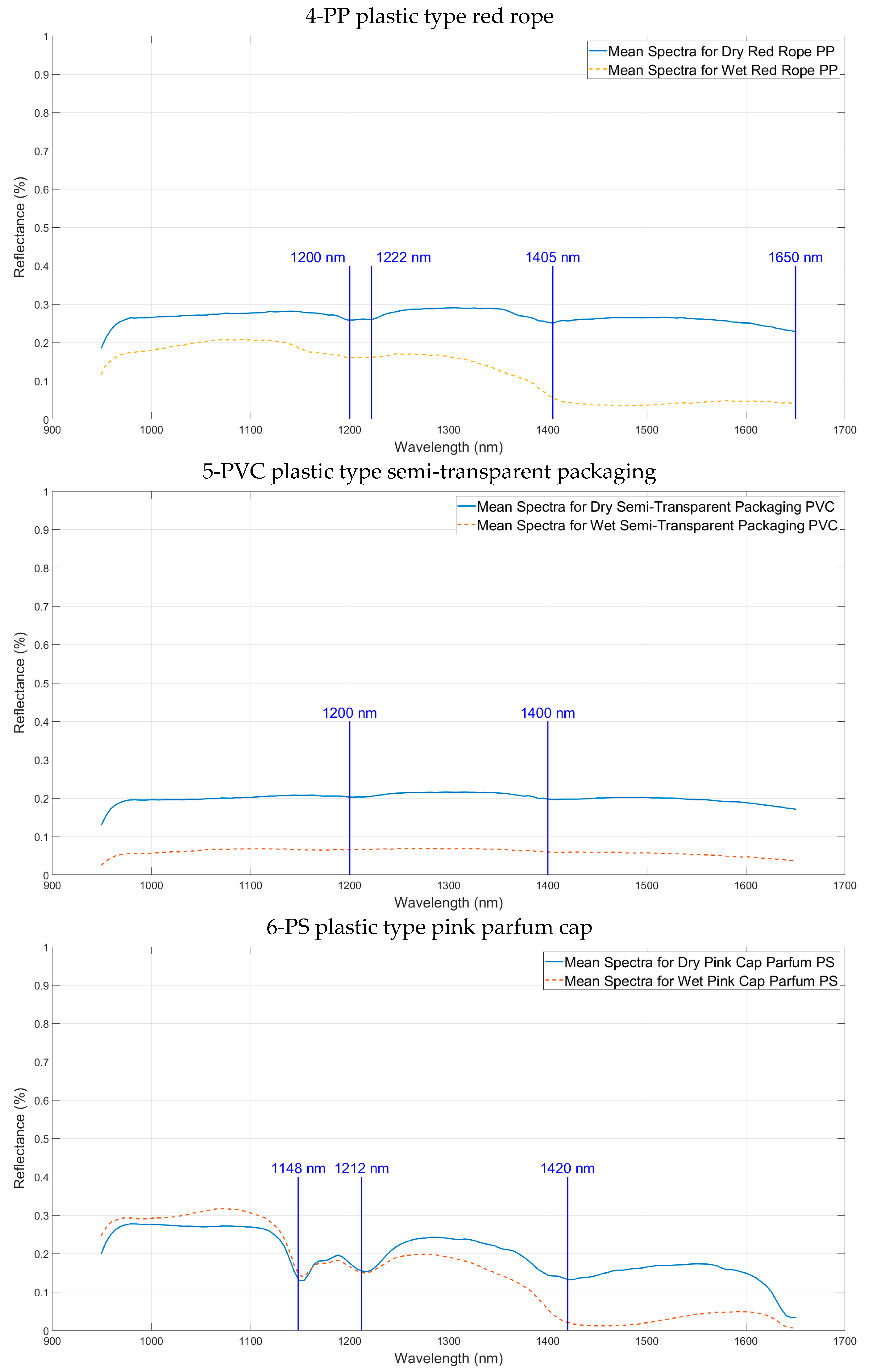

- PP plastic type (red rope): The dry PP had four visible absorption features at wavelengths of 1200 nm, 1222 nm, 1405 nm, and 1650 nm. The most important absorption features were usually at wavelengths of 1222 nm, 1405 nm, and 1650 nm. The absorption features of PP plastics found in this study were centered on wavelengths of 1222 nm and 1405 nm, which is in close correspondence with Tasseron et al. [3] and Moshtaghi et al. [6]. The wet red rope of the PP plastic type had an attenuated reflectance spectrum.

- PVC plastic type (semi-transparent packaging): The dry PVC had two small absorption features at wavelengths of 1200–1202 nm and 1400–1405 nm. The wet semi-transparent packaging of the PVC plastic type had an attenuated reflectance spectrum, but it was similar to the dry spectrum. The transparency of this object generated low-level spectra along the reflectance axis, since the light rays were transmitted through the material and partly absorbed by the water. Although this specific type of plastic packaging tends to float on water, other PVC objects are rarely found floating due to the high density of this polymer relative to water, and PVC was, therefore, not considered by Tasseron et al. [3].

- PS plastic type (pink perfume cap): The dry PS had three important absorption features at wavelengths of 1148 nm, 1212 nm, and 1420 nm. The wet pink perfume cap of the PS plastic type had an attenuated reflectance spectrum. Polystyrene was characterized by two distinct absorption features at 1150 and 1450 nm by Tasseron et al. [3].
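To make the notion of an absorption feature concrete, the sketch below locates local minima in a reflectance spectrum and compares them with the dry-object wavelengths listed above. It is an illustration under stated assumptions, not the method used in this study: the spectrum is synthetic, the 5 nm sampling grid and the 6 nm matching tolerance are arbitrary, the PVC ranges are represented by their midpoints, and the helper names `local_minima` and `match_score` are hypothetical.

```python
import numpy as np

# Absorption-feature wavelengths (nm) for dry objects, as listed in this section.
# The PVC ranges are replaced by their midpoints (an assumption of this sketch).
REPORTED_FEATURES_NM = {
    "HDPE": [1222, 1400, 1425, 1445, 1550],
    "LDPE": [1222, 1400],
    "PP": [1200, 1222, 1405, 1650],
    "PVC": [1201, 1402.5],
    "PS": [1148, 1212, 1420],
}

def local_minima(wavelengths, reflectance):
    """Wavelengths where reflectance is strictly lower than both neighbours."""
    r = np.asarray(reflectance)
    idx = np.where((r[1:-1] < r[:-2]) & (r[1:-1] < r[2:]))[0] + 1
    return np.asarray(wavelengths)[idx]

def match_score(minima_nm, features_nm, tol_nm=6.0):
    """Fraction of listed features with a detected minimum within tol_nm."""
    hits = sum(any(abs(m - f) <= tol_nm for m in minima_nm) for f in features_nm)
    return hits / len(features_nm)

# Synthetic spectrum: flat reflectance with Gaussian dips at 1222 and 1400 nm.
wl = np.arange(900.0, 1701.0, 5.0)
spectrum = np.full_like(wl, 0.6)
for center in (1222.0, 1400.0):
    spectrum -= 0.2 * np.exp(-0.5 * ((wl - center) / 10.0) ** 2)

minima = local_minima(wl, spectrum)
scores = {p: match_score(minima, f) for p, f in REPORTED_FEATURES_NM.items()}
print(minima)
print(scores)
```

Because HDPE and LDPE share the 1222 nm and 1400 nm features, a score of this kind cannot separate them reliably on its own, and the attenuation observed for wet objects would make minima harder to detect.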

4. Plastic Litter Recognition Using Machine Learning

4.1. Waste Image Patch Dataset

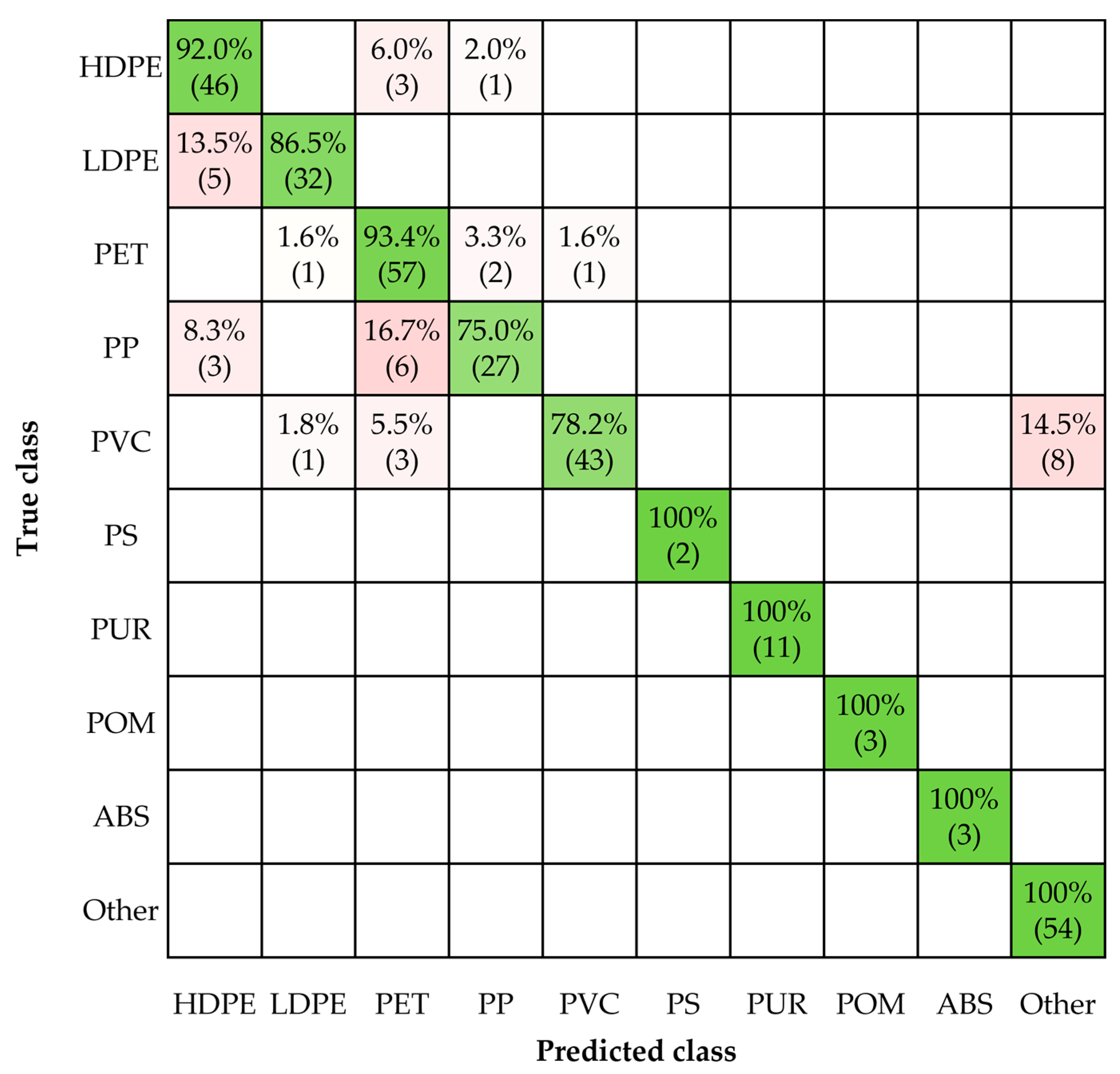

4.2. Waste Image Patch Classification

- Dimensionality reduction by feature extraction [38]: PCA was applied to the training subset, and different dimensions of the resulting feature subspace were considered for the next stage.

- Learning stage: The KNN, SVM, and ANN classification models were trained with automatic hyper-parameter optimization, using either Bayesian optimization [34,35] or random search [36], to determine the best validation accuracy. The hyper-parameters and their search ranges are presented in Table 3. To protect against overfitting, five-fold cross-validation was used. This scheme partitions the training subset into five disjoint folds. Each fold was used once as the validation fold, and the other four formed the training folds. For each validation fold, the classification model was trained on the training folds, and the classification accuracy was assessed on the validation fold. The average accuracy over all the folds was then used to optimize the tuning of the classification model parameters. The final validation accuracy gave a good estimate of the predictive accuracy of the classifier, which was used in the next stage with the full training subset, excluding any data reserved for the testing subset.

- Prediction stage: The trained models obtained during the previous stage were then applied to the testing image patches, and the overall test accuracy of the classifier was determined. The testing subset was independent of the training subset.
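The three stages above can be sketched end to end on synthetic data. This is a simplified illustration rather than the authors' implementation: it uses NumPy only, covers just the KNN model with Euclidean distance and equal weights, tunes only the number of neighbours by random search, and keeps the PCA dimension fixed. All function names, data sizes, and search bounds are assumptions made for the example.

```python
import numpy as np

def fit_pca(X, n_components):
    """Fit PCA on X (samples x features); return the mean and projection matrix."""
    mean = X.mean(axis=0)
    # Rows of Vt are principal directions, ordered by decreasing variance.
    _, _, Vt = np.linalg.svd(X - mean, full_matrices=False)
    return mean, Vt[:n_components].T

def knn_predict(X_train, y_train, X_query, k):
    """Majority-vote k-nearest-neighbour prediction with Euclidean distance."""
    d = np.linalg.norm(X_query[:, None, :] - X_train[None, :, :], axis=2)
    nearest = np.argsort(d, axis=1)[:, :k]
    return np.array([np.bincount(v).argmax() for v in y_train[nearest]])

def cv_accuracy(Z, y, k, n_folds=5, seed=0):
    """Mean validation accuracy over disjoint folds of the training subset."""
    rng = np.random.default_rng(seed)
    folds = np.array_split(rng.permutation(len(Z)), n_folds)
    accs = []
    for i, val_idx in enumerate(folds):
        tr_idx = np.concatenate([f for j, f in enumerate(folds) if j != i])
        pred = knn_predict(Z[tr_idx], y[tr_idx], Z[val_idx], k)
        accs.append(np.mean(pred == y[val_idx]))
    return float(np.mean(accs))

# Synthetic stand-in data: 3 classes in a 20-dimensional feature space.
rng = np.random.default_rng(1)
centers = rng.normal(scale=4.0, size=(3, 20))
y_all = np.repeat(np.arange(3), 60)
X_all = centers[y_all] + rng.normal(size=(180, 20))

# Independent training and testing subsets.
perm = rng.permutation(180)
train, test = perm[:120], perm[120:]

# Stage 1: PCA fitted on the training subset only, then applied to both subsets.
mean, W = fit_pca(X_all[train], n_components=5)
Z_train, Z_test = (X_all[train] - mean) @ W, (X_all[test] - mean) @ W

# Stage 2: random search over the number of neighbours, scored by five-fold CV.
candidates = rng.integers(1, 31, size=8)
best_k = max(candidates, key=lambda k: cv_accuracy(Z_train, y_all[train], int(k)))

# Stage 3: train on the full training subset and score on the testing subset.
pred_test = knn_predict(Z_train, y_all[train], Z_test, int(best_k))
test_acc = float(np.mean(pred_test == y_all[test]))
print(int(best_k), round(test_acc, 3))
```

The test subset never enters the PCA fit or the hyper-parameter search, which is what keeps the final accuracy an independent estimate.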

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Wolf, M.; Berg, K.V.D.; Garaba, S.P.; Gnann, N.; Sattler, K.; Stahl, F.; Zielinski, O. Machine learning for aquatic plastic litter detection, classification and quantification (APLASTIC-Q). Environ. Res. Lett. 2020, 15, 114042.

2. Tasseron, P.F.; Schreyers, L.; Peller, J.; Biermann, L.; van Emmerik, T. Toward robust river plastic detection: Combining lab and field-based hyperspectral imagery. Earth Space Sci. 2022, 9, e2022EA002518.

3. Tasseron, P.; van Emmerik, T.; Peller, J.; Schreyers, L.; Biermann, L. Advancing floating macroplastic detection from space using experimental hyperspectral imagery. Remote Sens. 2021, 13, 2335.

4. Freitas, S.; Silva, H.; Silva, E. Remote hyperspectral imaging acquisition and characterization for marine litter detection. Remote Sens. 2021, 13, 2536.

5. Zhou, S.; Kaufmann, H.; Bohn, N.; Bochow, M.; Kuester, T.; Segl, K. Identifying distinct plastics in hyperspectral experimental lab, aircraft-, and satellite data using machine/deep learning methods trained with synthetically mixed spectral data. Remote Sens. Environ. 2022, 281, 113263.

6. Moshtaghi, M.; Knaeps, E.; Sterckx, S.; Garaba, S.; Meire, D. Spectral reflectance of marine macroplastics in the VNIR and SWIR measured in a controlled environment. Sci. Rep. 2021, 11, 5436.

7. Mehrubeoglu, M.; Sickle, A.V.; Turner, J. Detection and identification of plastics using SWIR hyperspectral imaging. In Imaging Spectrometry XXIV: Applications, Sensors, and Processing; SPIE: Online Only, CA, USA, 2020; Volume 11504, p. 115040G.

8. Cocking, J.; Narayanaswamy, B.E.; Waluda, C.M.; Williamson, B.J. Aerial detection of beached marine plastic using a novel, hyperspectral short-wave infrared (SWIR) camera. ICES J. Mar. Sci. 2022, 79, 648–660.

9. Hu, C. Remote detection of marine debris using satellite observations in the visible and near infrared spectral range: Challenges and potentials. Remote Sens. Environ. 2021, 259, 112414.

10. Balsi, M.; Moroni, M.; Chiarabini, V.; Tanda, G. High-resolution aerial detection of marine plastic litter by hyperspectral sensing. Remote Sens. 2021, 13, 1557.

11. Leone, G.; Catarino, A.I.; Keukelaere, L.D.; Bossaer, M.; Knaeps, E.; Everaert, G. Hyperspectral reflectance dataset of pristine, weathered and biofouled plastics. Earth Syst. Sci. Data Discuss. 2022, 15, 745–752.

12. Zhou, S.; Kuester, T.; Bochow, M.; Bohn, N.; Brell, M.; Kaufmann, H. A knowledge-based, validated classifier for the identification of aliphatic and aromatic plastics by WorldView-3 satellite data. Remote Sens. Environ. 2021, 264, 112598.

13. Biermann, L.; Clewley, D.; Martinez-Vicente, V.; Topouzelis, K. Finding plastic patches in coastal waters using optical satellite data. Sci. Rep. 2020, 10, 2825–2830.

14. Bentley, J. Detecting Ocean Microplastics with Remote Sensing in the Near-Infrared: A Feasibility Study. 2019. Available online: https://vc.bridgew.edu/cgi/viewcontent.cgi?article=1309&context=honors_proj (accessed on 28 March 2023).

15. Topouzelis, K.; Papageorgiou, D.; Karagaitanakis, A.; Papakonstantinou, A.; Ballesteros, M.A. Remote sensing of sea surface artificial floating plastic targets with Sentinel-2 and unmanned aerial systems (plastic litter project 2019). Remote Sens. 2020, 12, 2013.

16. Bao, Z.; Sha, J.; Li, X.; Hanchiso, T.; Shifaw, E. Monitoring of beach litter by automatic interpretation of unmanned aerial vehicle images using the segmentation threshold method. Mar. Pollut. Bull. 2018, 137, 388–398.

17. Topouzelis, K.; Papageorgiou, D.; Suaria, G.; Aliani, S. Floating marine litter detection algorithms and techniques using optical remote sensing data: A review. Mar. Pollut. Bull. 2021, 170, 112675.

18. Ramavaram, H.R.; Kotichintala, S.; Naik, S.; Critchley-Marrows, J.; Isaiah, O.T.; Pittala, M.; Wan, S.; Irorere, D. Tracking Ocean Plastics Using Aerial and Space Borne Platforms: Overview of Techniques and Challenges. In Proceedings of the 69th International Astronautical Congress (IAC), IAC 2018 Congress Proceedings. Bremen, Germany, 1–5 October 2018.

19. Iordache, M.-D.; Keukelaere, L.D.; Moelans, R.; Landuyt, L.; Moshtaghi, M.; Corradi, P.; Knaeps, E. Targeting plastics: Machine learning applied to litter detection in aerial multispectral images. Remote Sens. 2022, 14, 5820.

20. Andriolo, U.; Gonçalves, G.; Bessa, F.; Sobral, P. Mapping marine litter on coastal dunes with unmanned aerial systems: A showcase on the Atlantic Coast. Sci. Total Environ. 2020, 736, 139632.

21. Geraeds, M.; van Emmerik, T.; de Vries, R.; bin Ab Razak, M.S. Riverine plastic litter monitoring using Unmanned Aerial Vehicles (UAVs). Remote Sens. 2019, 11, 2045.

22. Freitas, S.; Silva, H.; Silva, E. Hyperspectral imaging zero-shot learning for remote marine litter detection and classification. Remote Sens. 2022, 14, 5516.

23. Kikaki, K.; Kakogeorgiou, I.; Mikeli, P.; Raitsos, D.E.; Karantzalos, K. MARIDA: A benchmark for Marine Debris detection from Sentinel-2 remote sensing data. PLoS ONE 2022, 17, e0262247.

24. Freitas, S.; Silva, H.; Almeida, C.; Viegas, D.; Amaral, A.; Santos, T.; Dias, A.; Jorge, P.A.S.; Pham, C.K.; Moutinho, J.; et al. Hyperspectral imaging system for marine litter detection. In Proceedings of the OCEANS 2021: San Diego – Porto, San Diego, CA, USA, 20–23 September 2021; IEEE: New York, NY, USA, 2021; pp. 1–6.

25. Pasquier, G.; Doyen, P.; Carlesi, N.; Amara, R. An innovative approach for microplastic sampling in all surface water bodies using an aquatic drone. Heliyon 2022, 8, e11662.

26. Driedger, A.; Dürr, H.; Mitchell, K.; Flannery, J.; Brancazi, E.; Cappellen, P.V. Plastic debris: Remote sensing and characterization data streams and micro-satellites reflected infrared spectroscopy raman spectroscopy great lakes marine debris network. Int. J. Remote Sens. Mar. Pollut. Bull. 2007, 22, 1.

27. Neo, E.R.K.; Yeo, Z.; Low, J.S.C.; Goodship, V.; Debattista, K. A review on chemometric techniques with infrared, Raman and laser-induced breakdown spectroscopy for sorting plastic waste in the recycling industry. Resour. Conserv. Recycl. 2022, 180, 106217.

28. Garaba, S.P.; Harmel, T. Top-of-atmosphere hyper and multispectral signatures of submerged plastic litter with changing water clarity and depth. Opt. Express 2022, 30, 16553.

29. Ma, L.; Crawford, M.M.; Tian, J. Local manifold learning-based k-nearest-neighbor for hyperspectral image classification. IEEE Trans. Geosci. Remote Sens. 2010, 48, 4099–4109.

30. Melgani, F.; Bruzzone, L. Classification of hyperspectral remote sensing images with support vector machines. IEEE Trans. Geosci. Remote Sens. 2004, 42, 1778–1790.

31. Gnann, N.; Björn, B.; Ternes, T.A. Close-range remote sensing-based detection and identification of macroplastics on water assisted by artificial intelligence: A review. Water Res. 2022, 222, 118902.

32. Glorot, X.; Bengio, Y. Understanding the difficulty of training deep feedforward neural networks. In Proceedings of the Thirteenth International Conference on Artificial Intelligence and Statistics, Sardinia, Italy, 13–15 May 2010; pp. 249–256. Available online: http://proceedings.mlr.press/v9/glorot10a/glorot10a.pdf (accessed on 28 March 2023).

33. He, K.; Zhang, X.; Ren, S.; Sun, J. Delving deep into rectifiers: Surpassing human-level performance on imagenet classification. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 11–18 December 2015; pp. 1026–1034.

34. Snoek, J.; Larochelle, H.; Adams, R.P. Practical bayesian optimization of machine learning algorithms. Adv. Neural Inf. Process. Syst. 2012, 25. Available online: https://arxiv.org/pdf/1206.2944 (accessed on 28 March 2023).

35. Gelbart, M.A.; Snoek, J.; Adams, R.P. Bayesian optimization with unknown constraints. arXiv 2014, arXiv:1403.5607.

36. Bergstra, J.; Bengio, Y. Random search for hyper-parameter optimization. J. Mach. Learn. Res. 2012, 13, 281–305. Available online: https://dl.acm.org/doi/pdf/10.5555/2188385.2188395 (accessed on 28 March 2023).

37. Jolliffe, I.T. Principal Component Analysis, 2nd ed.; Springer: New York, NY, USA, 2002.

38. Lapajne, J.; Knapič, M.; Žibrat, U. Comparison of selected dimensionality reduction methods for detection of root-knot nematode infestations in potato tubers using hyperspectral imaging. Sensors 2022, 22, 367.

39. Fiore, L.; Serranti, S.; Mazziotti, C.; Riccardi, E.; Benzi, M.; Bonifazi, G. Classification and distribution of freshwater microplastics along the Italian Po river by hyperspectral imaging. Environ. Sci. Pollut. Res. 2022, 29, 48588–48606.

| Number of Objects | Plastic Type | Example Objects | | | |
|---|---|---|---|---|---|
| 19 | HDPE | Yellow rope | White plastic bag | Green net rope | Yogurt cover |
| 5 | LDPE | Blue toothpaste tube | White toothpaste tube | Orange rope | Red water bottle neck |
| 3 | PET | Green bottle bottom | Transparent tomato packaging | | |
| 23 | PP | Red rope | Dark blue rope | Blue deodorant cover | Red cap |
| 2 | PVC | Black container | Semi-transparent packaging | | |
| 2 | PS | Pink perfume cap | White part of yogurt (yogurt box) | | |
| 2 | PUR | Gray fragment of pristine plastic | Gray fragment | | |
| 1 | POM | White fragment of pristine plastic | | | |
| 1 | ABS | White fragment of pristine plastic | | | |

| Nine Plastic Types and "Other" (10 Classes) | Training Subset (Number of Patches) | Testing Subset (Number of Patches) |
|---|---|---|
| (1) HDPE | 135 | 50 |
| (2) LDPE | 67 | 37 |
| (3) PET | 85 | 61 |
| (4) PP | 96 | 36 |
| (5) PVC | 236 | 55 |
| (6) PS | 4 | 2 |
| (7) PUR | 14 | 11 |
| (8) POM | 2 | 3 |
| (9) ABS | 6 | 3 |
| (10) Other | 143 | 54 |
| Total Number of Patches | 788 | 312 |
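The patch counts above are strongly imbalanced, which matters for five-fold cross-validation. The short check below recomputes the totals from the table and lists the classes whose training patches are too few to appear in every validation fold; it is illustrative arithmetic only, and the variable names are chosen for this example.

```python
# Patch counts per class as (training, testing), copied from the table above.
patch_counts = {
    "HDPE": (135, 50), "LDPE": (67, 37), "PET": (85, 61), "PP": (96, 36),
    "PVC": (236, 55), "PS": (4, 2), "PUR": (14, 11), "POM": (2, 3),
    "ABS": (6, 3), "Other": (143, 54),
}

n_train = sum(tr for tr, _ in patch_counts.values())
n_test = sum(te for _, te in patch_counts.values())

# Share of the training subset taken by each class, largest first.
train_share = {c: tr / n_train for c, (tr, _) in patch_counts.items()}
for cls, share in sorted(train_share.items(), key=lambda kv: -kv[1]):
    print(f"{cls:>5}: {share:6.1%}")

# A class with fewer training patches than folds is absent from at least one fold.
n_folds = 5
scarce = [c for c, (tr, _) in patch_counts.items() if tr < n_folds]
print(n_train, n_test, scarce)
```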

| Supervised Machine Learning Model | Hyper-Parameter Search Range | | | |
|---|---|---|---|---|
| K-Nearest Neighbor classification (KNN) | Number of neighbors: 1–394 | Distance metric: city block, Chebyshev, correlation, cosine, Euclidean, Hamming, Jaccard, Mahalanobis, Minkowski (cubic), Spearman | Distance weight: equal, inverse, squared inverse | Standardized data: yes, no |
| Support Vector Machine (SVM) | Multiclass method: one-vs.-all, one-vs.-one | Box constraint level: 0.001–1000 | Kernel scale: 0.001–1000; Kernel function: Gaussian, linear, quadratic, cubic | Standardized data: yes, no |
| Artificial Neural Network (ANN) | Number of fully connected layers: 1–3 | Activation: ReLU, tanh, sigmoid, none | Regularization strength (Lambda): 1.269 × 10⁻⁸ to 126.9036 | Standardized data: yes, no |
| | First layer size: 1–300 | Second layer size: 1–300 | Third layer size: 1–300 | Iteration limit: 1–1000 |

| Classification Models | PCA Values | Optimizer Options | Fine-Tuning/Optimized Hyper-Parameters | | | | Validation Accuracy | Test Accuracy |
|---|---|---|---|---|---|---|---|---|
| KNN | | | Number of neighbors | Distance metric | Distance weight | Standardized data | | |
| Model1-KNN | PCA disabled | Random search | 1 | Correlation | Inverse | true | 89.2% | 73.1% |
| Model2-PCA48-KNN | 48 | Random search | 5 | Spearman | Inverse | false | 89.7% | 89.1% |
| Model3-PCA48-KNN | 48 | Bayesian optimization | 2 | Spearman | Squared inverse | false | 91.0% | 85.3% |
| Model4-PCA64-KNN | 64 | Random search | 10 | Spearman | Squared inverse | false | 90.2% | 87.8% |
| Model5-PCA64-KNN | 64 | Bayesian optimization | 2 | Spearman | Equal | false | 92.1% | 87.2% |
| Model6-PCA96-KNN | 96 | Random search | 1 | Spearman | Squared inverse | false | 90.7% | 86.9% |
| SVM (kernel scale: 1) | | | Multiclass method | Box constraint level | Kernel function | Standardized data | | |
| Model7-PCA64-SVM | 64 | Bayesian optimization | One-vs.-all | 627.1421 | Quadratic | false | 88.6% | 85.3% |
| Model8-PCA64-SVM | 64 | Random search | One-vs.-all | 268.3711 | Cubic | false | 88.5% | 72.8% |
| Neural Network ANN | | | | | | | | |
| Model9-PCA64-ANN | 64 | Bayesian optimization | Number of fully connected layers: 1 | Activation: none | Regularization strength (Lambda): 5.0497 × 10⁻⁸ | Standardized data: No | 87.6% | 71.8% |
| | | | First layer size: 19 | Second layer size: 0 | Third layer size: 0 | Iteration limit: 1000 | | |
| Model10-PCA64-ANN | 48 | Random search | Number of fully connected layers: 2 | Activation: tanh | Regularization strength (Lambda): 7.8208 × 10⁻⁷ | Standardized data: No | 87.9% | 84.6% |
| | | | First layer size: 17 | Second layer size: 288 | Third layer size: 0 | Iteration limit: 1000 | | |

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Alboody, A.; Vandenbroucke, N.; Porebski, A.; Sawan, R.; Viudes, F.; Doyen, P.; Amara, R. A New Remote Hyperspectral Imaging System Embedded on an Unmanned Aquatic Drone for the Detection and Identification of Floating Plastic Litter Using Machine Learning. Remote Sens. 2023, 15, 3455. https://doi.org/10.3390/rs15143455
