Enhancing Spatial Variability Representation of Radar Nowcasting with Generative Adversarial Networks

Abstract

1. Introduction

2. Methods

2.1. Problem Statement

2.2. The Principle of GAN

2.3. The SVRE Loss Function

2.4. The Architecture of the AGAN

3. Experiments

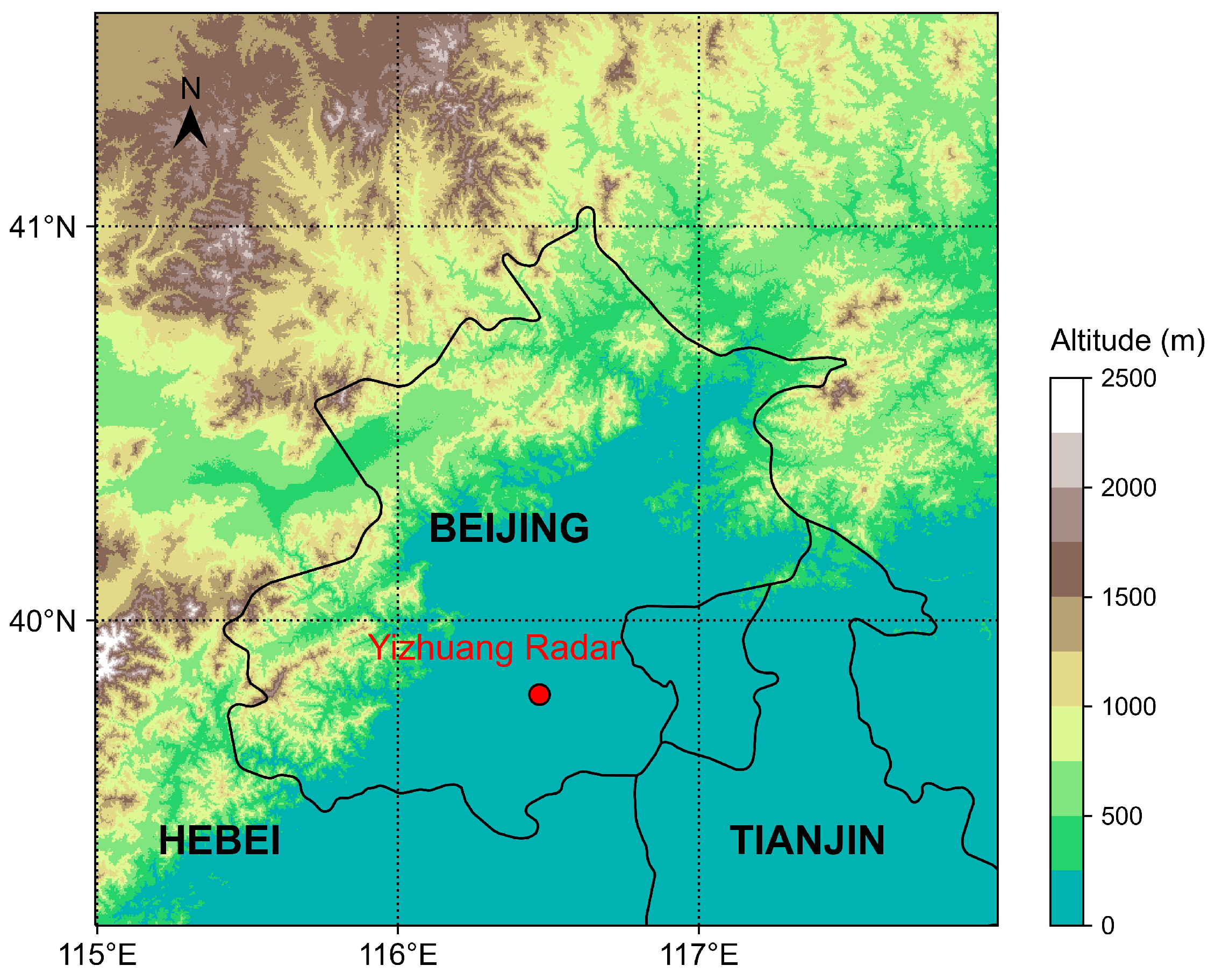

3.1. Data and Study Area

3.2. Evaluation Metrics

3.3. Experiment Settings

4. Results and Discussion

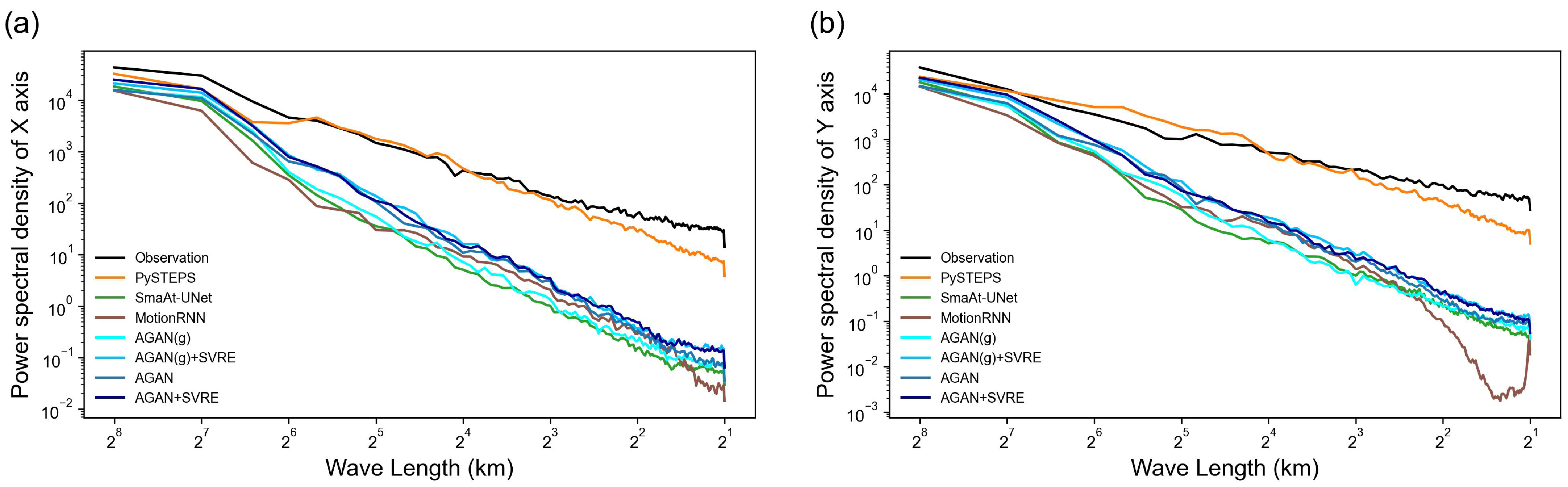

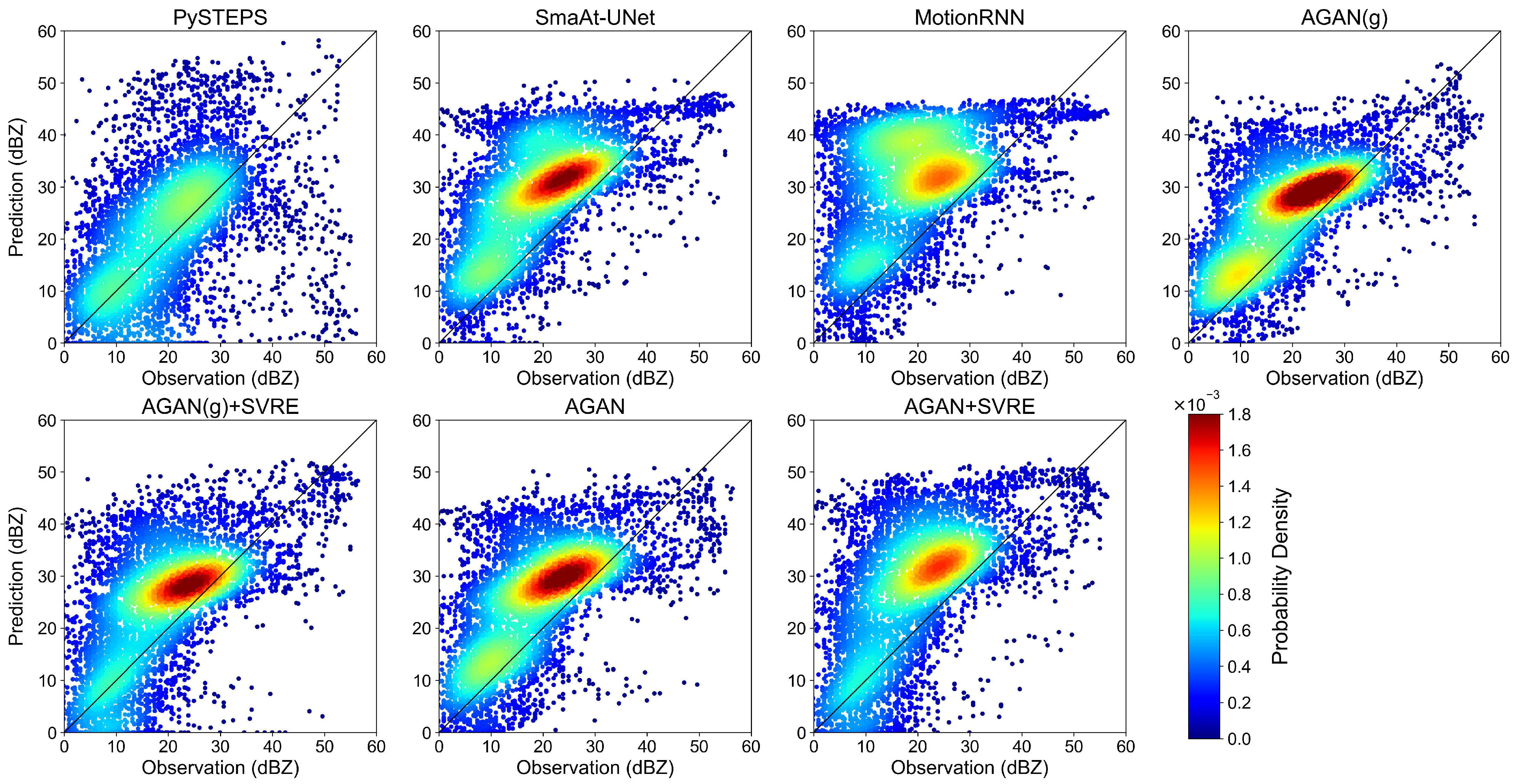

4.1. Overall Performance

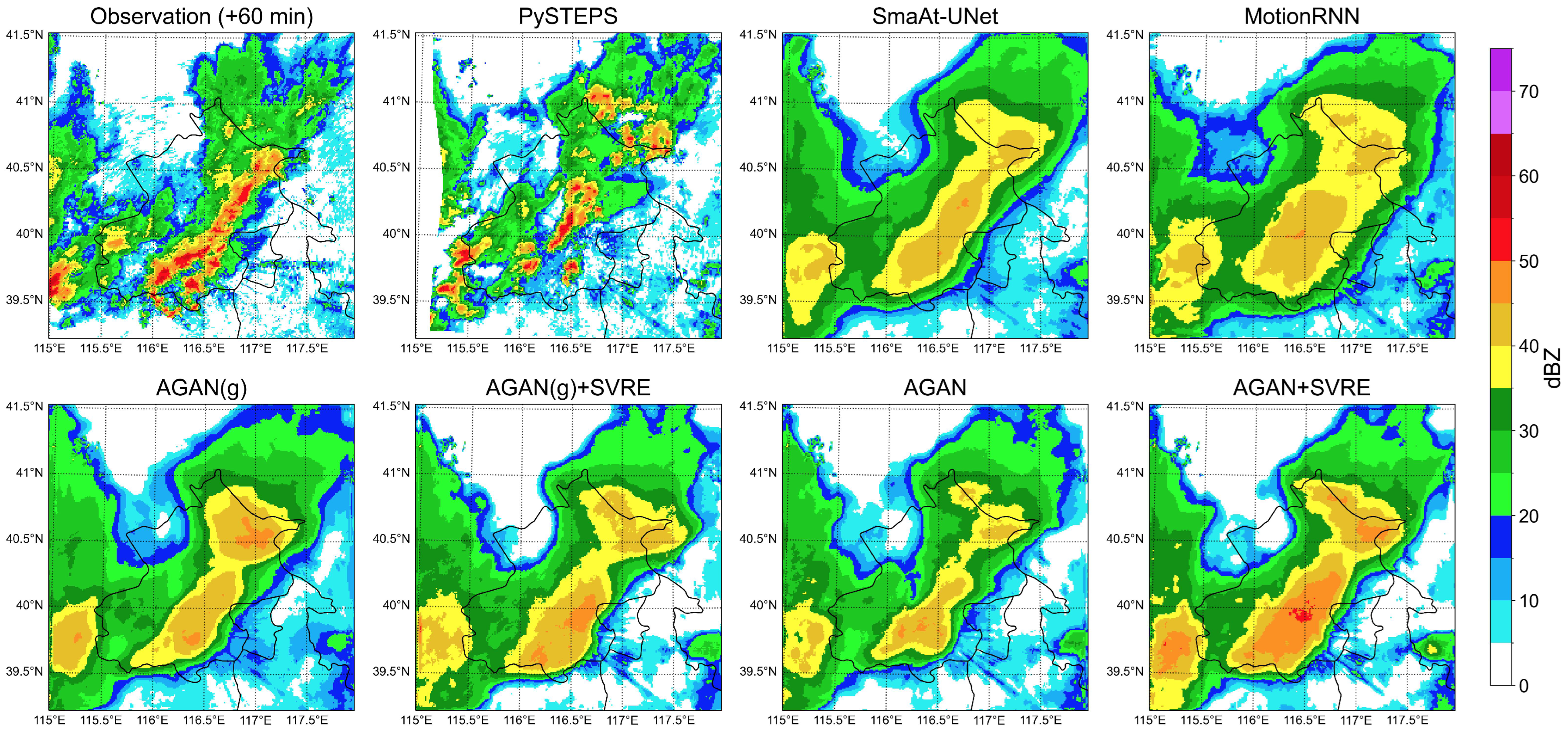

4.2. Case Study

4.2.1. Case 1

4.2.2. Case 2

4.3. Discussion

5. Conclusions

- We propose the SVRE loss function and the AGAN to alleviate the blurry effect of DL nowcasting models. Both reduce this effect by enhancing the spatial variability of the predicted radar reflectivity.

- We attribute the blurry effect of DL nowcasting models to a deficiency in representing the spatial variability of the radar reflectivity or precipitation field, which offers a new perspective for improving radar nowcasting.
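The link between blurring and reduced spatial variability can be illustrated with a minimal sketch. It is not the SVRE loss itself: the coefficient of variation is used here only as one possible measure of spatial variability, and the field is synthetic, the 3 × 3 box blur merely stands in for an over-smoothed forecast, and the function names are hypothetical.

```python
import numpy as np


def coefficient_of_variation(field):
    """Spatial standard deviation divided by the spatial mean of a 2-D field."""
    mean = field.mean()
    return float(field.std() / mean) if mean != 0 else float("nan")


def box_blur(field, k=3):
    """Average each pixel with its k x k neighbourhood (edges are replicated)."""
    pad = k // 2
    padded = np.pad(field, pad, mode="edge")
    out = np.zeros_like(field, dtype=float)
    rows, cols = field.shape
    for dy in range(k):
        for dx in range(k):
            out += padded[dy:dy + rows, dx:dx + cols]
    return out / (k * k)


# Synthetic positive-valued field (assumption: illustration only, not radar data).
rng = np.random.default_rng(0)
sharp = rng.gamma(shape=2.0, scale=10.0, size=(64, 64))
blurry = box_blur(sharp)

print("CV of sharp field: ", coefficient_of_variation(sharp))
print("CV of blurred field:", coefficient_of_variation(blurry))
```

In this toy setting the blurred field keeps roughly the same mean but has a lower coefficient of variation, which is the kind of loss in spatial variability that the conclusions associate with the blurry effect.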

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| SVRE | Spatial variability representation enhancement |
| AGAN | Attentional generative adversarial network |
| DL | Deep learning |
| GA | Generative adversarial |
| CNN | Convolutional neural network |
| RNN | Recurrent neural network |
| POD | Probability of detection |
| FAR | False alarm ratio |
| CSI | Critical success index |
| MBE | Mean bias error |
| MAE | Mean absolute error |
| RMSE | Root mean squared error |
| JSD | Jensen-Shannon divergence |
| SSIM | Structural similarity index measure |
| PSD | Power spectral density |
| CC | Correlation coefficient |

| Items | Training | Validation | Test | Removed | Total |
|---|---|---|---|---|---|
| Sequences | 13,200 | 1888 | 3760 | 44 | 18,892 |
| Rainy days | 60 | 9 | 17 | / | 86 |
| Proportion | 0.699 | 0.1 | 0.199 | 0.003 | 1 |
| Year | 2017, 2018 | 2018 | 2019 | / | 2017–2019 |

| Model | Trained with GA Strategy | Trained with SVRE Loss |
|---|---|---|
| AGAN(g) | × | × |
| AGAN(g) + SVRE | × | ✓ |
| AGAN | ✓ | × |
| AGAN + SVRE | ✓ | ✓ |

| Model | POD ↑ | FAR ↓ | CSI ↑ | MBE ↓ | MAE ↓ | RMSE ↓ | SSIM ↑ | JSD ↓ |
|---|---|---|---|---|---|---|---|---|
| PySTEPS | 0.299 | 0.470 | 0.204 | 0.0 | 6.1 | 9.6 | 0.292 | 0.601 |
| SmaAt-UNet | 0.631 | 0.521 | 0.351 | 13.8 | 14.6 | 18.8 | 0.365 | 0.516 |
| MotionRNN | 0.572 | 0.477 | 0.310 | 14.0 | 15.0 | 19.2 | 0.337 | 0.521 |
| AGAN(g) | 0.749 | 0.568 | 0.373 | 13.7 | 14.6 | 18.8 | 0.360 | 0.437 |
| AGAN(g) + SVRE | 0.643 | 0.484 | 0.377 | 13.2 | 14.4 | 18.6 | 0.377 | 0.477 |
| AGAN | 0.721 | 0.565 | 0.374 | 13.4 | 14.4 | 18.5 | 0.376 | 0.427 |
| AGAN + SVRE | 0.745 | 0.578 | 0.380 | 13.4 | 14.5 | 18.6 | 0.387 | 0.421 |

| Model | POD ↑ | FAR ↓ | CSI ↑ | MBE ↓ | MAE ↓ | RMSE ↓ | SSIM ↑ | JSD ↓ |
|---|---|---|---|---|---|---|---|---|
| PySTEPS | 0.483 | 0.455 | 0.344 | −0.2 | 8.3 | 11.8 | 0.231 | 0.672 |
| SmaAt-UNet | 0.936 | 0.556 | 0.431 | 10.4 | 11.1 | 15.1 | 0.304 | 0.664 |
| MotionRNN | 0.914 | 0.601 | 0.384 | 11.0 | 11.7 | 15.5 | 0.264 | 0.619 |
| AGAN(g) | 0.899 | 0.510 | 0.464 | 10.1 | 10.8 | 14.9 | 0.354 | 0.661 |
| AGAN(g) + SVRE | 0.881 | 0.554 | 0.421 | 9.1 | 10.5 | 14.4 | 0.317 | 0.650 |
| AGAN | 0.819 | 0.482 | 0.464 | 8.3 | 9.7 | 13.7 | 0.358 | 0.621 |
| AGAN + SVRE | 0.927 | 0.536 | 0.448 | 9.1 | 10.2 | 14.0 | 0.368 | 0.616 |

| Model | POD ↑ | FAR ↓ | CSI ↑ | MBE ↓ | MAE ↓ | RMSE ↓ | SSIM ↑ | JSD ↓ |
|---|---|---|---|---|---|---|---|---|
| PySTEPS | 0.696 | 0.476 | 0.426 | 0.8 | 7.5 | 10.6 | 0.228 | 0.642 |
| SmaAt-UNet | 0.981 | 0.647 | 0.351 | 9.8 | 10.2 | 13.9 | 0.284 | 0.442 |
| MotionRNN | 0.936 | 0.659 | 0.333 | 10.7 | 11.3 | 15.3 | 0.249 | 0.298 |
| AGAN(g) | 0.933 | 0.519 | 0.465 | 8.3 | 8.8 | 13.2 | 0.306 | 0.331 |
| AGAN(g) + SVRE | 0.873 | 0.524 | 0.445 | 7.0 | 8.2 | 12.0 | 0.305 | 0.409 |
| AGAN | 0.951 | 0.604 | 0.388 | 8.5 | 9.1 | 12.8 | 0.327 | 0.341 |
| AGAN + SVRE | 0.960 | 0.617 | 0.378 | 8.3 | 9.1 | 12.8 | 0.332 | 0.271 |
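The categorical scores (POD, FAR, CSI) and continuous errors (MBE, MAE, RMSE) reported in the tables above follow standard forecast verification definitions. The sketch below shows one way they could be computed for a single predicted and observed reflectivity field. The function names, the 35 dBZ threshold in the usage example, and the sign convention for MBE (forecast minus observation) are assumptions made for illustration and are not taken from the paper's evaluation code.

```python
import numpy as np


def _ratio(numerator, denominator):
    """Return numerator / denominator, or NaN when the denominator is zero."""
    return float(numerator) / float(denominator) if denominator > 0 else float("nan")


def categorical_scores(pred, obs, threshold):
    """POD, FAR and CSI from the 2 x 2 contingency table at one threshold."""
    pred_event = pred >= threshold
    obs_event = obs >= threshold
    hits = np.sum(pred_event & obs_event)
    misses = np.sum(~pred_event & obs_event)
    false_alarms = np.sum(pred_event & ~obs_event)
    pod = _ratio(hits, hits + misses)                 # probability of detection
    far = _ratio(false_alarms, hits + false_alarms)   # false alarm ratio
    csi = _ratio(hits, hits + misses + false_alarms)  # critical success index
    return pod, far, csi


def continuous_errors(pred, obs):
    """MBE, MAE and RMSE, with MBE defined here as mean(pred - obs)."""
    diff = np.asarray(pred, dtype=float) - np.asarray(obs, dtype=float)
    mbe = float(diff.mean())
    mae = float(np.abs(diff).mean())
    rmse = float(np.sqrt((diff ** 2).mean()))
    return mbe, mae, rmse


# Toy 2 x 2 fields in dBZ (hypothetical values, not from the study data).
obs = np.array([[10.0, 40.0], [45.0, 20.0]])
pred = np.array([[38.0, 42.0], [30.0, 15.0]])
print(categorical_scores(pred, obs, threshold=35.0))
print(continuous_errors(pred, obs))
```

With this convention a positive MBE means the forecast overestimates reflectivity on average. In practice the scores would typically be aggregated over all test sequences and lead times rather than computed for a single field.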

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Gong, A.; Li, R.; Pan, B.; Chen, H.; Ni, G.; Chen, M. Enhancing Spatial Variability Representation of Radar Nowcasting with Generative Adversarial Networks. Remote Sens. 2023, 15, 3306. https://doi.org/10.3390/rs15133306
