1. Introduction

There is growing evidence that more intense forest disturbance events have become more frequent in recent decades, posing significant challenges to biological habitats and ecological sustainability [1,2,3]. Natural disturbances, such as fire, drought, and hurricanes, alongside human activities, such as deforestation, urbanization, and agricultural land reclamation, significantly affect the composition, structure, and function of forest systems. These disturbance events release massive amounts of the carbon stored in forest vegetation into the atmosphere while also disrupting forest oxygen-release functions [4,5]. Forest disturbances can permanently alter forest functions, with considerable effects on the global forest carbon budget and other vital ecosystem services, including the water cycle and energy balance [6,7]. There is an urgent need for retrospective analysis and compilation of basic data on forest disturbance, which will facilitate the development of sustainable forest conservation policies and help mitigate the increase in carbon emissions generated by forest disturbance.

Detecting and characterizing change over time is the natural first step toward identifying the driver of the change and understanding the change mechanism [8]. Remote sensing data have long been used for forest cover research because they capture natural and anthropogenic activities over broad spatial scales and are inherently temporal [9,10,11]. The Landsat archive provides a long-term, large-scale collection of satellite images in a standard format that can be used to detect changes caused by forest disturbance over large areas [12]. Since 2008, free and open access to the entire Landsat archive has revolutionized the use of Landsat data [13]. Landsat time series analysis with extraction of spectral trajectory features is currently the mainstream method for systematic forest disturbance monitoring and has seen significant progress [8,14,15,16,17,18,19,20].

Many forest disturbance detection and Landsat-based change detection algorithms that use time series remote sensing data have been developed and widely used. Among them, threshold methods (e.g., VCT), trajectory fitting methods (e.g., CCDC), and trajectory segmentation methods (e.g., LandTrendr) are the most commonly used for forest disturbance detection [13,15,16]. These methods have proven effective in detecting forest disturbances, but their reliability often depends on the severity of the disturbance events themselves [21]. Ideally, a forest disturbance produces time series variability that is large enough to pass a fixed detection threshold [18]. However, data gaps and noise due to clouds, snow, or satellite system failures still make it challenging to extract reliable changes from remote sensing time series [22,23,24]. The spectral response to non-stand-replacing disturbance is subtle and delayed; therefore, some algorithms either do not attempt to detect it or take a conservative approach to avoid errors caused by factors such as data noise and phenological changes. Most products and methods for detecting forest disturbance are sensitive to sudden, rapid stand-replacing disturbances (e.g., harvest, fire) but ineffective for non-stand-replacing disturbances (e.g., insect pests, drought) that persist over many years and change gradually [21,25,26]. The goal of forest management is to mitigate or adapt to the impacts of constantly changing disturbance conditions, and subtle long-term disturbances often lead to more varied stand structures, which also need to be addressed [27]. Therefore, new methods are needed that detect forest disturbances comprehensively and accurately without relying solely on the severity of the disturbance events themselves.

Deep learning (DL) has powerful modeling and learning capabilities and can extract information about real-world changes from remote sensing data [28]. Current studies using DL for forest disturbance detection are mainly based on computer vision techniques, which do not capture long-term forest dynamics. Most of these studies were evaluated with little reference data from a small area and for a single disturbance causal agent only, which limits the transferability of the proposed approaches [29,30]. In remote sensing, the detection of forest disturbances is inherently difficult because the image textures of low-magnitude and very small disturbances are not distinct and resemble background noise [31].

DL is well adapted to complex spatial and temporal patterns and has the ability to detect and differentiate land cover with very similar spectral characteristics [

32,

33]. Using time series remote sensing data can provide dynamic change information of the Earth’s surface. In recent years, DL-based time series classification models have been used to obtain periodic information on vegetation dynamics, mowing frequency, land use, and other land surface change information from remote sensing data, such as convolutional neural network (CNN), long short-term memory (LSTM), and recurrent neural network (RNN) [

34,

35,

36,

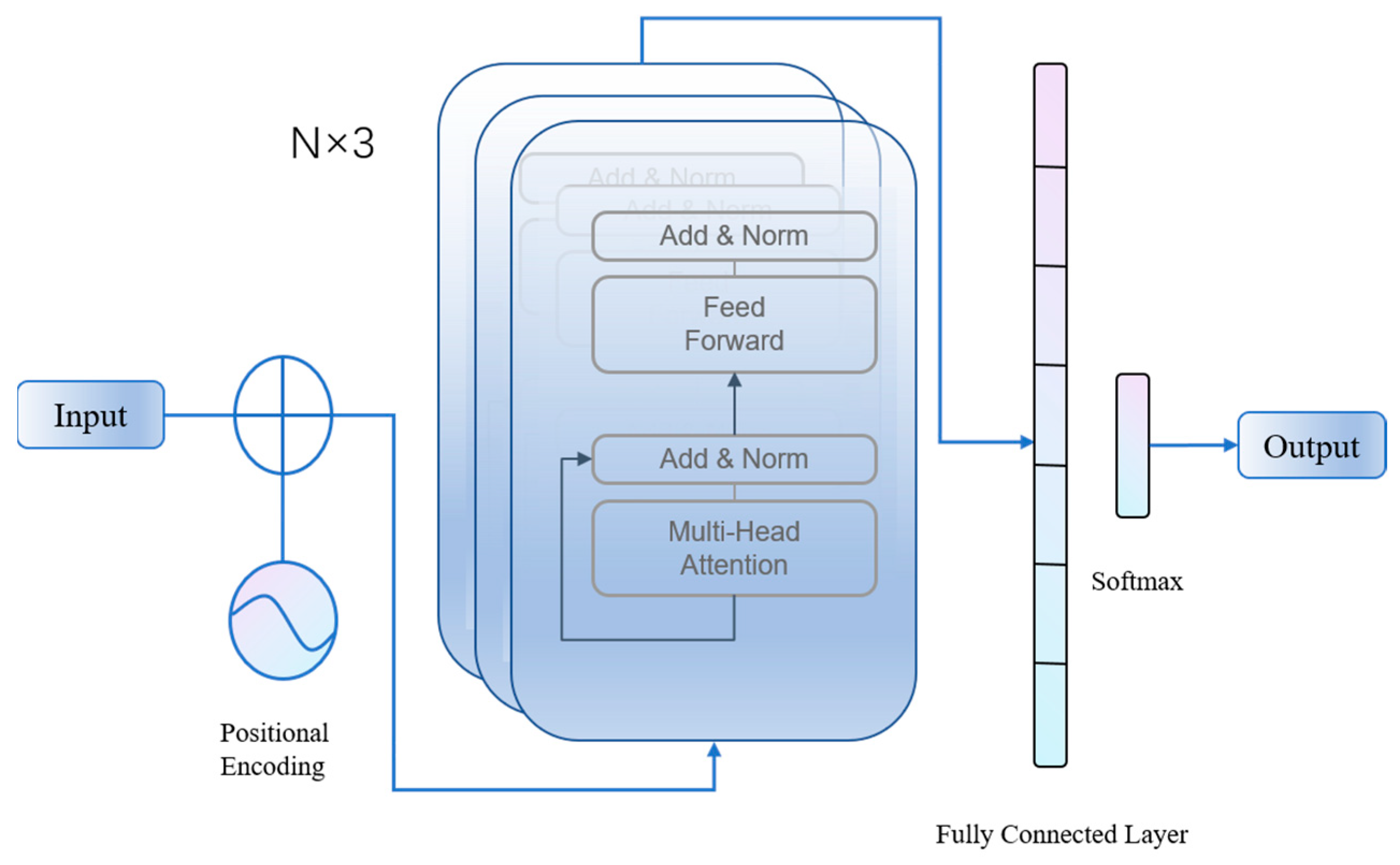

37]. The transformer was first proposed for applications in natural language processing [

38]. It has been used in other areas, such as computer vision and sequence generation, due to its efficient performance, demonstrating its advances [

38,

39,

40]. In recent studies, the transformer has been used for time series prediction and classification, showing superior performance on multiple datasets [

41,

42]. In contrast to RNN and CNN, the self-attention mechanism of the transformer allows neural networks to extract features from observations at specific time steps of the input time series of values [

42]. Rußwurm et al. [

43] compared six DL models based on Sentinel-2 raw and pre-processed data for crop-type classification, showing better performance for self-attention neural networks than the CNN.
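To make the idea of attending to specific time steps concrete, the following is a minimal sketch of scaled dot-product self-attention over a short time series. It is an illustration under simplifying assumptions, not the model used in this study: the query, key, and value projections are taken to be the identity (a real transformer learns them), and the function names and the input values are hypothetical.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(series):
    """Scaled dot-product self-attention with identity projections (assumption).

    series: list of time steps, each a list of d feature values.
    Returns (outputs, weights), where weights[i][j] is how strongly
    time step i attends to time step j.
    """
    d = len(series[0])
    outputs, weights = [], []
    for query in series:
        # Similarity of this time step to every time step, scaled by sqrt(d)
        scores = [
            sum(q * k for q, k in zip(query, key)) / math.sqrt(d)
            for key in series
        ]
        row = softmax(scores)
        weights.append(row)
        # Each output is a weighted average of all time steps
        outputs.append(
            [sum(w * value[c] for w, value in zip(row, series)) for c in range(d)]
        )
    return outputs, weights


# Hypothetical two-feature observations at five time steps
series = [[0.6, 0.2], [0.6, 0.2], [0.1, 0.5], [0.3, 0.4], [0.5, 0.3]]
outputs, weights = self_attention(series)
```

Each row of `weights` sums to one, so every output is a convex combination of the observations at all time steps; this is what lets the network weight individual dates directly rather than passing information step by step as in an RNN.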

Free open access to data and increased computing power solve the limited availability of the excessive computational demand for temporal stacks of large-scale satellite images [

44,

45]. Numerous studies have shown that the use of time dependence and spectral change is more suitable for forest disturbance detection [

13,

31]. However, the inherent variability, dropouts, and extraneous data present in remote sensing time series data pose significant challenges in achieving end-to-end forest disturbance detection using DL [

46]. If the remote sensing time series can be aligned and trimmed to equivalent lengths, it is possible to use DL time series classification to distinguish between low-magnitude disturbances and spectral changes caused by noise [

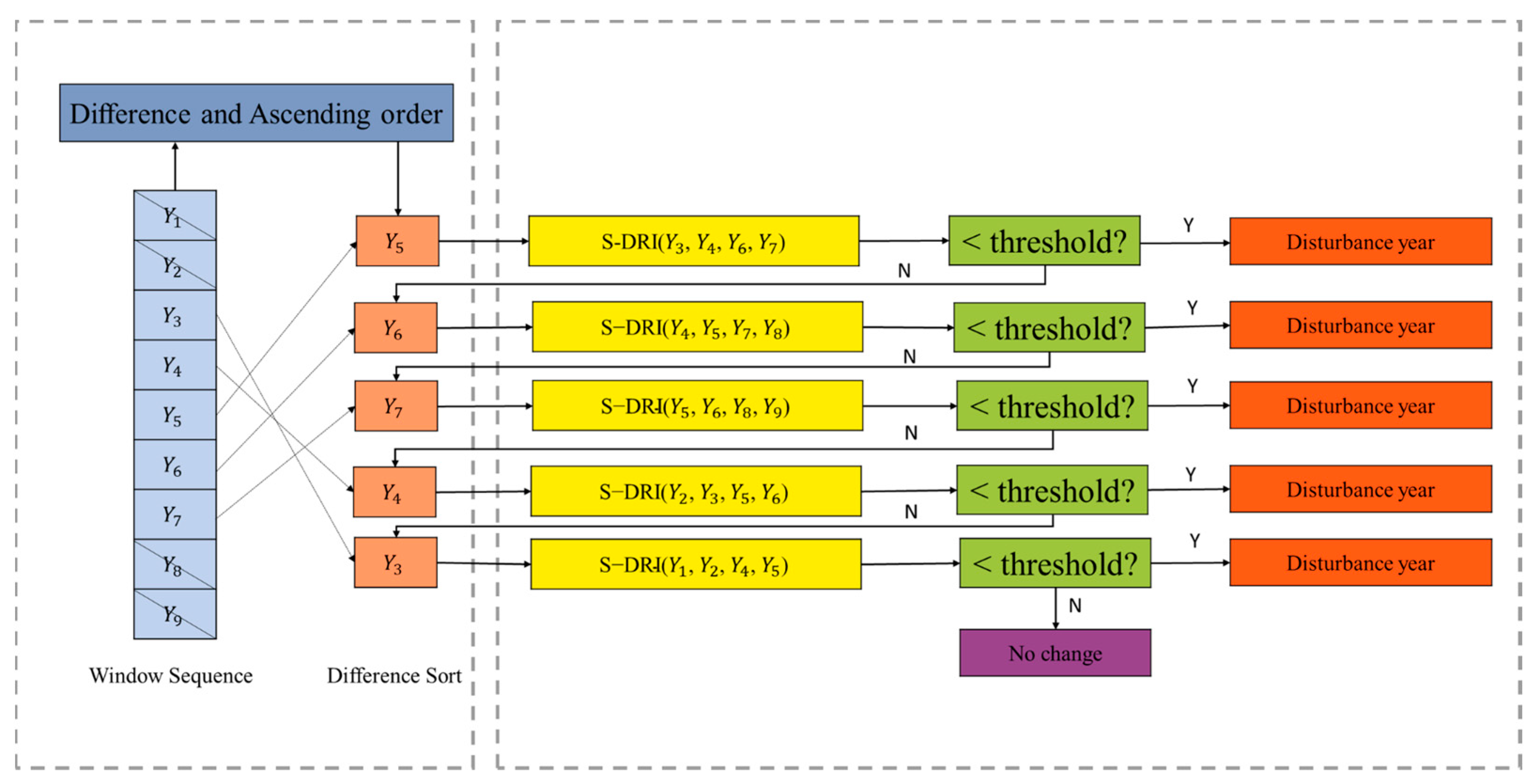

46]. DL can identify a sequence of forest disturbance events, and the exact timing of the forest disturbance can be determined by utilizing simpler statistical methods or relying on prior knowledge of the disturbance times present within the sequence. Prior knowledge constraints integrate logical rules into deep learning models, encode human intentions and domain knowledge into the model to control the output results, and avoid heavy reliance on large amounts of labeled data for training [

47]. Forest disturbance can be considered a time-varying process that can be effectively monitored through time series analysis and characteristics extraction [

48]. Based on the time-varying characteristics of forest disturbances, integrating prior knowledge constraints into deep learning time series classification models to detect forest disturbances can avoid top-down approaches to eliminate noise-induced variation and focus more on lower-magnitude disturbances.

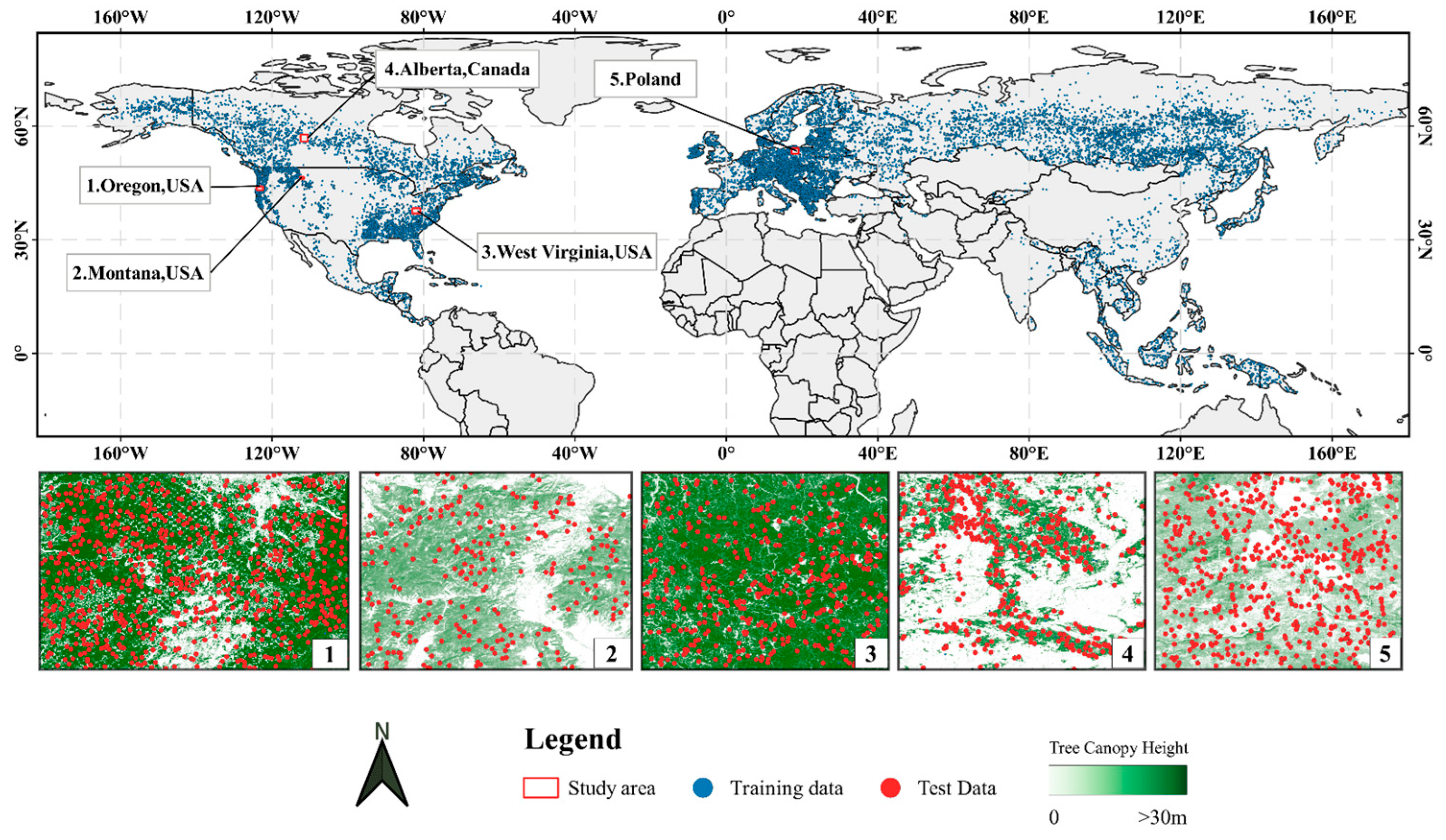

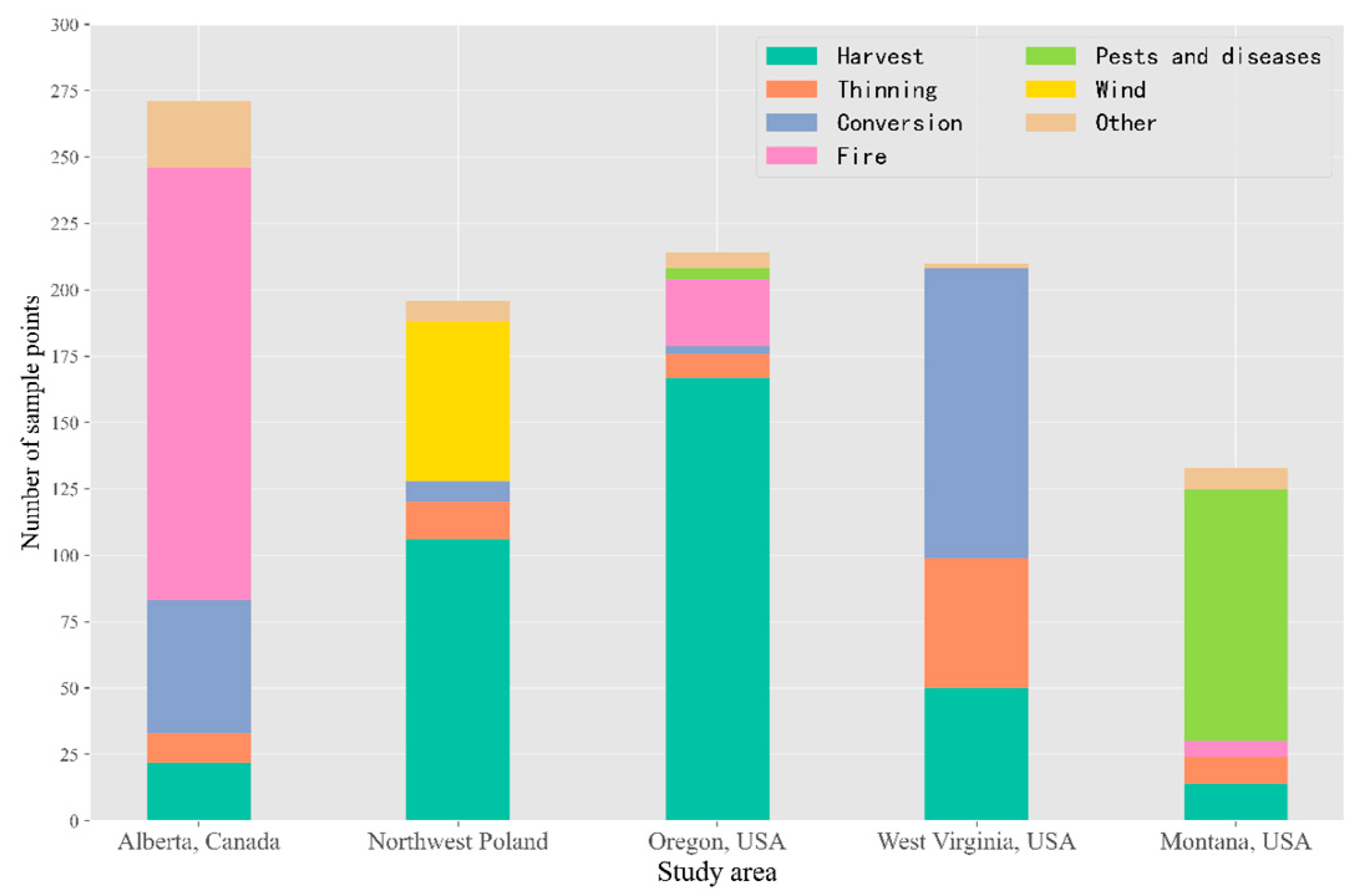

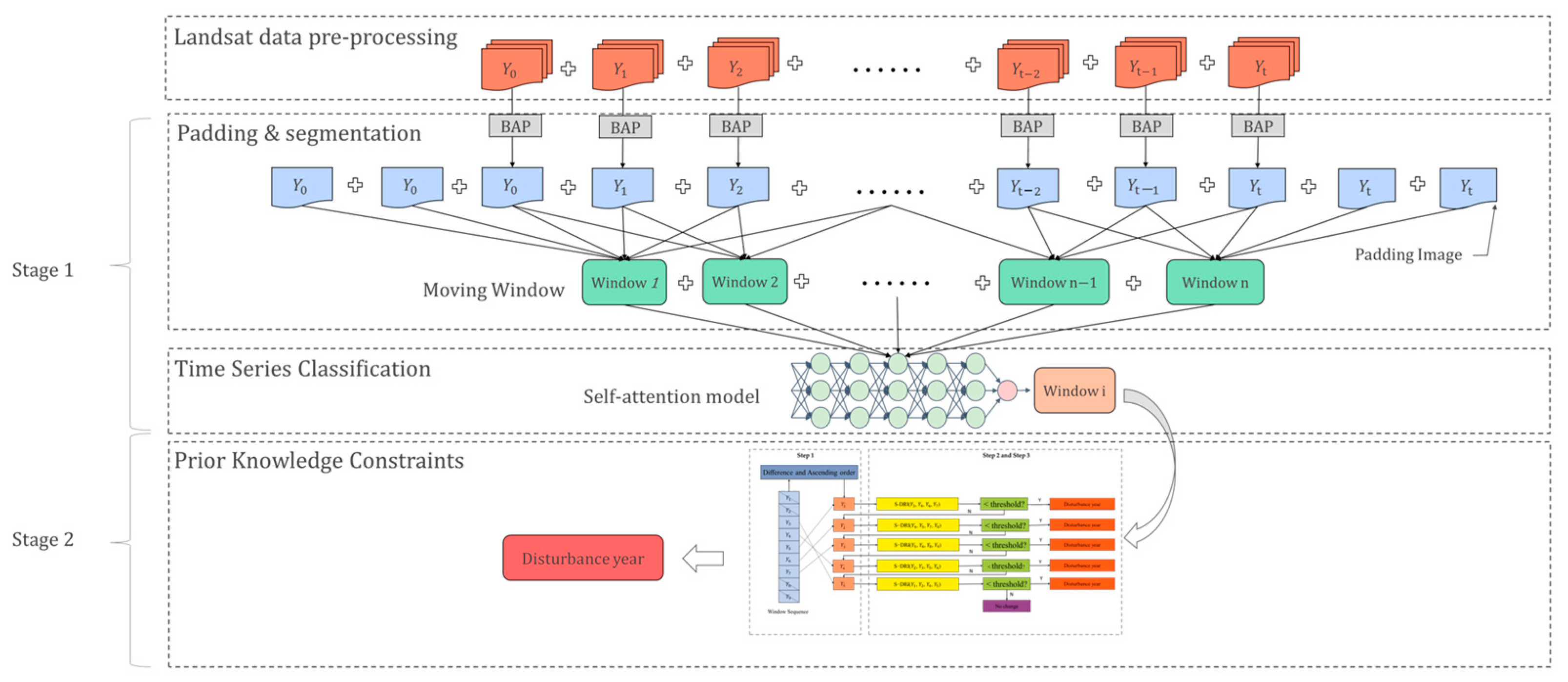

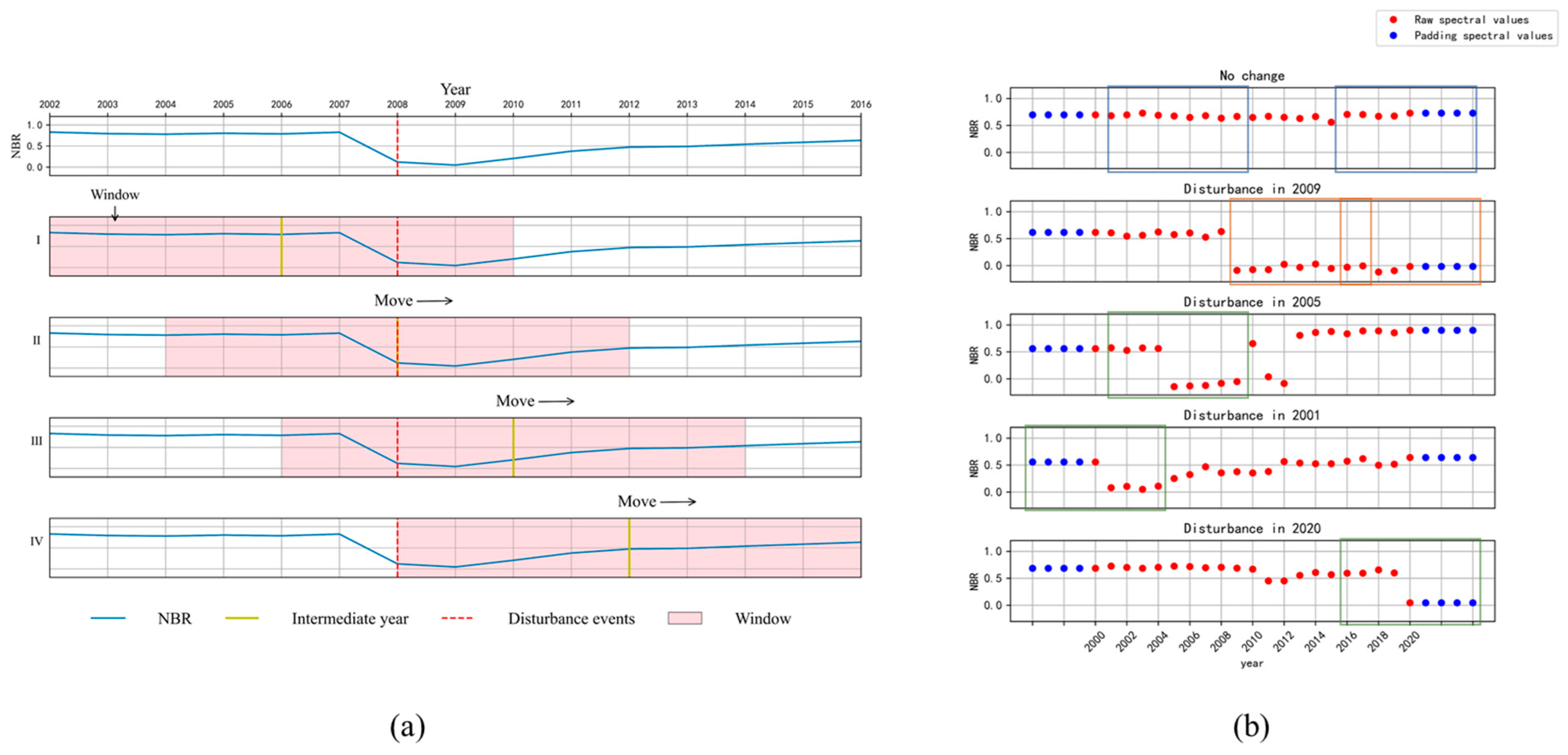

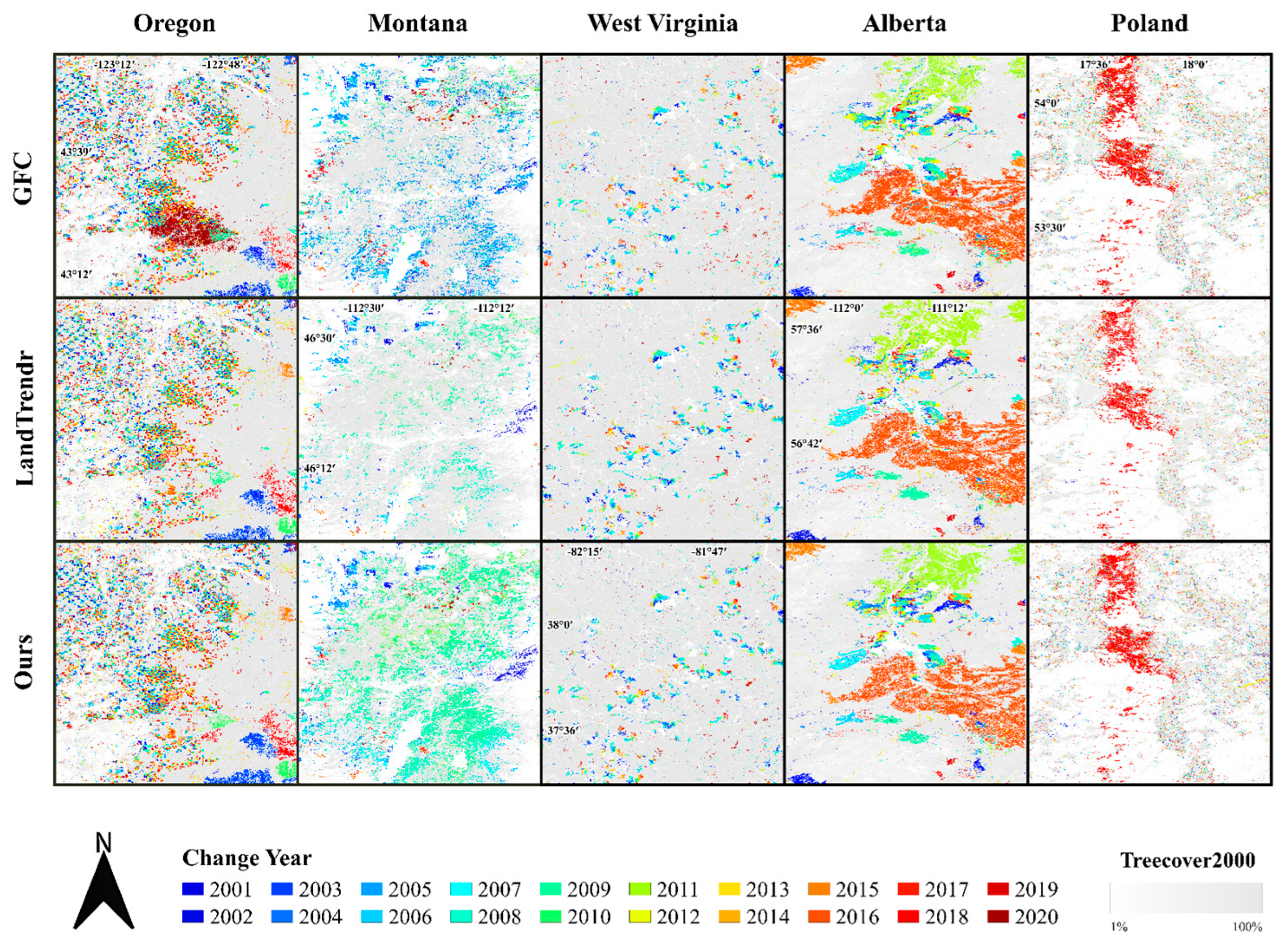

In this paper, we propose a forest disturbance detection model that combines DL classification and prior knowledge constraints to achieve comprehensive and accurate detection of forest disturbances using time series remote sensing data. The proposed approach maps forest disturbance in two phases: (1) a moving window algorithm aligns and segments the remote sensing time series, and a DL classifier then identifies the time window in which a disturbance event occurred; (2) prior knowledge constraints are defined and applied to locate the specific year of the disturbance event within the identified window. We illustrate the effectiveness of our model by analyzing Landsat time series spanning two decades (2001 to 2020) and testing on forests in study areas located in Oregon, West Virginia, Montana, Alberta, and Poland. In addition, we compare our approach with results from LandTrendr and the GFC dataset and examine omissions across the different forest disturbance causal agent classes.
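The two-phase process can be sketched roughly as follows. This is only an illustration under stated assumptions: the window length, the stride, the `toy_classifier` stand-in for the trained DL model, and the `locate_year` rule (steepest year-to-year drop below a threshold) are all hypothetical and are not the classifier or the constraint defined in this paper. The index values are synthetic.

```python
def sliding_windows(years, values, length=5, stride=1):
    """Split one pixel's annual series into fixed-length, overlapping windows."""
    if len(years) != len(values):
        raise ValueError("years and values must have the same length")
    windows = []
    for start in range(0, len(values) - length + 1, stride):
        end = start + length
        windows.append((years[start:end], values[start:end]))
    return windows


def toy_classifier(window_values, min_range=0.2):
    """Placeholder for the trained DL classifier (assumption):
    flags a window when its values span a large range."""
    return max(window_values) - min(window_values) >= min_range


def locate_year(window_years, window_values, threshold=-0.1):
    """Stand-in prior-knowledge rule (assumption): return the year with the
    steepest year-to-year drop that is at or below the threshold, else None."""
    best_year, best_drop = None, threshold
    for i in range(1, len(window_values)):
        drop = window_values[i] - window_values[i - 1]
        if drop <= best_drop:
            best_year, best_drop = window_years[i], drop
    return best_year


def detect(years, values, length=5):
    """Phase 1 flags candidate windows; phase 2 dates the event inside each."""
    detected = set()
    for w_years, w_values in sliding_windows(years, values, length):
        if toy_classifier(w_values):
            year = locate_year(w_years, w_values)
            if year is not None:
                detected.add(year)
    return sorted(detected)


# Synthetic spectral index series with an abrupt drop in 2005 and gradual recovery
years = list(range(2001, 2011))
index_values = [0.62, 0.63, 0.61, 0.62, 0.30, 0.35, 0.41, 0.47, 0.52, 0.56]
print(detect(years, index_values))  # [2005]
```

Because overlapping windows can flag the same event several times, the sketch collects detected years in a set. Windows that cover only the recovery phase are flagged by the toy classifier but yield no year, since no drop in them reaches the threshold.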

4. Discussion

An accurate understanding of forest dynamics is critical both for effective forest management and for mitigating the effects of climate change. The work presented here demonstrates a novel approach for detecting forest disturbances using DL time series classification and prior knowledge constraints. During the detection period, the moving window algorithm processes each pixel’s time series as a sequence of windows and evaluates each window with an improved self-attention model to obtain an interval estimate of the disturbance time. The prior knowledge constraints are then applied to the windows flagged as containing disturbance events to determine the exact year of disturbance. The results demonstrate that the combination of DL time series classification models and prior knowledge constraints can help detect forest disturbances of varying magnitudes, from subtle to severe. This approach provides comprehensive and detailed insight into forest dynamics and can support effective forest management and climate change mitigation efforts.

A key innovation of our work lies in the combination of DL time series classification and prior knowledge constraints to detect the history of forest disturbance. Landsat-based forest cover and change mapping using supervised expert-driven classification is a well-established and accepted methodology [71]. Recent studies have shown that robust reference data and carefully constructed models, such as stacked generalization, secondary classification, and ensemble methods, yield disturbance maps with higher accuracy [22,23,26]. However, these methods rely on underlying forest disturbance detection algorithms, such as LandTrendr, to provide evidence. In previous studies, DL has mainly been applied to forest disturbance detection through semantic segmentation and time series regression and forecasting [30,72,73,74,75]. When used for large-scale disturbance detection, these methods still have limitations, such as the need for labor-intensive manual annotation and higher-quality images. Our study employed a method based on time series deep learning and prior knowledge constraints, effectively addressing the challenge of detecting low-magnitude disturbances. The DL time series classifier differentiated between stable forests and disturbances, while the prior knowledge constraints reduced the learning cost of the model. Our method is applicable for detecting disturbances of varying magnitudes on a global scale, as demonstrated by the validation results across multiple study areas worldwide. These results provide evidence of the reliability of our method.

Ideally, noise due to cloud contamination, smoke obscuration, and sensor failure in the time series should be completely removed before time series analysis, but complete elimination of this noise is not possible without human intervention [

23]. The differences in spectral indices based on spectral reflectance ratio between observations before and after low-magnitude forest disturbances are very similar to the spectral changes caused by phenological variations and solar angle differences [

76,

77]. Using high-magnitude threshold rules can remove these noisy and erroneous spectral changes, but it is difficult to capture low-magnitude disturbances [

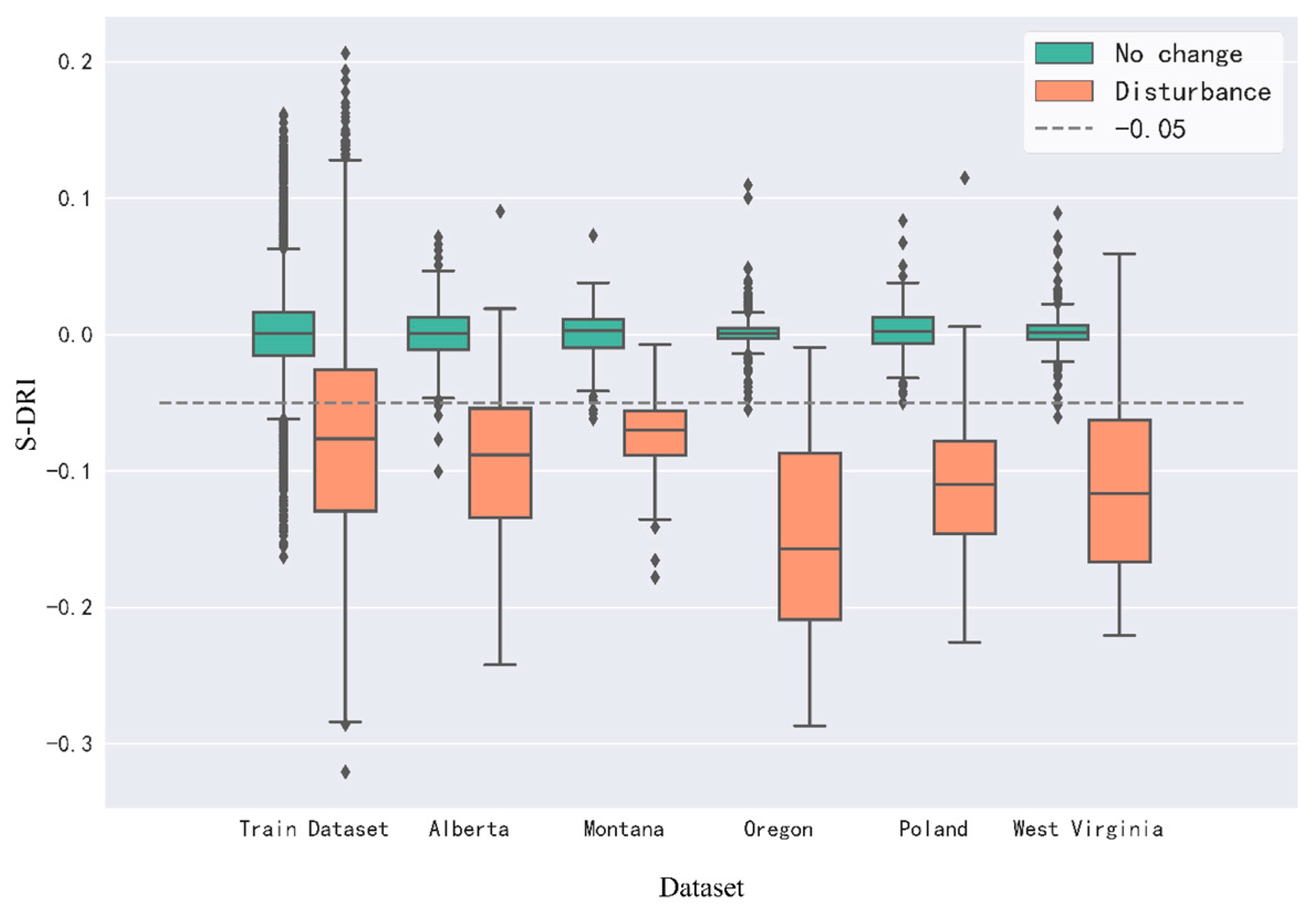

26]. Evaluation of the results for different disturbance causal agents shows that ignoring low-magnitude disturbances (e.g., thinning, pests, and diseases) is still an important factor affecting the accuracy of the model. The self-attention model uses multi-year spectral trajectory profiles to track forest disturbances, filtering out most spectral changes caused by noise, which helps the knowledge constraint (S-DRI) run in a high-confidence window sequence. By doing so, a lower threshold can be used to detect the occurrence of forest disturbance, avoiding oversensitivity to spectral changes. When we attempted to detect forest disturbance using S-DRI alone for each pixel throughout the detection period without employing a moving window and DL time series classification, there was a significant decrease in OA of the test dataset. Specifically, the OA decreased to 68.1%, as expected.

The time series classification task is complicated by extraneous, erroneous, and unaligned data of variable length [46]. The moving window solves the problem of the fixed input dimension of the time series classification model, and the prior knowledge constraint is used to determine the exact years of disturbance events. Once the self-attention model has been trained, the window can keep advancing to process new images as they become available, making more efficient use of the forest detection data.

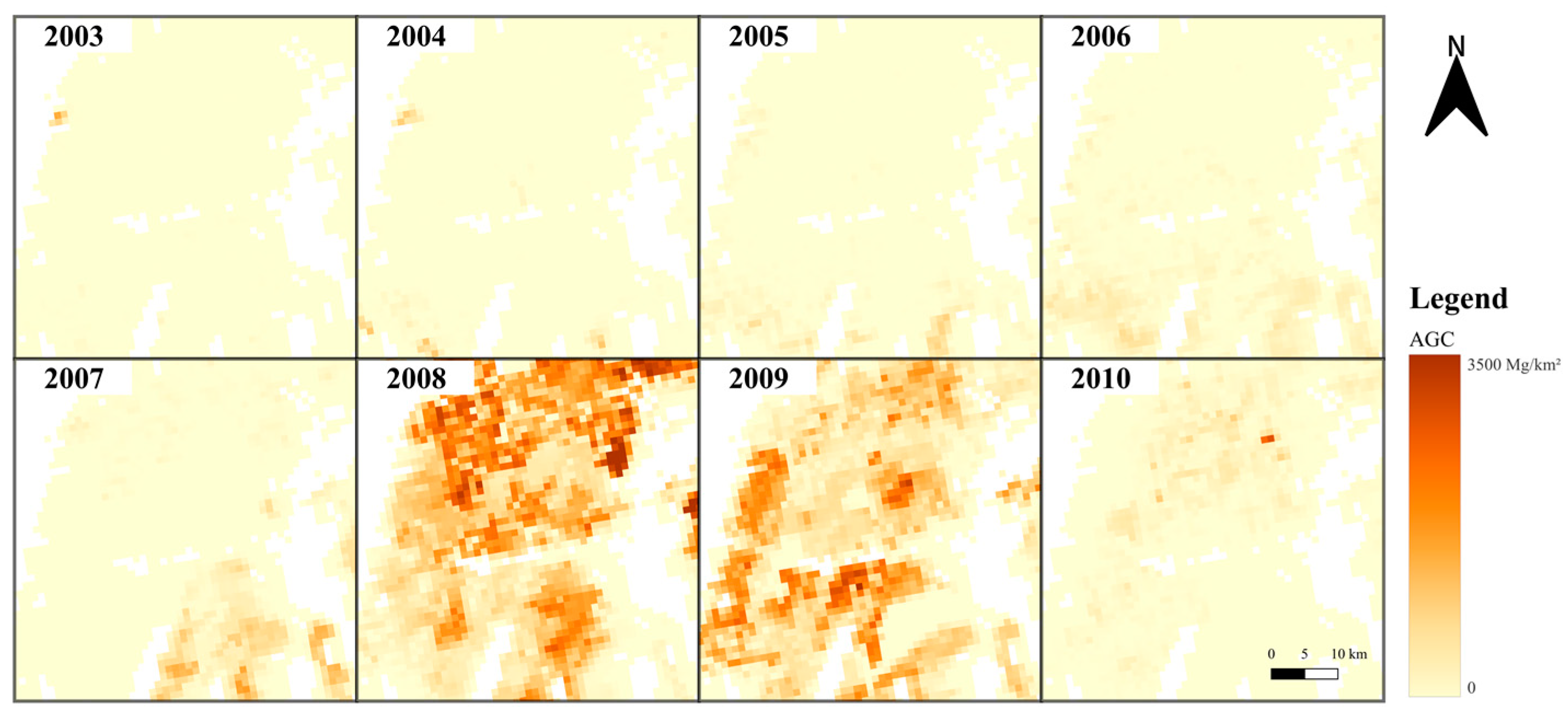

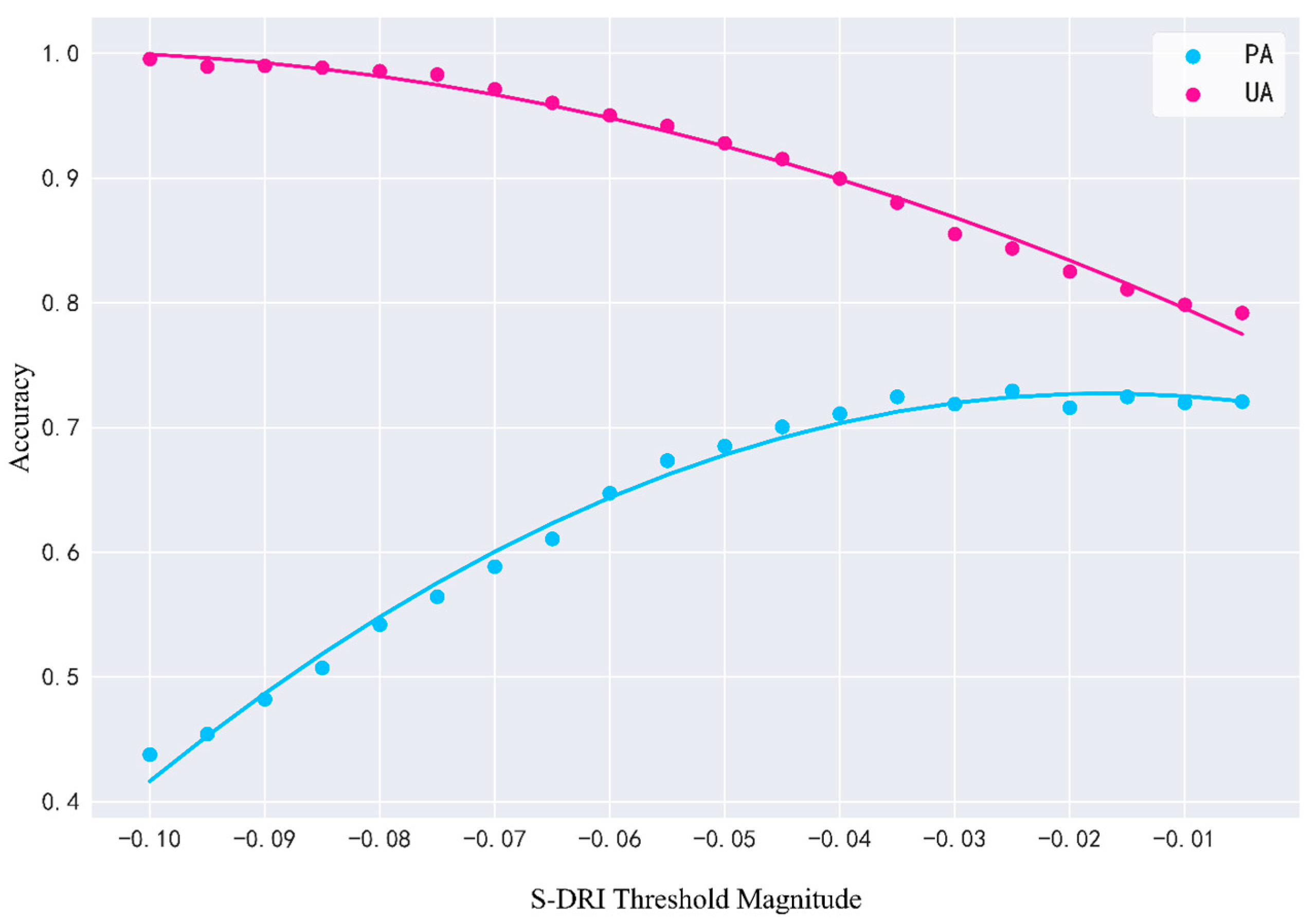

Optimizing the S-DRI threshold allows the detection of lower-magnitude disturbances while still rejecting most noise, striking a balance between sensitivity and specificity. To demonstrate this briefly, the predefined threshold was fine-tuned to evaluate its impact on the performance of our approach. We adjusted the S-DRI threshold from −0.1 to 0 in steps of 0.005 and evaluated the disturbance class PA and UA on the test dataset. As shown in Figure 12, when lower thresholds were used, low-magnitude disturbances and noise were removed, while high-magnitude disturbances with distinctive features were retained. This resulted in higher UA and lower PA. As the S-DRI threshold increased, more of the low-magnitude disturbances were retained, and the PA increased correspondingly. Meanwhile, higher thresholds allowed some noise to pass the constraint, resulting in lower UA. Once the threshold reaches a certain value (−0.035), most of the disturbances are detected, and the PA does not increase further even if the threshold is raised again. However, raising the threshold further allows more false disturbances to pass detection, resulting in a continued decrease in UA. The S-DRI threshold was the only parameter that needed to be adjusted when the algorithm was applied in different regions, and it can be tuned to remove low-magnitude disturbances and obtain a disturbance map of the required sensitivity.
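A threshold sweep of this kind can be sketched as follows. The sketch assumes that a pixel counts as disturbed when its index value is at or below the threshold, and it uses the standard definitions of PA (share of reference disturbances that are detected) and UA (share of detections that are true disturbances). The scores and labels are synthetic, and the function names are hypothetical; they are not the S-DRI values or the test data of this study.

```python
def producers_users_accuracy(scores, truth, threshold):
    """PA and UA of the disturbance class when score <= threshold is a detection."""
    tp = fp = fn = 0
    for score, disturbed in zip(scores, truth):
        detected = score <= threshold
        if detected and disturbed:
            tp += 1
        elif detected and not disturbed:
            fp += 1
        elif disturbed:
            fn += 1
    pa = tp / (tp + fn) if tp + fn else 0.0  # 1 - omission error
    ua = tp / (tp + fp) if tp + fp else 0.0  # 1 - commission error
    return pa, ua


def sweep(scores, truth, start=-0.1, stop=0.0, step=0.005):
    """Evaluate PA and UA at every threshold from start to stop, inclusive."""
    count = round((stop - start) / step) + 1
    results = []
    for i in range(count):
        threshold = round(start + i * step, 3)
        pa, ua = producers_users_accuracy(scores, truth, threshold)
        results.append((threshold, pa, ua))
    return results


# Synthetic index changes: strong events, subtle events, and noise on stable forest
scores = [-0.30, -0.22, -0.15, -0.06, -0.04, -0.03, -0.02, -0.01, 0.00, 0.02]
truth = [True, True, True, True, True, False, False, True, False, False]

for threshold, pa, ua in sweep(scores, truth):
    print(f"{threshold:+.3f}  PA={pa:.2f}  UA={ua:.2f}")
```

On this toy data the strictest threshold (−0.1) keeps only the three strong events, while a threshold of 0 recovers every reference disturbance at the cost of admitting noise. Plotting both curves against the threshold gives the kind of trade-off view that a threshold-tuning figure conveys.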

The accuracy reported in this study may be slightly underestimated because strict definitions were used, without allowing any leeway for timing adjustments [78]. Disturbances that occur after the vegetation growing period are not detected by our approach or LandTrendr until the following year (e.g., one fire in Oregon is not detectable with either approach). In the assessment of the results, temporal inconsistencies were treated as omissions in the disturbance detection; therefore, all three approaches have a low UA for the no-change class. In addition, GFC targets only stand-replacing disturbances, whereas the non-stand-replacing disturbances in the test data were also included in the accuracy calculation. This is most evident in Montana, where GFC correctly detected only 31 of 137 changed pixels, resulting in a lower OA.

When mapping forest disturbance, our approach still has some limitations. The S-DRI threshold adjustment (Figure 12) shows that even with a higher S-DRI threshold, the approach reaches only about 70–75% PA, which is caused by omissions in the self-attention model or by limitations of the spectral index. Because low-magnitude disturbance areas are rare and difficult to interpret visually, the forest disturbance agents in the randomly generated training samples mainly comprise harvest, wildfire, and conversion [79]. Improving data quality and balancing the samples can enhance the capability of time series classification models to detect low-magnitude disturbances (e.g., thinning, pests, and diseases), although this requires more effort. Furthermore, the reduction in forest cover caused by factors such as pests, diseases, and droughts persists for several years. Subsequent studies will need to develop new conceptual definitions that describe the entire disturbance process. Cohen et al. [26] showed that using multiple spectral bands/indices is very beneficial for forest disturbance detection and may solve the problem that some disturbances cannot be monitored using a single band or index (e.g., because the NBR values of water and forest are similar, it is difficult to detect disturbances in which forest is converted into water). Multiple DL-based multivariate time series learning frameworks have been proposed, and using multiple indices/bands to detect forest change should be considered in a future study [42]. For the later years of our forest change analysis (2018–2020), the accuracy of the results declined progressively in all three models. This issue arose because spectral changes over multiple years remained an essential criterion for determining disturbance, but the time series constructed from composite images did not contain sufficient data at the end of the detection period. The use of multi-source remote sensing data and a near-real-time change monitoring approach may solve this problem and will be the focus of further work [58].