1. Introduction

With the rapid development of radar technology, researchers in many countries are applying it to an increasing range of fields [1,2,3]. Synthetic Aperture Radar (SAR), a high-resolution imaging radar, was first proposed in the 1950s [4]. Compared with common passive imaging sensors such as infrared and optical sensors, SAR imaging is more stable and less affected by background factors [5]. In addition, SAR combines high resolution with a wide field of view, which allows it to detect small vessels while monitoring a large area [6]. Moreover, SAR operates in all weather and lighting conditions, enabling fast acquisition of real-time ship positions [7]. These advantages make SAR an important technological support for maritime safety monitoring and maritime transportation management [8].

In recent years, numerous methods for detecting ships in SAR images have been proposed. These methods can be broadly categorized into two groups based on their feature design approaches: traditional methods and deep learning-based methods.

Most traditional ship detection algorithms preprocess SAR images to enhance the contrast between ships and the background and then use hand-crafted features to identify ship targets [9,10,11]. These features include geometric and image properties, histograms of oriented gradients, and scattering features. The Constant False Alarm Rate (CFAR) algorithm and its variants, such as Greatest Of CFAR, Cell Averaging CFAR, Order Statistic CFAR, and Smallest Of CFAR, are among the most commonly employed methods [12,13,14,15]. These methods estimate a detection threshold from the statistics of the clutter surrounding each test cell and compare the cell's intensity with that threshold; if the intensity exceeds the threshold, a target is declared. Some researchers have also exploited the difference in gray values between ships and background regions to detect ships at the superpixel level. For example, Liu et al. [16] used superpixel segmentation to separate sea and land areas, suppressing land interference, and then applied CFAR to detect ships. Wang et al. [17] used a superpixel-based local contrast measure computed with simple linear iterative clustering and patch-based intensity dissimilarity measures. Li et al. [18] proposed a superpixel-based target detection method for SAR images that exploits statistical differences in intensity distributions between target and clutter superpixels and integrates global and local contrasts, achieving better detection performance than backscattering-based methods. These methods require an accurate distribution model of sea clutter and carefully chosen parameters to perform well. However, the complex and variable ocean environment makes it difficult to build such a model [19].
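The thresholding logic of Cell Averaging CFAR can be sketched in a few lines. This is a minimal 1-D illustration, not the detector of any cited work: it assumes square-law (power) samples with exponentially distributed clutter, for which the scale factor α = N(P_fa^(-1/N) - 1) over N training cells gives the requested false-alarm probability. The function name `ca_cfar_1d` and the window sizes are hypothetical choices; practical SAR detectors slide a 2-D window over the image with the same logic.

```python
def ca_cfar_1d(power, num_train=8, num_guard=2, pfa=1e-3):
    """Cell-averaging CFAR on a 1-D list of square-law (power) samples.

    num_train and num_guard are counted on EACH side of the cell under test (CUT).
    Assumes exponentially distributed clutter, for which
    alpha = N * (pfa**(-1/N) - 1) yields the requested false-alarm probability.
    Returns one boolean per sample; edge cells without a full window are never flagged.
    """
    n = 2 * num_train                        # total number of training cells
    alpha = n * (pfa ** (-1.0 / n) - 1.0)    # threshold scale factor
    half = num_train + num_guard
    detections = [False] * len(power)
    for i in range(half, len(power) - half):
        # Training cells on both sides, skipping the guard cells adjacent to the CUT
        lead = power[i - half:i - num_guard]
        lag = power[i + num_guard + 1:i + half + 1]
        noise = (sum(lead) + sum(lag)) / n   # local clutter power estimate
        detections[i] = power[i] > alpha * noise
    return detections


# Homogeneous clutter of unit power with one bright, ship-like return
profile = [1.0] * 40
profile[20] = 50.0
hits = ca_cfar_1d(profile)
print([i for i, d in enumerate(hits) if d])  # [20]
```

The guard cells keep energy from an extended target out of the clutter estimate; the other variants named above differ mainly in how they combine the leading and lagging windows (greater-of, smaller-of) or rank the training cells (order statistic).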

With the rapid development of computer technology, deep learning has been widely applied in various fields [20,21,22]. In object detection, deep learning-based models automatically extract target features through convolutional neural networks, reducing manual involvement and yielding more accurate features [23]. In SAR images with complex backgrounds in particular, deep learning-based detectors extract and recognize targets more effectively than traditional algorithms [24]. Deep learning-based object detection algorithms can be divided into two-stage and one-stage algorithms according to whether they generate region proposals on the feature maps. R-CNN, Fast R-CNN, and Faster R-CNN are typical two-stage algorithms; they achieve high detection accuracy but require substantial computing power and long inference times [25,26,27]. One-stage algorithms include You Only Look Once (YOLO) and the Single Shot MultiBox Detector (SSD). They infer faster than two-stage algorithms, but omitting the region proposal step typically costs some accuracy [28,29,30,31].

Hu et al. [32] proposed the Squeeze-and-Excitation (SE) block, which brought channel attention into image recognition. SE weights the channels of a convolutional neural network so that the network focuses on the most important channel features. Woo et al. [33] proposed the Convolutional Block Attention Module (CBAM), which suppresses non-object features in the image by combining a channel attention mechanism with a spatial attention mechanism. Wang et al. [34] showed that channel weights can be generated more efficiently by letting each channel interact with an appropriate number of adjacent channels.

Lin et al. [35] observed that in convolutional neural networks, shallow features carry better positional information while deep features carry better semantic information, and proposed the Feature Pyramid Network (FPN) to fuse shallow and deep features. To better balance semantic and positional information, Wang et al. [36] constructed the Path Aggregation Network (PANet) by adding a bottom-up feature fusion path to FPN. Residual structures are another way to strengthen the representational power of Convolutional Neural Networks (CNNs). For example, Bochkovskiy et al. [37] adopted Cross Stage Partial Darknet53 (CSPDarknet53) as the backbone of their object detection network; CSPDarknet53 alleviates the loss of small-object information by introducing residual connections. They also added spatial pyramid pooling (SPP) to enhance the network's ability to detect multi-scale objects. Li et al. [38] used residual structures to preserve more object information in the deep layers of DetNet. Chen et al. [39] proposed Atrous Spatial Pyramid Pooling (ASPP), which replaces the pooling operations in SPP with dilated convolutions to reduce the loss of object information.
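The channel-weighting idea behind SE [32] and ECA [34] can be illustrated with a minimal NumPy sketch for a single feature map of shape (C, H, W). SE squeezes each channel by global average pooling, passes the descriptor through a two-layer bottleneck, and rescales the channels with sigmoid gates; ECA drops the bottleneck and instead applies a 1-D convolution across k neighboring channels, with k chosen from C. Random weights stand in for learned parameters, the reduction ratio r = 4 is chosen for the toy size (the SE paper's default is 16), and γ = 2, b = 1 follow the ECA paper; all function names are hypothetical.

```python
import math

import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def se_block(x, w1, w2):
    """Squeeze-and-Excitation on x of shape (C, H, W).

    w1: (C // r, C) and w2: (C, C // r) stand in for learned FC weights.
    """
    z = x.mean(axis=(1, 2))                  # squeeze: one value per channel
    s = sigmoid(w2 @ np.maximum(w1 @ z, 0))  # excitation: FC-ReLU-FC-sigmoid
    return x * s[:, None, None], s           # rescale every channel


def eca_kernel_size(c, gamma=2, b=1):
    """ECA's adaptive kernel size: |log2(C)/gamma + b/gamma| rounded to an odd integer."""
    t = int(abs(math.log2(c) / gamma + b / gamma))
    return t if t % 2 else t + 1


def eca_block(x, kernel):
    """ECA: 1-D convolution over the pooled channel descriptor, no bottleneck."""
    z = x.mean(axis=(1, 2))
    k = len(kernel)
    z_pad = np.pad(z, k // 2)                # zero-pad so the output keeps C entries
    s = sigmoid(np.array([z_pad[i:i + k] @ kernel for i in range(len(z))]))
    return x * s[:, None, None], s


rng = np.random.default_rng(0)
c, r = 16, 4
x = rng.standard_normal((c, 8, 8))
y_se, s_se = se_block(x, rng.standard_normal((c // r, c)), rng.standard_normal((c, c // r)))
k = eca_kernel_size(c)
y_eca, s_eca = eca_block(x, rng.standard_normal(k))
print(y_se.shape, k)  # (16, 8, 8) 3
```

Both modules derive their weights purely from pooled statistics of the input feature map, so anything that distorts those statistics also distorts the weights.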

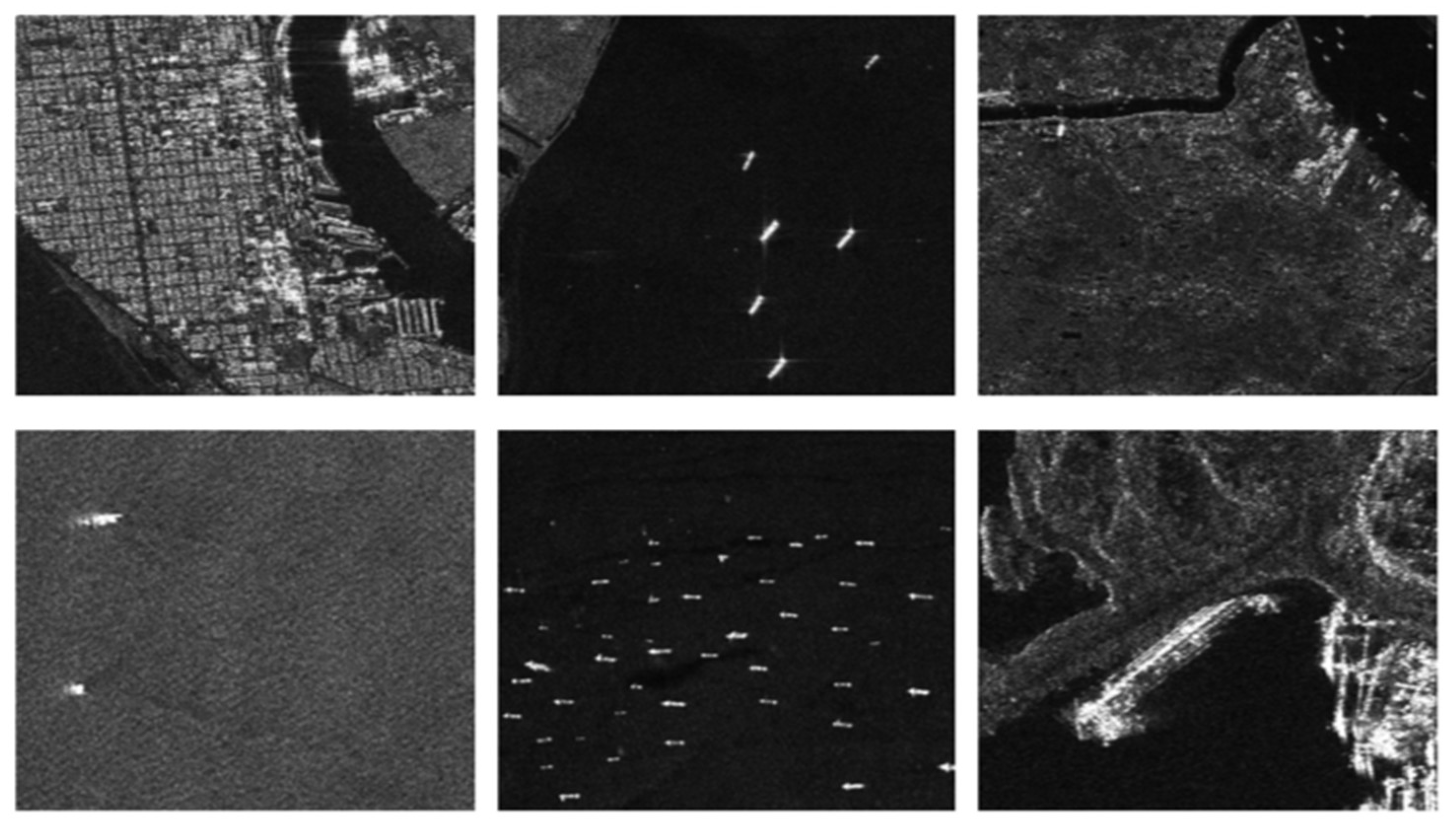

To achieve better SAR ship detection performance, researchers have gradually applied deep learning-based object detection methods to this field. Deep learning-based ship detection methods require large amounts of training data; however, in the early stage of SAR ship detection research, the available datasets were small. Lu et al. [40] combined data augmentation with transfer learning to improve the accuracy of ship detection models trained on a relatively small dataset, achieving a 1–3% improvement. Rostami et al. [41] proposed transferring knowledge from the electro-optical domain to the SAR domain by learning a shared, domain-invariant embedding space, so that electro-optical images can be used to train SAR object detection models. Zhang et al. [42] proposed a few-shot multi-class ship detection algorithm with an attention feature map and a multi-relation detector. Truong et al. [43] constructed a convolutional neural network model using transfer learning. Zhang et al. [44] built the SAR Ship Detection Dataset (SSDD), the first publicly available dataset for SAR ship detection. Wei et al. [45] constructed the High-Resolution SAR Images Dataset (HRSID) for ship detection and applied residual structures and feature pyramid networks to build HR-SDNet. Some researchers currently focus on making models lightweight. For example, Jin et al. [46] introduced atrous convolution kernels to reduce the number of parameters while keeping the receptive field unchanged. Ma et al. [47] proposed a compact detection model that uses lasso regularization to drive unimportant feature parameters to zero, greatly reducing the parameters of You Only Look Once V4 (YOLOv4).
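The parameter saving of atrous (dilated) kernels reduces to simple arithmetic: a k × k kernel with dilation rate d spans k + (k - 1)(d - 1) pixels per side while keeping only k² weights per input/output channel pair. The helpers below are a hypothetical illustration with an assumed 256-channel layer, not the configuration used by Jin et al. [46]; they compare a dense 5 × 5 kernel with a 3 × 3 kernel of dilation 2 covering the same extent.

```python
def effective_kernel(k, d):
    """Side length spanned by a k x k kernel with dilation rate d."""
    return k + (k - 1) * (d - 1)


def conv_params(k, c_in, c_out, bias=True):
    """Learnable parameters of a standard k x k convolution layer."""
    return k * k * c_in * c_out + (c_out if bias else 0)


# Dense 5x5 kernel vs. 3x3 kernel with dilation 2: both span a 5x5 window
for k, d in [(5, 1), (3, 2)]:
    print(k, d, effective_kernel(k, d), conv_params(k, 256, 256))
# 5 1 5 1638656
# 3 2 5 590080

# Rates often used in ASPP-style designs and the extent a 3x3 kernel then covers
print([effective_kernel(3, d) for d in (6, 12, 18)])  # [13, 25, 37]
```

The dilated kernel samples its window sparsely (every d-th pixel), so the same extent is covered with about 36% of the parameters here; the price is that intermediate pixels are skipped, which is one reason several rates are usually combined.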

To deal with noise and background interference in SAR images, researchers have incorporated attention mechanisms into SAR ship detection. For example, Cui et al. [48] proposed a dense attention pyramid network that embeds CBAM into FPN to weight feature maps of different scales, highlighting ship features. Zhang et al. [49] proposed replacing the standard convolutions in CBAM with dilated convolutions to suppress background information while reducing the number of parameters. Wang et al. [50] integrated the Spatial Shuffle-Group Enhance (SSE) attention module into the detection network to alleviate interference from complex environments. Yang et al. [51] introduced the Coordinate Attention Module (CAM), which uses two one-dimensional global pooling operations to encode features along the vertical and horizontal directions, suppressing clutter while focusing further on ship position information. Because attention mechanisms suppress non-ship information by assigning different weights to different regions of the feature maps, the correctness of the generated weights strongly affects ship detection performance. However, attention mechanisms such as CBAM were originally designed for optical images and do not account for the influence of complex backgrounds and strong noise in SAR images on weight generation.

To address the problem of multi-scale ship detection, researchers have proposed approaches that focus on feature fusion or increasing the receptive field of the detection model. For example, Li et al. [

52] proposed a Hierarchical Selective Filtering (HSF) layer to extract feature maps using three convolution kernels of different sizes. This design is similar to SPP, which increases the receptive field of the ship detection model. Zhu et al. [

53] introduced FPN into the SAR ship detection model. Zhang et al. [

54] proposed four different feature fusion methods based on FPN to alleviate the conflict between ship semantic information and position information in convolutional neural networks. Gao et al. [

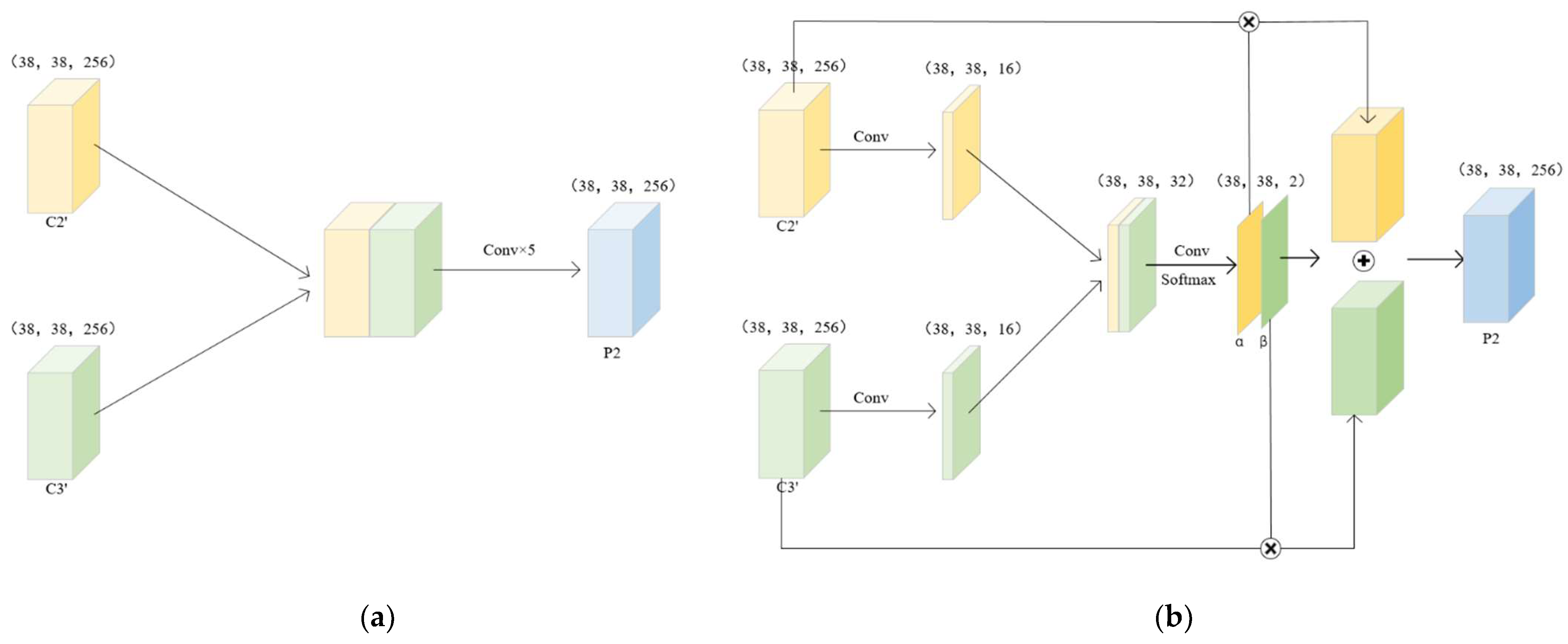

55] improved Path Aggregation Network (PANet). First, the feature fusion network was used to fuse the three-layer features of the backbone output. Then the information between different feature layers was further fused through variable convolution. However, these feature fusion methods only directly add adjacent features without considering the contribution of different input features to the output feature. Therefore, more sophisticated feature fusion methods are needed to improve the performance of the model.
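The difference between plain addition and weighted fusion can be made concrete. The sketch below assumes two adjacent pyramid levels already projected to the same channel count and contrasts an FPN-style top-down step (nearest-neighbor 2× upsampling of the coarser map followed by addition) with the fast normalized weighted fusion used in BiFPN (EfficientDet), where non-negative scalars, learned in practice, set each input's contribution. BiFPN's scheme is swapped in purely to illustrate the general idea; it is not the fusion module proposed in this paper, and the function names and toy values are hypothetical.

```python
import numpy as np


def upsample2x(x):
    """Nearest-neighbor 2x upsampling of a (C, H, W) map."""
    return x.repeat(2, axis=1).repeat(2, axis=2)


def fpn_add(fine, coarse):
    """FPN-style top-down step: both inputs contribute with the same implicit weight 1."""
    return fine + upsample2x(coarse)


def weighted_fuse(inputs, raw_weights, eps=1e-4):
    """BiFPN-style fast normalized fusion.

    ReLU keeps the weights non-negative, and dividing by their sum keeps the
    output on the scale of the inputs. raw_weights would be learned in practice.
    """
    w = np.maximum(np.asarray(raw_weights, dtype=float), 0.0)
    w = w / (w.sum() + eps)
    return sum(wi * xi for wi, xi in zip(w, inputs)), w


fine = np.ones((8, 4, 4))          # e.g. shallow level: finer localization
coarse = np.full((8, 2, 2), 3.0)   # e.g. deep level: stronger semantics
print(fpn_add(fine, coarse)[0, 0, 0])  # 4.0
fused, w = weighted_fuse([fine, upsample2x(coarse)], raw_weights=[0.9, 0.3])
print(np.round(w, 3))  # [0.75 0.25]
```

With plain addition the network cannot down-weight an input level; with weighted fusion, training can shift the balance between levels through a handful of extra scalars.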

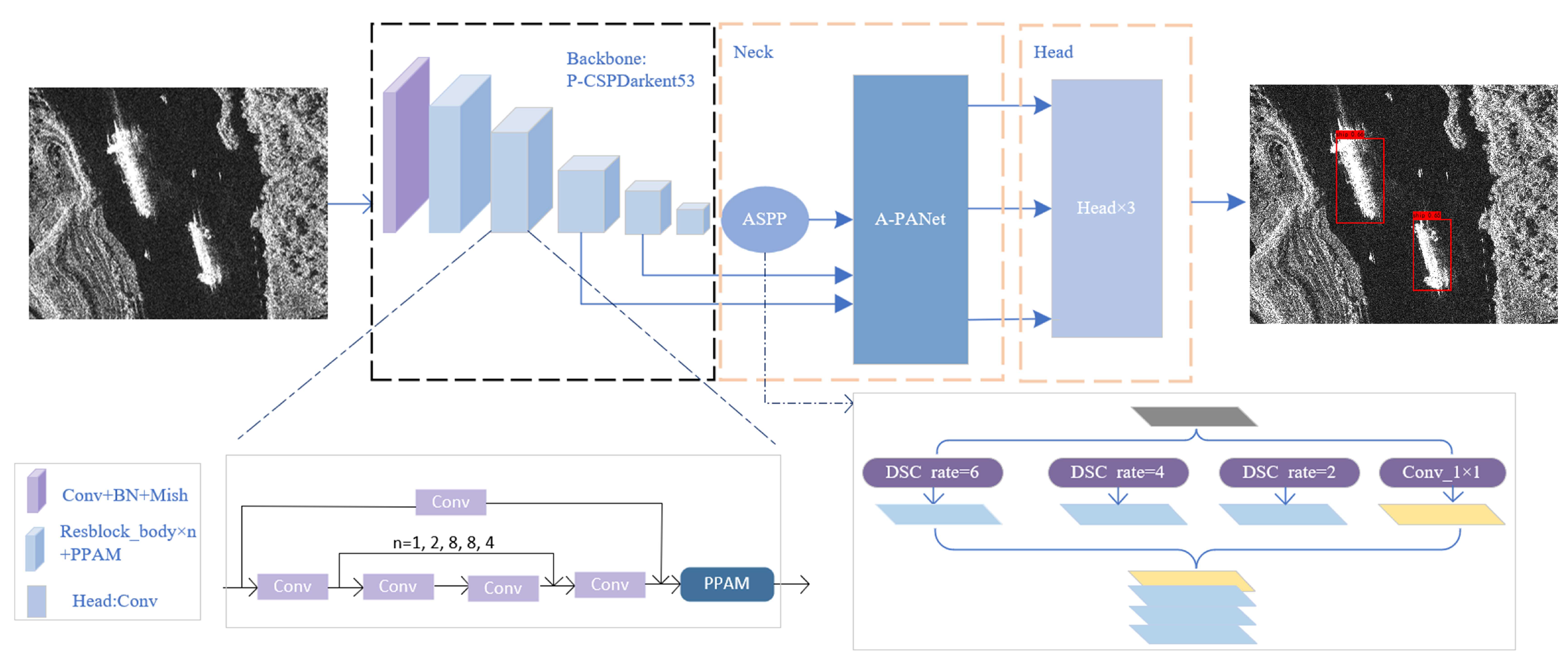

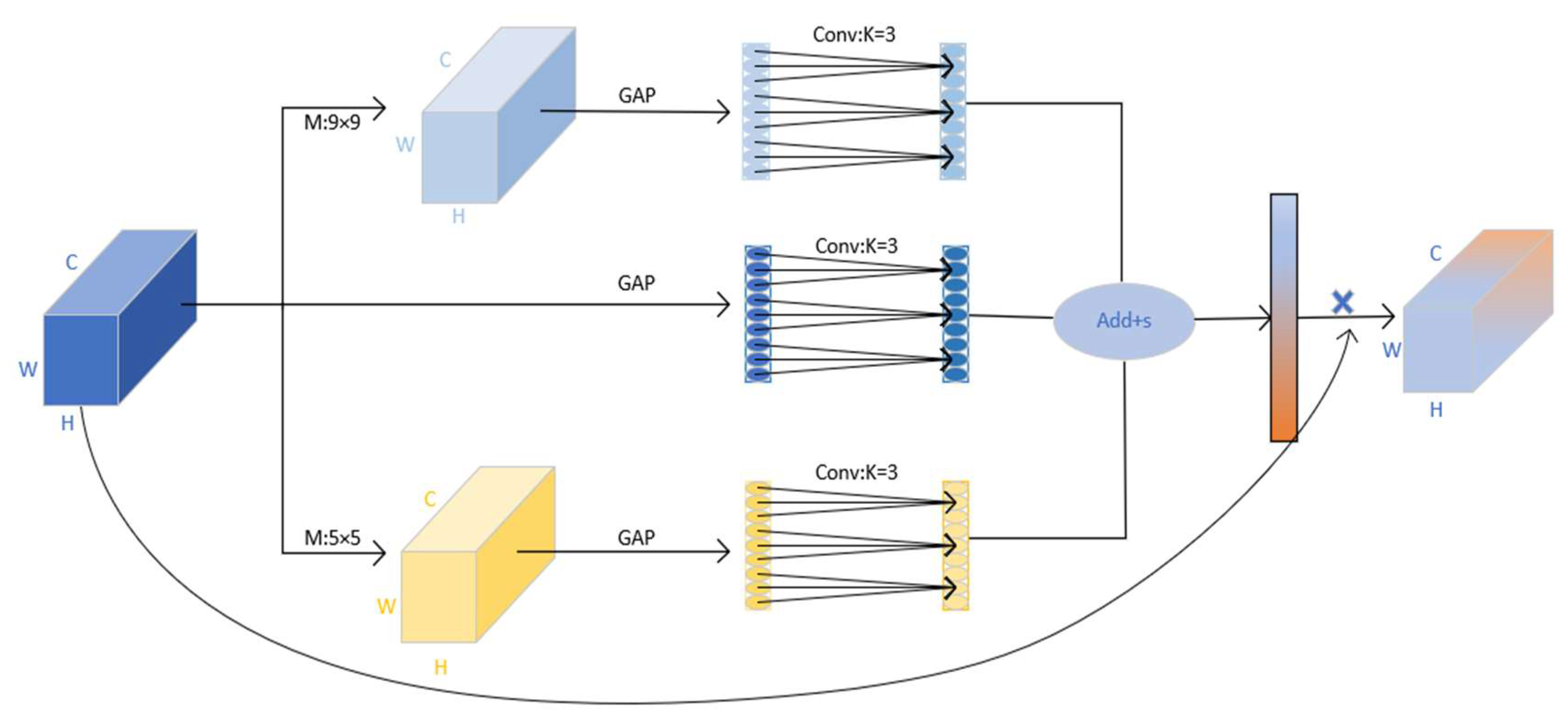

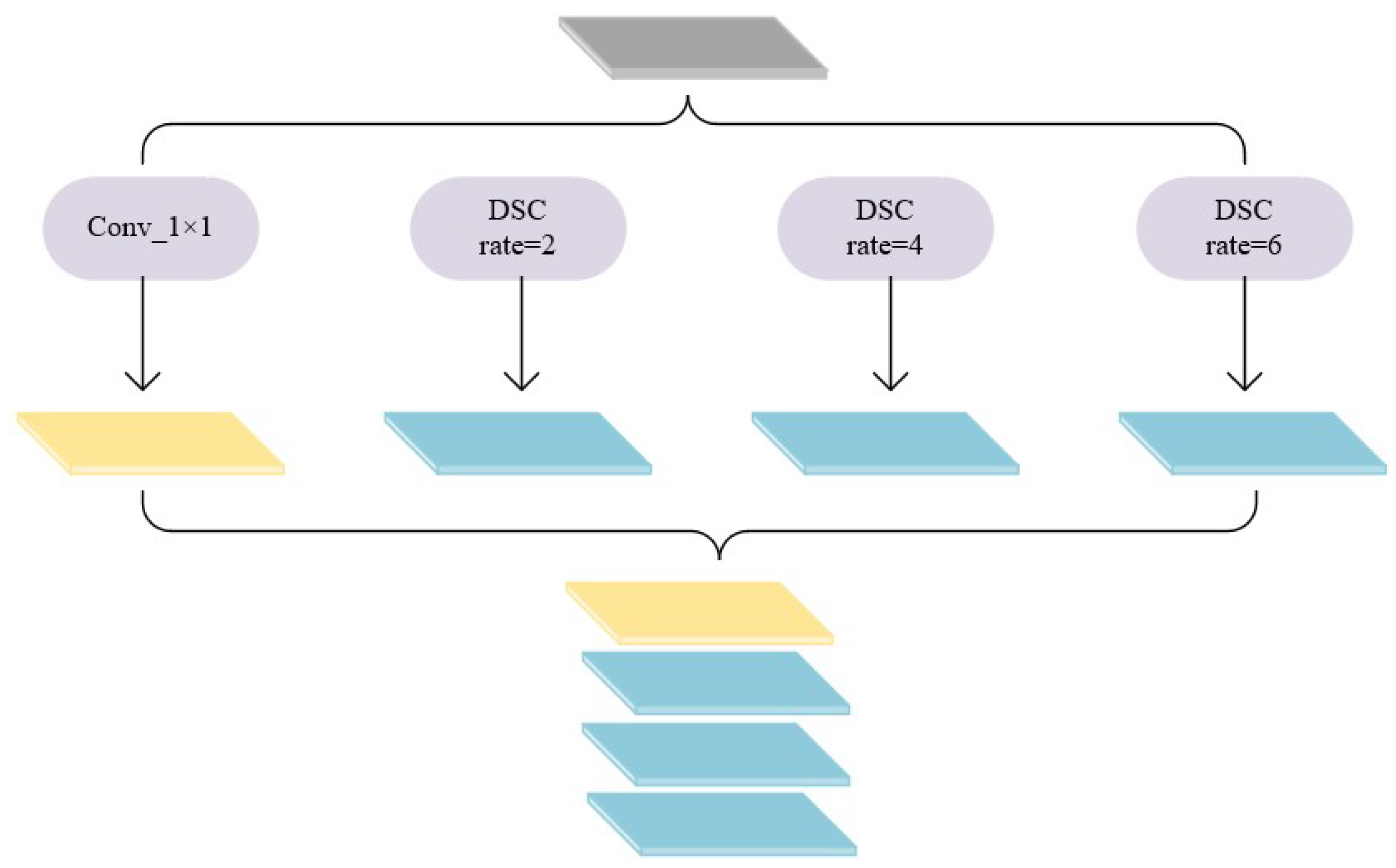

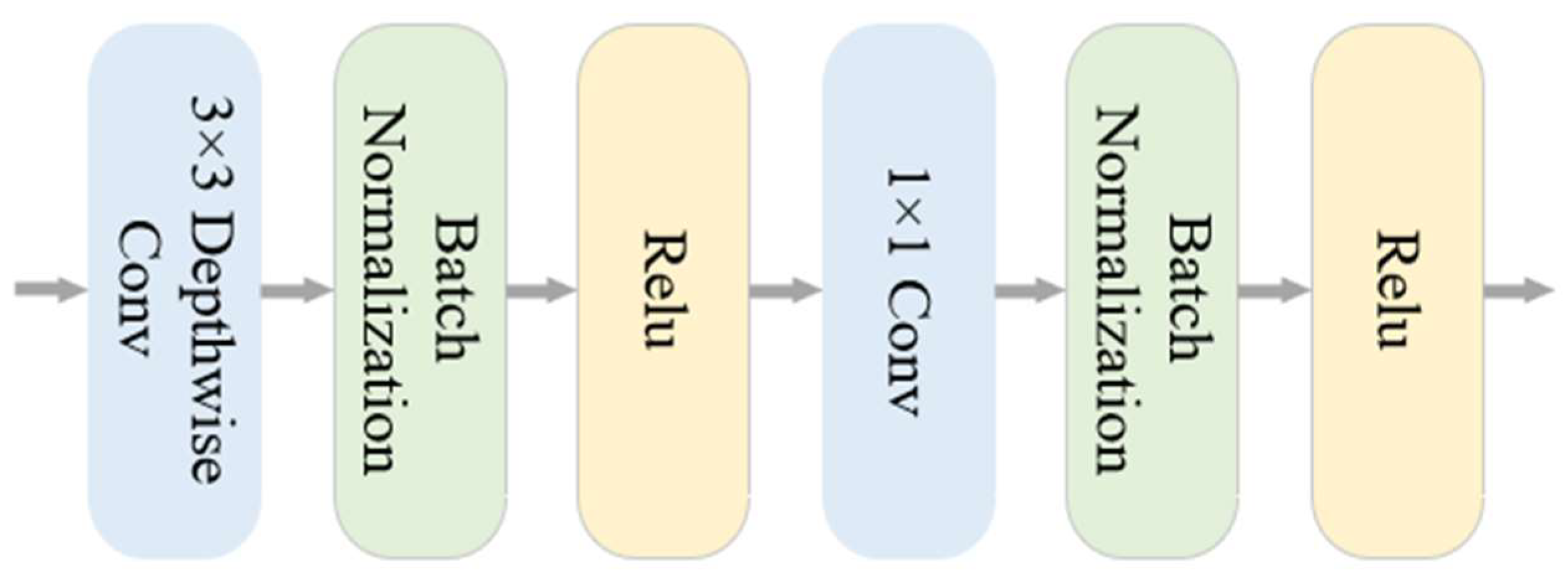

Based on the above analysis, this paper first constructs the Pyramid Pooling Attention Module (PPAM) to address the fact that attention mechanisms such as CBAM, SSE, and CAM do not consider the impact of non-ship information in SAR images on weight generation. Second, the Adaptive Feature Balancing Module (AFBM) is constructed to address the problem that FPN and other feature fusion methods directly combine adjacent features without considering the different contributions of the input features to the output feature. In addition, to further enhance the model's ability to detect ships at multiple scales, the Atrous Spatial Pyramid Pooling (ASPP) structure is introduced. Finally, we combine these three modules with CSPDarknet53 to build a multi-scale ship detection model for complex SAR backgrounds, called the Pyramid Pooling Attention Network (PPA-Net). The main contributions of this paper are as follows:

- (1)

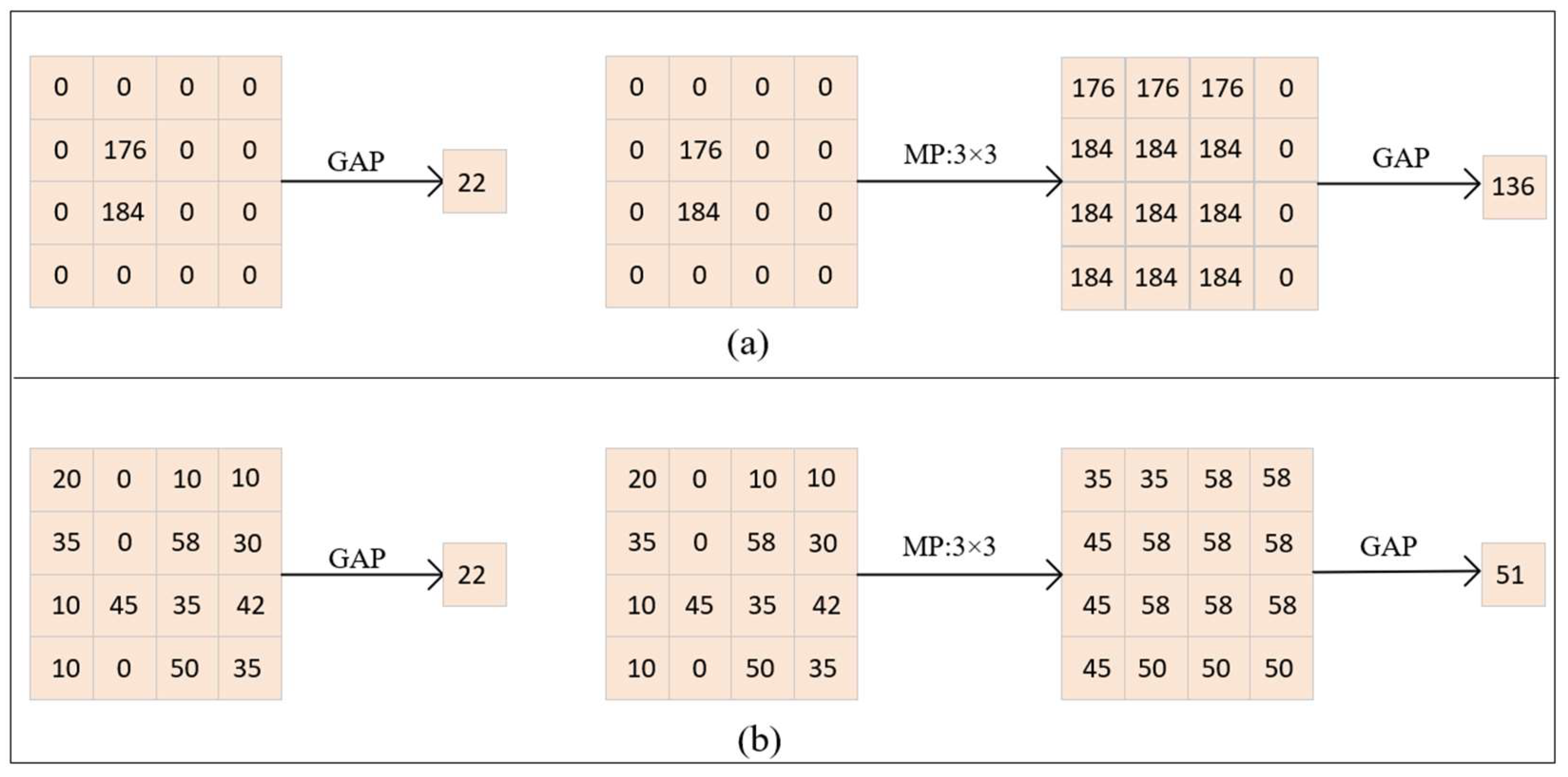

By analyzing the limitations of existing attention mechanisms in SAR ship detection, we propose a new attention module called PPAM. This module utilizes a pooling structure to reduce the impact of noise and background information on weight generation. Correct weight generation is more conducive to the suppression of noise and background information by attention mechanisms;

- (2)

We designed AFBM, in which we propose using adaptive weighted feature fusion to selectively utilize semantic and positional information contained in different feature layers to improve the performance of the ship detection model;

- (3)

An ASPP is introduced to enrich the receptive field while reducing information loss. This structure is particularly adapted to the detection of multi-scale ships.
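A minimal NumPy sketch of the ASPP idea [39]: parallel 3 × 3 convolutions with different dilation rates view the same input at several receptive-field sizes, and their outputs are stacked along the channel axis. The single-channel input, the all-ones kernels, the unit impulse, and the rates (1, 2, 4) are assumptions chosen so that the growing receptive field is visible on a 15 × 15 toy map; DeepLab's ASPP uses larger rates (e.g. 6, 12, 18) together with a 1 × 1 branch and image-level pooling. Function names are hypothetical.

```python
import numpy as np


def dilated_conv2d(x, kernel, rate):
    """'Same'-padded 2-D dilated convolution (cross-correlation) of an (H, W) map."""
    k = kernel.shape[0]
    pad = rate * (k // 2)                    # keeps the output at H x W
    xp = np.pad(x, pad)
    h, w = x.shape
    out = np.zeros((h, w))
    for a in range(k):
        for b in range(k):
            # Each kernel tap reads a copy of the input shifted by multiples of `rate`
            out += kernel[a, b] * xp[a * rate:a * rate + h, b * rate:b * rate + w]
    return out


def aspp(x, kernels, rates):
    """Parallel dilated branches stacked along a new channel axis."""
    return np.stack([dilated_conv2d(x, k, r) for k, r in zip(kernels, rates)])


x = np.zeros((15, 15))
x[7, 7] = 1.0                                # unit impulse
rates = (1, 2, 4)
feats = aspp(x, [np.ones((3, 3))] * len(rates), rates)
spans = []
for r, f in zip(rates, feats):
    rows = np.nonzero(f.any(axis=1))[0]      # rows reached by the impulse response
    spans.append(int(rows.max() - rows.min() + 1))
print(spans)         # [3, 5, 9]
print(feats.shape)   # (3, 15, 15)
```

Every branch uses the same nine weights, yet the response to a single bright pixel spreads over 3, 5, and 9 pixels per side, which is how ASPP gathers multi-scale context without pooling away detail.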

The rest of the paper is structured as follows. Section 2 presents the materials and methods, Section 3 reports the experiments and comparisons with previous work, and Section 4 discusses the experimental results. Finally, Section 5 summarizes the paper and suggests directions for future work.

4. Discussion

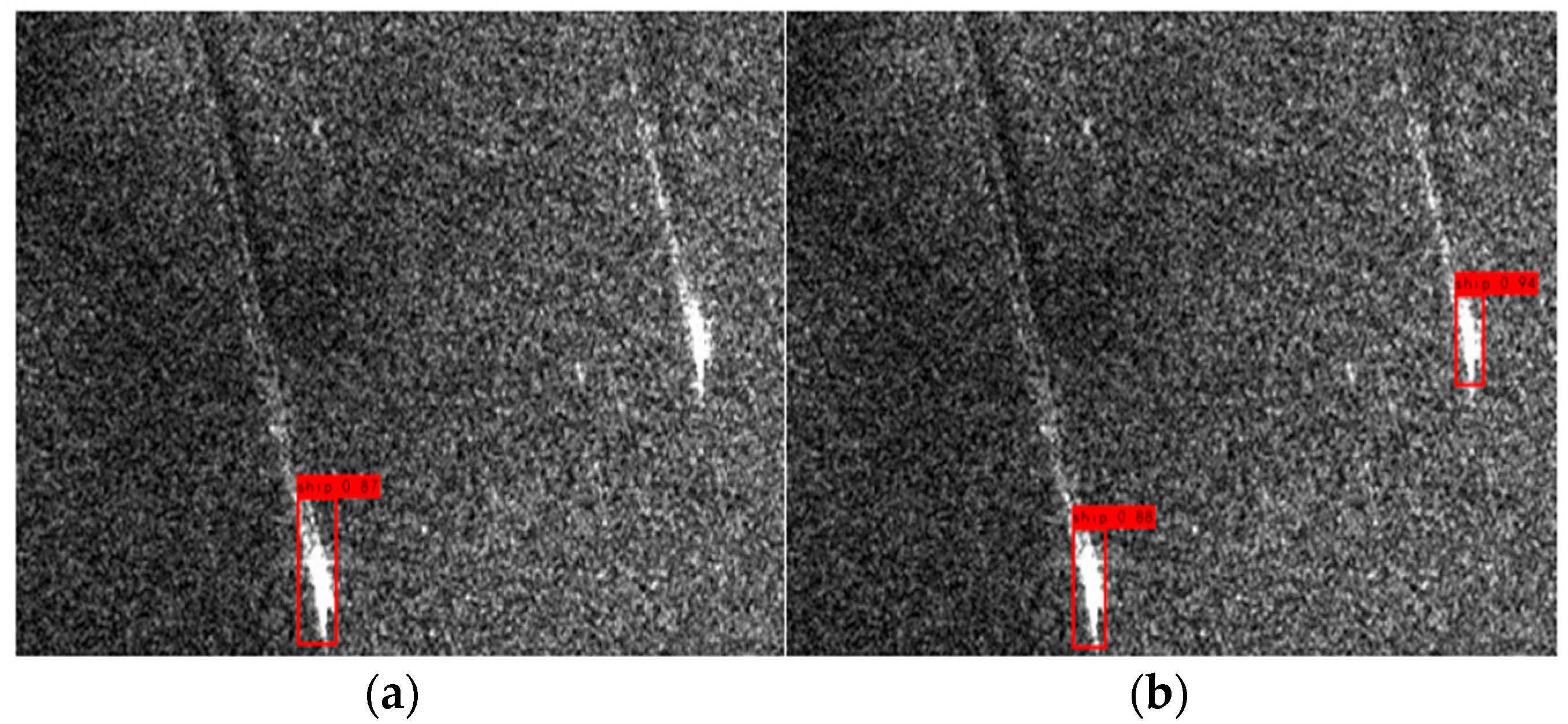

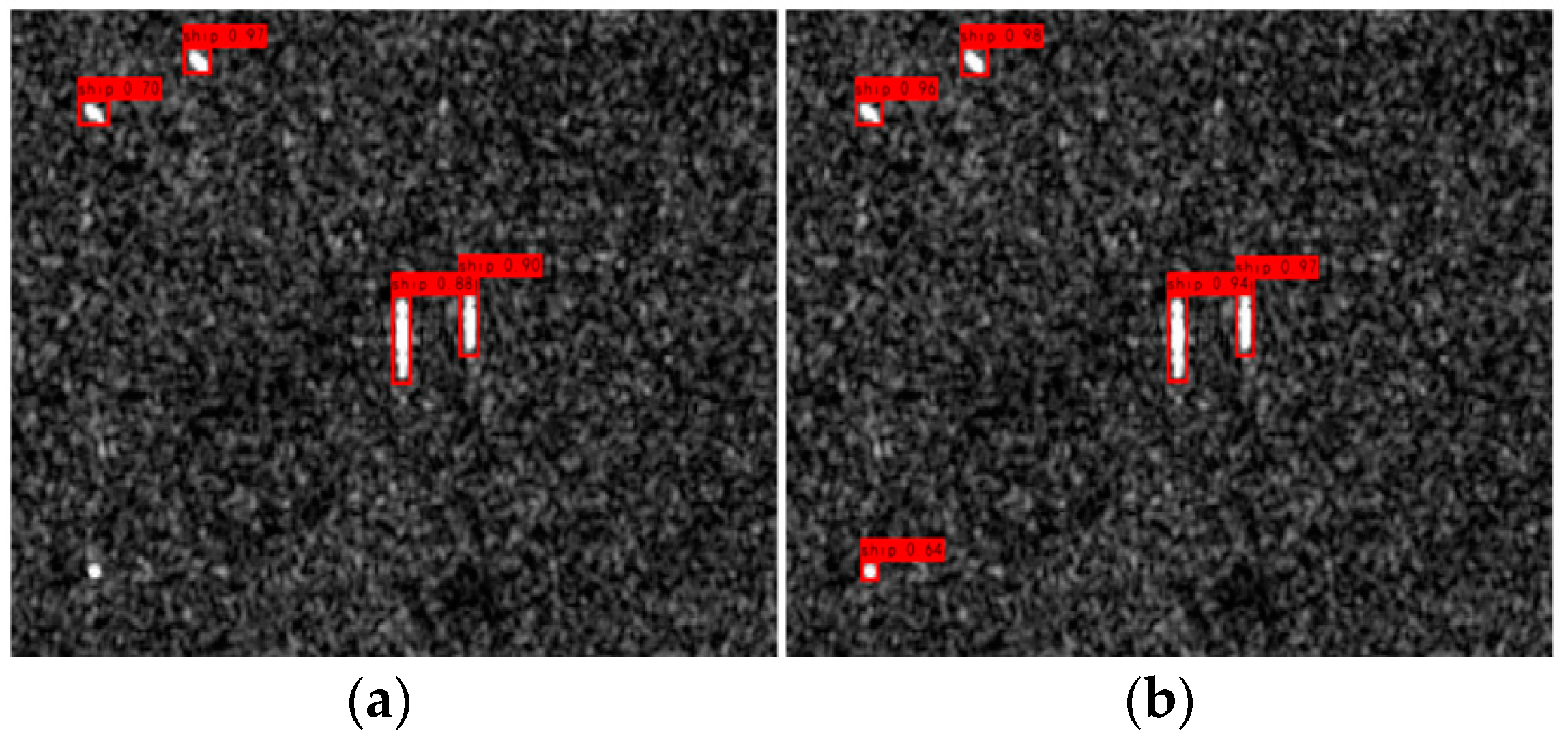

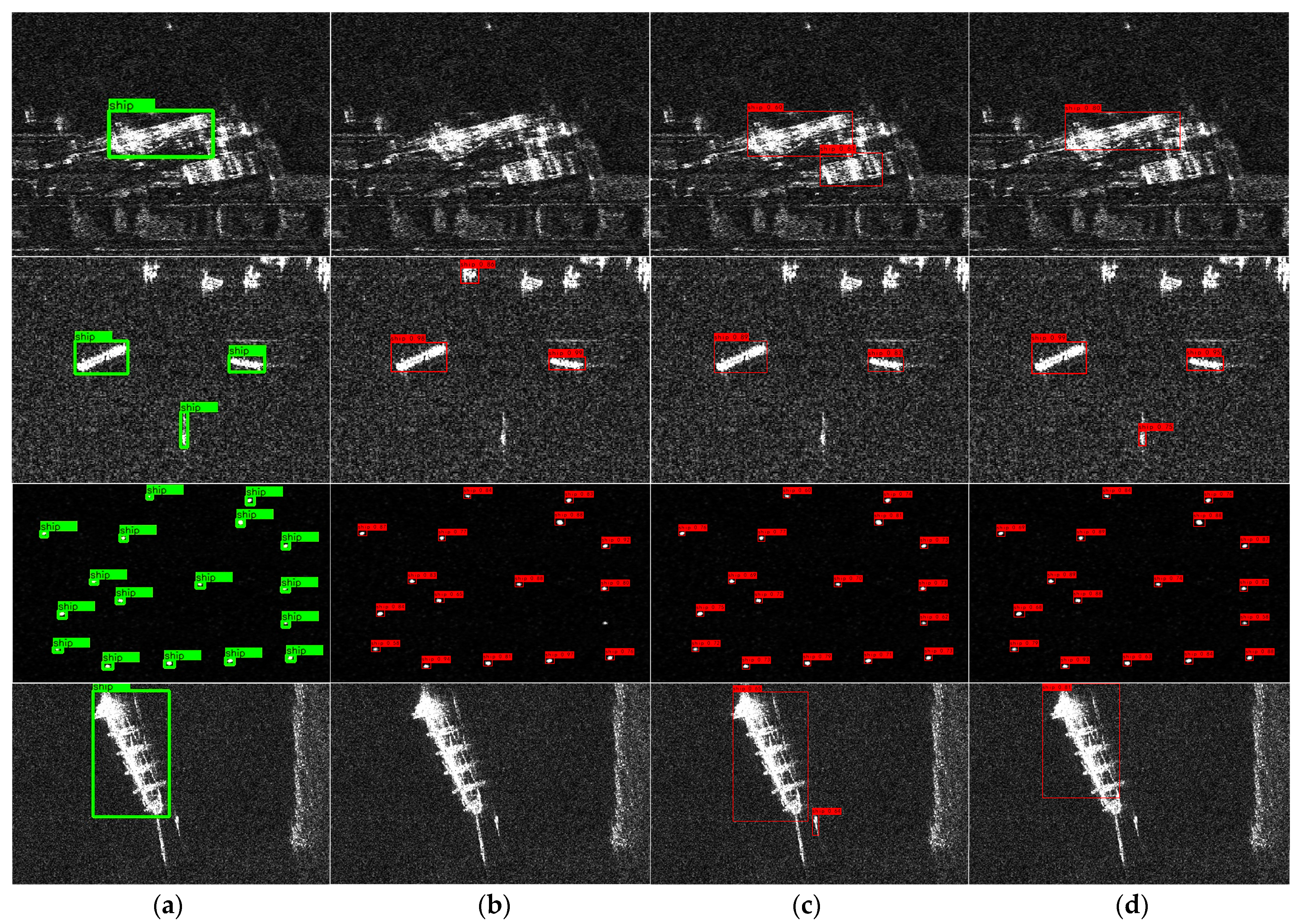

This study proposes two novel modules, PPAM and AFBM, to improve the performance of SAR ship detection models. Our experiments on the SSDD dataset demonstrate that these two modules outperform commonly used attention and feature fusion modules. First, we evaluate PPAM by comparing it with SE, ECA, and CBAM: PPAM achieves 1.25–1.88% higher ship detection accuracy than these three modules. The improvement of PPAM over ECA can be attributed to the pooling operation, which suppresses the impact of noise on weight generation. This finding supports the issue raised earlier that noise can affect weight generation in attention mechanisms. However, compared with CBAM, the recall of PPAM decreases by 0.08%, possibly because the pooling operation damages some ship features. Second, we evaluate AFBM by comparing it with other commonly used feature fusion modules: AFBM achieves 1.41% and 2.56% higher ship detection accuracy than PANet and FPN, respectively. This advantage stems from AFBM's ability to balance the semantic and positional information of ships through weighted feature fusion. The limitation of AFBM is the additional computational cost of the convolutional operations used to learn the contribution of each feature map to the output features automatically. Furthermore, the comparative experiments indicate that improvements to the ship detection model are more evident on larger datasets, because larger datasets provide more diverse ship appearances, including variations in size, shape, and orientation. With more data, the model can better learn the complex features and patterns that distinguish ships from the background, which improves its accuracy.

In summary, our proposed PPAM and AFBM achieve state-of-the-art performance in SAR ship detection. Although they have some limitations relative to commonly used attention and feature fusion modules, their advantages outweigh these limitations. Our future work will focus on optimizing these modules to address their limitations and further improve the performance of ship detection models.