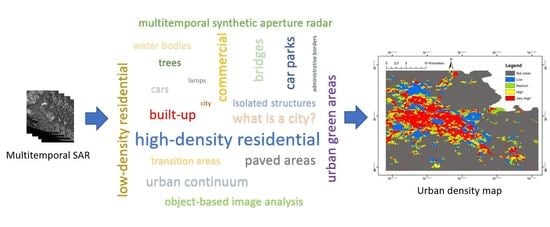

Urban Area Mapping Using Multitemporal SAR Images in Combination with Self-Organizing Map Clustering and Object-Based Image Analysis

Abstract

1. Introduction

2. Methodology

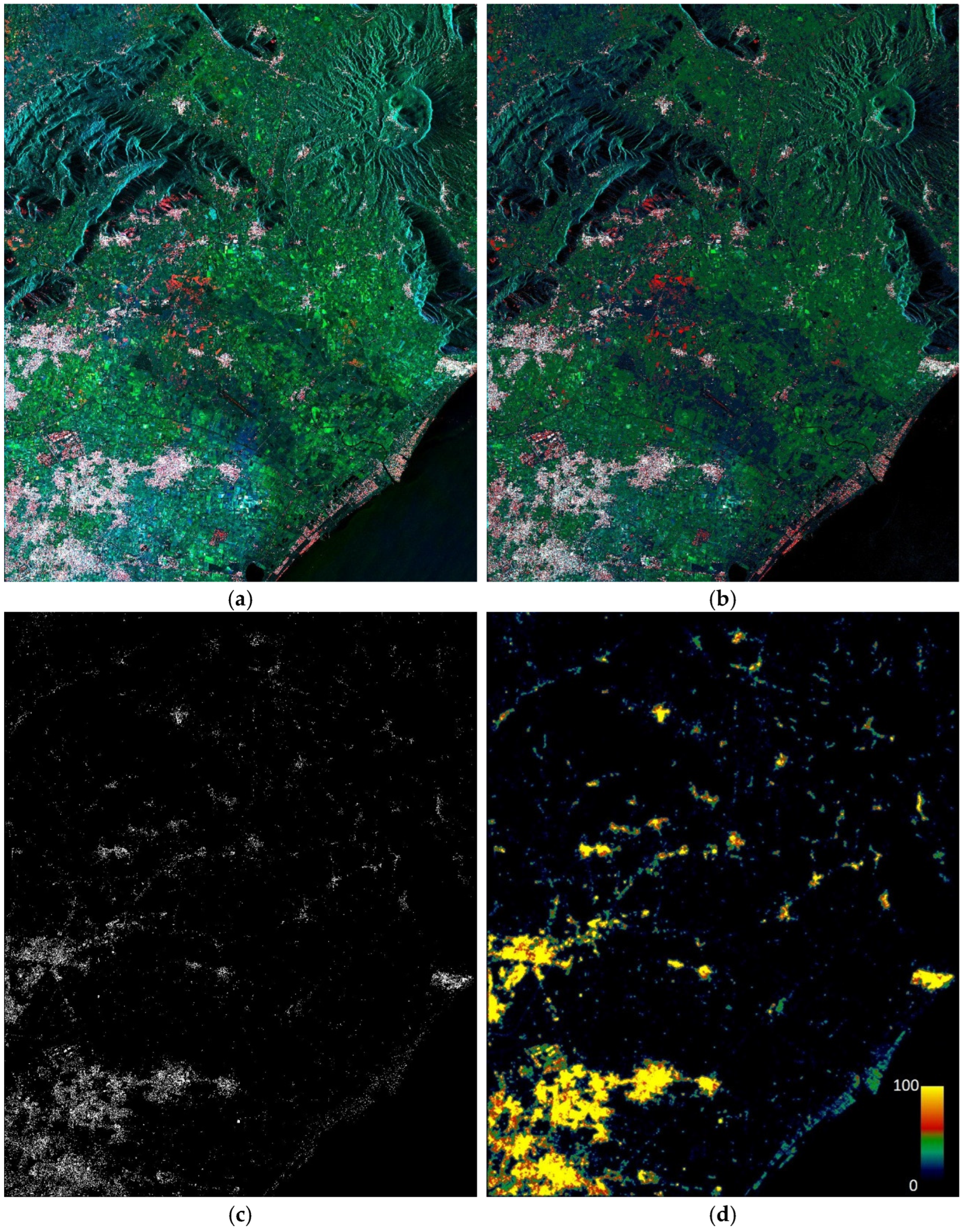

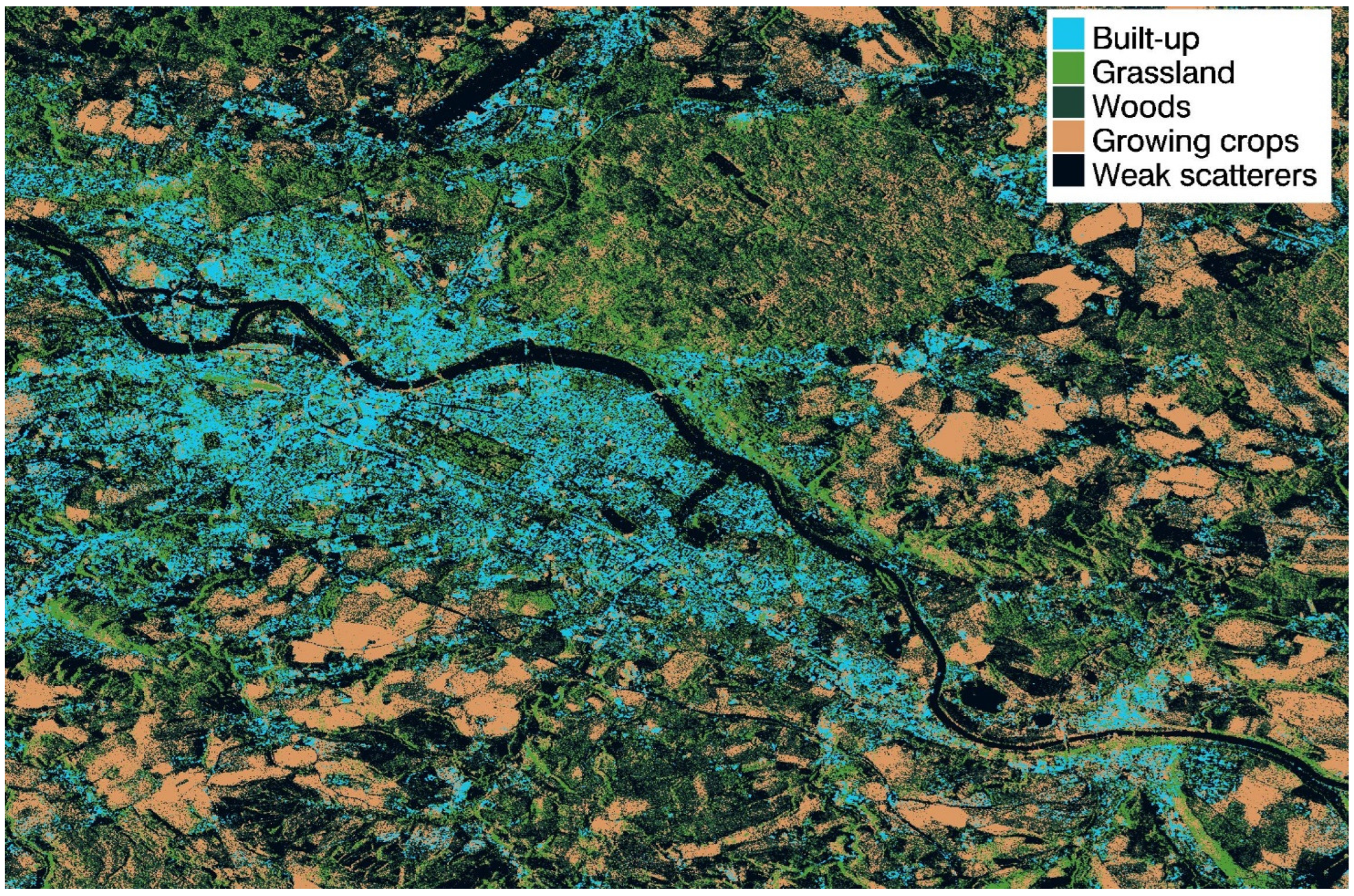

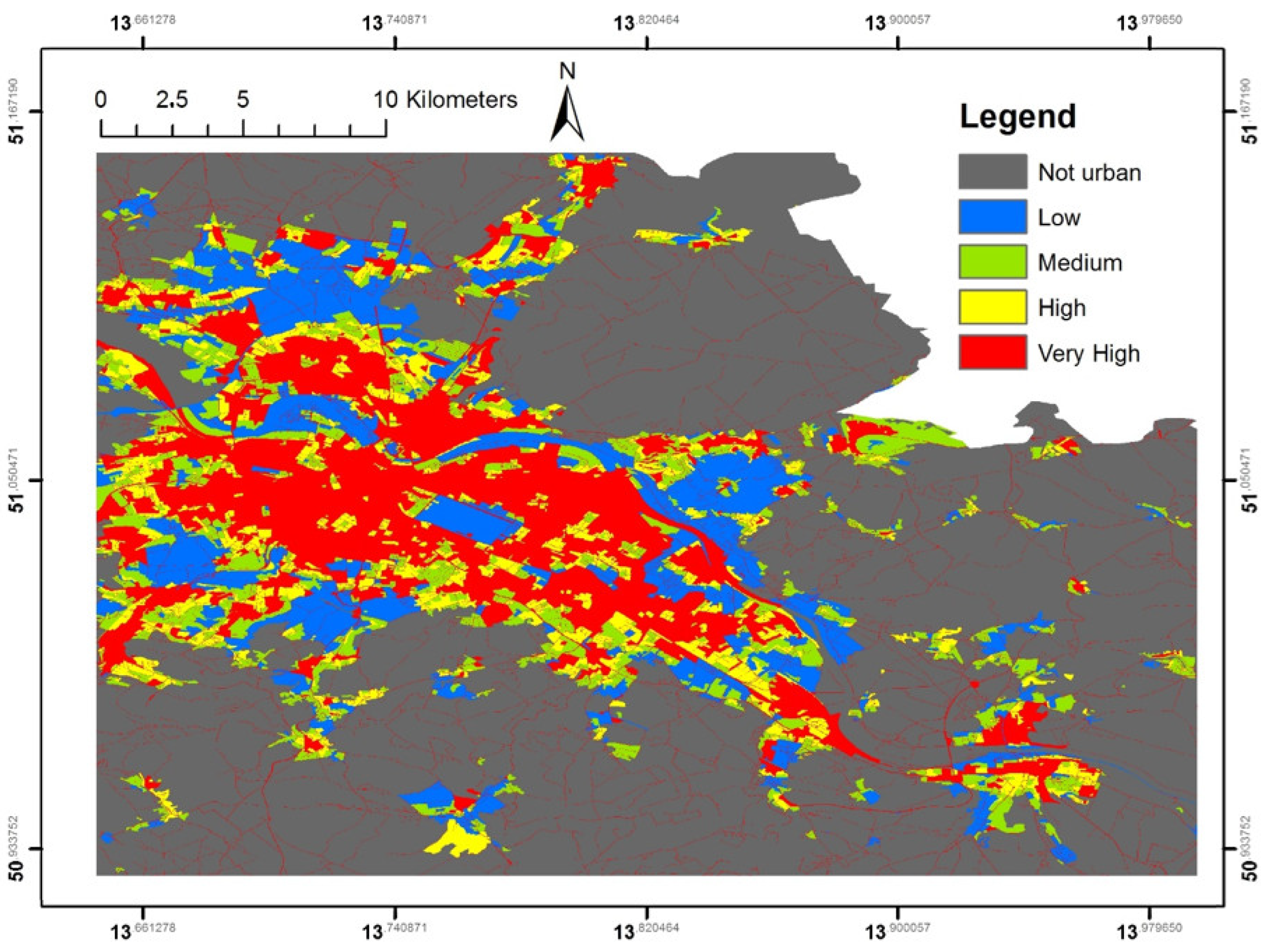

2.1. Coarse Urban Density Map Generation

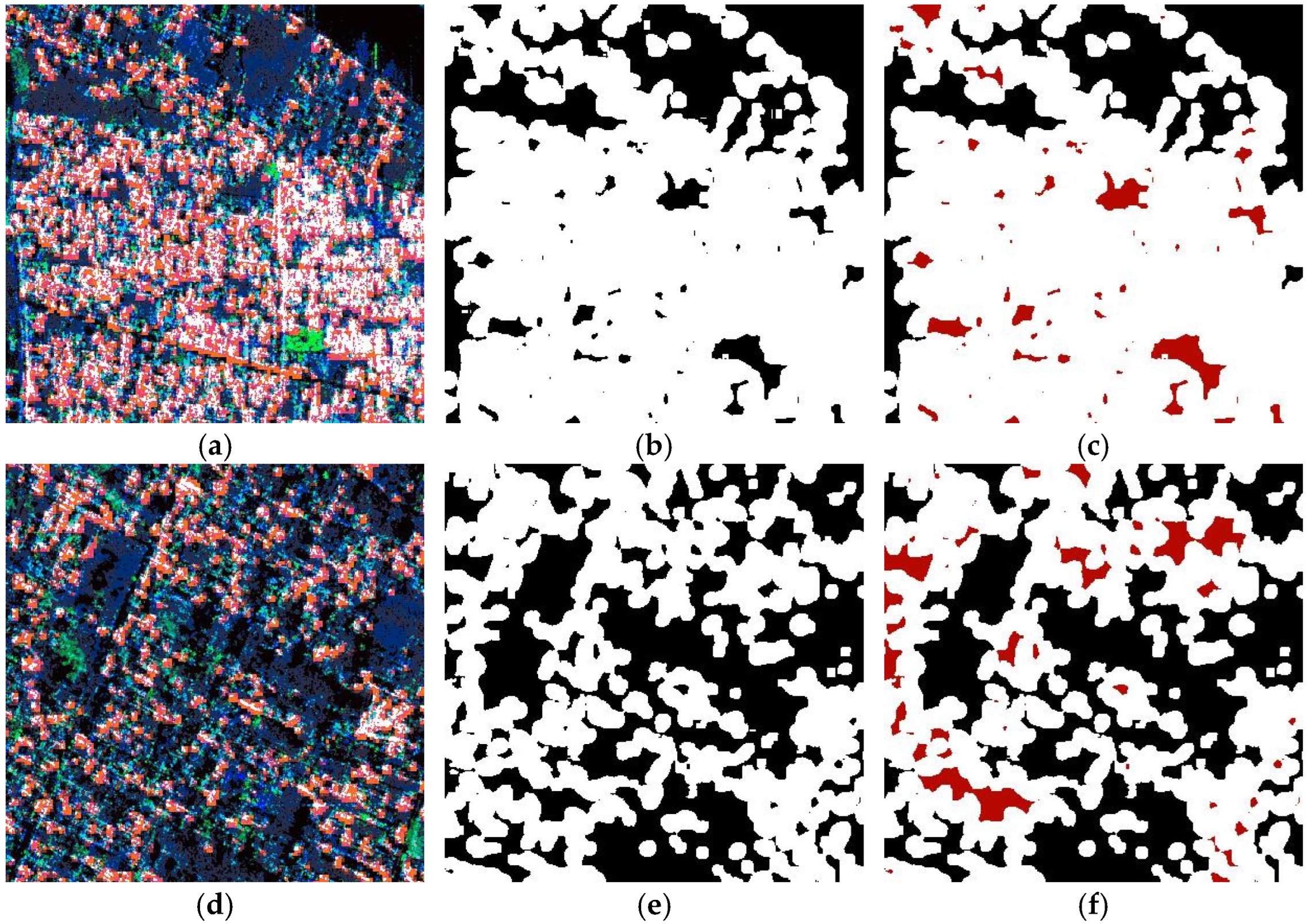

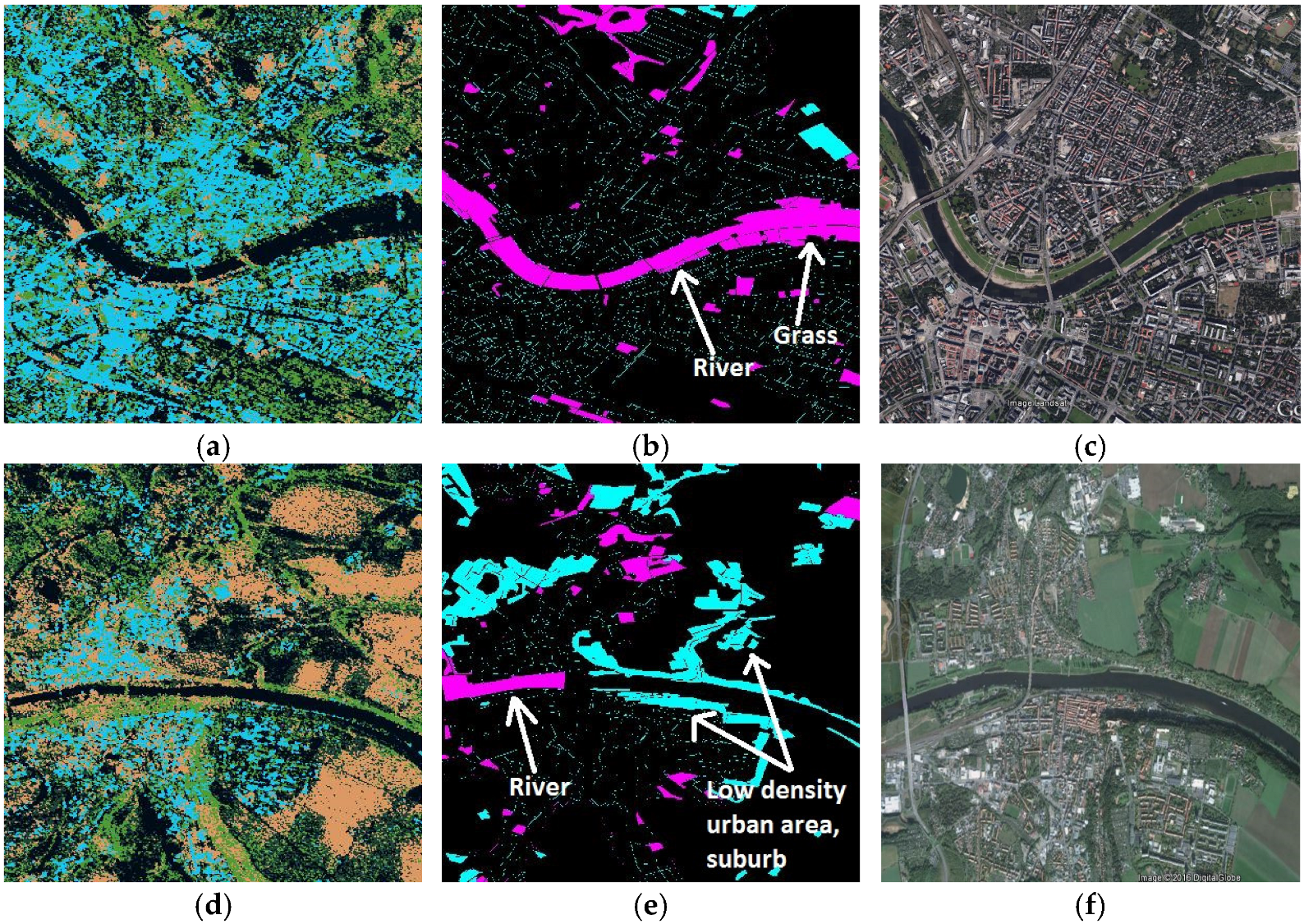

2.2. Urban Map Refinement Using OBIA Based on Spatial Relationship

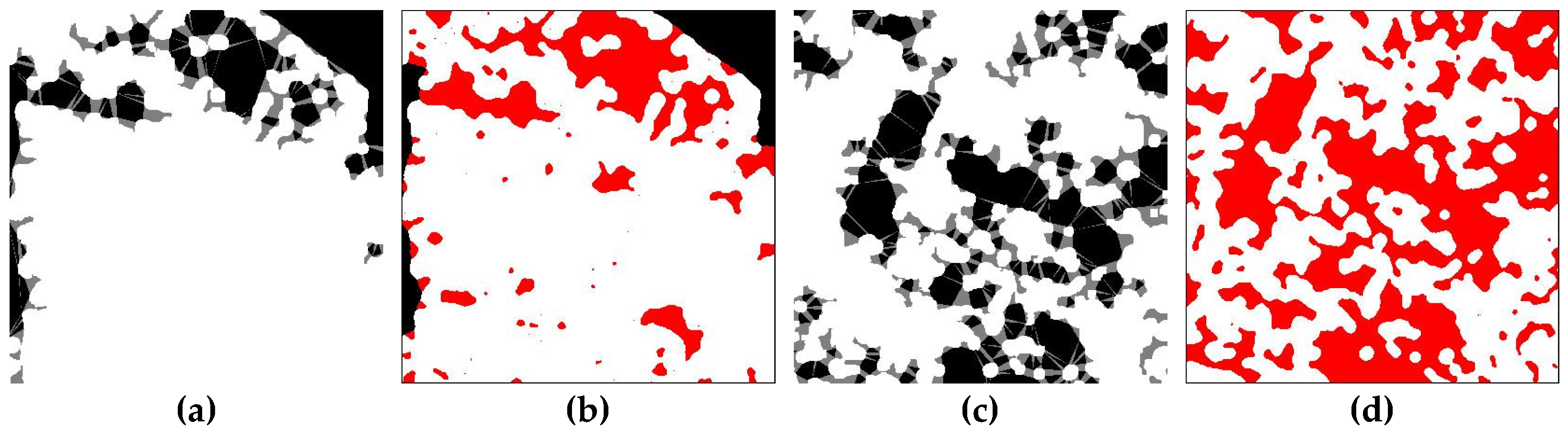

2.3. Urban Map Refinement Using OBIA Based on Delaunay Triangulation

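- Obtain the coordinates $(x_i, y_i)$, $i = 1, 2, 3$, of the triangle vertices and compute the length $l_{ij}$ of each side according to the relation (specified here for $i = 1$, $j = 2$): $l_{12} = \sqrt{(x_1 - x_2)^2 + (y_1 - y_2)^2}$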

- Compute the triangle area $A_t$ with the formula of Hero of Alexandria: $A_t = \sqrt{p\,(p - l_{12})(p - l_{23})(p - l_{31})}$, where $p = (l_{12} + l_{23} + l_{31})/2$ is the triangle semi-perimeter.

- If $A_t$ is smaller than a user-defined threshold $t_a$, then consider the pixels included in the triangle as part of the urban area and mark them with the numeric ID 1 (i.e., “low density” urban area). In our experiments, $t_a$ has been set to 2000 m².
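The three steps above can be sketched for a single triangle as follows. This is a minimal illustration, not the authors' implementation: the function names (`side_length`, `heron_area`, `label_triangle`) are hypothetical, vertex coordinates are assumed to be planar and expressed in metres, and the Delaunay triangulation that produces the triangles is assumed to have been computed beforehand.

```python
import math

LOW_DENSITY_ID = 1  # numeric ID of the "low density" urban class


def side_length(v_i, v_j):
    """Euclidean length l_ij of the side joining vertices v_i and v_j (x, y in metres)."""
    return math.hypot(v_i[0] - v_j[0], v_i[1] - v_j[1])


def heron_area(v1, v2, v3):
    """Triangle area A_t from its side lengths via Hero's (Heron's) formula."""
    l12 = side_length(v1, v2)
    l23 = side_length(v2, v3)
    l31 = side_length(v3, v1)
    p = (l12 + l23 + l31) / 2.0  # semi-perimeter
    # Clamp at zero: rounding can make the product slightly negative
    # for nearly degenerate (collinear) triangles.
    return math.sqrt(max(p * (p - l12) * (p - l23) * (p - l31), 0.0))


def label_triangle(v1, v2, v3, t_a=2000.0):
    """Return LOW_DENSITY_ID if A_t < t_a (in square metres), otherwise None."""
    return LOW_DENSITY_ID if heron_area(v1, v2, v3) < t_a else None


if __name__ == "__main__":
    small = ((0.0, 0.0), (30.0, 0.0), (0.0, 40.0))    # area 600 m²
    large = ((0.0, 0.0), (100.0, 0.0), (0.0, 100.0))  # area 5000 m²
    print(heron_area(*small), label_triangle(*small))
    print(heron_area(*large), label_triangle(*large))
```

In a full pipeline, the pixels falling inside each triangle labelled this way would then be rasterized and assigned the ID; that step is omitted here because it depends on the image geometry.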

3. Experimental Results

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Class Name | Density (%) | ID |
|---|---|---|
| Very high density | D ≥ 30 | 4 |
| High density | 20 ≤ D < 30 | 3 |
| Medium density | 10 ≤ D < 20 | 2 |
| Low density | D < 10 | 1 |
| Very low density/not urban | D < 10 | 0 |

| | Proposed: Not Urban (%) | Proposed: Urban (%) | Speckle Divergence: Not Urban (%) | Speckle Divergence: Urban (%) |
|---|---|---|---|---|
| Not urban | 84.61 | 18.40 | 82.45 | 35.99 |
| Urban | 15.39 | 81.60 | 17.55 | 64.01 |
| Producer accuracy | 84.61 | 81.60 | 82.45 | 64.01 |
| User accuracy | 90.34 | 72.27 | 92.19 | 41.43 |

| | Proposed: Not Urban (%) | Proposed: Urban (%) | Speckle Divergence: Not Urban (%) | Speckle Divergence: Urban (%) |
|---|---|---|---|---|
| Not urban | 76.44 | 9.99 | 73.76 | 9.63 |
| Urban | 23.56 | 90.01 | 26.24 | 90.37 |
| Producer accuracy | 76.44 | 90.01 | 73.76 | 90.37 |
| User accuracy | 91.84 | 72.80 | 95.11 | 57.56 |

| | Proposed: Not Urban (%) | Proposed: Urban (%) | Speckle Divergence: Not Urban (%) | Speckle Divergence: Urban (%) |
|---|---|---|---|---|
| Not urban | 96.44 | 41.61 | 77.33 | 5.40 |
| Urban | 3.56 | 58.39 | 22.67 | 94.60 |
| Producer accuracy | 96.44 | 58.39 | 77.37 | 94.60 |
| User accuracy | 94.34 | 69.56 | 97.79 | 57.43 |

| Urban Atlas Category | SL (%) | SAR Category | Napoli SL (%) | Caserta SL (%) | Dresden SL (%) |
|---|---|---|---|---|---|
| Continuous urban fabric | >80 | Very high | 33.8 | 34.65 | 36.64 |
| Dense urban fabric | 50–80 | High | 24.2 | 24.54 | 24.47 |
| Medium density urban | 30–50 | Medium | 14.97 | 14.67 | 14.52 |
| Low density urban | 10–30 | Low | 7.43 | 8.07 | 9.05 |
| Very low density urban | <10 | Not urban | na | na | na |


© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Amitrano, D.; Di Martino, G.; Iodice, A.; Riccio, D.; Ruello, G. Urban Area Mapping Using Multitemporal SAR Images in Combination with Self-Organizing Map Clustering and Object-Based Image Analysis. Remote Sens. 2023, 15, 122. https://doi.org/10.3390/rs15010122
