Normalized Burn Ratio Plus (NBR+): A New Index for Sentinel-2 Imagery

Abstract

1. Introduction

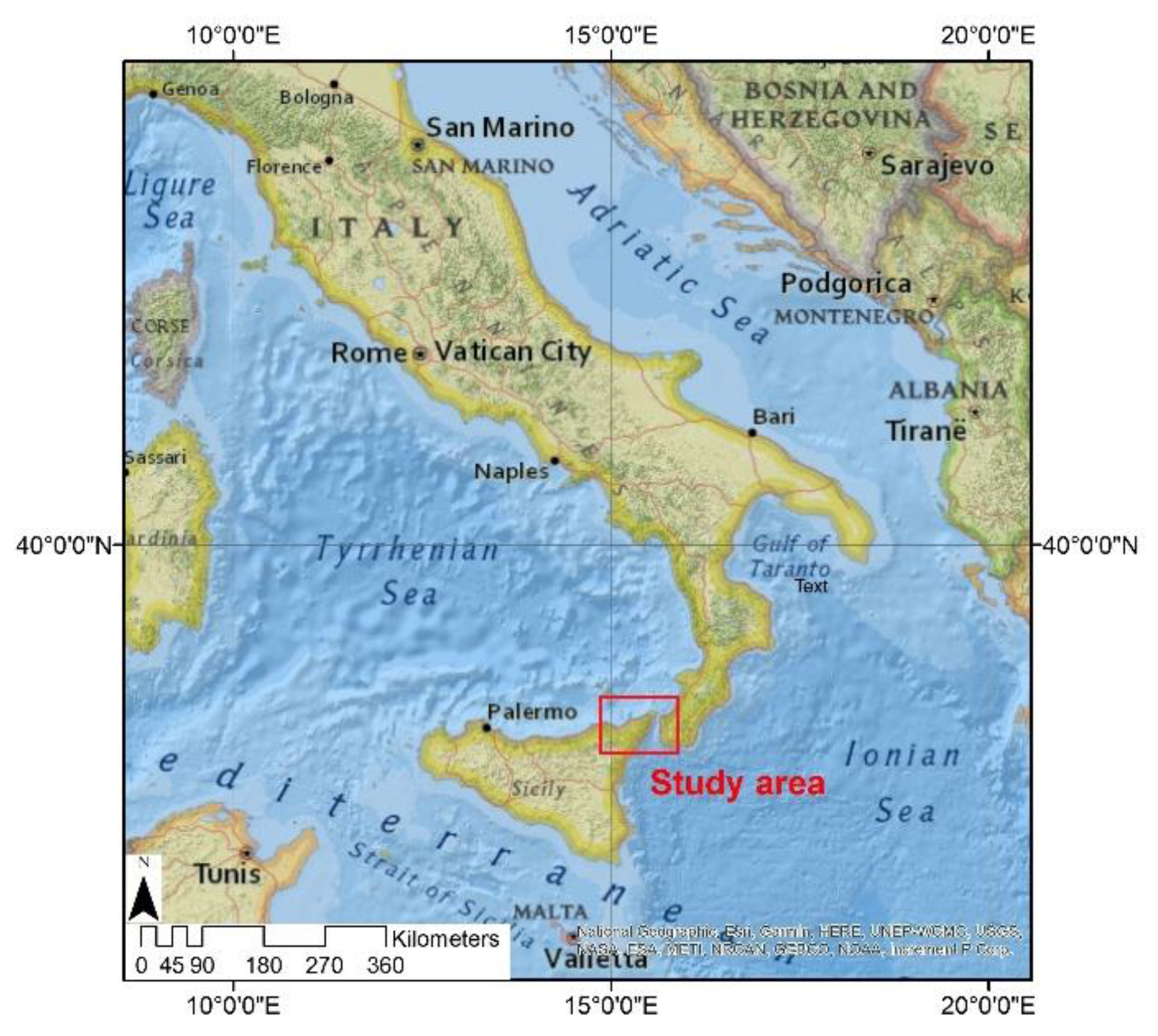

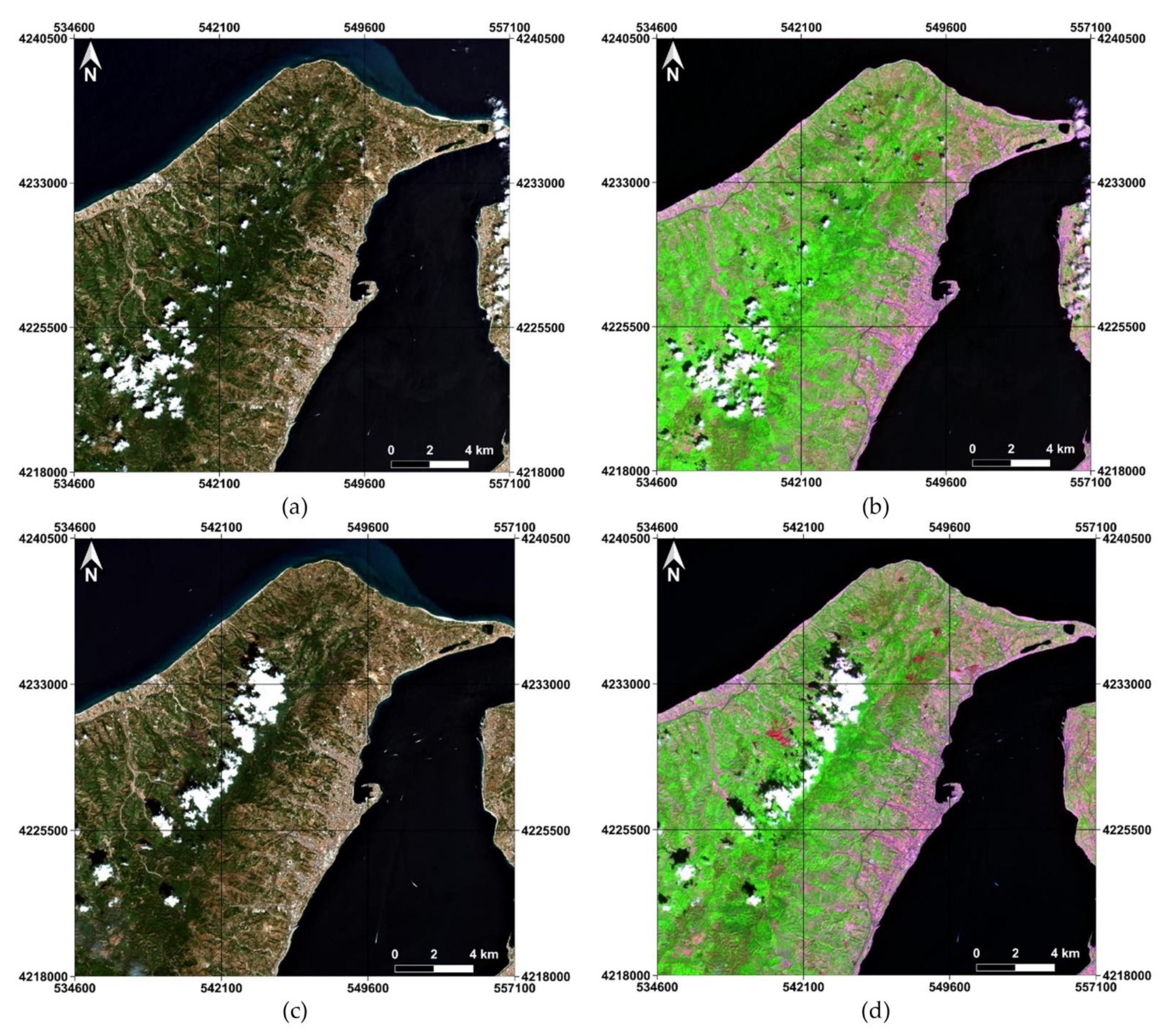

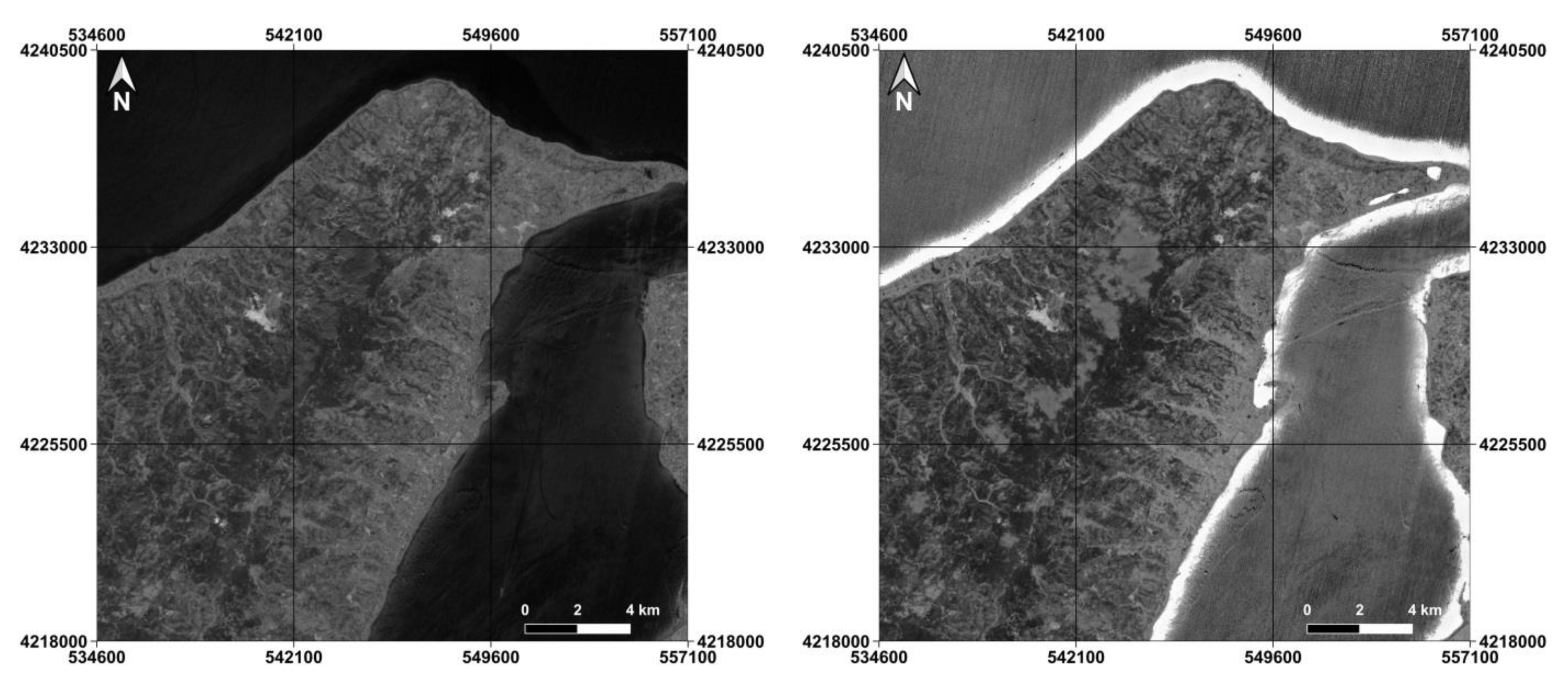

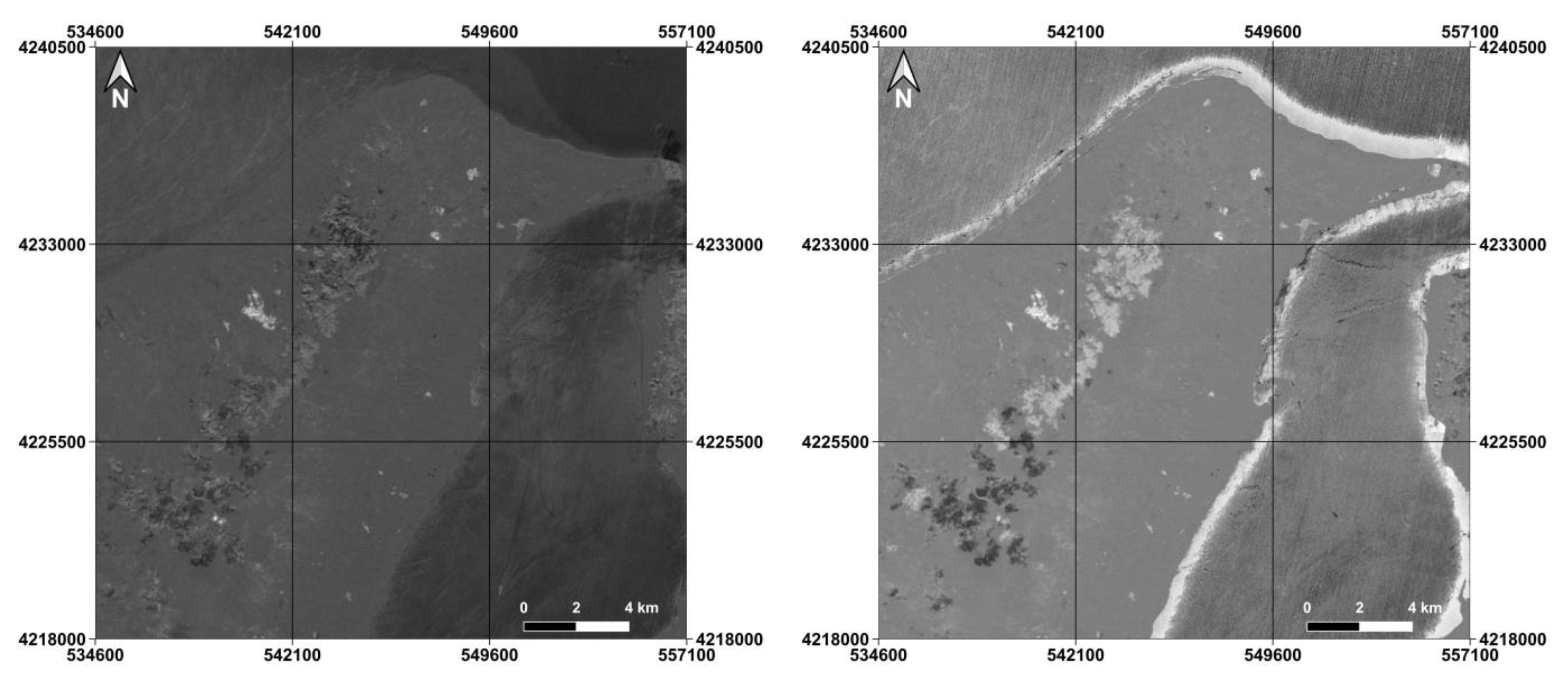

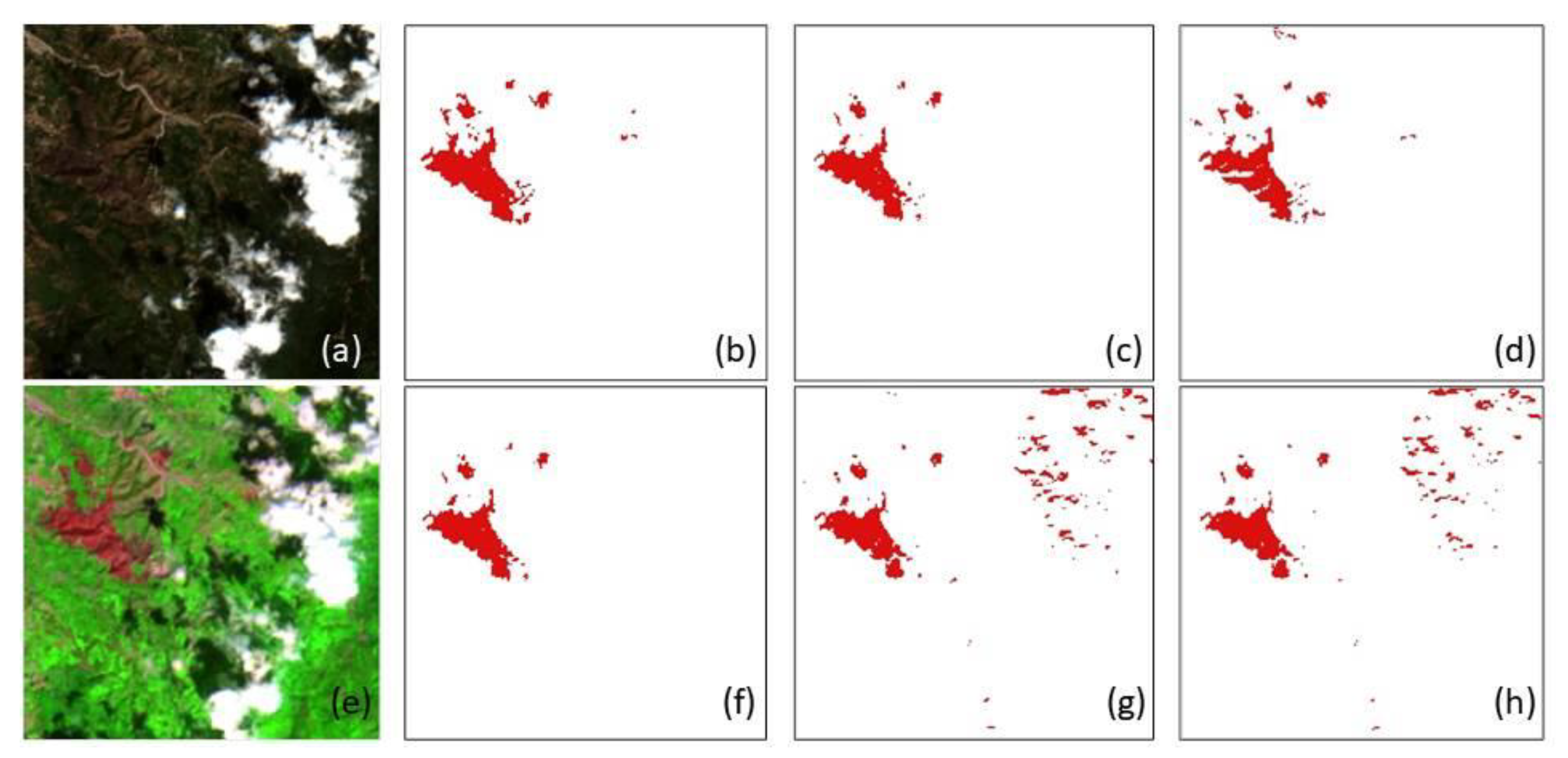

2. Study Area and Dataset

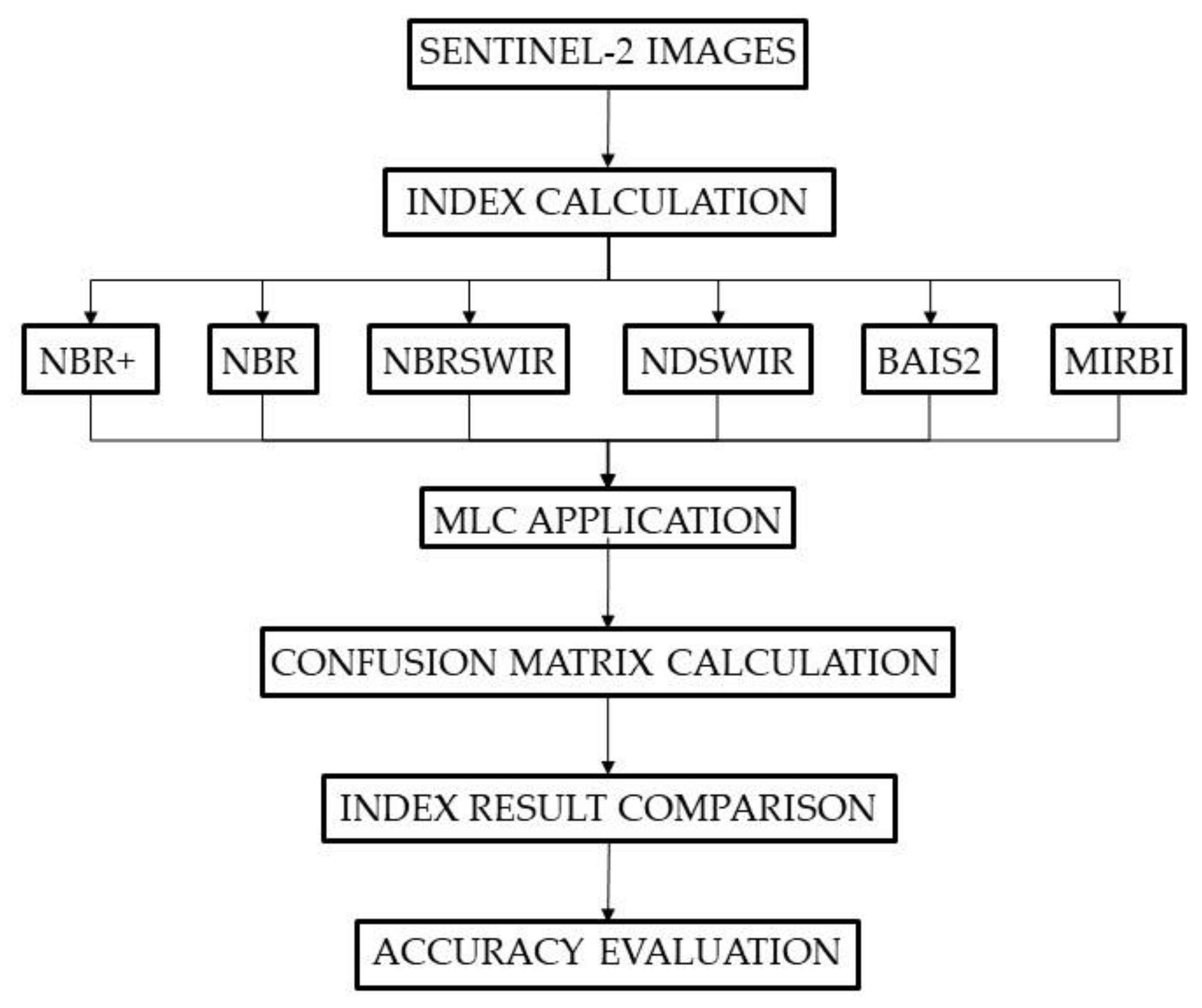

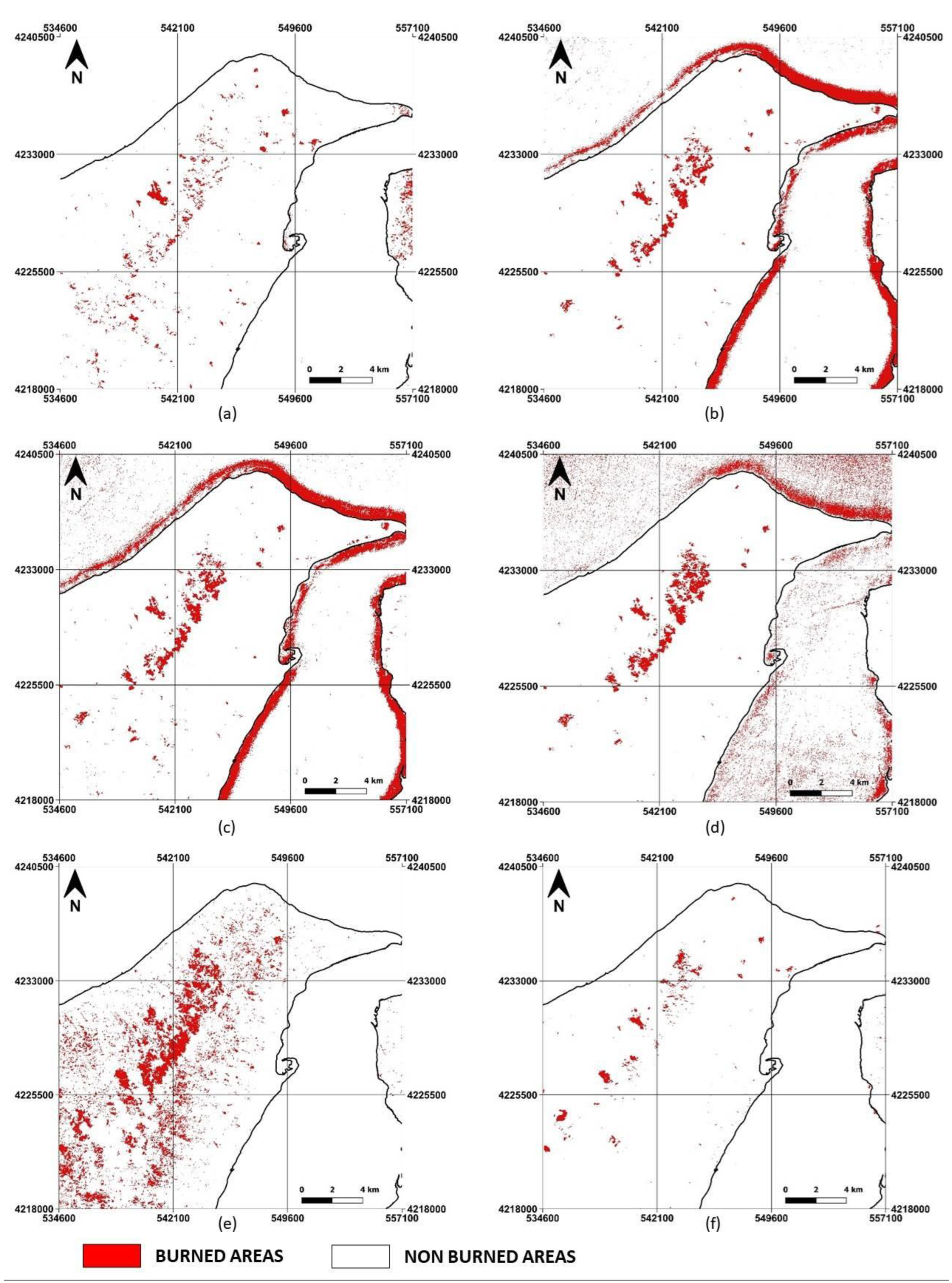

3. Methods

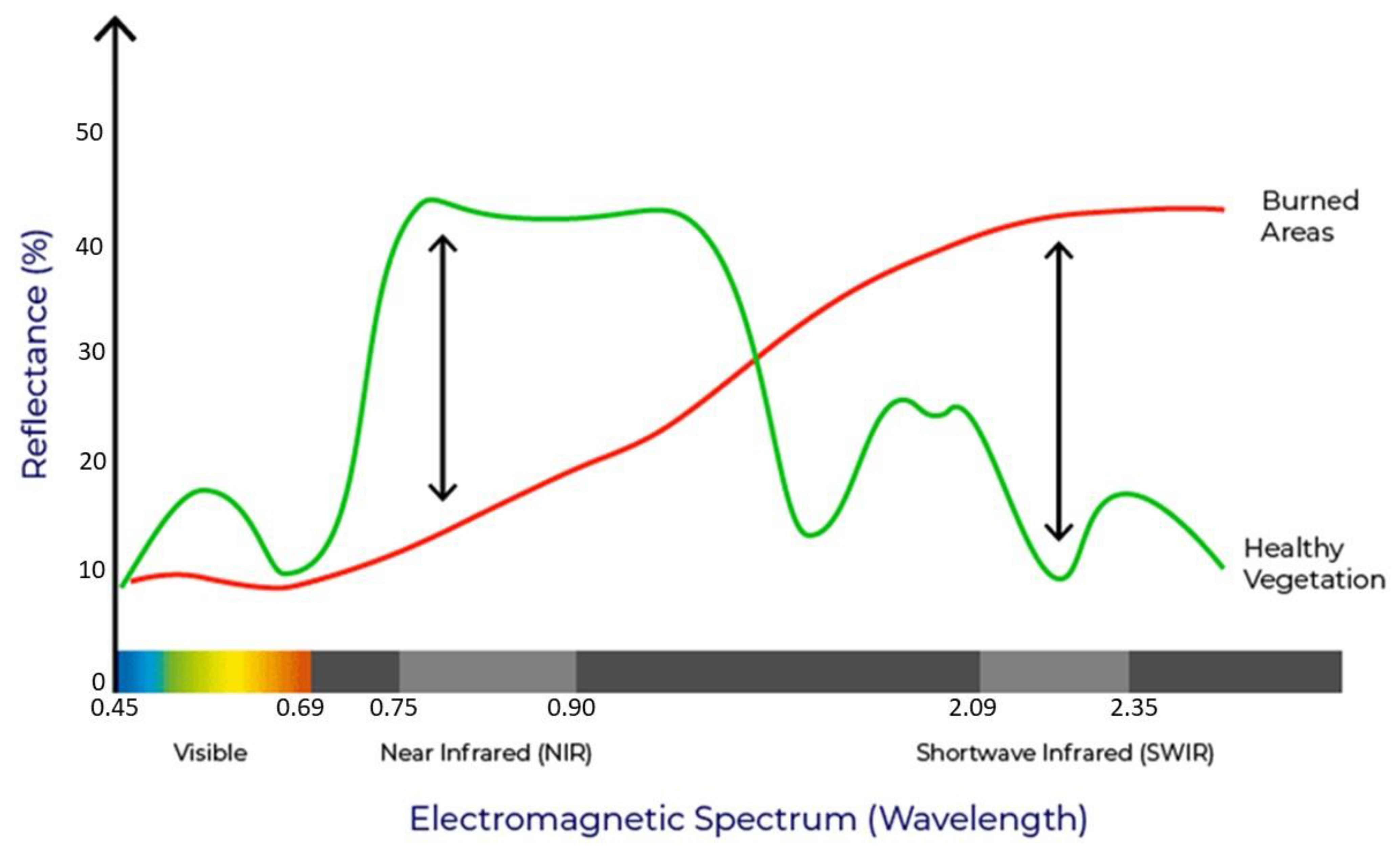

3.1. Spectral Indices Used for the Identification of Burnt Areas

3.1.1. Normalized Burn Ratio (NBR)
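
As a minimal sketch of the index named in this subsection: NBR is conventionally defined as the normalized difference between near-infrared and shortwave-infrared reflectance, (NIR − SWIR2) / (NIR + SWIR2). Pairing Sentinel-2 band B8A (0.865 µm) with B12 (2.190 µm) is an assumption made here for illustration (B8 is also commonly used). The helper names and the reflectance values are hypothetical and are not taken from the study.

```python
import math


def normalized_difference(a: float, b: float) -> float:
    """Return (a - b) / (a + b); NaN when the denominator is zero."""
    total = a + b
    if total == 0:
        # Design choice for this sketch: flag undefined pixels instead of raising.
        return math.nan
    return (a - b) / total


def nbr(nir: float, swir2: float) -> float:
    """Normalized Burn Ratio from NIR and SWIR2 surface reflectance.

    Assumption: nir is taken from B8A and swir2 from B12.
    """
    return normalized_difference(nir, swir2)


# Made-up reflectances for illustration only.
healthy_vegetation = nbr(nir=0.45, swir2=0.10)  # high NIR, low SWIR2
burned_surface = nbr(nir=0.15, swir2=0.30)      # NIR drops, SWIR2 rises

print(f"NBR healthy: {healthy_vegetation:.3f}")
print(f"NBR burned:  {burned_surface:.3f}")
```

Burned surfaces typically reflect less in the NIR and more in the SWIR than healthy vegetation, so their NBR is lower; this contrast is what threshold-based or classifier-based burned area mapping relies on.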

3.1.2. Normalized Burn Ratio-SWIR (NBRSWIR)

3.1.3. Normalized Difference Shortwave Infrared Index (NDSWIR)

3.1.4. Mid-Infrared Bi-Spectral Index (MIRBI)

3.1.5. Burnt Area Index for Sentinel 2 (BAIS2)

3.2. Development of a New Spectral Index for the Identification of BA

3.3. Maximum Likelihood Classification

3.4. Burned Area Mapping

3.5. Thematic Accuracy Assessment

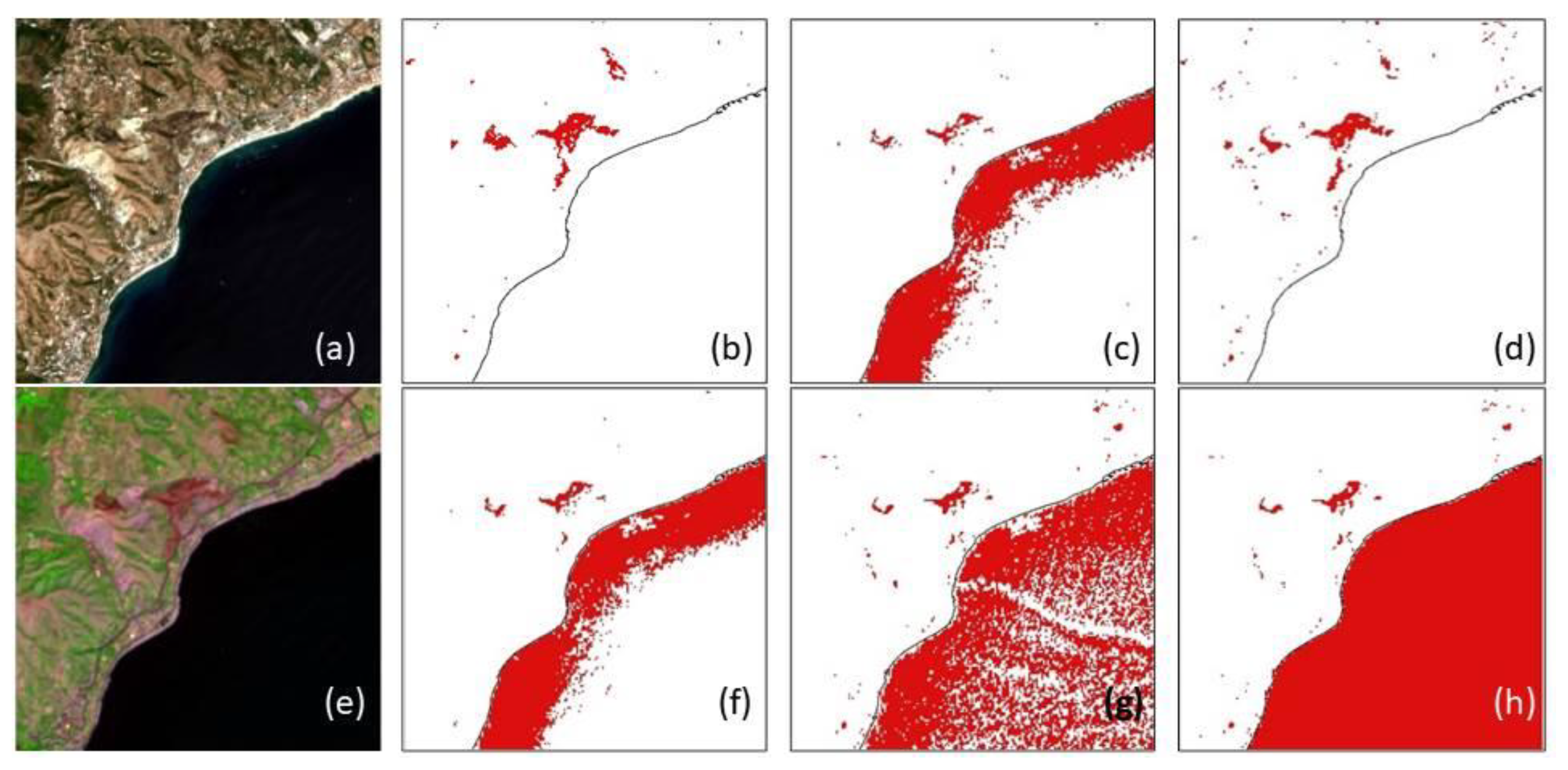

4. Results and Discussion

- Producer Accuracy of the Burned Areas (PA–BA);

- Producer Accuracy of the Non-Burned Areas (PA–NON BA);

- User Accuracy of the Burned Areas (UA–BA);

- User Accuracy of the Non-Burned Areas (UA–NON BA);

- Overall Accuracy (OA).
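
The five measures listed above can all be derived from a two-class confusion matrix. The following is a minimal sketch using the standard definitions (producer accuracy is computed per reference class, user accuracy per mapped class); the function name, argument names, and pixel counts are hypothetical and do not come from the study.

```python
def accuracy_metrics(tp: int, fn: int, fp: int, tn: int) -> dict:
    """Compute thematic accuracy measures for a burned / non-burned map.

    tp: reference burned pixels mapped as burned
    fn: reference burned pixels mapped as non-burned (omission)
    fp: reference non-burned pixels mapped as burned (commission)
    tn: reference non-burned pixels mapped as non-burned
    """
    return {
        "PA-BA": tp / (tp + fn),      # share of reference burned pixels found
        "PA-NON BA": tn / (tn + fp),  # share of reference non-burned pixels found
        "UA-BA": tp / (tp + fp),      # share of mapped burned pixels that are correct
        "UA-NON BA": tn / (tn + fn),  # share of mapped non-burned pixels that are correct
        "OA": (tp + tn) / (tp + fn + fp + tn),
    }


# Made-up counts for illustration: 100 reference pixels per class.
metrics = accuracy_metrics(tp=90, fn=10, fp=5, tn=95)
for name, value in metrics.items():
    print(f"{name}: {value:.3f}")
```

When both reference classes contain the same number of pixels, as in this example, OA equals the mean of the two producer accuracies.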

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Bands | Central Wavelength (µm) | Resolution (m) |
|---|---|---|
| B1—Coastal Aerosol | 0.443 | 60 |
| B2—Blue | 0.490 | 10 |
| B3—Green | 0.560 | 10 |
| B4—Red | 0.665 | 10 |
| B5—Red Edge1 | 0.705 | 20 |
| B6—Red Edge2 | 0.740 | 20 |
| B7—Red Edge3 | 0.783 | 20 |
| B8—NIR | 0.842 | 10 |
| B8A—Narrow NIR | 0.865 | 20 |
| B9—Water Vapor | 0.945 | 60 |
| B10—SWIR Cirrus | 1.375 | 60 |
| B11—SWIR1 | 1.610 | 20 |
| B12—SWIR2 | 2.190 | 20 |

| Method | PA-BA | PA-NON BA | UA-BA | UA-NON BA | OA |
|---|---|---|---|---|---|
| NBR | 0.611 | 0.758 | 0.716 | 0.661 | 0.685 |
| NBRSWIR | 0.558 | 0.757 | 0.696 | 0.631 | 0.657 |
| NDSWIR | 0.607 | 0.751 | 0.709 | 0.657 | 0.679 |
| MIRBI | 0.566 | 0.651 | 0.618 | 0.600 | 0.608 |
| BAIS2 | 0.630 | 0.967 | 0.950 | 0.723 | 0.798 |
| NBR+ | 0.858 | 0.958 | 0.953 | 0.871 | 0.908 |

| Method | PA-BA | PA-NON BA | UA-BA | UA-NON BA | OA |
|---|---|---|---|---|---|
| NBR | 0.611 | 1.000 | 1.000 | 0.720 | 0.805 |
| NBRSWIR | 0.558 | 0.993 | 0.987 | 0.692 | 0.776 |
| NDSWIR | 0.609 | 1.000 | 1.000 | 0.719 | 0.804 |
| MIRBI | 0.568 | 0.995 | 0.992 | 0.697 | 0.782 |
| BAIS2 | 0.630 | 0.988 | 0.981 | 0.728 | 0.809 |
| NBR+ | 0.858 | 0.998 | 0.998 | 0.796 | 0.925 |

| Method | PA-BA | PA-NON BA | UA-BA | UA-NON BA | OA |
|---|---|---|---|---|---|
| NBR | 0.984 | 0.833 | 0.855 | 0.981 | 0.909 |
| NBRSWIR | 0.914 | 0.914 | 0.914 | 0.914 | 0.914 |
| NDSWIR | 0.968 | 0.766 | 0.805 | 0.960 | 0.867 |
| MIRBI | 0.749 | 0.992 | 0.990 | 0.798 | 0.870 |
| BAIS2 | 0.935 | 0.827 | 0.844 | 0.927 | 0.881 |
| NBR+ | 1.000 | 0.947 | 0.950 | 1.000 | 0.974 |

| Method | PA-BA | PA-NON BA | UA-BA | UA-NON BA | OA |
|---|---|---|---|---|---|
| NBR | 0.986 | 1.000 | 1.000 | 0.986 | 0.993 |
| NBRSWIR | 0.907 | 1.000 | 1.000 | 0.915 | 0.953 |
| NDSWIR | 0.967 | 1.000 | 1.000 | 0.968 | 0.983 |
| MIRBI | 0.749 | 0.998 | 0.997 | 0.799 | 0.874 |
| BAIS2 | 0.933 | 0.947 | 0.947 | 0.934 | 0.940 |
| NBR+ | 1.000 | 0.995 | 0.995 | 1.000 | 0.998 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Alcaras, E.; Costantino, D.; Guastaferro, F.; Parente, C.; Pepe, M. Normalized Burn Ratio Plus (NBR+): A New Index for Sentinel-2 Imagery. Remote Sens. 2022, 14, 1727. https://doi.org/10.3390/rs14071727
