Mapping of Urban Vegetation with High-Resolution Remote Sensing: A Review

Abstract

1. Introduction

2. Materials and Methods

- Is the study published after 2000?

- Does the study focus on the mapping of vegetation in an urban environment?

- Does the study go beyond the functional distinction between major plant habits/life forms (e.g., woody vegetation versus herbaceous vegetation or trees, shrubs and herbs)?

- Does the study use high-resolution imagery?
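The four screening questions above can be read as a simple filter over candidate studies. The sketch below is illustrative only: the `Study` record, its field names, and the rule that a study is retained only when all four questions are answered "yes" are assumptions made for this example, as is the reading of "after 2000" as a publication year strictly greater than 2000.

```python
from dataclasses import dataclass


@dataclass
class Study:
    """Hypothetical record for one candidate study (field names are assumptions)."""
    title: str
    year: int
    maps_urban_vegetation: bool    # focuses on vegetation mapping in an urban environment
    beyond_life_forms: bool        # goes beyond trees/shrubs/herbs or woody/herbaceous
    high_resolution_imagery: bool  # uses high-resolution imagery


def meets_inclusion_criteria(study: Study) -> bool:
    """Return True only if every screening question is answered 'yes' (assumed rule)."""
    return (
        study.year > 2000  # assumption: "after 2000" excludes the year 2000 itself
        and study.maps_urban_vegetation
        and study.beyond_life_forms
        and study.high_resolution_imagery
    )


def screen(studies: list[Study]) -> list[Study]:
    """Keep the studies that pass all four screening questions."""
    return [s for s in studies if meets_inclusion_criteria(s)]
```

For example, a hypothetical 1998 study would be dropped by `screen` on publication year alone, whatever its answers to the other three questions.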

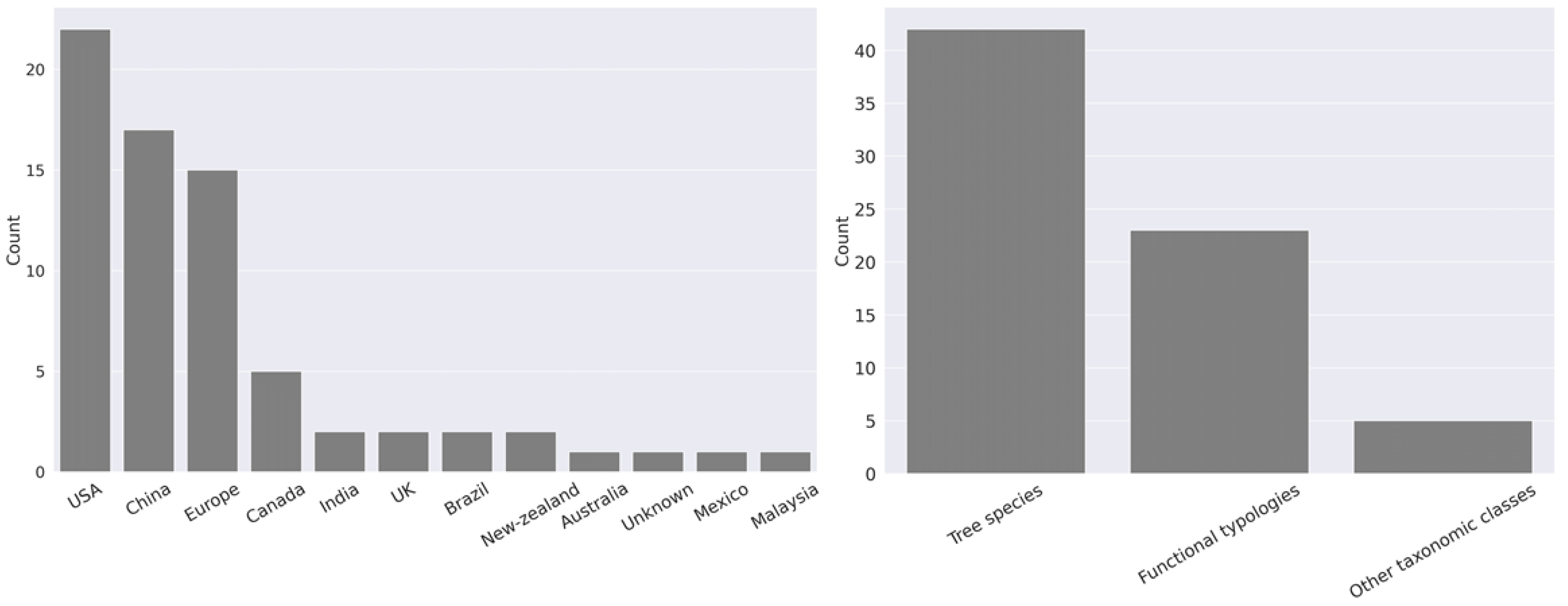

3. Results

3.1. Vegetation Typologies

3.1.1. Functional Vegetation Types

3.1.2. Taxonomic Classes

3.2. Remote Sensing Data

3.2.1. Optical Sensors

| Sensor | Spatial Resolution [m] | Spectral Resolution [# Bands] | Classification Scheme | Used by |
|---|---|---|---|---|
| High spatial resolution [1–5 m] | | | | |
| Gaofen-2 | 4 | 4 | green space | [73] |
| IKONOS | 4 (MS) | 4 | vegetation communities, tree species | [36,45,53,58,74] |
| QuickBird | 2.62 (MS) | 4 | green space | [75] |
| Pléiades | 2 (MS) | 4 | tree species | [76,77] |
| RapidEye | 5 (MS) | 5 | green space, plots of homogeneous trees | [57,78] |
| WorldView-2 | 2 (MS) | 8 | green infrastructure, tree species | [30,55,56,79] |
| WorldView-3 | 1.24 (MS), 3.7 (SWIR) | 16 | tree species | [55,66] |
| CASI | 2 | 32 (429–954 nm) | vegetation types, tree species | [52] |
| AISA | 2 | (400–850 nm) | tree species | [71] |
| HyMap | 3 | 125 | tree species | [80] |
| HySpex VNIR 1600 | 2 | 160 | green infrastructure | [12] |
| AISA | 2 | 186 (400–850 nm) | tree species | [71] |
| APEX | 2 | 218 (412–2431 nm) | functional vegetation types | [30] |
| AVIRIS | 3–17 | 224 | tree species | [18,59,61,65] |
| AISA+ | 2.2 | 248 (400–970 nm) | tree species | [54] |
| AISA Dual hyperspectral sensor | 1.6 | 492 | tree species | [81] |
| Very high spatial resolution [≤1 m] | | | | |
| Nearmap aerial photos | 0.6 | 3 | tree species | [56] |
| Aerial photos (various) | 0.075–0.4 (RGB) | 3 | vegetation types, tree species | [17,48,67,82,83,84,85,86] |
| NAIP | 1 | 4 | functional vegetation types | [51,55] |
| Aerial photos (various) | 0.20–0.5 (VNIR) | 4 | tree species | [20,72,87,88] |
| Air sensing inc. | 0.06 (VNIR) | 4 | tree species | [60] |
| Rikola | 0.65 | 16 (500–900 nm) | tree species | [89] |
| Eagle | 1 | 63 (400–970 nm) | tree species | [71] |
| CASI 1500 | 1 | 72 (363–1051 nm) | shrub species | [64,68] |
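The table groups sensors into two spatial-resolution classes, 1–5 m and ≤1 m. A minimal sketch of that grouping follows. The function name and the returned labels are hypothetical, and assigning a pixel size of exactly 1 m to the "very high" class is an assumption based on the table, where the 1 m sensors (NAIP, Eagle, CASI 1500) are listed in the ≤1 m group. Sensors with a resolution range, such as AVIRIS at 3–17 m, do not map to a single class and are not handled here.

```python
def resolution_class(pixel_size_m: float) -> str:
    """Assign a pixel size in metres to one of the review's resolution classes.

    Thresholds follow the table's group headings; treating exactly 1 m as
    "very high" is an assumption based on where the 1 m sensors are listed.
    """
    if pixel_size_m <= 0:
        raise ValueError("pixel size must be positive")
    if pixel_size_m <= 1.0:
        return "very high (<=1 m)"
    if pixel_size_m <= 5.0:
        return "high (1-5 m)"
    return "outside the review's high-resolution classes"


# A few multispectral pixel sizes taken from the table above.
sensors = {"Nearmap": 0.6, "NAIP": 1.0, "QuickBird": 2.62, "RapidEye": 5.0}
classes = {name: resolution_class(size) for name, size in sensors.items()}
```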

Imagery with a High Spatial Resolution (1–5 m)

Imagery with a Very High Spatial Resolution (≤1 m)

3.2.2. LiDAR

Fusion of LiDAR Data and Spectral Imagery

3.2.3. Terrestrial Sensors

3.2.4. Importance of Phenology in Vegetation Mapping

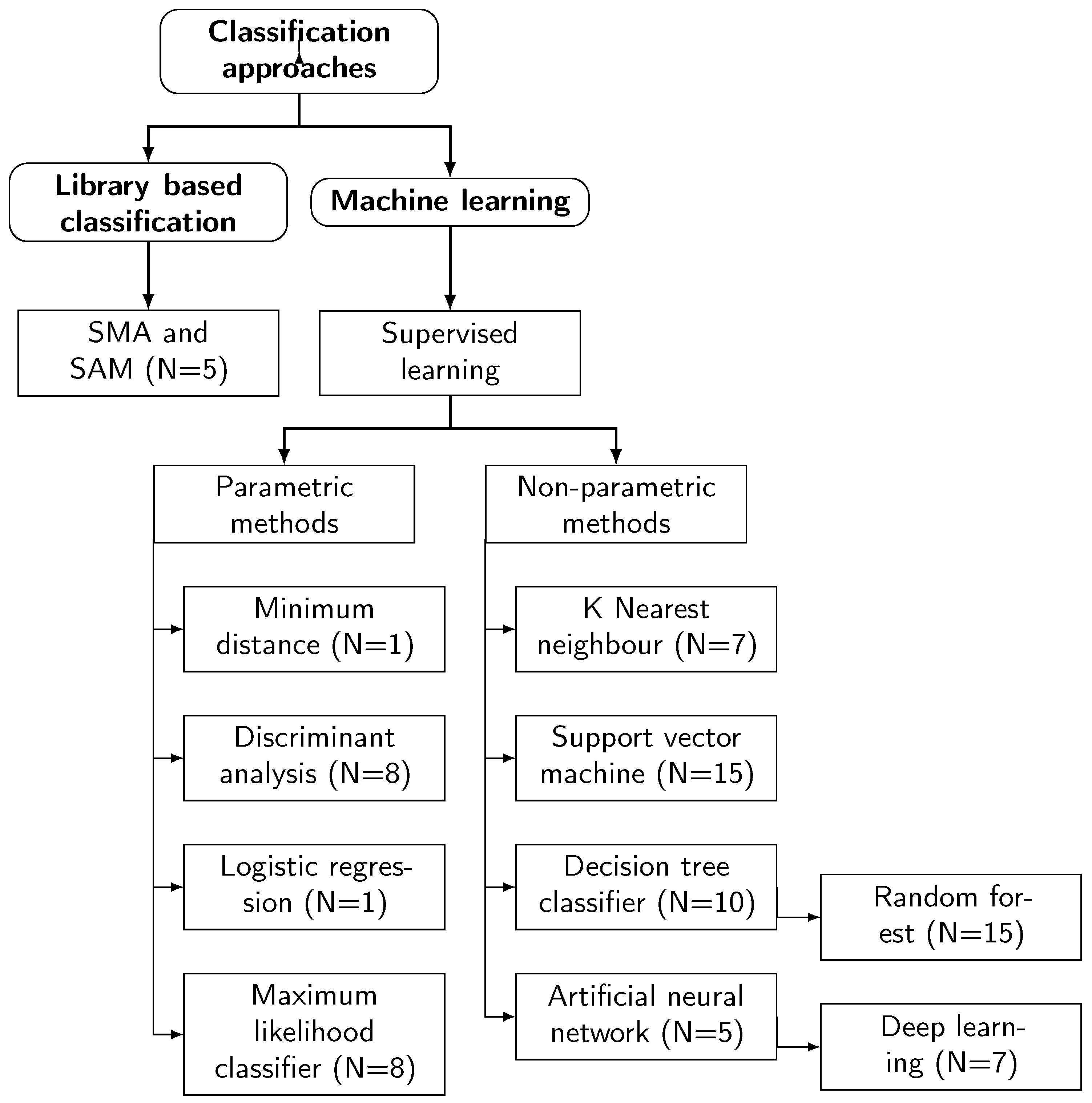

3.3. Mapping Approaches

3.3.1. Feature Definition

Spectral Features

Textural Features

Geometric Features

Contextual Features

LiDAR-Derived Features

3.3.2. Image Segmentation

3.3.3. Classification Approaches

Supervised Learning Approaches

Library-Based Classification

Deep Learning

4. Discussion

5. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

- Nutsford, D.; Pearson, A.; Kingham, S. An ecological study investigating the association between access to urban green space and mental health. Public Health 2013, 127, 1005–1011.

- Bastian, O.; Haase, D.; Grunewald, K. Ecosystem properties, potentials and services–The EPPS conceptual framework and an urban application example. Ecol. Indic. 2012, 21, 7–16.

- Dimoudi, A.; Nikolopoulou, M. Vegetation in the urban environment: Microclimatic analysis and benefits. Energy Build. 2003, 35, 69–76.

- Nowak, D.J.; Crane, D.E. Carbon storage and sequestration by urban trees in the USA. Environ. Pollut. 2002, 116, 381–389.

- De Carvalho, R.M.; Szlafsztein, C.F. Urban vegetation loss and ecosystem services: The influence on climate regulation and noise and air pollution. Environ. Pollut. 2019, 245, 844–852.

- Susca, T.; Gaffin, S.R.; Dell’Osso, G. Positive effects of vegetation: Urban heat island and green roofs. Environ. Pollut. 2011, 159, 2119–2126.

- Cadenasso, M.L.; Pickett, S.T.; Grove, M.J. Integrative approaches to investigating human-natural systems: The Baltimore ecosystem study. Nat. Sci. Soc. 2006, 14, 4–14.

- Pickett, S.T.; Cadenasso, M.L.; Grove, J.M.; Boone, C.G.; Groffman, P.M.; Irwin, E.; Kaushal, S.S.; Marshall, V.; McGrath, B.P.; Nilon, C.H.; et al. Urban ecological systems: Scientific foundations and a decade of progress. J. Environ. Manag. 2011, 92, 331–362.

- Escobedo, F.J.; Kroeger, T.; Wagner, J.E. Urban forests and pollution mitigation: Analyzing ecosystem services and disservices. Environ. Pollut. 2011, 159, 2078–2087.

- Gillner, S.; Vogt, J.; Tharang, A.; Dettmann, S.; Roloff, A. Role of street trees in mitigating effects of heat and drought at highly sealed urban sites. Landsc. Urban Plan. 2015, 143, 33–42.

- Drillet, Z.; Fung, T.K.; Leong, R.A.T.; Sachidhanandam, U.; Edwards, P.; Richards, D. Urban vegetation types are not perceived equally in providing ecosystem services and disservices. Sustainability 2020, 12, 2076.

- Bartesaghi-Koc, C.; Osmond, P.; Peters, A. Mapping and classifying green infrastructure typologies for climate-related studies based on remote sensing data. Urban For. Urban Green. 2019, 37, 154–167.

- Sæbø, A.; Popek, R.; Nawrot, B.; Hanslin, H.M.; Gawronska, H.; Gawronski, S. Plant species differences in particulate matter accumulation on leaf surfaces. Sci. Total Environ. 2012, 427, 347–354.

- Roy, S.; Byrne, J.; Pickering, C. A systematic quantitative review of urban tree benefits, costs, and assessment methods across cities in different climatic zones. Urban For. Urban Green. 2012, 11, 351–363.

- Eisenman, T.S.; Churkina, G.; Jariwala, S.P.; Kumar, P.; Lovasi, G.S.; Pataki, D.E.; Weinberger, K.R.; Whitlow, T.H. Urban trees, air quality, and asthma: An interdisciplinary review. Landsc. Urban Plan. 2019, 187, 47–59.

- Raupp, M.J.; Cumming, A.B.; Raupp, E.C. Street Tree Diversity in Eastern North America and Its Potential for Tree Loss to Exotic Borers. Arboric. Urban For. 2006, 32, 297–304.

- Baker, F.; Smith, C.L.; Cavan, G. A combined approach to classifying land surface cover of urban domestic gardens using citizen science data and high resolution image analysis. Remote Sens. 2018, 10, 537.

- Alonzo, M.; Bookhagen, B.; Roberts, D.A. Urban tree species mapping using hyperspectral and lidar data fusion. Remote Sens. Environ. 2014, 148, 70–83.

- Walter, V. Object-based classification of remote sensing data for change detection. ISPRS J. Photogramm. Remote Sens. 2004, 58, 225–238.

- Iovan, C.; Boldo, D.; Cord, M. Detection, characterization, and modeling vegetation in urban areas from high-resolution aerial imagery. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2008, 1, 206–213.

- Adeline, K.R.; Chen, M.; Briottet, X.; Pang, S.; Paparoditis, N. Shadow detection in very high spatial resolution aerial images: A comparative study. ISPRS J. Photogramm. Remote Sens. 2013, 80, 21–38.

- Van der Linden, S.; Hostert, P. The influence of urban structures on impervious surface maps from airborne hyperspectral data. Remote Sens. Environ. 2009, 113, 2298–2305.

- Li, D.; Ke, Y.; Gong, H.; Chen, B.; Zhu, L. Tree species classification based on WorldView-2 imagery in complex urban environment. In Proceedings of the 2014 Third International Workshop on Earth Observation and Remote Sensing Applications (EORSA), Changsha, China, 11–14 June 2014; pp. 326–330.

- Shahtahmassebi, A.; Li, C.; Fan, Y.; Wu, Y.; Gan, M.; Wang, K.; Malik, A.; Blackburn, A. Remote sensing of urban green spaces: A review. Urban For. Urban Green. 2020, 57, 126946.

- Wang, K.; Wang, T.; Liu, X. A review: Individual tree species classification using integrated airborne LiDAR and optical imagery with a focus on the urban environment. Forests 2019, 10, 1.

- Fassnacht, F.E.; Latifi, H.; Stereńczak, K.; Modzelewska, A.; Lefsky, M.; Waser, L.T.; Straub, C.; Ghosh, A. Review of studies on tree species classification from remotely sensed data. Remote Sens. Environ. 2016, 186, 64–87.

- Smith, T.; Shugart, H.; Woodward, F.; Burton, P. Plant functional types. In Vegetation Dynamics & Global Change; Springer: Berlin/Heidelberg, Germany, 1993; pp. 272–292.

- Ecosystem Services and Green Infrastructure. Available online: https://ec.europa.eu/environment/nature/ecosystems/index_en.htm (accessed on 14 April 2021).

- Taylor, L.; Hochuli, D.F. Defining greenspace: Multiple uses across multiple disciplines. Landsc. Urban Plan. 2017, 158, 25–38.

- Degerickx, J.; Hermy, M.; Somers, B. Mapping Functional Urban Green Types Using High Resolution Remote Sensing Data. Sustainability 2020, 12, 2144.

- Adamson, R. The classification of life-forms of plants. Bot. Rev. 1939, 5, 546–561.

- Yapp, G.; Walker, J.; Thackway, R. Linking vegetation type and condition to ecosystem goods and services. Ecol. Complex. 2010, 7, 292–301.

- Weber, C.; Petropoulou, C.; Hirsch, J. Urban development in the Athens metropolitan area using remote sensing data with supervised analysis and GIS. Int. J. Remote Sens. 2005, 26, 785–796.

- Hermosilla, T.; Palomar-Vázquez, J.; Balaguer-Beser, Á.; Balsa-Barreiro, J.; Ruiz, L.A. Using street based metrics to characterize urban typologies. Comput. Environ. Urban Syst. 2014, 44, 68–79.

- Walde, I.; Hese, S.; Berger, C.; Schmullius, C. From land cover-graphs to urban structure types. Int. J. Geogr. Inf. Sci. 2014, 28, 584–609.

- Mathieu, R.; Aryal, J.; Chong, A.K. Object-based classification of Ikonos imagery for mapping large-scale vegetation communities in urban areas. Sensors 2007, 7, 2860–2880.

- Millennium Ecosystem Assessment. Ecosystems and Human Well-Being; Island Press: Washington, DC, USA, 2005; Volume 5.

- Freeman, C.; Buck, O. Development of an ecological mapping methodology for urban areas in New Zealand. Landsc. Urban Plan. 2003, 63, 161–173.

- Anderson, J.R. A Land Use and Land Cover Classification System for Use with Remote Sensor Data; US Government Printing Office: Washington, DC, USA, 1976; Volume 964.

- Stewart, I.D.; Oke, T.R. Local climate zones for urban temperature studies. Bull. Am. Meteorol. Soc. 2012, 93, 1879–1900.

- Cadenasso, M.; Pickett, S.; McGrath, B.; Marshall, V. Ecological heterogeneity in urban ecosystems: Reconceptualized land cover models as a bridge to urban design. In Resilience in Ecology and Urban Design; Springer: Berlin/Heidelberg, Germany, 2013; pp. 107–129.

- Lehmann, I.; Mathey, J.; Rößler, S.; Bräuer, A.; Goldberg, V. Urban vegetation structure types as a methodological approach for identifying ecosystem services–Application to the analysis of micro-climatic effects. Ecol. Indic. 2014, 42, 58–72.

- Gill, S.E.; Handley, J.F.; Ennos, A.R.; Pauleit, S. Adapting cities for climate change: The role of the green infrastructure. Built Environ. 2007, 33, 115–133.

- Kopecká, M.; Szatmári, D.; Rosina, K. Analysis of urban green spaces based on Sentinel-2A: Case studies from Slovakia. Land 2017, 6, 25.

- Van Delm, A.; Gulinck, H. Classification and quantification of green in the expanding urban and semi-urban complex: Application of detailed field data and IKONOS-imagery. Ecol. Indic. 2011, 11, 52–60.

- Zhang, X.; Feng, X.; Jiang, H. Object-oriented method for urban vegetation mapping using IKONOS imagery. Int. J. Remote Sens. 2010, 31, 177–196.

- Liu, T.; Yang, X. Mapping vegetation in an urban area with stratified classification and multiple endmember spectral mixture analysis. Remote Sens. Environ. 2013, 133, 251–264.

- Tong, X.; Li, X.; Xu, X.; Xie, H.; Feng, T.; Sun, T.; Jin, Y.; Liu, X. A two-phase classification of urban vegetation using airborne LiDAR data and aerial photography. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2014, 7, 4153–4166.

- Kranjčić, N.; Medak, D.; Župan, R.; Rezo, M. Machine learning methods for classification of the green infrastructure in city areas. ISPRS Int. J. Geo-Inf. 2019, 8, 463.

- Kothencz, G.; Kulessa, K.; Anyyeva, A.; Lang, S. Urban vegetation extraction from VHR (tri-) stereo imagery–A comparative study in two central European cities. Eur. J. Remote Sens. 2018, 51, 285–300.

- Li, X.; Shao, G. Object-based urban vegetation mapping with high-resolution aerial photography as a single data source. Int. J. Remote Sens. 2013, 34, 771–789.

- Wania, A.; Weber, C. Hyperspectral imagery and urban green observation. In Proceedings of the 2007 Urban Remote Sensing Joint Event, Paris, France, 11–13 April 2007; pp. 1–8.

- Pu, R.; Landry, S. A comparative analysis of high spatial resolution IKONOS and WorldView-2 imagery for mapping urban tree species. Remote Sens. Environ. 2012, 124, 516–533.

- Jensen, R.R.; Hardin, P.J.; Hardin, A.J. Classification of urban tree species using hyperspectral imagery. Geocarto Int. 2012, 27, 443–458.

- Hartling, S.; Sagan, V.; Sidike, P.; Maimaitijiang, M.; Carron, J. Urban tree species classification using a WorldView-2/3 and LiDAR data fusion approach and deep learning. Sensors 2019, 19, 1284.

- Katz, D.S.; Batterman, S.A.; Brines, S.J. Improved Classification of Urban Trees Using a Widespread Multi-Temporal Aerial Image Dataset. Remote Sens. 2020, 12, 2475.

- Tigges, J.; Lakes, T.; Hostert, P. Urban vegetation classification: Benefits of multitemporal RapidEye satellite data. Remote Sens. Environ. 2013, 136, 66–75.

- Sugumaran, R.; Pavuluri, M.K.; Zerr, D. The use of high-resolution imagery for identification of urban climax forest species using traditional and rule-based classification approach. IEEE Trans. Geosci. Remote Sens. 2003, 41, 1933–1939.

- Gu, H.; Singh, A.; Townsend, P.A. Detection of gradients of forest composition in an urban area using imaging spectroscopy. Remote Sens. Environ. 2015, 167, 168–180.

- Zhang, K.; Hu, B. Individual urban tree species classification using very high spatial resolution airborne multi-spectral imagery using longitudinal profiles. Remote Sens. 2012, 4, 1741–1757.

- Xiao, Q.; Ustin, S.; McPherson, E. Using AVIRIS data and multiple-masking techniques to map urban forest tree species. Int. J. Remote Sens. 2004, 25, 5637–5654.

- Kim, S.; Hinckley, T.; Briggs, D. Classifying individual tree genera using stepwise cluster analysis based on height and intensity metrics derived from airborne laser scanner data. Remote Sens. Environ. 2011, 115, 3329–3342.

- Matasci, G.; Coops, N.C.; Williams, D.A.; Page, N. Mapping tree canopies in urban environments using airborne laser scanning (ALS): A Vancouver case study. For. Ecosyst. 2018, 5, 31.

- Liu, L.; Coops, N.C.; Aven, N.W.; Pang, Y. Mapping urban tree species using integrated airborne hyperspectral and LiDAR remote sensing data. Remote Sens. Environ. 2017, 200, 170–182.

- Alonzo, M.; Roth, K.; Roberts, D. Identifying Santa Barbara’s urban tree species from AVIRIS imagery using canonical discriminant analysis. Remote Sens. Lett. 2013, 4, 513–521.

- Fang, F.; McNeil, B.E.; Warner, T.A.; Maxwell, A.E.; Dahle, G.A.; Eutsler, E.; Li, J. Discriminating tree species at different taxonomic levels using multi-temporal WorldView-3 imagery in Washington DC, USA. Remote Sens. Environ. 2020, 246, 111811.

- Shouse, M.; Liang, L.; Fei, S. Identification of understory invasive exotic plants with remote sensing in urban forests. Int. J. Appl. Earth Obs. Geoinf. 2013, 21, 525–534.

- Chance, C.M.; Coops, N.C.; Plowright, A.A.; Tooke, T.R.; Christen, A.; Aven, N. Invasive shrub mapping in an urban environment from hyperspectral and LiDAR-derived attributes. Front. Plant Sci. 2016, 7, 1528.

- Mozgeris, G.; Juodkienė, V.; Jonikavičius, D.; Straigytė, L.; Gadal, S.; Ouerghemmi, W. Ultra-light aircraft-based hyperspectral and colour-infrared imaging to identify deciduous tree species in an urban environment. Remote Sens. 2018, 10, 1668.

- Li, D.; Ke, Y.; Gong, H.; Li, X. Object-based urban tree species classification using bi-temporal WorldView-2 and WorldView-3 images. Remote Sens. 2015, 7, 16917–16937.

- Voss, M.; Sugumaran, R. Seasonal effect on tree species classification in an urban environment using hyperspectral data, LiDAR, and an object-oriented approach. Sensors 2008, 8, 3020–3036.

- Puttonen, E.; Litkey, P.; Hyyppä, J. Individual tree species classification by illuminated–shaded area separation. Remote Sens. 2010, 2, 19–35.

- Xu, Z.; Zhou, Y.; Wang, S.; Wang, L.; Li, F.; Wang, S.; Wang, Z. A novel intelligent classification method for urban green space based on high-resolution remote sensing images. Remote Sens. 2020, 12, 3845.

- Pu, R.; Landry, S.; Yu, Q. Object-based urban detailed land cover classification with high spatial resolution IKONOS imagery. Int. J. Remote Sens. 2011, 32, 3285–3308.

- Tooke, T.R.; Coops, N.C.; Goodwin, N.R.; Voogt, J.A. Extracting urban vegetation characteristics using spectral mixture analysis and decision tree classifications. Remote Sens. Environ. 2009, 113, 398–407.

- Le Louarn, M.; Clergeau, P.; Briche, E.; Deschamps-Cottin, M. “Kill Two birds with one stone”: Urban tree species classification using bi-temporal Pléiades images to study nesting preferences of an invasive bird. Remote Sens. 2017, 9, 916.

- Pu, R.; Landry, S.; Yu, Q. Assessing the potential of multi-seasonal high resolution Pléiades satellite imagery for mapping urban tree species. Int. J. Appl. Earth Obs. Geoinf. 2018, 71, 144–158.

- Di, S.; Li, Z.L.; Tang, R.; Pan, X.; Liu, H.; Niu, Y. Urban green space classification and water consumption analysis with remote-sensing technology: A case study in Beijing, China. Int. J. Remote Sens. 2019, 40, 1909–1929.

- Yan, J.; Zhou, W.; Han, L.; Qian, Y. Mapping vegetation functional types in urban areas with WorldView-2 imagery: Integrating object-based classification with phenology. Urban For. Urban Green. 2018, 31, 230–240.

- Zhang, Z.; Kazakova, A.; Moskal, L.M.; Styers, D.M. Object-based tree species classification in urban ecosystems using LiDAR and hyperspectral data. Forests 2016, 7, 122.

- Zhang, C.; Qiu, F. Mapping individual tree species in an urban forest using airborne lidar data and hyperspectral imagery. Photogramm. Eng. Remote Sens. 2012, 78, 1079–1087.

- Abdollahi, A.; Pradhan, B. Urban Vegetation Mapping from Aerial Imagery Using Explainable AI (XAI). Sensors 2021, 21, 4738.

- Martins, G.B.; La Rosa, L.E.C.; Happ, P.N.; Coelho Filho, L.C.T.; Santos, C.J.F.; Feitosa, R.Q.; Ferreira, M.P. Deep learning-based tree species mapping in a highly diverse tropical urban setting. Urban For. Urban Green. 2021, 64, 127241.

- Lobo Torres, D.; Queiroz Feitosa, R.; Nigri Happ, P.; Elena Cue La Rosa, L.; Marcato Junior, J.; Martins, J.; Ola Bressan, P.; Gonçalves, W.N.; Liesenberg, V. Applying fully convolutional architectures for semantic segmentation of a single tree species in urban environment on high resolution UAV optical imagery. Sensors 2020, 20, 563.

- Zhang, C.; Xia, K.; Feng, H.; Yang, Y.; Du, X. Tree species classification using deep learning and RGB optical images obtained by an unmanned aerial vehicle. J. For. Res. 2020, 32, 1879–1888.

- Wang, J.; Banzhaf, E. Derive an understanding of Green Infrastructure for the quality of life in cities by means of integrated RS mapping tools. In Proceedings of the 2017 Joint Urban Remote Sensing Event (JURSE), Dubai, United Arab Emirates, 6–8 March 2017; pp. 1–4.

- Hermosilla, T.; Ruiz, L.A.; Recio, J.A.; Balsa-Barreiro, J. Land-use mapping of Valencia city area from aerial images and LiDAR data. In Proceedings of the GEOProcessing 2012: The Fourth International Conference in Advanced Geographic Information Systems, Applications and Services, Valencia, Spain, 30 January–4 February 2012; pp. 232–237.

- Zhou, J.; Qin, J.; Gao, K.; Leng, H. SVM-based soft classification of urban tree species using very high-spatial resolution remote-sensing imagery. Int. J. Remote Sens. 2016, 37, 2541–2559.

- Mozgeris, G.; Gadal, S.; Jonikavičius, D.; Straigytė, L.; Ouerghemmi, W.; Juodkienė, V. Hyperspectral and color-infrared imaging from ultralight aircraft: Potential to recognize tree species in urban environments. In Proceedings of the 2016 8th Workshop on Hyperspectral Image and Signal Processing: Evolution in Remote Sensing (WHISPERS), Los Angeles, CA, USA, 21–24 August 2016; pp. 1–5.

- Ma, J.; Yu, W.; Chen, C.; Liang, P.; Guo, X.; Jiang, J. Pan-GAN: An unsupervised pan-sharpening method for remote sensing image fusion. Inf. Fusion 2020, 62, 110–120.

- Persson, Å.; Holmgren, J.; Söderman, U.; Olsson, H. Tree species classification of individual trees in Sweden by combining high resolution laser data with high resolution near-infrared digital images. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2004, 36, 204–207.

- Li, J.; Hu, B.; Noland, T.L. Classification of tree species based on structural features derived from high density LiDAR data. Agric. For. Meteorol. 2013, 171, 104–114.

- Burr, A.; Schaeg, N.; Hall, D.M. Assessing residential front yards using Google Street View and geospatial video: A virtual survey approach for urban pollinator conservation. Appl. Geogr. 2018, 92, 12–20.

- Richards, D.R.; Edwards, P.J. Quantifying street tree regulating ecosystem services using Google Street View. Ecol. Indic. 2017, 77, 31–40.

- Seiferling, I.; Naik, N.; Ratti, C.; Proulx, R. Green streets–Quantifying and mapping urban trees with street-level imagery and computer vision. Landsc. Urban Plan. 2017, 165, 93–101.

- Berland, A.; Lange, D.A. Google Street View shows promise for virtual street tree surveys. Urban For. Urban Green. 2017, 21, 11–15.

- Berland, A.; Roman, L.A.; Vogt, J. Can field crews telecommute? Varied data quality from citizen science tree inventories conducted using street-level imagery. Forests 2019, 10, 349.

- Branson, S.; Wegner, J.D.; Hall, D.; Lang, N.; Schindler, K.; Perona, P. From Google Maps to a fine-grained catalog of street trees. ISPRS J. Photogramm. Remote Sens. 2018, 135, 13–30.

- Puttonen, E.; Jaakkola, A.; Litkey, P.; Hyyppä, J. Tree classification with fused mobile laser scanning and hyperspectral data. Sensors 2011, 11, 5158–5182.

- Chen, Y.; Wang, S.; Li, J.; Ma, L.; Wu, R.; Luo, Z.; Wang, C. Rapid urban roadside tree inventory using a mobile laser scanning system. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2019, 12, 3690–3700.

- Wu, J.; Yao, W.; Polewski, P. Mapping individual tree species and vitality along urban road corridors with LiDAR and imaging sensors: Point density versus view perspective. Remote Sens. 2018, 10, 1403.

- Mokroš, M.; Liang, X.; Surový, P.; Valent, P.; Čerňava, J.; Chudý, F.; Tunák, D.; Saloň, Š.; Merganič, J. Evaluation of close-range photogrammetry image collection methods for estimating tree diameters. ISPRS Int. J. Geo-Inf. 2018, 7, 93.

- Balsa-Barreiro, J.; Fritsch, D. Generation of visually aesthetic and detailed 3D models of historical cities by using laser scanning and digital photogrammetry. Digit. Appl. Archaeol. Cult. Herit. 2018, 8, 57–64.

- Kwong, I.H.; Fung, T. Tree height mapping and crown delineation using LiDAR, large format aerial photographs, and unmanned aerial vehicle photogrammetry in subtropical urban forest. Int. J. Remote Sens. 2020, 41, 5228–5256.

- Ghanbari Parmehr, E.; Amati, M. Individual Tree Canopy Parameters Estimation Using UAV-Based Photogrammetric and LiDAR Point Clouds in an Urban Park. Remote Sens. 2021, 13, 2062.

- Hill, R.; Wilson, A.; George, M.; Hinsley, S. Mapping tree species in temperate deciduous woodland using time-series multi-spectral data. Appl. Veg. Sci. 2010, 13, 86–99.

- Polgar, C.A.; Primack, R.B. Leaf-out phenology of temperate woody plants: From trees to ecosystems. New Phytol. 2011, 191, 926–941.

- Abbas, S.; Peng, Q.; Wong, M.S.; Li, Z.; Wang, J.; Ng, K.T.K.; Kwok, C.Y.T.; Hui, K.K.W. Characterizing and classifying urban tree species using bi-monthly terrestrial hyperspectral images in Hong Kong. ISPRS J. Photogramm. Remote Sens. 2021, 177, 204–216.

- Feng, Q.; Liu, J.; Gong, J. UAV remote sensing for urban vegetation mapping using random forest and texture analysis. Remote Sens. 2015, 7, 1074–1094.

- Wen, D.; Huang, X.; Liu, H.; Liao, W.; Zhang, L. Semantic classification of urban trees using very high resolution satellite imagery. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2017, 10, 1413–1424.

- Haralick, R.M.; Shanmugam, K.; Dinstein, I.H. Textural features for image classification. IEEE Trans. Syst. Man Cybern. 1973, SMC-3, 610–621.

- Shojanoori, R.; Shafri, H.Z.; Mansor, S.; Ismail, M.H. The use of WorldView-2 satellite data in urban tree species mapping by object-based image analysis technique. Sains Malays. 2016, 45, 1025–1034.

- Clark, M.L.; Roberts, D.A.; Clark, D.B. Hyperspectral discrimination of tropical rain forest tree species at leaf to crown scales. Remote Sens. Environ. 2005, 96, 375–398.

- Youjing, Z.; Hengtong, F. Identification scales for urban vegetation classification using high spatial resolution satellite data. In Proceedings of the 2007 IEEE International Geoscience and Remote Sensing Symposium, Barcelona, Spain, 23–27 July 2007; pp. 1472–1475.

- Li, C.; Yin, J.; Zhao, J. Extraction of urban vegetation from high resolution remote sensing image. In Proceedings of the 2010 International Conference On Computer Design and Applications, Qinhuangdao, China, 25–27 June 2010; Volume 4, pp. V4–V403.

- Wegner, J.D.; Branson, S.; Hall, D.; Schindler, K.; Perona, P. Cataloging public objects using aerial and street-level images-urban trees. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 6014–6023.

- Huang, G.; Liu, Z.; Van Der Maaten, L.; Weinberger, K.Q. Densely connected convolutional networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 4700–4708.

- Shi, D.; Yang, X. Mapping vegetation and land cover in a large urban area using a multiple classifier system. Int. J. Remote Sens. 2017, 38, 4700–4721.

- Degerickx, J.; Hermy, M.; Somers, B. Mapping functional urban green types using hyperspectral remote sensing. In Proceedings of the 2017 Joint Urban Remote Sensing Event (JURSE), Dubai, United Arab Emirates, 6–8 March 2017; pp. 1–4.

- Pontius, J.; Hanavan, R.P.; Hallett, R.A.; Cook, B.D.; Corp, L.A. High spatial resolution spectral unmixing for mapping ash species across a complex urban environment. Remote Sens. Environ. 2017, 199, 360–369.

- Guan, H.; Yu, Y.; Ji, Z.; Li, J.; Zhang, Q. Deep learning-based tree classification using mobile LiDAR data. Remote Sens. Lett. 2015, 6, 864–873.

- Blanusa, T.; Garratt, M.; Cathcart-James, M.; Hunt, L.; Cameron, R.W. Urban hedges: A review of plant species and cultivars for ecosystem service delivery in north-west Europe. Urban For. Urban Green. 2019, 44, 126391.

- Klaus, V.H. Urban grassland restoration: A neglected opportunity for biodiversity conservation. Restor. Ecol. 2013, 21, 665–669.

- Haase, D.; Jänicke, C.; Wellmann, T. Front and back yard green analysis with subpixel vegetation fractions from earth observation data in a city. Landsc. Urban Plan. 2019, 182, 44–54.

- Cameron, R.W.; Blanuša, T.; Taylor, J.E.; Salisbury, A.; Halstead, A.J.; Henricot, B.; Thompson, K. The domestic garden–Its contribution to urban green infrastructure. Urban For. Urban Green. 2012, 11, 129–137.

- Crawford, M.M.; Tuia, D.; Yang, H.L. Active learning: Any value for classification of remotely sensed data? Proc. IEEE 2013, 101, 593–608.

- Chatterjee, A.; Saha, J.; Mukherjee, J.; Aikat, S.; Misra, A. Unsupervised land cover classification of hybrid and dual-polarized images using deep convolutional neural network. IEEE Geosci. Remote Sens. Lett. 2020, 18, 969–973.

- Fang, B.; Li, Y.; Zhang, H.; Chan, J.C.W. Collaborative learning of lightweight convolutional neural network and deep clustering for hyperspectral image semi-supervised classification with limited training samples. ISPRS J. Photogramm. Remote Sens. 2020, 161, 164–178.

- Sainte Fare Garnot, V.; Landrieu, L.; Giordano, S.; Chehata, N. Satellite Image Time Series Classification with Pixel-Set Encoders and Temporal Self-Attention. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 14–19 June 2020.

| Term | Explanation |
|---|---|
| Functional vegetation type | Also called plant functional type (PFT). A general term that groups plants according to their function in ecosystems and their use of resources. The term has gained popularity among researchers studying the interaction between vegetation and climate change [27]. |
| Green infrastructure | Defined by the European Commission as “a strategically planned network of natural and semi-natural areas with other environmental features designed and managed to deliver a wide range of ecosystem services such as water purification, air quality...” [28]. The term is mostly used in the context of climate studies (e.g., [12]) and urban planning. |
| Green space | Green space is defined differently across disciplines. Taylor and Hochuli [29] identify two broad interpretations: (a) as a synonym for nature or (b) explicitly as urban vegetation. In this review, the term is used broadly for vegetated urban areas. |
| Urban green element | An assemblage of individual plants that together provide similar functions and services [30]. |
| Vegetation life form | Similarities in the structure and function of plant species allow them to be grouped into life forms. A life form generally displays an obvious relationship with important environmental factors, although many different interpretations exist [31]. |
| Vegetation species | Plants are taxonomically divided into families, genera, species, varieties, etc. For the mapping of trees, researchers often focus on the taxonomic level of the species. |
| Vegetation type | Vegetation types can be defined at different levels, depending mainly on the set of characteristics used for discrimination. A proper scheme of vegetation types allows decision-makers and land managers to develop and apply appropriate land management practices [32]. In urban vegetation mapping, the term often indicates a broader distinction between plants that share morphological or spectral similarities. The level of detail depends on the context of the study. |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Neyns, R.; Canters, F. Mapping of Urban Vegetation with High-Resolution Remote Sensing: A Review. Remote Sens. 2022, 14, 1031. https://doi.org/10.3390/rs14041031