1. Introduction

In recent years, with the launch of various hyperspectral satellites [1], hyperspectral images (HSIs) have been widely used in many applications, such as coastal wetland mapping and species classification of mangrove forests. HSIs with detailed spectral information are particularly important for land-cover analysis in coastal environmental monitoring, disaster monitoring, precision agriculture, forestry surveying and urban planning [1], because their high spectral resolution supports better qualitative and quantitative analysis of geographic entities. However, limited by the sensitivity of photoelectric sensors and by transmission capability, the spatial resolution of HSIs is insufficient for some applications [2], such as the monitoring of air pollution [3], land and sea surface temperatures [4,5], heavy metals in soil and vegetation [6], water quality [7], land cover [8,9] and lithological mapping [10]. With the development of accurate remote sensing applications, the demand for images with both high spatial and high spectral resolution has increased.

The fusion of HSIs with high spatial resolution images is an excellent solution to obtain images with both high spectral and spatial resolution [

11]. Image fusion can break through the mutual restriction of spatial and spectral resolution [

12], integrate the advantages of HSIs and high spatial resolution images, and obtain HSIs with high spatial resolution [

13,

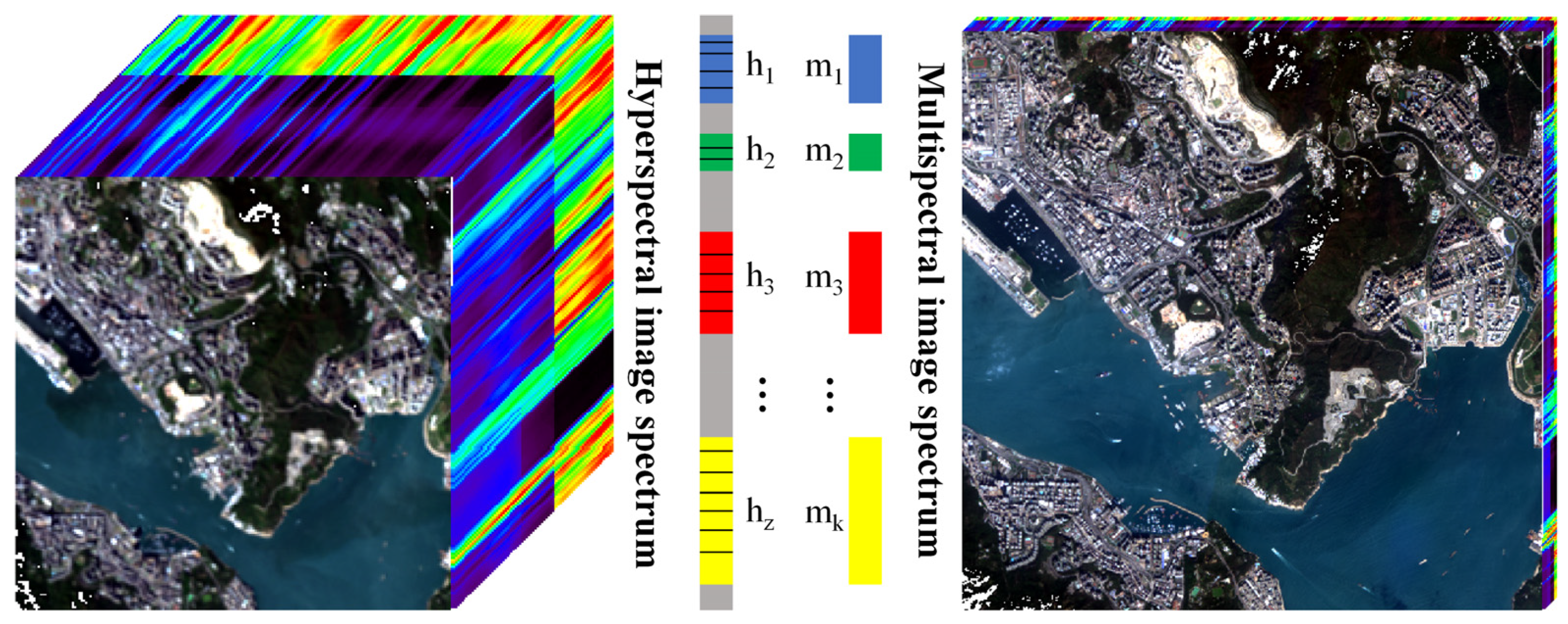

14]. Integrating the complementary advantages of HSI, MSI and PAN images in the same area through image fusion technology will greatly improve the application potential and prospects of all three. According to different combinations of input data source, HSI fusion strategies can be divided into three categories: (1) HSI+MSI, (2) HSI+PAN and (3) HSI+MSI+PAN. Currently, most of the HSI fusion studies have focused on the first two categories, and few studies have focused on the last category.

Since pan-sharpening can be considered a special case of the HSI–MSI fusion problem, spectral grouping strategies have been proposed to generalize existing pan-sharpening methods to the more challenging HSI–MSI fusion. Specifically, the HSI–MSI fusion framework proposed in [15] divided the HSI into multiple groups of bands according to spectrum and fused each group with the corresponding MSI channel. A similar idea was proposed in [2], in which HSI–MSI fusion was automatically decomposed into multiple groups of weighted pan-sharpening problems. Selva et al. also proposed a framework called hyper-sharpening, which effectively applied multiresolution analysis (MRA)-based pan-sharpening methods to HSI–MSI fusion [16]. Another approach to HSI–MSI fusion exploits the inherent spectral characteristics of the scene through a subspace [12,14,17,18]. One of the first such methods was a Bayesian method based on maximum a posteriori (MAP) estimation, which used a stochastic mixing model (SMM) to estimate the underlying spectral scene [12]. Spectral unmixing is another popular approach for fusing HSI and MSI, and several unmixing-based methods have been proposed [19,20,21]. Unmixing-based fusion obtains endmember information from the HSI and high-resolution abundance matrices from the MSI, and the fused image is reconstructed by multiplying the two resulting matrices.
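The reconstruction step of unmixing-based fusion reduces to a single matrix product. The NumPy sketch below uses random matrices as hypothetical stand-ins. `E` plays the role of the endmember matrix (bands × endmembers), which in practice would be estimated from the HSI. `A` plays the role of the high-resolution abundance matrix (endmembers × pixels), which in practice would be estimated from the MSI. The estimation itself is not shown, and the sizes are illustrative assumptions.

```python
import numpy as np

rng = np.random.default_rng(0)

n_bands, n_end = 50, 4   # hypothetical: 50 HSI bands, 4 endmembers
H, W = 12, 12            # hypothetical high-resolution (MSI-scale) grid

# Endmember spectra (bands x endmembers); stand-in for spectra unmixed from the HSI.
E = rng.uniform(0.0, 1.0, size=(n_bands, n_end))

# High-resolution abundances (endmembers x pixels); stand-in for MSI-derived
# abundances, made non-negative and sum-to-one for every pixel.
A = rng.uniform(0.0, 1.0, size=(n_end, H * W))
A /= A.sum(axis=0, keepdims=True)

# Fused HSI: each high-resolution pixel spectrum is a mixture of the endmembers.
fused = (E @ A).reshape(n_bands, H, W)
print(fused.shape)  # (50, 12, 12)
```

Because the model is linear, averaging the fused pixels is equivalent to mixing the averaged abundances. This is why the fused result stays consistent with a coarser observation of the same scene when the degradation is a simple spatial average.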

HSI–MSI fusion can only yield HSIs with the same spatial resolution as the MSIs. To obtain HSIs with higher spatial resolution, several HSI–PAN fusion methods have been proposed [22,23,24,25]. However, most existing HSI–PAN fusion methods focused on increasing the spatial resolution by a factor of two to five. In [22,24,25], the spatial resolution ratios between the HSIs and PAN images were three and five, and in [23], the ratio was three. In practical applications, HSI fusion problems with spatial resolution ratios of 10 or more are challenging [26].

Considering the limitations of HSI–MSI and HSI–PAN fusion, HSI–MSI–PAN fusion is a potential solution. In the integrated fusion framework proposed by Meng et al., the spatial (high-frequency) and spectral (low-frequency) components of multi-sensor images were separated using modulation transfer function (MTF) filtering and then fused with automatically estimated fusion weights [27]. Shen et al. also proposed an integrated method for fusing remote sensing images at multiple temporal, spatial and spectral scales. The method was based on the MAP framework [28], and its efficacy was validated only with simulated images. Moreover, HSI–MSI–PAN fusion is theoretically complex, and few reliable methods validated with real data are currently available. How to fuse HSI, MSI and PAN images simply and effectively therefore remains an urgent problem.
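The split into low-frequency (spectral) and high-frequency (spatial) components can be illustrated with a Gaussian low-pass filter, a common approximation of a sensor MTF. This is a generic sketch and not the algorithm of Meng et al. [27]. The Gaussian width `sigma` is a hypothetical parameter that would, in practice, be matched to the sensor's MTF. The helper names are illustrative.

```python
import numpy as np

def gaussian_kernel(sigma: float) -> np.ndarray:
    """Normalised 1-D Gaussian kernel truncated at about +/- 3 sigma."""
    radius = max(1, int(np.ceil(3 * sigma)))
    x = np.arange(-radius, radius + 1, dtype=float)
    k = np.exp(-0.5 * (x / sigma) ** 2)
    return k / k.sum()

def lowpass(img: np.ndarray, sigma: float) -> np.ndarray:
    """Separable Gaussian low-pass filter with reflected borders (output has input shape)."""
    k = gaussian_kernel(sigma)
    r = len(k) // 2
    padded = np.pad(img, r, mode="reflect")

    def conv(v: np.ndarray) -> np.ndarray:
        # 'valid' convolution trims exactly the r padded samples on each side.
        return np.convolve(v, k, mode="valid")

    rows = np.apply_along_axis(conv, 1, padded)  # filter along x
    return np.apply_along_axis(conv, 0, rows)    # filter along y

def split_frequencies(img: np.ndarray, sigma: float = 1.5):
    """Return (low, high); low + high reproduces img by construction."""
    low = lowpass(img, sigma)
    return low, img - low

rng = np.random.default_rng(0)
img = rng.random((32, 32))
low, high = split_frequencies(img)
```

Because the kernel is normalised, a constant image passes through the low-pass unchanged. All of its content therefore lands in the spectral (low-frequency) component.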

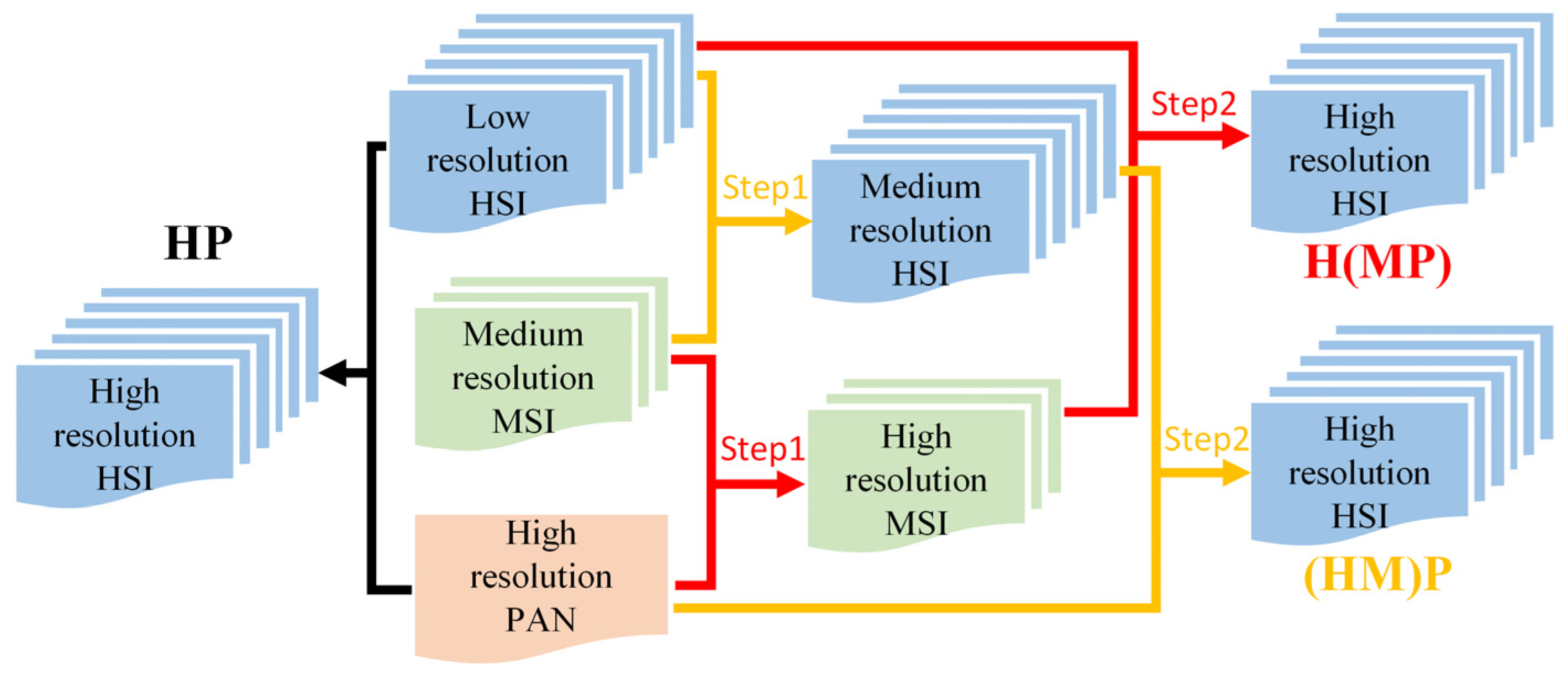

To the best of our knowledge, existing studies on fusing HSI, MSI and PAN images have generally adopted an integrated fusion strategy [27,28] and have not explicitly proposed the concept of stepwise fusion. Since the spectral grouping strategy has been successfully applied in the literature [2,15,16] and many MSI–PAN fusion algorithms have been proposed, a question arises: can HSI–MSI–PAN fusion be theoretically simplified into several groups of sequential HSI–PAN fusion problems?
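The core of a spectral grouping strategy is assigning each HSI band to the MSI band whose spectral range covers it. The sketch below assigns bands by centre wavelength. The MSI band edges are assumed nominal GF-1 PMS values, and the HSI sampling (5 nm from 400 to 1000 nm) is hypothetical. Neither is taken from this section, and the grouping rule actually used in the study may differ.

```python
# Assumed GF-1 PMS multispectral band edges in nm, as half-open [lo, hi) intervals.
MSI_BANDS = {
    "blue": (450, 520),
    "green": (520, 590),
    "red": (630, 690),
    "nir": (770, 890),
}

def group_bands(centers, msi_bands=MSI_BANDS):
    """Assign each HSI band (by centre wavelength) to the MSI band covering it.

    Returns (groups, uncovered): groups maps MSI band name -> list of HSI band
    indices; uncovered lists HSI band indices outside every MSI band."""
    groups = {name: [] for name in msi_bands}
    uncovered = []
    for i, c in enumerate(centers):
        for name, (lo, hi) in msi_bands.items():
            if lo <= c < hi:
                groups[name].append(i)
                break
        else:  # no MSI band covers this centre wavelength
            uncovered.append(i)
    return groups, uncovered

# Hypothetical HSI sampled every 5 nm from 400 to 1000 nm (121 bands).
centers = [400 + 5 * i for i in range(121)]
groups, uncovered = group_bands(centers)
print({k: len(v) for k, v in groups.items()}, len(uncovered))
# {'blue': 14, 'green': 14, 'red': 12, 'nir': 24} 57
```

Each resulting group can then be treated as a small pan-sharpening problem, with its MSI band playing the role of the PAN image.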

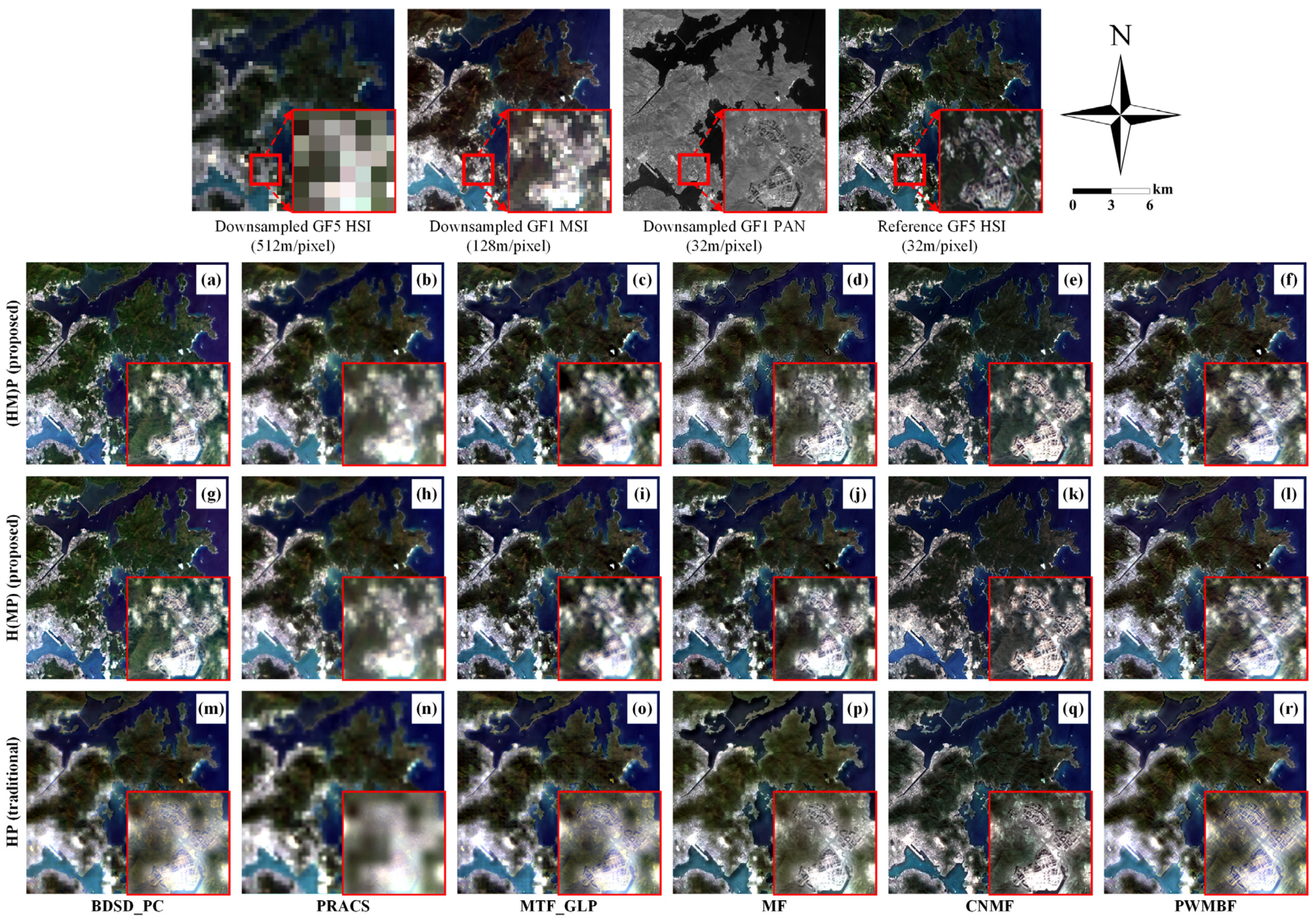

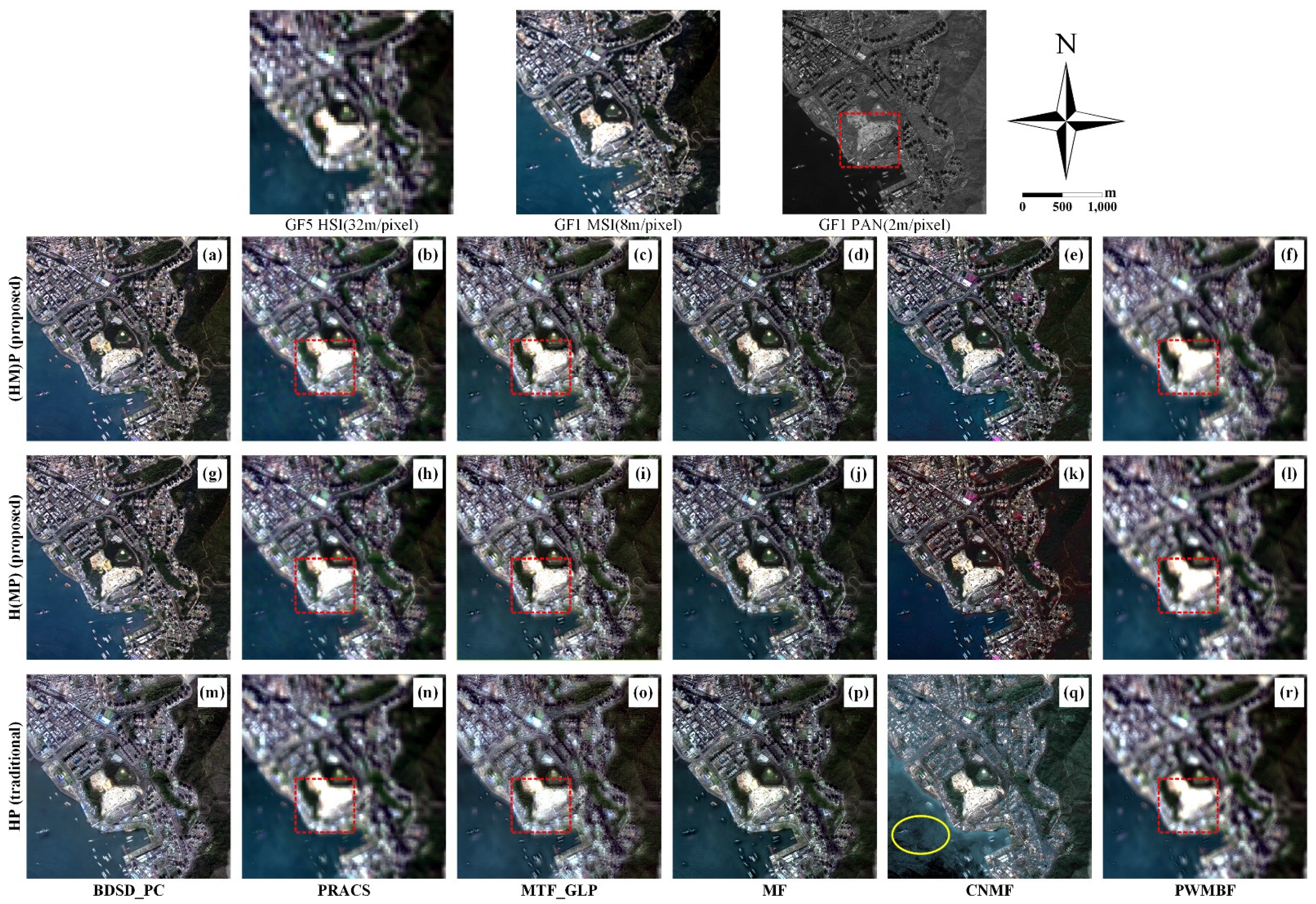

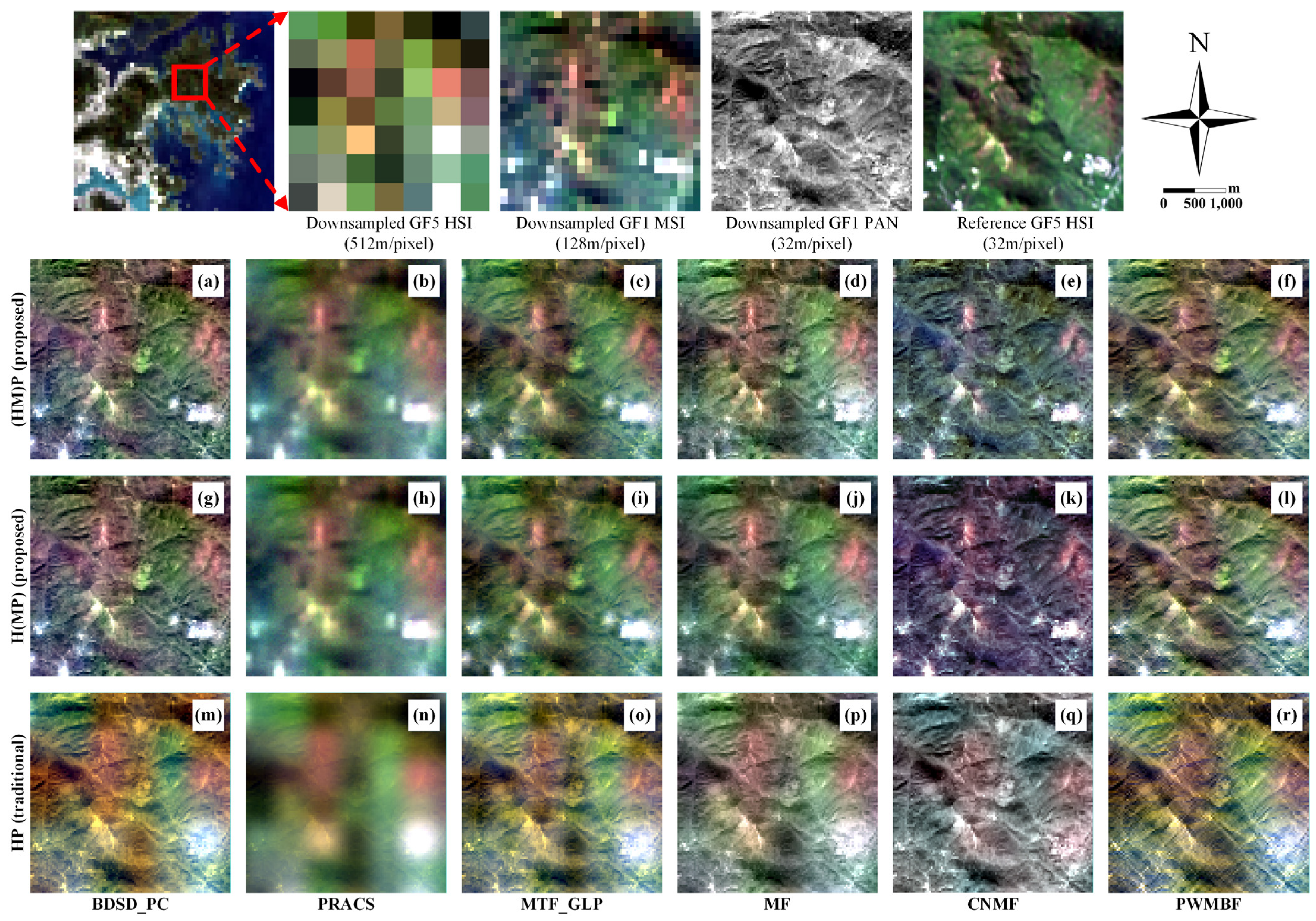

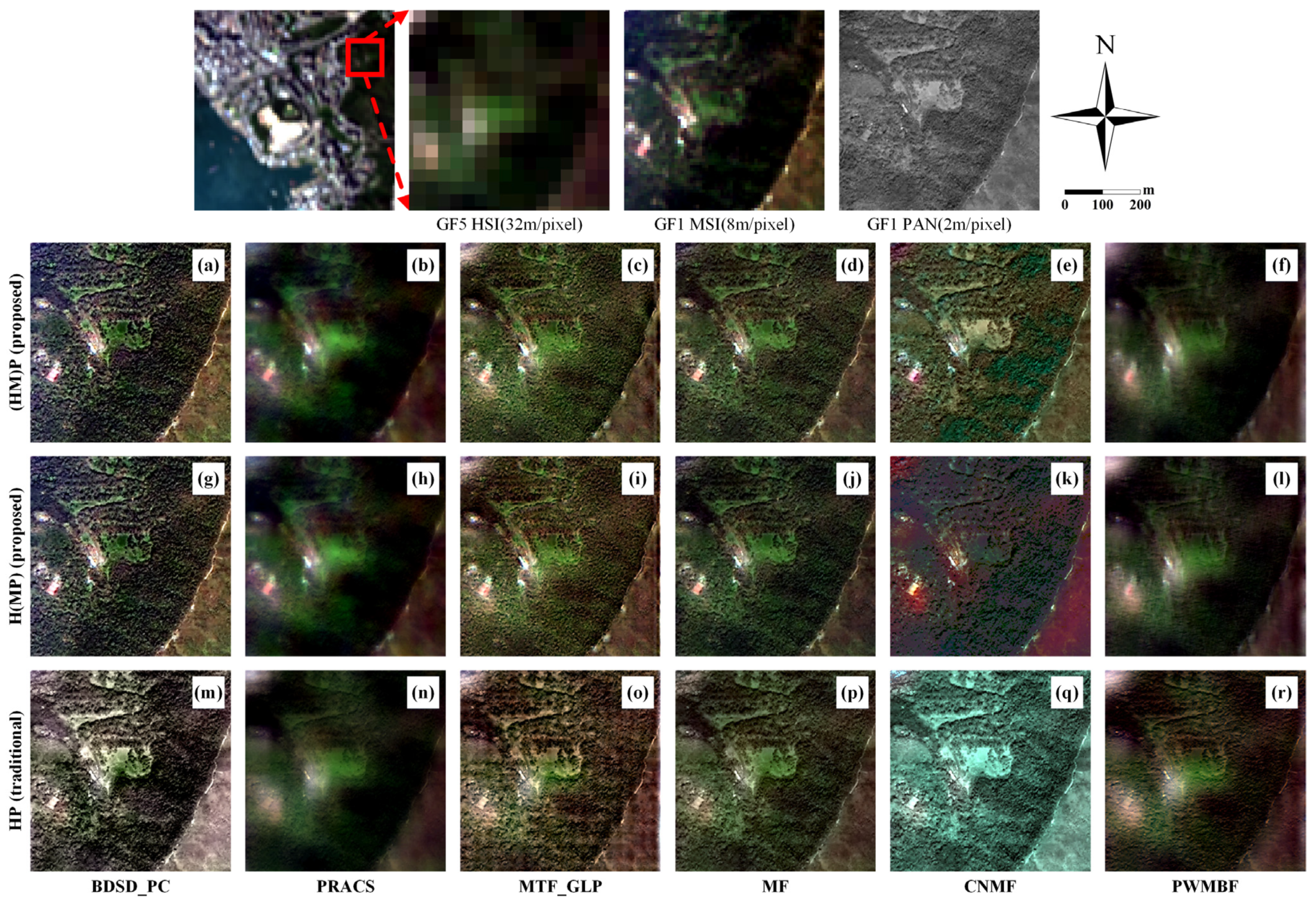

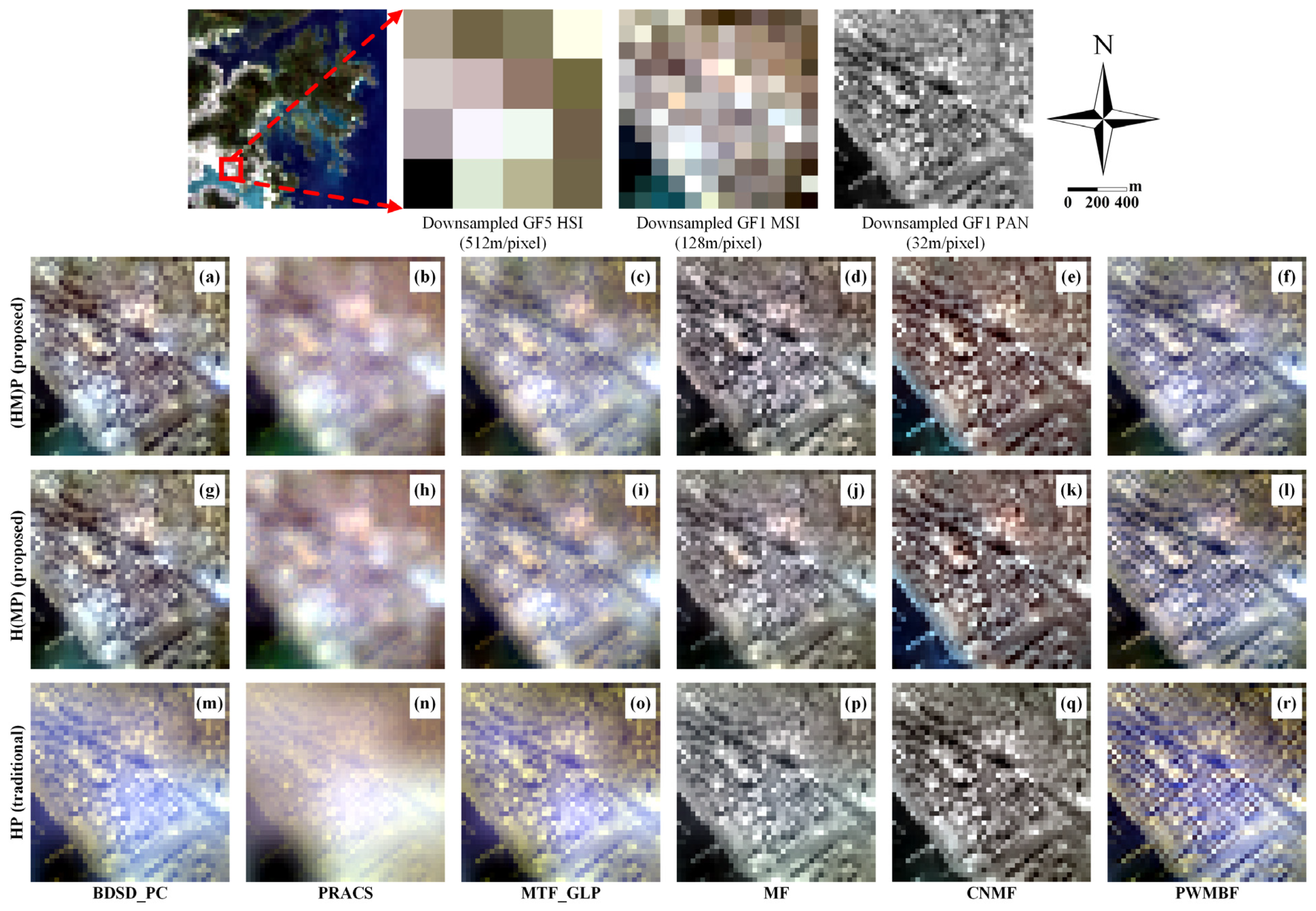

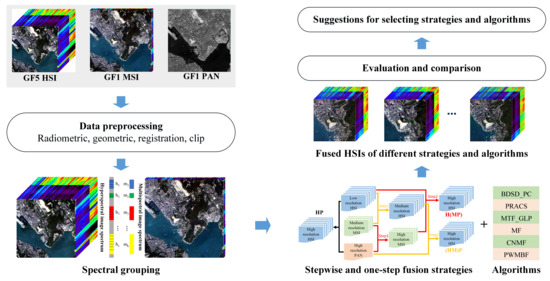

Therefore, the aim of this study is to explore the effectiveness of fusing HSI, MSI and PAN images using stepwise and spectral grouping strategies with existing pan-sharpening algorithms, and to compare the performance of different algorithms. Taking HSIs from Gaofen-5 (GF-5) and MSIs and PAN images from the Gaofen-1 (GF-1) PMS sensor as a case study, we propose and evaluate an easy-to-implement stepwise and spectral grouping approach for fusing HSI, MSI and PAN images. With this strategy, the fusion of HSI, MSI and PAN images is decomposed into a set of MSI–PAN fusion problems, and six state-of-the-art pan-sharpening algorithms are evaluated and compared within this framework.
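The stepwise idea can be sketched as a composition of one reusable pan-sharpening step. The sketch reads the strategy names used in Section 5 as fusion orders, which is our interpretation:

- (HM)P: fuse the HSI with the MSI, then fuse the result with PAN.
- H(MP): pan-sharpen the MSI first, then fuse the HSI with it.
- HP: fuse the HSI directly with PAN.

`sharpen` is a hypothetical placeholder (simple high-pass detail injection) for any of the compared algorithms. Integer resolution ratios, block-average degradation, and a band grouping that covers every HSI band are all simplifying assumptions.

```python
import numpy as np

def upsample(band: np.ndarray, f: int) -> np.ndarray:
    """Nearest-neighbour upsampling of a 2-D band by an integer factor f."""
    return np.kron(band, np.ones((f, f)))

def degrade(band: np.ndarray, f: int) -> np.ndarray:
    """Block-average a 2-D band by an integer factor f (crude sensor model)."""
    h, w = band.shape
    return band.reshape(h // f, f, w // f, f).mean(axis=(1, 3))

def sharpen(low_bands: np.ndarray, high_band: np.ndarray, f: int) -> np.ndarray:
    """Placeholder pan-sharpening step: inject the high-frequency detail of
    high_band into each upsampled low-resolution band."""
    detail = high_band - upsample(degrade(high_band, f), f)
    return np.stack([upsample(b, f) + detail for b in low_bands])

def check_groups(groups: dict, n_bands: int) -> None:
    """Require every HSI band to belong to exactly one MSI band group."""
    assigned = sorted(i for idx in groups.values() for i in idx)
    if assigned != list(range(n_bands)):
        raise ValueError("each HSI band must be assigned to exactly one MSI band")

def fuse_hm_p(hsi, msi, pan, groups, f_hm, f_mp):
    """(HM)P: sharpen each HSI group with its MSI band, then sharpen with PAN."""
    check_groups(groups, hsi.shape[0])
    hm = np.zeros((hsi.shape[0],) + msi.shape[1:])
    for m, idx in groups.items():
        hm[idx] = sharpen(hsi[idx], msi[m], f_hm)
    return sharpen(hm, pan, f_mp)

def fuse_h_mp(hsi, msi, pan, groups, f_hm, f_mp):
    """H(MP): pan-sharpen the MSI, then sharpen each HSI group with its
    pan-sharpened MSI band."""
    check_groups(groups, hsi.shape[0])
    mp = sharpen(msi, pan, f_mp)
    out = np.zeros((hsi.shape[0],) + pan.shape)
    for m, idx in groups.items():
        out[idx] = sharpen(hsi[idx], mp[m], f_hm * f_mp)
    return out

def fuse_hp(hsi, pan, f_hp):
    """HP: one-step sharpening of all HSI bands with the PAN image."""
    return sharpen(hsi, pan, f_hp)

# Hypothetical sizes: HSI 4x4, MSI 16x16, PAN 64x64 (ratios of 4 per step).
rng = np.random.default_rng(1)
hsi = rng.random((6, 4, 4))
msi = rng.random((2, 16, 16))
pan = rng.random((64, 64))
groups = {0: [0, 1, 2], 1: [3, 4, 5]}  # hypothetical HSI-to-MSI band grouping
a = fuse_hm_p(hsi, msi, pan, groups, 4, 4)
b = fuse_h_mp(hsi, msi, pan, groups, 4, 4)
c = fuse_hp(hsi, pan, 16)
```

Keeping the fusion step as a swappable function mirrors the point that the stepwise strategy is independent of the particular MSI–PAN algorithm. With this placeholder, degrading any of the three outputs back to the HSI grid recovers the input HSI exactly.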

The rest of this paper is organized as follows: In Section 2, the study area and image data are introduced. The stepwise and spectral grouping approach, as well as the compared MSI–PAN fusion algorithms, are described in Section 3. In Section 4, we compare the performance of different fusion strategies and algorithms for different image types. Some important issues are discussed in Section 5, and the final conclusions are drawn in Section 6.

5. Discussion

5.1. Comparison of Strategies (HM)P, H(MP) and HP

Spectral fidelity: In general, the proposed strategies (HM)P and H(MP) achieved better spectral fidelity than strategy HP. This is because the MSI acted as a bridge between the HSI and the PAN image, which enabled better integration of spectral information. This result also illustrates the effectiveness of the stepwise and spectral grouping approach.
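One way to make the "bridge" role of the MSI concrete is to compare the spatial resolution ratio that each fusion step must handle. The ground sampling distances below are assumed nominal values (30 m for the GF-5 HSI, and 8 m and 2 m for the GF-1 PMS MSI and PAN image). They are not stated in this section.

```python
# Assumed nominal ground sampling distances in metres (not stated in this section).
GSD_M = {"HSI": 30.0, "MSI": 8.0, "PAN": 2.0}

def ratio(coarse: str, fine: str) -> float:
    """Spatial resolution ratio that a single fusion step must bridge."""
    return GSD_M[coarse] / GSD_M[fine]

one_step = ratio("HSI", "PAN")                         # strategy HP
stepwise = (ratio("HSI", "MSI"), ratio("MSI", "PAN"))  # two steps via the MSI
print(one_step, stepwise)  # 15.0 (3.75, 4.0)
```

Under these assumptions, the one-step ratio exceeds the factor of 10 that is considered challenging [26]. Each stepwise step instead stays within the range that most existing methods target, while the product of the two step ratios still equals the overall ratio.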

Spatial fidelity: In terms of spatial fidelity, the stepwise strategies were not always better than the traditional strategy, and in some cases they were slightly worse than HP. Nevertheless, taking all the experimental results together, the stepwise strategies still performed better overall than the traditional one.

Computational efficiency: In most circumstances, strategy HP was more efficient than strategies (HM)P and H(MP), but the difference was small. The extra cost is probably because (HM)P and H(MP) fuse one more image than HP. However, when CS-based algorithms were used, we found that H(MP) was more efficient than both HP and (HM)P, indicating that the stepwise fusion strategy may reduce the time complexity of CS-based methods. In most cases, strategy H(MP) was also more efficient than strategy (HM)P.

Summary: Considering spectral and spatial fidelity together, we found that the stepwise approaches performed better than the traditional one. Moreover, the stepwise fusion approach did not significantly increase the time complexity compared with the traditional strategy, and strategy H(MP) reduced the time complexity compared with HP when CS-based algorithms (BDSD_PC and PRACS) were used. The experimental results also show that the two stepwise approaches produced comparable results for most algorithms and images. Therefore, we suggest fusing HSI, MSI and PAN images using the stepwise and spectral grouping strategies to obtain better results.

5.2. Comparison of Fusion Algorithms Using Stepwise and Spectral Grouping Strategy

To compare the different algorithms more concisely, we qualitatively classified their performance into three levels: Good (G), Acceptable (A) and Poor (P). In this section, the results presented in Section 4 are collected and graded as G, A or P. As discussed in Section 5.1, the proposed stepwise strategies outperformed the traditional HP strategy overall in spectral and spatial fidelity; therefore, the HP strategy is not considered in this section. Because strategies (HM)P and H(MP) have comparable performance, their results are counted only once. The grades for spectral and spatial fidelity of the different algorithms over different images and scenes are presented in Table 16. In addition, to evaluate the overall performance of each algorithm, its worst score over the different scenes and images was used, as presented in the last row of the table.
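The "worst score" aggregation used for the last row of Table 16 amounts to taking a minimum over an ordered scale. The sketch below encodes the three levels as an ordered enum. The example scores are hypothetical and are not values from Table 16.

```python
from enum import IntEnum

class Score(IntEnum):
    """Qualitative levels, ordered so that a larger value is better."""
    P = 0  # Poor
    A = 1  # Acceptable
    G = 2  # Good

def overall(scores) -> Score:
    """Overall level of an algorithm: its worst level over all scenes and images."""
    return min(scores)

# Hypothetical scores for one algorithm (NOT values from Table 16).
example = {
    ("scene 1", "simulated"): Score.G,
    ("scene 1", "real"): Score.A,
    ("scene 2", "real"): Score.G,
}
print(overall(example.values()).name)  # A
```

Taking the minimum is deliberately conservative. A single poor result in any scene or image caps the overall grade, which is why algorithms with one obvious defect end up with P.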

Spectral and spatial fidelity: Table 16 shows that the MF algorithm performed well in terms of spectral and spatial fidelity for different images and scenes. The MTF_GLP algorithms performed slightly worse than MF for some areas and images. However, the worst scores of the MTF_GLP methods were A, so their final scores were A. The other four algorithms had obvious defects in spectral or spatial fidelity, which led to poor scores and, ultimately, to final scores of P.

Computational efficiency: As shown in Section 4.2, the computational efficiency of the algorithms for simulated data fusion is ranked as PWMBF > MF > MTF_GLP > BDSD_PC > CNMF > PRACS, and for real data fusion as PWMBF > MF > BDSD_PC > MTF_GLP > CNMF > PRACS. The computational efficiency of the six algorithms can therefore also be divided into three levels, as shown in Table 17: MF and PWMBF are good, BDSD_PC and MTF_GLP are acceptable, and PRACS and CNMF are poor. In terms of algorithm types, the CS-based algorithms generally have the lowest computational efficiency, while the MRA-based and subspace-based algorithms are more efficient and comparable to each other.
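Although the two efficiency rankings differ in the order of MTF_GLP and BDSD_PC, both yield the same three levels. The sketch below checks this by splitting each best-to-worst ranking into equal thirds. The equal-thirds rule is our assumption about how the levels were formed; it reproduces the grouping stated for Table 17.

```python
# Best-to-worst efficiency rankings as reported in Section 4.2.
SIMULATED = ["PWMBF", "MF", "MTF_GLP", "BDSD_PC", "CNMF", "PRACS"]
REAL = ["PWMBF", "MF", "BDSD_PC", "MTF_GLP", "CNMF", "PRACS"]
LEVELS = ["Good", "Acceptable", "Poor"]

def tiers(ranking):
    """Split a best-to-worst ranking into equal-sized levels (assumed rule)."""
    size = len(ranking) // len(LEVELS)
    return {alg: LEVELS[i // size] for i, alg in enumerate(ranking)}

print(tiers(SIMULATED) == tiers(REAL))  # True
```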

It should be noted that this comparison of algorithms differs from those in some other studies. We aim to evaluate the performance of these algorithms in fusing HSI, MSI and PAN images, whereas other comparative analyses generally focus on their performance in fusing two images [11,36]. Performance in fusing images with contrasting spectral and spatial resolutions has rarely been considered in previous studies [26].

Overall, considering the spectral fidelity, spatial fidelity and computational efficiency of the algorithms, we recommend the MF algorithm for fusing HSI, MSI and PAN images with the stepwise and spectral grouping strategy. In some cases, the MTF_GLP algorithms are potential candidates.

5.3. Issues to Be Further Investigated

Several aspects of the approach in this study require further investigation:

(1) According to the spectral correspondence, only 77 GF-5 HSI bands overlap spectrally with the GF-1 MSI. To improve the spatial resolution of the HSI channels not covered by the MSI spectrum, ratio image-based spectral resampling (RIBSR) [15] might be a solution.

(2) The stepwise fusion approach is prone to error accumulation, including spatial distortions and spectral errors. To limit spatial errors, high-precision registration of the multi-source images is necessary during data preprocessing. For spectral distortions, quantitative evaluation indices between the fused image and the original HSI can be calculated after stepwise fusion to quantify the distortions, and an error compensation mechanism can be used to eliminate the error [27].

(3) This study is also instructive for sensor design, specifically, the design of an imaging system that integrates panchromatic, multispectral and hyperspectral sensors. However, for given spatial resolutions of the hyperspectral and panchromatic images, the optimal spatial resolution of the MSI that maximizes the quality of the fused images remains to be investigated.
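Item (2) suggests computing quantitative indices between the fused image and the original HSI after stepwise fusion. A minimal sketch of one common index, the spectral angle mapper (SAM), is shown below. It assumes the fused cube is first degraded to the HSI grid by block averaging, which is a simplified sensor model. The cubes are hypothetical, and SAM is only one of several indices that could serve this purpose.

```python
import numpy as np

def degrade(cube: np.ndarray, f: int) -> np.ndarray:
    """Block-average a (bands, H, W) cube by an integer factor f (assumed sensor model)."""
    b, h, w = cube.shape
    return cube.reshape(b, h // f, f, w // f, f).mean(axis=(2, 4))

def mean_sam_deg(reference: np.ndarray, estimate: np.ndarray, eps: float = 1e-12) -> float:
    """Mean spectral angle, in degrees, between two co-registered (bands, H, W) cubes."""
    ref = reference.reshape(reference.shape[0], -1)
    est = estimate.reshape(estimate.shape[0], -1)
    cos = (ref * est).sum(axis=0) / (
        np.linalg.norm(ref, axis=0) * np.linalg.norm(est, axis=0) + eps)
    # Clip guards against values marginally outside [-1, 1] from rounding.
    return float(np.degrees(np.arccos(np.clip(cos, -1.0, 1.0))).mean())

rng = np.random.default_rng(2)
hsi = rng.uniform(0.1, 1.0, size=(20, 4, 4))             # hypothetical original HSI
fused = np.repeat(np.repeat(hsi, 4, axis=1), 4, axis=2)  # spectrally ideal fused cube
distorted = fused.copy()
distorted[5] *= 1.5                                       # simulated error in one band
print(mean_sam_deg(hsi, degrade(fused, 4)) < 1e-3)        # True
print(mean_sam_deg(hsi, degrade(distorted, 4)) > 0.1)     # True
```

Because SAM ignores a uniform scaling of the whole spectrum, it is usually reported alongside magnitude-sensitive indices. The sketch does not implement the error compensation step mentioned in item (2).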

6. Conclusions

In this study, we have demonstrated the effectiveness of fusing HSI, MSI and PAN images using a stepwise and spectral grouping strategy. Two stepwise strategies were compared with a traditional one-step fusion strategy, and six state-of-the-art image fusion algorithms were adopted and compared. From this study, we can draw the following conclusions:

(1) Image fusion performance of different strategies: Compared with the traditional fusion strategy HP, the stepwise fusion strategies (HM)P and H(MP) yield better spectral fidelity. However, in terms of spatial fidelity, strategies (HM)P and H(MP) do not always outperform strategy HP. Nevertheless, considering all the experimental results, we found that the stepwise strategies performed better than the traditional one, and that the spectral and spatial fidelity of the two stepwise strategies (HM)P and H(MP) were comparable.

(2) Image fusion performance of different algorithms: Six algorithms were evaluated with the stepwise fusion strategy. The MF algorithm achieved the best spectral and spatial fidelity, followed by MTF_GLP. Although the results of BDSD_PC and CNMF had good spatial fidelity, their spectral fidelity was poor, whereas PRACS and PWMBF had better spectral fidelity but poor spatial fidelity.

(3) Computational efficiency of the fusion strategies: Although the stepwise strategies fuse one more image than HP, they do not significantly increase the computational load, and the stepwise strategy H(MP) reduces the time complexity compared with HP when CS-based algorithms are used. For most algorithms, strategy H(MP) is more computationally efficient than strategy (HM)P.

(4) Computational efficiency of the fusion algorithms: Among the algorithms, PWMBF and MF have the highest computational efficiency, followed by MTF_GLP and BDSD_PC, while CNMF and PRACS are the least efficient. In terms of algorithm types, the CS-based algorithms generally have the lowest computational efficiency, while the MRA-based and subspace-based algorithms are more efficient and comparable to each other.

Because the stepwise approach is formulated at the level of the fusion strategy, it is not limited to specific fusion algorithms. Moreover, we tested and compared six well-known algorithms, and the results provide a reference for selecting an image fusion algorithm. This study also suggests new ideas for designing sensor systems, such as satellite or drone platforms carrying sensors with different spatial and spectral resolutions.