1. Introduction

Coastal wetlands lie at the interface between ocean and land and are of great significance for resource protection, climate regulation, biodiversity maintenance, and carbon stocks. They are also typical complex surfaces, which makes fine mapping a significant challenge [1]. Recently, remote sensing (RS) technology has become a key method for wetland survey and monitoring. The increasing availability of RS data has brought rapid advances and growing interest in radar and optical data fusion [2,3,4]. Satellite-based optical sensors passively receive solar electromagnetic radiation reflected by ground objects, producing images with rich spatial and spectral information [5]. Among them, multispectral images typically have high spatial resolution and rich detail [6,7]. Hyperspectral images are characterized by nearly continuous spectral information, a distinguishing feature that is widely exploited in classification tasks [8]. Synthetic Aperture Radar (SAR) is an active sensor, and its imaging is not affected by weather or sunlight conditions [9,10]. Radar microwaves are more sensitive to dielectric properties and moisture characteristics, and SAR cross-polarization is reasonably effective at distinguishing the vegetation canopy [11,12]. SAR backscatter also conveys properties that optical images do not, such as geometric structure and surface roughness [13,14,15,16]. Therefore, pixel-level fusion of optical and SAR images, which integrates high spatial-spectral resolution with polarized backscattering characteristics, is of great significance for coastal wetlands mapping.

Depending on the data sources involved, common SAR and optical image fusion techniques can be divided into three categories: SAR and multispectral image fusion, SAR–panchromatic–multispectral image fusion, and SAR–hyperspectral image fusion. The fusion of SAR and multispectral images has been applied to Earth observation with good results. Wu et al. used the shear wavelet transform to fuse TerraSAR-X images (3 m) with Landsat-8 multispectral images to enhance the extraction of impervious surfaces [17]. Yin and Jiang applied a special calculation to SAR images to minimize spectral distortion when fusing RADARSAT-2 (PolSAR) with Landsat TM images [18]. Gaetano et al. used registered optical data as a guide map for denoising Sentinel-1 SAR images with a generalized bilateral filter, then fused the SAR data with Sentinel-2 MS data to improve the accuracy of land use classification [19]. Shao et al. combined the intensity–hue–saturation (IHS) fusion technique with the gradient transfer fusion (GTF) algorithm and formulated the fusion of SAR and multispectral images as an optical image optimization process that better preserved radiation information and spatial details [20]. Amarsaikhan et al. improved land cover classification results using wavelet transform fusion of high-resolution SAR and optical images, and then compared the fusion results with those of the Brovey transform, Ehlers fusion, and principal component analysis (PCA) algorithms [21]. Kulkarni et al. proposed a method combining PCA with the discrete wavelet transform (DWT) [22], and Chen et al. proposed an algorithm using generalized IHS combined with the wavelet transform [23]; both reduce the spectral distortion of multispectral images. Yang et al. used the Gram–Schmidt algorithm to fuse GF-1 (WFV) multispectral images with RADARSAT-2 PolSAR images, improving the classification results of coastal wetlands in the Yellow River Estuary and increasing the accuracy of extracting mudflats and reeds [24].

The fusion of SAR, panchromatic, and multispectral images has also been explored in depth. Byun et al. proposed two fusion schemes for SAR, panchromatic, and multispectral images. The first is an area-based hybrid pansharpening scheme: the SAR image is divided into active and inactive regions, and different fusion rules are designed for the two regions. The AWT algorithm is used to fuse the SAR and panchromatic images, and the multispectral image is then fused with the resulting image by component substitution [25]. The second is a texture-based fusion scheme: local statistical parameters are calculated adaptively to perform a weighted fusion of the panchromatic and SAR images, and the multispectral images are then fused using the generalized IHS transform [26]. Garzelli used the à-trous wavelet transform to fuse panchromatic and multispectral images, then injected structural information from SAR images into the pansharpened images to obtain the final fusion results [27]. Yonghonga extracted the high-pass details of SAR and panchromatic images with à-trous wavelets. The panchromatic detail information was modulated by the SAR texture using high-pass filter modulation (HPFM) and then fused with the multispectral images; the fused images show spectral fidelity over vegetation, bare ground, and buildings [28].

In recent years, as the great potential of hyperspectral analysis in wetland monitoring has been explored, research on SAR and hyperspectral image fusion has emerged [29,30,31,32]. Chen et al. applied the IHS transformation to fuse hyperspectral data with Topographic Synthetic Aperture Radar (TOPSAR) data and obtained images with high spectral and spatial resolution, addressing the ambiguous classification of land cover [33]. Nasrabadi exploited the nonlinear correlation between SAR and hyperspectral images for fusion and applied a kernel-learning-based Reed–Xiaoli (RX) anomaly detector, improving the accuracy of mine identification [34]. Dabbiru et al. fused UAV synthetic aperture radar (UAVSAR) images with hyperspectral images (HSI) from the Airborne Visible/Infrared Imaging Spectrometer (AVIRIS) to improve the classification of oil-contaminated coastal vegetation [35].

Overall, the fusion of optical and SAR images can integrate the rich spatial and spectral information of optical images with the polarimetric backscattering properties of SAR, which is of great significance for the fine mapping of coastal wetlands. However, available fusion methods for optical and SAR images have the following problems that urgently need to be solved: (1) SAR and optical images have divergent imaging mechanisms and quite different image properties, so fusion is highly likely to cause information distortion and component destruction. (2) Coastal wetlands are typically complex surfaces, which poses a further challenge to the collaborative fusion of optical and SAR images. (3) Existing pixel-level fusion methods mainly focus on the synthesis of SAR and multispectral (or panchromatic) images, and there are few studies on the fusion of SAR and satellite hyperspectral images, especially on multi-sensor optical and SAR image fusion. To the best of our knowledge, there are no integrated cross-modal pixel-level fusion methods for SAR, multispectral (MS), and hyperspectral (HS) images, even though combining their complementary advantages is promising for coastal wetlands mapping.

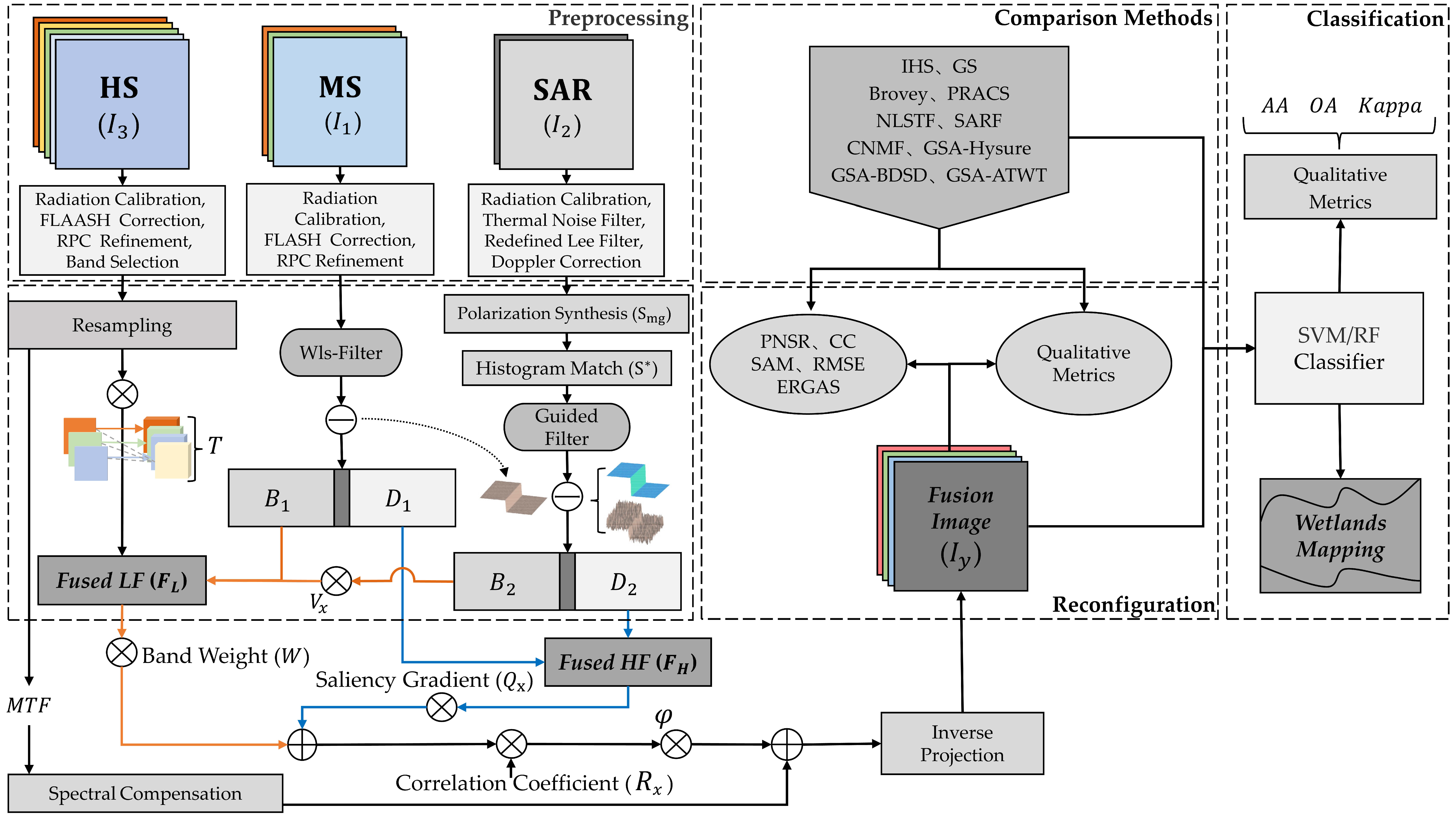

In view of the above problems, this paper proposes a multi-resolution collaborative fusion method (M2CF) for MS, HS, and SAR images to obtain high-quality images for practical applications such as coastal wetlands mapping. First, the high-spatial-resolution images are decomposed with edge-preserving filters, which reduces information distortion through simultaneous localization in the spatial and frequency domains. Second, to make the algorithm more robust, we design optical and SAR cross-modal weights in both the spatial and spectral dimensions of the fusion branch, and weighted inverse projection is performed to provide good local fidelity. Finally, the upsampled HS images are modulated by injecting spatial detail information and polarimetric backscattering properties without disturbing the spectral components. The main innovations of this paper are as follows:

This paper proposes, for the first time, a multi-modal collaborative fusion method for SAR, MS, and HS images that yields fused images with high spatial resolution, abundant spectral information, and the geometric and polarimetric properties of SAR;

In the proposed M2CF, optimal spectral-spatial weighted modulation and spectral compensation are designed to reconstruct high-fidelity fused images. Furthermore, the fusion is guided by SAR backscattering gradients, which are calculated from edge-preserving saliency gradients, making the method more suitable for cross-modal fusion over complex surface features;

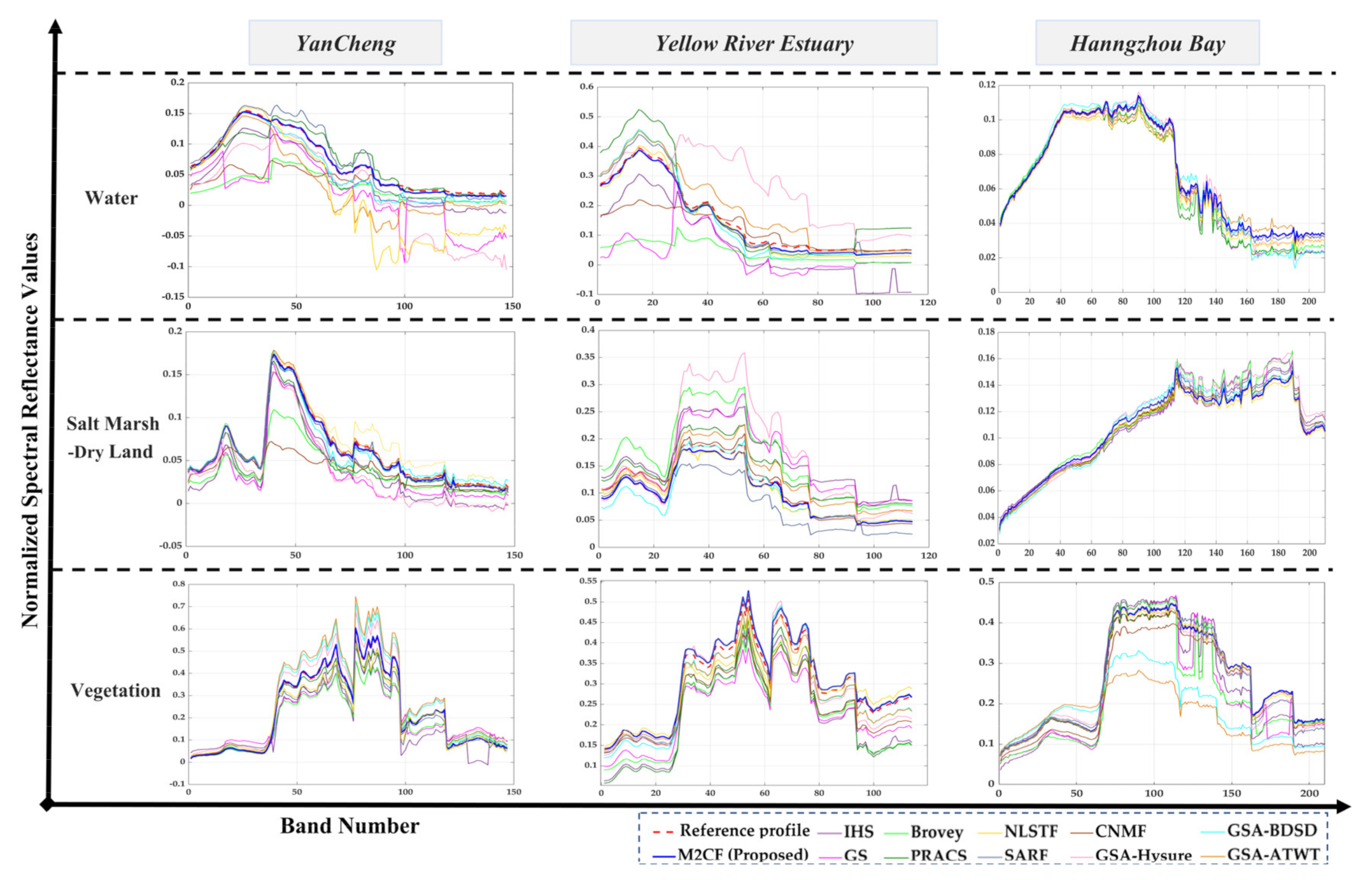

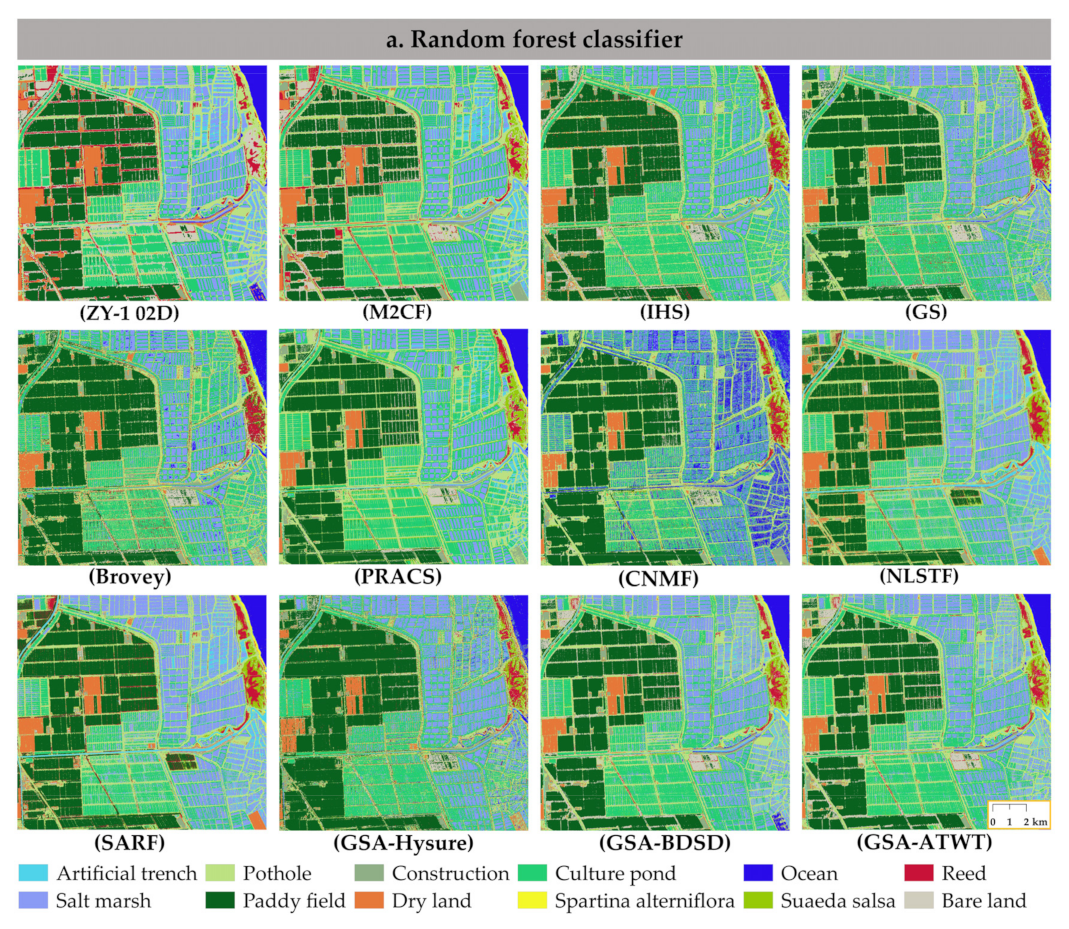

The fusion yields consistent, visible benefits, achieving the minimum spectral loss with a high peak signal-to-noise ratio (PSNR) while robustly improving classification results in coastal wetlands.
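The detail-injection idea summarized above can be illustrated with a generic multi-resolution analysis (MRA) sketch. This is not the M2CF implementation: it works on one-dimensional signals, a box filter stands in for the edge-preserving filters, and the cross-modal weights are reduced to a single scalar `gain`. All function and parameter names (`box_lowpass`, `upsample_nearest`, `inject_details`, `gain`, `radius`) are hypothetical and chosen only for this illustration.

```python
def box_lowpass(signal, radius):
    """Moving-average low-pass filter; the window is truncated at the borders."""
    n = len(signal)
    smoothed = []
    for i in range(n):
        lo, hi = max(0, i - radius), min(n, i + radius + 1)
        window = signal[lo:hi]
        smoothed.append(sum(window) / len(window))
    return smoothed


def upsample_nearest(signal, ratio):
    """Nearest-neighbour upsampling by an integer resolution ratio."""
    return [value for value in signal for _ in range(ratio)]


def inject_details(low_res_band, high_res, ratio, gain=1.0, radius=None):
    """Generic MRA fusion: upsampled band + gain * high-pass details.

    low_res_band : one low-resolution spectral band (e.g. an HS band)
    high_res     : a co-registered high-resolution signal (e.g. MS or SAR)
    gain         : scalar injection weight (assumption; real methods use
                   spatially and spectrally varying weights)
    """
    if radius is None:
        radius = ratio  # assumption: filter support tied to the ratio
    upsampled = upsample_nearest(low_res_band, ratio)
    if len(upsampled) != len(high_res):
        raise ValueError("high_res must be ratio times longer than low_res_band")
    lowpass = box_lowpass(high_res, radius)
    details = [h - l for h, l in zip(high_res, lowpass)]
    return [u + gain * d for u, d in zip(upsampled, details)]


if __name__ == "__main__":
    band = [1.0, 2.0]
    sharp = [0.0, 0.0, 4.0, 4.0]
    print(inject_details(band, sharp, ratio=2))
```

Because only the high-pass residual of the high-resolution signal is added, a spatially flat high-resolution input leaves the upsampled band unchanged, which is the sense in which MRA schemes aim to limit spectral disturbance.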

The rest of the paper is arranged as follows.

Section 2 focuses on the methodological framework of the paper.

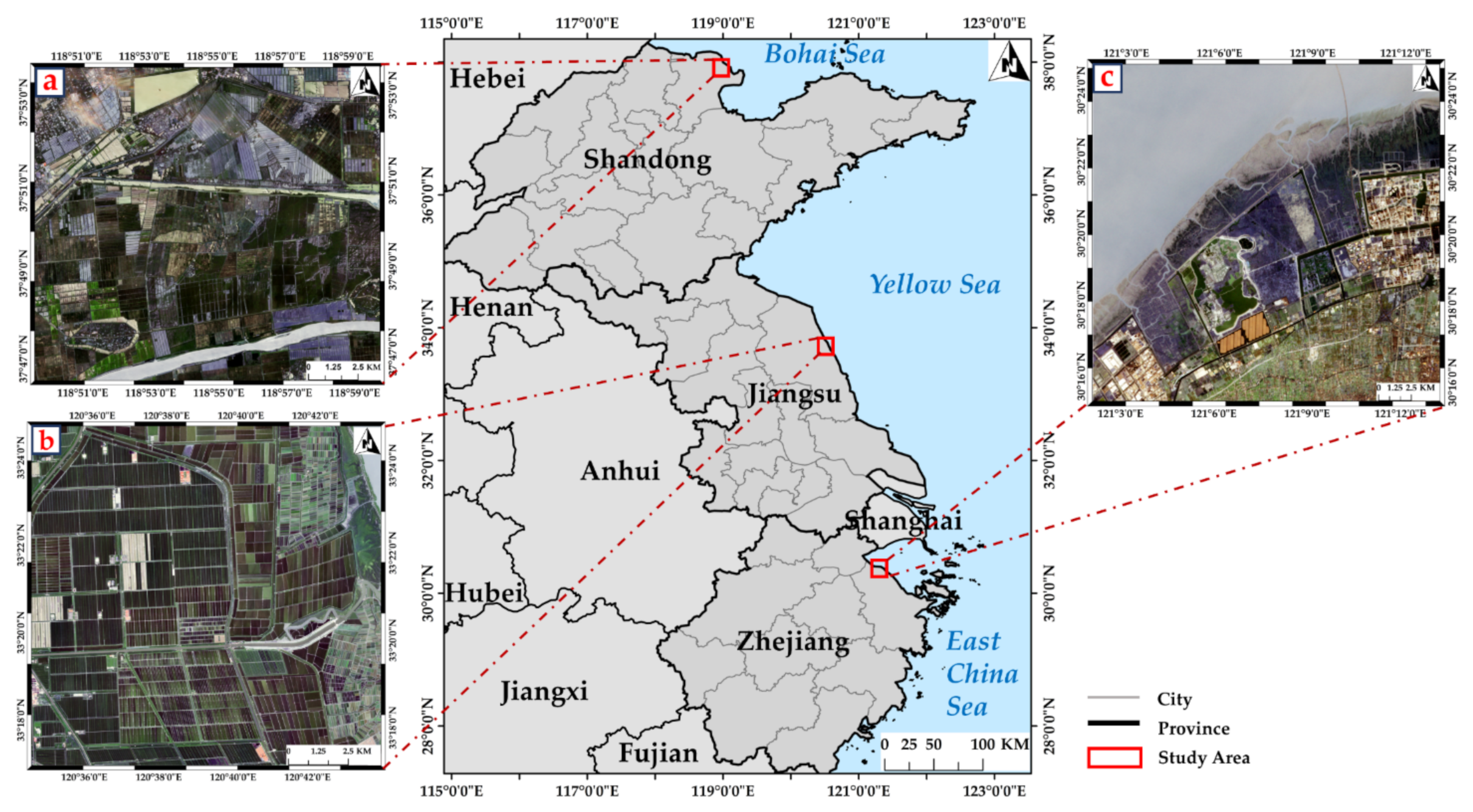

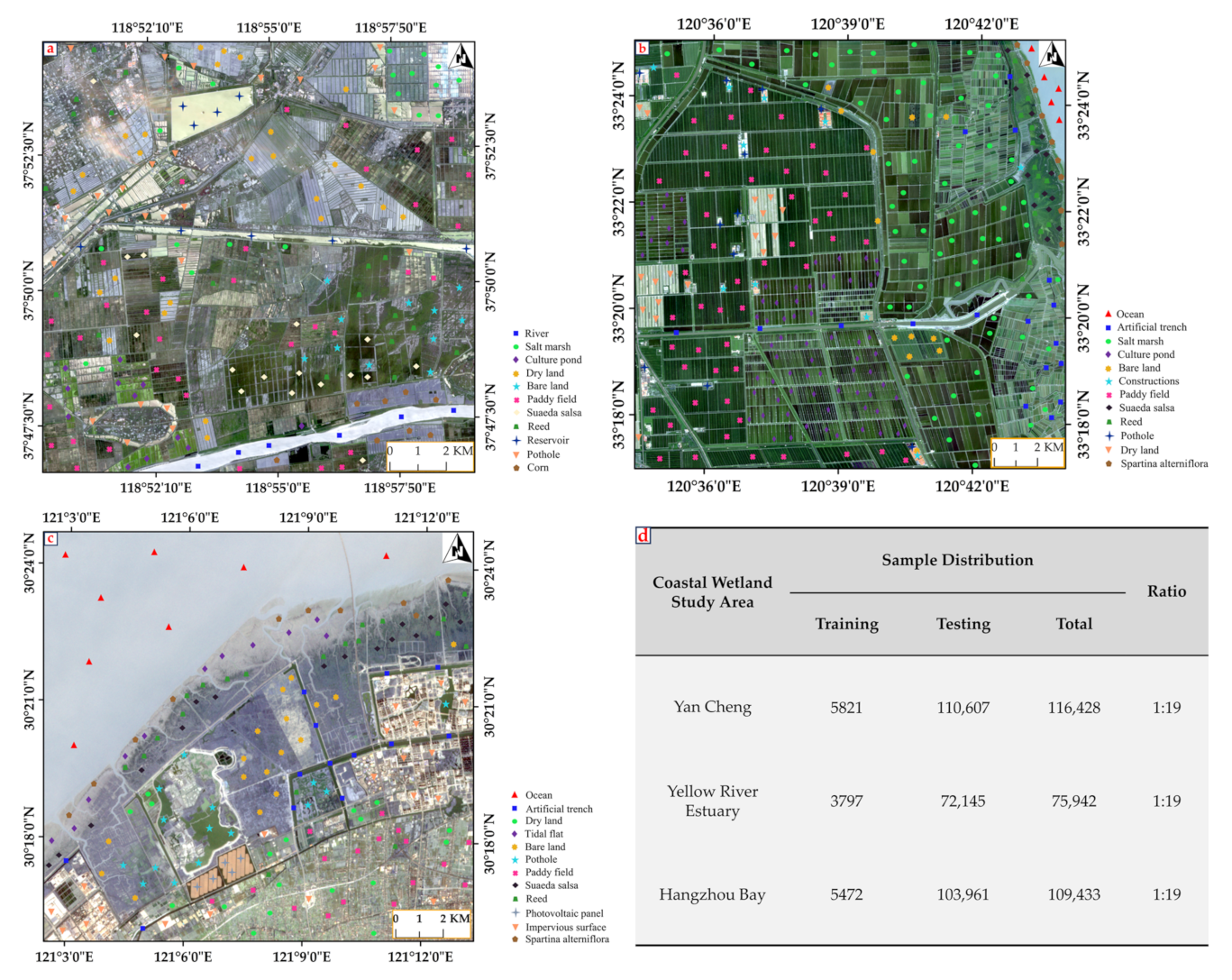

Section 3 describes image preprocessing and the fusion datasets.

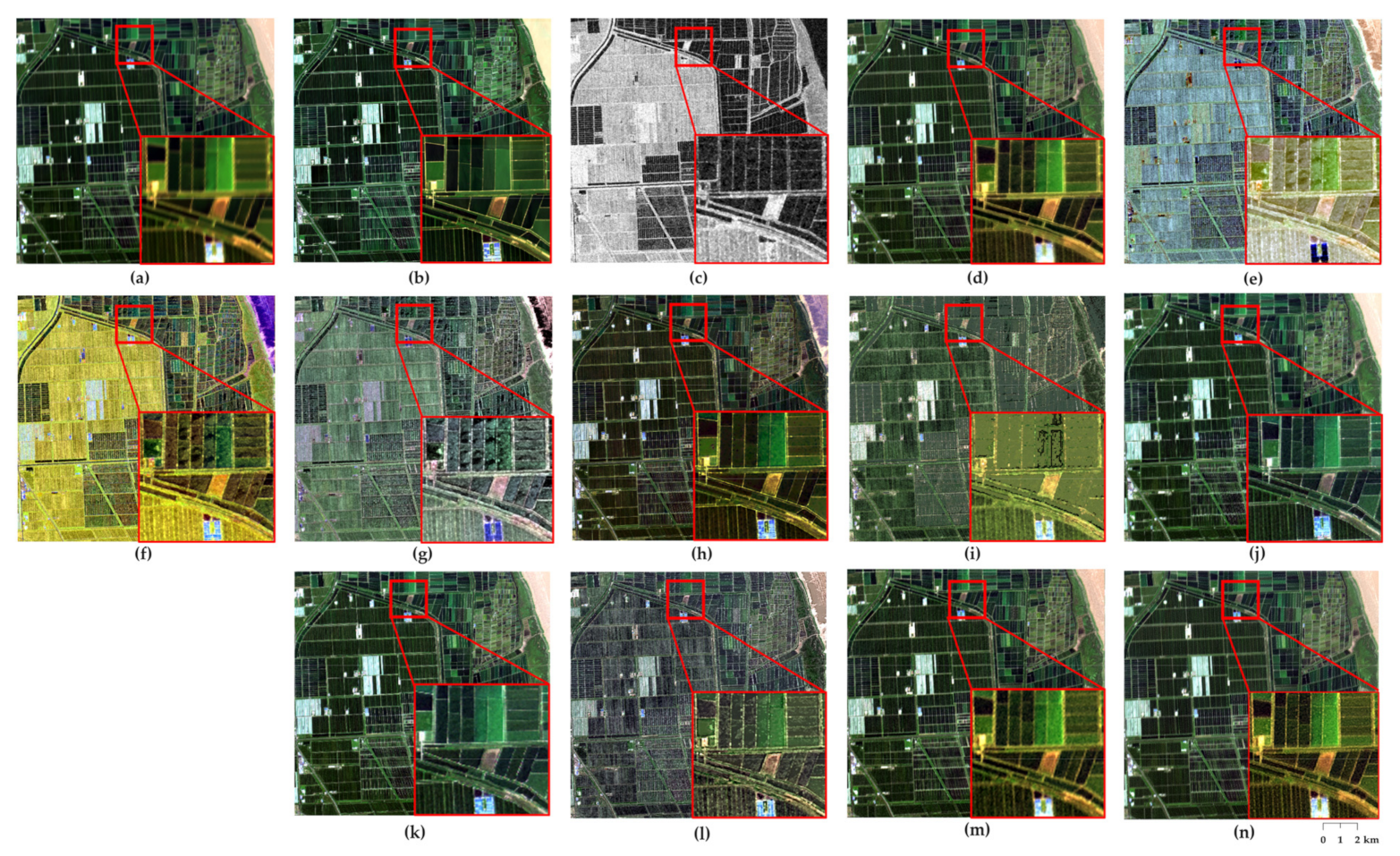

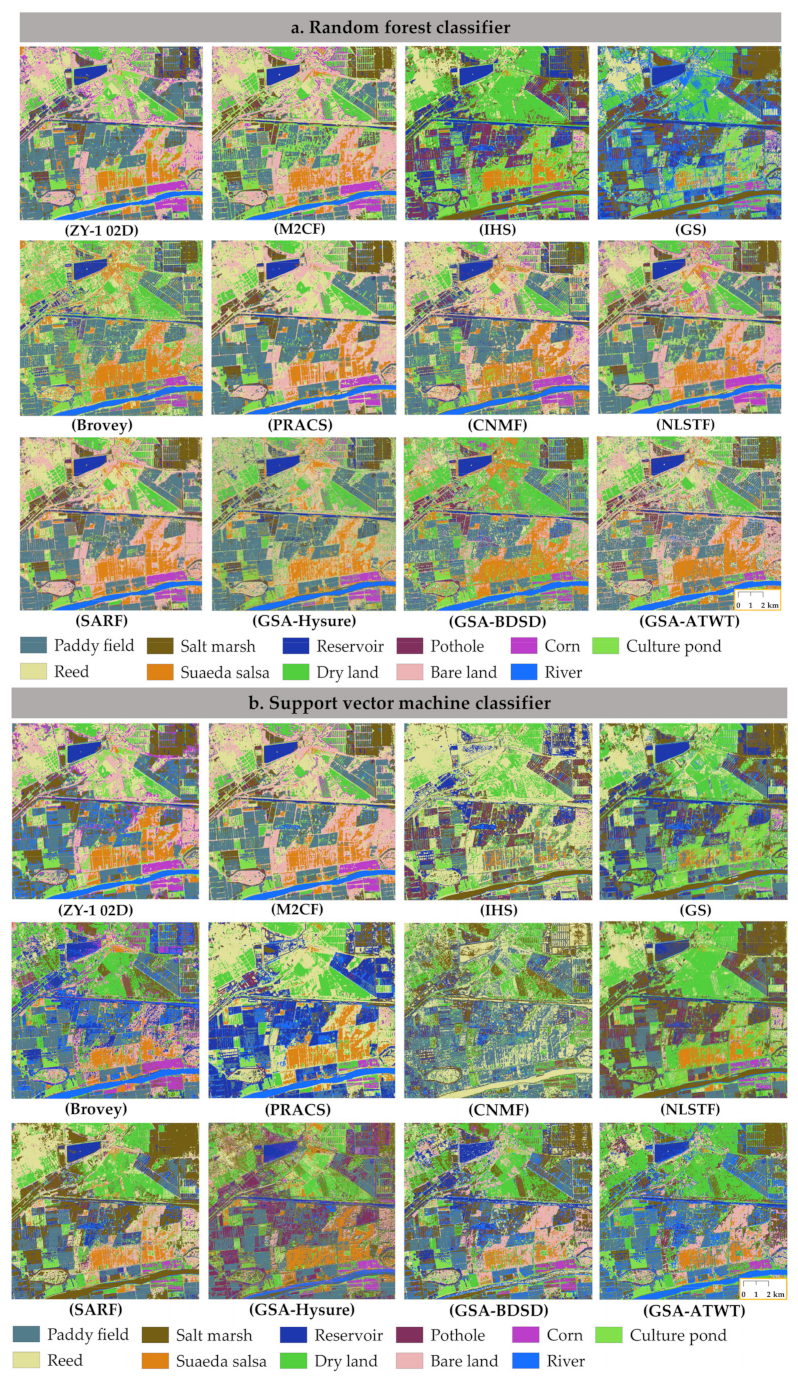

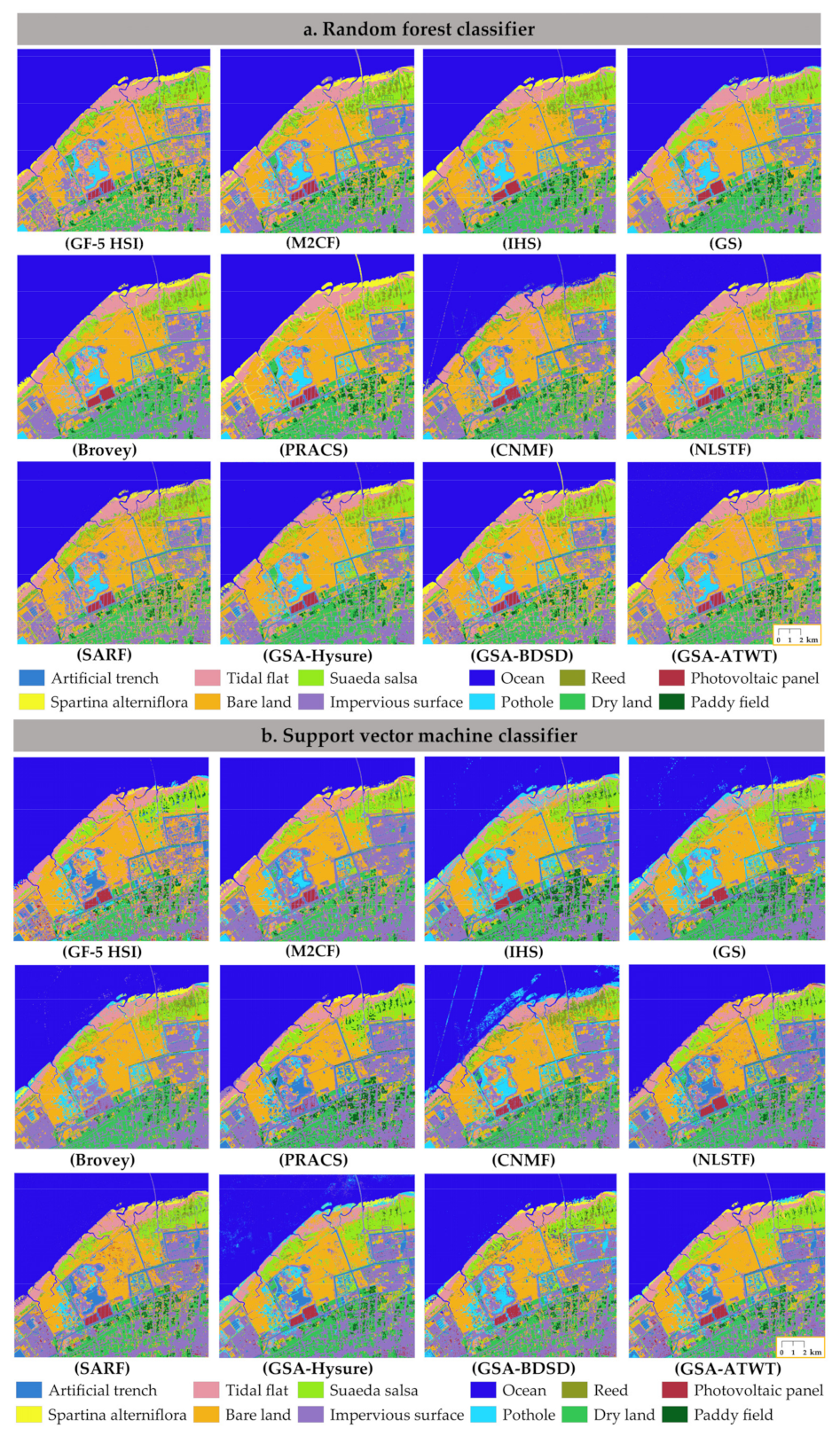

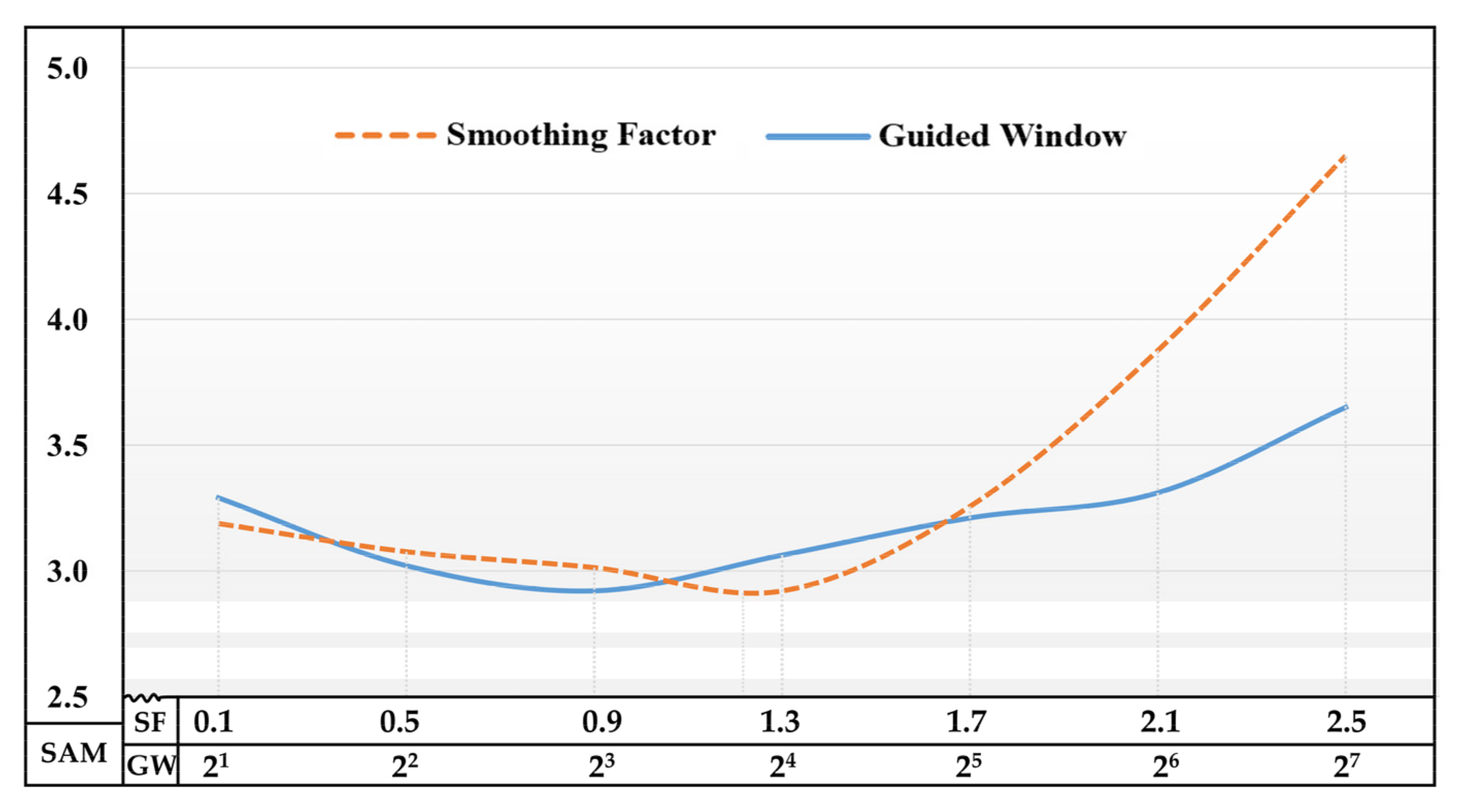

Section 4 evaluates the fused images in terms of quantitative and qualitative metrics, and the classification accuracy is used to characterize the competitiveness of the fused images in realistic applications.

Section 5 provides the discussion, and Section 6 presents the conclusions of our paper.

5. Discussion

The classification and identification of coastal wetlands have long been an interesting but challenging research topic in remote sensing. The increasing availability of data has brought rapid advances in the fusion of optical and radar images [4,52,53]. Optical images, especially HS data, can provide rich and continuous spectral information. Radar microwaves can distinguish differences in roughness and moisture and are particularly effective at capturing continuous water surfaces. The growing number of hyperspectral image bands currently poses great challenges to fusion algorithms [54]. It is necessary to focus on the practical, operational use of the algorithm and to strike a balance between effectiveness and efficiency. Moreover, the polarized backscatter of SAR is sensitive to the size, density, and dielectric constant of vegetation. Therefore, an efficient cross-modal fusion of hyperspectral and SAR images is timely and critical for further research [55].

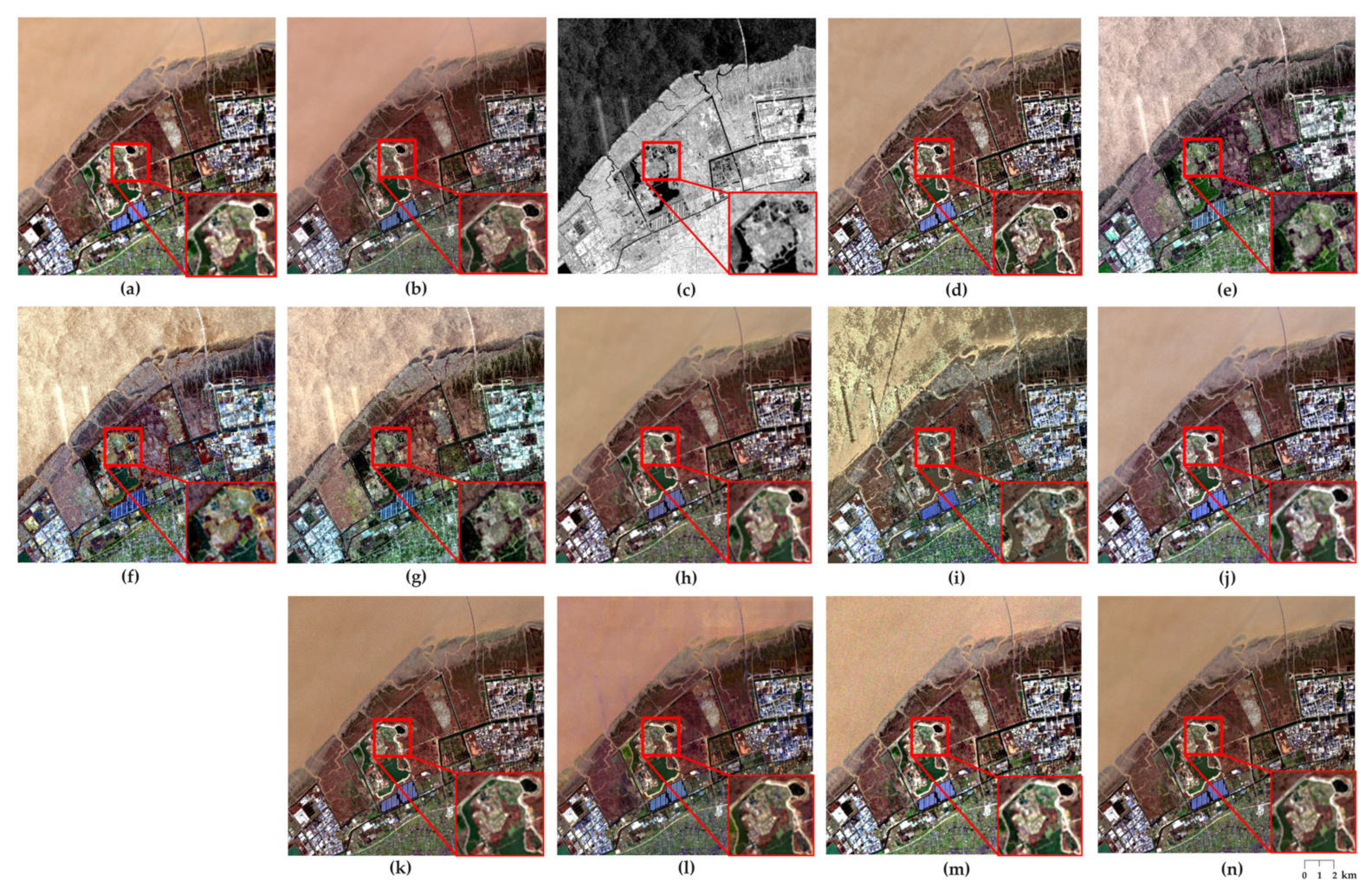

This research fills a gap by developing a cross-modal, fast, and robust fusion method for HS, MS, and SAR images for coastal wetlands mapping. The experiments confirm that the classification accuracy of cross-modal fused images mostly exceeds that of single HS images or optical fusion (MS and HS) images. This is consistent with many fusion studies, which report that an appropriate fusion algorithm can improve classification accuracy [17,20,45,56]. Beyond the results presented in the experiments, the following points should be explored further. Component substitution (CS) fusion frameworks offer high efficiency and spatial enhancement, which is why CS-based methods are the most widely used in SAR and optical image fusion. Hyperspectral super-resolution or unmixing-based methods generally outperform CS-based methods, but they perform poorly on cross-modal data. Hybrid methods are another available fusion option. Multi-resolution analysis can strike a balance between spectral fidelity and spatial enhancement, and it avoids the instability caused by the uncertainty of a step-by-step framework. In the experimental results, the proposed M2CF achieves the smallest spectral loss while obtaining the highest classification accuracy. Unfortunately, M2CF may not be suitable for fusion at large spatial resolution ratios or over mountainous topography. It is mainly limited by radar shadows caused by foreshortening and layover. Joint classification using cross-modal RS data fusion is promising for wetlands mapping [2,14,16,30]. Recent trends suggest that research in cross-modal fusion is moving towards deep learning [57]. Because of the nonlinear correlation and inherent uncertainties in the data sources, the above fusion results may not match those of iteratively optimized deep learning algorithms.

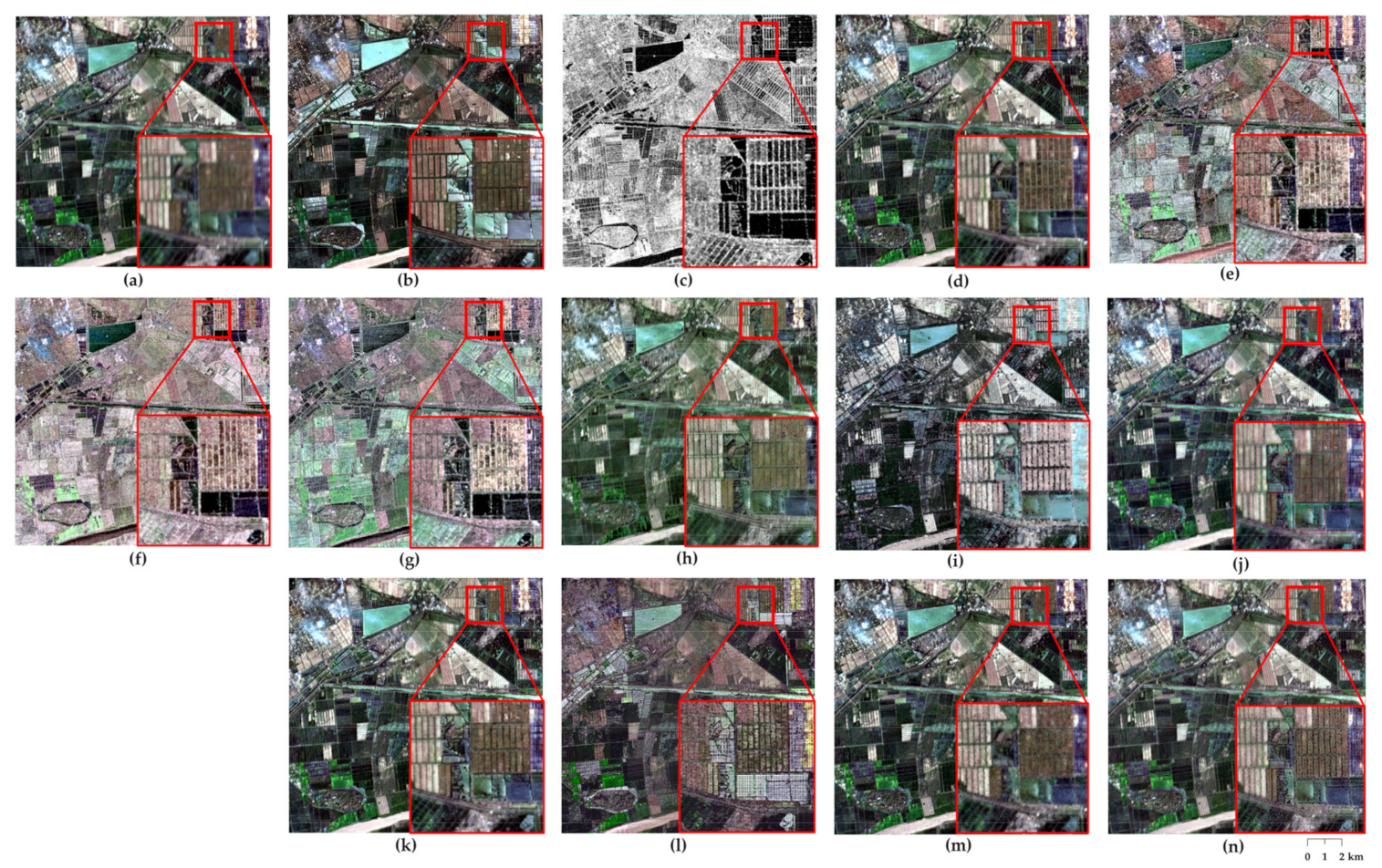

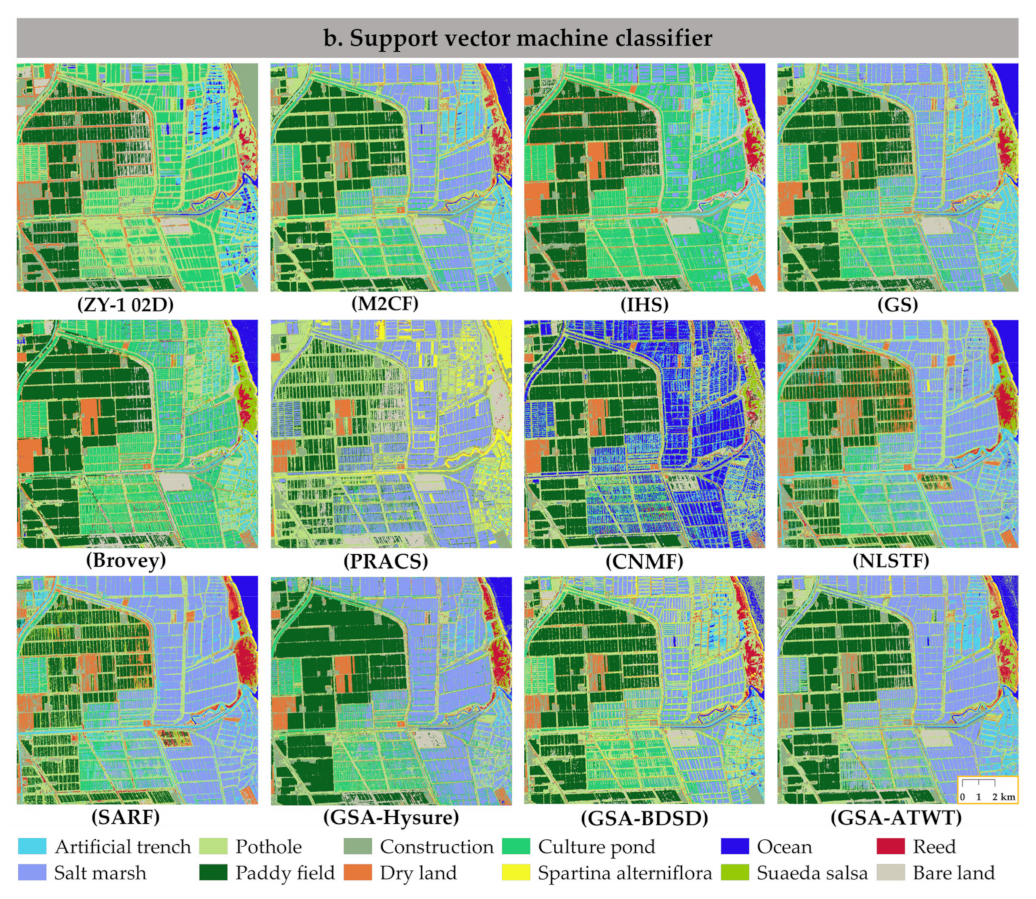

Taking Yancheng as an example, Table 9 reports additional cases that supplement the ablation experiment of M2CF. As a multi-frequency extraction experiment, Gaussian and low-pass filters are incorporated into the ablation. M2CF is based on MRA models and is built from edge-preserving filters. The SAM and RMSE of the proposed module increase significantly when Gaussian or low-pass filters are used to extract multi-frequency information. In addition, the spectral metrics of the fusion module are more distorted without the spectral compensation, which further illustrates that the components work better together.
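For readers who want to reproduce this kind of comparison, the two spectral metrics named above can be sketched as follows. This is a minimal illustration using the standard definitions of the spectral angle (SAM) and the root mean square error (RMSE); it is not the evaluation code behind Table 9. Images are assumed to be flattened into lists of per-pixel spectra, and the names `spectral_angle`, `mean_sam_degrees`, and `rmse` are hypothetical.

```python
import math


def spectral_angle(ref, test):
    """Angle in radians between two spectra of equal length."""
    dot = sum(r * t for r, t in zip(ref, test))
    norm_ref = math.sqrt(sum(r * r for r in ref))
    norm_test = math.sqrt(sum(t * t for t in test))
    if norm_ref == 0.0 or norm_test == 0.0:
        return 0.0  # convention chosen here for all-zero spectra
    # Clamp to [-1, 1] to guard against floating-point overshoot.
    cos_angle = max(-1.0, min(1.0, dot / (norm_ref * norm_test)))
    return math.acos(cos_angle)


def mean_sam_degrees(ref_pixels, test_pixels):
    """Mean spectral angle over all pixels, in degrees (lower is better)."""
    angles = [spectral_angle(r, t) for r, t in zip(ref_pixels, test_pixels)]
    return math.degrees(sum(angles) / len(angles))


def rmse(ref_pixels, test_pixels):
    """Root mean square error over all pixels and bands (lower is better)."""
    squared = [
        (r - t) ** 2
        for ref, test in zip(ref_pixels, test_pixels)
        for r, t in zip(ref, test)
    ]
    return math.sqrt(sum(squared) / len(squared))


if __name__ == "__main__":
    reference = [[0.2, 0.4, 0.6], [0.1, 0.1, 0.3]]
    fused = [[0.25, 0.38, 0.61], [0.12, 0.09, 0.33]]
    print("SAM (deg):", mean_sam_degrees(reference, fused))
    print("RMSE:", rmse(reference, fused))
```

SAM is insensitive to a per-pixel scaling of the spectrum, whereas RMSE is not, which is one reason the two metrics are usually reported together.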

In this work, two filter parameters need to be set manually, namely the smoothing factor λ of Equation (2) and the guiding window ω of Equation (15). We ran controlled-variable experiments to assess the sensitivity to these parameters. The weight of the WlsF smoothing term depends on the input image: where the gradient of the input image is relatively large, the constraint should be smaller to preserve the structural information of the image; where the gradient is small, the detail is considered unimportant, and the constraint can be larger. For the guided filter, each pixel is contained in multiple filter windows, and the window size is directly related to the edge preservation of the output image. Figure 11 shows the relationship between SAM and the parameter settings. In the experiments, the guide window (GW) is set to 8 and the smoothing factor (SF) to 1.2.
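The gradient-dependent behaviour of the smoothing constraint can be illustrated with a simplified one-dimensional weighted least squares smoother. This is a sketch under stated assumptions rather than the paper's Equation (2): it minimizes the sum of (u_i − g_i)² plus λ times the sum of w_i (u_{i+1} − u_i)², with w_i = 1 / (|g_{i+1} − g_i|^α + ε), and solves the resulting tridiagonal system directly. The function name `wls_smooth_1d` and the parameters `alpha` and `eps` are hypothetical; the default `lam=1.2` only mirrors the value quoted above and is not calibrated for this toy setting.

```python
def wls_smooth_1d(signal, lam=1.2, alpha=1.2, eps=1e-4):
    """Edge-preserving smoothing of a 1D signal by weighted least squares."""
    n = len(signal)
    if n < 2:
        return list(signal)
    # Smoothness weights: small across large gradients (edges are kept),
    # large across small gradients (fine detail is smoothed away).
    weights = [
        1.0 / (abs(signal[i + 1] - signal[i]) ** alpha + eps)
        for i in range(n - 1)
    ]
    # Assemble the tridiagonal system (I + lam * D^T W D) u = g.
    lower = [0.0] * n
    diag = [1.0] * n
    upper = [0.0] * n
    for i in range(n - 1):
        coupling = lam * weights[i]
        diag[i] += coupling
        diag[i + 1] += coupling
        upper[i] = -coupling
        lower[i + 1] = -coupling
    # Thomas algorithm: forward elimination, then back substitution.
    c_prime = [0.0] * n
    d_prime = [0.0] * n
    c_prime[0] = upper[0] / diag[0]
    d_prime[0] = signal[0] / diag[0]
    for i in range(1, n):
        denom = diag[i] - lower[i] * c_prime[i - 1]
        c_prime[i] = upper[i] / denom
        d_prime[i] = (signal[i] - lower[i] * d_prime[i - 1]) / denom
    smoothed = [0.0] * n
    smoothed[-1] = d_prime[-1]
    for i in range(n - 2, -1, -1):
        smoothed[i] = d_prime[i] - c_prime[i] * smoothed[i + 1]
    return smoothed


if __name__ == "__main__":
    noisy_step = [0.0, 0.1, 0.0, 0.1, 0.0, 10.0, 10.1, 10.0, 10.1, 10.0]
    for lam in (0.3, 1.2, 5.0):
        print(lam, [round(v, 3) for v in wls_smooth_1d(noisy_step, lam=lam)])
```

Running the sweep over `lam` gives a feel for the kind of sensitivity analysis described above: the small ripples inside each plateau are attenuated as `lam` grows, while the large jump between the plateaus is only weakly coupled and therefore largely retained.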

In addition, current studies often lack ground-truth and benchmark datasets at larger spatial scales. Spatial misregistration and radiometric mismatch between SAR and optical images are also major challenges. Periodic tidal fluctuations continue to make remote sensing of coastal wetlands challenging and increase the variability of data fusion [58]. Therefore, progress in this field still requires more robust fusion techniques and systematic procedures for assessing the benefits of fusion.