TRDet: Two-Stage Rotated Detection of Rural Buildings in Remote Sensing Images

Abstract

1. Introduction

2. Dataset and Methods

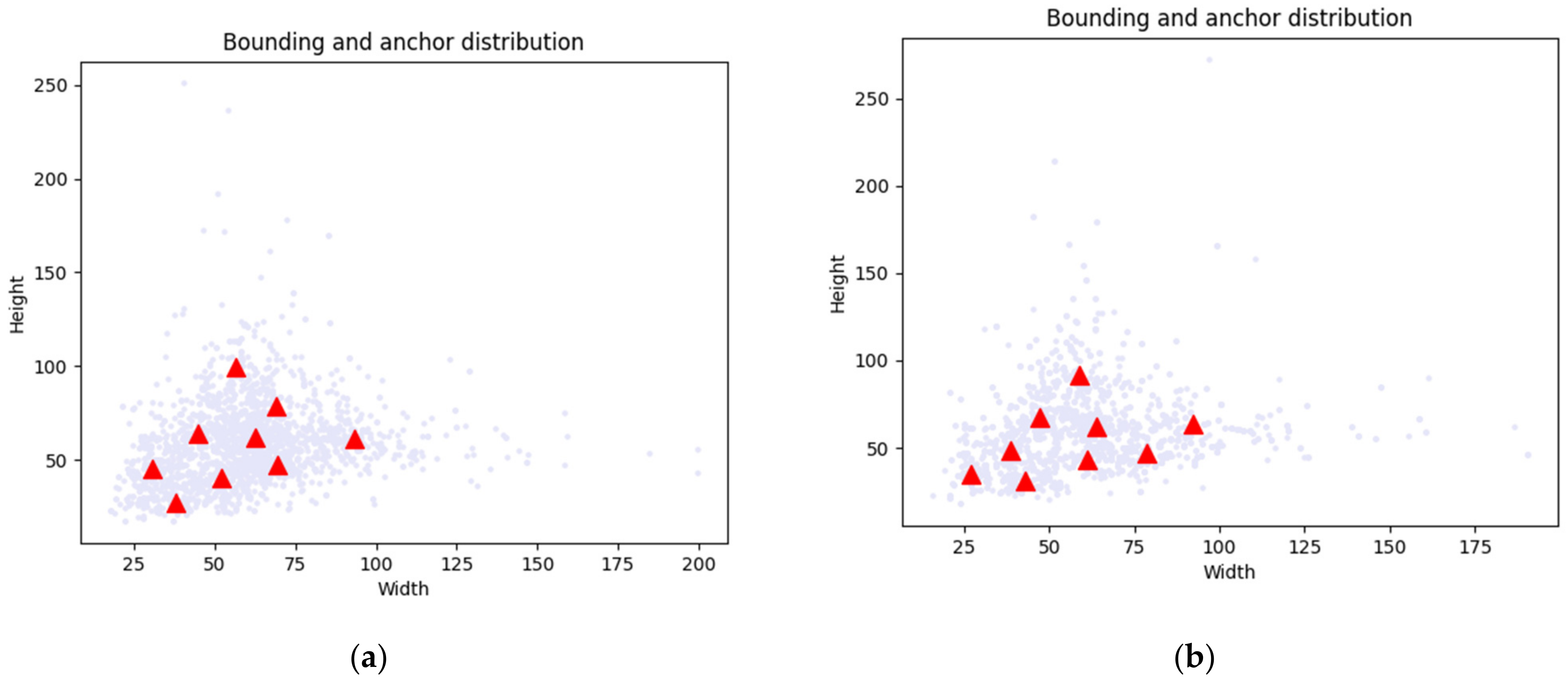

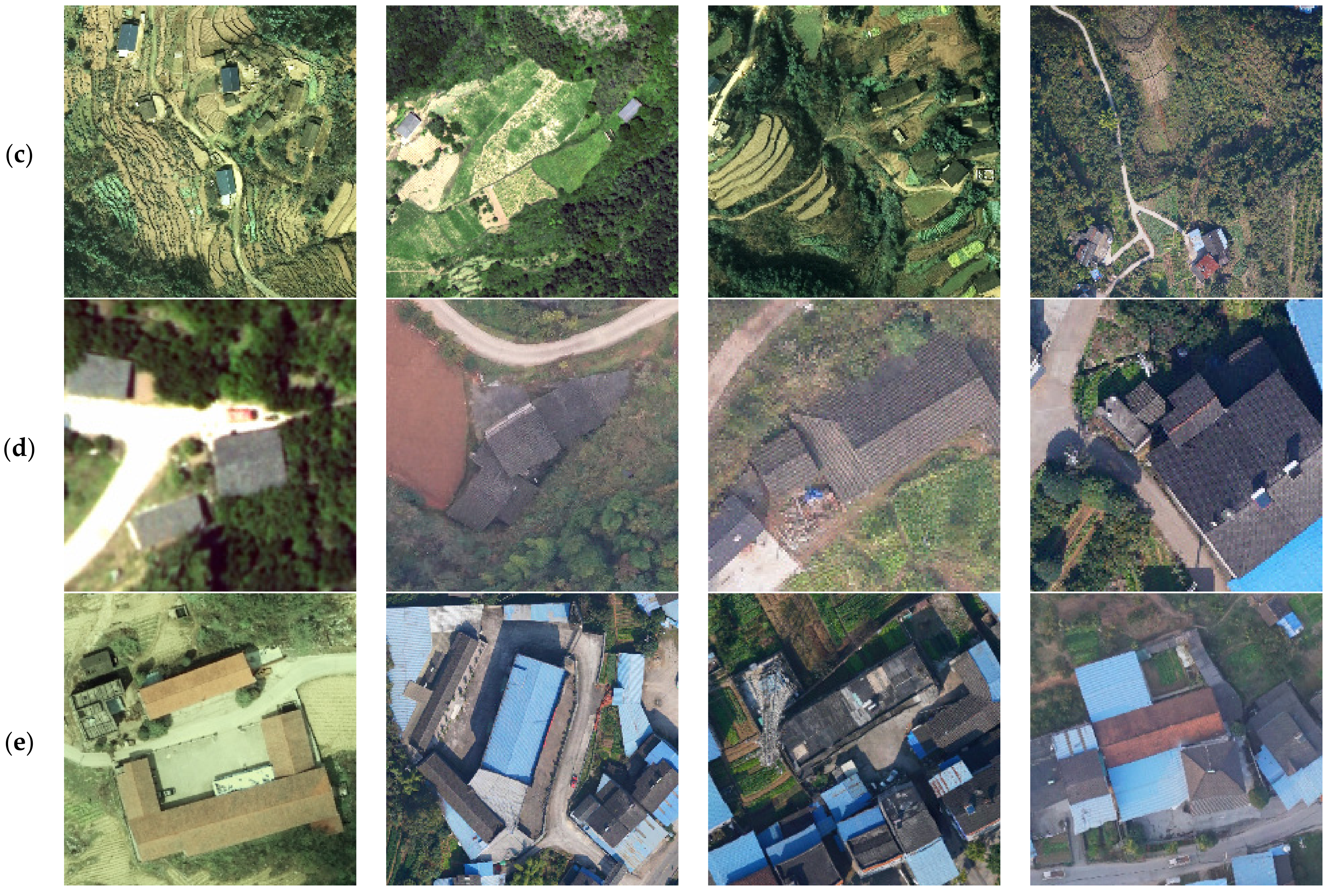

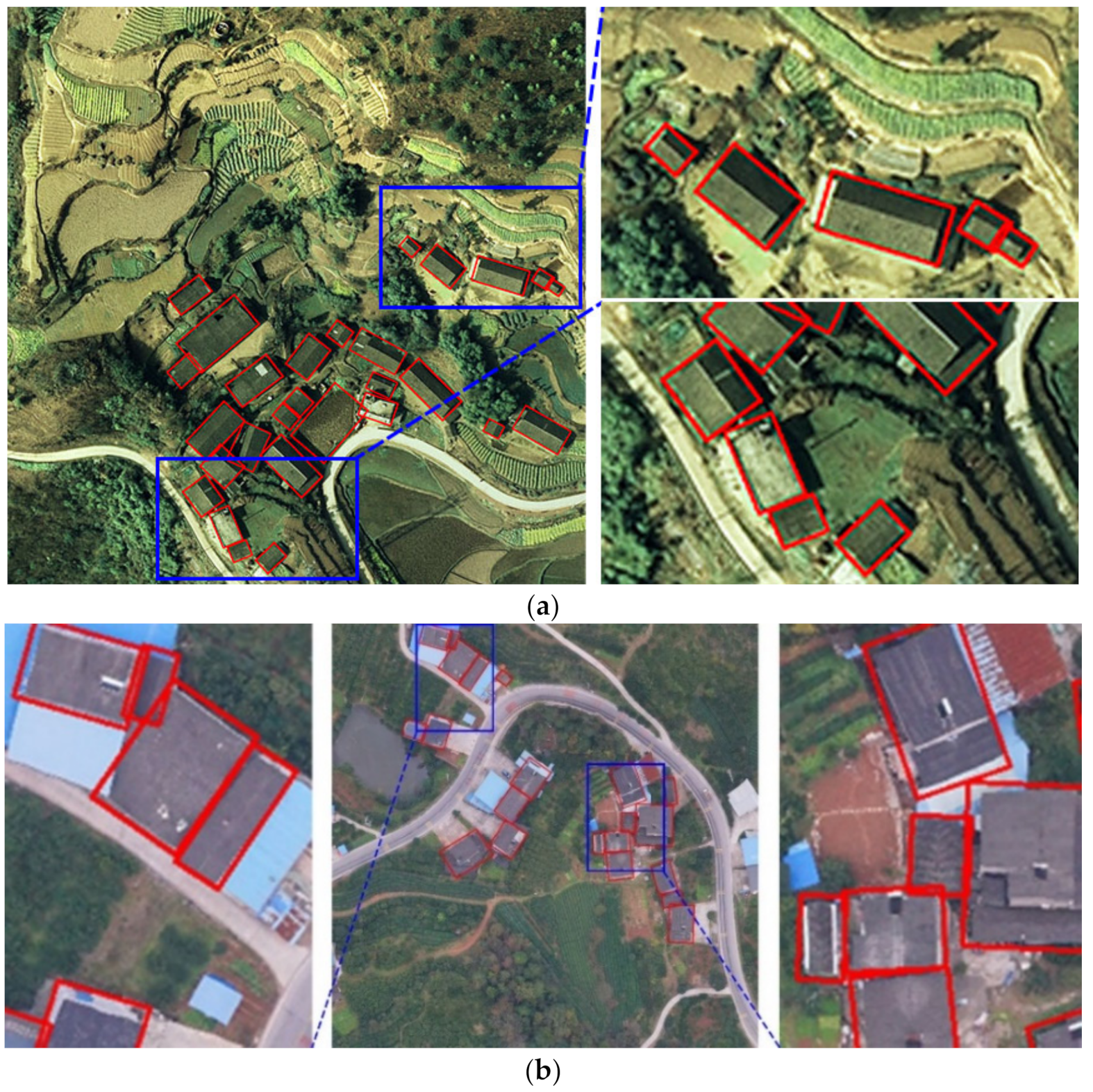

2.1. Rural Building Dataset

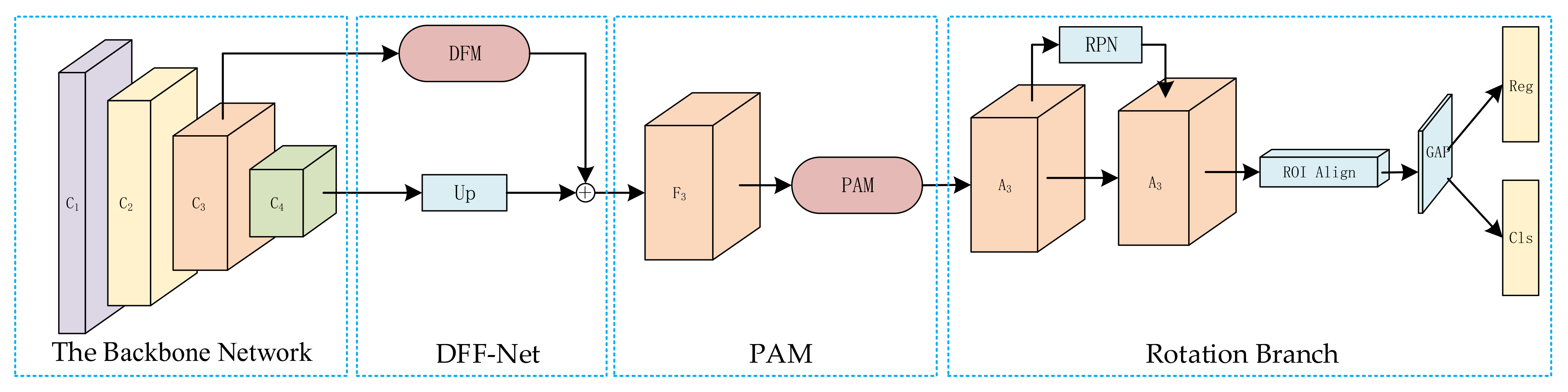

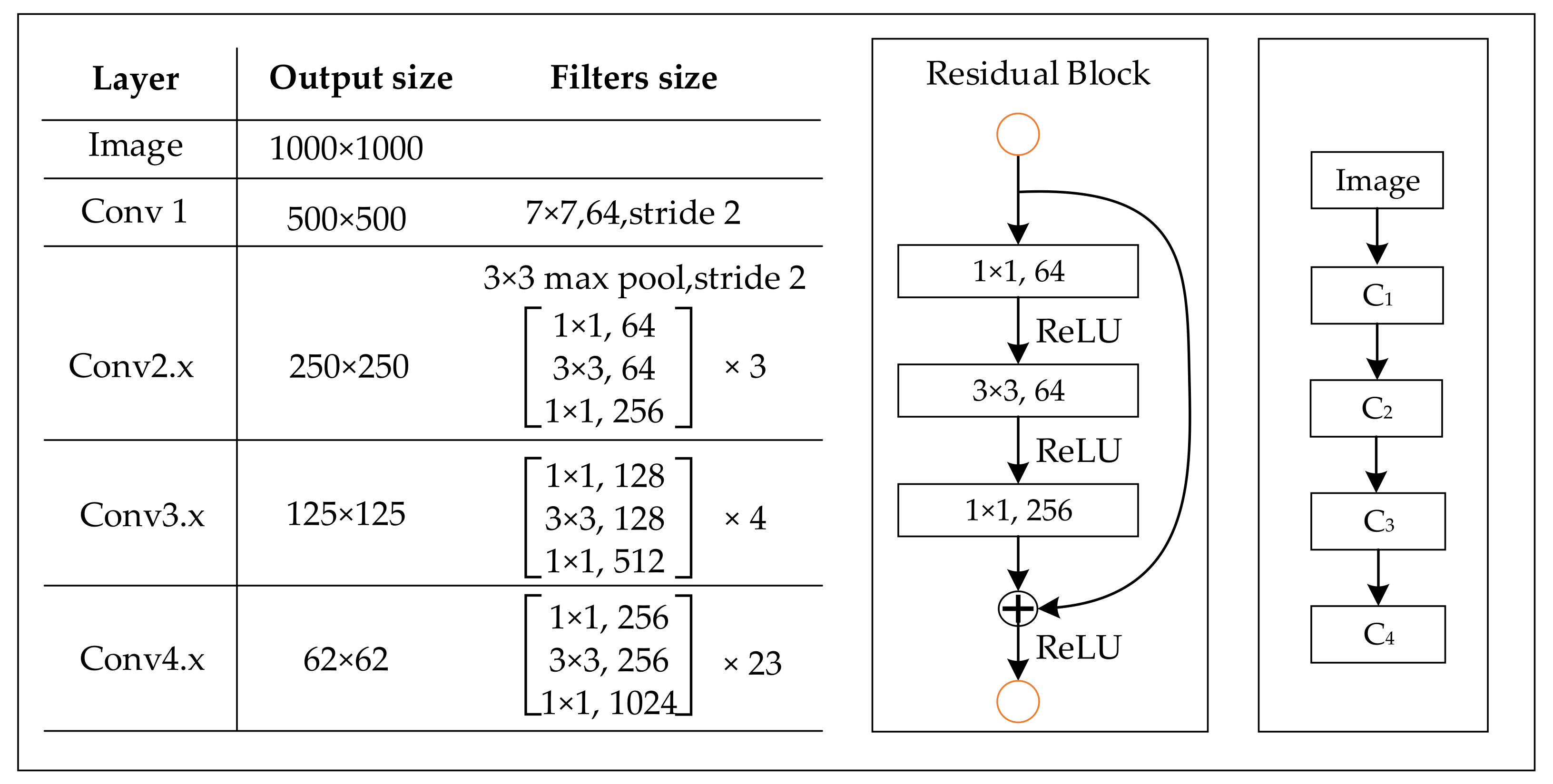

2.2. Method

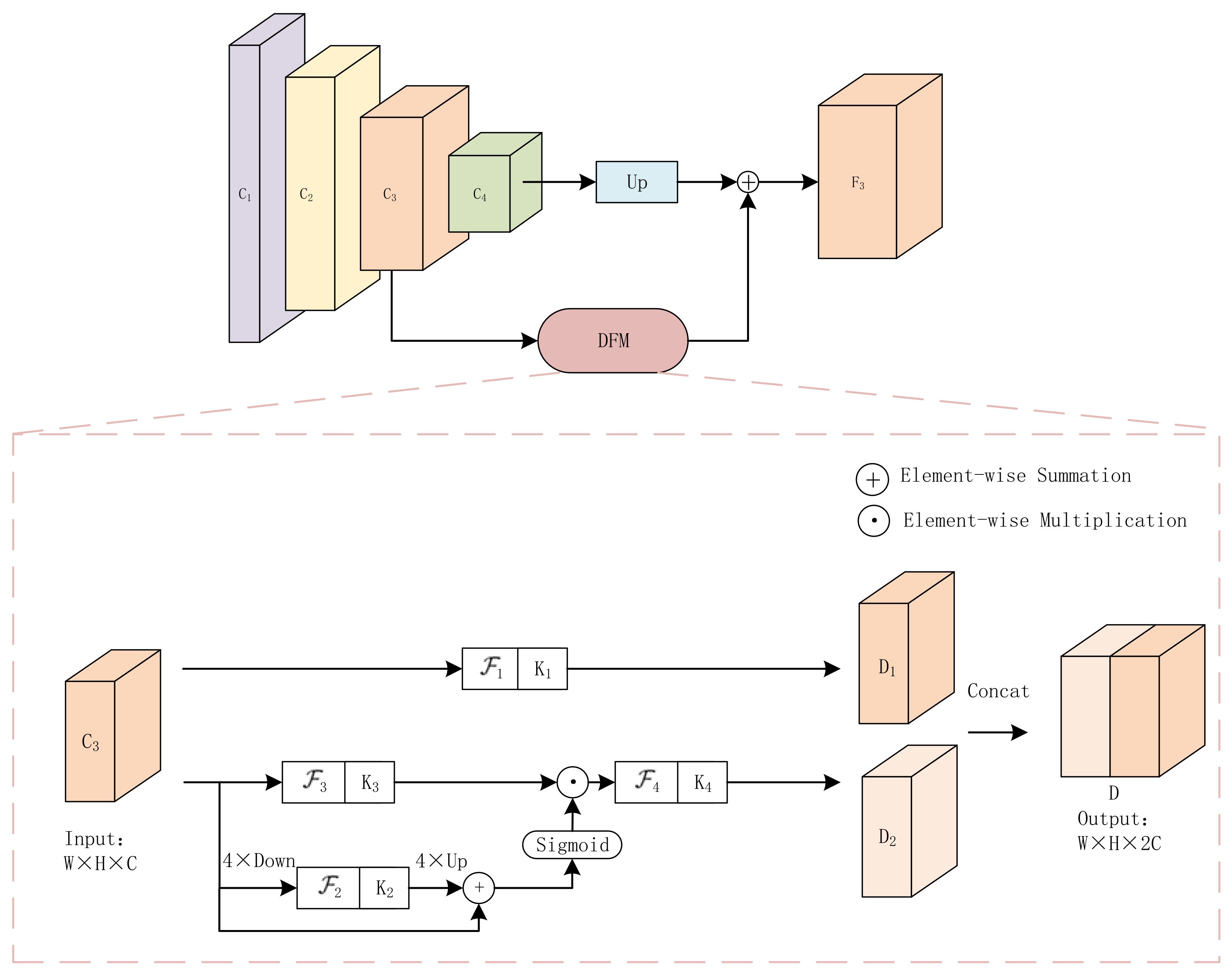

2.2.1. DFF-Net

- Part One: A feature transformation is performed on C3 based on:

- Part Two: First, we apply average pooling with a filter size and stride of 4 to C3 as follows:
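As a rough illustration of the pooling step in Part Two, the sketch below applies non-overlapping average pooling with window size and stride 4 to a single-channel feature map stored as a plain Python list. The function name `avg_pool2d`, the square 4×4 window, and the toy 8×8 input are assumptions made for illustration; they are not taken from the paper's implementation.

```python
def avg_pool2d(feature_map, size=4, stride=4):
    """Average-pool a 2D list of numbers with a square window (no padding)."""
    height, width = len(feature_map), len(feature_map[0])
    pooled = []
    for top in range(0, height - size + 1, stride):
        row = []
        for left in range(0, width - size + 1, stride):
            # Collect the size x size window anchored at (top, left).
            window = [feature_map[top + i][left + j]
                      for i in range(size) for j in range(size)]
            row.append(sum(window) / (size * size))
        pooled.append(row)
    return pooled


# Hypothetical 8x8 stand-in for one channel of C3: each entry is its row index.
c3 = [[float(r) for _ in range(8)] for r in range(8)]
pooled = avg_pool2d(c3)
print(pooled)
```

With size and stride both equal to 4, each spatial dimension shrinks by a factor of 4, so the 8×8 toy map becomes 2×2.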

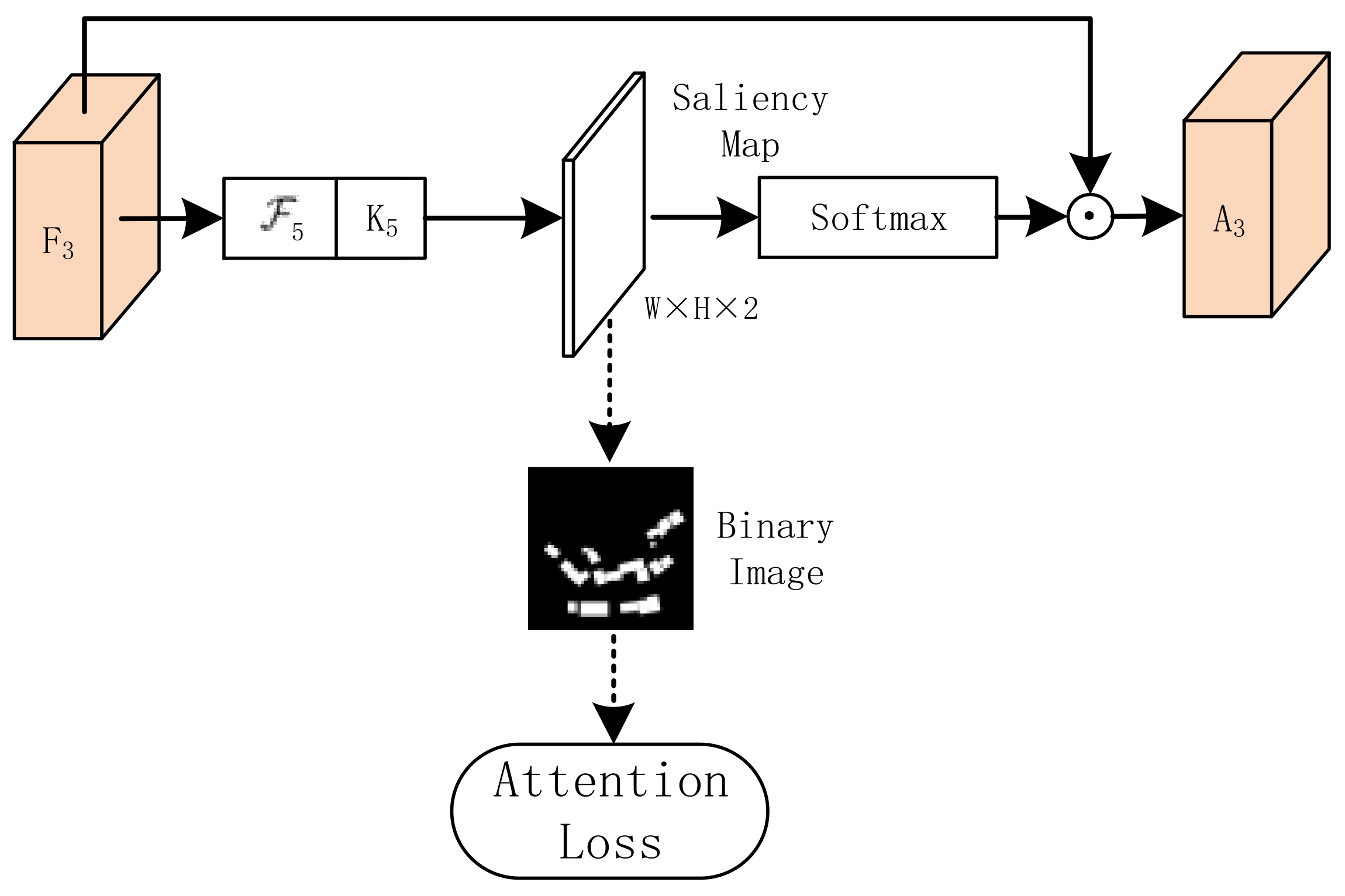

2.2.2. PAM

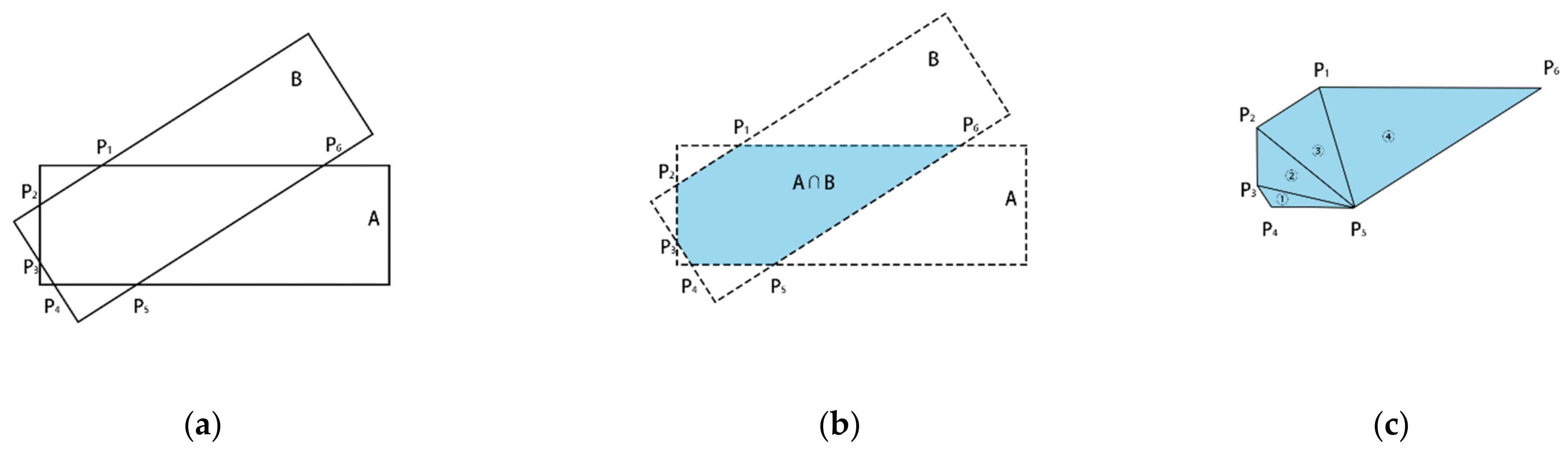

2.2.3. IoU Loss Function

3. Experimental Settings

3.1. Evaluation Metrics

3.2. Implementation Details

4. Results

4.1. Ablation Study

4.1.1. Effect of DFF-Net

4.1.2. Effect of PAM

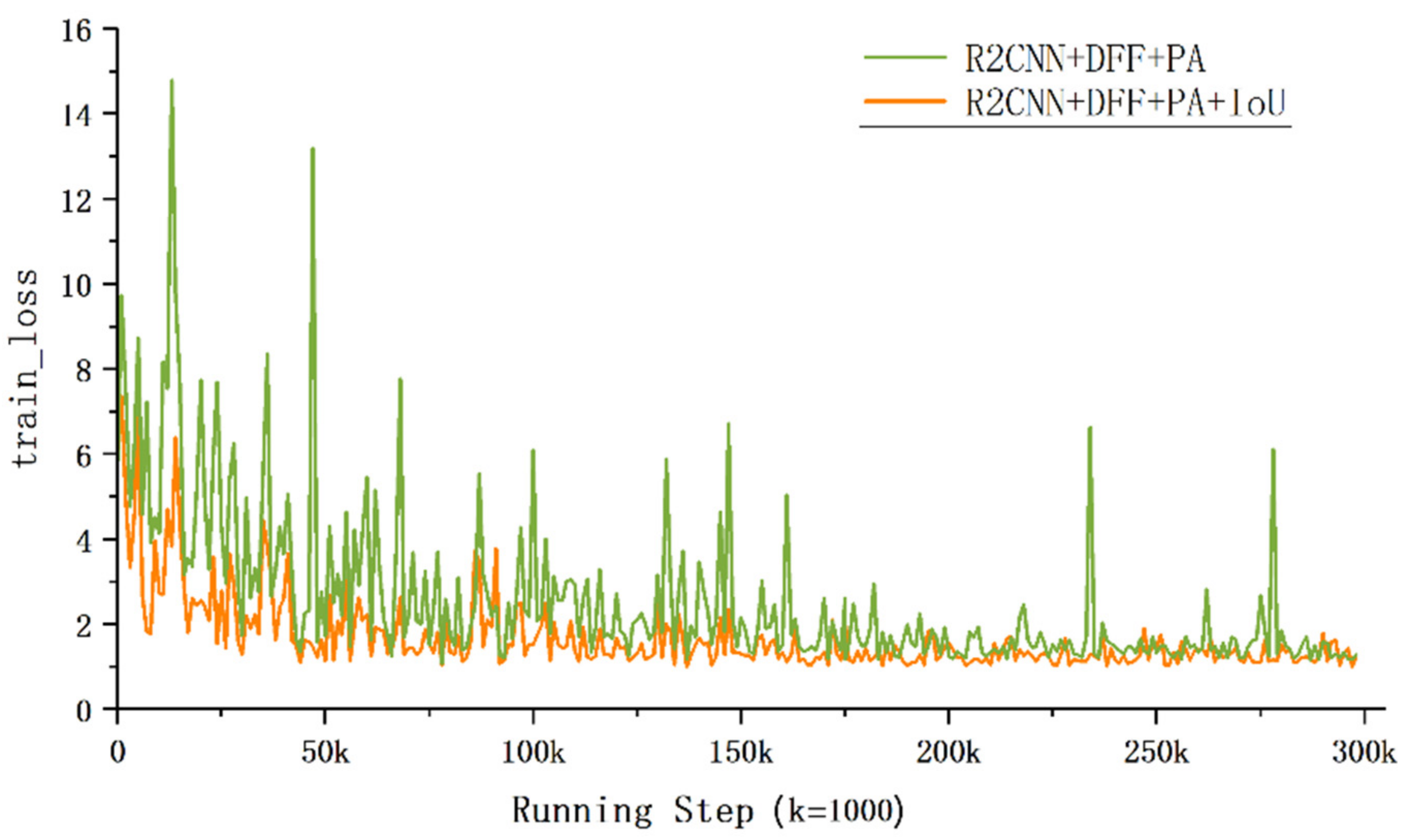

4.1.3. Effect of the IoU Loss Function

4.2. Result on the Rural Building Dataset

4.2.1. RD Task

4.2.2. HD Task

5. Discussions

5.1. Comparison of Similar Studies and the Contribution of TRDet

5.2. Comparison of Different Models

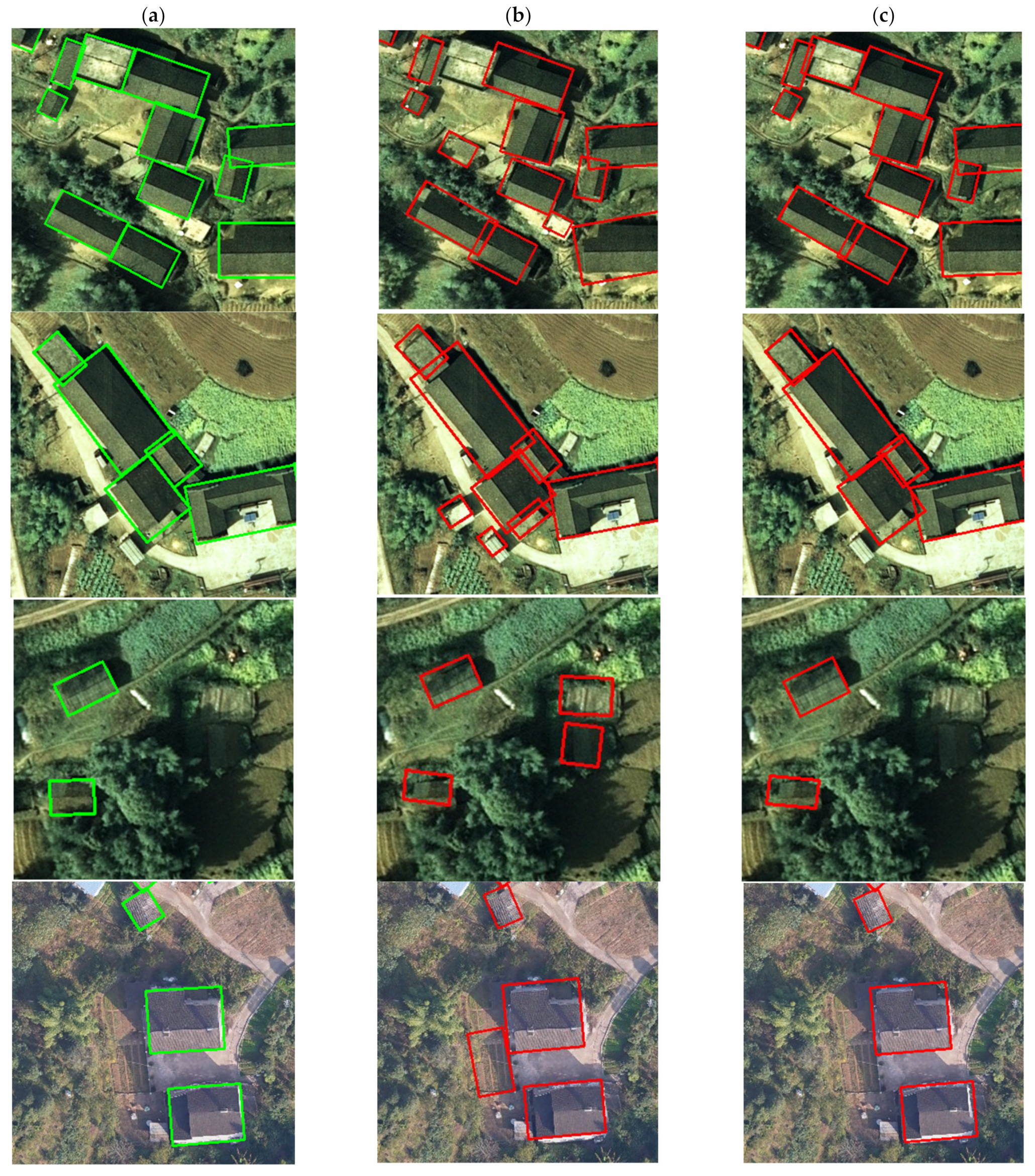

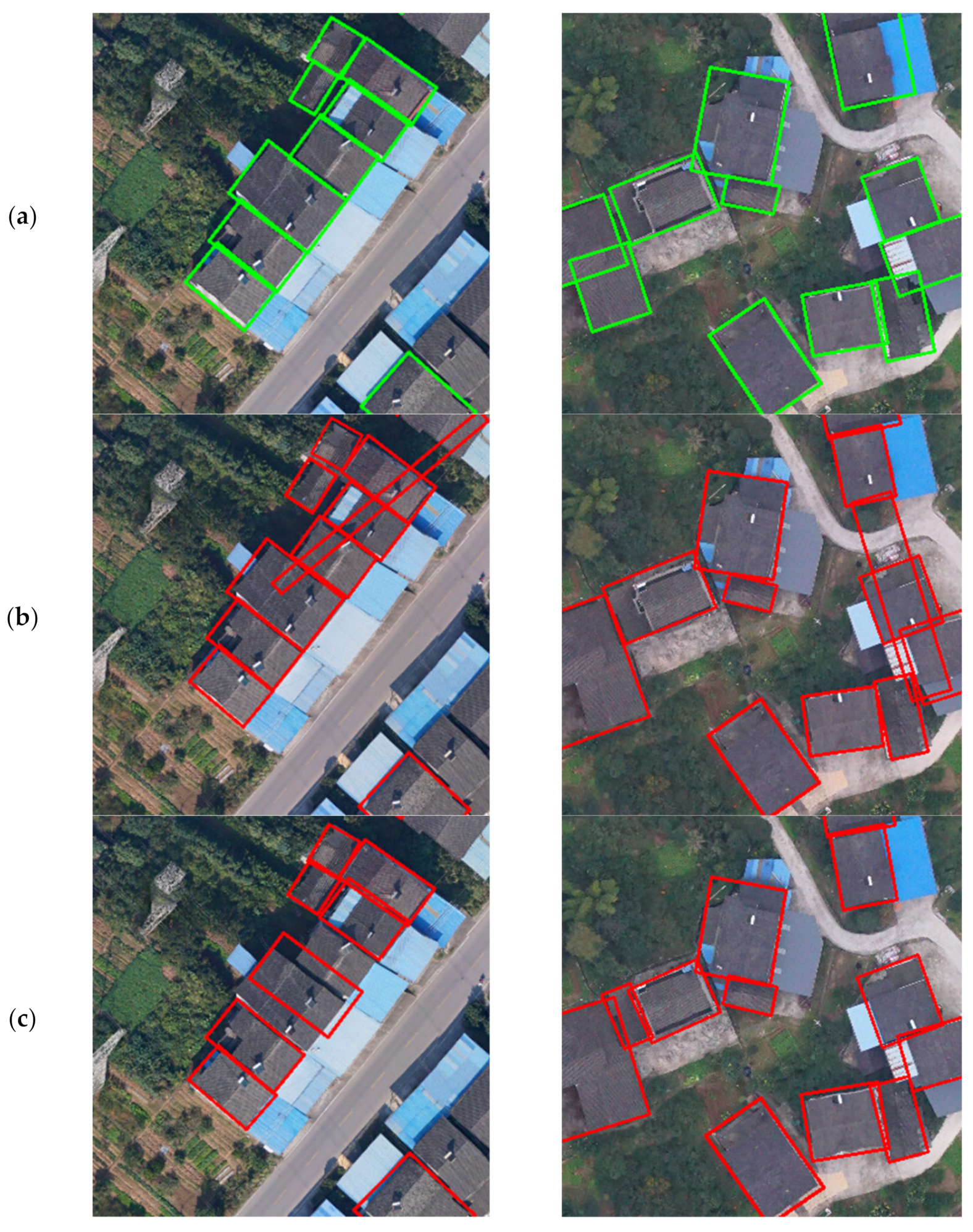

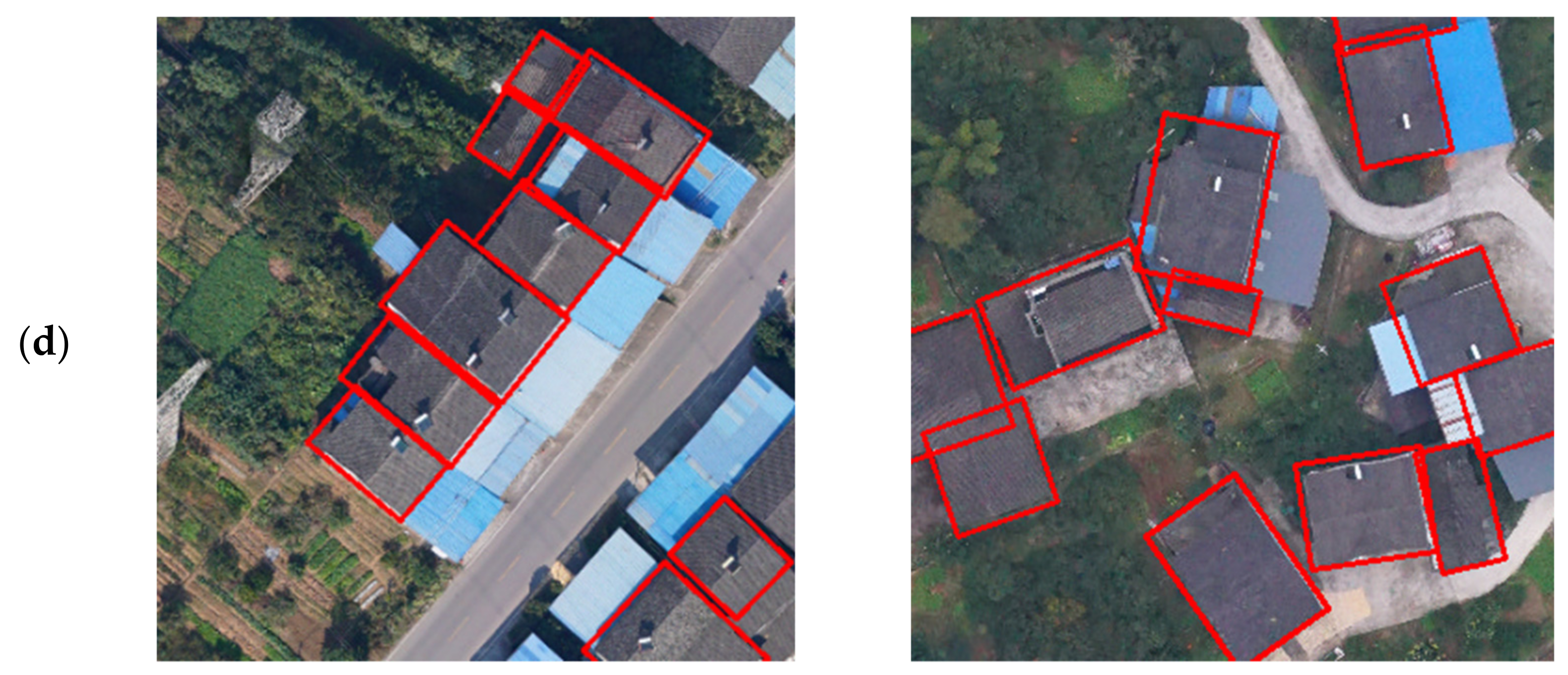

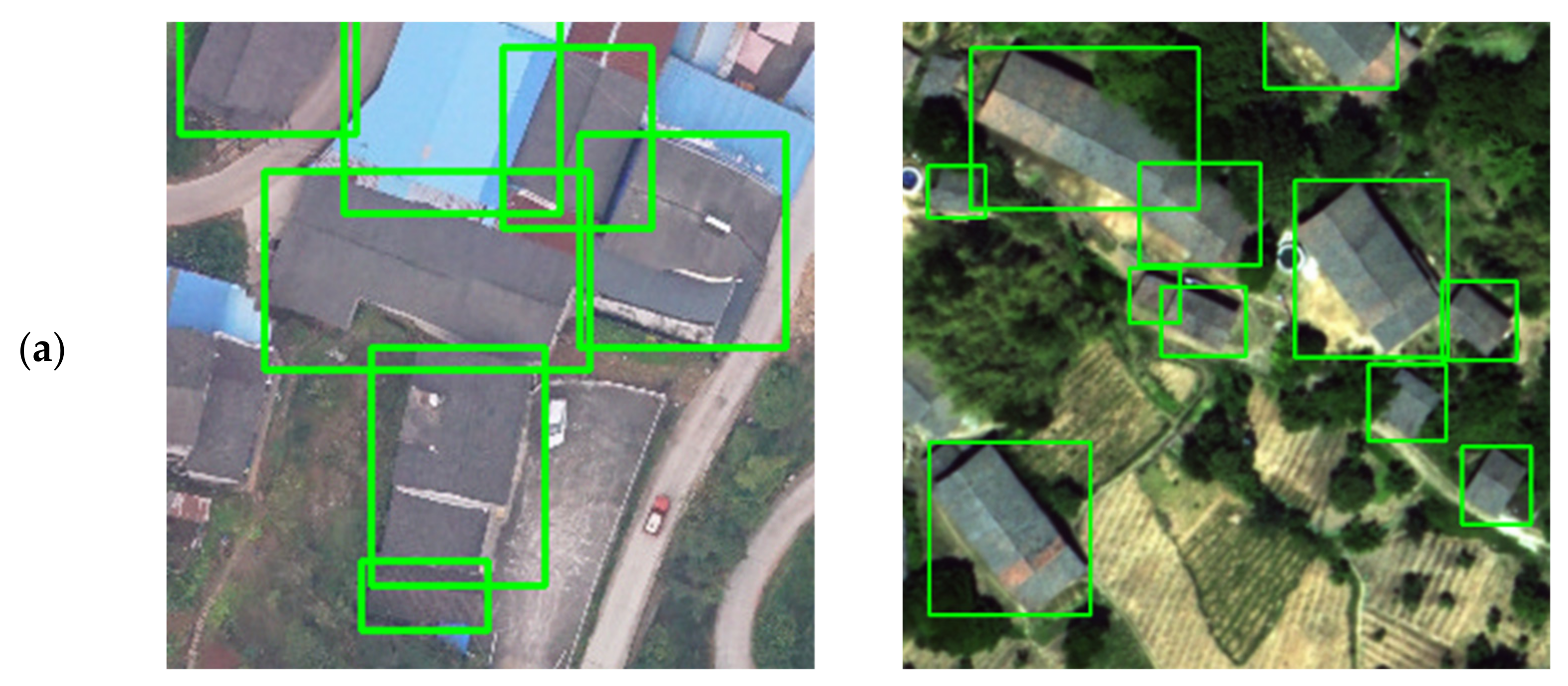

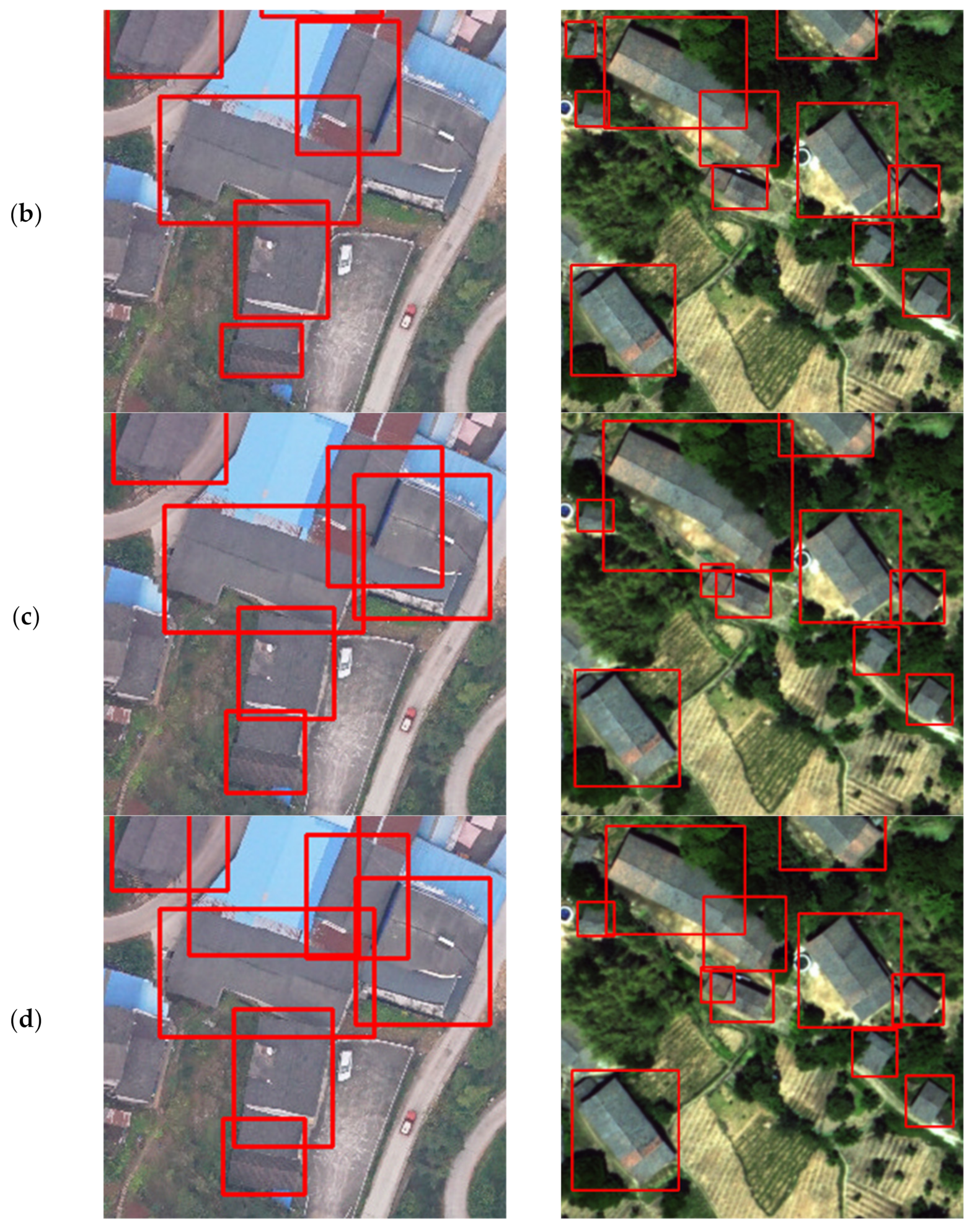

- In rural building detection, building size may vary greatly due to different altitudes. The proposed DFF-Net can extract rural buildings at different scales. Unlike traditional feature fusion methods, the feature map produced by DFM integrates information from two scales. Compared with ordinary channel expansion, DFM better balances semantic information and location information and obtains a larger receptive field. FPN, a common feature extraction network, has a complex structure and a large number of parameters. In the HD task, the mAP of TRDet was 11.64% higher than that of FPN, the P was close (91.11% vs. 90.20%), and the R was 12.89% higher. DFF-Net uses only the feature maps of the C3 and C4 layers, ignoring the other, less relevant bottom-layer features, and adds only a small number of parameters. In Table 4, the mAP of our DFF-Net was 3.03% higher than that of SF-Net (80.60% vs. 77.57%). We attribute this improvement in accuracy to the proposed DFM. As shown in Figure 10, DFF-Net closely fits the contours of large buildings and also captures small buildings.

- Noise in remote sensing images affects the model during training, resulting in false detections and missed detections. Attention mechanisms are a common way to alleviate noise interference, but not all of them are effective. In Section 4.1.2, the mAP decreased by 1.24% after adding SE [46], a typical channel attention module. MDA [28], which also uses channel attention, was likewise unsatisfactory. Specifically, channel attention assigns a different weight to each channel, which raises the weights of simple samples and ignores the information of hard samples, reducing the detection accuracy for hard samples. PAM instead assigns supervised weights to each pixel and constrains the scores of the generated feature maps to between zero and one; this reduces the influence of noise and enhances the information of target objects without eliminating non-object information. It is therefore effective at alleviating false detections and missed detections. In Figure 12, SCRDet cannot effectively distinguish the boundary between two buildings when buildings are densely packed.

- Due to the periodicity of the angle, the traditional smooth L1 loss is prone to a sudden increase at the boundary of the angle range. The IoU loss used in this paper eliminates this surge under the boundary condition. As shown in Figure 8, the IoU loss accurately evaluates the loss of the predicted box relative to the ground-truth box during training.
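The boundary problem in the last point can be made concrete with a small numerical sketch. It compares a smooth L1 penalty on the raw angle difference with an IoU-based penalty for two nearly identical rotated boxes whose angles sit on opposite sides of the angle boundary. Several things here are assumptions for illustration only: the `(cx, cy, w, h, angle in degrees)` box format, the 180° period, the `-log(IoU)` form of the loss, and all function names. The paper's exact loss is not reproduced in this section. The polygon clipping is a plain Sutherland-Hodgman routine and does not handle degenerate cases such as exactly coincident edges.

```python
import math


def smooth_l1(diff, beta=1.0):
    """Standard smooth L1 penalty on a scalar difference."""
    a = abs(diff)
    return 0.5 * a * a / beta if a < beta else a - 0.5 * beta


def corners(cx, cy, w, h, theta_deg):
    """Corner points of a rotated rectangle in counter-clockwise order."""
    t = math.radians(theta_deg)
    c, s = math.cos(t), math.sin(t)
    half = [(-w / 2, -h / 2), (w / 2, -h / 2), (w / 2, h / 2), (-w / 2, h / 2)]
    return [(cx + x * c - y * s, cy + x * s + y * c) for x, y in half]


def area(poly):
    """Polygon area via the shoelace formula."""
    n = len(poly)
    return abs(sum(poly[i][0] * poly[(i + 1) % n][1]
                   - poly[(i + 1) % n][0] * poly[i][1] for i in range(n))) / 2


def clip(subject, clipper):
    """Clip a convex polygon against a convex counter-clockwise polygon."""
    out = subject
    for i in range(len(clipper)):
        a, b = clipper[i], clipper[(i + 1) % len(clipper)]

        def side(p):
            # Positive when p lies to the left of edge a->b (inside).
            return (b[0] - a[0]) * (p[1] - a[1]) - (b[1] - a[1]) * (p[0] - a[0])

        inp, out = out, []
        for j in range(len(inp)):
            p, q = inp[j], inp[(j + 1) % len(inp)]
            sp, sq = side(p), side(q)
            if sp >= 0:
                out.append(p)
            if sp * sq < 0:  # edge p->q crosses the clipping line
                t = sp / (sp - sq)
                out.append((p[0] + t * (q[0] - p[0]), p[1] + t * (q[1] - p[1])))
        if not out:
            return []
    return out


def rotated_iou(box_a, box_b):
    """IoU of two rotated boxes given as (cx, cy, w, h, theta_deg)."""
    pa, pb = corners(*box_a), corners(*box_b)
    inter_poly = clip(pa, pb)
    inter = area(inter_poly) if len(inter_poly) >= 3 else 0.0
    return inter / (area(pa) + area(pb) - inter)


# Two boxes that differ by only 2 degrees physically (hypothetical values),
# but whose angle parameters lie on opposite sides of the boundary.
gt = (0.0, 0.0, 10.0, 4.0, -89.0)
pred = (0.0, 0.0, 10.0, 4.0, 89.0)

angle_loss = smooth_l1(math.radians(pred[4] - gt[4]))  # raw 178 degree gap
iou = rotated_iou(gt, pred)
iou_loss = -math.log(iou)  # assumed IoU-based penalty

print(f"smooth L1 on raw angle: {angle_loss:.3f}")
print(f"IoU: {iou:.3f}, -log(IoU): {iou_loss:.3f}")
```

In this toy case the regression penalty on the angle is large even though the boxes almost coincide, while the IoU-based penalty stays small because it depends only on the overlap of the two boxes.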

5.3. Future Work

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Zhu, Y.; Luo, P.; Zhang, S.; Sun, B. Spatiotemporal Analysis of Hydrological Variations and Their Impacts on Vegetation in Semiarid Areas from Multiple Satellite Data. Remote Sens. 2020, 12, 4177.

- Duan, W.; Maskey, S.; Chaffe, P.; Luo, P.; He, B.; Wu, Y.; Hou, J. Recent advancement in remote sensing technology for hydrology analysis and water resources management. Remote Sens. 2021, 13, 1097.

- Modica, G.; De Luca, G.; Messina, G.; Praticò, S. Comparison and assessment of different object-based classifications using machine learning algorithms and UAVs multispectral imagery: A case study in a citrus orchard and an onion crop. Eur. J. Remote Sens. 2021, 54, 431–460.

- Parks, S.A.; Holsinger, L.M.; Koontz, M.J.; Collins, L.; Whitman, E.; Parisien, M.-A.; Loehman, R.A.; Barnes, J.L.; Bourdon, J.-F.; Boucher, J.; et al. Giving Ecological Meaning to Satellite-Derived Fire Severity Metrics across North American Forests. Remote Sens. 2019, 11, 1735.

- Weiers, S.; Bock, M.; Wissen, M.; Rossner, G. Mapping and indicator approaches for the assessment of habitats at different scales using remote sensing and GIS methods. Landsc. Urban Plan. 2004, 67, 43–65.

- Solano, F.; Colonna, N.; Marani, M.; Pollino, M. Geospatial analysis to assess natural park biomass resources for energy uses in the context of the Rome metropolitan area. In International Symposium on New Metropolitan Perspectives; Springer: Cham, Switzerland, 2019; Volume 100, pp. 173–181.

- Esch, T.; Heldens, W.; Hirner, A.; Keil, M.; Marconcini, M.; Roth, A.; Zeidler, J.; Dech, S.; Strano, E. Breaking new ground in mapping human settlements from space–The Global Urban Footprint. ISPRS J. Photogramm. Remote Sens. 2017, 134, 30–42.

- Fang, L. Study on Evolution Process and Optimal Regulation of Rural Homestead in Guangxi Based on the Differentiation of Farmers’ Livelihoods. Master’s Thesis, Nanning Normal University, Nanning, China, 2019.

- Zheng, W. Design and Implementation of Rural Homestead Registration Management System. Master’s Thesis, University of Electronic Science and Technology of China, Chengdu, China, 2012.

- Wei, X.; Wang, N.; Luo, P.; Yang, J.; Zhang, J.; Lin, K. Spatiotemporal Assessment of Land Marketization and Its Driving Forces for Sustainable Urban–Rural Development in Shaanxi Province in China. Sustainability 2021, 13, 7755.

- Li, L.; Zhu, J.; Cheng, G.; Zhang, B. Detecting High-Rise Buildings from Sentinel-2 Data Based on Deep Learning Method. Remote Sens. 2021, 13, 4073.

- Ji, M.; Liu, L.; Buchroithner, M. Identifying Collapsed Buildings Using Post-Earthquake Satellite Imagery and Convolutional Neural Networks: A Case Study of the 2010 Haiti Earthquake. Remote Sens. 2018, 10, 1689.

- Tian, X.; Wang, L.; Ding, Q. Review of Image Semantic Segmentation Based on Deep Learning. J. Softw. 2019, 30, 440–468.

- Boonpook, W.; Tan, Y.; Ye, Y.; Torteeka, P.; Torsri, K.; Dong, S. A Deep Learning Approach on Building Detection from Unmanned Aerial Vehicle-Based Images in Riverbank Monitoring. Sensors 2018, 18, 3921.

- Wu, G.; Shao, X.; Guo, Z.; Chen, Q.; Yuan, W.; Shi, X.; Xu, Y.; Shibasaki, R. Automatic Building Segmentation of Aerial Imagery Using Multi-Constraint Fully Convolutional Networks. Remote Sens. 2018, 10, 407.

- Marin, C.; Bovolo, F.; Bruzzone, L. Building change detection in multitemporal very high resolution SAR images. IEEE Trans. Geosci. Remote Sens. 2014, 53, 2664–2682.

- Saito, S.; Aoki, Y. Building and road detection from large aerial imagery. In Image Processing: Machine Vision Applications VIII. San Francisco, CA, USA, 27 February 2015; p. 94050. Available online: https://spie.org/Publications/Proceedings/Paper/10.1117/12.2083273?SSO=1 (accessed on 26 December 2021).

- Guo, Z.; Wu, G.; Song, X.; Yuan, W.; Chen, Q.; Zhang, H.; Shi, X.; Xu, M.; Xu, Y.; Shibasaki, R.; et al. Super-Resolution Integrated Building Semantic Segmentation for Multi-Source Remote Sensing Imagery. IEEE Access 2019, 7, 99381–99397.

- Chen, J.; Wang, C.; Zhang, H.; Wu, F.; Zhang, B.; Lei, W. Automatic Detection of Low-Rise Gable-Roof Building from Single Submeter SAR Images Based on Local Multilevel Segmentation. Remote Sens. 2017, 9, 263.

- Prathap, G.; Afanasyev, I. Deep learning approach for building detection in satellite multispectral imagery. In Proceedings of the 2018 International Conference on Intelligent Systems (IS), Funchal, Portugal, 25–27 September 2018; pp. 461–465.

- He, K.; Gkioxari, G.; Dollár, P.; Girshick, R. Mask R-CNN. In Proceedings of the IEEE International Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 2961–2969.

- Zhang, L.; Wu, J.; Fan, Y.; Gao, H.; Shao, Y. An Efficient Building Extraction Method from High Spatial Resolution Remote Sensing Images Based on Improved Mask R-CNN. Sensors 2020, 20, 1465.

- Li, D.; He, W.; Guo, B.; Li, M.; Chen, M. Building target detection algorithm based on Mask-RCNN. Sci. Surv. Mapp. 2019, 44, 172–180.

- Han, Q.; Yin, Q.; Zheng, X.; Chen, Z. Remote sensing image building detection method based on Mask R-CNN. Complex Intell. Syst. 2021, 27, 1–9.

- Ma, H.; Liu, Y.; Ren, Y.; Yu, J. Detection of Collapsed Buildings in Post-Earthquake Remote Sensing Images Based on the Improved YOLOv3. Remote Sens. 2020, 12, 44.

- Feng, J.; Hu, X. An Automatic Building Detection Method of Remote Sensing Image Based on Cascade R-CNN. J. Geomat. 2021, 46, 53–58.

- Dong, B.; Xiong, F.; Han, X.; Kuan, L.; Xu, Q. Research on Remote Sensing Building Detection Based on Improved Yolo v3 Algorithm. Comput. Eng. Appl. 2020, 56, 209–213.

- Yang, X.; Yang, J.; Yan, J.; Zhang, Y.; Zhang, T.; Guo, Z.; Sun, X.; Fu, K. Scrdet: Towards more robust detection for small, cluttered and rotated objects. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Korea, 27 October–2 November 2019; pp. 8231–8240.

- Tan, Z.; Zhang, Z.; Xing, T.; Huang, X.; Gong, J.; Ma, J. Exploit Direction Information for Remote Ship Detection. Remote Sens. 2021, 13, 2155.

- Han, J.; Ding, J.; Xue, N.; Xia, G. Redet: A rotation-equivariant detector for aerial object detection. In Proceedings of the IEEE International Conference on Computer Vision and Pattern Recognition, Online, 19–25 June 2021; pp. 2786–2795.

- Ding, J.; Xue, N.; Long, Y.; Xia, G.; Lu, Q. Learning roi transformer for oriented object detection in aerial images. In Proceedings of the IEEE International Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–21 June 2019; pp. 2849–2858.

- Zhou, X.; Yao, C.; Wen, H.; Wang, Y.; Zhou, S.; He, W.; Liang, J. East: An efficient and accurate scene text detector. In Proceedings of the IEEE International Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 5551–5560.

- Liao, M.; Shi, B.; Bai, X. Textboxes++: A single-shot oriented scene text detector. IEEE Trans. Image Processing 2018, 27, 3676–3690.

- Chen, Z.; Chen, K.; Lin, W.; See, J.; Yu, H.; Ke, Y.; Yang, C. PIoU Loss: Towards Accurate Oriented Object Detection in Complex Environments. In Proceedings of the European Conference on Computer Vision, Glasgow, UK, 23–28 August 2020; pp. 195–211.

- Pan, X.; Ren, Y.; Sheng, K.; Dong, W.; Yuan, H.; Guo, X.; Ma, C.; Xu, C. Dynamic Refinement Network for Oriented and Densely Packed Object Detection. In Proceedings of the IEEE International Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 16–18 June 2020; pp. 11207–11216.

- Yang, X.; Yan, J.; Feng, Z.; He, T. R3det: Refined single-stage detector with feature refinement for rotating object. In Proceedings of the AAAI Conference on Artificial Intelligence, New York, NY, USA, 2–9 February 2021; pp. 3163–3171.

- Ma, J.; Shao, W.; Ye, H.; Wang, L.; Zheng, Y.; Xue, X. Arbitrary-oriented scene text detection via rotation proposals. IEEE Trans. Multimed. 2018, 20, 3111–3122.

- Jiang, Y.; Zhu, X.; Wang, X.; Yang, S.; Li, W.; Wang, H.; Fu, P.; Luo, Z. R2cnn: Rotational region cnn for orientation robust scene text detection. arXiv 2017, arXiv:1706.09579.

- Zhang, G.; Lu, S.; Zhang, W. CAD-Net: A context-aware detection network for objects in remote sensing imagery. IEEE Trans. Geosci. Remote Sens. 2019, 57, 10015–10024.

- Zhu, C.; Tao, R.; Luu, K.; Savvides, M. Seeing small faces from robust anchor’s perspective. In Proceedings of the IEEE International Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 5127–5136.

- Xia, G.; Bai, X.; Ding, J.; Zhu, Z.; Belongie, S.; Luo, J.; Datcu, M.; Pelillo, M.; Zhang, L. DOTA: A large-scale dataset for object detection in aerial images. In Proceedings of the IEEE International Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 3974–3983.

- Kisantal, M.; Wojna, Z.; Murawski, J.; Naruniec, J.; Cho, K. Augmentation for small object detection. arXiv 2019, arXiv:1902.07296.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE International Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Lin, T.; Dollar, P.; Girshick, R.; He, K.; Hariharan, B.; Belongie, S. Feature pyramid networks for object detection. In Proceedings of the IEEE International Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 2117–2125.

- Hou, Q.; Zhou, D.; Feng, J. Coordinate attention for efficient mobile network design. In Proceedings of the IEEE International Conference on Computer Vision and Pattern Recognition, Online, 19–25 June 2021; pp. 13713–13722.

- Hu, J.; Shen, L.; Sun, G. Squeeze-and-excitation networks. In Proceedings of the IEEE International Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 7132–7141.

- Woo, S.; Park, J.; Lee, J.; Kweon, I.-S. Cbam: Convolutional block attention module. In Proceedings of the European Conference on Computer Vision, Munich, Germany, 8–14 September 2018; pp. 3–19.

- Girshick, R. Fast R-CNN. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 13–16 December 2015; pp. 1440–1448.

- Abadi, M.; Barham, P.; Chen, J.; Chen, Z.; Davis, A.; Dean, J.; Devin, M.; Ghemawat, S.; Irving, G.; Isard, M.; et al. Tensorflow: A system for large-scale machine learning. In Proceedings of the Symposium on Operating Systems Design and Implementation, Savannah, GA, USA, 2–4 November 2016; pp. 265–283.

- Dickenson, M.; Gueguen, L. Rotated Rectangles for Symbolized Building Footprint Extraction. In Proceedings of the IEEE International Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 225–228.

- Wen, Q.; Jiang, K.; Wang, W.; Liu, Q.; Guo, Q.; Li, L.; Wang, P. Automatic Building Extraction from Google Earth Images under Complex Backgrounds Based on Deep Instance Segmentation Network. Sensors 2019, 19, 333.

| | Image Quantity | RBox Quantity | Avg. RBox Quantity |
|---|---|---|---|
| Training Set | 2196 | 21,144 | 9.6 |
| Validation Set | 280 | 2771 | 9.9 |

| | Small Objects | Medium Objects | Large Objects |
|---|---|---|---|
| Training Set | 468 | 18,420 | 2256 |
| Validation Set | 58 | 2384 | 329 |

| SA | 4 | 8 | 16 |
|---|---|---|---|
| RD mAP (%) | 80.61 | 80.60 | 80.43 |
| HD mAP (%) | 81.88 | 81.62 | 81.47 |
| Train time (s) | 3.12 | 1.69 | 0.87 |

| Model | R (%) | P (%) | F1 (%) | mAP (%) |
|---|---|---|---|---|
| R2CNN (baseline) [38] | 82.49 | 75.57 | 78.87 | 68.57 |
| + SF-Net [28] | 83.89 | 89.06 | 86.39 | 77.57 |
| + DFF-Net | 84.66 | 89.61 | 87.06 | 80.60 |
| + DFF-Net + MDA [28] | 85.13 | 89.55 | 87.28 | 81.16 |
| + DFF-Net + SE [46] + PA | 84.98 | 89.74 | 87.29 | 81.10 |
| + DFF-Net + PA | 85.45 | 90.27 | 87.79 | 82.34 |
| + DFF-Net + PA + IoU | 86.50 | 91.11 | 88.74 | 83.57 |
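To make the ablation easier to read, the short sketch below computes the mAP gain contributed by each added component, using only the mAP values listed in the table above. The variable and function names are illustrative assumptions.

```python
# mAP values (%) copied from the ablation table above.
ablation_map = [
    ("R2CNN (baseline)", 68.57),
    ("+ DFF-Net", 80.60),
    ("+ DFF-Net + PA", 82.34),
    ("+ DFF-Net + PA + IoU", 83.57),
]


def incremental_gains(rows):
    """Return (label, gain over the previous row) in percentage points."""
    return [(rows[i][0], round(rows[i][1] - rows[i - 1][1], 2))
            for i in range(1, len(rows))]


gains = incremental_gains(ablation_map)
for label, gain in gains:
    print(f"{label}: +{gain:.2f} points mAP")
```

Most of the improvement over the baseline comes from the feature fusion network; the attention module and the IoU loss each add a smaller gain on top.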

| Method | R (%) | P (%) | F1 (%) | mAP (%) |
|---|---|---|---|---|
| R2CNN | 82.49 | 75.57 | 78.87 | 68.57 |
| R3Det | 85.96 | 82.64 | 84.26 | 77.35 |
| SCRDet | 84.04 | 90.41 | 87.10 | 80.50 |
| Ours (TRDet) | 86.50 | 91.11 | 88.74 | 83.57 |

| Method | R (%) | P (%) | F1 (%) | mAP (%) |
|---|---|---|---|---|
| R2CNN | 77.84 | 83.63 | 78.43 | 69.87 |
| FPN | 75.88 | 90.20 | 82.42 | 74.57 |
| SCRDet | 85.81 | 90.69 | 88.18 | 82.66 |
| Ours (TRDet) | 88.77 | 91.11 | 89.92 | 86.21 |
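As a worked check on how the columns relate, the sketch below recomputes F1 as the harmonic mean of P and R for the FPN and TRDet rows of the HD table above, and derives the mAP and recall gaps between the two methods. The harmonic-mean definition is the standard one and is assumed here, since the metric definitions are not reproduced in this section; all names are illustrative.

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall (same units as the inputs)."""
    return 2 * precision * recall / (precision + recall)


# (R, P, reported F1, mAP) in percent, copied from the HD table above.
hd_rows = {
    "FPN": (75.88, 90.20, 82.42, 74.57),
    "Ours (TRDet)": (88.77, 91.11, 89.92, 86.21),
}

computed_f1 = {name: f1_score(p, r) for name, (r, p, _, _) in hd_rows.items()}
for name, (r, p, reported, _) in hd_rows.items():
    print(f"{name}: computed F1 = {computed_f1[name]:.2f}, reported = {reported:.2f}")

# Gaps between TRDet and FPN in percentage points.
map_gap = round(hd_rows["Ours (TRDet)"][3] - hd_rows["FPN"][3], 2)
recall_gap = round(hd_rows["Ours (TRDet)"][0] - hd_rows["FPN"][0], 2)
print(f"mAP gap: {map_gap:.2f}, recall gap: {recall_gap:.2f}")
```

Because precision is nearly equal for the two methods, the F1 difference between them is driven almost entirely by recall.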


© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Peng, B.; Ren, D.; Zheng, C.; Lu, A. TRDet: Two-Stage Rotated Detection of Rural Buildings in Remote Sensing Images. Remote Sens. 2022, 14, 522. https://doi.org/10.3390/rs14030522
