Abstract

Speckle is an interference phenomenon that contaminates images captured by coherent illumination systems. Due to its multiplicative and non-Gaussian nature, it is challenging to eliminate. The non-local means approach to noise reduction has proven flexible and provides good results. In this work, we propose a new non-local means filter for single-look speckled data using the Shannon and Rényi entropies under the $\mathcal{G}_I^0$ model. We obtain the necessary mathematical apparatus (the Fisher information matrix and the asymptotic variance of maximum likelihood estimators). The similarity between patch samples relies on a parametric statistical test that assesses whether two samples have the same entropy. Then, we build the convolution mask by transforming the p-values into weights with a smooth activation function. The results are encouraging: the filtered images have a better signal-to-noise ratio, they preserve the mean, and the edges are not severely blurred. The proposed algorithm is compared with three successful filters, SRAD (Speckle Reducing Anisotropic Diffusion), Lee, and FANS (Fast Adaptive Nonlocal SAR Despeckling), showing the new method’s competitiveness.

1. Introduction

SAR (Synthetic Aperture Radar) images are widely used in many environmental monitoring applications because they offer advantages over optical remote sensing images, such as an acquisition capability that is independent of sunlight and weather conditions. In SAR imagery, the interference of waves reflected during the acquisition process gives rise to a multiplicative and non-Gaussian noise known as speckle [1], which degrades the visual quality of the image and hinders its interpretation. The purpose of noise removal is to facilitate automatic image understanding without blurring edges or obliterating small details. Reducing speckle benefits SAR image visual interpretation, region-based detection, segmentation, and classification, among other applications [2]. However, classical noise reduction methods are not adequate for SAR denoising, since these data are heavy-tailed and outlier-prone [3,4]. These features make it essential to model SAR images with suitable statistical distributions. Several probability distribution models for SAR data exist in the literature; [5] presents a summary of this topic.

The classic Lee filter for SAR image denoising has been widely used since it was presented in [6,7]. It slides a window over the image and adjusts the value of each pixel according to the coefficient of variation inside the window. It was later improved by incorporating the data asymmetry [8].
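The window-based mechanism of the classic Lee filter can be sketched as follows. This is a minimal NumPy illustration of the commonly used simplified gain form, not the exact formulation of [6,7] nor the Enhanced Lee filter [8]; the function name `lee_filter` and its defaults are hypothetical. Each output pixel is the local mean plus a gain times the deviation from it, and the gain compares the local squared coefficient of variation $C_z^2$ with that of unit-mean $L$-look speckle, $C_u^2 = 1/L$.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def lee_filter(z, size=7, looks=1.0):
    """Simplified classic Lee filter (illustrative sketch, hypothetical helper).

    z: 2-D intensity image; size: odd window side; looks: nominal number of looks L.
    """
    pad = size // 2
    zp = np.pad(z, pad, mode="reflect")
    win = sliding_window_view(zp, (size, size))   # shape (rows, cols, size, size)
    mean = win.mean(axis=(-2, -1))                # local mean
    var = win.var(axis=(-2, -1))                  # local variance
    cu2 = 1.0 / looks                             # squared coefficient of variation of speckle
    cz2 = var / np.maximum(mean ** 2, 1e-12)      # local squared coefficient of variation
    # Gain: near 0 in homogeneous areas (cz2 ~ cu2), closer to 1 on edges and bright targets.
    k = np.clip(1.0 - cu2 / np.maximum(cz2, 1e-12), 0.0, 1.0)
    return mean + k * (z - mean)
```

In homogeneous areas $C_z^2 \approx C_u^2$, so the gain is close to zero and the pixel is replaced by the local mean; where the local variability exceeds what speckle alone explains, the original value is largely kept.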

A similar type of filter is the Maximum a Posteriori (MAP) based filter, which has been used to reduce the noise of single-look SAR images, modeling the a priori distribution of the backscatter with a Gaussian law [9]. Other distributions, such as and , were utilized in subsequent works [10,11] with relative success because they are applicable only in homogeneous areas [12]. Moschetti et al. [13] compared MAP filters, modeling the data with the , , and K distributions.

Perona and Malik [14] proposed the anisotropic diffusion filter. This approach had a huge impact on the imaging community because it works in scale space and is efficient at preserving edges; however, it was developed for eliminating Gaussian noise and is therefore not suitable for multiplicative speckle noise. Based on this idea, Yu and Acton [15] proposed SRAD (Speckle Reducing Anisotropic Diffusion), an anisotropic diffusion filter specialized for speckled data. It is based on a mathematical model of speckle noise, which is removed by solving a partial differential equation.

Cozzolino et al. [16] proposed the FANS (Fast Adaptive Non-local SAR Despeckling) filter. It is based on wavelets, and it is widely used by the SAR image processing community for its speed and good performance.

Buades et al. [17] introduced the Non-local Means (NLM) approach. These filters use the information of a group of pixels surrounding a target pixel to smooth the image with a large convolution mask. They take an average of all pixels in the mask, weighted by a similarity measure between these pixels and the center of the mask. The NLM approach has been utilized in many image filtering developments. For example, Duval et al. [18] enhanced this idea, highlighting the importance of choosing local parameters to balance the bias and the variance of the filter. Delon and Desolneux [19] addressed the problem of recovering an image contaminated with a mixture of Gaussian and impulse noise with a patch-based method that relies on the NLM idea. Lebrun et al. [20] proposed a Bayesian version of this approach, and Torres et al. [21] built non-local means filters for polarimetric SAR data by comparing samples with stochastic distances between Wishart models. More recently, Refs. [22,23] compared samples using the properties of ratios between observations and enhanced the filter performance with anisotropic diffusion despeckling.

Argenti et al. [24] made an extensive review of despeckling methods. The authors compared various algorithms, including non-local, Bayesian, non-Bayesian, total variation, and wavelet-based filters.

Non-local means filters rely on two types of transformations to compare the samples, namely: (i) Pointwise comparisons, e.g., the norm or the ratio of the observations [22] (which requires samples of the same size); and (ii) parameter estimation. In this article, we propose a technique in line with parameter estimation.

This diversity of proposals calls for criteria to evaluate the quality of speckle filters quantitatively. With this objective, Gómez Déniz et al. [25] proposed the $M$ index, which is a good choice because it does not require a reference image and is tailored to SAR data.

Due to the stochastic nature of speckle noise, the statistical modeling of SAR data is central to image analysis and speckle reduction. The multiplicative model is one of the most widely used descriptions. It states that the observed data can be modeled by a random variable $Z = XY$, the product of two independent random variables: $X$, which describes the backscatter, and $Y$, which models the speckle noise. Yue et al. [26,27] provide a comprehensive account of the models that arise from this assumption. Following the multiplicative model, Frery et al. [28] introduced the $\mathcal{G}^0$ distribution, which has been widely used for SAR data analysis. It is referred to as a universal model because of its flexibility and tractability [29], and it is suitable for modeling areas with different degrees of texture, reflectivity, and signal-to-noise ratio.

Three parameters index the $\mathcal{G}_I^0$ distribution: $\alpha$, related to the target texture; $\gamma$, related to the brightness and called the scale parameter; and $L$, the number of looks, which describes the signal-to-noise ratio. The first two may vary among positions, while the latter can be considered constant over the whole image and can be known or estimated.

The entropy, which is a measure of a system’s disorder, is a central concept to information theory [30]. The Shannon entropy has been widely applied in statistics, image processing, and even SAR image analysis [31]. Kullback and Leibler [32] and Rényi [33], among others, studied in depth the properties of several forms of entropy. Two or more random samples may be compared with test statistics based on several forms of entropy, and this is the approach we will follow.

Chan et al. [34] presented the first attempt at using the entropy as the driving measure in a non-local means approach for speckle reduction. In this work we advance this idea by:

- Assessing and solving numerical errors that may appear when inverting the Fisher information matrix;

- Using a smooth transformation between p-values and weights that improves the results;

- Evaluating the filter performance with a metric that takes into account first- and second-order statistics;

- Applying the filter to actual SAR images;

- Comparing it with state-of-the-art filters.

In this work, following the results by Salicrú et al. [35], we develop two statistical tests to evaluate whether two random samples have the same entropy. These tests are based on the Shannon and Rényi entropies under the $\mathcal{G}_I^0$ distribution. With this information, we propose a non-local means filter for noise reduction in speckled images. We obtain the mathematical apparatus needed to define such filters, namely the Fisher information matrix of the $\mathcal{G}_I^0$ law and the asymptotic variance of its maximum likelihood estimators.

We evaluate the performance of these entropy-based non-local means filters using simulated data and an actual single-look SAR image. The results of the new algorithm are competitive with those of the Lee, SRAD, and FANS filters and, in some cases, better. Moreover, we show that building a non-local means filter with a proxy, such as the entropy, is a feasible approach.

This article unfolds as follows. In Section 2, some properties of the $\mathcal{G}^0$ distribution for intensity-format SAR data are recalled, which lead to the $\mathcal{G}_I^0$ distribution. Section 3 introduces the formulae of the Shannon and Rényi entropies under the $\mathcal{G}_I^0$ model, the asymptotic distribution of the entropies, and the hypothesis tests based on entropies. In Section 4, the details of the proposed entropy-based non-local means filters are explained. Section 5 presents measures for assessing the performance of speckle filters. In Section 6, the results of applying the proposed despeckling algorithm to synthetic and actual data are shown, along with those of the FANS, Lee, and SRAD methods, and their relative performance is assessed. Finally, in Section 7 some conclusions are drawn. Appendix A provides information about the computational platform and points to the provided code and data.

2. The $\mathcal{G}_I^0$ Distribution

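Speckle noise $Y$ follows a Gamma distribution with unit mean, with density:

$$f_Y(y;L) = \frac{L^L}{\Gamma(L)}\,y^{L-1}\exp(-Ly), \quad y>0,$$

denoted by $Y\sim\Gamma(L,L)$. The physics of image formation imposes $L\geq 1$.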

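The model for the backscatter $X$ may be any distribution with positive support. Frery et al. [28] proposed using the reciprocal gamma law, a particular case of the generalized inverse Gaussian distribution, which is characterized by the density:

$$f_X(x;\alpha,\gamma) = \frac{1}{\gamma^{\alpha}\,\Gamma(-\alpha)}\,x^{\alpha-1}\exp\left(-\frac{\gamma}{x}\right), \quad x>0,$$

where $\alpha<0$ and $\gamma>0$ are the texture and the scale parameters, respectively. Under the multiplicative model, the return $Z=XY$ follows a $\mathcal{G}_I^0(\alpha,\gamma,L)$ distribution, whose density is:

$$f_Z(z;\alpha,\gamma,L) = \frac{L^L\,\Gamma(L-\alpha)}{\gamma^{\alpha}\,\Gamma(-\alpha)\,\Gamma(L)}\,\frac{z^{L-1}}{(\gamma+Lz)^{L-\alpha}}, \tag{1}$$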

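where $z>0$ and $L\geq 1$. The $r$-order moments of the $\mathcal{G}_I^0$ distribution are:

$$E(Z^r) = \left(\frac{\gamma}{L}\right)^r \frac{\Gamma(-\alpha-r)\,\Gamma(L+r)}{\Gamma(-\alpha)\,\Gamma(L)}, \tag{2}$$

provided $\alpha<-r$, and infinite otherwise. The $\mathcal{G}_I^0$ distribution also arises when the observation is described as a sum of a random number of returns [26,36].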
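As a numerical sanity check of the multiplicative model and of the moment expression $E(Z^r) = (\gamma/L)^r\,\Gamma(-\alpha-r)\Gamma(L+r)/[\Gamma(-\alpha)\Gamma(L)]$, the sketch below simulates $Z = XY$ and compares empirical and theoretical moments. It assumes $X = \gamma/G$ with $G\sim\Gamma(-\alpha, 1)$ (shape $-\alpha$, unit scale), which has the reciprocal gamma density with texture $\alpha$ and scale $\gamma$, and $Y \sim \Gamma(L, L)$ with unit mean. The function names are hypothetical.

```python
import math

import numpy as np


def gi0_moment(r, alpha, gamma, looks):
    """E(Z^r) for G_I^0(alpha, gamma, L); infinite unless alpha < -r."""
    if alpha >= -r:
        return math.inf
    log_m = (r * math.log(gamma / looks)
             + math.lgamma(-alpha - r) + math.lgamma(looks + r)
             - math.lgamma(-alpha) - math.lgamma(looks))
    return math.exp(log_m)                      # computed in log scale for stability


def simulate_gi0(alpha, gamma, looks, n, rng):
    """Multiplicative model Z = X * Y (hypothetical helper)."""
    x = gamma / rng.gamma(shape=-alpha, scale=1.0, size=n)   # reciprocal gamma backscatter
    y = rng.gamma(shape=looks, scale=1.0 / looks, size=n)     # unit-mean Gamma speckle
    return x * y
```

For example, with $\alpha=-5$, $\gamma=4$, and $L=2$ the mean is exactly 1, and the sample means of $Z$ and $Z^{1/2}$ over a few hundred thousand draws should agree with `gi0_moment` to within about one percent.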

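We will study the noisiest case, which occurs when $L=1$; it is called single-look, and expression (1) becomes:

$$f_Z(z;\alpha,\gamma) = -\alpha\,\gamma^{-\alpha}\,(\gamma+z)^{\alpha-1}, \quad z>0. \tag{3}$$

The expected value is given by:

$$E(Z) = -\frac{\gamma}{\alpha+1}, \quad \text{provided } \alpha<-1. \tag{4}$$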

Chan et al. [37], using a connection between this distribution and certain Pareto laws, studied alternatives for obtaining quality samples.
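The Pareto connection can be made concrete for $L=1$. Integrating Equation (3) gives the survival function $\Pr(Z>z) = (1+z/\gamma)^{\alpha}$, so a single-look $\mathcal{G}_I^0(\alpha,\gamma)$ variable is a Lomax (Pareto type II) variable with shape $-\alpha$ and scale $\gamma$ (the same law SciPy exposes as `scipy.stats.lomax` with `c = -alpha`, `scale = gamma`). Inverting the survival function gives a one-line sampler, $Z = \gamma(U^{1/\alpha}-1)$ with $U$ uniform on $(0,1]$. This is our illustration, not necessarily the sampling procedure studied in [37]; the function names are hypothetical.

```python
import numpy as np


def gi0_survival(z, alpha, gamma):
    """Pr(Z > z) = (1 + z/gamma)^alpha for single-look G_I^0 (alpha < 0, gamma > 0)."""
    return (1.0 + np.asarray(z, dtype=float) / gamma) ** alpha


def gi0_single_look_pdf(z, alpha, gamma):
    """Single-look density: -alpha * gamma^(-alpha) * (gamma + z)^(alpha - 1)."""
    return -alpha * gamma ** (-alpha) * (gamma + np.asarray(z, dtype=float)) ** (alpha - 1.0)


def sample_gi0_single_look(alpha, gamma, n, rng):
    """Inverse-survival sampler: Z = gamma * (U^(1/alpha) - 1)."""
    u = 1.0 - rng.random(n)          # uniform on (0, 1], avoids U = 0
    return gamma * (u ** (1.0 / alpha) - 1.0)
```

Because $x\mapsto x^{1/\alpha}$ is decreasing for $\alpha<0$, $\Pr(\gamma(U^{1/\alpha}-1) > z) = \Pr(U < (1+z/\gamma)^{\alpha}) = (1+z/\gamma)^{\alpha}$, which is exactly the survival function above.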

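We employed the maximum likelihood approach for parameter estimation. Given the sample $\mathbf z=(z_1,\dots,z_n)$ of independent and identically distributed random variables with common $\mathcal{G}_I^0(\alpha,\gamma,L)$ distribution, with $L$ known, a maximum likelihood estimator of $(\alpha,\gamma)$ satisfies:

$$(\hat\alpha,\hat\gamma) = \arg\max_{\alpha<0,\ \gamma>0}\ \mathcal{L}(\alpha,\gamma;\mathbf z),$$

where $\mathcal{L}$ is the likelihood function given by:

$$\mathcal{L}(\alpha,\gamma;\mathbf z) = \prod_{i=1}^{n} f_Z(z_i;\alpha,\gamma,L).$$

This leads to $\hat\alpha$ and $\hat\gamma$ such that:

$$n\left[\psi(-\hat\alpha)-\psi(L-\hat\alpha)\right] - n\ln\hat\gamma + \sum_{i=1}^{n}\ln(\hat\gamma+Lz_i) = 0,$$

$$\frac{n\hat\alpha}{\hat\gamma} + (L-\hat\alpha)\sum_{i=1}^{n}\frac{1}{\hat\gamma+Lz_i} = 0,$$

where $\psi$ is the digamma function. We solved this system with numerical routines, using as an initial solution the moment estimators that stem from Equation (2).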
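For the single-look case the two-dimensional search can be reduced to one dimension. With $L=1$, setting the derivative of the log-likelihood with respect to $\alpha$ to zero gives $\hat\alpha(\gamma) = -n/T(\gamma)$, where $T(\gamma)=\sum_i \ln(1+z_i/\gamma)$; substituting back yields the profile log-likelihood $\ell_p(\gamma) = n\ln n - n - n\ln T(\gamma) - n\ln\gamma - T(\gamma)$. The sketch below maximizes $\ell_p$ over $\ln\gamma$ with a golden-section search. This is a swapped-in technique for illustration (the text solves the full system from moment-based starting values); it assumes $\ell_p$ is unimodal on a bracket centred at the log of the sample median, and the function names are hypothetical.

```python
import math

import numpy as np


def profile_loglik(log_gamma, z):
    """Profile log-likelihood of single-look G_I^0 in log(gamma), alpha maximized out."""
    n = z.size
    t = float(np.log1p(z / math.exp(log_gamma)).sum())   # T(gamma)
    return n * math.log(n) - n - n * math.log(t) - n * log_gamma - t


def ml_gi0_single_look(z, span=15.0, iters=100):
    """Return (alpha_hat, gamma_hat) via golden-section search on log(gamma)."""
    z = np.asarray(z, dtype=float)
    lo = math.log(np.median(z)) - span                    # bracket (assumption)
    hi = lo + 2.0 * span
    r = (math.sqrt(5.0) - 1.0) / 2.0                      # inverse golden ratio
    a, b = hi - r * (hi - lo), lo + r * (hi - lo)
    fa, fb = profile_loglik(a, z), profile_loglik(b, z)
    for _ in range(iters):
        if fa < fb:                                       # maximum lies in [a, hi]
            lo, a, fa = a, b, fb
            b = lo + r * (hi - lo)
            fb = profile_loglik(b, z)
        else:                                             # maximum lies in [lo, b]
            hi, b, fb = b, a, fa
            a = hi - r * (hi - lo)
            fa = profile_loglik(a, z)
    gamma_hat = math.exp(0.5 * (lo + hi))
    alpha_hat = -z.size / float(np.log1p(z / gamma_hat).sum())
    return alpha_hat, gamma_hat
```

When the sample is close to exponential (textureless areas), the maximum drifts towards the upper end of the bracket and $\hat\alpha$ becomes very negative, which is another view of the estimation instability discussed in Section 3.1.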

3. Entropies for the $\mathcal{G}_I^0$ Distribution

Entropy is an essential concept in information theory, related to the notion of disorder in statistics [30]. Salicrú et al. [38] proposed the $(h,\phi)$-entropy class, which generalizes the original concept and facilitates the computation. In this section, the Shannon and Rényi entropies for the $\mathcal{G}_I^0$ distribution, in the single-look case, are computed. Let $f$ be a probability density function with support $S$ and an $r$-dimensional parameter vector $\theta=(\theta_1,\dots,\theta_r)\in\Theta$, with $\Theta$ the parameter space. The $(h,\phi)$-entropy of $f$ is given by:

$$H_\phi^h(\theta) = h\left(\int_S \phi\big(f(x;\theta)\big)\,dx\right),$$

where either $\phi$ is concave and $h$ is increasing, or $\phi$ is convex and $h$ is decreasing [35]. In this work we use the Shannon and Rényi entropies, which are obtained by setting:

- Shannon entropy: $h(y)=y$ and $\phi(x)=-x\ln x$; and

- Rényi entropy: $h(y)=(1-\lambda)^{-1}\ln y$ and $\phi(x)=x^{\lambda}$, with $\lambda>0$, $\lambda\neq 1$.

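The Shannon entropy of a probability density function $f$ with parameter vector $\theta$ is given by:

$$H_S(\theta) = -\int_S f(x;\theta)\,\ln f(x;\theta)\,dx.$$

Then, for the $\mathcal{G}_I^0$ distribution, considering $L=1$ and $\theta=(\alpha,\gamma)$, using Equation (3), we have:

$$H_S(\alpha,\gamma) = \ln\gamma - \ln(-\alpha) + 1 - \frac{1}{\alpha},$$

where $\alpha<0$ and $\gamma>0$. The Rényi entropy of order $\lambda$ of the probability density function $f$ is given by:

$$H_R(\theta) = \frac{1}{1-\lambda}\ln\int_S f^{\lambda}(x;\theta)\,dx.$$

So, for the single-look $\mathcal{G}_I^0$ distribution, considering $\theta=(\alpha,\gamma)$, using Equation (3), it becomes:

$$H_R(\alpha,\gamma) = \ln\gamma + \frac{\lambda\ln(-\alpha) - \ln\big(\lambda(1-\alpha)-1\big)}{1-\lambda},$$

where $\lambda>0$, $\lambda\neq 1$, and the additional restriction $\lambda(1-\alpha)>1$ ensures that the integral converges.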
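The entropy formulas can be checked numerically. Under Equation (3), a short calculation (ours, worth comparing with the paper's expressions) gives $H_S = \ln\gamma - \ln(-\alpha) + 1 - 1/\alpha$ and $H_R = \ln\gamma + [\lambda\ln(-\alpha) - \ln(\lambda(1-\alpha)-1)]/(1-\lambda)$ for $\lambda(1-\alpha)>1$. The sketch evaluates both, and the check compares them with Monte Carlo estimates $-\overline{\ln f(Z)}$ and $-\ln\overline{f(Z)}$ (the latter is the order-2 Rényi entropy), plus the limit $H_R\to H_S$ as $\lambda\to 1$. The function names are hypothetical.

```python
import math

import numpy as np


def log_pdf(z, alpha, gamma):
    """Log of the single-look G_I^0 density, Equation (3)."""
    return np.log(-alpha) - alpha * np.log(gamma) + (alpha - 1.0) * np.log(gamma + z)


def shannon_gi0(alpha, gamma):
    """Closed-form Shannon entropy of single-look G_I^0 (alpha < 0, gamma > 0)."""
    return math.log(gamma) - math.log(-alpha) + 1.0 - 1.0 / alpha


def renyi_gi0(alpha, gamma, lam):
    """Closed-form order-lam Renyi entropy; requires lam > 0, lam != 1, lam*(1-alpha) > 1."""
    if lam <= 0.0 or lam == 1.0 or lam * (1.0 - alpha) <= 1.0:
        raise ValueError("Renyi entropy undefined for these parameters")
    num = lam * math.log(-alpha) - math.log(lam * (1.0 - alpha) - 1.0)
    return math.log(gamma) + num / (1.0 - lam)
```

For instance, with $\alpha=-3$ and $\gamma=2$ the Shannon entropy is $\ln 2 - \ln 3 + 4/3 \approx 0.928$, and the order-2 Rényi entropy is $\ln 2 - 2\ln 3 + \ln 7 \approx 0.442$.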

3.1. Asymptotic Entropy Distribution

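Pardo et al. [39] obtained the asymptotic behavior of any $(h,\phi)$-entropy indexed by maximum likelihood estimators. We present here a brief explanation of this point; interested readers can refer to [35,39,40]. Consider a random sample of size $N$ from the distribution characterized by the probability density function $f$, with support $S$ and an $r$-dimensional parameter vector $\theta\in\Theta$, where $\Theta$ is the parameter space, and let $\hat\theta_N$ be the maximum likelihood estimator of $\theta$ based on this sample. Then, under mild regularity conditions, it holds that:

$$\sqrt{N}\left(H(\hat\theta_N) - H(\theta)\right) \xrightarrow{\ \mathcal{D}\ } \mathcal{N}\big(0,\sigma_H^2(\theta)\big),$$

being $\sigma_H^2(\theta) = \delta^\top\,\mathcal{K}(\theta)^{-1}\,\delta$, where $\mathcal{K}(\theta)$ is the Fisher information matrix of $f$ and $\delta$ is a vector given by:

$$\delta = \left(\frac{\partial H(\theta)}{\partial\theta_1},\dots,\frac{\partial H(\theta)}{\partial\theta_r}\right)^\top,$$

and $\mathcal{N}(\mu,\sigma^2)$ is the Gaussian distribution with mean $\mu$ and variance $\sigma^2$, and $\xrightarrow{\mathcal{D}}$ denotes convergence in distribution.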

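We derived closed formulas of the asymptotic variance for the single-look $\mathcal{G}_I^0$ distribution, for both the Shannon and Rényi entropies. From Equation (3), the logarithm of the probability density function of the $\mathcal{G}_I^0(\alpha,\gamma,1)$ distribution is

$$\ln f_Z(z;\alpha,\gamma) = \ln(-\alpha) - \alpha\ln\gamma + (\alpha-1)\ln(\gamma+z),$$

where $z>0$. The second-order derivatives are given by:

$$\frac{\partial^2\ln f_Z}{\partial\alpha^2} = -\frac{1}{\alpha^2},\qquad \frac{\partial^2\ln f_Z}{\partial\alpha\,\partial\gamma} = -\frac{1}{\gamma} + \frac{1}{\gamma+z},\qquad \frac{\partial^2\ln f_Z}{\partial\gamma^2} = \frac{\alpha}{\gamma^2} - \frac{\alpha-1}{(\gamma+z)^2}.$$

Then, since $E[1/(\gamma+Z)] = \alpha/[\gamma(\alpha-1)]$ and $E[1/(\gamma+Z)^2] = \alpha/[\gamma^2(\alpha-2)]$, the Fisher information matrix for the $\mathcal{G}_I^0(\alpha,\gamma,1)$ distribution is given by:

$$\mathcal{K}(\alpha,\gamma) = \begin{pmatrix} \dfrac{1}{\alpha^2} & \dfrac{1}{\gamma(1-\alpha)} \\[2mm] \dfrac{1}{\gamma(1-\alpha)} & \dfrac{\alpha}{\gamma^2(\alpha-2)} \end{pmatrix},$$

which is a positive definite matrix (its diagonal entries and its determinant, $1/[\gamma^2\alpha(\alpha-2)(1-\alpha)^2]$, are positive), so it can be inverted:

$$\mathcal{K}^{-1}(\alpha,\gamma) = \begin{pmatrix} \alpha^2(1-\alpha)^2 & -\gamma\alpha(\alpha-2)(1-\alpha) \\ -\gamma\alpha(\alpha-2)(1-\alpha) & \dfrac{\gamma^2(\alpha-2)(1-\alpha)^2}{\alpha} \end{pmatrix}.$$

We compute the asymptotic variance of the Shannon entropy from (13), (9), and (19). We have that:

$$\delta_S = \left(\frac{1-\alpha}{\alpha^2},\ \frac{1}{\gamma}\right)^\top,$$

leading to:

$$\sigma_S^2(\alpha,\gamma) = \delta_S^\top\,\mathcal{K}^{-1}(\alpha,\gamma)\,\delta_S = \left(1-\frac{1}{\alpha}\right)^2.$$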

The Fisher information matrix, which is involved in computing both $\sigma_S^2$ and $\sigma_R^2$, is invertible, but it can be ill-conditioned. Writing:

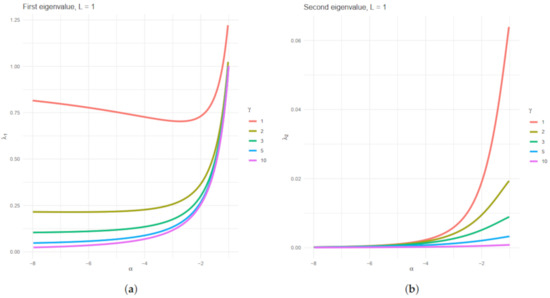

then we have its eigenvalues given by:

which are real numbers. Figure 1 shows the eigenvalues of the Fisher information matrix for several values of the $\alpha$ and $\gamma$ parameters; Figure 1a,b show the first and second eigenvalues, respectively. The second eigenvalue tends to zero as $\alpha\to-\infty$ for all values of $\gamma$; therefore, the matrix inversion becomes unstable in textureless areas, regardless of the scale. Frery et al. [41] noticed that maximum likelihood estimates were unstable in some areas of the parameter space and analyzed this issue from the sample size viewpoint.

Figure 1.

Plots of the first and second eigenvalues of the Fisher information matrix for several values of $\alpha$ and $\gamma$. (a) First eigenvalue and (b) second eigenvalue.

Such behavior explains why maximum likelihood estimation is numerically unstable in textureless areas, i.e., where the mean number of backscatterers is huge.
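The Fisher matrix, the delta-method variance, and the ill-conditioning can all be checked numerically. Our own derivation from Equation (3) gives $\mathcal{K} = \begin{pmatrix}1/\alpha^2 & 1/[\gamma(1-\alpha)]\\ 1/[\gamma(1-\alpha)] & \alpha/[\gamma^2(\alpha-2)]\end{pmatrix}$ and, with the Shannon gradient $((1-\alpha)/\alpha^2, 1/\gamma)$, $\sigma_S^2=(1-1/\alpha)^2$. The sketch evaluates $\sigma^2=\delta^\top\mathcal{K}^{-1}\delta$ with a linear solve, provides the Rényi gradient, and reports the eigenvalues. The function names are hypothetical, and the formulas are meant as a check to compare against the paper's equations rather than a replacement for them.

```python
import numpy as np


def fisher_gi0(alpha, gamma):
    """Fisher information matrix of single-look G_I^0(alpha, gamma)."""
    k_aa = 1.0 / alpha ** 2
    k_ag = 1.0 / (gamma * (1.0 - alpha))
    k_gg = alpha / (gamma ** 2 * (alpha - 2.0))
    return np.array([[k_aa, k_ag], [k_ag, k_gg]])


def grad_shannon(alpha, gamma):
    """Gradient of H_S = ln(gamma) - ln(-alpha) + 1 - 1/alpha."""
    return np.array([(1.0 - alpha) / alpha ** 2, 1.0 / gamma])


def grad_renyi(alpha, gamma, lam):
    """Gradient of the order-lam Renyi entropy of single-look G_I^0."""
    d_alpha = (lam / alpha + lam / (lam * (1.0 - alpha) - 1.0)) / (1.0 - lam)
    return np.array([d_alpha, 1.0 / gamma])


def asymptotic_variance(grad, fisher):
    """sigma^2 = grad^T K^{-1} grad, via a linear solve instead of an explicit inverse."""
    return float(grad @ np.linalg.solve(fisher, grad))


def fisher_condition(alpha, gamma):
    """Ascending eigenvalues and 2-norm condition number of the Fisher matrix."""
    eig = np.linalg.eigvalsh(fisher_gi0(alpha, gamma))
    return eig, eig[-1] / eig[0]
```

Solving $\mathcal{K}x=\delta$ rather than forming $\mathcal{K}^{-1}$ explicitly limits round-off, but it does not remove the instability itself: for $\gamma=1$ the smallest eigenvalue drops from about $2\times10^{-2}$ at $\alpha=-2$ to about $10^{-8}$ at $\alpha=-100$.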

3.2. Hypothesis Testing Based on $(h,\phi)$-Entropies

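Entropies are widely used for feature discrimination [40,42]. In this section, a hypothesis test based on the Shannon and Rényi entropies is built. Let $\mathbf z_1$ and $\mathbf z_2$ be two random samples of sizes $N_1$ and $N_2$, respectively, from the $\mathcal{G}_I^0$ distribution with parameters $\theta_1$ and $\theta_2$, respectively. We are interested in testing the following hypotheses:

$$H_0: H(\theta_1) = H(\theta_2) \quad\text{versus}\quad H_1: H(\theta_1) \neq H(\theta_2).$$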

That is, we seek statistical evidence of whether two independent random samples have the same entropy or, in other words, the same diversity. Let $\hat\theta_i$ be the maximum likelihood estimator of $\theta_i$, $i=1,2$. Following the result of Equation (12) (see [35]) and for a parameter vector of dimension 2, the test statistic has a known asymptotic distribution:

Therefore,

but $v$ is unknown, so Cochran’s theorem [43] can be applied to obtain:

being,

Since the second term of the right side of Equation (30) is distributed and the left side of the Equation (30) is distributed, we conclude that:

where $H$ stands for $H_S$ or $H_R$, the Shannon and Rényi entropies, respectively. Since we are interested in comparing samples of the same size, the test statistic reduces to (see [38,44]):

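Then, the null hypothesis can be rejected at significance level $\eta$ if $s > \chi^2_{1,\eta}$,

where $s$ is the observed test statistic and $\chi^2_{1,\eta}$ is the $(1-\eta)$ quantile of the $\chi^2_1$ distribution. The p-value is $p = \Pr(\chi^2_1 > s)$.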
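A minimal sketch of the two-sample test, assuming the usual two-population form of the Salicrú et al. statistic, $S = (\hat H_1-\hat H_2)^2/(\hat\sigma_1^2/N_1+\hat\sigma_2^2/N_2)$, which is asymptotically $\chi^2_1$ under $H_0$ and reduces to $N(\hat H_1-\hat H_2)^2/(\hat\sigma_1^2+\hat\sigma_2^2)$ when $N_1=N_2=N$. With one degree of freedom the p-value needs only the standard library, because $\Pr(\chi^2_1>s)=\Pr(|\mathcal N(0,1)|>\sqrt s)=\operatorname{erfc}(\sqrt{s/2})$. The function name is hypothetical; the variances would come from the asymptotic formulas of Section 3.1 evaluated at the maximum likelihood estimates.

```python
import math


def entropy_equality_test(h1, var1, n1, h2, var2, n2):
    """Two-sample test of H0: equal entropies. Returns (statistic, p-value)."""
    se2 = var1 / n1 + var2 / n2              # asymptotic variance of h1 - h2
    s = (h1 - h2) ** 2 / se2
    p = math.erfc(math.sqrt(s / 2.0))        # Pr(chi2_1 > s)
    return s, p
```

For example, two $5\times5$ patches ($N=25$) with entropy estimates 1.0 and 1.5 and asymptotic variances 2.0 each give $s=1.5625$ and $p\approx 0.21$, so equality would not be rejected at the usual levels.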

4. Speckle Reduction by Comparing Entropies

The proposed method for despeckling images is based on testing whether two random samples and have the same diversity. We then compare the Shannon and Rényi entropies, which are scalars that depend on the parameters.

Non-local means filters use a large convolution mask of size with , taking an average of all pixels in the mask, weighted by a similarity measure between these pixels and the center of the mask.

Let Z be the noisy image of size , then the filtered image in position is given by:

where estimates the noiseless image X, and are the weights.
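A minimal sketch of that weighted average at a single pixel, assuming the weights are normalized by their sum (the usual non-local means convention): $\hat Z(i,j)=\sum_{(k,l)} w(k,l)\,Z(k,l)\big/\sum_{(k,l)} w(k,l)$, where the sums run over the search window centred at $(i,j)$. The helper name is hypothetical.

```python
import numpy as np


def nlm_pixel(z_window, weights):
    """Filtered value of the central pixel from its search window and (unnormalized) mask."""
    w = np.asarray(weights, dtype=float)
    total = w.sum()
    if total <= 0.0:
        raise ValueError("the mask must have positive total weight")
    return float((w * np.asarray(z_window, dtype=float)).sum() / total)
```

Normalizing by the total weight lets the mask hold raw similarity scores, such as transformed p-values, without rescaling them first.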

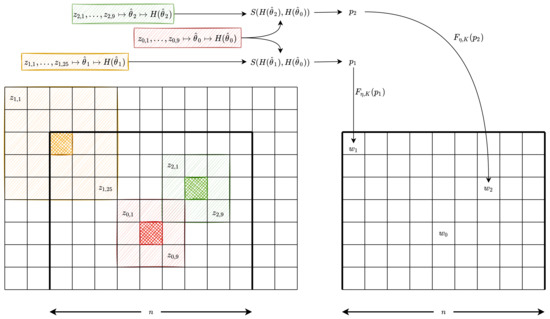

In our proposal, we build an image filter by computing the mask w in each step of the algorithm, using the test statistic from Equation (27) with significance level . For simplicity, we will describe the implementation using square windows, but the user may consider any shape. We defined two sets: The search and the estimation windows. The search window has fixed size and is centered on every pixel . At every location of this search window, we define estimation patches around each pixel. These windows may vary in size, e.g., in an adaptive scheme.

Figure 2 illustrates an instance of such implementation with . The left grid represents the image, while the right grid shows the mask that will be applied to the central pixel (identified in red cross-hatch).

Figure 2.

Left: Search window and estimation patches. Right: Resulting convolution mask to be applied on the search window to compute a single filtered value corresponding to the central pixel (cross-hatched in red).

The central pixel is identified with red cross-hatch, and its estimation window has size ; denote these observations as . We also show two estimation windows, those corresponding to the pixels identified with orange and green cross-hatches, which have, respectively, dimensions and , and their observations are and , respectively.

We compare the pairwise diversities of the samples , , summarized as in Figure 2, with respect to estimating by maximum likelihood the parameters from the distribution. We obtain from , from , and from ; this step is summarized as in Figure 2. Then, both the Shannon and Rényi entropies are estimated (summarized as ) and the test statistic is obtained. The weight ( respectively) stems from comparing the samples ( respectively) and . Finally, we transform the observed p-values in the weights and by using a smoothing function.

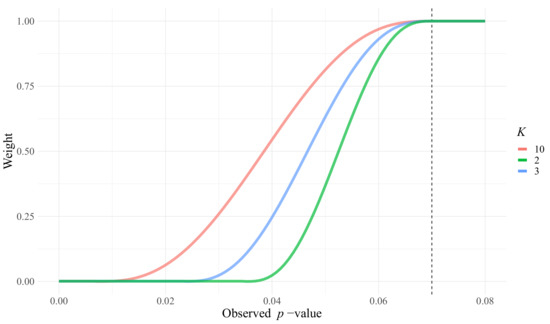

Each p-value may be used directly as a weight w but, as discussed by Torres et al. [21], such a choice introduces a conceptual distortion. Consider, for instance, the samples and . Assume that, when contrasted with the central sample , they produce the p-values and . In this case, the first weight will be significantly smaller than the second one, whereas there is no evidence to reject any of the samples at level . Torres et al. [21] proposed using a piecewise linear function that maps all values above to 1, applies a linear transformation to values between and , and maps values below to zero. In this work, we propose a smooth activation function with zero first- and second-order derivatives at both ends of the transition. The “smoother step function” defined by Ebert [45] is given as:

This function connects in a smooth manner the points and . We modify in order to connect and , for every , and define the weight w as a function of the observed p-value as the result of the following activating function:

This function is zero for , and is one above . The parameter K controls the transformation’s steepness, as shown in Figure 3. From our experiments, we recommend .
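The smoother step function of Ebert [45] is $S(x)=6x^5-15x^4+10x^3$ on $[0,1]$, with $S=0$ below and $S=1$ above; since $S'(x)=30x^2(x-1)^2$ and $S''(x)=60x(2x-1)(x-1)$, both derivatives vanish at $x=0$ and $x=1$. A minimal sketch of the p-value-to-weight map rescales the p-value so that the ramp runs from a lower to an upper threshold. The parameter names `lower` and `upper` are hypothetical, and how they relate to the significance level and to $K$ should be taken from the definition in the text.

```python
import numpy as np


def smootherstep(x):
    """Ebert's smoother step: 6x^5 - 15x^4 + 10x^3, clamped to [0, 1]."""
    x = np.clip(x, 0.0, 1.0)
    return x ** 3 * (x * (6.0 * x - 15.0) + 10.0)


def pvalue_to_weight(p, lower, upper):
    """Weight 0 for p <= lower, 1 for p >= upper, smooth in between."""
    return smootherstep((np.asarray(p, dtype=float) - lower) / (upper - lower))
```

Compared with a piecewise linear ramp, the weights change smoothly near both thresholds, so patches whose p-values are close to a threshold receive nearly equal weights on either side of it.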

Figure 3.

Transformation between p-values and weights for and .

Algorithm 1 shows the steps of the method.

Algorithm 1. Despeckling by similarity of entropies.

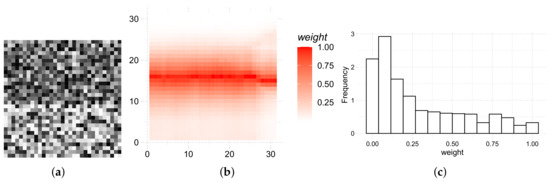
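The steps of Algorithm 1 can be sketched as a direct, slow, pure-Python loop. This is our illustration under stated assumptions, not the authors' code (Appendix A points to that): square search and estimation windows of fixed size (the defaults mirror the search window of side 9 and patches of side 5 reported for Figure 12b), reflect padding at the borders, the equal-size two-sample statistic with a $\chi^2_1$ p-value, and a smoother-step ramp between two hypothetical thresholds `lower` and `upper`. The per-patch estimator is passed in as `entropy_fn`, a hypothetical callable that returns an entropy estimate and its asymptotic variance.

```python
import math

import numpy as np


def _smootherstep(x):
    x = min(max(x, 0.0), 1.0)
    return x ** 3 * (x * (6.0 * x - 15.0) + 10.0)


def entropy_nlm(z, entropy_fn, search=9, patch=5, lower=0.005, upper=0.05):
    """Entropy-based non-local means sketch (hypothetical helper, square windows)."""
    rows, cols = z.shape
    hs, hp = search // 2, patch // 2
    pad = hs + hp
    zp = np.pad(z, pad, mode="reflect")
    n = patch * patch
    cache = {}

    def stats(r, c):
        # Entropy and variance of the estimation patch centred at padded position (r, c).
        if (r, c) not in cache:
            cache[(r, c)] = entropy_fn(zp[r - hp:r + hp + 1, c - hp:c + hp + 1])
        return cache[(r, c)]

    out = np.empty((rows, cols))
    for i in range(rows):
        for j in range(cols):
            ci, cj = i + pad, j + pad
            h0, v0 = stats(ci, cj)
            num = den = 0.0
            for di in range(-hs, hs + 1):
                for dj in range(-hs, hs + 1):
                    h, v = stats(ci + di, cj + dj)
                    s = n * (h - h0) ** 2 / max(v0 + v, 1e-12)  # equal-size statistic
                    p = math.erfc(math.sqrt(s / 2.0))           # chi-square(1) p-value
                    w = _smootherstep((p - lower) / (upper - lower))
                    num += w * zp[ci + di, cj + dj]
                    den += w
            out[i, j] = num / den  # den >= 1: the centre compared with itself gives p = 1
    return out
```

In practice `entropy_fn` would combine a maximum likelihood fit of $(\alpha,\gamma)$ with the closed-form entropy and its asymptotic variance; caching the per-patch statistics avoids re-estimating each patch up to `search**2` times.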

Figure 4 shows the heat map and histogram of the weights of a sliding window over an edge of the original image. Figure 4a shows the crop of the image where the weights were computed. Observations on the edge and close to it have a strong influence on the filtered data, while those that are far away or follow a different underlying distribution weigh less.

Figure 4.

Original image patch, heat map, and histogram of weights over an edge, before normalization. (a) Original data. (b) Heat map. (c) Histogram of weights.

5. Filter Quality

We measure noise reduction quality with the ratio image, the pointwise division between the noisy and the filtered images, $I = Z/\hat Z$, where $Z$ and $\hat Z$ are the original and filtered images, respectively. Under the multiplicative model, the perfect filter produces a ratio image $I$ that is pure speckle, i.e., a collection of independent and identically distributed random variables, with no patterns or geometric structures.

5.1. First-Order Statistic

The first-order component of the quality measure employs the equivalent number of looks (ENL) and the mean value over homogeneous areas of the original and filtered images. An effective despeckling method should increase the equivalent number of looks and preserve the mean value. Given a textureless and homogeneous image area with sample mean $\hat\mu$ and sample standard deviation $\hat\sigma$, the equivalent number of looks can be estimated as $\widehat{\mathrm{ENL}} = \hat\mu^2/\hat\sigma^2$. We automatically select N small homogeneous areas and, for each area, estimate the ENL residual:

where and are the estimated ENL taking the sample from for the original image Z and the ratio image I, respectively. The relative residual due to deviations from the ideal mean, which is 1, is:

where is the mean value for the region in the ratio image. The first-order residual is the sum of those two quantities:

The perfect filter would produce .
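A minimal sketch of the first-order component, assuming the homogeneous areas are given as index slices and that the per-area residuals are averaged over the N areas (the exact normalization should be checked against [25]). It uses $\widehat{\mathrm{ENL}}=\hat\mu^2/\hat\sigma^2$, the ratio image $I=Z/\hat Z$, the relative ENL residual $|\widehat{\mathrm{ENL}}_Z-\widehat{\mathrm{ENL}}_I|/\widehat{\mathrm{ENL}}_Z$, and the mean residual $|1-\hat\mu_I|$. The function names are hypothetical.

```python
import numpy as np


def enl(sample):
    """Equivalent number of looks estimate: mean^2 / variance."""
    s = np.asarray(sample, dtype=float)
    return s.mean() ** 2 / s.var(ddof=1)


def first_order_residual(z, z_hat, areas):
    """Average ENL and mean residuals over homogeneous areas.

    areas: list of (row_slice, col_slice) tuples selecting homogeneous regions.
    """
    ratio = z / z_hat                              # ratio image I = Z / Z_hat
    r_enl, r_mu = [], []
    for rs, cs in areas:
        enl_z = enl(z[rs, cs])
        enl_i = enl(ratio[rs, cs])
        r_enl.append(abs(enl_z - enl_i) / enl_z)   # relative ENL residual
        r_mu.append(abs(1.0 - ratio[rs, cs].mean()))  # deviation from the ideal mean 1
    return float(np.mean(r_enl)), float(np.mean(r_mu))
```

An oracle filter that returns the true backscatter in a homogeneous area yields a ratio image that is pure speckle, so both residuals are close to zero; a filter that doubles the mean leaves the ENL residual unchanged but pushes the mean residual to about 0.5.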

5.2. Second-Order Statistic

The remaining geometrical content in the ratio image I is measured with the homogeneity value from Haralick’s co-occurrence matrices [46], which is given by:

where is the -th element of the co-occurrence matrix of size . The coefficient h is computed under the null hypothesis, which implies that the probability distribution of the ratio image I is invariant under random permutations. Then, if there is no structure in I, h will not change after permutations.

Let be the homogeneity of the original ratio image I, the homogeneity of the m-th permutation of I, and g the total amount of permutations. The absolute value of the relative variation of , in percentage can be used as a measure of a distance from the null hypothesis and is given by:

where the average is taken over the g permutations. The larger $\delta h$, the more geometric structure remains in the ratio image. Finally, the statistic $M$ proposed in [25] to measure filter quality combines the first-order residual and $\delta h$ as follows:

The ideal filter produces $M=0$, and the lack of quality of a filter can be measured by the observed value of $M$: the larger it is, the worse the filter.
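The second-order component can be sketched with NumPy alone. The homogeneity is $h=\sum_{i,j} p(i,j)/[1+(i-j)^2]$, and $\delta h = 100\,|h_0-\bar h|/h_0$, where $h_0$ is computed on the ratio image and $\bar h$ is the mean over $g$ random permutations of its pixels. Assumptions (ours): a single horizontal one-pixel offset, a non-symmetric co-occurrence matrix, rank-based quantization into a few equally populated grey levels, and a fixed random seed; the function names are hypothetical.

```python
import numpy as np


def rank_quantize(img, levels=8):
    """Map pixels to `levels` equally populated grey levels by rank."""
    order = np.argsort(img, axis=None)
    ranks = np.empty(img.size, dtype=np.int64)
    ranks[order] = np.arange(img.size)
    return (ranks * levels // img.size).reshape(img.shape)


def homogeneity(q, levels=8):
    """Haralick homogeneity of the horizontal (offset 1) co-occurrence matrix."""
    i = q[:, :-1].ravel()
    j = q[:, 1:].ravel()
    counts = np.bincount(i * levels + j, minlength=levels * levels)
    p = counts.reshape(levels, levels) / counts.sum()
    a, b = np.indices((levels, levels))
    return float((p / (1.0 + (a - b) ** 2)).sum())


def delta_h(ratio, levels=8, g=20, seed=0):
    """Relative change (%) of homogeneity under random permutations of the ratio image."""
    rng = np.random.default_rng(seed)
    q = rank_quantize(ratio, levels)
    h0 = homogeneity(q, levels)
    hp = [homogeneity(rng.permutation(q.ravel()).reshape(q.shape), levels) for _ in range(g)]
    return 100.0 * abs(h0 - np.mean(hp)) / h0
```

For an unstructured ratio image, permuting pixels barely changes $h$, so $\delta h$ stays at a few percent; a ratio image that still contains a smooth ramp or edges has far more similar neighbours than its permutations, and $\delta h$ grows to tens of percent.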

6. Results

In this section, we present the results of applying the proposed filters and evaluate them using the metric presented in Section 5. We compare their performance with that of three of the most successful despeckling methods: SRAD [15], Enhanced Lee [8], and FANS [16]. In all cases, the images are equalized for improved visualization.

6.1. Simulated Data

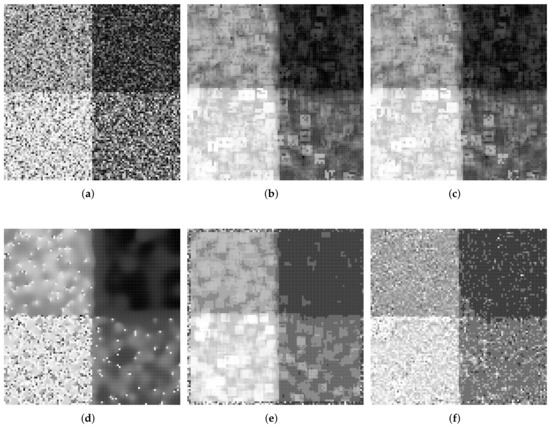

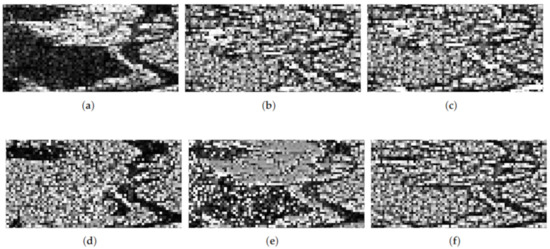

In this section, we present the results of applying the filters to simulated data. We consider two phantoms: one with large areas (Figure 5), and another with strips of varying width (Figure 6). The observations are deviates from the $\mathcal{G}_I^0$ distribution, obtained according to the recommendations presented in [37].

Figure 5.

Simulated image generated with four different laws (top-left, top-right, bottom-left, and bottom-right, respectively), and the results of applying the filters based on the Shannon and Rényi entropies and the SRAD, Enhanced Lee, and FANS filters. (a) Original image. (b) Shannon entropy-filtered image. (c) Rényi entropy-filtered image. (d) SRAD-filtered image. (e) Lee-filtered image. (f) FANS-filtered image.

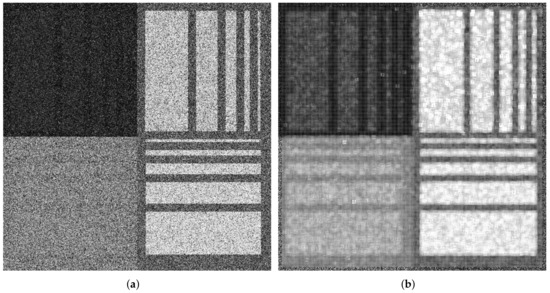

Figure 6.

Results of applying the proposed filters to the background and stripes image. (a) Phantom image. (b) Shannon entropy filter.

Figure 5 is adequate for assessing the filter performance on large areas. Figure 5a shows the original data, each half of the top of the image was generated with and distributions, respectively. Each half of the bottom of the image was generated with and distributions, respectively. Figure 5b shows the filtered image using the Shannon entropy with patches of size , a sliding window of size , and . Figure 5c shows the filtered image with Rényi entropy and , patches of size , a sliding window of size , , and . Table 1 shows the ENL (Equivalent Number of Looks) estimates and the mean value for the original and smoothed images. Figure 5d–f show the results of applying SRAD (Speckle Reducing Anisotropic Diffusion), Enhanced Lee, and FANS (Fast Adaptive Nonlocal SAR Despeckling) filters.

Table 1.

Estimates of the ENL (Equivalent Number of Looks) and mean value for images from Figure 5.

Table 1 shows that the estimated ENL increases, while the mean is almost the same.

Table 2 shows the evaluation metrics for each filter; the best value for each metric is highlighted with a gray background. The proposed filters are competitive, and the Shannon entropy-based filter performs best.

Table 2.

Filter evaluation metrics for the image of Figure 5a.

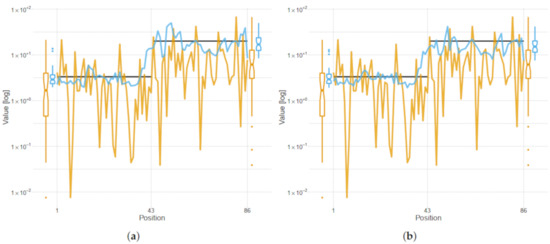

Figure 7 shows, on a semilogarithmic scale, the pixel values of one column of the original (orange) and filtered (light blue) images for the Shannon entropy-based method (Figure 7a) and the Rényi entropy-based method (Figure 7b). The horizontal black lines are the theoretical means of the data, computed using Equation (4). Each plot shows two pairs of boxplots: the left pair summarizes the original (orange) and filtered (light blue) values in the darker half of the image, and the right pair summarizes them in the brighter half. The effect of the filter is noticeable: the spread of the data is reduced, while the transition between halves remains sharp, i.e., there is little blurring.

Figure 7.

One column image data for the original, Shannon entropy, and Rényi entropy-filtered images. The black horizontal straight lines are the theoretical mean values of the data, in orange the original data, and in light blue the filtered data. (a) Original and Shannon smoothed images. (b) Original and Rényi smoothed images.

Figure 6 shows the results of applying the proposed filters to a phantom image which is adequate for assessing the performance on relatively small features. This phantom was proposed by Gomez et al. [47].

The image has pixels, and is divided in four blocks: Upper left, upper right, bottom left, and bottom right. Table 3 shows the background and stripes parameters in each block. Notice that in the left half the background and stripes have the same mean value. This is a challenging situation for any filter.

Table 3.

Parameters of the simulated data in Figure 6a.

The ability of the filter based on the Shannon entropy to distinguish areas with the same mean but different roughness is noticeable in Figure 6. The edges between areas are also well preserved. For the sake of brevity, we omit the results produced by the filter based on the Rényi entropy, because they are similar.

Tables 4 and 5 show the estimated ENL, mean values, and evaluation metrics for these images. They show the filter's ability to increase the equivalent number of looks while preserving the mean value.

Table 4.

Estimates of the ENL and mean value for images from Figure 6.

Table 5.

Filter evaluation metrics for the simulated data of Figure 6.

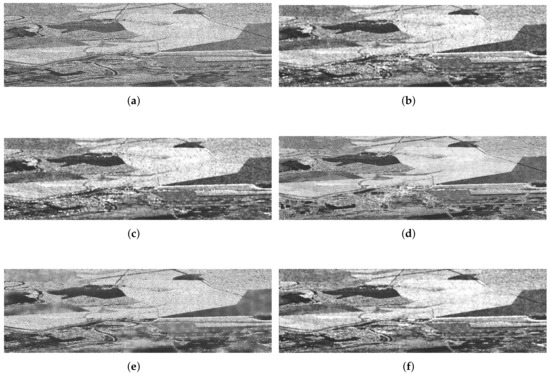

6.2. Data from Actual Sensors

Figure 8 shows the results of applying the despeckling method to actual data. Figure 8a corresponds to the original image. Figure 8b,c are the results of applying the despeckling method with Shannon entropy and with Rényi entropy, respectively. The used parameters are: , , , , and .

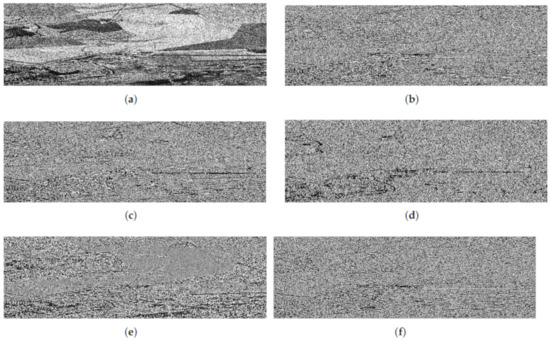

Figure 8.

Original image and filtered versions with the Shannon entropy, Rényi entropy, FANS, SRAD, and Enhanced Lee filters. (a) Original HH (Horizontal-Horizontal polarization) data. (b) Smoothed image with the Shannon entropy. (c) Smoothed image with the Rényi entropy. (d) Smoothed image with the FANS filter. (e) Smoothed image with the SRAD filter. (f) Smoothed image with the Enhanced Lee filter.

Figure 8d shows the result of applying the FANS filter, Figure 8e the SRAD filter, and Figure 8f the Lee filter. Figure 9 shows the ratio images; Figure 9b–f correspond to the Shannon entropy, Rényi entropy, FANS, SRAD, and Lee filters, respectively.

Figure 9.

Original and ratio images. (a) Original HH (Horizontal-Horizontal polarization) data. (b) Ratio image from the Shannon entropy filter. (c) Ratio image from the Rényi entropy filter. (d) Ratio image from the FANS filter. (e) Ratio image from the SRAD filter. (f) Ratio image from the Lee filter.

Tables 6 and 7 show the estimated ENL, mean values, and evaluation metrics for these images. Note the ability of the filters to increase the equivalent number of looks while preserving the mean value.

Table 6.

Estimates of the ENL and mean value for images from Figure 8.

Table 7.

Filter evaluation metrics for the actual data of Figure 8.

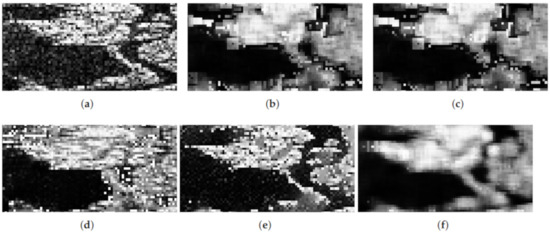

Figure 10 shows details of the original and filtered images. Visually, the Shannon, Rényi, and Enhanced Lee filters provide the best speckle reduction. Regarding detail preservation, SRAD seems to be the best. The Shannon filter seems to provide the best balance between speckle reduction and detail preservation. Figure 11 shows the ratio images of these details, which confirm these observations.

Figure 10.

Details of the original and filtered images. (a) Original HH (Horizontal-Horizontal polarization) data. (b) Smoothed image with the Shannon entropy. (c) Smoothed image with the Rényi entropy. (d) Smoothed image with the FANS filter. (e) Smoothed image with the SRAD filter. (f) Smoothed image with the Enhanced Lee filter.

Figure 11.

Details of the original and ratio images. (a) Original HH (Horizontal-Horizontal polarization) data. (b) Ratio image from the Shannon entropy filter. (c) Ratio image from the Rényi entropy filter. (d) Ratio image from the FANS filter. (e) Ratio image from the SRAD filter. (f) Ratio image from the Enhanced Lee filter.

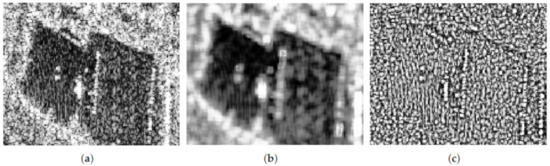

Figure 12a shows an intensity single-look L-band HH (Horizontal-Horizontal) polarization E-SAR image. This image has a complex structure, which is challenging to filter: there are structures with strong return embedded in the central dark areas. The result of applying the filter based on the Shannon entropy with a search window of side 9 and patches of side 5 is shown in Figure 12b. This filter reduces the speckle drastically while preserving the structures. Notice, in particular, that the filter enhances subtle differences within the dark areas, and that it does not smear the edges between them and the surrounding brighter region. Figure 12c shows the ratio image. It is noteworthy that, in spite of the complexity of the image, there is little remaining structure. This result suggests that most of the relevant information has been retained in the filtered image.

Figure 12.

Results of applying the filter based on the Shannon entropy to an actual image from the E-SAR sensor. (a) Actual image with a corner reflector. (b) Smoothed image. (c) Ratio image.

Table 8 presents the quantitative results of applying the Shannon and Rényi filters to the image shown in Figure 12a. The results are similar, with a slight advantage of the Shannon filter over the Rényi filter, which appears to be related to better preservation of structure.

Table 8.

Filter evaluation metrics for the actual data of Figure 12a.

7. Conclusions and Future Work

We proposed a new non-local means filter for single-look speckled data based on the asymptotic distribution of the Shannon and Rényi entropies for the $\mathcal{G}_I^0$ distribution.
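The large-sample result underlying this filter can be stated compactly. The version below is one standard form of the entropy asymptotics in [38,39], written with illustrative notation:

- $\widehat{\theta}$ is the maximum likelihood estimator computed from $n$ observations;
- $K(\theta)$ is the Fisher information matrix;
- $H$ is either the Shannon or the Rényi entropy of the model.

$$
\sqrt{n}\,\bigl(H(\widehat{\theta}) - H(\theta)\bigr) \xrightarrow{\;\mathcal{D}\;} \mathcal{N}\bigl(0,\sigma^2(\theta)\bigr),
\qquad
\sigma^2(\theta) = \nabla H(\theta)^{\top} K(\theta)^{-1}\,\nabla H(\theta).
$$

Consider two independent samples of sizes $n_1$ and $n_2$, with estimates $\widehat{\theta}_1$ and $\widehat{\theta}_2$, under the null hypothesis $H(\theta_1) = H(\theta_2)$. The statistic

$$
S = \frac{\bigl(H(\widehat{\theta}_1) - H(\widehat{\theta}_2)\bigr)^2}{\sigma^2(\widehat{\theta}_1)/n_1 + \sigma^2(\widehat{\theta}_2)/n_2} \xrightarrow{\;\mathcal{D}\;} \chi^2_1
$$

then yields the p-value $\Pr(\chi^2_1 > S)$, which is subsequently turned into a weight.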

The similarity between the diversities (entropies) of the central window and of each patch is assessed with a statistical test that measures the difference between the entropies of two random samples. If the two samples have the same distribution, the neighborhood likely contains no image edges and its diversity is lower, so the area can be smoothed to reduce speckle. This approach does not require patches of equal size, and their sizes can even vary across the image. The mask for speckle noise reduction is built by applying a smooth function to the computed p-value.
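The test-and-weight step can be sketched in a few lines. To keep the example self-contained, this sketch replaces the $\mathcal{G}_I^0$ computations with a simplifying assumption: each patch is modelled as exponential, as for single-look intensity in a homogeneous area. Under that model:

- the Shannon entropy is $1+\ln\mu$;
- the entropy estimator has asymptotic variance 1;
- the two-sample statistic therefore reduces to $S=(\widehat H_1-\widehat H_2)^2/(1/n_1+1/n_2)$, which is compared with a $\chi^2_1$ law.

The smoothstep activation, its threshold `eta`, and all function names are hypothetical choices. The default search-window side 9 and patch side 5 mirror the setting reported for Figure 12b.

```python
import math
import random


def exp_entropy(sample):
    # Shannon entropy of an exponential law with mean mu is 1 + ln(mu);
    # plug in the ML estimate mu_hat = sample mean (simplifying assumption).
    return 1.0 + math.log(sum(sample) / len(sample))


def entropy_pvalue(x, y):
    # Under this model the asymptotic variance of sqrt(n) * (H_hat - H) is 1,
    # so S = (H1 - H2)^2 / (1/n1 + 1/n2) is approximately chi-square(1).
    s = (exp_entropy(x) - exp_entropy(y)) ** 2 / (1.0 / len(x) + 1.0 / len(y))
    # P(chi2_1 > s) = P(|Z| > sqrt(s)) = erfc(sqrt(s / 2)).
    return math.erfc(math.sqrt(s / 2.0))


def pvalue_to_weight(p, eta=0.05):
    # Hypothetical smooth activation: smoothstep from 0 (p = 0) to 1 (p >= eta).
    t = min(max(p / eta, 0.0), 1.0)
    return t * t * (3.0 - 2.0 * t)


def patch(img, i, j, half):
    # Square patch centred at (i, j), clipped at the image borders,
    # so border patches are smaller (the test allows unequal sizes).
    rows, cols = len(img), len(img[0])
    return [img[r][c]
            for r in range(max(0, i - half), min(rows, i + half + 1))
            for c in range(max(0, j - half), min(cols, j + half + 1))]


def entropy_nlm(img, search_side=9, patch_side=5):
    # Non-local means: each output pixel is a weighted mean over the search
    # window, with weights derived from the entropy-test p-values.
    hs, hp = search_side // 2, patch_side // 2
    rows, cols = len(img), len(img[0])
    out = [[0.0] * cols for _ in range(rows)]
    for i in range(rows):
        for j in range(cols):
            ref = patch(img, i, j, hp)
            num = den = 0.0
            for r in range(max(0, i - hs), min(rows, i + hs + 1)):
                for c in range(max(0, j - hs), min(cols, j + hs + 1)):
                    w = pvalue_to_weight(entropy_pvalue(ref, patch(img, r, c, hp)))
                    num += w * img[r][c]
                    den += w  # the centre compares with itself: p = 1, w = 1
            out[i][j] = num / den
    return out


def speckled_step(rows, cols, mu_left, mu_right, seed=0):
    # Hypothetical test scene: two constant regions multiplied by
    # unit-mean exponential (single-look intensity) speckle.
    rng = random.Random(seed)
    return [[(mu_left if c < cols // 2 else mu_right) * rng.expovariate(1.0)
             for c in range(cols)] for _ in range(rows)]
```

Clipping patches at the border produces samples of different sizes. The test handles this naturally, because each sample enters the statistic only through its own size $n_i$.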

We tested our proposal on simulated data and on an actual single-look image, and we compared it with three other successful filters. The results are encouraging: the filtered images have a better signal-to-noise ratio, they preserve the mean, and the edges are not severely blurred. Although the filter based on the Rényi entropy is attractive due to its greater flexibility (its order parameter can be tuned), it produces results very similar to those obtained with the Shannon entropy, which is simpler to implement.
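A limiting case hints at why the two entropies behave so similarly. As a simplifying assumption, consider a homogeneous single-look area. There the $\mathcal{G}_I^0$ law approaches the exponential distribution with mean $\mu$, $f(x)=\mu^{-1}e^{-x/\mu}$ for $x>0$. A direct computation gives

$$
H_{\mathrm{S}}(\mu) = -\int_0^\infty f(x)\ln f(x)\,dx = 1 + \ln\mu,
\qquad
H_{\lambda}(\mu) = \frac{1}{1-\lambda}\ln\int_0^\infty f(x)^{\lambda}\,dx = \ln\mu + \frac{\ln\lambda}{\lambda-1},
$$

for $\lambda>0$, $\lambda\neq 1$, with $\ln\lambda/(\lambda-1)\to 1$ as $\lambda\to 1$. This leads to three observations:

- Both entropies differ from $\ln\mu$ only by a constant, so $\widehat H_1-\widehat H_2=\ln\widehat\mu_1-\ln\widehat\mu_2$ for either choice.
- The Fisher information of $\mu$ is $1/\mu^2$, so the delta method gives the same asymptotic variance $(\partial H/\partial\mu)^2\mu^2 = 1$ for both entropies.
- In this limit the two tests therefore coincide exactly. This suggests that differences between the filters arise mainly in textured areas, where the full model departs from the exponential case.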

In future work, we will assess this filter's performance under several criteria on contaminated data, and we will consider other measures, such as the Kullback–Leibler distance.

Supplementary Materials

The following supporting information can be downloaded at: https://www.mdpi.com/article/10.3390/rs14030509/s1. The R code that implements our proposal, along with data examples, is available as Supplementary Material.

Author Contributions

Conceptualization, J.G. and A.C.F.; methodology, D.C., J.G. and A.C.F.; software, D.C. and J.G.; validation, D.C. and J.G.; formal analysis, D.C. and J.G.; investigation, D.C., J.G. and A.C.F.; resources, J.G. and A.C.F.; writing – original draft preparation, J.G. and A.C.F.; writing – review and editing, J.G. and A.C.F.; visualization, J.G. and A.C.F.; supervision, J.G. and A.C.F.; project administration, J.G. and A.C.F. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by CNPq grant numbers 303267/2019-4 and 405364/2018-0. The APC was funded by the School of Mathematics and Statistics, Victoria University of Wellington, New Zealand.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The simulated data presented in this study can be reproduced using the code provided as Supplementary Material. The data from actual images are available on request from the corresponding author. They are not publicly available due to requirements from the providers.

Conflicts of Interest

The authors declare no conflict of interest.

Appendix A. Computational Information

Simulations were performed using the R language and environment for statistical computing, version 3.3 [48], on a computer with an Intel® Core™ i7-4790K CPU at 4 GHz, 16 GB of RAM, and a 64-bit operating system. The R code that implements our proposal, along with data examples, is available as Supplementary Material.

References

1. Oliver, C.; Quegan, S. Understanding Synthetic Aperture Radar Images; SciTech Publishing: Raleigh, NC, USA, 2004.

2. Duskunovic, I.; Heene, G.; Philips, W.; Bruyland, I. Urban area detection in SAR imagery using a new speckle reduction technique and Markov random field texture classification. Int. Geosci. Remote Sens. Symp. 2000, 2, 636–638.

3. Gambini, J.; Cassetti, J.; Lucini, M.; Frery, A. Parameter Estimation in SAR Imagery using Stochastic Distances and Asymmetric Kernels. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2015, 8, 365–375.

4. Rojo, J. Heavy-tailed densities. Wiley Interdiscip. Rev. Comput. Stat. 2013, 5, 30–40.

5. Gao, G. Statistical modeling of SAR images: A survey. Sensors 2010, 10, 775–795.

6. Lee, J.S. Digital Image Enhancement and Noise Filtering by Use of Local Statistics. IEEE Trans. Pattern Anal. Mach. Intell. 1980, 2, 165–168.

7. Lee, J.S. Refined filtering of image noise using local statistics. Comput. Graph. Image Process. 1981, 15, 380–389.

8. Lee, J.S.; Wen, J.H.; Ainsworth, T.L.; Chen, K.S.; Chen, A.J. Improved sigma filter for speckle filtering of SAR imagery. IEEE Trans. Geosci. Remote Sens. 2009, 47, 202–213.

9. Kuan, D.; Sawchuk, A.; Strand, T.; Chavel, P. Adaptive restoration of images with speckle. IEEE Trans. Acoust. Speech Signal Process. 1987, 35, 373–383.

10. Lopes, A.; Nezry, E.; Touzi, R.; Laur, H. Maximum a posteriori speckle filtering and first order texture models in SAR images. In Proceedings of the 10th Annual International Symposium on Geoscience and Remote Sensing, College Park, MD, USA, 20–24 May 1990; pp. 2409–2412.

11. Touzi, R. A review of speckle filtering in the context of estimation theory. IEEE Trans. Geosci. Remote Sens. 2002, 40, 2392–2404.

12. Lattari, F.; Gonzalez Leon, B.; Asaro, F.; Rucci, A.; Prati, C.; Matteucci, M. Deep Learning for SAR Image Despeckling. Remote Sens. 2019, 11, 1532.

13. Moschetti, E.; Palacio, M.G.; Picco, M.; Bustos, O.H.; Frery, A.C. On the use of Lee’s protocol for speckle-reducing techniques. Lat. Am. Appl. Res. 2006, 36, 115–121.

14. Perona, P.; Malik, J. Scale-space and edge detection using anisotropic diffusion. IEEE Trans. Pattern Anal. Mach. Intell. 1990, 12, 629–639.

15. Yu, Y.; Acton, S.T. Speckle Reducing Anisotropic Diffusion. IEEE Trans. Image Process. 2002, 11, 1260–1270.

16. Cozzolino, D.; Parrilli, S.; Scarpa, G.; Poggi, G.; Verdoliva, L. Fast Adaptive Nonlocal SAR Despeckling. IEEE Geosci. Remote Sens. Lett. 2014, 11, 524–528.

17. Buades, A.; Coll, B.; Morel, J. A review of image denoising algorithms, with a new one. Multiscale Model. Simul. 2005, 4, 490–530.

18. Duval, V.; Aujol, J.F.; Gousseau, Y. A Bias-Variance Approach for the Nonlocal Means. SIAM J. Imaging Sci. 2011, 4, 760–788.

19. Delon, J.; Desolneux, A. A Patch-Based Approach for Removing Impulse or Mixed Gaussian-Impulse Noise. SIAM J. Imaging Sci. 2013, 6, 1140–1174.

20. Lebrun, M.; Buades, A.; Morel, J. A Nonlocal Bayesian Image Denoising Algorithm. SIAM J. Imaging Sci. 2013, 6, 1665–1688.

21. Torres, L.; Sant’Anna, S.J.S.; Freitas, C.C.; Frery, A.C. Speckle Reduction in Polarimetric SAR Imagery with Stochastic Distances and Nonlocal Means. Pattern Recognit. 2014, 47, 141–157.

22. Ferraioli, G.; Pascazio, V.; Schirinzi, G. Ratio-Based Nonlocal Anisotropic Despeckling Approach for SAR Images. IEEE Trans. Geosci. Remote Sens. 2019, 57, 7785–7798.

23. Aghababaei, H.; Ferraioli, G.; Vitale, S.; Zamani, R.; Schirinzi, G.; Pascazio, V. Non Local Model Free Denoising Algorithm for Single and Multi-Channel SAR Data. IEEE Trans. Geosci. Remote Sens. 2021, in press.

24. Argenti, F.; Lapini, A.; Bianchi, T.; Alparone, L. A tutorial on speckle reduction in synthetic aperture radar images. IEEE Geosci. Remote Sens. Mag. 2013, 1, 6–35.

25. Gómez Déniz, L.; Ospina, R.; Frery, A.C. Unassisted Quantitative Evaluation of Despeckling Filters. Remote Sens. 2017, 9, 389.

26. Yue, D.X.; Xu, F.; Frery, A.C.; Jin, Y.Q. SAR Image Statistical Modeling Part I: Single-Pixel Statistical Models. IEEE Geosci. Remote Sens. Mag. 2021, 9, 82–114.

27. Yue, D.X.; Xu, F.; Frery, A.C.; Jin, Y.Q. SAR Image Statistical Modeling Part II: Spatial Correlation Models and Simulation. IEEE Geosci. Remote Sens. Mag. 2021, 9, 115–138.

28. Frery, A.; Müller, H.; Yanasse, C.; Sant’Anna, S. A model for extremely heterogeneous clutter. IEEE Trans. Geosci. Remote Sens. 1997, 35, 648–659.

29. Mejail, M.; Jacobo-Berlles, J.C.; Frery, A.C.; Bustos, O.H. Classification of SAR images using a general and tractable multiplicative model. Int. J. Remote Sens. 2003, 24, 3565–3582.

30. Shannon, C.E. A mathematical theory of communication. Bell Syst. Tech. J. 1948, 27, 379–423.

31. Nascimento, A.D.C.; Frery, A.C.; Cintra, R.J. Detecting Changes in Fully Polarimetric SAR Imagery With Statistical Information Theory. IEEE Trans. Geosci. Remote Sens. 2019, 57, 1380–1392.

32. Kullback, S.; Leibler, R.A. On Information and Sufficiency. Ann. Math. Stat. 1951, 22, 79–86.

33. Rényi, A. On Measures of Entropy and Information. In Proceedings of the Fourth Berkeley Symposium on Mathematical Statistics and Probability, Volume 1: Contributions to the Theory of Statistics; University of California Press: Berkeley, CA, USA, 1961; pp. 547–561.

34. Chan, D.; Gambini, J.; Frery, A.C. Speckle Noise Reduction In SAR Images Using Information Theory. In Proceedings of the 2020 IEEE Latin American GRSS & ISPRS Remote Sensing Conference (LAGIRS), Santiago, Chile, 22–26 March 2020; pp. 456–461.

35. Salicrú, M.; Morales, D.; Menéndez, M.L.; Pardo, L. On the Applications of Divergence Type Measures in Testing Statistical Hypotheses. J. Multivar. Anal. 1994, 51, 372–391.

36. Yue, D.X.; Xu, F.; Frery, A.C.; Jin, Y.Q. A Generalized Gaussian Coherent Scatterer Model for Correlated SAR Texture. IEEE Trans. Geosci. Remote Sens. 2020, 58, 2947–2964.

37. Chan, D.; Rey, A.; Gambini, J.; Frery, A.C. Sampling from the $\mathcal{G}_I^0$ distribution. Monte Carlo Methods Appl. 2018, 24, 271–287.

38. Salicrú, M.; Menéndez, M.L.; Pardo, L. Asymptotic distribution of (h;ϕ)-entropy. Commun. Stat. Theory Methods 1993, 22, 2015–2031.

39. Pardo, L.; Morales, D.; Salicrú, M.; Menéndez, M. Large sample behavior of entropy measures when parameters are estimated. Commun. Stat. Theory Methods 1997, 26, 483–501.

40. Frery, A.C.; Cintra, R.J.; Nascimento, A.D.C. Entropy-Based Statistical Analysis of PolSAR Data. IEEE Trans. Geosci. Remote Sens. 2013, 51, 3733–3743.

41. Frery, A.C.; Cribari-Neto, F.; de Souza, M.O. Analysis of minute features in speckled imagery with maximum likelihood estimation. EURASIP J. Adv. Signal Process. 2004, 2004, 375370.

42. Wang, T.; Guan, S.U.; Liu, F. Entropic Feature Discrimination Ability for Pattern Classification Based on Neural IAL. In Advances in Neural Networks – ISNN 2012; Wang, J., Yen, G.G., Polycarpou, M.M., Eds.; Springer: Berlin/Heidelberg, Germany, 2012; pp. 30–37.

43. Cochran, W.G. The distribution of quadratic forms in a normal system, with applications to the analysis of covariance. Math. Proc. Camb. Philos. Soc. 1934, 30, 178–191.

44. Nascimento, A.D.C.; Horta, M.M.; Frery, A.C.; Cintra, R.J. Comparing Edge Detection Methods Based on Stochastic Entropies and Distances for PolSAR Imagery. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2014, 7, 648–663.

45. Ebert, D. Texturing & Modeling: A Procedural Approach; Morgan Kaufmann: San Francisco, CA, USA, 2003.

46. Haralick, R.M.; Shanmugam, K.; Dinstein, I. Textural features for image classification. IEEE Trans. Syst. Man Cybern. 1973, 3, 610–621.

47. Gomez, L.; Alvarez, L.; Mazorra, L.; Frery, A.C. Fully PolSAR image classification using machine learning techniques and reaction-diffusion systems. Neurocomputing 2017, 255, 52–60.

48. R Core Team. R: A Language and Environment for Statistical Computing; R Foundation for Statistical Computing: Vienna, Austria, 2016.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).