Abstract

In recent years, the scale of rural land transfer has gradually expanded, and cultivated land is increasingly being diverted from grain production (non-grain-oriented use). Obtaining crop planting information is therefore essential to guaranteeing national food security; however, mapping the spatial distribution of crops over large areas often involves heavy computation and yields low accuracy. To address this, we propose the IO-Growth method, which treats each 10-day growth stage as an index and combines it with the spectral features of crops to refine the effective time interval of conventional wavebands for object-oriented classification. The results were as follows: (1) the IO-Growth method achieved an overall accuracy and F1 score of 0.92, both 6.98% higher than those of the method applied without growth stages; (2) the IO-Growth method reduced 288 features to only 5, namely the Sentinel-2 Red Edge1, normalized difference vegetation index, Red, short-wave infrared2, and Aerosols features from the 261st to the 270th day, which greatly improved the utilization rate of the wavebands; (3) geographic data processing platforms make it simple to complete computations on massive datasets in a short time. These results show that the IO-Growth method is suitable for large-scale vegetation mapping.

1. Introduction

Food security mainly refers to the security of the food supply [1]. Non-grain-oriented land use refers to cultivated land that was originally used to grow wheat (Triticum aestivum L.), rice (Oryza sativa L.), and other grain crops being converted to other purposes, which reduces the actual area of grain production and lowers grain yield. At present, non-grain-oriented use is already the dominant trend in land transfer, and if it continues, it will become a major hidden danger to the national food security strategy [2].

Therefore, to control the non-grain-oriented use of agricultural land and guarantee national food security, monitoring the area planted with crops is of the utmost importance [3]. Remote-sensing technology has unique advantages for crop monitoring owing to its wide observation range and short revisit cycles [4], and many studies have used it to rapidly extract the spatial distributions of crops [5]. The methods commonly used for crop mapping by remote sensing are pixel-based classification and object-oriented classification. The latter has been adopted by a growing number of studies [6,7] because it avoids misclassification caused by pixels of the same object having different spectra or different objects having the same spectra [8] and effectively suppresses salt-and-pepper noise [9]. By combining spectral, texture, terrain, and other features extracted from remote-sensing images, object-oriented classification can achieve accuracies above 85% [10].

In both pixel-based and object-oriented classification, machine-learning classifiers have gradually replaced the conventional maximum-likelihood classifier owing to their better performance [11]. Common machine-learning classifiers include random forest (RF), classification and regression trees (CART), and support vector machine (SVM) [12]. Among them, SVM and RF are regarded as robust to data noise, so they are applied more widely than other classifiers [13]. For example, Adriaan et al. combined remote-sensing datasets with 10 machine-learning methods to extract the spatial distributions of crops, compared various accuracy indicators of the classification results, and found that RF provided the highest accuracy [14]. Based on RF, Schulz et al. proposed a new classification method for large-scale agricultural monitoring of fishing crops and obtained an average prediction accuracy of 84% [15]. Machine learning has made remote-sensing research much more convenient to carry out; however, running machine learning on a local computer requires adequate computing performance [16]. The Google Earth Engine (GEE) currently addresses this problem because it can run common algorithms, including machine learning, online [17]. GEE is a cloud computing platform developed by Google for satellite imagery and earth observation data [18]; it provides Landsat, Sentinel, Moderate Resolution Imaging Spectroradiometer (MODIS), and other satellite images and data products free of charge [19]. Its rich resources and powerful computing capabilities have made online processing of geographic data the main trend in current remote-sensing research [20].

Because GEE greatly reduces the complexity of data access and computation, it is easier to carry out remote-sensing research with data fusion, that is, integrating information obtained by sensors on satellites, aircraft, and ground platforms with different spatial and spectral resolutions to generate fused data containing more detailed information than any single data source [21]. Commonly used image fusion methods include (1) pixel-level fusion (data fusion), which combines the original image pixels; (2) feature fusion, which extracts features from individual datasets and combines them; and (3) decision fusion, which combines the results of multiple algorithms to obtain the final result [21,22]. Data fusion is now widely used in remote-sensing research. In crop classification, some early studies fused the images acquired during the growth periods of crops [23]; later studies found that time-series data obtained during crop growth fully reflect the phenological features of different crops and the changes in their physical and chemical parameters and indicators [24], and contribute greatly to improving classification accuracy [25]. Many studies [26,27,28] therefore fuse data by calculating the median reflectance or median index value of time-series images [29].
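A per-pixel median composite, as used in the time-series fusion described above, can be sketched as follows. This is a minimal Python illustration, not GEE code (in GEE the analogous step is a median reduction of an image collection, such as `ImageCollection.median()`). It assumes cloud-masked observations are represented as `None` and uses hypothetical reflectance values scaled by 10,000.

```python
from statistics import median

def median_composite(stack):
    """Per-pixel median over a time series of images.

    stack: list of images (one per acquisition), each a 2-D list of values;
    None marks a cloud-masked observation (an assumption of this sketch).
    Pixels with no valid observation stay None.
    """
    rows, cols = len(stack[0]), len(stack[0][0])
    out = [[None] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            valid = [img[r][c] for img in stack if img[r][c] is not None]
            if valid:
                out[r][c] = median(valid)
    return out

# Three acquisitions of a hypothetical 1 x 2 scene; the second is partly masked.
stack = [
    [[1000, 3000]],
    [[None, 3200]],
    [[1400, 9000]],  # 9000 mimics a haze outlier that escaped the cloud mask
]
print(median_composite(stack))  # [[1200.0, 3200]]
```

The median ignores the single bright outlier in the second pixel, which is one reason median compositing is a popular way to fuse time-series images.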

In large-scale remote-sensing research, methods that fuse the images acquired during the crop growth period and then extract spectral features for classification [30] do not incorporate information about the crops' growth stages, so the variation in crop spectra across periods cannot be fully utilized. Moreover, time-series methods involve relatively large data volumes: fusing the data for the entire area and running the subsequent computations cannot be completed in a single pass and may even exceed the GEE computation limits. Therefore, how to fully exploit the variation features of crop growth [31] while reducing data redundancy and data volume remains an open problem.

Based on the above analysis, we propose the IO-Growth method for extracting the spatial distribution of crops, which combines object-oriented classification with the RF classifier. Each growth stage of the crops is treated as an independent attribute and combined with the spectral features for joint feature selection. The method retains the advantage of time-series data, making full use of the growth stages that contribute most to classification accuracy, while filtering out data from growth stages with low contributions, thereby reducing data volume and simplifying the time series. The aims of this study were as follows: (1) to explore large-scale online crop extraction on the GEE platform; (2) to identify the advantages and disadvantages of the IO-Growth method by comparing it with an object-oriented classification that does not incorporate growth stages and with the time-series method, which currently has an advantage in accuracy; and (3) to obtain the spatial distributions of rice, maize (Zea mays L.), and soybean (Glycine max L. Merr.) in the study area, the Sanjiang Plain, in 2019.

2. Materials and Methods

2.1. Study Area

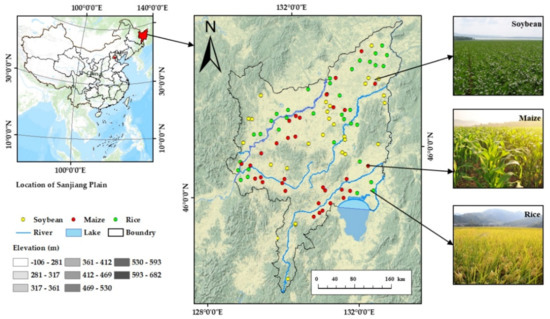

The Sanjiang Plain was chosen as the study area. As shown in Figure 1, the plain is located in the northeastern part of Heilongjiang Province, China, covers a total area of approximately 108,900 km², and encompasses seven cities. The terrain is vast and flat, and the climate is a temperate humid to semihumid continental monsoon climate, with an average annual temperature between 1 °C and 4 °C and annual precipitation between 500 and 650 mm. Precipitation is concentrated mostly from June to October, and solar radiation is comparatively abundant; because rainfall and heat coincide in the same season, the region is well suited to agriculture. The Sanjiang Plain is a production base for commercial grains such as soybean, maize, and rice, which makes it an important reclamation area for the country. According to the statistical yearbook, rice, maize, and soybean together accounted for more than 90% of the total area of the main crops in Heilongjiang Province in 2019. Heilongjiang has become the largest grain-producing province in China, and its grain yield has ranked first in the nation for 10 consecutive years.

Figure 1.

Overview of the study area: Sanjiang Plain, located in northeast Heilongjiang Province.

2.2. Datasets and Preprocessing

2.2.1. Sentinel-2 Images and Preprocessing

This study used the Sentinel-2 Level-2A product available in GEE for the 2019 crop growth period in the Sanjiang Plain, that is, from May to October [32]. Operating together, the two Sentinel-2 satellites (Sentinel-2A and Sentinel-2B) shorten the global revisit cycle to only five days. Sentinel-2 also provides a Level-1C product, which is orthorectified top-of-atmosphere reflectance without atmospheric correction, whereas the Level-2A product contains atmospherically corrected surface reflectance. Reflectance (ρ) is a function of wavelength (λ) and is the ratio of reflected energy (E_R) to incident energy (E_I) [33]:

$$\rho(\lambda) = \frac{E_R(\lambda)}{E_I(\lambda)}$$

Sentinel-2 imagery has medium spatial resolutions of 10, 20, and 60 m [34]; the Level-2A product has 12 spectral wavebands stored as unsigned integers, whose resolutions and wavelengths are shown in Table 1.

Table 1.

Band information for the Sentinel-2 Level-2A product.
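Because the Level-2A wavebands are stored as unsigned integers, they must be rescaled to reflectance before indices are computed. The sketch below assumes the scale factor of 10,000 documented for GEE's Sentinel-2 surface-reflectance collections (newer processing baselines may also apply an offset, so the catalog entry should be checked). The resolution table lists the nominal resolutions of the 12 L2A bands, and `reflectance_from_energy` simply evaluates the ratio ρ(λ) = E_R(λ)/E_I(λ).

```python
# Nominal spatial resolution (m) of the 12 Sentinel-2 Level-2A spectral bands.
L2A_BAND_RESOLUTION_M = {
    "B1": 60, "B2": 10, "B3": 10, "B4": 10, "B5": 20, "B6": 20,
    "B7": 20, "B8": 10, "B8A": 20, "B9": 60, "B11": 20, "B12": 20,
}

SCALE = 10_000  # assumed quantification value: stored value = reflectance * 10000

def dn_to_reflectance(dn, scale=SCALE):
    """Convert an unsigned-integer L2A value to surface reflectance."""
    if dn < 0:
        raise ValueError("L2A values are stored as unsigned integers")
    return dn / scale

def reflectance_from_energy(reflected, incident):
    """Reflectance at one wavelength: reflected energy / incident energy."""
    return reflected / incident

print(dn_to_reflectance(2500))  # 0.25
```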

2.2.2. Training and Validation Sample Data

Samples were selected online as pixels from Sentinel-2 images composited on the GEE platform for May to October 2019 [35]. As Table 2 shows, a total of 1843 samples were selected from the Sanjiang Plain, including 305 rice, 303 maize, and 303 soybean samples; the remaining 932 samples belong to the auxiliary woodland, water, and built-up classes. During selection, the principles of randomness and uniformity were followed so that the samples were distributed as evenly as possible across the study area. However, because rice is sparsely distributed in the central and southern parts of the study area, fewer rice samples were selected there; maize is planted more in the middle of the study area, so more maize samples were selected there; and soybean is scattered throughout the study area, so its samples were selected more evenly.

Table 2.

Summary of the number of samples used per class for classification training and testing.

After sample selection, each sample was checked against high-resolution Google Earth imagery from May to October 2019, using visual interpretation together with the 10 m crop-type maps of northeast China for 2017–2019 [36], released in 2020. Samples inconsistent with the Google Earth imagery or the crop-type maps were deleted to avoid mislabeling. In addition, 299 woodland samples, 294 water samples, and 339 built-up samples were used to assist the classification. Approximately 70% of the sample points were randomly selected for training, and the remaining approximately 30% were used for testing [37].
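The approximate 70/30 split can be made reproducible with a fixed random seed. This minimal sketch draws one uniform random number per sample and assigns the sample to training when the number is below 0.7, which yields approximately (not exactly) 70%. It mirrors the common GEE pattern of adding a random column to a feature collection and filtering on a threshold, but the seed value and the per-sample draw here are assumptions, not the authors' code.

```python
import random

# Sample counts per class from Section 2.2.2 (1843 in total).
CLASS_COUNTS = {"rice": 305, "maize": 303, "soybean": 303,
                "woodland": 299, "water": 294, "built-up": 339}

def random_split(samples, train_fraction=0.7, seed=42):
    """Assign each sample to training if its uniform draw < train_fraction.

    A fixed seed makes the split reproducible (the value 42 is arbitrary).
    """
    rng = random.Random(seed)
    train, test = [], []
    for sample in samples:
        (train if rng.random() < train_fraction else test).append(sample)
    return train, test

samples = [(cls, i) for cls, n in CLASS_COUNTS.items() for i in range(n)]
train, test = random_split(samples)
print(len(samples), len(train), len(test))
```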

2.3. Method

2.3.1. IO-Growth Method Overview

The IO-Growth method proposed in this study represents the growth stages of the crops at 10-day intervals, treats each stage as an independent attribute, and composites the images within each growth stage [29] to extract the spectral features of that stage. Feature selection is then applied to the spectral features carrying growth-stage attributes to obtain the feature combinations that contribute most to classification [38], which refines the effective interval of the feature wavebands within the growth period. Finally, object-oriented classification is carried out [39]. Because the spectral features of each growth stage are extracted as independent features before optimization, the method greatly improves the utilization rate of the spectral features and reduces data redundancy.
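Assigning acquisitions to 10-day growth stages can be sketched as follows. The sketch assumes the first stage starts on 1 May 2019 (day 121 of a non-leap year), which reproduces stage labels such as "261–270d" used in the Results; the exact stage boundaries are an assumption here, and under it acquisitions after day 300 fall outside the 18 stages.

```python
import datetime

STUDY_START_DOY = 121  # 1 May 2019, assumed start of the first stage
STAGE_LENGTH = 10      # days per growth stage
N_STAGES = 18

def stage_index(doy):
    """0-based growth-stage index for a day of year, or None outside the period."""
    idx = (doy - STUDY_START_DOY) // STAGE_LENGTH
    return idx if 0 <= idx < N_STAGES else None

def stage_label(idx):
    """Label a stage by its first and last day of year, e.g. '261-270d'."""
    first = STUDY_START_DOY + idx * STAGE_LENGTH
    return f"{first}-{first + STAGE_LENGTH - 1}d"

def group_by_stage(dates):
    """Group acquisition dates into 10-day stages keyed by their labels."""
    groups = {}
    for d in dates:
        idx = stage_index(d.timetuple().tm_yday)
        if idx is not None:
            groups.setdefault(stage_label(idx), []).append(d)
    return groups

dates = [datetime.date(2019, 9, 20), datetime.date(2019, 9, 25),
         datetime.date(2019, 4, 30)]
print(sorted(group_by_stage(dates)))  # ['261-270d']
```

Each group would then be composited separately, so every stage contributes its own set of spectral features.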

2.3.2. SEaTH Algorithm

The basic idea of the separability and thresholds (SEaTH) algorithm is that a feature that does not conform to a normal distribution has poor separability and is unsuitable for classification. For features that do conform to a normal distribution, the Jeffries–Matusita (JM) distance is used to measure the separability between classes [40]. The JM distance J between two classes C1 and C2 is expressed as follows:

$$J = 2\left(1 - e^{-B}\right)$$

$$B = \frac{1}{8}\left(m_1 - m_2\right)^2 \frac{2}{\alpha_1 + \alpha_2} + \frac{1}{2}\ln\left[\frac{\alpha_1 + \alpha_2}{2\sqrt{\alpha_1 \alpha_2}}\right]$$

where B is the Bhattacharyya distance, and m_i and α_i (i = 1, 2) are the mean and variance, respectively, of a given feature for classes C1 and C2. J ranges from 0.00 to 2.00. When J is between 0.00 and 1.00, the feature has no separability; when J is between 1.00 and 1.90, the classes have some degree of separability, but the spectral overlap is large; and when J is between 1.90 and 2.00, the feature is highly separable. Therefore, features with a JM distance greater than 1.90 were considered to satisfy the separability requirement, which both reduced the number of classification features and removed features with low separability, thereby reducing data redundancy and improving computational efficiency.
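The JM screening rule described above can be sketched in plain Python. This minimal version assumes each feature is already approximately normal within each class (the SEaTH normality check is omitted), uses sample variances for α_i, and keeps a feature only if J exceeds 1.90 for every pair of classes; the data structure, feature names, and values are hypothetical.

```python
import math
from itertools import combinations
from statistics import mean, variance

def bhattacharyya(m1, a1, m2, a2):
    """Bhattacharyya distance B for two univariate normal classes (a = variance)."""
    return (0.125 * (m1 - m2) ** 2 * 2 / (a1 + a2)
            + 0.5 * math.log((a1 + a2) / (2 * math.sqrt(a1 * a2))))

def jm_distance(x1, x2):
    """J = 2 * (1 - exp(-B)) from two samples of one feature (variances must be > 0)."""
    b = bhattacharyya(mean(x1), variance(x1), mean(x2), variance(x2))
    return 2 * (1 - math.exp(-b))

def separable_features(samples, threshold=1.90):
    """samples: {feature: {class: [values]}}.

    Keep a feature only if J > threshold for every pair of classes.
    """
    kept = []
    for feature, by_class in samples.items():
        pairs = combinations(sorted(by_class), 2)
        if all(jm_distance(by_class[c1], by_class[c2]) > threshold for c1, c2 in pairs):
            kept.append(feature)
    return kept

# Hypothetical per-class values for two features.
samples = {
    "f_good": {"rice": [0, 1, 2], "maize": [100, 101, 102], "soybean": [200, 201, 202]},
    "f_bad": {"rice": [0, 1, 2], "maize": [0, 1, 2], "soybean": [200, 201, 202]},
}
print(separable_features(samples))  # ['f_good']
```

"f_bad" is rejected because rice and maize have identical distributions (J = 0) for that feature, even though both are well separated from soybean.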

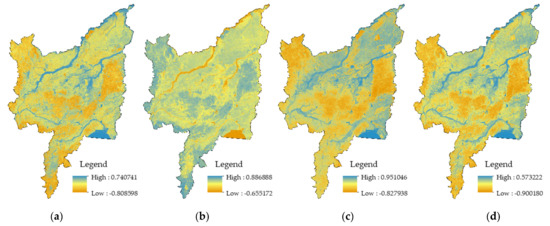

2.3.3. Construction of the Feature Collection

The growth periods of rice, maize, and soybean in northeastern China usually run from May to September, but the crops are occasionally still immature and unharvested in October. We therefore selected May to October as the study period and treated every 10 days as a growth stage, giving a total of 18 growth stages. In addition to the original wavebands, the visible, NIR, and SWIR bands of the Sentinel-2 L2A product were used [41,42] to calculate the normalized difference water index (NDWI) [43,44], normalized difference vegetation index (NDVI) [45], modified normalized difference water index (MNDWI) [46], and enhanced water index (EWI) [47], as shown in Figure 2 and Table 3. NDVI is sensitive to temporal and spatial variations in vegetation [48] and has been widely used in rice monitoring; NDWI helps delineate the boundary between vegetation and water [44]; MNDWI readily distinguishes between shadow and water [46]; and EWI additionally incorporates a near-infrared band and a short-wave infrared band, which effectively distinguishes residential areas, soil, and water [47]. Because different crops differ in soil and vegetation water content, these indices can help distinguish them; they can also support the extraction of farmland surface water content in the early stages of crop growth and thus crop classification. The indices were added to the image as independent spectral wavebands, increasing the number of wavebands from 12 to 16. Images were then composited for each of the 18 growth stages [49], and the growth-stage time attribute was combined with the 16 spectral features of each stage, yielding a feature set of 288 (18 × 16) features.

Figure 2.

The results of the features calculated from Sentinel-2 data: (a) NDWI, (b) NDVI, (c) MNDWI, (d) EWI.

Table 3.

Detailed descriptions of the features extracted from Sentinel-2 data.
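The construction of the 288-feature set can be sketched as follows. Table 3 is not reproduced here, so the band assignments are assumptions: NDVI = (B8 − B4)/(B8 + B4), NDWI in the McFeeters form (B3 − B8)/(B3 + B8), MNDWI = (B3 − B11)/(B3 + B11), and EWI = (B3 − (B8 + B11))/(B3 + (B8 + B11)). The stage labels also assume that the first stage starts on day 121.

```python
from itertools import product

BANDS = ["B1", "B2", "B3", "B4", "B5", "B6", "B7", "B8", "B8A", "B9", "B11", "B12"]
INDICES = ["NDVI", "NDWI", "MNDWI", "EWI"]
# 18 ten-day stages, assumed to start on day 121 (1 May 2019).
STAGES = [f"{start}-{start + 9}d" for start in range(121, 301, 10)]

def nd(a, b):
    """Normalized difference (a - b) / (a + b)."""
    return (a - b) / (a + b)

def add_indices(px):
    """Append the four indices to a dict of band reflectances (band choices assumed)."""
    out = dict(px)
    out["NDVI"] = nd(px["B8"], px["B4"])
    out["NDWI"] = nd(px["B3"], px["B8"])
    out["MNDWI"] = nd(px["B3"], px["B11"])
    nir_swir = px["B8"] + px["B11"]
    out["EWI"] = (px["B3"] - nir_swir) / (px["B3"] + nir_swir)
    return out

# Every stage is paired with every waveband: 18 stages x 16 features.
feature_names = [f"{stage}:{band}" for stage, band in product(STAGES, BANDS + INDICES)]
print(len(feature_names))  # 288
print(add_indices({"B3": 0.08, "B4": 0.10, "B8": 0.40, "B11": 0.20})["NDVI"])
```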

2.3.4. Simple Noniterative Clustering Algorithm

Simple noniterative clustering (SNIC) [50] is an object-oriented image segmentation method and an improvement [51] on the simple linear iterative clustering (SLIC) segmentation method [52]. SLIC groups adjacent pixels with similar texture, color, brightness, and other features into irregular, visually meaningful pixel blocks (superpixels), so that a small number of superpixels replaces a large number of pixels in representing image features, which greatly reduces the complexity of subsequent image processing. However, SLIC has three problems [50,53]: (1) it requires multiple iterations, (2) the search spaces of neighboring cluster centers partially overlap, and (3) connectivity between pixels is not explicitly enforced. SLIC therefore leaves room for improvement in efficiency, and SNIC overcomes these shortcomings [50]. SNIC generates K initial centroids on a regular grid over the image and measures the affinity between a pixel and a centroid by their distance in the five-dimensional space of color and spatial coordinates. With spatial position p = (x, y) and CIELAB color c = (l, a, b), the distance [50] between pixel j and centroid k is calculated as follows:

$$d_{j,k} = \sqrt{\frac{\lVert p_j - p_k \rVert_2^2}{S} + \frac{\lVert c_j - c_k \rVert_2^2}{M}}$$

where S is the normalizing factor for spatial distance and M is the normalizing factor for color distance.

In addition, SNIC uses a priority queue Q that always returns the pixel with the minimum distance to a centroid; that pixel is added to the corresponding superpixel cluster, and the centroid is updated online after each addition [50].
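The priority-queue growth that distinguishes SNIC from SLIC can be sketched on a toy image. This is a simplified illustration, not GEE's `ee.Algorithms.Image.Segmentation.SNIC` implementation: it uses a single intensity channel instead of CIELAB color, 4-connectivity, and plain Python lists. The parameters `spatial_norm` and `color_norm` play the roles of S and M in the distance above.

```python
import heapq
import math

def snic(image, grid_step, spatial_norm, color_norm):
    """Simplified SNIC on a single-band image (list of rows of floats).

    Returns a label grid in which each pixel holds its superpixel id.
    """
    h, w = len(image), len(image[0])
    labels = [[-1] * w for _ in range(h)]
    sums = []   # per superpixel: [sum_x, sum_y, sum_value, n_pixels]
    queue = []  # priority queue Q of (distance, x, y, superpixel id)
    # Seed the K initial centroids on a regular grid.
    for y in range(grid_step // 2, h, grid_step):
        for x in range(grid_step // 2, w, grid_step):
            sums.append([0.0, 0.0, 0.0, 0])
            heapq.heappush(queue, (0.0, x, y, len(sums) - 1))
    while queue:
        _, x, y, k = heapq.heappop(queue)
        if labels[y][x] != -1:
            continue  # pixel already claimed by a closer queue element
        labels[y][x] = k
        s = sums[k]
        s[0] += x
        s[1] += y
        s[2] += image[y][x]
        s[3] += 1
        # Online centroid update after adding the pixel.
        cx, cy, cv = s[0] / s[3], s[1] / s[3], s[2] / s[3]
        for nx, ny in ((x + 1, y), (x - 1, y), (x, y + 1), (x, y - 1)):
            if 0 <= nx < w and 0 <= ny < h and labels[ny][nx] == -1:
                d = math.sqrt(((nx - cx) ** 2 + (ny - cy) ** 2) / spatial_norm
                              + (image[ny][nx] - cv) ** 2 / color_norm)
                heapq.heappush(queue, (d, nx, ny, k))
    return labels

# Toy 8 x 8 image: dark left half, bright right half.
image = [[0.0] * 4 + [100.0] * 4 for _ in range(8)]
labels = snic(image, grid_step=4, spatial_norm=16.0, color_norm=1.0)
```

With a strong color penalty (`color_norm = 1.0`), no superpixel crosses the sharp intensity edge in the middle of the toy image, and each of the four grid seeds grows into its own superpixel in a single pass, without iterations.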

In the SNIC algorithm, the choice of segmentation scale directly affects the accuracy of object-oriented classification [54,55]. Following the principle of the estimation of scale parameter (ESP) algorithm [56], the local variance (LV) of the segmented objects is computed in each waveband at each segmentation scale, and the mean LV across wavebands is used to represent the homogeneity within the segmented objects and thus to judge the quality of the segmentation [57]:

$$\overline{LV} = \frac{LV_1 + LV_2 + \cdots + LV_{N_b}}{N_b}$$

where LV_i is the LV in waveband i and N_b is the number of wavebands.

The rate of change (ROC) of the LV is then calculated to identify the optimal segmentation parameters:

$$ROC = \left[\frac{LV_n - LV_{n-1}}{LV_{n-1}}\right] \times 100$$

where LV_n is the local variance of the segmented objects at scale n, and LV_{n−1} is the local variance at scale n − 1. The ROC is used to judge which segmentation is best: in theory, a local peak in the ROC curve indicates that the corresponding segmentation scale is optimal for a certain land type [58].
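The mean-LV and ROC computations, together with the search for local peaks, can be sketched as follows. The LV values in the usage example are hypothetical and were chosen only to show how peaks are detected; consecutive keys of the dictionary are treated as scales n − 1 and n.

```python
def mean_lv(lv_per_band):
    """Mean local variance over wavebands at one segmentation scale."""
    return sum(lv_per_band) / len(lv_per_band)

def rate_of_change(lv_by_scale):
    """ROC at scale n = (LV_n - LV_{n-1}) / LV_{n-1} * 100 for consecutive scales."""
    scales = sorted(lv_by_scale)
    return {n: (lv_by_scale[n] - lv_by_scale[prev]) / lv_by_scale[prev] * 100
            for prev, n in zip(scales, scales[1:])}

def roc_peaks(roc):
    """Scales at which the ROC curve has a strict local maximum."""
    s = sorted(roc)
    return [mid for left, mid, right in zip(s, s[1:], s[2:])
            if roc[mid] > roc[left] and roc[mid] > roc[right]]

# Hypothetical mean LV values at scales 2-8.
lv = {2: 10.0, 3: 11.0, 4: 13.0, 5: 13.5, 6: 14.0, 7: 15.5, 8: 15.6}
print(roc_peaks(rate_of_change(lv)))  # [4, 7]
```

Each peak becomes a candidate scale, which is why the procedure yields a set of candidates rather than a single optimum.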

2.3.5. Random Forest

Random forest (RF) [59] is a classification and regression model. It draws bootstrap samples from the original dataset, each containing about two-thirds of the original samples, and grows a decision tree on each bootstrap sample; the dataset is then classified by aggregating the predictions of all trees by majority vote. The samples drawn for training a tree are called 'in-bag', while the remaining 'out-of-bag' samples are used as validation samples to estimate classification accuracy. RF has two main user-defined parameters: the number of trees (ntree) and the number of variables randomly selected as split candidates at each node (mtry) [60]. In this study, ntree was set to 150 to ensure accuracy and avoid overfitting, and mtry was set to its default value, the square root of the number of input features.
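Two of the RF details above can be checked numerically: a bootstrap sample of size n contains on average a fraction of about 1 − 1/e ≈ 0.632 of the distinct original samples (hence "about two-thirds"), and the default mtry is the square root of the number of features. This is an illustrative sketch, not GEE's random forest classifier; rounding the square root down is an assumption.

```python
import math
import random
from collections import Counter

def bootstrap_split(n_samples, rng):
    """Draw n_samples indices with replacement; return (in-bag, out-of-bag)."""
    in_bag = [rng.randrange(n_samples) for _ in range(n_samples)]
    drawn = set(in_bag)
    out_of_bag = [i for i in range(n_samples) if i not in drawn]
    return in_bag, out_of_bag

def default_mtry(n_features):
    """Square root of the number of features, rounded down (assumption)."""
    return max(1, math.isqrt(n_features))

def majority_vote(tree_predictions):
    """Aggregate the per-tree class predictions for one sample."""
    return Counter(tree_predictions).most_common(1)[0][0]

rng = random.Random(0)
in_bag, oob = bootstrap_split(10_000, rng)
print(len(set(in_bag)) / 10_000)  # close to 0.632
print(default_mtry(5), default_mtry(288))  # 2 16
```

With the five selected features, this rule gives mtry = 2; with the full 288-feature set it would give 16.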

2.3.6. Accuracy Assessment

The accuracy of the IO-Growth method in extracting the spatial distributions of crops was assessed from two perspectives: classification accuracy and identification rate. The overall accuracy (OA), producer's accuracy (PA), user's accuracy (UA), and kappa coefficient (KA) were used to evaluate classification accuracy [61,62,63], and precision, recall, and F1 score were used to evaluate the identification rate [64]. The formulas for these indicators are shown in Table 4:

Table 4.

The meaning of the indices used to assess accuracy. Note that the parameters used in calculation are explained.
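All of the indicators listed in Table 4 can be derived from a confusion matrix. This sketch assumes that rows are reference classes and columns are predicted classes, and it macro-averages precision, recall, and F1 over the classes. The averaging scheme the authors used is not stated, so that choice is an assumption, and the matrix in the usage example is hypothetical.

```python
def accuracy_metrics(cm):
    """cm[i][j] = number of reference-class-i samples predicted as class j."""
    k = len(cm)
    total = sum(sum(row) for row in cm)
    diag = sum(cm[i][i] for i in range(k))
    row_tot = [sum(cm[i]) for i in range(k)]                      # reference totals
    col_tot = [sum(cm[i][j] for i in range(k)) for j in range(k)]  # predicted totals
    oa = diag / total
    # Expected chance agreement for Cohen's kappa.
    pe = sum(row_tot[i] * col_tot[i] for i in range(k)) / total ** 2
    kappa = (oa - pe) / (1 - pe)
    pa = [cm[i][i] / row_tot[i] for i in range(k)]  # producer's accuracy (= recall)
    ua = [cm[i][i] / col_tot[i] for i in range(k)]  # user's accuracy (= precision)
    f1 = [2 * p * r / (p + r) if p + r else 0.0 for p, r in zip(ua, pa)]
    return {"OA": oa, "KA": kappa, "PA": pa, "UA": ua,
            "precision": sum(ua) / k, "recall": sum(pa) / k, "F1": sum(f1) / k}

# Hypothetical 3-class confusion matrix.
cm = [[50, 2, 3],
      [5, 40, 5],
      [0, 5, 45]]
m = accuracy_metrics(cm)
print(m["OA"], m["KA"])
```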

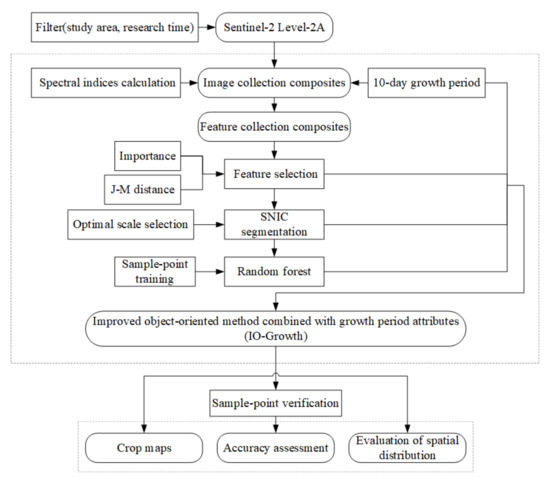

The overall methodological workflow is shown in Figure 3.

Figure 3.

Methodological workflow employed in the current study.

3. Results

3.1. Feature Optimization

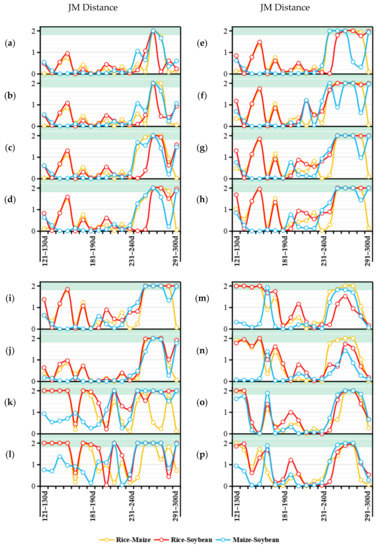

3.1.1. JM Distance Screening among Features

The JM distance was used to select, from the feature set, the feature wavebands that met the separability requirement: for each of the 288 features in the original feature set, we calculated the JM distances between classes. As shown in Figure 4, the features with JM distances greater than 1.90 among the three crops were concentrated between the 251st and 280th days, i.e., from mid-September to early October, whereas between May and August the JM distances between maize and soybean were low. The features with JM distances greater than 1.90 between all land-cover categories were only B7, B8, B8A, and B12 from the 251st to 260th days (251–260d); B1, B2, B3, B4, B5, B6, B7, B8, B8A, B9, B12, and NDVI from the 261st to 270th days (261–270d); and B6, B7, B8, B8A, B9, NDVI, and NDWI from the 271st to 280th days (271–280d). That is, of the 288 features in the feature set, only 23 met the separability requirement.

Figure 4.

JM distances between crops in the following features: (a) B1, (b) B2, (c) B3, (d) B4, (e) B5, (f) B6, (g) B7, (h) B8, (i) B8A, (j) B9, (k) B11, (l) B12, (m) EWI, (n) MNDWI, (o) NDVI, and (p) NDWI.

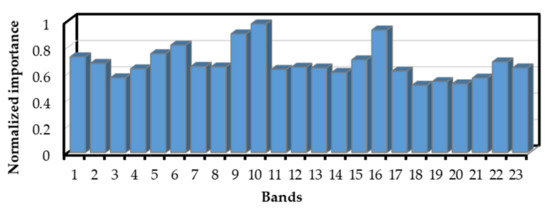

3.1.2. Feature Importance Assessment

Features with higher importance contribute more to classification accuracy. To further reduce the 23 features that satisfied the separability requirement and thereby improve computational efficiency, normalized importance was used as the evaluation indicator, and the five most important features were selected as the classification features of this study. These five features were B5, NDVI, B4, B12, and B1 of 261–270d, i.e., RE1, NDVI, Red, SWIR2, and Aerosols from September 17 to September 28, as shown in Figure 5.

Figure 5.

The normalized importance assessment of the crop classification features selected that satisfied the separability requirement. 1 = 251–260d:B12; 2 = 251–260d:B7; 3 = 251–260d:B8; 4 = 251–260d:B8A; 5 = 261–270d:B1; 6 = 261–270d:B12; 7 = 261–270d:B2; 8 = 261–270d:B3; 9 = 261–270d:B4; 10 = 261–270d:B5; 11 = 261–270d:B6; 12 = 261–270d:B7; 13 = 261–270d:B8; 14 = 261–270d:B8A; 15 = 261–270d:B9; 16 = 261–270d:NDVI; 17 = 271–280d:B6; 18 = 271–280d:B7; 19 = 271–280d:B8; 20 = 271–280d:B8A; 21 = 271–280d:B9; 22 = 271–280d:NDVI; 23 = 271–280d:NDWI.
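Selecting the top-ranked features by normalized importance can be sketched as follows. Normalizing by the sum of importances is an assumption (normalizing by the maximum gives the same ranking), and the feature names and values are hypothetical. In GEE, raw random-forest importances can be read from the output of the trained classifier's `explain()` method.

```python
def top_features(importance, k=5):
    """Normalize raw importances to sum to 1 and return the k largest as (name, share)."""
    total = sum(importance.values())
    normalized = {name: value / total for name, value in importance.items()}
    return sorted(normalized.items(), key=lambda item: item[1], reverse=True)[:k]

# Hypothetical raw importances for six candidate features.
raw = {"f1": 12.0, "f2": 3.0, "f3": 30.0, "f4": 9.0, "f5": 21.0, "f6": 25.0}
print([name for name, _ in top_features(raw, k=5)])  # ['f3', 'f6', 'f5', 'f1', 'f4']
```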

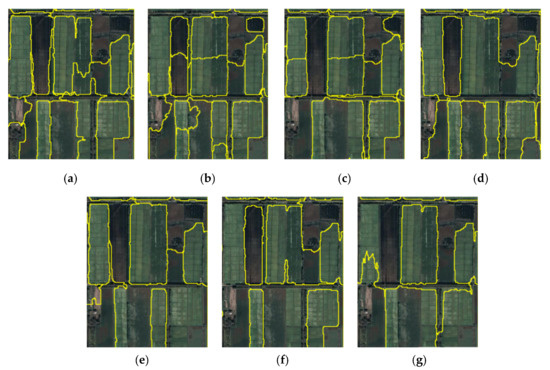

3.2. Assessment of the Optimal Segmentation Scale

The segmentation scale in the SNIC algorithm was varied from 2 to 100. The LV of the segmentation results for the study area was calculated at each scale to obtain the ROC curve, whose main peaks occurred at scales of 52, 55, 61, 68, 77, 87, and 93; these were taken as the set of candidate optimal segmentation scales.

Each of these peak values was then used as the segmentation scale to segment the study area. Because the study area is too large to evaluate the overall segmentation visually, we zoomed in on a small part of it to compare the segmentation at different scales. As shown in Figure 6, oversegmentation occurred at scales smaller than 68, and undersegmentation appeared at scales greater than 68. Therefore, 68 was taken as the global optimal segmentation scale.

Figure 6.

Results of segmentation under different scales: (a) scale = 52, (b) scale = 55, (c) scale = 61, (d) scale = 68, (e) scale = 77, (f) scale = 87, (g) scale = 93.

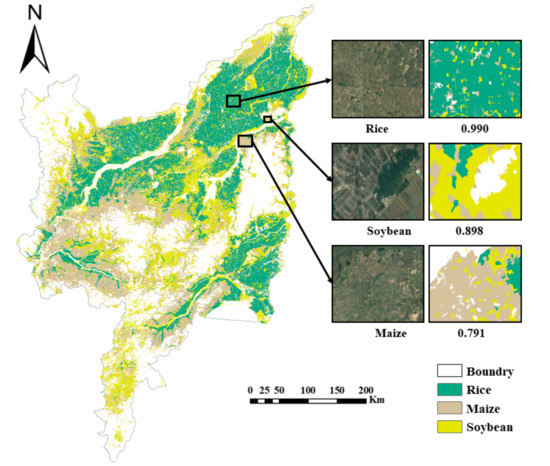

3.3. Object-Oriented Mapping of Crops in the Sanjiang Plain

The optimized feature set, i.e., B1, B4, B5, B12, and NDVI for 261–270d, was used for object-oriented classification based on SNIC segmentation, yielding the spatial distributions of rice, maize, and soybean in the Sanjiang Plain in 2019.

As shown in Figure 7, maize, soybean, and rice were all widely planted in the Sanjiang Plain, but their spatial distributions differed. Rice covered the largest area and formed concentrated patches, mainly in the northern and eastern parts of the plain; maize fields were mostly strip-shaped and were mainly distributed south of the central part of the plain; and soybean fields were mostly block-shaped and distributed relatively evenly across the plain.

Figure 7.

Classification map of the three crops, with enlarged details.

3.4. Comparison of the Accuracy Assessment

To determine whether the IO-Growth method extracted crops better than object-oriented classification that did not incorporate growth stages, the 30% of sample points reserved for testing were used to evaluate the classification results of both methods. Because the distribution and selection of testing points affect the accuracy measures, a fixed random seed was used when randomly selecting the testing points. The global confusion matrix was then computed together with the OA, KA, precision, recall, and F1 score, as well as the PA and UA of rice, maize, and soybean.

As shown in Table 5, in the classification results obtained by the method that did not combine the growth stages, the PA values of rice, maize, and soybean were 0.89, 0.68, and 0.72, respectively, and the UA values were 0.90, 0.71, and 0.76, respectively, while in the classification results obtained by the IO-Growth method, the PA values of the three crops were 0.99, 0.79, and 0.90, respectively, and the UA values were 0.92, 0.93, and 0.83, respectively.

Table 5.

Accuracy assessment of crops classification for IO-Growth method and the method that did not combine growth stages. Note that numbers of methods and the abbreviations are explained.

For rice, the two methods performed similarly in UA (0.90 vs. 0.92), although the IO-Growth method had a higher PA (0.99 vs. 0.89); for maize and soybean, both PA and UA were higher with the IO-Growth method. Moreover, the IO-Growth method achieved an OA of 0.92 and a KA of 0.91, both higher than those of the method that did not incorporate growth stages, and its precision, recall, and F1 scores were also higher.

4. Discussion

4.1. Evaluation of the Features Selected

Combining Figures 4 and 5, we found that the features meeting the separability requirement came from the 251st to 280th days, that is, mid-September to early October. This is the maturity period of the crops [39], which suggests that the differences in spectral features among the crops peak at maturity. Among the features meeting the separability requirement, the five most important were RE1, NDVI, Red, SWIR2, and Aerosols, all from the growth stage of September 17 to September 28; within this 10-day stage, the spectral differences among the three crops were the most significant and the easiest to exploit [65]. This indicates that, in addition to the conventional wavebands (visible light and near infrared (NIR)) [66], the RE1 band at 703.9 nm (S2A)/703.8 nm (S2B) and the SWIR2 band at 2202.4 nm (S2A)/2185.7 nm (S2B) were important for crop classification [60]. The red edge lies between the Red and NIR wavelengths, at approximately 670–780 nm, a range in which the reflectance of green vegetation rises rapidly; it is a vegetation-sensitive spectral region closely related to the pigment status and the physical and chemical properties of crops and other vegetation. Some studies have also shown that the red edge can enhance the separability of different vegetation types and play an important role in increasing the accuracy of crop classification from remote sensing [67]. In this study, Red Edge1 was more effective at capturing the physiological characteristics of vegetation than the other two red-edge bands.

In addition, SWIR is mainly used to characterize water or water-related properties because it is sensitive to vegetation and soil moisture content, so it can be used for vegetation classification and surface water extraction [68]; narrow SWIR bands can even be used to identify mangroves with different leaf and canopy shapes according to canopy water content [69]. The results of this study showed that Sentinel-2 SWIR2 contributed more to classification than SWIR1.

To quantify the contributions of RE1 and SWIR2 to crop extraction accuracy, we carried out comparative experiments in which each band was removed from the Sentinel-2 feature set. As Table 6 shows, removing SWIR2 caused a greater loss in OA than removing RE1 [70]. Moreover, for rice and soybean, including SWIR2 improved extraction accuracy more than including RE1, whereas for maize, including RE1 improved accuracy more. In other words, for rice and soybean, water-related information contributes more to classification than growth-status information, while the opposite is true for maize. This may be related to the different physiological structures of the crops; for example, a crop's canopy size and leaf water content may make some monitoring factors more informative for it than others.

Table 6.

Accuracy assessment of crop classification for the IO-Growth method with participation of different features. Note that numbers of tests are explained.
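The band-removal experiment is a form of leave-one-feature-out ablation. The sketch below assumes a hypothetical `evaluate` callback that would train and test a classifier on a list of features and return OA. The toy evaluator and the feature names in the usage example are invented purely to exercise the function and do not represent this study's results.

```python
def leave_one_out_ablation(features, evaluate):
    """Return {feature: OA drop when that feature is removed}.

    evaluate(feature_list) -> overall accuracy is a hypothetical callback
    that would train and test a classifier on the given features.
    """
    baseline = evaluate(features)
    return {f: baseline - evaluate([g for g in features if g != f]) for f in features}

# Toy evaluator: OA grows with the (invented) weight of each included feature.
TOY_WEIGHTS = {"f_a": 0.02, "f_b": 0.04, "f_c": 0.10}

def toy_evaluate(feats):
    return 0.70 + sum(TOY_WEIGHTS[f] for f in feats)

drops = leave_one_out_ablation(list(TOY_WEIGHTS), toy_evaluate)
print(max(drops, key=drops.get))  # f_c
```

The feature whose removal causes the largest OA drop is the one the classifier relies on most, which is how the RE1 and SWIR2 comparison in Table 6 can be read.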

In summary, the sensitivity of the red edge to vegetation growth status and the sensitivity of SWIR to vegetation and soil water content meant that the combination of the two could produce a satisfactory classification accuracy.

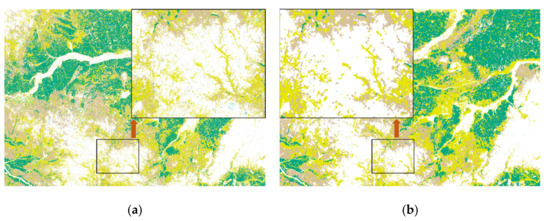

4.2. The Advantages of the Method

With its rich remote-sensing database, large cloud storage, high-speed computing capability, and numerous integrated algorithms, the GEE platform offers a convenient and efficient environment for remote-sensing research. Because data access and computation can both be completed online on GEE [71], there is no need to download large amounts of data for local computation [41], which removes the need for a high-performance local computer with large memory; results computed in the cloud can be saved to cloud storage and retrieved by logging into the cloud account. All work in this study was completed on the GEE platform, so the data processing time for extracting the spatial distribution of crops over a large area was greatly shortened [72]. However, because GEE's computing resources are limited, its cloud computing capacity was exceeded when processing was complex and involved large amounts of data; in such cases, the work had to be divided into smaller computations.
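One simple way to divide a large computation into smaller ones is to split the region of interest into tiles and process each tile separately. The sketch is hypothetical: the bounding box, tile counts, and per-tile job are illustrative and do not represent the study's actual partitioning.

```python
def split_bbox(west, south, east, north, nx, ny):
    """Split a bounding box into nx * ny equal tiles, row by row from the south-west."""
    dx = (east - west) / nx
    dy = (north - south) / ny
    return [(west + i * dx, south + j * dy, west + (i + 1) * dx, south + (j + 1) * dy)
            for j in range(ny) for i in range(nx)]

def process_in_tiles(bbox, nx, ny, process_tile):
    """Run a hypothetical per-tile job and collect the partial results."""
    return [process_tile(tile) for tile in split_bbox(*bbox, nx, ny)]

tiles = split_bbox(0.0, 0.0, 4.0, 2.0, nx=2, ny=2)
print(tiles[0])  # (0.0, 0.0, 2.0, 1.0)
```

The partial results (for example, per-tile classification maps) would then be merged, at the cost of handling segments that straddle tile boundaries.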

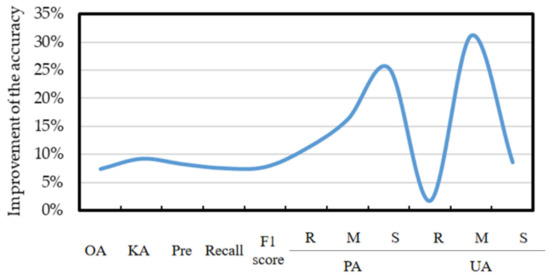

A comparison of object-oriented classification based on SNIC superpixel segmentation with pixel-based classification showed that the object-oriented approach avoided salt-and-pepper noise in the mapped spatial distribution of crops and performed better in areas with large fields. As Figure 8 clearly shows, the pixel-based method produced many small, fragmented patches, which makes it unsuitable for the flat, broad northeastern region. In addition, our study divided the crop growth period into eighteen 10-day growth stages and refined the effective time intervals of the conventional wavebands. This allowed us to make full use of the growth stages that contributed most to crop classification accuracy while filtering out the stages that contributed little, thereby simplifying the time series. Only five features were selected for classification, which greatly increased the utilization rate of the wavebands, reduced redundancy in the feature set fed to the classifier, and lowered the subsequent computational cost. Compared with the method that neither divided the growth stages nor refined the effective time intervals, all of the accuracy indicators improved to some extent (Figure 9).
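The growth-stage feature space described above can be sketched as follows. Eighteen 10-day stages and 288 candidate features imply 16 features per stage. The sketch assumes a hypothetical season start date, uses placeholder feature names, and ranks features by an importance score supplied by the caller (for example, a random forest importance); it does not reproduce the paper's actual screening criterion, and all function names are hypothetical.

```python
N_STAGES = 18      # eighteen ten-day growth stages
STAGE_LENGTH = 10  # days per stage


def stage_index(doy: int, season_start_doy: int) -> int:
    """Return the 0-based ten-day stage that contains day-of-year `doy`."""
    offset = doy - season_start_doy
    if not 0 <= offset < N_STAGES * STAGE_LENGTH:
        raise ValueError("day of year lies outside the growing season")
    return offset // STAGE_LENGTH


def candidate_features(per_stage_names):
    """Name every (feature, stage) pair, e.g. 'NDVI_s9'.

    With 16 features per stage this yields 18 * 16 = 288 candidates.
    """
    return [f"{name}_s{s}" for s in range(N_STAGES) for name in per_stage_names]


def select_top_k(importances: dict, k: int) -> list:
    """Keep the k candidate features with the highest importance scores."""
    ranked = sorted(importances, key=importances.get, reverse=True)
    return ranked[:k]
```

With a hypothetical season start of day 121, `stage_index(261, 121)` and `stage_index(270, 121)` both return 14, so days 261–270 would form the 15th ten-day stage; selecting the top five of the 288 scored candidates mirrors the reduction reported in this study.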

Figure 8.

Classification results of the IO-Growth model using (a) the pixel-based method and (b) the object-oriented method.
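Why object-oriented classification suppresses salt-and-pepper noise can be illustrated by averaging pixel features within each segment before classification: every pixel of an object then receives the same feature vector and therefore the same label, so isolated misclassified pixels cannot occur inside an object. The sketch below is a minimal pure-Python version for a single band; the function `object_mean` is hypothetical. On GEE, the segment labels would come from SNIC (`ee.Algorithms.Image.Segmentation.SNIC`), which can also output per-segment band means.

```python
from collections import defaultdict


def object_mean(values, segments):
    """Replace each pixel value by the mean value of its segment (object).

    `values` and `segments` are equally sized 2-D lists; `segments` holds
    the integer object label of each pixel, e.g. from a SNIC superpixel
    segmentation.
    """
    if len(values) != len(segments) or any(
        len(a) != len(b) for a, b in zip(values, segments)
    ):
        raise ValueError("values and segments must have the same shape")
    sums = defaultdict(float)
    counts = defaultdict(int)
    for row_v, row_s in zip(values, segments):
        for v, s in zip(row_v, row_s):
            sums[s] += v
            counts[s] += 1
    means = {s: sums[s] / counts[s] for s in sums}
    return [[means[s] for s in row_s] for row_s in segments]


# A noisy pixel (0.2) inside object 1 is absorbed into the object mean.
print(object_mean([[0.8, 0.8, 0.1], [0.8, 0.2, 0.1]], [[1, 1, 2], [1, 1, 2]]))
```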

Figure 9.

Accuracy improvement of the proposed method, calculated from Table 5.

4.3. Uncertainty and Future Work

The IO-Growth method is intended to use growth stages to select a small number of features for classification while still obtaining relatively high accuracy, thereby overcoming the excessive data volume of the more common time-series method. Although its accuracy is much higher than that of past classifications based on spectral features alone, the method is not entirely stable: because the training and testing samples are selected randomly, the accuracy of the classification results fluctuates slightly. As shown in Table 7, the method occasionally achieved slightly lower accuracy than the time-series method. Moreover, whereas the time-series method exploits feature changes over the whole season, the IO-Growth method relies on the distinctiveness of certain specific growth stages. An improved feature screening method might identify more representative growth stages and thus achieve higher accuracy.
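The fluctuation caused by random sample splits can be quantified by repeating the split several times and reporting the mean and standard deviation of the accuracy metrics. The sketch below computes OA and an F1 score from a confusion matrix and summarizes OA over repeated runs. Treating the F1 score as a macro average over crop classes is an assumption, and the helper names are hypothetical.

```python
from statistics import mean, stdev


def overall_accuracy(cm):
    """OA = correctly classified samples / all samples.

    cm[i][j] counts samples of reference class i predicted as class j.
    """
    total = sum(sum(row) for row in cm)
    if total == 0:
        raise ValueError("empty confusion matrix")
    correct = sum(cm[i][i] for i in range(len(cm)))
    return correct / total


def macro_f1(cm):
    """Unweighted mean over classes of F1 = 2TP / (2TP + FP + FN)."""
    n = len(cm)
    scores = []
    for k in range(n):
        tp = cm[k][k]
        fn = sum(cm[k]) - tp                       # class k predicted as others
        fp = sum(cm[i][k] for i in range(n)) - tp  # others predicted as class k
        denom = 2 * tp + fp + fn
        scores.append(2 * tp / denom if denom else 0.0)
    return sum(scores) / n


def summarize_runs(oas):
    """Mean and sample standard deviation of OA over repeated random splits."""
    return mean(oas), stdev(oas)
```

For a two-class matrix `[[50, 10], [5, 35]]`, `overall_accuracy` gives 0.85 and `macro_f1` about 0.847; reporting `summarize_runs` over several splits makes the run-to-run variation explicit.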

Table 7.

Accuracy assessment of crop classification by the IO-Growth method and the time-series method. Method numbers are explained in the table.

In addition, multispectral data offer far fewer spectral wavebands to choose from than hyperspectral data. The 12 original wavebands of Sentinel-2 may not all be well suited to crop classification, and research using hyperspectral data may help to address this problem.

Regarding generalization, it is still uncertain whether the IO-Growth method can achieve satisfactory accuracy in areas with complex topography and a wide variety of crops. The study area in this paper is a plain with flat, broad terrain, where there are few main crop types and most are planted in large contiguous fields. In areas such as the mountainous southwest, crops are distributed in a more scattered manner; thus, although the model performed well in the study area, its performance in such areas is still unknown. Applying the IO-Growth method to the complex terrain of the southwestern mountains will be the focus of our future research.

5. Conclusions

Based on the GEE platform, we used the growth stage attributes of crops to extract the spatial distribution of crops by object-oriented classification, and compared the accuracy of the IO-Growth method with that of an object-oriented classification that did not incorporate growth stages. The following conclusions were drawn:

- (1) The popularization of spatial data processing cloud platforms such as GEE can greatly shorten the processing time for spatial data and lighten the workload. In the future, more programming languages and integrated algorithms may be introduced to improve the computing performance of existing spatial data processing cloud platforms, so that online research on spatial data may become mainstream.

- (2) Based on object-oriented classification, the IO-Growth method integrated growth stages as an independent attribute to refine the most effective parts of the original wavebands, maximizing the utilization rate of the 12 simple, conventional wavebands. After optimization, only five features (RE1, NDVI, Red, SWIR2, and Aerosols) from the growth stage of days 261–270 were used, which reduced data redundancy and computational cost.

- (3) The test results show that the IO-Growth method has good applicability in large-scale areas, with an OA exceeding 90%. Both the OA and the F1 score obtained by this method were 6.98% higher than those of the method that did not incorporate growth stages.
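The 6.98% gain can be read either as a relative increase or as a difference in percentage points. Taking OA = 0.92 for the IO-Growth method, as reported in the Abstract, the two readings imply slightly different baseline accuracies for the method without growth stages:

$$
\text{relative: } \mathrm{OA}_{\text{base}} = \frac{0.92}{1 + 0.0698} \approx 0.860,
\qquad
\text{absolute: } \mathrm{OA}_{\text{base}} = 0.92 - 0.0698 \approx 0.850.
$$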

Author Contributions

Conceptualization, M.L.; methodology, M.L.; software, M.L.; validation, M.L., R.Z. and H.L.; formal analysis, M.L.; investigation, M.L.; resources, S.G.; data curation, Z.Q.; writing—original draft preparation, M.L.; writing—review and editing, M.L.; visualization, M.L.; supervision, R.Z.; project administration, H.L.; funding acquisition, H.L. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Project of Innovative Talents Training in Primary and Secondary Schools in Chongqing: The Eagle Project of Chongqing Education Commission, grant number CY210230.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data presented in this study are available on request from the corresponding author.

Acknowledgments

We thank the Google Earth Engine platform for providing us with a free computing platform and the European Space Agency for providing us with free data. We also thank anonymous reviewers for their insightful advice.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Seleiman, M.F.; Selim, S.; Alhammad, B.A.; Alharbi, B.M.; Juliatti, F.C. Will novel coronavirus (COVID-19) pandemic impact agriculture, food security and animal sectors? Biosci. J. 2020, 36, 1315–1326.

- Pan, L.; Xia, H.M.; Zhao, X.Y.; Guo, Y.; Qin, Y.C. Mapping Winter Crops Using a Phenology Algorithm, Time-Series Sentinel-2 and Landsat-7/8 Images, and Google Earth Engine. Remote Sens. 2021, 13, 2510.

- Son, N.T.; Chen, C.F.; Chen, C.R.; Toscano, P.; Cheng, Y.S.; Guo, H.Y.; Syu, C.H. A phenological object-based approach for rice crop classification using time-series Sentinel-1 Synthetic Aperture Radar (SAR) data in Taiwan. Int. J. Remote Sens. 2021, 42, 2722–2739.

- Șerban, R.D.; Șerban, M.; He, R.X.; Jin, H.J.; Li, Y.; Li, X.Y.; Wang, X.B.; Li, G.Y. 46-Year (1973–2019) Permafrost Landscape Changes in the Hola Basin, Northeast China Using Machine Learning and Object-Oriented Classification. Remote Sens. 2021, 13, 1910.

- Zhang, R.; Tang, Z.Z.; Luo, D.; Luo, H.X.; You, S.C.; Zhang, T. Combined Multi-Time Series SAR Imagery and InSAR Technology for Rice Identification in Cloudy Regions. Appl. Sci. 2021, 11, 6923.

- Zhang, X.; Chan, N.W.; Pan, B.; Ge, X.; Yang, H. Mapping flood by the object-based method using backscattering coefficient and interference coherence of Sentinel-1 time series. Sci. Total Environ. 2021, 794, 148388.

- Jayakumari, R.; Nidamanuri, R.R.; Ramiya, A.M. Object-level classification of vegetable crops in 3D LiDAR point cloud using deep learning convolutional neural networks. Precis. Agric. 2021, 22, 1617–1633.

- Nyamjargal, E.; Amarsaikhan, D.; Munkh-Erdene, A.; Battsengel, V.; Bolorchuluun, C. Object-based classification of mixed forest types in Mongolia. Geocarto Int. 2019, 35, 1615–1626.

- Oreti, L.; Giuliarelli, D.; Tomao, A.; Barbati, A. Object Oriented Classification for Mapping Mixed and Pure Forest Stands Using Very-High Resolution Imagery. Remote Sens. 2021, 13, 2508.

- Tian, Y.Q.; Yang, C.H.; Huang, W.J.; Tang, J.; Li, X.R.; Zhang, Q. Machine learning-based crop recognition from aerial remote sensing imagery. Front. Earth Sci. 2021, 15, 54–69.

- Teluguntla, P.; Thenkabail, P.S.; Oliphant, A.; Xiong, J.; Gumma, M.K.; Congalton, R.G.; Yadav, K.; Huete, A. A 30-m landsat-derived cropland extent product of Australia and China using random forest machine learning algorithm on Google Earth Engine cloud computing platform. ISPRS J. Photogramm. Remote Sens. 2018, 144, 325–340.

- Thorp, K.R.; Drajat, D. Deep machine learning with Sentinel satellite data to map paddy rice production stages across West Java, Indonesia. Remote Sens. Environ. 2021, 265, 112679.

- Tassi, A.; Vizzari, M. Object-Oriented LULC Classification in Google Earth Engine Combining SNIC, GLCM, and Machine Learning Algorithms. Remote Sens. 2020, 12, 3776.

- Prins, A.J.; Van Niekerk, A. Crop type mapping using LiDAR, Sentinel-2 and aerial imagery with machine learning algorithms. Geo-Spat. Inf. Sci. 2020, 24, 215–227.

- Schulz, C.; Holtgrave, A.-K.; Kleinschmit, B. Large-scale winter catch crop monitoring with Sentinel-2 time series and machine learning–An alternative to on-site controls? Comput. Electron. Agric. 2021, 186, 106173.

- Tamiminia, H.; Salehi, B.; Mahdianpari, M.; Quackenbush, L.; Adeli, S.; Brisco, B. Google Earth Engine for geo-big data applications: A meta-analysis and systematic review. ISPRS J. Photogramm. Remote Sens. 2020, 164, 152–170.

- Chen, S.J.; Woodcock, C.E.; Bullock, E.L.; Arévalo, P.; Torchinava, P.; Peng, S.; Olofsson, P. Monitoring temperate forest degradation on Google Earth Engine using Landsat time series analysis. Remote Sens. Environ. 2021, 265, 112648.

- d’Andrimont, R.; Verhegghen, A.; Lemoine, G.; Kempeneers, P.; Meroni, M.; van der Velde, M. From parcel to continental scale—A first European crop type map based on Sentinel-1 and LUCAS Copernicus in-situ observations. Remote Sens. Environ. 2021, 266, 112708.

- Chen, Q.; Li, X.S.; Xiu, X.M.; Yang, G.B. Large scale shrub coverage mapping of sandy land at 30m resolution based on Google Earth Engine and machine learning. Acta Ecol. Sin. 2019, 39, 4056–4069.

- Fu, D.J.; Xiao, H.; Su, F.Z.; Zhou, C.H.; Dong, J.W.; Zeng, Y.L.; Yan, K.; Li, S.W.; Wu, J.; Wu, W.Z.; et al. Remote sensing cloud computing platform development and Earth science application. Natl. Remote Sens. Bull. 2021, 25, 220–230.

- Zhang, J. Multi-source remote sensing data fusion: Status and trends. Int. J. Image Data Fusion 2010, 1, 5–24.

- Joshi, N.; Baumann, M.; Ehammer, A.; Fensholt, R.; Grogan, K.; Hostert, P.; Jepsen, M.; Kuemmerle, T.; Meyfroidt, P.; Mitchard, E.; et al. A Review of the Application of Optical and Radar Remote Sensing Data Fusion to Land Use Mapping and Monitoring. Remote Sens. 2016, 8, 70.

- Tan, S.; Wu, B.F.; Zhang, X. Mapping paddy rice in the Hainan Province using both Google Earth Engine and remote sensing images. J. Geo-Inf. Sci. 2019, 21, 937–947.

- Li, F.J.; Ren, J.Q.; Wu, S.R.; Zhao, H.W.; Zhang, N.D. Comparison of Regional Winter Wheat Mapping Results from Different Similarity Measurement Indicators of NDVI Time Series and Their Optimized Thresholds. Remote Sens. 2021, 13, 1162.

- Blaes, X.; Vanhalle, L.; Defourny, P. Efficiency of crop identification based on optical and SAR image time series. Remote Sens. Environ. 2005, 96, 352–365.

- Bagan, H.; Millington, A.; Takeuchi, W.; Yamagata, Y. Spatiotemporal analysis of deforestation in the Chapare region of Bolivia using LANDSAT images. Land Degrad. Dev. 2020, 31, 3024–3039.

- Martini, M.; Mazzia, V.; Khaliq, A.; Chiaberge, M. Domain-Adversarial Training of Self-Attention-Based Networks for Land Cover Classification Using Multi-Temporal Sentinel-2 Satellite Imagery. Remote Sens. 2021, 13, 2564.

- Xie, Q.H.; Lai, K.Y.; Wang, J.F.; Lopez-Sanchez, J.M.; Shang, J.L.; Liao, C.H.; Zhu, J.J.; Fu, H.Q.; Peng, X. Crop Monitoring and Classification Using Polarimetric RADARSAT-2 Time-Series Data Across Growing Season: A Case Study in Southwestern Ontario, Canada. Remote Sens. 2021, 13, 1394.

- Potapov, P.V.; Turubanova, S.A.; Hansen, M.C.; Adusei, B.; Broich, M.; Altstatt, A.; Mane, L.; Justice, C.O. Quantifying forest cover loss in Democratic Republic of the Congo, 2000–2010, with Landsat ETM+ data. Remote Sens. Environ. 2012, 122, 106–116.

- Zhang, R.; Tang, X.M.; You, S.C.; Duan, K.F.; Xiang, H.Y.; Luo, H.X. A Novel Feature-Level Fusion Framework Using Optical and SAR Remote Sensing Images for Land Use/Land Cover (LULC) Classification in Cloudy Mountainous Area. Appl. Sci. 2020, 10, 2928.

- Moon, M.; Richardson, A.D.; Friedl, M.A. Multiscale assessment of land surface phenology from harmonized Landsat 8 and Sentinel-2, PlanetScope, and PhenoCam imagery. Remote Sens. Environ. 2021, 266, 112716.

- Luo, C.; Liu, H.J.; Lu, L.P.; Liu, Z.; Kong, F.C.; Zhang, X.L. Monthly composites from Sentinel-1 and Sentinel-2 images for regional major crop mapping with Google Earth Engine. J. Integr. Agric. 2021, 20, 1944–1957.

- Cordeiro, M.C.R.; Martinez, J.M.; Peña-Luque, S. Automatic water detection from multidimensional hierarchical clustering for Sentinel-2 images and a comparison with Level 2A processors. Remote Sens. Environ. 2021, 253, 112209.

- Yan, S.; Yao, X.C.; Zhu, D.H.; Liu, D.Y.; Zhang, L.; Yu, G.J.; Gao, B.B.; Yang, J.Y.; Yun, W.J. Large-scale crop mapping from multi-source optical satellite imageries using machine learning with discrete grids. Int. J. Appl. Earth Obs. Geoinf. 2021, 103, 102485.

- Zeng, H.W.; Wu, B.F.; Wang, S.; Musakwa, W.; Tian, F.Y.; Mashimbye, Z.E.; Poona, N.; Syndey, M. A Synthesizing Land-cover Classification Method Based on Google Earth Engine: A Case Study in Nzhelele and Levhuvu Catchments, South Africa. Chin. Geogr. Sci. 2020, 30, 397–409.

- You, N.S.; Dong, J.W.; Huang, J.X.; Du, G.M.; Zhang, G.L.; He, Y.L.; Yang, T.; Di, Y.Y.; Xiao, X.M. The 10-m crop type maps in Northeast China during 2017–2019. Sci. Data 2021, 8, 41.

- Olofsson, P.; Foody, G.M.; Herold, M.; Stehman, S.V.; Woodcock, C.E.; Wulder, M.A. Good practices for estimating area and assessing accuracy of land change. Remote Sens. Environ. 2014, 148, 42–57.

- Laliberte, A.S.; Browning, D.M.; Rango, A. A comparison of three feature selection methods for object-based classification of sub-decimeter resolution UltraCam-L imagery. Int. J. Appl. Earth Obs. Geoinf. 2012, 15, 70–78.

- Luo, C.; Qi, B.S.; Liu, H.J.; Guo, D.; Lu, L.P.; Fu, Q.; Shao, Y.Q. Using Time Series Sentinel-1 Images for Object-Oriented Crop Classification in Google Earth Engine. Remote Sens. 2021, 13, 561.

- Nussbaum, S.; Niemeyer, I.; Canty, M.J. SEaTH-A New Tool for Automated Feature Extraction in the Context of Object-based Image Analysis for Remote Sensing. In Proceedings of the 1st International Conference on Object-Based Image Analysis, Salzburg, Austria, 4–5 July 2006.

- Liu, L.; Xiao, X.M.; Qin, Y.W.; Wang, J.; Xu, X.L.; Hu, Y.M.; Qiao, Z. Mapping cropping intensity in China using time series Landsat and Sentinel-2 images and Google Earth Engine. Remote Sens. Environ. 2020, 239, 111624.

- Hui, J.W.; Bai, Z.K.; Ye, B.Y.; Wang, Z.H. Remote Sensing Monitoring and Evaluation of Vegetation Restoration in Grassland Mining Areas—A Case Study of the Shengli Mining Area in Xilinhot City, China. Land 2021, 10, 743.

- Tornos, L.; Huesca, M.; Dominguez, J.A.; Moyano, M.C.; Cicuendez, V.; Recuero, L.; Palacios-Orueta, A. Assessment of MODIS spectral indices for determining rice paddy agricultural practices and hydroperiod. ISPRS J. Photogramm. Remote Sens. 2015, 101, 110–124.

- McFeeters, S.K. The use of the Normalized Difference Water Index (NDWI) in the delineation of open water features. Int. J. Remote Sens. 1996, 17, 1425–1432.

- Hmimina, G.; Dufrêne, E.; Pontailler, J.Y.; Delpierre, N.; Aubinet, M.; Caquet, B.; de Grandcourt, A.; Burban, B.; Flechard, C.; Granier, A.; et al. Evaluation of the potential of MODIS satellite data to predict vegetation phenology in different biomes: An investigation using ground-based NDVI measurements. Remote Sens. Environ. 2013, 132, 145–158.

- Xu, H.Q. A Study on Information Extraction of Water Body with the Modified Normalized Difference Water Index (MNDWI). J. Remote Sens. 2005, 9, 589–595.

- Yan, P.; Zhang, Y.J.; Zhang, Y. A Study on Information Extraction of Water System in Semi-arid Regions with the Enhanced Water Index (EWI) and GIS Based Noise Remove Techniques. Remote Sens. Inf. 2007, 6, 62–67.

- Huete, A.; Didan, K.; Miura, T.; Rodriguez, E.P.; Gao, X.; Ferreira, L.G. Overview of the radiometric and biophysical performance of the MODIS vegetation indices. Remote Sens. Environ. 2002, 83, 195–213.

- Li, X.D.; Ling, F.; Foody, G.M.; Boyd, D.S.; Jiang, L.; Zhang, Y.H.; Zhou, P.; Wang, Y.L.; Chen, R.; Du, Y. Monitoring high spatiotemporal water dynamics by fusing MODIS, Landsat, water occurrence data and DEM. Remote Sens. Environ. 2021, 265, 112680.

- Achanta, R.; Susstrunk, S. Superpixels and Polygons Using Simple Non-iterative Clustering. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 4895–4904.

- Yang, L.B.; Wang, L.M.; Abubakar, G.A.; Huang, J.F. High-Resolution Rice Mapping Based on SNIC Segmentation and Multi-Source Remote Sensing Images. Remote Sens. 2021, 13, 1148.

- Achanta, R.; Shaji, A.; Smith, K.; Lucchi, A.; Fua, P.; Susstrunk, S. SLIC superpixels compared to state-of-the-art superpixel methods. IEEE Trans. Pattern Anal. Mach. Intell. 2012, 34, 2274–2282.

- Sun, W.J.; Yang, J. Research on Remote Sensing Image Segmentation Based on Improved Simple Non-Iterative Clustering. Comput. Eng. Appl. 2021, 57, 185–192.

- Zhang, X.L.; Xiao, P.F.; Feng, X.Z. Object-specific optimization of hierarchical multiscale segmentations for high-spatial resolution remote sensing images. ISPRS J. Photogramm. Remote Sens. 2020, 159, 308–321.

- Dao, P.D.; Kiran, M.; He, Y.H.; Qureshi, F.Z. Improving hyperspectral image segmentation by applying inverse noise weighting and outlier removal for optimal scale selection. ISPRS J. Photogramm. Remote Sens. 2021, 171, 348–366.

- Drǎguţ, L.; Tiede, D.; Levick, S.R. ESP: A tool to estimate scale parameter for multiresolution image segmentation of remotely sensed data. Int. J. Geogr. Inf. Sci. 2010, 24, 859–871.

- Tu, Y.; Chen, B.; Zhang, T.; Xu, B. Regional Mapping of Essential Urban Land Use Categories in China: A Segmentation-Based Approach. Remote Sens. 2020, 12, 1058.

- Zhong, Y.F.; Hu, X.; Luo, C.; Wang, X.Y.; Zhao, J.; Zhang, L.P. WHU-Hi: UAV-borne hyperspectral with high spatial resolution (H2) benchmark datasets and classifier for precise crop identification based on deep convolutional neural network with CRF. Remote Sens. Environ. 2020, 250, 112012.

- Breiman, L. Random Forests. Mach. Learn. 2001, 45, 5–32.

- Dobrinić, D.; Gašparović, M.; Medak, D. Sentinel-1 and 2 Time-Series for Vegetation Mapping Using Random Forest Classification: A Case Study of Northern Croatia. Remote Sens. 2021, 13, 2321.

- Story, M.; Congalton, R.G. Accuracy assessment: A user’s perspective. Photogramm. Eng. Remote Sens. 1986, 52, 397–399.

- Congalton, R.G. A Review of Assessing the Accuracy of Classifications of Remotely Sensed Data. Remote Sens. Environ. 1991, 37, 35–46.

- Jiao, X.F.; Kovacs, J.M.; Shang, J.L.; McNairn, H.; Walters, D.; Ma, B.L.; Geng, X.Y. Object-oriented crop mapping and monitoring using multi-temporal polarimetric RADARSAT-2 data. ISPRS J. Photogramm. Remote Sens. 2014, 96, 38–46.

- Zhou, Y.; Zhang, R.; Wang, S.; Wang, F. Feature Selection Method Based on High-Resolution Remote Sensing Images and the Effect of Sensitive Features on Classification Accuracy. Sensors 2018, 18, 2013.

- Beriaux, E.; Jago, A.; Lucau-Danila, C.; Planchon, V.; Defourny, P. Sentinel-1 Time Series for Crop Identification in the Framework of the Future CAP Monitoring. Remote Sens. 2021, 13, 2785.

- Ruiz, L.F.C.; Guasselli, L.A.; Simioni, J.P.D.; Belloli, T.F.; Barros Fernandes, P.C. Object-based classification of vegetation species in a subtropical wetland using Sentinel-1 and Sentinel-2A images. Sci. Remote Sens. 2021, 3, 100017.

- Kang, Y.; Meng, Q.; Liu, M.; Zou, Y.; Wang, X. Crop Classification Based on Red Edge Features Analysis of GF-6 WFV Data. Sensors 2021, 21, 4328.

- Lu, P.; Shi, W.Y.; Wang, Q.M.; Li, Z.B.; Qin, Y.Y.; Fan, X.M. Co-seismic landslide mapping using Sentinel-2 10-m fused NIR narrow, red-edge, and SWIR bands. Landslides 2021, 18, 2017–2037.

- Manna, S.; Raychaudhuri, B. Mapping distribution of Sundarban mangroves using Sentinel-2 data and new spectral metric for detecting their health condition. Geocarto Int. 2018, 35, 434–452.

- Peña-Barragán, J.M.; Ngugi, M.K.; Plant, R.E.; Six, J. Object-based crop identification using multiple vegetation indices, textural features and crop phenology. Remote Sens. Environ. 2011, 115, 1301–1316.

- Dong, J.W.; Kuang, W.H.; Liu, J.Y. Continuous land cover change monitoring in the remote sensing big data era. Sci. China Earth Sci. 2017, 60, 2223–2224.

- He, Z.X.; Zhang, M.; Wu, B.F.; Xing, Q. Extraction of summer crop in Jiangsu based on Google Earth Engine. J. Geo-Inf. Sci. 2019, 21, 752–766.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).