Challenging Environments for Precise Mapping Using GNSS/INS-RTK Systems: Reasons and Analysis

Abstract

1. Introduction

2. Mapping Strategy Using GNSS/INS-RTK System

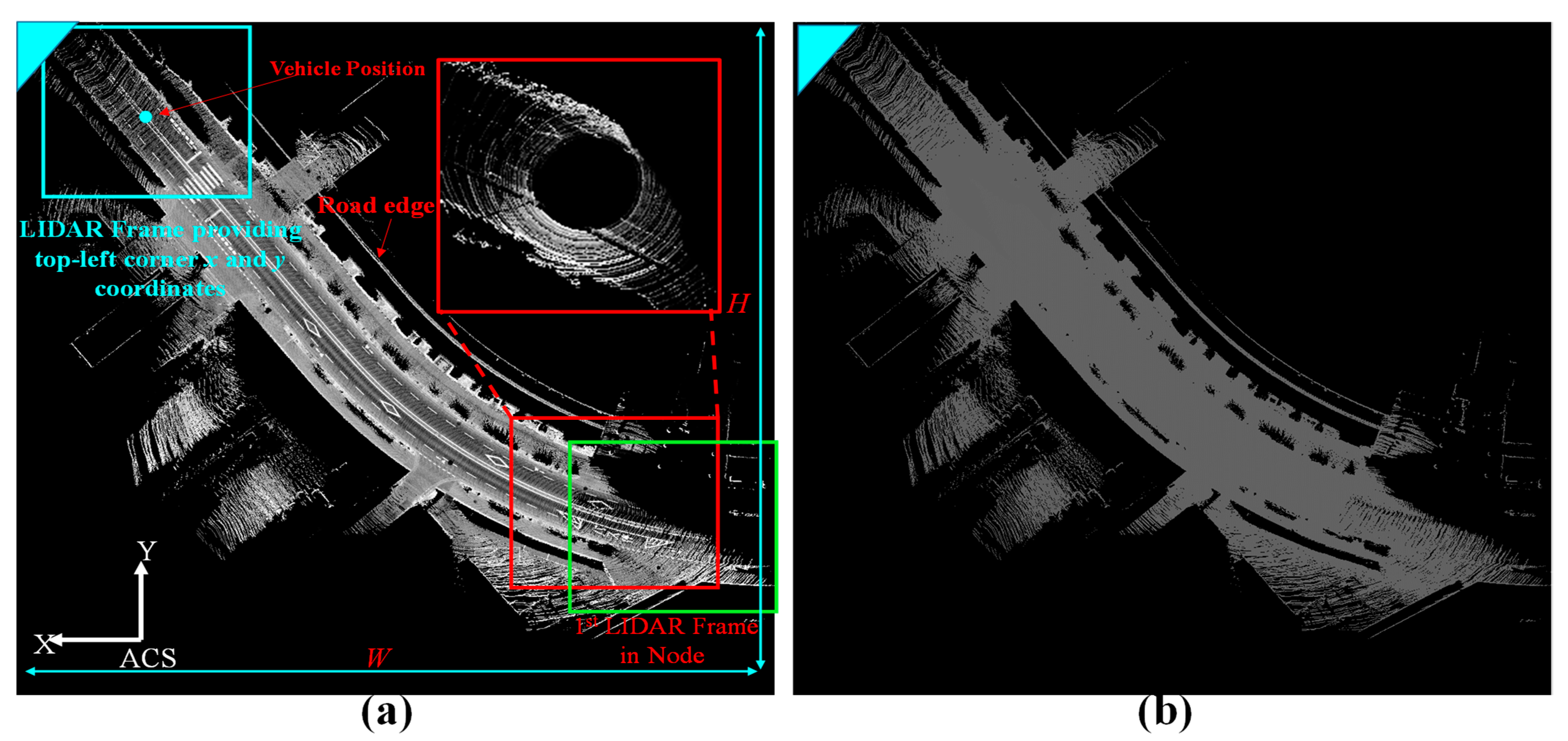

2.1. 2.5D Map Creation (Intensity and Elevation)

3. Reasons and Types of Map Distortions

4. Challenging Environments for Mapping Using GIR Systems

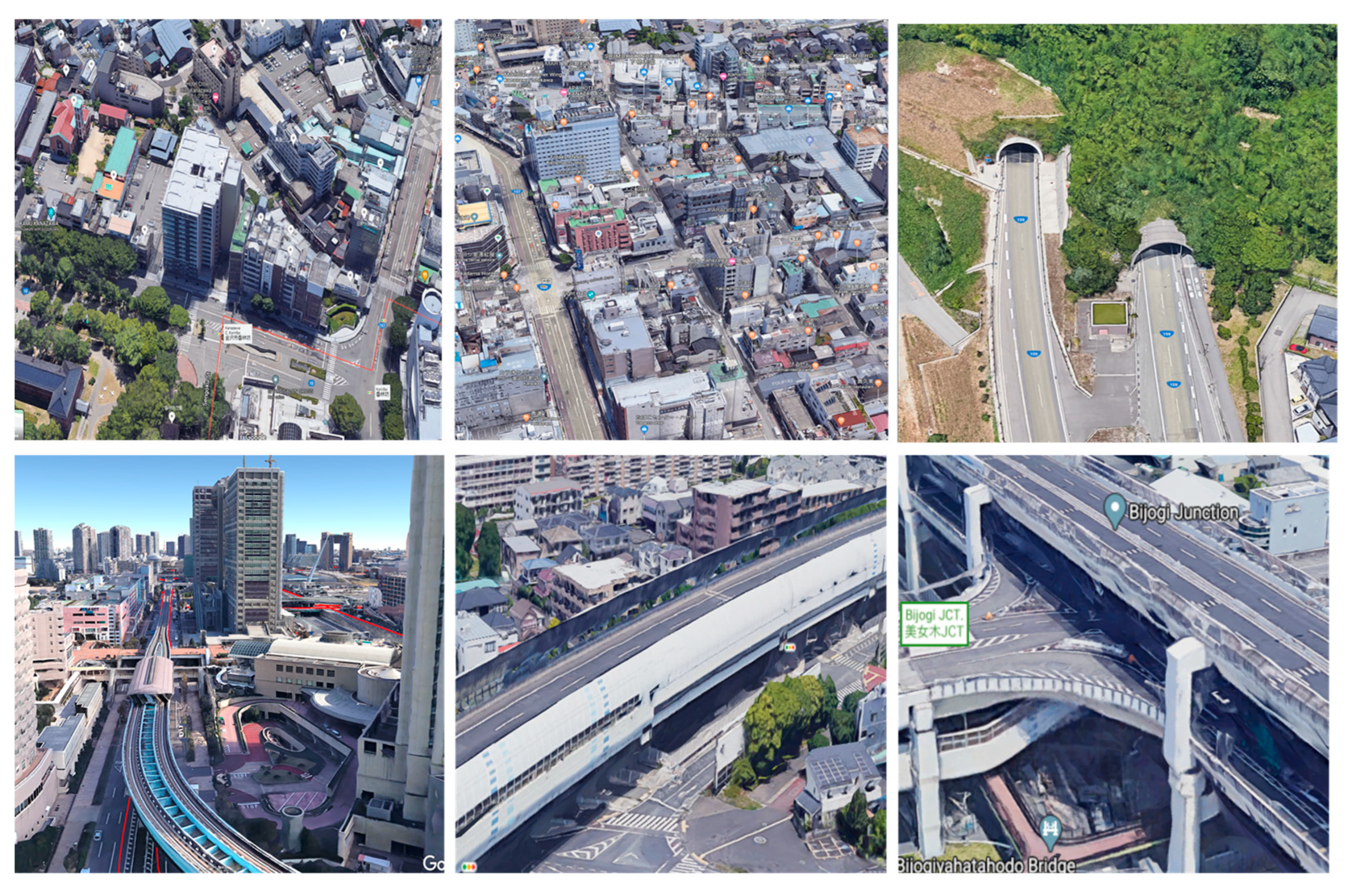

4.1. Setup and Experimental Platforms

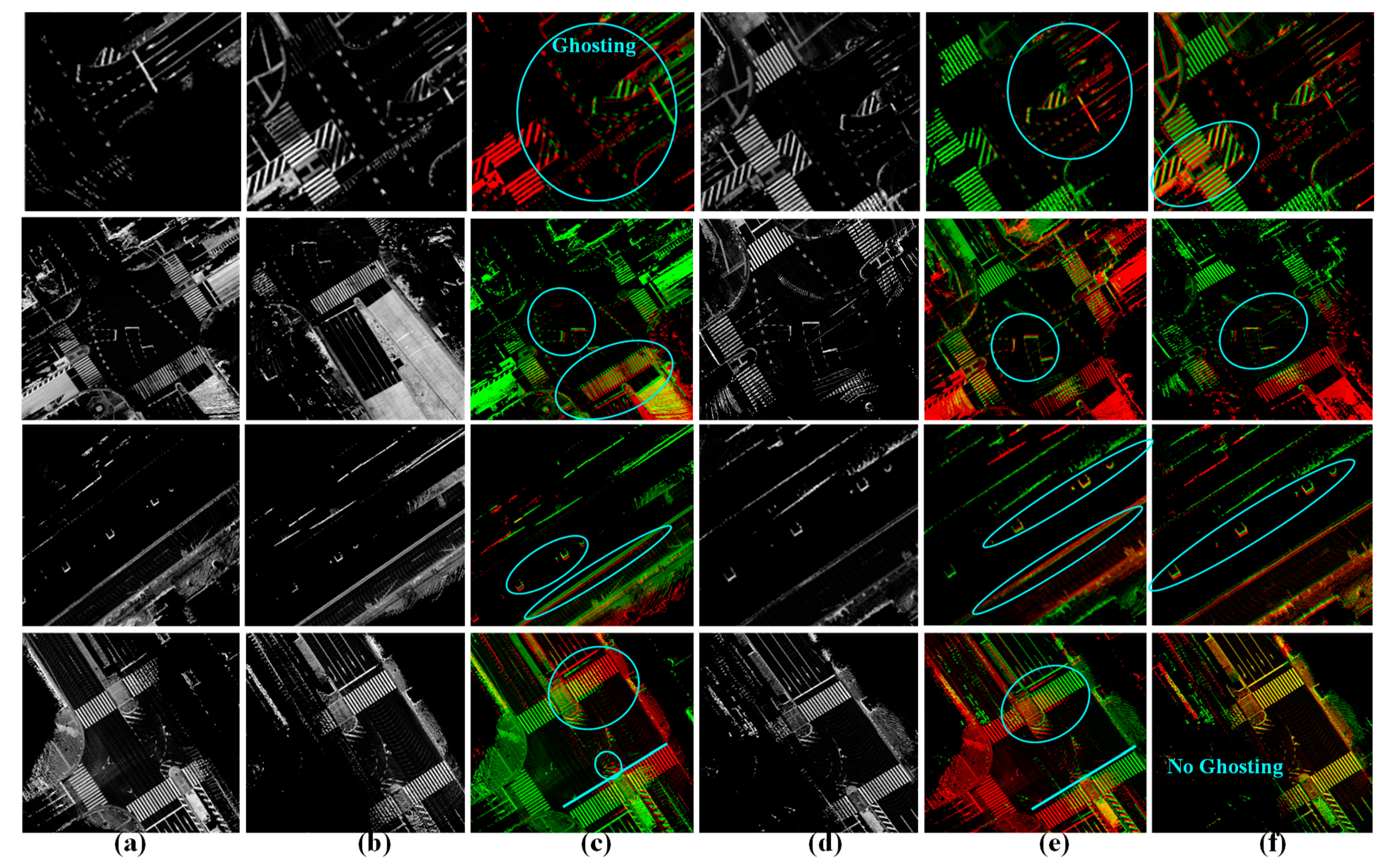

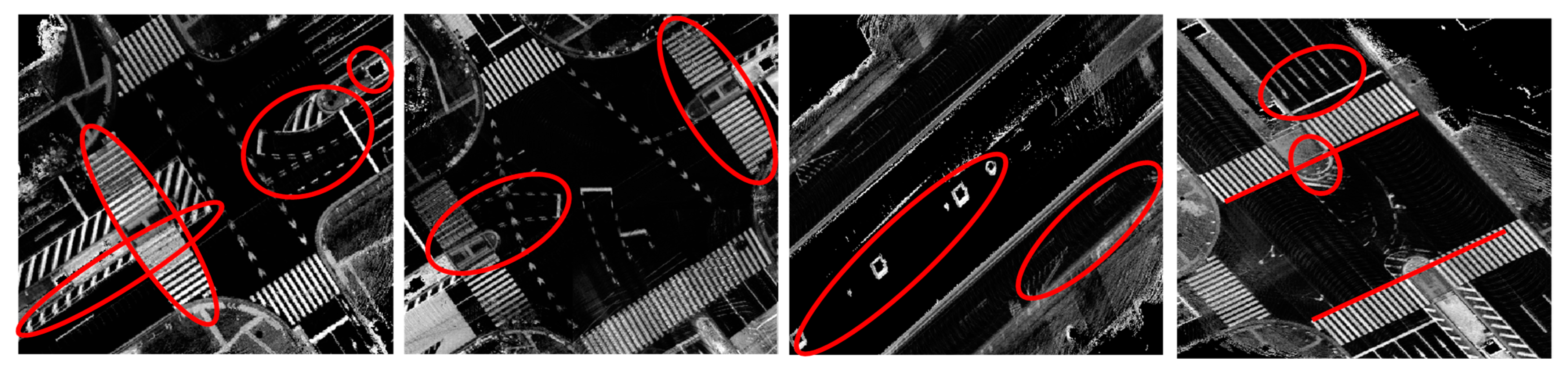

4.2. Mapping an Urban Area Using a Single Drive and a Single Agent

4.3. Mapping an Urban Area Using Two Drives and a Single Agent

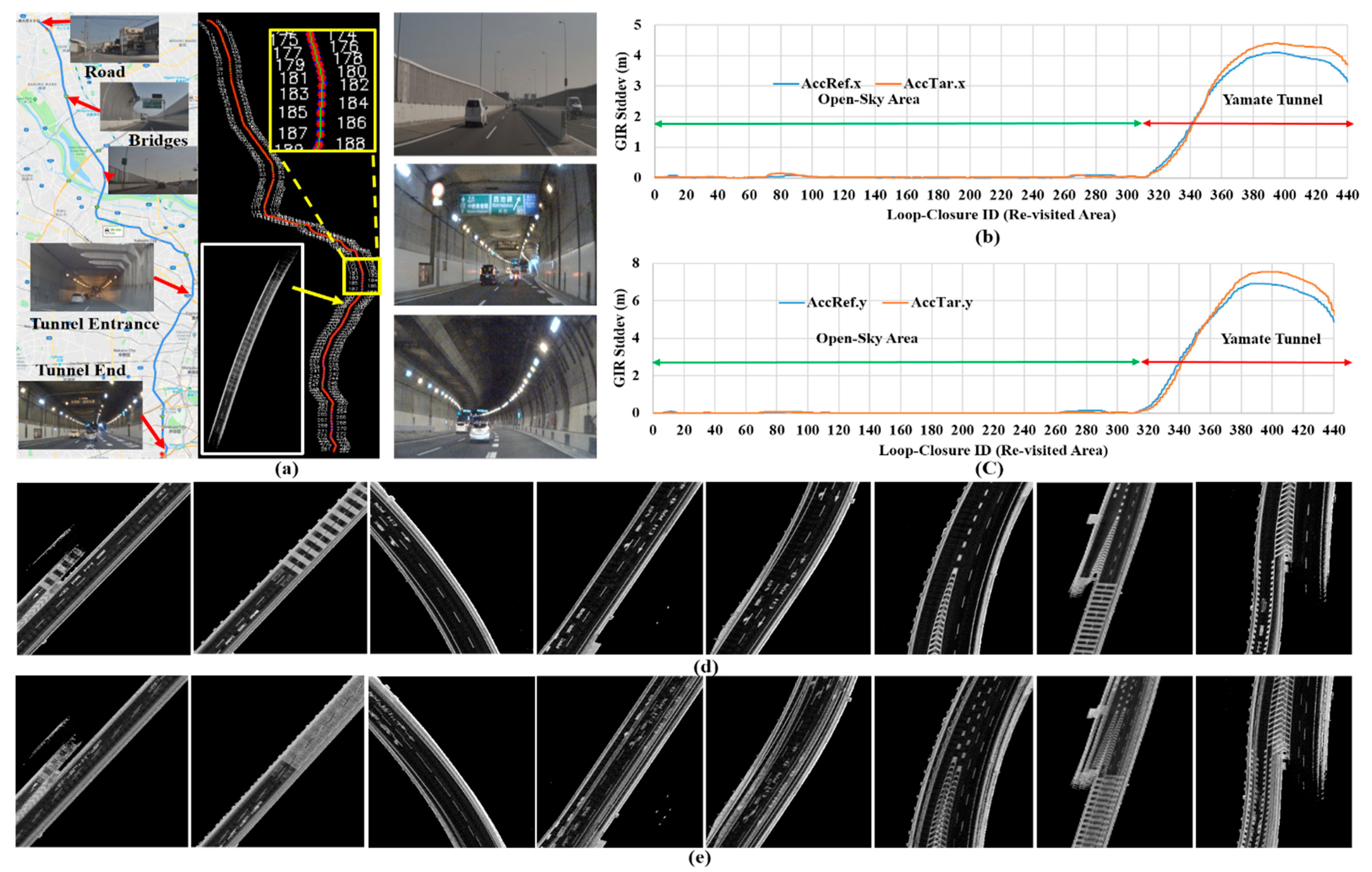

4.4. Mapping a Long Underground Tunnel Using Two Drives in the Same Driving Scenario and a Single Agent

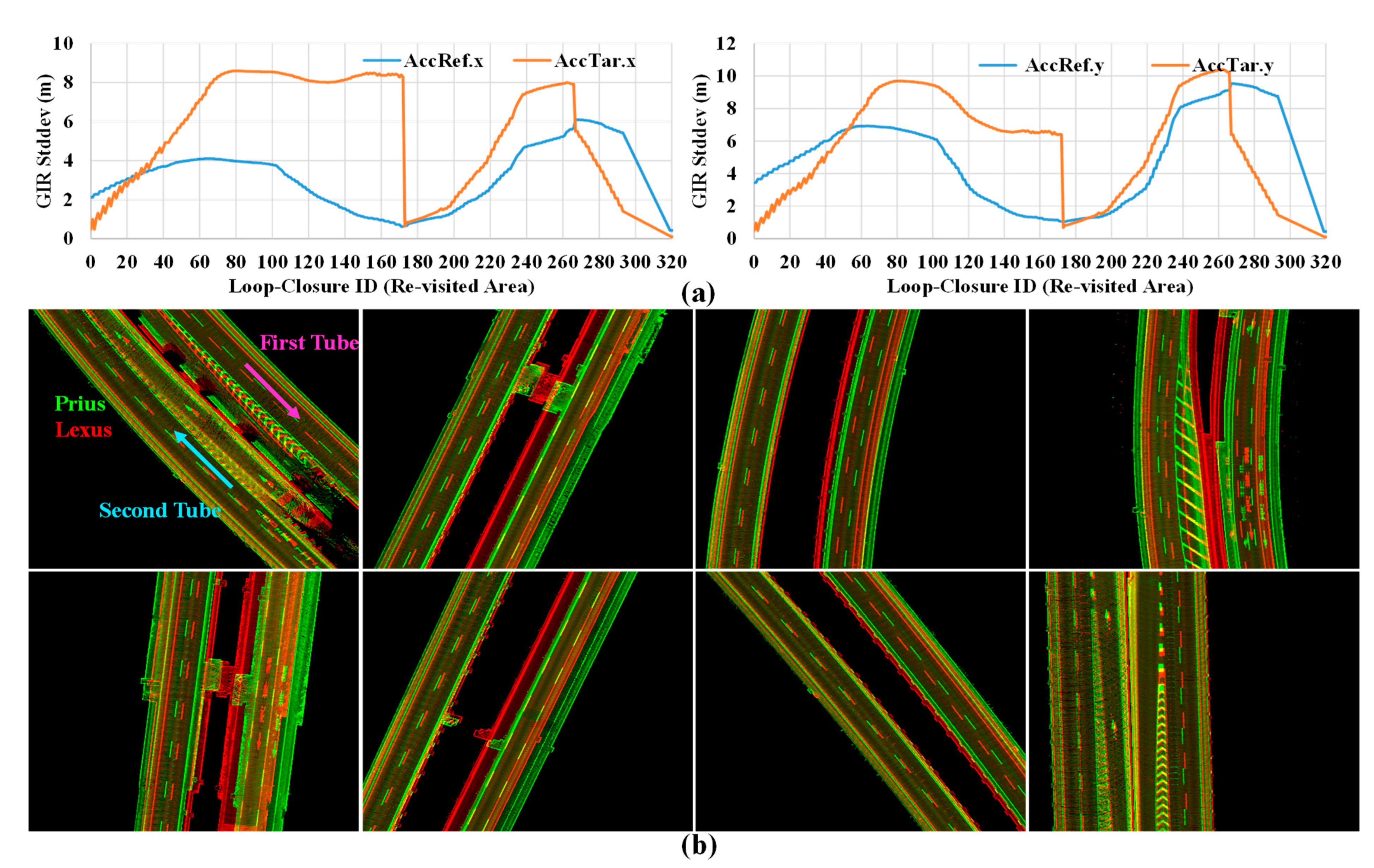

4.5. Mapping a Long Underground Tunnel Using Two Drives in the Same Driving Scenario and Two Agents with Different Sensor Configurations

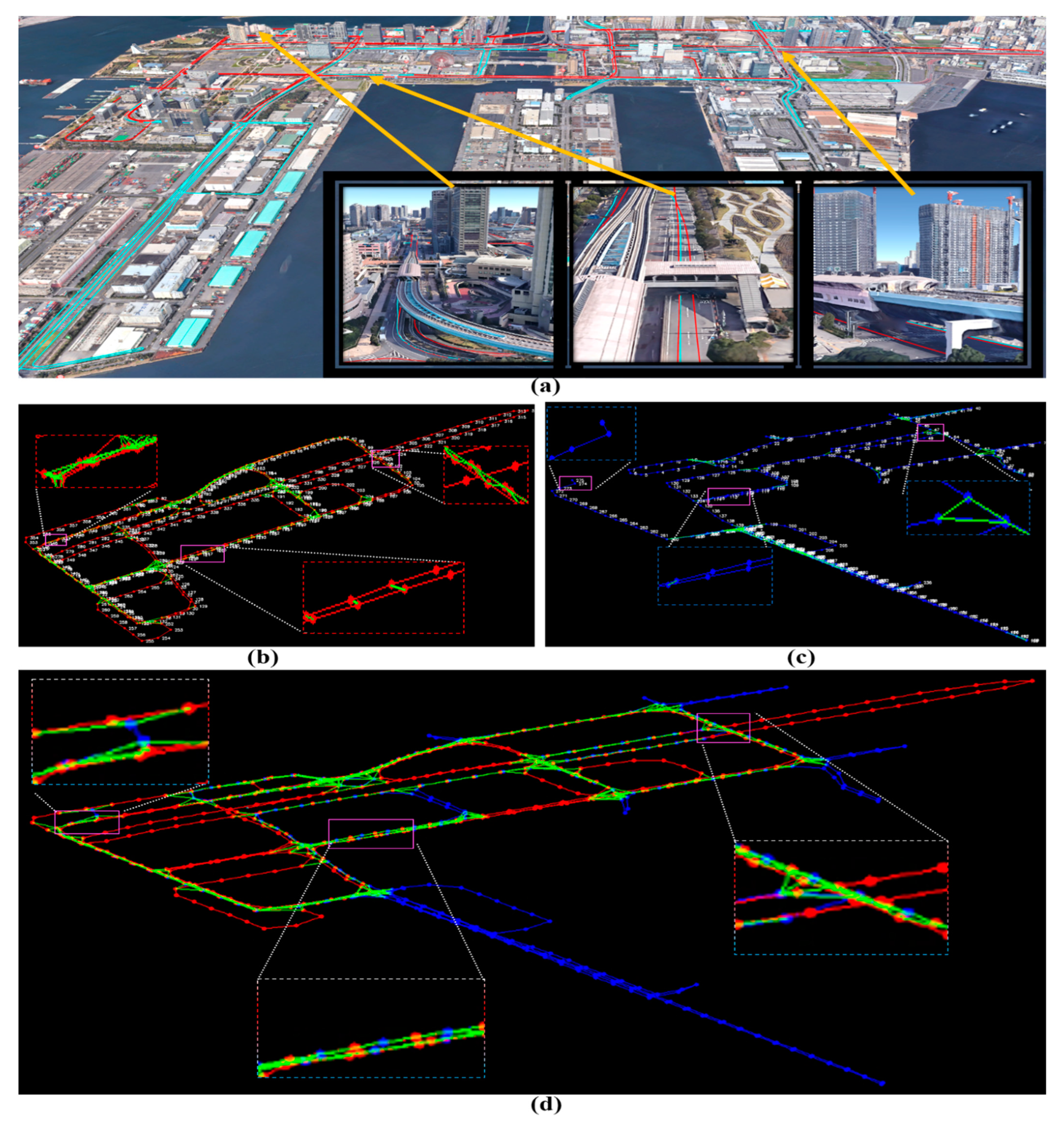

4.6. Mapping a Critical Course Simultaneously Using Two Following Agents with the Same Sensor Configurations and Driving Scenarios

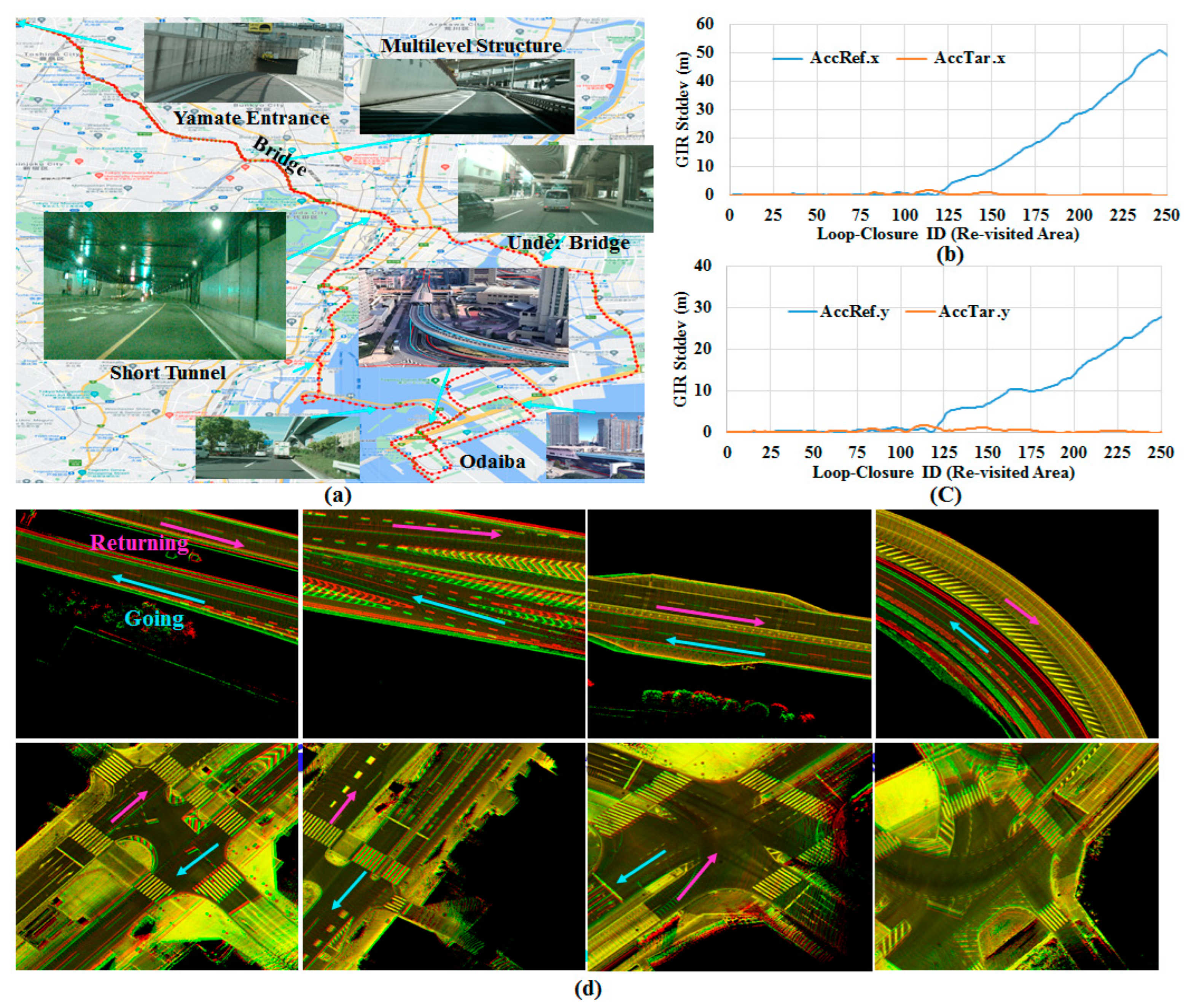

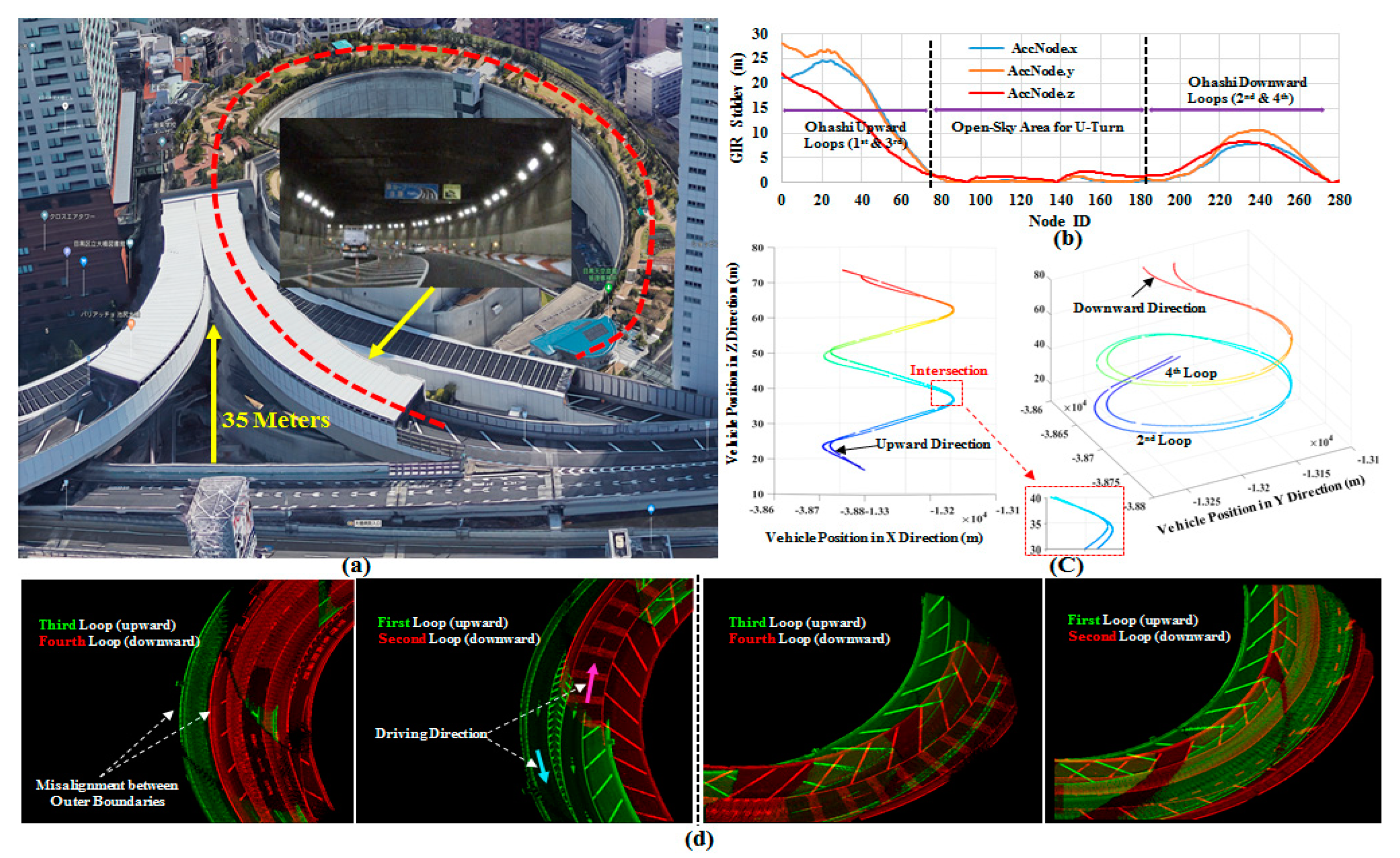

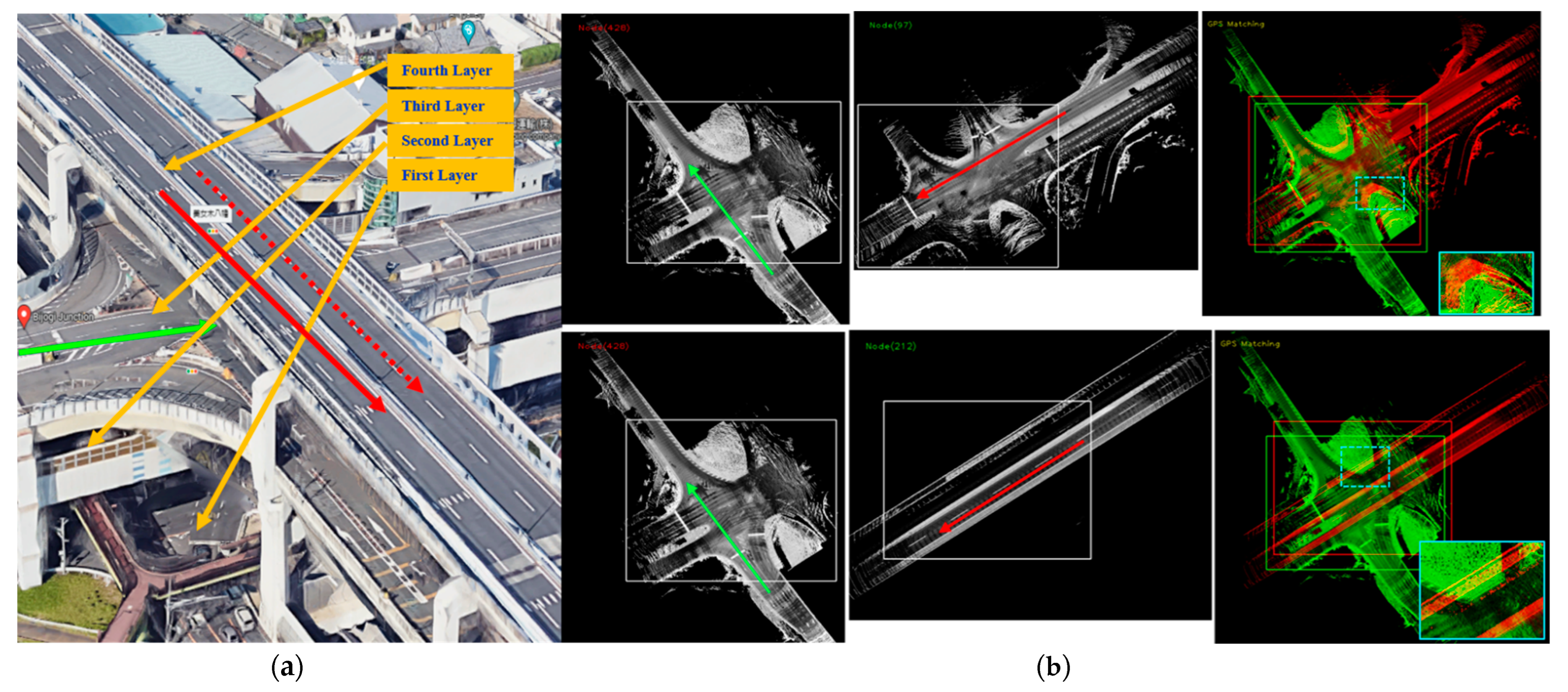

4.7. Mapping a Multilevel Environment Using a Single Agent and a Single Drive

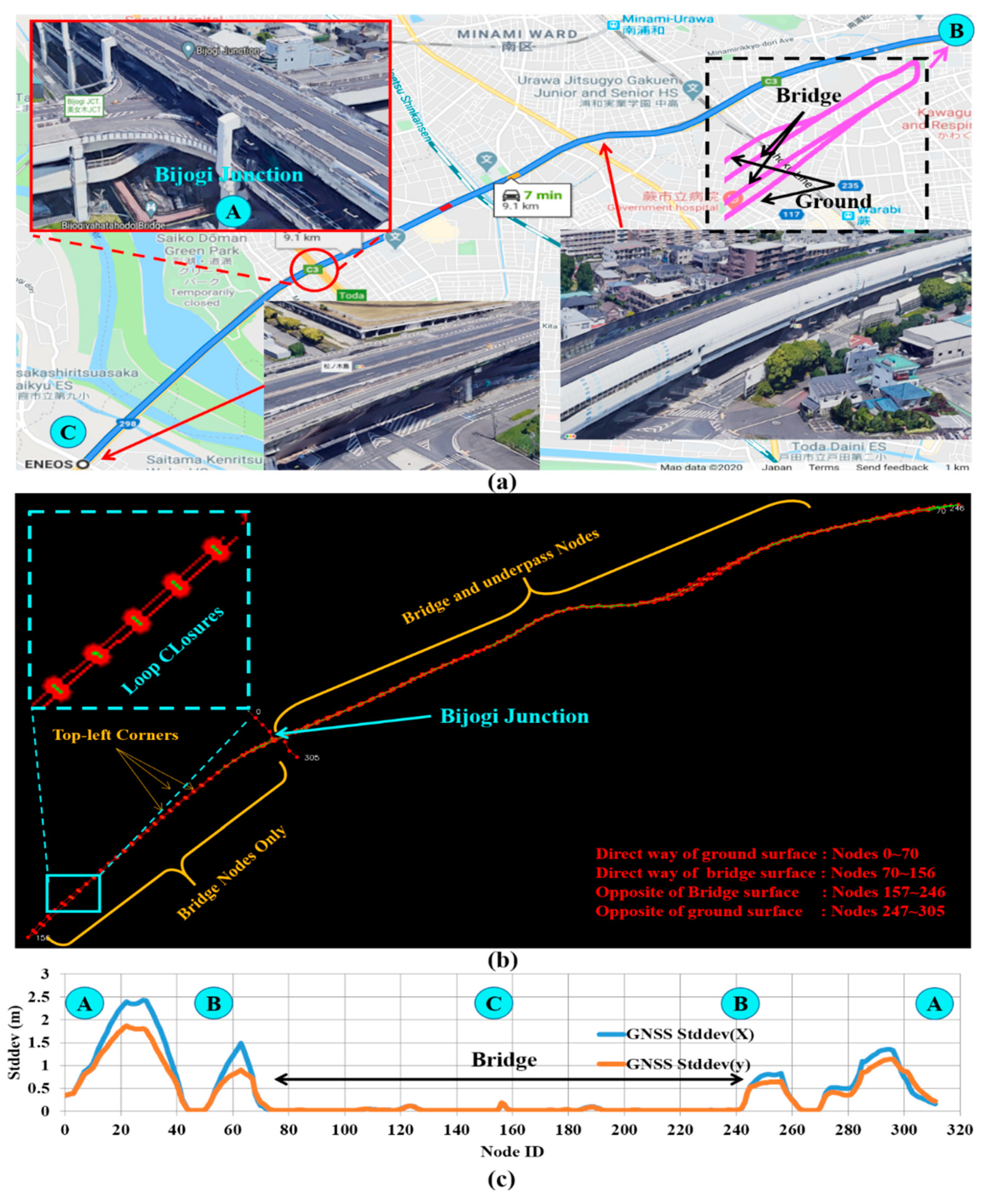

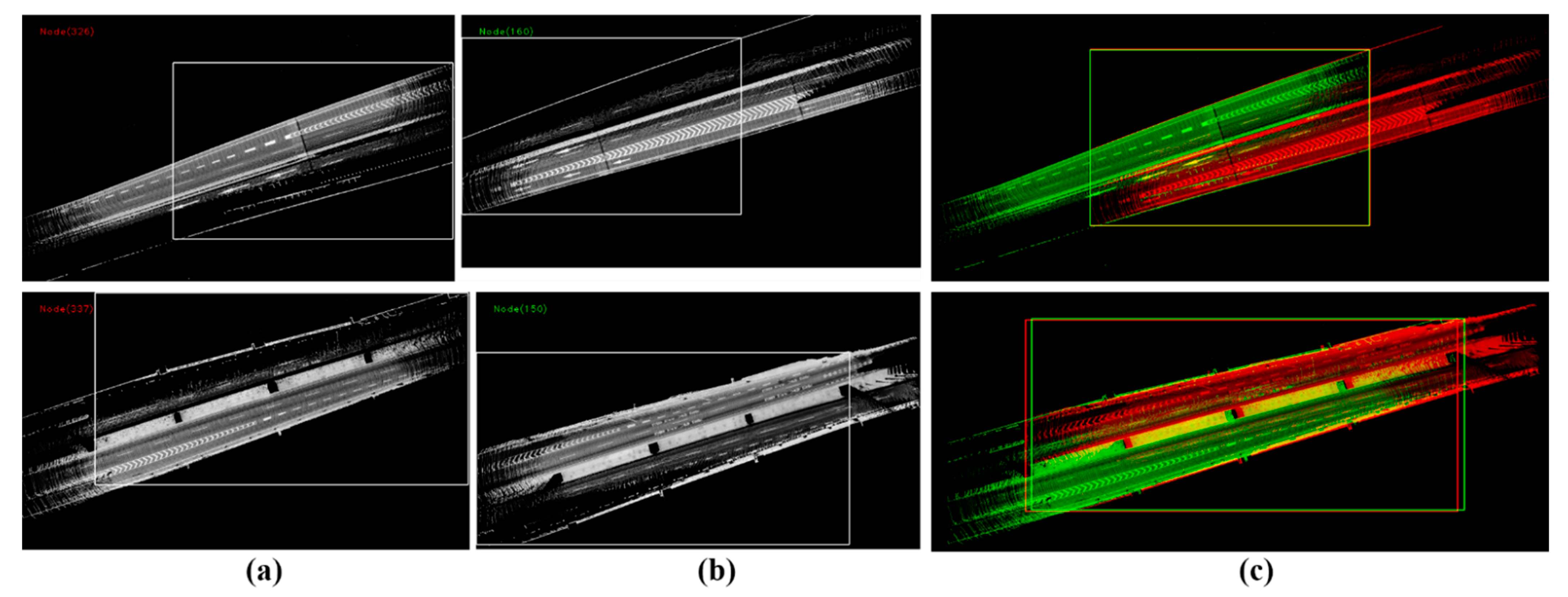

4.8. Mapping a Longitudinal Bridge and Underpass Using a Single Agent in Two Directions

5. Conclusions

Author Contributions

Funding

Conflicts of Interest

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Aldibaja, M.; Suganuma, N.; Yoneda, K.; Yanase, R. Challenging Environments for Precise Mapping Using GNSS/INS-RTK Systems: Reasons and Analysis. Remote Sens. 2022, 14, 4058. https://doi.org/10.3390/rs14164058