Abstract

Rising vehicle density and rapid technological development increase the need for road and pedestrian safety systems. Identifying the underlying problems and addressing them through systems that reduce the number of accidents and the loss of life is imperative. This paper proposes the analysis and management of dangerous situations with the help of systems and modules designed for this purpose. It also examines the classification of situations that can cause accidents, including the detection of psychosomatic elements: analysis and detection of the states a driver goes through, pedestrian analysis, and the maintenance of a preventive approach, all embedded in a modular architecture. The versatility and usefulness of such a system come from its ability to adapt to context and to communicate with traffic safety systems through V2V (vehicle-to-vehicle), V2I (vehicle-to-infrastructure), V2X (vehicle-to-everything), and VLC (visible light communication). All these elements come together in the operation of the system and in its potential to become a portable device dedicated to road safety based on hybrid RF-VLC (radio frequency and visible light) communication.

1. Introduction

According to World Health Organization reports, road accidents are a leading cause of death, claiming more than 1.35 million lives annually. These reports also identify the vulnerable categories found among the victims, such as pedestrians, cyclists, motorcyclists, and other drivers. Another important conclusion is that more than 93% of road deaths worldwide occur in low- and middle-income countries, which often lack adequate road infrastructure and cannot provide the best conditions for road users, although these countries account for only approximately 60% of the world’s vehicles. Many deaths are also caused by non-compliance with safety measures, such as failure to wear a seat belt; between 20 and 50 million people suffer non-fatal injuries, some of which are serious and lead to lifelong disability [1,2]. In addition to human suffering, there is also economic loss. Whether we consider society, families, or nations as a whole, these events cause losses in two directions: the costs of caring for and treating victims, and the loss of labor, as the economy loses capable people and families lose members who provided stability. Thus, road accidents resulting in casualties and loss of life cost about 3% of the gross domestic product of almost any country in the world [3]. Current approaches are meant to reduce some of the risk factors to which drivers are exposed, but many elements are less controllable, including the unpredictable human factor.
Human error is a major cause of road accidents, followed by speeding and failure to adapt to traffic conditions, driving under the influence of alcohol and psychoactive substances, lack of preventive driving, unsafe road infrastructure, old and unsafe vehicles, inadequate medical services, inadequately applied road laws and regulations, and emotional states or neurological diseases. This information shows that the topic requires discussion both at the structural level and at the level of practical action, through the implementation of viable solutions that address the issue properly and reduce the number of victims and the loss of human lives resulting from road accidents.

The literature has included studies and analyses in this field since the 1970s, among them some of the works of Badler and Smoliar [4]. Of particular note is the Histogram of Oriented Gradients (HOG), an efficient way to extract important features from an image and obtain a model that can be classified and later recognized as a series of objects. Later approaches and future directions appeared in Badler’s article [5] and those of Gavrila [6,7]. In addition, the analyses and practical demonstrations of Mikolajczyk et al. [8] later adapted other detection elements from Dalal and Triggs [9,10]. Part of the presented literature laid the foundations for image detection and analysis, without which there would have been no qualitative evolution or homogeneous development of hardware and software components. This complex field offers satisfaction in terms of results and predictability, but the movements of pedestrians or drivers are difficult to detect because the human factor is totally unpredictable; in 60–70% of cases they are difficult to detect, which increases the detection error. Technological advancement and market pressures have forced car manufacturers, most of them after 2014, to incorporate various efficient driving systems, safety systems, or assisted driving modules [11]. These systems are dedicated to car drivers because the human factor is often not attentive enough to meet the requirements of pre-installed applications. The human factor decides and initiates actions that lead to certain movements in order to solve problems. All existing techniques are investigated and designed through intensive experimental evaluations in external environments and uncontrolled conditions.
Therefore, in the design and development of a system dedicated to road safety, adaptability to all vehicles is an important contribution and a decisive step in the realization of new devices. Another important contribution is the analysis and creation of modules for behavioral, emotional, and visual analysis, for pedestrian predictability, and for safety in the passenger compartment of the vehicle or in the infrastructure, according to the strict requirements imposed by the competent authorities. A further contribution is the use of hybrid RF-VLC communications to transmit information to the external environment or to other traffic participants.

The remainder of this article is organized as follows. Section 2 addresses existing problems and solutions in the field of road safety and vehicle applications, highlighting the challenges and solutions set out in the literature. Section 3 presents the proposed system and the architecture on which it is based, including hardware and software components. Section 4 details the software architecture and tests the proposed road safety solutions with experimental data. Section 5 discusses the experimental results obtained in the processing and analysis of the developed sensory modules, which confirm their usefulness and necessity in production, while Section 6 provides conclusions.

1.1. Automotive Safety and Future Perspectives

A future perspective for the further development of the system is its implementation in a vehicular network using V2V, V2I, V2X, or VLC communications, offering a complete solution to those who use it. Based on the information obtained and processed, the system will prioritize events and either apply constraints to certain elements or basic functions of the vehicle or issue voice warnings to drivers about dangers or traffic events, thus reducing the number of road accidents. The last decade has seen a new shift in technology and the automotive industry, and communications between vehicles (V2V) or between infrastructure and vehicles (I2V) offer enormous potential for reducing traffic accidents and increasing road safety. According to existing studies in the area of intelligent transportation systems (ITS) and reports by the National Highway Traffic Safety Administration (NHTSA), these communications have the capacity to positively influence traffic events by up to 75–80% [11].

The development of intelligent systems in the automotive field has a solid history of over a decade, with several companies as pioneers in this segment: Volvo, Mercedes-Benz, Audi, Volkswagen, BMW, Toyota, Tesla, and others. These car manufacturers have developed their own communication systems based on technologies such as DSRC (dedicated short-range communications), implementing physical models through solid hardware components, sensors, cameras, and augmented reality. Existing applications demonstrate their necessity and usefulness by increasing road safety, especially through assisted driving equipment that supports the human factor in critical moments of delayed reaction or negligence. Each system can be stopped, without taking control of the car, in extreme situations [12].

However, developing road safety systems based on various types of communications is a difficult process, because high requirements and standards must be maintained for the essential elements that guide the field. Vehicle road safety applications based on optical communications require low latencies, which must be kept below 20 ms in order to leave useful time to transmit a detected collision or identified event. The literature likewise highlights the need to deliver data packets at distances of up to 300 m for the prevention and communication of information. Technological efforts so far have produced platforms and equipment based on sensory modules, as well as systems that use LiDAR sensors to build an overview of the area in which cars move. Car manufacturers have also implemented systems that project holograms onto the asphalt with different messages in order to warn pedestrians or other traffic participants about the problems encountered. Although the multitude of road and pedestrian safety systems and methods moves the field in a direction beneficial to safe driving, one factor remains difficult to control: the human factor, which is difficult to model and unpredictable in its conditions and reactions [13,14].
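To make the two figures quoted above more concrete (a 20 ms latency bound and a 300 m delivery range), the following minimal Python sketch computes how far a vehicle travels while a message is in flight and how long it takes to cover the communication range. The function names and the example speeds are illustrative assumptions and do not come from the described system.

```python
# Illustrative sketch only: names and example speeds are assumptions.

LATENCY_BUDGET_MS = 20.0  # latency bound quoted in the text
RANGE_M = 300.0           # delivery distance quoted in the text


def distance_during_latency(speed_kmh: float, latency_ms: float) -> float:
    """Metres travelled by a vehicle while a message is still in flight."""
    speed_m_per_s = speed_kmh / 3.6
    return speed_m_per_s * latency_ms / 1000.0


def time_to_cover(range_m: float, closing_speed_kmh: float) -> float:
    """Seconds needed to close a gap of range_m at the given closing speed."""
    return range_m / (closing_speed_kmh / 3.6)


if __name__ == "__main__":
    for speed in (50, 90, 130):  # assumed example speeds in km/h
        travelled = distance_during_latency(speed, LATENCY_BUDGET_MS)
        warning_time = time_to_cover(RANGE_M, speed)
        print(f"{speed} km/h: {travelled:.2f} m travelled during the latency, "
              f"{warning_time:.1f} s to cover {RANGE_M:.0f} m")
```

At 90 km/h, for example, a vehicle moves about half a metre during a 20 ms delay, while a 300 m range corresponds to roughly 12 s of travel; the latency bound therefore consumes only a small part of the available warning time.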

1.2. Motivation in the Field of Road Safety

This paper presents practical simulations of predictability in the field of assisted driving, analyzing the behavior of pedestrians, drivers, and preventive driving. The practical simulations are based on a mixed augmented reality platform and visible light communications, but also on road safety applications. The developed system is based on sensory modules installed both on the outside of the car and inside, with on-board cameras, exterior cameras, and communication modules between the vehicle interface and the developed system. The detected and processed elements are presented on a universal display that can be installed on the center console and communicates directly with the developed modules or through RF communications. Later, these can be upgraded by using VLC communications.

The analysis of pedestrians and the predictability of their next movements but also the analysis of the emotional states that drivers go through are essential components presented in this paper. Identifying whether drivers are aware of the use of seat belts and analyzing all elements leads to a model through which we obtain certain messages that are then sent to the center console, but also to the car network, thus being interpreted by other cars. This direction comes as an aid in solving traffic events caused by carelessness, delayed reactions or undertaking other activities, by traffic participants or pedestrians. Future research prospects offer the opportunity to develop the field of road safety based on the exponential growth of car users, but also the large number of cars that benefit from pre-installed systems dedicated to safe driving. We can say that the development of applications and systems dedicated to the automotive sector has wide applicability and creates a series of different challenges that put both the industry and the research environment to the test. The analysis of pedestrians, drivers, and traffic events is diverse and has different characteristics, whether of appearance, movement, height, speed, color, unpredictability, and other factors that can influence a situation. However, over the years, these challenges have been addressed by automotive research groups and beyond, undertaking to analyze and characterize behaviors, movements, states, and complete identification characteristics of the profile of pedestrians, drivers, and analysis and predictability of future movements [15,16].

The motivation for and necessity of this study rest on technological advance: in the next decade, vehicles will be built at another level and will address a much larger segment of customers. Cars will have built-in Artificial Intelligence (AI), Internet of Things (IoT), and Big Data systems. All these steps will shape a new lifestyle and will transform ordinary cars into autonomous vehicles. This has already been put into practice in tests for a short time. The latest autonomous prototype designed without seats or a steering wheel in the front is the Rolls-Royce Vision Next 100. This vehicle already operates with a virtual assistant and built-in artificial intelligence called Eleanor. Current systems are progressing with natural language processing (NLP), which aims to ensure direct interaction between the driver and dedicated intelligent systems. This element links Computer Vision with the user, with the final goal of identifying other vehicles, people, indicators, and traffic management systems. IoT technologies outline a well-defined path for the domain through their ability to interact with sensors and cameras and to connect with existing devices. Another aspect is related to LiDAR (Light Detection and Ranging) technologies, which are based on laser sensors installed in the upper part of the vehicle that perform a 360° scan [17]. This process allows the vehicle to build a three-dimensional model of the area around it and to identify objects within its range. We consider the evolution of this field significant, and we aim to incorporate into intelligent systems all the technologies of the future, whether V2V, V2I, V2R, V2X, or VLC [18,19].

2. Related Work

2.1. Vehicular Communication and Driving Safety Applications Issue, Related Works and Methods

2.1.1. Current Stages in DSRC, V2V, V2I, and V2X Communications

The current situation shows that most vehicles carry sensor-based equipment that can participate in crowdsourcing applications. They can form vehicular ad hoc networks (VANETs) that use DSRC-type technologies for data transport. Multi-hop transmission is also used for data collection between roadside units (RSUs) in a VANET, but under these conditions transmission can be delayed and extremely difficult [20]. To address these issues, academia is trying to build hybrid networks that combine these technologies into new communication protocols dedicated to the timely transport of data between vehicles and infrastructure. The most intensively studied mechanisms use centralized clustering with a single hop in order to maximize data delivery. However, there is a trade-off between the cost of cellular bandwidth and the delay incurred. Thus, data delivery is managed in the agreed system through multi-hop (V2V) transmissions or cellular networks. The lack of ideal protocols and technologies has drawn attention to C-V2X (Cellular Vehicle-to-Everything) for autonomous driving and dedicated intelligent transport systems. Its flexibility allows an evolution from LTE (Long Term Evolution) V2X to NR (New Radio) V2X. These technologies are complementary and can work together to obtain low latencies and high reliability. Studies have highlighted the high efficiency of such hybrid communications. At the heart of C-V2X communications is the ability to carry vital information that is periodically broadcast from vehicle to vehicle through cooperative awareness messages (CAMs) [21,22].

The research highlights several scheduling levels and modes, notably sensing-based semi-persistent scheduling (SB-SPS), in which a vehicle senses the environment and reserves resources in time and frequency for the transmission of CAMs. Another aspect that academia has in mind is real-time channel sensing and the adjustment of transmission power in order to avoid interference with other vehicles in the network. These aspects are the purpose of all research on systems dedicated to transport and road safety, regardless of the communication protocol used. The recent literature highlights that V2V and V2I communication systems are decisive elements in traffic optimization and the development of road safety systems. Therefore, new standards have emerged in this direction: ITS-G5 (IEEE 802.11p) and C-V2X (3GPP), presented above [23,24]. The performance of both standards in terms of their physical layers and associated MAC layers is presented in [25], together with link capacity and the performance obtained in a loaded network. That work also shows that the performance advantage offered by C-V2X at low vehicle densities shrinks as congestion increases, until ITS-G5 closes the gap and exceeds C-V2X. Latency and reliability are also analyzed in [26], in the context of a new generation of communications that can raise the standard of requirements, decrease latency, and increase reliability so that delivery is completed in a timely manner. Expectations of fifth-generation (5G) networks are extremely diverse in terms of the requirements they have to meet.
Although C-V2X has progressed with LTE-V2X in Release 14, this progress concerns only improved reliability and low latency to meet even the most demanding V2X applications. Critical applications in dynamic environments are the most promising subjects of research because they highlight the limitations of a technology or protocol. Most communication models dedicated to the road sector, for traffic safety as well as non-safety purposes (fluidization, prevention, and analysis), are based on radio technologies. Whether we are talking about direct C-V2X communications or the IEEE 802.11p standard [27], in an urban environment characterized by congestion and high vehicle density these resources become inadequate and problems arise. These issues are analyzed throughout the literature, but the literature does not focus on a mix of emerging technologies that function as a hybrid platform. That is why we consider hybrid RF-VLC communications useful in road safety and traffic management systems [28,29].
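The sensing-based semi-persistent scheduling idea mentioned above can be illustrated with a deliberately simplified Python sketch. It is not the 3GPP procedure and not part of the described system: the threshold of -100 dBm, the 3 dB relaxation step, the 20% candidate fraction, and the reselection counter range of 5 to 15 are assumed values chosen only for illustration, and all names are hypothetical. A vehicle excludes resources sensed as busy, picks at random among the quietest ones, and keeps the chosen resource for several periodic transmissions.

```python
import random

# Simplified illustration; thresholds and counter range are assumptions.


def select_resource(rssi_dbm, threshold_dbm=-100.0, keep_fraction=0.2, rng=None):
    """Pick one candidate resource index from sensed power levels (dBm)."""
    rng = rng or random.Random()
    total = len(rssi_dbm)
    if total == 0:
        raise ValueError("no candidate resources")
    min_candidates = max(1, int(keep_fraction * total))
    # Step 1: exclude busy resources; relax the threshold until enough remain.
    while True:
        candidates = [i for i, power in enumerate(rssi_dbm) if power < threshold_dbm]
        if len(candidates) >= min_candidates:
            break
        threshold_dbm += 3.0
    # Step 2: keep only the quietest candidates.
    candidates.sort(key=lambda i: rssi_dbm[i])
    quietest = candidates[:min_candidates]
    # Step 3: choose randomly so nearby vehicles are less likely to collide.
    return rng.choice(quietest)


class SemiPersistentScheduler:
    """Reuses a selected resource until a random reselection counter expires."""

    def __init__(self, rng=None, counter_range=(5, 15)):
        self.rng = rng or random.Random()
        self.counter_range = counter_range
        self.resource = None
        self.counter = 0

    def next_resource(self, rssi_dbm):
        """Return the resource index to use for the next periodic message."""
        if self.resource is None or self.counter == 0:
            self.resource = select_resource(rssi_dbm, rng=self.rng)
            self.counter = self.rng.randint(*self.counter_range)
        self.counter -= 1
        return self.resource


if __name__ == "__main__":
    sensed = [-80, -110, -105, -70, -120, -95, -60, -115, -90, -85]
    scheduler = SemiPersistentScheduler(rng=random.Random(1))
    print([scheduler.next_resource(sensed) for _ in range(8)])
```

Keeping a resource for several periods is what makes the occupancy predictable to neighbouring vehicles, which is the property the sensing step relies on.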

2.1.2. Debate on the Directions of Development in Road and Pedestrian Safety

From studies and research conducted to eliminate or significantly reduce the number of road accidents, it is important to address issues regarding pedestrian intentions and the predictability of movements performed by them. The emotional states of drivers that condition or impose certain barriers and produce behavioral reactions are often responses conditioned by what the driver is going through, subsequently requiring the analysis and detection of preventive behavior and the use of seat belts. According to studies, the factors presented above are the basis of many road accidents or adverse events in traffic. Thus, we can say that uncontrolled reactions or unpredictable movements of drivers or pedestrians in a fraction of a second can produce an unfortunate event that can lead to loss of life. In the first phase, we can say that a large part of reaction and driving style is directly influenced by the emotional states that a driver or pedestrian goes through, thus changing the degree of concern, related activities, attention, confusion, nervousness, and other factors that manage to distract them and making them vulnerable. According to the medical literature and analysis performed by Dr. Peter Noel Murray, emotions have a role and behavior is directly influenced by them. This study was performed using magnetic resonance imaging (MRI) observing in detail brain activity and emotion produced by states, feelings, deeds, and reactions [30,31].

Therefore, emotions force human beings to act and decide in a timely manner, especially if we exemplify this through a case study of angry people who in a state of reflex just want to get out of that area or to ‘face the opponent’. Such reactions are also provoked in the case of happy or unhappy drivers; others exult and emanate positive states that make them focus only in that direction and others do the opposite, but the conclusion is that both states cause altered movements and lead to inconsistency whether in driving or walking.

We can say that the role played by emotions and other mental states, including fatigue while driving a car, is essential. These become extremely important factors in influencing decisions affecting types of reaction, perception and organization of memory [32,33], details, classification, preferences, evaluation, decisional analysis [34,35], concentration, attention, performance, and communication [36,37]. The efforts made by academia and research teams, including the development of intelligent systems to analyze the human factor, have progressed in a certain direction, but insufficiently in terms of the number of road accidents. The analysis of the driver with each use of the vehicle is not enough because conditions can change quite often and unpredictably. A driver’s emotional state must be uniform and support his/her abilities without disturbing certain activities. Thus, we consider extremely important the direction of research developed by exposing the problems in practical cases and solving them with the help of systems dedicated to traffic safety and pedestrian safety. These developed and developing components are meant to analyze and process all the necessary information from the external environment and to transmit it to drivers, vehicles, pedestrians, but also to intelligent traffic systems, all within a hybrid-mixed platform to reduce the problems previously mentioned.

2.1.3. Debating the Current State of RF-VLC Hybrid Communications in Road Safety Applications

The current proposal and the direction in which the applications of our research group are developing allow only certain modules to be compared with those existing in the literature. This is because several systems are being developed in parallel on different communication principles and protocols. Some work on the basis of RF communications and some on VLC communications; this paper combines them in a hybrid RF-VLC form in the first stage. The main goal is to highlight the capacity and portability of the developed system, as well as the amount of information that can be analyzed, processed, and transmitted through such a system, whether over RF or VLC. In this first stage, the system is presented for demonstration purposes, in order to highlight the need for a system that addresses the deficiencies that currently exist in production, as well as issues that have not been addressed by other research groups. Improvements are ongoing and aim to solve as many of the problems society faces as possible. Future steps also include devices to be installed on the exterior of cars to transmit and receive information from other vehicles at a considerable distance. Current studies offer feasible perspectives, because the VLC communications achieved can support the transmission and reception of information at distances of up to 300 m in ideal conditions [38].

The analyses and research carried out were aimed at performing tests in real scenarios and at the behavioral investigation of pedestrians and drivers in an external environment with existing infrastructure; sensory and video analysis turned these elements into true sources of information and decision-making factors for preventing loss of life. In the first phase, the development of intelligent systems dedicated to the automotive sector is based on modules designed for adaptability to different situations and on a universal design intended to have a greater impact on users. Part of the developed components focuses on prevention and detection inside the vehicle, and another part on the external environment, which is considered very volatile and full of challenges and insecurity. The novelty of the developed system, which is in a continuous process of improvement, lies in its ability to identify and transmit information through several types of communication, whether visible light, 4G, or Wi-Fi. These communication technologies facilitate extremely high data traffic, high-quality video calls, online television, and the video applications that the fourth generation (4G) offers. The frequency bands used are 1800 and 2600 MHz, which can support transfer speeds of about 3 Gb/s [39,40]. Although 4G communications, which are also available to the public, were used in the scenarios and applications, the fifth generation runs at speeds between 10 and 50 Gb/s, initially using the 1800 MHz and 2600 MHz bands, with subsequent extensions to between 30 and 300 GHz. Studies conducted by private research centers show that in laboratory tests under ideal conditions the technology reaches speeds of about 1 Tb/s.
To support these claims publicly, in 2019 the company Einride, which handles certain aspects of application development for Ericsson, succeeded in controlling a truck remotely over a distance of about 1200 km, between Gothenburg, Sweden, and Barcelona, Spain. The results and the way the tests were performed made the experiment a success, and the conclusion was that, in the field of vehicle applications, 5G communications can manage and communicate information in a timely manner with extremely low latencies [41,42].

3. Methods

3.1. Research Trends in Automotive Safety Applications and Hardware Design and Implementation

Given the previous context and the usefulness of 4G and 5G communications in in-vehicle applications, the degree of complementarity between RF and VLC communications is extremely high, so we can rely on a mix of technologies to obtain an ideal platform for communication between vehicles and infrastructure. Visible Light Communication (VLC) uses visible light (380–780 nm) as a data carrier, which offers a bandwidth of approximately 400 THz. This allows higher communication speeds, with transfer rates reaching hundreds of Gb/s [43,44]. By comparison, DSRC is divided into approximately seven channels of about 10 MHz each. However, the technologies discussed can be complementary and can streamline certain branches of transport, especially the development of systems dedicated to intelligent traffic management [45]. As a first step, the system is based on hardware elements developed as needed, with commercial modules filling certain gaps in data acquisition and transfer and in the mode of communication between vehicles. Information from inside the vehicle is collected through the CAN port with the help of an OBD dongle, and information about the passenger compartment is obtained through sensory and video analysis. Information is presented individually and distinctly, depending on the priority and severity of events, and includes sounds and voice messages perceptible to the user. It is displayed on an interface developed in Angular and .NET, which acquires data from sensors or cameras and presents it promptly after processing [46,47]. The software application allows multiple network connections and external access, even from mobile devices, for users who are not currently in traffic.
A universal navigation unit has been designed to display the information; it is adaptable to any type of car dashboard and sized to fit on the center console. Its design and implementation were based on a detailed analysis of the space available on the center console of cars.
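As a quick check of the approximately 400 THz figure quoted for the visible band, the following minimal Python sketch converts the 380–780 nm wavelength limits to frequencies and compares the resulting span with the aggregate DSRC spectrum of seven channels of about 10 MHz each. The function names are illustrative and not part of the described system, and the calculation assumes propagation at the vacuum speed of light.

```python
# Illustrative calculation; function names are assumptions.

SPEED_OF_LIGHT_M_S = 299_792_458.0  # vacuum speed of light


def wavelength_to_frequency_thz(wavelength_nm: float) -> float:
    """Convert a wavelength in nanometres to a frequency in terahertz."""
    return SPEED_OF_LIGHT_M_S / (wavelength_nm * 1e-9) / 1e12


def band_span_thz(short_nm: float, long_nm: float) -> float:
    """Frequency span between the short and long wavelength limits."""
    return wavelength_to_frequency_thz(short_nm) - wavelength_to_frequency_thz(long_nm)


if __name__ == "__main__":
    vlc_span_thz = band_span_thz(380.0, 780.0)
    dsrc_total_mhz = 7 * 10.0  # seven channels of about 10 MHz each
    ratio = (vlc_span_thz * 1e12) / (dsrc_total_mhz * 1e6)
    print(f"Visible band span: {vlc_span_thz:.1f} THz")
    print(f"DSRC aggregate:    {dsrc_total_mhz:.0f} MHz")
    print(f"Ratio:             {ratio:.2e}")
```

The span comes out at roughly 405 THz, consistent with the figure above and several million times wider than the aggregate DSRC spectrum, although the usable data rate of a real VLC link is limited by the emitter and receiver rather than by the optical band itself.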

The designed device is based on an intensive study of the space available in the passenger compartment and the center console area, so that it can be mounted either on the console or inside it for a better user experience and a high level of comfort. Following these studies, 3D elements were designed and later built into a portable navigation unit with a touchscreen of about 17 cm diagonal, a height of 10 cm, and a depth of 7 cm, sufficient to house the electronic components. At its current stage, the system requires a new analysis of the dimensions and a redesign of the whole assembly in order to obtain more generous space for the necessary upgrades.

In line with the mobility and flexibility requirements described above, circuits were designed for the connections between the data acquisition modules, along with shields for the sensor packages. Raspberry Pi 3 and Pi 4 modules with a 7-inch touchscreen and a tablet or mobile phone control module were used for the interface. In terms of optimization and processing, the final configuration was upgraded to the Raspberry Pi 4, with a 1.5 GHz processor and 4 GB of RAM, supplemented by a neural USB stick (Intel Coral) to increase processing capacity and the accuracy of the measurements performed. Figure 1 shows the designed assembly with all the components and modules contained in the system in the first stage of development. A microcontroller, the Teensy 3.6, was also used for control and data acquisition; it is well suited to processing information and transmitting it to other devices in the dedicated system.

Figure 1.

Illustration of the proposed system. The visible light communication (VLC) receiver and emitter in outdoor and indoor simulations. Navigation system with modules installed on the central console.

According to the simplified architecture in Figure 2, the main module is based on an ARM Cortex-M4, whose main purpose is to provide the debug interface and process control and to parse instructions from the other module. That module is based on a Samsung K42G324ED processor with 2 GB of DRAM; it instantiates the video elements and interconnects them with the message decoding and construction module. Images are input from several sources, both the cameras installed in the passenger compartment and a camera mounted outside, which allows a much higher degree of comparison and increased accuracy in obtaining conclusive, real measurements usable in the research process [48,49].

Figure 2.

Schematic of the proposed architecture for the communication system. Module based on ARM Cortex-M4 and Raspberry Pi 4, and interface communication.

For communication with the system, an improved semi-commercial module with OBD II connectivity through the CAN port of the vehicle is used. It is designed to communicate via 3G, 4G, LTE, Wi-Fi, or Bluetooth, and the platform is built on a Raspberry Pi W, which provides sufficient processing time and sufficiently secure and stable connectivity for quality communication in ideal conditions. This can be improved in the future by mounting a dedicated antenna to increase stability and decrease latency. All these components are integrated into a dedicated interface developed, as previously mentioned, in Angular and .NET, with some elements designed in React JS; the data are collected through an API embedded in a cloud platform. This first stage strictly addresses the analysis and detection of risk factors leading to road accidents; the system must then be developed in the direction of vehicular and inter-vehicle communications using VLC, V2I, V2V, or V2X in order to offer a solution to problems that affect the whole of humanity. These changes and improvements must be made in accordance with the legislation in force, without adjustments that would disrupt or affect people’s lives. The main concern is to obtain relevant information and to signal aspects that can have a negative impact, but without endangering the lives of drivers or pedestrians through various constraints that restrict their rights.
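The text does not specify which vehicle parameters are read through the OBD II connection. As a minimal sketch of what decoding such data can look like, the Python example below interprets three widely used standard mode 01 parameters (engine speed, vehicle speed, and coolant temperature) from the raw response bytes. The function name and the choice of parameters are assumptions made for illustration; the transport over the CAN port and the dongle interface are not modelled.

```python
# Illustrative decoder for a few standard OBD-II mode 01 responses.
# The function name and the selection of PIDs are assumptions.


def decode_obd_response(data: bytes):
    """Decode a mode 01 response payload such as bytes 41 0C 1A F8.

    Returns a (name, value) tuple. Byte 0 is the response mode (0x41),
    byte 1 the parameter ID, and the remaining bytes the data (A, B, ...).
    """
    if len(data) < 3 or data[0] != 0x41:
        raise ValueError("not a mode 01 response")
    pid = data[1]
    if pid == 0x0C:  # engine speed: (256 * A + B) / 4 rpm
        if len(data) < 4:
            raise ValueError("engine speed needs two data bytes")
        return ("engine_rpm", (256 * data[2] + data[3]) / 4.0)
    if pid == 0x0D:  # vehicle speed: A km/h
        return ("vehicle_speed_kmh", float(data[2]))
    if pid == 0x05:  # coolant temperature: A - 40 degrees Celsius
        return ("coolant_temp_c", float(data[2] - 40))
    raise ValueError(f"unsupported PID 0x{pid:02X}")


if __name__ == "__main__":
    for frame in ("410C1AF8", "410D3C", "41057B"):
        print(frame, decode_obd_response(bytes.fromhex(frame)))
```

Values decoded in this way could then be forwarded to the interface or combined with the sensory and video analysis, although how the described system does this is not detailed in the text.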

3.1.1. Description of Prototypes and Practical Scenarios

Given the growing number of road accidents that result in the loss of human lives, we consider every aspect of the analysis and management of traffic events important, including those in adjacent areas or along the route that a car follows. Over one month, trials and scenarios covering traffic events were carried out at different times and in different locations, in order to highlight as many elements as possible that can negatively influence the general situation. In the first stage, the busiest routes are analyzed, both in terms of traffic and in terms of areas heavily transited by pedestrians. Many accidents involve pedestrians who neglect traffic signs or misjudge certain movements, which makes them vulnerable to cars and to the reactions of drivers. We consider that investigating and describing certain characteristics, and warning the driver about a possible non-compliant movement of pedestrians, goes a long way toward avoiding an accident. Events outside the car can count as much as those inside it, so practical scenarios and simulations need to be classified according to these priorities. Another aspect treated is the analysis of road infrastructure, traffic lights, and markings, together with traffic participants; all these elements are identified and prioritized according to the severity or potential of each possible event in a road accident. The analysis covers the distances and times that cars travel in certain scenarios, as well as vehicles that do not behave ideally in traffic, cataloging and highlighting their main risk factor.

All these elements are considered risk factors and increase the complexity of an unforeseen event in traffic. To complete the list of factors that negatively influence the smooth running of events, aspects inside the passenger compartment of the car were also taken into account, namely the safety elements that the driver is obliged to use and that protect those around him. Perhaps the most important component, and one not exposed to the general public, is the one that drivers may regard as a restriction or violation of their human rights. Here we refer to analyzing a driver behaviorally and emotionally, an analysis that highlights features related to the mental state of the users of a car. Psychological tests are mandatory to acquire the right to drive and, later, to change permits or move to another vehicle category. These tests are performed superficially and do not address all the behavioral deviations or deficiencies that can have an extremely serious impact on the act of driving. Each behavioral deviation or difficult reaction can disrupt the driver’s cognitive process, which makes it likely that certain maneuvers are performed too late.

Another aspect analyzed inside the passenger compartment, which is in its first phase and is also treated in the simulations performed, is compliance with the safety measures that a car provides, more precisely the seat belts, which in 60–70% of cases can mitigate the impact of the car with an obstacle, reducing the likelihood that the driver will suffer serious injuries. The seat belt is a measure of prevention and protection for both drivers and passengers; although safety systems have been improved over the years, when the belt is not fastened the driver is warned but is not forced to use it. A restraint is likely to be perceived by a driver as unjustified pressure or as a violation of his rights, so many drivers resort to other means in order to evade its use. In the analyses and studies performed, either image analysis or frontal sensors can identify whether the safety systems are in use, and the driver is forced to comply. The constraints are mechanical in nature, so the vehicle is not usable until all safety measures have been taken, which yields a high degree of prevention. The systems developed so far are meant to offer comfort to users, but they impose no definite obligations, even though such systems can save lives simply by being used.

To complete the system, the same mode of reception is used, based on photodiodes installed in traffic light areas that capture the signal and carry out data acquisition, amplification, processing, and decoding. As mentioned above, intelligent traffic lights have been developed over the years, and remarkable results have been achieved in data transmission, even over distances of 100–150 m in favourable weather conditions, without the BER exceeding $10^{-7}$ [50,51]. At a future stage, these prototypes will communicate with traffic management systems as well as with vehicle control and management systems and road object detection, behaving as a hybrid network in which communications support an infrastructure dedicated to safe driving. Studies and technological developments in production have focused more on user-based safety systems that protect the driver and prevent unforeseen events; this is extremely useful, but it has not progressed toward the kind of systems presented in this paper, and many factors still require thorough analysis. The technological mix presented is feasible from all points of view, and its integration into existing systems is only a matter of time. The information exists, and the existing infrastructure allows the changes needed to revolutionize traffic and management systems without additional economic costs.

We can say that systems can match reality much more closely by using perception sensors for depth analysis that reproduce the stereo vision specific to human beings, or by using LiDAR sensors as an alternative method against which the identifications of camera-based systems and image processing can be compared. All the elements presented are under development and testing, with the aim of presenting a viable solution in the evolution of road safety systems dedicated to safe and accurate driving and to the protection of pedestrians and other road users.

3.1.2. Description of Pedestrian Setup and Practical Scenarios

Automotive technology analysis and vision systems gravitate toward computerized management and driverless implementation, while omitting some extremely important elements. In the last decade, studies have continued to increase computing power and to build on convolutional neural networks, which have developed rapidly and led to notable results in computer vision for driving assistance. The most commonly used methods are the R-CNN series (Region-Based Convolutional Neural Networks) and SSD (Single Shot MultiBox Detector), but also the controversial YOLO, developed by a team working within the Darknet framework. YOLO (You Only Look Once), the algorithm proposed by Redmon and Girshick [52], detects objects by regression, performing localization and classification end to end in a single pass. This places it among the fastest algorithms, but with a low degree of accuracy for small objects and a notable error rate in highly complex pedestrian scenes [53,54].

The YOLO algorithm has therefore been constantly improved, and the latest version, YOLOv3, uses the K-means method to automatically group and select the best regression boxes from the data set at the initial stage. Another mechanism that helps to overcome earlier shortcomings, the multi-scale anchor mechanism [48], was adopted to increase the detection accuracy for objects of a certain size. For higher quality results, Liu, Anguelov, and other collaborators proposed in [55] the SSD (Single Shot MultiBox Detector) algorithm, which uses regression for position detection and performs classification in a single network. The SSD network builds on VGG16 [56], with the fully connected layers replaced by convolutional layers. Each added convolutional layer produces a feature map that is used as input for predictions, so that predictions are based on feature maps at several scales. The low-level feature maps contain much more useful information for preserving details, and the trained model improves in quality and accuracy through error backpropagation. We can say that the improved YOLO algorithm obtains much better accuracy on small objects, and that both detection rate and speed have increased considerably in real-time applications. To use the algorithm in traffic safety or assisted driving applications, we need extremely strong real-time behaviour and a performance far superior to existing algorithms. There is thus a trade-off between speed and accuracy, alongside many other factors that could favorably adjust the detection process, such as the feature extractor and the size of the input images.

Therefore, the use of SSD (Single Shot Detector) and Faster R-CNN (Region-based Convolutional Neural Network) architectures, together with MobileNet or Inception feature extractors, helps complete the entire detection process proposed for the simulation scenarios and also increases processing speed. For increased accuracy COCO SSD could be used, which offers fast processing, but in terms of efficiency and accuracy it again produced false detections. Based on the analyses and studies performed with the existing models, the algorithms were improved and subsequently configured for integration into the simulated scenarios; in the first simulations they gave satisfactory results, in accordance with the previously proposed hardware architecture. The erosion and analysis of input blocks were based on an architecture built on MobileNetV2 and YOLOv3, improved by increasing the analysis capacity of several blocks, and using Neural Intel Coral improved processing time [57,58].

According to the graphical representation and data collection, one can observe the distribution of reference builders and destroyers according to the models presented and used. Each configuration has a meta-architecture, a feature extractor (ResNet or Inception-ResNet), an input resolution, and, for R-CNN and R-FCN, a number of region proposals. Another method used to obtain efficiently parameterized results is the cascade operation mode, in which the traversal time decreases because a much more efficient subset of data is processed [59,60]. Tests conclude that R-FCN and SSD models are faster, whereas R-CNN tends to be slower but offers significantly higher accuracy, even if the processing time sometimes reaches 100 ms per image. If the analyzed elements are found in a single region and subsequently limited to a single tracked object, the processing time increases and automatically limits the regions proposed for analysis. Image detection and analysis systems have flaws related to a restrictive detection threshold that sometimes cannot be exceeded for various reasons. All these results led to the development of a mixed, hybrid solution that can overcome the obstacles mentioned above and cope with the requirements and unforeseen elements of the external environment, with particular effort put into adaptation to context. The changes to the model aim at an improved algorithm that analyzes the entire image, in order to increase predictability by taking the overall context of the input images into account. Generally speaking, the prediction time is much better than that of existing standard models, with the feature analysis algorithm reaching a speed of about 100x compared to Fast R-CNN and 1000x compared to T-CNN [61,62,63].

3.1.3. Discussion on the Architecture and Algorithms

Anchor boxes are also used in Faster R-CNN and SSD, but there the size of the box is set manually, which causes the network to converge quite slowly during training and to settle in a local optimum. YOLOv3 draws on the anchor box mechanism of Faster R-CNN, the novel element being the K-means grouping of the experimental data set to obtain the optimal anchor boxes. The classic K-means grouping uses the Euclidean distance function, under which larger boxes produce larger errors than smaller ones, so erroneous groupings can occur. Therefore, we adopted IOU [64] (the overlap ratio between the generated candidate boxes and the original marked boxes) as the criterion for evaluating the grouping, so that the error caused by the scale of the box is avoided. The distance function can be calculated according to Equation (1) below:

$$d(\text{box}, \text{centroid}) = 1 - \mathrm{IOU}(\text{box}, \text{centroid}) \quad (1)$$

where box is the sample, centroid is the central point of the cluster, and IOU(box, centroid) is the overlap ratio between the sample box and the center box of the cluster.
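The anchor grouping described above can be sketched as follows. This is a minimal illustration rather than the authors' implementation: it assumes the commonly used distance 1 − IOU between boxes given only by width and height, and the function names, the sample boxes, and the fixed random seed are all hypothetical.

```python
import random


def iou_wh(box, centroid):
    """IOU of two (width, height) boxes aligned at a common corner."""
    inter = min(box[0], centroid[0]) * min(box[1], centroid[1])
    union = box[0] * box[1] + centroid[0] * centroid[1] - inter
    return inter / union


def kmeans_anchors(boxes, k, iterations=100, seed=0):
    """Group (w, h) pairs using d(box, centroid) = 1 - IOU(box, centroid)."""
    rng = random.Random(seed)
    centroids = rng.sample(boxes, k)
    for _ in range(iterations):
        clusters = [[] for _ in range(k)]
        for box in boxes:
            distances = [1.0 - iou_wh(box, c) for c in centroids]
            clusters[distances.index(min(distances))].append(box)
        new_centroids = []
        for old, members in zip(centroids, clusters):
            if not members:
                new_centroids.append(old)  # keep the centroid of an empty cluster
                continue
            mean_w = sum(w for w, _ in members) / len(members)
            mean_h = sum(h for _, h in members) / len(members)
            new_centroids.append((mean_w, mean_h))
        if new_centroids == centroids:
            break  # assignments no longer change
        centroids = new_centroids
    return sorted(centroids, key=lambda c: c[0] * c[1])


def mean_iou(boxes, centroids):
    """Average best IOU per box, the score compared across values of k."""
    return sum(max(iou_wh(b, c) for c in centroids) for b in boxes) / len(boxes)


boxes = [(10, 20), (12, 22), (11, 21), (50, 100), (52, 98), (48, 102)]
anchors = kmeans_anchors(boxes, k=2)
```

Running the grouping for several values of k and comparing `mean_iou` is one simple way to choose the number of anchor boxes.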

For pedestrian detection and predictability, the essential data sets were extracted and grouped in order to outline the ideal models, and the IOU scores for different values of k were subsequently compared. In the end, only the results with K values of x are chosen, considering the complexity of the model given for analysis. The limitations imposed on pedestrian identification predictability increased with a series of parameterizable and automatic reactions, and the results obtained were much clearer and more concise with respect to the problems exposed in the paper. Regarding the YOLO algorithm, we can also say that it is an end-to-end network, so a single loss, the sum-squared error, is used throughout the calculation process [65,66]; it is a simple sum of the coordinate errors, the IOU errors, and the classification errors. This loss function can be expressed by the following Equation (2):

When the loss terms are added together, the share of each term in the total loss must also be taken into account. If the coordinate error carries the same weight as the classification error, the model becomes unstable and diverges during the simulations. When calculating the IOU error, grid cells that contain an object and those that do not contribute differently to the loss of the detection network. Cells that contain an object yield a high degree of confidence, whereas in the others the degree of confidence is approximately zero, which distorts the process and the influence of the errors on the gradient of the network parameters. We can say that improved YOLO largely solves these problems by taking the square root of the terms that describe the size of the analysed object (w and h), but it does not completely solve the problems of detection and contextual analysis of pedestrians. In order to outline a software structure that exposes the information as accurately as possible, a set of detection models was built and added, containing elements with characteristics and parameters usable in the proposed approach. The detection models are created strictly for the analysis and predictability of pedestrians, with several ways of classification and identification in different instances, whether we are talking about indoor or outdoor environments, human characteristics, or elements that can disrupt the process (see Figure 3).

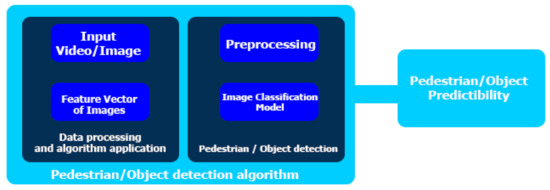

Figure 3.

Schematic of the processing algorithm for pedestrian/object detection.
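The weighted sum-squared loss discussed before Figure 3 can be illustrated with a short sketch. It assumes one predicted box per grid cell and the weights 5 and 0.5 commonly quoted for the original YOLO formulation; the dictionary layout and the function name are hypothetical and are not taken from the system described here.

```python
import math


def yolo_style_loss(predictions, targets, lambda_coord=5.0, lambda_noobj=0.5):
    """Sum-squared loss over grid cells, one box per cell.

    Each prediction/target is a dict with keys x, y, w, h, conf, classes.
    A target of None means that no object falls in that grid cell.
    """
    coord_err = iou_err = cls_err = 0.0
    for pred, target in zip(predictions, targets):
        if target is None:
            # Empty cell: only the confidence is penalised, with a small weight.
            iou_err += lambda_noobj * pred["conf"] ** 2
            continue
        coord_err += lambda_coord * (
            (pred["x"] - target["x"]) ** 2
            + (pred["y"] - target["y"]) ** 2
            # Square roots damp the influence of large boxes on the loss.
            + (math.sqrt(pred["w"]) - math.sqrt(target["w"])) ** 2
            + (math.sqrt(pred["h"]) - math.sqrt(target["h"])) ** 2
        )
        iou_err += (pred["conf"] - target["conf"]) ** 2
        cls_err += sum(
            (p - t) ** 2 for p, t in zip(pred["classes"], target["classes"])
        )
    return coord_err + iou_err + cls_err


cell = {"x": 0.5, "y": 0.5, "w": 0.25, "h": 0.16, "conf": 1.0, "classes": [1.0, 0.0]}
perfect = yolo_style_loss([cell], [cell])
```

Giving the coordinate term a larger weight than the confidence term of empty cells is what keeps the many empty cells from dominating the gradient.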

The implemented architecture is based on standard elements and does not complicate the analysis process: the information is classified as the process advances, and each identified pedestrian is outlined with a transparent gradient in one of two shades, red or green. These shades distinguish a pedestrian who may resort to an uncontrolled movement while at a short distance from the car (red) from one in a safe position (green). Pedestrians who have a buffer distance of approximately 15–20 m between them and the vehicle are not identified as possible hazards, because that distance allows a maneuver that does not endanger anyone. According to the proposed architecture, future changes can be made to optimize the software application so that it identifies a larger set of data and classifies, in an organized way, other elements of major importance for traffic and its safety. The degree of complexity and accuracy that this system has shown in the first stage offers solid prospects for future research and full development. The process of delimiting and contouring filters the images, performs detection, and manages each identified feature using the logistic regression model. When the height and width (h and w) are well defined, we calculate the middle of each grid cell, obtaining a prediction point within that object. Because independent logistic classifiers offer an extremely high degree of accuracy and efficiency, they yield a better class prediction and, in turn, results with a high degree of accuracy. In completing a data set, the parsed objects go through a step in which each image in the prepared set of models is compared point by point. Handling the complexity of movement thus becomes much easier for the parameterized data set: the procedure is much simpler, an analysis by overlap becomes possible, and pedestrian detection is performed at the same time without interleaving or problems in the detection process.
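The red/green marking and the prediction point can be sketched as below. The 15 m threshold is an assumption taken from the lower end of the 15–20 m buffer mentioned above, and the `Detection` type, its `distance_m` field, and the function names are hypothetical; how the distance is actually estimated is not covered by this sketch.

```python
from dataclasses import dataclass

SAFE_BUFFER_M = 15.0  # assumed threshold, lower end of the 15-20 m buffer


@dataclass
class Detection:
    x: float  # left edge of the bounding box, in pixels
    y: float  # top edge of the bounding box, in pixels
    w: float  # box width, in pixels
    h: float  # box height, in pixels
    distance_m: float  # estimated pedestrian-to-vehicle distance (assumed input)


def box_center(det):
    """Middle of the box, used as the prediction point inside the object."""
    return (det.x + det.w / 2.0, det.y + det.h / 2.0)


def overlay_color(det, safe_buffer_m=SAFE_BUFFER_M):
    """Green for a pedestrian beyond the buffer distance, red otherwise."""
    return "green" if det.distance_m >= safe_buffer_m else "red"


near = Detection(x=100, y=50, w=40, h=120, distance_m=8.0)
far = Detection(x=300, y=60, w=20, h=60, distance_m=18.0)
colors = [overlay_color(near), overlay_color(far)]
```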

By analyzing and tracing the overlapping and detection characteristics in two layers, we build feature maps, which are later needed in the concatenation process. This process is complex and requires high computing power, so aspects such as detection speed are automatically affected; a suitable alternative would be to use Tiny-YOLOv3. This version, being a simplified version of YOLOv3, does not provide the expected results. This mini-network contains only seven convolutional layers and approximately six pooling layers. We can say that it is about five times slower in the sampling required to obtain ideal detection processes [67,68]. The structure of this network yields a mini-network that increases speed but loses accuracy. The tests performed for the purpose of this paper saw an improvement to this solution, reaching about three convolutional layers and thus improving the process of extracting features, but this was not enough. Using the improved YOLOv3 base and implementing analysis and detection on two layers in parallel increased both the accuracy and the degree of parsing of each detection procedure, consolidating the analysis performed for each concatenated feature map. With this method, the results obtained are the highest in quality of the entire process undertaken, and we consider this study useful in the field of road safety and pedestrian detection. The approach ultimately obtained fine-grained semantic information, in more figurative terms bringing the smoothing of the images that the whole detection process needed. The addition of new convolutional layers considerably increases quality, but it also requires computing and memory resources; at this moment, in the second stage, elements are used that improve performance through additional computing power for nonlinear execution processes, resulting in network feature extraction with increased detection accuracy.

4. Results

4.1. Experimental Evaluation and Results

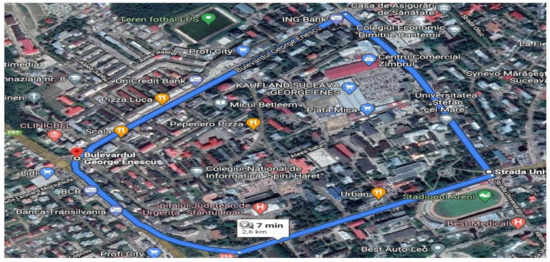

The simulated scenarios were run several times on routes stretching over a distance of about 6 km, chosen according to the flow of vehicles and traffic congestion and with a high flow of pedestrians (see Figure 4). Unforeseen events such as blockages were taken into account when creating the scenarios, and the measurements therefore lasted about 25 days, with most results obtained on weekends. The busiest times were around noon, between 11:00 and 14:00. The route of approximately 6 km ran along crowded streets in Suceava near the campus of Ștefan cel Mare University, more precisely on Ștefan cel Mare Boulevard and on the connecting streets George Enescu and Universității, with a flow and transit capacity of between 800 and 1300 cars at the maximum degree of congestion [61]. Out of all the outlined scenarios, approximately 10 were selected, those richest in information and lasting more than 180 s, in order to analyze a larger sector of the road and to highlight as many elements as possible. All these scenarios test factors such as weather conditions (e.g., sunlight falling perpendicular to the road surface, which disrupts the measurements), the speed of the vehicle, the interleaving of objects in the detection plane, and the clothing of pedestrians.

Figure 4.

The route on which the scenario simulations took place.

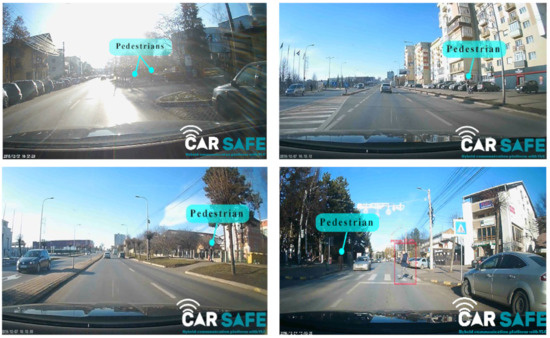

All these features are analysed while gradually increasing both the flow of pedestrians and the speed of movement, in order to track the progress of the first developed version of the proposed system. The first four scenarios, presented in Figure 5, are performed on secondary alleys and streets that contain few elements that are difficult to identify, which makes them ideal for outlining training sets. The speed in these scenarios is between 15 km/h and 20 km/h, an ideal speed for detection; in these conditions any negative event can be remedied in a timely manner without endangering the lives of traffic participants or pedestrians.

Figure 5.

Pedestrian detection and predictability movements. Red grids show a dangerous location and green grids show a secure location or position for pedestrians.

After this stage, which went largely as expected, three scenarios take place on a route with an increased density of vehicles, pedestrians, and traffic lights, and with people who are inattentive and cross the street carelessly. At this stage the results were as expected and there were no technical problems, apart from sunlight, which in some areas obscured the measurements and the identification of pedestrians by covering them with light reflected from the asphalt or from car windshields. The last set of scenarios increases the degree of difficulty because of the complexity of the route and the density of vehicles and pedestrians. The travel speed increased, reaching 65 km/h in some sections, at which the identification and predictability process becomes intermittent and has difficulty finding the necessary characteristics. The first part of this scenario takes place on a secondary alley with little traffic. Once the car is on the main road, the first disturbing factor is sunlight, which constantly falls on the windshield, hood, and road surface.

This factor disrupts both driving and the detection process, and during this time no pedestrians were identified. After the car turns onto an ideal route, we notice that as the speed increases the analysis and detection of pedestrians is delayed; the speed was between 55 km/h and 62 km/h (see Figure 6). In some cases detection was made, but the identification in the image appears delayed. This problem is caused by the hardware component. The elements that created problems can be remedied by adjusting the hardware components, by adding optical filters to reduce the FOV (Field of View) and reject parasitic light, and by adding new video cameras both at the front of the car and in the side mirrors for a wide field of observation [69]. All these adjustments significantly increase quality and accuracy, providing more sources and more areas for comparison.

Figure 6.

Unidentified elements and pedestrians, due to driving speed, stray light, or distance from the vehicle.
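A short calculation illustrates why detection appears delayed at higher speeds. It assumes a per-frame processing latency of 100 ms, the figure quoted earlier for region-based detectors; the latency of the proposed module may differ, and the function name is hypothetical.

```python
def distance_during_latency(speed_kmh, latency_ms):
    """Metres travelled by the vehicle while one frame is being processed."""
    speed_ms = speed_kmh * 1000.0 / 3600.0  # km/h to m/s
    return speed_ms * latency_ms / 1000.0


for speed in (15, 20, 55, 62):
    metres = distance_during_latency(speed, 100)
    print(f"{speed} km/h: {metres:.2f} m travelled per 100 ms frame")
```

Under this assumption the vehicle covers roughly 1.7 m per processed frame at 62 km/h, against roughly 0.4 m at 15 km/h, so the same processing delay shifts the detection box noticeably further from the pedestrian's actual position.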

The analysis of all scenarios is summarized in Table 1, which details the number of frames, travel speed, errors, and accuracy shown by the proposed module. Another aspect that should not be neglected is that HoG (histogram of oriented gradients) features can be implemented for image processing. This would greatly increase the processing performance, and it requires the gradient to be computed repeatedly as a linear operator. The accuracy of pedestrian detection may have decreased because of the total number of frames per second and the amount of information to be processed. This can be seen in the table below.

Table 1.

Identification accuracy details, frames, and pedestrians detected from total people existing in images, frames lost, and detected travel speed.

The features currently being worked on improve the detection area of the algorithms by performing an image analysis based on contours and delimitations between the pedestrian area and the road. This step is meant to exclude conflicts of any kind through sequencing, and it is much more expressive; with the allocated spaces left free, the classifications improved, covering over 20 different classes of static objects. In the first phase, the localization and reprocessing errors were in line with expectations, but accuracy and precision were improved by overlapping the convolution layers, giving results about 10% more conclusive than in the early stages. The major advantage of the YOLOv3 algorithm is that it uses independent classifiers for class analysis with a binary cross-entropy loss, which identifies the class much more objectively [70,71]. When such independent logistic classifiers are used, an object created in its own model can be detected among a wide range of other objects. Therefore, the studies carried out led to the use of this algorithm, and the adjustments made it possible to identify over 80 categories of objects, with the computing power adjusted accordingly.

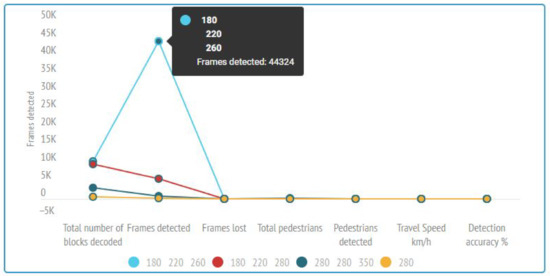

According to the analyses carried out in the literature, other elements, such as clothing, need to be addressed to increase the effectiveness and accuracy of the approach [72,73]. Identifying key objects for vulnerable people is also an aspect that we are developing. The graph in Figure 7 shows that, with small data packets and detailed segments, a viable accuracy is obtained from the detection process.

Figure 7.

Graphic analysis based on Table 1: identification accuracy, frames, pedestrians detected out of the total number of people in the images, frames lost, and detected speed.

4.2. Description of External Environment and Practical Scenarios

According to the literature and the elements presented in previous chapters, the future of road safety systems is taking shape and relies heavily on the analysis of unpredictability in and around traffic, namely pedestrians, infrastructure, cyclists, and objects that can distract and lead to a negative event.

The predictability of these traffic elements is increasingly difficult because of the flow of cars and the unpredictable and hurried nature of certain human reactions. Based on the structure of the system presented in Section 2, a system dedicated to processing external data and transmitting it to a mini traffic management system is being developed, more precisely an intelligent traffic light that can intercept information promptly and provide a viable and motivating alternative. The proposal under development has the same characteristics as the other modules developed, but it addresses the external environment and gathers different information.

Therefore, data exchange via VLC or V2X can provide traffic information at the relevant time without disturbing the proper functioning of the devices. Bidirectional communication benefits not only the driver, who is informed by the system, but also other receivers in the vicinity that are equipped with VLC receivers. The existing infrastructure allows these devices to be interconnected without major changes affecting their functionality.

4.2.1. Description of External Environment and Practical Scenarios

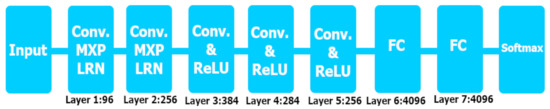

The algorithm used at the moment relies strictly on convolutional DNNs (Deep Neural Networks) to achieve the classification (see Figure 8). The model is based on AlexNet, a classifier trained on a much larger high-resolution training set, whose development used several efficient graphics processing units for convolution and parallel processing operations [74,75]. The basic nonlinearity through which this network operates is the rectified linear unit (ReLU), expressed by Equation (3):

$$f(x) = \max(0, x) \quad (3)$$

Figure 8.

The architecture of AlexNet: convolution, max-pooling, LRN (local response normalization), and fully connected (FC) layers.

Unlike the previous equation, the model in Equation (4) is the standard neuron model, which provides much more efficient and faster results when experimenting on large data packets.

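Therefore, this type of neuron does not require normalization of the input parameters to prevent saturation. To realize the local normalization scheme, the activity of the neuron, $a^{i}_{x,y}$, is calculated by applying kernel i at position (x, y) and then applying the ReLU nonlinearity; the normalized response of this activity, $b^{i}_{x,y}$, is given by Equation (5):

$$b^{i}_{x,y} = \frac{a^{i}_{x,y}}{\left(k + \alpha \sum_{j=\max(0,\, i-n/2)}^{\min(N-1,\, i+n/2)} \left(a^{j}_{x,y}\right)^{2}\right)^{\beta}} \quad (5)$$

where n is the number of adjacent kernel maps included in the sum and k, α, and β are hyperparameters of the normalization.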

The role of Equation (5) is to sum over the adjacent kernel maps at the same spatial position, and N is the total number of kernels in the layer. Unlike the standard model of the algorithm, the model used here has a much smaller number of classes, limited to those of interest such as cars, buses, and bicycles [76,77]. In this direction, there are studies and niche systems, such as Cyber-Physical systems, dedicated to the analysis of all types of bicycles, mopeds, or other devices in this category.
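The ReLU nonlinearity and the local normalization across adjacent kernel maps can be sketched in a few lines. The default values n = 5, k = 2, α = 10⁻⁴, and β = 0.75 are assumptions taken from the original AlexNet description, not from the configuration used in this work, and the function names are hypothetical.

```python
def relu(x):
    """Rectified linear unit: passes positive inputs, clips the rest to zero."""
    return max(0.0, x)


def local_response_norm(activations, n=5, k=2.0, alpha=1e-4, beta=0.75):
    """Normalise ReLU activations across kernel maps at one position (x, y).

    activations[i] is the ReLU output of kernel i at that position.
    """
    total = len(activations)  # N, the number of kernels in the layer
    normalised = []
    for i, a_i in enumerate(activations):
        lo = max(0, i - n // 2)
        hi = min(total - 1, i + n // 2)
        # Sum of squared activities over the adjacent kernel maps.
        square_sum = sum(activations[j] ** 2 for j in range(lo, hi + 1))
        normalised.append(a_i / (k + alpha * square_sum) ** beta)
    return normalised


raw = [-1.0, 0.5, 3.0, 2.0]
responses = local_response_norm([relu(v) for v in raw])
```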

We can say that vehicle detection plays an important role regardless of the area of application, civilian or military, as well as in the management and planning of urban traffic. Incorporating all the basic functions into a feature dedicated to detection helps to process and create image analysis tools according to need [78,79,80].

4.2.2. Experimental Evaluation and Experimental Results

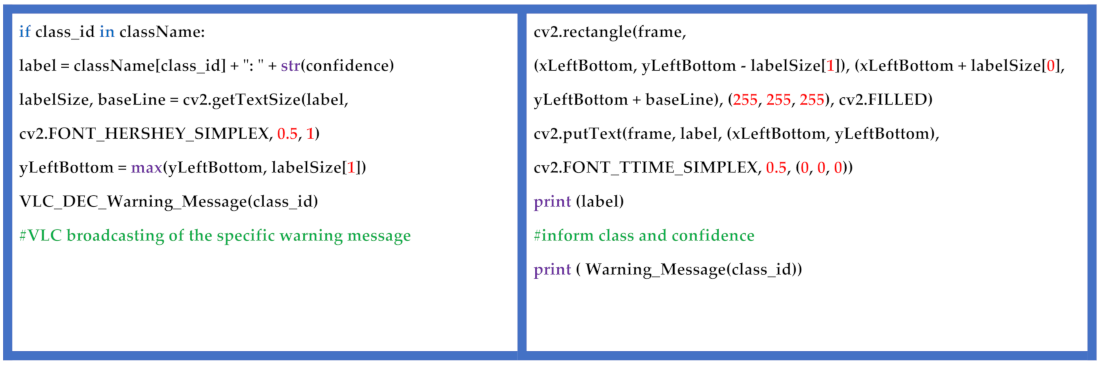

The simulations were modeled on the same scenarios as for the previous module, except that the detection method and the amount of information differed. In the simulations, we obtain simultaneous detections of several elements of interest that belong to the category of those contributing to safety in a traffic management system. Visual tracking has to deal with streams of non-stationary images that change over time. Most existing algorithms can track objects in controlled environments, but in areas with significant variations or fluctuating lighting they do not perform as expected. This is caused by the use of fixed appearance models that do not capture further details and characteristics, which limits the range of detectable appearances. Detection based on incremental algorithms includes several components that analyze the sample correctly and extend the model based on the basic observations. These adjustments demonstrate the capacity for consistent improvements under changes in position, lighting, and area [81,82].

As Figure 9 shows, several objects can be identified at the same time, and the information is subsequently transmitted to the car interface or the traffic management system. The detection process acts independently as a trigger for the transmission and is maintained throughout the process. Once the shape of a class is detected according to the code sequence presented, a box highlights each detected element, and the message specific to the detected class is prepared and then sent to a prioritization list. According to the experimental data, the classes mentioned in the detection process have a maximum inference time of between 55 and 120 milliseconds, as presented in Table 2 [83].
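
The hand-off from detection to the prioritization list could look like the sketch below. The priority values, the message text, and the function name are hypothetical and are not taken from the system described here; the sketch only illustrates ordering class-specific messages so that the most urgent one is processed first.

```python
import heapq

# Hypothetical priorities (lower value = more urgent); assumed for illustration.
CLASS_PRIORITY = {"pedestrian": 0, "bicycle": 1, "car": 2, "bus": 2}


def build_priority_list(detections):
    """Turn (label, box) detections into messages ordered by priority.

    Ties between equal priorities are broken by detection order.
    """
    heap = []
    for seq, (label, box) in enumerate(detections):
        priority = CLASS_PRIORITY.get(label, 99)  # unknown classes go last
        message = f"{label} detected"
        heapq.heappush(heap, (priority, seq, label, box, message))
    return [heapq.heappop(heap) for _ in range(len(heap))]


alerts = build_priority_list([
    ("car", (0, 0, 10, 10)),
    ("pedestrian", (5, 5, 8, 9)),
    ("bus", (1, 1, 4, 4)),
])
```

Including the sequence number in each entry keeps the ordering stable and avoids comparing boxes or labels when two priorities are equal.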

Figure 9.

Object detection results for external environments, with inference time and detection percentages.

Table 2.

Detection accuracy details, total blocks decoded, frames and objects detected from total identified elements existing in the image.

The method detects objects in images and video sequences with a deep neural network and discretizes the output space of bounding boxes into a set of default boxes. These boxes cover different scales at each location of the feature map [55]. When the prediction is made, the network provides results for each object category that matches the shape of the model. A graphical simulation illustrates the results obtained in the presented scenarios (Figure 10).
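
A minimal sketch of how a set of default boxes can be laid out over a feature map is given below. It follows the general single-shot detector idea rather than the exact configuration used here; the scale and the aspect ratios are illustrative assumptions, and the function name is hypothetical.

```python
import math


def default_boxes(fmap_size, scale, aspect_ratios=(1.0, 2.0, 0.5)):
    """Generate default boxes (cx, cy, w, h) in normalized image coordinates.

    One box per aspect ratio is centred on every cell of a square
    fmap_size x fmap_size feature map.
    """
    boxes = []
    for row in range(fmap_size):
        for col in range(fmap_size):
            cx = (col + 0.5) / fmap_size
            cy = (row + 0.5) / fmap_size
            for ar in aspect_ratios:
                w = scale * math.sqrt(ar)
                h = scale / math.sqrt(ar)
                boxes.append((cx, cy, w, h))
    return boxes


# A coarse map with large boxes and a finer map with smaller boxes.
coarse = default_boxes(fmap_size=2, scale=0.5)
fine = default_boxes(fmap_size=4, scale=0.25)
```

Coarser feature maps with larger scales cover large objects, while finer maps with smaller scales cover small ones.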

Figure 10.

Graphic analysis based on the table on detection accuracy details, total blocks decoded, frames, and objects detected from total identified elements existing in the image.

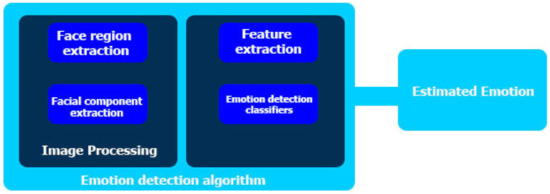

4.3. Description Emotion Drivers Setup and Practical Scenarios

According to the theory and literature, the algorithm used combines classification and detection through position regression over the processed area: it analyzes the area and the detection frame and turns everything into a regression problem. The whole detection problem is thus handled by a single convolutional neural network, which concatenates the output data with the inputs from the original image by parsing the location of the features. The detection frames of the faces therefore depend on the received coordinates and outline the final prediction frame for all cells in the created grid. The coordinate values of the anchor boxes are randomly reset and the network is fully examined to obtain a precise analysis of the relationship between the detection distance and the predictability area, as opposed to the standard defined by Equation (6), where IOU (intersection over union) is the ratio between the intersection of the predicted and real areas and their union.
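
The IOU measure mentioned above can be computed as in the following sketch. The corner-based box format (x1, y1, x2, y2) and the function name are assumptions made for the example.

```python
def iou(box_a, box_b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ax1, ay1, ax2, ay2 = box_a
    bx1, by1, bx2, by2 = box_b
    # Width and height of the overlap, clamped to zero when the boxes are disjoint.
    inter_w = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0.0, min(ay2, by2) - max(ay1, by1))
    inter = inter_w * inter_h
    union = (ax2 - ax1) * (ay2 - ay1) + (bx2 - bx1) * (by2 - by1) - inter
    return inter / union if union > 0 else 0.0


overlap = iou((0, 0, 2, 2), (1, 1, 3, 3))
```

In this example the two 2 x 2 boxes share a 1 x 1 region, so the ratio is 1 / (4 + 4 - 1).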

According to the literature, a qualitative and conclusive analysis and detection process must follow certain specific steps in terms of the interactions, given their maximum number and the speed of each conjunction, v = 1, and uses the K-means algorithm to group the data and obtain the central point m of each cluster. To calculate the degree of individual sharing, the elements must be grouped in a common function, with the distance calculated by Equation (6). The shorter the distance, the higher the shared value. If the degree of sharing of each element is taken into account, the degree of matching can be adjusted to analyze each zonal conjunction by Equation (7):
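
The K-means grouping step can be sketched as below, under the assumption (common for anchor-box clustering, but not confirmed by the text) that the distance between a box and a cluster centre is 1 - IOU of their widths and heights. The initial centres are passed in explicitly so that the example is deterministic; the function names are hypothetical.

```python
def wh_iou(a, b):
    """IOU of two boxes given as (width, height) and aligned at one corner."""
    inter = min(a[0], b[0]) * min(a[1], b[1])
    return inter / (a[0] * a[1] + b[0] * b[1] - inter)


def kmeans_anchors(boxes, centroids, iterations=10):
    """Cluster (width, height) pairs using the assumed distance d = 1 - IOU."""
    centroids = list(centroids)
    for _ in range(iterations):
        clusters = [[] for _ in centroids]
        for box in boxes:
            # A shorter distance means a higher shared value.
            nearest = min(range(len(centroids)),
                          key=lambda i: 1.0 - wh_iou(box, centroids[i]))
            clusters[nearest].append(box)
        updated = []
        for members, old in zip(clusters, centroids):
            if members:
                mean_w = sum(w for w, _ in members) / len(members)
                mean_h = sum(h for _, h in members) / len(members)
                updated.append((mean_w, mean_h))
            else:
                updated.append(old)  # keep the centre of an empty cluster
        if updated == centroids:
            break  # converged
        centroids = updated
    return centroids


anchors = kmeans_anchors(
    boxes=[(10, 10), (12, 12), (50, 60), (52, 58)],
    centroids=[(10, 10), (50, 60)],
)
```

Each returned pair is the central point of one cluster and can serve as an anchor box size.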

4.3.1. Description of Emotion, Drivers Setup and Practical Scenarios

The next step consists of sorting the results in ascending order of their degree of matching with the analysed characteristics and comparing the final elements of analysis with the first n individuals analysed, where n < m. This gives an operation that performs proportional selection and then crossover, calculating the variation uniformly in the form P(t). By combining the n and a individuals scanned and permuted in memory, the insertion is achieved through a new clustering process of the form n + t. If the physical form of people and groups is compared according to the degree of fit, a filtering function can also be implemented. The previous instance is repeated and Formula (7) is applied to update the memory by an evolutionary calculation of the type e = e + 1 until a much larger number of iterations is reached, which tends to yield a much smaller number of features, but of higher quality.

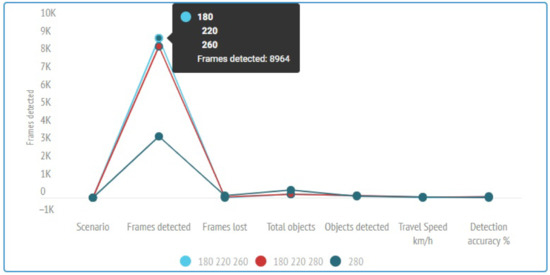

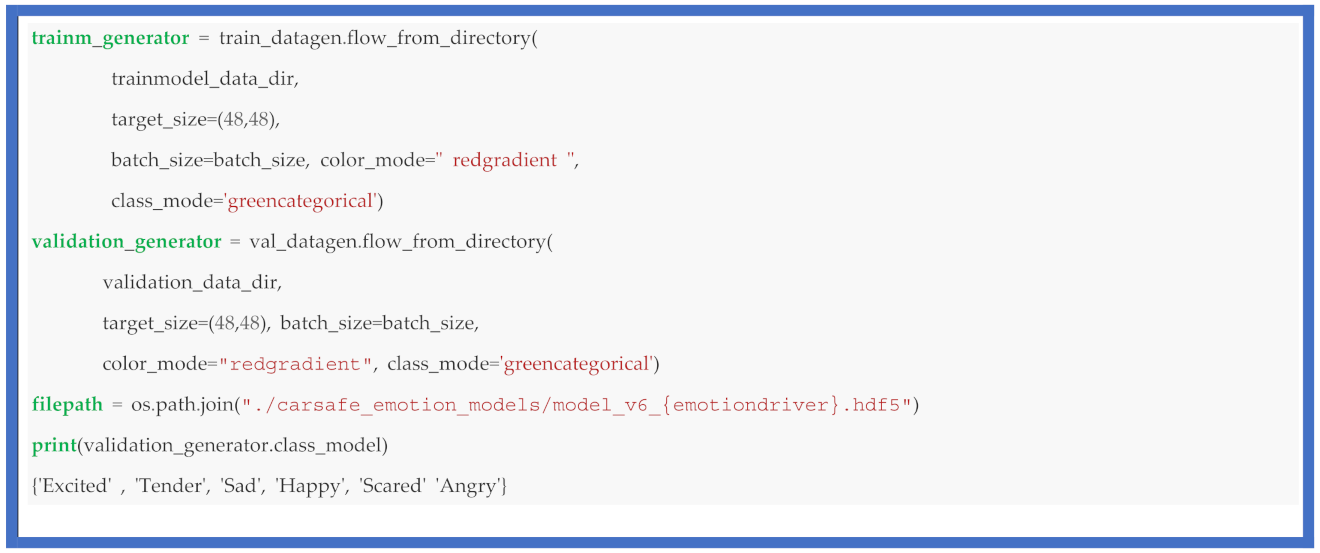

Concatenating information at the cluster level and improving existing elements by outlining the best matches and similarities yields a detection box with an ideal IOU. The direct benefits of the algorithm come from its 53 convolutional layers, which produce three feature maps at different scales that carry both standard semantic information and high-level characteristics, as shown in Figure 11 [84,85]. According to studies, about six emotional states have been identified that can change the state of drivers and lead to unforeseen events in traffic. Depending on the area and the features of the population, the most common states outlined as models to be identified are: excited, tender, sad, happy, scared, and angry.

Figure 11.

Schematic of the processing algorithm for driver emotion detection.

4.3.2. Description of Emotions, Drivers Setup and Practical Scenarios

The extractions were performed by the detection module, using detection and processing scales with four points of complex analysis. For larger images, feature sampling is combined across scales and anchor boxes, giving a predictive analysis of the next target to be analysed, with standardization scales and feature grids.

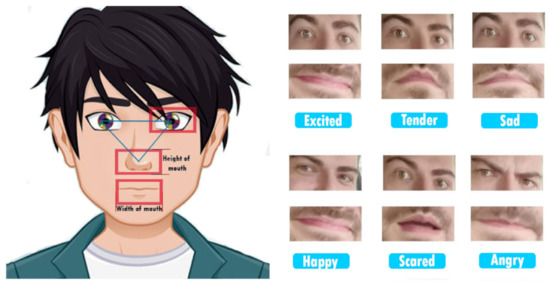

Because emotional states are externalized through facial expressions, an in-depth and detailed analysis is needed, as shown in Figure 12: the areas and points of interest formed by the positions of the mouth, nose, and eyes are measured to extract the characteristic vector, from which a final profile is outlined. Sampling through the 32 layers of the processing field yields only superficial filtering information, and the losses from convolution and sampling across the traversed layers can distort the results. Several anchor boxes and predictive analysis address these issues through standardization scales and boundary grids. Dividing the face into several regions is thus a viable solution: it identifies the oral and ocular regions, which contain a large number of features, delimits them from the auxiliary region, and extracts information about shape, contour, geometry, and facial movements. The data are augmented and the training elements are created through iterations in which the reference model and the created one are compared to identify similarities and to report the degree of validation of the characteristics that match an emotional state in the processed models. When this vectorization process does not return a valid response, a set of confusion matrices is produced that traces the whole process, so that the iterations can be resumed and a new set of comparative data created. The identified and classified areas were extracted and transmitted in lists that were subsequently reconsolidated and revalidated together with the generated classes, new instances, and verification of the data in the introduced set.
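
One simple way to turn the positions of the mouth, nose, and eyes into a characteristic vector is sketched below. It is only an illustration of the idea: the landmark names, the use of pairwise distances, and the normalization by the distance between the eyes are assumptions, not the feature extraction used in the system.

```python
import math
from itertools import combinations


def geometric_features(landmarks):
    """Build a scale-invariant feature vector from facial landmarks.

    landmarks maps a name to an (x, y) point and must contain
    'left_eye' and 'right_eye' (hypothetical names for this sketch).
    """
    scale = math.dist(landmarks["left_eye"], landmarks["right_eye"])
    if scale == 0:
        raise ValueError("eye landmarks coincide")
    names = sorted(landmarks)  # fixed order so vectors are comparable
    return [math.dist(landmarks[a], landmarks[b]) / scale
            for a, b in combinations(names, 2)]


features = geometric_features({
    "left_eye": (0, 0),
    "right_eye": (4, 0),
    "nose": (2, 3),
    "mouth": (2, 6),
})
```

Dividing by the distance between the eyes makes the vector independent of how large the face appears in the frame.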

Figure 12.

Position of features in eye and mouth region and facial expressions that can reflect mental state.

A classification report is presented with precision, recall, and support for each class. The report highlights a degree of confusion for some emotional states that were either not identified or were validated as non-compliant, which counts as an erroneous answer. The system is under continuous development because facial expressions must be identified from multiple variables of the human face, such as color, posture, and orientation. Therefore, the iterations dedicated to facial movements and to the muscles under the eyes and around the nose were increased for further classification by comparing the characteristics with the set of training values. An important aspect that must not be neglected is the naturalness of each emotion shown by the subject, especially in warning and road safety systems in which reactions are also involved. To analyze facial emotions robustly under natural conditions, the model uses nonlinear classification. The discriminative capacity of the learning process is thus combined with a precise classification in natural environments, using a local spatio-temporal descriptor [86,87].
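
The quantities in such a report can be computed from true and predicted labels as in the sketch below. The label sequences are made-up toy values chosen only to show the calculation; they are not results from the experiments, and the function name is hypothetical.

```python
def classification_report(y_true, y_pred):
    """Per-class precision, recall, and support from paired label lists."""
    report = {}
    for label in sorted(set(y_true) | set(y_pred)):
        pairs = list(zip(y_true, y_pred))
        tp = sum(1 for t, p in pairs if t == label and p == label)
        fp = sum(1 for t, p in pairs if t != label and p == label)
        fn = sum(1 for t, p in pairs if t == label and p != label)
        report[label] = {
            "precision": tp / (tp + fp) if tp + fp else 0.0,
            "recall": tp / (tp + fn) if tp + fn else 0.0,
            "support": tp + fn,  # number of true samples of this class
        }
    return report


# Toy labels only: one 'sad' frame is confused with 'tender'.
toy_report = classification_report(
    y_true=["sad", "sad", "tender", "happy"],
    y_pred=["sad", "tender", "tender", "happy"],
)
```

A confusion between two states lowers the recall of the true class and the precision of the predicted class, which is how the report exposes confused emotional states.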

These negative events originate in facial features and in expressions that are not pervasive in the data. The final conversion and extraction also used image smoothing and filtering to eliminate multiple detections across different frames; the results are presented in Table 3.
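
One possible filter for suppressing spurious per-frame detections is a sliding majority vote, sketched below. The text does not specify which filter was used, so this is a swapped-in illustration; the window length and the function name are assumptions.

```python
from collections import Counter, deque


def smooth_labels(frame_labels, window=5):
    """Replace each frame's label by the most frequent label in a sliding window."""
    recent = deque(maxlen=window)  # holds the last `window` labels
    smoothed = []
    for label in frame_labels:
        recent.append(label)
        smoothed.append(Counter(recent).most_common(1)[0][0])
    return smoothed


stable = smooth_labels(["sad", "sad", "happy", "sad", "sad"], window=3)
```

Here the isolated "happy" frame is outvoted by its neighbours, so a single misdetection does not change the reported state.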

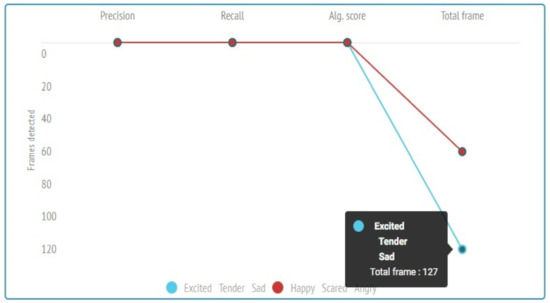

Table 3.

Identification accuracy details, total blocks decoded, precision for emotional states.

The previously described recognition problems of the model have been addressed by repeating the detection instances. The models have been standardized, but some facial expressions still differ in their final presentation. The final corrections and identified characteristics do not guarantee that the extracted vector contains a single emotion specific to a certain condition. Because they combine a convolutional neural network with a deep neural network, the developed models can approach facial expressions from several directions and states. The deep neural architectures used expose up to six hidden layers in the detection process. The detection and extraction of emotional traits are also based on active appearance models and on the coding of facial actions described by Ekman. The final results show the recognition accuracy achieved with the proposed methods, illustrated graphically in Figure 13 on the basis of the data obtained [88,89].

Figure 13.

Graphic analysis based on the table of detection accuracy details, total blocks decoded, precision for emotion states.

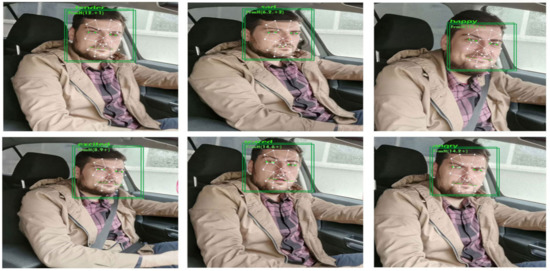

Facial grimaces and uncontrolled reactions can be confusing and lead to invalid or non-compliant identification. A first conclusive data set was extracted from several processed sequences, and all six emotional states were identified; repeated iterations were needed for the tender and sad states, which share some elements and characteristics that can be confused in facial analysis (examples are shown in Figure 14).

Figure 14.

Experimental results on the detection of emotional states.

Human states and emotions are feelings and manifestations of the human body in different conditions and situations, and they depend on the personality of each person. This underlines the complexity of the phenomenon and shows that the pattern characteristics of a standard model may differ from those identified in other people. Such differences complicate the detection process and decrease accuracy, so classification requires a larger number of subjects to outline a map of data sets and features. Following the filtering procedures, the extracted facial elements were used to build a facial histogram and to compose the feature vectors. Identifying the similarities between the training sets and the processed frames led to a comparative classification that supports an extensive calculation of the recognition rate and provides a valid data set and an automatically usable response.

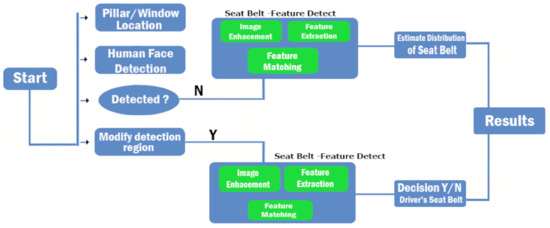

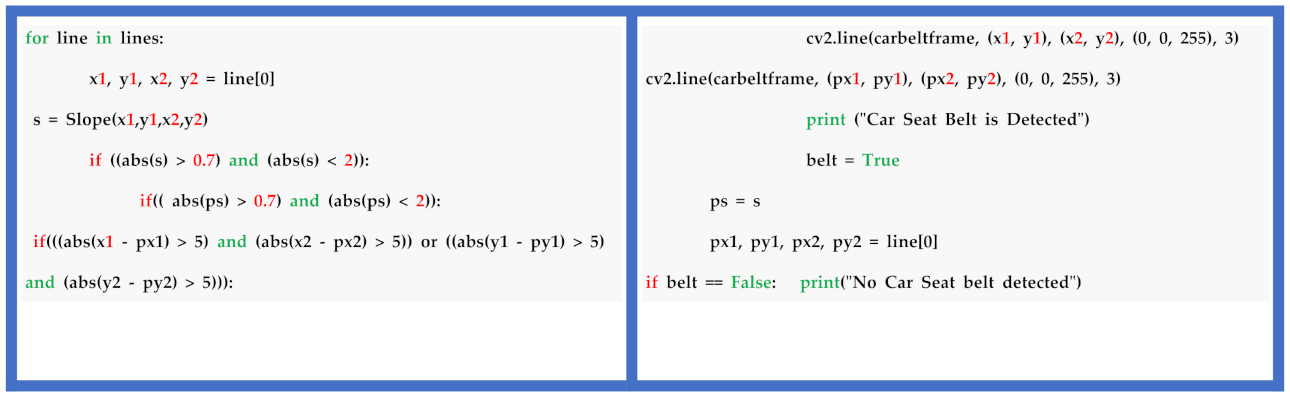

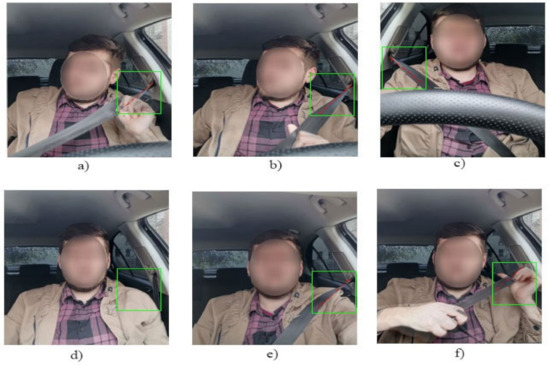

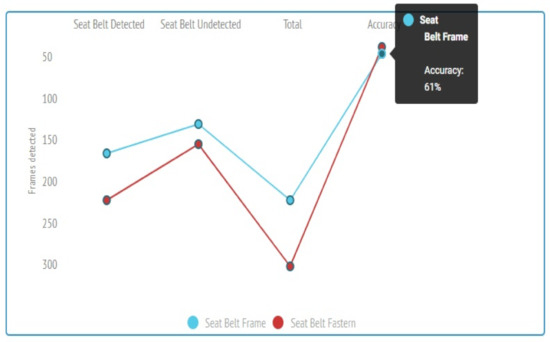

4.4. Description of Seatbelt Setup and Practical Scenarios