Canopy Top, Height and Photosynthetic Pigment Estimation Using Parrot Sequoia Multispectral Imagery and the Unmanned Aerial Vehicle (UAV)

Abstract

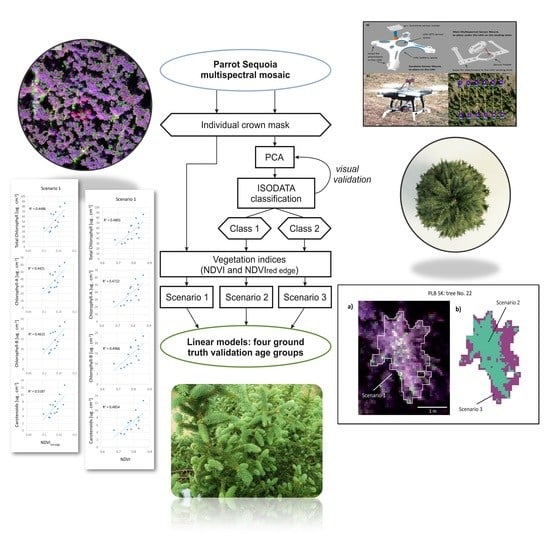

1. Introduction

- Accurate delineation of individual tree extents (crown delineation) and derivation of further parameters, such as tree top and tree height, from the UAV-based multispectral data.

- Testing if a linear relationship can be established between selected vegetation indices (NDVI and NDVIred edge) that are derived for individual trees and the corresponding ground truth (e.g., biochemically assessed needle photosynthetic pigment contents).

- Testing whether the choice of needle age used as ground truth affects the validity of the linear models.

- Testing if the tree crown light conditions affect the validity of the linear models.

2. Materials and Methods

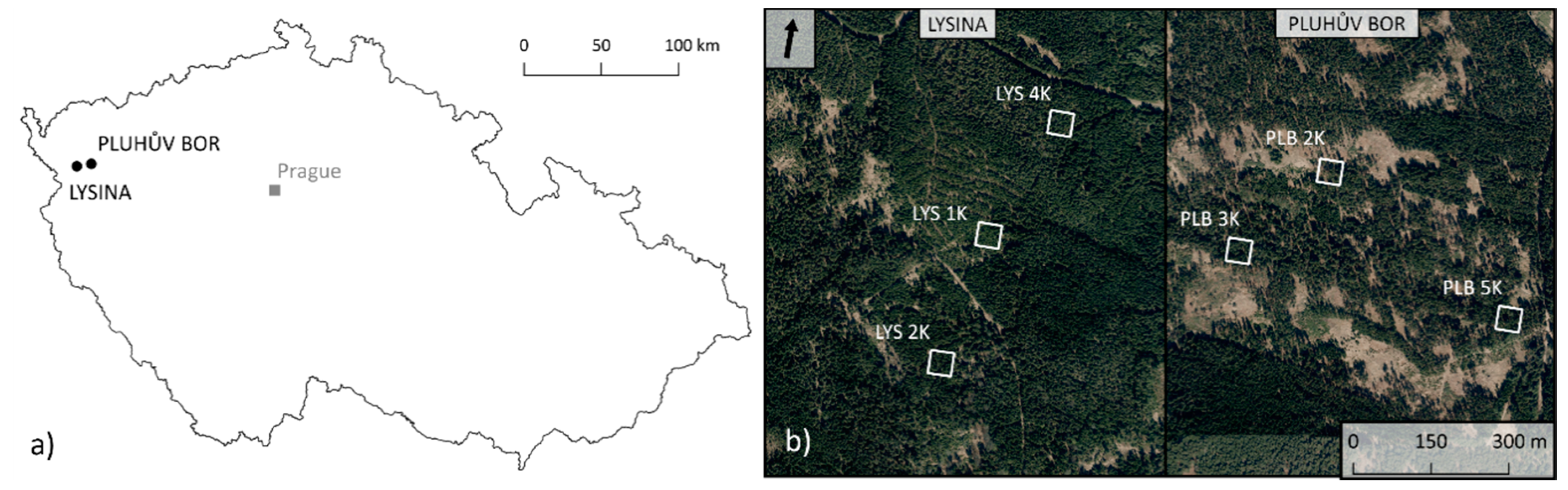

2.1. Test Sites

2.2. In-situ Ground Truth

2.3. UAV Data Acquisition

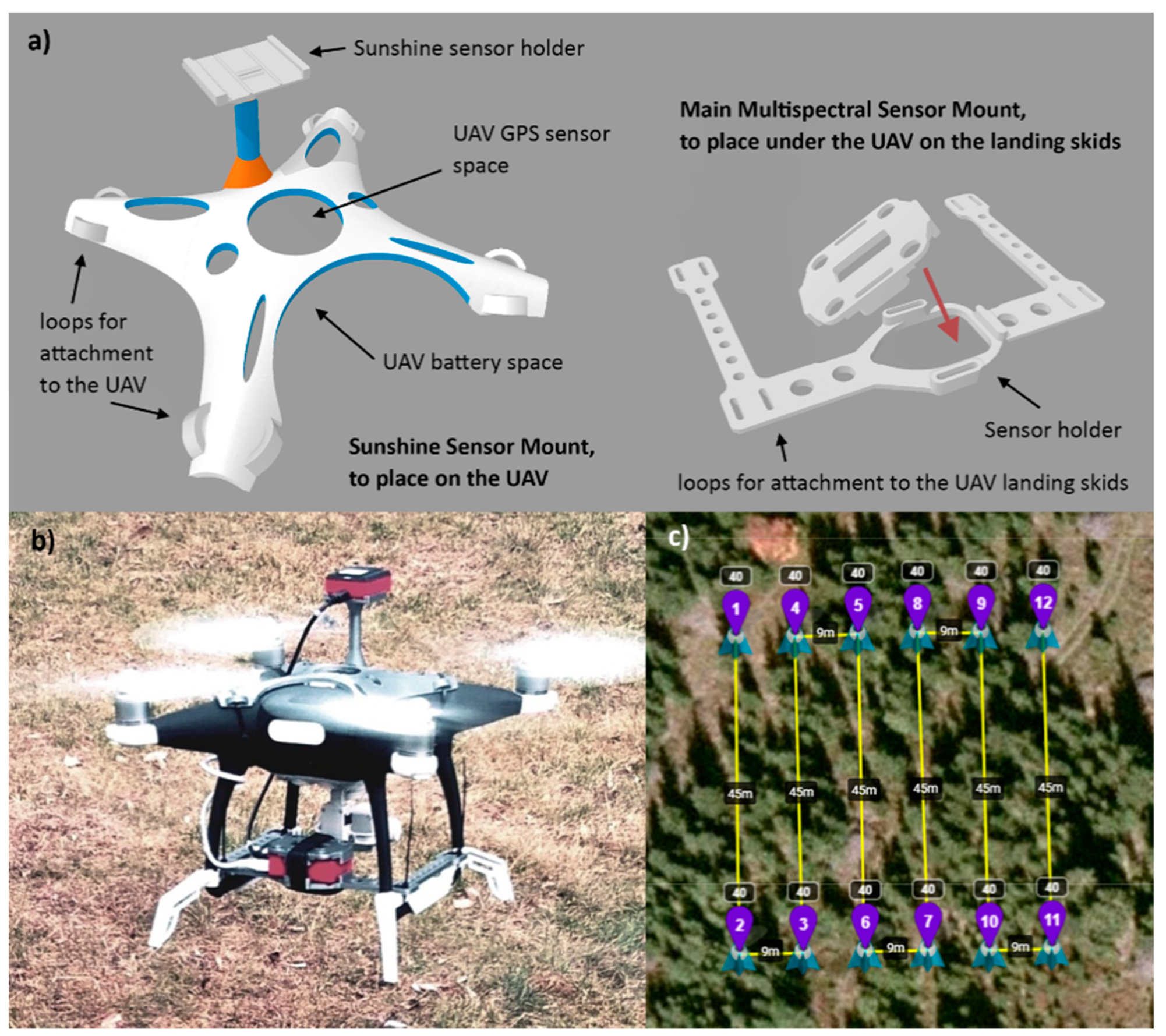

2.3.1. Equipment

2.3.2. Data Acquisition

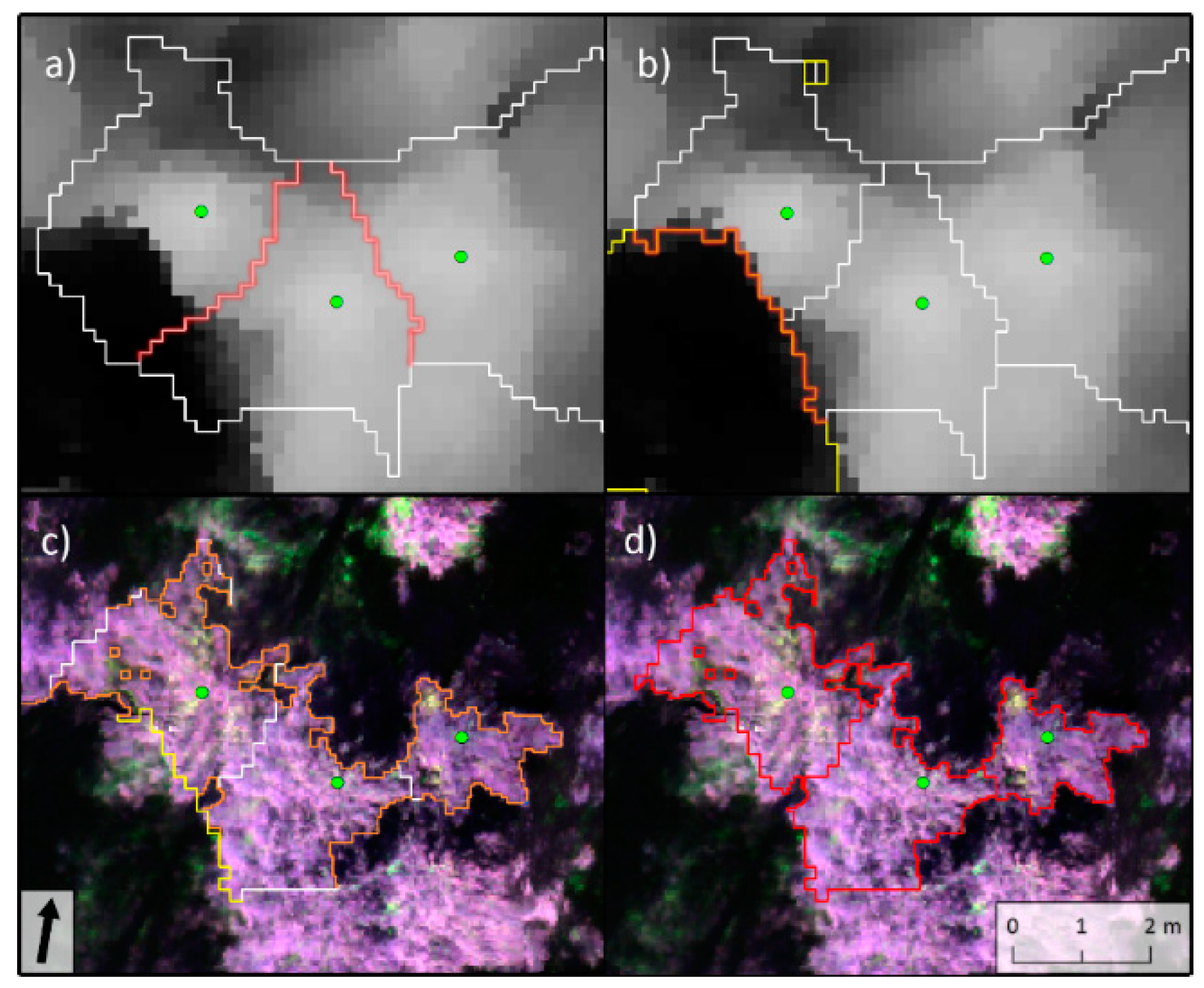

2.4. Tree Height, Crown and Top Detection

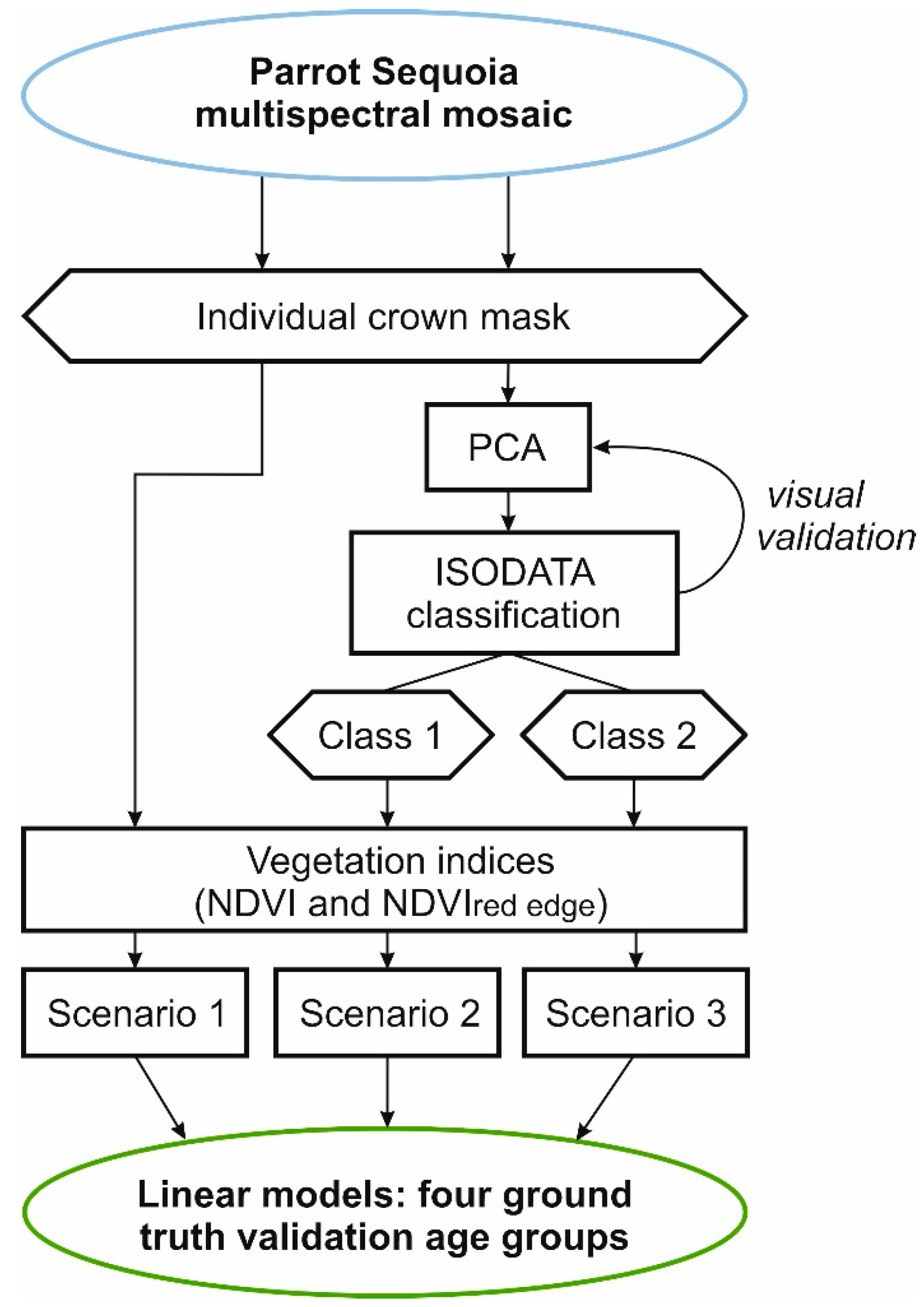
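The methodological text of this section did not survive extraction. As a minimal sketch, assuming the common approach of a canopy height model (CHM = DSM minus a terrain model) followed by a focal-maximum filter and a minimum-height mask, tree tops can be located as CHM pixels that equal the maximum of their neighbourhood. The function names, the 3 × 3 window and the 2 m threshold are hypothetical illustration values, not the authors' settings. Crown delineation (for example, watershed segmentation of the CHM) is not shown.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def canopy_height_model(dsm: np.ndarray, dtm: np.ndarray) -> np.ndarray:
    """CHM = DSM - DTM; negative values (noise below the terrain) are clipped to 0."""
    return np.clip(dsm - dtm, 0.0, None)


def detect_tree_tops(chm: np.ndarray, window: int = 3, min_height: float = 2.0) -> np.ndarray:
    """Return (row, col) indices of CHM pixels that equal the maximum of their
    window x window neighbourhood (focal maximum) and exceed min_height.

    window and min_height are illustrative defaults, not the authors' settings.
    Flat plateaus can yield several adjacent 'tops'.
    """
    if window < 1 or window % 2 == 0:
        raise ValueError("window must be a positive odd integer")
    half = window // 2
    # Pad with -inf so border pixels are compared only with real neighbours.
    padded = np.pad(chm.astype(float), half, mode="constant", constant_values=-np.inf)
    # View of shape (rows, cols, window, window) -> focal maximum per pixel.
    focal_max = sliding_window_view(padded, (window, window)).max(axis=(-2, -1))
    # The height mask discards ground and low vegetation.
    is_top = (chm == focal_max) & (chm > min_height)
    return np.argwhere(is_top)


chm = np.zeros((5, 5))
chm[1, 1] = 10.0  # a tall tree
chm[3, 3] = 8.0   # a second tree
chm[3, 4] = 1.0   # low shrub, below the height mask
print(detect_tree_tops(chm))
```

The window size controls how close two detected tops may be, so in dense young stands a window that is too large merges neighbouring trees into one top.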

2.5. Multispectral Data Processing

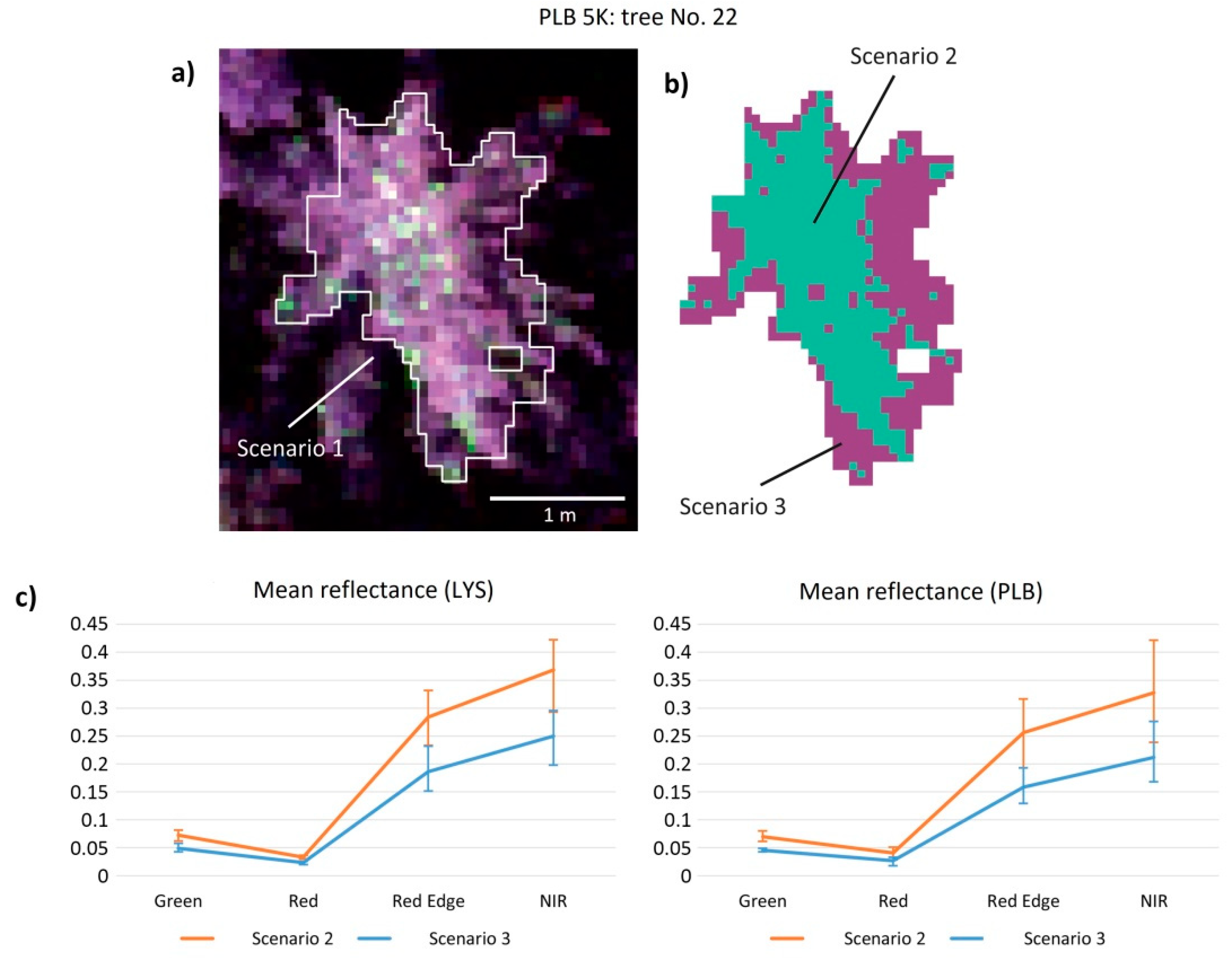

- Scenario 1: all of the pixels representing the whole tree crown have been averaged and used for further statistical analysis.

- Scenario 2: pixels representing the higher-illumination top part of the crown have been averaged and used for further statistical analysis.

- Scenario 3: pixels that represent the lower-illumination part of the crown have been averaged and used for further statistical analysis.

- all needles included

- first-year needles included

- second-year needles included

- mixed sample of fourth-year and older needles (hereinafter referred to as fourth-year needles for simplicity)
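The three crown-averaging scenarios listed above can be sketched as follows. The sketch assumes each crown is available as a boolean pixel mask and that the higher-illumination part of the crown is given by a second boolean mask. How the authors assigned pixels to illumination classes is not recoverable from this text, so `illuminated` is a hypothetical input, as are the function and key names.

```python
import numpy as np


def _safe_mean(values: np.ndarray) -> float:
    """Mean of the selected pixels, or NaN if the selection is empty."""
    return float(values.mean()) if values.size else float("nan")


def crown_scenario_means(vi: np.ndarray, crown: np.ndarray, illuminated: np.ndarray) -> dict:
    """Average a per-pixel vegetation index over one crown under three scenarios.

    vi          -- vegetation-index raster (e.g. NDVI)
    crown       -- boolean mask of the delineated crown
    illuminated -- hypothetical boolean mask of the higher-illumination (top) part
    """
    crown = crown.astype(bool)
    illuminated = illuminated.astype(bool)
    return {
        "scenario_1": _safe_mean(vi[crown]),                 # whole crown
        "scenario_2": _safe_mean(vi[crown & illuminated]),   # higher-illumination top part
        "scenario_3": _safe_mean(vi[crown & ~illuminated]),  # lower-illumination part
    }


vi = np.array([[0.80, 0.90],
               [0.70, 0.60]])
crown = np.ones_like(vi, dtype=bool)
illuminated = np.array([[True, True],
                        [False, False]])
print(crown_scenario_means(vi, crown, illuminated))
```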

3. Results

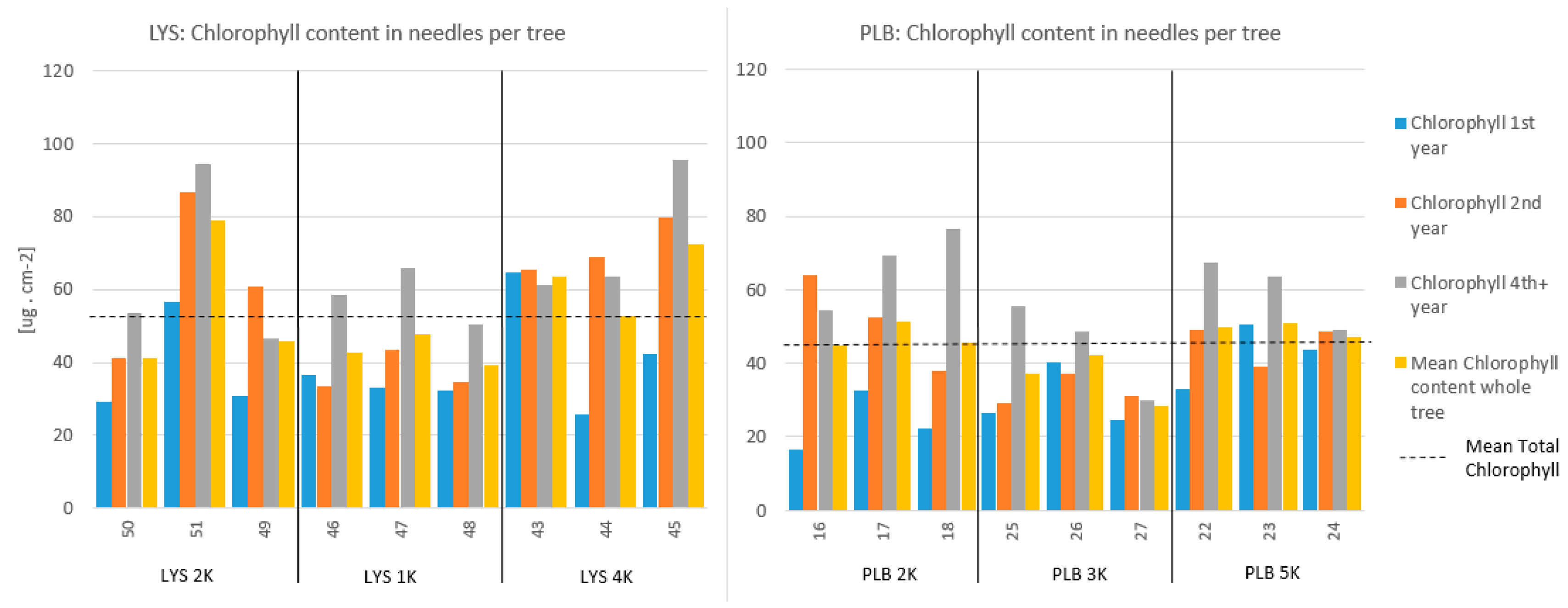

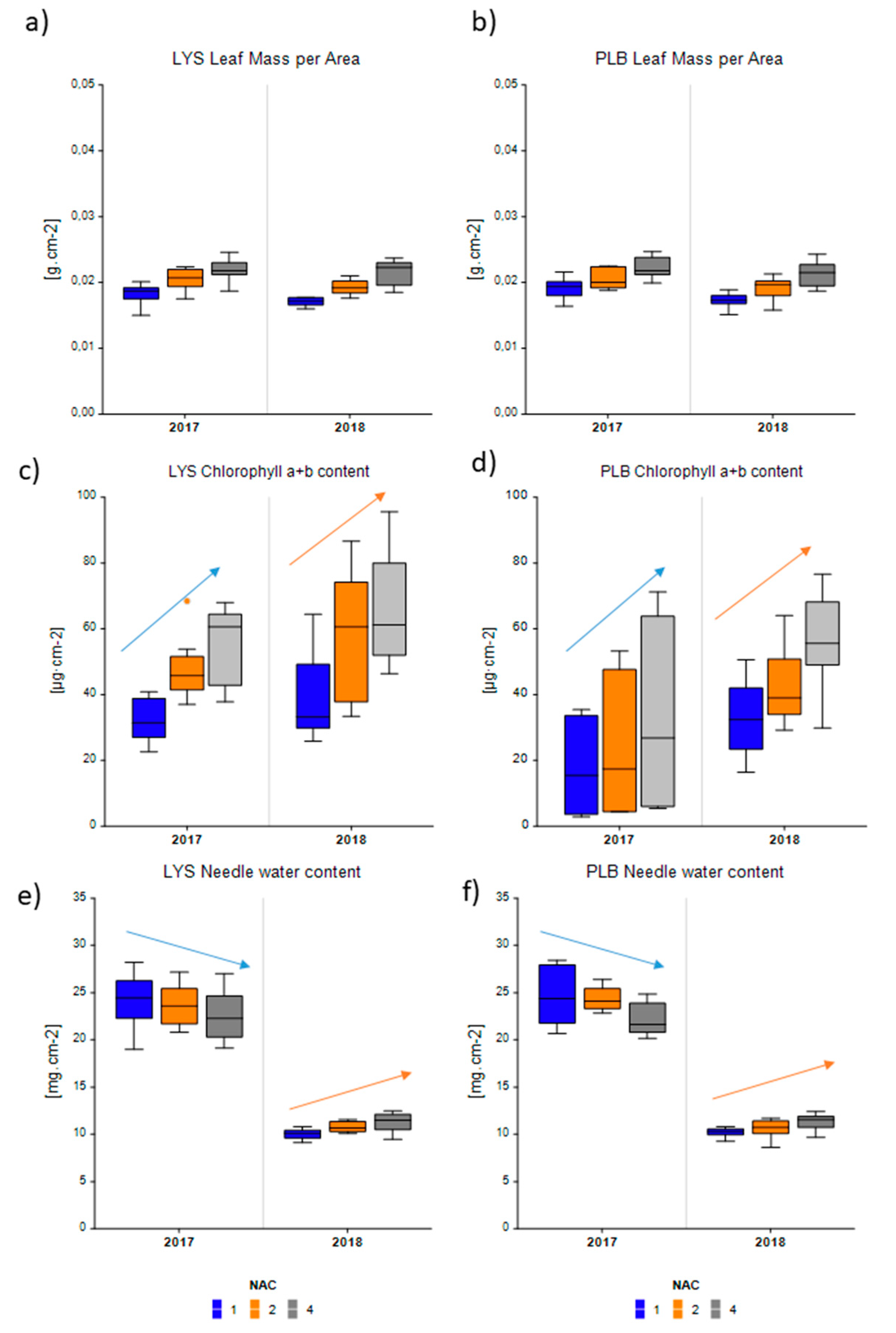

3.1. Photosynthetic Pigments

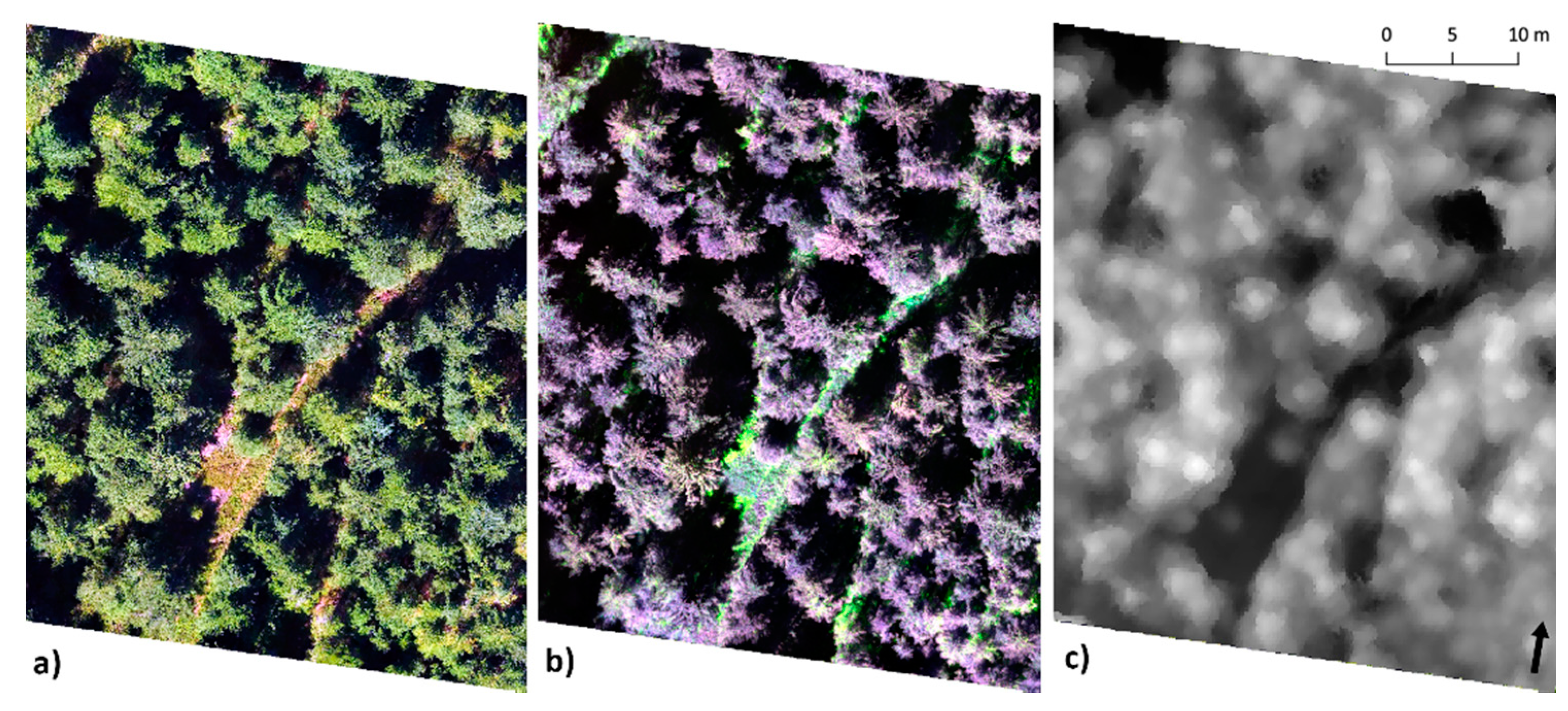

3.2. UAV Photogrammetric Products

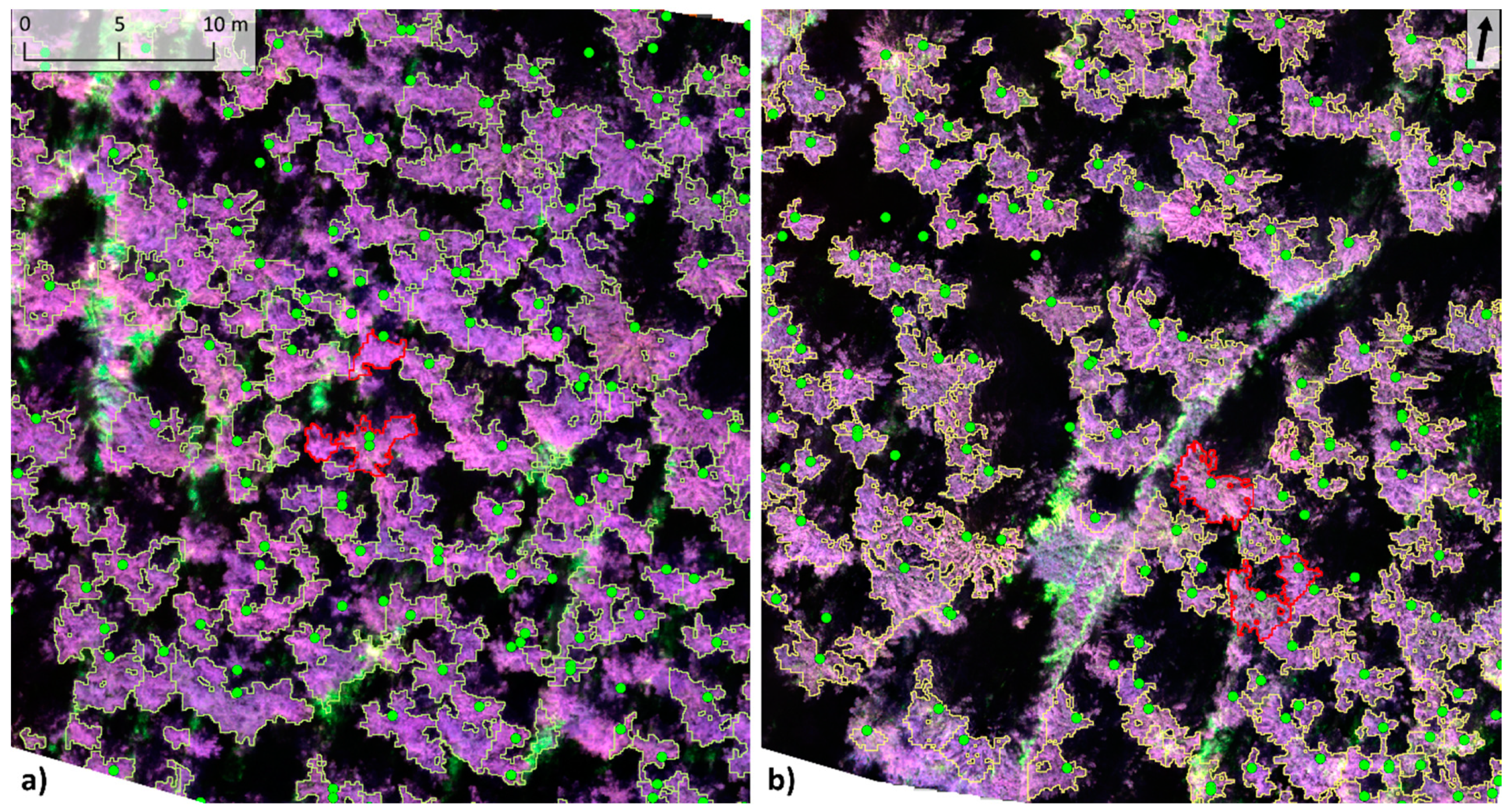

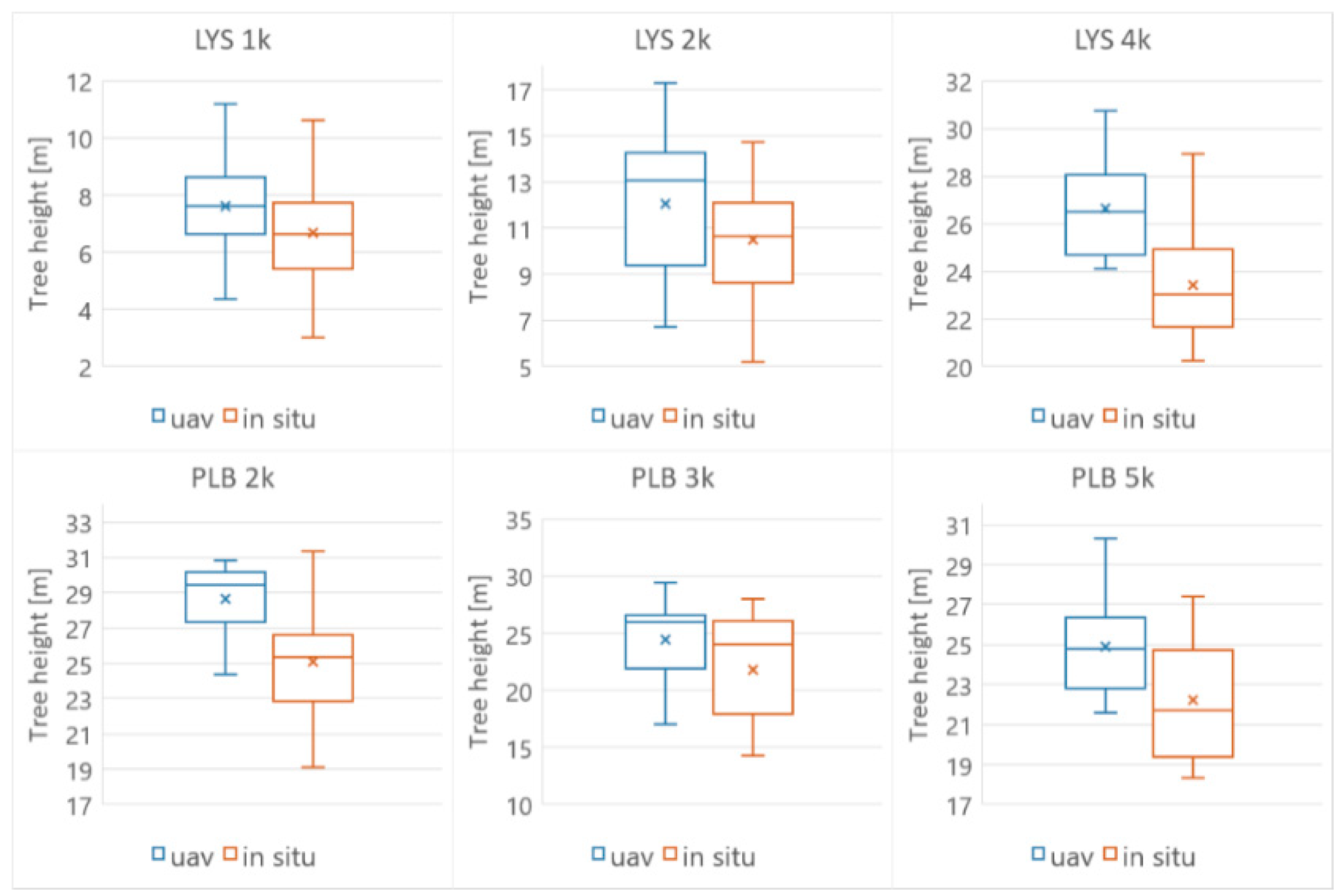

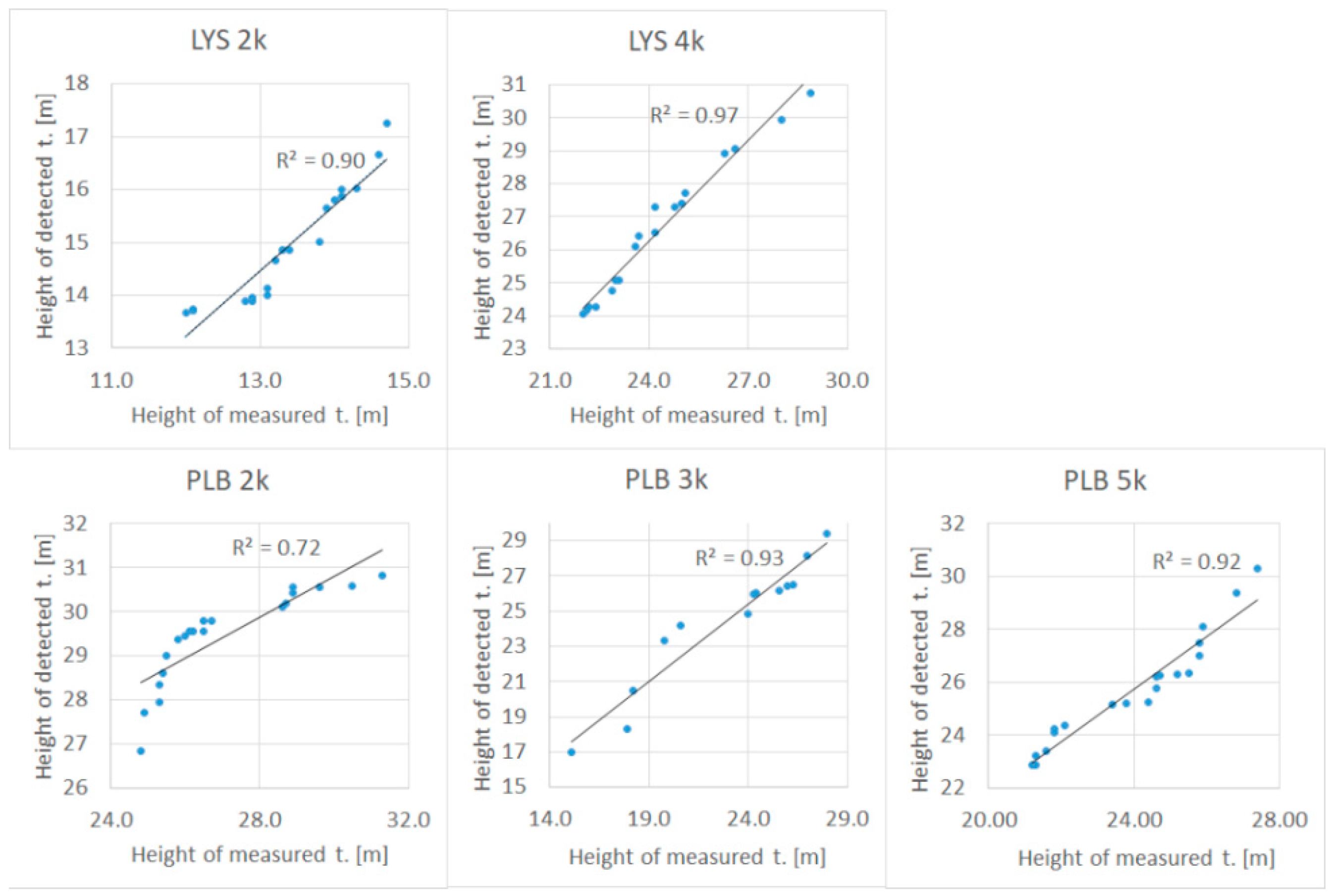

3.3. UAV Tree Height, Crown and Top Detection

3.4. Tree Crown Illumination Classes

3.5. Relationship between Selected Vegetation Indexes and the Ground Truth

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A


| Site | Bedrock | Soils | Elevation (m a.s.l.) | Forest Age Range (years) | Spruce Forest (ha) | Broadleaf Forest (ha) | Non-Forested Area (ha) |
|---|---|---|---|---|---|---|---|
| LYS | Granite | Cambisols, Podzols | 880 | 12–53 | 27 | 0.0 | 0.4 |
| PLB | Serpentinite | Magnesic Cambisols, Stagnic–Magnesic Cambisols, Magnesic Gleysols | 755 | 41–129 | 18 | 0.0 | 4.0 |

| Test Site | Average Age (years) | Min Age (years) | Max Age (years) |
|---|---|---|---|
| LYS 1K | 14 | 12 | 17 |
| LYS 2K | 16 | 15 | 18 |
| LYS 4K | 47 | 44 | 53 |
| PLB 2K | 120 | 109 | 129 |
| PLB 3K | 72 | 70 | 74 |
| PLB 5K | 47 | 41 | 50 |

| Band Name | Spectral Range (nm) | Central Wavelength (nm) |
|---|---|---|
| Green | 530–570 | 550 |
| Red | 640–680 | 660 |
| Red edge (RE) | 730–740 | 735 |
| Near infrared (NIR) | 770–810 | 790 |

| Pigment | Catchment | All Needles | 1st-Year Needles | 2nd-Year Needles | 4+ Year Needles |
|---|---|---|---|---|---|
| Total chlorophyll (µg·cm⁻²) | Lysina (LYS) | 52.580 | 35.756 | 56.055 | 65.399 |
| | Pluhův Bor (PLB) | 44.425 | 31.148 | 43.895 | 57.172 |
| Chlorophyll a (µg·cm⁻²) | Lysina (LYS) | 37.939 | 26.124 | 40.679 | 46.637 |
| | Pluhův Bor (PLB) | 32.071 | 22.810 | 31.989 | 40.672 |
| Chlorophyll b (µg·cm⁻²) | Lysina (LYS) | 14.641 | 9.631 | 15.373 | 18.761 |
| | Pluhův Bor (PLB) | 12.354 | 8.343 | 11.904 | 16.500 |
| Carotenoids (µg·cm⁻²) | Lysina (LYS) | 6.724 | 4.400 | 7.018 | 8.602 |
| | Pluhův Bor (PLB) | 5.596 | 3.451 | 5.284 | 7.591 |
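In the pigment table, total chlorophyll matches the sum of chlorophyll a and chlorophyll b to within 0.005 µg·cm⁻² in every cell. The following consistency check uses values copied from the table. The dictionary layout is illustrative only.

```python
# Values copied from the pigment table (µg·cm⁻²), in column order:
# all needles, 1st-year, 2nd-year, 4+ year needles.
pigments = {
    "LYS": {
        "total": [52.580, 35.756, 56.055, 65.399],
        "chl_a": [37.939, 26.124, 40.679, 46.637],
        "chl_b": [14.641, 9.631, 15.373, 18.761],
    },
    "PLB": {
        "total": [44.425, 31.148, 43.895, 57.172],
        "chl_a": [32.071, 22.810, 31.989, 40.672],
        "chl_b": [12.354, 8.343, 11.904, 16.500],
    },
}

# Largest absolute gap between reported total chlorophyll and Chl a + Chl b.
max_gap = max(
    abs(total - (chl_a + chl_b))
    for site in pigments.values()
    for total, chl_a, chl_b in zip(site["total"], site["chl_a"], site["chl_b"])
)
print(f"max |total - (a + b)| = {max_gap:.3f}")
```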

| Test Site | Parrot Sequoia DSM (Seq DSM) (cm/px) | Parrot Sequoia Orthomosaic (Seq Mosaic) (cm/px) | Parrot Sequoia Vertical Error (m) | Parrot Sequoia Total Error (m) | DJI RGB Orthomosaic (cm/px) | DJI RGB Total Error (m) |
|---|---|---|---|---|---|---|
| LYS 1K | 23.95 | 5.99 | 0.78 | 1.02 | 2.26 | 0.77 |
| LYS 2K | 16.41 | 4.09 | 0.55 | 0.67 | 1.12 | 1.14 |
| LYS 4K | 19.64 | 4.91 | 0.65 | 0.94 | 2.17 | 0.79 |
| PLB 2K | 23.30 | 5.82 | 0.51 | 0.82 | 2.05 | 0.71 |
| PLB 3K | 24.47 | 6.11 | 0.53 | 0.74 | 2.36 | 1.27 |
| PLB 5K | 23.00 | 5.74 | 0.61 | 0.72 | 1.87 | 0.83 |

| Test Site | No. of Trees Measured In Situ (Above Set Height Mask) | No. of Tree Tops Detected from UAV Data | Tree-Top Detection Success Rate (%, vs. In-Situ Count) | No. of Tree Crowns Detected from UAV Data | Tree-Crown Detection Success Rate (%, vs. In-Situ Count) |
|---|---|---|---|---|---|
| LYS 1K | 177 | 45 | 25.42 | 61 | 34.46 |
| LYS 2K | 67 | 49 | 73.13 | 57 | 85.07 |
| LYS 4K | 25 | 18 | 72.00 | 19 | 76.00 |
| PLB 2K | 34 | 25 | 73.53 | 31 | 91.18 |
| PLB 3K | 15 | 13 | 86.67 | 17 | 86.67 |
| PLB 5K | 32 | 26 | 81.25 | 34 | 93.75 |
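The tree-top percentages in the detection table follow the ratio detected tree tops ÷ trees measured in situ × 100, to within rounding. For example, 45 / 177 gives 25.42% for LYS 1K. The crown column does not follow the same ratio for every plot. PLB 3K lists 17 detected crowns against 15 in-situ trees yet reports 86.67%, so the crown success rate may be defined differently, for instance by counting only crowns matched to a measured tree. The surviving text does not say. Below is a minimal sketch of the tree-top calculation, using a hypothetical helper name.

```python
def success_rate(detected: int, measured_in_situ: int) -> float:
    """Detected count as a percentage of the in-situ count, rounded to 2 decimals."""
    if measured_in_situ <= 0:
        raise ValueError("in-situ tree count must be positive")
    return round(100.0 * detected / measured_in_situ, 2)


# (trees measured in situ, tree tops detected from UAV data), from the table.
tree_tops = {
    "LYS 1K": (177, 45), "LYS 2K": (67, 49), "LYS 4K": (25, 18),
    "PLB 2K": (34, 25), "PLB 3K": (15, 13), "PLB 5K": (32, 26),
}
rates = {site: success_rate(tops, n) for site, (n, tops) in tree_tops.items()}
print(rates)
```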

| Test Site | Average Tree Top Height, In Situ (m) | Average Tree Top Height, CHM (m) | Difference, CHM − In Situ (m) |
|---|---|---|---|
| LYS 2K | 13.33 | 14.87 | 1.54 |
| LYS 4K | 24.34 | 26.62 | 2.28 |
| PLB 2K | 27.08 | 29.44 | 2.36 |
| PLB 3K | 22.86 | 24.63 | 1.77 |
| PLB 5K | 23.95 | 25.70 | 1.75 |
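In every plot of the height table, the CHM-based average tree-top height exceeds the in-situ average, by 1.54 to 2.36 m. The overestimate averages 1.94 m across the five plots. The arithmetic can be reproduced from the table values. The variable names are illustrative.

```python
# Average tree-top heights (m) from the height table: (in situ, CHM-based).
heights = {
    "LYS 2K": (13.33, 14.87),
    "LYS 4K": (24.34, 26.62),
    "PLB 2K": (27.08, 29.44),
    "PLB 3K": (22.86, 24.63),
    "PLB 5K": (23.95, 25.70),
}

# Positive difference = CHM-based height above the in-situ height.
differences = {site: round(uav - field, 2) for site, (field, uav) in heights.items()}
mean_difference = round(sum(differences.values()) / len(differences), 2)
print(differences)
print(f"mean CHM - in-situ difference: {mean_difference} m")
```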

LYS

| PC Component | Eigenvalue | Variance (%) | Cumulative Variance (%) |
|---|---|---|---|
| 1 | 0.01602 | 98.6427 | 98.6427 |
| 2 | 0.00015 | 0.9405 | 99.5832 |
| 3 | 0.00006 | 0.3733 | 99.9566 |
| 4 | 0.00001 | 0.0434 | 100 |

PLB

| PC Component | Eigenvalue | Variance (%) | Cumulative Variance (%) |
|---|---|---|---|
| 1 | 0.021 | 99.0774 | 99.0774 |
| 2 | 0.00014 | 0.6383 | 99.7157 |
| 3 | 0.00005 | 0.2335 | 99.9493 |
| 4 | 0.00001 | 0.0507 | 100 |
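Each PCA table lists four components, matching the four Sequoia bands. The Variance column is each eigenvalue's share of the summed eigenvalues, and Cumulative Variance is its running sum (for LYS, 98.6427 + 0.9405 = 99.5832). The sketch below assumes the components come from the covariance matrix of the band values. The text does not say whether covariance or correlation was used, or which pixels were included. The toy two-band data are hypothetical.

```python
import numpy as np


def pca_explained_variance(pixels: np.ndarray):
    """PCA of band values via the eigen-decomposition of the band covariance matrix.

    pixels -- array of shape (n_pixels, n_bands), e.g. reflectance per band.
    Returns eigenvalues (descending), variance share per component (%) and its running sum (%).
    """
    cov = np.cov(pixels, rowvar=False)           # (n_bands, n_bands) covariance matrix
    eigenvalues = np.linalg.eigvalsh(cov)[::-1]  # eigvalsh returns ascending order
    variance_pct = 100.0 * eigenvalues / eigenvalues.sum()
    return eigenvalues, variance_pct, np.cumsum(variance_pct)


# Toy two-band example: almost all variance lies along the first band.
toy = np.array([[1.0, 0.0], [-1.0, 0.0], [0.0, 0.1], [0.0, -0.1]])
eigenvalues, variance_pct, cumulative_pct = pca_explained_variance(toy)
print(variance_pct.round(4), cumulative_pct.round(4))
```

When the first component carries around 99% of the variance, as in both catchments above, the bands are highly correlated. Most of the pixel-to-pixel variation then behaves like a single brightness-type signal.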

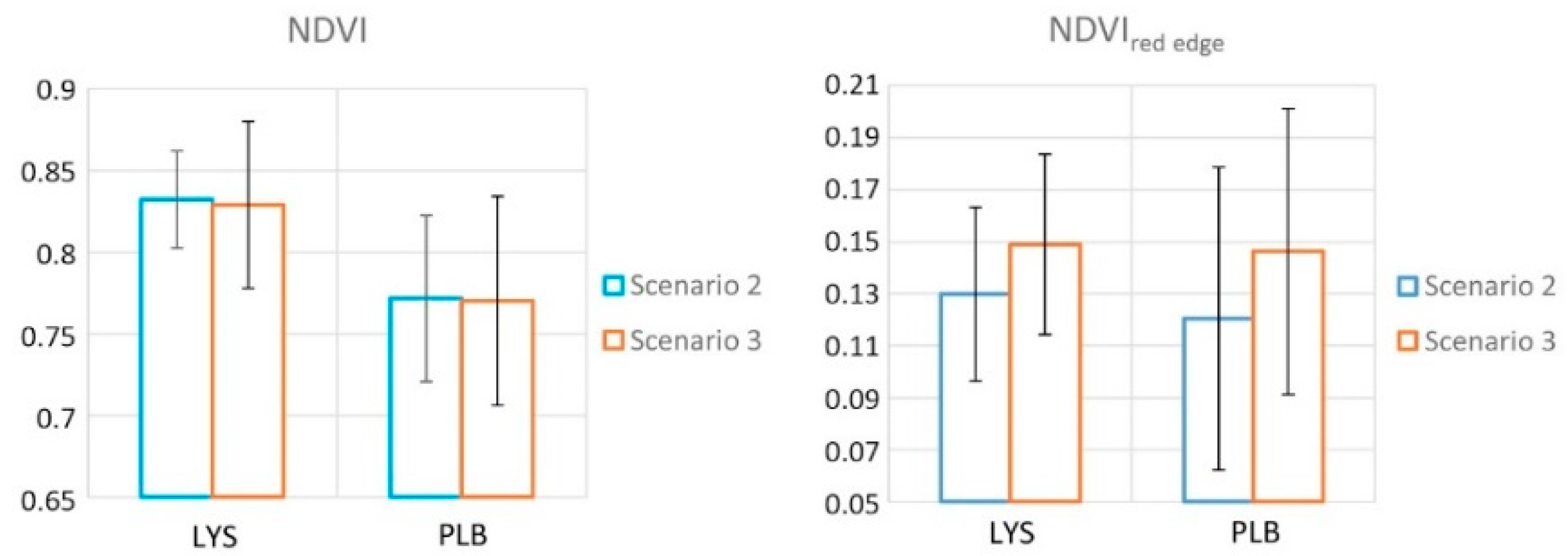

| Index | Catchment | Scenario 1 | Scenario 2 | Scenario 3 |
|---|---|---|---|---|
| NDVI | Lysina (LYS) | 0.831/0.022 | 0.832/0.022 | 0.829/0.023 |
| | Pluhův Bor (PLB) | 0.782/0.056 | 0.772/0.055 | 0.770/0.059 |
| NDVIred edge | Lysina (LYS) | 0.138/0.022 | 0.130/0.019 | 0.149/0.025 |
| | Pluhův Bor (PLB) | 0.140/0.019 | 0.121/0.018 | 0.146/0.020 |
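Using the Sequoia bands from the band table (red ≈ 660 nm, red edge ≈ 735 nm, NIR ≈ 790 nm), the two indices can be computed per pixel in their standard normalized-difference forms:

- NDVI = (NIR − Red)/(NIR + Red)
- NDVIred edge = (NIR − RE)/(NIR + RE)

The red-edge form is the common definition and is assumed here; the authors' exact equation is not in the extracted text. The reflectance values in the example are hypothetical.

```python
import numpy as np


def normalized_difference(a, b):
    """(a - b) / (a + b), element-wise; pixels where both bands are 0 give NaN."""
    a = np.asarray(a, dtype=float)
    b = np.asarray(b, dtype=float)
    with np.errstate(divide="ignore", invalid="ignore"):
        return (a - b) / (a + b)


def ndvi(nir, red):
    return normalized_difference(nir, red)


def ndvi_red_edge(nir, red_edge):
    # Assumed standard form: the red-edge band replaces the red band.
    return normalized_difference(nir, red_edge)


# Hypothetical reflectances for one crown pixel.
nir, red, red_edge = 0.45, 0.03, 0.35
print(ndvi(nir, red), ndvi_red_edge(nir, red_edge))
```

Because the functions accept NumPy arrays, the same calls work on whole reflectance rasters. The resulting index raster can then be averaged over crown masks.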

| Ground Truth Age Group | Parameter | Scenario 1 | Scenario 2 | Scenario 3 |

|---|---|---|---|---|

| All needles | Total chlorophyll | 0.33284 | 0.3132 | 0.34764 |

| Chlorophyll a | 0.31794 | 0.30137 | 0.33012 | |

| Chlorophyll b | 0.36924 | 0.34138 | 0.39117 | |

| Carotenoids | 0.31854 | 0.2931 | 0.33969 | |

| 1st year needles | Total chlorophyll | 0.03731 | 0.04066 | 0.03605 |

| Chlorophyll a | 0.03489 | 0.03854 | 0.03333 | |

| Chlorophyll b | 0.04373 | 0.04618 | 0.04336 | |

| Carotenoids | 0.07389 | 0.0579 | 0.08686 | |

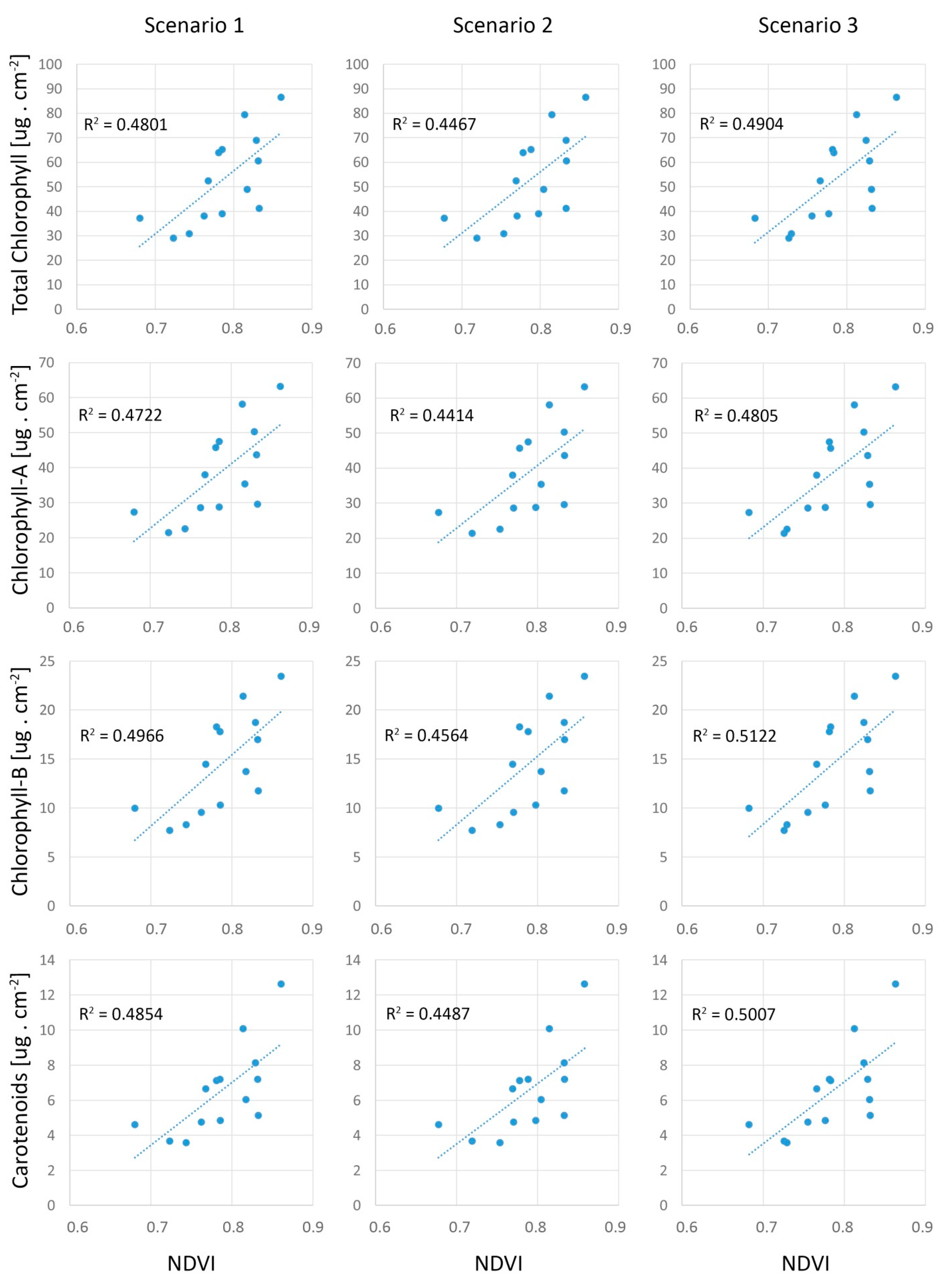

| 2nd year needles | Total chlorophyll | 0.4801 | 0.44667 | 0.49043 |

| Chlorophyll A | 0.47219 | 0.44141 | 0.48051 | |

| Chlorophyll B | 0.49659 | 0.45638 | 0.51224 | |

| Carotenoids | 0.48543 | 0.44873 | 0.50073 | |

| 4th year needles | Total chlorophyll | 0.21125 | 0.19238 | 0.23391 |

| Chlorophyll a | 0.19768 | 0.18171 | 0.21753 | |

| Chlorophyll b | 0.24389 | 0.21744 | 0.27382 | |

| Carotenoids | 0.18607 | 0.16277 | 0.21334 |

| Ground Truth Age Group | Parameter | Scenario 1 | Scenario 2 | Scenario 3 |
|---|---|---|---|---|
| All needles | Total chlorophyll | 0.33424 | 0.3367 | 0.32031 |
| | Chlorophyll a | 0.32371 | 0.32902 | 0.30735 |
| | Chlorophyll b | 0.35841 | 0.35316 | 0.35132 |
| | Carotenoids | 0.37654 | 0.37009 | 0.36017 |
| 1st-year needles | Total chlorophyll | 0.03079 | 0.05179 | 0.01875 |
| | Chlorophyll a | 0.03029 | 0.05143 | 0.0181 |
| | Chlorophyll b | 0.03195 | 0.05241 | 0.0181 |
| | Carotenoids | 0.07625 | 0.09464 | 0.07331 |
| 2nd-year needles | Total chlorophyll | 0.44861 | 0.43218 | 0.46261 |
| | Chlorophyll a | 0.44209 | 0.42862 | 0.45333 |
| | Chlorophyll b | 0.46151 | 0.43743 | 0.48268 |
| | Carotenoids | 0.51865 | 0.50788 | 0.52091 |
| 4th-year needles | Total chlorophyll | 0.2499 | 0.22732 | 0.24152 |
| | Chlorophyll a | 0.23973 | 0.22026 | 0.22884 |
| | Chlorophyll b | 0.27252 | 0.24211 | 0.27104 |
| | Carotenoids | 0.26164 | 0.23661 | 0.25304 |
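The two tables above report one value per needle-age group, pigment and scenario. The study aims to test linear relationships between crown-level vegetation indices and pigment contents, so these values are presumably coefficients of determination (R²) of simple linear regressions. The extracted text, however, labels neither the statistic nor which index each table refers to. Below is a minimal ordinary-least-squares sketch using hypothetical data.

```python
import numpy as np


def linear_fit_r2(x, y):
    """Ordinary least-squares fit y = slope * x + intercept.

    Returns (slope, intercept, r2), where r2 = 1 - SS_res / SS_tot.
    """
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    slope, intercept = np.polyfit(x, y, deg=1)
    residuals = y - (slope * x + intercept)
    ss_res = np.sum(residuals ** 2)
    ss_tot = np.sum((y - y.mean()) ** 2)
    return float(slope), float(intercept), float(1.0 - ss_res / ss_tot)


# Hypothetical crown-mean index values (x) and needle chlorophyll contents (y, µg·cm⁻²).
x = [0.10, 0.12, 0.14, 0.16]
y = [40.0, 50.0, 45.0, 55.0]
slope, intercept, r2 = linear_fit_r2(x, y)
print(f"slope={slope:.1f}, intercept={intercept:.1f}, R²={r2:.2f}")
```

With a single predictor, this R² equals the squared Pearson correlation between x and y.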

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Kopačková-Strnadová, V.; Koucká, L.; Jelének, J.; Lhotáková, Z.; Oulehle, F. Canopy Top, Height and Photosynthetic Pigment Estimation Using Parrot Sequoia Multispectral Imagery and the Unmanned Aerial Vehicle (UAV). Remote Sens. 2021, 13, 705. https://doi.org/10.3390/rs13040705
