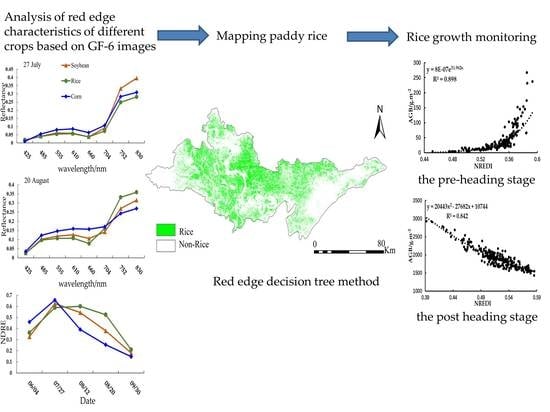

Rice Mapping and Growth Monitoring Based on Time Series GF-6 Images and Red-Edge Bands

Abstract

1. Introduction

2. Materials and Data Processing

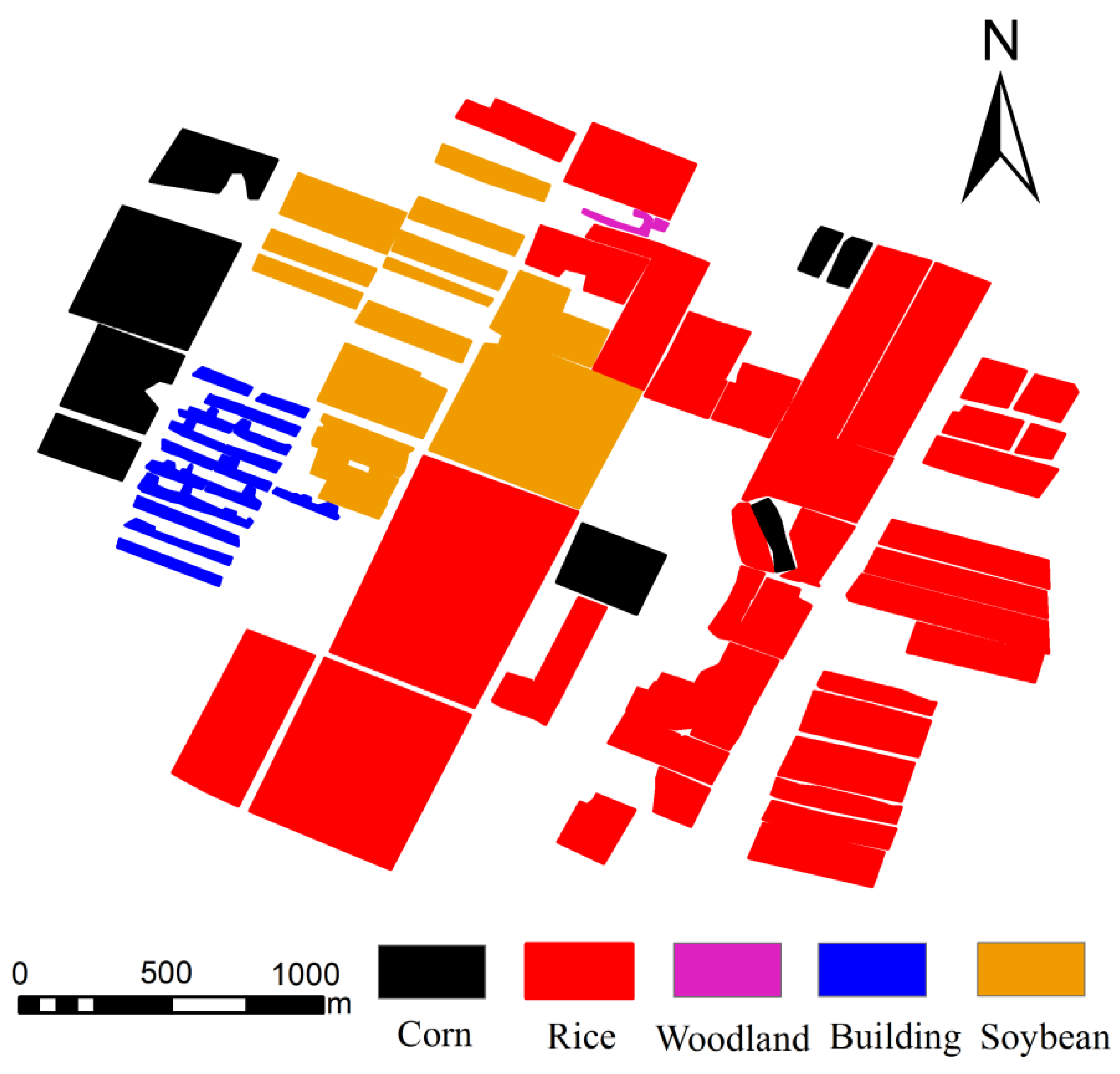

2.1. Study Area

2.2. Data and Preprocessing

2.2.1. GF-6/GF-1 Data and Preprocessing

2.2.2. UAV Image Data and Preprocessing

2.3. Ancillary Data

3. Methodology

3.1. Non-Cropland Masks

3.2. Red-Edge Decision Tree Method Based on Time Series

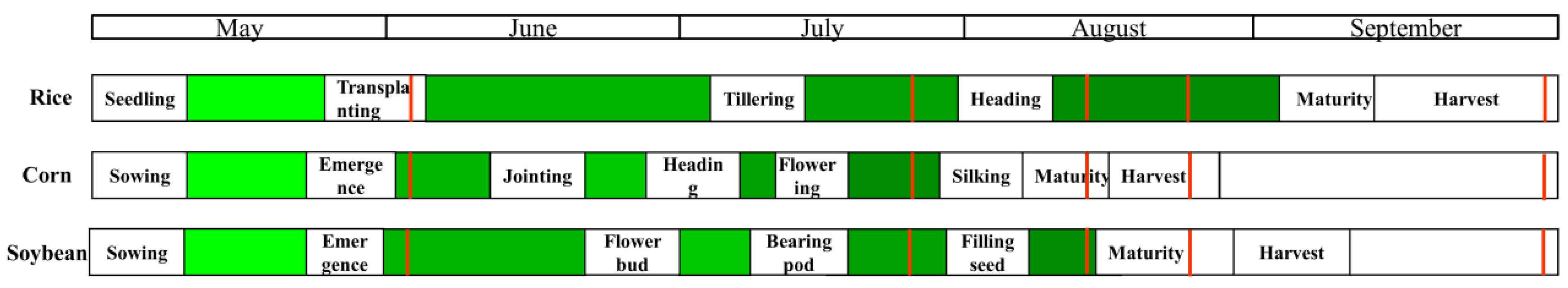

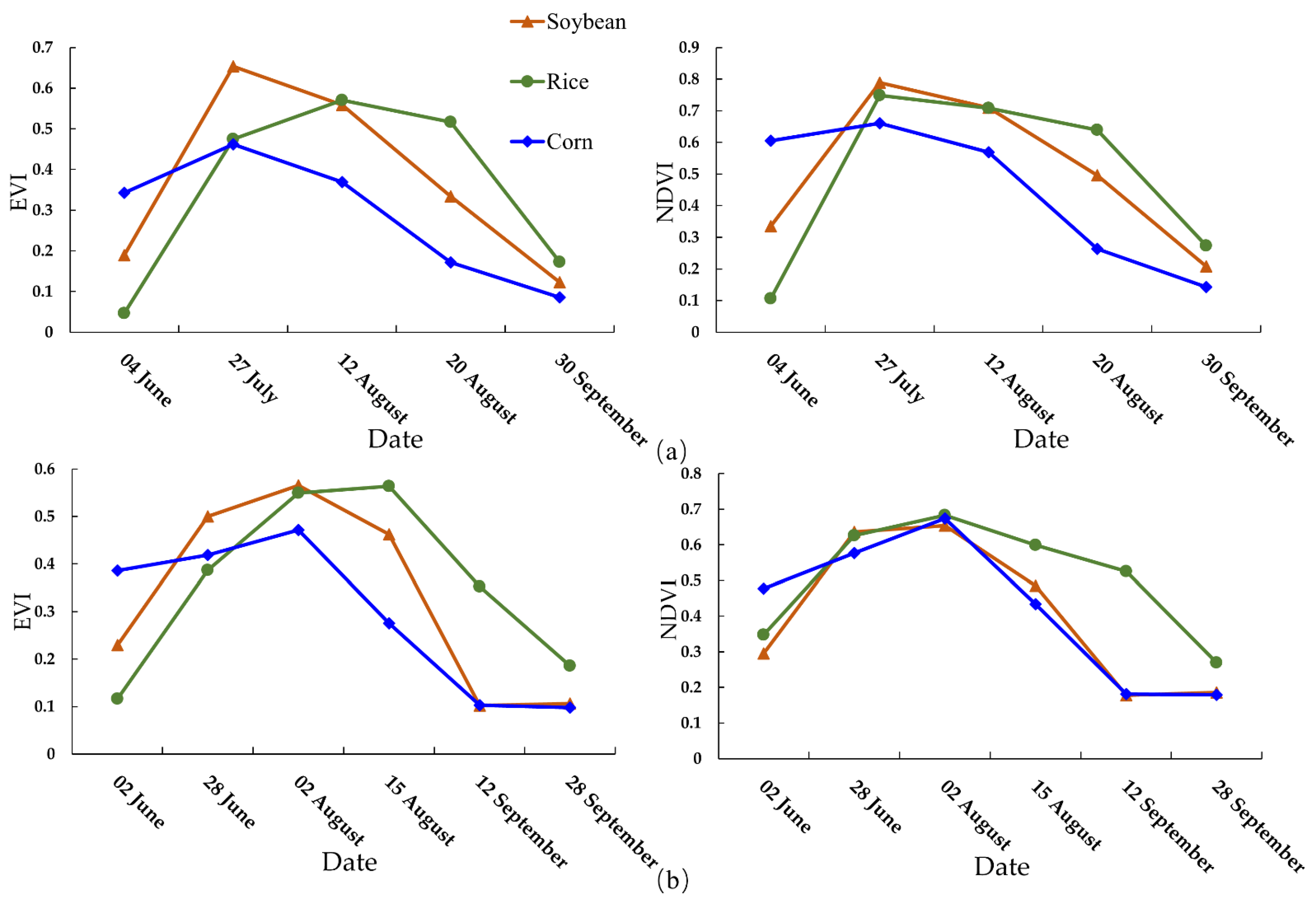

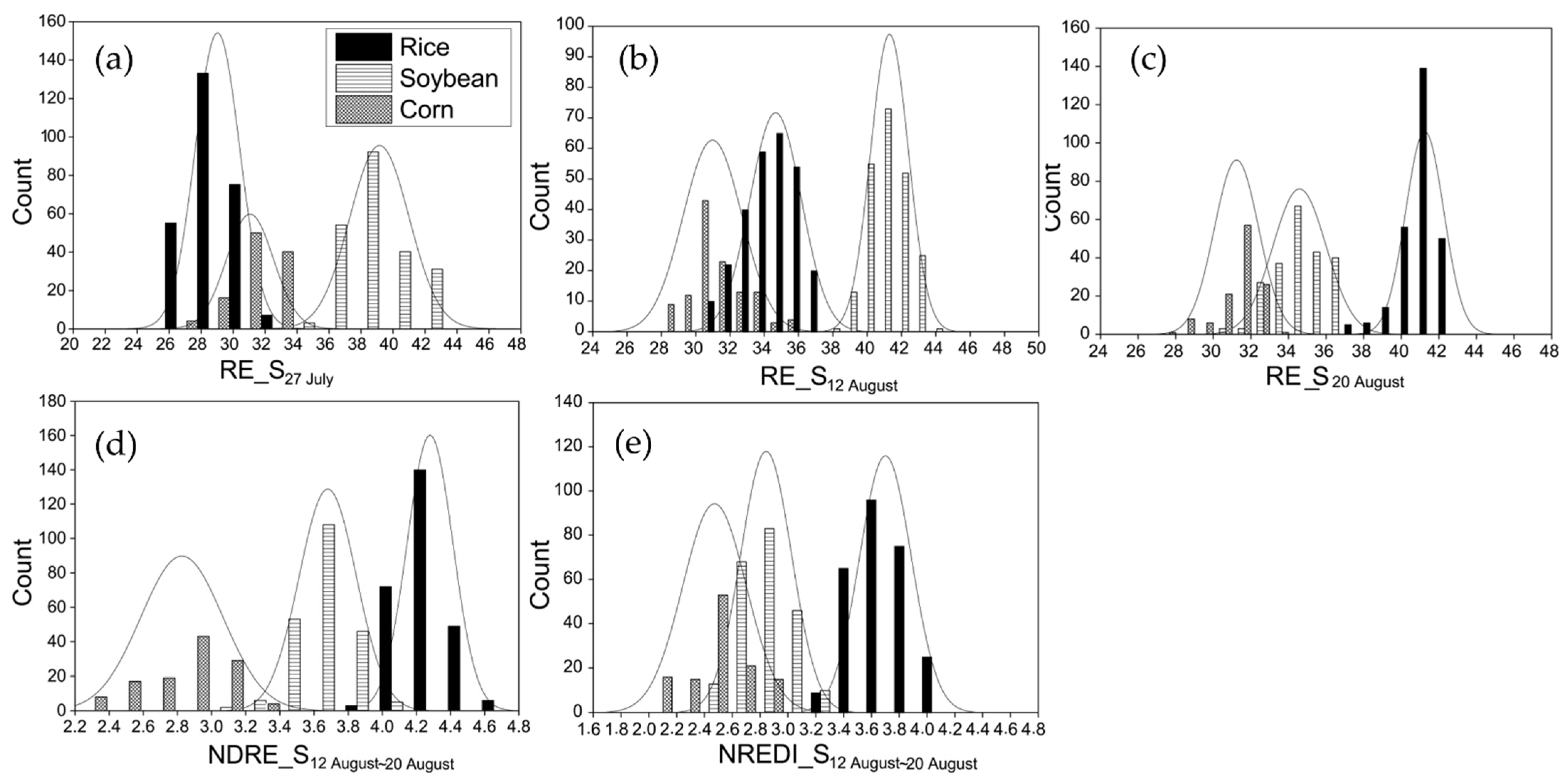

3.2.1. Phenological Analysis of Rice, Corn, and Soybean

3.2.2. Red-Edge Decision Tree Classification

3.3. Methods of the NDVI, EVI, and NDWI Time Series

3.4. Results Validation

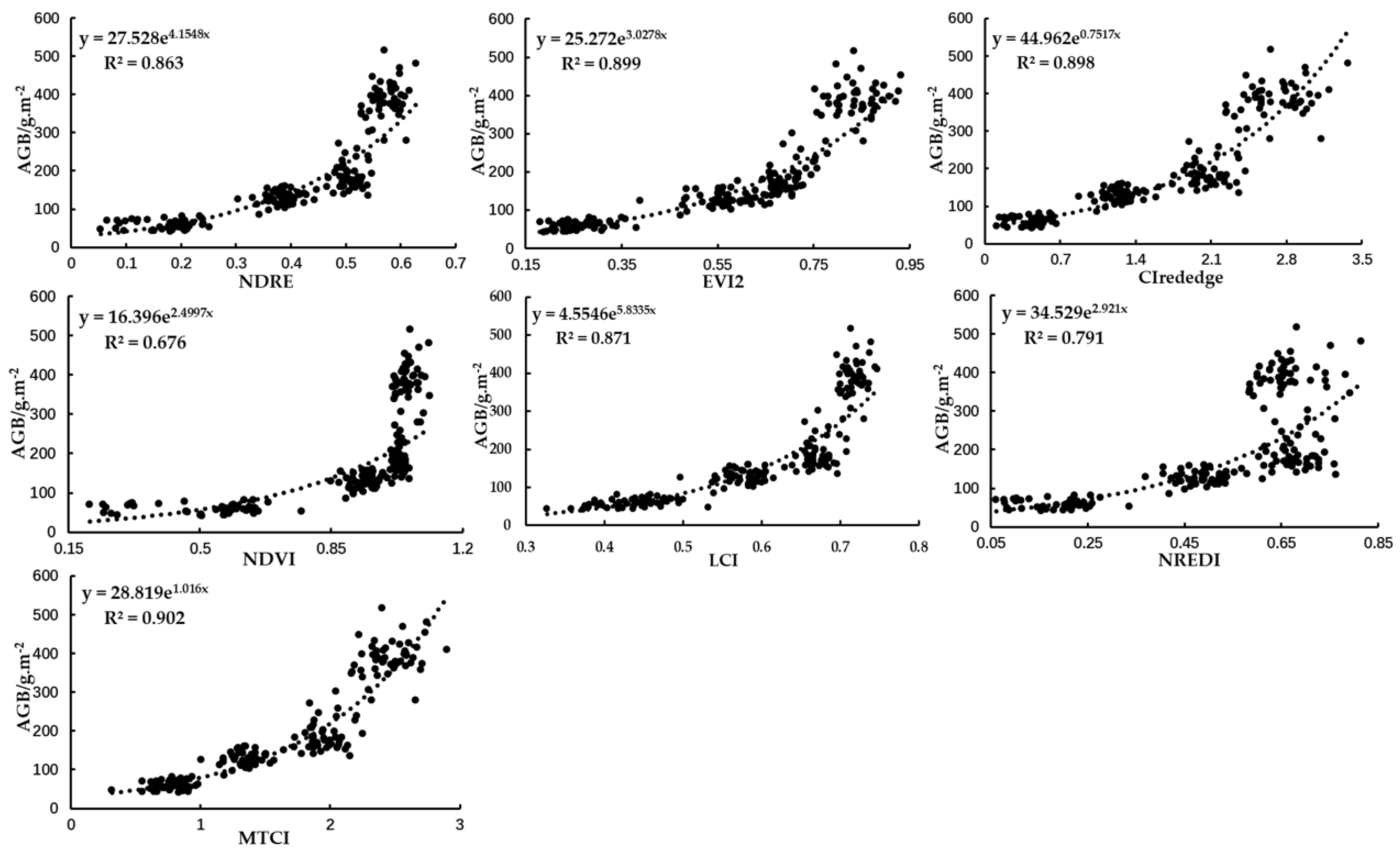

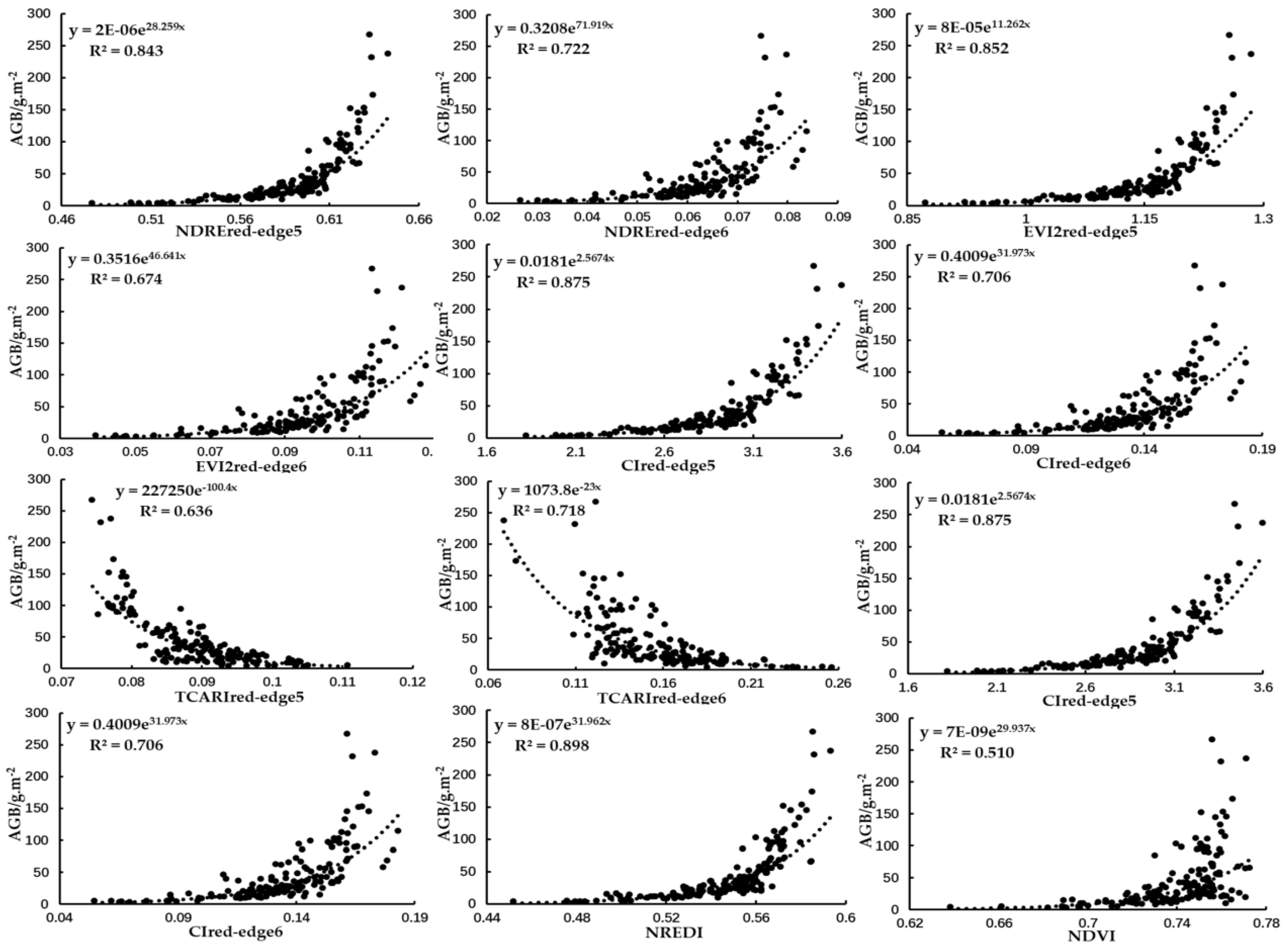

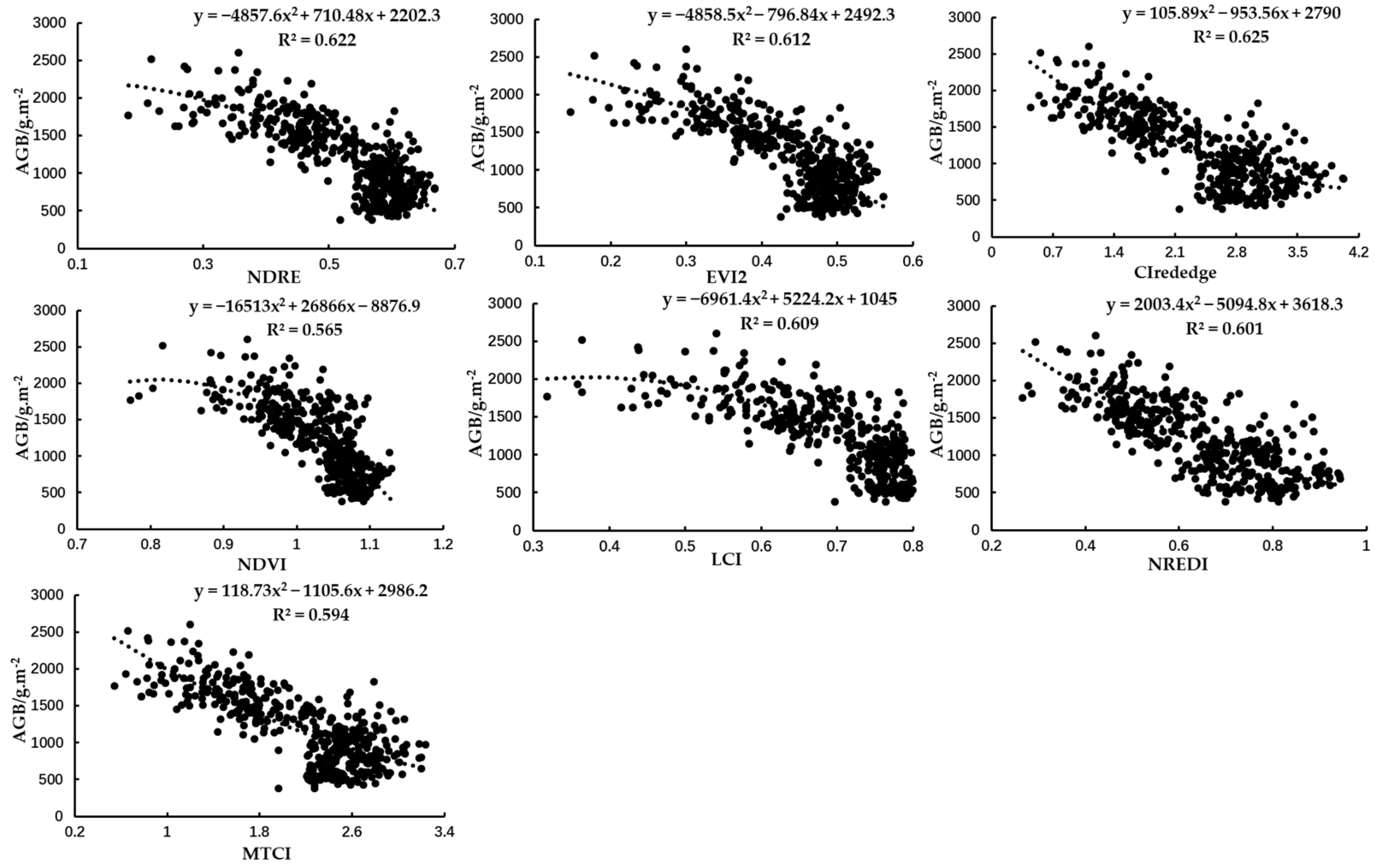

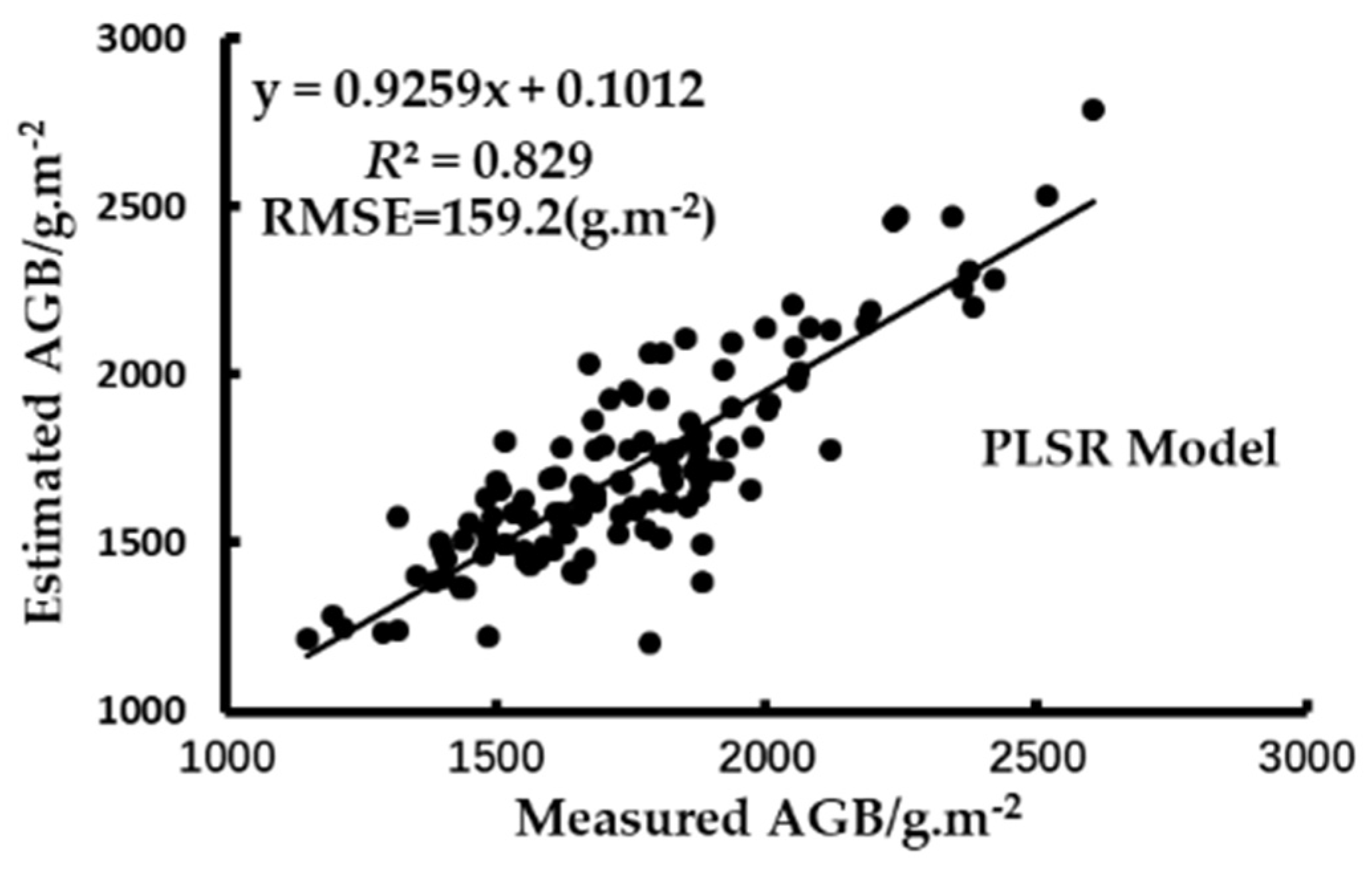

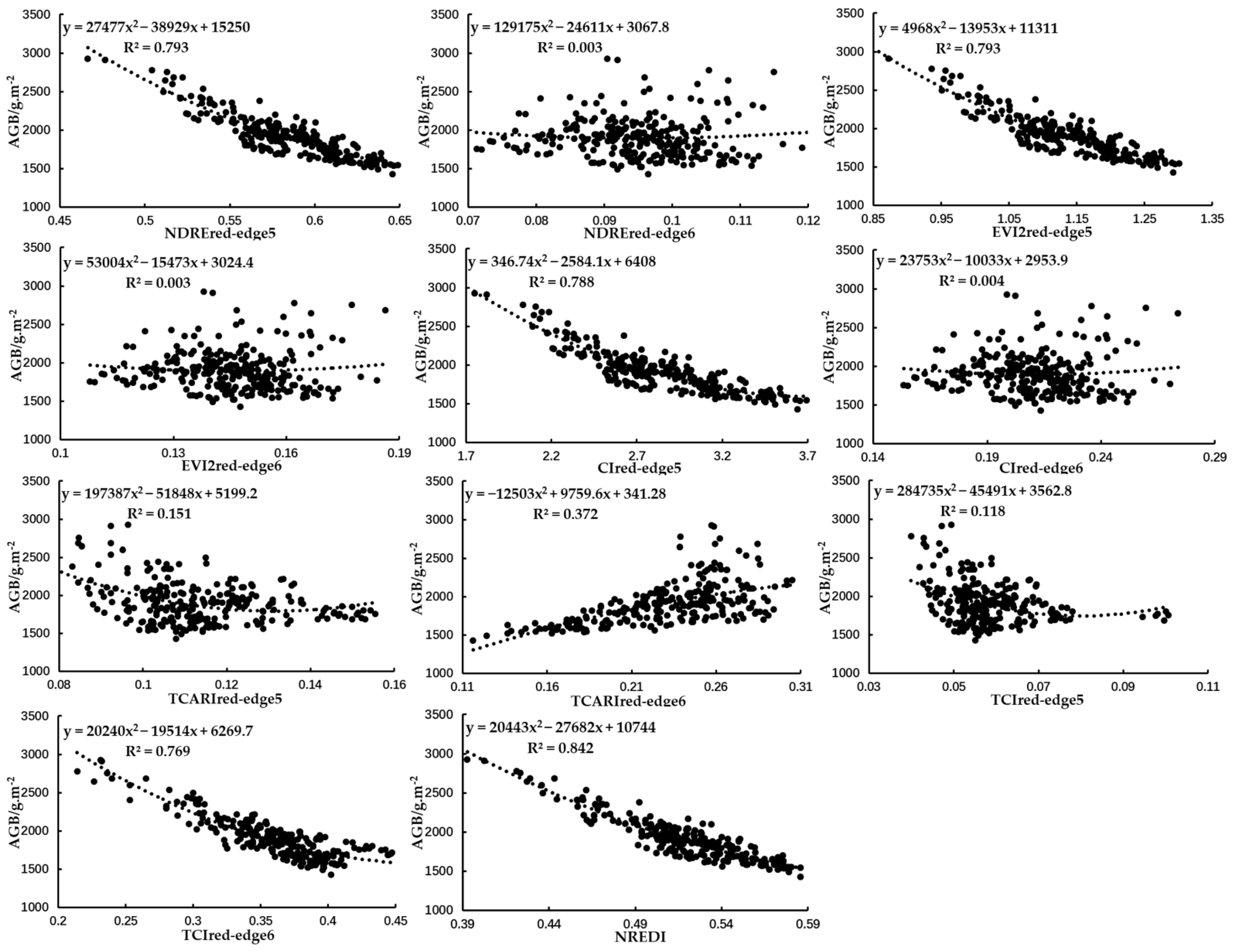

3.5. Rice Growth Monitoring Based on Red-Edge Band

4. Results

4.1. Analysis of Red-Edge Characteristics of Different Crops

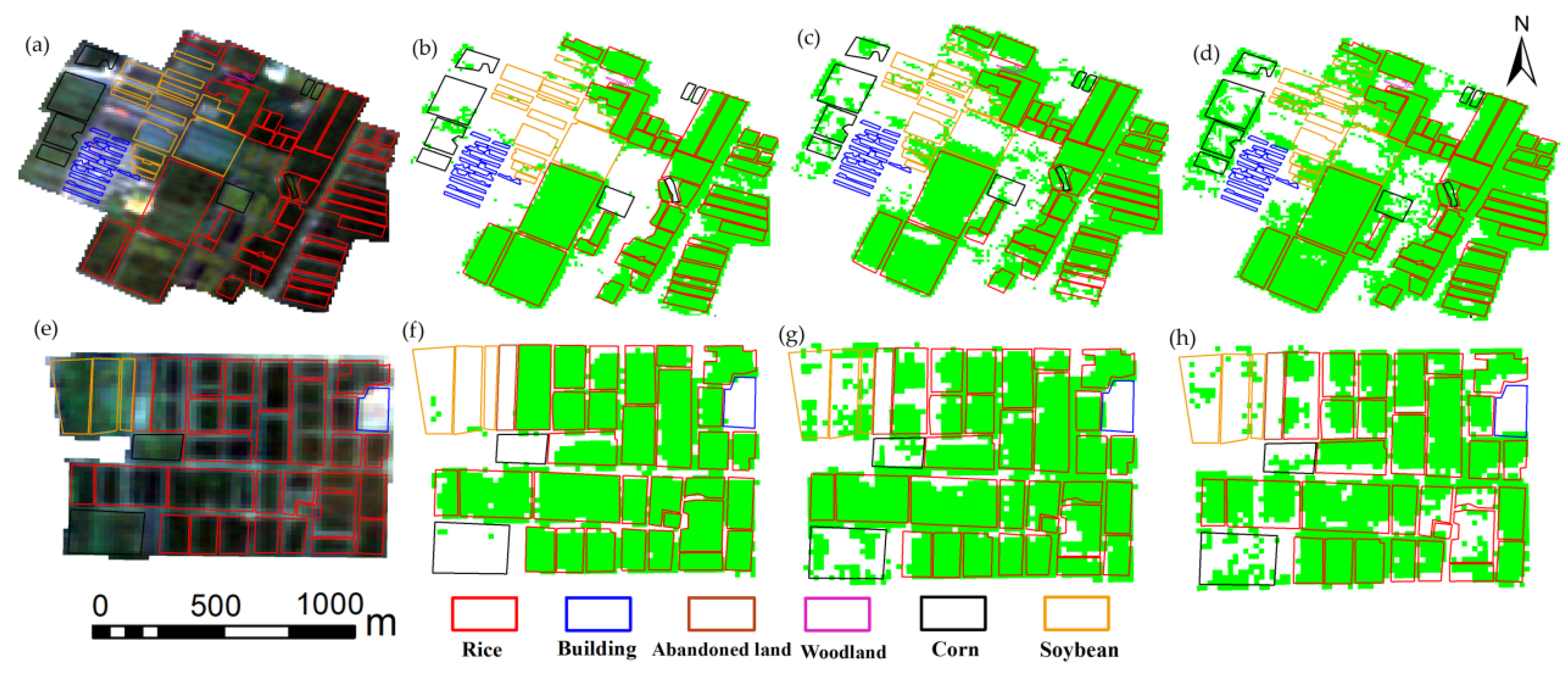

4.2. Mapping Paddy Rice Using REDT Method and Accuracy Assessment

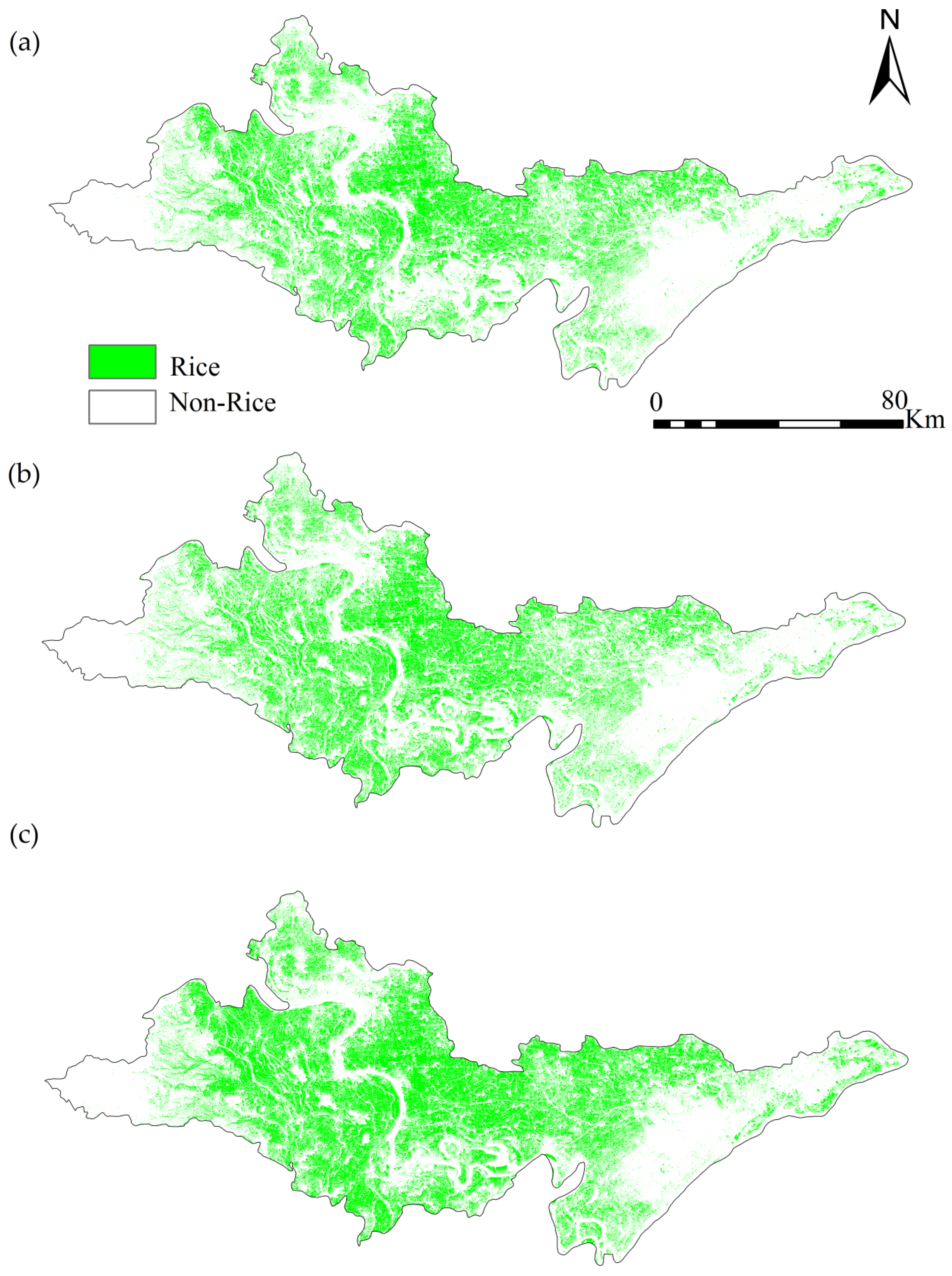

4.3. Adaptability Verification of REDT Method

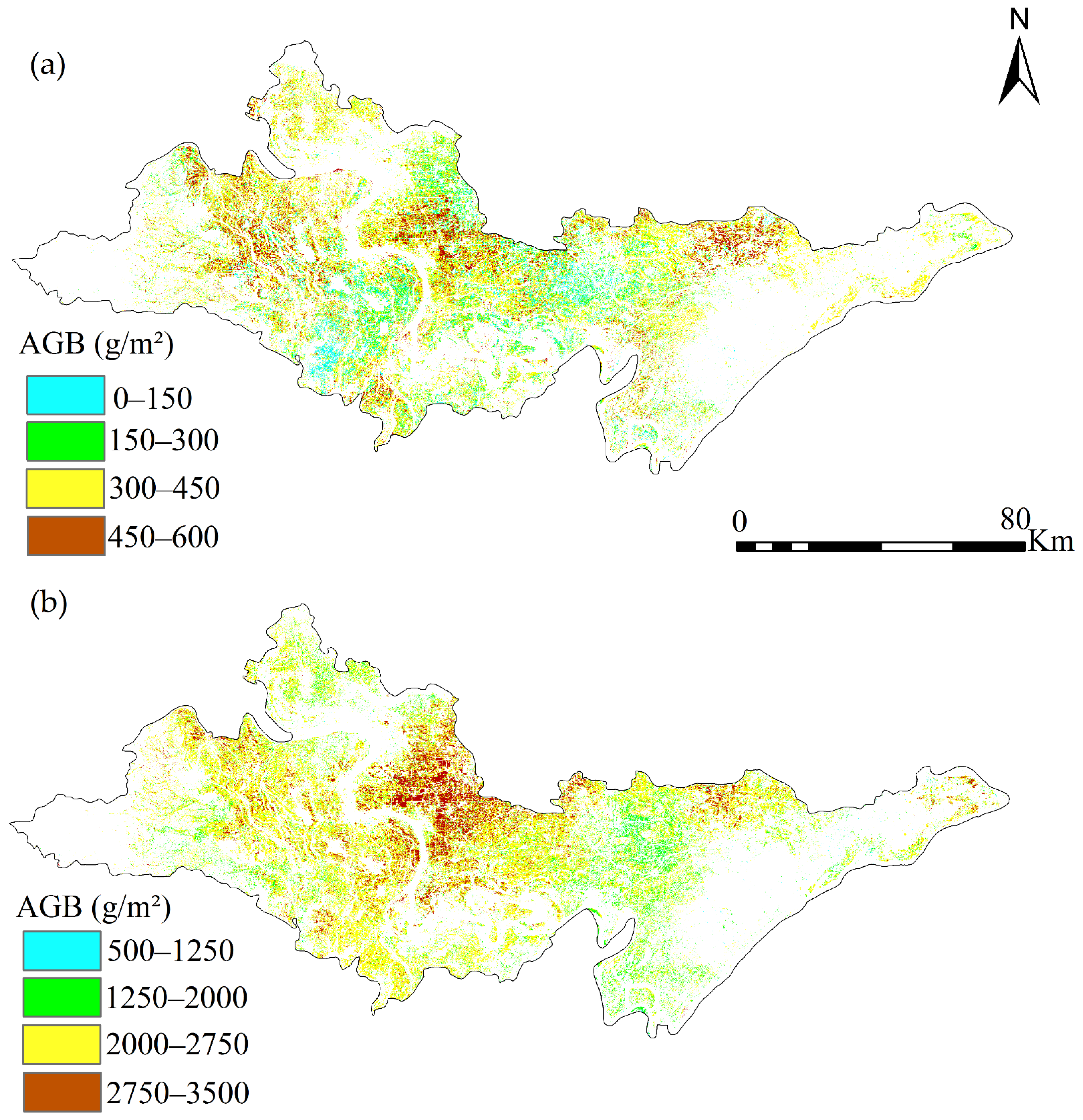

4.4. Rice Growth Monitoring

5. Discussion

5.1. Advantages of REDT Method in Rice Mapping Strategy

5.2. Application of Red-Edge Band in Rice Growth Monitoring

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Xiao, X.; Boles, S.; Frolking, S.; Salas, W.; Moore, B.; Li, C.; He, L.; Zhao, R. Observation of flooding and rice transplanting of paddy rice fields at the site to landscape scales in China using VEGETATION sensor data. Int. J. Remote Sens. 2002, 23, 3009–3022.

2. Yin, Q.; Liu, M.L.; Cheng, J.Y.; Ke, Y.H.; Chen, X.W. Mapping Paddy Rice Planting Area in Northeastern China Using Spatiotemporal Data Fusion and Phenology-Based Method. Remote Sens. Basel 2019, 11, 1699.

3. Godfray, H.C.J.; Beddington, J.R.; Crute, I.R.; Haddad, L.; Lawrence, D.; Muir, J.F.; Pretty, J.; Robinson, S.; Thomas, S.M.; Toulmin, C. Food Security: The Challenge of Feeding 9 Billion People. Science 2010, 327, 812–818.

4. Seshadri, S. Methane emission, rice production and food security. Curr. Sci. India 2007, 93, 1346–1347.

5. Qin, Y.W.; Xiao, X.M.; Dong, J.W.; Zhou, Y.T.; Zhu, Z.; Zhang, G.L.; Du, G.M.; Jin, C.; Kou, W.L.; Wang, J.; et al. Mapping paddy rice planting area in cold temperate climate region through analysis of time series Landsat 8 (OLI), Landsat 7 (ETM+) and MODIS imagery. ISPRS J. Photogramm. 2015, 105, 220–233.

6. Xie, Y.C.; Sha, Z.Y.; Yu, M. Remote sensing imagery in vegetation mapping: A review. J. Plant Ecol. 2008, 1, 9–23.

7. Zhao, S.; Liu, X.N.; Ding, C.; Liu, S.Y.; Wu, C.S.; Wu, L. Mapping Rice Paddies in Complex Landscapes with Convolutional Neural Networks and Phenological Metrics. Gisci. Remote Sens. 2020, 57, 37–48.

8. Zhang, G.L.; Xiao, X.M.; Dong, J.W.; Kou, W.L.; Jin, C.; Qin, Y.W.; Zhou, Y.T.; Wang, J.; Menarguez, M.A.; Biradar, C. Mapping paddy rice planting areas through time series analysis of MODIS land surface temperature and vegetation index data. ISPRS J. Photogramm. 2015, 106, 157–171.

9. Dong, J.; Xiao, X.; Menarguez, M.A.; Zhang, G.; Qin, Y.; Thau, D.; Biradar, C.M.; Moore, B. Mapping paddy rice planting area in northeastern Asia with Landsat-8 images, phenology-based algorithm and Google Earth Engine. Remote Sens. Environ. 2016, 185.

10. Phiri, D.; Morgenroth, J. Developments in Landsat Land Cover Classification Methods: A Review. Remote Sens. Basel 2017, 9, 967.

11. Wang, J.; Huang, J.F.; Zhang, K.Y.; Li, X.X.; She, B.; Wei, C.W.; Gao, J.; Song, X.D. Rice Fields Mapping in Fragmented Area Using Multi-Temporal HJ-1A/B CCD Images. Remote Sens. Basel 2015, 7, 3467–3488.

12. Oguro, Y.; Suga, Y.; Takeuchi, S.; Ogawa, M.; Konishi, T.; Tsuchiya, K. Comparison of SAR and optical sensor data for monitoring of rice plant around Hiroshima. Calibrat. Charact. Satell. Sens. Accuracy Deriv. Phys. Parameters 2001, 28, 195–200.

13. Kour, V.P.; Arora, S. Particle Swarm Optimization Based Support Vector Machine (P-SVM) for the Segmentation and Classification of Plants. IEEE Access 2019, 7, 29374–29385.

14. Mountrakis, G.; Im, J.; Ogole, C. Support vector machines in remote sensing: A review. ISPRS J. Photogramm. 2011, 66, 247–259.

15. Murmu, S.; Biswas, S. Application of Fuzzy Logic and Neural Network in Crop Classification: A Review. Aquat. Procedia 2015, 4, 1203–1210.

16. Thi, T.H.N.; De Bie, C.A.J.M.; Ali, A.; Smaling, E.M.A.; Chu, T.H. Mapping the irrigated rice cropping patterns of the Mekong delta, Vietnam, through hyper-temporal SPOT NDVI image analysis. Int. J. Remote Sens. 2012, 33, 415–434.

17. Dong, J.W.; Xiao, X.M.; Kou, W.L.; Qin, Y.W.; Zhang, G.L.; Li, L.; Jin, C.; Zhou, Y.T.; Wang, J.; Biradar, C.; et al. Tracking the dynamics of paddy rice planting area in 1986–2010 through time series Landsat images and phenology-based algorithms. Remote Sens. Environ. 2015, 160, 99–113.

18. Xiao, X.M.; Boles, S.; Liu, J.Y.; Zhuang, D.F.; Frolking, S.; Li, C.S.; Salas, W.; Moore, B. Mapping paddy rice agriculture in southern China using multi-temporal MODIS images. Remote Sens. Environ. 2005, 95, 480–492.

19. Qiu, B.W.; Li, W.J.; Tang, Z.H.; Chen, C.C.; Qi, W. Mapping paddy rice areas based on vegetation phenology and surface moisture conditions. Ecol. Indic. 2015, 56, 79–86.

20. Liu, J.H.; Li, L.; Huang, X.; Liu, Y.M.; Li, T.S. Mapping paddy rice in Jiangsu Province, China, based on phenological parameters and a decision tree model. Front. Earth Sci. 2019, 13, 111–123.

21. Yang, Y.J.; Huang, Y.; Tian, Q.J.; Wang, L.; Geng, J.; Yang, R.R. The Extraction Model of Paddy Rice Information Based on GF-1 Satellite WFV Images. Spectrosc. Spect. Anal. 2015, 35, 3255–3261.

22. Zhou, Y.T.; Xiao, X.M.; Qin, Y.W.; Dong, J.W.; Zhang, G.L.; Kou, W.L.; Jin, C.; Wang, J.; Li, X.P. Mapping paddy rice planting area in rice-wetland coexistent areas through analysis of Landsat 8 OLI and MODIS images. Int. J. Appl. Earth Obs. 2016, 46, 1–12.

23. Wang, J.; Xiao, X.M.; Qin, Y.W.; Dong, J.W.; Zhang, G.L.; Kou, W.L.; Jin, C.; Zhou, Y.T.; Zhang, Y. Mapping paddy rice planting area in wheat-rice double-cropped areas through integration of Landsat-8 OLI, MODIS, and PALSAR images. Sci. Rep. UK 2015, 5.

24. Xiao, X.M.; Boles, S.; Frolking, S.; Li, C.S.; Babu, J.Y.; Salas, W.; Moore, B. Mapping paddy rice agriculture in South and Southeast Asia using multi-temporal MODIS images. Remote Sens. Environ. 2006, 100, 95–113.

25. Shi, J.J.; Huang, J.F.; Zhang, F. Multi-year monitoring of paddy rice planting area in Northeast China using MODIS time series data. J. Zhejiang Univ.-Sect. B 2013, 14, 934–946.

26. Wan, S.A.; Chang, S.H. Crop classification with WorldView-2 imagery using Support Vector Machine comparing texture analysis approaches and grey relational analysis in Jianan Plain, Taiwan. Int. J. Remote Sens. 2019, 40, 8076–8092.

27. Huang, S.Y.; Miao, Y.X.; Yuan, F.; Gnyp, M.L.; Yao, Y.K.; Cao, Q.; Wang, H.Y.; Lenz-Wiedemann, V.I.S.; Bareth, G. Potential of RapidEye and WorldView-2 Satellite Data for Improving Rice Nitrogen Status Monitoring at Different Growth Stages. Remote Sens. Basel 2017, 9, 227.

28. Kim, H.O.; Yeom, J.M. Sensitivity of vegetation indices to spatial degradation of RapidEye imagery for paddy rice detection: A case study of South Korea. Gisci. Remote Sens. 2015, 52, 1–17.

29. Cai, Y.T.; Lin, H.; Zhang, M. Mapping paddy rice by the object-based random forest method using time series Sentinel-1/Sentinel-2 data. Adv. Space Res. 2019, 64, 2233–2244.

30. Rad, A.M.; Ashourloo, D.; Shahrabi, H.S.; Nematollahi, H. Developing an Automatic Phenology-Based Algorithm for Rice Detection Using Sentinel-2 Time-Series Data. IEEE J.-Stars. 2019, 12, 1471–1481.

31. Dong, J.W.; Xiao, X.M. Evolution of regional to global paddy rice mapping methods: A review. ISPRS J. Photogramm. 2016, 119, 214–227.

32. Zhang, G.L.; Xiao, X.M.; Biradar, C.M.; Dong, J.W.; Qin, Y.W.; Menarguez, M.A.; Zhou, Y.T.; Zhang, Y.; Jin, C.; Wang, J.; et al. Spatiotemporal patterns of paddy rice croplands in China and India from 2000 to 2015. Sci. Total Environ. 2017, 579, 82–92.

33. Roy, D.P.; Wulder, M.A.; Loveland, T.R.; Woodcock, C.E.; Allen, R.G.; Anderson, M.C.; Helder, D.; Irons, J.R.; Johnson, D.M.; Kennedy, R.; et al. Landsat-8: Science and product vision for terrestrial global change research. Remote Sens. Environ. 2014, 145, 154–172.

34. Duan, B.; Fang, S.H.; Zhu, R.S.; Wu, X.T.; Wang, S.Q.; Gong, Y.; Peng, Y. Remote Estimation of Rice Yield With Unmanned Aerial Vehicle (UAV) Data and Spectral Mixture Analysis. Front. Plant Sci. 2019, 10.

35. Jiang, Q.; Fang, S.H.; Peng, Y.; Gong, Y.; Zhu, R.S.; Wu, X.T.; Ma, Y.; Duan, B.; Liu, J. UAV-Based Biomass Estimation for Rice-Combining Spectral, TIN-Based Structural and Meteorological Features. Remote Sens. Basel 2019, 11, 890.

36. Yang, S.X.; Feng, Q.S.; Liang, T.G.; Liu, B.K.; Zhang, W.J.; Xie, H.J. Modeling grassland above-ground biomass based on artificial neural network and remote sensing in the Three-River Headwaters Region. Remote Sens. Environ. 2018, 204, 448–455.

37. Gnyp, M.L.; Miao, Y.X.; Yuan, F.; Ustin, S.L.; Yu, K.; Yao, Y.K.; Huang, S.Y.; Bareth, G. Hyperspectral canopy sensing of paddy rice aboveground biomass at different growth stages. Field Crop Res. 2014, 155, 42–55.

38. Zheng, D.L.; Rademacher, J.; Chen, J.Q.; Crow, T.; Bresee, M.; le Moine, J.; Ryu, S.R. Estimating aboveground biomass using Landsat 7 ETM+ data across a managed landscape in northern Wisconsin, USA. Remote Sens. Environ. 2004, 93, 402–411.

39. Tao, H.L.; Feng, H.K.; Xu, L.J.; Miao, M.K.; Long, H.L.; Yue, J.B.; Li, Z.H.; Yang, G.J.; Yang, X.D.; Fan, L.L. Estimation of Crop Growth Parameters Using UAV-Based Hyperspectral Remote Sensing Data. Sensors Basel 2020, 20, 1296.

40. Sun, L.; Gao, F.; Anderson, M.C.; Kustas, W.P.; Alsina, M.M.; Sanchez, L.; Sams, B.; McKee, L.; Dulaney, W.; White, W.A.; et al. Daily Mapping of 30 m LAI and NDVI for Grape Yield Prediction in California Vineyards. Remote Sens. Basel 2017, 9, 317.

41. Bendig, J.; Yu, K.; Aasen, H.; Bolten, A.; Bennertz, S.; Broscheit, J.; Gnyp, M.L.; Bareth, G. Combining UAV-based plant height from crop surface models, visible, and near infrared vegetation indices for biomass monitoring in barley. Int. J. Appl. Earth Obs. 2015, 39, 79–87.

42. Li, F.; Miao, Y.X.; Feng, G.H.; Yuan, F.; Yue, S.C.; Gao, X.W.; Liu, Y.Q.; Liu, B.; Ustin, S.L.; Chen, X.P. Improving estimation of summer maize nitrogen status with red edge-based spectral vegetation indices. Field Crop Res. 2014, 157, 111–123.

43. Li, L.Y.; Ren, X.B. A Novel Evaluation Model for Urban Smart Growth Based on Principal Component Regression and Radial Basis Function Neural Network. Sustainability Basel 2019, 11, 6125.

44. Boyd, M.; Porth, B.; Porth, L.; Tan, K.S.; Wang, S.; Zhu, W.J. The Design of Weather Index Insurance Using Principal Component Regression and Partial Least Squares Regression: The Case of Forage Crops. N. Am. Actuar. J. 2020, 24, 355–369.

45. Otgonbayar, M.; Atzberger, C.; Chambers, J.; Damdinsuren, A. Mapping pasture biomass in Mongolia using Partial Least Squares, Random Forest regression and Landsat 8 imagery. Int. J. Remote Sens. 2019, 40, 3204–3226.

46. Ma, D.D.; Maki, H.; Neeno, S.; Zhang, L.B.; Wang, L.J.; Jin, J. Application of non-linear partial least squares analysis on prediction of biomass of maize plants using hyperspectral images. Biosyst. Eng. 2020, 200, 40–54.

47. Verrelst, J.; Camps-Valls, G.; Munoz-Mari, J.; Rivera, J.P.; Veroustraete, F.; Clevers, J.G.P.W.; Moreno, J. Optical remote sensing and the retrieval of terrestrial vegetation bio-geophysical properties—A review. ISPRS J. Photogramm. 2015, 108, 273–290.

48. Devia, C.A.; Rojas, J.P.; Petro, E.; Martinez, C.; Mondragon, I.F.; Patino, D.; Rebolledo, M.C.; Colorado, J. High-Throughput Biomass Estimation in Rice Crops Using UAV Multispectral Imagery. J. Intell. Robot Syst. 2019, 96, 573–589.

49. Yanying, B.; Julin, G.; Baolin, Z. Monitoring of Crops Growth Based on NDVI and EVI. Trans. Chin. Soc. Agric. Mach. 2019, 50, 153–161.

50. Zhou, X.; Zheng, H.B.; Xu, X.Q.; He, J.Y.; Ge, X.K.; Yao, X.; Cheng, T.; Zhu, Y.; Cao, W.X.; Tian, Y.C. Predicting grain yield in rice using multi-temporal vegetation indices from UAV-based multispectral and digital imagery. ISPRS J. Photogramm. 2017, 130, 246–255.

51. Sakamoto, T.; Shibayama, M.; Kimura, A.; Takada, E. Assessment of digital camera-derived vegetation indices in quantitative monitoring of seasonal rice growth. ISPRS J. Photogramm. 2011, 66, 872–882.

52. Calibration Parameters for Part of Chinese Satellite Images. Available online: http://www.cresda.com/CN/Downloads/dbcs/index.shtml (accessed on 20 December 2019).

53. Kira, O.; Linker, R.; Gitelson, A. Non-Destructive Estimation of Foliar Chlorophyll and Carotenoid Contents: Focus on Informative Spectral Bands. Int. J. Appl. Earth Obs. 2015, 38, 251–260.

54. Dwyer, J.L.; Kruse, F.A.; Lefkoff, A.B. Effects of empirical versus model-based reflectance calibration on automated analysis of imaging spectrometer data: A case study from the Drum Mountains, Utah. Photogramm. Eng. Remote Sens. 1995, 61, 1247–1254.

55. Laliberte, A.S.; Goforth, M.A.; Steele, C.M.; Rango, A. Multispectral Remote Sensing from Unmanned Aircraft: Image Processing Workflows and Applications for Rangeland Environments. Remote Sens. Basel 2011, 3, 2529–2551.

56. Rouse, J.W., Jr.; Haas, R.H.; Schell, J.A.; Deering, D.W. Monitoring Vegetation Systems in the Great Plains with ERTS; NASA: Washington, DC, USA, 1974; Volume 1.

57. Fitzgerald, G.; Rodriguez, D.; O’Leary, G. Measuring and predicting canopy nitrogen nutrition in wheat using a spectral index-The canopy chlorophyll content index (CCCI). Field Crop Res. 2010, 116, 318–324.

58. Dash, J.; Curran, P.J. The MERIS terrestrial chlorophyll index. Int. J. Remote Sens. 2004, 25, 5403–5413.

59. Gitelson, A.A.; Vina, A.; Ciganda, V.; Rundquist, D.C.; Arkebauer, T.J. Remote estimation of canopy chlorophyll content in crops. Geophys. Res. Lett. 2005, 32.

60. Jiang, Z.Y.; Huete, A.R.; Didan, K.; Miura, T. Development of a two-band enhanced vegetation index without a blue band. Remote Sens. Environ. 2008, 112, 3833–3845.

61. Datt, B. A new reflectance index for remote sensing of chlorophyll content in higher plants: Tests using Eucalyptus leaves. J. Plant Physiol. 1999, 154, 30–36.

62. Feng, G.U.; Jian-Li, D.; Xiang-Yu, G.E.; Gao, S.B.; Wang, J.Z. Estimation of Chlorophyll Content of Typical Oasis Vegetation in Arid Area Based on Sentinel-2 Data. Arid Zone Res. 2019, 4, 924–934.

| Satellite | Acquisition Date | Number of Images | Sensor | Temporal Resolution | Central Wavelength (nm) |
|---|---|---|---|---|---|
| GF-6 | 4 June 2019 | 2 | WFV | 2 days | B1 (blue): 485; B2 (green): 555; B3 (red): 660; B4 (near-infrared): 830; B5 (red-edge 1): 704; B6 (red-edge 2): 752; B7 (coastal blue): 425; B8 (yellow): 610 |
| | 27 July 2019 | 3 | | | |
| | 12 August 2019 | 2 | | | |
| | 20 August 2019 | 1 | | | |
| | 30 September 2019 | 2 | | | |
| GF-1 | 2 June 2019 | 4 | WFV1, WFV2, WFV3, WFV4 | 4 days | B1 (blue): 485; B2 (green): 555; B3 (red): 660; B4 (near-infrared): 830 |
| | 26 June 2019 | 4 | | | |
| | 2 August 2019 | 4 | | | |
| | 15 August 2019 | 4 | | | |
| | 12 September 2019 | 4 | | | |
| | 28 September 2019 | 4 | | | |

| Platform | Vegetation Index (VI) | Formula | Reference |
|---|---|---|---|
| UAV | Normalized Difference Vegetation Index (NDVI) | | Rouse et al., 1974 [56] |
| | Normalized Difference Red edge (NDRE) | | Fitzgerald et al., 2010 [57] |
| | MERIS Terrestrial Chlorophyll Index (MTCI) | | Dash and Curran, 2004 [58] |
| | Red-edge Chlorophyll Index (CIred-edge) | | Gitelson et al., 2005 [59] |
| | Two-band Enhanced Vegetation Index (EVI2) | | Jiang et al., 2008 [60] |
| | Leaf Chlorophyll Index (LCI) | | Datt, 1999 [61] |
| | Normalized Red-edge Difference Index (NREDI) | | Feng et al., 2019 [62] |
| GF-6 | Normalized Difference Vegetation Index (NDVI) | | Rouse et al., 1974 [56] |
| | Normalized Difference Red edge (NDREred-edge5) | | Fitzgerald et al., 2010 [57] |
| | Normalized Difference Red edge (NDREred-edge6) | | Fitzgerald et al., 2010 [57] |
| | MERIS Terrestrial Chlorophyll Index (MTCIred-edge5) | | Dash and Curran, 2004 [58] |
| | MERIS Terrestrial Chlorophyll Index (MTCIred-edge6) | | Dash and Curran, 2004 [58] |
| | Red-edge Chlorophyll Index (CIred-edge5) | | Gitelson et al., 2005 [59] |
| | Red-edge Chlorophyll Index (CIred-edge6) | | Gitelson et al., 2005 [59] |
| | Two-band Enhanced Vegetation Index (EVI2red-edge5) | | Jiang et al., 2008 [60] |
| | Two-band Enhanced Vegetation Index (EVI2red-edge6) | | Jiang et al., 2008 [60] |
| | Red-edge Triangle Chlorophyll Index (TCIred-edge5) | | Feng et al., 2019 [62] |
| | Red-edge Triangle Chlorophyll Index (TCIred-edge6) | | Feng et al., 2019 [62] |
| | Red-edge Transformation Chlorophyll Absorption Reflectance Index (TCARIred-edge5) | | Feng et al., 2019 [62] |
| | Red-edge Transformation Chlorophyll Absorption Reflectance Index (TCARIred-edge6) | | Feng et al., 2019 [62] |
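
The formula column of the table above is not preserved in this copy. As a rough illustration only, the Python sketch below implements six of the listed indices in the forms commonly given in the cited literature [56–61]; these are assumed, not confirmed, to match the forms used in the study. The reflectance values are hypothetical, and the GF-6 variants are assumed to use B5 (704 nm) or B6 (752 nm) as the red-edge term.

```python
# Commonly published forms of six vegetation indices (assumed, not taken
# from the table, whose formula column is missing here).
# Inputs are surface reflectances in the range 0..1.

def ndvi(nir, red):
    """Normalized Difference Vegetation Index."""
    return (nir - red) / (nir + red)

def ndre(nir, red_edge):
    """Normalized Difference Red Edge index."""
    return (nir - red_edge) / (nir + red_edge)

def mtci(nir, red_edge, red):
    """MERIS Terrestrial Chlorophyll Index."""
    return (nir - red_edge) / (red_edge - red)

def ci_red_edge(nir, red_edge):
    """Red-edge Chlorophyll Index."""
    return nir / red_edge - 1.0

def evi2(nir, red):
    """Two-band Enhanced Vegetation Index."""
    return 2.5 * (nir - red) / (nir + 2.4 * red + 1.0)

def lci(nir, red_edge, red):
    """Leaf Chlorophyll Index."""
    return (nir - red_edge) / (nir + red)

# Hypothetical reflectances for a single vegetated pixel.
pixel = {"red": 0.05, "red_edge": 0.20, "nir": 0.45}

indices = {
    "NDVI": ndvi(pixel["nir"], pixel["red"]),
    "NDRE": ndre(pixel["nir"], pixel["red_edge"]),
    "MTCI": mtci(pixel["nir"], pixel["red_edge"], pixel["red"]),
    "CIred-edge": ci_red_edge(pixel["nir"], pixel["red_edge"]),
    "EVI2": evi2(pixel["nir"], pixel["red"]),
    "LCI": lci(pixel["nir"], pixel["red_edge"], pixel["red"]),
}

for name, value in indices.items():
    print(f"{name}: {value:.3f}")
```

The functions use only arithmetic, so they should also accept NumPy arrays of band reflectance if whole images are processed. NREDI, TCI, and TCARI are left out because their exact forms cannot be recovered from the table.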

| Study Area | Paddy Rice Map | Class | PA (%) | UA (%) | OA (%) | Kappa Coefficient |
|---|---|---|---|---|---|---|
| A | GF-6-REDT | Rice | 91.85 | 93.04 | 93.85 | 0.87 |
| | | Non-rice | 95.24 | 94.40 | | |
| | GF-6-NNE | Rice | 85.82 | 81.48 | 86.42 | 0.72 |
| | | Non-rice | 86.84 | 90.07 | | |
| | GF-1-NNE | Rice | 86.37 | 77.55 | 85.29 | 0.69 |
| | | Non-rice | 84.61 | 90.98 | | |
| B | GF-6-REDT | Rice | 94.80 | 94.48 | 94.06 | 0.89 |
| | | Non-rice | 93.14 | 93.54 | | |
| | GF-6-NNE | Rice | 89.09 | 87.78 | 87.31 | 0.74 |
| | | Non-rice | 85.19 | 86.73 | | |
| | GF-1-NNE | Rice | 90.73 | 84.76 | 86.37 | 0.72 |
| | | Non-rice | 81.42 | 88.52 | | |
| Jingzhou City | GF-6-REDT | Rice | 90.01 | 89.60 | 91.10 | 0.82 |
| | | Non-rice | 91.93 | 92.26 | | |
| | GF-6-NNE | Rice | 81.76 | 78.11 | 83.39 | 0.66 |
| | | Non-rice | 84.49 | 87.26 | | |
| | GF-1-NNE | Rice | 86.34 | 75.43 | 82.17 | 0.64 |
| | | Non-rice | 79.06 | 88.60 | | |

| Paddy Rice Map | Class | PA (%) | UA (%) | OA (%) | Kappa Coefficient |
|---|---|---|---|---|---|
| GF-6-REDT | Rice | 94.55 | 93.86 | 93.04 | 0.85 |
| | Non-rice | 90.81 | 91.80 | | |
| GF-6-NNE | Rice | 87.68 | 90.64 | 87.17 | 0.73 |
| | Non-rice | 86.41 | 82.38 | | |
| GF-1-NNE | Rice | 85.26 | 91.04 | 86.08 | 0.71 |
| | Non-rice | 87.33 | 79.68 | | |
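
Producer's accuracy (PA), user's accuracy (UA), overall accuracy (OA), and the kappa coefficient in the accuracy tables are all conventionally derived from a confusion matrix of mapped versus reference samples. The Python sketch below shows the standard calculation. The sample counts are hypothetical, since the validation counts behind the tables are not given here, and the function name `accuracy_metrics` is an illustrative choice.

```python
def accuracy_metrics(matrix):
    """Compute PA, UA, OA, and kappa from a square confusion matrix.

    matrix[i][j] is the number of validation samples mapped as class i
    whose reference (ground truth) class is j.
    """
    n_classes = len(matrix)
    total = sum(sum(row) for row in matrix)
    row_totals = [sum(row) for row in matrix]  # samples per mapped class
    col_totals = [sum(matrix[i][j] for i in range(n_classes))
                  for j in range(n_classes)]   # samples per reference class
    diagonal = [matrix[k][k] for k in range(n_classes)]

    overall = sum(diagonal) / total
    # Agreement expected by chance, from the marginal totals.
    expected = sum(r * c for r, c in zip(row_totals, col_totals)) / total ** 2
    kappa = (overall - expected) / (1.0 - expected)

    producers = [d / c for d, c in zip(diagonal, col_totals)]
    users = [d / r for d, r in zip(diagonal, row_totals)]
    return {"PA": producers, "UA": users, "OA": overall, "kappa": kappa}


# Hypothetical counts; class order is [rice, non-rice].
confusion = [
    [90, 10],  # mapped as rice: 90 truly rice, 10 truly non-rice
    [5, 95],   # mapped as non-rice: 5 truly rice, 95 truly non-rice
]

result = accuracy_metrics(confusion)
print("PA (%):", [round(100 * v, 2) for v in result["PA"]])
print("UA (%):", [round(100 * v, 2) for v in result["UA"]])
print("OA (%):", round(100 * result["OA"], 2))
print("Kappa:", round(result["kappa"], 2))
```

With these counts, rice has a higher PA than UA: few true rice samples are missed, but some non-rice samples are mapped as rice.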

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Jiang, X.; Fang, S.; Huang, X.; Liu, Y.; Guo, L. Rice Mapping and Growth Monitoring Based on Time Series GF-6 Images and Red-Edge Bands. Remote Sens. 2021, 13, 579. https://doi.org/10.3390/rs13040579