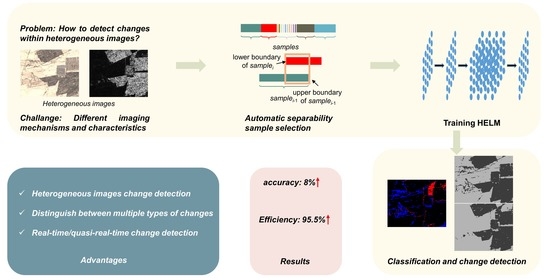

Change Detection for Heterogeneous Remote Sensing Images with Improved Training of Hierarchical Extreme Learning Machine (HELM)

Abstract

1. Introduction

- (1) This paper proposes a new change detection framework that applies to both homogeneous and heterogeneous images; it not only extracts the changed regions but also distinguishes the types of change.

- (2) This paper proposes a separable training sample selection method that accurately selects training samples for the network and does not require additional training datasets.

- (3) HELM, which requires little parameter tuning during network training, is introduced for multi-temporal feature extraction to improve the accuracy and efficiency of change detection with heterogeneous images.

2. Materials and Methods

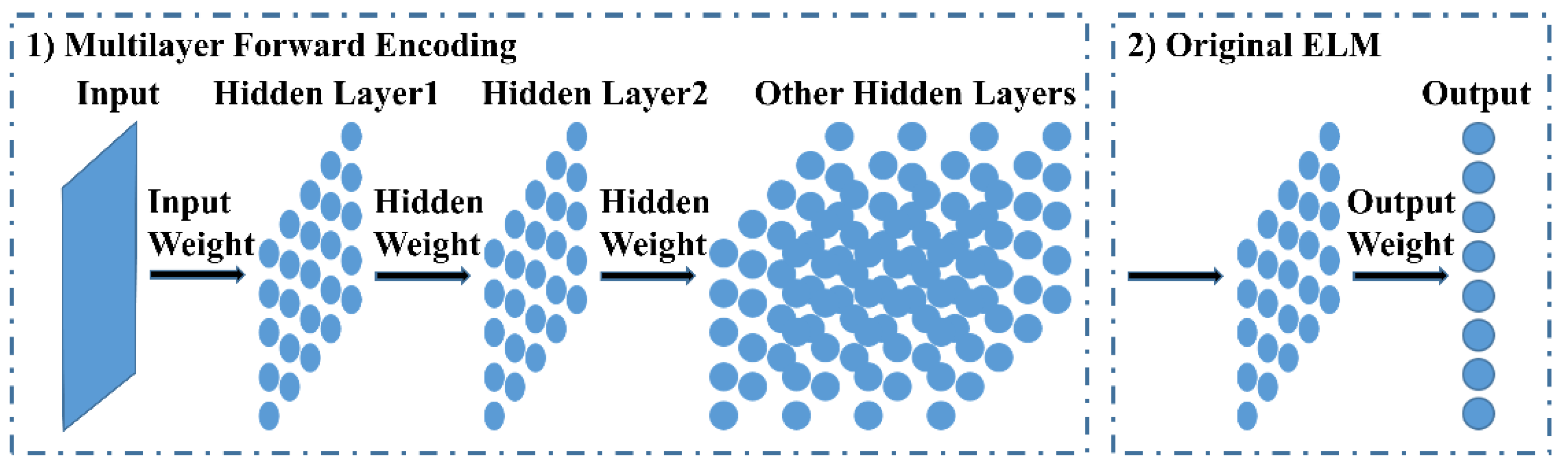

2.1. HELM

2.1.1. Multilayer Forward Encoding
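HELM builds its multilayer forward encoding by stacking ELM-based autoencoders: each layer maps its input through a random, untrained hidden layer, solves in closed form for output weights that reconstruct the input, and reuses the transposed output weights as the encoder whose output feeds the next layer. The original HELM formulation uses an ℓ1-regularized (sparse) autoencoder solved iteratively; to keep it short, this sketch swaps in an ℓ2 (ridge) closed-form solution, so it illustrates the stacking structure rather than reproducing HELM or this paper's implementation. The function names `elm_ae_encoder` and `forward_encode`, the tanh activation, the uniform(-1, 1) initialization and the regularization constant `C` are hypothetical choices.

```python
import numpy as np

# Illustrative sketch only: ridge (l2) ELM autoencoders stand in for HELM's l1 sparse ones;
# all names, the activation and the initialization are assumptions.


def elm_ae_encoder(X: np.ndarray, n_hidden: int, C: float, rng: np.random.Generator) -> np.ndarray:
    """Fit one ridge-regularized ELM autoencoder and return its encoder matrix."""
    A = rng.uniform(-1.0, 1.0, size=(X.shape[1], n_hidden))  # random input weights, never trained
    b = rng.uniform(-1.0, 1.0, size=n_hidden)                 # random biases, never trained
    H = np.tanh(X @ A + b)                                    # random hidden representation, (N, n_hidden)
    # Output weights that reconstruct X from H: beta = (H^T H + I/C)^-1 H^T X
    beta = np.linalg.solve(H.T @ H + np.eye(n_hidden) / C, H.T @ X)
    return beta.T                                             # encoder, shape (n_features, n_hidden)


def forward_encode(X: np.ndarray, layer_sizes: list[int], C: float = 1e3, seed: int = 0):
    """Stack ELM autoencoders; each layer's encoded output is the next layer's input."""
    rng = np.random.default_rng(seed)
    Z, encoders = X, []
    for n_hidden in layer_sizes:
        E = elm_ae_encoder(Z, n_hidden, C, rng)
        Z = np.tanh(Z @ E)  # encode with the learned (transposed) output weights
        encoders.append(E)
    return Z, encoders


# Usage: encode 100 random 8-dimensional feature vectors through two layers.
X = np.random.default_rng(1).normal(size=(100, 8))
Z, encoders = forward_encode(X, [16, 12])
```

Because every layer is solved in closed form, the encoding needs no backpropagation, which is the property that keeps ELM-based training fast. In HELM the final encoded features would then be passed to an original ELM classifier (Section 2.1.2).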

2.1.2. Original ELM

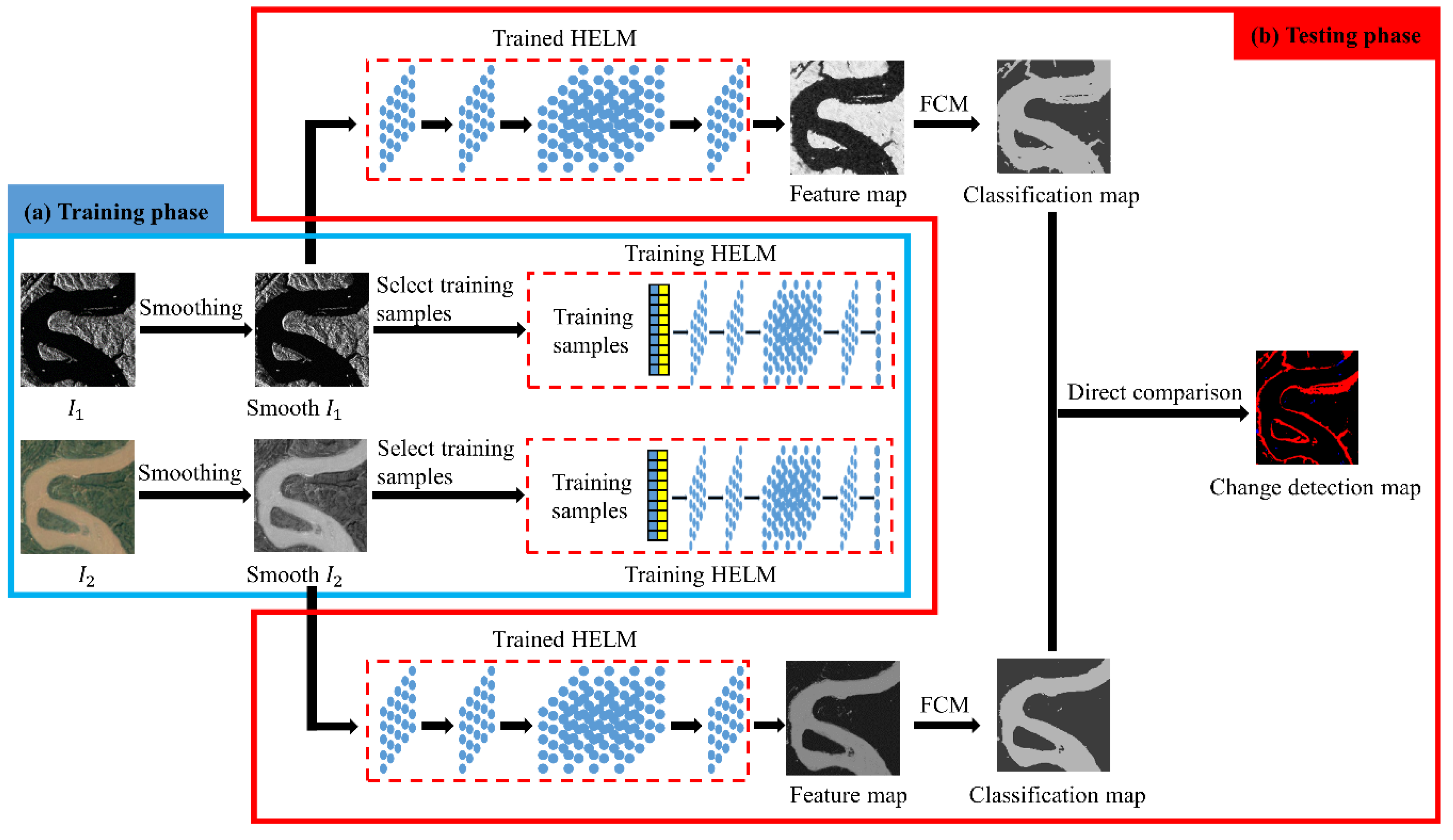
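For reference, the standard single-hidden-layer ELM draws its input weights and biases at random, keeps them fixed, and learns only the output weights in closed form as β = H⁺T, where H is the hidden-layer output matrix, T the targets and H⁺ the Moore-Penrose pseudoinverse. The sketch below follows that textbook formulation; the names `elm_fit` and `elm_predict`, the sigmoid activation, the uniform(-1, 1) initialization and the hidden size are illustrative assumptions, not settings taken from this paper.

```python
import numpy as np

# Illustrative sketch of a basic ELM; names, activation and initialization are assumptions.


def _sigmoid(z: np.ndarray) -> np.ndarray:
    return 1.0 / (1.0 + np.exp(-z))


def elm_fit(X: np.ndarray, T: np.ndarray, n_hidden: int, seed: int = 0):
    """Train a single-hidden-layer ELM; only the output weights are learned."""
    rng = np.random.default_rng(seed)
    W = rng.uniform(-1.0, 1.0, size=(X.shape[1], n_hidden))  # random input weights, fixed
    b = rng.uniform(-1.0, 1.0, size=n_hidden)                 # random biases, fixed
    H = _sigmoid(X @ W + b)        # hidden-layer output matrix, shape (N, n_hidden)
    beta = np.linalg.pinv(H) @ T   # least-squares output weights: beta = pinv(H) T
    return W, b, beta


def elm_predict(X: np.ndarray, W: np.ndarray, b: np.ndarray, beta: np.ndarray) -> np.ndarray:
    return _sigmoid(X @ W + b) @ beta


# Usage: regress y = sin(x) from 200 samples with 50 hidden nodes.
x = np.linspace(-3.0, 3.0, 200).reshape(-1, 1)
y = np.sin(x)
W, b, beta = elm_fit(x, y, n_hidden=50)
mse = float(np.mean((elm_predict(x, W, b, beta) - y) ** 2))
```

For classification, T would hold one-hot class labels and the predicted class is the column with the largest output. A common variant replaces the pseudoinverse with a ridge solution, (HᵀH + I/C)⁻¹HᵀT, for better numerical stability.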

2.2. Methodology

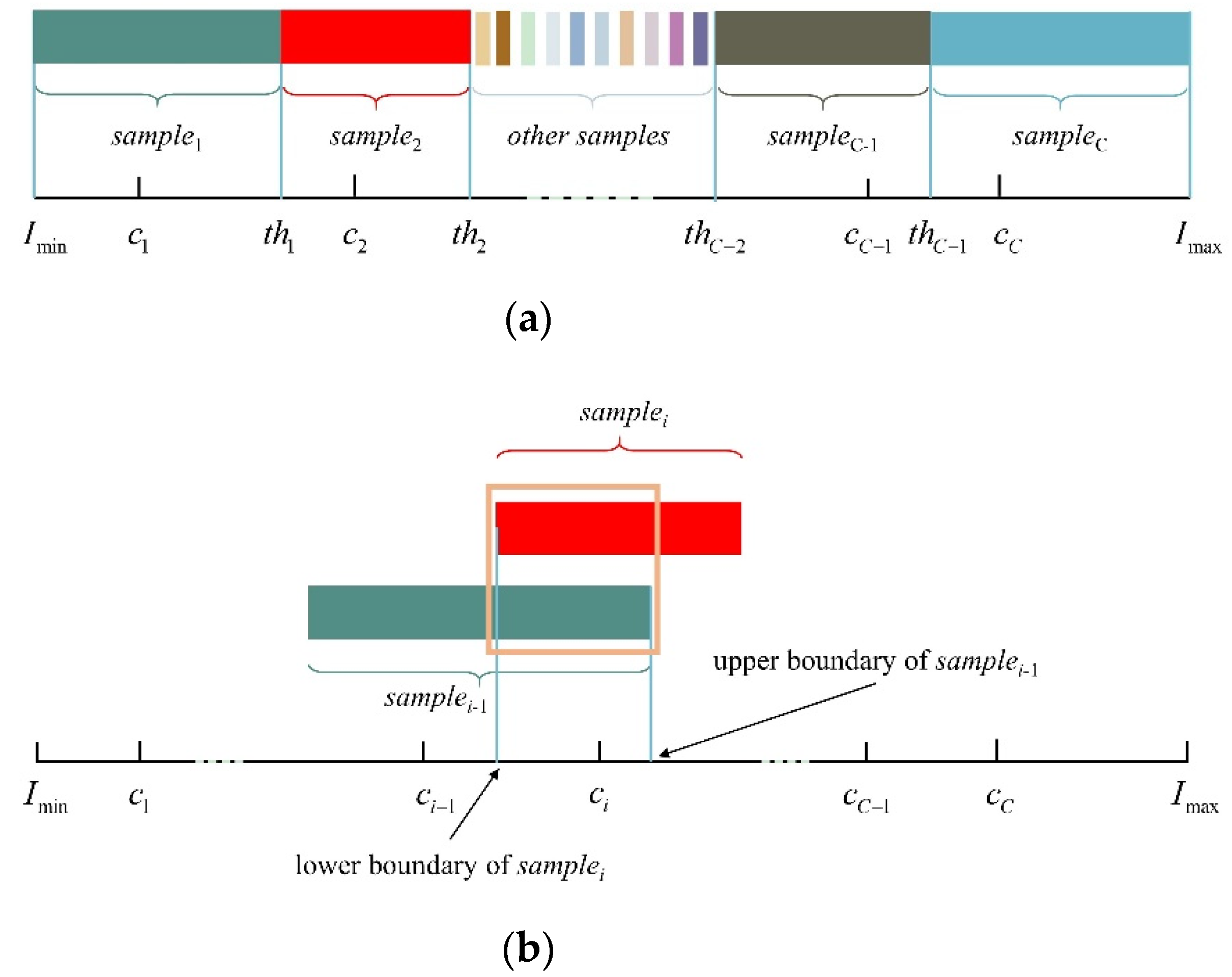

2.2.1. Training Sample Selection

2.2.2. Training of HELM

2.2.3. Feature Map Generation

2.2.4. Change Detection Map Generation

3. Results

3.1. Dataset Description

3.2. Evaluation Criteria
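The results tables report false positives (FP), false negatives (FN), overall errors (OE), the percentage of correct classification (PCC), the kappa coefficient (KC) and the F1 score. The sketch below computes them from a binary change map and a reference map, assuming the usual definitions: OE = FP + FN, PCC = (TP + TN)/N, KC is Cohen's kappa computed from the confusion-matrix marginals, and F1 is the harmonic mean of precision and recall. The names `evaluate` and `ChangeMetrics` are hypothetical, and the paper's exact formulas are not reproduced here.

```python
from dataclasses import dataclass
from typing import Sequence

# Illustrative sketch; assumes standard binary change-detection metric definitions.


@dataclass
class ChangeMetrics:
    fp: int     # unchanged pixels detected as changed
    fn: int     # changed pixels that were missed
    oe: int     # overall errors, FP + FN
    pcc: float  # percentage of correct classification (%)
    kc: float   # kappa coefficient
    f1: float   # harmonic mean of precision and recall


def evaluate(pred: Sequence[int], ref: Sequence[int]) -> ChangeMetrics:
    """Compare a flattened binary change map with a reference map (1 = changed, 0 = unchanged)."""
    if len(pred) != len(ref) or len(pred) == 0:
        raise ValueError("maps must be non-empty and have the same number of pixels")
    tp = fp = fn = tn = 0
    for p, r in zip(pred, ref):
        if p and r:
            tp += 1
        elif p:
            fp += 1
        elif r:
            fn += 1
        else:
            tn += 1
    n = tp + fp + fn + tn
    pcc = (tp + tn) / n
    # Expected chance agreement from the marginal totals of the confusion matrix.
    pre = ((tp + fp) * (tp + fn) + (fn + tn) * (fp + tn)) / (n * n)
    # pre == 1 only when both maps are entirely one class, i.e. perfect agreement.
    kc = (pcc - pre) / (1 - pre) if pre < 1 else 1.0
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return ChangeMetrics(fp, fn, fp + fn, 100 * pcc, kc, f1)


m = evaluate([1, 1, 0, 0, 1, 0, 0, 0], [1, 0, 0, 1, 1, 0, 0, 0])
# → fp=1, fn=1, oe=2, pcc=75.0, kc≈0.467, f1≈0.667
```

Two-dimensional maps should be flattened before calling `evaluate`. Unlike PCC, KC and F1 are not dominated by the large number of unchanged pixels, which is why they separate the methods more clearly in the tables.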

3.3. Experimental Setup

3.3.1. Comparison Methods

- (1) Methods compared on homogeneous SAR images: FCM, PCA-Kmeans, FLICM, PCANet, CWNN, NPSG, and INLPG.

- (2) Methods compared on homogeneous optical images: CVA, FCM, PCA-Kmeans, FLICM, SFA, and DSFA.

- (3) Methods compared on heterogeneous optical and SAR images: LTFL, CGAN, HPT, NPSG, and INLPG.

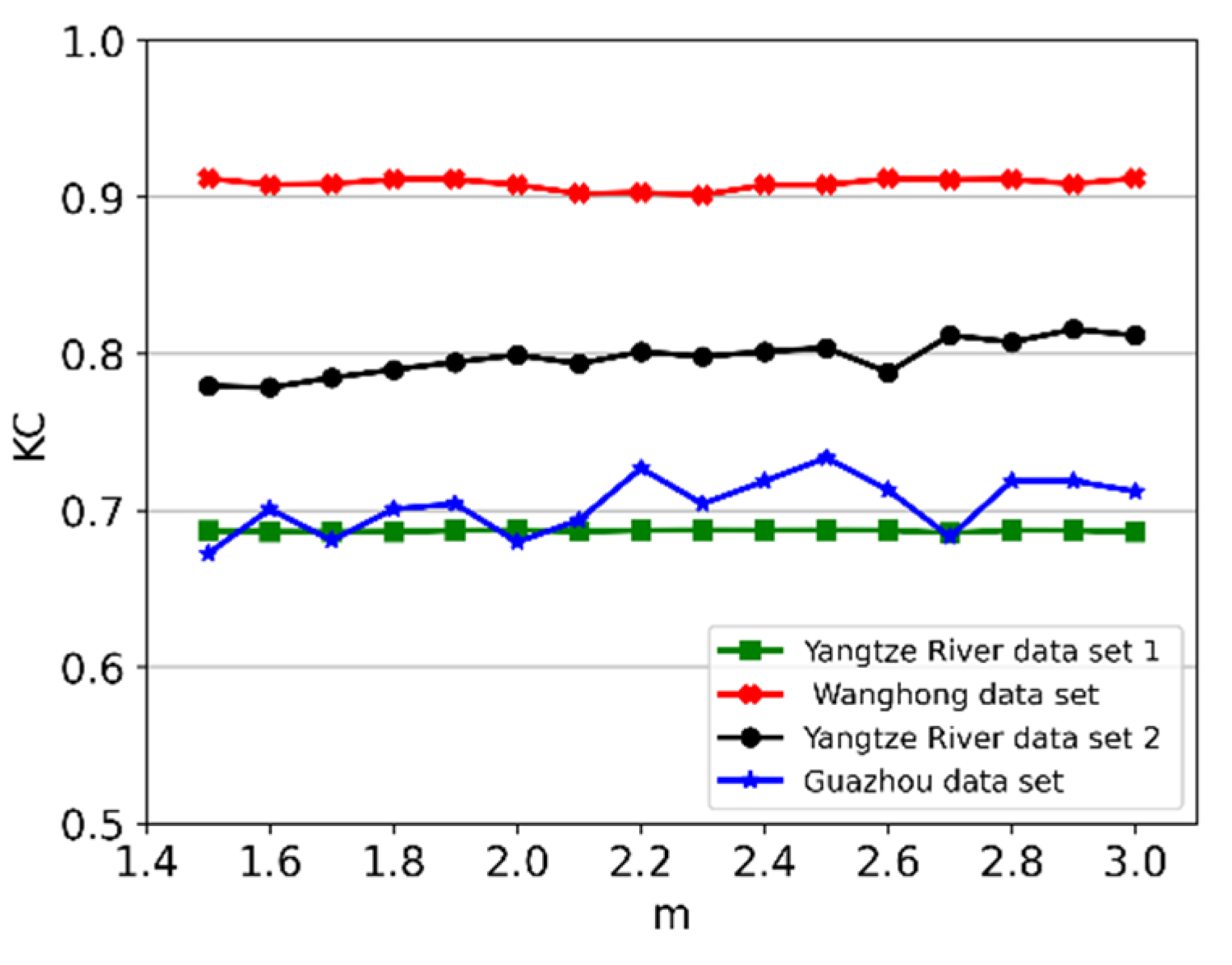

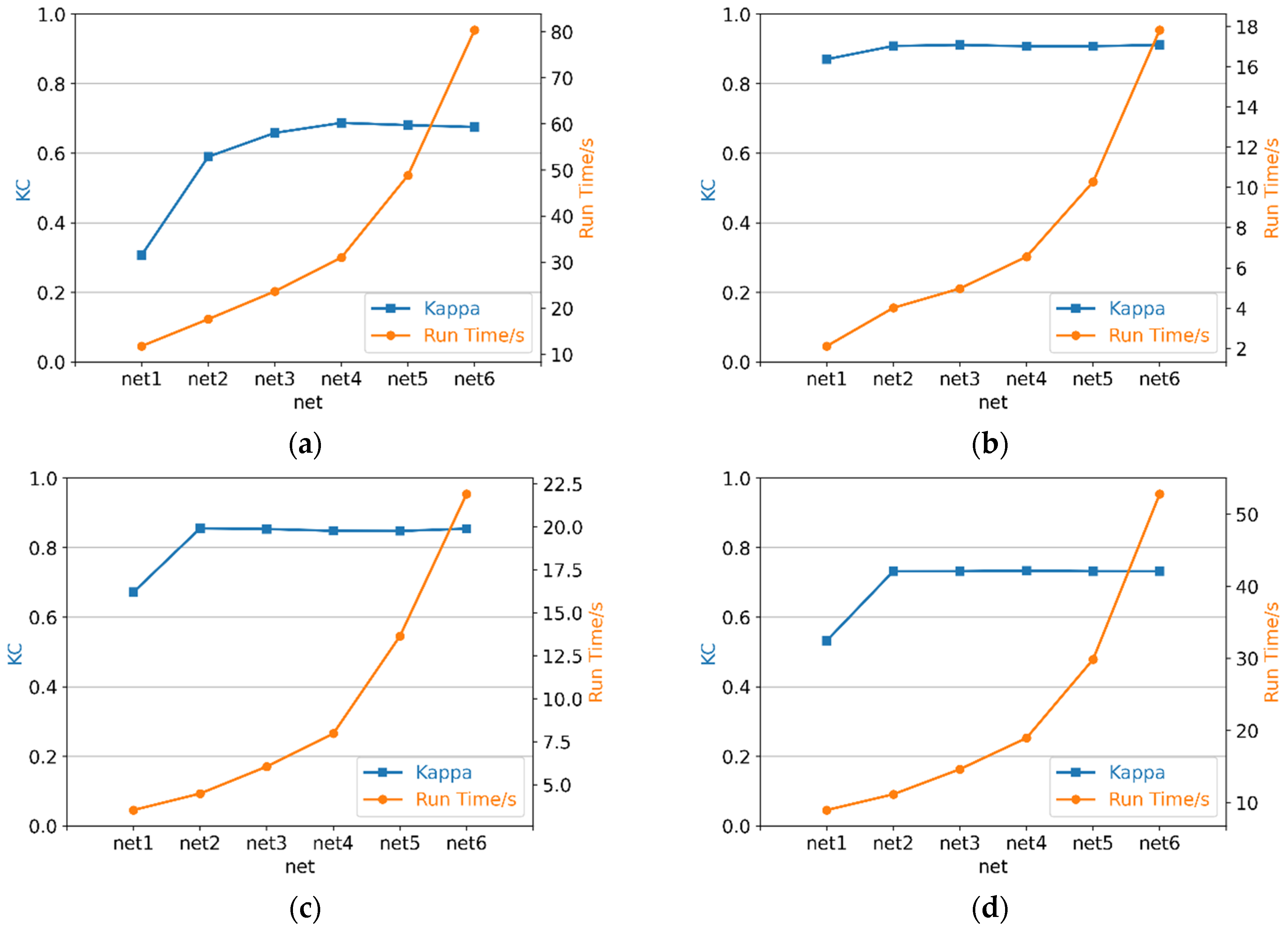

3.3.2. Parameter Setting

3.3.3. Running Environment

3.4. Results on Homogeneous Images

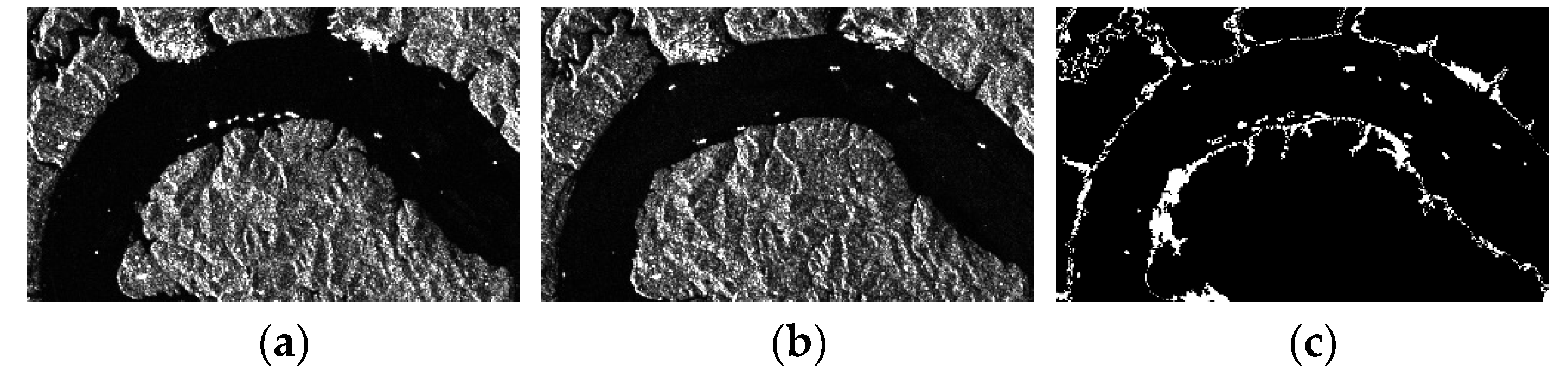

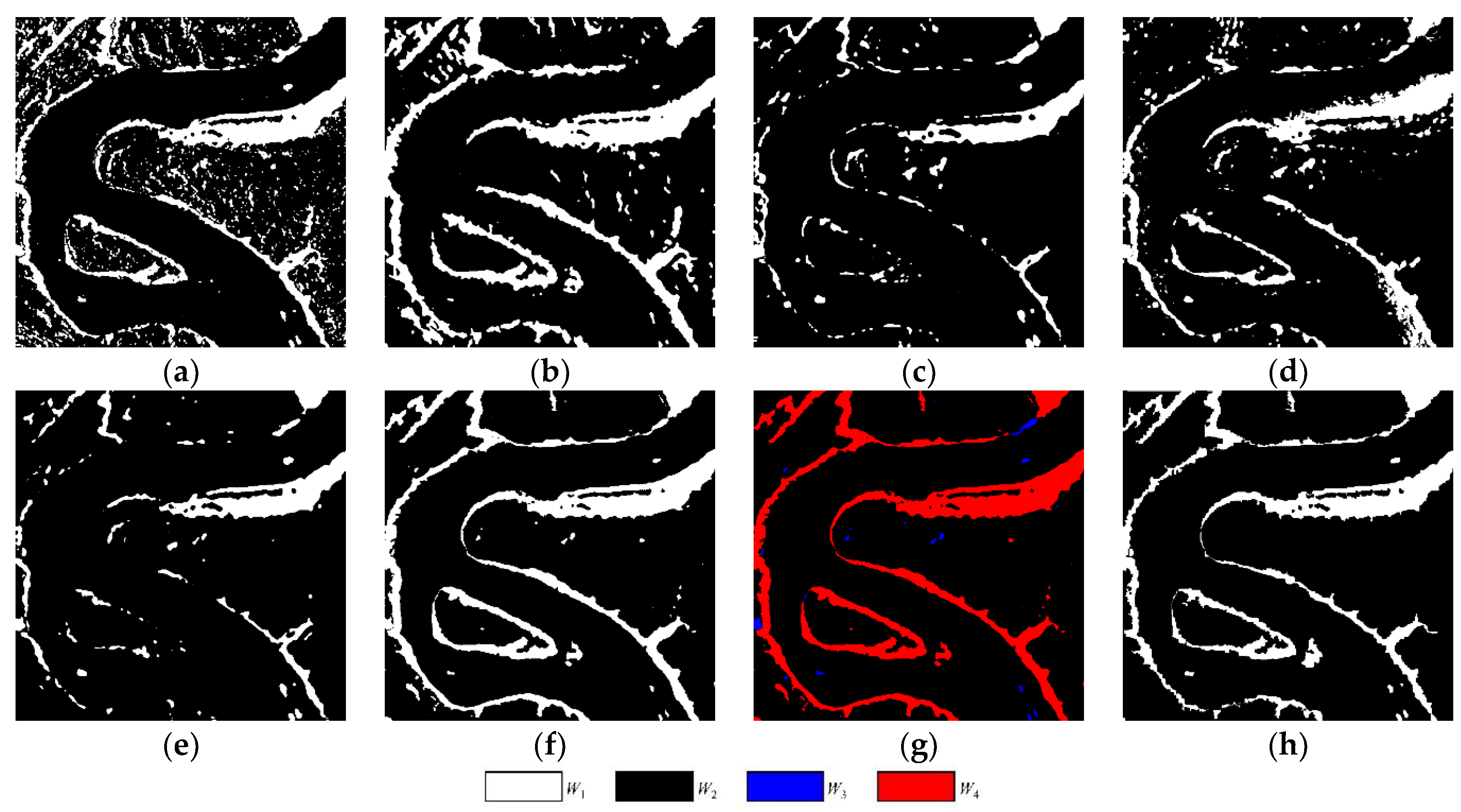

3.4.1. Results on Yangtze River Dataset 1

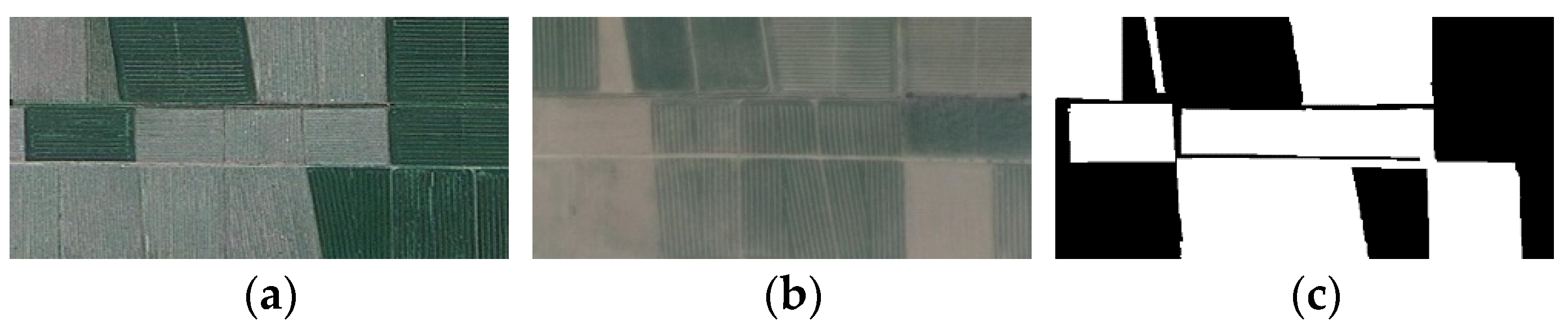

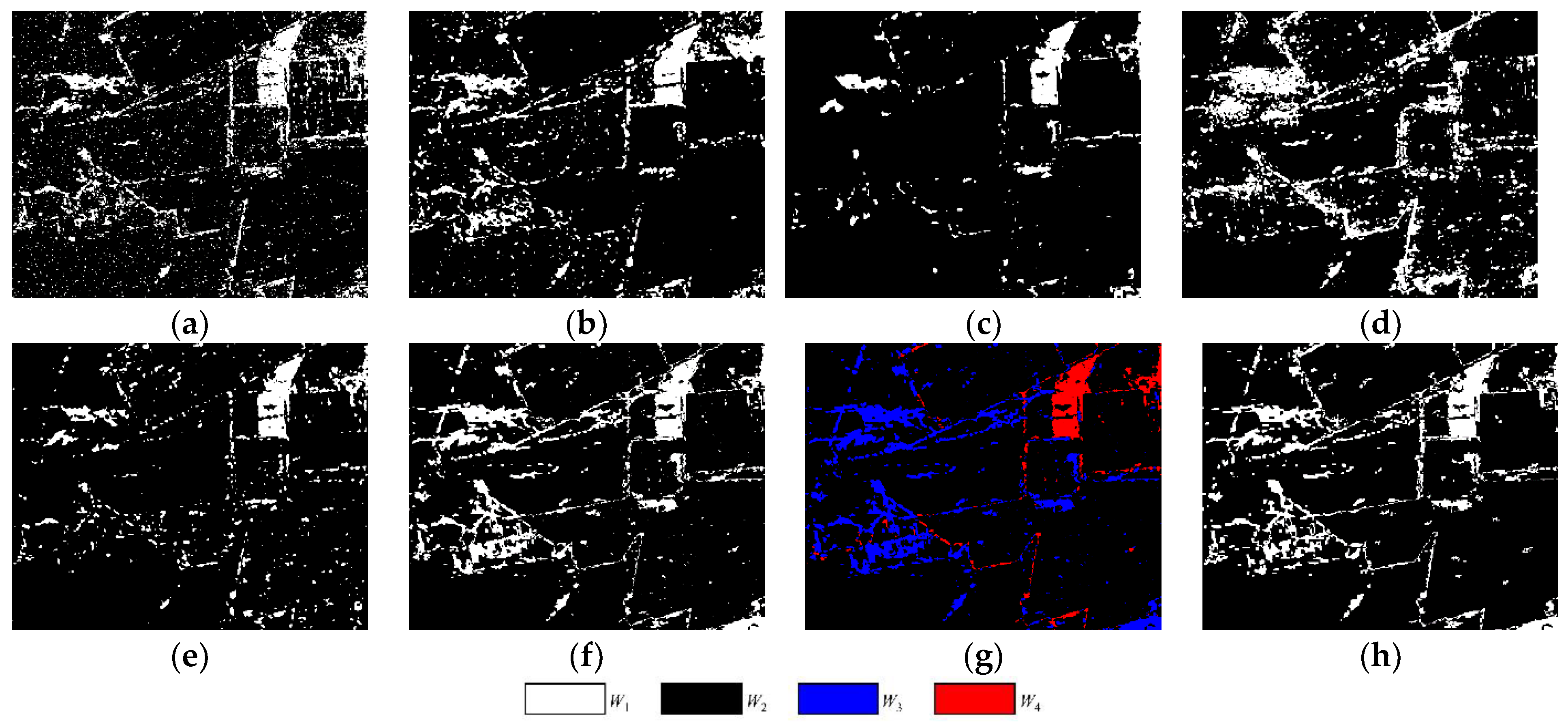

3.4.2. Results on Wanghong Dataset

3.5. Results on Heterogeneous Images

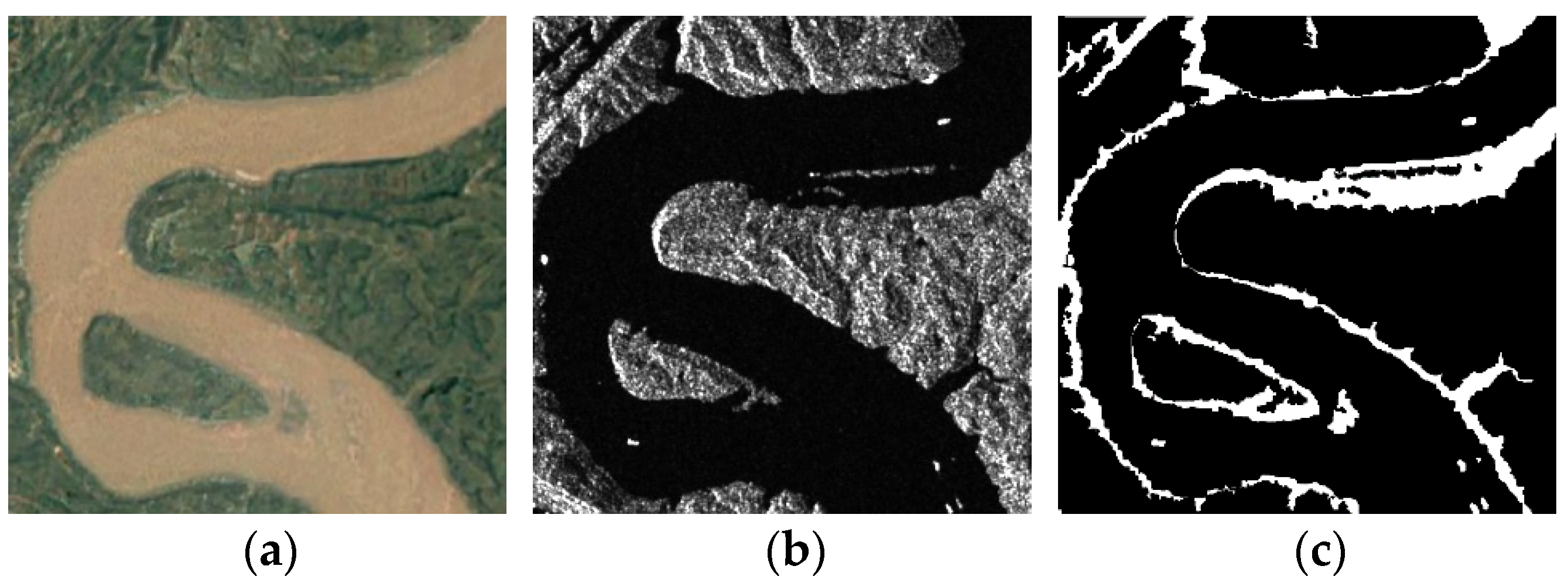

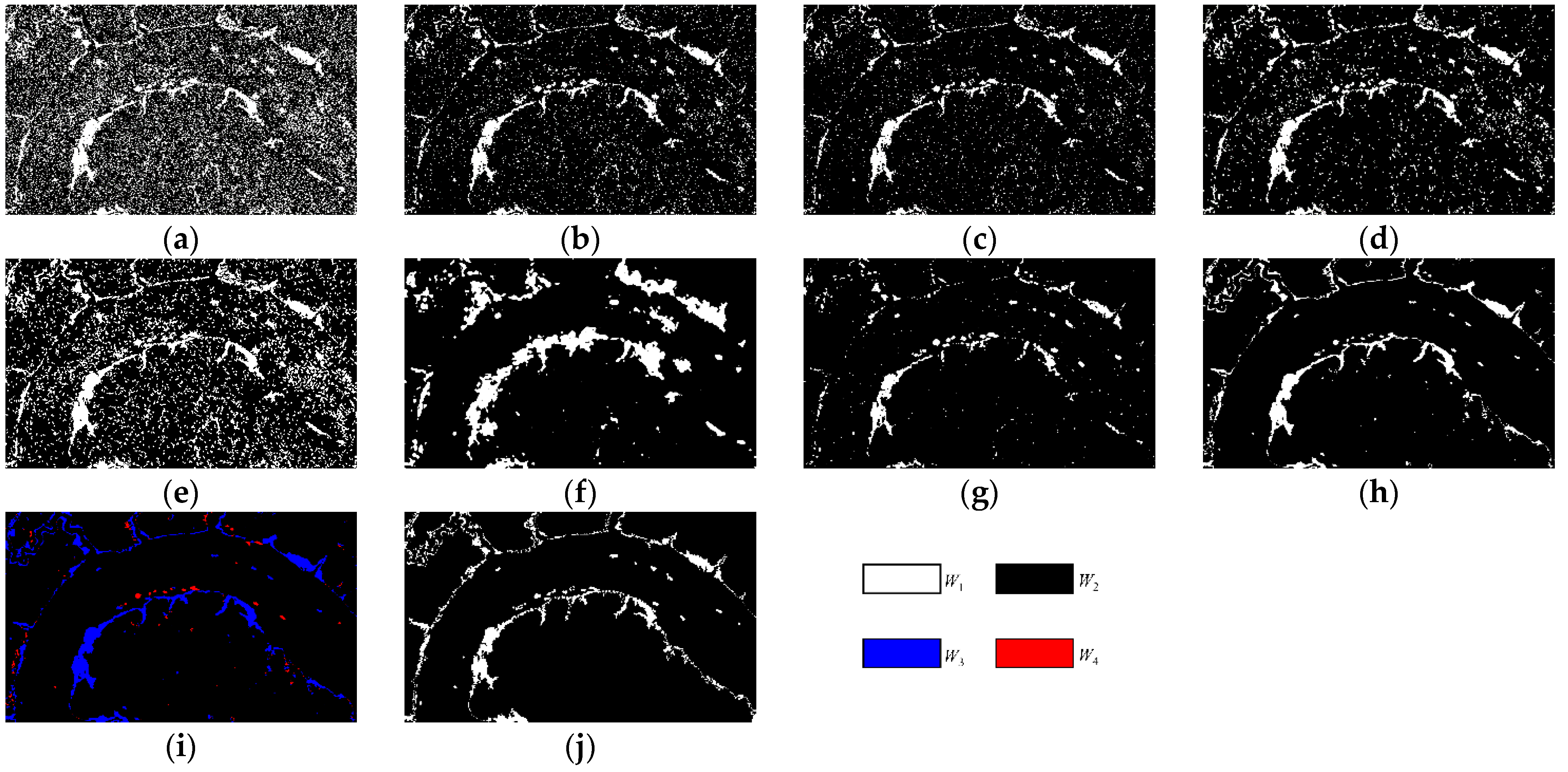

3.5.1. Results on Yangtze River Dataset 2

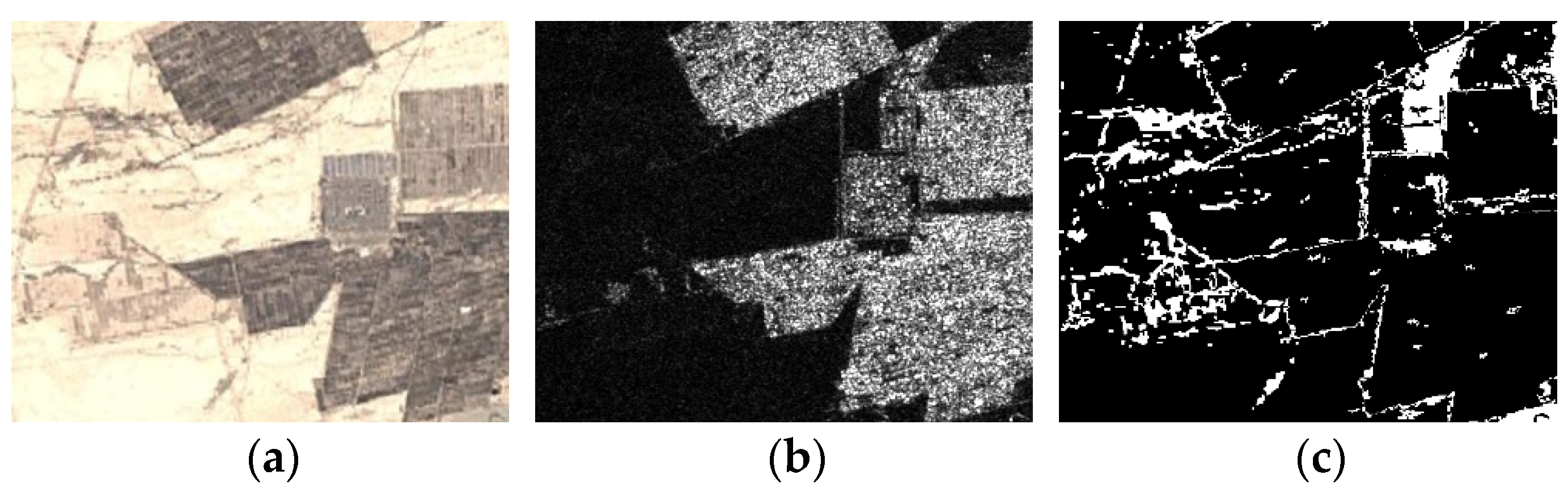

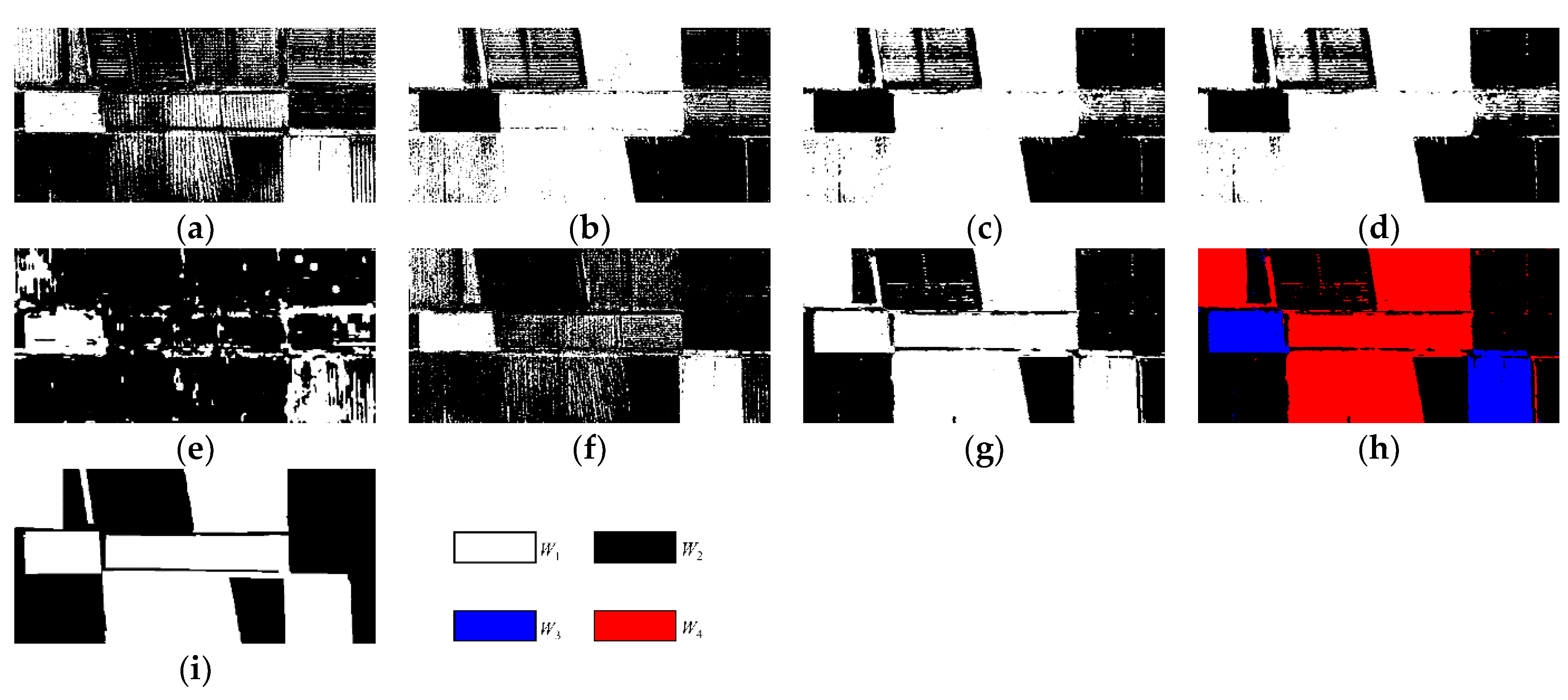

3.5.2. Results on Guazhou Dataset

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Conflicts of Interest


| Datasets | Methods | FP | FN | OE | PCC (%) | KC | F1 | Run Time (s) |
|---|---|---|---|---|---|---|---|---|
| Yangtze River dataset 1 | FCM | 321,853 | 13,363 | 335,216 | 75.11 | 0.2065 | 0.2848 | 4.68 |
| | PCA-Kmeans | 73,202 | 20,025 | 93,227 | 93.08 | 0.5280 | 0.6134 | 7.82 |
| | FLICM | 45,108 | 24,749 | 69,857 | 94.82 | 0.5857 | 0.5631 | 31.208 |
| | CWNN | 189,487 | 14,065 | 203,552 | 84.89 | 0.3331 | 0.3935 | 78.871 |
| | PCANet | 68,844 | 20,553 | 89,397 | 93.36 | 0.5373 | 0.5712 | 28,885.63 |
| | NPSG | 90,886 | 20,629 | 111,515 | 91.72 | 0.4754 | 0.5161 | 2165.674 |
| | INLPG | 10,659 | 35,181 | 45,840 | 96.59 | 0.6448 | 0.6621 | 1473.58 |
| | HELMC | 22,970 | 22,691 | 45,661 | 96.61 | 0.6975 | 0.7155 | 31.01 |

| Datasets | Methods | FP | FN | OE | PCC (%) | KC | F1 | Run Time (s) |
|---|---|---|---|---|---|---|---|---|
| Wanghong dataset | CVA | 19,314 | 69,421 | 88,735 | 69.18 | 0.3926 | 0.6481 | 1.44 |
| | FCM | 50,388 | 36,267 | 86,655 | 69.90 | 0.3936 | 0.7261 | 1.54 |
| | PCA-Kmeans | 57,592 | 35,827 | 93,419 | 67.55 | 0.3445 | 0.7117 | 1.60 |
| | FLICM | 58,054 | 35,894 | 93,948 | 67.37 | 0.3407 | 0.7104 | 6.25 |
| | SFA | 14,624 | 111,047 | 125,671 | 56.35 | 0.1532 | 0.3894 | 8.84 |
| | DSFA | 5654 | 90,784 | 96,438 | 66.51 | 0.3477 | 0.5558 | 25.85 |
| | HELMC | 6187 | 4318 | 10,505 | 96.35 | 0.9268 | 0.9655 | 6.56 |

| Datasets | Methods | FP | FN | OE | PCC (%) | KC | F1 | Run Time (s) |
|---|---|---|---|---|---|---|---|---|
| Yangtze River dataset 2 | HPT | 22,007 | 7842 | 29,849 | 91.71 | 0.6854 | 0.7336 | 177.19 |
| | LTFL | 24,538 | 4108 | 28,646 | 92.04 | 0.7119 | 0.7579 | 2596.56 |
| | CGAN | 5961 | 25,377 | 31,338 | 91.30 | 0.5551 | 0.6006 | 860.47 |
| | NPSG | 17,714 | 20,351 | 38,065 | 89.43 | 0.5394 | 0.6003 | 655.89 |
| | INLPG | 2626 | 21,436 | 24,062 | 93.32 | 0.6605 | 0.6957 | 162.09 |
| | HELMC | 11,136 | 3575 | 14,711 | 95.91 | 0.8367 | 0.8605 | 7.97 |

| Datasets | Methods | FP | FN | OE | PCC (%) | KC | F1 | Run Time (s) |
|---|---|---|---|---|---|---|---|---|
| Guazhou dataset | HPT | 43,655 | 40,911 | 84,566 | 91.35 | 0.6039 | 0.6533 | 628.32 |
| | LTFL | 34,695 | 43,109 | 77,804 | 92.04 | 0.6206 | 0.6657 | 4358.68 |
| | CGAN | 9588 | 78,360 | 87,948 | 91.00 | 0.4490 | 0.4898 | 2295.29 |
| | NPSG | 88,961 | 57,265 | 144,226 | 85.25 | 0.3831 | 0.4675 | 1858.14 |
| | INLPG | 17,459 | 67,620 | 85,079 | 91.30 | 0.5100 | 0.5546 | 813.25 |
| | HELMC | 26,053 | 25,075 | 51,128 | 94.77 | 0.7590 | 0.7888 | 18.96 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Han, T.; Tang, Y.; Yang, X.; Lin, Z.; Zou, B.; Feng, H. Change Detection for Heterogeneous Remote Sensing Images with Improved Training of Hierarchical Extreme Learning Machine (HELM). Remote Sens. 2021, 13, 4918. https://doi.org/10.3390/rs13234918
