Abstract

Change detection, i.e., the identification per pixel of changes for some classes of interest from a set of bi-temporal co-registered images, is a fundamental task in the field of remote sensing. It remains challenging due to unrelated forms of change that appear at different times in input images. Here, we propose a deep learning framework for the task of semantic change detection in very high-resolution aerial images. Our framework consists of a new loss function, a new attention module, new feature extraction building blocks, and a new backbone architecture that is tailored for the task of semantic change detection. Specifically, we define a new form of set similarity that is based on an iterative evaluation of a variant of the Dice coefficient. We use this similarity metric to define a new loss function as well as a new, memory-efficient, spatial and channel convolution Attention layer: the FracTAL. We introduce two new efficient self-contained feature extraction convolution units: the CEECNet and FracTALResNet units. Further, we propose a new encoder/decoder scheme, a network macro-topology, that is tailored for the task of change detection. The key insight in our approach is to facilitate the use of relative attention between two convolution layers in order to fuse them. We validate our approach by showing excellent performance and achieving state-of-the-art scores (F1 and Intersection over Union, hereafter IoU) on two building change detection datasets, namely, the LEVIRCD (F1: 0.918, IoU: 0.848) and the WHU (F1: 0.938, IoU: 0.882) datasets.

1. Introduction

Change detection is one of the core applications of remote sensing. The goal of change detection is to assign binary labels (“change” or “no change”) to every pixel in a study area based on at least two co-registered images taken at different times. The definition of “change” varies across applications and includes, for instance, urban expansion [1], flood mapping [2], deforestation [3], and cropland abandonment [4]. Changes of multiple land-cover classes, i.e., semantic change detection, can also be addressed simultaneously [5]. Change detection remains a challenging task because varying environmental conditions produce many forms of change that do not constitute a change for the objects of interest [6].

A plethora of change-detection algorithms has been devised and summarised in several reviews [7,8,9,10]. In recent years, computer vision has further pushed the state of the art, especially in applications where the spatial context is paramount. The rise of computer vision, especially deep learning, is related to advances and democratisation of powerful computing systems, increasing amounts of available data, and the development of innovative ways to exploit data [5].

The starting point of the approach presented in this work is the hypothesis that human intelligence identifies differences in images by looking for change in objects of interest at a higher cognitive level [6]. We infer this because the time required to identify the objects that changed between two images increases with the number of changed objects [11]. That is, there is a strong correlation between the processing time and the number of individual objects that changed. In other words, the higher the complexity of the changes, the more time is required to detect them. Therefore, simply subtracting extracted features from images (which is a constant-time operation) cannot account for the complexities of human perception. As a result, the deep convolutional neural networks proposed in this paper (e.g., Figure 1) address change detection without using bespoke feature subtraction. Instead, we rely on the attention mechanism to emphasise important areas between two bi-temporal co-registered images.

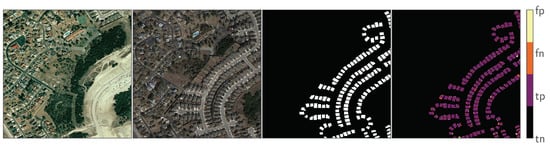

Figure 1.

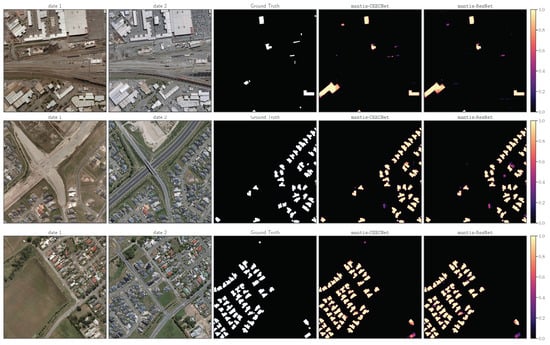

Example of the change detection performance of the proposed framework on the LEVIRCD test set [1] (architecture: mantis CEECNetV1). From left to right: input image at date 1, input image at date 2, ground truth building change mask, and colour-coded true negative (tn), true positive (tp), false positive (fp), and false negative (fn) predictions.

1.1. Related Work

1.1.1. On Attention

The attention mechanism was first introduced by [12] for the task of neural machine translation (i.e., language to language translation of sentences, e.g., English to French; hereafter NMT). This mechanism addressed the problem of translating very long sentences in encoder/decoder architectures. An encoder is a neural network that encodes a phrase to a fixed-length vector. Then the decoder operates on this output and produces a translated phrase (of variable length). It was observed that these types of architectures were not performing well when the input sentences were very long [13]. The attention mechanism provided a solution to this problem: instead of using all the elements of the encoder vector on equal footing for the decoder, the attention provided a weighted view of them. That is, it emphasised the locations of encoder features that were more important than others for the translation, or stated another way, it emphasised some input words that were more important for the meaning of the phrase. However, in NMT, the location of the translated words is not in direct correspondence with the input phrase because of the syntax changes. Therefore, Bahdanau et al. [12] introduced a relative alignment vector that was responsible for encoding the location dependences: in language, it is not only the meaning (value) of a word that is important but also its relative location in a particular syntax. Hence, the attention mechanism that was devised was comparing the emphasis of inputs at location i with respect to output words at locations j. Later, Vaswani et al. [14] further developed this mechanism and introduced the scaled dot product self-attention mechanism as a fundamental constituent of their Transformer architecture. This allowed the dot product to be used as a similarity measure between feature layers, including feature vectors that have a large dimensionality.

The idea of using attention for vision tasks was soon adopted by the computer vision community. Hu et al. [15] introduced channel-based attention in their squeeze and excitation architecture. Wang et al. [16] used spatial attention to facilitate non-local relationships across sequences of images. Chen et al. [17] combined both approaches by introducing joint spatial and channel-wise attention in convolutional neural networks, demonstrating an improved performance on image captioning datasets. Woo et al. [18] introduced the Convolution Block Attention Module (CBAM), which is also a form of spatial and channel attention, and showed improved performance on image classification and object detection tasks. To the best of our knowledge, the most faithful implementation of multi-head attention [14] for convolution layers is [19] (spatial attention).

Recently, there has been an effort to reduce the memory footprint of the attention mechanism by introducing the concept of Linear attention [20]. This idea was soon extended to 2D for computer vision problems [21]. In addition, the attention used in the recently introduced Visual Attention Transformer [22] also helps to reduce the memory footprint for computer vision tasks.

1.1.2. On Change Detection

Sakurada and Okatani [23] and Alcantarilla et al. [24] (see also [25]) were some of the first to introduce fully convolutional networks for the task of scene change detection in computer vision, and they both introduced street view change detection datasets. Sakurada and Okatani [23] extracted features from convolutional neural networks and combined them with super pixel segmentation to recover change labels in the original resolution. Alcantarilla et al. [24] proposed an approach that chains multi-sensor fusion simultaneous localisation and mapping (SLAM) with a fast 3D reconstruction pipeline that provides coarsely registered image pairs to an encoder/decoder convolutional network. The output of their algorithm is a pixel-wise change detection binary mask.

Researchers in the field of remote sensing picked up and evolved this knowledge and started using it for the task of land cover change detection. In the remote sensing community, a dual Siamese encoder with a single decoder is frequently adopted. Most approaches then differ in how the features extracted from the dual encoder are consumed (or compared) in order to produce a change detection prediction layer. In the following, we focus on approaches that follow this paradigm and are most relevant to our work. For a general overview of land cover change detection in the field of remote sensing, interested readers can consult [10,26]. For a general review of AI applications of change detection in the field of remote sensing, see [27].

Caye Daudt et al. [5] presented and evaluated various strategies for land cover change detection, establishing that their best algorithm was a joint multitasking segmentation and change detection approach. That is, their algorithm simultaneously predicted the semantic classes on each input image, as well as the binary mask of change between the two.

For the task of building change detection, Ji et al. [28] presented a two-stage methodology. In the first stage, they use a building extraction algorithm on single-date input images. In the second stage, the extracted binary masks are concatenated and fed into a different network that is responsible for identifying changes between the two binary layers. In order to evaluate the impact of the quality of the building extraction networks, the authors use two different architectures: the first is Mask-RCNN [29], one of the most successful networks to date for instance segmentation, and the second is the MS-FCN (multi-scale fully convolutional network), which is based on the original UNet architecture [30]. The advantage of this approach, according to the authors, is that they could use unlimited synthetic data for training the second stage of the algorithm.

Chen et al. [31] used a dual attentive convolutional neural network whose feature extractor was a pre-trained Siamese VGG16 network. The attention module they used for vision combined spatial and channel attention, and it was the one introduced in [14] but with a single head. Training was performed with a contrastive loss function.

Chen and Shi [1] presented the STANet, which consists of a feature extractor based on ResNet18 [32] and two versions of spatio-temporal attention modules: the basic spatial-temporal attention module (BAM) and the pyramid spatial-temporal attention module (PAM). The authors introduced the LEVIRCD change detection dataset and demonstrated excellent performance. Their training process uses a contrastive loss applied at the feature pixel level. Their algorithm predicts binary change labels.

Jiang et al. [33] introduced the PGA-SiamNet, which uses a dual Siamese encoder to extract features from the two input images. They used VGG16 for feature extraction. A key ingredient of their algorithm is the co-attention module [34], which was initially developed for video object segmentation. The authors use it to fuse the features extracted from each input image by the dual VGG16 encoder.

1.2. Our Contributions

In this work, we developed neural networks using attention mechanisms that emphasise areas of interest in two bi-temporal co-registered aerial images. It is the network that learns what to emphasise and how to extract features that describe change at a higher level. To this end, we propose a dual encoder–single decoder scheme that fuses information of corresponding layers with relative attention and extracts a segmentation mask as a final layer. This mask designates change for classes of interest and can also be used for the dual problem of class attribution of change. As in previous work, we make use of conditioned multi-tasking [35], which proves crucial for stabilising the training process and improving performance. In the multitasking setting, the algorithm first predicts the distance transform of the change mask, then it reuses this information and identifies the boundaries of the change mask, and, finally, it reuses both the distance transform and the boundaries to estimate the change segmentation mask. In summary, the main contributions of this work are:

- We introduce a new set similarity metric that is a variant of the Dice coefficient: the Fractal Tanimoto similarity measure (Section 2.1). This similarity measure has the advantage that it can be made steeper than the standard Tanimoto metric towards optimality, thus providing a finer-grained similarity metric between layers. The level of steepness is controlled by a recursion depth hyperparameter. It can be used both as a “sharp” loss function when fine-tuning a model at the later stages of training and as a set similarity metric between feature layers in the attention mechanism.

- Using the above set similarity as a loss function, we propose an evolving loss strategy for fine-tuning the training of neural networks (Section 2.2). This strategy helps to avoid overfitting and improves performance.

- We introduce the Fractal Tanimoto Attention Layer (hereafter FracTAL), which is tailored for vision tasks (Section 2.3). This layer uses the fractal Tanimoto similarity to compare queries with keys inside the Attention module. It is a form of spatial and channel attention combined.

- We introduce a feature extraction building block that is based on the residual neural network [32] and the fractal Tanimoto Attention (Section 2.4.2). The new FracTALResNet converges faster to optimality than standard residual networks and enhances performance.

- We introduce two variants of a new feature extraction building block, the Compress-Expand/Expand-Compress unit (hereafter CEECNet unit—Section 2.5.1). This unit exhibits enhanced performance in comparison with standard residual units and the FracTALResNet unit.

- Capitalising on these findings, we introduce a new backbone encoder/decoder scheme, the mantis macro-topology, that is tailored for the task of change detection (Section 2.5.2). The encoder part is a Siamese dual encoder, where the corresponding extracted features at each depth are fused together with FracTAL relative attention. In this way, information exchange between features extracted from bi-temporal images is enforced. There is no need for manual feature subtraction.

- Given the relative fusion operation between the encoder features at different levels, our algorithm achieves state-of-the-art performance on the LEVIRCD and WHU datasets without requiring the use of contrastive loss learning during training (Section 3.2). Therefore, it is easier to implement with standard deep learning libraries and tools.

Networks integrating the above-mentioned contributions yielded state-of-the-art performance for the task of building change detection in two benchmark datasets for change detection: the WHU [36] and LEVIRCD [1] datasets.

In addition to the previously mentioned sections, the following sections complete the work. In Section 2.6, we describe the setup of our experiments. In Section 3.1, we perform an ablation study of the proposed schemes. Finally, in Appendix C, we present various key elements of our architecture in mxnet/gluon style pseudocode. A software implementation of the models that relate to this work can be found at https://github.com/feevos/ceecnet (accessed on 6 September 2020).

2. Materials and Methods

2.1. Fractal Tanimoto Similarity Coefficient

In [35], we analysed the performance of the various flavours of the Dice coefficient and introduced the Tanimoto with a complement coefficient. Here, we further expand our analysis, and we present a new functional form for this similarity metric. We use it both as a self-similarity measure between convolution layers in a new attention module and a loss function for fine-tuning semantic segmentation models.

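For two (fuzzy) binary vectors of equal dimension, $\mathbf{p}$ and $\mathbf{l}$, whose elements lie in the range $[0, 1]$, the Tanimoto similarity coefficient is defined as:

$$T(\mathbf{p}, \mathbf{l}) = \frac{\mathbf{p} \cdot \mathbf{l}}{\mathbf{p}^2 + \mathbf{l}^2 - \mathbf{p} \cdot \mathbf{l}} \tag{1}$$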

Interestingly, the dot product between two fuzzy binary vectors is another similarity measure of their agreement. This inspired us to introduce an iterative functional form of the Tanimoto:

For example, expanding Equation (2) for , yields:

We can expand this for an arbitrary depth d, and then, we get the following simplified version of the fractal Tanimoto similarity measure:

This function (the simplified formula was obtained with Mathematica 11) takes values in the range $[0, 1]$, and it becomes steeper as d increases. At the limit $d \to \infty$, it behaves like the integral of the Dirac function around the point where the two vectors coincide. That is, the parameter d is a form of annealing “temperature”. Interestingly, although the iterative scheme was defined with d being an integer, for continuous values $d \geq 0$, the function remains bounded in the interval $[0, 1]$. That is:

where is the dimensionality of the fuzzy binary vectors , .
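The behaviour described above can be checked numerically. The following is a minimal sketch, not the reference implementation: it assumes the closed form $\mathcal{FT}^d(\mathbf{p}, \mathbf{l}) = \mathbf{p} \cdot \mathbf{l} \, / \, [2^d (\mathbf{p}^2 + \mathbf{l}^2) - (2^{d+1} - 1)\, \mathbf{p} \cdot \mathbf{l}]$, which reduces to the standard Tanimoto coefficient at $d = 0$. The function names and the small `eps` stabiliser are illustrative choices.

```python
import numpy as np

def fractal_tanimoto(p, l, depth=0, eps=1e-12):
    """Fractal Tanimoto similarity in the closed form assumed in the lead-in."""
    p = np.asarray(p, dtype=float)
    l = np.asarray(l, dtype=float)
    pl = np.sum(p * l)                     # p . l
    sq = np.sum(p * p) + np.sum(l * l)     # p^2 + l^2
    a = 2.0 ** depth
    b = 2.0 ** (depth + 1) - 1.0
    # a * sq - b * pl == a * |p - l|^2 + p . l >= 0, so the ratio stays in [0, 1].
    return pl / (a * sq - b * pl + eps)

def fractal_tanimoto_iterative(p, l, depth=0):
    """Same quantity via the recursion t <- t / (2 - t), starting from depth 0."""
    t = fractal_tanimoto(p, l, depth=0)
    for _ in range(depth):
        t = t / (2.0 - t)
    return t

label = np.array([1.0, 0.0, 1.0])   # ground truth
pred = np.array([0.9, 0.2, 0.7])    # predicted probabilities
values = [fractal_tanimoto(pred, label, d) for d in (0, 1, 5)]
```

Under this assumed form, the denominator can be rewritten as $2^d \, |\mathbf{p} - \mathbf{l}|^2 + \mathbf{p} \cdot \mathbf{l}$, which makes both properties explicit: the value never exceeds 1, and any mismatch between the two vectors is penalised more heavily as d grows.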

In the following, we will use the functional form of the fractal Tanimoto with complement [35], i.e.:

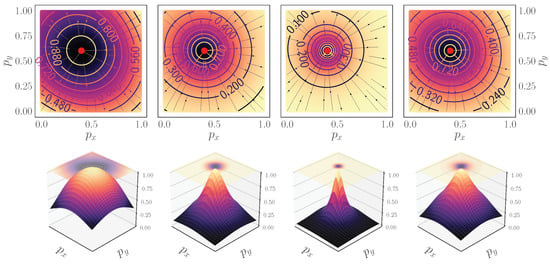

In Figure 2, we provide a simple example for a ground truth vector and a continuous vector of probabilities . On the top panel, we construct density plots of the Fractal Tanimoto function with complement . The gradient field lines that point to the ground truth are overplotted. In the bottom panels, we plot the corresponding 3D representations. From left to right, the first column corresponds to , the second to , and the third to . It is apparent that the effect of the d hyperparameter is to make the similarity metric steeper towards the ground truth. For all practical purposes (network architecture, evolving loss function), we use the average fractal Tanimoto loss (last column) due to having steeper gradients away from optimality. In order to avoid confusion between the depth d of the fractal Tanimoto loss function and the depth of the fractal Attention layer (introduced in Section 2.3), we will designate the depth of the loss function with the symbol :

Figure 2.

Fractal Tanimoto similarity measure. In the top row, we plot the two-dimensional density maps for the similarity coefficient. From left to right, the depths are . The last column corresponds to the average of values up to depth , i.e., . In the bottom figure, we represent the same values in 3D. The horizontal contour plot at corresponds to the Laplacian of the . It is observed that as the depth, d, of the iteration increases, the function becomes steeper towards optimality.

The formula is valid for ; for , it reverts to the standard fractal Tanimoto loss: .
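As an illustration of the averaged similarity with complement, the sketch below makes two assumptions that should be checked against the reference implementation: the complement form averages the similarity of $(\mathbf{p}, \mathbf{l})$ with that of $(1 - \mathbf{p}, 1 - \mathbf{l})$, and the average at loss depth D runs over $d = 0, \ldots, D - 1$, with a depth of zero falling back to the single $d = 0$ term. All function names are hypothetical.

```python
import numpy as np

def _ft(p, l, d, eps=1e-12):
    # Assumed closed form of the fractal Tanimoto similarity at depth d.
    pl = np.sum(p * l)
    sq = np.sum(p * p) + np.sum(l * l)
    return pl / (2.0 ** d * sq - (2.0 ** (d + 1) - 1.0) * pl + eps)

def ft_with_complement(p, l, d=0):
    """Average of the similarity of (p, l) and of the complements (1 - p, 1 - l)."""
    p = np.asarray(p, dtype=float)
    l = np.asarray(l, dtype=float)
    return 0.5 * (_ft(p, l, d) + _ft(1.0 - p, 1.0 - l, d))

def average_ft_with_complement(p, l, depth=0):
    """Mean over d = 0 .. depth - 1; depth 0 falls back to the single d = 0 term."""
    n_terms = max(int(depth), 1)
    return sum(ft_with_complement(p, l, d) for d in range(n_terms)) / n_terms

label = np.array([1.0, 0.0])
pred = np.array([0.7, 0.4])
standard = average_ft_with_complement(pred, label, depth=0)
averaged = average_ft_with_complement(pred, label, depth=5)
```

The complement term keeps the measure informative when the ground truth is mostly zeros, because agreement on the background also contributes to the score.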

2.2. Evolving Loss Strategy

In this section, we describe a training strategy that modifies the depth of the fractal Tanimoto similarity coefficient, when used as a loss function, on each learning rate reduction. For minimisation problems, the fractal Tanimoto loss is defined as one minus the fractal Tanimoto similarity coefficient. In the following, when we refer to the fractal Tanimoto loss function, it should be understood that this is defined through the similarity coefficient, as described above.

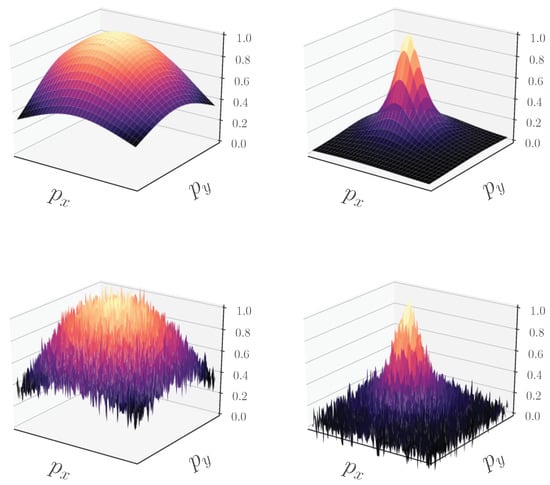

During training and until the first learning rate reduction, we use the standard Tanimoto with complement (i.e., zero depth). The reason for this is that for a random initialization of the weights (i.e., for an initial prediction point in the space of probabilities away from optimality), the gradients are steeper towards the best values for this particular loss function (in fact, for cross entropy they are even steeper). This can be seen in the bottom row of Figure 2: for an initial probability vector away from the ground truth, the gradients are steeper at zero depth. As training evolves, and the value of the weights approaches optimality, the predictions approach the ground truth, and the loss function flattens out. With batch gradient descent (and variants), we are not really calculating the true (global) loss function but rather a noisy approximation of it. This is because in each batch loss evaluation, we are not using all of the data for the gradient evaluation. In Figure 3, we present a graphical representation of the true landscape and a noisy version of it for a toy 2D problem. In the top row, we plot the value of the similarity as well as the average value of the loss functions for the ground truth vector. In the corresponding bottom row, we have the same plots with random Gaussian noise added. In the initial phases of training, the average gradients are greater than the local values due to noise. As the network reaches optimality, the average gradient towards optimality becomes smaller and smaller, and the gradients due to noise dominate the training. Once we reduce the learning rate, the step the optimiser takes is even smaller; therefore, it cannot easily escape local optima (due to noise). What we propose is to “shift gears”: once training stagnates, we change the loss function to a similar one that is steeper towards optimality and can provide gradients that (on average) dominate the noise.
Our choice during training is the following set of learning rates and depths of the fractal Tanimoto loss: . In all evaluations of loss functions for , we use the average value for all values (Equation (8)).
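The “shift gears” idea can be sketched as a lookup from the number of learning rate reductions to a loss depth. This is a minimal sketch under stated assumptions: the loss is taken as one minus the averaged fractal Tanimoto similarity with complement (in the closed form assumed here), and the `SCHEDULE` values are hypothetical placeholders, not the settings used in the experiments.

```python
import numpy as np

def _ft(p, l, d, eps=1e-12):
    # Assumed closed form of the fractal Tanimoto similarity at depth d.
    pl = np.sum(p * l)
    sq = np.sum(p * p) + np.sum(l * l)
    return pl / (2.0 ** d * sq - (2.0 ** (d + 1) - 1.0) * pl + eps)

def ft_loss(p, l, depth=0):
    """1 - averaged fractal Tanimoto similarity with complement (assumed form)."""
    p = np.asarray(p, dtype=float)
    l = np.asarray(l, dtype=float)
    n_terms = max(int(depth), 1)
    sim = sum(0.5 * (_ft(p, l, d) + _ft(1.0 - p, 1.0 - l, d)) for d in range(n_terms))
    return 1.0 - sim / n_terms

# Hypothetical (learning rate, loss depth) stages; the values are illustrative only.
SCHEDULE = [(1e-3, 0), (1e-4, 5), (1e-5, 10), (1e-6, 15)]

def stage_after(num_lr_reductions, schedule=SCHEDULE):
    """Return the (learning rate, depth) pair in force after n learning rate reductions."""
    return schedule[min(num_lr_reductions, len(schedule) - 1)]

label = np.array([1.0, 0.0])
near_optimum = np.array([0.9, 0.1])   # a prediction already close to the ground truth
losses = [ft_loss(near_optimum, label, stage_after(n)[1]) for n in range(len(SCHEDULE))]
```

For the same near-optimal prediction, the loss value grows with each stage, which is the mechanism by which a deeper loss supplies a larger average signal once the standard Tanimoto has flattened out.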

Figure 3.

Fractal Tanimoto similarity measure with noise. On the top row, from left to right, is the , and . The bottom row is the same corresponding similarity measures with Gaussian random noise added. When the algorithmic training approaches optimality with the standard Tanimoto, local noise gradients tend to dominate over the background average gradient. Increasing the slope of the background gradient at later stages of training is a remedy to this problem.

2.3. Fractal Tanimoto Attention

Here, we present a novel convolutional attention layer based on the new similarity metric and a methodology of fusing information from the output of the attention layer to features extracted from convolutions.

2.4. Fractal Tanimoto Attention Layer

In the pioneering work of [14], the attention operator is defined through a scaled dot product operation. For images, in particular, i.e., two-dimensional features, assuming that is the query, the key, and its corresponding value, the (spatial) attention is defined as (see also [37]):

Here d is the dimension of the keys, and the softmax operation is with respect to the first (channel) dimension. The term is a scaling factor that ensures the Attention layer scales well even with a large number of dimensions [14]. The operator corresponds to the inner product with respect to the spatial dimensions height, H, and width, W, while is a dot product with respect to channel dimensions. This is more apparent in index notation:

In this formalism, each channel of the query features is compared with each of the channels of the key values. In addition, there is a 1-1 correspondence between keys and values, meaning that a unique value corresponds to each key. The point of the dot product is to emphasise the key-value pairs that are more relevant for the particular query. That is, the dot product selects the keys that are most similar to the particular query. It represents the projection of the queries onto the key space. The softmax operator provides a weighted “view” of all the values for a particular set of queries, keys, and values, in other words, a “soft” attention mechanism. In the multi-head attention paradigm, multiple attention heads that follow the principles described above are concatenated together. One of the key disadvantages of this formulation when used in vision tasks (i.e., two-dimensional features) is the very large memory footprint that this layer exhibits. For 1D problems, such as Natural Language Processing, this is not an issue in general.

Here, we follow a different approach. We develop our formalism for the case where the number of query channels is identical to the number of key channels, C. However, if desired, our formalism can work for the general case where the two differ.

Let $\mathbf{q}$ be the query features, $\mathbf{k}$ the keys, and $\mathbf{v}$ the values. In our formalism, it is a requirement for these operators to have values in $[0, 1]$. This can be easily achieved by applying the sigmoid operator. Our approach is a joint spatial and channel attention mechanism. With the use of the Fractal Tanimoto similarity coefficient, we define the spatial, ⊠, and channel, ⊡, similarity between the query, $\mathbf{q}$, and key, $\mathbf{k}$, features according to:

where the spatial and channel products are defined as:

It is important to note that the output of these operators lies numerically within the range (0, 1), where 1 indicates identical similarity and 0 indicates no correlation between the query and key. That is, there is no need for normalisation or scaling as is the case for the traditional dot product similarity.

In our approach, the spatial and channel attention layers are defined with element-wise multiplication (denoted by the symbol ⊙):

It should be stressed that these operations do not assume a 1-1 mapping between keys and values. Instead, we consider a one-to-many map; that is, a single key can correspond to a set of values. Therefore, there is no need to use a softmax activation (see also [38]). The overall attention is defined as the average of these two operators:

In practice, we use the averaged fractal Tanimoto similarity coefficient with complement, , both for spatial and channel wise attention.
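A compact way to see how the two similarities combine is the NumPy sketch below. It is illustrative only and rests on several assumptions: a single sample with layout (C, H, W), the closed form of the fractal Tanimoto used here, one similarity reduced over the channel axis (yielding one weight per pixel) and the other reduced over the spatial axes (yielding one weight per channel). The learned convolutions that produce the query, key, and value, and any normalisation of the output, are omitted.

```python
import numpy as np

def ft_similarity(q, k, depth, axis, eps=1e-12):
    """Averaged fractal Tanimoto with complement, reduced over `axis` (assumed form)."""
    def ft(a, b, d):
        ab = np.sum(a * b, axis=axis, keepdims=True)
        sq = np.sum(a * a, axis=axis, keepdims=True) + np.sum(b * b, axis=axis, keepdims=True)
        return ab / (2.0 ** d * sq - (2.0 ** (d + 1) - 1.0) * ab + eps)
    n_terms = max(int(depth), 1)
    total = 0.0
    for d in range(n_terms):
        total = total + 0.5 * (ft(q, k, d) + ft(1.0 - q, 1.0 - k, d))
    return total / n_terms

def fractal_attention(q, k, v, depth=5):
    """Joint attention for q, k, v of shape (C, H, W) with values in [0, 1]."""
    per_pixel = ft_similarity(q, k, depth, axis=0)          # shape (1, H, W)
    per_channel = ft_similarity(q, k, depth, axis=(1, 2))   # shape (C, 1, 1)
    # Element-wise emphasis of the values, then the average of the two branches.
    return 0.5 * (per_pixel * v + per_channel * v)

rng = np.random.default_rng(0)
q = rng.random((4, 3, 3))
k = rng.random((4, 3, 3))
v = rng.random((4, 3, 3))
same = fractal_attention(q, q, v)    # identical query and key
mixed = fractal_attention(q, k, v)   # unrelated query and key
```

Both similarity maps are far smaller than the value tensor and are broadcast against it, so no pairwise comparison between all channels or all pixels is ever materialised.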

As stated previously, it is possible to extend the definitions of spatial and channel products in a way where we compare each of the channels (respectively, spatial pixels) of the query with each of the channels (respectively, spatial pixels) of the key. However, this imposes a heavy memory footprint and makes deeper models prohibitive, even for modern-day GPUs. This will be made clear with an example of FracTAL spatial similarity vs. the dot product similarity that appears in SAGAN [39] self-attention. Let us assume that we have an input feature layer of size (e.g., this appears in the layer at depth 6 of UNet-like architectures, starting from 32 initial features). From this, three layers of the same dimensionality are produced: the query, the key, and the value. With the Fractal Tanimoto spatial similarity, , the output of the similarity of query and keys is (Equation (12)). The corresponding output of the dot similarity of spatial components in the self-attention is → (Equation (11)), having a C-times higher memory footprint.

In addition, we found that this approach did not improve the performance for the case of change detection and classification. Indeed, one needs to question this for vision tasks: the initial definition of attention [12] introduced a relative alignment vector that was necessary because, for the task of NMT, the syntax of phrases changes from one language to the other. That is, the relative emphasis with respect to location between two vectors is meaningful. When we compare two images (features) at the same depth of a network (created by two different inputs, as is the case for change detection), we anticipate that the channels (or spatial pixels) will be in correspondence. For example, the RGB (or hyperspectral) order of inputs does not change. That is, in vision, the situation can be different from NLP because we do not have a relative location change as happens with words in phrases.

We propose the use of the Fractal Tanimoto Attention Layer (hereafter FracTAL) for vision tasks as an improvement over the scaled dot product attention mechanism [14] for the following reasons:

- The similarity is automatically scaled in the region $(0, 1)$; therefore, it does not require normalisation or activation to be applied. This simplifies the design and implementation of Attention layers and enables training without ad hoc normalisation operations.

- The dot product does not have an upper or lower bound; therefore, a positive value cannot be a quantified measure of similarity. In contrast, the fractal Tanimoto similarity has a bounded range of values in $(0, 1)$. The lowest value indicates no correlation, and the maximum value perfect similarity. It is thus easier to interpret.

- The iteration depth d is a form of hyperparameter, such as “temperature” in annealing. Therefore, the similarity can become as steep as we desire (by modification of the temperature parameter d), even steeper than the dot product similarity. This can translate to finer query and key similarity.

- Finally, it is efficient in terms of the GPU memory footprint (when one considers that it does both channel and spatial attention), thus allowing the design of more complex convolution building blocks.

The implementation of the FracTAL is given in Listing A.2. The multi-head attention is achieved using group convolutions for the evaluation of queries, keys, and values.

2.4.1. Attention Fusion

A critical part in the design of convolution building blocks enhanced with attention is the way the information from attention is passed to convolution layers. To this aim, we propose fusion methodologies of feature layers with the FracTAL for two cases: self-attention fusion and relative attention fusion, where information from two layers is combined.

2.4.2. Self Attention Fusion

We propose the following fusion methodology between a feature layer, $\mathbf{L}$, and its corresponding FracTAL self-attention layer, $\mathbf{A}$:

$$\mathbf{F} = \mathbf{L} + \gamma \, \mathbf{L} \odot \mathbf{A} = \mathbf{L} \odot (\mathbf{1} + \gamma \mathbf{A})$$

Here, $\mathbf{F}$ is the output layer produced from the fusion of $\mathbf{L}$ and the Attention layer, $\mathbf{A}$; ⊙ describes element-wise multiplication; $\mathbf{1}$ is a layer of ones with the same shape as $\mathbf{L}$; and $\gamma$ is a trainable parameter initialised at zero. We next describe the reasons why we propose this type of fusion.

The Attention output is maximal (i.e., close to 1) in areas of the features where it must “attend” and minimal otherwise (i.e., close to zero). Directly multiplying element-wise the FracTAL attention layer, $\mathbf{A}$, with the features, $\mathbf{L}$, effectively lowers the values of features in areas that are not “interesting”. It does not alter the value of areas that “are interesting”. This can produce loss of information in areas where $\mathbf{A}$ “does not attend” (i.e., it does not emphasise), which would otherwise be valuable at a later stage. Indeed, areas of the image that the algorithm “does not attend” should not be perceived as empty space [11]. For this reason, the “emphasised” features, $\mathbf{L} \odot \mathbf{A}$, are added to the original input $\mathbf{L}$. Moreover, $\mathbf{F}$ is identical to $\mathbf{L}$ in spatial areas where $\mathbf{A}$ tends to zero and is emphasised in areas where $\mathbf{A}$ is maximal.

In the initial stages of training, the attention layer, $\mathbf{A}$, does not contribute to $\mathbf{F}$ due to the initial value of the trainable parameter, $\gamma = 0$. Therefore, it does not add complexity during the initial phase of training, and it allows for an annealing process of the Attention contribution (see also [31,39]). This property is particularly important when $\mathbf{L}$ is produced from a known performant recipe (e.g., residual building blocks).
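The fusion rule is small enough to verify by hand. The sketch below assumes the form "features times (one plus a scalar weight times attention)", with the scalar playing the role of the trainable parameter that starts at zero; the function and variable names are illustrative.

```python
import numpy as np

def self_attention_fusion(features, attention, gamma=0.0):
    """Fuse a feature layer with its attention layer as features * (1 + gamma * attention)."""
    features = np.asarray(features, dtype=float)
    attention = np.asarray(attention, dtype=float)
    return features * (1.0 + gamma * attention)

features = np.array([[2.0, -1.0], [0.5, 4.0]])
attention = np.array([[1.0, 0.0], [0.5, 1.0]])   # values in [0, 1]

at_init = self_attention_fusion(features, attention, gamma=0.0)   # start of training
later = self_attention_fusion(features, attention, gamma=1.0)     # attention switched on
```

With the weight at zero the output equals the input features, so the block initially behaves like the unit that produced them. Once the weight grows, features are amplified where the attention is high and left unchanged where it is zero, so nothing is suppressed.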

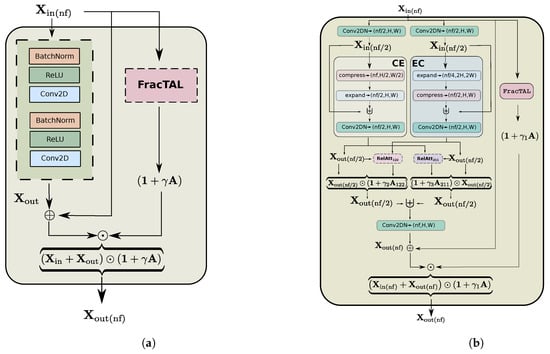

In Figure 4a, we present this fusion mechanism for the case of a Residual unit [32,40]. Here, the input layer, , is subject to the residual block sequence of Batch normalisation, convolutions, and ReLU activations and produces the layer. A separate branch uses the input to produce the self-attention layer (see Listing A.2). Then we multiply element-wise the standard output of the residual unit, , with the layer. In this way, at the beginning of training, this layer behaves as a residual layer, which has the excellent convergence properties of ResNet at the initial stages, and at later stages of training, the Attention becomes gradually more active and allows for greater performance. A software routine of this fusion for the residual unit, in particular, can be seen in Listing A.4 in Appendix C.

Figure 4.

Left panel (a): the FracTAL Residual unit. This building block demonstrates the fusion of the residual block with the self-attention layer FracTAL evaluated from the input features. Right panel (b): the CEECNet (Compress–Expand/Expand–Compress) feature extraction units. The symbol ⊎ represents concatenation of features along the channel dimension (for V1). For version V2, we replace all of the concatenation operations, ⊎, followed by the normalised convolution layer with relative fusion attention layers, as described in Section 2.4.3 (see also Listing A.3).

2.4.3. Relative Attention Fusion

Assuming we have two input layers, we can calculate the relative attention of each with respect to the other. This is achieved by using the layer we want to “attend to” as the query and the layer we want to use as information for attention as the key and value. In practical implementations, the query, key, and value layers are each obtained by applying a convolution layer to some input.

Here, the parameters are initialized at zero, and the concatenation operations are performed along the channel dimension. Conv2DN is a two-dimensional convolution operation followed by a normalisation layer (e.g., BatchNorm [41]). An implementation of this process in mxnet/gluon pseudocode style can be found in Listing A.3.

The relative attention fusion presented here can be used as a direct replacement of concatenation followed by a convolution layer in any network design.
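As a rough illustration of the data flow only, the following NumPy sketch fuses two layers by emphasising each with attention computed relative to the other, concatenating along the channel dimension, and restoring the channel count with a pointwise convolution. The function `toy_attention` is a hypothetical stand-in and is not the FracTAL attention; the names `gamma1`, `gamma2`, and `weights` are assumptions, and the normalisation step of Conv2DN and the convolutions that produce the query, key, and value are omitted.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def toy_attention(query, key, value):
    # Stand-in gate with values in (0, 1); NOT the FracTAL attention.
    return sigmoid(query * key) * sigmoid(value)

def pointwise_conv(x, weights):
    # 1x1 convolution: mixes channels, keeps spatial size.
    # x: (batch, C_in, H, W), weights: (C_out, C_in)
    return np.einsum("oc,bchw->bohw", weights, x)

def relative_fusion(layer1, layer2, weights, gamma1=0.0, gamma2=0.0):
    # Emphasise layer1 using layer2 as key/value, and vice versa.
    att12 = toy_attention(query=layer1, key=layer2, value=layer2)
    att21 = toy_attention(query=layer2, key=layer1, value=layer1)
    emph1 = layer1 * (1.0 + gamma1 * att12)
    emph2 = layer2 * (1.0 + gamma2 * att21)
    # Concatenate along channels, then restore the channel count.
    stacked = np.concatenate([emph1, emph2], axis=1)
    return pointwise_conv(stacked, weights)

rng = np.random.default_rng(0)
layer1 = rng.normal(size=(1, 4, 5, 5))
layer2 = rng.normal(size=(1, 4, 5, 5))
weights = rng.normal(size=(4, 8))  # maps 2*C channels back to C

fused = relative_fusion(layer1, layer2, weights, gamma1=0.3, gamma2=0.3)
```

With both scaling parameters at zero, the sketch reduces to plain concatenation followed by a convolution, which is consistent with the fusion being a drop-in replacement for that pattern.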

2.5. Architecture

We break down the network architecture into three component parts: the micro-topology of the building blocks, which represents the fundamental constituents of the architecture; the macro-topology of the network, which describes how building blocks are connected to one another to maximise performance; and the multitasking head, which is responsible for transforming the features produced by the micro- and macro-topologies into the final prediction layers where change is identified. Each choice of micro- and macro-topology has a different impact on the GPU memory footprint. Usually, selecting a very deep macro-topology improves performance, but it also increases the overall memory footprint and does not leave enough space for an adequate number of filters (channels) in each micro-topology. There is therefore a trade-off between the micro-topology feature extraction capacity and the overall network depth. Guided by this, we seek to maximise the feature expression capacity of the micro-topology for a given number of filters, perhaps at the expense of consuming computational resources.

2.5.1. Micro-Topology: The CEECNet Unit

The basic intuition behind the construction of the CEEC building block is that it provides two different, yet complementary, views for the same input. The first view (the CE block—see Figure 4b) is a “summary understanding” operation (performed in lower resolution than the input—see also [42,43,44]). The second view (the EC block) is an “analysis of detail” operation (performed in higher spatial resolution than the input). It then exchanges information between these two views using relative attention, and it finally fuses them together by emphasising the most important parts using the FracTAL.

Our hypothesis and motivation for this approach is quite similar to the scale-space analysis in computer vision [45]: viewing input features at different scales allows the algorithm to focus on different aspects of the inputs and thus perform more efficiently. The fact that merely increasing the resolution of an image does not increase its information content is not relevant here: guided by the loss function, the algorithm can learn to represent, at higher resolution, features that it could not represent at lower resolutions. We know this from the successful application of convolutional networks in super-resolution problems [46] as well as (variational) autoencoders [47,48]: in both of these paradigms, deep learning approaches manage to meaningfully increase the resolution of features that exist in lower spatial dimension layers.

In the following, we define the volume V of features of dimension (C, H, W) as the product of the number of their channels (or filters), C, with their spatial dimensions, height H and width W, i.e., V = C · H · W. Volumes are quoted per batch element, i.e., excluding the batch dimension. The two branches are as follows. The first, a “mini ∪-Net” operation (CE block), is responsible for summarising information from the input features by first compressing the total volume of features to half its original size and then restoring it. The second, a “mini ∩-Net” operation (EC block), is responsible for analysing the input features in higher detail: it initially doubles the volume of the input features by halving the number of features and doubling each spatial dimension, and it subsequently compresses this expanded volume to its original size. In both branches, the input is concatenated with the output, and then a normed convolution restores the number of channels to their original input value. Note that the mini ∩-Net is nothing more than the symmetric (or dual) operation of the mini ∪-Net.
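The volume bookkeeping of the two branches can be checked with a few lines of arithmetic. The input size below is hypothetical, and the CE shape (channels doubled, each spatial dimension halved) is an inference from the statement that the volume is halved by a stride-2 compression; the EC shape follows the text directly.

```python
def volume(channels, height, width):
    """Feature volume per batch element: V = C * H * W."""
    return channels * height * width

# Hypothetical input size, chosen only for illustration.
C, H, W = 32, 128, 128
v_in = volume(C, H, W)

# CE branch (assumed): channels doubled, each spatial dimension halved.
v_ce = volume(2 * C, H // 2, W // 2)

# EC branch (from the text): channels halved, each spatial dimension doubled.
v_ec = volume(C // 2, 2 * H, 2 * W)

print(v_in, v_ce, v_ec)
```

Under these assumptions the CE branch works at half the input volume and the EC branch at twice the input volume, before each is restored to the original size.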

The outputs of the EC and CE blocks are fused together with relative attention fusion (Section 2.4.3). In this way, the exchange of information between the layers is encouraged. The final emphasised outputs are concatenated together, thus restoring the initial number of filters, and the produced layer is passed through a normed convolution in order to bind the relative channels. The operation is concluded with a FracTAL residual operation and fusion (similar to Figure 4a), where the input is added to the final output and emphasised by the self-attention on the original input. The CEECNet building block is described schematically in Figure 4b.

The compression operation, C, is achieved by applying a normed convolution layer of stride equal to 2 (k = 3, p = 1, s = 2) followed by another convolution layer that is identical in every aspect except the stride, which is now s = 1. The purpose of the first convolution is to both resize the layer and extract features. The purpose of the second convolution layer is to extract features. The expansion operation, E, is achieved by first resizing the spatial dimensions of the input layer using bilinear interpolation, and then the number of channels is brought to the desired size by the application of a convolution layer (k = 3, p = 1, s = 1). Another identical convolution layer is applied to extract further features. The full details of the convolution operations used in the EC and CE blocks can be found in Listing A.5.
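The effect of these kernel, padding, and stride settings on spatial size follows from the standard convolution output-size formula. The sketch below applies it to a hypothetical input of 256 pixels per side and assumes the bilinear resize in the expansion uses a factor of 2.

```python
def conv_output_size(size, kernel=3, padding=1, stride=1):
    """Spatial output size of a convolution along one dimension."""
    return (size + 2 * padding - kernel) // stride + 1

size_in = 256  # hypothetical spatial size

# Compression: a stride-2 convolution followed by a stride-1 convolution.
size_compressed = conv_output_size(conv_output_size(size_in, stride=2), stride=1)

# Expansion: resize by an assumed factor of 2, then two stride-1 convolutions.
size_expanded = conv_output_size(conv_output_size(2 * size_in, stride=1), stride=1)

print(size_compressed, size_expanded)
```

The stride-1 convolutions with k = 3 and p = 1 preserve the spatial size, so only the stride-2 convolution and the interpolation change the resolution.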

2.5.2. Macro-Topology: Dual Encoder, Symmetric Decoder

In this section, we present the macro-topology (i.e., backbone) of the architecture that uses either the CEECNet or the FracTALResNet units as building blocks. We start by stating the intuition behind our choices and continue with a detailed description of the macro-topology. Our architecture is heavily influenced by the ResUNet-a model [35]. We will refer to this macro-topology as the mantis topology.

In designing this backbone, a key question we tried to address is how we can facilitate exchange of information between features extracted from images at different dates. The following two observations guided us:

- We make the hypothesis that the process of change detection between two images requires a mechanism similar to human attention. We base this hypothesis on the fact that the time required for identifying objects that changed in an image correlates directly with the number of changed objects. That is, the more objects a human needs to identify between two pictures, the more time is required. This is in accordance with the feature-integration theory of Attention [11]. In contrast, subtracting features extracted from two different input images is a process that is constant in time, independent of the complexity of the changed features. Therefore, we avoid using ad hoc feature subtraction in all parts of the network.

- In order to identify change, a human needs to look at and compare two images multiple times, back and forth. We need to emphasise features on the image at date 1 based on information from the image at date 2 (Equation (16)) and, vice versa, on the image at date 2 based on information from the image at date 1 (Equation (17)). We then combine both of these pieces of information (Equation (18)). That is, we exchange information between the two with relative attention (Section 2.4.3) at multiple levels. Stated as a question: what is important on input image 1 based on information that exists on image 2, and vice versa?

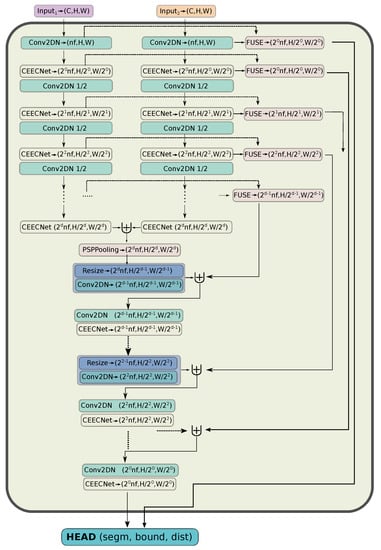

Given the above, we now proceed in detailing the mantis macro-topology (with CEECNetV1 building blocks, see Figure 5). The encoder part is a series of building blocks, where the size of the features is downscaled between the application of each subsequent building block. Downscaling is achieved with a normed convolution with stride, s = 2, without using activations. There exist two encoder branches that share identical parameters in their convolution layers. The input to each branch is an image from a different date, and the role of the encoder is to extract features at different levels from each input image. During the feature extraction by each branch, each of the two inputs is treated as an independent entity. At successive depths, the outputs of the corresponding building block are fused together with the relative attention methodology, as described in Section 2.4.3, but they are not used until later in the decoder part. Crucially, this fusion operation suggests to the network that the important parts of the first layer will be defined by what exists on the second layer (and vice versa), but it does not dictate how exactly the network should compare the extracted features (e.g., by demanding the features to be similar for unchanged areas, and maximally different for changed areas—we tried this approach, and it was not successful). This is something that the network will have to discover in order to match its predictions with the ground truth. Finally, the last encoder layers are concatenated and inserted into the pyramid scene pooling layer (PSPPooling—[35,49]).

Figure 5.

The mantis CEECNetV1 architecture for the task of change detection. The Fusion operation (FUSE) is described in detail with mxnet/gluon style pseudocode in Listing A.3.

In the (single) decoder part, the network extracts features based on the relative information that exists in the two inputs. Starting from the output of the PSPPooling layer (middle of the network), we upscale lower-resolution features with bilinear interpolation and combine them with the fused outputs of the encoder through a concatenation operation followed by a normed convolution layer, in a way similar to the ResUNet-a [35] model. The mantis CEECNetV2 model replaces all concatenation operations followed by a normed convolution with a Fusion operation, as described in Listing A.3.

The final features extracted from this macro-topology architecture are the final layer from the CEECNet unit that has the same spatial dimensions as the first input layers, as well as the Fused layers from the first CEECNet unit operation. Both of these layers are inserted into the segmentation HEAD.

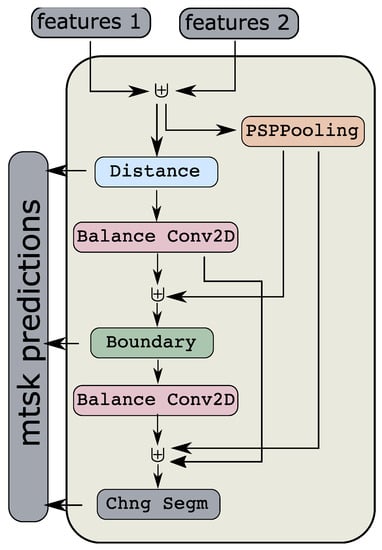

2.5.3. Segmentation HEAD

The features extracted from the feature extractor (Figure 5) are inserted into a conditioned multitasking segmentation head (Figure 6) that produces three layers: a segmentation mask, a boundary mask, and a distance transform mask. This is identical to the ResUNet-a “causal” segmentation head, which has shown great performance in a variety of segmentation tasks [35,50], with two modifications.

Figure 6.

Conditioned multitasking segmentation HEAD. Here, features 1 and 2 are the outputs of the mantis CEECNet features extractor. The symbol ⊎ represents concatenation along the channels dimension. The algorithm first predicts the distance transform of the classes (regression), then re-uses this information to estimate the boundaries, and finally, both of these predictions are re-used for the change prediction layer. Here, Chng Segm stands for the change segmentation layer and mtsk for multitasking predictions.

The first modification relates to the evaluation of boundaries: instead of using a standard sigmoid activation for the boundaries layer, we are inserting a scaling parameter, , that controls how sharp the transition from 0 to 1 takes place, i.e.,

Here is a smoothing parameter. The coefficient is learned during training. We inserted this scaling after noticing in initial experiments that the algorithm needed improvement close to the boundaries of objects. In other words, the algorithm was having difficulty separating nearby pixels. Numerically, we anticipate that the distance between the values of activations of neighbouring pixels is small due to the patch-wise nature of convolutions. Therefore, a remedy to this problem is making the transition boundary sharper. We initialise training with .
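To make the idea of a sharper sigmoid concrete, here is a small sketch of one plausible form: the sigmoid input is divided by the sum of a scaling coefficient and a small smoothing constant. This functional form, the names `gamma` and `eps`, and their default values are all assumptions for illustration and are not taken from the text.

```python
import numpy as np

def crisp_sigmoid(x, gamma=1.0, eps=1e-2):
    """Sigmoid with a sharpness control (assumed form).

    gamma: scaling coefficient (learned in the paper; fixed here).
    eps:   small smoothing constant that avoids division by zero.
    """
    return 1.0 / (1.0 + np.exp(-x / (gamma + eps)))

x = np.linspace(-1.0, 1.0, 5)
soft = crisp_sigmoid(x, gamma=1.0)   # gentle transition from 0 to 1
sharp = crisp_sigmoid(x, gamma=0.1)  # much steeper transition
```

In this form, a smaller scaling coefficient pushes the activations of nearby pixels further apart, which is the stated purpose of the modification.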

The second modification to the segmentation HEAD relates to balancing the number of channels of the boundaries and distance transform predictions before re-using them in the final prediction of segmentation change detection. This is achieved by passing them through a convolution layer that brings the number of channels to the desired number. Balancing the number of channels treats the input features and the intermediate predictions as equal contributions to the final output. In Figure 6, we present schematically the conditioned multitasking head and the various dependencies between layers. Interested users can refer to [35] for details on the conditioned multitasking head.

2.6. Experimental Design

In this section, we describe the setup of our experiments for the evaluation of the proposed algorithms on the task of change detection. We start by describing the two datasets we used (LEVIRCD [1] and WHU [36]) as well as the data augmentation methodology we followed. Then we proceed in describing the metrics used for performance evaluation and the inference methodology. All models mantis CEECNetV1, V2, and mantis FracTAL ResNet have an initial number of filters equal to nf = 32, and the depth of the encoder branches was equal to 6. We designate these models with D6nf32.

2.6.1. LEVIRCD Dataset

The LEVIR-CD change detection dataset [1] consists of 637 pairs of VHR aerial images with a resolution of 0.5 m per pixel. It covers various types of buildings, such as villa residences, small garages, apartments, and warehouses, and contains 31,333 individual building changes. The authors provide a train/validation/test split, which standardises performance evaluation. We used a different split for training and validation; however, we report performance on the test set the authors provide. For each tile from the training and validation sets, we used ∼47% of the area for training and the remaining ∼53% for validation. For a rectangular tile with sides of length a and b, this is achieved by using as the training area a smaller rectangle within the tile that covers ∼47% of the total area ab; the remaining ∼53% forms the validation area. From each of these areas, we extracted overlapping chips using a fixed stride in each dimension.
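The area split can be sketched as follows, assuming both sides of the tile are scaled by the same factor so that the inner rectangle covers the requested fraction of the area. The function name, the equal-scaling assumption, and the tile size are hypothetical.

```python
import math

def split_tile(a, b, train_fraction=0.47):
    """Return training rectangle sides and the train/validation areas."""
    scale = math.sqrt(train_fraction)  # same factor on both sides (assumption)
    a_train, b_train = scale * a, scale * b
    train_area = a_train * b_train
    val_area = a * b - train_area
    return (a_train, b_train), train_area, val_area

# Hypothetical tile of 1024 x 1024 pixels.
(sides, train_area, val_area) = split_tile(1024, 1024)
print(sides, train_area / 1024**2, val_area / 1024**2)
```

Under this assumption each side of the training rectangle is roughly 69% of the corresponding tile side.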

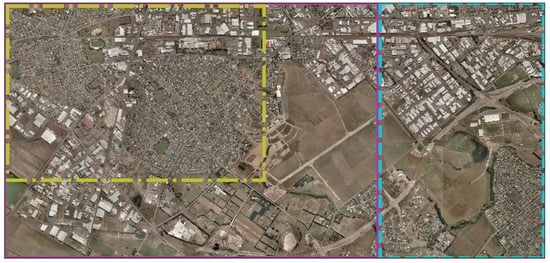

2.6.2. WHU Building Change Detection

The WHU building change dataset [36] consists of two aerial images (2011 and 2016) that cover an area of ∼20 km², which changed between 2011 (earthquake) and 2016. The images have a spatial resolution of 0.3 m. The dataset contains 12,796 buildings. We split the triplets of images and ground truth change labels into three areas: 70% for training and validation and 30% for testing. We further split the 70% part into ∼47% area for training and ∼53% area for validation, in a way similar to the split we followed for each tile of the LEVIRCD dataset. The splitting can be seen in Figure 7. Note that the training area is spatially separated from the test area (the validation area is in between the two). The reason for this rather unusual train/validation ratio is to ensure adequate spatial separation between training and test areas, thus minimising spatial correlation effects.

Figure 7.

Train–validation–test split of the WHU dataset. The yellow (dash-dot line) rectangle represents the training data. The area between the magenta (solid line) and the yellow (dash-dot) rectangles represents the validation data. Finally, the cyan rectangle (dashed) is the test data. The reasoning for our split is to include in the validation data both industrial and residential areas and isolate (spatially) the training area from the test area in order to avoid spurious spatial correlation between training/test sites. The train–validation–test ratio split is approximately 33:37:30.

2.6.3. Data Preprocessing and Augmentation

We split the original tiles into overlapping training chips using a sliding-window methodology, with a stride equal to half the size of the sliding window. The chip size is the maximum we could fit to our architecture given the memory of the GPUs at our disposal (NVIDIA P100 16GB). With this chip size, we managed to fit a batch size of 3 per GPU for each of the architectures we trained. Due to the small batch size, we used GroupNorm [51] for all normalisation layers.

The data augmentation methodology we used during training our network was the one used for semantic segmentation tasks as described in [35]. That is, random rotations with respect to a random centre with a (random) zoom in/out operation. We also implemented random brightness and random polygon shadows. In order to help the algorithm explicitly on the task of change detection, we implemented time reversal (reversing the order of the input images should not affect the binary change mask) and random identity (we randomly gave as input one of the two images, i.e., null change mask). These latter transformations were implemented at a rate of 50%.
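The two change-specific augmentations can be sketched in NumPy as below. The function names are hypothetical, and applying each transformation independently with probability 0.5 is one reading of the stated 50% rate, not a confirmed detail.

```python
import numpy as np

def time_reversal(img1, img2, mask):
    # Swapping the dates leaves the binary change mask unchanged.
    return img2, img1, mask

def random_identity(img1, img2, mask, rng):
    # Feed the same image twice: nothing changed, so the mask is all zeros.
    chosen = img1 if rng.random() < 0.5 else img2
    return chosen, chosen, np.zeros_like(mask)

def augment_pair(img1, img2, mask, rng, rate=0.5):
    # Each transformation is applied independently (assumption).
    if rng.random() < rate:
        img1, img2, mask = time_reversal(img1, img2, mask)
    if rng.random() < rate:
        img1, img2, mask = random_identity(img1, img2, mask, rng)
    return img1, img2, mask

rng = np.random.default_rng(0)
date1 = rng.uniform(size=(3, 8, 8))
date2 = rng.uniform(size=(3, 8, 8))
change = (rng.uniform(size=(8, 8)) > 0.5).astype(np.uint8)
aug1, aug2, aug_mask = augment_pair(date1, date2, change, rng)
```

Both transformations encode prior knowledge about the task: binary change is symmetric in time, and an image compared with itself contains no change.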

2.6.4. Metrics

In this section, we present the metrics we used for quantifying the performance of our algorithms. With the exception of the Intersection over Union (IoU) metric, for the evaluation of all other metrics, we used the Python library pycm as described in [52]. The statistical measures we used in order to evaluate the performance of our modelling approach are pixel-wise precision, recall, F1 score, Matthews Correlation Coefficient (MCC) [53], and the Intersection over union. These are defined through:
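For reference, the standard pixel-wise definitions of these metrics in terms of the binary confusion matrix can be written as a short sketch. This is a plain-Python illustration with a hypothetical function name, not the pycm-based evaluation used for the reported numbers, and it does not guard against zero denominators.

```python
import math

def change_metrics(tp, fp, fn, tn):
    """Standard binary metrics from confusion-matrix counts."""
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    f1 = 2 * precision * recall / (precision + recall)
    mcc = (tp * tn - fp * fn) / math.sqrt(
        (tp + fp) * (tp + fn) * (tn + fp) * (tn + fn)
    )
    iou = tp / (tp + fp + fn)
    return {"precision": precision, "recall": recall,
            "f1": f1, "mcc": mcc, "iou": iou}

# Hypothetical counts for a 100-pixel image.
scores = change_metrics(tp=8, fp=2, fn=2, tn=88)
print(scores)
```

Note that the IoU and F1 score ignore true negatives, whereas the MCC uses all four entries of the confusion matrix, which makes it informative when the "no change" class dominates.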

2.6.5. Inference

In this section, we provide a brief description of the model selection after training (i.e., which epochs will perform best on the test set) as well as the inference methodology we followed for large raster images that exceed the memory capacity of modern-day GPUs.

2.6.6. Inference on Large Rasters

Our approach is identical to the one used in [35], with the difference that now we are processing two input images. Interested readers that want to know the full details can refer to Section 3.4 of [35].

During inference on test images, we extract multiple overlapping windows with a fixed step (stride) size. The final prediction “probability”, per pixel, is evaluated as the average “probability” over all inference windows that overlap on the given pixel. In this definition, “probability” refers to the output of the final softmax classification layer, which is a continuous value in the range [0, 1]. It is not a true probability in the statistical sense; however, it does express the confidence of the algorithm in obtaining the inference result.

With this overlapping approach, we make sure that the pixels that are closer to the edges and correspond to boundary areas for some inference windows appear closer to the centre area of the subsequent inference windows. For the boundary pixels of the large raster, we apply reflect padding before performing inference [30].
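The overlapping-window averaging with reflect padding can be sketched in NumPy as follows. The `predict` callable is a hypothetical stand-in for the trained model applied to one window, the padding width of half a window is an assumption, and a single input raster is used for brevity although the actual task takes two co-registered images.

```python
import numpy as np

def sliding_window_inference(raster, predict, window, stride):
    """Average per-pixel predictions over overlapping windows.

    raster:  array of shape (C, H, W)
    predict: maps a (C, window, window) chip to a (window, window) map
    """
    _, h, w = raster.shape
    pad = window // 2  # assumed padding width
    # Extra padding so that the windows tile the padded raster exactly.
    extra_h = (-(h + 2 * pad - window)) % stride
    extra_w = (-(w + 2 * pad - window)) % stride
    padded = np.pad(
        raster,
        ((0, 0), (pad, pad + extra_h), (pad, pad + extra_w)),
        mode="reflect",
    )
    total = np.zeros(padded.shape[1:])
    count = np.zeros(padded.shape[1:])
    for i in range(0, padded.shape[1] - window + 1, stride):
        for j in range(0, padded.shape[2] - window + 1, stride):
            chip = padded[:, i:i + window, j:j + window]
            total[i:i + window, j:j + window] += predict(chip)
            count[i:i + window, j:j + window] += 1
    averaged = total / count
    return averaged[pad:pad + h, pad:pad + w]  # crop back to the raster

rng = np.random.default_rng(0)
raster = rng.uniform(size=(3, 40, 50))
toy_predict = lambda chip: chip.mean(axis=0)  # hypothetical model
prob_map = sliding_window_inference(raster, toy_predict, window=16, stride=8)
```

Because the stride is smaller than the window, pixels near the edge of one window fall near the centre of a neighbouring window, and the average combines both views.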

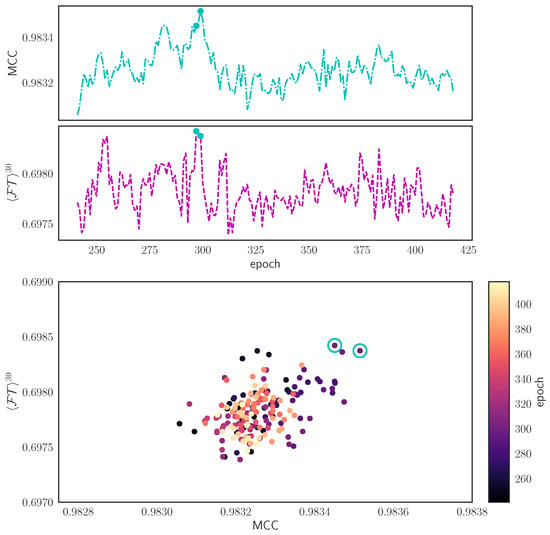

2.6.7. Model Selection Using Pareto Efficiency

For monitoring the performance of our modelling approach, we usually rely on the MCC metric on the validation dataset. We observed, however, that when we simultaneously reduce the learning rate and increase the depth, the MCC initially decreases (indicating a performance drop), while the similarity coefficient is (initially) strictly increasing. After training starts to stabilise around some optimality region (with the standard noise oscillations), there are various cases where the MCC metric and the similarity coefficient do not agree on which is the best model. To account for this effect and avoid losing good candidate solutions, we average the inference output of a set of the best candidate models. These best candidates are the models that belong to the Pareto front [54] of the most-evolved solutions; we accept the weights of all models on the Pareto front as solutions for inference. A similar approach was followed for the selection of hyperparameters for optimal solutions in [50].
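Selecting the non-dominated checkpoints for two validation metrics can be sketched in plain Python. The function name and the metric values are hypothetical; each point is a pair (MCC, similarity coefficient) for one checkpoint, and larger is better for both.

```python
def pareto_front(points):
    """Return indices of non-dominated points (larger is better)."""
    front = []
    for i, p in enumerate(points):
        dominated = any(
            all(qk >= pk for qk, pk in zip(q, p))
            and any(qk > pk for qk, pk in zip(q, p))
            for j, q in enumerate(points)
            if j != i
        )
        if not dominated:
            front.append(i)
    return front

# Hypothetical (MCC, similarity) values for four checkpoints.
checkpoints = [(0.90, 0.80), (0.92, 0.78), (0.89, 0.79), (0.91, 0.81)]
best = pareto_front(checkpoints)
print(best)
```

In this toy example two checkpoints survive: one has the highest MCC and the other the highest similarity, and neither is better than the other on both metrics. The inference outputs of the surviving checkpoints would then be averaged.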

In Figure 8, we plot, in the top panel, the evolution of the MCC and of the similarity coefficient during training. Clearly, these two performance metrics do not always agree. For example, the similarity coefficient is close to optimality at approximately epoch 250, while the MCC is clearly suboptimal. We highlight the two solutions that belong to the Pareto front with filled circles (cyan dots). In the bottom panel, we plot the correspondence of the MCC values with the similarity metric. The two circles show the corresponding non-dominated Pareto solutions (i.e., best candidates).

Figure 8.

Pareto front selection after the last reduction in the learning rate. The bottom panel designates with open cyan circles the two points that are equivalent in terms of prediction quality when both the MCC and the similarity coefficient are taken into account. The top two panels show the corresponding evolutions of these measures during training. There, the Pareto optimal points are designated with full circle dots (cyan).

3. Results

In this section, we first present an ablation study of the various modules and techniques we introduce. Then, we report the quantitative performance of the models we developed for the task of change detection on the LEVIRCD [1] and WHU [36] datasets. All of the inference visualisations are performed with models that use the proposed FracTAL depth, although this is not always the best-performing network.

3.1. FracTAL Units and Evolving Loss Ablation Study

In this section, we present the performance of the FracTAL ResNet [32,40] and CEECNet units we introduced against ResNet and CBAM [18] baselines as well as the effect of the evolving loss function on training a neural network. We also present a qualitative and quantitative analysis of the effect of the depth parameter in the FracTAL, based on the mantis FracTALResNet network.

3.1.1. FracTAL Building Blocks Performance

We construct four networks that are identical in the macro-topological graph (backbone) but different in micro-topology (building blocks). The first two networks are equipped with two different versions of CEECNet: the first is identical to the one presented in Figure 4b; the second is similar to the one in Figure 4b, with all concatenation operations that are followed by normed convolutions replaced with Fusion operations, as described in Listing A.3. The third network uses the FracTALResNet building blocks (Figure 4a). Finally, the fourth network uses standard residual units as building blocks, as described in [32,40] (ResNet V2). All building blocks have the same dimensionality of input and output features. However, each type of building block has a different number of parameters. By keeping the dimensionality of input and output layers identical across all networks, we believe the performance differences of the networks will reflect the feature expression capabilities of the building blocks we compare.

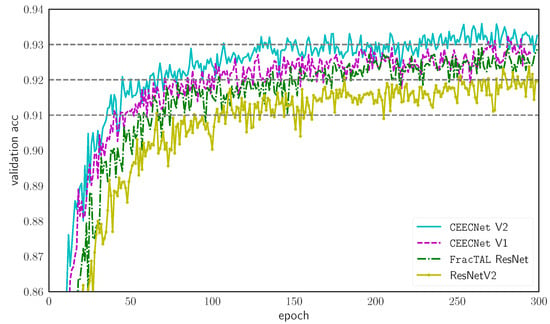

In Figure 9, we plot the validation loss for 300 epochs of training on the CIFAR10 dataset [55] without learning rate reduction. We use cross entropy loss and the Adam optimizer [56]. The backbone of each of the networks is described in Table A1. It can be seen that all building blocks equipped with the FracTAL outperform standard Residual units in both convergence and performance. In particular, we find that the performance and convergence properties of the networks follow: ResNet < FracTALResNet < CEECNetV1 < CEECNetV2. The performance difference between FracTALResNet and CEECNetV1 will become more apparent in the change detection datasets. The V2 version of CEECNet, which uses Fusion with relative attention (cyan solid line) instead of concatenation (V1, magenta dashed line) for combining layers in the Compress-Expand and Expand-Compress branches, is superior to V1. However, it is a computationally more intensive unit.

Figure 9.

Comparison of the V1 and V2 versions of CEECNet building blocks with a FracTALResNet implementation and standard ResNet V2 building blocks. The models were trained for 300 epochs on CIFAR10 with standard cross entropy loss.

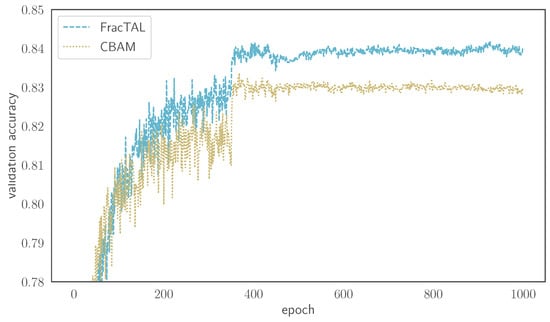

3.1.2. Comparing FracTAL with CBAM

Having shown the performance improvement over the residual unit, we proceed to compare the proposed FracTAL attention with a modern attention module, namely the Convolution Block Attention Module (CBAM) [18]. We construct two networks that are identical in all aspects except the implementation of the attention used. We base our implementation on a publicly available repository that reproduces the results of [18], written in PyTorch (https://github.com/luuuyi/CBAM.PyTorch, accessed on 1 February 2021), which we translated into the mxnet framework. From this implementation, we use the CBAM-resnet34 model, and we compare it with a FracTAL-resnet34 model, i.e., a model that is identical to the previous one, with the exception that we replaced the CBAM attention with the FracTAL attention. Our results can be seen in Figure 10, where a clear performance improvement is evident merely by changing the attention layer used. The improvement is of the order of 1%, from 83.37% (CBAM) to 84.20% (FracTAL), suggesting that the FracTAL has better feature extraction capacity than the CBAM layer.

Figure 10.

Performance improvement of the FracTAL-resnet34 over CBAM-resnet34: replacing the CBAM attention layers with FracTAL ones for two otherwise identical networks results in a 1% performance improvement.

3.1.3. Evolving Loss

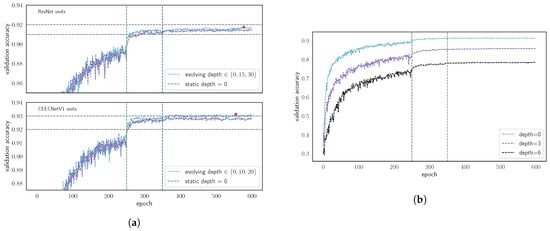

We continue by presenting experimental results on the performance of the evolving loss strategy on CIFAR10 using two networks: one with standard ResNet building blocks and one with CEECNetV1 units. The macro-topology of the networks is identical to the one in Table A1. In addition, we also demonstrate performance differences on the change detection task by training the mantis CEECNetV1 model on the LEVIRCD dataset with static and evolving loss strategies at a fixed FracTAL depth.

In Figure 11a, we demonstrate the effect of this approach: we train the network on CIFAR10 with standard residual blocks (top panel [32,40]) under the two different loss strategies. In both strategies, we reduce the initial learning rate by a factor of 10 at epochs 250 and 350. In the first strategy, we train the networks with a static loss of fixed depth. In the second strategy, we evolve the depth of the fractal Tanimoto loss function: we start training at an initial depth and increase it at each of the two subsequent learning rate reductions. In the top panel, we plot the validation accuracy for the two strategies. The performance gain following the evolving depth loss is ∼0.25% in validation accuracy. In the bottom panel, we plot the validation accuracy for the CEECNetV1-based models. Here, the evolution strategy is the same as above, with the difference that we use different depths for the loss (to observe potential differences). Again, the difference in validation accuracy is in favour of the evolving loss strategy.

Figure 11.

Experimental results with the evolving loss strategy for the CIFAR10 dataset. Left panel (a): Training of two classification networks with static and evolving loss strategies. The two networks have identical macro-topologies but different micro-topologies. The first network (top) uses standard Residual units for its building blocks, while the second (bottom) uses CEECNetV1 units. The networks are trained with a static loss strategy and an evolving one. We increase the depth of the loss function with each learning rate reduction. The vertical dashed lines designate epochs where the learning rate was scaled to 1/10th of its original value. The validation accuracy is mildly increased, although there is a clear difference. Right panel (b): Training on CIFAR10 of a network with standard ResNet building blocks and a fixed depth of the loss. The vertical dashed lines designate epochs where the learning rate was scaled to 1/10th of its original value. As the depth of iteration increases (it remains constant within each experiment), the convergence speed of the validation accuracy degrades.

We should note that we observed performance degradation when using the loss at higher depths for training from scratch (from random weights). This is evident in Figure 11b, where we train from scratch on CIFAR10 three identical models, each with a different depth for the loss function. It is seen that as the depth hyperparameter increases, the validation accuracy degrades. We consider that this happens due to the low value of the gradients away from optimality, as it requires the network to train longer to reach the same level of validation accuracy. In contrast, the greatest benefit we observed by using this training strategy is that the network can avoid overfitting after the learning rate reduction (provided that the slope created by the choice of depth is significant) and has the potential to reach higher performance.

Next, we perform a test of the evolving vs. static loss strategy on the LEVIRCD change detection dataset, using the CEECNetV1 units, as can be seen in Table 1. The CEECNetV1 unit, trained with the evolving loss strategy, demonstrates a +0.856% performance increase on the Intersection over Union (IoU) and a +0.484% increase in MCC. Note that, for the same FracTAL depth, the FracTALResNet network trained with the evolving loss strategy performs better than the CEECNetV1 trained with the static loss strategy, while it falls behind the CEECNetV1 trained with the evolving loss strategy. We should also note that the performance increment is larger than in the classification task on CIFAR10, reaching almost 1% for the IoU.

Table 1.

Model comparison on the LEVIR building change detection dataset. We designate with bold font the best values, with underline the second best, and with round brackets, the third best model. All of our frameworks (D6nf32) use the mantis macro-topology and achieve state-of-the-art performance. Here, evo represents evolving loss strategy, sta represents static loss strategy, and the depth d refers to the similarity metric of the FracTAL (attention) layer. In the last column, we provide the number of trainable parameters for each model.

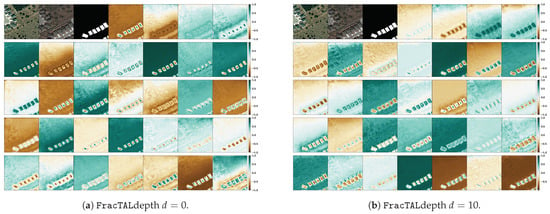

3.1.4. Performance Dependence on FracTAL Depth

In order to understand how the FracTAL layer behaves with respect to different depths, we train three identical networks, the mantis FracTALResNet (D6nf32), each with a different FracTAL depth. The performance results on the LEVIRCD dataset can be seen in Table 1. The three networks perform similarly (they all achieve SOTA performance on the LEVIRCD dataset): relative to the baseline depth, the top-performing depth gains +0.724% IoU and the second best +0.332% IoU. We conclude that the depth d is a problem-dependent hyperparameter that users of our method can choose to optimise. Given that all models have competitive performance, the proposed depth seems a sensible choice.

In Figure 12, we visualise the features of the last convolution before the multitasking segmentation head for FracTALdepth (left panel) and (right panel). The features at different depths appear similar, all identifying the regions of interest clearly. To the human eye, according to our opinion, the features for depth appear slightly more refined in comparison to the features corresponding to depth (e.g., by comparing the images in the corresponding bottom rows). The entropy of the features for (entropy: 15.9982) is negligibly higher (+0.00625 %) than for the case (entropy: 15.9972), suggesting both features have the same information content for these two models. We note that, from the perspective of information compression (assuming no loss of information), lower entropy values are favoured over higher values, as they indicate a better compression level.

Figure 12.

Visualisation of the last features (before the multitasking head) for the mantis FracTALResNet models of FracTAL depth (left panel) and (right panel). The features appear similar. For each panel, the first three images at the top left are the input image at date , the input image at date , and the ground truth mask.

3.2. Change Detection Performance on LEVIRCD and WHU Datasets

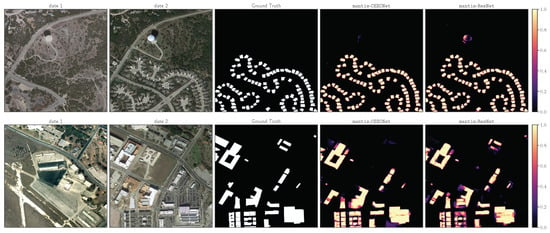

3.2.1. Performance on LEVIRCD

For this particular dataset, a fixed test set is provided, so a direct comparison with the methods of other authors is possible. Both FracTAL ResNet and CEECNet (V1, V2) outperform the baseline [1] with respect to the F1 score by ∼5%.

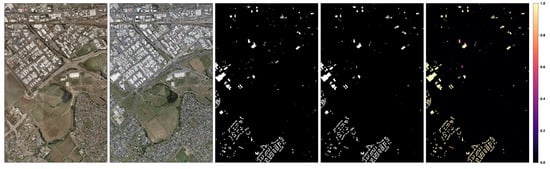

In Figure 13 (see also Figure 1), we present the inference of the CEECNet V1 algorithm for various images from the test set. For each row, from left to right, we have the input image at date 1, the input image at date 2, the ground truth mask, the inference (threshold = 0.5), and the algorithm's confidence heat map (this should not be confused with statistical confidence). It is interesting to note that the algorithm has zero doubt in areas where buildings exist in both input images. That is, our algorithm clearly identifies change in areas covered by buildings and not building footprints.

In Table 1, we present numerical performance results of both FracTALResNet and CEECNet V1 and V2. All metrics (precision, recall, F1, MCC, and IoU) are excellent. The mantis CEECNet for FracTAL depth outperforms the mantis FracTAL ResNet by a small numerical margin; however, the difference is clear. This difference can also be seen in the bottom panel of Figure 16. We should also note that a numerical difference in, say, the F1 score does not translate to an equal difference in image quality. For example, a 1% difference in the F1 score may have a significant impact on the quality of inference. We discuss this further in Section 4.1. Overall, the best model is mantis CEECNet V2 with FracTAL depth . Second best is the mantis FracTALResNet with FracTAL depth . Among the same set of models (mantis FracTALResNet), it seems that depth performs best; however, we do not know if this generalises to all models and datasets. We consider the FracTAL depth d to be a hyperparameter that needs to be fine-tuned for optimal performance, and, as we have shown, the choice is a sensible one, as in this particular dataset it provided us with state-of-the-art results.
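The metrics quoted above can all be derived from the confusion counts of a thresholded prediction. The following is a minimal, dependency-free sketch and not the evaluation code behind Table 1; the function names `confusion_counts`, `change_metrics`, and `iou_from_f1` are hypothetical, and the default threshold of 0.5 simply mirrors the value used for the inference maps.

```python
import math


def confusion_counts(scores, truth, threshold=0.5):
    """Count TP, FP, FN, TN for flat sequences of scores and 0/1 labels."""
    tp = fp = fn = tn = 0
    for score, label in zip(scores, truth):
        predicted = 1 if score >= threshold else 0
        if predicted == 1 and label == 1:
            tp += 1
        elif predicted == 1 and label == 0:
            fp += 1
        elif predicted == 0 and label == 1:
            fn += 1
        else:
            tn += 1
    return tp, fp, fn, tn


def change_metrics(tp, fp, fn, tn):
    """Precision, recall, F1, IoU and MCC of the 'change' class."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * tp / (2 * tp + fp + fn) if tp + fp + fn else 0.0
    iou = tp / (tp + fp + fn) if tp + fp + fn else 0.0
    denom = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    mcc = (tp * tn - fp * fn) / denom if denom else 0.0
    return {"precision": precision, "recall": recall,
            "f1": f1, "iou": iou, "mcc": mcc}


def iou_from_f1(f1):
    """IoU implied by an F1 score computed from the same confusion counts."""
    return f1 / (2.0 - f1)


if __name__ == "__main__":
    # Hypothetical per-pixel confidence scores and ground truth labels.
    scores = [0.9, 0.8, 0.2, 0.6, 0.1, 0.4]
    truth = [1, 1, 1, 0, 0, 0]
    print(change_metrics(*confusion_counts(scores, truth)))
```

When both are computed from the same counts, IoU and F1 are tied by IoU = F1 / (2 − F1), so an F1 of 0.918 corresponds to an IoU of about 0.848. The identity need not hold exactly when scores are averaged per image.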

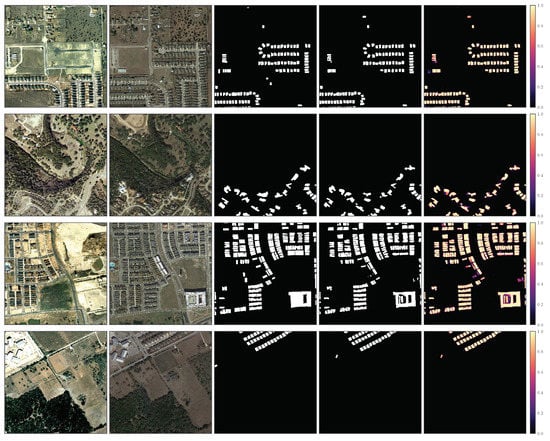

Figure 13.

Examples of inferred change detection on some test tiles from the LEVIRCD dataset of the mantis CEECNetV1 model (evolving loss strategy, FracTAL depth ). For each row, from left to right, input image date 1, input image date 2, ground truth, change prediction (threshold 0.5), and confidence heat map.

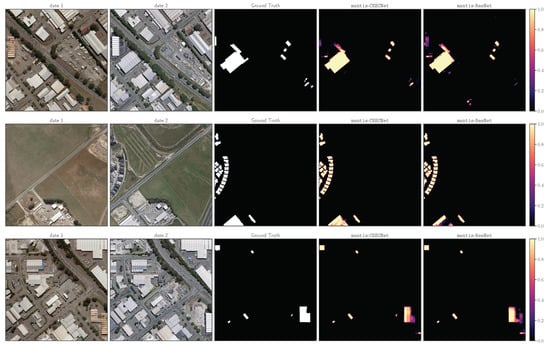

3.2.2. Performance on WHU

In Table 2, we present the results of training the mantis network with FracTALResNet and CEECNetV1 building blocks. Both of our proposed architectures outperform all other modelling frameworks, although we need to stress that each of the other authors followed a different splitting strategy of the data. With our splitting strategy, we used only 32.9% of the total area for training. This is significantly less than most of the other methods we report here, so we would anticipate a significant performance degradation relative to them. Nevertheless, despite the smaller training set, our method outperforms the other approaches.

In particular, Ji et al. [28] used 50% of the raster for training and the other half for testing (Figure 10 in their manuscript). In addition, there is no spatial separation between their training and test sites, as there is in our case, and this should work to their advantage. Furthermore, the use of a larger window for training (their extracted chips are of spatial dimension ) in principle increases performance because it includes more context information. There is a trade-off here, though: a larger window size reduces the number of available training chips, so the model sees fewer chips during training. Chen et al. [31] randomly split their training and validation chips. This should improve performance because two extracted chips that are in geospatial proximity are tightly correlated. Cao et al. [57] used ∼20% of the total area of the WHU dataset as a test set; however, they do not specify the splitting strategy they followed for the training and validation sets. Finally, Liu et al. [58] used ∼10% of the total area for reporting test performance. They also do not mention their splitting strategy.
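To illustrate why spatial separation between training and test sites matters, the toy sketch below compares a spatially contiguous split with a random chip split on a hypothetical grid of chips. The grid size, the 30% training fraction, and all function names are assumptions made for illustration; this is not the splitting code used for the WHU experiments.

```python
import random


def chip_grid(rows, cols):
    """Enumerate chip positions (row, col) of a raster tiled into a grid."""
    return [(r, c) for r in range(rows) for c in range(cols)]


def spatial_split(chips, cols, train_fraction):
    """Train on the leftmost columns, test on the remaining columns."""
    cut = round(cols * train_fraction)
    train = [rc for rc in chips if rc[1] < cut]
    test = [rc for rc in chips if rc[1] >= cut]
    return train, test


def random_split(chips, train_fraction, seed=0):
    """Assign chips to train/test at random, ignoring their location."""
    rng = random.Random(seed)
    shuffled = list(chips)
    rng.shuffle(shuffled)
    cut = round(len(shuffled) * train_fraction)
    return shuffled[:cut], shuffled[cut:]


def fraction_adjacent_to_train(train, test):
    """Fraction of test chips that share an edge with a training chip."""
    train_set = set(train)
    touching = 0
    for r, c in test:
        neighbours = ((r - 1, c), (r + 1, c), (r, c - 1), (r, c + 1))
        if any(n in train_set for n in neighbours):
            touching += 1
    return touching / len(test)


if __name__ == "__main__":
    chips = chip_grid(10, 10)
    s_train, s_test = spatial_split(chips, cols=10, train_fraction=0.3)
    r_train, r_test = random_split(chips, train_fraction=0.3)
    print("spatial split:", fraction_adjacent_to_train(s_train, s_test))
    print("random split: ", fraction_adjacent_to_train(r_train, r_test))
```

With a contiguous split, only the test chips along the dividing line border training data, whereas a random split leaves most test chips next to a training chip, which is the proximity effect described above.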

Table 2.

Model comparison on the WHU building change detection dataset. We designate with bold font the best values, with underline the second best, and with round brackets the third best model. Ji et al. [28] presented two models for extracting buildings prior to estimating the change mask. These were the Mask-RCNN (in table: M1) and MS-FCN (in table: M2). Our models consume input images of a size of pixels. With the exception of [58] that uses the same size, all other results consume inputs of a size of pixels.

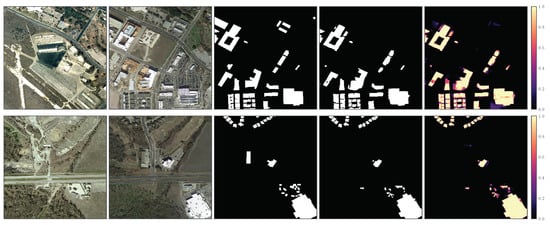

In Figure 14, we plot a set of examples of inference on the WHU dataset. The correspondence of the images in each row is identical to Figure 13, with the addition that we denote with blue rectangles the locations of changed buildings (true positive predictions) and with red squares the changes missed by our model (false negatives). It can be seen that the most difficult areas are those that are heavily built up and where the changed buildings have a small area. In Figure A1, we plot, from left to right, the test area on date 1, the test area on date 2, the ground truth mask, and the confidence heat map of these predictions.

Figure 14.

A sample of change detection on windows the size of from the WHU dataset. Inference is with the mantis CEECNetV1 model. The ordering of the inputs, for each row, is as in Figure 13. We indicate with blue boxes successful findings and with red boxes missed changes on buildings.

In Table 2, we could not include [33], which reports performance results evaluated only on the changed pixels and not on the complete test images. That evaluation therefore omits all false positive predictions, which can have a dire impact on the performance metrics. They report precision: 97.840, recall: 97.01, F1: 97.29, and IoU: 97.38.

3.3. The Effect of Scaled Sigmoid on the Segmentation HEAD

Starting from an initial value of the scaled sigmoid boundary layer, the fully trained model mantis CEECNetV1 learns the following parameters that control how “crisp” the boundaries should be or, in other words, how sharp the decision boundary should be:

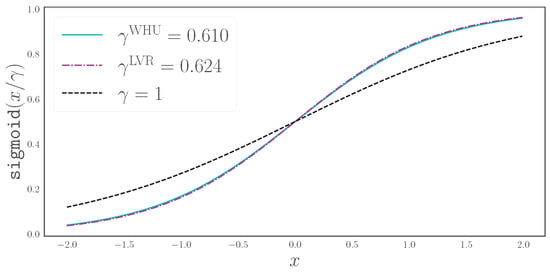

The deviation of these coefficients from their initial values demonstrates that the network indeed finds it useful to modify the decision boundary. In Figure 15, we plot the standard sigmoid function () and the sigmoid functions recovered after training on the LEVIRCD and WHU datasets.

Figure 15.

Trainable scaling parameters, , for the sigmoid activation, i.e., , that are used in the prediction of change mask boundary layers.

The algorithm in both cases learns to modify the decision boundary by making it sharper. This means that for two nearby pixels, one belonging to a boundary and the other to a background class, the numerical distance between them needs to be smaller to achieve class separation in comparison with the standard sigmoid. In other words, a small change is sufficient to transition between the boundary and no-boundary classes.
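The effect of a sharper sigmoid can be illustrated numerically. The sketch below assumes a scaled sigmoid of the form σ(x/γ) with γ > 0, so that a smaller γ gives a sharper transition. This parametrisation, the function names, and the value γ = 0.5 are assumptions for illustration only and are not the trained coefficients reported above.

```python
import math


def scaled_sigmoid(x, gamma=1.0):
    """Sigmoid of x / gamma (assumed form); gamma = 1 is the standard sigmoid."""
    if gamma <= 0:
        raise ValueError("gamma must be positive")
    z = x / gamma
    # Numerically stable evaluation for both signs of z.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)
    return ez / (1.0 + ez)


def transition_width(gamma, low=0.1, high=0.9):
    """Input interval over which the output rises from `low` to `high`."""
    def logit(p):
        return math.log(p / (1.0 - p))
    return gamma * (logit(high) - logit(low))


if __name__ == "__main__":
    for gamma in (1.0, 0.5):  # 0.5 is a hypothetical "sharper" value
        print(gamma, scaled_sigmoid(1.0, gamma), transition_width(gamma))
```

Under this assumed form, halving γ halves the input range needed to move from a confident "no boundary" to a confident "boundary" output, which is the sense in which a smaller numerical distance suffices for class separation.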

4. Discussion

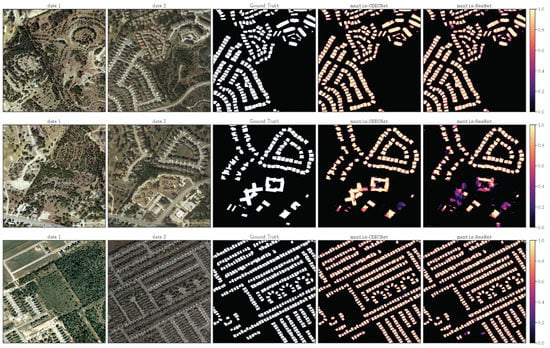

In this section, we comment on the qualitative performance of the models we developed for the task of change detection on the LEVIRCD [1] and WHU [36] datasets. The comparison between CEECNet V1 and FracTALResNet models is for the case of FracTAL depth .

4.1. Qualitative CEECNet and FracTAL Performance

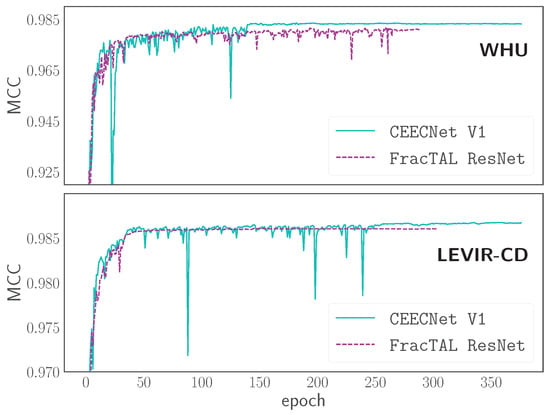

Although both CEECNet V1 and FracTALResNet achieve a very high MCC (Figure 16), the superiority of CEECNet for the same FracTAL depth is evident in the inference maps in both the LEVIRCD (Figure 17) and WHU (Figure 18) datasets. This confirms their relative scores (Table 1 and Table 2) and the faster convergence of CEECNet V1 (Figure 9). Interestingly, CEECNet V1 predicts change with more confidence than FracTALResNet (Figure 17 and Figure 18), even when it errs, as can be seen from the corresponding confidence heat maps. The choice between the two models should be made with respect to the relative “cost” of training each model, the available hardware resources, and the performance target.

Figure 16.

mantis CEECNetV1 vs. mantis FracTALResNet (FracTAL depth, ) evolution performance on change detection validation datasets. The top panel corresponds to the LEVIRCD dataset, the bottom panel to the WHU dataset. For each network, we followed the evolving loss strategy: there are two learning rate reductions followed by two scaling ups of the loss function. All four training histories avoid overfitting, thanks to making the loss function sharper towards optimality.

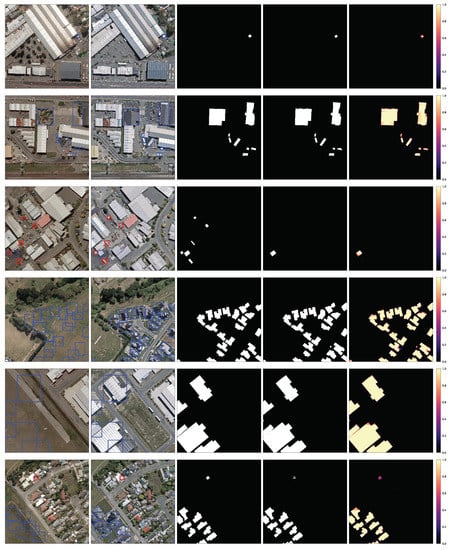

Figure 17.

Samples of relative quality change detection on test tiles of size from the LEVIRCD dataset. For each row from left to right: input image date 1, input image date 2, ground truth, and confidence heat maps of mantis CEECNetV1 and mantis FracTALResNet, respectively.

Figure 18.

As in Figure 17 for sample windows of size from the WHU dataset.

4.2. Qualitative Assessment of the Mantis Macro-Topology

A key ingredient of our approach to the task of change detection is that we avoid using the difference of features to identify changes. Instead, we propose the exchange of information between features extracted from images at different dates with the concept of relative attention (Section 2.5.2) and fusion (Listing A.3). In this section, our aim is to gain insight into the behaviour of the relative attention and fusion layers and compare them with the features obtained by the difference of the outputs of convolution layers of images at different dates. We use the outputs of layers of a trained mantis FracTALResNet model, trained on LEVIRCD with FracTAL depth .

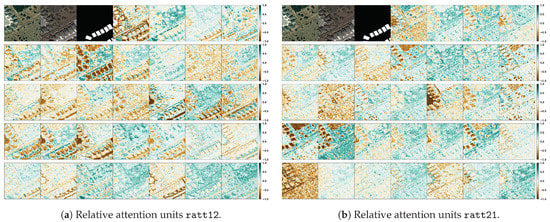

In Figure 19, we visualise the features of the first relative attention layers (channels = 32, spatial size ), ratt12 (left panel) and ratt21 (right panel), for a set of image patches belonging to the test set (size: ). Here, the notation ratt12 indicates that the query features come from the input image at date , while the key/value features are extracted from the input image at date . Similar notation is applied for the relative attention ratt21. Starting from the top left corner, we provide the input image at date , the input image at date , and the ground truth mask of change, and after that, we visualise the features as single channel images. Each feature (i.e., image per channel) is normalised in the range of for visualisation purposes. It can be seen that, from the early stages (i.e., first layers), the algorithm emphasises structures containing buildings and their boundaries. In particular, the ratt12 layer (left panel) emphasises boundaries of buildings that exist in both images. It also seems to represent all buildings that exist in both images. The ratt21 layer (right panel) seems to place more emphasis on the buildings that exist on date 1 but not on date 2. In addition, both relative attention layers emphasise roads and pavements.

Figure 19.

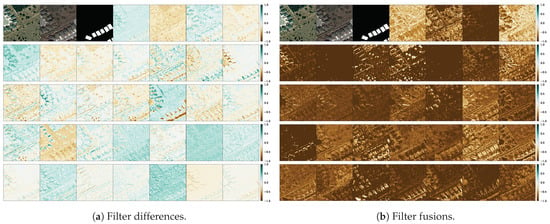

Visualisation of the relative attention units, ratt12 (left panel) and ratt21 (right panel), for the mantis FracTALResNet with FracTAL depth, . These come from the first feature extractors (channels = 32, filter spatial size ). Here, ratt12 is the relative attention where for query we use input at date , and the key/value filters are created from input at date . In the top left rows for each panel, we have input image at date , input image at date , and ground truth building change labels, followed by the visualisation of each of the 32 channels of the features.

In Figure 20, we visualise the difference of features of the first convolution layers (left panel; channels = 32, spatial size ) and the fused features (right panel) obtained using the relative attention and fusion methodology (Listing A.3). A key difference between the two is that there is less variability within channels in the output of the fusion layer in comparison with the difference of features. In order to quantify the information content of the features, we calculated the Shannon entropy of the features for each case, and we found that the fusion features have approximately half the entropy (11.027) of the difference features (20.97). A similar entropy ratio was found for all images belonging to the test set. This means that the fusion features are less “surprising” than the difference features. This may suggest that the fusion provides a better compression of information in comparison with the difference of layers, assuming both layers have the same information content. It may also mean that the fusion layers have less information content than the difference features, i.e., they are harmful for the change detection process. However, if this were the case, our approach would fail to achieve state-of-the-art performance on the change detection datasets. Therefore, we conclude that the lower entropy value translates to better encoding of information in comparison with the difference of layers.
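As an illustration of how such an entropy can be estimated, the sketch below treats the absolute feature values as an unnormalised distribution over all feature elements and computes its Shannon entropy in bits. This estimator is an assumption, since the text does not state which one was used, and `shannon_entropy_bits` is a hypothetical name.

```python
import math


def shannon_entropy_bits(values):
    """Shannon entropy (bits) of |values| normalised to sum to one.

    `values` is a flat iterable of feature activations, e.g. all channels
    and pixels of one feature map flattened into a single sequence.
    """
    weights = [abs(v) for v in values]
    total = sum(weights)
    if total == 0:
        raise ValueError("all-zero features do not define a distribution")
    entropy = 0.0
    for w in weights:
        if w > 0:  # zero-probability elements contribute nothing
            p = w / total
            entropy -= p * math.log2(p)
    return entropy


if __name__ == "__main__":
    # Hypothetical activations: evenly spread vs. concentrated in one element.
    spread = [1.0] * 8
    concentrated = [5.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0]
    print(shannon_entropy_bits(spread), shannon_entropy_bits(concentrated))
```

Under this estimator, the entropy of N elements is at most log2 N, reached when all activations have equal magnitude, and lower values indicate activations concentrated in fewer elements.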

Figure 20.

For the same model as in Figure 19, we plot the difference of the first feature extractor blocks (left panel) vs. the first fusion feature extraction block (right panel). The entropy of the fusion features is approximately half that of the difference channels. This means there is less “surprise” in the fusion filters in comparison with the difference of filters for the same trained network.

5. Conclusions

In this work, we propose a new deep learning framework for the task of semantic change detection on very high-resolution aerial images, presented here for the case of changes in buildings. This framework is built on top of several novel contributions that can be used independently in computer vision tasks. Our contributions are: