Learning Future-Aware Correlation Filters for Efficient UAV Tracking

Abstract

1. Introduction

- A coarse-to-fine DCF-based tracking framework is proposed to exploit the context information hidden in the frame to be detected;

- A single exponential smoothing (SES) forecast provides a coarse target position, which serves as the reference for acquiring a context patch;

- We obtain a single future-aware context patch through an efficient target-aware mask generation method without additional feature extraction;

- Experimental results on three UAV benchmarks demonstrate the advantages of the proposed tracker, which maintains real-time speed in real-world tracking scenarios.
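The coarse-position step named in the second contribution can be illustrated with a small sketch. Single exponential smoothing (SES) keeps a smoothed value s_t = α·d_t + (1 − α)·s_{t−1} and uses s_t as the forecast for the next step. This excerpt does not give FACF's exact formulation, so the sketch below assumes that SES is applied to the frame-to-frame displacement of the target centre and that the forecast displacement is added to the last known centre; the function name `ses_forecast_position` and the default smoothing factor `alpha = 0.5` are hypothetical.

```python
from typing import List, Tuple

Point = Tuple[float, float]  # (x, y) target centre in pixels


def ses_forecast_position(centers: List[Point], alpha: float = 0.5) -> Point:
    """Coarse next-frame target centre via single exponential smoothing.

    Hypothetical helper: SES is assumed to act on the frame-to-frame
    displacement of the target centre; alpha is an assumed smoothing
    factor, not a value taken from the paper.
    """
    if not 0.0 < alpha <= 1.0:
        raise ValueError("alpha must lie in (0, 1]")
    if not centers:
        raise ValueError("at least one observed centre is required")
    if len(centers) == 1:
        # No motion history yet: assume the target stays where it is.
        return centers[0]

    # Initialise the smoothed displacement with the first observed one.
    sx = centers[1][0] - centers[0][0]
    sy = centers[1][1] - centers[0][1]

    # SES update s_t = alpha * d_t + (1 - alpha) * s_{t-1} for later steps.
    for (x0, y0), (x1, y1) in zip(centers[1:-1], centers[2:]):
        sx = alpha * (x1 - x0) + (1.0 - alpha) * sx
        sy = alpha * (y1 - y0) + (1.0 - alpha) * sy

    # The SES forecast for the next step is the latest smoothed value,
    # so the coarse position is the last centre shifted by it.
    last_x, last_y = centers[-1]
    return (last_x + sx, last_y + sy)


if __name__ == "__main__":
    track = [(0.0, 0.0), (2.0, 0.0), (4.0, 0.0), (6.0, 0.0)]
    print(ses_forecast_position(track))  # (8.0, 0.0)
```

For a target moving 2 pixels per frame to the right, the forecast for the next frame is (8.0, 0.0), and a context patch would then be cropped around that point. Smoothing the displacement rather than the raw position is a design choice of this sketch: SES applied directly to positions lags behind a steadily moving target.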

2. Related Works

2.1. DCF-Based Trackers

2.2. Trackers with Context Learning

2.3. Trackers with Future Information

2.4. Trackers for UAVs

3. Revisiting BACF

4. Proposed Approach

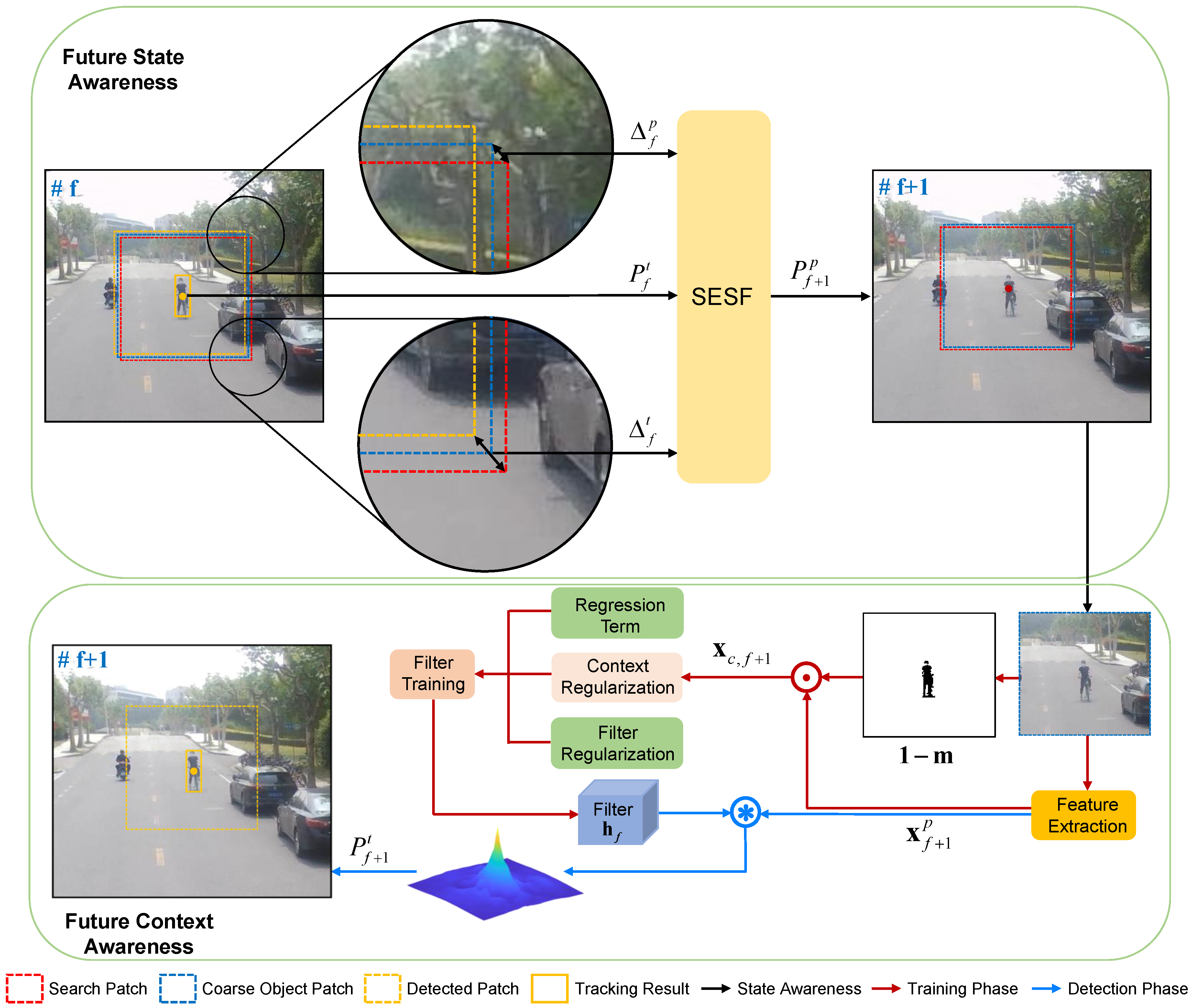

4.1. Problem Formulation

4.2. Stage One: Future State Awareness

4.3. Stage Two: Future Context Awareness

4.3.1. Fast Context Acquisition

4.3.2. Filter Training

4.3.3. Object Detection

4.3.4. Model Update

4.4. Tracking Procedure

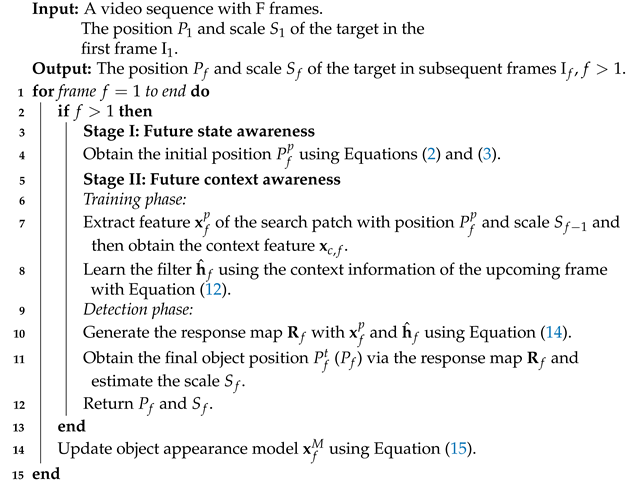

Algorithm 1: FACF Tracker

5. Experiments

5.1. Implementation Details

5.1.1. Parameters

5.1.2. Benchmarks

5.1.3. Metrics

5.1.4. Platform

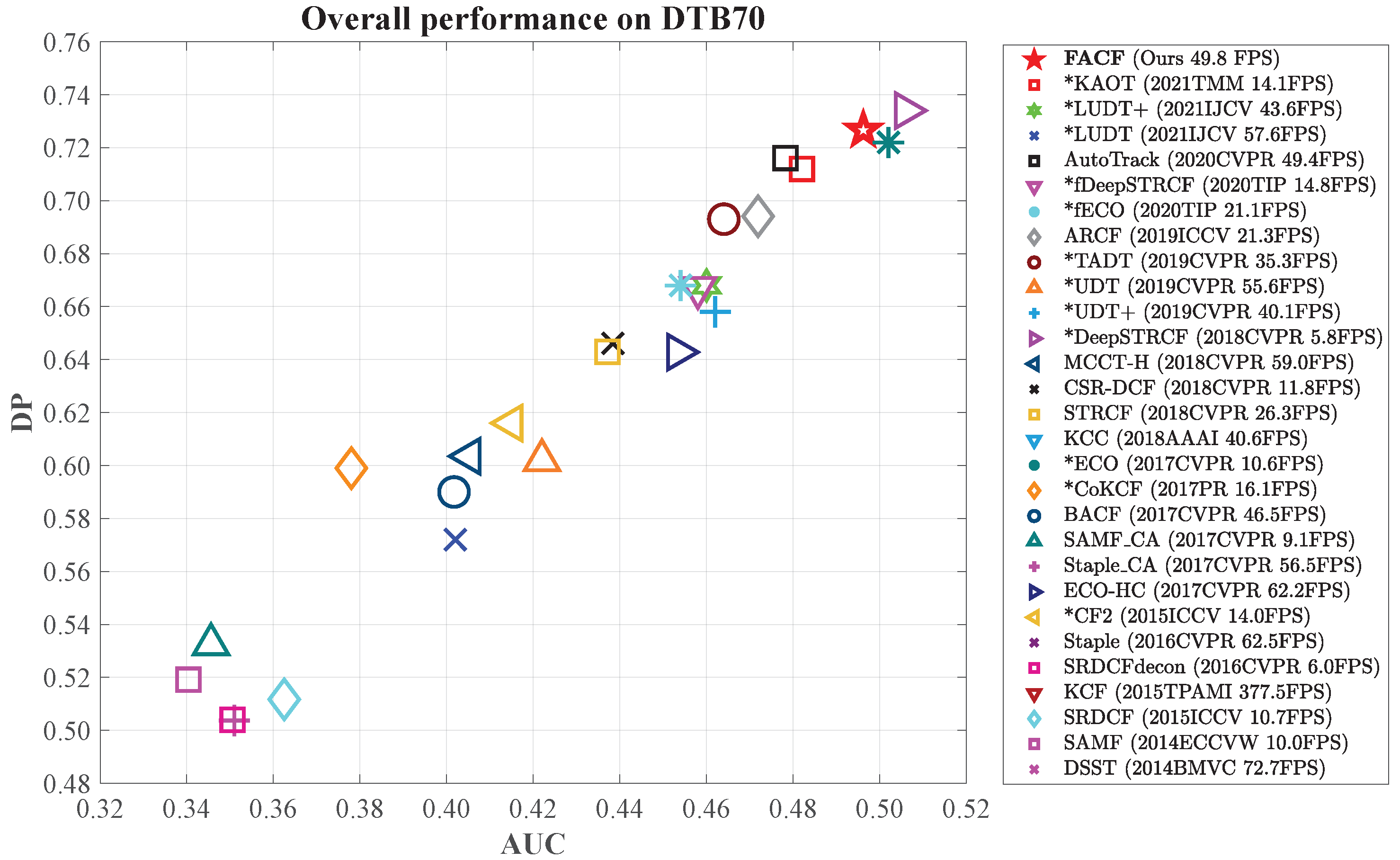

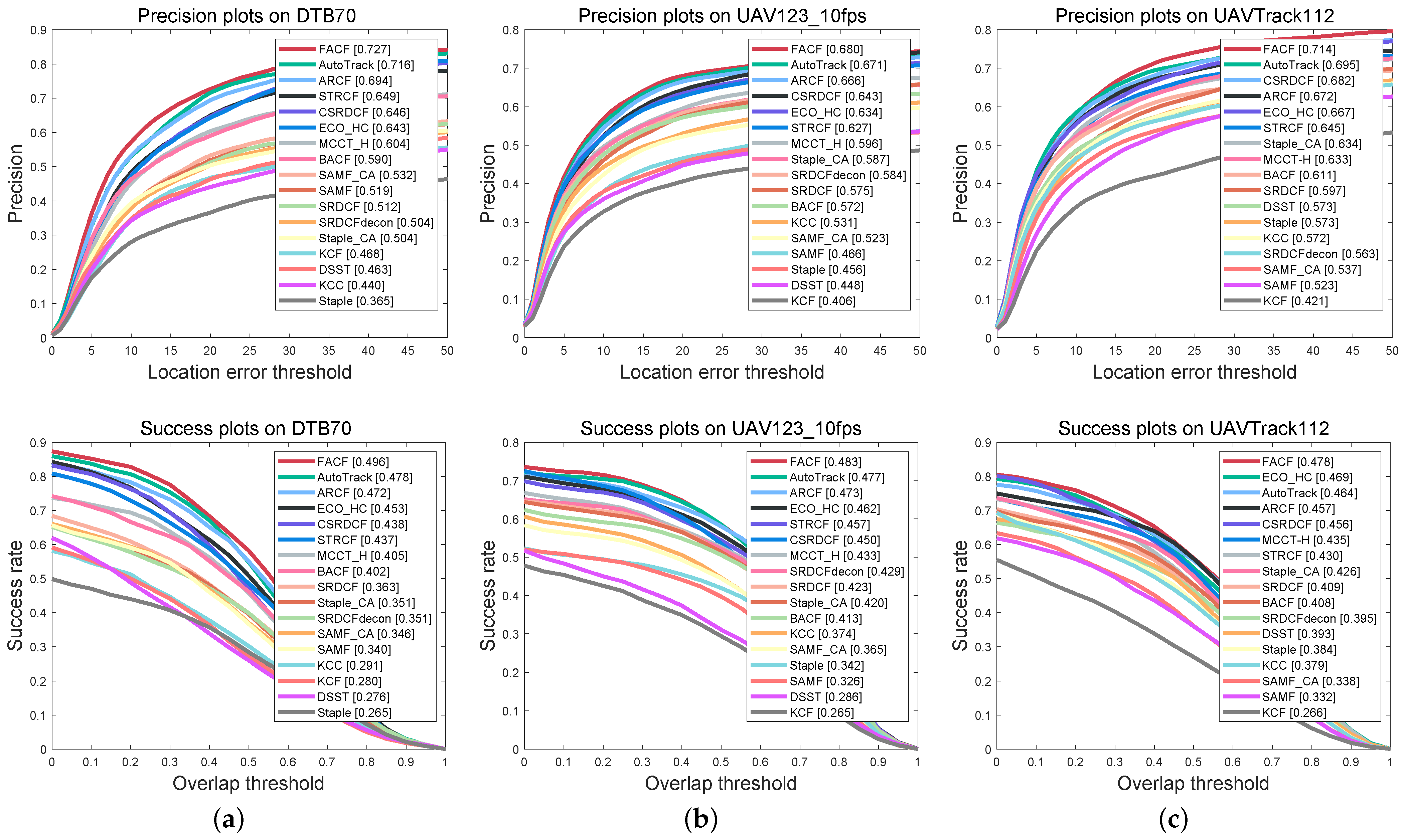

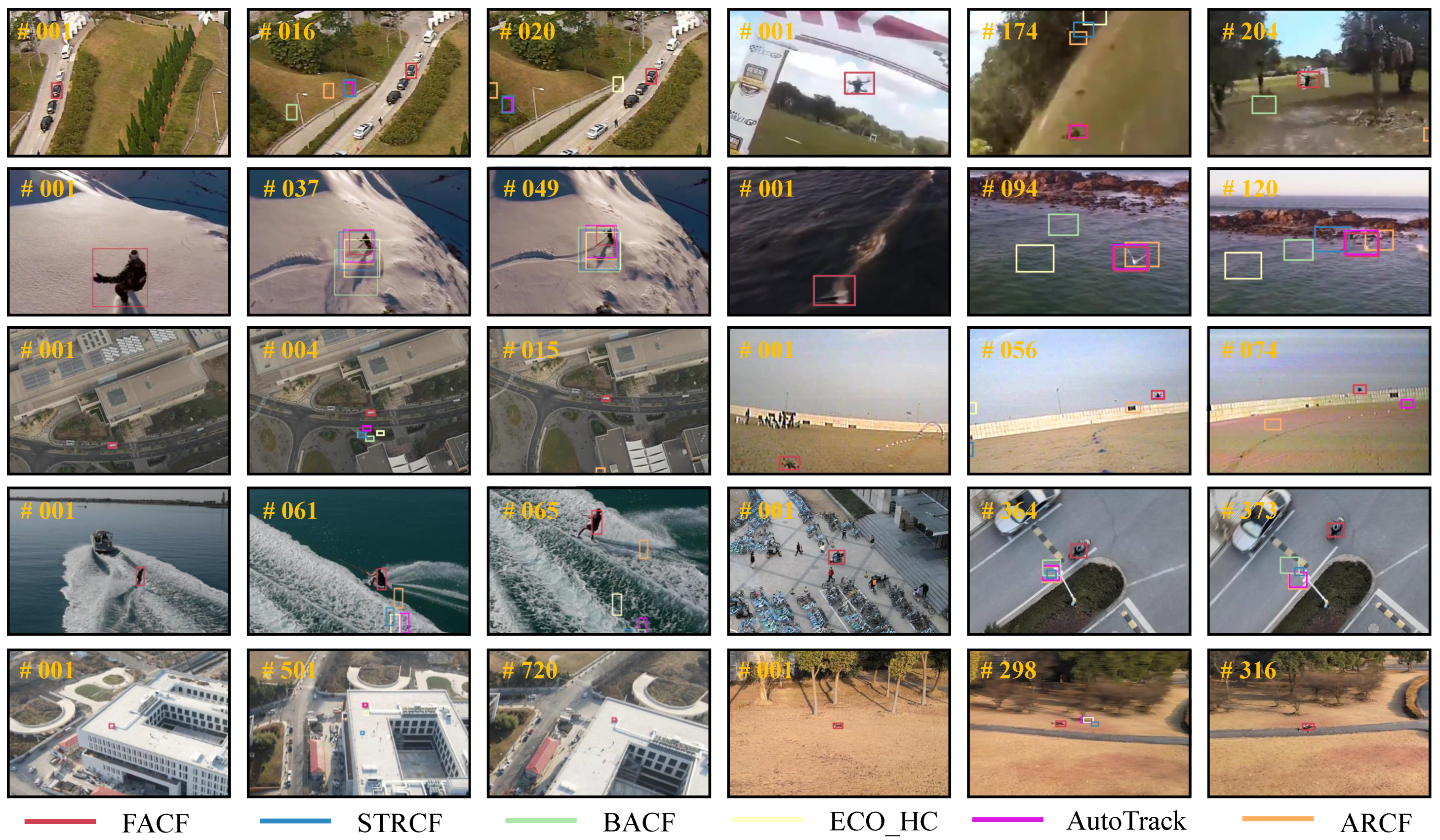

5.2. Performance Comparison

5.2.1. Comparison with Handcrafted-Based Trackers

5.2.2. Comparison with Deep-Based Trackers

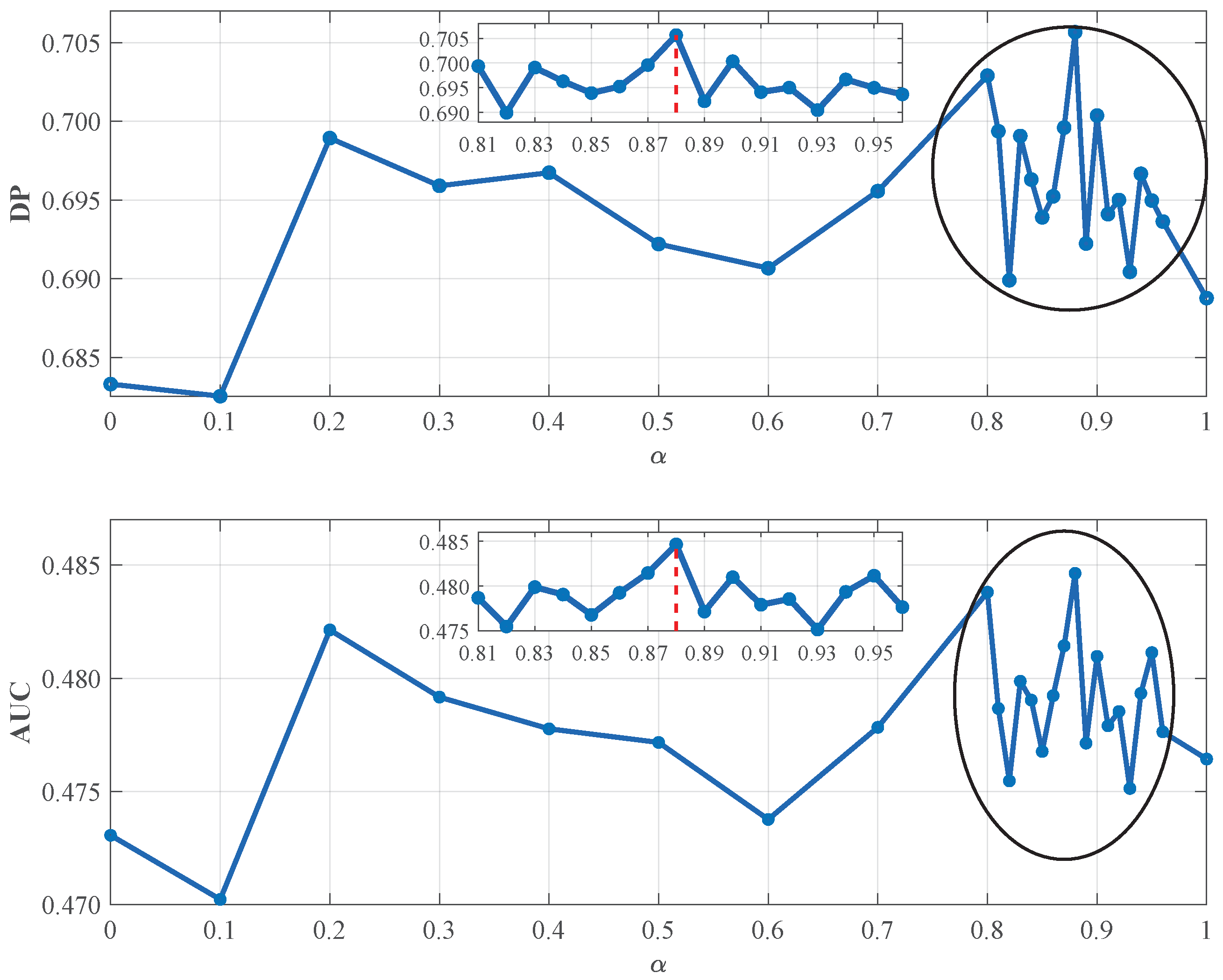

5.3. Parameter Analysis and Ablation Study

5.3.1. The Impact of the Key Parameter

5.3.2. The Validity of Components

5.4. The Strategy for Context Learning

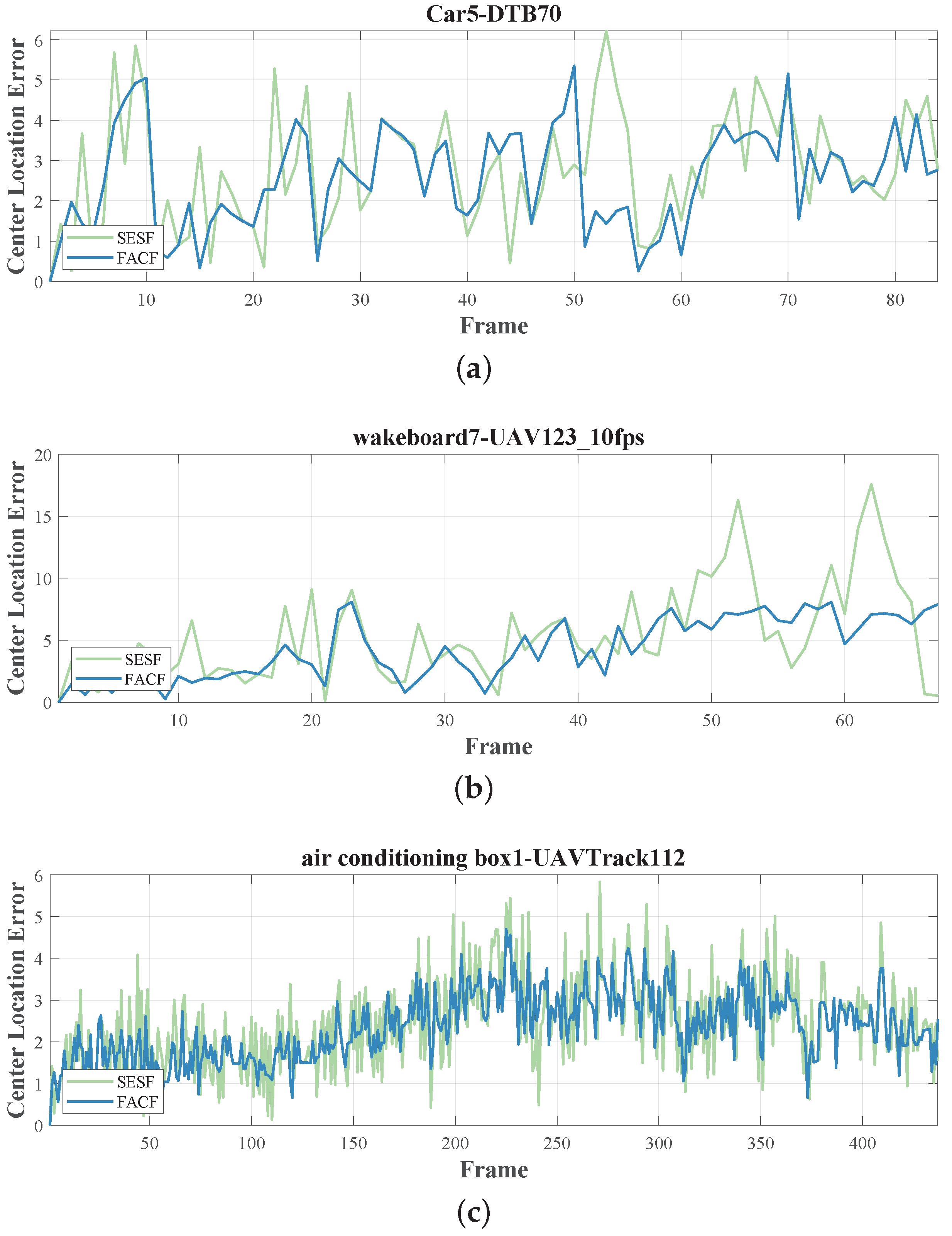

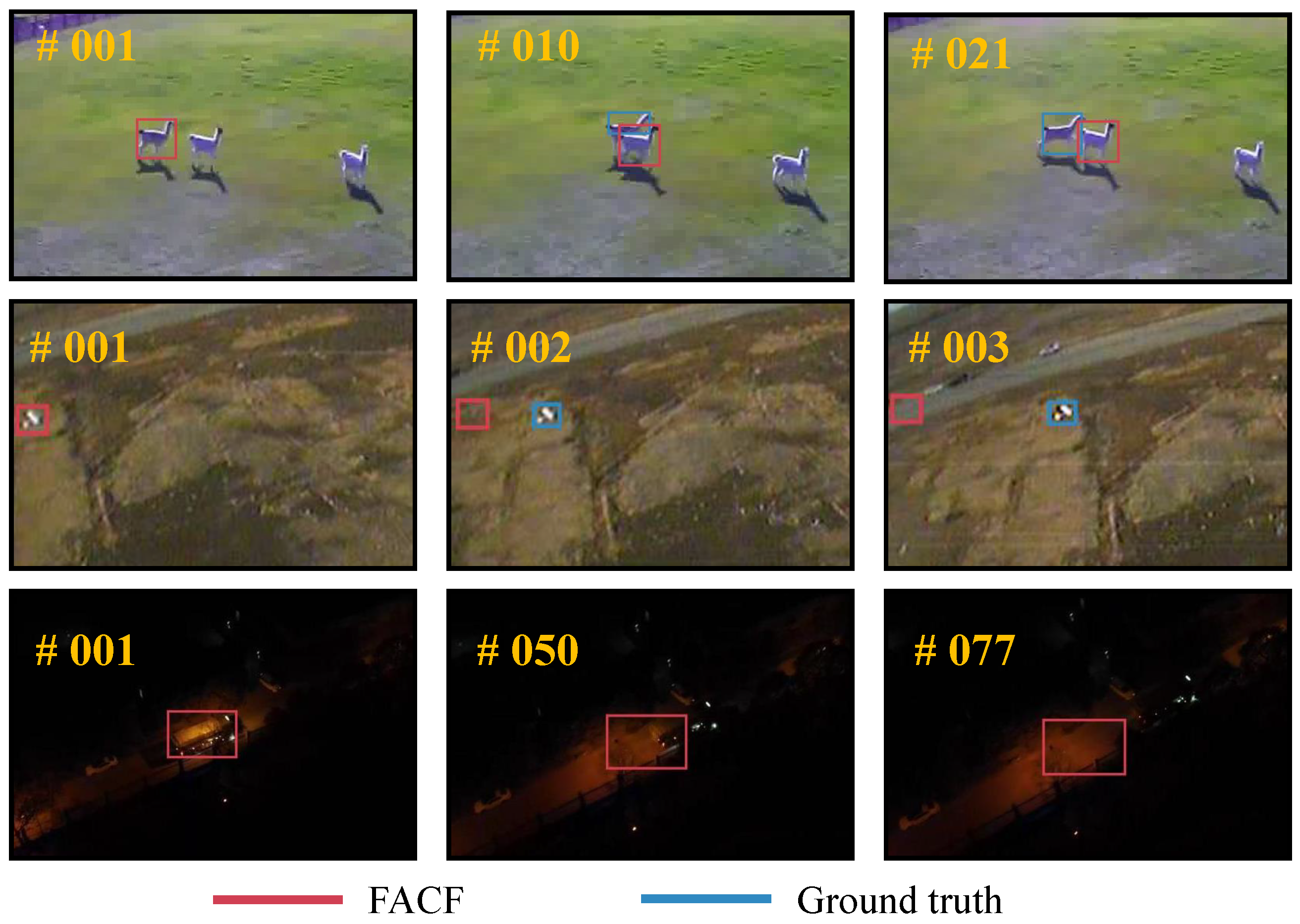

5.5. Failure Cases

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Miao, Y.; Li, J.; Bao, Y.; Liu, F.; Hu, C. Efficient Multipath Clutter Cancellation for UAV Monitoring Using DAB Satellite-Based PBR. Remote Sens. 2021, 13, 3429.

2. Zhang, F.; Yang, T.; Liu, L.; Liang, B.; Bai, Y.; Li, J. Image-Only Real-Time Incremental UAV Image Mosaic for Multi-Strip Flight. IEEE Trans. Multimed. 2021, 23, 1410–1425.

3. McArthur, D.R.; Chowdhury, A.B.; Cappelleri, D.J. Autonomous Control of the Interacting-BoomCopter UAV for Remote Sensor Mounting. In Proceedings of the IEEE International Conference on Robotics and Automation (ICRA), Brisbane, Australia, 21–25 May 2018; pp. 5219–5224.

4. Bolme, D.S.; Beveridge, J.R.; Draper, B.A.; Lui, Y.M. Visual object tracking using adaptive correlation filters. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), San Francisco, CA, USA, 13–18 June 2010; pp. 2544–2550.

5. Li, F.; Tian, C.; Zuo, W.; Zhang, L.; Yang, M.H. Learning Spatial-Temporal Regularized Correlation Filters for Visual Tracking. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–22 June 2018; pp. 4904–4913.

6. Danelljan, M.; Häger, G.; Khan, F.; Felsberg, M. Accurate scale estimation for robust visual tracking. In Proceedings of the British Machine Vision Conference (BMVC), Nottingham, UK, 1–5 September 2014.

7. Danelljan, M.; Bhat, G.; Shahbaz Khan, F.; Felsberg, M. ECO: Efficient convolution operators for tracking. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 6638–6646.

8. Bertinetto, L.; Valmadre, J.; Henriques, J.F.; Vedaldi, A.; Torr, P.H. Fully-convolutional siamese networks for object tracking. In Proceedings of the European Conference on Computer Vision Workshops (ECCVW), Amsterdam, The Netherlands, 8–16 October 2016; pp. 850–865.

9. Fu, C.; Lin, F.; Li, Y.; Chen, G. Correlation Filter-Based Visual Tracking for UAV with Online Multi-Feature Learning. Remote Sens. 2019, 11, 549.

10. Fu, C.; Li, B.; Ding, F.; Lin, F.; Lu, G. Correlation Filter for UAV-Based Aerial Tracking: A Review and Experimental Evaluation. arXiv 2020, arXiv:2010.06255.

11. Henriques, J.F.; Caseiro, R.; Martins, P.; Batista, J. High-speed tracking with kernelized correlation filters. IEEE Trans. Pattern Anal. Mach. Intell. 2015, 37, 583–596.

12. Danelljan, M.; Häger, G.; Shahbaz Khan, F.; Felsberg, M. Learning spatially regularized correlation filters for visual tracking. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Santiago, Chile, 7–13 December 2015; pp. 4310–4318.

13. Kiani Galoogahi, H.; Fagg, A.; Lucey, S. Learning background-aware correlation filters for visual tracking. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 1135–1143.

14. Lukezic, A.; Vojir, T.; Čehovin Zajc, L.; Matas, J.; Kristan, M. Discriminative correlation filter with channel and spatial reliability. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 6309–6318.

15. Dai, K.; Wang, D.; Lu, H.; Sun, C.; Li, J. Visual Tracking via Adaptive Spatially-Regularized Correlation Filters. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 16–20 June 2019; pp. 4665–4674.

16. Mueller, M.; Smith, N.; Ghanem, B. Context-aware correlation filter tracking. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 1396–1404.

17. Fu, C.; Jiang, W.; Lin, F.; Yue, Y. Surrounding-Aware Correlation Filter for UAV Tracking with Selective Spatial Regularization. Signal Process. 2020, 167, 1–17.

18. Li, Y.; Fu, C.; Huang, Z.; Zhang, Y.; Pan, J. Intermittent Contextual Learning for Keyfilter-Aware UAV Object Tracking Using Deep Convolutional Feature. IEEE Trans. Multimed. 2021, 23, 810–822.

19. Xu, T.; Feng, Z.H.; Wu, X.J.; Kittler, J. Joint group feature selection and discriminative filter learning for robust visual object tracking. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Seoul, Korea, 27 October–2 November 2019; pp. 7950–7960.

20. Zhang, Y.; Gao, X.; Chen, Z.; Zhong, H.; Xie, H.; Yan, C. Mining Spatial-Temporal Similarity for Visual Tracking. IEEE Trans. Image Process. 2020, 29, 8107–8119.

21. Huang, Z.; Fu, C.; Li, Y.; Lin, F.; Lu, P. Learning Aberrance Repressed Correlation Filters for Real-Time UAV Tracking. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Seoul, Korea, 27 October–2 November 2019; pp. 2891–2900.

22. Fu, C.; Ye, J.; Xu, J.; He, Y.; Lin, F. Disruptor-Aware Interval-Based Response Inconsistency for Correlation Filters in Real-Time Aerial Tracking. IEEE Trans. Geosci. Remote Sens. 2021, 59, 6301–6313.

23. Li, Y.; Fu, C.; Ding, F.; Huang, Z.; Lu, G. AutoTrack: Towards High-Performance Visual Tracking for UAV with Automatic Spatio-Temporal Regularization. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; pp. 11923–11932.

24. Nazim, A.; Afthanorhan, A. A comparison between single exponential smoothing (SES), double exponential smoothing (DES), holt’s (brown) and adaptive response rate exponential smoothing (ARRES) techniques in forecasting Malaysia population. Glob. J. Math. Anal. 2014, 2, 276–280.

25. Li, S.; Yeung, D.Y. Visual object tracking for unmanned aerial vehicles: A benchmark and new motion models. In Proceedings of the Thirty-First AAAI Conference on Artificial Intelligence, San Francisco, CA, USA, 4–9 February 2017; pp. 4140–4146.

26. Mueller, M.; Smith, N.; Ghanem, B. A benchmark and simulator for UAV tracking. In Proceedings of the European Conference on Computer Vision (ECCV), Amsterdam, The Netherlands, 11–14 October 2016; pp. 445–461.

27. Fu, C.; Cao, Z.; Li, Y.; Ye, J.; Feng, C. Onboard Real-Time Aerial Tracking with Efficient Siamese Anchor Proposal Network. IEEE Trans. Geosci. Remote Sens. 2021, 1–13.

28. Li, Y.; Zhu, J. A scale adaptive kernel correlation filter tracker with feature integration. In Proceedings of the European Conference on Computer Vision Workshops (ECCVW), Zurich, Switzerland, 6–12 September 2014; Springer: Berlin/Heidelberg, Germany, 2014; pp. 254–265.

29. Wang, C.; Zhang, L.; Xie, L.; Yuan, J. Kernel cross-correlator. In Proceedings of the Thirty-Second AAAI Conference on Artificial Intelligence, New Orleans, LA, USA, 2–7 February 2018; pp. 4179–4186.

30. Danelljan, M.; Häger, G.; Shahbaz Khan, F.; Felsberg, M. Adaptive decontamination of the training set: A unified formulation for discriminative visual tracking. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 1430–1438.

31. Danelljan, M.; Khan, F.S.; Felsberg, M.; Van De Weijer, J. Adaptive Color Attributes for Real-Time Visual Tracking. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Columbus, OH, USA, 23–28 June 2014; pp. 1090–1097.

32. Qi, Y.; Zhang, S.; Qin, L.; Yao, H.; Huang, Q.; Lim, J.; Yang, M.H. Hedged Deep Tracking. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 4303–4311.

33. Danelljan, M.; Robinson, A.; Khan, F.S.; Felsberg, M. Beyond Correlation Filters: Learning Continuous Convolution Operators for Visual Tracking. In Proceedings of the European Conference on Computer Vision (ECCV), Amsterdam, The Netherlands, 11–14 October 2016; pp. 472–488.

34. Xu, T.; Feng, Z.; Wu, X.; Kittler, J. Learning Adaptive Discriminative Correlation Filters via Temporal Consistency Preserving Spatial Feature Selection for Robust Visual Object Tracking. IEEE Trans. Image Process. 2019, 28, 5596–5609.

35. Zhu, X.F.; Wu, X.J.; Xu, T.; Feng, Z.; Kittler, J. Robust Visual Object Tracking via Adaptive Attribute-Aware Discriminative Correlation Filters. IEEE Trans. Multimed. 2021, 1.

36. Yan, Y.; Guo, X.; Tang, J.; Li, C.; Wang, X. Learning spatio-temporal correlation filter for visual tracking. Neurocomputing 2021, 436, 273–282.

37. Wang, N.; Zhou, W.; Tian, Q.; Hong, R.; Wang, M.; Li, H. Multi-cue correlation filters for robust visual tracking. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–22 June 2018; pp. 4844–4853.

38. Fu, C.; Xu, J.; Lin, F.; Guo, F.; Liu, T.; Zhang, Z. Object Saliency-Aware Dual Regularized Correlation Filter for Real-Time Aerial Tracking. IEEE Trans. Geosci. Remote Sens. 2020, 58, 8940–8951.

39. Fu, C.; Ding, F.; Li, Y.; Jin, J.; Feng, C. Learning dynamic regression with automatic distractor repression for real-time UAV tracking. Eng. Appl. Artif. Intell. 2021, 98, 104116.

40. Zheng, G.; Fu, C.; Ye, J.; Lin, F.; Ding, F. Mutation Sensitive Correlation Filter for Real-Time UAV Tracking with Adaptive Hybrid Label. In Proceedings of the International Conference on Robotics and Automation (ICRA), Xi’an, China, 30 May–5 June 2021; pp. 1–8.

41. Lin, F.; Fu, C.; He, Y.; Guo, F.; Tang, Q. Learning Temporary Block-Based Bidirectional Incongruity-Aware Correlation Filters for Efficient UAV Object Tracking. IEEE Trans. Circuits Syst. Video Technol. 2021, 31, 2160–2174.

42. Xue, X.; Li, Y.; Shen, Q. Unmanned aerial vehicle object tracking by correlation filter with adaptive appearance model. Sensors 2018, 18, 2751.

43. Zha, Y.; Wu, M.; Qiu, Z.; Sun, J.; Zhang, P.; Huang, W. Online Semantic Subspace Learning with Siamese Network for UAV Tracking. Remote Sens. 2020, 12, 325.

44. Zhuo, L.; Liu, B.; Zhang, H.; Zhang, S.; Li, J. MultiRPN-DIDNet: Multiple RPNs and Distance-IoU Discriminative Network for Real-Time UAV Target Tracking. Remote Sens. 2021, 13, 2772.

45. Ren, S.; He, K.; Girshick, R.B.; Sun, J. Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks. In Proceedings of the 29th International Conference on Neural Information Processing Systems (NIPS), Montreal, QC, Canada, 7–12 December 2015; pp. 91–99.

46. He, K.; Gkioxari, G.; Dollár, P.; Girshick, R.B. Mask R-CNN. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 2980–2988.

47. Li, B.; Fu, C.; Ding, F.; Ye, J.; Lin, F. All-Day Object Tracking for Unmanned Aerial Vehicle. arXiv 2021, arXiv:2101.08446.

48. Boyd, S.; Parikh, N.; Chu, E. Distributed optimization and statistical learning via the alternating direction method of multipliers. Found. Trends Mach. Learn. 2011, 3, 1–122.

49. Sherman, J.; Morrison, W.J. Adjustment of an inverse matrix corresponding to a change in one element of a given matrix. Ann. Math. Stat. 1950, 21, 124–127.

50. Bertinetto, L.; Valmadre, J.; Golodetz, S.; Miksik, O.; Torr, P.H. Staple: Complementary learners for real-time tracking. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 1401–1409.

51. Wang, N.; Zhou, W.; Song, Y.; Ma, C.; Liu, W.; Li, H. Unsupervised Deep Representation Learning for Real-Time Tracking. Int. J. Comput. Vis. 2021, 129, 400–418.

52. Wang, N.; Zhou, W.; Song, Y.; Ma, C.; Li, H. Real-Time Correlation Tracking Via Joint Model Compression and Transfer. IEEE Trans. Image Process. 2020, 29, 6123–6135.

53. Li, X.; Ma, C.; Wu, B.; He, Z.; Yang, M.H. Target-aware deep tracking. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 16–20 June 2019; pp. 1369–1378.

54. Zhang, L.; Suganthan, P. Robust Visual Tracking via Co-trained Kernelized Correlation Filters. Pattern Recognit. 2017, 69, 82–93.

55. Wang, N.; Song, Y.; Ma, C.; Zhou, W.; Liu, W.; Li, H. Unsupervised deep tracking. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 16–20 June 2019; pp. 1308–1317.

56. Valmadre, J.; Bertinetto, L.; Henriques, J.F.; Vedaldi, A.; Torr, P.H.S. End-to-End Representation Learning for Correlation Filter Based Tracking. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 5000–5008.

| Tracker | FACF | AutoTrack | ARCF | STRCF | MCCT-H | KCC | CSRDCF | BACF | ECO-HC | Staple_CA | SAMF_CA | Staple | SRDCFdecon | KCF | SRDCF | SAMF | DSST |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Venue | - | ’20CVPR | ’19ICCV | ’18CVPR | ’18CVPR | ’18AAAI | ’17CVPR | ’17ICCV | ’17CVPR | ’17CVPR | ’17CVPR | ’16CVPR | ’16CVPR | ’15TPAMI | ’15ICCV | ’14ECCV | ’14BMVC |
| DP | 0.707 | 0.694 | 0.677 | 0.579 | 0.611 | 0.514 | 0.657 | 0.591 | 0.648 | 0.579 | 0.531 | 0.465 | 0.550 | 0.432 | 0.561 | 0.503 | 0.495 |
| AUC | 0.486 | 0.473 | 0.467 | 0.441 | 0.425 | 0.348 | 0.448 | 0.408 | 0.461 | 0.399 | 0.349 | 0.331 | 0.391 | 0.270 | 0.398 | 0.333 | 0.318 |
| FPS | 48.816 | 50.263 | 24.690 | 25.057 | 51.743 | 36.620 | 11.207 | 47.710 | 62.163 | 44.807 | 9.220 | 57.207 | 6.290 | 533.250 | 12.007 | 10.260 | 87.777 |
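The DP and AUC rows in this table are one-pass evaluation scores. Section 5.1.3 gives no protocol in this excerpt, so the sketch below assumes the common OTB-style conventions: DP is the fraction of frames whose centre location error is at most 20 pixels, and AUC is the mean success rate over 21 IoU thresholds evenly spaced in [0, 1]. Boxes are assumed to be `(x, y, w, h)` tuples, and all function names are hypothetical.

```python
import math
from typing import Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x, y, w, h): top-left corner and size


def center_error(p: Box, g: Box) -> float:
    """Euclidean distance between the centres of two boxes, in pixels."""
    px, py = p[0] + p[2] / 2.0, p[1] + p[3] / 2.0
    gx, gy = g[0] + g[2] / 2.0, g[1] + g[3] / 2.0
    return math.hypot(px - gx, py - gy)


def iou(p: Box, g: Box) -> float:
    """Intersection-over-union of two axis-aligned boxes."""
    ix = max(0.0, min(p[0] + p[2], g[0] + g[2]) - max(p[0], g[0]))
    iy = max(0.0, min(p[1] + p[3], g[1] + g[3]) - max(p[1], g[1]))
    inter = ix * iy
    union = p[2] * p[3] + g[2] * g[3] - inter
    return inter / union if union > 0 else 0.0


def distance_precision(pred: Sequence[Box], gt: Sequence[Box],
                       threshold: float = 20.0) -> float:
    """Fraction of frames whose centre error is at most `threshold` pixels."""
    errors = [center_error(p, g) for p, g in zip(pred, gt)]
    return sum(e <= threshold for e in errors) / len(errors)


def success_auc(pred: Sequence[Box], gt: Sequence[Box], steps: int = 21) -> float:
    """Mean success rate over IoU thresholds evenly spaced in [0, 1]."""
    overlaps = [iou(p, g) for p, g in zip(pred, gt)]
    thresholds = [i / (steps - 1) for i in range(steps)]
    # A frame counts as a success at threshold t when its IoU is strictly above t.
    rates = [sum(o > t for o in overlaps) / len(overlaps) for t in thresholds]
    return sum(rates) / len(rates)
```

These functions score a single sequence; benchmark-level numbers such as those in the table additionally aggregate over all sequences, which this sketch does not do. With the strict `>` comparison, even a perfect tracker scores 20/21 (about 0.952) on AUC under this convention.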

| Tracker | DP (VC) | DP (FM) | DP (LR) | DP (OCC) | DP (IV) | AUC (VC) | AUC (FM) | AUC (LR) | AUC (OCC) | AUC (IV) |
|---|---|---|---|---|---|---|---|---|---|---|
| FACF | 0.707 | 0.583 | 0.643 | 0.580 | 0.585 | 0.464 | 0.382 | 0.374 | 0.388 | 0.390 |
| AutoTrack [23] | 0.681 | 0.549 | 0.594 | 0.581 | 0.573 | 0.451 | 0.366 | 0.349 | 0.386 | 0.374 |
| ARCF [21] | 0.662 | 0.555 | 0.617 | 0.600 | 0.593 | 0.439 | 0.372 | 0.366 | 0.398 | 0.409 |
| STRCF [5] | 0.612 | 0.480 | 0.524 | 0.546 | 0.470 | 0.401 | 0.315 | 0.300 | 0.355 | 0.314 |
| MCCT-H [37] | 0.540 | 0.400 | 0.472 | 0.529 | 0.406 | 0.355 | 0.262 | 0.252 | 0.332 | 0.267 |
| KCC [29] | 0.494 | 0.417 | 0.466 | 0.496 | 0.459 | 0.322 | 0.271 | 0.253 | 0.311 | 0.295 |
| DSST [6] | 0.503 | 0.396 | 0.475 | 0.439 | 0.429 | 0.310 | 0.247 | 0.249 | 0.273 | 0.262 |
| CSRDCF [14] | 0.633 | 0.510 | 0.607 | 0.586 | 0.531 | 0.411 | 0.345 | 0.324 | 0.371 | 0.331 |
| BACF [13] | 0.625 | 0.507 | 0.562 | 0.527 | 0.513 | 0.415 | 0.336 | 0.317 | 0.345 | 0.348 |
| ECO-HC [7] | 0.615 | 0.505 | 0.527 | 0.547 | 0.559 | 0.416 | 0.346 | 0.294 | 0.358 | 0.354 |
| Staple_CA [16] | 0.503 | 0.377 | 0.425 | 0.512 | 0.434 | 0.332 | 0.243 | 0.228 | 0.327 | 0.269 |
| SAMF_CA [16] | 0.542 | 0.458 | 0.505 | 0.516 | 0.442 | 0.360 | 0.307 | 0.283 | 0.331 | 0.304 |
| Staple [50] | 0.447 | 0.373 | 0.426 | 0.430 | 0.421 | 0.310 | 0.263 | 0.243 | 0.288 | 0.283 |
| SRDCFdecon [30] | 0.578 | 0.469 | 0.542 | 0.530 | 0.502 | 0.384 | 0.313 | 0.303 | 0.342 | 0.316 |
| KCF [11] | 0.376 | 0.310 | 0.380 | 0.363 | 0.353 | 0.251 | 0.227 | 0.262 | 0.242 | 0.240 |
| SRDCF [12] | 0.480 | 0.397 | 0.385 | 0.451 | 0.361 | 0.322 | 0.264 | 0.199 | 0.286 | 0.241 |
| SAMF [28] | 0.538 | 0.456 | 0.499 | 0.528 | 0.458 | 0.340 | 0.303 | 0.263 | 0.334 | 0.287 |

| Tracker | Venue | Type | DP | AUC | FPS |
|---|---|---|---|---|---|
| FACF | - | HOG+CN+Grayscale | 0.727 | 0.496 | 51.412 |
| KAOT [18] | ’21TMM | Deep+HOG+CN | 0.712 | 0.482 | *14.045 |
| LUDT+ [51] | ’21IJCV | End-to-end | 0.668 | 0.460 | *43.592 |
| LUDT [51] | ’21IJCV | End-to-end | 0.572 | 0.402 | *57.638 |
| fDeepSTRCF [52] | ’20TIP | Deep+HOG+CN | 0.667 | 0.458 | *14.800 |
| fECO [52] | ’20TIP | Deep+HOG+CN | 0.668 | 0.454 | *21.085 |
| TADT [53] | ’19CVPR | End-to-end | 0.693 | 0.464 | *35.314 |
| UDT+ [55] | ’19CVPR | End-to-end | 0.658 | 0.462 | *40.135 |
| UDT [55] | ’19CVPR | End-to-end | 0.602 | 0.422 | *55.621 |
| DeepSTRCF [5] | ’18CVPR | Deep+HOG+CN | 0.734 | 0.506 | *5.816 |
| MCCT [37] | ’18CVPR | Deep+HOG+CN | 0.725 | 0.484 | *8.622 |
| ECO [7] | ’17CVPR | Deep+HOG | 0.722 | 0.502 | *10.589 |
| CoKCF [54] | ’17PR | Deep | 0.599 | 0.378 | *16.132 |
| CF2 [56] | ’15ICCV | End-to-end | 0.616 | 0.415 | *13.962 |

| Tracker | DP | AUC | FPS |
|---|---|---|---|
| FACF | 0.727 | 0.496 | 51.412 |
| FACF + CA | 0.687 | 0.481 | 21.578 |
| BACF + FCA | 0.701 | 0.484 | 46.007 |
| BACF + CA | 0.679 | 0.477 | 19.427 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Zhang, F.; Ma, S.; Yu, L.; Zhang, Y.; Qiu, Z.; Li, Z. Learning Future-Aware Correlation Filters for Efficient UAV Tracking. Remote Sens. 2021, 13, 4111. https://doi.org/10.3390/rs13204111
