1. Introduction

With decades of rapid urbanization, High-Rise Buildings (HRBs) have emerged as a distinctive landscape in urban areas in China. HRBs, which mainly serve as high-end commercial and business centers and residential apartments, have obvious advantages in improving the efficiency of resource and energy use [1]. With their unique characteristics and functions, HRBs have a great impact on the urban environment and socioeconomics [2,3,4]. For example, HRBs influence the local climate in urban areas by modifying the energy balance and roughness of the urban surface, which are closely related to the urban heat island effect [2,3]; the compact and complex geometric structure of HRBs makes their occupants vulnerable to contagious diseases [4]. Therefore, monitoring HRBs in urban areas can be useful in urban planning, environmental protection, ecological assessment, and so on.

Remote sensing has proven to be an efficient and cost-effective way to monitor urban dynamics at various temporal and spatial scales [5,6,7]. Most studies in the remote sensing community focus on urban land covers such as vegetation and impervious surfaces [8,9,10,11]. A few studies address land use mapping by considering spatial context [12,13]. However, the study of HRBs lags far behind that of other urban features, although HRBs are visually quite distinct in urban areas [14,15]. Large-scale monitoring of HRBs is still challenging, mainly for two reasons. On the one hand, no consistent and clear definition of HRBs has been given in the context of large-scale monitoring. This is because the physical properties of HRBs vary considerably among regions with different cultures, terrain, and other factors. On the other hand, HRBs have complex 3D geometric structures and surface materials, and these characteristics make it difficult to monitor HRBs routinely at large scale.

To address the above challenges, HRBs have been defined as spatial clusters of buildings, where each cluster represents spatially connected buildings with relatively uniform height [15]. The height threshold is the only parameter in defining HRBs. In the latest “Uniform standard for design of civil buildings GB 50352-2019” [16] in China, HRBs are defined as residential buildings above 27 m or public buildings with multiple floors above 24 m. Here we consider HRBs as building clusters with an average height above 25 m in general. A similar definition for HRBs has been proposed in the study of Local Climate Zones [2]. However, in real scenarios, a definition of HRBs based solely on height is not practical, because the precise height of HRBs is hard to measure and the height of HRBs varies across geographical space. To deal with this problem, local context is included in the definition, because HRBs are empirically distinct from other urban features within a specific region. Thus, HRBs are defined in consideration of both height and local context in a specific urban region.
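
The height-only part of this definition can be illustrated with a minimal sketch. It assumes a hypothetical gridded height map (metres per cell, 0 for no building) and 4-connectivity between cells; neither is specified in the text, and the local-context part of the definition is not modelled here. The function names are illustrative only.

```python
from collections import deque

HEIGHT_THRESHOLD_M = 25.0  # average-height threshold quoted in the text


def label_building_clusters(heights):
    """Group 4-connected building cells (height > 0) into clusters.

    `heights` is a 2D list of building heights in metres (0 = no building).
    Returns a list of clusters, each a list of (row, col) cells.
    """
    rows, cols = len(heights), len(heights[0])
    seen = [[False] * cols for _ in range(rows)]
    clusters = []
    for r in range(rows):
        for c in range(cols):
            if heights[r][c] <= 0 or seen[r][c]:
                continue
            # Breadth-first search over neighbouring building cells.
            queue, cells = deque([(r, c)]), []
            seen[r][c] = True
            while queue:
                y, x = queue.popleft()
                cells.append((y, x))
                for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                    inside = 0 <= ny < rows and 0 <= nx < cols
                    if inside and not seen[ny][nx] and heights[ny][nx] > 0:
                        seen[ny][nx] = True
                        queue.append((ny, nx))
            clusters.append(cells)
    return clusters


def hrb_mask(heights, threshold=HEIGHT_THRESHOLD_M):
    """Mark every cell of a cluster whose mean height exceeds the threshold."""
    mask = [[0] * len(heights[0]) for _ in heights]
    for cells in label_building_clusters(heights):
        mean_height = sum(heights[y][x] for y, x in cells) / len(cells)
        if mean_height > threshold:
            for y, x in cells:
                mask[y][x] = 1
    return mask
```

Because the cluster is labelled as a whole, a few lower buildings inside a tall cluster are still counted as HRB cells, which matches the cluster-based definition rather than a per-building threshold.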

Another opportunity to routinely monitor HRBs over large areas is the free access to recent Sentinel-2 data from the European Space Agency (ESA) [17]. Sentinel-2 data have advantages over traditional high spatial resolution satellite images in characterizing HRBs, including nadir viewing, 10 m spatial resolution, global coverage, and a short revisit interval. More specifically, nadir viewing reduces the complexity of the image features of HRBs with 3D geometric structures, and 10 m spatial resolution characterizes HRBs well while omitting unnecessary spatial detail. Global coverage and a short revisit interval support consistent, large-scale monitoring.

Almost in parallel with the availability of Sentinel-2 data, the emergence of deep learning models has essentially revolutionized the framework of remote sensing data analysis [13,18,19]. A deep learning model, which is biologically inspired by the human brain, integrates feature learning and parameter estimation into a single multilayer neural network. All parameters in the model are the weights between connected neurons. With the help of powerful computational resources, these weights can be learned from raw data and their labels in a rather brute-force way. The merit of the deep learning model lies in directly learning complex but useful features that cannot be easily designed by human engineers. Among deep learning models, Fully Convolutional Networks (FCN) [20,21], initially developed to segment natural images, have proven to be well suited to the pixel-wise classification of remote sensing data [22].

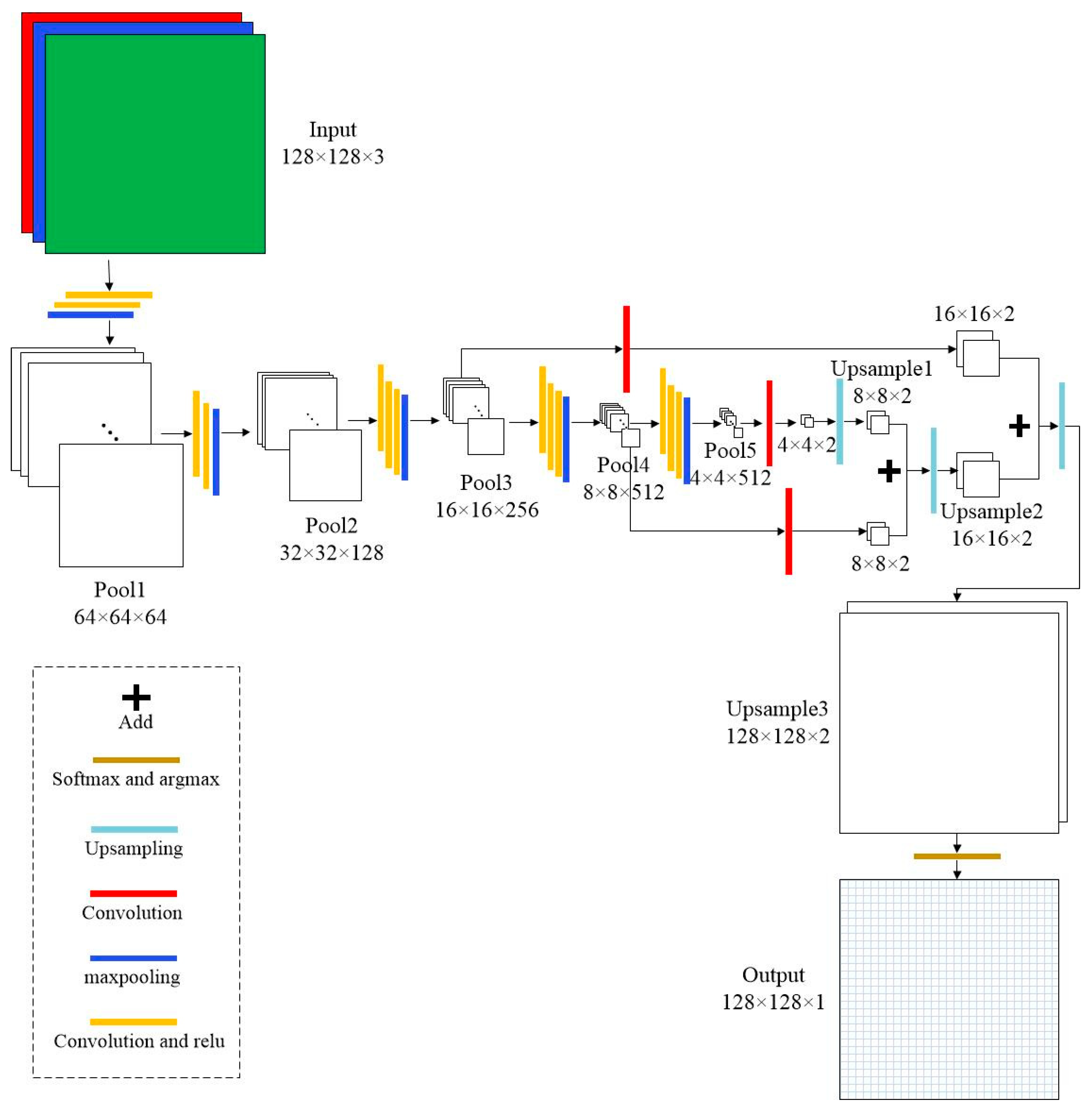

With the proposed definition of HRBs, an FCN-based method has been successfully developed to extract HRBs from Sentinel-2 images [14]. An overall accuracy above 90 percent, measured by F1 score, was obtained in the core of Xiong’an New Area by the FCN-based method, which is much better than that of traditional supervised classification methods. We have also adopted the FCN-based method to study the dynamics of HRBs in similar regions [15]. However, previous works mainly use Sentinel-2 data acquired in spring in a relatively local region. Image features of HRBs change considerably with factors such as culture, sun geometry, and land cover. It remains an open question whether the FCN-based method is effective at extracting HRBs from Sentinel-2 data acquired in other regions and/or seasons.

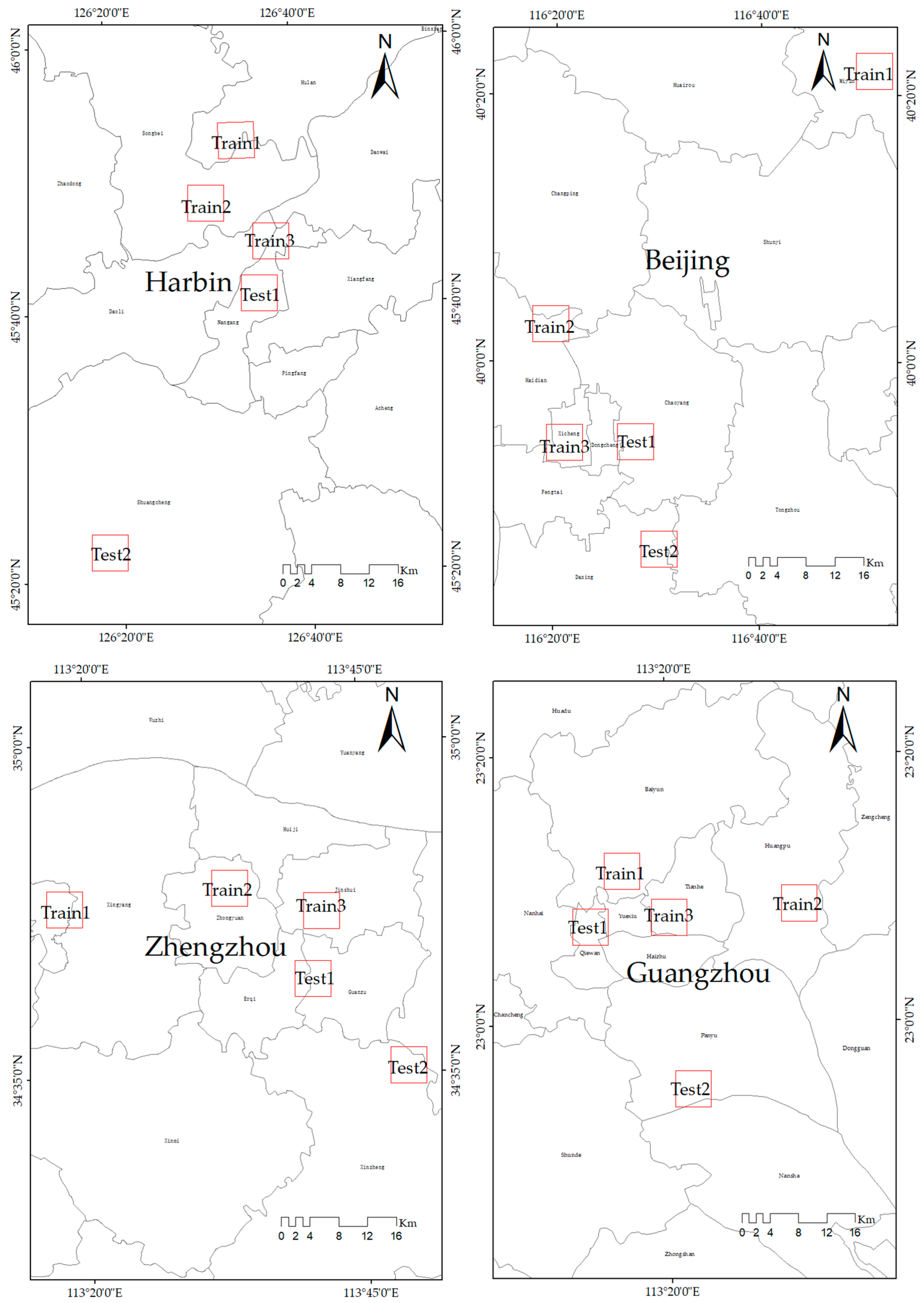

This paper extends previous work by studying the influence of seasonal and spatial factors on the effectiveness of the FCN model in HRB detection. More specifically, we study the performance of the FCN model on Sentinel-2 data in different seasons and regions. We also evaluate whether one or a few FCN models, rather than many local FCN models, can handle the large diversity of HRBs in different regions and seasons without sacrificing much overall accuracy. To this end, we selected four cities, namely Harbin, Beijing, Zhengzhou, and Guangzhou, as study regions. The four cities have diverse latitudes and landscapes. Additionally, we collected Sentinel-2 images from four seasons in each city. With these multi-region, multi-season data, we designed and conducted extensive experiments to evaluate the FCN-based method. Our study aims to answer three questions.

- (1) What are the performances of models built on different combinations of region and season?

- (2) Is it possible to build an effective model for all four seasons in a specific region?

- (3) Is it possible to build an effective model for all four regions and four seasons?

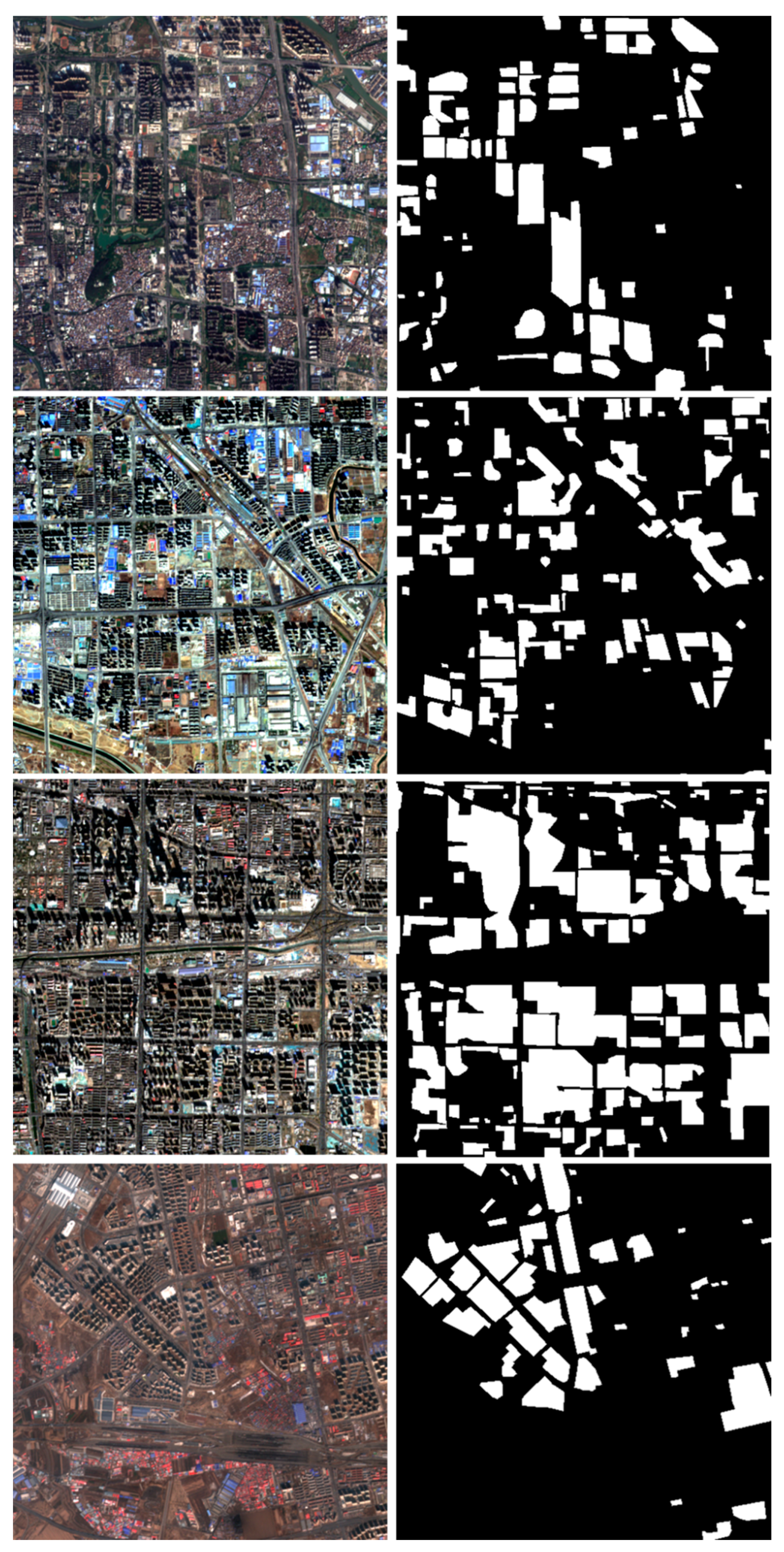

The paper is divided into five parts. Part one introduces our work. Part two describes the experimental data, including images and HRB samples, and the flowchart of our method. Part three presents the HRB detection results and their analysis. Part four discusses the results. The final part concludes the paper and provides perspectives for future work.

3. Results and Analysis

Totally 21 FCN models from E1, E2, and E3 were trained and validated according to the experimental design. The results were analyzed quantitatively and qualitatively as illustrated in

Figure 5,

Figure 6,

Figure 7,

Figure 8,

Figure 9,

Figure 10. To support a quantitative analysis, F1 scores of all trained FCN models on test data in each region are calculated to help analyze results from E1, E2, and E3 as shown in

Figure 5,

Figure 7 and

Figure 9. Meanwhile, to fulfill a qualitative analysis, predicted results on test1 data in each region along with their corresponding images and ground truth are shown in

Figure 6,

Figure 8, and

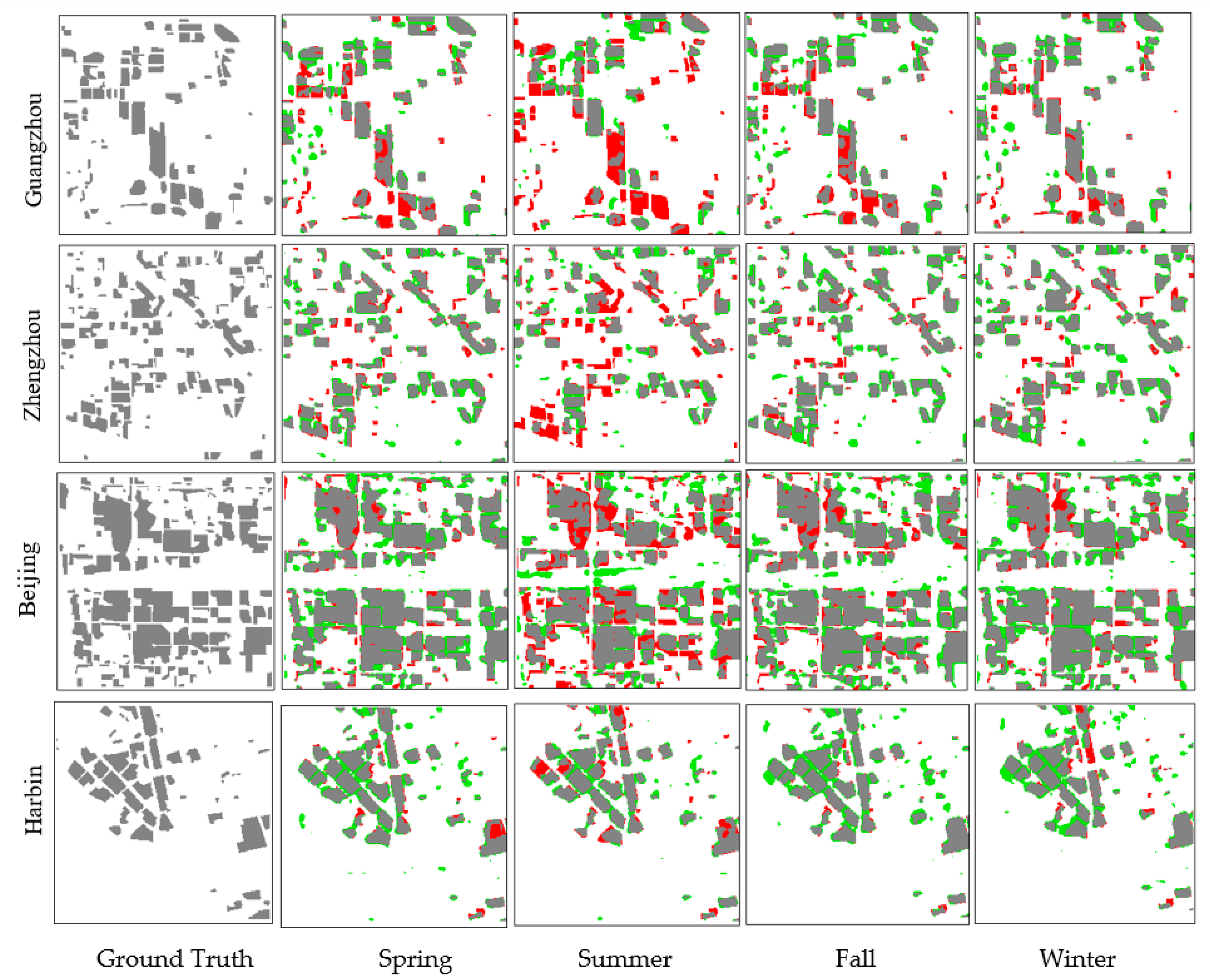

Figure 10 respectively. Also, for ease of visual interpretation of the Figures, true HRBs are colored in gray, omission errors are colored in red, and commission errors are colored in green. To answer three questions in our study, the results from E1, E2, and E3 are analyzed separately as follows.
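
As a minimal sketch of the evaluation just described, the function below computes a pixel-wise F1 score from two binary masks and assigns each pixel the color used in the figures. It assumes that "true HRBs" in gray means correctly detected HRB pixels and that masks are plain nested lists of 0/1; the function name and data layout are illustrative, not taken from the paper.

```python
def f1_and_error_map(predicted, truth):
    """Return (F1 score, per-pixel color map) for two binary masks.

    gray  = HRB pixel detected correctly (true positive)
    red   = HRB pixel missed by the model (omission error)
    green = non-HRB pixel labelled as HRB (commission error)
    None  = background predicted as background
    """
    tp = fp = fn = 0
    error_map = []
    for pred_row, true_row in zip(predicted, truth):
        colors = []
        for p, t in zip(pred_row, true_row):
            if p and t:
                tp += 1
                colors.append("gray")
            elif t:
                fn += 1
                colors.append("red")
            elif p:
                fp += 1
                colors.append("green")
            else:
                colors.append(None)
        error_map.append(colors)
    # F1 = 2TP / (2TP + FP + FN); defined as 0 when there are no positives.
    denominator = 2 * tp + fp + fn
    f1 = 2 * tp / denominator if denominator else 0.0
    return f1, error_map
```

Since F1 counts every boundary pixel that is off by one cell as an error, a result can match the ground truth well in spatial distribution and still score noticeably below 1.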

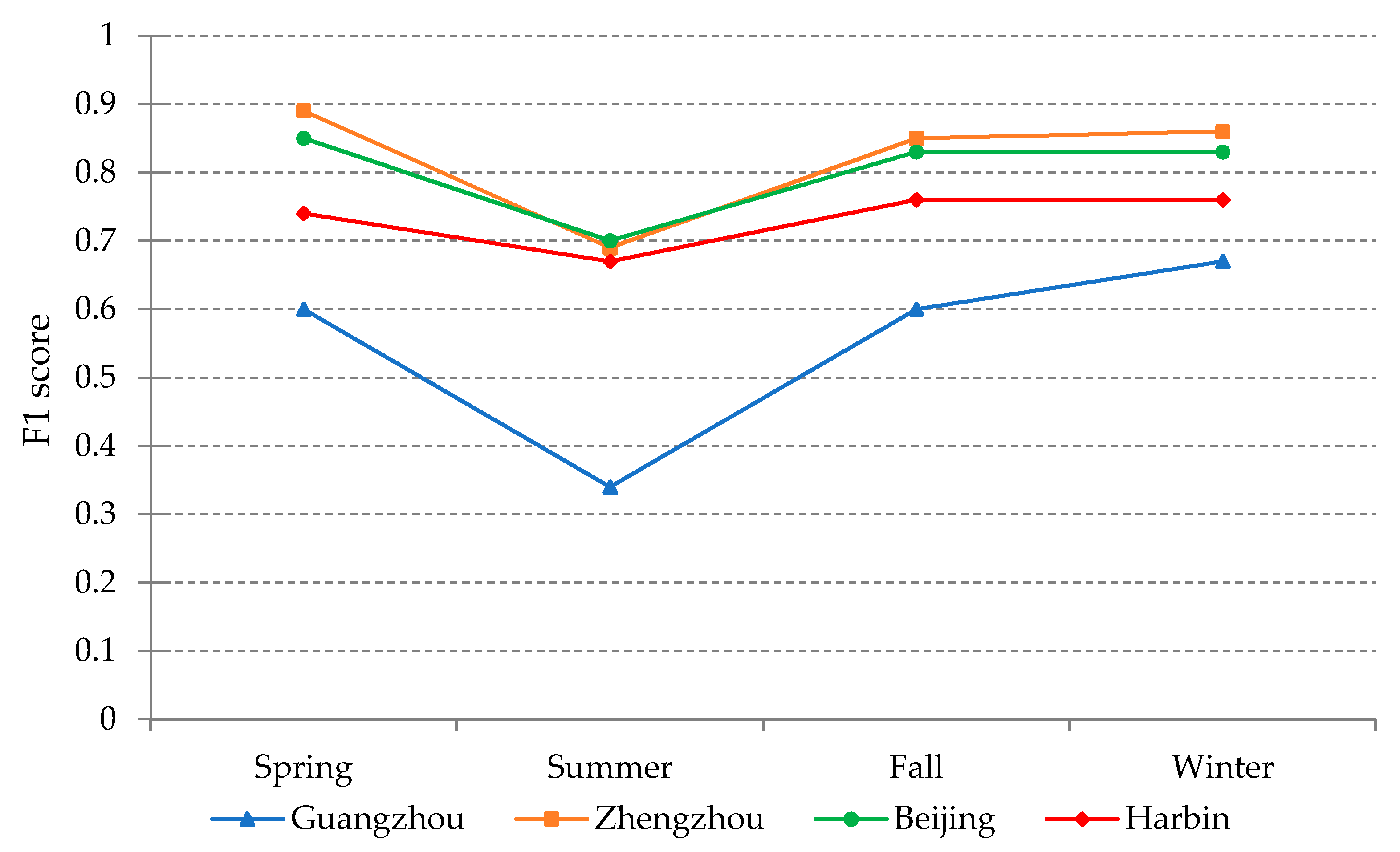

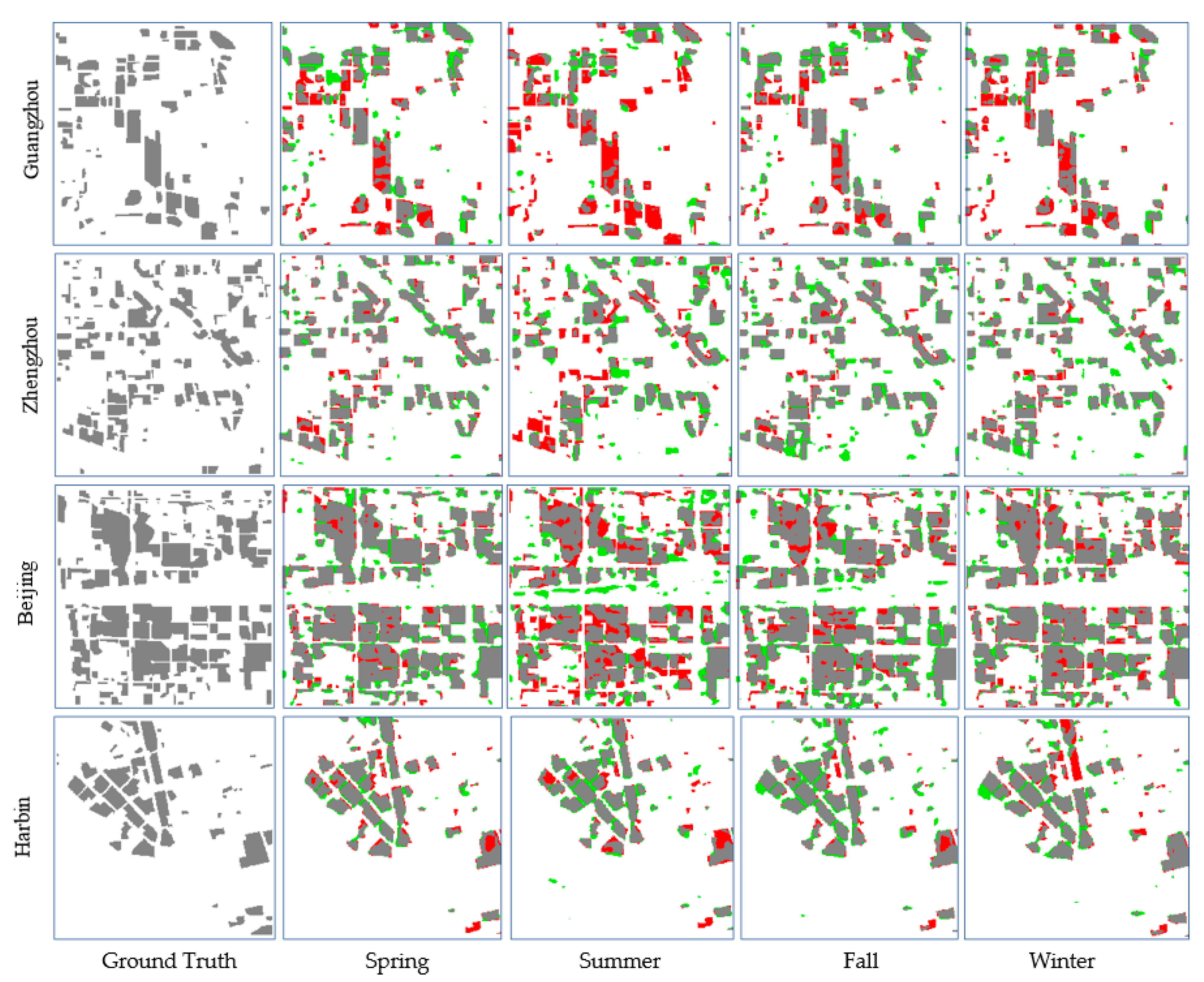

3.1. Results and Analysis for E1

Figure 5 shows the F1 scores of all trained FCN models on test data from the 16 combinations of region and season. Figure 6 shows the HRB detection results on the test1 data from the four regions. Each result in E1 was predicted by the FCN model trained on data from the same combination of region and season as the validation data.

As can be inferred from Figure 5, the F1 scores of FCN models trained and validated on the same combination of season and region fluctuate among seasons and regions. The best F1 score is about 0.90, obtained in Zhengzhou in spring; the worst is about 0.35, obtained in Guangzhou in summer. The accuracy of HRB detection differs among the four regions. Taking the seasonally averaged accuracy as the criterion, Guangzhou is the worst at about 0.55. Zhengzhou is slightly better than Beijing, which is about 0.8, and both are better than Harbin, which is about 0.75. In terms of season, the regionally averaged accuracy varies only a little; however, the seasonal change in accuracy varies a lot among regions. The most distinct change is in summer, which is the worst season for all regions. The summer results in Zhengzhou, Beijing, and Harbin are nearly the same at about 0.70, slightly worse than those of the other seasons, whereas the summer result in Guangzhou has an F1 score below 0.40, the largest decrease in accuracy relative to the other seasons. If the season can be chosen to obtain the yearly best result, the F1 score of detected HRBs can reach above 0.75 for all regions, with most of the errors on the boundary of HRBs.
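
The two summaries used above (seasonally averaged accuracy per region and the yearly best season) can be sketched as follows. The dictionary layout and function name are assumptions for illustration; the values in the usage note are made-up placeholders, not results from the paper.

```python
from statistics import mean


def summarize_by_region(f1_scores):
    """Summarize per-region F1 scores.

    `f1_scores` maps (region, season) -> F1 score.
    Returns {region: {"seasonal_mean", "best_season", "best_f1"}}.
    """
    by_region = {}
    for (region, season), score in f1_scores.items():
        by_region.setdefault(region, {})[season] = score

    summary = {}
    for region, seasonal in by_region.items():
        best_season = max(seasonal, key=seasonal.get)
        summary[region] = {
            "seasonal_mean": mean(seasonal.values()),
            "best_season": best_season,
            "best_f1": seasonal[best_season],
        }
    return summary
```

For example, with placeholder inputs `{("region_a", "spring"): 0.8, ("region_a", "summer"): 0.6}`, the function reports spring as the best season for `region_a`, which is the selection rule behind the "yearly best" figure.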

From Figure 6, we can see results similar to those in Figure 5 in terms of overall accuracy. Guangzhou has the lowest accuracy among the four regions, and summer has the lowest accuracy among the four seasons. However, the HRB detection results on test1 in every season and region are similar to the corresponding ground truth in spatial distribution, and it is clear that the main differences among seasons lie on the boundary of detected HRBs in all regions. Furthermore, in terms of the location accuracy of HRBs, the results in Guangzhou in summer do not look as poor as indicated in Figure 5.

The results from various combinations of region and season in E1 demonstrate the effectiveness of the FCN-based method, except in outlining the exact boundary of HRBs. The weakness of an FCN model trained on a specific season in detecting the boundary of HRBs is mainly caused by image features that change with the season. The seasonal change of sun geometry makes the image features of HRBs change in a rather complex way, and this becomes more distinct as building height increases. However, the ground truth does not account for this seasonal change of image features: only one ground truth mask of HRBs was manually extracted for each region, and it is the same for all four seasons in that region. Thus, a discrepancy on boundaries between the prediction and the ground truth is inevitable. Additionally, the poor performance of the trained FCN models in summer, especially in Guangzhou, is attributed to a near-nadir sun geometry: a small solar zenith angle largely weakens the image features of HRBs, which decreases the detection accuracy. However, the accuracy does not always increase with the solar zenith angle, as indicated by the results from Harbin. A large solar zenith angle lengthens shadows and causes them to overlap with other buildings, which further increases the complexity of HRB detection.
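
The link between solar zenith angle and shadow length can be made concrete with a short worked example. It is a simplification under stated assumptions: flat terrain, the sun at local solar noon (Sentinel-2 acquires imagery earlier in the day, so real zenith angles are larger), a solstice declination of about 23.44°, and approximate city latitudes of 23.1°N for Guangzhou and 45.8°N for Harbin. None of these values come from the paper.

```python
import math

SOLSTICE_DECLINATION_DEG = 23.44  # approximate solar declination at the June solstice


def noon_solar_zenith_deg(latitude_deg, declination_deg):
    """Solar zenith angle at local solar noon (simplified geometry)."""
    return abs(latitude_deg - declination_deg)


def shadow_length_m(height_m, zenith_deg):
    """Shadow length on flat ground: height * tan(zenith)."""
    if not 0 <= zenith_deg < 90:
        raise ValueError("zenith angle must be in [0, 90) degrees")
    return height_m * math.tan(math.radians(zenith_deg))


if __name__ == "__main__":
    building_height_m = 25.0  # the HRB height threshold used in the text
    cases = {
        "Guangzhou, June solstice": (23.1, SOLSTICE_DECLINATION_DEG),
        "Harbin, December solstice": (45.8, -SOLSTICE_DECLINATION_DEG),
    }
    for name, (lat, decl) in cases.items():
        zenith = noon_solar_zenith_deg(lat, decl)
        shadow = shadow_length_m(building_height_m, zenith)
        print(f"{name}: zenith {zenith:.1f} deg, shadow {shadow:.1f} m")
```

Under these assumptions the midsummer noon shadow of a 25 m building in Guangzhou is far shorter than one 10 m pixel, while the midwinter shadow in Harbin spans several pixels, which is consistent with both effects described above.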

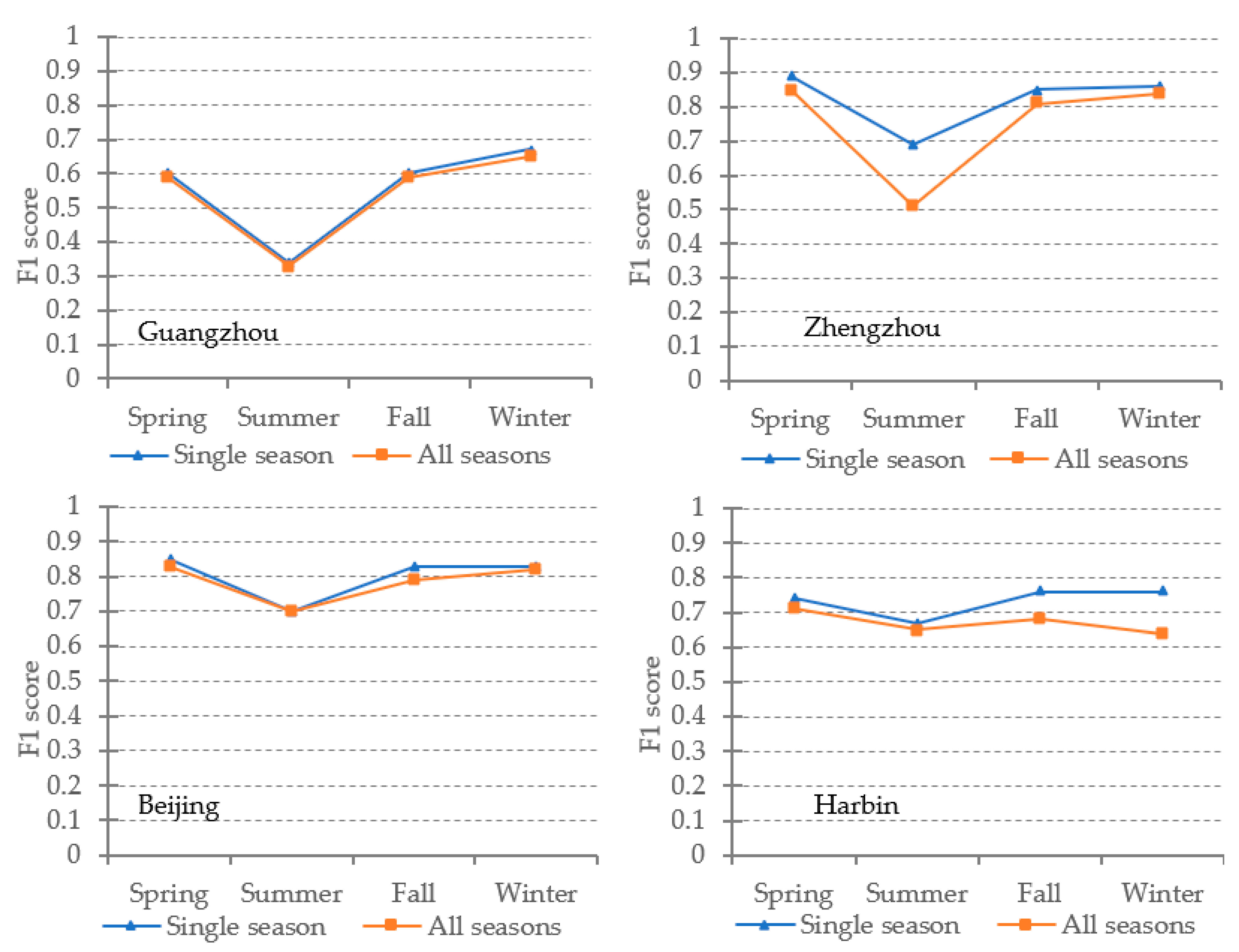

3.2. Results and Analysis for E2

Figure 7 shows the F1 scores of all trained FCN models on test data from the 16 combinations of region and season. Results from E1 are also included in Figure 7 for comparison. Figure 8 shows the HRB detection results on the test1 data of the four regions. For a given region, each result in E2 was predicted by the FCN model trained on seasonally combined samples from the same region as the validation data. For readability, we refer to the FCN model trained on samples from a specific combination of season and region as the single-season model, and to the FCN model trained on seasonally combined data from a specific region as the all-season model.

As can be seen from Figure 7, single-season models are slightly better than their corresponding all-season models in terms of overall accuracy in most cases. The differences between single-season models and all-season models vary among the four regions. More specifically, the differences in Guangzhou are tiny in all four seasons; the results in Beijing follow the same trend as in Guangzhou, except for a small difference in fall; Zhengzhou has the most distinct difference, about 0.2 in summer, while its differences are small in other seasons; and the differences in fall and winter in Harbin are about 0.1. From Figure 6 and Figure 8, all-season models achieve results similar to those of single-season models. The results are similar to the ground truth in terms of spatial distribution. The uncertainties again lie on the boundary of detected HRBs.

Results from E2 demonstrate the feasibility of replacing four single-season models with a single all-season model in most of the regions in our study, although the image features of HRBs change seasonally in a rather complex way due to the continual change of sun geometry in a specific region. The advantage of the all-season model can be largely attributed to the powerful feature learning ability of FCN. However, as the mechanism of FCN is still not well understood, it is hard to explain the shortcomings of the all-season model in some cases, such as summer in Zhengzhou. Meanwhile, the boundary uncertainty in the HRB detection results cannot be reduced by combining seasons, for reasons similar to those discussed for E1.

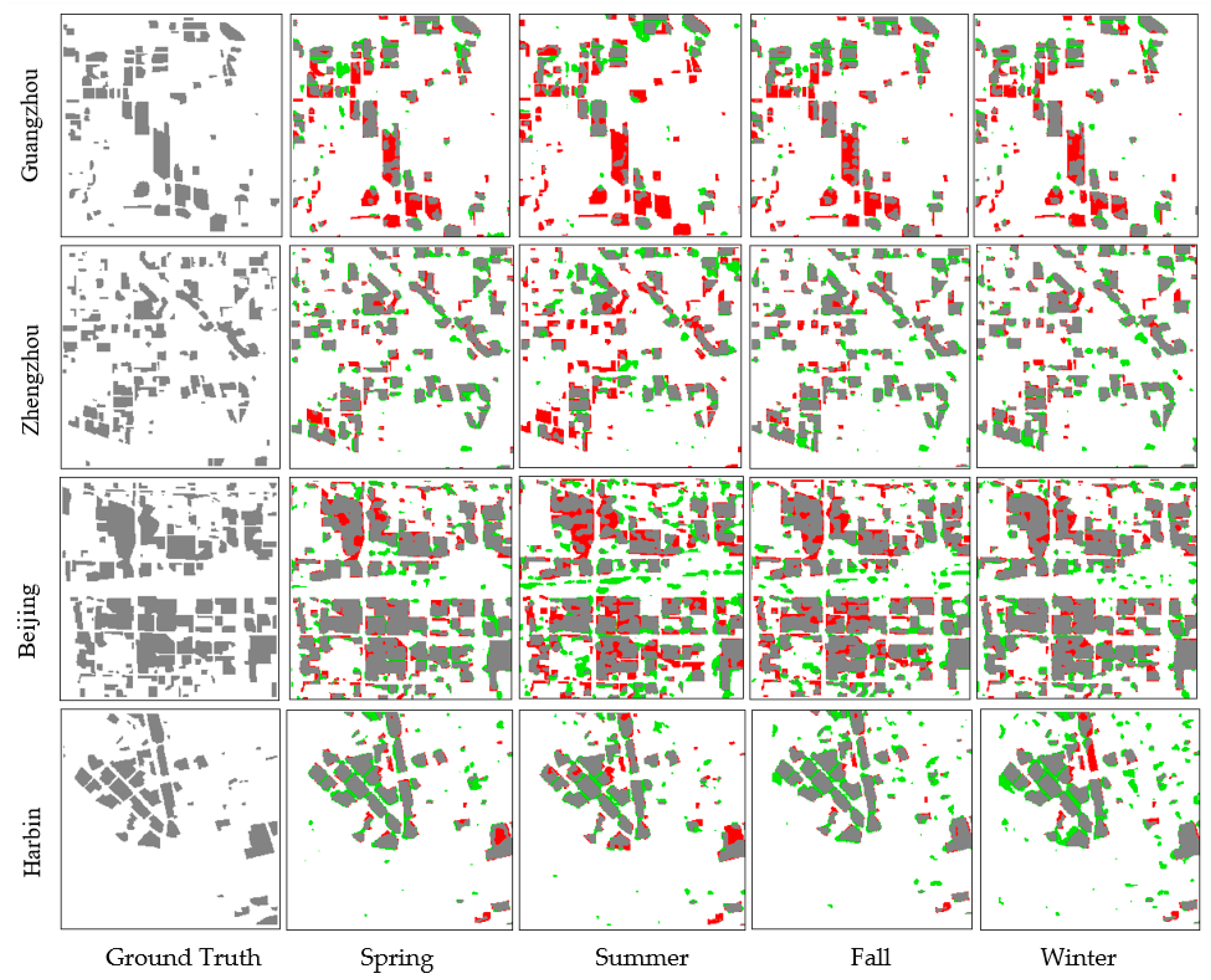

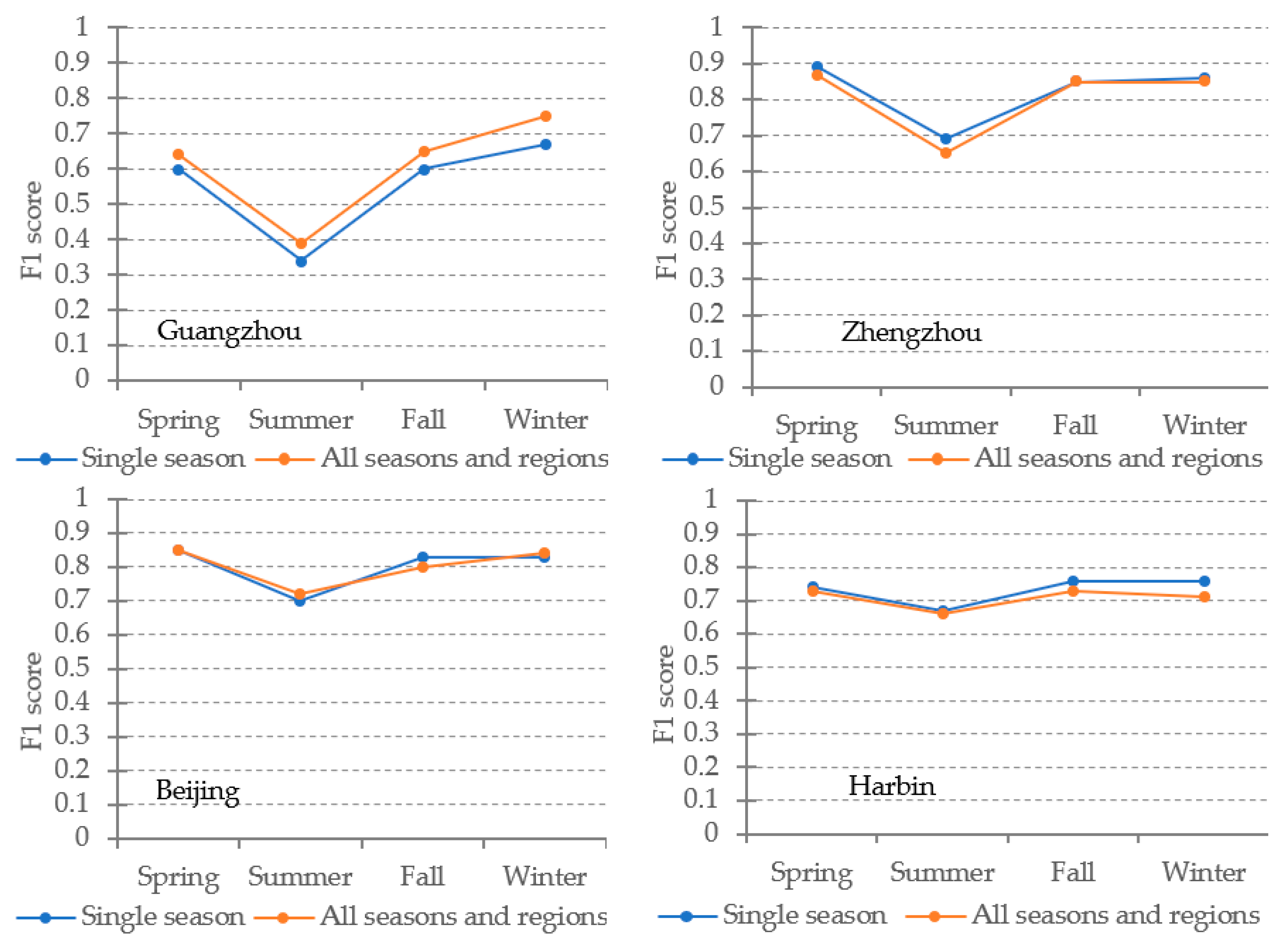

3.3. Results and Analysis for E3

Figure 9 shows the F1 scores of all trained FCN models on test data from the 16 combinations of region and season. Results from E1 are also included for comparison. Figure 10 shows the HRB detection results on the test1 data of the four regions. The FCN model was trained on seasonally and regionally combined data. For convenience, we refer to the FCN model trained on seasonally and regionally combined data as the all-seasons-and-regions model.
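
The three training setups can be summarized in a short sketch that enumerates which (region, season) samples feed each model. The tuple-based representation and function name are illustrative assumptions; only the grouping logic and the total of 21 models come from the text.

```python
from itertools import product

REGIONS = ("Harbin", "Beijing", "Zhengzhou", "Guangzhou")
SEASONS = ("spring", "summer", "fall", "winter")


def training_configurations():
    """List (experiment, training subsets) for every FCN model.

    E1: one model per (region, season) pair       -> 16 models
    E2: one model per region, all seasons pooled  ->  4 models
    E3: one model for all regions and seasons     ->  1 model
    """
    all_pairs = list(product(REGIONS, SEASONS))
    configs = []
    for pair in all_pairs:
        configs.append(("E1", [pair]))
    for region in REGIONS:
        configs.append(("E2", [(region, season) for season in SEASONS]))
    configs.append(("E3", all_pairs))
    return configs
```

Each model, whatever its training subset, is evaluated on the same 16 region and season test combinations, which is what makes the E1, E2, and E3 scores directly comparable.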

As can be seen from Figure 9, single-season models are close to the all-seasons-and-regions model in terms of overall accuracy, except for the results in Guangzhou. The differences between the single-season models and the all-seasons-and-regions model vary slightly among the four regions. More specifically, the accuracy of the all-seasons-and-regions model is consistently better than that of the single-season models in Guangzhou, with an average difference of about 0.05. Single-season models perform slightly better than the all-seasons-and-regions model in Zhengzhou and Harbin, especially in spring and summer in Zhengzhou and in fall and winter in Harbin. In Beijing, the difference fluctuates within a small range in summer and fall. From Figure 6, Figure 8, and Figure 10, the all-seasons-and-regions model achieves results similar to those of the single-season models and the all-season models. All three sets of results are similar to the ground truth in terms of spatial distribution. The differences among the three groups of results indicated in Figure 9 cannot be easily observed through visual interpretation, owing to the spatial cluster property of HRBs. Nevertheless, one thing in common is that the uncertainties of the results mostly lie on the boundary of detected HRBs.

Results from E3 demonstrate the feasibility of replacing the 16 single-season models with a single all-seasons-and-regions model in most of the cases in our study. Compared with the all-season models in E2, the all-seasons-and-regions model is more accurate and stable in HRB detection, regardless of how the image features of HRBs change seasonally and regionally. The advantage of the all-seasons-and-regions model is largely attributed to the powerful feature learning ability of the FCN model. Owing to the black-box property of the FCN model, as discussed for E2, similar reasons hold for the difficulty in explaining the shortcomings of the all-seasons-and-regions model in some cases, such as in Guangzhou. Meanwhile, the boundary uncertainty in the HRB detection results cannot be reduced by combining seasons and regions when preparing the training samples.

4. Discussion

Our results show that the performance of the FCN-based method fluctuates among seasons and regions. The best F1 score reaches 0.9 in Zhengzhou in spring, while the worst is below 0.4 in Guangzhou in summer. Despite the large change in F1 scores, the spatial patterns of the detected HRBs are well preserved for all seasons and regions, because most errors in the results are located on the boundary of HRBs. The detected HRBs are therefore of high value if the spatial pattern of HRBs is the key monitoring element. Furthermore, because HRBs are a special type of land use, the monitoring interval for HRBs is usually longer than a year. In this sense, the FCN-based method can achieve a yearly best F1 score above 0.75, with most of the errors located on the boundary of HRBs, for regions with a large diversity in culture, latitude, and landscape. These results largely support the effectiveness of the method in extracting HRBs from Sentinel-2 data over large regions, provided the best season can be chosen.

Our results also indicate that using summer data for inference leads to relatively poor results compared with data from other seasons. This is probably because the image features of HRBs are weakened by the small solar zenith angle in summer in the four selected regions. Thus, summer data are not recommended for extracting HRBs when timing is less important than accuracy. A related problem is that the FCN-based method may be ineffective in regions around the equator. In regions of very low latitude, the solar zenith angle is always small and may even approach zero, so the features of HRBs in the image are nearly lost. The situation is worse in underdeveloped regions, where HRBs are sparsely located in urban areas and are relatively small compared with those in developed urban areas.

One unsolved problem in this study is the boundary effect of HRBs in the detection results. It is mainly due to the seasonal change in the length and direction of HRB shadows in urban areas, which is caused by the change of sun geometry. Shadow is an important component of the image features of HRBs in our study. Because HRBs are defined as spatial clusters, changes in shadows inside a cluster may not hinder HRB detection as long as enough image features remain for learning. However, shadows on the edge of a cluster can cause uncertainties in both the training and inference stages of the FCN model. This is especially obvious when detecting tall, isolated buildings. One way to handle the problem may be to revise the definition of HRBs more rigorously. The revised definition should be minimally affected by shadows, independent of season, and practical for collecting HRB samples.