Situational Awareness of Large Infrastructures Using Remote Sensing: The Rome–Fiumicino Airport during the COVID-19 Lockdown

Abstract

1. Introduction

- the monitoring of evapotranspiration, i.e., the vapor contribution of plants and oceans to the atmosphere, in the Snake River Aquifer in Southern Idaho;

- the estimation of the snow water equivalent, i.e., the equivalent amount of liquid water stored in the snowpack, in Arizona; and

- the determination of runoff coefficient maps for use in models that estimate peak flood discharges in Missouri [7].

2. Materials

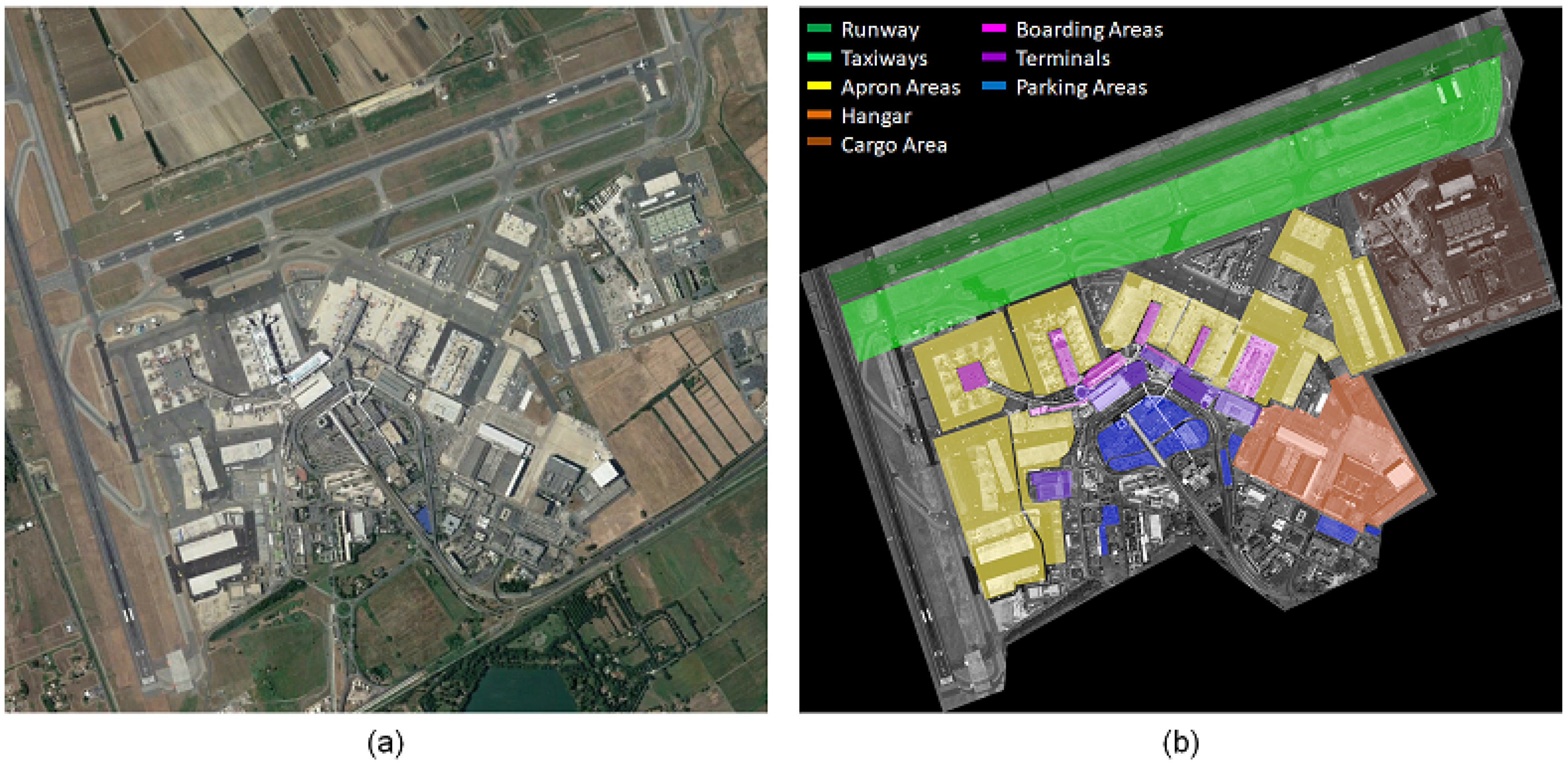

2.1. Test Site: Rome–Fiumicino International Airport

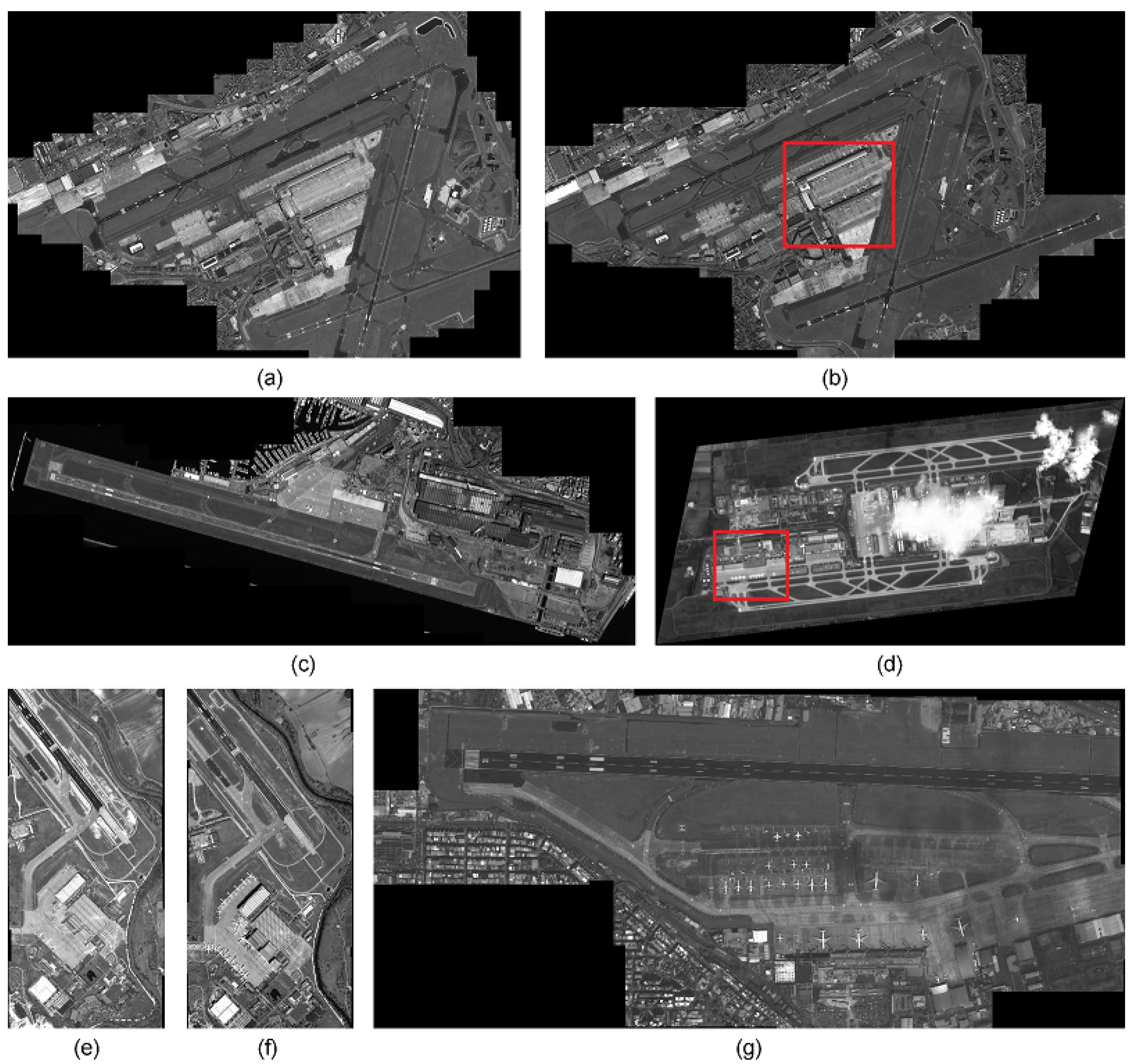

2.2. Sentinel-1 Time Series

- long-term comparison of the airport activities between different years, i.e., the regular airport activities in four months of 2019 versus the occasional activities registered in the same four months of 2020; and

- short-term comparison before and after the COVID-19 pandemic lockdown of Spring 2020, officially imposed in Italy from 9 March until 18 May 2020.
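The two comparisons can be sketched by building the 6-day acquisition grid of each stack and splitting the 2020 stack at the lockdown date. This is a minimal sketch, assuming the nominal 6-day revisit stated for the change maps and that the 2020 acquisition listed as "missed" in the Sentinel-1 table is the 2 February 2020 slot (an inference from the 6-day grid, not stated in the table). Counting the 9 March acquisition as "during lockdown" is an arbitrary choice, and the helper name `acquisition_grid` is hypothetical.

```python
from datetime import date, timedelta


def acquisition_grid(first: date, last: date, step_days: int = 6) -> list:
    """Nominal acquisition dates from `first` to `last` (inclusive), every `step_days`."""
    dates = []
    current = first
    while current <= last:
        dates.append(current)
        current += timedelta(days=step_days)
    return dates


LOCKDOWN_START = date(2020, 3, 9)  # lockdown officially imposed in Italy
MISSED_2020 = date(2020, 2, 2)     # assumption: inferred slot of the missed acquisition

# Long-term comparison: the two stacks cover the same period of two years.
stack_2019 = acquisition_grid(date(2019, 1, 2), date(2019, 5, 8))
stack_2020 = [d for d in acquisition_grid(date(2020, 1, 3), date(2020, 5, 8))
              if d != MISSED_2020]

# Short-term comparison: split the 2020 stack at the lockdown date.
before = [d for d in stack_2020 if d < LOCKDOWN_START]
during = [d for d in stack_2020 if d >= LOCKDOWN_START]

print(len(before), len(during))  # 10 11
```

Note that the lockdown lasted until 18 May 2020, so every 2020 acquisition from 9 March onward falls inside it.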

2.3. Sentinel-2 Acquisitions

- The S-2 acquisition date has to lie within the chosen S-1 observation interval.

- Each S-2 image is the acquisition with the lowest cloud coverage within the observation interval.

- The 2019 and 2020 acquisitions should, where possible, fall in the same period of the year, in order to reduce the impact of seasonal vegetation changes.
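The first two criteria amount to filtering candidate scenes by date and keeping the least cloudy one, while the third is a soft check on the seasonal offset between the two years. A minimal sketch follows; the `S2Scene` record, the helper names, and the candidate cloud-cover values are hypothetical and do not represent the catalog actually queried.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional, Sequence


@dataclass(frozen=True)
class S2Scene:
    acquired: date
    cloud_cover: float  # scene cloud coverage in percent


def select_scene(scenes: Sequence[S2Scene], start: date, end: date) -> Optional[S2Scene]:
    """Criteria 1 and 2: least cloudy scene acquired within [start, end], or None."""
    candidates = [s for s in scenes if start <= s.acquired <= end]
    return min(candidates, key=lambda s: s.cloud_cover, default=None)


def seasonal_offset_days(a: S2Scene, b: S2Scene) -> int:
    """Criterion 3 (soft): difference in day of year between two scenes."""
    return abs(a.acquired.timetuple().tm_yday - b.acquired.timetuple().tm_yday)


# Hypothetical candidates; the 1 June scene is clearer but outside the interval.
scenes_2020 = [S2Scene(date(2020, 3, 1), 60.0),
               S2Scene(date(2020, 4, 5), 30.0),
               S2Scene(date(2020, 6, 1), 5.0)]
best = select_scene(scenes_2020, date(2020, 1, 3), date(2020, 5, 8))
print(best.acquired)  # 2020-04-05
```

Keeping the seasonal criterion as a separate check, rather than a hard filter, mirrors its "possibly" wording: a pair can be accepted with a larger offset if no better-aligned cloud-free scene exists.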

2.4. VHR Pleiades Acquisitions

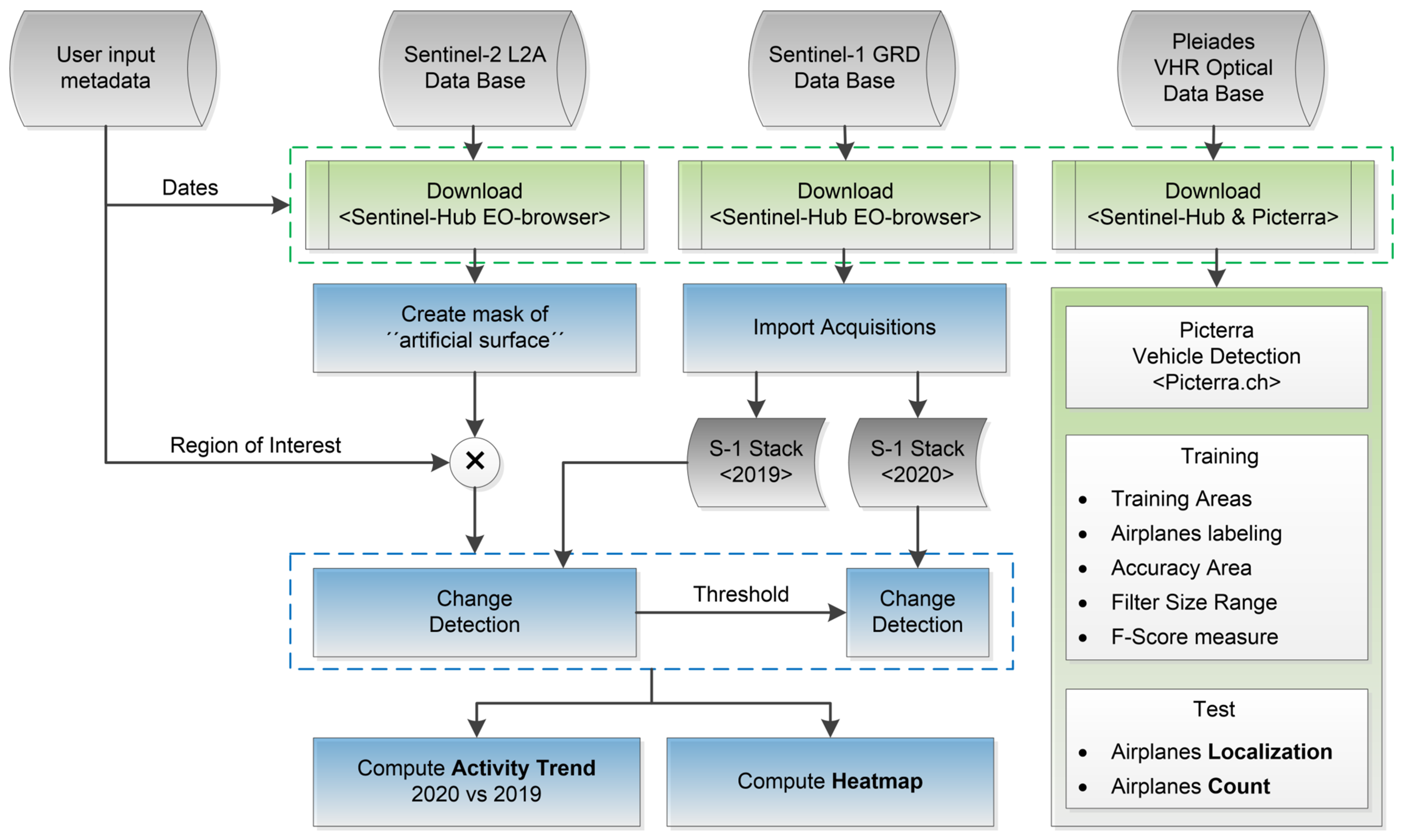

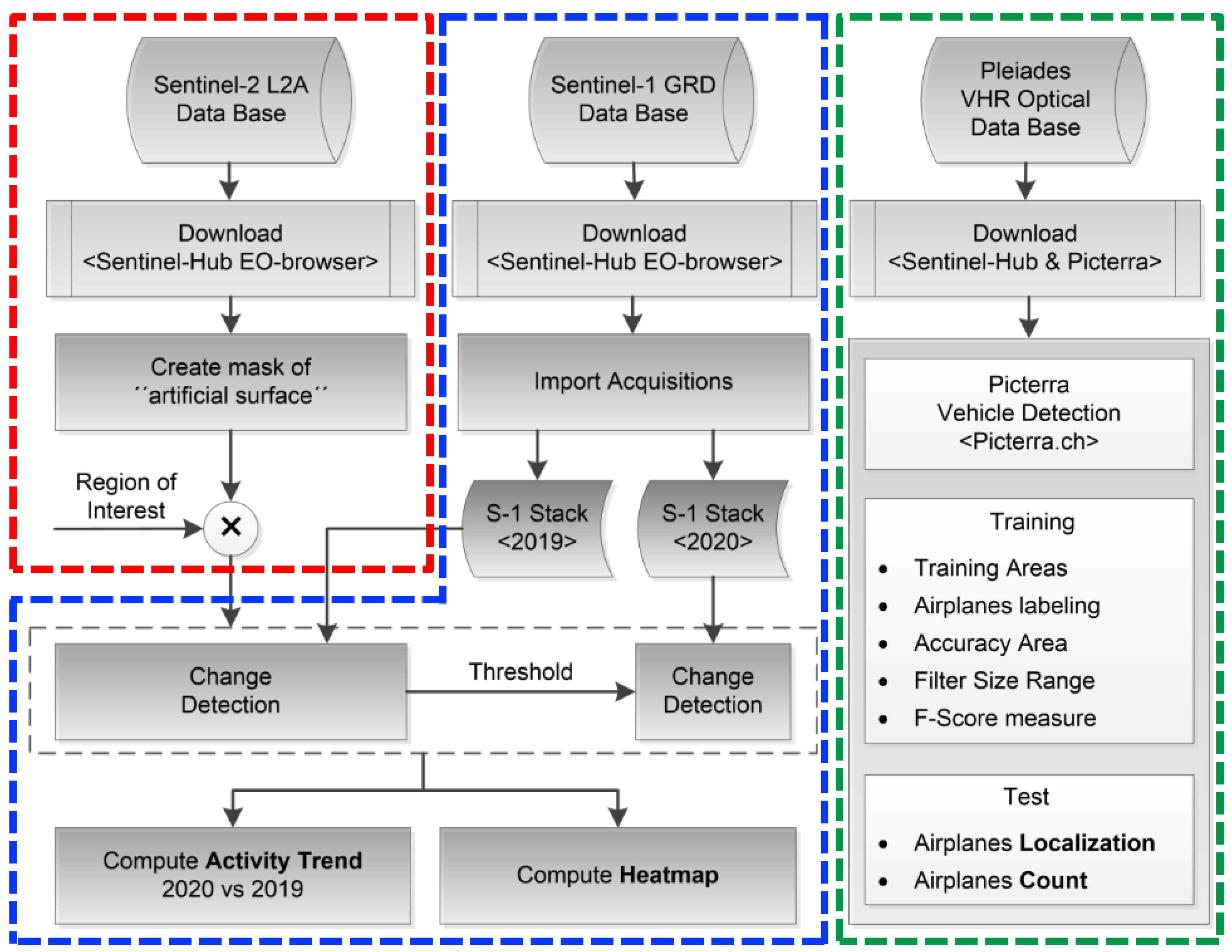

3. Methodology

- Level-1 processing, which generates quantitative measurements of key variables over the selected target area, i.e., the artificial-surface mask extracted from cloud-free S-2 acquisitions, the heat-map describing the activity within the airport from an S-1 time series, and, finally, the count and location of the airplanes from Pleiades data.

- Level-2 processing, which generates SA insights by combining the results obtained at Level-1 with the external in situ measurements depicted in Figure 2b.

3.1. Level-1 Processing

3.1.1. Artificial Surface Mapping from S-2 Data

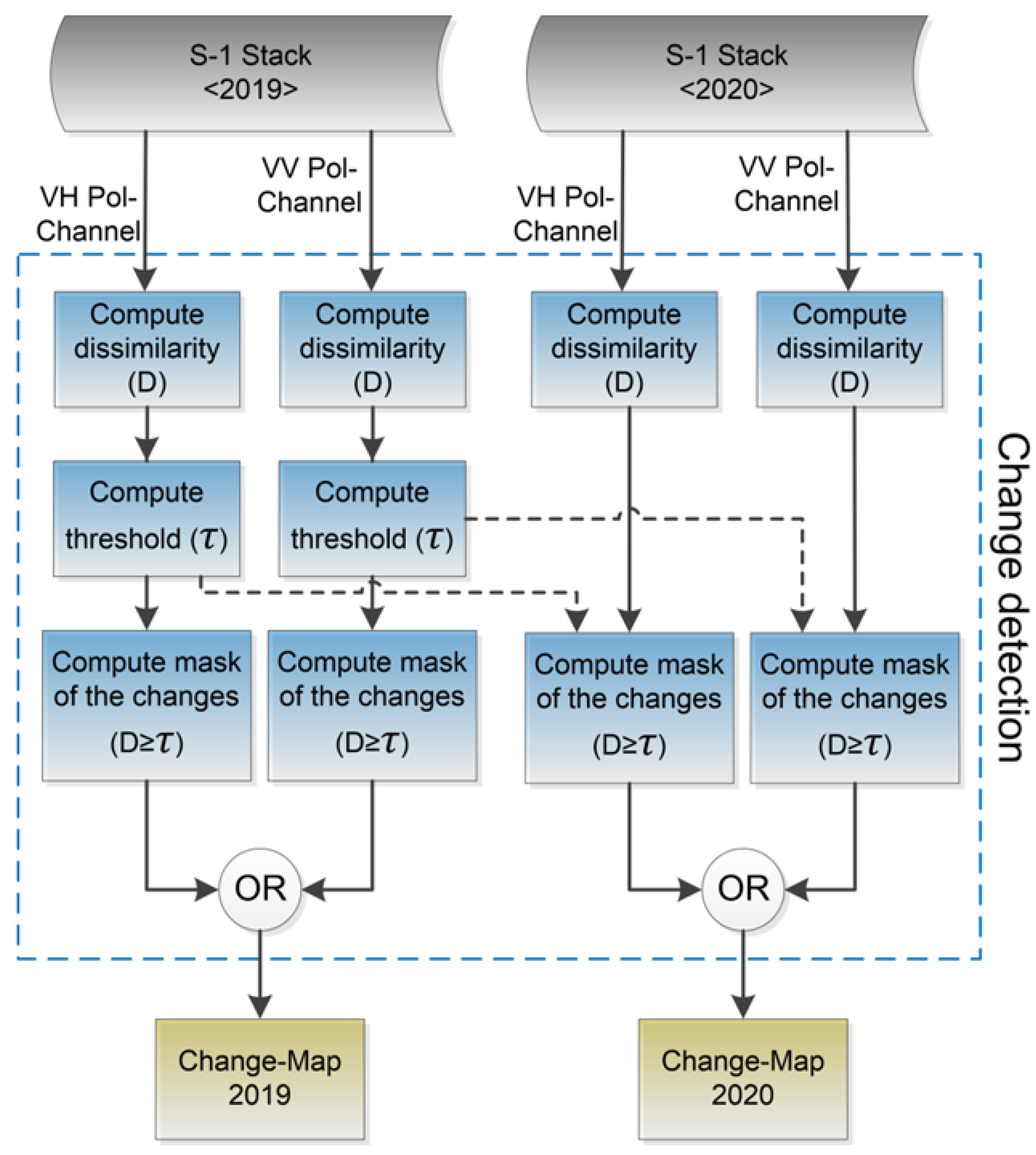
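The NDBI entry in the Abbreviations list indicates that the artificial-surface mapping relies on the Normalized Difference Built-up Index, the normalized difference between shortwave-infrared (SWIR) and near-infrared (NIR) reflectance. Below is a minimal sketch, assuming Sentinel-2 band B11 as SWIR and B8 as NIR (B11 must first be resampled from 20 m to the 10 m grid) and a hypothetical threshold of 0. The threshold and any post-processing used in the study are not given in this section. The resulting boolean mask is presumably what Algorithm 1 combines with the change maps as ART.

```python
import numpy as np


def ndbi(swir: np.ndarray, nir: np.ndarray) -> np.ndarray:
    """NDBI = (SWIR - NIR) / (SWIR + NIR); pixels with a zero denominator are set to 0."""
    swir = np.asarray(swir, dtype=np.float64)
    nir = np.asarray(nir, dtype=np.float64)
    denom = swir + nir
    out = np.zeros_like(denom)
    np.divide(swir - nir, denom, out=out, where=denom != 0)
    return out


def artificial_surface_mask(swir, nir, threshold: float = 0.0) -> np.ndarray:
    """Boolean mask of pixels whose NDBI exceeds a (hypothetical) threshold."""
    return ndbi(swir, nir) > threshold


# Toy reflectance values (digital numbers) on a common 10 m grid.
b11 = np.array([[300, 100], [0, 250]])
b8 = np.array([[100, 300], [0, 250]])
print(artificial_surface_mask(b11, b8))
```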

3.1.2. Activity Level Determination from S-1 Time Series

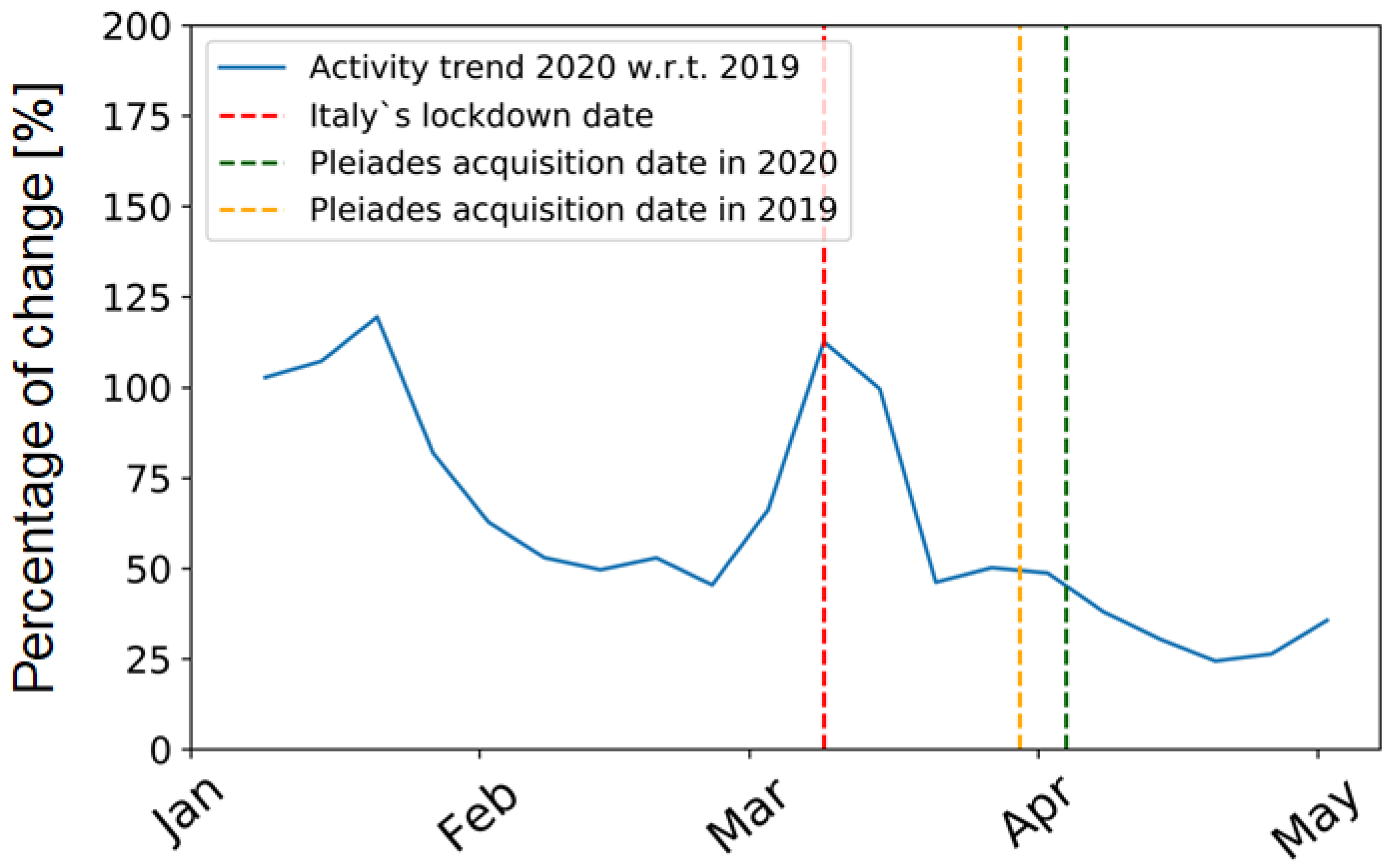

- Activity Trend: a plot showing the relative change (in percentage) of the activity in 2020 with respect to 2019. It is computed as the ratio between the two temporal trends, $\mathrm{AT}(t) = 100\% \cdot T_{2020}(t) / T_{2019}(t)$ (Equation 4), where, in this work, the temporal trend $T_{y}(t)$ is the average of the change map of year $y$ at each acquisition time $t$. A percentage lower than 100% indicates higher activity in 2019, whereas a value above 100% indicates more changes in 2020 than in 2019.

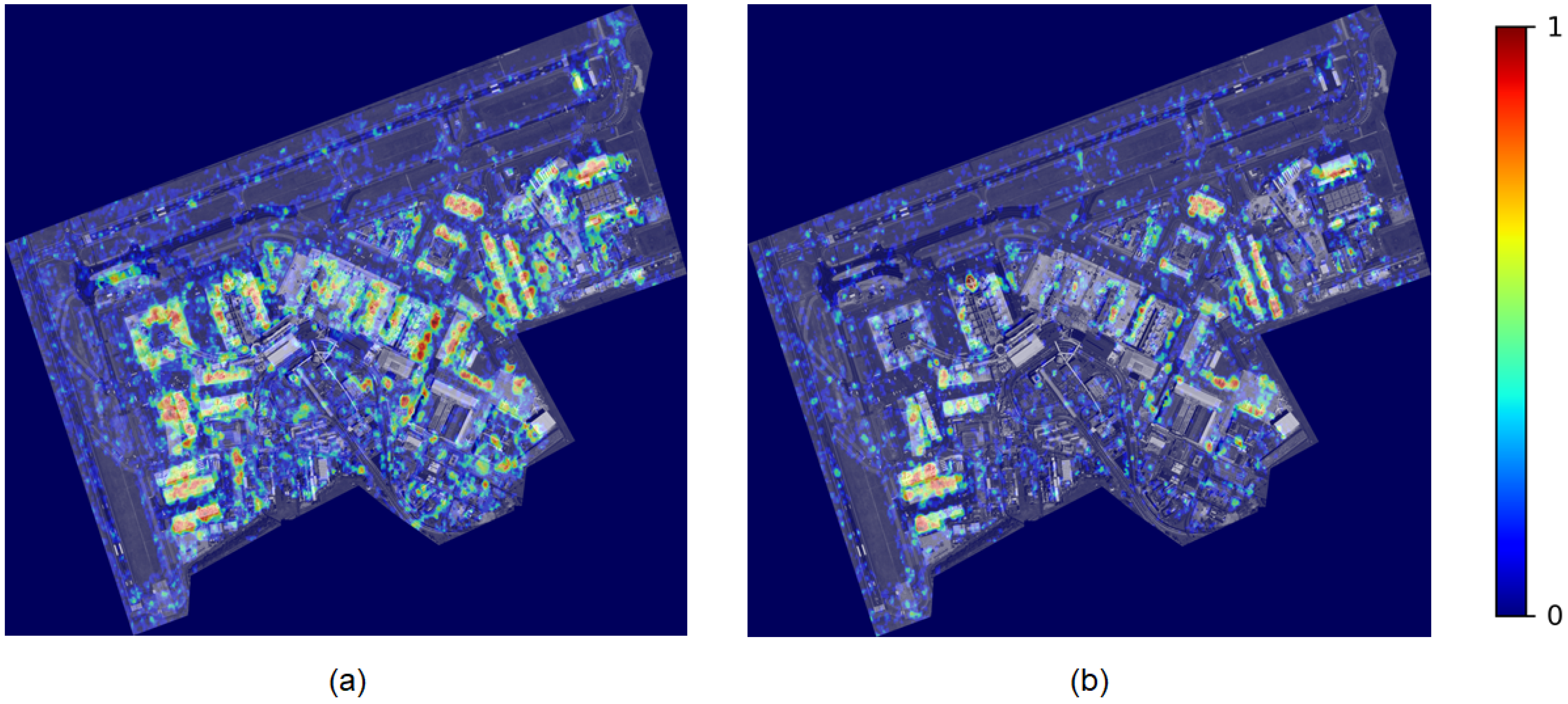

- Heat-Map: a digital map showing the degree of activity detected for each pixel during the whole observation time. We compute it as the temporal average of the binary change map $C(p, t_n) \in \{0, 1\}$ in a single pixel $p$: $H(p) = \frac{1}{N} \sum_{n=1}^{N} C(p, t_n)$ (Equation 5), where $N$ is the number of change maps in the time series. A pixel detected as changed in every change map has $H(p) = 1$.

- Spatial and temporal resolution preservation: the change maps have the same spatial and temporal resolution as the Sentinel-1 Ground Range Detected (GRD) data, i.e., 10 m and 6 days, respectively. In this way, it is possible not only to summarize the level of activity over the whole observation period (the heat-map), but also to provide time-related variables, such as the temporal activity dynamics (the activity trend).

- Accuracy: by exploiting the statistics of the SAR amplitudes, the detection accuracy can be increased while using fewer data. A statistical measure defines the dissimilarity between two SAR amplitude samples.

- Robustness: the algorithm does not depend on a specific SAR product or pre-processing chain. Indeed, it can process Single-Look Complex (SLC) data as well as multi-looked data with any number of looks, without changes to the algorithm.

- Automatic: the algorithm automatically sets a threshold for the change detection. The user can define a level of sensitivity to detect even smaller changes (higher sensitivity) or identify only the largest ones (lower sensitivity).

- Comparable performance: the algorithm computes a single threshold and applies it to other stacks of the same size. In this way, similar activity levels are mapped to identical output values, e.g., in the heat-map.
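Equations 6 and 8 are not reproduced in this section. To illustrate the Accuracy, Automatic, and Comparable performance properties listed above, the sketch below swaps in the generalized likelihood ratio (GLR) for speckled data discussed by Deledalle et al. (see References), assuming fully developed speckle with L-look Gamma-distributed intensities. For two amplitudes it reduces to D = 2L ln((a1² + a2²) / (2 a1 a2)), which is zero for identical samples and depends only on their ratio. Because of this scale invariance, a threshold can be set once from simulated no-change speckle at a user-chosen false-alarm rate (the sensitivity) and reused on any stack with the same number of looks. This is a stand-in, not necessarily the authors' Equation 6 or Equation 8. The function names and the value of L are hypothetical; the equivalent number of looks of real GRD data would have to be estimated.

```python
import numpy as np


def glr_dissimilarity(a1, a2, looks: float = 1.0, eps: float = 1e-12):
    """-log GLR between amplitude samples, assuming L-look Gamma intensities.

    Equivalent to 2L * ln((a1**2 + a2**2) / (2 * a1 * a2)); 0 when a1 == a2.
    `eps` only guards against log(0) for zero amplitudes.
    """
    i1 = np.square(np.asarray(a1, dtype=np.float64)) + eps
    i2 = np.square(np.asarray(a2, dtype=np.float64)) + eps
    return looks * (2.0 * np.log(0.5 * (i1 + i2)) - np.log(i1) - np.log(i2))


def no_change_threshold(looks: float, pfa: float, n: int = 200_000, seed: int = 0) -> float:
    """Threshold on D with false-alarm rate ~`pfa`, from simulated unchanged speckle.

    Both simulated samples share the same (unit) mean reflectivity, so values of D
    above the returned quantile occur with probability ~pfa when nothing changed.
    """
    rng = np.random.default_rng(seed)
    i1 = rng.gamma(shape=looks, scale=1.0 / looks, size=n)  # unit-mean intensities
    i2 = rng.gamma(shape=looks, scale=1.0 / looks, size=n)
    d = glr_dissimilarity(np.sqrt(i1), np.sqrt(i2), looks)
    return float(np.quantile(d, 1.0 - pfa))


# Higher sensitivity (larger pfa) gives a lower threshold, so smaller changes pass.
lam_sensitive = no_change_threshold(looks=4.0, pfa=0.10)
lam_strict = no_change_threshold(looks=4.0, pfa=0.01)
print(lam_sensitive < lam_strict)  # True
```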

| Algorithm 1: Pseudocode for the generation of the activity trend and heat-maps from S-1 GRD temporal series acquisitions. |
|---|
| INPUT: Stack 2019 (VV, VH), Stack 2020 (VV, VH), ART (Equation 3) |
| OUTPUT: Activity Trend, Heat-Map 2019, Heat-Map 2020 |
| compute: dissimilarity measure $D$ (Equation 6) |
| for each year $Y \in \{2019, 2020\}$ do |
| for each polarization $q \in \{\mathrm{VV}, \mathrm{VH}\}$ do |
| $x :=$ Stack $Y$ ($q$), $y :=$ shift(Stack $Y$ ($q$), 1) |
| compute: $D_{q}^{Y} = D(x, y)$ (Equation 6) |
| end for |
| end for |
| return: $D_{VV}^{2019}$, $D_{VH}^{2019}$, $D_{VV}^{2020}$, $D_{VH}^{2020}$ |
| compute: threshold $\lambda_{VV}$ for $D_{VV}$ (Equation 8) |
| compute: threshold $\lambda_{VH}$ for $D_{VH}$ (Equation 8) |
| compute: Change Map 2019 := $((D_{VV}^{2019} \ge \lambda_{VV})$ or $(D_{VH}^{2019} \ge \lambda_{VH}))$ and ART |
| compute: Change Map 2020 := $((D_{VV}^{2020} \ge \lambda_{VV})$ or $(D_{VH}^{2020} \ge \lambda_{VH}))$ and ART |
| compute: Trend 2019, $T_{2019}$: average of Change Map 2019 at each acquisition time |
| compute: Trend 2020, $T_{2020}$: average of Change Map 2020 at each acquisition time |
| compute: Activity Trend (Equation 4) |
| compute: Heat-Map 2019: average of Change Map 2019 along the time dimension (Equation 5) |
| compute: Heat-Map 2020: average of Change Map 2020 along the time dimension (Equation 5) |
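The second half of Algorithm 1 (change maps, trends, activity trend, heat-maps) can be sketched in NumPy, starting from precomputed dissimilarity stacks. Because each dissimilarity is computed between an acquisition and the next one, N acquisitions give N − 1 change maps. This is a minimal sketch under stated assumptions: arrays shaped (pairs, rows, cols), a boolean ART mask, and thresholds already computed. Averaging the trend over ART pixels is also an assumption, although with the same mask in both years this normalization cancels in the activity-trend ratio. All function names are hypothetical.

```python
import numpy as np


def change_maps(d_vv, d_vh, lam_vv, lam_vh, art):
    """Change if VV or VH dissimilarity reaches its threshold, restricted to ART pixels."""
    return ((d_vv >= lam_vv) | (d_vh >= lam_vh)) & art[np.newaxis, :, :]


def temporal_trend(cmap, art):
    """Fraction of ART pixels flagged as changed at each time step."""
    return cmap[:, art].mean(axis=1)


def activity_trend(trend_2020, trend_2019):
    """Percentage ratio 2020/2019 per time step; NaN where 2019 shows no change."""
    t20 = np.asarray(trend_2020, dtype=np.float64)
    t19 = np.asarray(trend_2019, dtype=np.float64)
    ratio = np.full_like(t19, np.nan)
    np.divide(t20, t19, out=ratio, where=t19 > 0)
    return 100.0 * ratio


def heat_map(cmap):
    """Per-pixel fraction of change maps flagged as changed (1 = always changed)."""
    return cmap.mean(axis=0)


# Toy example: 2 change maps on a 2 x 2 grid; the bottom-right pixel is outside ART.
art = np.array([[True, True], [True, False]])
d_vv = np.array([[[0.0, 5.0], [0.0, 0.0]],
                 [[5.0, 5.0], [0.0, 9.0]]])
d_vh = np.zeros_like(d_vv)
cmap = change_maps(d_vv, d_vh, lam_vv=1.0, lam_vh=1.0, art=art)
print(temporal_trend(cmap, art))
print(heat_map(cmap))
```

The strong change at the bottom-right pixel is discarded because it lies outside ART, which is the role the "and ART" step plays in Algorithm 1.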

3.1.3. Airplane Detection

- traditional machine learning methods based on various computer vision techniques; and

- deep learning techniques [43].

- find regions in the image that might contain an object,

- extract CNN features from these regions, and, finally,

- classify the objects using the extracted features.
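The three steps above follow the structure of region-based detectors such as R-CNN (Girshick et al., see References). The skeleton below shows only that control flow, under hypothetical stand-ins: sliding windows replace region proposals, simple patch statistics replace CNN features, and a threshold rule replaces the trained classifier. It is not the detector used on the Pleiades images, and a real pipeline would also apply non-maximum suppression to overlapping boxes. All function names are hypothetical.

```python
from typing import Callable, Iterator, List, Tuple

import numpy as np

Box = Tuple[int, int, int, int]  # (row, col, height, width)


def sliding_window_proposals(shape, size: int, stride: int) -> Iterator[Box]:
    """Step 1 stand-in: candidate regions on a regular grid."""
    rows, cols = shape
    for r in range(0, rows - size + 1, stride):
        for c in range(0, cols - size + 1, stride):
            yield (r, c, size, size)


def detect(image: np.ndarray,
           propose: Callable[[tuple], Iterator[Box]],
           extract: Callable[[np.ndarray], np.ndarray],
           classify: Callable[[np.ndarray], float],
           min_score: float = 0.5) -> List[Tuple[Box, float]]:
    """Propose regions, extract features, classify; keep boxes scoring >= min_score."""
    detections = []
    for box in propose(image.shape):
        r, c, h, w = box
        features = extract(image[r:r + h, c:c + w])  # step 2
        score = classify(features)                   # step 3
        if score >= min_score:
            detections.append((box, score))
    return detections


# Toy image: a bright 4 x 4 "object" on a dark background.
img = np.zeros((8, 8))
img[2:6, 2:6] = 1.0
dets = detect(img,
              propose=lambda shape: sliding_window_proposals(shape, size=4, stride=2),
              extract=lambda patch: np.array([patch.mean(), patch.std()]),
              classify=lambda f: 1.0 if f[0] > 0.75 else 0.0)
print(dets)  # [((2, 2, 4, 4), 1.0)]
```

Passing the three steps as callables keeps the pipeline independent of the specific proposal method, feature extractor, and classifier, which is what distinguishes, e.g., R-CNN from its faster successors.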

3.2. Level-2 Processing

4. Results and Discussion

- we concentrate on assessing the system performance by comparing the airport activities in Stack 2020 with those in Stack 2019, both described in Table 1;

- analyze, within Stack 2020 alone, the activity levels detected over the airport before and after Italy’s Spring lockdown, officially imposed on 9 March 2020; and

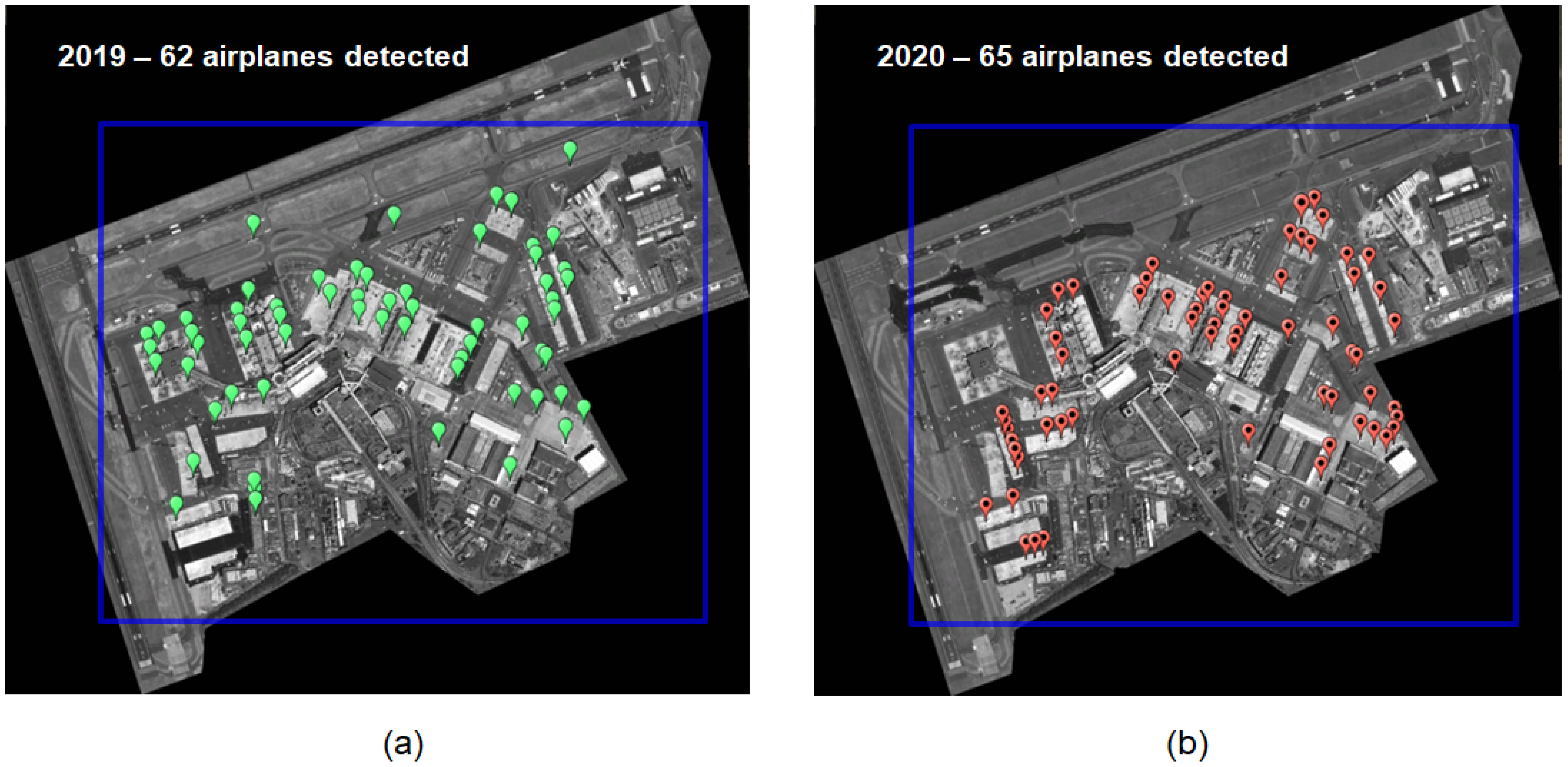

- extend the analysis to airplane detection with Pleiades VHR data, using the methodology described in Section 3.1.3.

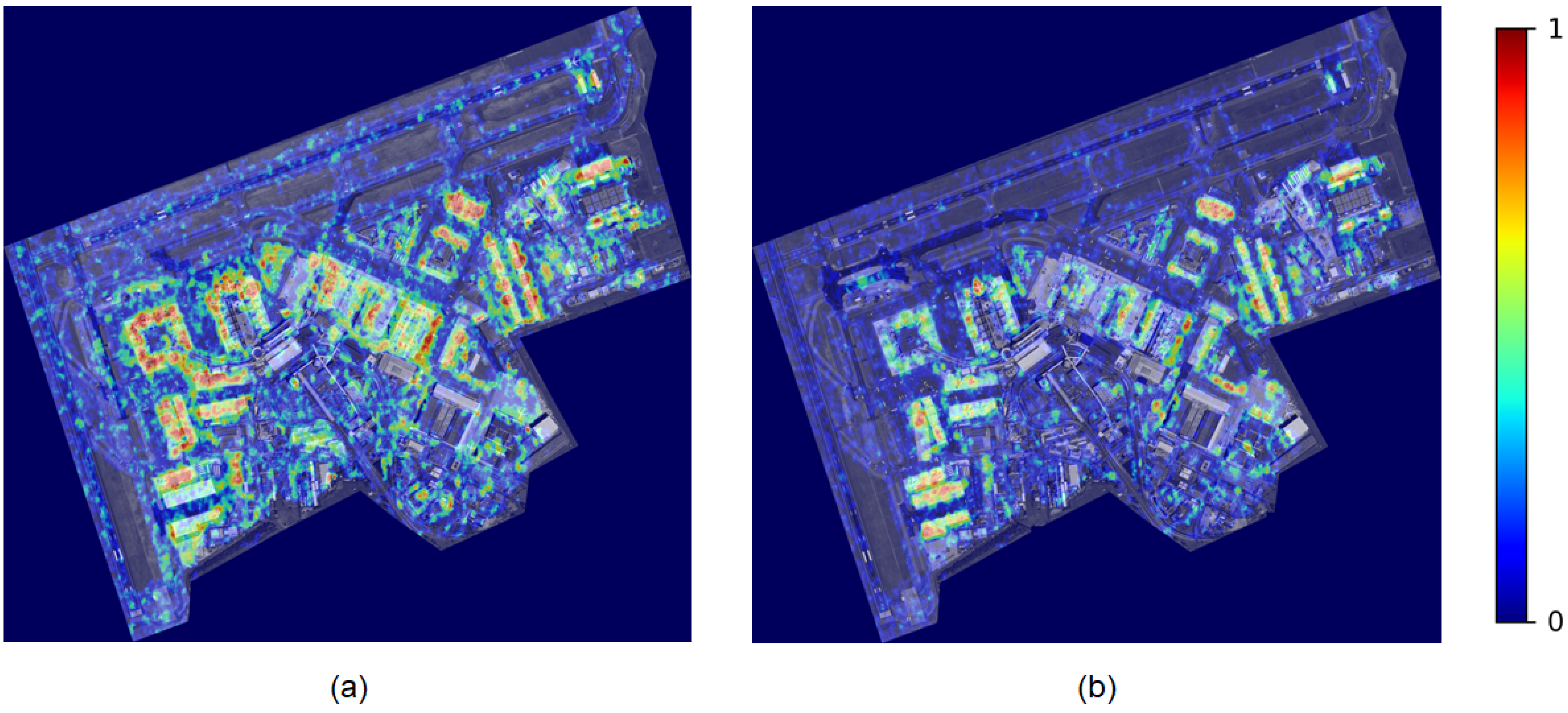

4.1. Airport Activity Levels

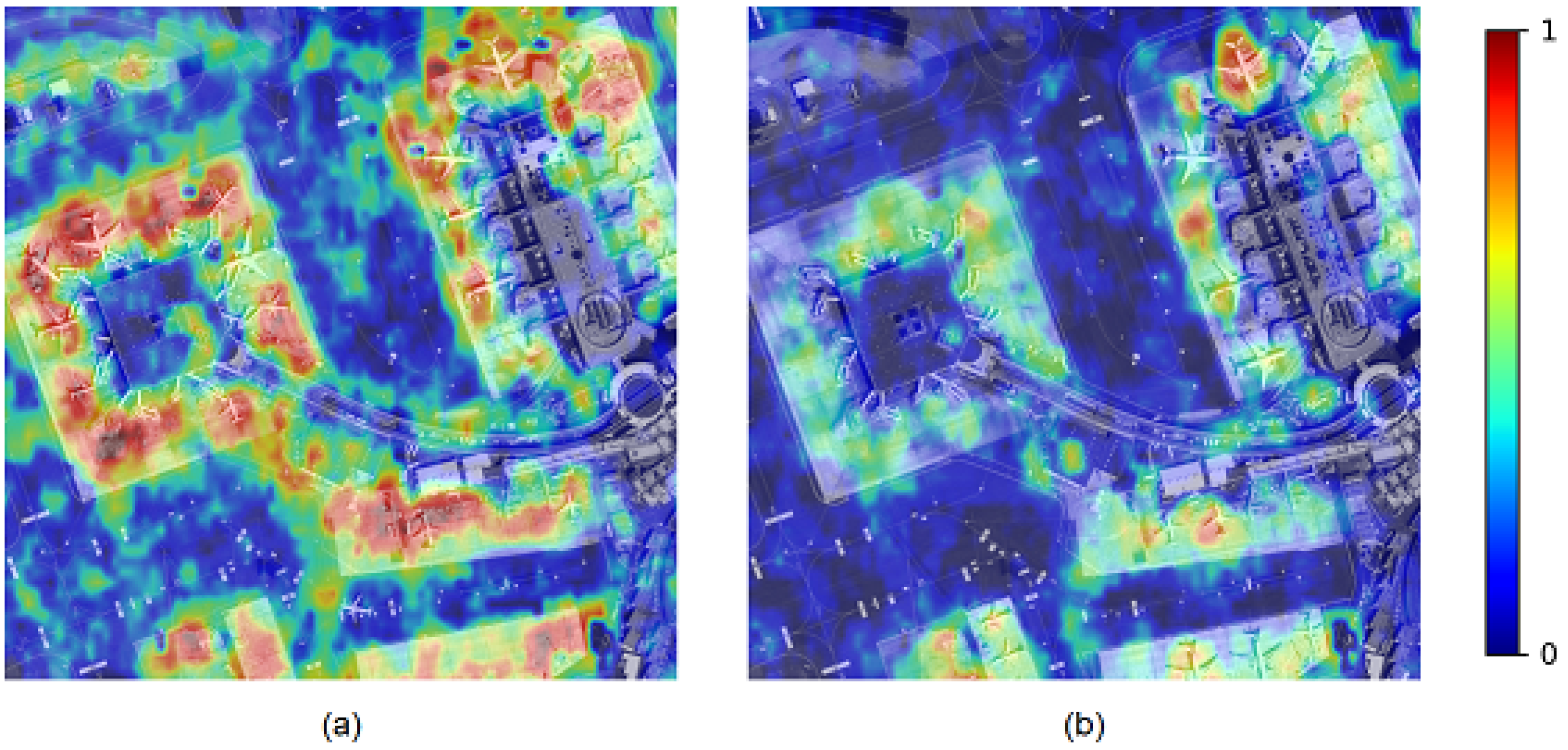

4.2. Airport Activity Levels before and after the Lockdown Event

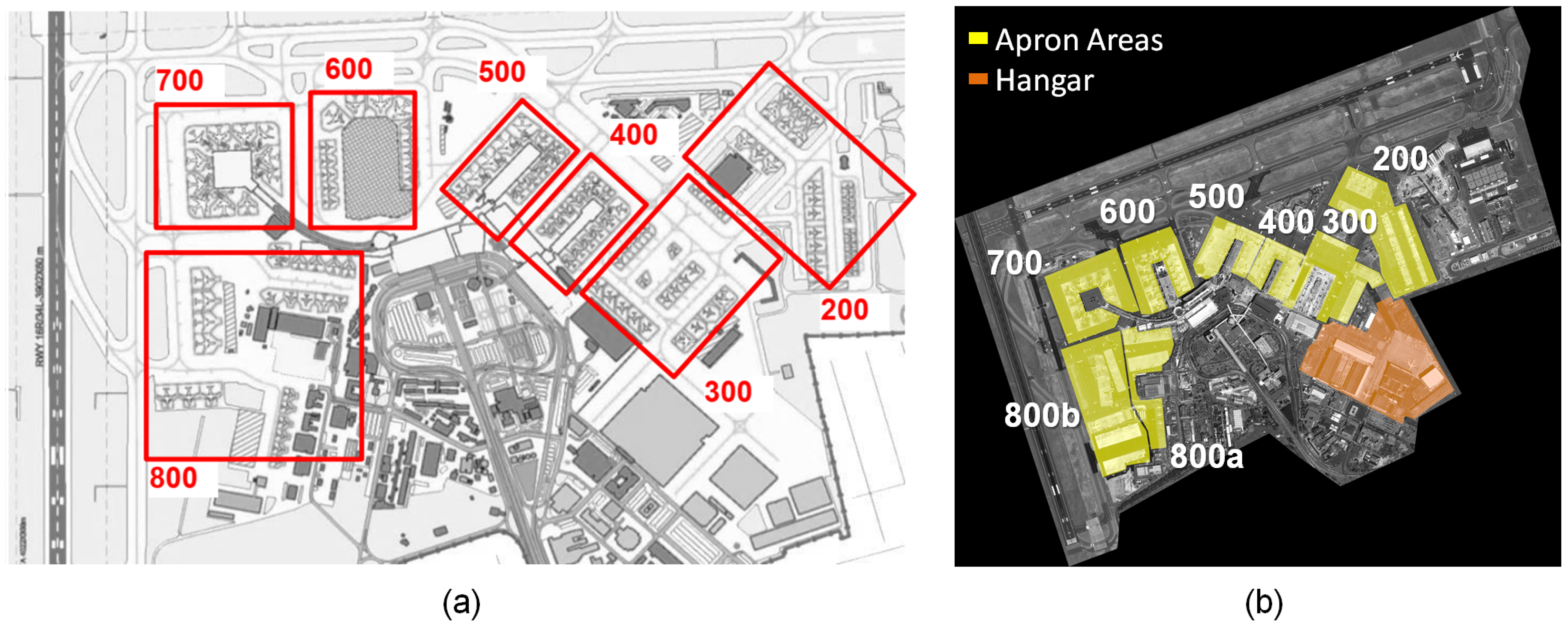

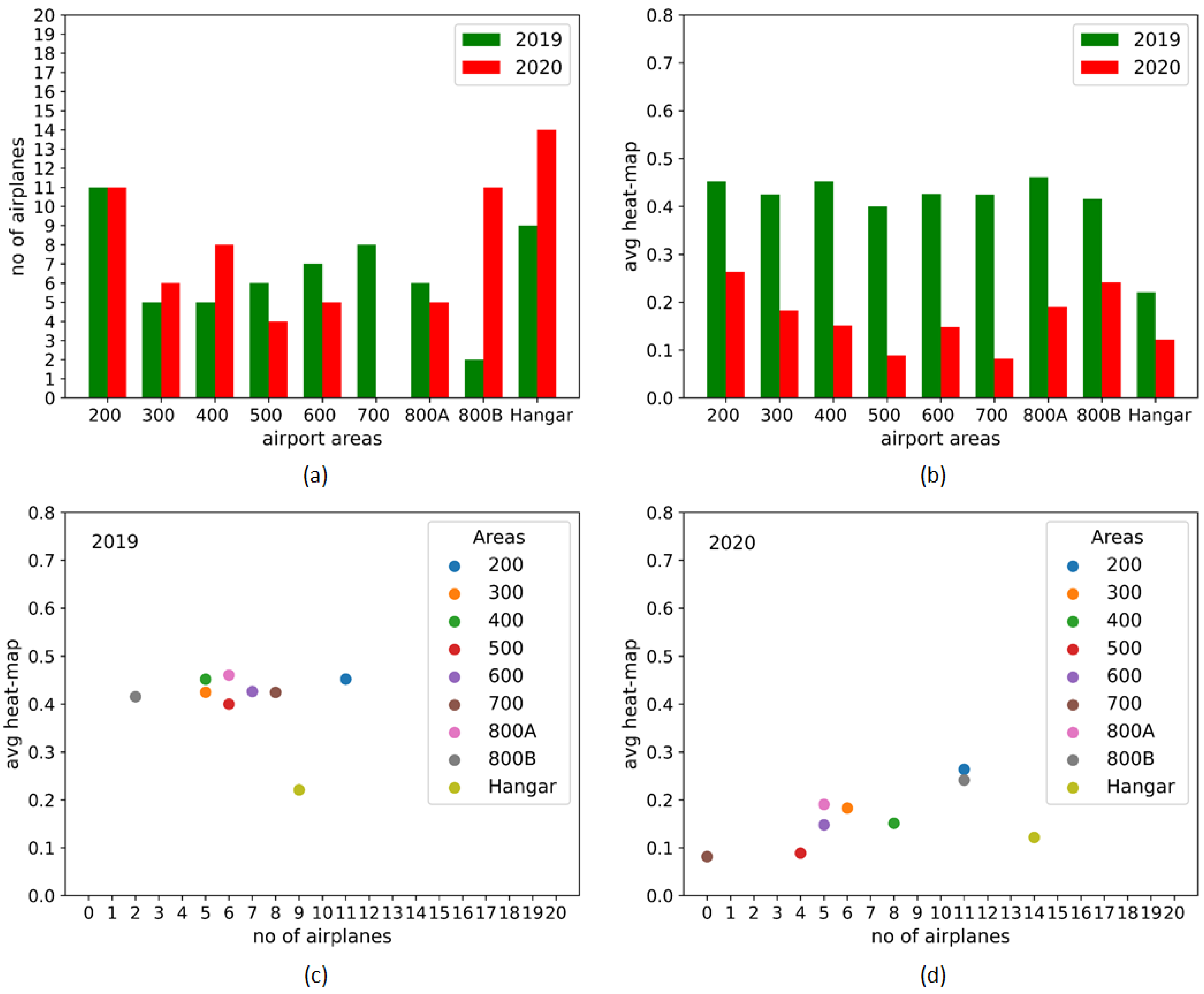

4.3. Airplane Detection and Situational Awareness

- Hangar: kept its function as an airplane parking area, hosting more airplanes during the lockdown than in the previous year;

- 200: probably one of the few areas that kept its full functionality as a terminal and service area even during the lockdown;

- 300: decreased activity, but same functionality;

- 400: the increased number of airplanes, together with the reduced average activity, suggests that this area was closed and used as a parking area. Indeed, this area corresponds to Terminal 1, which was closed on 17 March 2020 [33];

- 500: the strong decrease in average activity and number of airplanes suggests that this terminal area was closed;

- 600: kept its functionality, but probably only one gate remained open, as indicated by the red spot in Figure 13b;

- 700: as for the 500, this area shows a drastic decrease in both average activity and number of airplanes, suggesting that this terminal was closed;

- 800a: decreased activity, but same functionality;

- 800b: similar to area 200, this area probably kept its functionality. Since it showed a large number of airplanes and a low average activity, it was probably also used as a parking area.
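The per-area reasoning above combines two Level-1 quantities: the average heat-map value inside each area and the number of airplanes detected in it. Below is a minimal sketch. It assumes the areas are given as an integer label raster on the heat-map grid (0 = outside all areas) and the airplane detections as (row, col) centers already projected onto that grid. In practice, the 10 m S-1 heat-map and the sub-meter Pleiades detections would first have to be co-registered. The function name and the toy values are hypothetical.

```python
import numpy as np


def area_statistics(heat, labels, plane_centers):
    """Return {label: (mean heat-map value, number of airplane centers)} per area."""
    stats = {}
    for area in np.unique(labels):
        if area == 0:  # assumption: 0 marks pixels outside every area
            continue
        inside = labels == area
        mean_activity = float(heat[inside].mean())
        n_planes = sum(1 for r, c in plane_centers if labels[r, c] == area)
        stats[int(area)] = (mean_activity, n_planes)
    return stats


heat = np.array([[0.8, 0.6, 0.1],
                 [0.4, 0.0, 0.1]])
labels = np.array([[1, 1, 2],
                   [1, 0, 2]])
planes = [(0, 0), (0, 2), (1, 2)]
print(area_statistics(heat, labels, planes))
```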

- discontinued operations (red): areas with low average activity and low number of airplanes;

- reduced operations (blue): regions with lower average activity w.r.t. 2019 and the same number of airplanes w.r.t. 2019;

- unchanged operations (yellow): spots presenting high average activity and high number of airplanes; and

- new parking spots (with stripes): areas with low average activity and high number of grounded airplanes.
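The four classes can be read as a small decision rule on how each area changed between 2019 and 2020. The sketch below is one hypothetical encoding. It compares each area's 2020 values with its own 2019 values, whereas the text partly phrases the classes in absolute terms ("low", "high"). The 10% activity tolerance is an assumption, not a value from the study.

```python
def classify_area(activity_2019: float, activity_2020: float,
                  planes_2019: int, planes_2020: int, tol: float = 0.10) -> str:
    """Assign one of the four operational classes to an area (hypothetical rule)."""
    if activity_2020 >= (1.0 - tol) * activity_2019:
        return "unchanged operations"      # activity not appreciably lower
    if planes_2020 > planes_2019:
        return "new parking spot"          # lower activity, more grounded airplanes
    if planes_2020 < planes_2019:
        return "discontinued operations"   # lower activity, fewer airplanes
    return "reduced operations"            # lower activity, same number of airplanes


print(classify_area(0.6, 0.2, planes_2019=3, planes_2020=9))  # new parking spot
```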

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| MDPI | Multidisciplinary Digital Publishing Institute |

| STAND-OUT | SituaTional Awareness of large iNfrastructures during the coviD OUTbreak |

| COVID-19 | COrona VIrus Disease-2019 |

| NDBI | Normalized Difference Built-up Index |

| NASA | National Aeronautics and Space Administration |

| RACE | Rapid Action on coronavirus and EO |

| DLR | Deutsches Zentrum für Luft- und Raumfahrt (German Aerospace Center) |

| ESA | European Space Agency |

| SAR | Synthetic Aperture Radar |

| SLC | Single-Look Complex |

| GRD | Ground Range Detected |

| VHR | Very-High-Resolution |

| RoI | Region of Interest |

| S-1 | Sentinel-1 |

| S-2 | Sentinel-2 |

| EC | European Commission |

| HR | High-Resolution |

| SA | Situational Awareness |

| RS | Remote Sensing |

| EO | Earth Observation |

References

- [1] Endsley, M. Design and evaluation for situational awareness enhancement. In Proceedings of the Human Factors Society 32nd Annual Meeting, Anaheim, CA, USA, 24–28 October 1988; pp. 97–101.

- [2] Smith, K.; Hancock, P.A. Situation Awareness Is Adaptive, Externally Directed Consciousness. Hum. Factors 1995, 37, 137–148.

- [3] Adams, M.J.; Tenney, Y.J.; Pew, R.W. Situation Awareness and the Cognitive Management of Complex Systems. Hum. Factors 1995, 37, 85–104.

- [4] Jensen, R.S. The Boundaries of Aviation Psychology, Human Factors, Aeronautical Decision Making, Situation Awareness, and Crew Resource Management. Int. J. Aviat. Psychol. 1997, 7, 259–267.

- [5] Li, W.C. The casual factors of aviation accidents related to decision errors in the cockpit by system approach. J. Aeronaut. Astronaut. Aviat. 2011, 43, 147–154.

- [6] D’Arcy, J.; Della Rocco, P.S. Air traffic control specialist decision making and strategic planning—A field survey. In Technical Report DOT/FAA/CT-TN01/05; U.S. Department of Transportation, Federal Aviation Administration: Washington, DC, USA, 2001.

- [7] Kalluri, S.; Gilruth, P.; Bergman, R. The potential of remote sensing data for decision makers at the state, local and tribal level: Experiences from NASA’s Synergy program. Environ. Sci. Policy 2003, 6, 487–500.

- [8] King, R.L. Putting Information into the Service of Decision Making: The Role of Remote Sensing Analysis. In Proceedings of the IEEE Workshop on Advances in Techniques for Analysis of Remotely Sensed Data, Greenbelt, MD, USA, 27–28 October 2003; pp. 25–29.

- [9] Roemer, H.; Kiefl, R.; Henkel, F.; Cao, W.; Nippold, R.; Kurz, F.; Kippnich, U. Using Airborne Remote Sensing to Increase Situational Awareness in Civil Protection and Humanitarian Relief—The Importance of User Involvement. In Proceedings of the International Archives of the Photogrammetry, Remote Sensing and Spatial Information Sciences, Prague, Czechia, 12–19 July 2016; Volume XLI-B8, pp. 1363–1370.

- [10] Borowitz, M. Strategic Implications of the Proliferation of Space Situational Awareness Technology and Information: Lessons Learned from the Remote Sensing Sector. Space Policy 2018, 47, 18–27.

- [11] Schroeter, E.; Kiefl, R.; Neidhardt, E.; Gurczik, G.; Dalaff, C.; Lechner, K. Trialing Innovative Technologies in Crisis Management—“Airborne and Terrestrial Situational Awareness” as Support Tool in Flood Response. Appl. Sci. 2020, 10, 3743.

- [12] Boschetti, L.; Roy, D.P.; Justice, C.O. Using NASA’s World Wind virtual globe for interactive internet visualization of the global MODIS burned area product. Int. J. Remote Sens. 2008, 29, 3067–3072.

- [13] The Guardian. Coronavirus Will Reshape Our Cities—We Just Don’t Know How Yet. 2020. Available online: https://www.theguardian.com/world/2020/may/22/coronavirus-will-reshape-our-cities-we-just-dont-know-how-yet (accessed on 14 October 2020).

- [14] PWC. Five Actions Can Help Mitigate Risks to Infrastructure Projects Amid COVID-19. 2020. Available online: https://www.pwc.com/gx/en/industries/capital-projects-infrastructure/publications/infrastructure-covid-19.html (accessed on 14 October 2020).

- [15] World Bank. COVID-19 and Infrastructure: A Very Tricky Opportunity. 2020. Available online: https://blogs.worldbank.org/ppps/covid-19-and-infrastructure-very-tricky-opportunity (accessed on 14 October 2020).

- [16] McKinsey & Co. A New Paradigm for Project Planning. 2020. Available online: https://www.mckinsey.com/industries/capital-projects-and-infrastructure/our-insights/a-new-paradigm-for-project-planning (accessed on 14 October 2020).

- [17] Zhang, Z.; Arshad, A.; Zhang, C.; Hussain, S.; Li, W. Unprecedented Temporary Reduction in Global Air Pollution Associated with COVID-19 Forced Confinement: A Continental and City Scale Analysis. Remote Sens. 2020, 12, 2420.

- [18] Zhang, K.; de Leeuw, G.; Yang, Z.; Chen, X.; Jiao, J. The Impacts of the COVID-19 Lockdown on Air Quality in the Guanzhong Basin, China. Remote Sens. 2020, 12, 3042.

- [19] Nichol, J.; Bilal, M.; Ali, M.; Qiu, Z. Air Pollution Scenario over China during COVID-19. Remote Sens. 2020, 12, 2100.

- [20] Fan, C.; Li, Y.; Guang, J.; Li, Z.; Elnashar, A.; Allam, M.; de Leeuw, G. The Impact of the Control Measures during the COVID-19 Outbreak on Air Pollution in China. Remote Sens. 2020, 12, 1613.

- [21] Veefkind, J.; Aben, I.; McMullan, K.; Förster, H.; de Vries, J.; Otter, G.; Claas, J.; Eskes, H.; de Haan, J.; Kleipool, Q.; et al. TROPOMI on the ESA Sentinel-5 Precursor: A GMES mission for global observations of the atmospheric composition for climate, air quality and ozone layer applications. Remote Sens. Environ. 2012, 120, 70–83.

- [22] Justice, C.O.; Vermote, E.; Townshend, J.R.G.; Defries, R.; Roy, D.P.; Hall, D.K.; Salomonson, V.V.; Privette, J.L.; Riggs, G.; Strahler, A.; et al. The Moderate Resolution Imaging Spectroradiometer (MODIS): Land remote sensing for global change research. IEEE Trans. Geosci. Remote Sens. 1998, 36, 1228–1249.

- [23] Griffin, D.; McLinden, C.; Racine, J.; Moran, M.; Fioletov, V.; Pavlovic, R.; Mashayekhi, R.; Zhao, X.; Eskes, H. Assessing the Impact of Corona-Virus-19 on Nitrogen Dioxide Levels over Southern Ontario, Canada. Remote Sens. 2020, 12, 4112.

- [24] Vîrghileanu, D.; Săvulescu, I.; Mihai, B.A.; Nistor, C.; Dobre, R. Nitrogen Dioxide (NO2) Pollution Monitoring with Sentinel-5P Satellite Imagery over Europe during the Coronavirus Pandemic Outbreak. Remote Sens. 2020, 12, 3575.

- [25] Jechow, A.; Hölker, F. Evidence That Reduced Air and Road Traffic Decreased Artificial Night-Time Skyglow during COVID-19 Lockdown in Berlin, Germany. Remote Sens. 2020, 12, 3412.

- [26] Elvidge, C.; Ghosh, T.; Hsu, F.C.; Zhizhin, M.; Bazilian, M. The Dimming of Lights in China during the COVID-19 Pandemic. Remote Sens. 2020, 12, 2851.

- [27] Niroumand-Jadidi, M.; Bovolo, F.; Bruzzone, L.; Gege, P. Physics-based Bathymetry and Water Quality Retrieval Using PlanetScope Imagery: Impacts of 2020 COVID-19 Lockdown and 2019 Extreme Flood in the Venice Lagoon. Remote Sens. 2020, 12, 2381.

- [28] Straka, W., III; Kondragunta, S.; Wei, Z.; Zhang, H.; Miller, S.; Watts, A. Examining the Economic and Environmental Impacts of COVID-19 Using Earth Observation Data. Remote Sens. 2021, 13, 5.

- [29] Liu, Q.; Sha, D.; Liu, W.; Houser, P.; Zhang, L.; Hou, R.; Lan, H.; Flynn, C.; Lu, M.; Hu, T.; et al. Spatiotemporal Patterns of COVID-19 Impact on Human Activities and Environment in Mainland China Using Nighttime Light and Air Quality Data. Remote Sens. 2020, 12, 1576.

- [30] Tanveer, H.; Balz, T.; Cigna, F.; Tapete, D. Monitoring 2011–2020 Traffic Patterns in Wuhan (China) with COSMO-SkyMed SAR, Amidst the 7th CISM Military World Games and COVID-19 Outbreak. Remote Sens. 2020, 12, 1636.

- [31] European Space Agency (ESA). RACE: Rapid Action on Coronavirus and EO. 2020. Available online: https://race.esa.int/ (accessed on 28 December 2020).

- [32] Sentinel-Hub. COVID-19 Custom Script Contest by Euro Data Cube. 2020. Available online: https://www.sentinel-hub.com/develop/community/contest-covid/ (accessed on 28 December 2020).

- [33] Aeroporti di Roma (ADR). News 2020.03.17. Downsize Airport Operations. 2020. Available online: http://www.adr.it/de/web/aeroporti-di-roma-en-/viewer?p_p_id=3_WAR_newsportlet&p_p_lifecycle=0&p_p_state=normal&p_p_mode=view&p_p_col_id=column-2&p_p_col_pos=1&p_p_col_count=2&_3_WAR_newsportlet_jspPage=/html/news/details.jsp&_3_WAR_newsportlet_nid=18829902&_3_WAR_newsportlet_redirect=/de/web/aeroporti-di-roma-en-/azn-news (accessed on 14 October 2020).

- [34] Aeroporti di Roma (ADR). Aggiornamento Tariffario 2017—2021, Consultazioni con L’utenza: Investimenti. 2016. Available online: https://www.adr.it/documents/10157/9522121/2017-21+Investimenti+-+Incontro+Utenti+2016+__+v9+settembre.pdf/211fe00b-2b13-447d-afd9-dc0e49daa908 (accessed on 14 October 2020).

- [35] Gleyzes, M.A.; Perret, L.; Kubik, P. Pleiades System Architecture and main Performances. Int. Arch. Photogramm. Remote. Sens. Spat. Inf. Sci. 2012, XXXIX-B1, 537–542.

- [36] Torres, R.; Snoeij, P.; Geudtner, D.; Bibby, D.; Davidson, M.; Attema, E.; Potin, P.; Rommen, B.; Floury, N.; Brown, M.; et al. GMES Sentinel-1 mission. Remote Sens. Environ. 2012, 120, 9–24.

- [37] Picterra. Picterra—Geospatial Imagery Analysis Made Easy. 2018. Available online: https://picterra.ch (accessed on 14 October 2020).

- [38] Zha, Y.; Gao, J.; Ni, S. Use of Normalized Difference Built-Up Index in Automatically Mapping Urban Areas from TM Imagery. Int. J. Remote Sens. 2003, 24, 583–594.

- [39] Drusch, M.; Bello, U.D.; Carlier, S.; Colin, O.; Fernandez, V.; Gascon, F.; Hoersch, B.; Isola, C.; Laberinti, P.; Martimort, P.; et al. Sentinel-2: ESA’s Optical High-Resolution Mission for GMES Operational Services. Remote Sens. Environ. 2012, 120, 25–36.

- [40] Deledalle, C.A.; Denis, L.; Tupin, F. How to compare noisy patches? Patch similarity beyond Gaussian noise. Int. J. Comput. Vis. 2012, 99, 86–102.

- [41] Sica, F.; Reale, D.; Poggi, G.; Verdoliva, L.; Fornaro, G. Nonlocal adaptive multilooking in SAR multipass differential interferometry. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2015, 8, 1727–1742.

- [42] Cheng, G.; Han, J. A Survey on Object Detection in Optical Remote Sensing Images. ISPRS J. Photogramm. Remote Sens. 2016, 117, 11–28.

- [43] Zou, X. A Review of Object Detection Techniques. In Proceedings of the 2019 International Conference on Smart Grid and Electrical Automation (ICSGEA), Xiangtan, China, 10–11 August 2019; pp. 251–254.

- [44] Girshick, R.; Donahue, J.; Darrell, T.; Malik, J. Rich Feature Hierarchies for Accurate Object Detection and Semantic Segmentation. In Proceedings of the 2014 IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 24–27 June 2014; pp. 580–587.

- [45] Girshick, R. Fast R-CNN. In Proceedings of the 2015 IEEE International Conference on Computer Vision (ICCV), Boston, MA, USA, 7–12 June 2015; pp. 1440–1448.

- [46] Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 1137–1149.

- [47] Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You Only Look Once: Unified, Real-Time Object Detection. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788.

- [48] Redmon, J.; Farhadi, A. YOLO9000: Better, Faster, Stronger. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 June 2017; pp. 6517–6525.

- [49] Redmon, J.; Farhadi, A. YOLOv3: An Incremental Improvement. arXiv 2018, arXiv:1804.02767.

- [50] He, K.; Gkioxari, G.; Dollár, P.; Girshick, R. Mask R-CNN. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 42, 386–397.

- [51] BBC. Coronavirus: What Did China Do about Early Outbreak? 2020. Available online: https://www.bbc.com/news/world-52573137 (accessed on 14 October 2020).

- [52] Sica, F.; Pulella, A.; Nannini, M.; Pinheiro, M.; Rizzoli, P. Repeat-pass SAR Interferometry for Land Cover Classification: A Methodology using Sentinel-1 Short-Time-Series. Remote Sens. Environ. 2019, 232, 111277.

- [53] Mazza, A.; Sica, F.; Rizzoli, P.; Scarpa, G. TanDEM-X Forest Mapping Using Convolutional Neural Networks. Remote Sens. 2019, 11, 2980.

Sentinel-1: List of Acquisitions

| Mode | Product Type | Rel. Orbit | Orbit Direction | Polarization |
|---|---|---|---|---|
| IW | GRD | 117 | Ascending | VV/VH |

| Stack 2019: Sensing Date | Sensor Name | ID | Stack 2020: Sensing Date | Sensor Name | ID |
|---|---|---|---|---|---|
| 2 January 2019 | S-1B | 47EE | 3 January 2020 | S-1A | 5D72 |
| 8 January 2019 | S-1A | CB9E | 9 January 2020 | S-1B | E551 |
| 14 January 2019 | S-1B | 3CC5 | 15 January 2020 | S-1A | 2FEC |
| 20 January 2019 | S-1A | 06FE | 21 January 2020 | S-1B | 9DD5 |
| 26 January 2019 | S-1B | 6BAC | 27 January 2020 | S-1A | A254 |
| 1 February 2019 | S-1A | F94E | missed | - | - |
| 7 February 2019 | S-1B | A5D4 | 8 February 2020 | S-1A | A28B |
| 13 February 2019 | S-1A | A3D1 | 14 February 2020 | S-1B | 13D3 |
| 19 February 2019 | S-1B | A527 | 20 February 2020 | S-1A | 4DDB |
| 25 February 2019 | S-1A | F427 | 26 February 2020 | S-1B | 55EB |
| 3 March 2019 | S-1B | 6D76 | 3 March 2020 | S-1A | 1432 |
| 9 March 2019 | S-1A | 5CC6 | 9 March 2020 | S-1B | 5AB7 |
| 15 March 2019 | S-1B | F704 | 15 March 2020 | S-1A | D5CF |
| 21 March 2019 | S-1A | ABAA | 21 March 2020 | S-1B | B491 |
| 27 March 2019 | S-1B | DC1F | 27 March 2020 | S-1A | 67DD |
| 2 April 2019 | S-1A | 7D8E | 2 April 2020 | S-1B | 5BC1 |
| 8 April 2019 | S-1B | 7B3E | 8 April 2020 | S-1A | A470 |
| 14 April 2019 | S-1A | E962 | 14 April 2020 | S-1B | 86A6 |
| 20 April 2019 | S-1B | 0320 | 20 April 2020 | S-1A | 3A98 |
| 26 April 2019 | S-1A | 16DC | 26 April 2020 | S-1B | 2CEE |
| 2 May 2019 | S-1B | 889E | 2 May 2020 | S-1A | 4ED2 |
| 8 May 2019 | S-1A | D64D | 8 May 2020 | S-1B | 3FD5 |

Sentinel-2: List of Acquisitions

| Product Type | Rel. Orbit | Orbit Direction |
|---|---|---|
| MSIL2A | 122 | Descending |

| Acquisition Date | Sensor Name | Cloud Coverage | Tile |
|---|---|---|---|
| 22 March 2019 | S-2A | 0.10% | 33TTG |
| 5 April 2020 | S-2A | 24.13% | 33TTG |

Pleiades: List of Acquisitions

| Airport | Location | Country | Sensing Date | Training | Validation | Test |
|---|---|---|---|---|---|---|
| Brussels National Airport | Zaventem | Belgium | 1 April 2019 | Y | - | - |
| Brussels National Airport | Zaventem | Belgium | 18 March 2020 | Y | Y | - |
| C. Columbus Airport | Genoa | Italy | 30 April 2019 | Y | - | - |
| F.J. Strauss International Airport | Munich | Germany | 4 April 2020 | Y | Y | - |
| A.S. Barajas International Airport | Madrid | Spain | 27 April 2019 | Y | - | - |
| A.S. Barajas International Airport | Madrid | Spain | 28 March 2020 | Y | - | - |
| Songshan International Airport | Taipei | Taiwan China | 25 March 2020 | Y | - | - |
| Fiumicino International Airport | Rome | Italy | 31 March 2019 | - | - | Y |
| Fiumicino International Airport | Rome | Italy | 4 April 2020 | - | - | Y |

| Property | HR Sentinel-1/-2 Data | VHR Pleiades Data | Combination |
|---|---|---|---|
| Constant repeat-pass coverage (free-of-charge) | Y | N | Y |
| Frequent revisit time (free-of-charge) | Y | N | Y |
| Direct data interpretation (e.g., airplane detection/localization) | N | Y | Y |
| Sub-meter resolution | N | Y | Y |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Pulella, A.; Sica, F. Situational Awareness of Large Infrastructures Using Remote Sensing: The Rome–Fiumicino Airport during the COVID-19 Lockdown. Remote Sens. 2021, 13, 299. https://doi.org/10.3390/rs13020299
