Abstract

Wildfires are major natural disasters that negatively affect human safety, natural ecosystems, and wildlife. Timely and accurate estimation of wildfire burned areas is particularly important for post-fire management and decision making. In this regard, Remote Sensing (RS) images are valuable resources owing to their wide coverage, high spatial and temporal resolution, and low cost. In this study, the Australian areas affected by wildfire were estimated using Sentinel-2 imagery and Moderate Resolution Imaging Spectroradiometer (MODIS) products within the Google Earth Engine (GEE) cloud computing platform. To this end, a framework based on change analysis was implemented in two main phases: (1) producing a binary map of burned areas (i.e., burned vs. unburned); and (2) estimating the burned areas of different Land Use/Land Cover (LULC) types. The first phase was implemented in five main steps: (i) preprocessing; (ii) spectral and spatial feature extraction for pre-fire and post-fire analyses; (iii) prediction of burned areas using change detection by differencing the pre-fire and post-fire datasets; (iv) feature selection; and (v) binary mapping of burned areas by applying classifiers to the selected features. The second phase determined the LULC classes of the burned areas using the global MODIS land cover product (MCD12Q1). Based on the test datasets, the proposed framework showed high potential for detecting burned areas, with an overall accuracy (OA) and kappa coefficient (KC) of 91.02% and 0.82, respectively. It was also observed that, among the LULC classes, the largest proportion of burned area was found in evergreen needleleaf forests, with a burning rate of over 25%. Finally, the results of this study were in good agreement with the Landsat burned area products.

1. Introduction

Natural disasters are generally divided into two main categories: geophysical (e.g., wildfires, floods, earthquakes, and droughts) and biological (e.g., biotic stresses) [1]. Among geophysical disasters, wildfires occur more than a thousand times annually across different regions of the globe [2,3,4] and can cause considerable economic and ecological damage, as well as human casualties. Additionally, wildfires have significant long-term impacts on both the environment and people, such as changing ecosystem structure, causing soil erosion, destroying wildlife habitats, and increasing the potential for flooding [4]. Moreover, wildfires emit considerable amounts of greenhouse gases (e.g., carbon dioxide and methane), which contribute to global warming [5]. Therefore, reliable, timely, and detailed information on the areas burned in a wildfire event is necessary.

Wildfire mapping using traditional methods (e.g., field surveys and manual digitization) has many limitations. Although traditional methods can provide the highest accuracies, they are time-consuming and difficult to automate or repeat over long periods. In contrast, automated RS approaches are more efficient in terms of cost, time, and coverage [6,7,8], and they facilitate accurate wildfire mapping with many advanced machine learning algorithms (e.g., deep learning).

RS has been widely used for wildfire mapping and monitoring. For instance, Dragozi, et al. [9] investigated the effects of derivative spatial and spectral features on burned area mapping using IKONOS imagery in Greece. To this end, they utilized a Support Vector Machine (SVM) for classification and a fuzzy complementary criterion for feature selection. Moreover, Oliva and Schroeder [10] evaluated the Visible-Infrared Imaging Radiometer Suite (VIIRS) active fire products, with a spatial resolution of 375 (m), for estimating burned areas in ten different areas worldwide. Their results showed that the estimated burned areas depended on environmental characteristics and fire behavior. Additionally, Chen, et al. [11] detected forest burned areas based on multiple methods using Landsat TM data. Their proposed method utilized four spectral indices to determine burned areas, and the damaged areas were then obtained by selecting an optimum threshold based on histogram analysis. Furthermore, Hawbaker, et al. [12] proposed a burned area estimation algorithm based on dense time series of Landsat data and generated the Landsat Burned Area Essential Climate Variable (BAECV) products. This method used surface reflectance and multiple spectral indices as inputs to gradient boosted regression models to generate burned probability maps. The burned areas were then extracted from these probability maps using a combination of pixel-level thresholding and region growing. Pereira, et al. [13] proposed an automatic sample generation method for burned area mapping using VIIRS active fire products over the Brazilian Cerrado savanna and used a one-class SVM algorithm to map the burned areas. Moreover, Roteta, et al. [14] developed a locally adapted multitemporal burned area algorithm based on Sentinel-2 and Moderate Resolution Imaging Spectroradiometer (MODIS) datasets over sub-Saharan Africa.
This method had two main parts: (1) burned areas were detected based on a fixed threshold, and (2) tile-dependent statistical thresholds were estimated for each predictive variable in a two-phase strategy by overlaying the MCD14ML products. Furthermore, Ba et al. [15] developed a burned area estimation method using a single MODIS image based on a back-propagation neural network and spectral indices. Their method had three main phases: (1) extraction of samples for five classes (cloud, cloud shadow, vegetation, burned area, and bare soil), (2) feature selection, and (3) classification using a back-propagation neural network. They also evaluated the performance of the proposed method in three different areas in Idaho, Nevada, and Oregon. Woźniak and Aleksandrowicz [16] developed an automatic burned area mapping framework based on medium resolution optical Landsat images. This method was implemented in four main steps: (1) extraction and differencing of spectral indices, (2) multiresolution segmentation and masking, (3) detection of the core burned areas based on an automatically adjusted threshold, and (4) region growing and neighborhood analysis to detect the final burned areas. Additionally, Otón, et al. [17] presented a global burned area product using Advanced Very High Resolution Radiometer (AVHRR) imagery. They proposed a synthetic burned area index that combines spectral indices and surface reflectance data into a single variable. The final burned area product was obtained by classifying time series of this synthetic index using the Random Forest (RF) algorithm. Finally, Liu, et al. [18] evaluated the performance of ICESat-2 photon-counting LiDAR data in distinguishing burned and unburned areas using both RF and logistic regression methods. They tested recent fires in 2018 in northern California and western New Mexico.
This study confirmed the feasibility of employing ICESat-2 data for burned forest classification.

RS application for wildfire mapping and change analysis over large areas faces several challenges, such as efficiently processing big RS data (e.g., thousands of satellite images). To address this issue, several cloud computing platforms have been developed, one of the most widely used of which is Google Earth Engine (GEE) [19,20]. This platform has provided a unique opportunity for Earth Observation (EO) research. GEE has been designed for parallel processing, storage, and mapping of different types of RS datasets at various spatial scales. GEE has been extensively used for various applications, including wildfire mapping and trend analysis, owing to key characteristics such as being free to use, providing high-speed parallel processing without downloading data, and being user-friendly. For example, Long, et al. [21] developed an automated framework to map burned areas at a global scale using dense time series of Landsat-8 images within GEE from 2014 to 2015. This method generated features from various spectral indices and classified them with an RF classifier. Moreover, Zhang, et al. [22] generated a global burned area product using Landsat-5 satellite imagery and an RF classifier within GEE, and analyzed the spatial distribution pattern of the global burned areas. Furthermore, Barboza Castillo et al. [23] investigated forest burned areas using a combination of Sentinel-2 and Landsat-8 satellite images within the GEE cloud platform over Uttarakhand, Western Himalaya. They mapped forest burned areas by differencing spectral indices between the pre-fire and post-fire images and by combining unsupervised clustering with supervised classification methods.

As discussed, although many methods have been proposed to map burned areas, only a few of them were developed within GEE. Moreover, the algorithms developed for mapping burned areas using RS datasets still have several limitations:

- (1) Many burned area products have a moderate spatial resolution. However, with the increasing availability of higher spatial resolution satellite imagery (e.g., Sentinel-2 with 10 (m) spatial resolution), there is potential to produce products with more spatial detail.
- (2) Many RS methods for burned area mapping that rely on high-resolution imagery are complex and do not support mapping burned areas over large regions.
- (3) Although multi-sensor methods for burned area mapping provide promising results, most of them are not computationally efficient.
- (4) Fixed thresholding, which is applied in many studies to discriminate burned from unburned areas, does not provide accurate results over large regions, because the characteristics of burned areas in different regions depend on the ecosystem and on fire behavior. Thus, a dynamic thresholding method is needed to obtain high accuracies across different regions.
- (5) Many methods are limited to binary mapping (i.e., burned vs. unburned). However, estimating the Land Use/Land Cover (LULC) of burned areas is necessary for many applications.
- (6) Many methods use only a few specific spectral features. However, the potential of spatial features should also be comprehensively investigated to improve wildfire mapping and monitoring.

To address these issues, a framework leveraging the GEE big data processing platform was proposed in this study. The proposed method was implemented in two phases. The first phase detected burned areas and was implemented in five main steps: (1) preprocessing; (2) spatial and spectral feature extraction; (3) change detection by image differencing of the spatial and spectral features; (4) feature selection using the Harris’s Hawk Optimization (HHO) algorithm; and (5) burned area mapping by applying a supervised classifier to the selected features. The second phase estimated the LULC types of the burned areas using MODIS LULC products.

The main contributions of this study are: (1) mapping Australian burned areas and assessing the damage at a higher spatial resolution than other GEE-based studies; (2) estimating burned areas for different LULC types, whereas most previous methods focused only on binary burned area mapping; (3) implementing a novel feature selection algorithm (i.e., HHO) to determine the optimal spectral and spatial features for burned area mapping; (4) investigating the importance of features for burned area mapping; (5) utilizing a combination of spatial and spectral features for burned area mapping; and (6) comparing the performance of different classifiers for burned area mapping.

2. Materials and Methods

2.1. Study Area

The study area is mainland Australia, with an area of 7.692 million (km2). This study uses the Australian wildfires as a case study to evaluate the accuracy of the proposed method for mapping the areas burned between September 2019 and February 2020. The Australian wildfires started in the southeast of the country in June 2019 and rapidly spread throughout the continent [24]. According to several reports, at least 28 people were killed and more than 3000 homes were destroyed or damaged during this event. Moreover, the wildfires released an enormous amount of CO2 into the atmosphere, which degraded air quality. Owing to its large extent, the Australian continent has six major climatic zones (i.e., desert, temperate, equatorial, subtropical, tropical, and grassland). Most areas receive an annual average rainfall of less than 600 (mm), whereas in some areas the annual average rainfall ranges from more than 1200 (mm) up to 8000 (mm) [25].

2.2. Satellite Data

In this study, Sentinel-2 optical satellite images were utilized. Sentinel-2 is an EO mission launched by the European Space Agency (ESA) to continue ESA’s global services for multispectral high spatial resolution observations. This mission includes the Sentinel-2A and Sentinel-2B satellites, and the temporal resolution (revisit time) of the constellation is 5 days. The MultiSpectral Instrument (MSI) is the main sensor of Sentinel-2 and is based on the pushbroom concept. MSI provides 13 spectral bands with wide spectral coverage over the visible, Near Infrared (NIR), and Shortwave Infrared (SWIR) domains at spatial resolutions from 10 (m) to 60 (m) [26]. The data are freely available at the Sentinel Scientific Data Hub (https://scihub.copernicus.eu). In this study, the Level-1C products of Sentinel-2, which are available in GEE (Dataset ID: ee.ImageCollection("COPERNICUS/S2_SR")), were used. All maps are presented in the World Geodetic System 1984 (WGS 1984) geographic coordinate system.

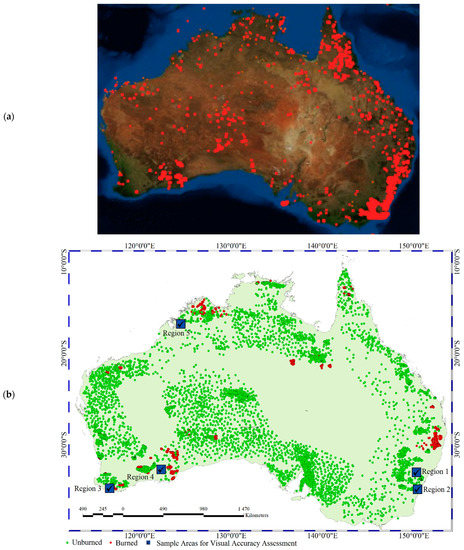

2.3. Reference Data

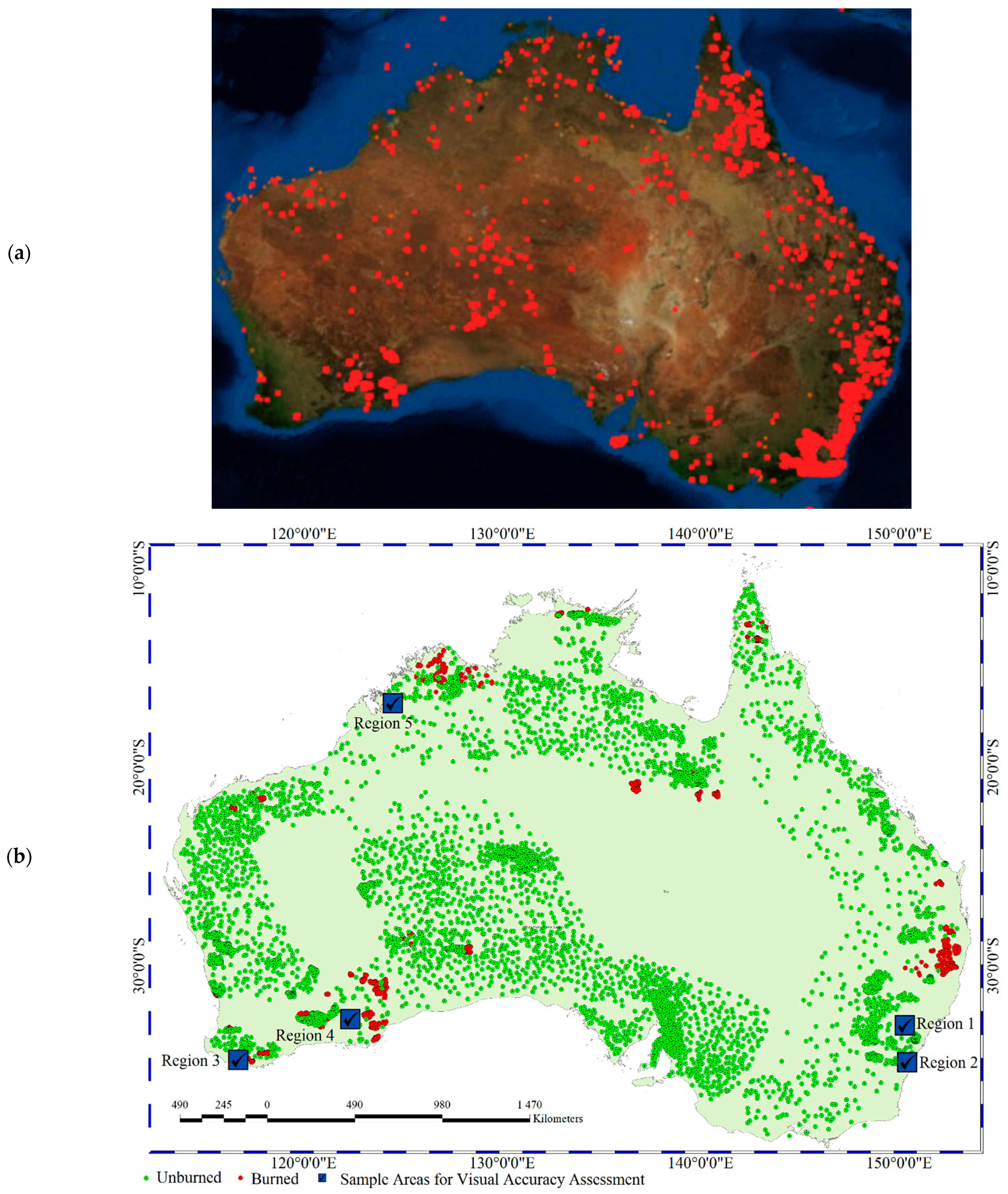

Reference samples are necessary to train any supervised classification algorithm. In this study, reference samples for the burned areas were generated using multiple reports about the locations of wildfires (https://www.ktnv.com/) [27]. Figure 1a illustrates the locations of burned areas derived from these reports, which were used to generate sample data for several burned areas. Furthermore, samples for the burned and unburned areas were generated by visual interpretation of the Sentinel-2 time series imagery acquired from September 2019 to February 2020. Figure 1b illustrates the spatial distribution of the reference samples for both burned and unburned areas, as well as the regions selected for visual accuracy assessment, and Table 1 provides the number of samples. Finally, all samples were randomly divided into three groups: training (50%), validation (17%), and test (33%). The validation dataset was used as an intermediate dataset for fine-tuning the classifiers [28].
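The random three-way split described above can be sketched as follows. This is a minimal illustration that assumes a simple, non-stratified shuffle with a fixed seed; the text does not state whether the split was stratified by class, and the `split_samples` helper and the toy sample list are hypothetical.

```python
import random

def split_samples(samples, fractions=(0.50, 0.17, 0.33), seed=42):
    """Shuffle reference samples and split them into training, validation, and test sets."""
    if abs(sum(fractions) - 1.0) > 1e-9:
        raise ValueError("fractions must sum to 1")
    shuffled = list(samples)
    random.Random(seed).shuffle(shuffled)          # reproducible shuffle
    n_train = round(fractions[0] * len(shuffled))
    n_val = round(fractions[1] * len(shuffled))
    train = shuffled[:n_train]
    val = shuffled[n_train:n_train + n_val]
    test = shuffled[n_train + n_val:]              # the remainder goes to the test set
    return train, val, test

# Hypothetical samples: (sample_id, label)
samples = [(i, "burned" if i % 2 else "unburned") for i in range(1000)]
train, val, test = split_samples(samples)
print(len(train), len(val), len(test))  # 500 170 330
```

Assigning the rounding remainder to the test set guarantees that every sample lands in exactly one group.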

Figure 1.

(a) The locations of active Australian wildfires reported on https://www.ktnv.com/, shown in red. (b) The spatial distribution of the generated reference samples and the five regions selected for visual accuracy assessment.

Table 1.

The number of reference samples for the burned and unburned areas.

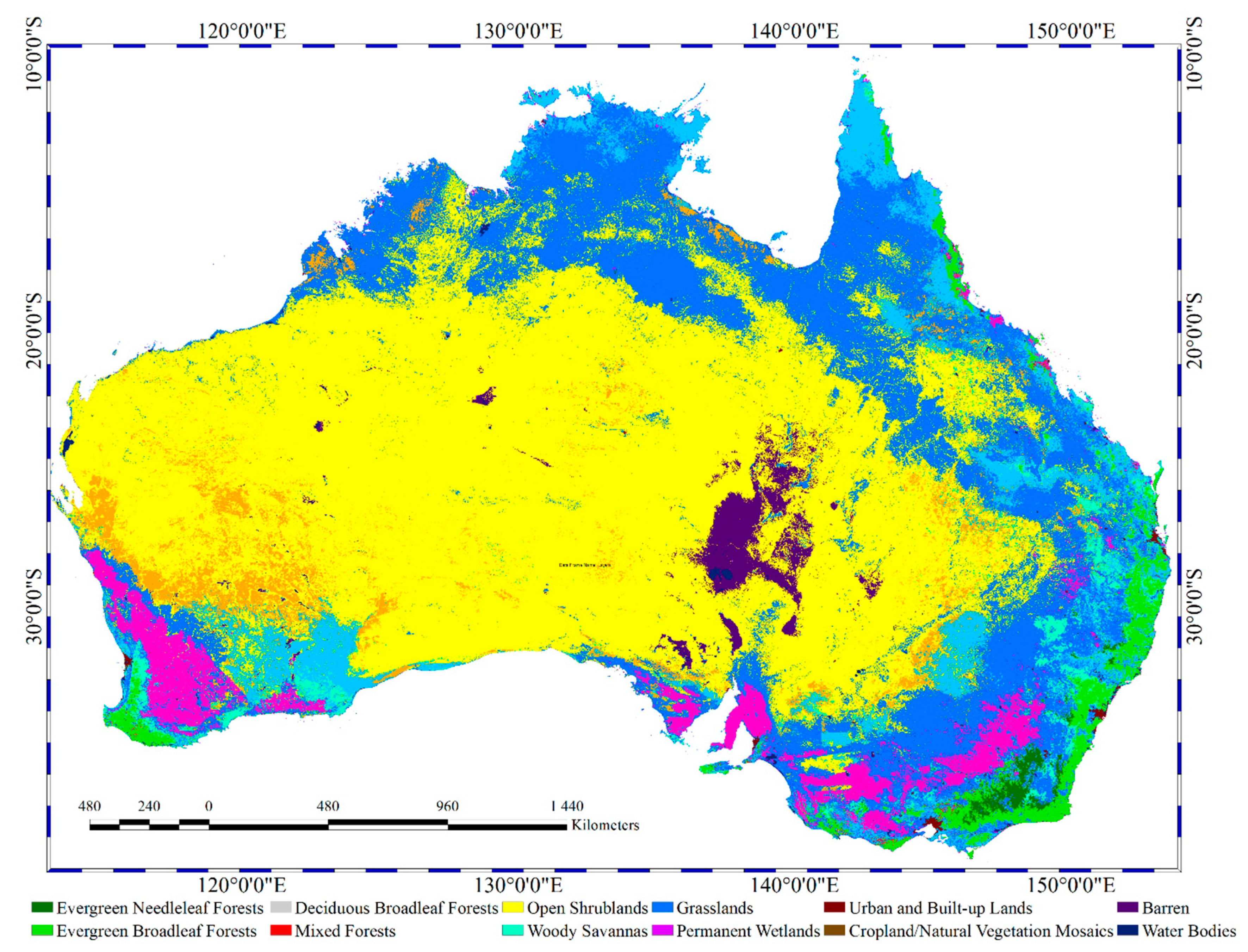

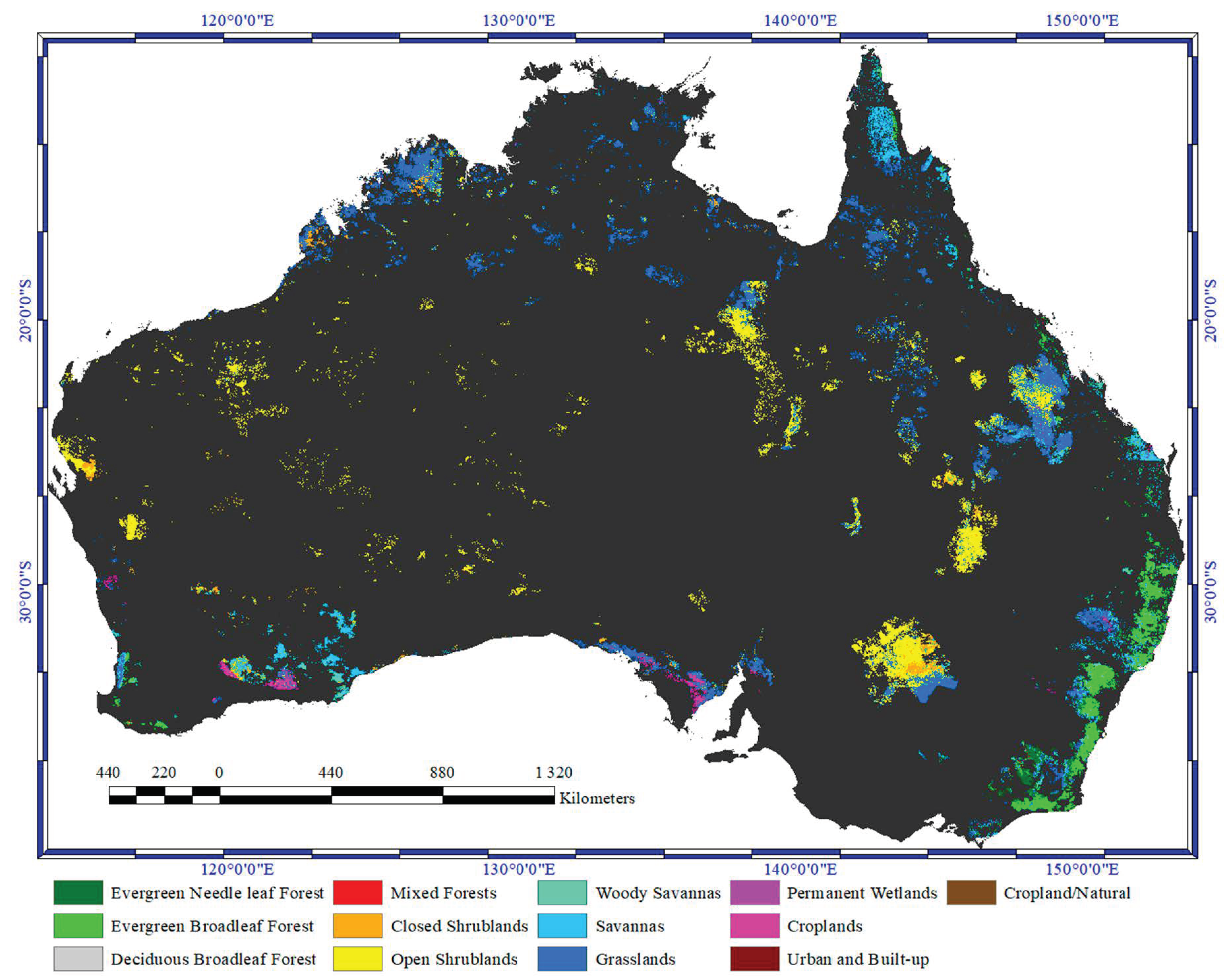

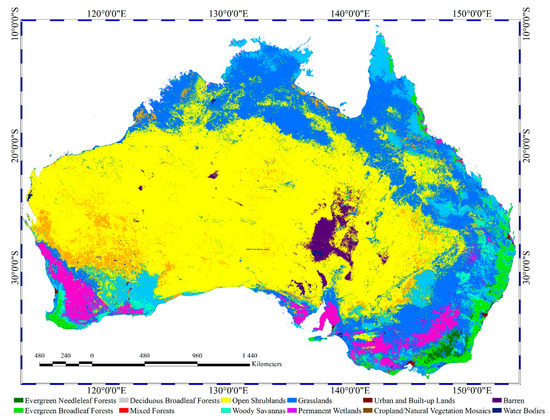

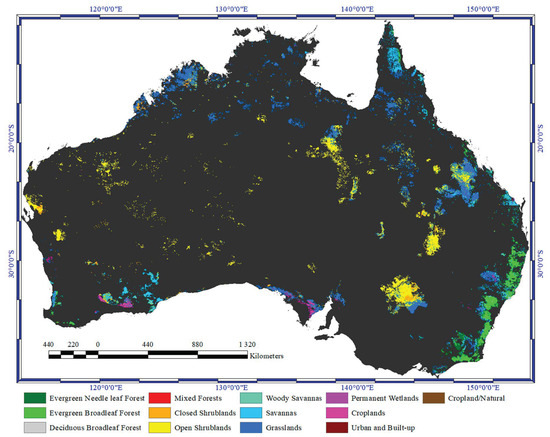

2.4. MODIS LULC Product

The MODIS LULC product was used in this study to determine the LULC types of the burned areas. Figure 2 shows the MODIS LULC map of Australia (https://modis.gsfc.nasa.gov/). This map contains 17 classes (see Table 2 and Figure 2). The original spatial resolution of the MODIS LULC map is 500 (m), and it was resampled to 10 (m) to match the spatial resolution of Sentinel-2. As shown in Figure 2, the dominant classes are Open Shrublands and Grasslands, and forests are mainly found in the southeast and southwest of the country. In this study, the MODIS LULC products available in GEE (Dataset ID: ee.ImageCollection("MODIS/006/MCD12Q1")) were used.
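Because LULC codes are categorical, resampling them from 500 (m) to 10 (m) is naturally done by nearest-neighbor replication, which avoids creating meaningless averaged class codes. The sketch below assumes nearest-neighbor resampling (the resampling method is not stated above) and uses a hypothetical 2 × 2 patch of IGBP class codes (7 = Open Shrublands, 10 = Grasslands, 12 = Croplands, 16 = Barren); each coarse cell becomes a 50 × 50 block of fine cells.

```python
def upsample_nearest(grid, factor):
    """Nearest-neighbour upsampling: replicate every coarse cell into a factor x factor block."""
    fine = []
    for row in grid:
        fine_row = [value for value in row for _ in range(factor)]
        fine.extend(list(fine_row) for _ in range(factor))   # independent row copies
    return fine

# Hypothetical 2 x 2 patch of MODIS IGBP class codes
modis_patch = [[10, 7],
               [16, 12]]
factor = 500 // 10            # one 500 m MODIS cell -> 50 x 50 cells of 10 m
fine = upsample_nearest(modis_patch, factor)
print(len(fine), len(fine[0]))                 # 100 100
print(fine[0][49], fine[0][50], fine[99][99])  # 10 7 12
```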

Figure 2.

The Moderate Resolution Imaging Spectroradiometer (MODIS) Land Use/Land Cover (LULC) product of Australia for 2018. Red squares indicate burned areas that are later used for visual accuracy assessment of the burned area map generated by the proposed method.

Table 2.

The Land Use/Land Cover (LULC) classes along with their descriptions used in the Moderate Resolution Imaging Spectroradiometer (MODIS) LULC product [29].

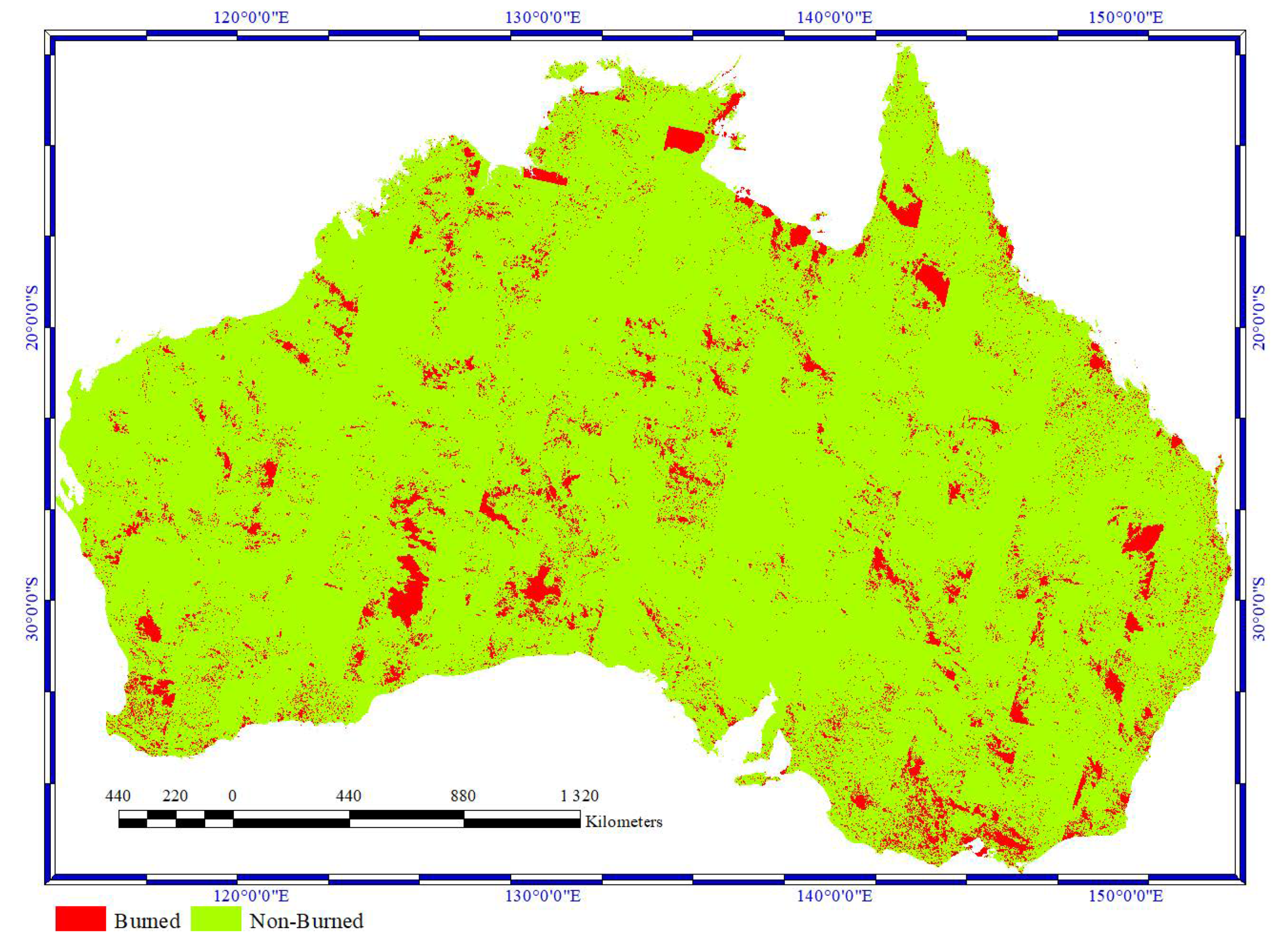

2.5. Landsat Burned Area Product

The Landsat burned area product was used to assess the results of the proposed method. The Landsat Level-3 burned area product is designed to detect burned areas across all ecosystems [30]. This product is available at a spatial resolution of 30 (m) from 2013 to the present. The Landsat-8 burned area product is generated from surface reflectance and top-of-atmosphere brightness temperature data. In this study, the Landsat-8 burned area products generated from September 2019 to February 2020 were used after aggregating them based on a burned probability threshold of 0.8 (Figure 3).
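One plausible reading of the aggregation step is a per-pixel maximum of the burn probability over all acquisitions, followed by the 0.8 threshold. The sketch below assumes exactly that rule (the aggregation method is not specified above) and works on hypothetical probability grids scaled to 0-1.

```python
def aggregate_burned(prob_stack, threshold=0.8):
    """Mark a pixel as burned (1) if its maximum burn probability over all dates reaches the threshold."""
    rows, cols = len(prob_stack[0]), len(prob_stack[0][0])
    return [[int(max(scene[r][c] for scene in prob_stack) >= threshold)
             for c in range(cols)]
            for r in range(rows)]

# Hypothetical burn-probability grids (0-1) for two acquisition dates
scenes = [
    [[0.10, 0.85], [0.40, 0.20]],
    [[0.30, 0.60], [0.82, 0.79]],
]
print(aggregate_burned(scenes))  # [[0, 1], [1, 0]]
```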

Figure 3.

The Landsat-8 burned area product for the Australian wildfires from September 2019 to February 2020.

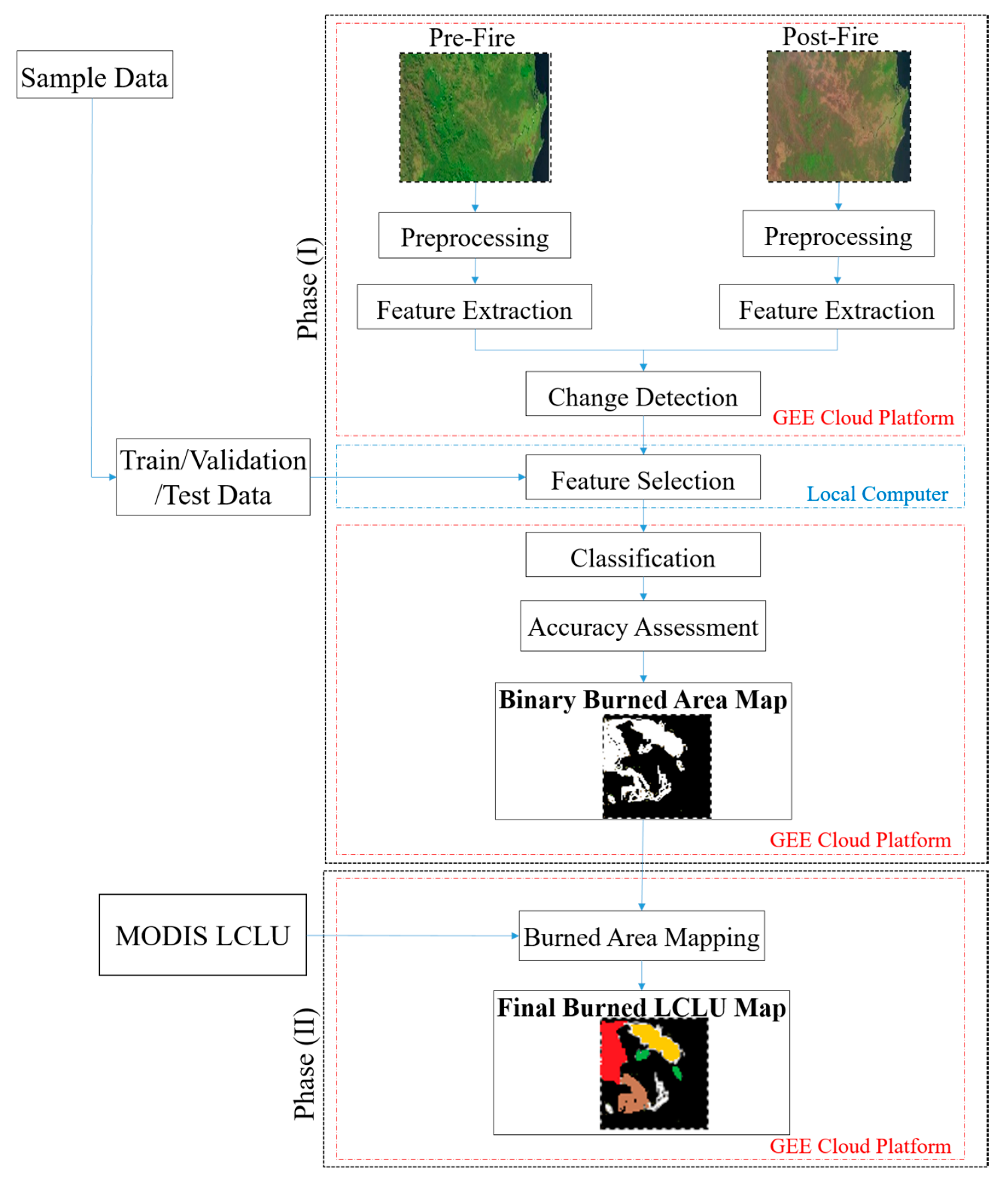

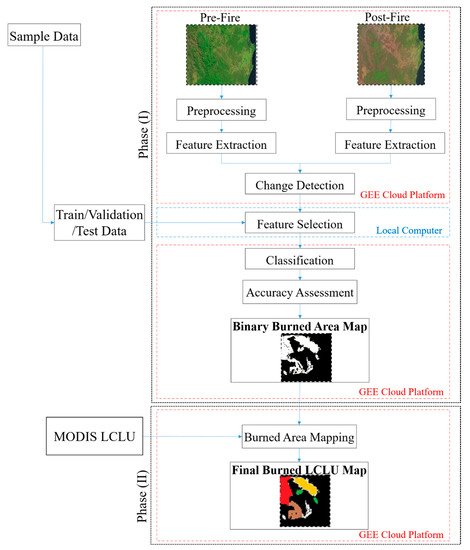

2.6. Proposed Method

The flowchart of the proposed method is provided in Figure 4. The proposed framework was implemented in two main phases: (1) binary burned area mapping (i.e., burned vs. unburned), (2) estimation of burned areas across different LULC types. More details on the proposed framework are provided in the following subsections.

Figure 4.

Overview of the proposed framework for burned Land Use/Land Cover (LULC) mapping.

2.6.1. Phase 1: Binary Burned Areas Mapping

The first phase of the proposed method, binary burned area mapping, included five steps: (1) preprocessing; (2) spatial-spectral feature extraction; (3) change detection to predict burned areas by differencing the pre-fire and post-fire layer-stacked images (i.e., original spectral bands, spectral indices, and texture features); (4) feature selection using the HHO optimizer and supervised classifiers; and (5) binary burned area mapping using the selected features and the optimal classifier. These steps are discussed in more detail in the following subsections.

Preprocessing

In this study, all preprocessing steps were performed in the GEE big data processing platform. Atmospheric correction was performed using the Py6S Python module (https://github.com/robintw/Py6S). Moreover, cloudy regions in the images were masked using the F-Mask algorithm with a threshold of 10%. Finally, all spectral bands were resampled to 10 (m).
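The cloud handling step can be illustrated with a scene-level filter followed by a pixel-level mask. This sketch assumes that "a threshold of 10%" means discarding scenes whose cloud-flagged fraction exceeds 10%, and that a per-pixel cloud flag (such as an F-Mask output) is already available; the scene dictionaries and their keys are hypothetical.

```python
def cloud_fraction(cloud_flags):
    """Fraction of pixels flagged as cloud (1) in a 2D flag grid."""
    flat = [flag for row in cloud_flags for flag in row]
    return sum(flat) / len(flat)

def filter_and_mask(scenes, max_cloud=0.10):
    """Drop scenes whose cloud fraction exceeds max_cloud; set cloudy pixels to None in the rest."""
    kept = []
    for scene in scenes:
        if cloud_fraction(scene["cloud"]) > max_cloud:
            continue
        masked = [[None if flag else value for value, flag in zip(band_row, flag_row)]
                  for band_row, flag_row in zip(scene["band"], scene["cloud"])]
        kept.append({"id": scene["id"], "band": masked})
    return kept

# Hypothetical 2 x 5 scenes: reflectance values and per-pixel cloud flags
scenes = [
    {"id": "A", "band": [[0.1] * 5, [0.2] * 5],
     "cloud": [[0, 0, 0, 0, 1], [0, 0, 0, 0, 0]]},   # 10% cloud -> kept
    {"id": "B", "band": [[0.1] * 5, [0.2] * 5],
     "cloud": [[1, 1, 1, 0, 0], [0, 0, 0, 0, 0]]},   # 30% cloud -> dropped
]
result = filter_and_mask(scenes)
print([s["id"] for s in result], result[0]["band"][0])
# ['A'] [0.1, 0.1, 0.1, 0.1, None]
```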

Feature Extraction

Although most wildfire studies conducted using RS methods have only utilized spectral features, spatial features could also provide valuable information to improve the results. Therefore, both spectral and spatial features were extracted and investigated in this study.

Spectral indices have many applications in RS image analysis [31]. Their main characteristic is that they make some features more discernible than in the original data (i.e., the spectral bands). In this study, 90 different spectral indices were used (see Appendix A) to improve burned area mapping. Since the proposed method was based on change detection, several unwanted changes were also detected, such as changes along the boundaries of water bodies and in rapidly growing vegetation. Therefore, several water indices were used to reduce false alarms originating from undesired changes in water bodies. Vegetation indices were also necessary because of the diversity of vegetation over the study area and the similarity between the response of burned vegetation and that of croplands. Many studies have shown that burn indices improve the accuracy of burned area mapping [15,32,33].
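As an illustration of the index families mentioned above, the sketch below computes one vegetation index (NDVI), one burn index (NBR), and one water index (NDWI, McFeeters form) from Sentinel-2 reflectances. The band pairings (B8 as NIR, B4 as red, B3 as green, B12 as SWIR) are common choices but are assumptions here; Appendix A may define the indices differently, and the reflectance values are hypothetical.

```python
def normalized_difference(a, b):
    """Generic normalized difference (a - b) / (a + b); returns 0.0 if a + b == 0."""
    total = a + b
    return (a - b) / total if total != 0 else 0.0

def spectral_indices(px):
    """px maps Sentinel-2 band names to surface reflectance (0-1)."""
    return {
        "NDVI": normalized_difference(px["B8"], px["B4"]),   # vegetation: NIR vs red
        "NBR": normalized_difference(px["B8"], px["B12"]),   # burn: NIR vs SWIR
        "NDWI": normalized_difference(px["B3"], px["B8"]),   # water: green vs NIR
    }

# Hypothetical reflectances of one pixel before and after a fire
pre = spectral_indices({"B3": 0.08, "B4": 0.04, "B8": 0.40, "B12": 0.10})
post = spectral_indices({"B3": 0.08, "B4": 0.10, "B8": 0.15, "B12": 0.25})
print(round(pre["NBR"], 3), round(post["NBR"], 3))  # 0.6 -0.25
```

The sharp drop in NBR (NIR falls and SWIR rises after burning) is what makes burn indices useful inputs to the change detection step.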

Spatial features, such as texture indices, can also improve the results of LULC classification [34]. Texture describes the relationship between an individual pixel and its neighboring pixels and provides valuable information for LULC classification. Different properties, such as density, equality, non-roughness, and size uniformity, can be derived by texture analysis. In this study, 17 texture features were generated from the Grey Level Co-occurrence Matrix (GLCM) (see Appendix B). Textural features are usually extracted from a panchromatic band. However, since Sentinel-2 does not have a panchromatic band, the three visible bands were combined based on Equation (1) to generate one.

Here, the panchromatic band is computed from the blue, green, and red bands. The window size for texture analysis was set to 3 × 3.
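The GLCM texture step can be sketched on a single 3 × 3 window. The pseudo-panchromatic band below is a hypothetical plain average of the blue, green, and red bands, because the exact form of Equation (1) is not reproduced here. The GLCM uses a single horizontal offset, is made symmetric and normalized, and yields four common Haralick-type measures (contrast, dissimilarity, homogeneity, and angular second moment); the window values are hypothetical quantized grey levels.

```python
from collections import Counter

def pseudo_pan(blue, green, red):
    """Hypothetical pseudo-panchromatic value: a plain mean of the three visible bands."""
    return (blue + green + red) / 3.0

def glcm_features(window):
    """Texture measures from a symmetric, normalized GLCM with a horizontal (0, 1) offset.

    `window` holds integer grey levels (e.g., a quantized 3 x 3 neighbourhood).
    """
    counts = Counter()
    for row in window:
        for a, b in zip(row, row[1:]):     # horizontally adjacent pixel pairs
            counts[(a, b)] += 1
            counts[(b, a)] += 1            # symmetric: count both directions
    total = sum(counts.values())
    feats = {"contrast": 0.0, "dissimilarity": 0.0, "homogeneity": 0.0, "asm": 0.0}
    for (i, j), n in counts.items():
        p = n / total                      # normalized co-occurrence probability
        feats["contrast"] += p * (i - j) ** 2
        feats["dissimilarity"] += p * abs(i - j)
        feats["homogeneity"] += p / (1 + (i - j) ** 2)
        feats["asm"] += p ** 2             # angular second moment
    return feats

window = [[0, 0, 1],
          [1, 2, 2],
          [0, 1, 3]]
print({k: round(v, 4) for k, v in glcm_features(window).items()})
# {'contrast': 1.1667, 'dissimilarity': 0.8333, 'homogeneity': 0.6167, 'asm': 0.1389}
```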

Change Detection

Change detection is one of the most important applications of RS and has been widely used for estimating burned areas using pre- and post-fire datasets [32]. In this study, image differencing was used as the change detection algorithm. Image differencing is a simple and popular change detection algorithm [6] that subtracts the first-date and second-date datasets band by band and pixel by pixel (Equation (2)):

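$$D_b(r,c) = X^{(2)}_b(r,c) - X^{(1)}_b(r,c) \tag{2}$$

where $X^{(1)}_b(r,c)$ and $X^{(2)}_b(r,c)$ are the pixel values of band $b$ at row $r$ and column $c$ in the first and second images, respectively, and $D_b(r,c)$ is the corresponding difference value.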
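A sketch of band-by-band differencing applied to a whole layer stack is given below. It assumes each layer (spectral band, index, or texture feature) is a 2D grid and that the post-fire value minus the pre-fire value is used; the opposite sign convention (as in the common dNBR, pre minus post) would only flip the sign of the output. Layer names and values are hypothetical.

```python
def difference_stack(pre, post):
    """Band-by-band, pixel-by-pixel difference (post minus pre) of two layer stacks.

    Each stack maps a layer name (band, index, or texture feature) to a 2D grid.
    """
    if set(pre) != set(post):
        raise ValueError("pre- and post-fire stacks must contain the same layers")
    return {
        name: [[b - a for a, b in zip(row_pre, row_post)]
               for row_pre, row_post in zip(pre[name], post[name])]
        for name in pre
    }

# Hypothetical 1 x 2 layers before and after the fire
pre = {"NBR": [[0.60, 0.50]], "B12": [[0.10, 0.12]]}
post = {"NBR": [[-0.20, 0.48]], "B12": [[0.25, 0.12]]}
diff = difference_stack(pre, post)
print({k: [[round(v, 2) for v in row] for row in grid] for k, grid in diff.items()})
# {'NBR': [[-0.8, -0.02]], 'B12': [[0.15, 0.0]]}
```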

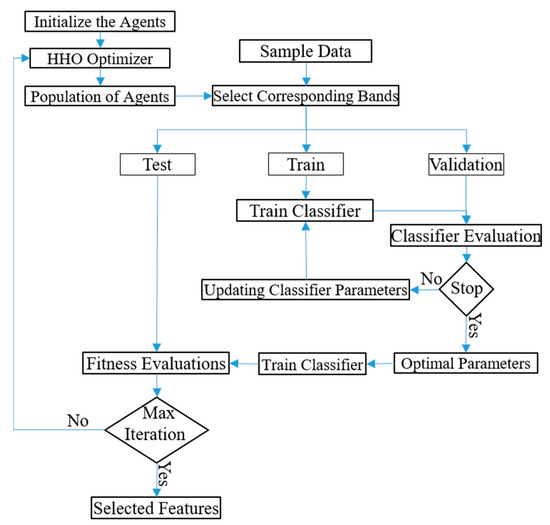

Feature Selection

The main purpose of feature selection is to eliminate redundant features and use only the most informative features in the classification [35]. In this study, feature selection was implemented in two steps: (1) defining the number of features, and (2) identifying the most useful features with an optimizer. To this end, the HHO algorithm was employed. HHO is a recent population-based, nature-inspired optimization algorithm that has been widely used for optimization in different fields [36]. HHO is based on the chasing and escaping patterns observed between Harris’ hawks and their prey (e.g., a rabbit). In this method, the hawks are considered candidate solutions, and the intended prey is considered the best candidate solution in every iteration. The HHO algorithm has three main steps: exploration, transition from exploration to exploitation, and exploitation [37]. The exploration phase mathematically models how the hawks wait for, search for, and discover the prey. The transition from exploration to exploitation is controlled by the escaping energy of the prey, and the exploitation phase models the hawks’ besiege strategies according to the prey’s remaining energy. In the HHO algorithm, three parameters need to be initialized: the total population size (N), the maximum number of iterations, and the number of features.

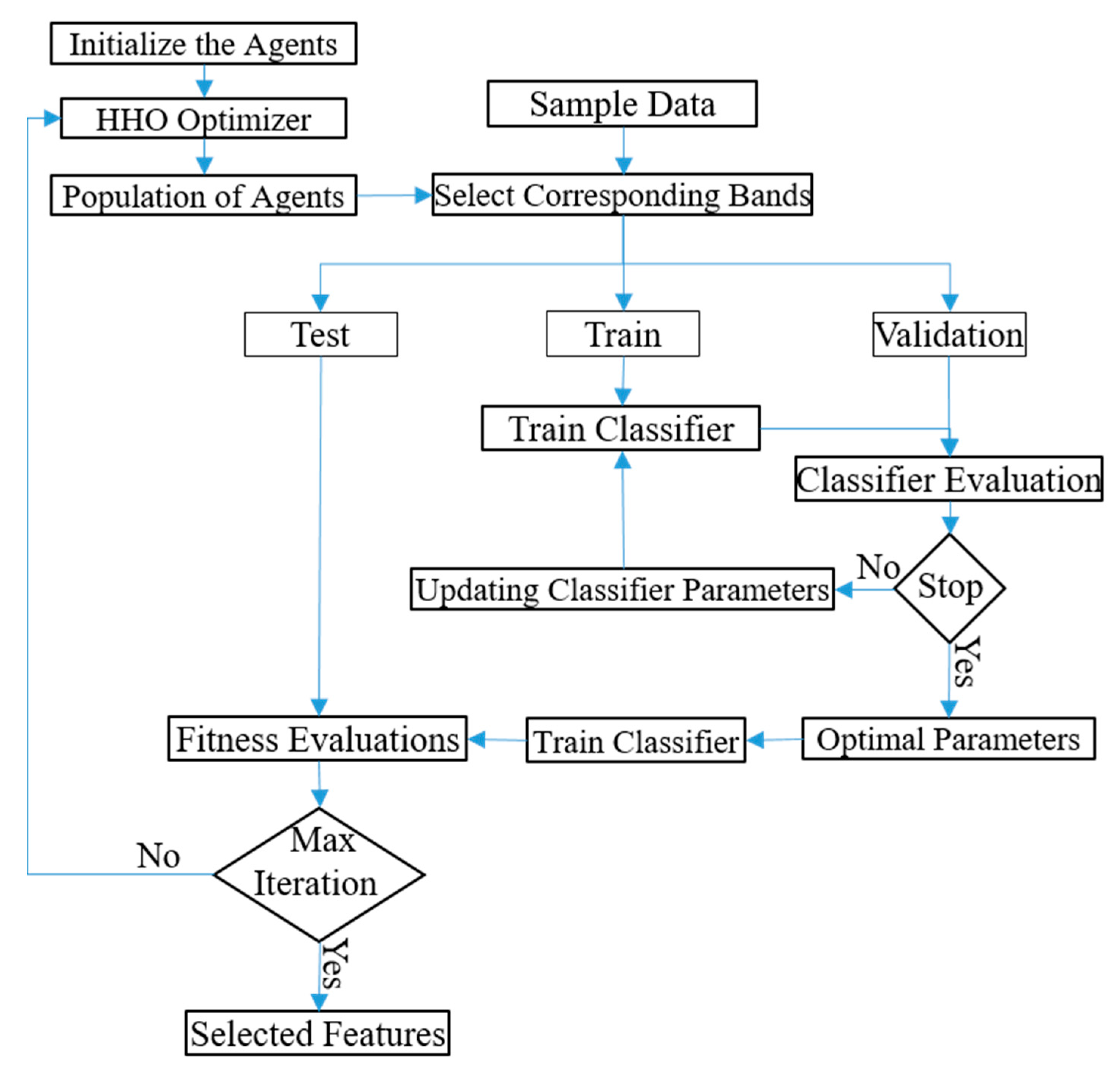

Figure 5 illustrates the proposed feature selection framework using the HHO algorithm. HHO is initialized with the number of agents, the number of features, and the number of iterations. The values of these three HHO parameters were set manually by trial and error, and 15, 500, and 60 were finally selected as the optimum values for the total population size, maximum number of iterations, and number of features, respectively. Then, the population of agents (solutions) was evaluated by a fitness function (i.e., the Overall Accuracy (OA) of the classifier). The fitness of the population of agents was calculated based on the performance of the classifiers on the test dataset. In this study, the k-Nearest Neighbors (kNN), Support Vector Machine (SVM), and Random Forest (RF) supervised classifiers were trained using the training samples, and their performances were evaluated using the validation dataset. Since classifiers are sensitive to their input features, the classifier parameters had to be tuned whenever the input features changed [20,38]. In this study, the tuning parameters of the classifiers were optimized using the grid search algorithm. The feature selection process continued until all iterations were completed.
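The HHO-based feature selection loop can be sketched as follows. This is a minimal, self-contained implementation of the standard continuous HHO update rules (exploration, soft and hard besiege, and progressive rapid dives with Lévy flights) that minimizes 1 - OA. Two parts are assumptions not taken from the text: each hawk's continuous position in [0, 1] is decoded into a feature subset by keeping its k largest coordinates, and the hypothetical `score_subset` callback stands in for training a classifier on the chosen features and returning its accuracy.

```python
import math
import random

def levy_step(dim, rng, beta=1.5):
    """Levy-flight step (Mantegna's method), as used in the rapid-dive moves of HHO."""
    sigma = (math.gamma(1 + beta) * math.sin(math.pi * beta / 2)
             / (math.gamma((1 + beta) / 2) * beta * 2 ** ((beta - 1) / 2))) ** (1 / beta)
    return [0.01 * rng.gauss(0, 1) * sigma / max(abs(rng.gauss(0, 1)), 1e-12) ** (1 / beta)
            for _ in range(dim)]

def hho_minimize(fitness, dim, n_hawks=15, max_iter=500, lb=0.0, ub=1.0, seed=0):
    """Continuous Harris hawks optimization; returns (best_position, best_fitness)."""
    rng = random.Random(seed)

    def clip(v):
        return [min(max(x, lb), ub) for x in v]

    hawks = [[rng.uniform(lb, ub) for _ in range(dim)] for _ in range(n_hawks)]
    rabbit, rabbit_fit = list(hawks[0]), float("inf")
    for t in range(max_iter):
        for h in hawks:                                   # the best hawk so far is the "rabbit"
            f = fitness(h)
            if f < rabbit_fit:
                rabbit, rabbit_fit = list(h), f
        mean = [sum(h[d] for h in hawks) / n_hawks for d in range(dim)]
        for i, x in enumerate(hawks):
            energy = 2 * (1 - t / max_iter) * (2 * rng.random() - 1)   # E = 2*E0*(1 - t/T)
            if abs(energy) >= 1:                          # exploration (perching)
                if rng.random() >= 0.5:
                    xr = hawks[rng.randrange(n_hawks)]
                    r1, r2 = rng.random(), rng.random()
                    new = [xr[d] - r1 * abs(xr[d] - 2 * r2 * x[d]) for d in range(dim)]
                else:
                    r3, r4 = rng.random(), rng.random()
                    new = [(rabbit[d] - mean[d]) - r3 * (lb + r4 * (ub - lb)) for d in range(dim)]
                hawks[i] = clip(new)
                continue
            jump = 2 * (1 - rng.random())                 # random jump strength J of the prey
            if rng.random() >= 0.5:
                if abs(energy) >= 0.5:                    # soft besiege
                    new = [(rabbit[d] - x[d]) - energy * abs(jump * rabbit[d] - x[d])
                           for d in range(dim)]
                else:                                     # hard besiege
                    new = [rabbit[d] - energy * abs(rabbit[d] - x[d]) for d in range(dim)]
                hawks[i] = clip(new)
            else:                                         # besiege with progressive rapid dives
                ref = x if abs(energy) >= 0.5 else mean
                y = clip([rabbit[d] - energy * abs(jump * rabbit[d] - ref[d]) for d in range(dim)])
                step = levy_step(dim, rng)
                z = clip([y[d] + rng.random() * step[d] for d in range(dim)])
                fx = fitness(x)
                if fitness(y) < fx:
                    hawks[i] = y
                elif fitness(z) < fx:
                    hawks[i] = z
    for h in hawks:                                       # account for the final moves
        f = fitness(h)
        if f < rabbit_fit:
            rabbit, rabbit_fit = list(h), f
    return rabbit, rabbit_fit

def hho_select_features(score_subset, n_features, k, **hho_kwargs):
    """Assumed decoding: a hawk's k largest coordinates define the selected feature subset."""
    def top_k(position):
        return sorted(sorted(range(n_features), key=lambda j: -position[j])[:k])

    def fitness(position):
        return 1.0 - score_subset(top_k(position))        # minimize 1 - OA

    best, _ = hho_minimize(fitness, n_features, **hho_kwargs)
    chosen = top_k(best)
    return chosen, score_subset(chosen)

# Toy usage: a hypothetical score that rewards picking features 0, 3 and 5
informative = {0, 3, 5}

def toy_score(subset):
    return len(informative & set(subset)) / len(informative)

selected, score = hho_select_features(toy_score, n_features=10, k=3, max_iter=100)
print(selected, score)
```

In the study's setting, `n_features` would be 121, `k` 60, and `n_hawks` and `max_iter` 15 and 500, with `score_subset` retraining a tuned classifier on each candidate subset.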

Figure 5.

Flowchart of the feature selection using the Harris’s Hawk Optimization (HHO) optimizer and a supervised classifier.

Classification

After the optimal features were selected using the HHO algorithm, they were used as inputs to three classification algorithms (RF, kNN, and SVM) to produce burned area maps.

RF Classifier

RF is an ensemble machine learning algorithm that has been widely used for RS classification [39,40,41]. This method combines a number of weak classifiers to construct a powerful classifier. Specifically, RF combines a set of decision trees, each of which is trained on a randomly drawn bootstrap sample of the training data and classifies the input data independently. The class receiving the highest number of votes is then chosen as the classification result [42]. The RF classifier has two main tuning parameters that need to be defined by users: the number of features considered for splitting each node and the number of trees in the forest. More details about the RF classifier can be found in [42].
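The bootstrap-and-vote idea behind RF can be sketched with a deliberately small forest of depth-1 trees (decision stumps) split by Gini impurity. This is a simplification for illustration, not the RF implementation used in the study: real RF trees are grown much deeper, and `mtry` here plays the role of the number of features considered at each split. The training data are hypothetical.

```python
import math
import random
from collections import Counter

def gini(labels):
    """Gini impurity of a list of class labels."""
    n = len(labels)
    return 1.0 - sum((c / n) ** 2 for c in Counter(labels).values()) if n else 0.0

def fit_stump(X, y, features):
    """Best single split (feature, threshold) among `features`, scored by weighted Gini."""
    best = None
    for f in features:
        for thr in sorted({row[f] for row in X}):
            left = [lab for row, lab in zip(X, y) if row[f] <= thr]
            right = [lab for row, lab in zip(X, y) if row[f] > thr]
            if not left or not right:
                continue
            score = (len(left) * gini(left) + len(right) * gini(right)) / len(y)
            if best is None or score < best[0]:
                best = (score, f, thr, Counter(left).most_common(1)[0][0],
                        Counter(right).most_common(1)[0][0])
    if best is None:                      # no valid split: always predict the majority class
        majority = Counter(y).most_common(1)[0][0]
        return features[0], math.inf, majority, majority
    return best[1:]

def fit_forest(X, y, n_trees=25, mtry=None, seed=0):
    """Bagged stumps, each restricted to a random subset of `mtry` features."""
    rng = random.Random(seed)
    n_features = len(X[0])
    mtry = mtry or max(1, int(math.sqrt(n_features)))
    forest = []
    for _ in range(n_trees):
        idx = [rng.randrange(len(X)) for _ in range(len(X))]     # bootstrap sample
        feats = rng.sample(range(n_features), mtry)              # random feature subset
        forest.append(fit_stump([X[i] for i in idx], [y[i] for i in idx], feats))
    return forest

def predict(forest, row):
    """Majority vote over all stumps."""
    votes = Counter(left if row[f] <= thr else right for f, thr, left, right in forest)
    return votes.most_common(1)[0][0]

# Hypothetical training data: [dNBR-like change, uninformative constant layer]
X = [[0.50, 1.0], [0.60, 1.0], [0.70, 1.0], [0.05, 1.0], [0.10, 1.0], [0.00, 1.0]]
y = ["burned"] * 3 + ["unburned"] * 3
forest = fit_forest(X, y, mtry=2)
print(predict(forest, [0.65, 1.0]), predict(forest, [0.00, 1.0]))  # burned unburned
```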

k-NN Classifier

The kNN algorithm is one of the simplest non-parametric classifiers and uses an instance-based learning approach. kNN has been extensively used for image classification owing to its simple implementation [43]. This algorithm classifies an unlabeled pixel based on the classes of its k nearest neighbors. Specifically, the kNN algorithm identifies the k training samples closest to the unlabeled pixel and assigns it the class label held by the majority of these neighbors. Therefore, k plays an important role in the accuracy of the kNN classifier and should be selected when identifying the optimal tuning parameters. More details about the kNN classifier can be found in [44].
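A minimal kNN sketch with majority voting is shown below. The Euclidean distance metric is an assumption (the metric is not specified above), and the training points are hypothetical two-feature samples.

```python
import math
from collections import Counter

def knn_predict(train_X, train_y, x, k=3):
    """Label `x` by majority vote among its k nearest training samples (Euclidean distance)."""
    neighbours = sorted(zip((math.dist(row, x) for row in train_X), train_y))[:k]
    votes = Counter(label for _, label in neighbours)
    return votes.most_common(1)[0][0]

# Hypothetical two-feature training samples
train_X = [[0.60, 0.20], [0.50, 0.30], [0.70, 0.10],
           [0.00, 0.90], [0.10, 0.80], [0.05, 0.70]]
train_y = ["burned"] * 3 + ["unburned"] * 3
print(knn_predict(train_X, train_y, [0.55, 0.25]))  # burned
print(knn_predict(train_X, train_y, [0.08, 0.75]))  # unburned
```

An odd k avoids ties in the binary burned/unburned case.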

SVM Classifier

SVM is a supervised non-parametric algorithm based on statistical learning theory that is commonly utilized for classification [45]. The basic idea of the SVM classifier is to build a hyperplane that maximizes the margin between classes [46]. SVM maps the input data from the image space to a feature space in which the classes can be effectively separated by a hyperplane. The mapping can be applied by different types of kernel functions, such as the Radial Basis Function (RBF), which has been reported to provide the best performance among kernel types [47]. This classifier has two main tuning parameters: the kernel parameter (e.g., gamma in RBF) and the penalty coefficient (C). More details on this algorithm can be found in [44].

As mentioned above, each of these supervised classifiers has parameters that should be tuned to obtain the highest possible classification accuracy. One of the simplest tuning methods is grid search. In this approach, a range of candidate values is first defined for each parameter; then, for every combination of values, a model is built using the training data and assessed using the validation data. This process continues until all combinations in the defined range have been evaluated. Finally, the optimum tuning parameters are those that yield the highest accuracy on the validation data.
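The grid search procedure can be sketched generically: every combination in the grid is trained on the training set and scored on the validation set, and the best-scoring combination is kept. The example below tunes only k of a tiny one-dimensional kNN on hypothetical data; for SVM the grid would instead span C and gamma, and for RF the number of trees and the number of features per split.

```python
from collections import Counter
from itertools import product

def grid_search(param_grid, fit, evaluate):
    """Try every parameter combination; keep the one with the best validation score."""
    names = list(param_grid)
    best_params, best_score = None, float("-inf")
    for values in product(*(param_grid[name] for name in names)):
        params = dict(zip(names, values))
        score = evaluate(fit(params))          # train on training data, score on validation data
        if score > best_score:                 # strict '>' keeps the first combination on ties
            best_params, best_score = params, score
    return best_params, best_score

# Hypothetical 1D data (e.g., a dNBR-like feature); 0.15 is a mislabeled training sample
train = [(0.0, "U"), (0.1, "U"), (0.2, "U"), (0.3, "U"), (0.15, "B"),
         (0.5, "B"), (0.6, "B"), (0.7, "B"), (0.8, "B")]
valid = [(0.14, "U"), (0.65, "B"), (0.05, "U"), (0.75, "B")]

def fit_knn(params):
    """'Training' a kNN model just stores the samples together with k."""
    def model(x):
        nearest = sorted(train, key=lambda s: abs(s[0] - x))[:params["k"]]
        return Counter(label for _, label in nearest).most_common(1)[0][0]
    return model

def accuracy(model):
    return sum(model(x) == label for x, label in valid) / len(valid)

best, score = grid_search({"k": [1, 3, 5]}, fit_knn, accuracy)
print(best, score)  # {'k': 3} 1.0
```

Here k = 1 is fooled by the mislabeled sample, while larger k values vote it down on the validation data.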

Accuracy Assessment

In this study, the confusion matrix of the classification was used for statistical accuracy assessment. As illustrated in Table 3, a confusion matrix has four components: True Positive (TP), False Positive (FP), True Negative (TN), and False Negative (FN). Various accuracy metrics were calculated from these four components to report the accuracy levels. As shown in Table 4, these metrics were Overall Accuracy (OA), Balanced Accuracy (BA), F1-Score (FS), False Alarm (FA), Kappa Coefficient (KC), Precision (PCC), Recall, Miss-Detection (MD), and Specificity [48,49].
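The metrics listed above can be computed directly from the four confusion-matrix counts. Table 4 is not reproduced here, so the definitions below are the commonly used ones and are assumptions; in particular, FA is taken as FP/(FP + TN) and MD as FN/(TP + FN), which some studies define differently. The counts are hypothetical.

```python
def binary_metrics(tp, fp, tn, fn):
    """Common accuracy metrics computed from a binary confusion matrix."""
    n = tp + fp + tn + fn
    oa = (tp + tn) / n
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)                 # detection rate of the burned class
    specificity = tn / (tn + fp)
    # Expected chance agreement for Cohen's kappa
    pe = ((tp + fp) * (tp + fn) + (fn + tn) * (fp + tn)) / n ** 2
    return {
        "OA": oa,
        "BA": (recall + specificity) / 2,
        "F1": 2 * precision * recall / (precision + recall),
        "FA": fp / (fp + tn),               # assumed definition: 1 - specificity
        "KC": (oa - pe) / (1 - pe),
        "Precision": precision,
        "Recall": recall,
        "MD": fn / (tp + fn),               # assumed definition: 1 - recall
        "Specificity": specificity,
    }

# Hypothetical confusion matrix counts
m = binary_metrics(tp=90, fp=10, tn=80, fn=20)
print({k: round(v, 3) for k, v in m.items()})
```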

Table 3.

Confusion matrix [48,49].

Table 4.

The metrics which were used for accuracy assessment of the burned map in this study [48,49].

Besides statistical accuracy assessment, the wildfire map produced by the proposed method was visually evaluated over multiple burned areas with different LULC types. Moreover, the produced burned area map was compared with the Landsat burned area product described in Section 2.5.

2.6.2. Phase 2: Mapping LULC Types of Burned Areas

After producing a binary burned area map in phase 1 of the proposed method, the LULC types of the burned areas were identified using the MODIS LULC product available in GEE (see Section 2.4). To this end, the binary burned area map was overlaid on the MODIS LULC product, and the burned area of each LULC class was calculated.
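The overlay and area computation of phase 2 can be sketched as a per-class pixel count. This assumes both layers are already on the same 10 (m) grid and that each pixel covers exactly 100 (m2); on a geographic (WGS 1984) grid the true pixel area varies with latitude, so a real implementation would need per-pixel area weights. Grids and class codes are hypothetical.

```python
from collections import defaultdict

def burned_area_by_class(burned, lulc, pixel_size_m=10.0):
    """Burned area (km2) and burned share (%) of each LULC class.

    `burned` is a 0/1 grid and `lulc` a class-code grid on the same 10 m pixel grid.
    """
    pixel_km2 = pixel_size_m ** 2 / 1e6
    burned_px, total_px = defaultdict(int), defaultdict(int)
    for b_row, c_row in zip(burned, lulc):
        for is_burned, cls in zip(b_row, c_row):
            total_px[cls] += 1
            burned_px[cls] += int(is_burned)
    return {cls: {"burned_km2": burned_px[cls] * pixel_km2,
                  "burned_pct": 100.0 * burned_px[cls] / total_px[cls]}
            for cls in total_px}

# Hypothetical 2 x 4 tiles: IGBP class 1 (Evergreen Needleleaf Forests) and 10 (Grasslands)
burned = [[1, 1, 0, 0],
          [1, 0, 0, 1]]
lulc = [[1, 1, 10, 10],
        [1, 1, 10, 10]]
stats = burned_area_by_class(burned, lulc)
print(stats)
```

Reporting both the burned area and the burned share per class distinguishes a class that lost a large fraction of its extent from one that contributed the most burned area in absolute terms.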

2.6.3. Parameter Setting and Feature Selection

As discussed in Section 2.6.1, the optimal tuning parameters of the classifiers should first be selected to obtain the highest possible classification accuracies. Table 5 provides the optimum tuning parameters for the three classifiers (RF, kNN, and SVM), obtained using the grid search method.

Table 5.

The optimum values of the tuning parameters of the classifiers (k-Nearest Neighbors (k-NN), Support Vector Machine (SVM), and Random Forest (RF)) obtained from the grid search method.

The HHO algorithm has three main parameters that need to be initialized. The number of hawks (population size) was set to 15, the number of features for feature selection was set to 60, and the number of iterations was set to 500.

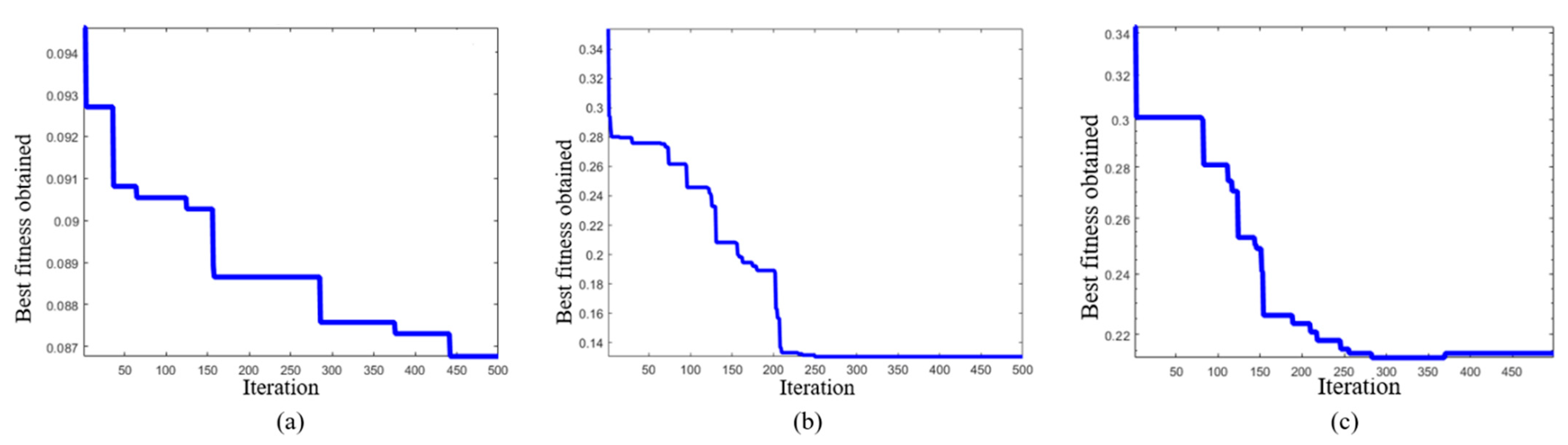

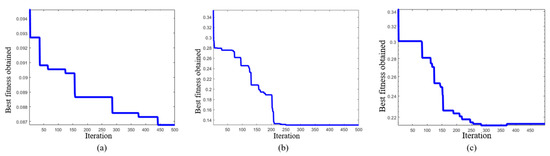

As discussed in Section 2.6.1, 121 features (90 spectral indices, 13 original spectral bands, 17 spatial features, and one panchromatic band) were initially extracted. Then, the HHO algorithm was applied to select the optimal features. The convergence curves of the HHO optimizer for the three classifiers, obtained on the test data, are illustrated in Figure 6. The kNN and SVM algorithms converged after 250 iterations, and the RF classifier converged after 450 iterations. The feature selection process took relatively longer for the SVM because an optimization problem must be solved to build the hyperplane at each iteration.

Figure 6.

Convergence trends of the Harris’s Hawk Optimization (HHO) algorithm for the three classifiers: (a) Random Forest (RF), (b) k-Nearest Neighbor (k-NN), and (c) Support Vector Machine (SVM).

Table 6 provides the selected features for each classifier. As shown, the HHO algorithm selected different features for each classifier, indicating that each classifier was sensitive to different features. However, several features were selected for all of them (e.g., NBR, DISS, and B2).

Table 6.

The selected features of the three classifiers (k-Nearest Neighbors (k-NN), Support Vector Machine (SVM), and Random Forest (RF)) using the Harris’s Hawk Optimization (HHO) feature selection method.

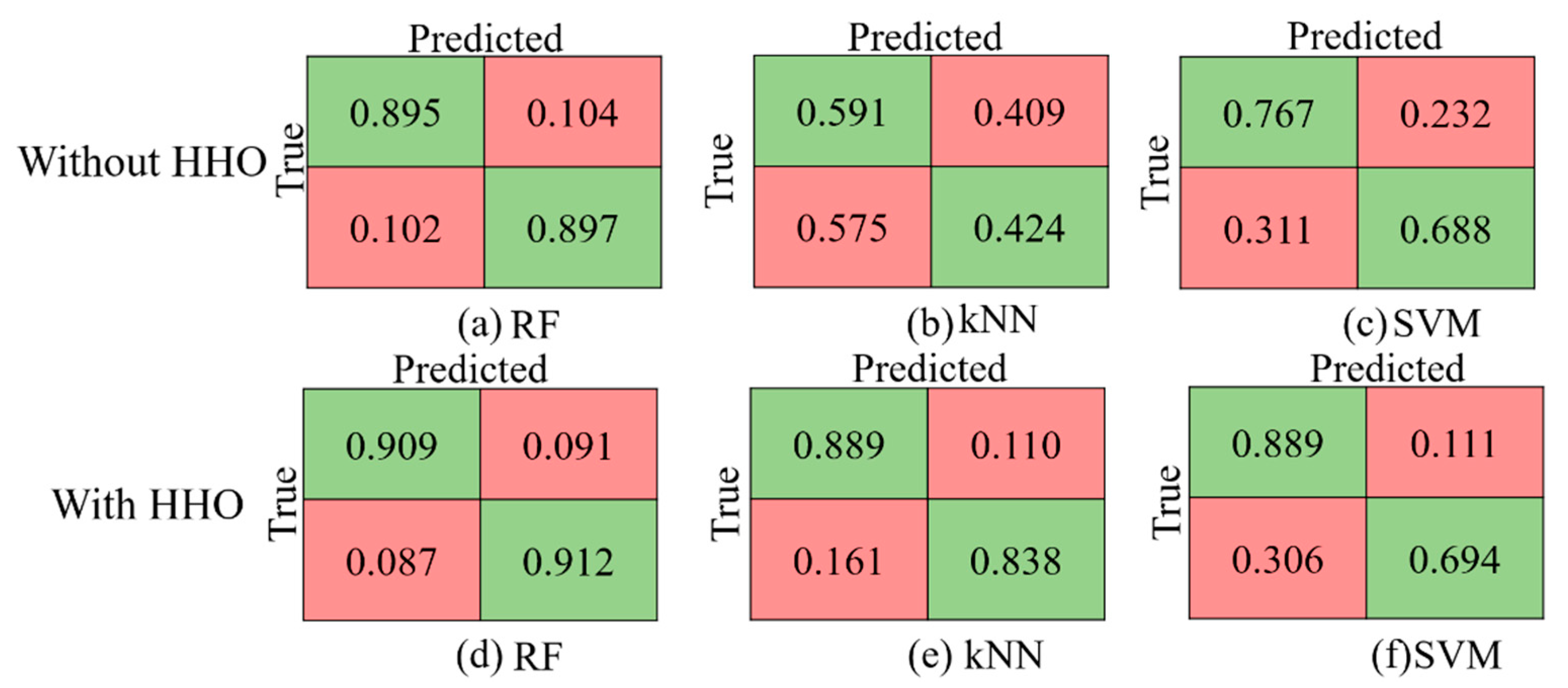

3. Results

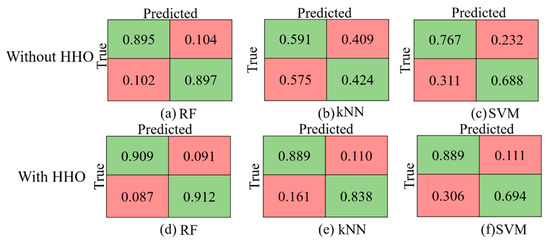

The confusion matrices obtained by the different classifiers for two scenarios (with and without feature selection) are illustrated in Figure 7, and the statistical accuracy results are provided in Table 7. All classifiers achieved better accuracies when the HHO feature selection algorithm was employed. Moreover, the processing time decreased when HHO was used compared with the case in which all features were utilized. For example, the OA of the kNN algorithm improved from 58.13% to 86.34% when the features selected by HHO were used. Similarly, the OA of the SVM algorithm increased from 72.67% to 78.87%, and its MD rate decreased from 23.26% to 11.08% when feature selection was utilized. Generally, the HHO feature selection method improved the performance of the kNN and SVM classifiers in detecting Burned pixels, which was reflected in the TP component of the confusion matrix and led to fewer FN pixels. The performance of the RF classifier was also considerably improved by feature selection. For example, the detection rate of unburned pixels (TN) improved, which reduced the number of FP pixels.

Figure 7.

The confusion matrices of the Random Forest (RF), k-Nearest Neighbors (k-NN), and Support Vector Machine (SVM) classifiers when feature selection was not considered (a–c) and when the Harris’s Hawk Optimization (HHO) feature selection method was used (d–f).

Table 7.

The results of the Overall Accuracy (OA), Balanced Accuracy (BA), F1-Score (FS), False Alarm (FA), Kappa Coefficient (KC), Precision (PCC), and Miss-Detection (MD) for the three classifiers k-Nearest Neighbors (kNN), Support Vector Machine (SVM), and Random Forest (RF), with Harris’s Hawk Optimization (HHO) feature selection and with all features.
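The accuracy measures in Table 7 can be derived from the binary confusion matrices in Figure 7. The sketch below assumes the standard definitions with Burned as the positive class (e.g., MD = FN/(TP + FN) and FA = FP/(FP + TN)); if the study's methods define any measure differently, those definitions take precedence. The function name `accuracy_metrics` and the counts in the example are hypothetical.

```python
def accuracy_metrics(tp, fn, fp, tn):
    """Binary accuracy measures from a confusion matrix ('burned' = positive).
    Rates are returned as fractions (multiply by 100 for percentages)."""
    n = tp + fn + fp + tn
    oa = (tp + tn) / n
    recall = tp / (tp + fn)        # share of burned pixels detected
    specificity = tn / (tn + fp)   # share of unburned pixels detected
    precision = tp / (tp + fp)
    # chance agreement from the row/column marginals, used by Cohen's kappa
    pe = ((tp + fp) * (tp + fn) + (fn + tn) * (fp + tn)) / n ** 2
    return {
        "OA": oa,
        "BA": (recall + specificity) / 2,
        "FS": 2 * precision * recall / (precision + recall),
        "FA": fp / (fp + tn),      # false alarm = 1 - specificity
        "KC": (oa - pe) / (1 - pe),
        "PCC": precision,
        "MD": fn / (tp + fn),      # miss detection = 1 - recall
    }


m = accuracy_metrics(tp=40, fn=10, fp=5, tn=45)  # hypothetical pixel counts
for name, value in m.items():
    print(f"{name}: {value:.4f}")
```

Written this way, MD and FA are simply the complements of the per-class detection rates, which is why lower FN counts (kNN, SVM) and lower FP counts (RF) show up directly as lower MD and FA.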

Among the three classifiers, kNN had the poorest performance, especially in detecting burned areas without feature selection: its accuracies for both the burned and unburned classes were below 60%, and its classification error exceeded 40%. Although the performance of the SVM classifier improved with feature selection, its accuracy in detecting unburned pixels remained low (below 70%). Overall, RF achieved the highest accuracy and the lowest MD and FA errors, and it performed best in detecting both the Burned and Unburned classes. Therefore, the RF classifier was selected for wildfire mapping over mainland Australia.

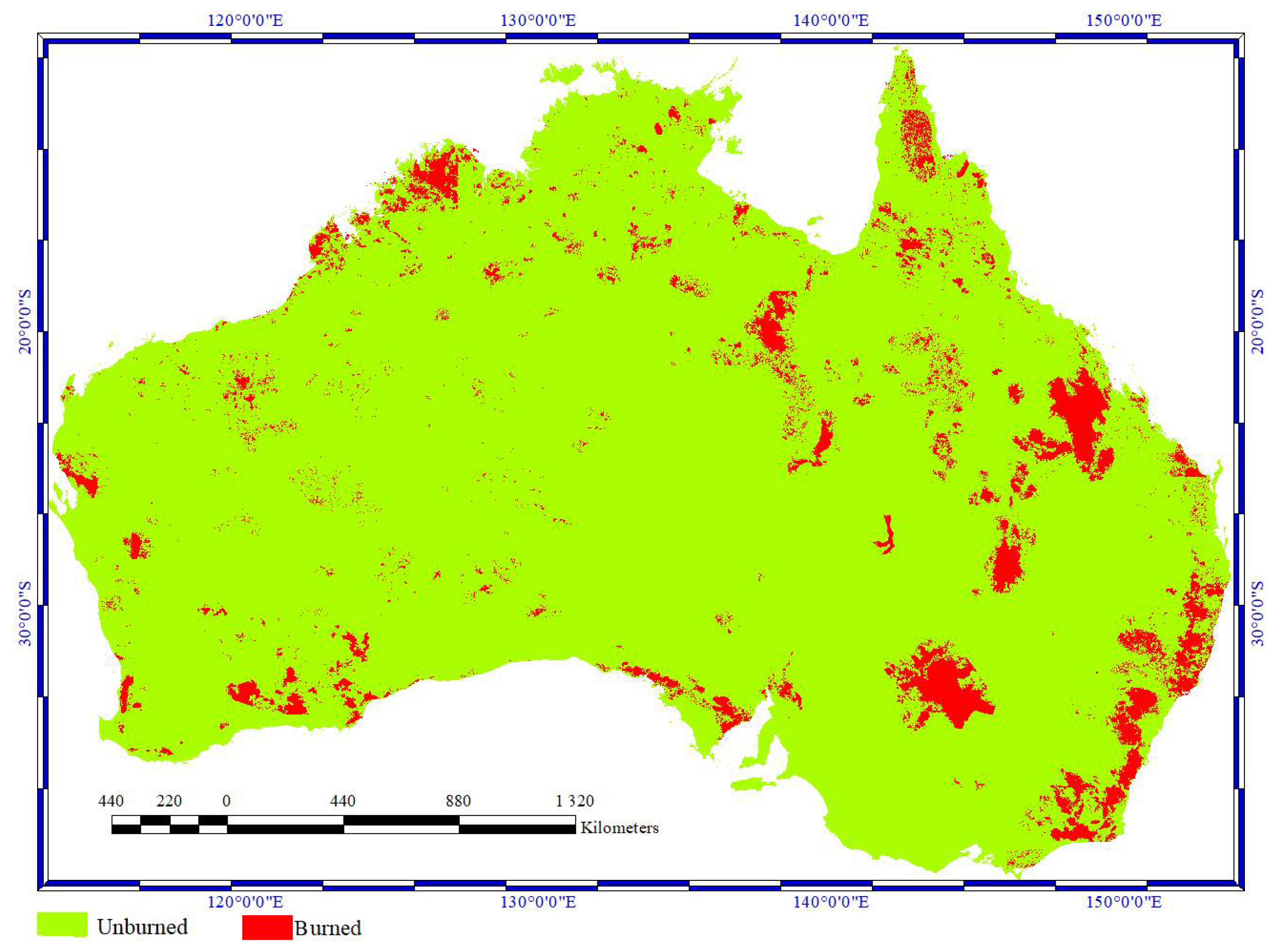

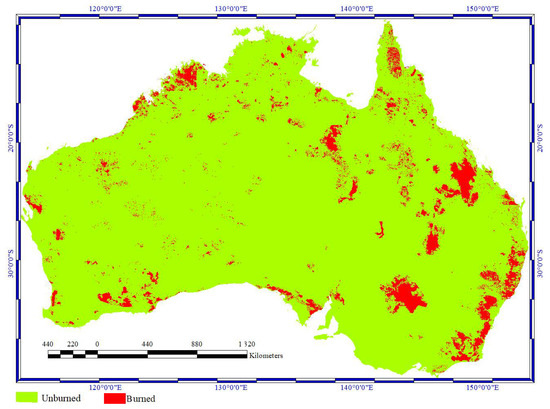

Figure 8 shows the binary burned area map produced by the RF classifier using the selected features. According to this map, burned and unburned areas covered 249,358 km² and 5,162,072 km², respectively. As reported in Table 7, the OA and KC of this classification were 91.02% and 0.82, respectively.
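As a quick arithmetic check on these figures, the snippet below computes the burned share of the mapped area (burned plus unburned) and converts the burned area into an equivalent number of 10 m Sentinel-2 pixels (100 m², i.e., 10⁻⁴ km², each). It only restates the reported totals and does not reproduce the classification; the variable names are illustrative.

```python
# Reported areas from the binary RF map (km^2)
burned_km2 = 249_358
unburned_km2 = 5_162_072

PIXEL_KM2 = (10 * 10) / 1e6  # one 10 m x 10 m pixel = 1e-4 km^2

mapped_km2 = burned_km2 + unburned_km2
burned_share = burned_km2 / mapped_km2
burned_pixels = round(burned_km2 / PIXEL_KM2)

print(f"mapped area: {mapped_km2:,} km^2")
print(f"burned share of mapped area: {burned_share:.2%}")
print(f"equivalent 10 m pixels: {burned_pixels:,}")
```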

Figure 8.

Binary burned area map over mainland Australia produced using phase 1 of the proposed method.

After the binary burned area map was produced, phase 2 of the proposed method was applied to determine the LULC types within the burned areas. Figure 9 illustrates the resulting map, and Table 8 provides the burned area of each LULC class. About 25% of the Evergreen Needleleaf Forests class was burned relative to its own extent, the highest rate among the classes; however, the burned area of this class is small (5629 km²) compared with other LULC classes such as Grasslands (91,106 km²), Open Shrublands (71,511 km²), and Savannas (27,878 km²).
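The burning rate of a LULC class can be read as the burned area of that class divided by its total area. The sketch below tabulates both quantities from a LULC raster and the binary burned area map. It assumes, hypothetically, that the 500 m MCD12Q1 classes have already been resampled onto the same 10 m grid as the burned area map; the toy arrays are invented, and the class codes follow the IGBP legend (1 = Evergreen Needleleaf Forests, 10 = Grasslands). The function name `burned_area_by_class` is illustrative.

```python
from collections import Counter

PIXEL_KM2 = 1e-4  # one 10 m x 10 m pixel

# A few MCD12Q1 IGBP (LC_Type1) class codes used in the toy example below
IGBP_NAMES = {1: "Evergreen Needleleaf Forests", 10: "Grasslands"}


def burned_area_by_class(lulc, burned):
    """Return {class: (burned area in km^2, burning rate in %)}.

    lulc   -- 2-D list of LULC class codes on the same grid as `burned`
    burned -- 2-D list of 0/1 values from the binary burned area map
    """
    total, hit = Counter(), Counter()
    for lulc_row, burned_row in zip(lulc, burned):
        for cls, b in zip(lulc_row, burned_row):
            total[cls] += 1   # all pixels of this class
            hit[cls] += b     # burned pixels of this class
    return {cls: (hit[cls] * PIXEL_KM2, 100.0 * hit[cls] / total[cls]) for cls in total}


lulc = [[1, 1, 10, 10],
        [1, 1, 10, 10]]
burned = [[1, 0, 0, 0],
          [0, 0, 1, 1]]
res = burned_area_by_class(lulc, burned)
for cls, (area, rate) in res.items():
    print(f"{IGBP_NAMES[cls]}: {area:.4f} km^2 burned, {rate:.1f}% of class")
```

Reporting both columns separates the two notions discussed above: a class can have the highest burning rate while contributing only a small share of the total burned area.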

Figure 9.

Land Use/Land Cover (LULC) types over the burned areas in mainland Australia.

Table 8.

The area and coverage of different Land Use/Land Cover (LULC) types within the burned areas.

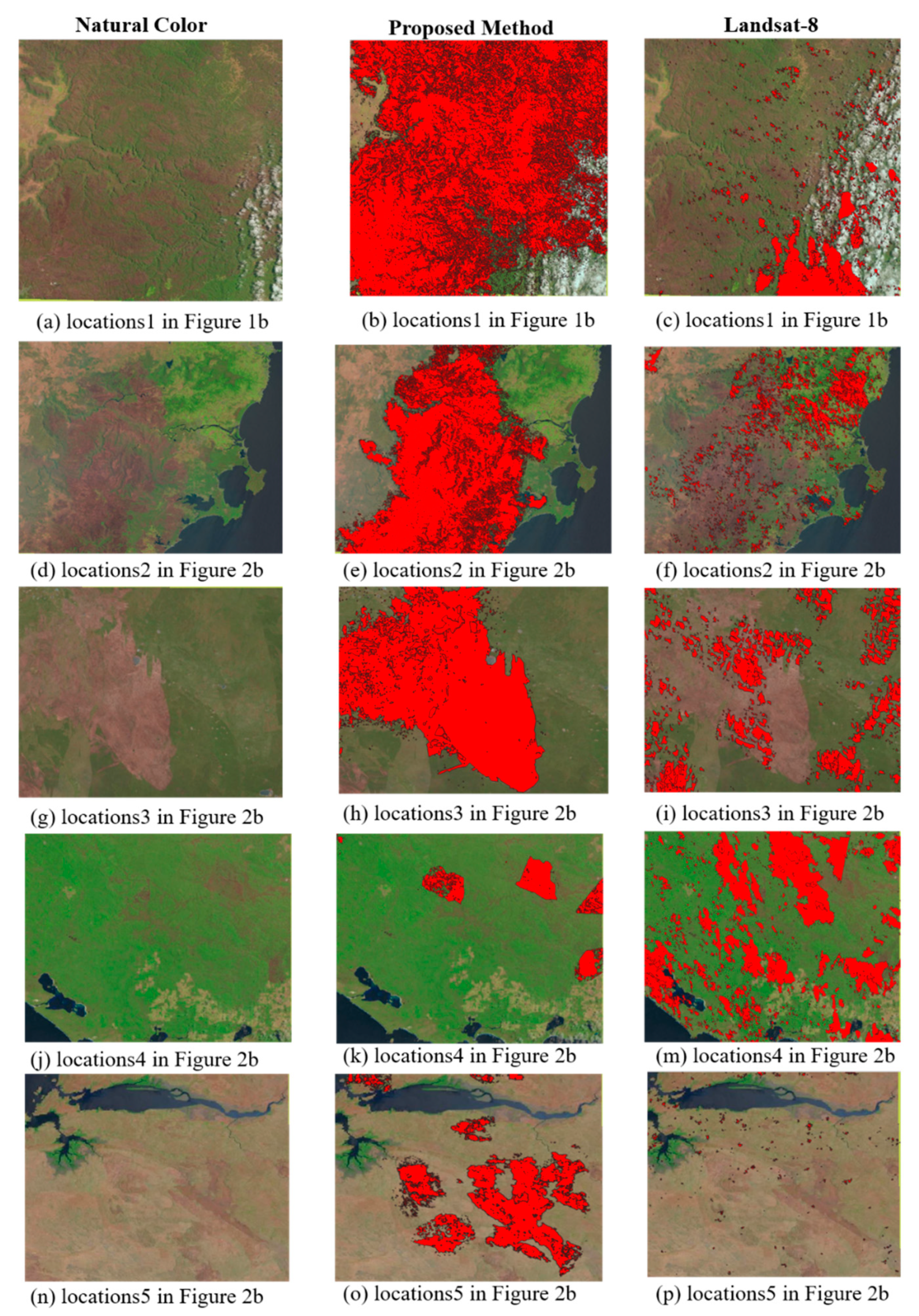

4. Discussion

In this study, the accuracy of the final wildfire map produced by the proposed method (Figure 10) was also assessed visually over several burned areas, identified with blue squares in Figure 1b. These areas covered different LULC types, such as Evergreen Broadleaf Forests (sample regions 1, 2, and 4 in Figure 1b), Woody Savannas (sample region 3), and Grasslands (sample region 5). The results were also compared with the Landsat burned area product (Figure 3). Most burned areas were correctly classified by the proposed method (central column in Figure 10), and the results were more accurate than those of the Landsat-8 product (right-hand column in Figure 10). Finally, the burned area map produced in this study has a higher spatial resolution (10 m) than the Landsat burned area product (30 m).

Figure 10.

Zoomed images over five sample burned areas. The left-hand column shows a natural color composite of each sample region, the central column shows the burned area detected by the proposed method, and the right-hand column shows the burned areas in the Landsat-8 burned area product. The rows correspond to the sample regions identified by the blue squares in Figure 1b (locations (1), (2), (3), (4), and (5), respectively).

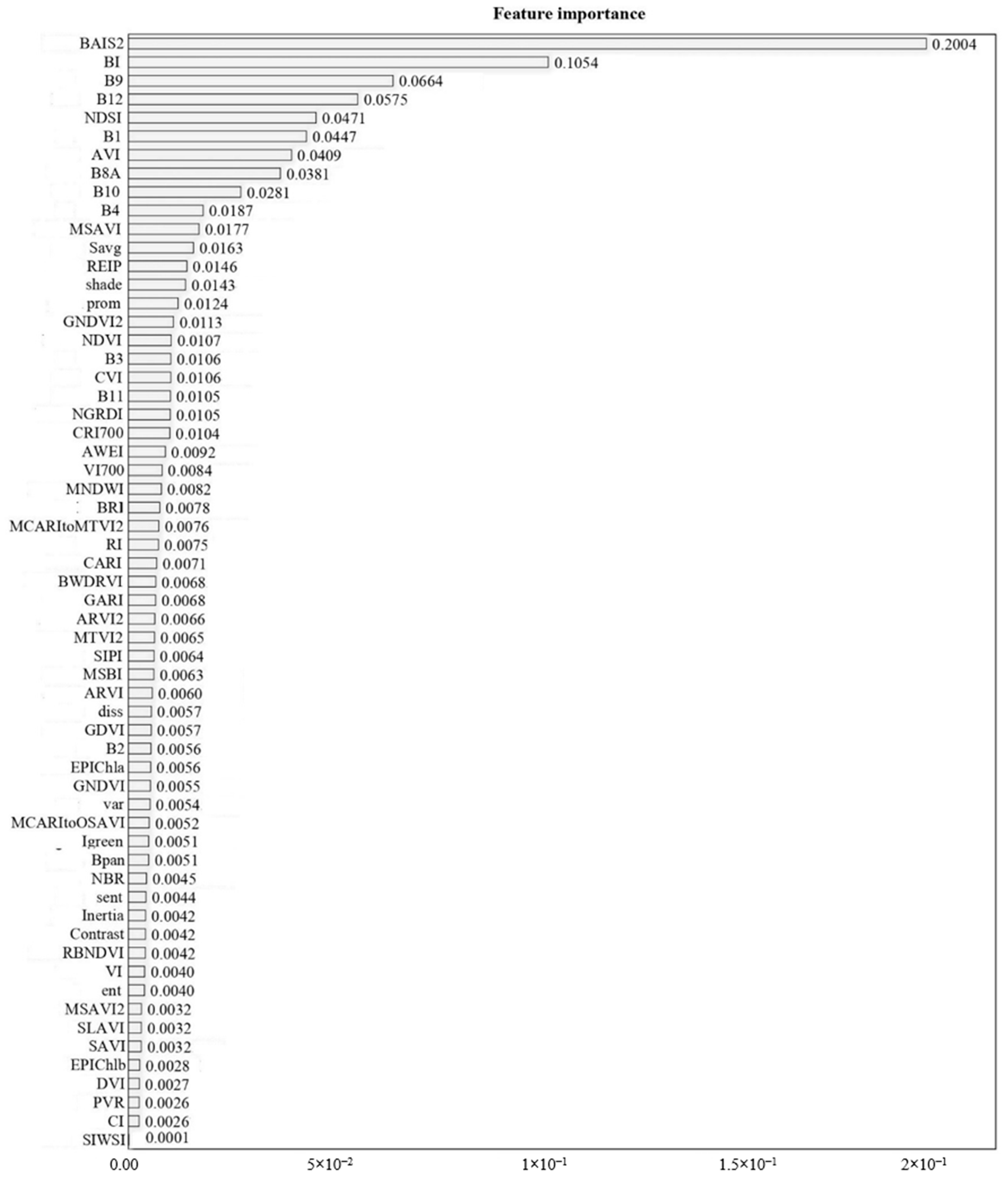

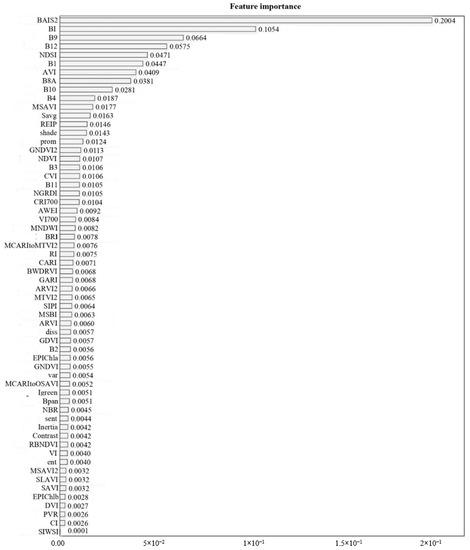

Figure 11.

The importance of the features selected by the Harris’s Hawk Optimization (HHO) algorithm in the Random Forest (RF) classifier.

Sentinel-2 has high potential for burned area detection because its temporal and spatial resolutions are higher than those of other freely available satellite data. For example, many studies have detected burned areas using Landsat imagery [3,11,12,21,22,23,50,51], whose low temporal resolution (about 16 days) hinders the timely mapping of burned areas. Burned area detection using unmanned aerial vehicle (UAV) imagery has also been investigated recently; however, such methods have their own limitations, especially over large areas such as the Australian continent [52,53,54,55,56]. Overall, the numerical and visual analyses indicate that Sentinel-2 imagery has high potential for burned area mapping.

Most burned area mapping studies have focused on binary mapping [5,11,12,13,14,15,16,17,18,21,22,33,52,57,58]. However, it is often very important to know the land cover types of burned areas. The framework proposed in this study can efficiently identify the land cover type of the burned areas.

Although selecting optimal features is an important step in burned area mapping, many studies have ignored it and employed only a few spectral features [56,58,59,60]. The experiments in this study showed that both spectral and spatial features play key roles in obtaining accurate burned area maps. For example, Figure 11 shows that the spectral indices and spatial features had higher importance than the original spectral bands, with the spectral indices being the most important overall. In particular, the BAIS2 index had the greatest impact on the results, with an importance score above 0.2. Among the original bands, bands 9 and 12 of Sentinel-2 had the highest importance, and SAVG and SHADE were the most important spatial features. Consequently, both spatial and spectral features should be used to produce accurate burned area maps.
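Because BAIS2 and NBR play prominent roles here, the sketch below shows how they can be computed from Sentinel-2 surface reflectance and turned into change features by differencing pre-fire and post-fire values, as in the change detection step of the framework. The BAIS2 expression follows our reading of Filipponi [33]. Using B8A as the NIR band for NBR, the pre-minus-post sign convention (customary for dNBR), and the reflectance values are assumptions for illustration only.

```python
import math


def nbr(b8a, b12):
    """Normalized Burn Ratio from NIR (assumed B8A) and SWIR (B12) reflectance."""
    return (b8a - b12) / (b8a + b12)


def bais2(b4, b6, b7, b8a, b12):
    """Burned Area Index for Sentinel-2 (BAIS2), after Filipponi (2018)."""
    return (1 - math.sqrt(b6 * b7 * b8a / b4)) * ((b12 - b8a) / math.sqrt(b12 + b8a) + 1)


# Hypothetical surface reflectances (0-1) for one pixel before and after a fire
pre = dict(b4=0.05, b6=0.20, b7=0.30, b8a=0.35, b12=0.15)
post = dict(b4=0.08, b6=0.10, b7=0.12, b8a=0.13, b12=0.20)

# Change features: pre-fire value minus post-fire value
diff = {
    "NBR": nbr(pre["b8a"], pre["b12"]) - nbr(post["b8a"], post["b12"]),
    "BAIS2": bais2(**pre) - bais2(**post),
}
print(diff)
```

In this toy pixel, NBR drops and BAIS2 rises after the fire, so the two differences have opposite signs; a classifier trained on the differenced features can learn either direction.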

Selecting optimal spectral and spatial features is therefore important for accurate burned area mapping. Based on the numerical results of this study, HHO feature selection considerably improved the performance of the classifiers by selecting optimal features for the classification. Feature selection also reduced the processing time of the proposed method.

Many studies have demonstrated the high potential of deep learning methods for burned area detection [61,62,63]. However, these methods require large amounts of training samples, which are usually challenging to collect or generate. One advantage of the proposed method is that it produced accurate burned area maps using a relatively small number of training samples (11,000 pixels) compared with commonly used deep learning algorithms. Furthermore, most deep learning methods require considerable time for training the network, tuning the hyperparameters, and designing an efficient architecture. In contrast, the proposed framework has few tuning parameters, and its optimized design improved its time efficiency.

5. Conclusions

This study estimated burned areas over mainland Australia from September 2019 to February 2020 using Sentinel-2 imagery at a spatial resolution of 10 m within the GEE platform. The main contributions of this study were (1) estimating burned areas for different LULC types, (2) using a novel feature selection method to determine the optimal spectral and spatial features for burned area mapping, (3) combining spatial and spectral features for burned area mapping, and (4) comparing the performance of different classifiers for burned area mapping. The proposed framework was based on image differencing and supervised machine learning algorithms. Numerous spectral (vegetation, water, soil, and burned area indices) and spatial (texture features extracted from the GLCM) features were initially generated. The performance of three classifiers was then investigated in two scenarios: (1) classification based on all spectral and spatial features and (2) classification based on the features selected by the HHO algorithm. Feature selection significantly improved the classification accuracy, and the RF classifier had the highest accuracy (OA = 91.02%). The proposed method was also more accurate than the Landsat burned area product. According to the burned area map produced by the proposed method, about 250,000 km² of mainland Australia was burned by wildfires. The feature selection results showed that the BAIS2 index was the most important feature among all spectral and spatial features, including the original bands. Among the LULC classes, Evergreen Needleleaf Forests suffered the greatest damage relative to their extent.
Overall, the proposed algorithm within the GEE cloud platform showed high potential for mapping burned areas over large regions in a cost-effective, timely, and computationally efficient manner.

However, the main limitation of the proposed approach was the use of the MODIS LULC product in phase 2 to identify the LULC types over the burned areas, because this product has a low spatial resolution (500 m). Future studies should therefore use a higher-resolution LULC product or perform the LULC classification using Sentinel-2 images in phase 2 of the proposed method.

Author Contributions

Conceptualization, S.T.S. and M.A. (Mehdi Akhoondzadeh); methodology, S.T.S., M.A. (Mehdi Akhoondzadeh), and M.A. (Meisam Amani); visualization, S.T.S. and M.A. (Meisam Amani); supervision, S.M. and M.A. (Meisam Amani); funding acquisition, S.M. and M.A. (Meisam Amani); writing—original draft preparation, S.T.S. and M.A. (Mehdi Akhoondzadeh); writing—review and editing, S.T.S., M.A. (Meisam Amani), and S.M. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Publicly available datasets were analyzed in this study. These datasets can be found here: [https://code.earthengine.google.com/].

Acknowledgments

The authors would like to thank the European Space Agency (ESA) for providing the Sentinel-2 Level-1C products and the National Aeronautics and Space Administration (NASA) for providing the Landsat burned area product and the MODIS land cover dataset. We thank the anonymous reviewers for their valuable comments on our manuscript.

Conflicts of Interest

The authors declare no conflict of interest.

Appendix A

Table A1.

Spectral indices calculated from Sentinel-2 data.

| No. | Abbreviation | Index | Formula | Description | Reference |
|---|---|---|---|---|---|
| 1 | AFRI1 | Aerosol free vegetation index 1.6 |  | Vegetation Index | [64] |
| 2 | AFRI2 | Aerosol free vegetation index 2.1 |  | Vegetation Index | [64] |
| 3 | ARI | Anthocyanin reflectance index |  | Vegetation Index | [65] |
| 4 | ARVI | Atmospherically resistant vegetation index |  | Vegetation Index | [66] |
| 5 | ARVI2 | Atmospherically resistant vegetation index 2 | −0.18 + 1.17 ∗ | Vegetation Index | [66] |
| 6 | TSAVI | Adjusted transformed soil-adjusted vegetation index |  | Vegetation Index (a = 1.22, b = 0.03, X = 0.08) | [67] |
| 7 | AVI | Ashburn vegetation index |  | Vegetation Index | [68] |
| 8 | BNDVI | Blue-normalized difference vegetation index |  | Vegetation Index | [69] |
| 9 | BRI | Browning reflectance index |  | Vegetation Index | [70] |
| 10 | BWDRVI | Blue-wide dynamic range vegetation index |  | Vegetation Index | [71] |
| 11 | CI | Color Index |  | Vegetation Index | [72] |
| 12 | V | Vegetation |  | Vegetation Index | [73] |
| 13 | CARI | Chlorophyll absorption ratio index |  | Vegetation Index (a = (Band5 − Band3)/150, b = Band3 − 550 ∗ a) | [74] |
| 14 | CCCI | Canopy chlorophyll content index |  | Vegetation Index | [75] |
| 15 | CRI550 | Carotenoid reflectance index 550 |  | Vegetation Index | [76] |
| 16 | CRI700 | Carotenoid reflectance index 700 |  | Vegetation Index | [76] |
| 17 | CVI | Chlorophyll vegetation index |  | Vegetation Index | [77] |
| 18 | Datt1 | Vegetation index proposed by Datt 1 |  | Vegetation Index | [78] |
| 19 | Datt2 | Vegetation index proposed by Datt 2 |  | Vegetation Index | [79] |
| 20 | Datt3 | Vegetation index proposed by Datt 3 |  | Vegetation Index | [79] |
| 21 | DVI | Differenced vegetation index |  | Vegetation Index | [80] |
| 22 | EPIcar | Eucalyptus pigment index for carotenoid |  | Vegetation Index | [79] |
| 23 | EPIChla | Eucalyptus pigment index for chlorophyll a |  | Vegetation Index | [79] |
| 24 | EPIChlab | Eucalyptus pigment index for chlorophyll a + b |  | Vegetation Index | [79] |
| 25 | EPIChlb | Eucalyptus pigment index for chlorophyll b |  | Vegetation Index | [79] |
| 26 | EVI | Enhanced vegetation index |  | Vegetation Index | [81] |
| 27 | EVI2 | Enhanced vegetation index 2 |  | Vegetation Index | [82] |
| 28 | EVI2.2 | Enhanced vegetation index 2.2 |  | Vegetation Index | [82] |
| 29 | GARI | Green atmospherically resistant vegetation index |  | Vegetation Index | [83] |
| 30 | GBNDVI | Green-Blue normalized difference vegetation index |  | Vegetation Index | [84] |
| 31 | GDVI | Green difference vegetation index |  | Vegetation Index | [85] |
| 32 | GEMI | Global environment monitoring index |  | Vegetation Index, n = | [86] |
| 33 | GLI | Green leaf index |  | Vegetation Index | [87] |
| 34 | GNDVI | Green normalized difference vegetation index |  | Vegetation Index | [83] |
| 35 | GNDVI2 | Green normalized difference vegetation index 2 |  | Vegetation Index | [83] |
| 36 | GOSAVI | Green optimized soil adjusted vegetation index |  | Vegetation Index | [88] |
| 37 | GRNDVI | Green-Red normalized difference vegetation index |  | Vegetation Index | [89] |
| 38 | GVMI | Global vegetation moisture index |  | Vegetation Index | [90] |
| 39 | Hue | Hue |  | Vegetation Index | [91] |
| 40 | IPVI | Infrared percentage vegetation index |  | Vegetation Index | [92] |
| 41 | LCI | Leaf chlorophyll index |  | Vegetation Index | [78] |
| 42 | Maccion | Vegetation index proposed by Maccioni |  | Vegetation Index | [93] |
| 43 | MCARI | Modified chlorophyll absorption in reflectance index |  | Vegetation Index | [94] |
| 44 | MTVI2 | Modified triangular vegetation index 2 |  | Vegetation Index | [95] |
| 45 | MCARItoMTVI2 | MCARI/MTVI2 |  | Vegetation Index | [96] |
| 46 | MCARItoOSAVI | MCARI/OSAVI |  | Vegetation Index | [95] |
| 47 | MGVI | Green vegetation index proposed by Misra |  | Vegetation Index | [97] |
| 48 | mNDVI | Modified normalized difference vegetation index |  | Vegetation Index | [98] |
| 49 | MNSI | Non such index proposed by Misra |  | Vegetation Index | [97] |
| 50 | MSAVI | Modified soil adjusted vegetation index |  | Vegetation Index | [99] |
| 51 | MSAVI2 | Modified soil adjusted vegetation index 2 |  | Vegetation Index | [99] |
| 52 | MSBI | Soil brightness index proposed by Misra |  | Vegetation Index | [97] |
| 53 | MSR670 | Modified simple ratio 670/800 |  | Vegetation Index | [100] |
| 54 | MSRNir/Red | Modified simple ratio NIR/red |  | Vegetation Index | [101] |
| 55 | NBR | Normalized difference Nir/Swir normalized burn ratio |  | Vegetation Index | [102] |
| 56 | ND774/677 | Normalized difference 774/677 |  | Vegetation Index | [103] |
| 57 | NDII | Normalized difference infrared index |  | Vegetation Index | [104] |
| 58 | NDRE | Normalized difference Red-edge |  | Vegetation Index | [105] |
| 59 | NDSI | Normalized difference salinity index |  | Vegetation Index | [106] |
| 60 | NDVI | Normalized difference vegetation index |  | Vegetation Index | [107] |
| 61 | NDVI2 | Normalized difference vegetation index 2 |  | Vegetation Index | [85] |
| 62 | NGRDI | Normalized green red difference index |  | Vegetation Index | [103] |
| 63 | OSAVI | Optimized soil adjusted vegetation index |  | Vegetation Index | [108] |
| 64 | PNDVI | Pan normalized difference vegetation index |  | Vegetation Index | [89] |
| 65 | PVR | Photosynthetic vigor ratio |  | Vegetation Index | [109] |
| 66 | RBNDVI | Red-Blue normalized difference vegetation index |  | Vegetation Index | [89] |
| 67 | RDVI | Renormalized difference vegetation index |  | Vegetation Index | [110] |
| 68 | REIP | Red-edge inflection point | 700 + 40 ∗ () | Vegetation Index | [111] |
| 69 | Rre | Reflectance at the inflexion point |  | Vegetation Index | [112] |
| 70 | SAVI | Soil adjusted vegetation index |  | Vegetation Index | [113] |
| 71 | SBL | Soil background line |  | Vegetation Index | [80] |
| 72 | SIPI | Structure intensive pigment index |  | Vegetation Index | [114] |
| 73 | SIWSI | Shortwave infrared water stress index |  | Vegetation Index | [115] |
| 74 | SLAVI | Specific leaf area vegetation index |  | Vegetation Index | [116] |
| 75 | TCARI | Transformed chlorophyll absorption Ratio | 3 ∗ (() − 0.2 ∗ ()()) | Vegetation Index | [94] |
| 76 | TCARItoOSAVI | TCARI/OSAVI |  | Vegetation Index | [108] |
| 77 | TCI | Triangular chlorophyll index | 1.2 ∗ (() − 1.5 ∗ ()()) | Vegetation Index | [117] |
| 78 | TVI | Transformed vegetation index |  | Vegetation Index | [118] |
| 79 | VARI700 | Visible atmospherically resistant index 700 |  | Vegetation Index | [119] |
| 80 | VARIgreen | Visible atmospherically resistant index green |  | Vegetation Index | [119] |
| 81 | VI700 | Vegetation index 700 |  | Vegetation Index | [120] |
| 82 | WDRVI | Wide dynamic range vegetation index |  | Vegetation Index | [121] |
| 83 | NDWI | Normalized Difference Water Index |  | Water Index | [122] |
| 84 | MNDWI | Modified Normalized Difference Water Index |  | Water Index | [123] |
| 85 | AWEInsh | Automated Water Extraction Index not dominant shadow | 4 ∗ () − (0.25 ∗ | Water Index | [50] |
| 86 | AWEIsh | Automated Water Extraction Index dominant shadow | + 2.5 ∗ − 1.5 ∗ () − 0.25 ∗ | Water Index | [50] |
| 87 | BI | Brightness Index |  | Bare Soil Index | [124] |
| 88 | BI2 | Second Brightness Index |  | Bare Soil Index | [125] |
| 89 | RI | Redness Index |  | Bare Soil Index | [72] |
| 90 | BAIS2 | Burned Area Index for Sentinel-2 | ( | Burned Index | [33] |
| 91 | NBR | Normalized Burned Ratio Index |  | Burned Index | [102] |

Appendix B

Table A2.

Textural features computed from the gray level co-occurrence matrix (GLCM).

| NO. | Abbreviation | Full Name | Formula | Description |
|---|---|---|---|---|
| 1 | ASM | Angular Second Moment |  | Textural uniformity |
| 2 | CONTRAST | Contrast |  | Degree of spatial frequency |
| 3 | CORR | Correlation |  | Grey tone linear dependencies in the image |
| 4 | VAR | Variance |  | Heterogeneity of image |
| 5 | IDM | Inverse Difference Moment |  | Image homogeneity |
| 6 | SAVG | Sum Average |  | The mean of the gray level sum distribution of the image |
| 7 | SVAR | Sum Variance |  | The dispersion of the gray level sum distribution of the image |
| 8 | SENT | Sum Entropy |  | The disorder related to the gray level sum distribution of the image |
| 9 | ENT | Entropy |  | Randomness of intensity distribution |
| 10 | DVAR | Difference Variance |  | The dispersion of the gray level difference distribution of the image |
| 11 | DENT | Difference Entropy |  | Degree of organization of gray levels |
| 12 | IMCORR1 | Information Measure of Correlation 1 |  | Dependency between two random variables |
| 13 | IMCORR2 | Information Measure of Correlation 2 |  | Linear dependence of gray levels |
| 14 | DISS | Dissimilarity |  | Total variation present |
| 15 | INERTIA | Inertia |  | Intensity contrast of image |
| 16 | SHADE | Cluster Shade |  | Skewness of co-occurrence |
| 17 | PROM | Cluster Prominence |  | Asymmetry of image |

Where P(i, j) is the (i, j)-th entry of the normalized GLCM, N is the total number of gray levels in the image, and μx, μy and σx, σy denote the means and standard deviations of the row and column sums of the GLCM, respectively.
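For reference, the sketch below computes a normalized GLCM for a single horizontal offset and a subset of the Table A2 features (ASM, CONTRAST, DISS, IDM, ENT, SAVG, and SHADE) using common Haralick-style definitions consistent with the notation above. Assumptions: gray levels are indexed from 0 (SAVG would be larger by 2 with 1-based indexing), the matrix is not symmetrized, and entropy uses the natural logarithm. Implementations such as GEE's `ee.Image.glcmTexture` may use different offsets, direction averaging, or indexing, so these values are not guaranteed to match those used in the study. The function names are illustrative.

```python
import math
from collections import Counter


def glcm(image, levels, dx=1, dy=0):
    """Normalized gray level co-occurrence matrix for one offset (dx, dy)."""
    counts = Counter()
    rows, cols = len(image), len(image[0])
    for r in range(rows):
        for c in range(cols):
            r2, c2 = r + dy, c + dx
            if 0 <= r2 < rows and 0 <= c2 < cols:
                counts[(image[r][c], image[r2][c2])] += 1
    total = sum(counts.values())
    return [[counts[(i, j)] / total for j in range(levels)] for i in range(levels)]


def texture_features(P):
    """A subset of the Table A2 features from a normalized GLCM P(i, j)."""
    n = len(P)
    cells = [(i, j, P[i][j]) for i in range(n) for j in range(n)]
    mu_x = sum(i * p for i, _, p in cells)  # mean of the row marginal
    mu_y = sum(j * p for _, j, p in cells)  # mean of the column marginal
    return {
        "ASM": sum(p * p for _, _, p in cells),
        "CONTRAST": sum((i - j) ** 2 * p for i, j, p in cells),
        "DISS": sum(abs(i - j) * p for i, j, p in cells),
        "IDM": sum(p / (1 + (i - j) ** 2) for i, j, p in cells),
        "ENT": -sum(p * math.log(p) for _, _, p in cells if p > 0),
        "SAVG": sum((i + j) * p for i, j, p in cells),  # = sum_k k * p_{x+y}(k)
        "SHADE": sum((i + j - mu_x - mu_y) ** 3 * p for i, j, p in cells),
    }


img = [[0, 0, 1],
       [0, 1, 1],
       [2, 2, 2]]
feats = texture_features(glcm(img, levels=3))
print(feats)
```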

References

- Bokwa, A. Natural hazard. In Encyclopedia of Natural Hazards; Bobrowsky, P.T., Ed.; Springer: Dordrecht, The Netherlands, 2013; pp. 711–718. [Google Scholar] [CrossRef]

- Gibson, R.; Danaher, T.; Hehir, W.; Collins, L. A remote sensing approach to mapping fire severity in south-eastern Australia using sentinel 2 and random forest. Remote Sens. Environ. 2020, 240, 111702. [Google Scholar] [CrossRef]

- Collins, L.; McCarthy, G.; Mellor, A.; Newell, G.; Smith, L. Training data requirements for fire severity mapping using Landsat imagery and random forest. Remote Sens. Environ. 2020, 245, 111839. [Google Scholar] [CrossRef]

- Chuvieco, E.; Aguado, I.; Salas, J.; Garcia, M.; Yebra, M.; Oliva, P. Satellite Remote Sensing Contributions to Wildland Fire Science and Management. Curr. For. Rep. 2020, 6, 81–96. [Google Scholar] [CrossRef]

- Srivastava, S.; Kumar, A.S. Implications of intense biomass burning over Uttarakhand in April–May 2016. Nat. Hazards 2020, 101, 1–17. [Google Scholar] [CrossRef]

- Seydi, S.T.; Hasanlou, M. A new land-cover match-based change detection for hyperspectral imagery. Eur. J. Remote Sens. 2017, 50, 517–533. [Google Scholar] [CrossRef]

- Izadi, M.; Mohammadzadeh, A.; Haghighattalab, A. A new neuro-fuzzy approach for post-earthquake road damage assessment using GA and SVM classification from QuickBird satellite images. J. Indian Soc. Remote Sens. 2017, 45, 965–977. [Google Scholar] [CrossRef]

- Ghannadi, M.A.; SaadatSeresht, M.; Izadi, M.; Alebooye, S. Optimal texture image reconstruction method for improvement of SAR image matching. IET RadarSonar Navig. 2020, 14, 1229–1235. [Google Scholar] [CrossRef]

- Dragozi, E.; Gitas, I.Z.; Stavrakoudis, D.G.; Theocharis, J.B. Burned area mapping using support vector machines and the FuzCoC feature selection method on VHR IKONOS imagery. Remote Sens. 2014, 6, 12005–12036. [Google Scholar] [CrossRef]

- Oliva, P.; Schroeder, W. Assessment of VIIRS 375 m active fire detection product for direct burned area mapping. Remote Sens. Environ. 2015, 160, 144–155. [Google Scholar] [CrossRef]

- Chen, W.; Moriya, K.; Sakai, T.; Koyama, L.; Cao, C. Mapping a burned forest area from Landsat TM data by multiple methods. Geomat. Nat. Hazards Risk 2016, 7, 384–402. [Google Scholar] [CrossRef]

- Hawbaker, T.J.; Vanderhoof, M.K.; Beal, Y.-J.; Takacs, J.D.; Schmidt, G.L.; Falgout, J.T.; Williams, B.; Fairaux, N.M.; Caldwell, M.K.; Picotte, J.J. Mapping burned areas using dense time-series of Landsat data. Remote Sens. Environ. 2017, 198, 504–522. [Google Scholar] [CrossRef]

- Pereira, A.A.; Pereira, J.; Libonati, R.; Oom, D.; Setzer, A.W.; Morelli, F.; Machado-Silva, F.; De Carvalho, L.M.T. Burned area mapping in the Brazilian Savanna using a one-class support vector machine trained by active fires. Remote Sens. 2017, 9, 1161. [Google Scholar] [CrossRef]

- Roteta, E.; Bastarrika, A.; Padilla, M.; Storm, T.; Chuvieco, E. Development of a Sentinel-2 burned area algorithm: Generation of a small fire database for sub-Saharan Africa. Remote Sens. Environ. 2019, 222, 1–17. [Google Scholar] [CrossRef]

- Ba, R.; Song, W.; Li, X.; Xie, Z.; Lo, S. Integration of multiple spectral indices and a neural network for burned area mapping based on MODIS data. Remote Sens. 2019, 11, 326. [Google Scholar] [CrossRef]

- Woźniak, E.; Aleksandrowicz, S. Self-Adjusting Thresholding for Burnt Area Detection Based on Optical Images. Remote Sens. 2019, 11, 2669. [Google Scholar] [CrossRef]

- Otón, G.; Ramo, R.; Lizundia-Loiola, J.; Chuvieco, E. Global Detection of Long-Term (1982–2017) Burned Area with AVHRR-LTDR Data. Remote Sens. 2019, 11, 2079. [Google Scholar] [CrossRef]

- Liu, M.; Popescu, S.; Malambo, L. Feasibility of Burned Area Mapping Based on ICESAT− 2 Photon Counting Data. Remote Sens. 2020, 12, 24. [Google Scholar] [CrossRef]

- Gorelick, N.; Hancher, M.; Dixon, M.; Ilyushchenko, S.; Thau, D.; Moore, R. Google Earth Engine: Planetary-scale geospatial analysis for everyone. Remote Sens. Environ. 2017, 202, 18–27. [Google Scholar] [CrossRef]

- Amani, M.; Ghorbanian, A.; Ahmadi, S.A.; Kakooei, M.; Moghimi, A.; Mirmazloumi, S.M.; Moghaddam, S.H.A.; Mahdavi, S.; Ghahremanloo, M.; Parsian, S. Google earth engine cloud computing platform for remote sensing big data applications: A comprehensive review. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2020. [Google Scholar] [CrossRef]

- Long, T.; Zhang, Z.; He, G.; Jiao, W.; Tang, C.; Wu, B.; Zhang, X.; Wang, G.; Yin, R. 30 m Resolution Global Annual Burned Area Mapping Based on Landsat Images and Google Earth Engine. Remote Sens. 2019, 11, 489. [Google Scholar] [CrossRef]

- Zhang, Z.; He, G.; Long, T.; Tang, C.; Wei, M.; Wang, W.; Wang, G. Spatial Pattern Analysis of Global Burned Area in 2005 Based on Landsat Satellite Images. In Proceedings of IOP Conference Series: Earth and Environmental Science; IOP Publishing: Philadelphia, PA, USA, 2020; p. 012078. [Google Scholar]

- Barboza Castillo, E.; Turpo Cayo, E.Y.; de Almeida, C.M.; Salas López, R.; Rojas Briceño, N.B.; Silva López, J.O.; Barrena Gurbillón, M.Á.; Oliva, M.; Espinoza-Villar, R. Monitoring Wildfires in the Northeastern Peruvian Amazon Using Landsat-8 and Sentinel-2 Imagery in the GEE Platform. ISPRS Int. J. Geo-Inf. 2020, 9, 564. [Google Scholar] [CrossRef]

- Ehsani, M.R.; Arevalo, J.; Risanto, C.B.; Javadian, M.; Devine, C.J.; Arabzadeh, A.; Venegas-Quiñones, H.L.; Dell’Oro, A.P.; Behrangi, A. 2019–2020 Australia Fire and Its Relationship to Hydroclimatological and Vegetation Variabilities. Water 2020, 12, 3067. [Google Scholar] [CrossRef]

- Available online: http://www.bom.gov.au/climate/ (accessed on 22 December 2020).

- Drusch, M.; Del Bello, U.; Carlier, S.; Colin, O.; Fernandez, V.; Gascon, F.; Hoersch, B.; Isola, C.; Laberinti, P.; Martimort, P. Sentinel-2: ESA’s optical high-resolution mission for GMES operational services. Remote Sens. Environ. 2012, 120, 25–36. [Google Scholar] [CrossRef]

- Available online: https://www.ktnv.com/ (accessed on 13 November 2020).

- Chen, Y.; Song, L.; Liu, Y.; Yang, L.; Li, D. A Review of the Artificial Neural Network Models for Water Quality Prediction. Appl. Sci. 2020, 10, 5776. [Google Scholar] [CrossRef]

- Sulla-Menashe, D.; Friedl, M.A. User Guide to Collection 6 MODIS Land Cover (MCD12Q1 and MCD12C1) Product; USGS: Reston, VA, USA, 2018; pp. 1–18.

- Available online: https://www.usgs.gov/core-science-systems/nli/landsat/landsat-burned-area (accessed on 13 November 2020).

- Xue, J.; Su, B. Significant remote sensing vegetation indices: A review of developments and applications. J. Sens. 2017, 2017, 1353691. [Google Scholar] [CrossRef]

- Liu, S.; Zheng, Y.; Dalponte, M.; Tong, X. A novel fire index-based burned area change detection approach using Landsat-8 OLI data. Eur. J. Remote Sens. 2020, 53, 104–112. [Google Scholar] [CrossRef]

- Filipponi, F. BAIS2: Burned area index for Sentinel-2. Proceedings 2018, 2, 364. [Google Scholar] [CrossRef]

- Conners, R.W.; Trivedi, M.M.; Harlow, C.A. Segmentation of a high-resolution urban scene using texture operators. Comput. Vis. Graph. Image Process. 1984, 25, 273–310. [Google Scholar] [CrossRef]

- Mahdavi, S.; Salehi, B.; Amani, M.; Granger, J.; Brisco, B.; Huang, W. A dynamic classification scheme for mapping spectrally similar classes: Application to wetland classification. Int. J. Appl. Earth Obs. Geoinf. 2019, 83, 101914. [Google Scholar] [CrossRef]

- Heidari, A.A.; Mirjalili, S.; Faris, H.; Aljarah, I.; Mafarja, M.; Chen, H. Harris hawks optimization: Algorithm and applications. Future Gener. Comput. Syst. 2019, 97, 849–872. [Google Scholar] [CrossRef]

- Houssein, E.H.; Hosney, M.E.; Oliva, D.; Mohamed, W.M.; Hassaballah, M. A novel hybrid Harris hawks optimization and support vector machines for drug design and discovery. Comput. Chem. Eng. 2020, 133, 106656. [Google Scholar] [CrossRef]

- Amani, M.; Mahdavi, S.; Berard, O. Supervised wetland classification using high spatial resolution optical, SAR, and LiDAR imagery. J. Appl. Remote Sens. 2020, 14, 024502. [Google Scholar] [CrossRef]

- Mahdavi, S.; Salehi, B.; Amani, M.; Granger, J.E.; Brisco, B.; Huang, W.; Hanson, A. Object-based classification of wetlands in Newfoundland and Labrador using multi-temporal PolSAR data. Can. J. Remote Sens. 2017, 43, 432–450. [Google Scholar] [CrossRef]

- Ghorbanian, A.; Kakooei, M.; Amani, M.; Mahdavi, S.; Mohammadzadeh, A.; Hasanlou, M. Improved land cover map of Iran using Sentinel imagery within Google Earth Engine and a novel automatic workflow for land cover classification using migrated training samples. ISPRS J. Photogramm. Remote Sens. 2020, 167, 276–288. [Google Scholar] [CrossRef]

- Amani, M.; Mahdavi, S.; Afshar, M.; Brisco, B.; Huang, W.; Mohammad Javad Mirzadeh, S.; White, L.; Banks, S.; Montgomery, J.; Hopkinson, C. Canadian wetland inventory using Google Earth engine: The first map and preliminary results. Remote Sens. 2019, 11, 842. [Google Scholar] [CrossRef]

- Liaw, A.; Wiener, M. Classification and regression by randomForest. R News 2002, 2, 18–22. [Google Scholar]

- Amani, M.; Salehi, B.; Mahdavi, S.; Granger, J.E.; Brisco, B.; Hanson, A. Wetland classification using multi-source and multi-temporal optical remote sensing data in Newfoundland and Labrador, Canada. Can. J. Remote Sens. 2017, 43, 360–373. [Google Scholar] [CrossRef]

- Fu, K.-S. Applications of Pattern Recognition; CRC Press: Boca Raton, FL, USA, 2019. [Google Scholar]

- Amani, M.; Salehi, B.; Mahdavi, S.; Brisco, B.; Shehata, M. A Multiple Classifier System to improve mapping complex land covers: A case study of wetland classification using SAR data in Newfoundland, Canada. Int. J. Remote Sens. 2018, 39, 7370–7383. [Google Scholar] [CrossRef]

- Vapnik, V. The Nature of Statistical Learning Theory; Springer Science & Business Media: Berlin/Heidelberg, Germany, 2013. [Google Scholar]

- Yekkehkhany, B.; Safari, A.; Homayouni, S.; Hasanlou, M. A comparison study of different kernel functions for SVM-based classification of multi-temporal polarimetry SAR data. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2014, 40, 281. [Google Scholar] [CrossRef]

- Seydi, S.T.; Hasanlou, M.; Amani, M. A New End-to-End Multi-Dimensional CNN Framework for Land Cover/Land Use Change Detection in Multi-Source Remote Sensing Datasets. Remote Sens. 2020, 12, 2010. [Google Scholar] [CrossRef]

- Wang, Q.; Yuan, Z.; Du, Q.; Li, X. GETNET: A general end-to-end 2-D CNN framework for hyperspectral image change detection. IEEE Trans. Geosci. Remote Sens. 2018, 57, 3–13. [Google Scholar] [CrossRef]

- Feyisa, G.L.; Meilby, H.; Fensholt, R.; Proud, S.R. Automated Water Extraction Index: A new technique for surface water mapping using Landsat imagery. Remote Sens. Environ. 2014, 140, 23–35. [Google Scholar] [CrossRef]

- Bastarrika, A.; Chuvieco, E.; Martin, M.P. Mapping burned areas from Landsat TM/ETM+ data with a two-phase algorithm: Balancing omission and commission errors. Remote Sens. Environ. 2011, 115, 1003–1012. [Google Scholar] [CrossRef]

- Fernández-Manso, A.; Quintano, C. A Synergetic Approach to Burned Area Mapping Using Maximum Entropy Modeling Trained with Hyperspectral Data and VIIRS Hotspots. Remote Sens. 2020, 12, 858.

- Shin, J.-i.; Seo, W.-w.; Kim, T.; Park, J.; Woo, C.-s. Using UAV multispectral images for classification of forest burn severity—A case study of the 2019 Gangneung forest fire. Forests 2019, 10, 1025.

- Fraser, R.H.; Van der Sluijs, J.; Hall, R.J. Calibrating satellite-based indices of burn severity from UAV-derived metrics of a burned boreal forest in NWT, Canada. Remote Sens. 2017, 9, 279.

- Calin, M.A.; Parasca, S.V.; Savastru, R.; Manea, D. Characterization of burns using hyperspectral imaging technique–A preliminary study. Burns 2015, 41, 118–124.

- Schepers, L.; Haest, B.; Veraverbeke, S.; Spanhove, T.; Vanden Borre, J.; Goossens, R. Burned area detection and burn severity assessment of a heathland fire in Belgium using airborne imaging spectroscopy (APEX). Remote Sens. 2014, 6, 1803–1826.

- Yin, C.; He, B.; Yebra, M.; Quan, X.; Edwards, A.C.; Liu, X.; Liao, Z.; Luo, K. Burn Severity Estimation in Northern Australia Tropical Savannas Using Radiative Transfer Model and Sentinel-2 Data. In Proceedings of the IGARSS 2019–2019 IEEE International Geoscience and Remote Sensing Symposium, Yokohama, Japan, 28 July–2 August 2019; pp. 6712–6715.

- Santana, N.C.; de Carvalho Júnior, O.A.; Gomes, R.A.T.; Guimarães, R.F. Burned-area detection in amazonian environments using standardized time series per pixel in MODIS data. Remote Sens. 2018, 10, 1904.

- Simon, M.; Plummer, S.; Fierens, F.; Hoelzemann, J.J.; Arino, O. Burnt area detection at global scale using ATSR-2: The GLOBSCAR products and their qualification. J. Geophys. Res. Atmos. 2004, 109.

- De Araújo, F.M.; Ferreira, L.G. Satellite-based automated burned area detection: A performance assessment of the MODIS MCD45A1 in the Brazilian savanna. Int. J. Appl. Earth Obs. Geoinf. 2015, 36, 94–102.

- Knopp, L.; Wieland, M.; Rättich, M.; Martinis, S. A Deep Learning Approach for Burned Area Segmentation with Sentinel-2 Data. Remote Sens. 2020, 12, 2422.

- Pinto, M.M.; Libonati, R.; Trigo, R.M.; Trigo, I.F.; DaCamara, C.C. A deep learning approach for mapping and dating burned areas using temporal sequences of satellite images. ISPRS J. Photogramm. Remote Sens. 2020, 160, 260–274.

- Ban, Y.; Zhang, P.; Nascetti, A.; Bevington, A.R.; Wulder, M.A. Near Real-Time Wildfire Progression Monitoring with Sentinel-1 SAR Time Series and Deep Learning. Sci. Rep. 2020, 10, 1–15.

- Karnieli, A.; Kaufman, Y.J.; Remer, L.; Wald, A. AFRI—Aerosol free vegetation index. Remote Sens. Environ. 2001, 77, 10–21.

- Gitelson, A.A.; Chivkunova, O.B.; Merzlyak, M.N. Nondestructive estimation of anthocyanins and chlorophylls in anthocyanic leaves. Am. J. Bot. 2009, 96, 1861–1868.

- Kaufman, Y.J.; Tanre, D. Atmospherically resistant vegetation index (ARVI) for EOS-MODIS. IEEE Trans. Geosci. Remote Sens. 1992, 30, 261–270.

- Baret, F.; Guyot, G. Potentials and limits of vegetation indices for LAI and APAR assessment. Remote Sens. Environ. 1991, 35, 161–173.

- Ashburn, P. The vegetative index number and crop identification. In Proceedings of the Technical Sessions of the LACIE Symposium, Houston, TX, USA, 23–26 October 1978; pp. 843–855.

- Yang, C.; Everitt, J.H.; Bradford, J.M. Airborne hyperspectral imagery and linear spectral unmixing for mapping variation in crop yield. Precis. Agric. 2007, 8, 279–296.

- Chivkunova, O.B.; Solovchenko, A.E.; Sokolova, S.; Merzlyak, M.N.; Reshetnikova, I.; Gitelson, A.A. Reflectance spectral features and detection of superficial scald–induced browning in storing apple fruit. Russ. J. Phytopathol. 2001, 2, 73–77.

- Hancock, D.W.; Dougherty, C.T. Relationships between blue- and red-based vegetation indices and leaf area and yield of alfalfa. Crop Sci. 2007, 47, 2547–2556.

- Pouget, M.; Madeira, J.; Le Floch, E.; Kamal, S. Caracteristiques spectrales des surfaces sableuses de la region cotiere nord-ouest de l’Egypte: Application aux donnees satellitaires SPOT. In Journee de Teledetection Caractérisation et Suivi des Milieux Terrestres en Régions Arides et Tropicales; ORSTOM: Paris, France, 1990; Volume 12, pp. 27–39.

- Jordan, C.F. Derivation of leaf-area index from quality of light on the forest floor. Ecology 1969, 50, 663–666.

- Kim, M.S.; Daughtry, C.; Chappelle, E.; McMurtrey, J.; Walthall, C. The use of high spectral resolution bands for estimating absorbed photosynthetically active radiation (APAR). In Proceedings of the 6th International Symposium on Physical Measurements and Signatures in Remote Sensing, Phoenix, AZ, USA, 1 January 1994; CNES: Paris, France, 1994.

- El-Shikha, D.M.; Barnes, E.M.; Clarke, T.R.; Hunsaker, D.J.; Haberland, J.A.; Pinter, P., Jr.; Waller, P.M.; Thompson, T.L. Remote sensing of cotton nitrogen status using the canopy chlorophyll content index (CCCI). Trans. ASABE 2008, 51, 73–82.

- Gitelson, A.A.; Merzlyak, M.N.; Chivkunova, O.B. Optical properties and nondestructive estimation of anthocyanin content in plant leaves. Photochem. Photobiol. 2001, 74, 38–45.

- Hunt, E.R., Jr.; Daughtry, C.; Eitel, J.U.; Long, D.S. Remote sensing leaf chlorophyll content using a visible band index. Agron. J. 2011, 103, 1090–1099.

- Datt, B. Remote sensing of water content in Eucalyptus leaves. Aust. J. Bot. 1999, 47, 909–923.

- Datt, B. Remote sensing of chlorophyll a, chlorophyll b, chlorophyll a + b, and total carotenoid content in eucalyptus leaves. Remote Sens. Environ. 1998, 66, 111–121.

- Richardson, A.J.; Wiegand, C. Distinguishing vegetation from soil background information. Photogramm. Eng. Remote Sens. 1977, 43, 1541–1552.

- Huete, A.; Didan, K.; Miura, T.; Rodriguez, E.P.; Gao, X.; Ferreira, L.G. Overview of the radiometric and biophysical performance of the MODIS vegetation indices. Remote Sens. Environ. 2002, 83, 195–213.

- Miura, T.; Yoshioka, H.; Fujiwara, K.; Yamamoto, H. Inter-comparison of ASTER and MODIS surface reflectance and vegetation index products for synergistic applications to natural resource monitoring. Sensors 2008, 8, 2480–2499.

- Gitelson, A.A.; Kaufman, Y.J.; Merzlyak, M.N. Use of a green channel in remote sensing of global vegetation from EOS-MODIS. Remote Sens. Environ. 1996, 58, 289–298.

- Wang, F.; Huang, J.; Chen, L. Development of a vegetation index for estimation of leaf area index based on simulation modeling. J. Plant Nutr. 2010, 33, 328–338.

- Tucker, C.J.; Elgin, J., Jr.; McMurtrey, J., III; Fan, C. Monitoring corn and soybean crop development with hand-held radiometer spectral data. Remote Sens. Environ. 1979, 8, 237–248.

- Pinty, B.; Verstraete, M. GEMI: A non-linear index to monitor global vegetation from satellites. Vegetatio 1992, 101, 15–20.

- Gobron, N.; Pinty, B.; Verstraete, M.M.; Widlowski, J.-L. Advanced vegetation indices optimized for up-coming sensors: Design, performance, and applications. IEEE Trans. Geosci. Remote Sens. 2000, 38, 2489–2505.

- Rondeaux, G.; Steven, M.; Baret, F. Optimization of soil-adjusted vegetation indices. Remote Sens. Environ. 1996, 55, 95–107.

- Wang, F.-M.; Huang, J.-F.; Tang, Y.-L.; Wang, X.-Z. New vegetation index and its application in estimating leaf area index of rice. Rice Sci. 2007, 14, 195–203.

- Glenn, E.P.; Nagler, P.L.; Huete, A.R. Vegetation index methods for estimating evapotranspiration by remote sensing. Surv. Geophys. 2010, 31, 531–555.

- Escadafal, R.; Belghith, A.; Ben-Moussa, H. Indices spectraux pour la dégradation des milieux naturels en Tunisie aride. In Proceedings of the 6ème Symp. Int. “Mesures Physiques et Signatures en Télédétection”, Val d’Isere, France, 17–21 January 1994; pp. 253–259.

- Crippen, R.E. Calculating the vegetation index faster. Remote Sens. Environ. 1990, 34, 71–73.

- Maccioni, A.; Agati, G.; Mazzinghi, P. New vegetation indices for remote measurement of chlorophylls based on leaf directional reflectance spectra. J. Photochem. Photobiol. B Biol. 2001, 61, 52–61.

- Daughtry, C.; Walthall, C.; Kim, M.; De Colstoun, E.B.; McMurtrey, J., III. Estimating corn leaf chlorophyll concentration from leaf and canopy reflectance. Remote Sens. Environ. 2000, 74, 229–239.

- Haboudane, D.; Miller, J.R.; Pattey, E.; Zarco-Tejada, P.J.; Strachan, I.B. Hyperspectral vegetation indices and novel algorithms for predicting green LAI of crop canopies: Modeling and validation in the context of precision agriculture. Remote Sens. Environ. 2004, 90, 337–352.

- Eitel, J.; Long, D.; Gessler, P.; Smith, A. Using in-situ measurements to evaluate the new RapidEye™ satellite series for prediction of wheat nitrogen status. Int. J. Remote Sens. 2007, 28, 4183–4190.

- Misra, P.; Wheeler, S.G.; Oliver, R.E. Kauth-Thomas brightness and greenness axes. Contract NASA 1977, 23–46.

- Main, R.; Cho, M.A.; Mathieu, R.; O’Kennedy, M.M.; Ramoelo, A.; Koch, S. An investigation into robust spectral indices for leaf chlorophyll estimation. ISPRS J. Photogramm. Remote Sens. 2011, 66, 751–761.

- Qi, J.; Chehbouni, A.; Huete, A.R.; Kerr, Y.H.; Sorooshian, S. A modified soil adjusted vegetation index. Remote Sens. Environ. 1994, 48, 119–126.

- Chen, J.M. Evaluation of vegetation indices and a modified simple ratio for boreal applications. Can. J. Remote Sens. 1996, 22, 229–242.

- Chen, J.M.; Cihlar, J. Retrieving leaf area index of boreal conifer forests using Landsat TM images. Remote Sens. Environ. 1996, 55, 153–162.

- Key, C.; Benson, N. Landscape Assessment: Ground Measure of Severity, the Composite Burn Index; and Remote Sensing of Severity, the Normalized Burn Ratio; FIREMON: Fire Effects Monitoring and Inventory System; USDA Forest Service, Rocky Mountain Research Station: Fort Collins, CO, USA, 2005; Volume 2004.

- Zarco-Tejada, P.J.; Miller, J.R.; Noland, T.L.; Mohammed, G.H.; Sampson, P.H. Scaling-up and model inversion methods with narrowband optical indices for chlorophyll content estimation in closed forest canopies with hyperspectral data. IEEE Trans. Geosci. Remote Sens. 2001, 39, 1491–1507.

- Klemas, V.; Smart, R. The Influence of Soil Salinity, Growth Form, and Leaf Moisture on the Spectral Radiance of. Photogramm. Eng. Remote Sens. 1983, 49, 77–83.

- Barnes, E.; Clarke, T.; Richards, S.; Colaizzi, P.; Haberland, J.; Kostrzewski, M.; Waller, P.; Choi, C.; Riley, E.; Thompson, T. Coincident detection of crop water stress, nitrogen status and canopy density using ground based multispectral data. In Proceedings of the Fifth International Conference on Precision Agriculture, Bloomington, MN, USA, 16–19 July 2000.

- Dehni, A.; Lounis, M. Remote sensing techniques for salt affected soil mapping: Application to the Oran region of Algeria. Procedia Eng. 2012, 33, 188–198.

- Tucker, C.J. Red and photographic infrared linear combinations for monitoring vegetation. Remote Sens. Environ. 1979, 8, 127–150.

- Haboudane, D.; Miller, J.R.; Tremblay, N.; Zarco-Tejada, P.J.; Dextraze, L. Integrated narrow-band vegetation indices for prediction of crop chlorophyll content for application to precision agriculture. Remote Sens. Environ. 2002, 81, 416–426.

- Metternicht, G. Vegetation indices derived from high-resolution airborne videography for precision crop management. Int. J. Remote Sens. 2003, 24, 2855–2877.

- Broge, N.H.; Leblanc, E. Comparing prediction power and stability of broadband and hyperspectral vegetation indices for estimation of green leaf area index and canopy chlorophyll density. Remote Sens. Environ. 2001, 76, 156–172.

- Herrmann, I.; Pimstein, A.; Karnieli, A.; Cohen, Y.; Alchanatis, V.; Bonfil, D. LAI assessment of wheat and potato crops by VENμS and Sentinel-2 bands. Remote Sens. Environ. 2011, 115, 2141–2151.

- Clevers, J.; De Jong, S.; Epema, G.; Van Der Meer, F.; Bakker, W.; Skidmore, A.; Scholte, K. Derivation of the red edge index using the MERIS standard band setting. Int. J. Remote Sens. 2002, 23, 3169–3184.

- Huete, A.R. A soil-adjusted vegetation index (SAVI). Remote Sens. Environ. 1988, 25, 295–309.

- Penuelas, J.; Baret, F.; Filella, I. Semi-empirical indices to assess carotenoids/chlorophyll a ratio from leaf spectral reflectance. Photosynthetica 1995, 31, 221–230.

- Fensholt, R.; Sandholt, I. Derivation of a shortwave infrared water stress index from MODIS near- and shortwave infrared data in a semiarid environment. Remote Sens. Environ. 2003, 87, 111–121.

- Lymburner, L.; Beggs, P.J.; Jacobson, C.R. Estimation of canopy-average surface-specific leaf area using Landsat TM data. Photogramm. Eng. Remote Sens. 2000, 66, 183–192.

- Haboudane, D.; Tremblay, N.; Miller, J.R.; Vigneault, P. Remote estimation of crop chlorophyll content using spectral indices derived from hyperspectral data. IEEE Trans. Geosci. Remote Sens. 2008, 46, 423–437.

- Rouse, J.; Haas, R.; Schell, J.; Deering, D. Monitoring vegetation systems in the great plains with ERTS. In Proceedings of the Third Earth Resources Technology Satellite—1 Symposium, Washington, DC, USA, 10–14 December 1973; pp. 309–317.