Machine Learning Classification of Mediterranean Forest Habitats in Google Earth Engine Based on Seasonal Sentinel-2 Time-Series and Input Image Composition Optimisation

Abstract

1. Introduction

- To test the potential of the Google Earth Engine (GEE) platform and Sentinel-2 data for classifying forest habitats in a protected national park that is representative of the Mediterranean region and includes notable Natura 2000 sites, performing the whole workflow within the GEE Code Editor;

- To test how different input variables available in GEE, and their combinations (e.g., input image composites, spectral bands, and vegetation indices), affect classification performance;

- To compare the performance of different machine learning classification algorithms in terms of the classification accuracy obtained.

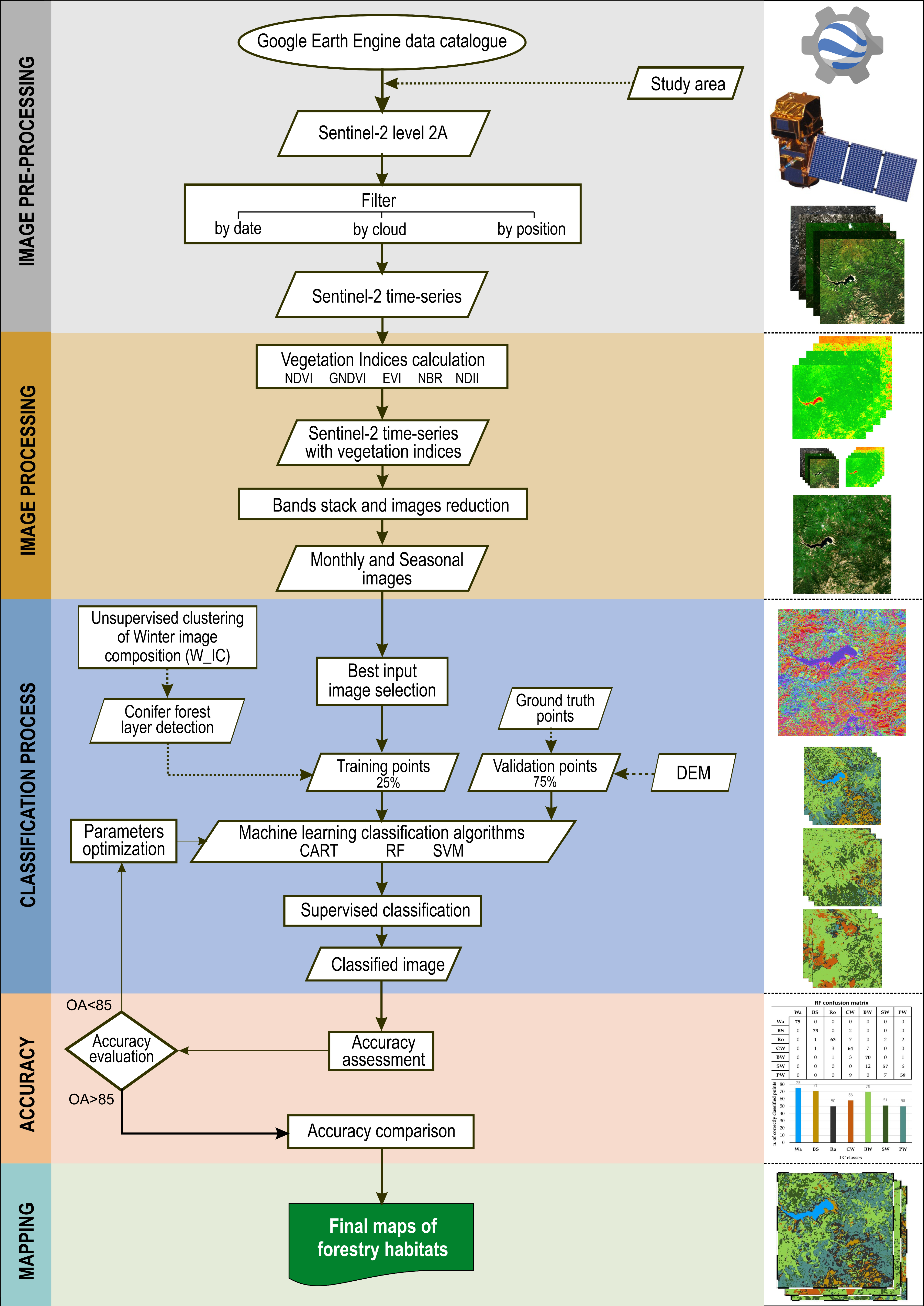

2. Materials and Methods

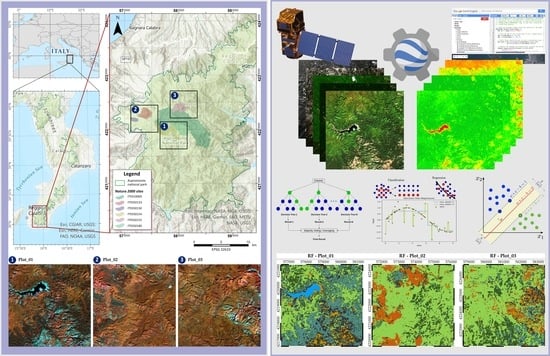

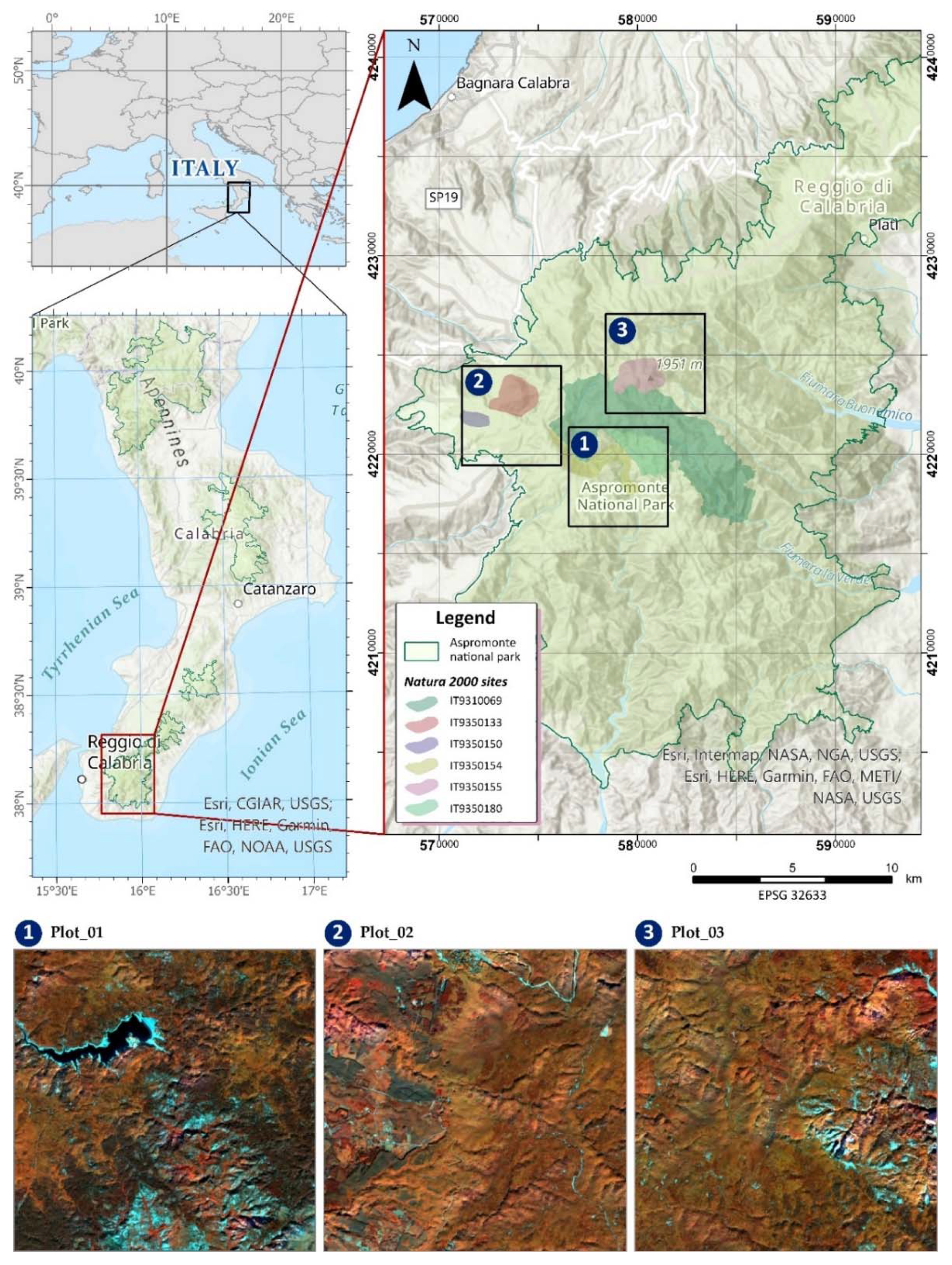

2.1. Study Area

2.2. Image Pre-Processing

2.2.1. Satellite Data Selection

2.2.2. Image Filtering and Time-Series Extraction

2.3. Image Processing

2.3.1. Vegetation Indices

2.3.2. Image Reduction

2.4. Classification

2.4.1. Unsupervised Clustering

2.4.2. Determination of Training and Validation Points

2.4.3. Machine Learning Classification Algorithms

2.4.4. Choice of the Best Input Image for Classification

2.5. Accuracy Assessment

3. Results

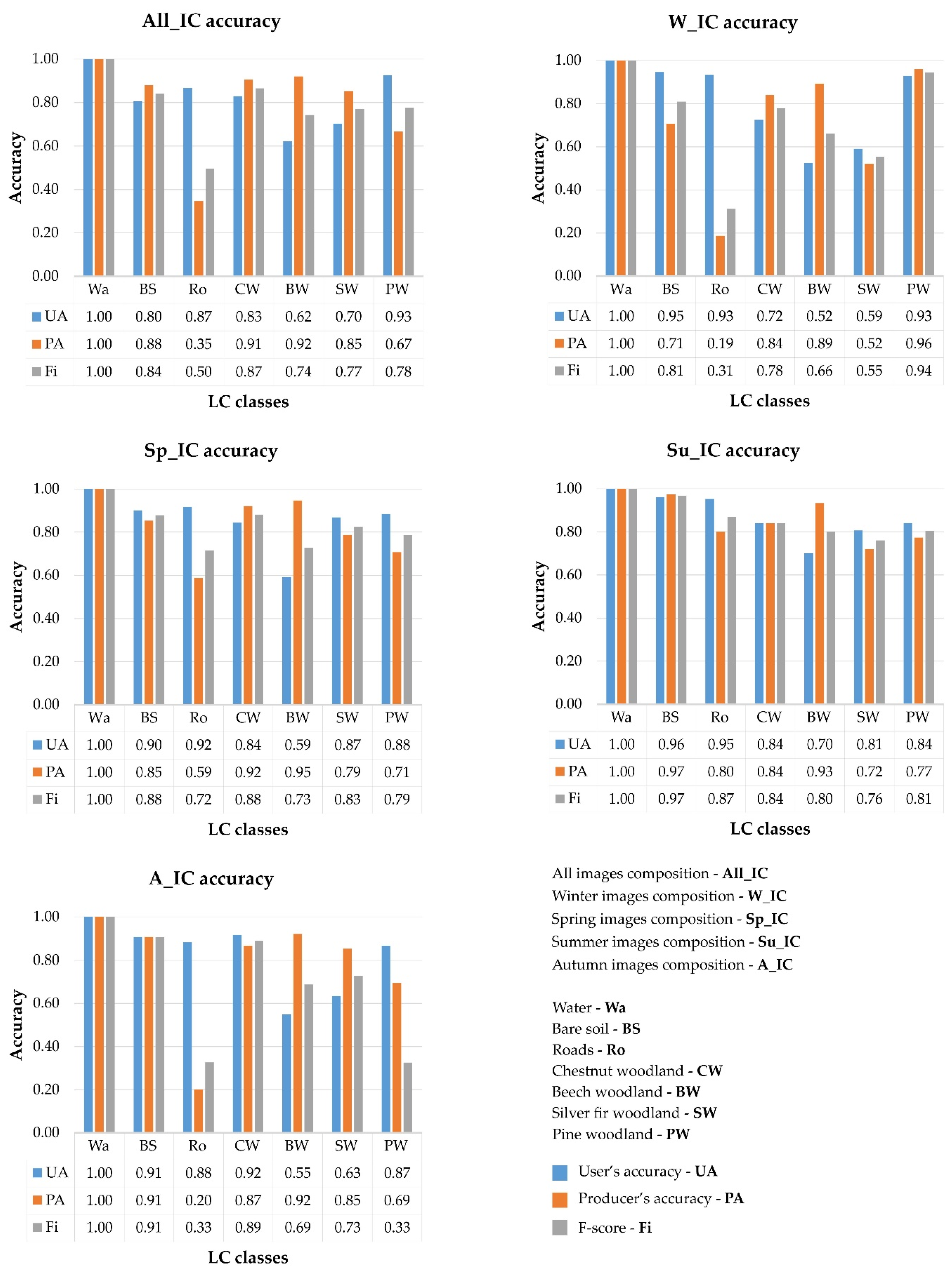

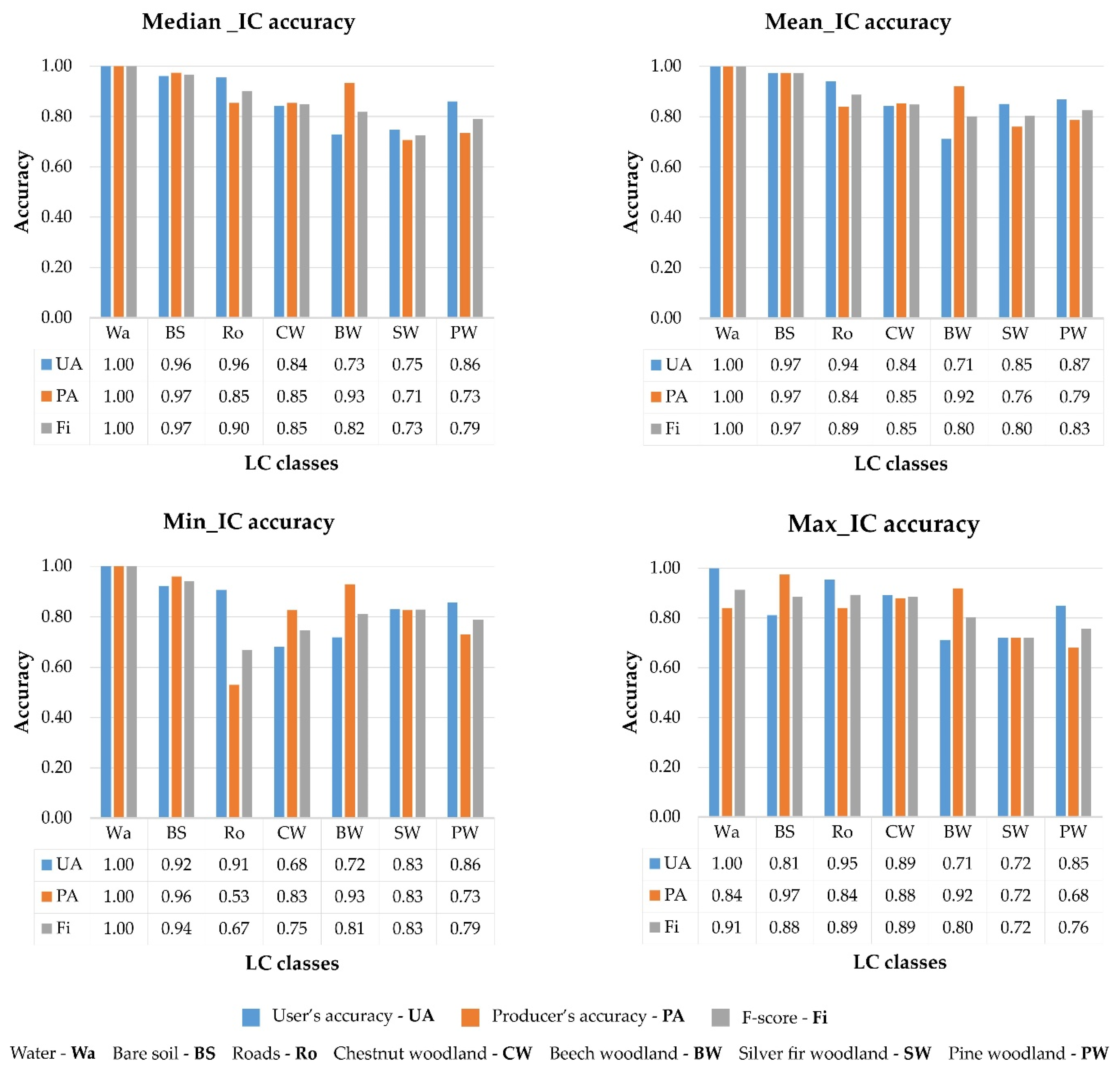

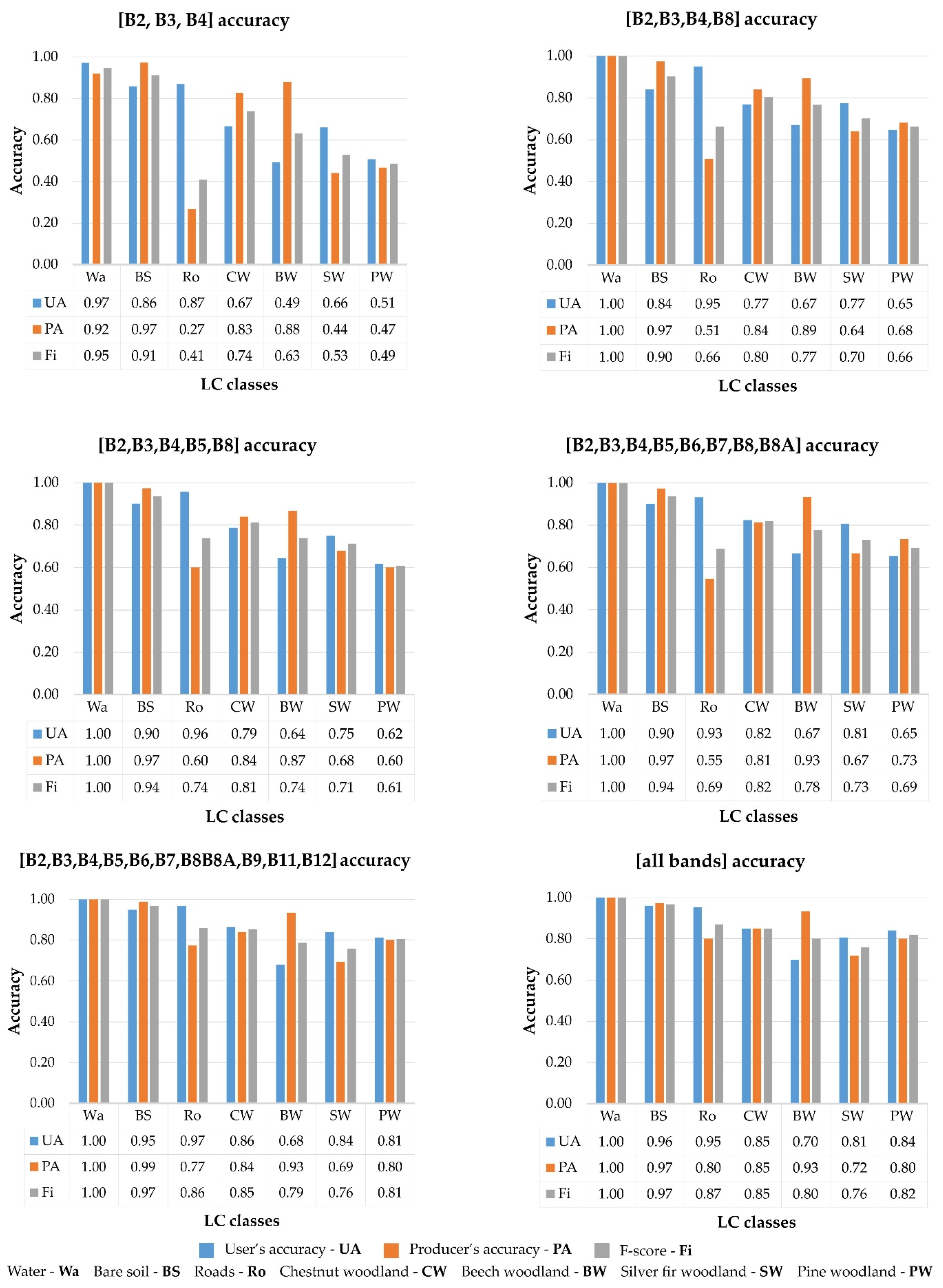

3.1. Best Input Image Composite (IC)

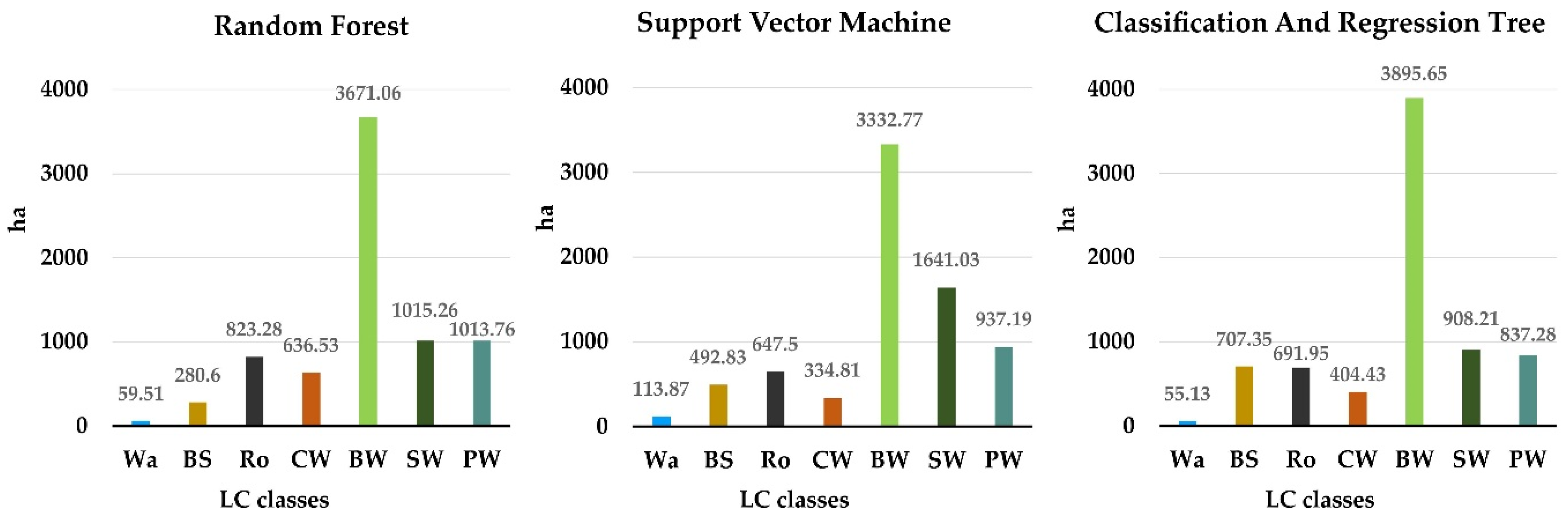

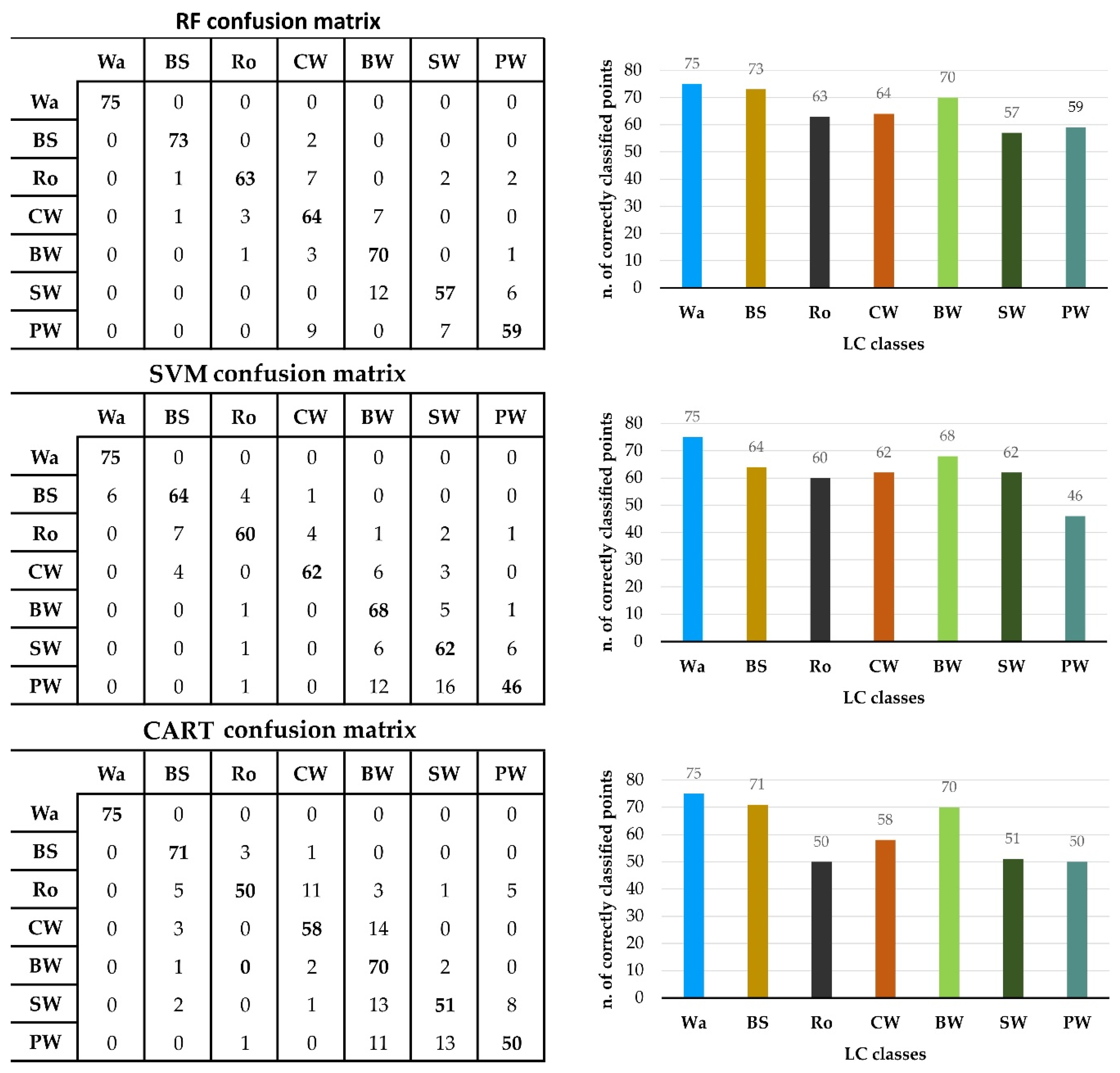

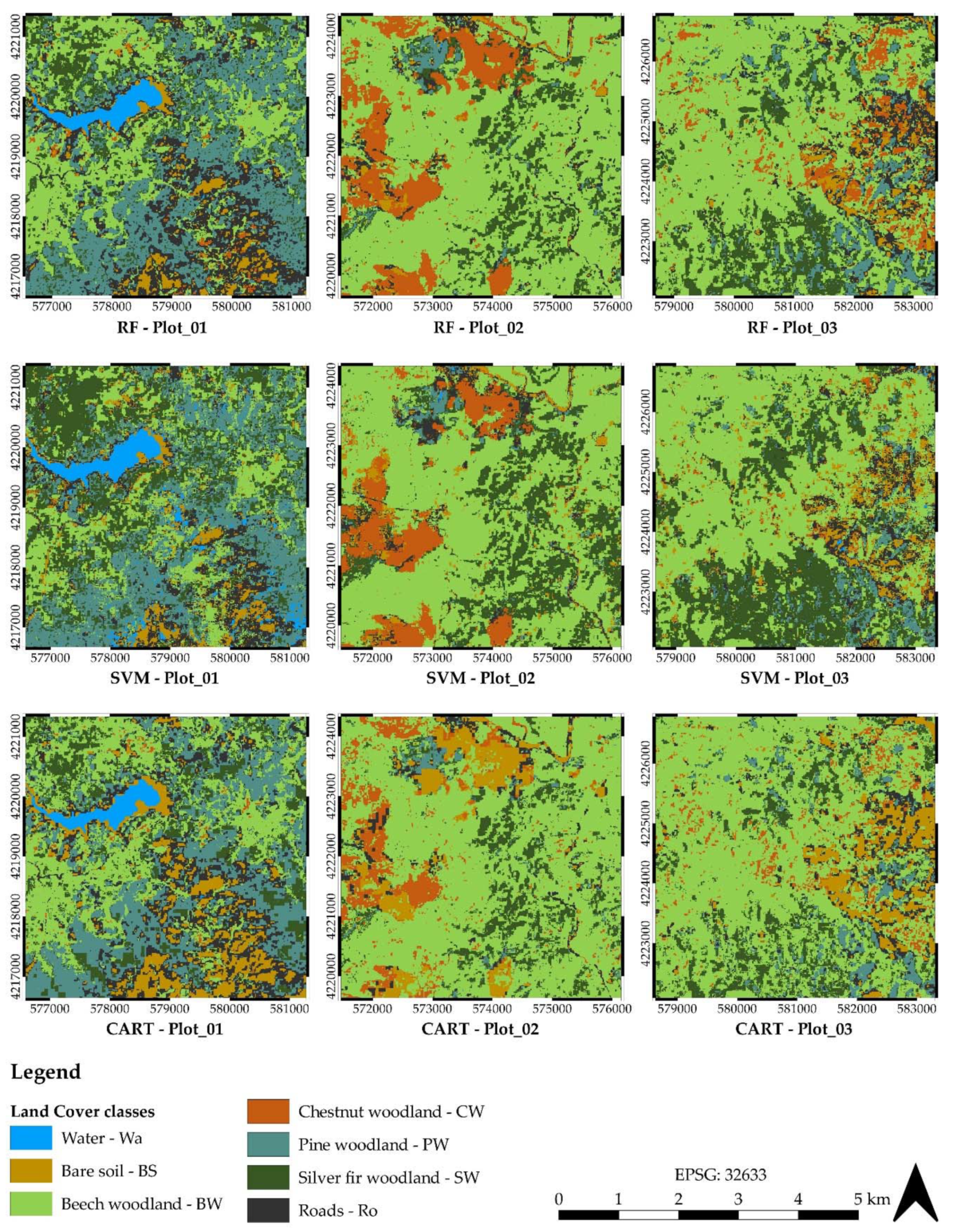

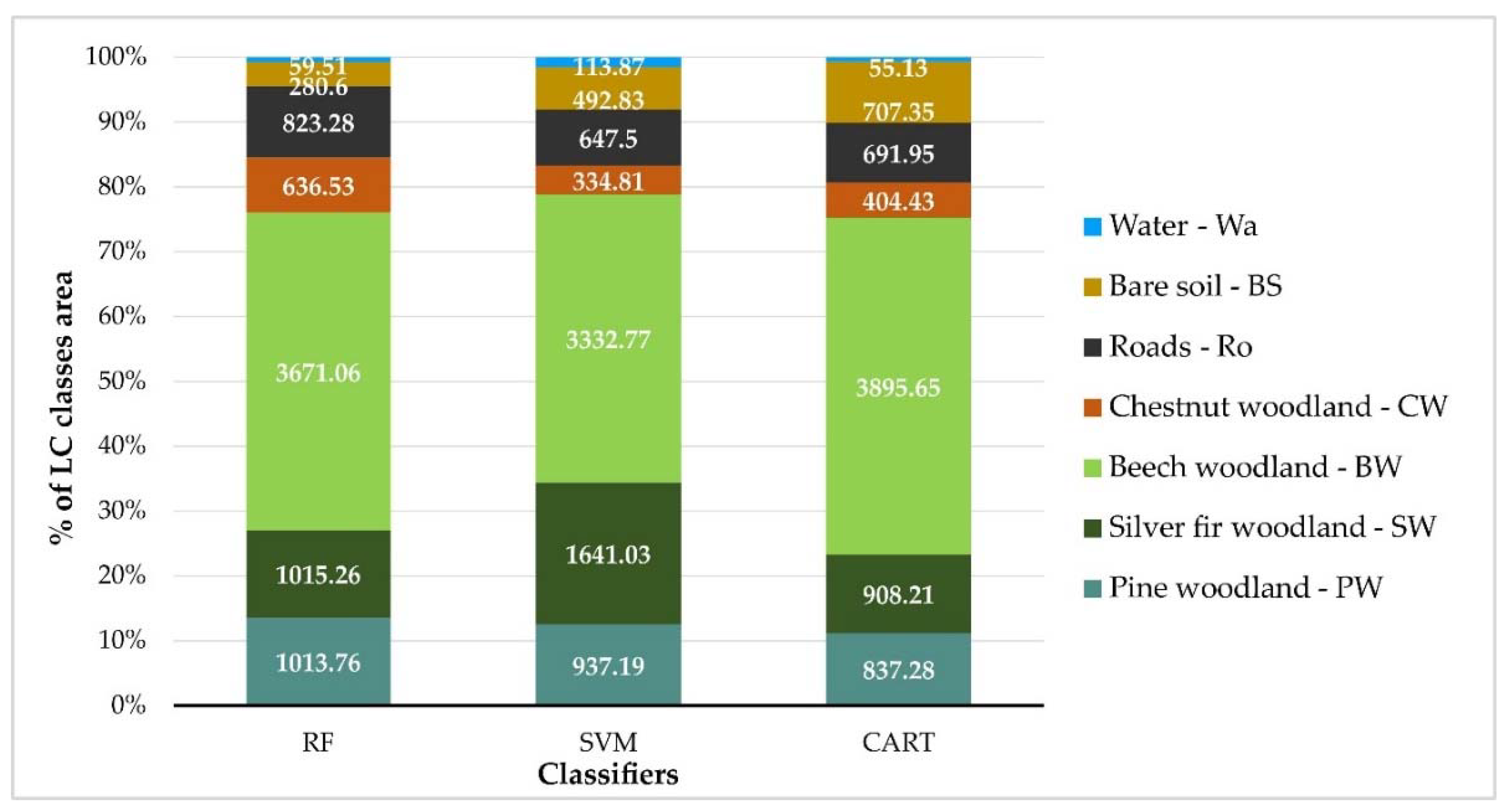

3.2. Classification Algorithms

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Appendix A

References

1. Díaz Varela, R.A.; Ramil Rego, P.; Calvo Iglesias, S.; Muñoz Sobrino, C. Automatic habitat classification methods based on satellite images: A practical assessment in the NW Iberia coastal mountains. Environ. Monit. Assess. 2008, 144, 229–250.

2. European Commission. Commission Note on Establishment Conservation Measures for Natura 2000 Sites. Doc. Hab. 13-04/05. 2013. Available online: https://ec.europa.eu/environment/nature/natura2000/management/docs/commission_note/comNote conservation measures_EN.pdf (accessed on 7 January 2021).

3. Di Fazio, S.; Modica, G.; Zoccali, P. Evolution Trends of Land Use/Land Cover in a Mediterranean Forest Landscape in Italy. In Proceedings of the Computational Science and Its Applications-ICCSA 2011, Part I, Lecture Notes in Computer Science, Santander, Spain, 20–23 June 2011; pp. 284–299.

4. Modica, G.; Merlino, A.; Solano, F.; Mercurio, R. An index for the assessment of degraded Mediterranean forest ecosystems. For. Syst. 2015, 24, e037.

5. Modica, G.; Praticò, S.; Di Fazio, S. Abandonment of traditional terraced landscape: A change detection approach (a case study in Costa Viola, Calabria, Italy). Land Degrad. Dev. 2017, 28, 2608–2622.

6. Gómez, C.; White, J.C.; Wulder, M.A. Optical remotely sensed time series data for land cover classification: A review. ISPRS J. Photogramm. Remote Sens. 2016, 116, 55–72.

7. Modica, G.; Vizzari, M.; Pollino, M.; Fichera, C.R.; Zoccali, P.; Di Fazio, S. Spatio-temporal analysis of the urban–rural gradient structure: An application in a Mediterranean mountainous landscape (Serra San Bruno, Italy). Earth Syst. Dyn. 2012, 3, 263–279.

8. Kerr, J.T.; Ostrovsky, M. From space to species: Ecological applications for remote sensing. Trends Ecol. Evol. 2003, 18, 299–305.

9. Modica, G.; Pollino, M.; Solano, F. Sentinel-2 Imagery for Mapping Cork Oak (Quercus suber L.) Distribution in Calabria (Italy): Capabilities and Quantitative Estimation. In Proceedings of the International Symposium on New Metropolitan Perspectives, Reggio Calabria, Italy, 22–25 May 2018; pp. 60–67.

10. Lanucara, S.; Praticò, S.; Modica, G. Harmonization and Interoperable Sharing of Multi-Temporal Geospatial Data of Rural Landscapes. Available online: https://doi.org/10.1007/978-3-319-92099-3_7 (accessed on 7 January 2021).

11. De Luca, G.; Silva, J.M.N.; Cerasoli, S.; Araújo, J.; Campos, J.; Di Fazio, S.; Modica, G. Object-based land cover classification of cork oak woodlands using UAV imagery and Orfeo Toolbox. Remote Sens. 2019, 11, 1238.

12. Fraser, R.H.; Olthof, I.; Pouliot, D. Monitoring land cover change and ecological integrity in Canada’s national parks. Remote Sens. Environ. 2009, 113, 1397–1409.

13. Borre, J.V.; Paelinckx, D.; Mücher, C.A.; Kooistra, L.; Haest, B.; De Blust, G.; Schmidt, A.M. Integrating remote sensing in Natura 2000 habitat monitoring: Prospects on the way forward. J. Nat. Conserv. 2011, 19, 116–125.

14. Tamiminia, H.; Salehi, B.; Mahdianpari, M.; Quackenbush, L.; Adeli, S.; Brisco, B. Google Earth Engine for geo-big data applications: A meta-analysis and systematic review. ISPRS J. Photogramm. Remote Sens. 2020, 164, 152–170.

15. Steinhausen, M.J.; Wagner, P.D.; Narasimhan, B.; Waske, B. Combining Sentinel-1 and Sentinel-2 data for improved land use and land cover mapping of monsoon regions. Int. J. Appl. Earth Obs. Geoinf. 2018, 73, 595–604.

16. Cihlar, J. Land cover mapping of large areas from satellites: Status and research priorities. Int. J. Remote Sens. 2000, 21, 1093–1114.

17. Franklin, S.E.; Wulder, M.A. Remote sensing methods in medium spatial resolution satellite data land cover classification of large areas. Prog. Phys. Geogr. Earth Environ. 2002, 26, 173–205.

18. Chaves, M.E.D.; Picoli, M.C.A.; Sanches, I.D. Recent Applications of Landsat 8/OLI and Sentinel-2/MSI for Land Use and Land Cover Mapping: A Systematic Review. Remote Sens. 2020, 12, 3062.

19. Rogan, J.; Chen, D. Remote sensing technology for mapping and monitoring land-cover and land-use change. Prog. Plan. 2004, 61, 301–325.

20. Choudhury, A.M.; Marcheggiani, E.; Despini, F.; Costanzini, S.; Rossi, P.; Galli, A.; Teggi, S. Urban Tree Species Identification and Carbon Stock Mapping for Urban Green Planning and Management. Forests 2020, 11, 1226.

21. Nossin, J.J. A Review of: “Remote Sensing, theorie en toepassingen van landobservatie (Remote Sensing theory and applications of land observation)”. Edited by H. J. Buiten and J. G. P. W. Clevers. Series ‘Dynamiek, inrichting en beheer van landelijke gebieden’, part 2. (Wageningen: Pudoc Publ., 1990.) [Pp. 504] (312 figs, 38 tables, 22 colour plates, 10 supplements, glossary.). Int. J. Remote Sens. 1991, 12, 2173.

22. Zhang, Y.; Xiao, X.; Wu, X.; Zhou, S.; Zhang, G.; Qin, Y.; Dong, J. A global moderate resolution dataset of gross primary production of vegetation for 2000–2016. Sci. Data 2017, 4, 170165.

23. Zhou, H.; Wang, C.; Zhang, G.; Xue, H.; Wang, J.; Wan, H. Generating a Spatio-Temporal Complete 30 m Leaf Area Index from Field and Remote Sensing Data. Remote Sens. 2020, 12, 2394.

24. Zhang, Y.L.; Song, C.H.; Band, L.E.; Sun, G.; Li, J.X. Reanalysis of global terrestrial vegetation trends from MODIS products: Browning or greening? Remote Sens. Environ. 2017, 191, 145–155. Available online: https://linkinghub.elsevier.com/retrieve/pii/S0034425716304977 (accessed on 7 January 2021).

25. Bolton, D.K.; Gray, J.M.; Melaas, E.K.; Moon, M.; Eklundh, L.; Friedl, M.A. Continental-scale land surface phenology from harmonized Landsat 8 and Sentinel-2 imagery. Remote Sens. Environ. 2020, 240, 111685.

26. Hansen, M.C.; Potapov, P.V.; Moore, R.; Hancher, M.; Turubanova, S.A.; Tyukavina, A.; Thau, D.; Stehman, S.V.; Goetz, S.J.; Loveland, T.R.; et al. High-resolution global maps of 21st-century forest cover change. Science 2013, 342, 850–853.

27. Pekel, J.-F.; Cottam, A.; Gorelick, N.; Belward, A.S. High-resolution mapping of global surface water and its long-term changes. Nature 2016, 540, 418–422. Available online: http://www.nature.com/articles/nature20584 (accessed on 7 January 2021).

28. Li, J.; Roy, D.P. A Global Analysis of Sentinel-2A, Sentinel-2B and Landsat-8 Data Revisit Intervals and Implications for Terrestrial Monitoring. Remote Sens. 2017, 9, 902.

29. Weiers, S.; Bock, M.; Wissen, M.; Rossner, G. Mapping and indicator approaches for the assessment of habitats at different scales using remote sensing and GIS methods. Landsc. Urban Plan. 2004, 67, 43–65.

30. Cheţan, M.A.; Dornik, A.; Urdea, P. Analysis of recent changes in natural habitat types in the Apuseni Mountains (Romania), using multi-temporal Landsat satellite imagery (1986–2015). Appl. Geogr. 2018, 97, 161–175.

31. Bock, M.; Xofis, P.; Mitchley, J.; Rossner, G.; Wissen, M. Object-oriented methods for habitat mapping at multiple scales–Case studies from Northern Germany and Wye Downs, UK. J. Nat. Conserv. 2005, 13, 75–89.

32. Wang, F.; Xu, Y.J. Comparison of remote sensing change detection techniques for assessing hurricane damage to forests. Environ. Monit. Assess. 2009, 162, 311–326.

33. Pôças, I.; Cunha, M.; Pereira, L.S. Remote sensing based indicators of changes in a mountain rural landscape of Northeast Portugal. Appl. Geogr. 2011, 31, 871–880.

34. Pastick, N.J.; Wylie, B.K.; Wu, Z. Spatiotemporal analysis of Landsat-8 and Sentinel-2 data to support monitoring of dryland ecosystems. Remote Sens. 2018, 10, 791.

35. Vogelmann, J.E.; Xian, G.; Homer, C.G.; Tolk, B. Monitoring gradual ecosystem change using Landsat time series analyses: Case studies in selected forest and rangeland ecosystems. Remote Sens. Environ. 2012, 122, 92–105.

36. Simonetti, E.; Szantoi, Z.; Lupi, A.; Eva, H.D. First Results From the Phenology-Based Synthesis Classifier Using Landsat 8 Imagery. IEEE Geosci. Remote Sens. Lett. 2015, 12, 1496–1500.

37. Melaas, E.K.; Sulla-Menashe, D.; Gray, J.; Black, T.A.; Morin, T.H.; Richardson, A.D.; Friedl, M.A. Multisite analysis of land surface phenology in North American temperate and boreal deciduous forests from Landsat. Remote Sens. Environ. 2016, 186, 452–464.

38. Myneni, R.B.; Hall, F.G.; Sellers, P.J.; Marshak, A.L. Interpretation of spectral vegetation indexes. IEEE Trans. Geosci. Remote Sens. 1995, 33, 481–486.

39. Morisette, J.T.; Richardson, A.D.; Knapp, A.K.; Fisher, I.J.; Graham, A.E.; Abatzoglou, J.; Wilson, E.B.; Breshears, D.D.; Henebry, G.M.; Hanes, J.M.; et al. Tracking the rhythm of the seasons in the face of global change: Phenological research in the 21st century. Front. Ecol. Environ. 2009, 7, 253–260.

40. Solano, F.; Colonna, N.; Marani, M.; Pollino, M. Geospatial Analysis to Assess Natural Park Biomass Resources for Energy Uses in the Context of the Rome Metropolitan Area; Springer: Dordrecht, Netherlands, 2019; pp. 173–181. Available online: http://link.springer.com/10.1007/978-3-319-92099-3_21 (accessed on 7 January 2021).

41. Lu, D.; Weng, Q. A survey of image classification methods and techniques for improving classification performance. Int. J. Remote Sens. 2007, 28, 823–870.

42. Jönsson, P.; Cai, Z.; Melaas, E.; Friedl, M.A.; Eklundh, L. A Method for Robust Estimation of Vegetation Seasonality from Landsat and Sentinel-2 Time Series Data. Remote Sens. 2018, 10, 635.

43. Thompson, S.D.; Nelson, T.A.; White, J.C.; Wulder, M.A. Mapping Dominant Tree Species over Large Forested Areas Using Landsat Best-Available-Pixel Image Composites. Can. J. Remote Sens. 2015, 41, 203–218.

44. Clark, M.L.; Aide, T.M.; Grau, H.R.; Riner, G. A scalable approach to mapping annual land cover at 250 m using MODIS time series data: A case study in the Dry Chaco ecoregion of South America. Remote Sens. Environ. 2010, 114, 2816–2832.

45. Wakulińska, M.; Marcinkowska-Ochtyra, A. Multi-Temporal Sentinel-2 Data in Classification of Mountain Vegetation. Remote Sens. 2020, 12, 2696.

46. Lehmann, E.A.; Wallace, J.F.; Caccetta, P.A.; Furby, S.L.; Zdunic, K. Forest cover trends from time series Landsat data for the Australian continent. Int. J. Appl. Earth Obs. Geoinf. 2013, 21, 453–462.

47. Gómez, C.; White, J.C.; Wulder, M.A.; Alejandro, P. Integrated Object-Based Spatiotemporal Characterization of Forest Change from an Annual Time Series of Landsat Image Composites. Can. J. Remote Sens. 2015, 41, 271–292.

48. Kollert, A.; Bremer, M.; Löw, M.; Rutzinger, M. Exploring the potential of land surface phenology and seasonal cloud free composites of one year of Sentinel-2 imagery for tree species mapping in a mountainous region. Int. J. Appl. Earth Obs. Geoinf. 2021, 94, 102208.

49. Li, S.; Dragicevic, S.; Anton, F.; Sester, M.; Winter, S.; Çöltekin, A.; Pettit, C.; Jiang, B.; Haworth, J.; Stein, A.; et al. Geospatial big data handling theory and methods: A review and research challenges. ISPRS J. Photogramm. Remote Sens. 2016, 115, 119–133.

50. Ma, Y.; Wu, H.; Wang, L.; Huang, B.; Ranjan, R.; Zomaya, A.Y.; Jie, W. Remote sensing big data computing: Challenges and opportunities. Futur. Gener. Comput. Syst. 2015, 51, 47–60.

51. Gorelick, N.; Hancher, M.; Dixon, M.; Ilyushchenko, S.; Thau, D.; Moore, R. Google Earth Engine: Planetary-scale geospatial analysis for everyone. Remote Sens. Environ. 2017, 202, 18–27.

52. Kumar, L.; Mutanga, O. Google Earth Engine Applications Since Inception: Usage, Trends, and Potential. Remote Sens. 2018, 10, 1509.

53. Mondal, P.; Liu, X. (Leon); Fatoyinbo, T.; Lagomasino, D. Evaluating Combinations of Sentinel-2 Data and Machine-Learning Algorithms for Mangrove Mapping in West Africa. Remote Sens. 2019, 11, 2928.

54. Chen, B.; Xiao, X.; Li, X.; Pan, L.; Doughty, R.; Ma, J.; Dong, J.; Qin, Y.; Zhao, B.; Wu, Z.; et al. A mangrove forest map of China in 2015: Analysis of time series Landsat 7/8 and Sentinel-1A imagery in Google Earth Engine cloud computing platform. ISPRS J. Photogramm. Remote Sens. 2017, 131, 104–120.

55. Forstmaier, A.; Shekhar, A.; Chen, J. Mapping of Eucalyptus in Natura 2000 Areas Using Sentinel 2 Imagery and Artificial Neural Networks. Remote Sens. 2020, 12, 2176.

56. Saah, D.; Johnson, G.; Ashmall, B.; Tondapu, G.; Tenneson, K.; Patterson, M.S.; Poortinga, A.; Markert, K.; Quyen, N.H.; Aung, K.S.; et al. Collect Earth: An online tool for systematic reference data collection in land cover and use applications. Environ. Model. Softw. 2019, 118, 166–171.

57. Tassi, A.; Vizzari, M. Object-Oriented LULC Classification in Google Earth Engine Combining SNIC, GLCM, and Machine Learning Algorithms. Remote Sens. 2020, 12, 3776.

58. Parks, S.A.; Holsinger, L.M.; Koontz, M.J.; Collins, L.; Whitman, E.; Parisien, M.; Loehman, R.A.; Barnes, J.L.; Bourdon, J.; Boucher, J.; et al. Giving Ecological Meaning to Satellite-Derived Fire Severity Metrics across North American Forests. Remote Sens. 2019, 11, 1735.

59. Senf, C.; Seidl, R. Mapping the forest disturbance regimes of Europe. Nat. Sustain. 2021, 4, 63–70.

60. Pérez-Romero, J.; Navarro-Cerrillo, R.M.; Palacios-Rodriguez, G.; Acosta, C.; Mesas-Carrascosa, F.J. Improvement of remote sensing-based assessment of defoliation of Pinus spp. caused by Thaumetopoea pityocampa Denis and Schiffermüller and related environmental drivers in Southeastern Spain. Remote Sens. 2019, 11, 1736.

61. Wang, C.; Jia, M.; Chen, N.; Wang, W. Long-Term Surface Water Dynamics Analysis Based on Landsat Imagery and the Google Earth Engine Platform: A Case Study in the Middle Yangtze River Basin. Remote Sens. 2018, 10, 1635.

62. De Lucia Lobo, F.; Souza-Filho, P.W.M.; Novo EML de, M.; Carlos, F.M.; Barbosa, C.C.F. Mapping Mining Areas in the Brazilian Amazon Using MSI/Sentinel-2 Imagery (2017). Remote Sens. 2018, 10, 1178.

63. Snapir, B.; Momblanch, A.; Jain, S.; Waine, T.; Holman, I.P. A method for monthly mapping of wet and dry snow using Sentinel-1 and MODIS: Application to a Himalayan river basin. Int. J. Appl. Earth Obs. Geoinf. 2019, 74, 222–230.

64. Hagenaars, G.; De Vries, S.; Luijendijk, A.P.; De Boer, W.P.; Reniers, A.J. On the accuracy of automated shoreline detection derived from satellite imagery: A case study of the sand motor mega-scale nourishment. Coast. Eng. 2018, 133, 113–125.

65. Liu, X.; Hu, G.; Chen, Y.; Li, X.; Xu, X.; Li, S.; Pei, F.; Wang, S. High-resolution multi-temporal mapping of global urban land using Landsat images based on the Google Earth Engine Platform. Remote Sens. Environ. 2018, 209, 227–239.

66. Ji, H.; Li, X.; Wei, X.; Liu, W.; Zhang, L.; Wang, L. Mapping 10-m Resolution Rural Settlements Using Multi-Source Remote Sensing Datasets with the Google Earth Engine Platform. Remote Sens. 2020, 12, 2832.

67. Callaghan, C.T.; Major, R.E.; Lyons, M.B.; Martin, J.M.; Kingsford, R.T. The effects of local and landscape habitat attributes on bird diversity in urban greenspaces. Ecosphere 2018, 9, e02347.

68. Caloiero, T.; Coscarelli, R.; Ferrari, E.; Mancini, M. Trend detection of annual and seasonal rainfall in Calabria (Southern Italy). Int. J. Climatol. 2010, 31, 44–56.

69. Cameriere, P.; Caridi, D.; Crisafulli, A.; Spampinato, G. La carta della vegetazione reale del Parco Nazionale dell’Aspromonte (Italia meridionale). In Proceedings of the 97 Congresso Nazionale Della Società Botanica Italiana, Lecce, Italy, 24–27 September 2002.

70. Modica, G.; Praticò, S.; Laudari, L.; Ledda, A.; Di Fazio, S.; De Montis, A. Design and implementation of multispecies ecological networks at the regional scale: Analysis and multi-temporal assessment. Remote Sens. under review.

71. Spampinato, G.; Cameriere, P.; Caridi, D.; Crisafulli, A. Carta della biodiversità vegetale del Parco Nazionale dell’Aspromonte (Italia Meridionale). Quaderno di Botanica Ambientale Applicata 2009, 20, 3–36.

72. Sánchez-Espinosa, A.; Schröder, C. Land use and land cover mapping in wetlands one step closer to the ground: Sentinel-2 versus Landsat 8. J. Environ. Manag. 2019, 247, 484–498.

73. Munyati, C.; Balzter, H.; Economon, E. Correlating Sentinel-2 MSI-derived vegetation indices with in-situ reflectance and tissue macronutrients in savannah grass. Int. J. Remote Sens. 2020, 41, 3820–3844.

74. Schmitt, M.; Hughes, L.H.; Qiu, C.; Zhu, X.X. Aggregating Cloud-Free Sentinel-2 Images with Google Earth Engine. ISPRS Ann. Photogramm. Remote Sens. Spatial Inf. Sci. 2019, 4, 145–152.

75. Nguyen, M.D.; Baez-Villanueva, O.M.; Du Bui, D.; Nguyen, P.T.; Ribbe, L. Harmonization of Landsat and Sentinel 2 for Crop Monitoring in Drought Prone Areas: Case Studies of Ninh Thuan (Vietnam) and Bekaa (Lebanon). Remote Sens. 2020, 12, 281.

76. Zhang, W.; Brandt, M.; Wang, Q.; Prishchepov, A.V.; Tucker, C.J.; Li, Y.; Lyu, H.; Fensholt, R. From woody cover to woody canopies: How Sentinel-1 and Sentinel-2 data advance the mapping of woody plants in savannas. Remote Sens. Environ. 2019, 234, 111465.

77. Dong, J.; Xiao, X.; Chen, B.; Torbick, N.; Jin, C.; Zhang, G.; Biradar, C. Mapping deciduous rubber plantations through integration of PALSAR and multi-temporal Landsat imagery. Remote Sens. Environ. 2013, 134, 392–402.

78. Coppin, P.; Jonckheere, I.; Nackaerts, K.; Muys, B.; Lambin, E. Digital change detection methods in ecosystem monitoring: A review. Int. J. Remote Sens. 2004, 25, 1565–1596.

79. Rouse, J.W.; Hass, R.H.; Schell, J.A.; Deering, D.W. Monitoring Vegetation Systems in the Great Plains with ERTS. In Proceedings of the Third Earth Resources Technology Satellite (ERTS) Symposium, Washington, DC, USA, 10–14 December 1973; Volume 1, pp. 309–317.

80. Gitelson, A.A.; Kaufman, Y.J.; Merzlyak, M.N. Use of a green channel in remote sensing of global vegetation from EOS-MODIS. Remote Sens. Environ. 1996, 58, 289–298.

81. Huete, A.; Didan, K.; Miura, T.; Rodriguez, E.P.; Gao, X.; Ferreira, L.G. Overview of the radiometric and biophysical performance of the MODIS vegetation indices. Remote Sens. Environ. 2002, 83, 195–213.

82. Kimes, D.; Markham, B.; Tucker, C.; McMurtrey, J. Temporal relationships between spectral response and agronomic variables of a corn canopy. Remote Sens. Environ. 1981, 11, 401–411.

83. Garcia, M.J.L.; Caselles, V. Mapping burns and natural reforestation using thematic Mapper data. Geocarto Int. 1991, 6, 31–37.

84. Raymond Hunt, E.; Rock, B.N.; Nobel, P.S. Measurement of leaf relative water content by infrared reflectance. Remote Sens. Environ. 1987, 22, 429–435.

85. Ji, L.; Zhang, L.; Wylie, B.K.; Rover, J. On the terminology of the spectral vegetation index (NIR − SWIR)/(NIR + SWIR). Int. J. Remote Sens. 2011, 32, 6901–6909.

86. Modica, G.; Messina, G.; De Luca, G.; Fiozzo, V.; Praticò, S. Monitoring the vegetation vigor in heterogeneous citrus and olive orchards. A multiscale object-based approach to extract trees’ crowns from UAV multispectral imagery. Comput. Electron. Agric. 2020, 175, 105500.

87. Solano, F.; Di Fazio, S.; Modica, G. A methodology based on GEOBIA and WorldView-3 imagery to derive vegetation indices at tree crown detail in olive orchards. Int. J. Appl. Earth Obs. Geoinf. 2019, 83, 101912.

88. Makinde, E.O.; Salami, A.T.; Olaleye, J.B.; Okewusi, O.C. Object Based and Pixel Based Classification Using Rapideye Satellite Imager of ETI-OSA, Lagos, Nigeria. Geoinform. FCE CTU 2016, 15, 59–70.

89. Praticò, S.; Di Fazio, S.; Modica, G. Multi Temporal Analysis of Sentinel-2 Imagery for Mapping Forestry Vegetation Types: A Google Earth Engine Approach; Springer: Dordrecht, Netherlands, 2021; pp. 1650–1659. Available online: http://link.springer.com/10.1007/978-3-030-48279-4_155 (accessed on 7 January 2021).

90. Cihlar, J.; Latifovic, R.; Beaubien, J. A Comparison of Clustering Strategies for Unsupervised Classification. Can. J. Remote Sens. 2000, 26, 446–454.

91. Tou, J.T.; Gonzalez, R.C. Pattern Recognition Principles; Addison-Wesley Publishing Company: Boston, MA, USA, 1974.

92. Hartigan, J.A.; Wong, M.A. A K-Means Clustering Algorithm. Appl. Stat. 1979, 28, 100.

93. Arthur, D.; Vassilvitskii, S. K-Means++: The Advantages of Careful Seeding. In Proceedings of the Eighteenth Annual ACM-SIAM Symposium on Discrete Algorithms, New Orleans, LA, USA, 7–9 January 2007; pp. 1027–1035.

94. Vauhkonen, J.; Imponen, J. Unsupervised classification of airborne laser scanning data to locate potential wildlife habitats for forest management planning. Forestry 2016, 89, 350–363.

95. Ma, L.; Cheng, L.; Li, M.; Liu, Y.; Ma, X. Training set size, scale, and features in Geographic Object-Based Image Analysis of very high resolution unmanned aerial vehicle imagery. ISPRS J. Photogramm. Remote Sens. 2015, 102, 14–27.

96. Jones, H.G.; Vaughan, R.A. Remote Sensing of Vegetation: Principles, Techniques, and Applications; Oxford University Press: Oxford, UK, 2010; 384p.

97. Brovelli, M.A.; Sun, Y.; Yordanov, V. Monitoring Forest Change in the Amazon Using Multi-Temporal Remote Sensing Data and Machine Learning Classification on Google Earth Engine. ISPRS Int. J. Geo-Inf. 2020, 9, 580.

98. Abdi, A.M. Land cover and land use classification performance of machine learning algorithms in a boreal landscape using Sentinel-2 data. GIScience Remote Sens. 2020, 57, 1–20.

99. Maxwell, A.E.; Warner, T.A.; Fang, F. Implementation of machine-learning classification in remote sensing: An applied review. Int. J. Remote Sens. 2018, 39, 2784–2817.

100. Mardani, M.; Korrani, H.M.; De Simone, L.; Varas, S.; Kita, N.; Saito, T. Integration of Machine Learning and Open Access Geospatial Data for Land Cover Mapping. Remote Sens. 2019, 11, 1907.

101. Modica, G.; De Luca, G.; Messina, G.; Fiozzo, V.; Praticò, S. Comparison and assessment of different object-based classifications using machine learning algorithms and UAVs multispectral imagery in the framework of precision agriculture. Remote Sens. under review.

102. Breiman, L. Random forests. Mach. Learn. 2001, 45, 5–32.

103. Cutler, D.R.; Edwards, T.C.; Beard, K.H.; Cutler, A.; Hess, K.T.; Gibson, J.; Lawler, J.J. Random Forests for Classification in Ecology. Ecology 2007, 88, 2783–2792.

104. Breiman, L.; Friedman, J.H.; Olshen, R.A.; Stone, C.J. Classification and Regression Trees. Available online: https://www.taylorfrancis.com/books/9781351460491 (accessed on 7 January 2021).

105. Loh, W. Classification and regression trees. Wiley Interdiscip. Rev. Data Min. Knowl. Discov. 2011, 1, 14–23.

106. Bishop, C. Pattern Recognition and Machine Learning; Springer: New York, NY, USA, 2006; p. 738.

107. Cortes, C.; Vapnik, V. Support-Vector Networks. Mach. Learn. 1995, 20, 273–297.

108. Vapnik, V. Statistical Learning Theory; John Wiley and Sons: New York, NY, USA, 1998.

109. Huang, C.; Davis, L.S.; Townshend, J.R.G. An assessment of support vector machines for land cover classification. Int. J. Remote Sens. 2002, 23, 725–749.

110. Phan, T.N.; Kuch, V.; Lehnert, L.W. Land Cover Classification using Google Earth Engine and Random Forest Classifier—The Role of Image Composition. Remote Sens. 2020, 12, 2411.

111. Congalton, R.G.; Green, K. Assessing the Accuracy of Remotely Sensed Data; CRC Press: Boca Raton, FL, USA, 2019.

112. Story, M.; Congalton, R.G. Remote Sensing Brief Accuracy Assessment: A User’s Perspective. Photogramm. Eng. Remote Sens. 1986, 52, 397–399.

113. Goutte, C.; Gaussier, E. A Probabilistic Interpretation of Precision, Recall and F-Score, with Implication for Evaluation; Springer: Berlin/Heidelberg, Germany, 2005; pp. 345–359.

114. Sokolova, M.; Japkowicz, N.; Szpakowicz, S. Beyond Accuracy, F-Score and ROC: A Family of Discriminant Measures for Performance Evaluation; Springer: Berlin/Heidelberg, Germany, 2006; pp. 1015–1021.

115. Sokolova, M.; Lapalme, G. A systematic analysis of performance measures for classification tasks. Inf. Process. Manag. 2009, 45, 427–437.

116. Hauser, L.T.; An Binh, N.; Viet Hoa, P.; Hong Quan, N.; Timmermans, J. Gap-Free Monitoring of Annual Mangrove Forest Dynamics in Ca Mau Province, Vietnamese Mekong Delta, Using the Landsat-7-8 Archives and Post-Classification Temporal Optimization. Remote Sens. 2020, 12, 3729.

117. Hird, J.N.; DeLancey, E.R.; McDermid, G.J.; Kariyeva, J. Google Earth Engine, open-access satellite data, and machine learning in support of large-area probabilistic wetland mapping. Remote Sens. 2017, 9, 1315.

118. Regan, S.; Gill, L.; Regan, S.; Naughton, O.; Johnston, P.; Waldren, S.; Ghosh, B. Mapping Vegetation Communities Inside Wetlands Using Sentinel-2 Imagery in Ireland. Int. J. Appl. Earth Obs. Geoinf. 2020, 88, 102083.

119. Vivekananda, G.N.; Swathi, R.; Sujith, A. Multi-temporal image analysis for LULC classification and change detection. Eur. J. Remote Sens. 2020, 1, 1–11.

120. Tong, X.; Brandt, M.; Hiernaux, P.; Herrmann, S.; Rasmussen, L.V.; Rasmussen, K.; Tian, F.; Tagesson, T.; Zhang, W.; Fensholt, R. The forgotten land use class: Mapping of fallow fields across the Sahel using Sentinel-2. Remote Sens. Environ. 2020, 239, 111598.

121. Johansen, K.; Phinn, S.; Taylor, M. Mapping woody vegetation clearing in Queensland, Australia from Landsat imagery using the Google Earth Engine. Remote Sens. Appl. Soc. Environ. 2015, 1, 36–49.

122. Somers, B.; Asner, G.P. Tree species mapping in tropical forests using multi-temporal imaging spectroscopy: Wavelength adaptive spectral mixture analysis. Int. J. Appl. Earth Obs. Geoinf. 2014, 31, 57–66.

123. Rodriguez-Galiano, V.; Ghimire, B.; Rogan, J.; Chica-Olmo, M.; Rigol-Sanchez, J. An assessment of the effectiveness of a random forest classifier for land-cover classification. ISPRS J. Photogramm. Remote Sens. 2012, 67, 93–104.

124. Belgiu, M.; Drăguţ, L. Random forest in remote sensing: A review of applications and future directions. ISPRS J. Photogramm. Remote Sens. 2016, 114, 24–31.

125. Mahdianpari, M.; Salehi, B.; Mohammadimanesh, F.; Motagh, M. Random forest wetland classification using ALOS-2 L-band, RADARSAT-2 C-band, and TerraSAR-X imagery. ISPRS J. Photogramm. Remote Sens. 2017, 130, 13–31.

126. Mountrakis, G.; Im, J.; Ogole, C. Support vector machines in remote sensing: A review. ISPRS J. Photogramm. Remote Sens. 2011, 66, 247–259.

127. Holloway, J.; Mengersen, K. Statistical Machine Learning Methods and Remote Sensing for Sustainable Development Goals: A Review. Remote Sens. 2018, 10, 1365.

128. Messina, G.; Peña, J.M.; Vizzari, M.; Modica, G. A Comparison of UAV and Satellites Multispectral Imagery in Monitoring Onion Crop. An Application in the ‘Cipolla Rossa di Tropea’ (Italy). Remote Sens. 2020, 12, 3424.

| Band | Sentinel-2A Bandwidth [nm] | Sentinel-2A Central Wavelength [nm] | Sentinel-2B Bandwidth [nm] | Sentinel-2B Central Wavelength [nm] | GSD [m] |
|---|---|---|---|---|---|
| 1 | 21 | 442.7 | 21 | 442.2 | 60 |
| 2 | 66 | 492.4 | 66 | 492.1 | 10 |
| 3 | 36 | 559.8 | 36 | 559.0 | 10 |
| 4 | 31 | 664.6 | 31 | 664.9 | 10 |
| 5 | 15 | 704.1 | 16 | 703.8 | 20 |
| 6 | 15 | 740.5 | 15 | 739.1 | 20 |
| 7 | 20 | 782.8 | 20 | 779.7 | 20 |
| 8 | 106 | 832.8 | 106 | 832.9 | 10 |
| 8A | 21 | 864.7 | 21 | 864.0 | 20 |
| 9 | 20 | 945.1 | 21 | 943.2 | 60 |
| 10 | 31 | 1373.5 | 30 | 1376.9 | 60 |
| 11 | 91 | 1613.7 | 94 | 1610.4 | 20 |
| 12 | 175 | 2202.4 | 185 | 2185.7 | 20 |

| Vegetation Index (VI) | Formula | Reference |
|---|---|---|
| Normalised Difference Vegetation Index (NDVI) | (NIR − Red)/(NIR + Red) | [79] |
| Green Normalised Difference Vegetation Index (GNDVI) | (NIR − Green)/(NIR + Green) | [80] |
| Enhanced Vegetation Index (EVI) | 2.5 × (NIR − Red)/(NIR + 6 × Red − 7.5 × Blue + 1) | [81] |
| Normalised Difference Infrared Index (NDII) | (NIR − SWIR1)/(NIR + SWIR1) | [82] |
| Normalised Burn Ratio (NBR) | (NIR − SWIR2)/(NIR + SWIR2) | [83] |
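As a rough illustration of the indices listed above, the sketch below evaluates the standard NDVI, GNDVI, EVI, NDII and NBR definitions for a single pixel in plain Python. The Sentinel-2 band assignment (B2 blue, B3 green, B4 red, B8 NIR, B11 SWIR1, B12 SWIR2) and the reflectance values are assumptions for illustration, not necessarily the bands or data used in this study. Inside GEE, the normalised-difference indices would more typically be computed per image with `ee.Image.normalizedDifference`.

```python
from typing import Dict


def normalised_difference(a: float, b: float) -> float:
    """Generic normalised difference (a - b) / (a + b); 0.0 if a + b == 0."""
    total = a + b
    return (a - b) / total if total != 0 else 0.0


def vegetation_indices(px: Dict[str, float]) -> Dict[str, float]:
    """Compute NDVI, GNDVI, EVI, NDII and NBR from one pixel's reflectances.

    `px` maps Sentinel-2 band names to surface reflectance on a 0-1 scale.
    The band-to-spectral-region mapping below is an assumption.
    """
    blue, green, red = px["B2"], px["B3"], px["B4"]
    nir, swir1, swir2 = px["B8"], px["B11"], px["B12"]
    return {
        "NDVI": normalised_difference(nir, red),
        "GNDVI": normalised_difference(nir, green),
        # EVI with the usual MODIS coefficients: G = 2.5, C1 = 6, C2 = 7.5, L = 1
        "EVI": 2.5 * (nir - red) / (nir + 6.0 * red - 7.5 * blue + 1.0),
        "NDII": normalised_difference(nir, swir1),
        "NBR": normalised_difference(nir, swir2),
    }


if __name__ == "__main__":
    # Hypothetical reflectances for a dense, healthy canopy pixel
    pixel = {"B2": 0.03, "B3": 0.06, "B4": 0.04,
             "B8": 0.40, "B11": 0.20, "B12": 0.10}
    for name, value in vegetation_indices(pixel).items():
        print(f"{name}: {value:.3f}")
```

The ratio indices are insensitive to a common scale factor, but EVI is not (its constant term of 1 assumes reflectance in 0-1). GEE's Sentinel-2 collections store reflectance multiplied by 10000, so values would need rescaling before computing EVI this way.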

| Input Image | OA | Fm | OOB Error Estimate |
|---|---|---|---|
| All_IC | 0.79 | 0.80 | 0.01 |
| W_IC | 0.72 | 0.76 | 0.01 |
| Sp_IC | 0.82 | 0.82 | 0.02 |
| Su_IC | 0.86 | 0.87 | 0.01 |
| A_IC | 0.77 | 0.79 | 0.02 |
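The accuracy tables report overall accuracy (OA) and Fm, read here as an F-score averaged over classes. The sketch below shows how both can be derived from a confusion matrix. It assumes rows hold reference classes and columns predicted classes, and it assumes Fm is the unweighted mean of the per-class F-scores, which may differ from the exact averaging used in the study. Per-class recall corresponds to the producer's accuracy and precision to the user's accuracy; GEE offers comparable methods on `ee.ConfusionMatrix`, such as `accuracy()`.

```python
from typing import List, Sequence

Matrix = Sequence[Sequence[int]]


def overall_accuracy(cm: Matrix) -> float:
    """OA = correctly classified samples (diagonal) / all samples."""
    total = sum(sum(row) for row in cm)
    correct = sum(cm[k][k] for k in range(len(cm)))
    return correct / total


def per_class_f_scores(cm: Matrix) -> List[float]:
    """F-score per class; rows = reference classes, columns = predicted."""
    n = len(cm)
    scores = []
    for k in range(n):
        tp = cm[k][k]
        reference_total = sum(cm[k])                       # row total
        predicted_total = sum(cm[i][k] for i in range(n))  # column total
        recall = tp / reference_total if reference_total else 0.0     # producer's accuracy
        precision = tp / predicted_total if predicted_total else 0.0  # user's accuracy
        denom = precision + recall
        scores.append(2 * precision * recall / denom if denom else 0.0)
    return scores


def mean_f_score(cm: Matrix) -> float:
    """Unweighted (macro) mean of the per-class F-scores."""
    scores = per_class_f_scores(cm)
    return sum(scores) / len(scores)


if __name__ == "__main__":
    # Hypothetical 3-class matrix (e.g., three forest habitat types)
    cm = [[50, 3, 2],
          [4, 40, 6],
          [1, 5, 39]]
    print(f"OA = {overall_accuracy(cm):.2f}, Fm = {mean_f_score(cm):.2f}")
```

Reporting a mean F-score next to OA is useful because OA is dominated by the most frequent classes, while the macro average weights every habitat class equally.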

| Statistics | OA | Fm | OOB Error Estimate |
|---|---|---|---|
| mean | 0.88 | 0.88 | 0.01 |
| median | 0.86 | 0.86 | 0.01 |
| minimum | 0.82 | 0.83 | 0.02 |
| maximum | 0.84 | 0.84 | 0.01 |
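The statistics compared above (mean, median, minimum, maximum) are temporal reducers: each pixel's time series is collapsed into one value per band. The pure-Python sketch below mimics that per-pixel, per-band reduction for a single pixel, skipping cloud-masked observations (represented as `None`); the band values and dates are hypothetical. In GEE the same operation is normally a single call such as `ee.ImageCollection.median()`, which reduces every band of every pixel across the collection while ignoring masked pixels.

```python
from statistics import mean, median
from typing import Dict, List, Optional

# Band name -> reflectance for one acquisition date; None marks a masked value
Observation = Dict[str, Optional[float]]

REDUCERS = {
    "mean": mean,
    "median": median,
    "minimum": min,
    "maximum": max,
}


def composite_pixel(series: List[Observation], statistic: str) -> Dict[str, float]:
    """Reduce one pixel's time series to a single value per band.

    Masked observations (None) are skipped, mirroring how masked pixels are
    left out of temporal reductions.
    """
    reducer = REDUCERS[statistic]
    result = {}
    for band in series[0]:
        valid = [obs[band] for obs in series if obs[band] is not None]
        if not valid:
            raise ValueError(f"no valid observations for band {band}")
        result[band] = reducer(valid)
    return result


if __name__ == "__main__":
    # Hypothetical summer acquisitions for one pixel; the 2nd date is cloud-masked
    summer = [
        {"B4": 0.05, "B8": 0.36},
        {"B4": None, "B8": None},
        {"B4": 0.04, "B8": 0.40},
        {"B4": 0.03, "B8": 0.42},
        {"B4": 0.06, "B8": 0.33},
    ]
    for stat in REDUCERS:
        print(stat, composite_pixel(summer, stat))
```

Minimum and maximum composites keep single extreme observations (for example residual shadow or haze), whereas mean and median average them out, which is one plausible reason for their different accuracies.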

| Input Bands | OA | Fm | OOB Error Estimate |
|---|---|---|---|
| Visible (B2, B3, B4) | 0.68 | 0.70 | 0.10 |
| Visible + NIR (B2, B3, B4, B8) | 0.79 | 0.80 | 0.06 |
| Visible + RE + NIR (B2, B3, B4, B5, B8) | 0.79 | 0.80 | 0.05 |
| Visible + all REs + all NIRs (B2, B3, B4, B5, B6, B7, B8, B8A) | 0.81 | 0.82 | 0.03 |
| Visible + all REs + all NIRs + all SWIRs (B2, B3, B4, B5, B6, B7, B8, B8A, B9, B11, B12) | 0.85 | 0.84 | 0.02 |
| All bands | 0.86 | 0.87 | 0.01 |
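Each table also reports an out-of-bag (OOB) error estimate. In a bagged ensemble, every model is trained on a bootstrap sample, and each training sample is then scored only by the models whose bootstrap sample left it out, which yields an error estimate without a separate validation set. The sketch below illustrates this bookkeeping with bagged nearest-centroid classifiers rather than the decision trees of a Random Forest, a deliberate simplification; the data, labels and parameter names (`n_models`, `seed`) are hypothetical.

```python
import random
from collections import Counter
from typing import Dict, List, Sequence

Vector = Sequence[float]


def fit_centroids(X: List[Vector], y: List[str]) -> Dict[str, List[float]]:
    """Nearest-centroid 'model': the mean feature vector of each class."""
    sums: Dict[str, List[float]] = {}
    counts: Counter = Counter()
    for x, label in zip(X, y):
        acc = sums.setdefault(label, [0.0] * len(x))
        for j, value in enumerate(x):
            acc[j] += value
        counts[label] += 1
    return {label: [v / counts[label] for v in acc] for label, acc in sums.items()}


def predict(centroids: Dict[str, List[float]], x: Vector) -> str:
    """Assign x to the class whose centroid is closest (squared Euclidean)."""
    return min(centroids,
               key=lambda c: sum((a - b) ** 2 for a, b in zip(centroids[c], x)))


def oob_error(X: List[Vector], y: List[str], n_models: int = 50, seed: int = 0) -> float:
    """Out-of-bag error of a bagged ensemble of nearest-centroid classifiers."""
    rng = random.Random(seed)
    n = len(X)
    votes = [Counter() for _ in range(n)]
    for _ in range(n_models):
        # Bootstrap sample: n indices drawn with replacement
        bag = [rng.randrange(n) for _ in range(n)]
        in_bag = set(bag)
        model = fit_centroids([X[i] for i in bag], [y[i] for i in bag])
        # Only samples left out of this bootstrap get a vote from this model
        for i in range(n):
            if i not in in_bag:
                votes[i][predict(model, X[i])] += 1
    scored = [i for i in range(n) if votes[i]]
    if not scored:
        raise ValueError("no sample was ever out of bag; increase n_models")
    wrong = sum(1 for i in scored if votes[i].most_common(1)[0][0] != y[i])
    return wrong / len(scored)


if __name__ == "__main__":
    rng = random.Random(42)
    # Hypothetical 2-band samples (red, NIR) for two partly overlapping classes
    X = [(rng.gauss(0.05, 0.01), rng.gauss(0.35, 0.05)) for _ in range(30)] + \
        [(rng.gauss(0.06, 0.01), rng.gauss(0.28, 0.05)) for _ in range(30)]
    y = ["broadleaf"] * 30 + ["shrubland"] * 30
    print(f"OOB error estimate: {oob_error(X, y):.2f}")
```

A Random Forest additionally randomises the features considered at each tree split, but the OOB accounting is the same: roughly a third of the samples are left out of each bootstrap draw and serve as that model's test set.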

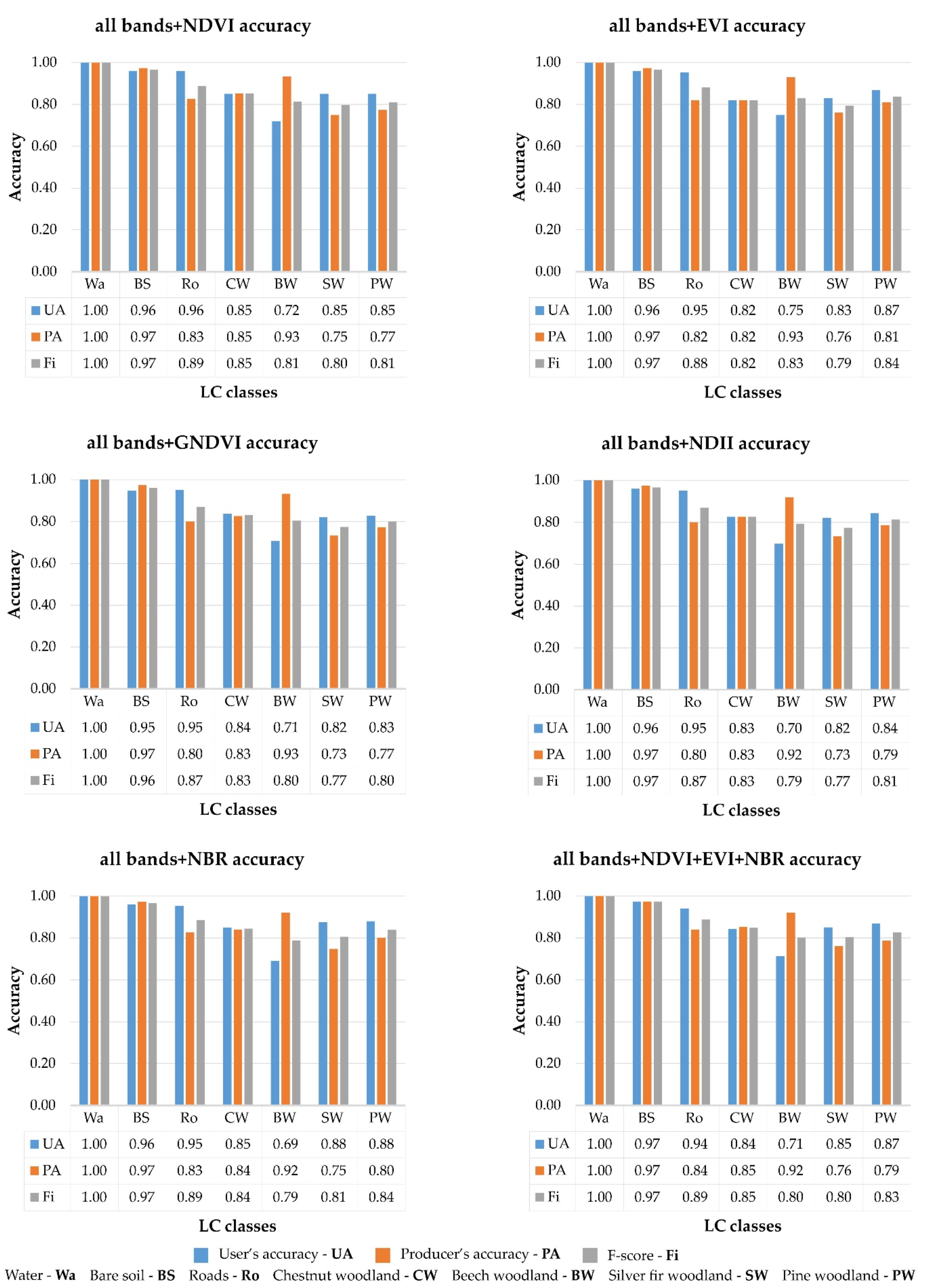

| Vegetation Indices | OA | Fm | OOB Error Estimate |
|---|---|---|---|
| NDVI | 0.87 | 0.88 | 0.01 |
| EVI | 0.87 | 0.88 | 0.01 |
| GNDVI | 0.86 | 0.87 | 0.01 |
| NDII | 0.86 | 0.87 | 0.01 |
| NBR | 0.87 | 0.88 | 0.01 |
| NDVI + EVI + NBR | 0.88 | 0.88 | 0.01 |
| All indices | 0.87 | 0.87 | 0.01 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Praticò, S.; Solano, F.; Di Fazio, S.; Modica, G. Machine Learning Classification of Mediterranean Forest Habitats in Google Earth Engine Based on Seasonal Sentinel-2 Time-Series and Input Image Composition Optimisation. Remote Sens. 2021, 13, 586. https://doi.org/10.3390/rs13040586
