Application of Multispectral Camera in Monitoring the Quality Parameters of Fresh Tea Leaves

Abstract

1. Introduction

2. Materials and Methods

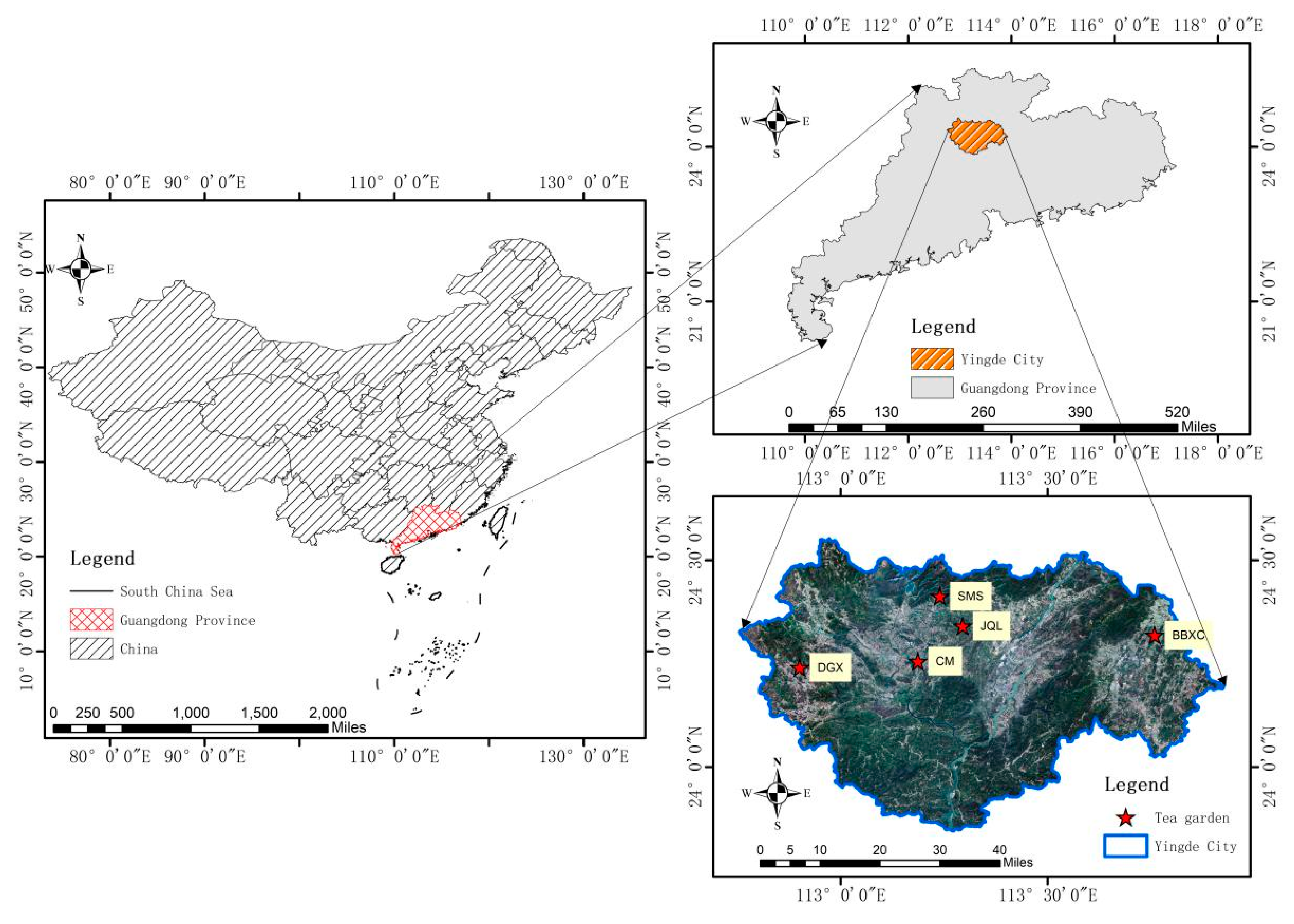

2.1. Experimental Program

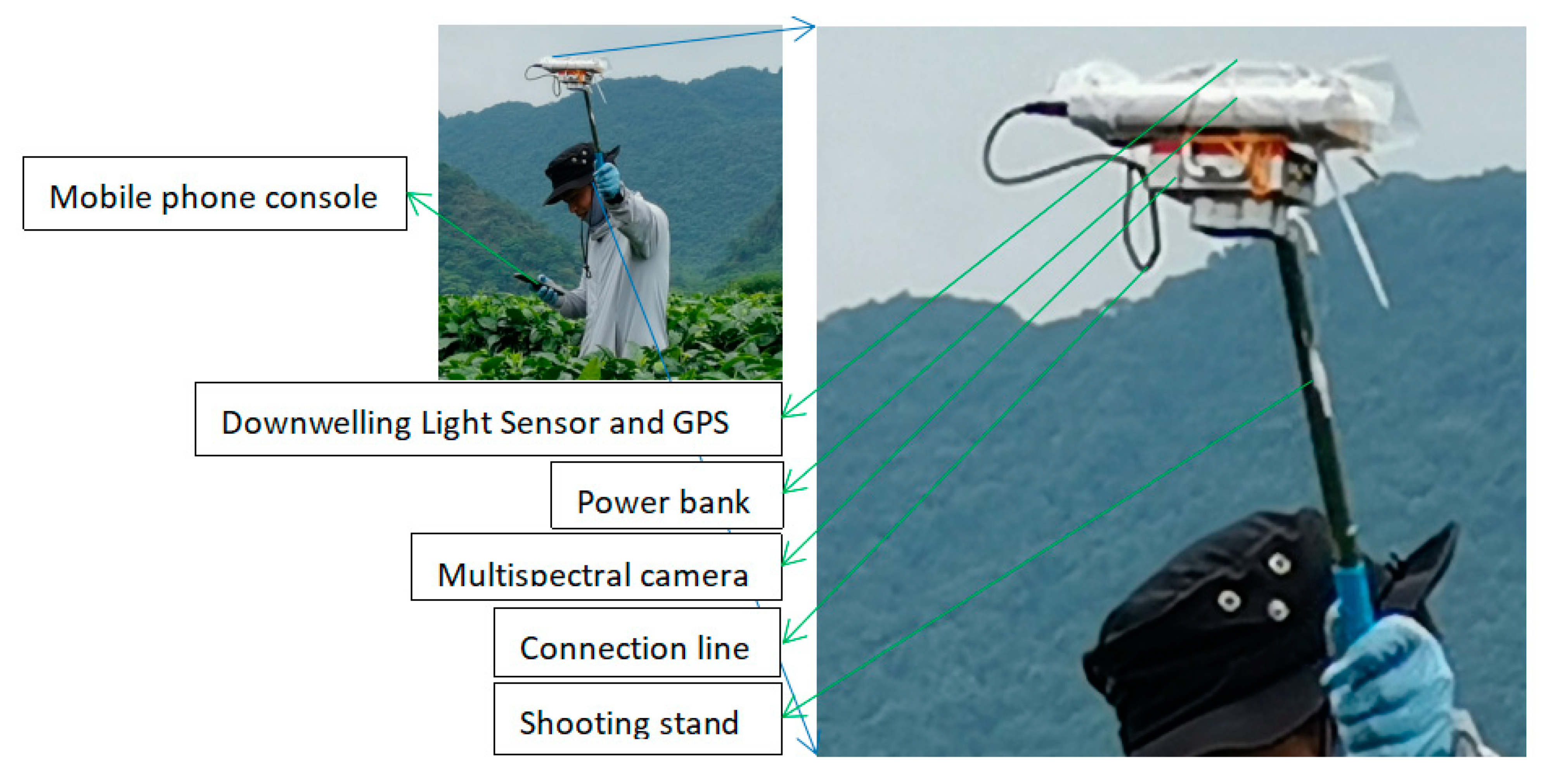

2.2. Data Acquisition

2.2.1. Spectral Data

2.2.2. Quality Parameters

2.3. Methods

2.3.1. Image Processing

- (1) Image registration and band fusion

- (2) Raster sampling
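Neither step is described in the text available here, so the following is only a rough sketch of what raster sampling could involve. It assumes the five bands have already been registered and stacked into one `(bands, rows, cols)` reflectance array, and that a boolean canopy mask is available. The reference list cites the excess-green index and Otsu thresholding, which are a common way to build such a mask. The code tiles the image into square cells and keeps the mean canopy reflectance of each cell. The function name `raster_sample`, the cell size and the 50% canopy-cover threshold are hypothetical and are not the authors' settings.

```python
import numpy as np


def raster_sample(stack: np.ndarray, mask: np.ndarray, cell: int,
                  min_cover: float = 0.5) -> np.ndarray:
    """Average band reflectance inside each grid cell of a registered image.

    stack: (bands, rows, cols) reflectance array (assumed layout).
    mask: (rows, cols) boolean canopy mask; True marks tea-leaf pixels.
    cell: side length of a square sampling cell in pixels (hypothetical).
    min_cover: minimum canopy fraction for a cell to be kept (hypothetical).
    Returns an (n_cells, bands) array of per-cell mean canopy reflectance.
    """
    bands, rows, cols = stack.shape
    samples = []
    # Walk the image in non-overlapping cell x cell windows; partial edge cells are dropped.
    for r0 in range(0, rows - cell + 1, cell):
        for c0 in range(0, cols - cell + 1, cell):
            m = mask[r0:r0 + cell, c0:c0 + cell]
            if m.mean() < min_cover:
                continue  # too much soil or shadow in this cell
            window = stack[:, r0:r0 + cell, c0:c0 + cell]
            # Mean over canopy pixels only, one value per band.
            samples.append(window[:, m].mean(axis=1))
    return np.array(samples).reshape(-1, bands)
```

Each returned row can then act as one spectral sample from which vegetation indices are computed.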

2.3.2. Spectral Feature Construction

| VIs | Formula | Reference | VIs | Formula | Reference |
|---|---|---|---|---|---|
| NDVI | (NIR − R)/(NIR + R) | [33] | RDVI | (NIR − ED)/SQRT(NIR + ED) | [34] |
| RVI | NIR/R | [35] | OSAVI | 1.16(NIR − ED)/(NIR + ED + 0.16) | [36] |
| DVI | NIR − R | [37] | NLI | (NIR² − ED)/(NIR² + ED) | [38] |
| EVI | 2.5(B − g)/(B + 6g − 7.5R + 1) | [39] | NDRE | (NIR − ED)/(NIR + ED) | [40] |
| VOG | (B − g)/(R + ED) | [41] | BGI | B/g | [42] |
| MTCI | (B − g)/(R − ED) | [43] | VARI | (R − g)/(g + R − B) | [44] |
| GNDVI | (NIR − g)/(NIR + g) | [45] | EXG | 2g − R − B | [31] |
| WDRVI | (0.1NIR − R)/(0.1NIR + R) | [46] | BI | SQRT(R² + g²)/2 | [47] |
| GRVI | (g − R)/(g + R) | [48] | G | R/g | [42] |
| PSRI | (R − g)/ED | [49] | SIPI | (NIR − B)/(NIR + B) | [50] |
| RGR | R/g | [51] | MCARI | (B − g − 0.2(B − R))(B/g) | [52] |
| CCCI | [(NIR − ED)/(NIR + ED)]/[(NIR − R)/(NIR + R)] | [53] | TGI | g + 0.39R − 0.61B | [54] |
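The indices in the table are simple band arithmetic, so they can be evaluated pixel by pixel or on per-sample mean reflectance. The sketch below implements several of them exactly as written in the table. It assumes that the table symbols B, g, R, ED and NIR denote the blue, green, red, red-edge and near-infrared reflectance of the camera's five bands. This is an illustration of the table, not the authors' code, and the function name `vegetation_indices` is hypothetical.

```python
import numpy as np


def vegetation_indices(b, g, r, ed, nir):
    """Evaluate several tabulated VIs, using the formulas as written in the table.

    b, g, r, ed, nir: blue, green, red, red-edge and near-infrared reflectance
    (assumed meaning of B, g, R, ED, NIR), as scalars or same-shaped arrays.
    A zero denominator yields inf or nan with a NumPy RuntimeWarning.
    """
    b, g, r, ed, nir = (np.asarray(x, dtype=float) for x in (b, g, r, ed, nir))
    ndvi = (nir - r) / (nir + r)
    ndre = (nir - ed) / (nir + ed)
    return {
        "NDVI": ndvi,
        "RVI": nir / r,
        "DVI": nir - r,
        "GNDVI": (nir - g) / (nir + g),
        "WDRVI": (0.1 * nir - r) / (0.1 * nir + r),
        "OSAVI": 1.16 * (nir - ed) / (nir + ed + 0.16),
        "NDRE": ndre,
        "CCCI": ndre / ndvi,  # NDRE normalized by NDVI
        "EXG": 2 * g - r - b,
        "BI": np.sqrt(r ** 2 + g ** 2) / 2,
    }
```

Every expression is a NumPy operation, so passing whole band images of equal shape returns per-pixel index maps. Passing per-sample mean reflectance returns one value per sample.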

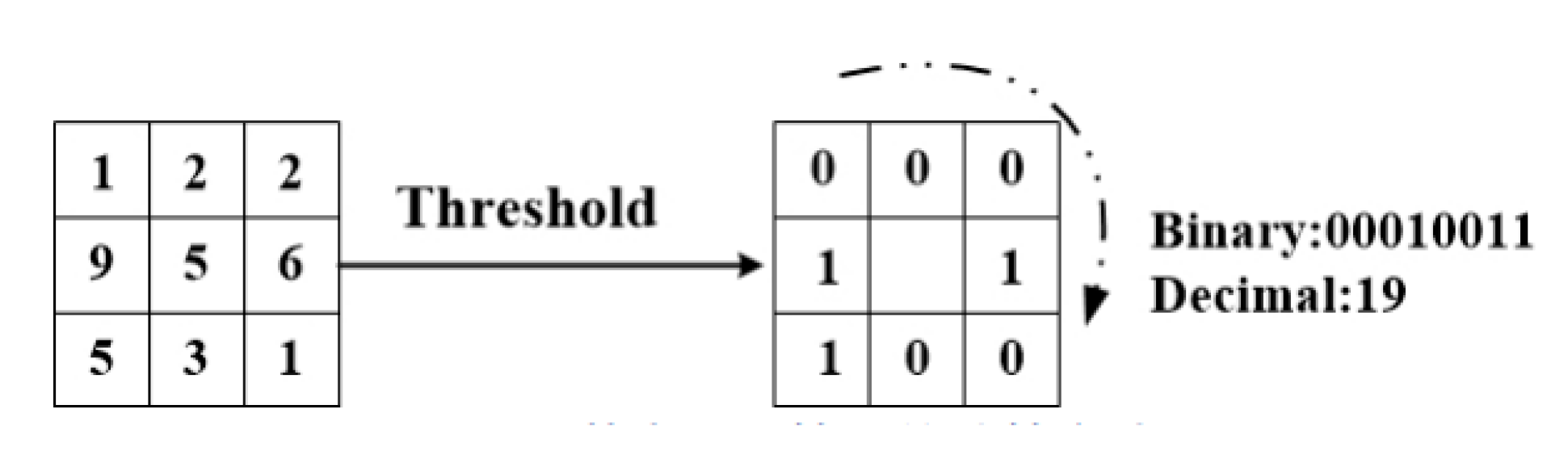

2.3.3. Texture Feature Extraction
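The body of this subsection is not included here. However, the reference list cites Haralick's gray-level co-occurrence matrix (GLCM) work, which is a standard source of canopy texture features. If GLCM statistics are the kind of texture meant here, the NumPy sketch below shows the mechanics. It quantizes one band, counts co-occurring gray-level pairs at a single pixel offset, and derives four Haralick-style statistics. The number of gray levels, the offset and the choice of statistics are hypothetical defaults, not the paper's settings. For production use, scikit-image also provides tested GLCM functions.

```python
import numpy as np


def glcm(band: np.ndarray, levels: int = 8, dr: int = 0, dc: int = 1) -> np.ndarray:
    """Symmetric, normalized GLCM of one band for a single offset (dr, dc >= 0)."""
    # Quantize values linearly into `levels` gray levels.
    lo, hi = float(band.min()), float(band.max())
    scale = (levels - 1) / (hi - lo) if hi > lo else 0.0
    q = np.round((band - lo) * scale).astype(int)
    rows, cols = q.shape
    # Pair each pixel with its neighbour at the given offset.
    ref = q[: rows - dr, : cols - dc]
    nbr = q[dr:, dc:]
    m = np.zeros((levels, levels))
    np.add.at(m, (ref.ravel(), nbr.ravel()), 1)
    m = m + m.T  # count both directions (symmetric GLCM)
    return m / m.sum()


def glcm_features(p: np.ndarray) -> dict:
    """Contrast, homogeneity, angular second moment and entropy of a normalized GLCM."""
    i, j = np.indices(p.shape)
    nz = p[p > 0]
    return {
        "contrast": float(np.sum(p * (i - j) ** 2)),
        "homogeneity": float(np.sum(p / (1.0 + (i - j) ** 2))),
        "asm": float(np.sum(p ** 2)),
        "entropy": float(-np.sum(nz * np.log2(nz))),
    }
```

Running `glcm` on a 4 × 4 test image with four gray levels and a horizontal offset reproduces the classic symmetric co-occurrence matrix from Haralick's paper. This makes the function easy to check before applying it to canopy images.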

2.3.4. Feature Selection

2.3.5. Regression Modeling
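The modeling algorithms are not described in the text shown here, but the reference list points to partial least squares (PLS), support vector regression and random forest. As a hedged illustration of the first, the sketch below implements a compact PLS1 regression (single response, NIPALS-style deflation) in NumPy. It regresses one quality parameter on a matrix of VI and texture features. This is a textbook version rather than the authors' implementation, and the class name `PLS1` is hypothetical. In practice, scikit-learn's `PLSRegression` is the usual choice, with the number of latent components chosen by cross-validation.

```python
import numpy as np


class PLS1:
    """Partial least squares regression with a single response (NIPALS-style PLS1)."""

    def __init__(self, n_components: int):
        self.n_components = n_components

    def fit(self, X: np.ndarray, y: np.ndarray) -> "PLS1":
        X = np.asarray(X, dtype=float)
        y = np.asarray(y, dtype=float)
        self.x_mean_, self.y_mean_ = X.mean(axis=0), y.mean()
        Xk, yk = X - self.x_mean_, y - self.y_mean_
        W, P, q = [], [], []
        for _ in range(self.n_components):
            w = Xk.T @ yk               # weight: direction of maximal covariance with y
            w /= np.linalg.norm(w)
            t = Xk @ w                  # latent scores
            tt = t @ t
            p = Xk.T @ t / tt           # X loadings
            c = yk @ t / tt             # y loading
            Xk = Xk - np.outer(t, p)    # deflate X
            yk = yk - c * t             # deflate y
            W.append(w)
            P.append(p)
            q.append(c)
        W, P, q = np.array(W).T, np.array(P).T, np.array(q)
        # Coefficients in the original feature space: B = W (P^T W)^-1 q
        self.coef_ = W @ np.linalg.solve(P.T @ W, q)
        return self

    def predict(self, X: np.ndarray) -> np.ndarray:
        return (np.asarray(X, dtype=float) - self.x_mean_) @ self.coef_ + self.y_mean_
```

With as many components as linearly independent features, PLS1 reduces to ordinary least squares. Fewer components trade some fit for stability when the VIs are strongly collinear, which is the usual motivation for PLS on spectral features.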

2.3.6. Accuracy Evaluation
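The accuracy metrics are not named in the text shown here. Assuming the two most common choices for spectral regression, the sketch below computes the coefficient of determination (R², defined here as 1 − SS_res/SS_tot) and the root mean square error (RMSE) from measured and predicted values. Some studies instead report R² as the squared Pearson correlation, which can differ on validation data. The function name `r2_rmse` is hypothetical.

```python
import numpy as np


def r2_rmse(measured, predicted):
    """Return (R², RMSE), with R² = 1 - SS_res / SS_tot."""
    y = np.asarray(measured, dtype=float)
    y_hat = np.asarray(predicted, dtype=float)
    residual = y - y_hat
    ss_res = np.sum(residual ** 2)          # residual sum of squares
    ss_tot = np.sum((y - y.mean()) ** 2)    # total sum of squares about the mean
    return float(1.0 - ss_res / ss_tot), float(np.sqrt(np.mean(residual ** 2)))
```

For example, measured values 1, 2, 3, 4 and predictions 1.1, 1.9, 3.2, 3.8 give SS_res = 0.10 and SS_tot = 5. The resulting metrics are R² = 0.98 and RMSE = √0.025 ≈ 0.158.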

3. Results

3.1. Correlation Analysis

3.2. Best Fit Sampling Method

3.3. Tea Varieties and Canopy Texture Features

3.4. Best Fit Modeling Algorithm

3.5. Effect of Texture Features on Model Accuracy

4. Discussion

4.1. Ground Multispectral Images

4.2. Vegetation Characteristics

4.3. Modeling Methods

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Yang, C.S.; Wang, Z.Y. Tea and cancer. J. Natl. Cancer Inst. 1993, 85, 1038–1049.

- Yang, C.S.; Lambert, J.D.; Sang, S. Antioxidative and anti-carcinogenic activities of tea polyphenols. Arch. Toxicol. 2009, 83, 11–21.

- Xu, J. Discussion on qingyuan tea industry development strategy based on SWOT analysis. Guangdong Tea Ind. 2016, 3, 5–8.

- Gao, H.; Zhang, M. Analysis of the status quo and countermeasures of the tea industry development in Yingde City. Guangdong Tea Ind. 2019, 5, 25–29.

- Jiang, H.; Xu, W.; Chen, Q. Evaluating aroma quality of black tea by an olfactory visualization system: Selection of feature sensor using particle swarm optimization. Food Res. Int. 2019, 126, 108605–108611.

- Zhang, L.; Santos, J.S.; Cruz, T.M.; Marques, M.B.; Carmo, M.A.V.; Azevedo, L.; Granato, D. Multivariate effects of Chinese keemun black tea grades (Camellia sinensis var. sinensis) on the phenolic composition, antioxidant, antihemolytic and cytotoxic/cytoprotection activities. Food Res. Int. 2019, 125, 108516–108525.

- Zhou, P.; Zhao, F.; Chen, M.; Ye, N.; Lin, Q.; Ouyang, L.; Wang, Y. Determination of 21 free amino acids in 5 types of tea by ultra-high performance liquid chromatography coupled with tandem mass spectrometry (UHPLC–MS/MS) using a modified 6-aminoquinolyl-N-hydroxysuccinimidyl carbamate (AQC) method. J. Food Compos. Anal. 2019, 81, 46–54.

- Mukhtar, H.; Ahmad, N. Tea polyphenols: Prevention of cancer and optimizing health. Am. J. Clin. Nutr. 2000, 71, 1698–1702.

- Westerterp-Plantenga, M.S.; Lejeune, M.P.G.M.; Kovacs, E.M.R. Body weight loss and weight maintenance in relation to habitual caffeine intake and green tea supplementation. Obes. Res. 2005, 13, 1195–1204.

- Miller, P.E.; Zhao, D.; Frazier-Wood, A.C.; Michos, E.D.; Averill, M.; Sandfort, V.; Burke, G.L.; Polak, J.F.; Lima, J.A.C.; Post, W.S.; et al. Associations of coffee, tea, and caffeine intake with coronary artery calcification and cardiovascular events. Am. J. Med. 2017, 130, 188–197.

- Kumar, P.V.S.; Basheer, S.; Ravi, R.; Thakur, M.S. Comparative assessment of tea quality by various analytical and sensory methods with emphasis on tea polyphenols. J. Food Sci. Technol. 2011, 48, 440–446.

- He, Y.B.; Yan, J. Factors affecting the quality of Xinyang Maojian tea. J. Anhui Agric. Sci. 2007, 22, 6842–6843.

- Zhi, R.; Zhao, L.; Zhang, D.Z. A framework for the multi-level fusion of electronic nose and electronic tongue for tea quality assessment. Sensors 2017, 17, 1007.

- Ren, G.; Wang, S.; Ning, J.; Xu, R.; Wang, Y.; Xing, Z.; Zhang, Z. Quantitative analysis and geographical traceability of black tea using Fourier transform near-infrared spectroscopy (FT-NIRS). Food Res. Int. 2013, 53, 822–826.

- Zhu, M.Z.; Wen, B.; Wu, H.; Li, J.; Lin, H.; Li, Q.; Li, Y.; Huang, J.; Liu, Z. The quality control of tea by near-infrared reflectance (NIR) spectroscopy and chemometrics. J. Spectrosc. 2019, 2019, 8129648.

- Qi, D.; Miao, A.Q.; Cao, J.X.; Wang, W.; Ma, C. Study on the effects of rapid aging technology on the aroma quality of white tea using GC-MS combined with chemometrics: In comparison with natural aged and fresh white tea. Food Chem. 2018, 265, 189–199.

- Seleiman, M.F.; Selim, S.; Alhammad, B.A.; Alharbi, B.M.; Juliatti, F.C. Will novel coronavirus (COVID-19) pandemic impact agriculture, food security and animal sectors? Biosci. J. 2020, 36, 1315–1326.

- Zhu, J.; Zhu, F.; Li, L.; Cheng, L.; Zhang, L.; Sun, Y.; Zhang, Z. Highly discriminant rate of Dianhong black tea grades based on fluorescent probes combined with chemometric methods. Food Chem. 2019, 298, 125046.

- Li, L.; Xie, S.; Zhu, F.; Ning, J.; Chen, Q.; Zhang, Z. Colorimetric sensor array-based artificial olfactory system for sensing Chinese green tea’s quality: A method of fabrication. Int. J. Food Prop. 2017, 20, 1762–1773.

- Hazarika, A.K.; Chanda, S.; Sabhapondit, S.; Sanyal, S.; Tamuly, P.; Tasrin, S.; Sing, D.; Tudu, B.; Bandyopadhyay, R. Quality assessment of fresh tea leaves by estimating total polyphenols using near infrared spectroscopy. J. Food Sci. Technol. 2018, 55, 4867–4876.

- Chen, Q.; Zhao, J.; Chaitep, S.; Guo, Z. Simultaneous analysis of main catechins contents in green tea (Camellia sinensis (L.)) by Fourier transform near infrared reflectance (FT-NIR) spectroscopy. Food Chem. 2009, 113, 1272–1277.

- Djokam, M.; Sandasi, M.; Chen, W.; Viljoen, A.; Vermaak, I. Hyperspectral imaging as a rapid quality control method for herbal tea blends. Appl. Sci. 2017, 7, 268.

- Huang, J.; Ren, G.; Sun, Y.; Jin, S.; Li, L.; Wang, Y.; Ning, J.; Zhang, Z. Qualitative discrimination of Chinese dianhong black tea grades based on a handheld spectroscopy system coupled with chemometrics. Food Sci. Nutr. 2020, 8, 2015–2024.

- Herrero-Langreo, A.; Lunadei, L.; Lleo, L. Multispectral vision for monitoring peach ripeness. J. Food Sci. 2011, 76, E174–E187.

- Qin, J.W.; Chao, K.L.; Kim, M.S.; Lu, R.F.; Burks, T.F. Hyperspectral and multispectral imaging for evaluating food safety and quality. J. Food Eng. 2013, 118, 157–171.

- Feng, C.H.; Makino, Y.; Oshita, S.; Martín, J.F.G. Hyperspectral imaging and multispectral imaging as the novel techniques for detecting defects in raw and processed meat products: Current state-of-the-art research advances. Food Control 2018, 84, 165–176.

- Overview of Yingde. Available online: http://www.yingde.gov.cn/ydgk/sqgk/content/post_856552.html (accessed on 21 February 2021).

- Lin, Y.H.; Wu, Y.; Luo, L.; Wang, K.; Zhou, X.Z.; Zhang, R.X. Climate characteristics and main meteorological disasters in Yingde City. Rural Econ. Technol. 2010, 21, 122–124.

- Balasundram, S.K.; Kamlesh, G.; Redmond, R.S.; Ganesan, V. Precision agriculture technologies for management of plant diseases. In Plant Disease Management Strategies for Sustainable Agriculture through Traditional and Modern Approaches, Malaysia; Springer: Cham, Switzerland, 2020; pp. 259–278.

- Lowe, D.G. Distinctive image features from scale-invariant keypoints. Int. J. Comput. Vis. 2004, 60, 91–110.

- Woebbecke, D.M.; Meyer, G.E.; Von Bargen, K.; Mortensen, D.A. Color indexes for weed identification under various soil, residue, and lighting conditions. Trans. ASAE 1995, 38, 259–269.

- Otsu, N. A threshold selection method from gray-level histograms. IEEE Trans. Syst. Man Cybern. 1979, 9, 62–66.

- Rouse, J.W.; Haas, R.H.; Schell, J.A.; Deering, D.W. Monitoring vegetation systems in the great plains with ERTS. In Proceedings of the Third Earth Resources Technology Satellite—1 Symposium, Goddard Space Flight Center, Greenbelt, MD, USA, 10–14 December 1973; pp. 309–317.

- Roujean, J.L.; Breon, F.M. Estimating PAR absorbed by vegetation from bidirectional reflectance measurements. Remote Sens. Environ. 1995, 51, 375–384.

- Jordan, C.F. Derivation of leaf area index from quality of light on the forest floor. Ecology 1969, 50, 535–761.

- Rondeaux, G.; Steven, M.; Baret, F. Optimization of soil-adjusted vegetation indices. Remote Sens. Environ. 1996, 55, 95–107.

- Richardson, A.J.; Wiegand, C.L. Distinguishing vegetation from soil background information. Photogramm. Eng. Remote Sens. 1977, 43, 1541–1552.

- Goel, N.S.; Qin, W. Influences of canopy architecture on relationships between various vegetation indices and LAI and Fpar: A computer simulation. Int. J. Remote Sens. 1994, 10, 309–347.

- Huete, A.; Didan, J.; Miura, T.; Rodriguez, E.P.; Gao, X.; Ferreira, L.G. Overview of the radiometric and biophysical performance of the MODIS vegetation indices. Remote Sens. Environ. 2002, 83, 195–213.

- Barnes, E.M.; Clarke, T.R.; Richards, S.E.; Colaizzi, P.D.; Haberland, J.; Kostrzewski, M.; Waller, P.; Choi, C.; Riley, E.; Thompson, T.; et al. Coincident detection of crop water stress, nitrogen status and canopy density using ground based multispectral data. In Proceedings of the Fifth International Conference on Precision Agriculture, Bloomington, MN, USA, 16–19 July 2000; Volume 1619.

- Zarco-Tejada, P.J.; Miller, J.R.; Noland, T.L.; Mohammed, G.H.; Sampson, P.H. Scaling-up and model inversion methods with narrowband optical indices for chlorophyll content estimation in closed forest canopies with hyperspectral data. IEEE Trans. Geosci. Remote Sens. 2001, 39, 1491–1507.

- Zarco-Tejada, P.J.; Berjón, A.; López-Lozano, R.; Miller, J.; Martín, P.; Cachorro, V.; González, M.R.; de Frutos, A. Assessing vineyard condition with hyperspectral indices: Leaf and canopy reflectance simulation in a row-structured discontinuous canopy. Remote Sens. Environ. 2005, 99, 271–287.

- Dash, J.; Curran, P.J. Evaluation of the MERIS terrestrial chlorophyll index (MTCI). Adv. Space Res. 2007, 39, 271–287.

- Gitelson, A.A.; Kaufman, Y.J.; Stark, R.; Rundquist, D. Novel algorithms for remote estimation of vegetation fraction. Remote Sens. Environ. 2002, 80, 76–87.

- Gitelson, A.A.; Kaufman, Y.J.; Merzlyak, M.N. Use of a green channel in remote sensing of global vegetation from EOS-MODIS. Remote Sens. Environ. 1996, 58, 289–298.

- Gitelson, A.A. Wide dynamic range vegetation index for remote quantification of biophysical characteristics of vegetation. J. Plant Physiol. 2004, 161, 165–173.

- Liu, J.; Moore, J.M. Hue image RGB colour composition. A simple technique to suppress shadow and enhance spectral signature. Int. J. Remote Sens. 1990, 11, 1521–1530.

- Tucker, C.J. Red and photographic infrared linear combinations for monitoring vegetation. Remote Sens. Environ. 1979, 8, 127–150.

- Merzlyak, M.N.; Gitelson, A.A.; Chivkunova, O.B.; Rakitin, V.Y. Non-destructive optical detection of pigment changes during leaf senescence and fruit ripening. Physiol. Plant. 1999, 106, 135–141.

- Penuelas, J.; Baret, F.; Filella, I. Semi-empirical indices to assess carotenoids/chlorophyll a ratio from leaf spectral reflectance. Photosynthetica 1995, 31, 221–230.

- Gamon, J.A.; Surfus, J.S. Assessing leaf pigment content and activity with a reflectometer. New Phytol. 1999, 143, 105–117.

- Haboudane, D.; Miller, J.R.; Pattey, E.; Zarco-Tejada, P.J.; Strachan, I.B. Hyperspectral vegetation indices and novel algorithms for predicting green LAI of crop canopies: Modeling and validation in the context of precision agriculture. Remote Sens. Environ. 2004, 90, 337–352.

- El-Shikha, D.M.; Barnes, E.M.; Clarke, T.R.; Hunsaker, D.J.; Haberland, J.A.; Pinter, P.J., Jr.; Waller, P.M.; Thompson, T.L. Remote sensing of cotton nitrogen status using the canopy chlorophyll content index (CCCI). Trans. ASABE 2008, 51, 73–82.

- Hunt, E.R.; Doraiswamy, P.C.; McMurtrey, J.E.; Daughtry, C.S.T.; Perry, E.M.; Akhmedov, B. A visible band index for remote sensing leaf chlorophyll content at the canopy scale. Int. J. Appl. Earth Obs. Geoinf. 2013, 21, 103–112.

- Haralick, R.M.; Shanmugam, K.; Dinstein, I.H. Textural features for image classification. IEEE Trans. Syst. Man Cybern. 1973, SMC-3, 610–621.

- Haralick, R.M. Statistical and structural approaches to texture. Proc. IEEE 1979, 67, 786–804.

- Huang, X.; Liu, X.B.; Zhang, L.P. A multichannel gray level co-occurrence matrix for multi/hyperspectral image texture representation. Remote Sens. 2014, 6, 8424–8445.

- Manjunath, B.S.; Ma, W.Y. Texture features for browsing and retrieval of image data. IEEE Trans. Pattern Anal. Mach. Intell. 1996, 18, 837–842.

- Choi, J.Y.; Ro, Y.M.; Plataniotis, K.N. Color local texture features for color face recognition. IEEE Trans. Image Process. 2012, 21, 1366–1380.

- Guo, Z.H.; Zhang, L.; Zhang, D.W. A completed modeling of local binary pattern operator for texture classification. IEEE Trans. Image Process. 2010, 19, 1657–1663.

- Hong, Y.M.; Leng, C.C.; Zhang, X.Y. HOLBP: Remote sensing image registration based on histogram of oriented local binary pattern descriptor. Remote Sens. 2021, 13, 2328.

- Wold, S.; Ruhe, A.; Wold, H.; Dunn, W.J. The collinearity problem in linear regression. The partial least squares (PLS) approach to generalized inverses. SIAM J. Sci. Stat. Comput. 1984, 5, 735–743.

- Abdi, H. Partial least square regression (PLS Regression). In Encyclopedia of Social Science Research Methods; SAGE: Thousand Oaks, CA, USA, 2003; pp. 792–795.

- Peng, X.; Shi, T.; Song, A.; Chen, Y.; Gao, W. Estimating soil organic carbon using VIS/NIR spectroscopy with SVMR and SPA methods. Remote Sens. 2014, 6, 2699–2717.

- Walczak, B.; Massart, D.L. The radial basis functions—Partial least squares approach as a flexible non-linear regression technique. Anal. Chim. Acta 1996, 331, 177–185.

- Viscarra, R.R.; Behrens, T. Using data mining to model and interpret soil diffuse reflectance spectra. Geoderma 2010, 158, 46–54.

- Zhu, D.; Ji, B.; Meng, C.; Shi, B.; Tu, Z.; Qing, Z. The performance of ν-support vector regression on determination of soluble solids content of apple by acousto-optic tunable filter near-infrared spectroscopy. Anal. Chim. Acta 2007, 598, 227–234.

- Balabin, R.M.; Safieva, R.Z.; Lomakina, E.I. Comparison of linear and nonlinear calibration models based on near infrared (NIR) spectroscopy data for gasoline properties prediction. Chemom. Intell. Lab. Syst. 2007, 88, 183–188.

- Maimaitijiang, M.; Sagan, V.; Sidike, P. Comparing support vector machines to PLS for spectral regression applications. Remote Sens. 2020, 12, 1357.

- Li, Y.; Shao, X.; Cai, W. A consensus least squares support vector regression (LS-SVR) for analysis of near-infrared spectra of plant samples. Talanta 2007, 72, 217–222.

- Breiman, L. Random forests. Mach. Learn. 2001, 45, 5–32.

- Belgiu, M.; Dragut, L. Random forest in remote sensing: A review of applications and future directions. ISPRS J. Photogramm. Remote Sens. 2016, 114, 24–31.

- Rodriguez-Galiano, V.; Mendes, M.P.; Garcia-Soldado, M.J.; Chica-Olmo, M.; Ribeiro, L. Predictive modeling of groundwater nitrate pollution using random forest and multisource variables related to intrinsic and specific vulnerability: A case study in an agricultural setting (southern Spain). Sci. Total Environ. 2014, 476, 189–206.

- Gong, C.Z.; Buddenbaum, H.; Retzaff, R.; Udelhoven, T. An empirical assessment of angular dependency for RedEdge-M in sloped terrain viticulture. Remote Sens. 2019, 11, 2561.

- Su, J.Y.; Liu, C.C.; Hu, X.P.; Xu, X.M.; Guo, L.; Chen, W.H. Spatio-temporal monitoring of wheat yellow rust using UAV multispectral imagery. Comput. Electron. Agric. 2019, 167, 105035.

- Fernandez, C.I.; Leblon, B.; Wang, J.F.; Haddadi, A.; Wang, K.R. Detecting Infected cucumber plants with close-range multispectral imagery. Remote Sens. 2021, 13, 2948.

- Shin, J.I.; Seo, W.W.; Kin, T.; Park, J.; Woo, C.S. Using UAV Multispectral images for classification of forest burn severity-a case study of the 2019 gangneung forest fire. Forests 2019, 10, 1025.

- Albetis, J.; Jacquin, A.; Goulard, M.; Poilve, H.; Rousseau, J.; Clenet, H.; Dedieu, G.; Duthoit, S. On the potentiality of UAV multispectral imagery to detect flavescence doree and grapevine trunk diseases. Remote Sens. 2019, 11, 23.

- Jin, X.J.; Che, J.; Chen, Y. Weed identification using deep learning and image processing in vegetable plantation. IEEE Access 2021, 9, 10940–10950.

- Mendoza-Tafolla, R.O.; Ontiveros-Capurata, R.E.; Juarez-Lopez, P.; Alia-Tejacal, I.; Lopez-Martinez, V.; Ruiz-Alvarez, O. Nitrogen and chlorophyll status in romaine lettuce using spectral indices from RGB digital images. Zemdirbyste 2021, 108, 79–86.

- Minarik, R.; Langhammer, J.; Lendzioch, T. Automatic tree crown extraction from UAS multispectral imagery for the detection of bark beetle disturbance in mixed forests. Remote Sens. 2020, 12, 4081.

- Kawamura, K.; Asai, H.; Yasuda, T.; Soisouvanh, P.; Phongchanmixay, S. Discriminating crops/weeds in an upland rice field from UAV images with the SLIC-RF algorithm. Plant Prod. Sci. 2021, 24, 198–215.

- Zhang, J.; Yuan, X.D.; Lin, H. The extraction of urban built-up areas by integrating night-time light and POI data-a case study of Kunming, China. IEEE Access 2021, 9, 22417–22429.

- Liu, Y.; Dai, Q.; Liu, J.B.; Liu, S.B.; Yang, J. Study of burn scar extraction automatically based on level set method using remote sensing data. PLoS ONE 2014, 9, e87480.

- Suo, X.S.; Liu, Z.; Sun, L.; Wang, J.; Zhao, Y. Aphid identification and counting based on smartphone and machine vision. J. Sens. 2017, 2017, 3964376.

- Shi, Y.; Wang, W.; Gong, Q.; Li, D. Superpixel segmentation and machine learning classification algorithm for cloud detection in remote-sensing images. J. Eng. JOE 2019, 2019, 6675–6679.

- Kamble, B.; Kilic, A.; Hubbard, K. Estimating crop coefficients using remote sensing-based vegetation index. Remote Sens. 2012, 4, 1588–1602.

- Xue, J.R.; Su, B.F. Significant remote sensing vegetation indices: A review of developments and applications. J. Sens. 2017, 2017, 1353691.

- Glenn, E.P.; Huete, A.R.; Nagler, P.L.; Nelson, S.G. Relationship between remotely-sensed vegetation indices, canopy attributes and plant physiological processes: What vegetation indices can and cannot tell us about the landscape. Sensors 2008, 8, 2136–2160.

- Gitelson, A.A.; Viña, A.; Ciganda, V.; Rundquist, D.C.; Arkebauer, T.J. Remote estimation of canopy chlorophyll content in crops. Geophys. Res. Lett. 2005, 32, L08403.

- Xue, L.H.; Cao, W.X.; Luo, W.H.; Dai, T.B.; Zhu, Y. Monitoring leaf nitrogen status in rice with canopy spectral reflectance. Agron. J. 2004, 96, 135–142.

- Osco, L.P.; Ramos, A.P.M.; Pinheiro, M.M.F.; Moriya, É.A.S.; Imai, N.N.; Estrabis, N.; Ianczyk, F.; de’Araújo, F.F.; Liesenberg, V.; de Castro Jorge, L.A.; et al. A machine learning approach to predict nutrient content in valencia-orange leaf hyperspectral measurements. Remote Sens. 2020, 12, 906.

- Zheng, H.; Li, W.; Jiang, J.; Liu, Y.; Cheng, T.; Tian, Y.; Zhu, Y.; Cao, W.; Zhang, Y.; Yao, X. A comparative assessment of different modeling algorithms for estimating leaf nitrogen content in winter wheat using multispectral images from an unmanned aerial vehicle. Remote Sens. 2018, 10, 2026.

- Dong, T.; Liu, J.; Shang, J.; Qian, B.; Ma, B.; Kovacs, J.M.; Walters, D.; Jiao, X.; Geng, X.; Shi, Y. Assessment of red-edge vegetation indices for crop leaf area index estimation. Remote Sens. Environ. 2019, 222, 133–143.

- Gong, P.; Pu, R.; Biging, G.S.; Larrieu, M.R. Estimation of forest leaf area index using vegetation indices derived from Hyperion hyperspectral data. IEEE Trans. Geosci. Remote Sens. 2003, 41, 1355–1362.

- Qi, J.G.; Chehbouni, A.; Huete, A.; Kerr, Y.; Sorooshian, S. A modified soil adjusted vegetation index. Remote Sens. Environ. 1994, 48, 119–126.

- Chen, J.M. Evaluation of vegetation indices and a modified simple ratio for boreal applications. Can. J. Remote Sens. 1996, 22, 229–242.

- Broge, N.H.; Leblanc, E. Comparing prediction power and stability of broadband and hyperspectral vegetation indices for estimation of green leaf area index and canopy chlorophyll density. Remote Sens. Environ. 2001, 76, 156–172.

- Sa, I.; Popovic, M.; Khanna, R.; Chen, Z.T.; Lottes, P. WeedMap: A large-scale semantic weed mapping framework using aerial multispectral imaging and deep neural network for precision farming. Remote Sens. 2018, 10, 1432.

- Zhang, L.P.; Huang, X.; Huang, B.; Li, P.X. A pixel shape index coupled with spectral information for classification of high spatial resolution remotely sensed imagery. IEEE Trans. Geosci. Remote Sens. 2006, 44, 2950–2961.

- Gruner, E.; Wachendorf, M.; Astor, T. The potential of UAV-borne spectral and textural information for predicting aboveground biomass and N fixation in legume-grass mixtures. PLoS ONE 2020, 15, e0234703.

- Pla, F.; Gracia, G.; Garcia-Sevilla, P.; Mirmehdi, M.; Xie, X.H. Multi-spectral texture characterisation for remote sensing image segmentation. In Lecture Notes in Computer Science, Pattern Recognition and Image Analysis. IbPRIA 2009, Povoa de Varzim, Portugal, 10–12 June 2009; Springer: Berlin/Heidelberg, Germany, 2009; p. 257.

- Zehtabian, A.; Nazari, A.; Ghassemian, H.; Gribaudo, M. Adaptive restoration of multispectral datasets used for SVM classification. Eur. J. Remote Sens. 2015, 48, 183–200.

- Zhang, J.; Li, P.J.; Wang, J.F. Urban built-up area extraction from landsat TM/ETM plus images using spectral information and multivariate texture. Remote Sens. 2014, 6, 7339–7359.

- Moskal, L.M.; Styers, D.M.; Halabisky, M. Monitoring Urban tree cover using object-based image analysis and public domain remotely sensed data. Remote Sens. 2011, 3, 2243–2262.

- Gini, R.; Sona, G.; Ronchetti, G.; Passoni, D.; Pinto, L. Improving tree species classification using UAS multispectral images and texture measures. ISPRS Int. Geo-Inf. 2018, 7, 315.

- Qian, Y.T.; Ye, M.C.; Zhou, J. Hyperspectral image classification based on structured sparse logistic regression and three-dimensional wavelet texture features. IEEE Trans. Geosci. Remote Sens. 2013, 51, 2276–2291.

- Laurin, G.V.; Puletti, N.; Hawthorne, W.; Liesenberg, V.; Corona, P.; Papale, D.; Chen, Q.; Valentini, R. Discrimination of tropical forest types, dominant species, and mapping of functional guilds by hyperspectral and simulated multispectral Sentinel-2 data. Remote Sens. Environ. 2016, 176, 163–176.

- Soh, L.K.; Tsatsoulis, C. Texture analysis of SAR sea ice imagery using gray level co-occurrence matrices. IEEE Trans. Geosci. Remote Sens. 1999, 37, 780–795.

- Zhang, X.; Liu, F.; He, Y.; Li, X. Application of hyperspectral imaging and chemometric calibrations for variety discrimination of maize seeds. Sensors 2012, 12, 17234–17426.

- Tian, B.; Shaikh, M.A.; Azimi-Sadjadi, M.R.; Vonder Haar, T.H.; Reinke, D.L. A study of cloud classification with neural networks using spectral and textural features. IEEE Trans. Neural Netw. 1999, 10, 138–151.

- Guo, Y.H.; Fu, Y.H.; Chen, S.Z.; Bryant, C.R.; Li, X.X.; Senthilnath, J.; Sun, H.Y.; Wang, S.X.; Wu, Z.F.; de Beurs, K. Integrating spectral and textural information for identifying the tasseling date of summer maize using UAV based RGB images. Int. J. Appl. Earth Obs. Geoinf. 2021, 102, 102435.

- Wei, L.F.; Wang, K.; Lu, Q.K.; Liang, Y.J.; Li, H.B.; Wang, Z.X.; Wang, R.; Cao, L.Q. Crops fine classification in airborne hyperspectral imagery based on multi-feature fusion and deep learning. Remote Sens. 2021, 13, 2917.

- Shan, C.F.; Gong, S.G.; McOwan, P.W. Facial expression recognition based on Local Binary Patterns: A comprehensive study. Image Vis. Comput. 2009, 27, 803–816.

- Motlagh, N.H.; Bagaa, M.; Taleb, T. UAV-based IoT platform: A crowd surveillance use case. IEEE Commun. Mag. 2017, 55, 633–647.

- Yang, K.L.; Gong, Y.; Fang, S.H.; Duan, B.; Yuan, N.G.; Peng, Y.; Wu, X.T.; Zhu, R.S. Combining spectral and texture features of UAV images for the remote estimation of rice LAI throughout the entire growing season. Remote Sens. 2021, 13, 3001.

- Mateos-Aparicio, G. Partial Least Squares (PLS) methods: Origins, evolution, and application to social sciences. Commun. Stat.-Theory Methods. 2011, 40, 2035–2317.

- Moghaddam, T.M.; Razavi, S.M.A.; Taghizadeh, M.; Sazgarnia, A. Sensory and instrumental texture assessment of roasted pistachio nut/kernel by partial least square (PLS) regression analysis: Effect of roasting conditions. J. Food Sci. Technol. 2016, 53, 370–380.

- Malegori, C.; Marques, E.J.N.; de Freitas, S.T.; Pimentel, M.F.; Pasquini, C.; Casiraghi, E. Comparing the analytical performances of Micro-NIR and Ft-NIR spectrometers in the evaluation of acerola fruit quality, using PLS and SVM regression algorithms. Talanta 2017, 165, 112–116.

- Genisheva, Z.; Quintelas, C.; Mesquita, D.P.; Ferreira, E.C.; Oliveira, J.M.; Amaral, A.L. New PLS analysis approach to wine volatile compounds characterization by near infrared spectroscopy (NIR). Food Chem. 2018, 246, 172–178.

- Razaque, A.; Frej, M.B.; Almi’ani, M.; Alotaibi, M.; Alotaibi, B. Improved support vector machine enabled radial basis function and linear variants for remote sensing image classification. Sensors 2021, 21, 4431.

- Xu, X.G.; Fan, L.L.; Li, Z.H.; Meng, Y.; Feng, H.K.; Yang, H.; Xu, B. Estimating leaf nitrogen content in corn based on information fusion of multiple-sensor imagery from UAV. Remote Sens. 2021, 13, 340.

| Band Number | Band Name | Center Wavelength (nm) | Bandwidth FWHM (nm) |
|---|---|---|---|
| 1 | Blue | 475 | 20 |
| 2 | Green | 560 | 20 |
| 3 | Red | 668 | 10 |
| 4 | Near-IR | 840 | 40 |
| 5 | Red Edge | 717 | 10 |
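Each band is specified by its center wavelength and full width at half maximum (FWHM), so its nominal spectral window is roughly center ± FWHM/2. The short sketch below derives these windows from the table and sorts the bands by wavelength. This shows that band 5 (red edge, about 712 to 722 nm) lies between the red and near-infrared bands even though it is numbered last. Treating the FWHM limits as hard edges is a simplification, because real band responses taper off. The names `BANDS` and `band_windows` are illustrative.

```python
# Band number -> (name, center wavelength in nm, FWHM in nm), copied from the table.
BANDS = {
    1: ("Blue", 475, 20),
    2: ("Green", 560, 20),
    3: ("Red", 668, 10),
    4: ("Near-IR", 840, 40),
    5: ("Red Edge", 717, 10),
}


def band_windows(bands=BANDS):
    """Return [(number, name, low_nm, high_nm)] sorted by center wavelength."""
    rows = []
    for number, (name, center, fwhm) in bands.items():
        half = fwhm / 2
        rows.append((number, name, center - half, center + half))
    return sorted(rows, key=lambda row: (row[2] + row[3]) / 2)


if __name__ == "__main__":
    for number, name, low, high in band_windows():
        print(f"band {number} {name:<8} {low:.0f}-{high:.0f} nm")
```

Sorted by wavelength, the bands come out in the order 1, 2, 3, 5, 4. The near-infrared window (820 to 860 nm) is the widest of the five.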

| Tea Polyphenols (VIs) | Correlation | Total Sugars (VIs) | Correlation | Free Amino Acids (VIs) | Correlation | Caffeine (VIs) | Correlation |
|---|---|---|---|---|---|---|---|
| NDVI | 0.462 | WDRVI | 0.782 | BI | 0.475 | G | 0.546 |
| OSAVI | 0.462 | SIPI | 0.783 | NDVI | 0.483 | GNDVI | 0.551 |
| WDRVI | 0.464 | ED | 0.791 | OSAVI | 0.483 | V | 0.565 |
| R | 0.471 | NDVI | 0.796 | RVI | 0.491 | R | 0.567 |
| BI | 0.499 | OSAVI | 0.796 | WDRVI | 0.491 | B | 0.571 |
| V | 0.523 | B | 0.803 | NDRE | 0.5 | SIPI | 0.592 |
| NDRE | 0.534 | BI | 0.812 | V | 0.503 | NDVI | 0.593 |
| G | 0.543 | R | 0.815 | G | 0.516 | OSAVI | 0.593 |
| GNDVI | 0.544 | G | 0.815 | GNDVI | 0.52 | RVI | 0.596 |
| ED | 0.556 | V | 0.827 | ED | 0.522 | WDRVI | 0.598 |
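The table lists ten VIs or single bands per quality parameter with their correlation values, sorted in ascending order. The text shown here does not state how these features were chosen or which coefficient was used. Assuming Pearson's r ranked by absolute value, the sketch below reproduces this kind of screening for one quality parameter. It correlates every feature column with the measured values and returns the k strongest. The function name `top_correlated` is hypothetical, and the feature names and k are supplied by the caller. No values here come from the authors' data.

```python
import numpy as np


def top_correlated(features: np.ndarray, names, target: np.ndarray, k: int = 10):
    """Rank feature columns by |Pearson r| with a target and return the top k.

    features: (n_samples, n_features) matrix, e.g. VIs and band reflectances.
    names: one label per column.
    target: (n_samples,) measured quality parameter, e.g. tea polyphenols.
    Returns a list of (name, r) pairs, largest |r| first.
    """
    X = np.asarray(features, dtype=float)
    y = np.asarray(target, dtype=float)
    Xc = X - X.mean(axis=0)
    yc = y - y.mean()
    # Pearson r for every column at once: covariance / (sd_x * sd_y).
    r = (Xc.T @ yc) / (np.sqrt((Xc ** 2).sum(axis=0)) * np.sqrt((yc ** 2).sum()))
    order = np.argsort(-np.abs(r), kind="stable")[:k]
    return [(names[i], float(r[i])) for i in order]
```

Ranking by |r| keeps strongly negative correlations as candidates. The signed value is still returned so the direction of each relationship is not lost.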

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Chen, L.; Xu, B.; Zhao, C.; Duan, D.; Cao, Q.; Wang, F. Application of Multispectral Camera in Monitoring the Quality Parameters of Fresh Tea Leaves. Remote Sens. 2021, 13, 3719. https://doi.org/10.3390/rs13183719


Chicago/Turabian Style: Chen, Longyue, Bo Xu, Chunjiang Zhao, Dandan Duan, Qiong Cao, and Fan Wang. 2021. "Application of Multispectral Camera in Monitoring the Quality Parameters of Fresh Tea Leaves" Remote Sensing 13, no. 18: 3719. https://doi.org/10.3390/rs13183719
