1. Introduction

Video SAR (ViSAR) displays sequential SAR images at a given frame rate and can therefore monitor a scene dynamically. Compared with traditional SAR imaging results (i.e., static images), ViSAR can provide long-time persistent surveillance of a region of interest (ROI) at a high frame rate and can obtain the trajectories of ground moving targets [

1,

2]. To monitor a scene better, both a high frame rate and a high resolution are required. For a given azimuth resolution, the frame rate is proportional to the carrier frequency. Thus, ViSAR operating at a high carrier frequency, such as the Ka band or THz band, can achieve high resolution and a high frame rate simultaneously [

3,

4]. One drawback of THz ViSAR is its limited detection range, which results from hardware limitations. Therefore, airborne THz ViSAR experiments are difficult to carry out. At present, institutions such as the Jet Propulsion Laboratory (JPL) [

5], Pacific Northwest National Laboratory (PNNL) [

6] and the Fraunhofer Institute for High Frequency Physics and Radar Techniques (FHR) [

7] have successively developed several THz band SAR systems. The working range of the MIRANDA-300 system [

8], designed by FHR and operating with a center frequency of 300 GHz, was up to 500 m in 2015.

Spotlight mode is the main mode for ViSAR [

9]. The Back-Projection (BP) [

10,

11,

12] and the polar format algorithm (PFA) [

4,

13,

14] are two useful methods for spotlight SAR imaging. Compared with the BP algorithm, PFA is more efficient and easier to integrate with autofocus algorithms like Map-Drift (MD) [

15] and phase gradient autofocus (PGA) [

16]. Therefore, PFA is widely used in spotlight SAR imaging. However, the phase error introduced by the planar approximation of PFA causes image distortion and defocusing [

17,

18,

19]. As the scene expands, the distortion and defocusing become increasingly severe, so the depth of focus (DOF) of PFA is limited. The image distortion can be corrected by a 2D image interpolation that projects the image grid onto the actual positions [

18]. Some methods, such as spatially variant post filtering (SVPF) [

17,

20,

21] and sub-block imaging [

22], have been proposed to avoid defocusing of the imaging results.

To achieve high resolution, phase error caused by non-ideal motion must be compensated for, especially in the case of SAR systems with high carrier frequency, because the short wavelength is more sensitive to motion error [

23,

24,

25]. The most common motion compensation (MOCO) method is GPS-based MOCO, which relies on aircraft motion data measured by GPS. This method is simple and efficient, which makes it suitable for real-time MOCO. However, it depends on a high-precision GPS, which increases the cost of the experiment, and centimeter-level GPS accuracy is difficult to achieve. Apart from GPS-based MOCO, several autofocus techniques based on the echo data have been proposed. One type is parametric and employs a polynomial phase error model, e.g., the reflectivity displacement method (RDM) [

26] and the MD technique [

15,

25]. The other type of solution is nonparametric, e.g., the rank one phase estimator (ROPE) [

27], shear averaging [

28] and PGA [

16,

25]. The effectiveness of these methods has been demonstrated with experimental data. However, for a very fine azimuth resolution, the phase error caused by motion error tends to be of higher order. Under such conditions, even if the residual range cell migration (RCM) is compensated for, some autofocus methods still suffer from performance degradation. MD can only estimate the quadratic phase error and therefore cannot compensate for higher order phase errors. In theory, PGA can compensate for phase errors of any order. Nevertheless, the main lobe widening and energy loss in the presence of a significant high-order phase error degrade the performance of PGA [

29,

30]. In recent years, MOCO based on wavelet transform (WT) has been proposed to correct the vibration error in THz band [

31]. Neural networks have also been used to form high-quality images [

32]. With the increasing requirement for resolution, the topography variation, phase unwrapping and two-axis gimbal stabilization still need to be considered [

33,

34,

35].

In this paper, we propose a ViSAR generation method and present airborne ViSAR results in the THz and Ku bands. First, the PFA for spotlight SAR is described, and its planar approximation is analyzed. The relationship between the coherent accumulation angle and the azimuth resolution, and the relationship between the video frame rate and the overlapping ratio, are analyzed in depth. Furthermore, considering the difficulty of MOCO for fine azimuth resolution, a three-step MOCO that combines GPS-based MOCO, MD and PGA is proposed for high resolution imaging. Because the data are refocused by MD before PGA, the performance of PGA is improved. To generate the video, problems such as jitter, non-uniform greyscale and low image SNR between images obtained from different aspect angles must also be overcome, so a ViSAR generation method is proposed to solve them. With the proposed method, satisfactory ViSAR results in the THz and Ku bands are obtained. To the best of our knowledge, this is the first airborne linear spotlight ViSAR result in the THz band. We demonstrate that extremely short sub-apertures and a low overlapping ratio can be used to generate high resolution and high frame rate ViSAR. The effectiveness of three-step MOCO is also validated. To further verify the ViSAR generation method on a wide observation scene, a circular SAR (CSAR) experiment in the Ku band is carried out. Compared with linear spotlight SAR, CSAR illuminates targets from wide aspect angles and is therefore capable of monitoring urban scenes. For example, the trajectories of ground moving targets can be obtained from their shadow information.

The main contributions of this paper are:

- (i). The three-step MOCO, which is applied to high resolution imaging for the first time, can effectively compensate for motion error;

- (ii). The ViSAR procedure proposed in this paper can effectively improve ViSAR quality.

This paper is organized as follows. Section 2 describes the ViSAR model. In Section 3, the ViSAR procedure is introduced in detail. In Section 4, the results of the experimental data are shown. Finally, the conclusion is given in Section 5.

2. ViSAR Model

2.1. Theory of ViSAR Imaging Algorithm

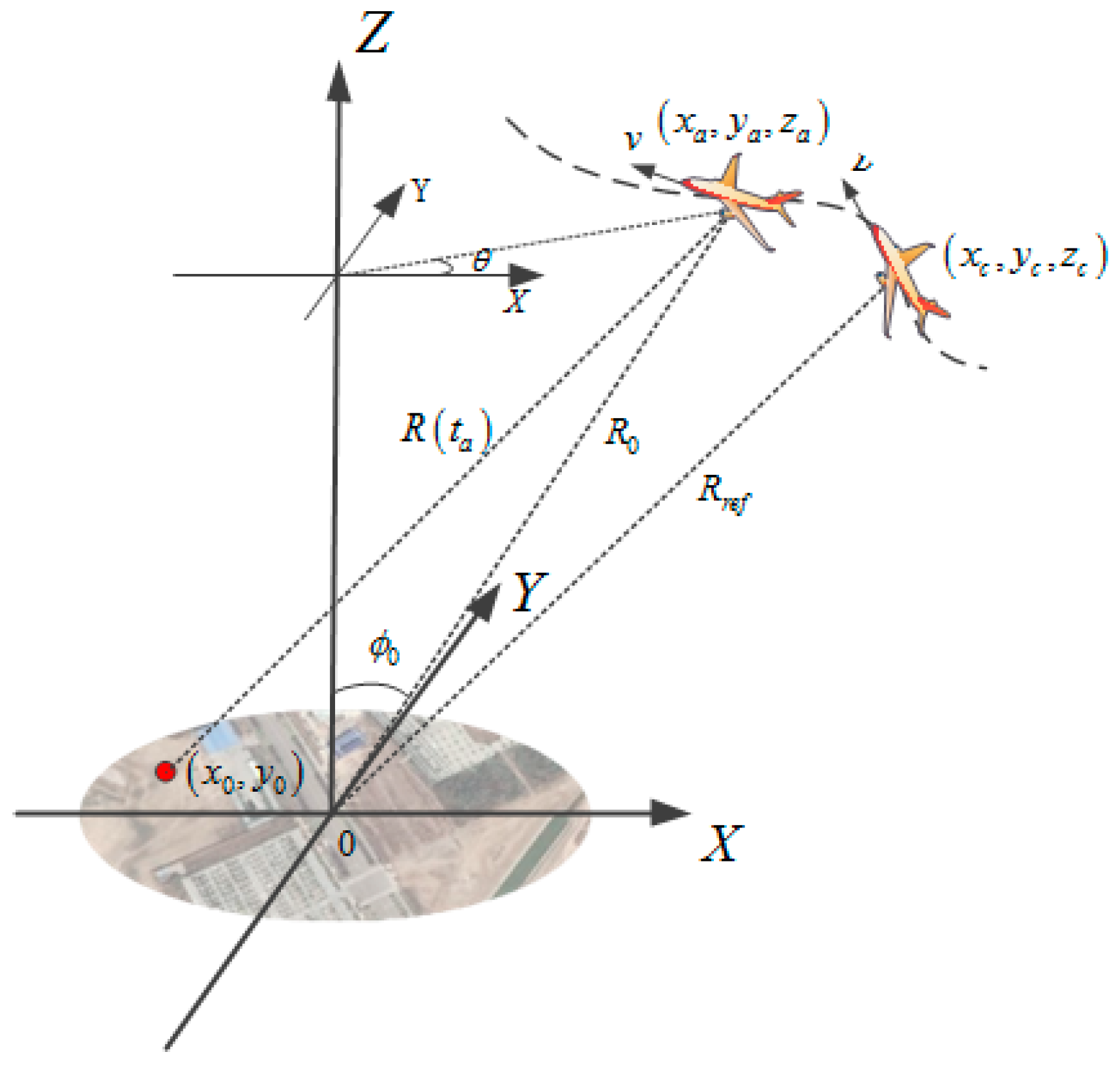

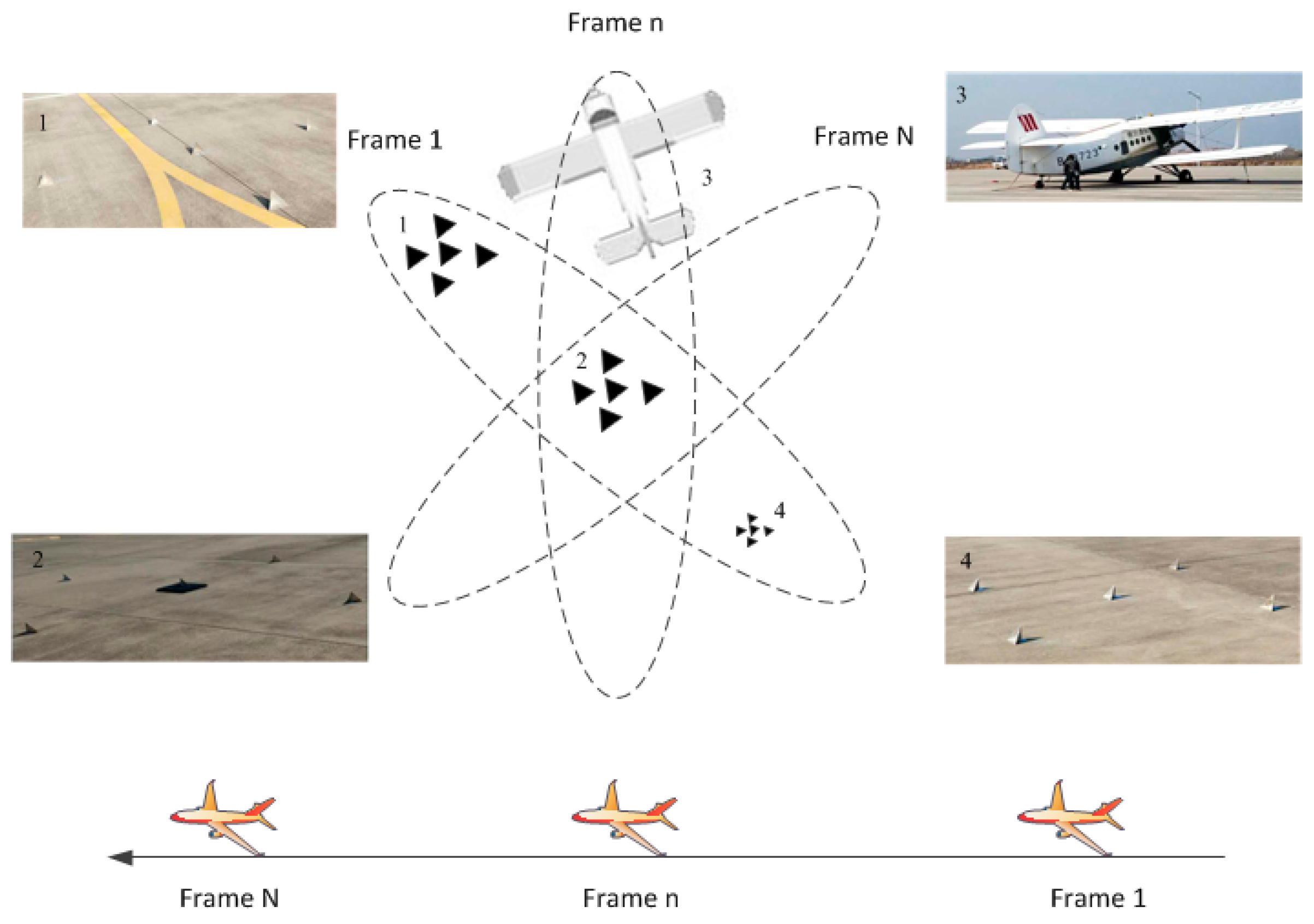

The geometry of airborne spotlight SAR is shown in

Figure 1. In

Figure 1, the radar antenna is continuously steered to illuminate a fixed scene as the platform flies along an imaginary line with the speed

. The echo of target can be described as

where

is the amplitude of signal,

is the signal time width,

is the center frequency,

is the chirp rate,

is the speed of light,

is the fast-time,

is the slow-time.

denotes the instantaneous distance between platform position

and target

and it can be described as

Let

denote the instantaneous slant range from platform position to scene center

Scene center is selected as the reference point for MOCO. To simplify the subsequent analysis without loss of generality, the curvilinear aperture is assumed to have occurred in the slow time interval of

, therefore the time instant at the aperture center is defined as the initial time

. The coordinates of the aperture center are denoted by

and the slant range

can be expressed as

The de-chirp signal can be expressed as

where

. The last term in Equation (5) is the residual video phase (RVP) which should be removed. The expression of Equation (5) in wavenumber domain is

In Equation (6),

and

.

can be expressed with Taylor expansion

is the higher order term which is neglected in planar wave approximation [

10,

13,

14].

and

are the depression angle and the corresponding azimuth angle in the coordinate system, which are given by

Substituting Equation (7) into Equation (6), the expression can be rewritten as

where

,

. According to the expression of

and

, the spectrum in wavenumber domain is nonuniform. In order to project the nonuniform result onto a uniform grid in wavenumber domain, the Line-of-Sight Polar Interpolation (LOSPI) is applied [

3,

10]. In LOSPI, the coordinate system is rotated to the direction of the Line of Sight (LOS). After interpolation, a 2D FFT is applied to obtain the desired image

When the imaging scene is large, the

in Equation (7) cannot be eliminated. The phase error caused by

may seriously degrade the image quality. The linear phase error causes distortion, and the quadratic phase error (QPE) causes defocusing. From the expression of the QPE, the maximal allowable scene radius for a focused image can be derived [

3,

10].

where

is azimuth resolution,

is wavelength,

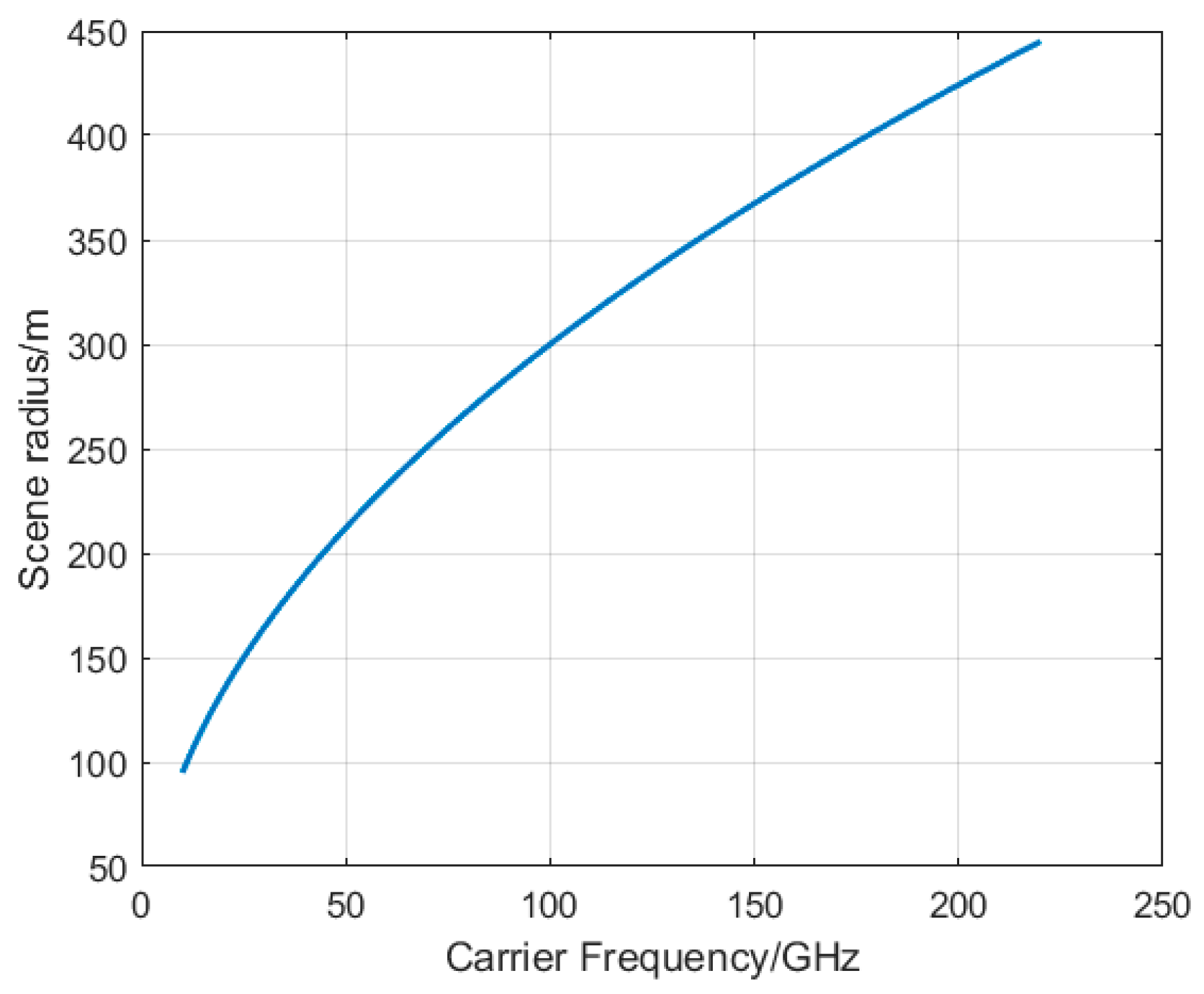

is the maximal allowable scene radius. For given azimuth resolution 0.15 m, the relationship between scene radius and carrier frequency is shown in

Figure 2.

As shown in Figure 2, the higher the carrier frequency, the larger the maximal allowable scene radius. When the allowable region is larger than the ROI, the QPE of traditional PFA images can be neglected and all targets in the ROI are well focused. If the ROI is larger than the allowable region, SVPF or sub-block imaging [

17,

20,

22] can be used to correct it. However, the image distortion cannot be neglected [

3]. To correct image distortion, 2D image interpolation is applied [

10]. Because of distortion, the target actual location

is located at

in PFA image. The relationship between

and

can be expressed as

where

is the aperture center’s azimuth angle. According to Equation (12), the distortion can be eliminated by an interpolation operation that can project

onto an actual location

.

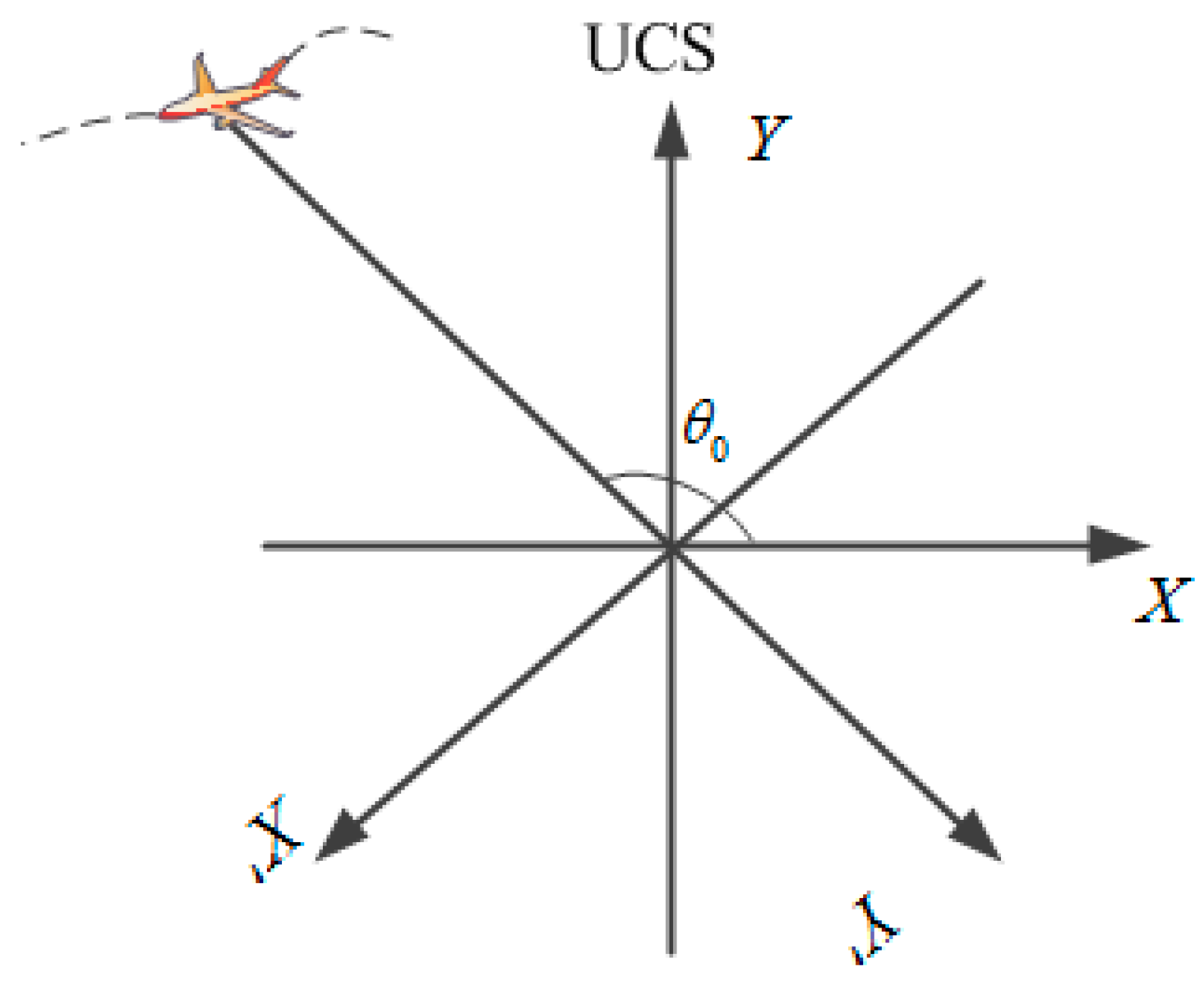

The process of LOSPI and 2D image interpolation is shown in

Figure 3.

As mentioned above, the imaging result of LOSPI is rotated to the direction of LOS. Therefore, the images in different frames are in different coordinate systems. The ViSAR images are formed in the unified coordinate system (UCS), so all images should be rotated into UCS [

3]. As shown in

Figure 4, the images in different frames are rotated

clockwise into UCS. The relationship between each coordinate system

and UCS

can be expressed as

2.2. Resolution and Frame Rate Analysis

According to SAR imaging theory [

36,

37,

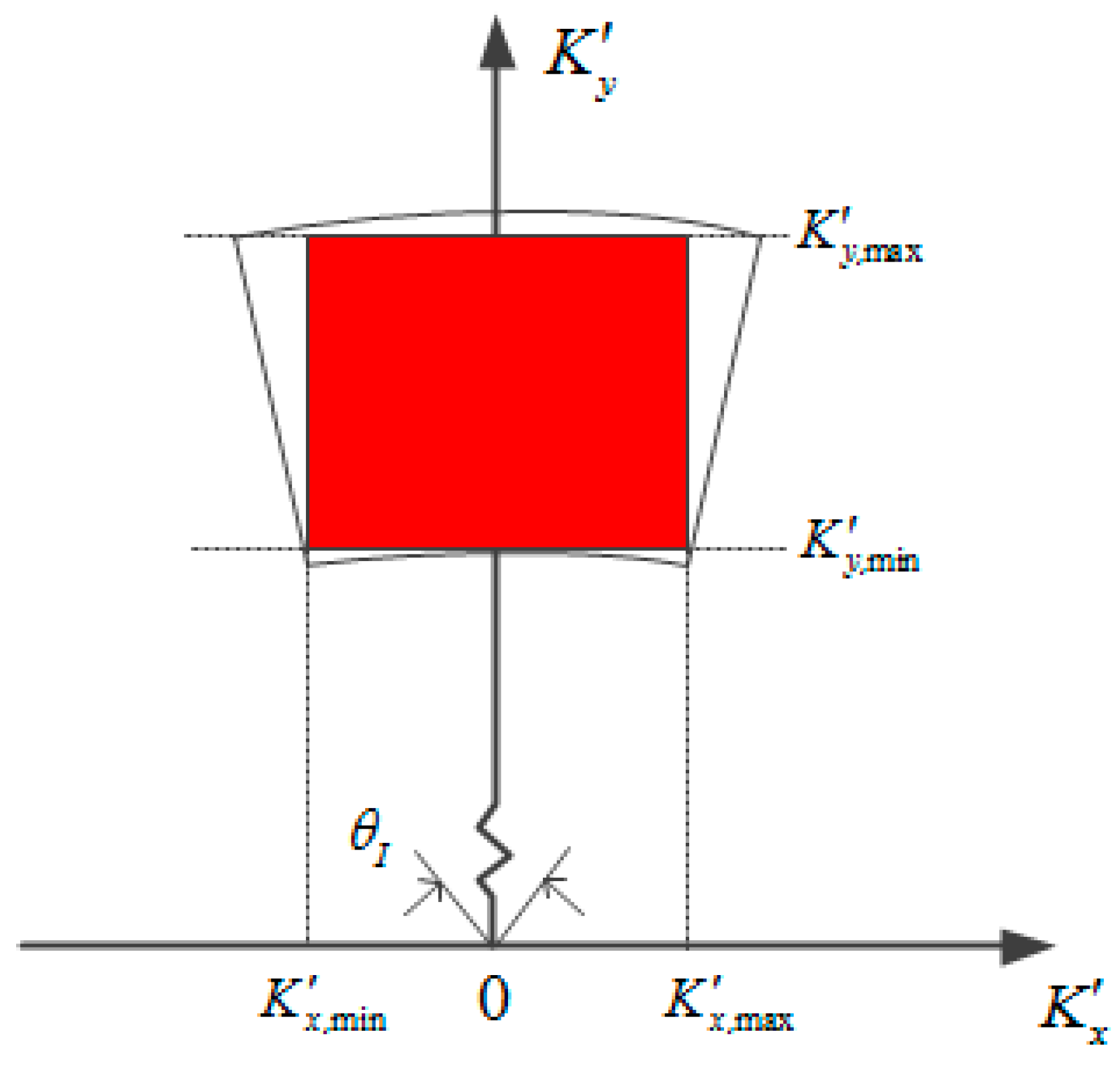

38], the resolution of SAR image is related to the width of wavenumber spectrum. As shown in

Figure 5, after LOSPI the range of

and

can be expressed as

where

and

is bandwidth,

denotes azimuth integration angle. The expression of range resolution

and azimuth resolution

is

Equation (14) is substituted into Equation (15). Because the azimuth integration angle is small and meets the condition of

, the azimuth and range resolution can be rewritten as [

38]

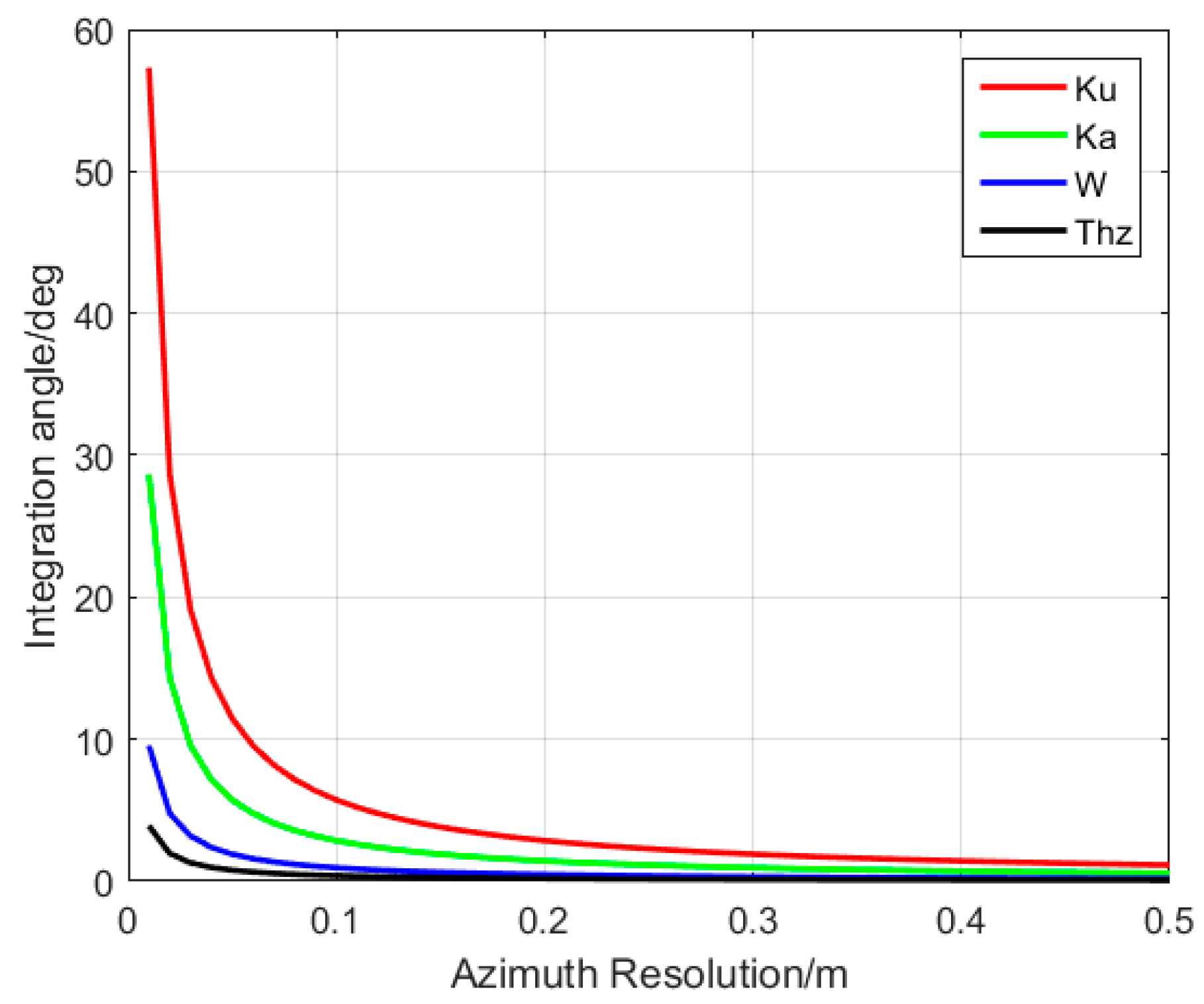

According to Equation (16), the azimuth resolution is inversely proportional to azimuth integration angle. The relationship between the azimuth integration angle and resolution in Ku, Ka, W and THz bands is shown in

Figure 6. A given high azimuth resolution can be achieved with a smaller azimuth integration angle when the carrier frequency is higher. Because PFA relies on a small synthetic aperture angle assumption, a low carrier frequency is poorly suited to high resolution PFA imaging.
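As a rough numerical illustration of this trend, the sketch below evaluates the integration angle needed for a 0.15 m azimuth resolution in each band. It assumes the textbook spotlight relation rho_a = lambda / (4 sin(theta / 2)), which reduces to lambda / (2 theta) for small angles and may differ from Equation (16) by window or geometry factors. The band centre frequencies and the function names are illustrative assumptions, not values taken from the experiments.

```python
import math

C = 299_792_458.0  # speed of light in m/s

# Representative centre frequencies per band (assumed for illustration only).
BANDS_HZ = {"Ku": 16e9, "Ka": 35e9, "W": 94e9, "THz": 220e9}


def integration_angle_rad(rho_a, fc):
    """Integration angle (rad) needed for azimuth resolution rho_a (m) at
    carrier frequency fc (Hz), assuming rho_a = lambda / (4 sin(theta / 2))."""
    wavelength = C / fc
    return 2.0 * math.asin(wavelength / (4.0 * rho_a))


def azimuth_resolution(theta, fc):
    """Inverse relation: azimuth resolution (m) for integration angle theta (rad)."""
    wavelength = C / fc
    return wavelength / (4.0 * math.sin(theta / 2.0))


# Integration angle in degrees for a 0.15 m azimuth resolution in each band.
angles_deg = {
    band: math.degrees(integration_angle_rad(0.15, fc))
    for band, fc in BANDS_HZ.items()
}

for band, angle in angles_deg.items():
    print(f"{band:>3}: {angle:.3f} deg")
```

Under these assumptions the required angle shrinks in proportion to the wavelength, which is why the small-angle assumption of PFA is easier to satisfy at higher carrier frequencies.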

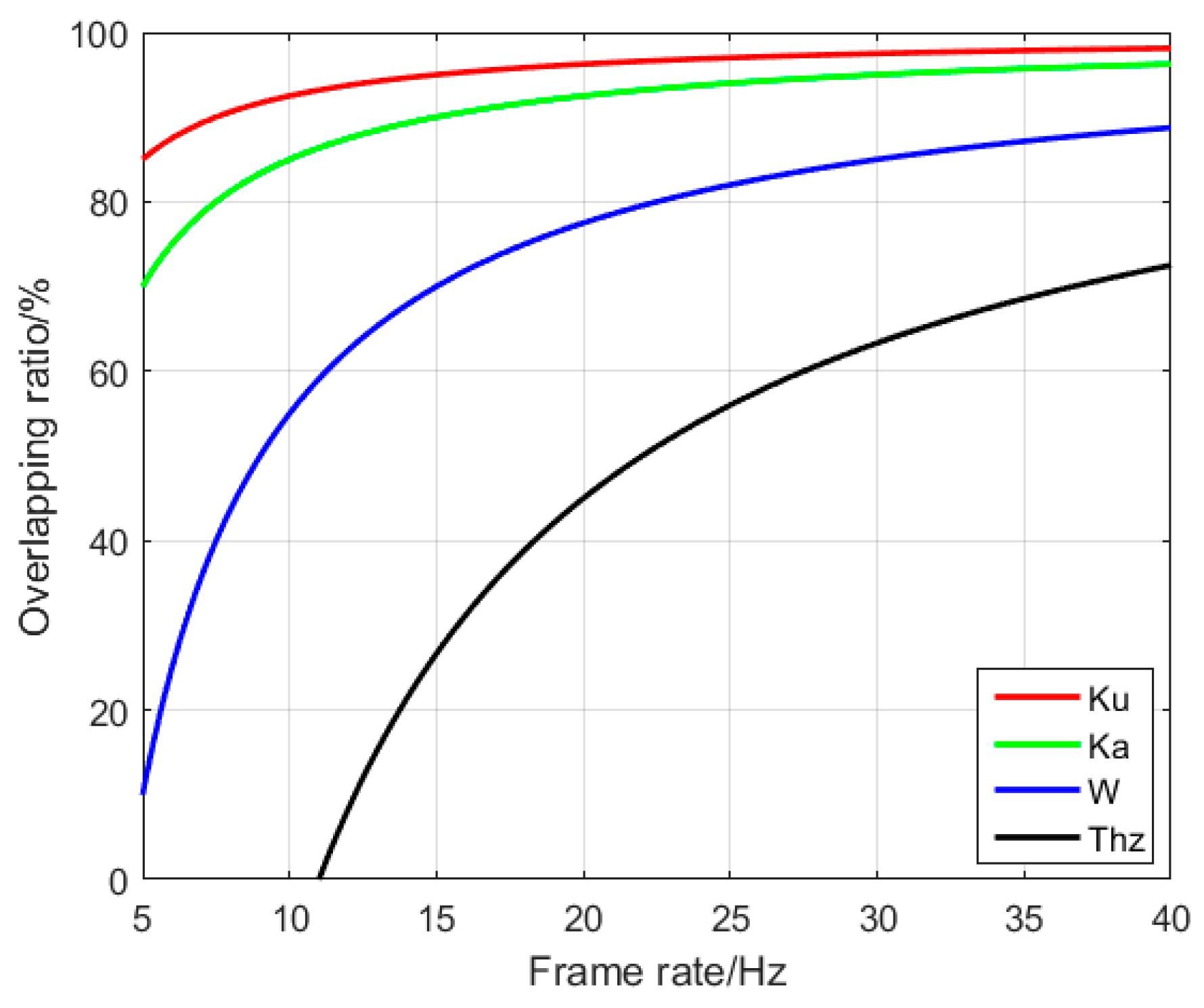

Assuming the required frame rate of ViSAR is

that can be written as [

3,

10]

where

denotes sub-aperture overlapping ratio. We set the

,

and

, then the relationship between overlapping ratio and frame rate is shown in

Figure 7. Generally, a frame rate above 5 Hz is required for the ViSAR to appear continuous. For the Ku band, the sub-aperture overlapping ratio needs to exceed 80% to meet this frame rate requirement.
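The dependence of frame rate on overlapping ratio can be sketched numerically. The code below assumes a commonly used form in which the sub-aperture time is T = lambda R / (2 v rho_a) and a new frame starts every T (1 - overlap) seconds; this is an assumption and may differ from Equation (17) in detail. The platform speed, slant range and function names are hypothetical and are not the parameters used for Figure 7.

```python
C = 299_792_458.0  # speed of light in m/s


def sub_aperture_time(fc, v, r, rho_a):
    """Time (s) needed to collect one sub-aperture for azimuth resolution rho_a,
    assuming an aperture length of lambda * r / (2 * rho_a) flown at speed v."""
    wavelength = C / fc
    return wavelength * r / (2.0 * v * rho_a)


def frame_rate_hz(fc, v, r, rho_a, overlap):
    """Frame rate when consecutive sub-apertures overlap by the given ratio."""
    if not 0.0 <= overlap < 1.0:
        raise ValueError("overlap must be in [0, 1)")
    return 1.0 / (sub_aperture_time(fc, v, r, rho_a) * (1.0 - overlap))


def required_overlap(fc, v, r, rho_a, target_hz):
    """Smallest overlap ratio that reaches target_hz (0 if none is needed)."""
    alpha = 1.0 - 1.0 / (target_hz * sub_aperture_time(fc, v, r, rho_a))
    return max(alpha, 0.0)


# Hypothetical geometry: 50 m/s platform, 1 km slant range, 0.15 m resolution.
V, R, RHO_A = 50.0, 1000.0, 0.15

for name, fc in (("Ku", 16e9), ("THz", 220e9)):
    rate = frame_rate_hz(fc, V, R, RHO_A, overlap=0.0)
    alpha = required_overlap(fc, V, R, RHO_A, target_hz=5.0)
    print(f"{name}: {rate:.2f} Hz without overlap, overlap for 5 Hz = {alpha:.2f}")
```

With these assumed values, the Ku-band case needs a much larger overlap than the THz case to reach 5 Hz, which is the qualitative behaviour described above.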

2.3. Three-Step Motion Compensation

During flight, the motion error introduced by airflow disturbance and attitude control seriously degrades SAR image quality. This is especially true in the terahertz band, where the short wavelength makes the phase more sensitive to motion error. Traditional GPS-based MOCO may not be sufficient for terahertz radar because of limited GPS accuracy. In this paper, a three-step MOCO is proposed that combines GPS-based MOCO with autofocus algorithms [

25].

Assuming the actual position of aircraft measured by GPS is

, the MOCO function can be expressed as

where

is the actual distance between the aircraft and the scene center. Multiplying Equation (6) by Equation (18) compensates for most of the motion error.

where

. Because of the very short wavelength of the THz radar and the limited GPS precision, a residual phase error remains after GPS-based MOCO, where

denotes the residual phase error,

denotes the residual motion error. The depression and corresponding azimuth angles can be rewritten as

After LOSPI, the signal can be rewritten as

According to [

39], the properties of phase error along

is

According to Equation (24), the two-dimensional coupling can be neglected, and the autofocus algorithm can rely on the phase gradient property. Moreover, the spatial variance of the phase error can be handled by segmenting the image. Because of GPS-based MOCO and the short aperture, the RCM introduced by the phase error can be considered smaller than a range resolution cell. Therefore, one-dimensional (1D) autofocus can be used to compensate for the azimuth phase error. Applying the range FFT, the signal in the range-Doppler domain is obtained as

Equation (25) shows that this signal model fits the assumptions of autofocus. PGA can therefore be applied directly to estimate the phase error

. Let

represent the expression of Equation (25) at target range cell,

is the azimuth position index.

where

is the length of full aperture.

where

is phase angle,

is the conjugate function of

. The estimator in Equation (27) is well known as PGA [

10,

16,

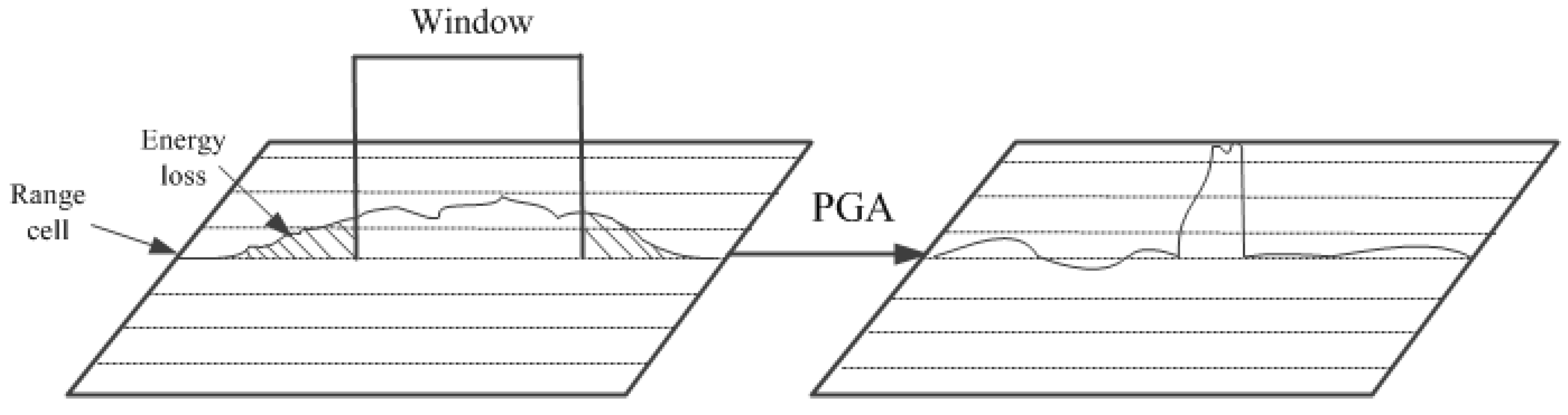

25]. Unlike the parametric methods PGA estimates the gradient instead of the polynomial coefficients of the phase error function in an optimal manner derived from maximum-likelihood estimation (MLE). In case of very fine azimuth resolution, large squint angle, long standoff distance or low speed radar platform, the PGA may suffer from performance degradation. The underlying mechanism is that the windowing step of PGA, being essentially a low-pass filter, on the one hand improves the signal-to-clutter ratio (SCR) that enhances error estimation accuracy, but on the other hand, it distorts the dominant scatter response that becomes more spread in the presence of significant high-order phase error [

29,

30]. As shown in

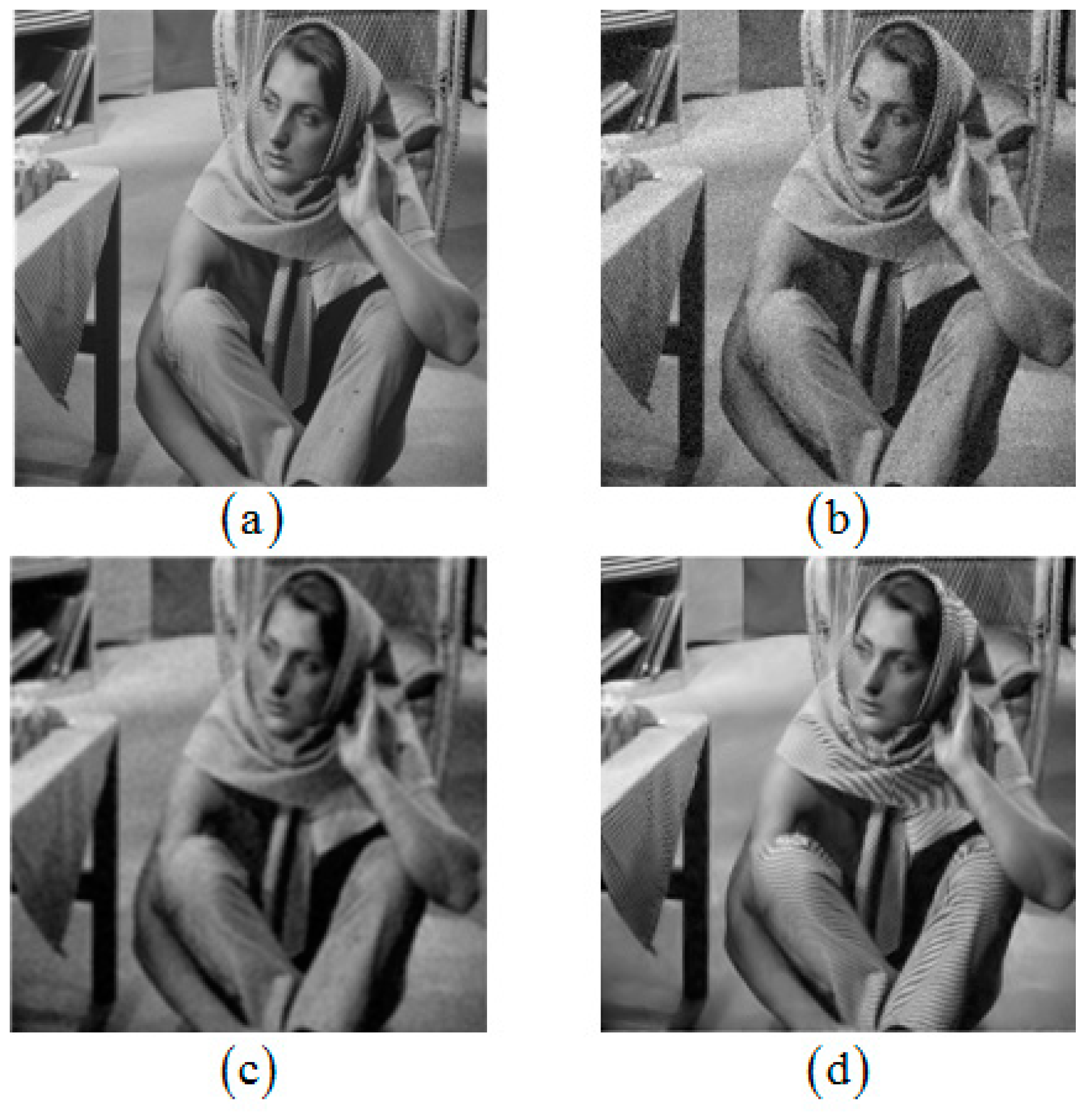

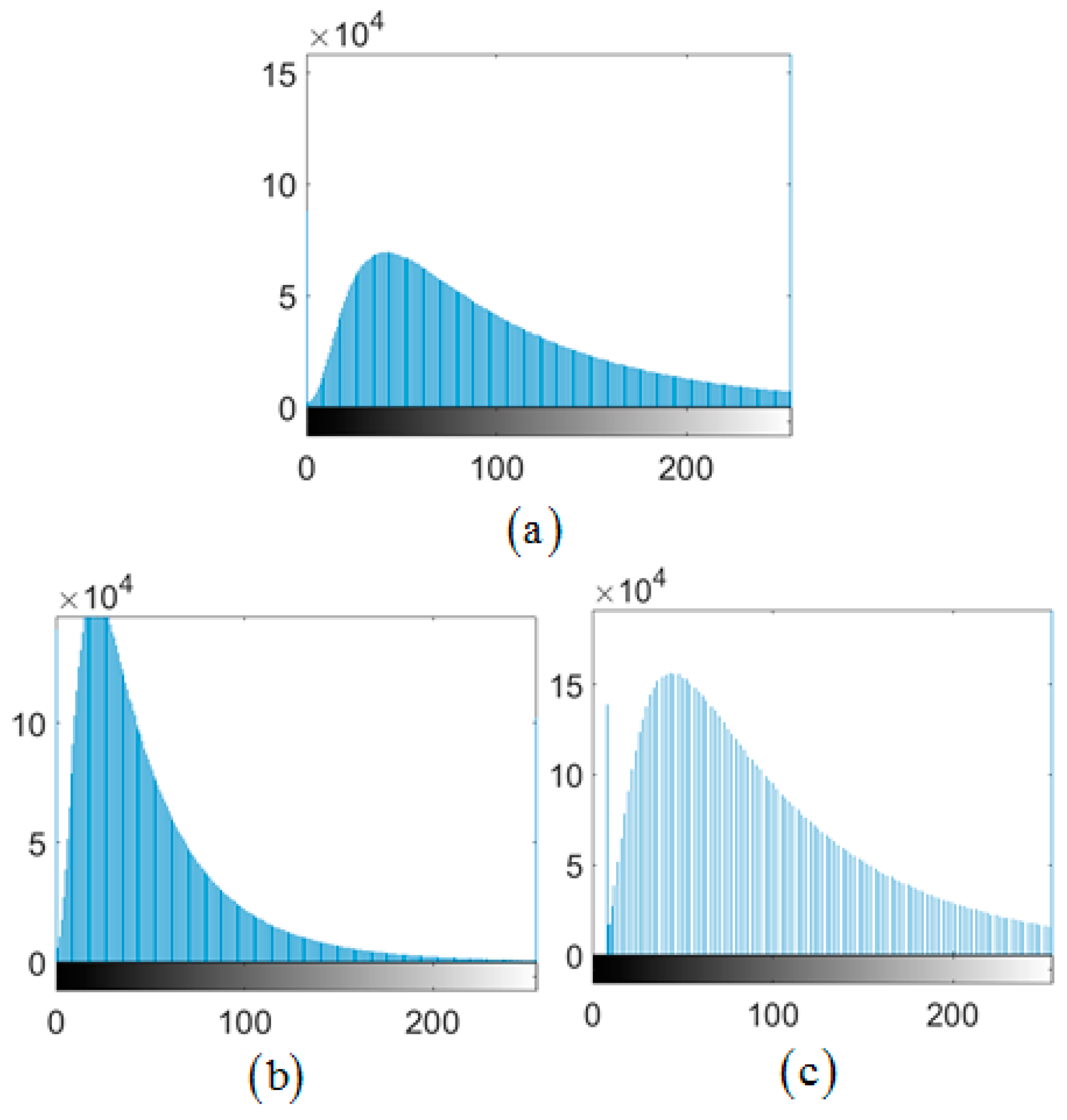

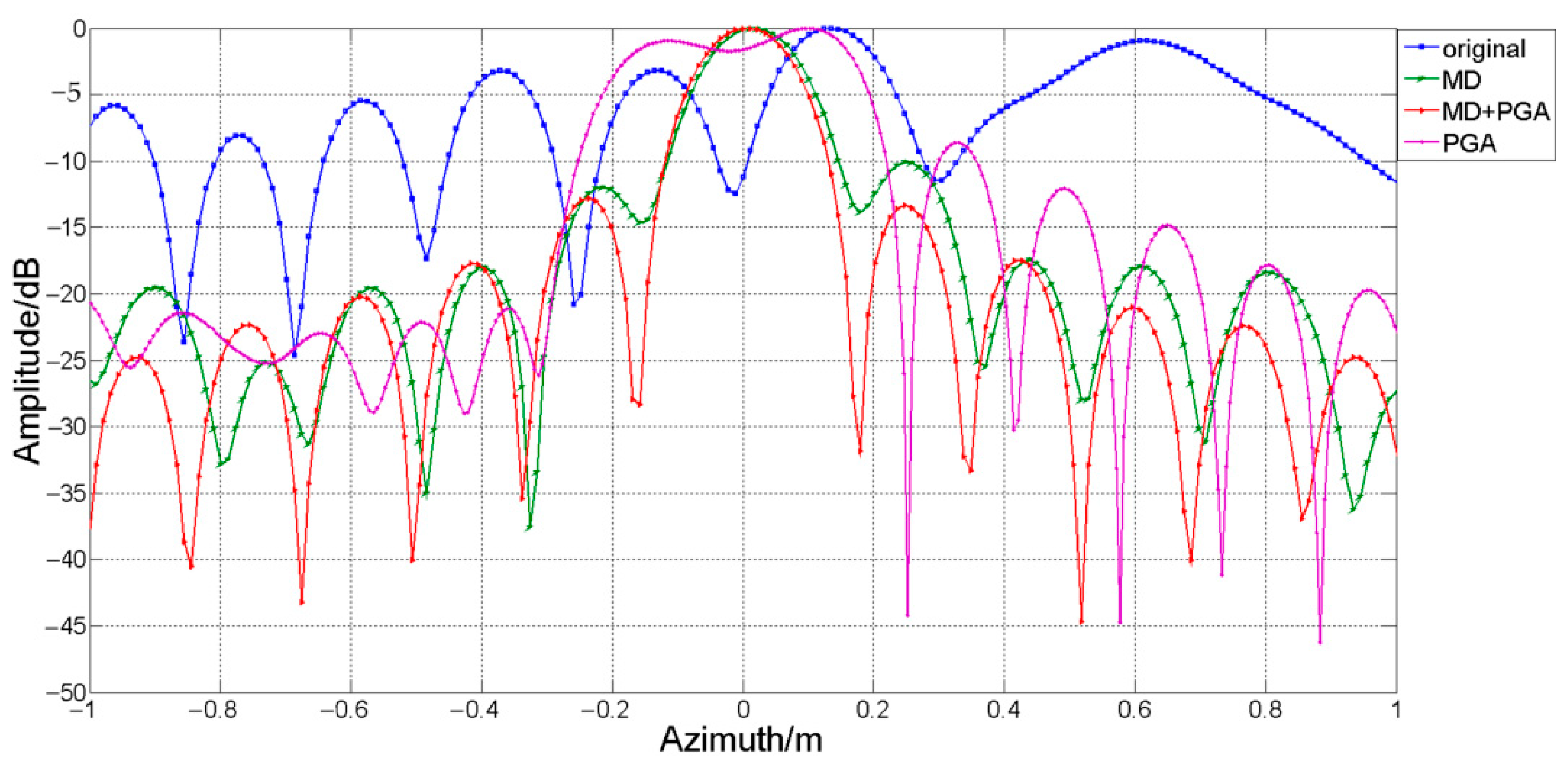

Figure 8, the main lobe widening and energy loss seriously affected the performance of PGA.
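The phase gradient estimator can be illustrated on synthetic data. The following is a minimal sketch of a generic PGA loop (centre shifting, windowing, gradient estimation, linear trend removal), not the exact implementation used for the results in this paper. The function name `pga`, the window schedule and the simulated scene (one point scatterer per range cell, an even-order phase error, weak noise) are all assumptions made for illustration.

```python
import numpy as np


def pga(data, iterations=6, window_fraction=0.5):
    """Generic phase gradient autofocus on range-compressed data.

    data: complex array of shape (range cells, azimuth samples).
    Returns the corrected data and the accumulated phase estimate (rad).
    """
    g = data.astype(complex).copy()
    n_az = g.shape[1]
    m = np.arange(n_az)
    dist = np.minimum(m, n_az - m)        # circular distance from bin 0
    width = int(window_fraction * n_az)
    phi_total = np.zeros(n_az)
    for _ in range(iterations):
        img = np.fft.fft(g, axis=1)       # azimuth compression
        # 1) Centre shifting: move the strongest bin of each range cell to bin 0.
        peaks = np.argmax(np.abs(img), axis=1)
        for row, p in enumerate(peaks):
            img[row] = np.roll(img[row], -p)
        # 2) Windowing: keep only the bins close to the shifted scatterer.
        img = img * (dist <= width // 2)
        # 3) Return to the azimuth (phase history) domain.
        gw = np.fft.ifft(img, axis=1)
        # 4) Gradient kernel: angle of the range sum of g(m) * conj(g(m - 1)).
        dphi = np.angle(np.sum(gw[:, 1:] * np.conj(gw[:, :-1]), axis=0))
        phi = np.concatenate(([0.0], np.cumsum(dphi)))
        # Remove the constant and linear parts, which only shift the image.
        phi -= np.polyval(np.polyfit(m, phi, 1), m)
        g *= np.exp(-1j * phi)
        phi_total += phi
        width = max(width // 2, 8)        # shrink the window each iteration
    return g, phi_total


# Synthetic test scene (all values are assumptions for illustration).
rng = np.random.default_rng(0)
n_rg, n_az = 32, 256
m = np.arange(n_az)
x = np.linspace(-1.0, 1.0, n_az)
phi_true = 20.0 * x**2 + 6.0 * x**4              # phase error in rad
bins = rng.integers(0, n_az, size=n_rg)          # one scatterer per range cell
clean = np.exp(2j * np.pi * np.outer(bins, m) / n_az)
noise = 0.01 * (rng.standard_normal((n_rg, n_az))
                + 1j * rng.standard_normal((n_rg, n_az)))
raw = clean * np.exp(1j * phi_true) + noise

focused, phi_est = pga(raw)
peak_before = np.abs(np.fft.fft(raw, axis=1)).max(axis=1).mean()
peak_after = np.abs(np.fft.fft(focused, axis=1)).max(axis=1).mean()
print(f"mean peak magnitude: {peak_before:.1f} -> {peak_after:.1f}")
```

The simulated error here is smooth and the scene is free of clutter, so the sketch does not reproduce the degradation discussed above; it only shows how the gradient kernel and the windowing step fit together.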

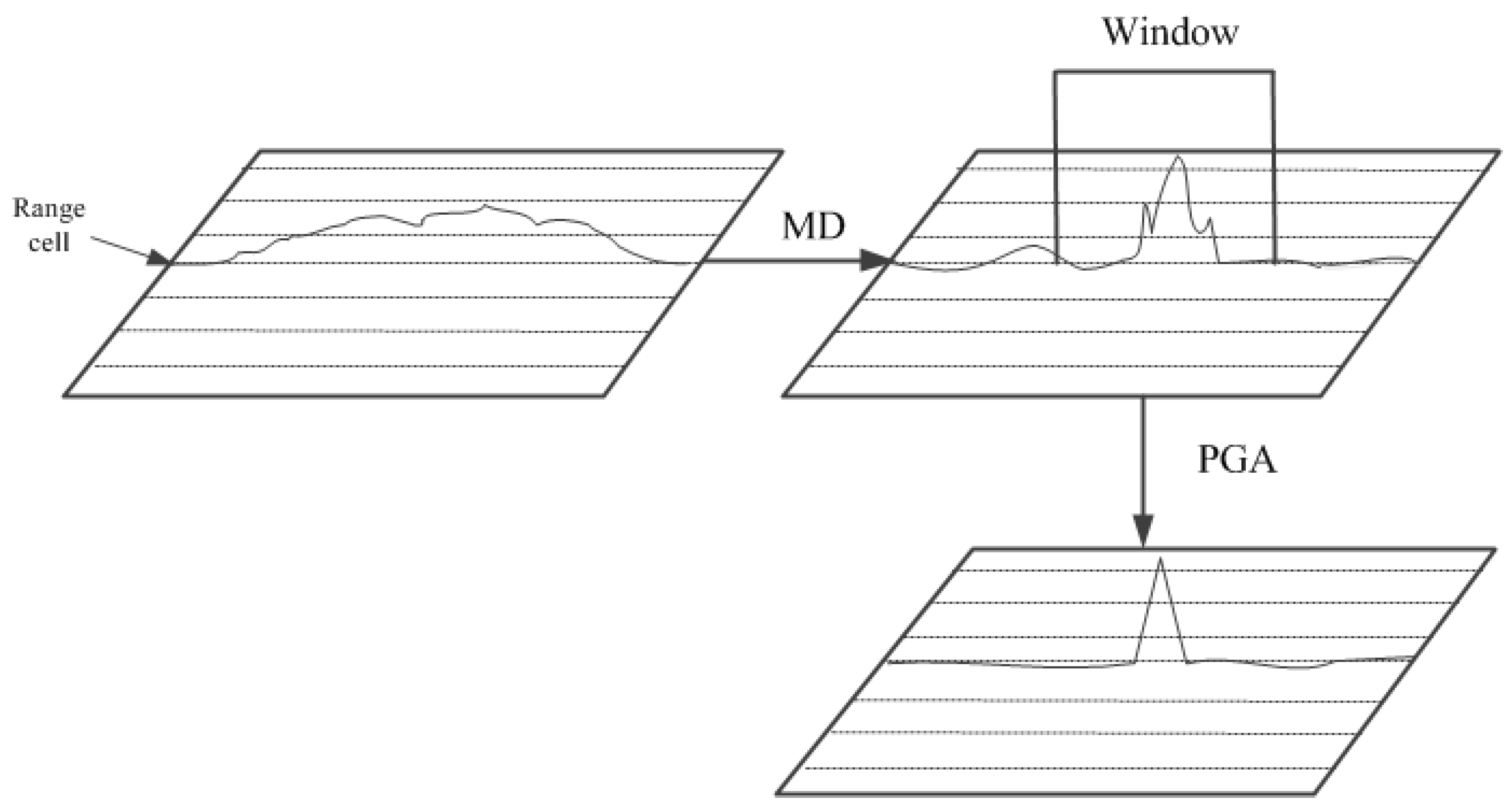

To solve this problem, the MD algorithm is applied to compensate for the quadratic phase error before PGA. As shown in Figure 9, after MD compensation, the main lobe widening and energy loss are effectively suppressed, which greatly improves the performance of PGA.

The MD algorithm is a parametric autofocus algorithm, so the spreading of the dominant scatterer response does not influence its performance [

15,

25]. In the MD algorithm, the full aperture is first divided into

sub-apertures, the length of each sub-aperture is

. The phase error within the

sub-aperture is

The

sub-aperture is evenly divided into two non-overlapping parts

and

, and the offset value

between

and

can be given by cross-correlation method.

where

means cross-correlation. From

, the quadratic phase error can be estimated as

where

is the quadratic phase error in

sub-aperture. According to Equation (30), the quadratic phase error in each sub-aperture can be obtained. The quadratic phase error of each azimuth cell

can be obtained by interpolation and is then used to compensate the echo signal.
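The map drift step can be sketched for a single sub-aperture as follows. This is a simplified illustration under stated assumptions, not the implementation used in this paper: the phase error is modelled as a (m - M/2)^2 over M azimuth samples, the drift between the two half-aperture images is found from a zero-padded circular cross-correlation, and the coefficient follows from a = 4 pi d / M^2, where d is the drift in half-aperture resolution cells. The function name `map_drift_qpe` and all simulation values are hypothetical.

```python
import numpy as np


def map_drift_qpe(sub, upsample=8):
    """Estimate the quadratic coefficient a (rad/sample^2) of a phase error
    a * (m - M/2)**2 from one sub-aperture of shape (range cells, M)."""
    n_az = sub.shape[1]
    half = n_az // 2
    n_fft = upsample * half
    # Intensity images of the two non-overlapping halves (zero-padded FFT).
    i1 = np.abs(np.fft.fft(sub[:, :half], n=n_fft, axis=1)) ** 2
    i2 = np.abs(np.fft.fft(sub[:, half:2 * half], n=n_fft, axis=1)) ** 2
    # Circular cross-correlation per range cell, summed over range.
    xc = np.fft.ifft(np.fft.fft(i2, axis=1) * np.conj(np.fft.fft(i1, axis=1)),
                     axis=1)
    xc = np.real(xc).sum(axis=0)
    lag = int(np.argmax(xc))
    if lag > n_fft // 2:                  # map to a signed lag
        lag -= n_fft
    drift = lag / upsample                # drift in half-aperture cells
    return 4.0 * np.pi * drift / n_az**2


def mean_peak(x):
    """Mean peak magnitude of the zero-padded azimuth spectra."""
    return np.abs(np.fft.fft(x, n=4 * x.shape[1], axis=1)).max(axis=1).mean()


# Synthetic sub-aperture (all values are assumptions for illustration).
rng = np.random.default_rng(1)
n_rg, n_az = 16, 256
m = np.arange(n_az)
qpe_edge_true = 20.0                                  # rad at the aperture edge
a_true = qpe_edge_true / (n_az / 2) ** 2
freqs = rng.uniform(0.1, 0.9, size=n_rg)              # cycles per sample
raw = np.exp(2j * np.pi * np.outer(freqs, m)) * np.exp(1j * a_true * (m - n_az / 2) ** 2)
raw = raw + 0.01 * (rng.standard_normal(raw.shape)
                    + 1j * rng.standard_normal(raw.shape))

a_est = map_drift_qpe(raw)
qpe_edge_est = a_est * (n_az / 2) ** 2
compensated = raw * np.exp(-1j * a_est * (m - n_az / 2) ** 2)
print(f"edge QPE: true {qpe_edge_true:.2f} rad, estimated {qpe_edge_est:.2f} rad")
```

In the procedure described above, this estimate would be computed for each sub-aperture and interpolated over the azimuth cells before compensation; the sketch treats the whole aperture as one sub-aperture to stay short.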

Finally, PGA is applied to estimate the residual high-order phase error, as shown in Equation (27). After compensation by MD and PGA, the target is well focused.

2.4. Complexity Analysis of Proposed Algorithm

To sum up, the proposed imaging algorithm with three-step motion compensation has three main steps: LOSPI, three-step motion compensation and 2D image interpolation. Assuming that the size of input sample in range and slow time are and , respectively, the size of the output ROI image is . A complex multiplication requires four real multiplications and two real additions, a total of six floating point operations (flops). A complex addition requires two real additions, i.e., two flops. A 1-D FFT of length requires flops and a 2D FFT of requires flops.
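The flop accounting above can be tabulated with a small helper. The sketch keeps the counts stated in the text (six flops per complex multiplication, two per complex addition) and assumes the commonly used estimate of 5 N log2 N flops for a length-N complex FFT; the function names and the example sizes are hypothetical.

```python
import math

FLOPS_COMPLEX_MUL = 6  # four real multiplications and two real additions
FLOPS_COMPLEX_ADD = 2  # two real additions


def fft_flops(n):
    """Approximate flops of a length-n complex FFT (assumed 5 n log2 n)."""
    return 5.0 * n * math.log2(n)


def fft2_flops(n_rows, n_cols):
    """2D FFT as row FFTs followed by column FFTs.

    The sum equals 5 * n_rows * n_cols * log2(n_rows * n_cols).
    """
    return n_rows * fft_flops(n_cols) + n_cols * fft_flops(n_rows)


def phase_multiply_flops(n_rows, n_cols):
    """One complex multiplication per sample, e.g. a phase compensation."""
    return FLOPS_COMPLEX_MUL * n_rows * n_cols


# Hypothetical data size: 1024 range samples by 512 slow-time samples.
n_range, n_slow = 1024, 512
print(f"2D FFT:         {fft2_flops(n_range, n_slow):.3e} flops")
print(f"phase multiply: {phase_multiply_flops(n_range, n_slow):.3e} flops")
```

For data of this size the 2D FFT dominates a single sample-wise phase multiplication by roughly an order of magnitude, which is the kind of comparison the complexity analysis relies on.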

The principle of LOSPI is to convolve the input nonuniform samples onto uniform grid with a truncated Gaussian kernel and exploit 2D FFT for efficient image reconstruction. Assume the length of kernel in the transform is . For each grid point, it requires complex multiplications and complex additions, a total of flops. Therefore, the computation of the total wavenumber grid is flops.

Three-step motion compensation consists of three procedures. The first procedure is GPS-based MOCO, it requires complex multiplication, a total of flops. The second procedure is MD algorithm, it requires complex multiplication, a total of flops. The third procedure is PGA algorithm, it requires complex multiplication, a total of flops. The total computation of three-step motion compensation is flops.

The final procedure of 2D image interpolation has the same computation compared with LOSPI. The whole procedure requires a range FFT and an azimuth FFT that transform the wavenumber domain into image domain. The total computation of FFT is flops. In summary, the total complexity for the whole procedure is flops.

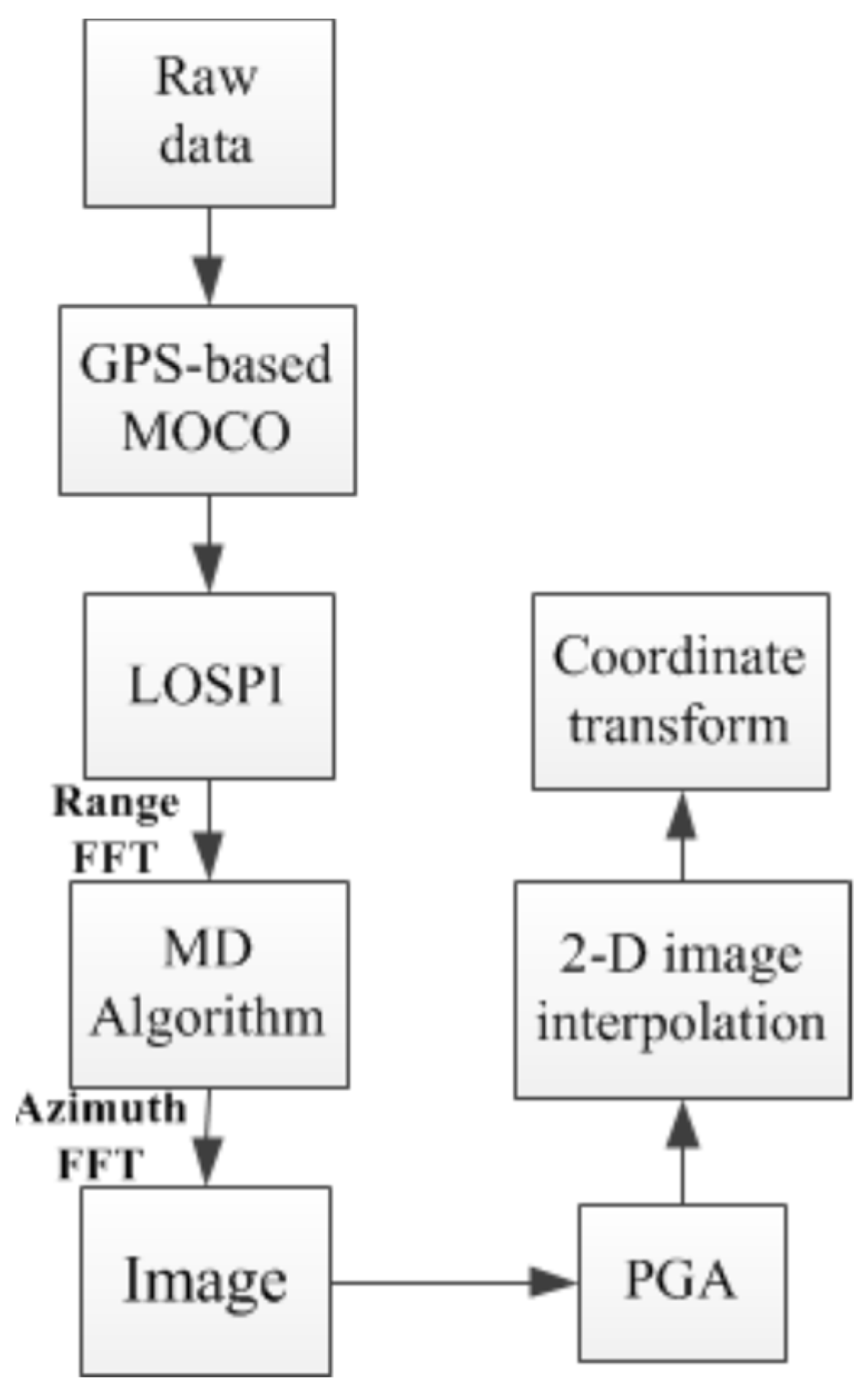

The flowchart of imaging algorithm with three-step motion compensation is shown in

Figure 10.

4. Experiment and Analysis

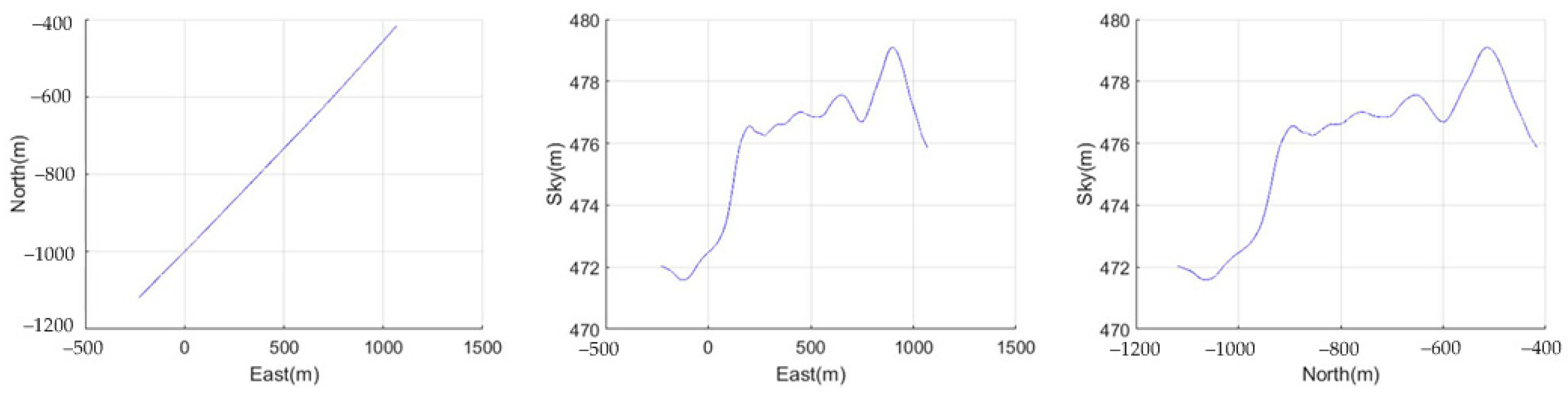

To further validate the theoretical analysis presented in this paper, measured data were processed. The THz experiment was carried out by the Beijing Institute of Radio Measurement in Shaanxi province, China. The radar operated in the THz band.

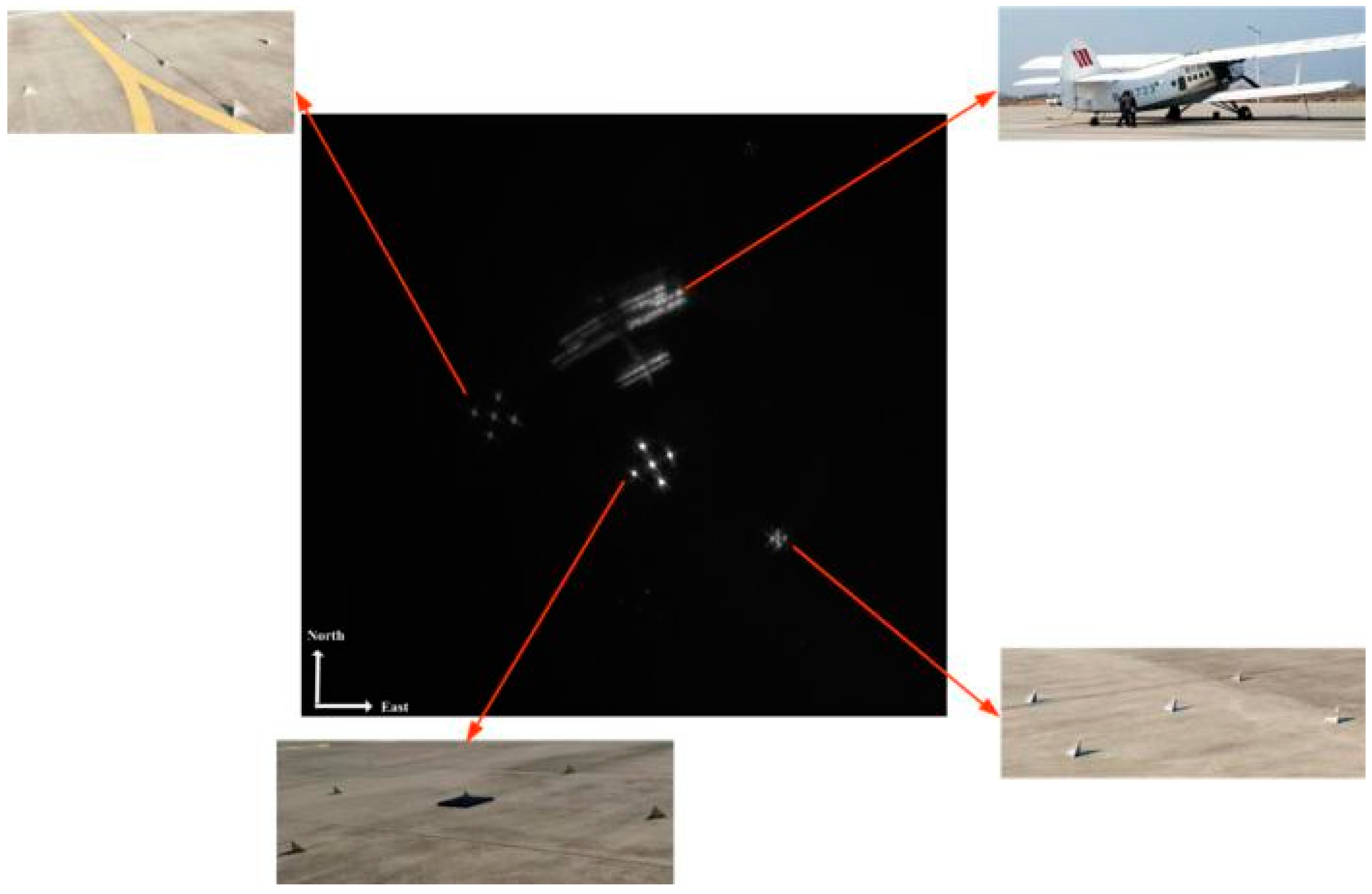

Figure 15 shows the flight trajectory of the radar platform, which is not an ideal linear trajectory. In the terahertz band, this motion error cannot be neglected. The experimental scene is shown in

Figure 16. There were three groups of trihedral corner reflectors and an aircraft in the scene. The aircraft flew from east to west. The size of the experimental scene is

. The experimental data were processed on a hardware platform with an Intel i7-4790 CPU and an AMD Radeon R7 350 GPU.

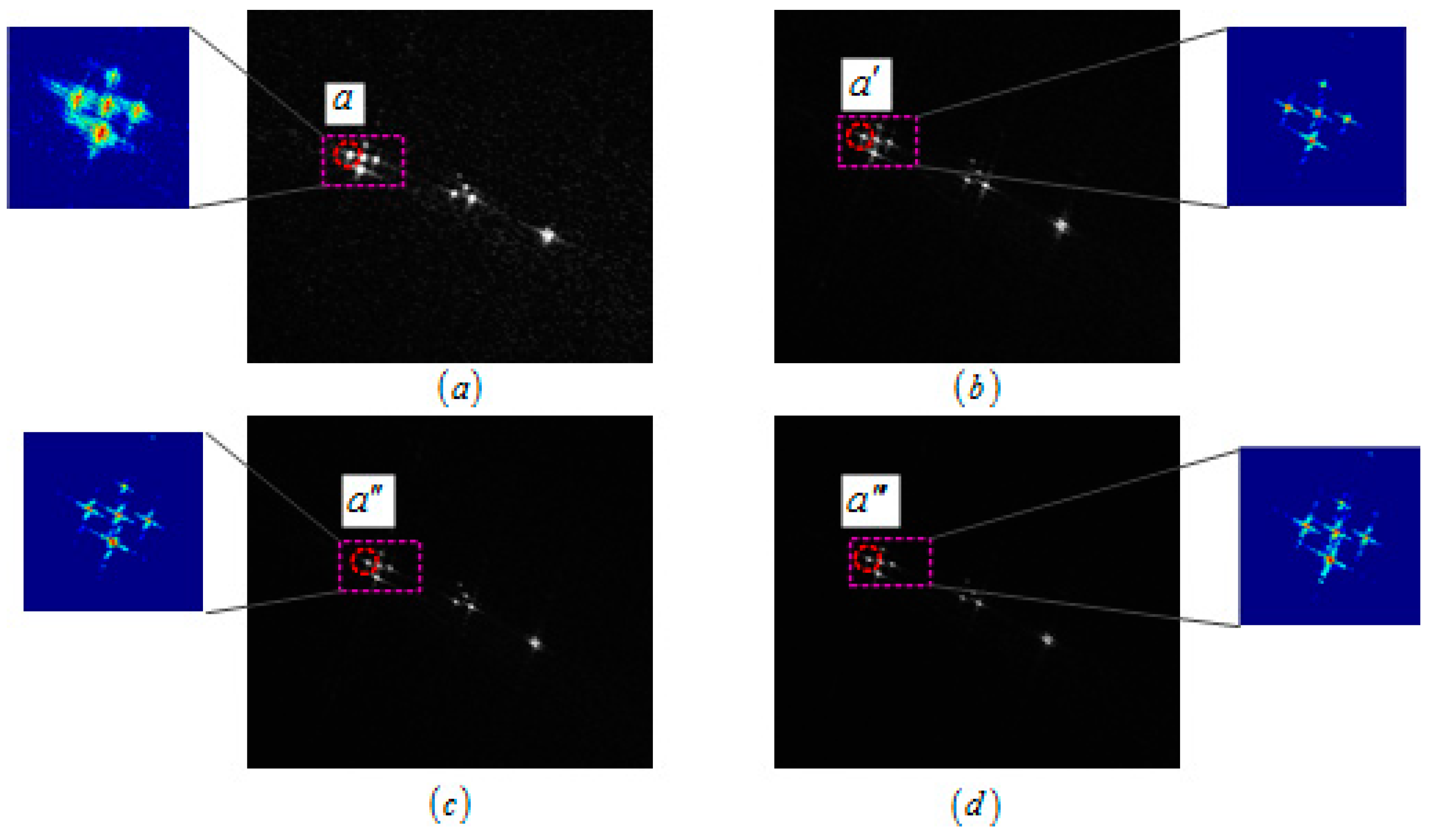

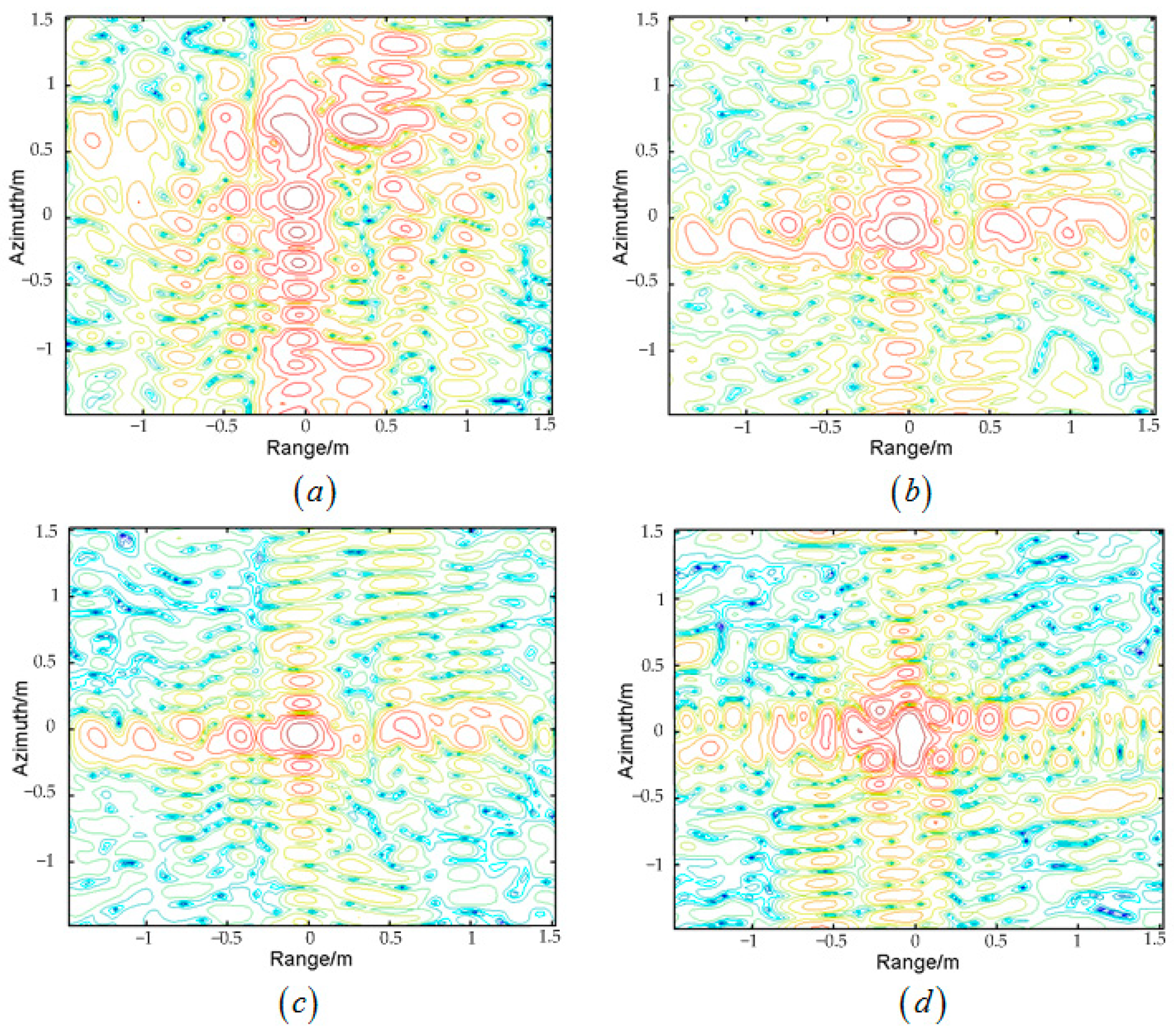

The azimuth integration angle was

, resulting in an azimuth resolution of 0.15 m. To achieve a 15 Hz frame rate, the overlapping ratio was set to 26%. According to Equation (11), the DOF

was 283 m, which is much larger than the experimental scene; therefore, the defocusing introduced by the planar wave approximation was neglected. To verify the effectiveness of three-step MOCO, we selected the imaging result of a single frame, as shown in

Figure 17. For a visual comparison, four targets at the same position,

,

,

and

were selected, and their contour maps are shown in

Figure 18. Point

is seriously defocused along azimuth, which reveals that the motion error strongly degrades the focusing. Compared with point

, point

was refocused by MD, and its main lobe is well focused. However, the residual high order phase error caused an asymmetric side lobe distribution. Point

was processed by MD and PGA, and we can see that the main lobe was well focused and the side lobe was symmetrically distributed. As mentioned before, main lobe widening and energy loss seriously affect the performance of PGA, therefore point

which was processed by PGA directly is not focused as well as point

. The azimuth profiles of points

,

,

and

are shown in

Figure 19.

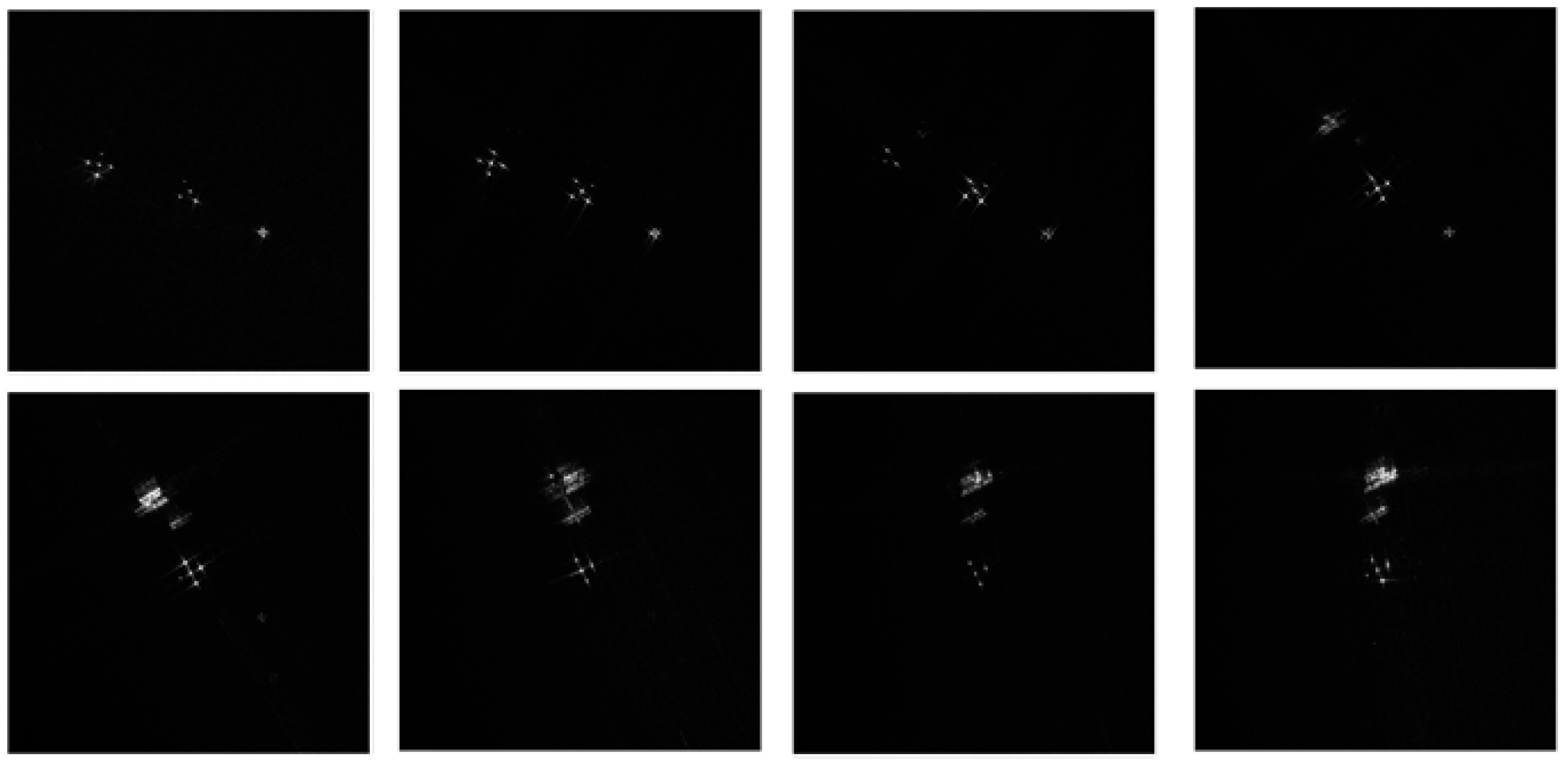

Furthermore, to verify the effectiveness of ViSAR procedures that were proposed in this paper, imaging results of different frames are shown in

Figure 20. The imaging results show no visible jitter or greyscale difference between frames. To illustrate the whole imaging scene, the multiple-frame images were fused together. The result is shown in

Figure 21. Because of the high resolution in terahertz band, the outline of the aircraft is quite clear in the fused image.

To verify the capability for long-time persistent surveillance of the ROI, another experiment was carried out by the National University of Defense Technology in Shaanxi province, China. The radar operated in the Ku band. The size of the experimental scene was

, and the experimental scene from Google Earth is shown in

Figure 22.

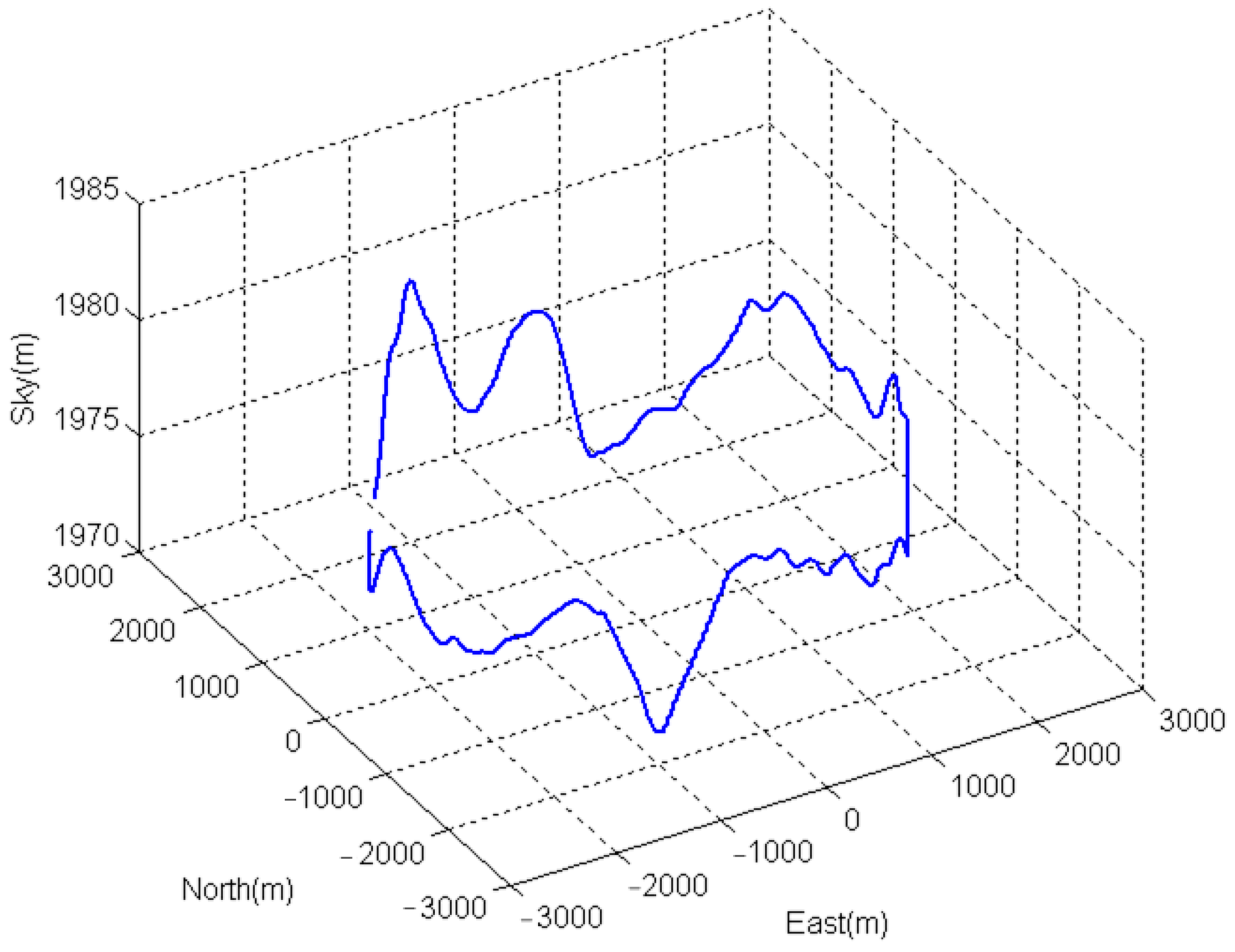

Figure 23 shows the 3D flight trajectory. Compared with linear spotlight SAR, circular SAR (CSAR) can illuminate the targets for a long time and can thus achieve long-time persistent surveillance.

According to Equation (16), each frame has an illumination time of approximately 2 s, corresponding to

azimuth integration angle and thus a resolution of 0.25 m in azimuth and 0.30 m in range. To obtain a smooth video, a frame rate of 10 Hz with an overlap of 80% was required. This led to approximately 1000 sub-aperture SAR images for a full circle. In

Figure 24, a target point is selected to analyze the imaging performance. The azimuth/range profiles and the measured parameters of target point are presented in

Figure 24b,c, respectively. The target is well focused, which further demonstrates the effectiveness of three-step MOCO.

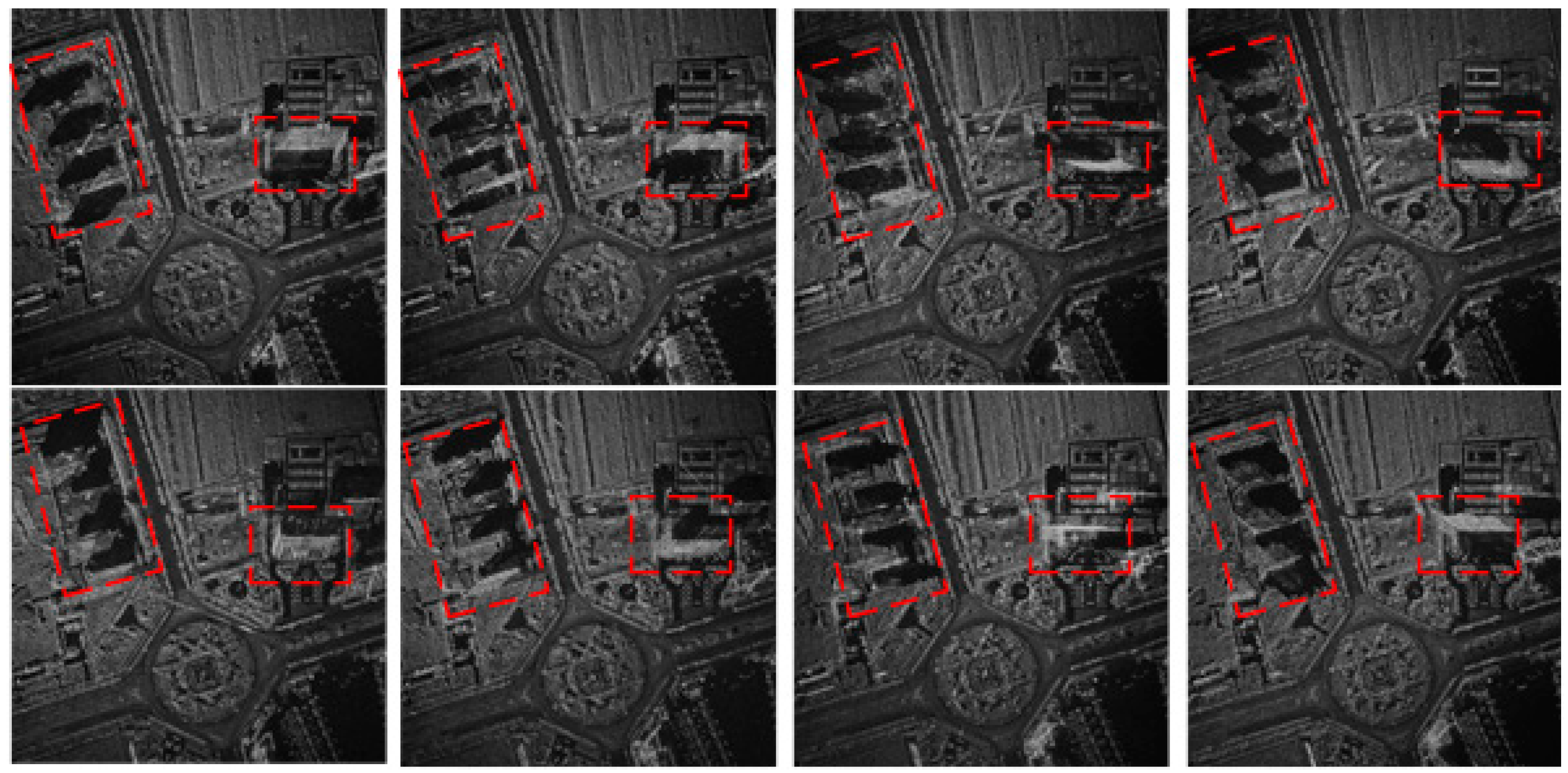

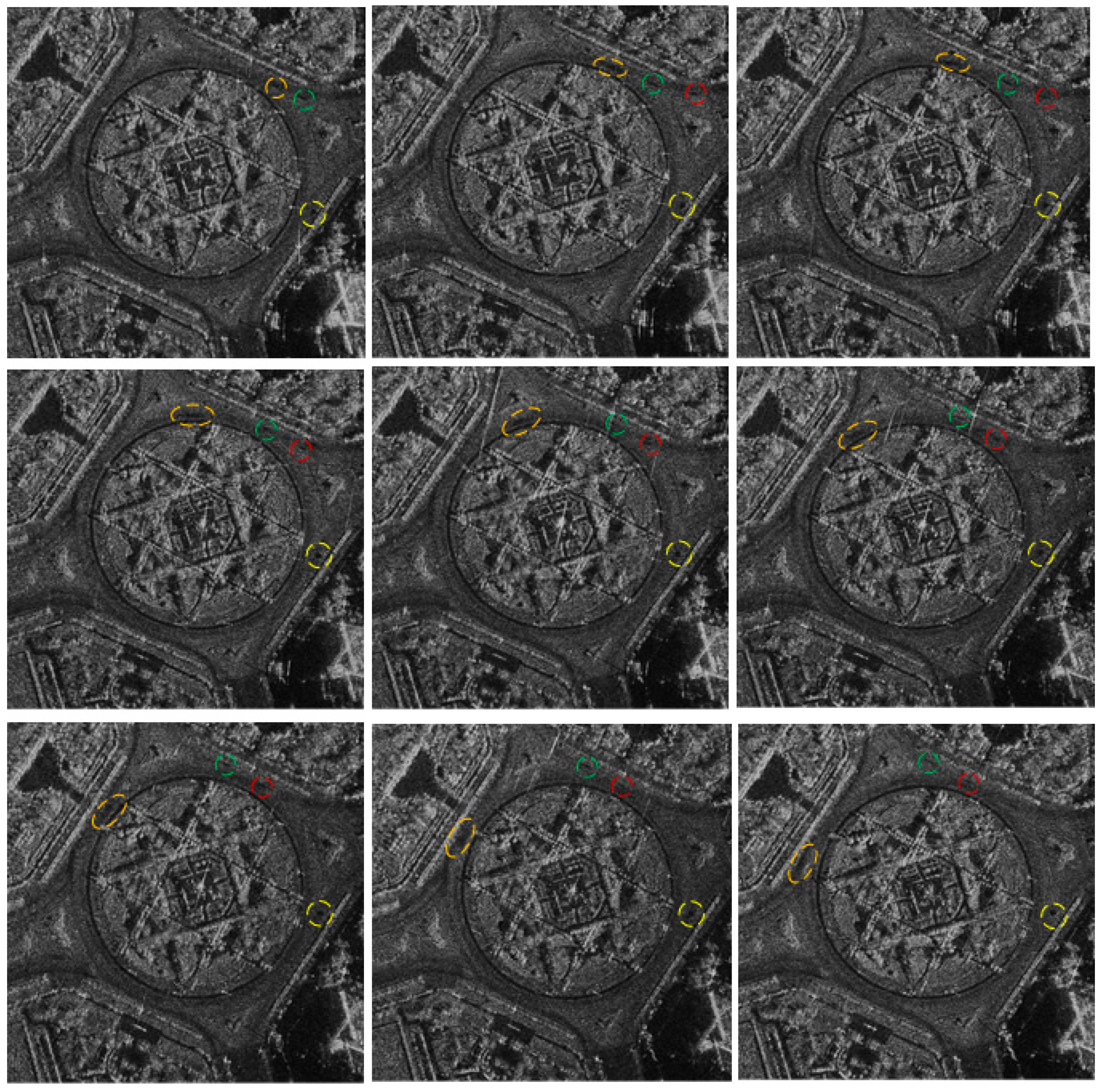

After all frames were processed by the ViSAR procedure, the ViSAR was obtained. Eight frames from different azimuth angles are shown in

Figure 25. Three residential buildings in the northwest and a commercial building in the northeast are marked with red rectangles. Notably, the large shadows of the buildings cover different areas of the image at different azimuth angles. Objects behind the buildings remain hidden to the observer. The same is true for objects in front of a building (in the LOS direction to the radar), because of the large foreshortening of the building.

The capability of detecting and tracking the moving targets is presented in

Figure 26. The images were chosen in consecutive order with a short time interval to better represent the dynamics of the scene. In total, the illustrated sequence spans 18 s, corresponding to an integration angle of

. Because the shadows of moving vehicles show a clear contrast against the ground clutter, the vehicles can be tracked easily. Shadow quality is an important factor for vehicle tracking. While a vehicle is moving, the fraction of the sub-aperture time during which an area remains covered by the shadow determines the quality of the shadow [

46]. If the vehicle moves fast or the sub-aperture time is long, the shadow may smear and be buried in the ground clutter [

46].

In

Figure 26, some vehicles are marked in different colors to make their trajectories easier to follow. Reading the frames from left to right and then from top to bottom, the vehicle marked by the orange ellipse was heading west along the ring road at high speed, while the vehicles marked by the green and red circles were heading north. Meanwhile, the vehicle marked in yellow was moving east slowly.

The image fusion result is shown in

Figure 27. Compared with a single-aspect image, the fusion result resolves the shadow occlusion problem and can be used for subsequent SAR image interpretation. To compare the target positions estimated from the fused image with the true positions, we selected four areas, as shown in

Figure 27, and the corresponding zoom-in optical images are shown in

Figure 27. The imaging center was set as the origin of the coordinate system, so the coordinates of each regional center could be obtained. The true positions of the regional centers were obtained from Google Maps. In the fused image, the coordinates of regions 1, 2, 3 and 4 are

,

,

and

, respectively. From Google Maps, the true positions of each regional center are

,

,

and

, respectively. The comparison shows that the target positions in the fusion result agree with the true positions.