Canopy Volume Extraction of Citrus reticulate Blanco cv. Shatangju Trees Using UAV Image-Based Point Cloud Deep Learning

Abstract

1. Introduction

2. Materials and Methods

2.1. Point Cloud Data Acquisition

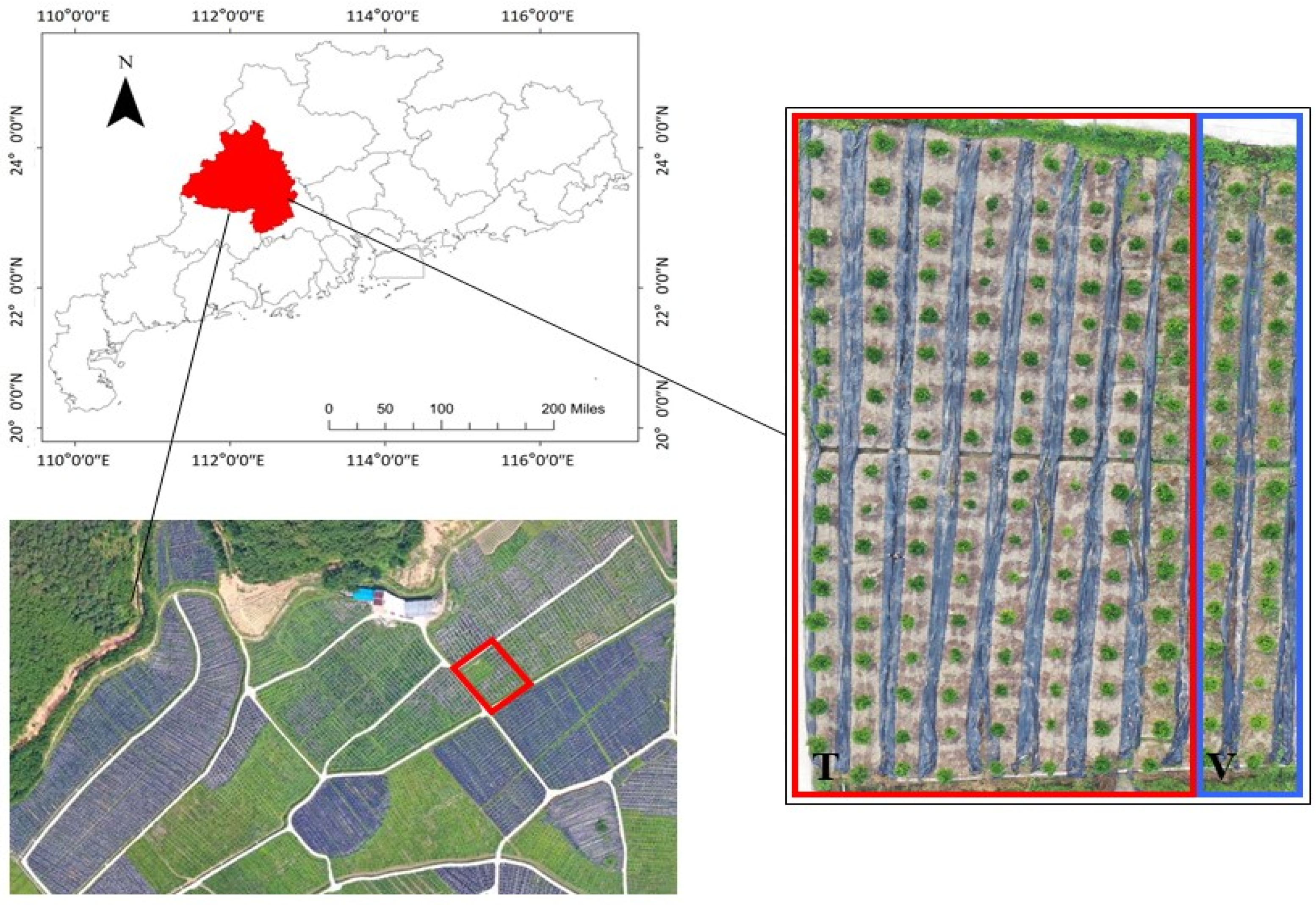

2.1.1. Experimental Site

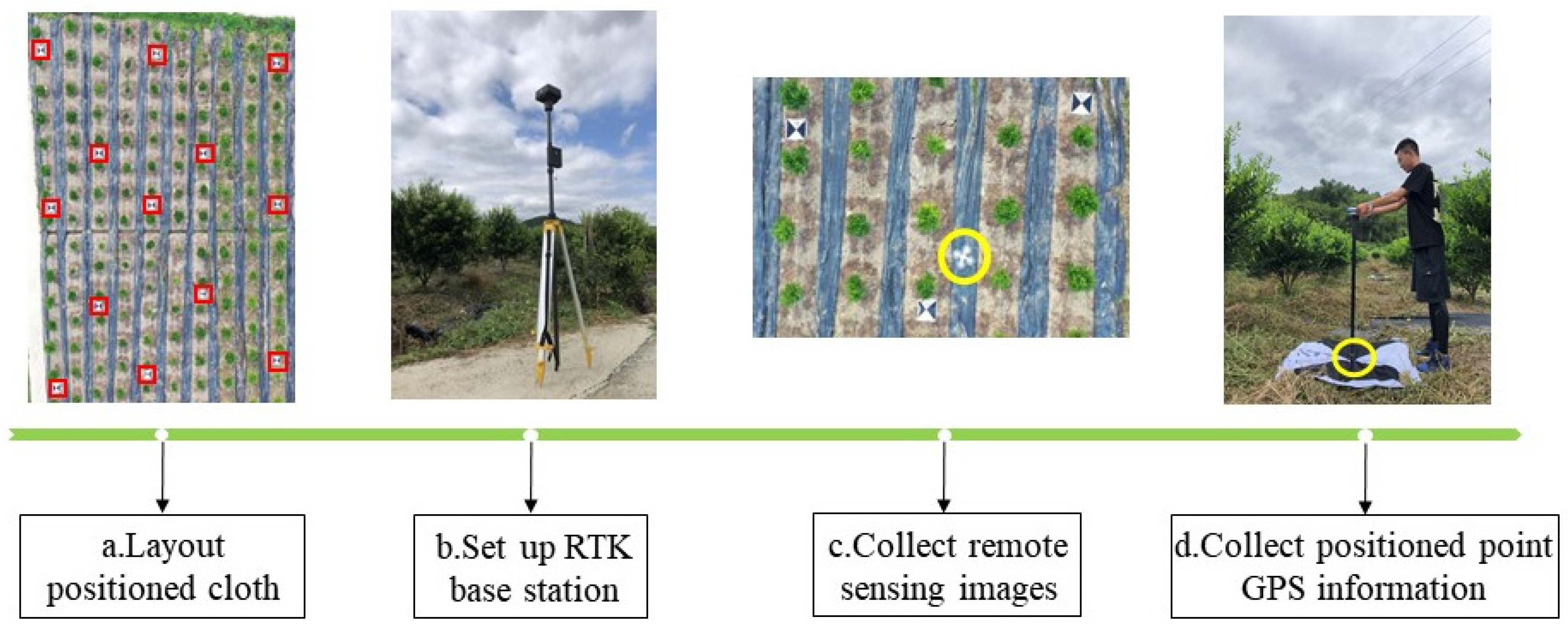

2.1.2. Acquisition of UAV Tilt Photogrammetry Images

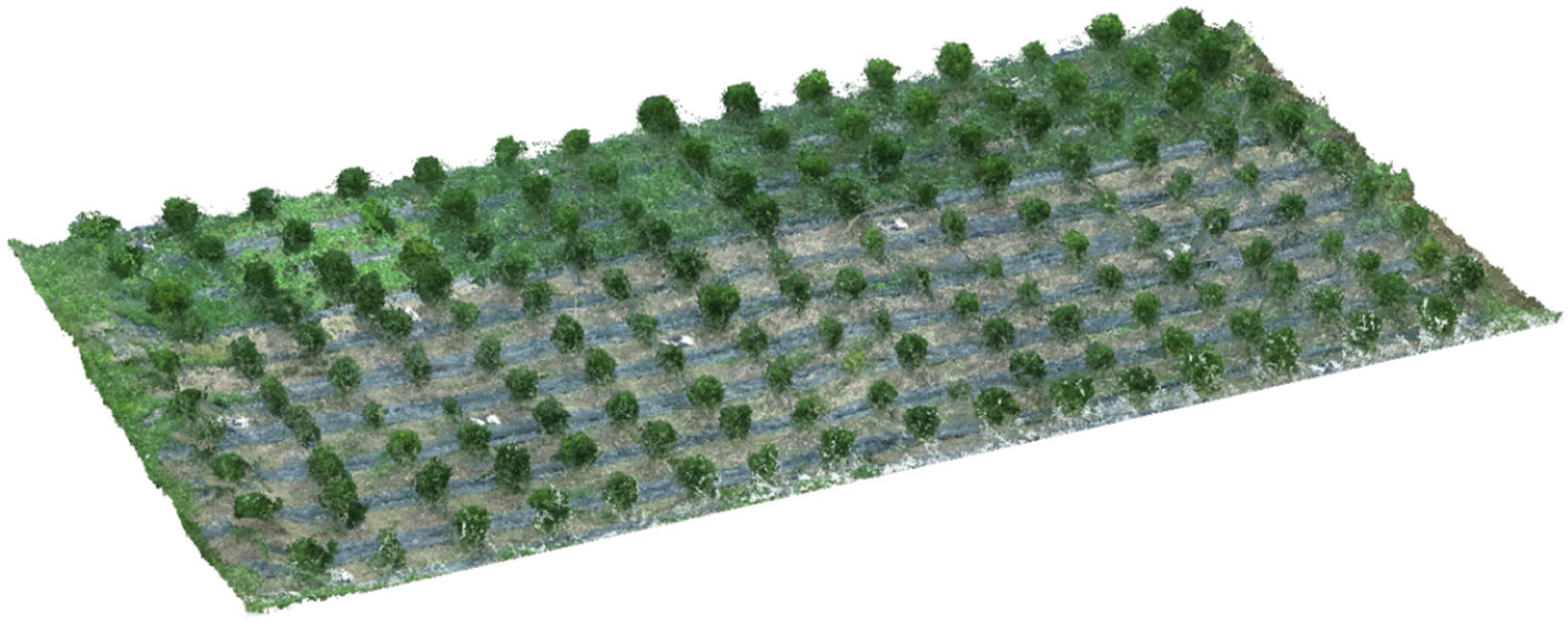

2.1.3. Reconstruction of 3D Point Cloud

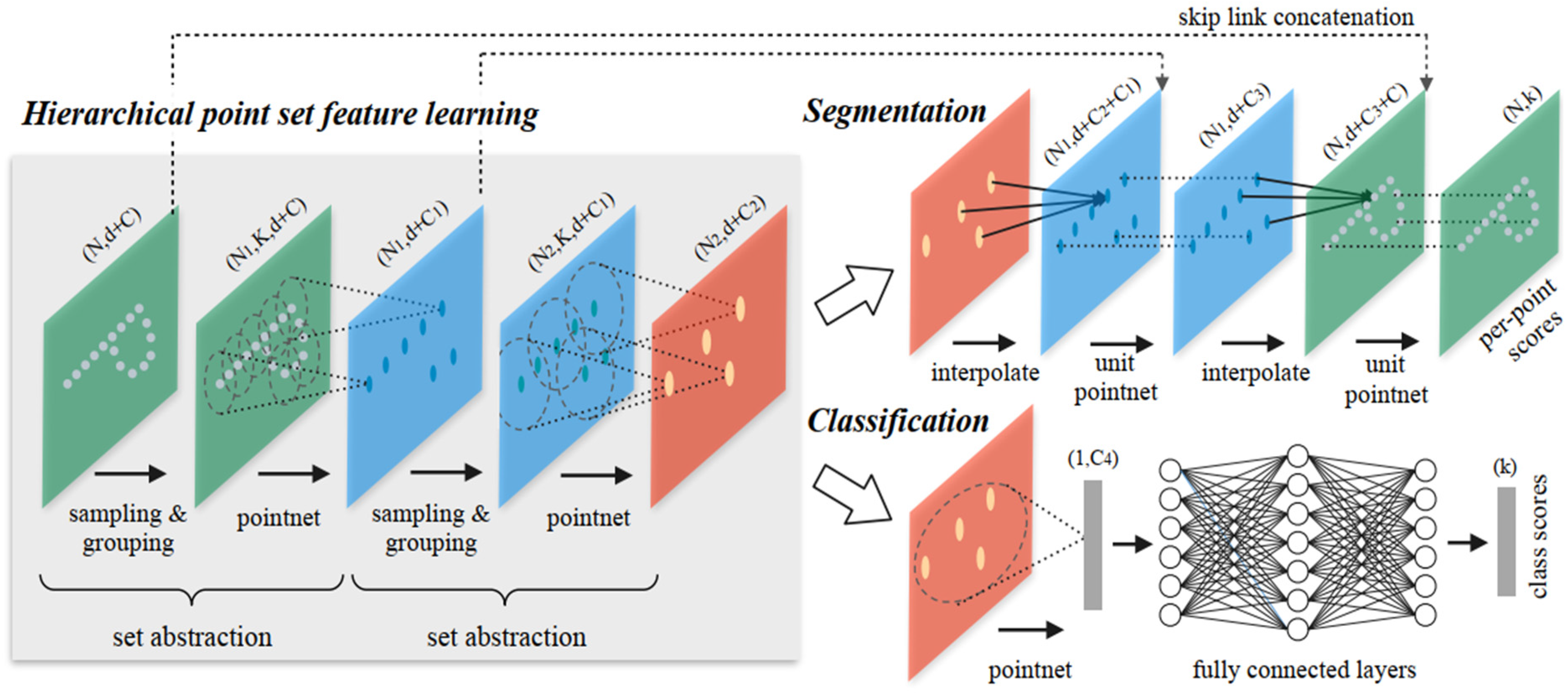

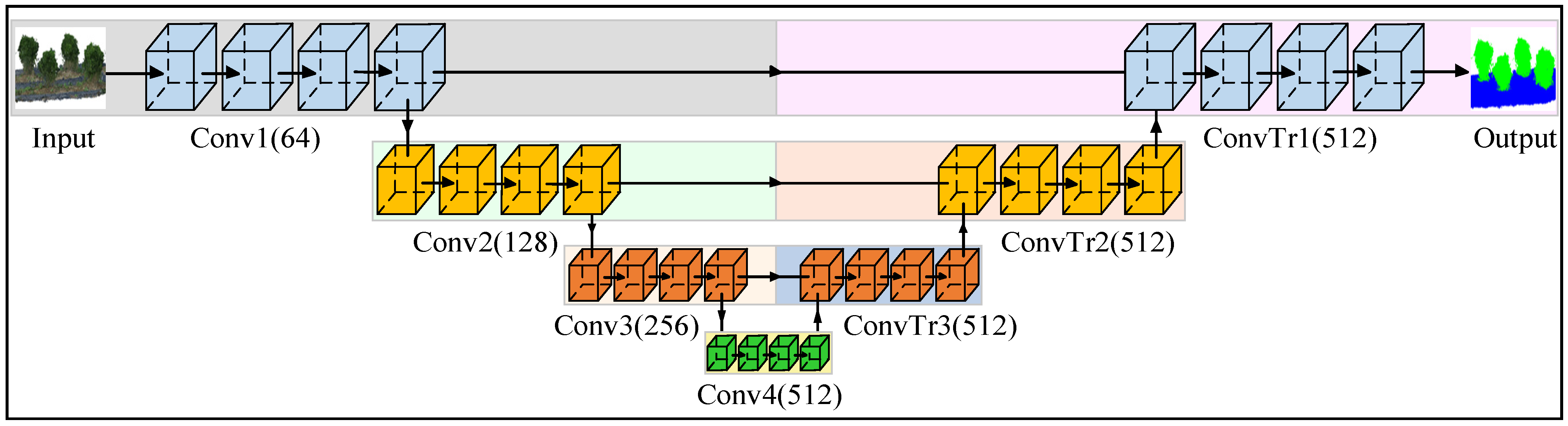

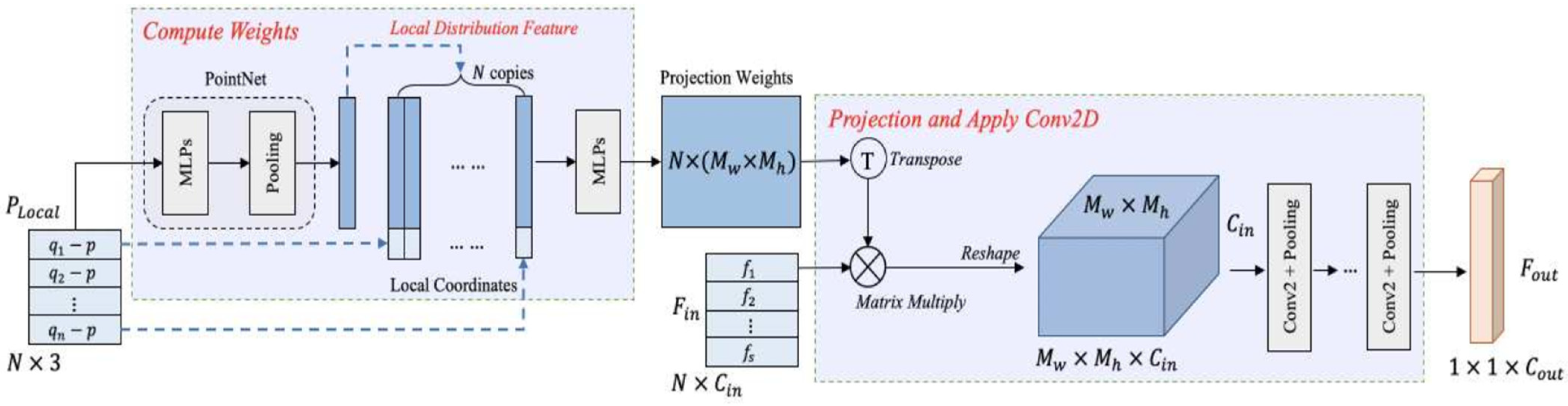

2.2. Deep Learning Algorithms for Point Cloud Data

2.2.1. Deep Learning Algorithms

2.2.2. Accuracy Evaluation of Algorithms

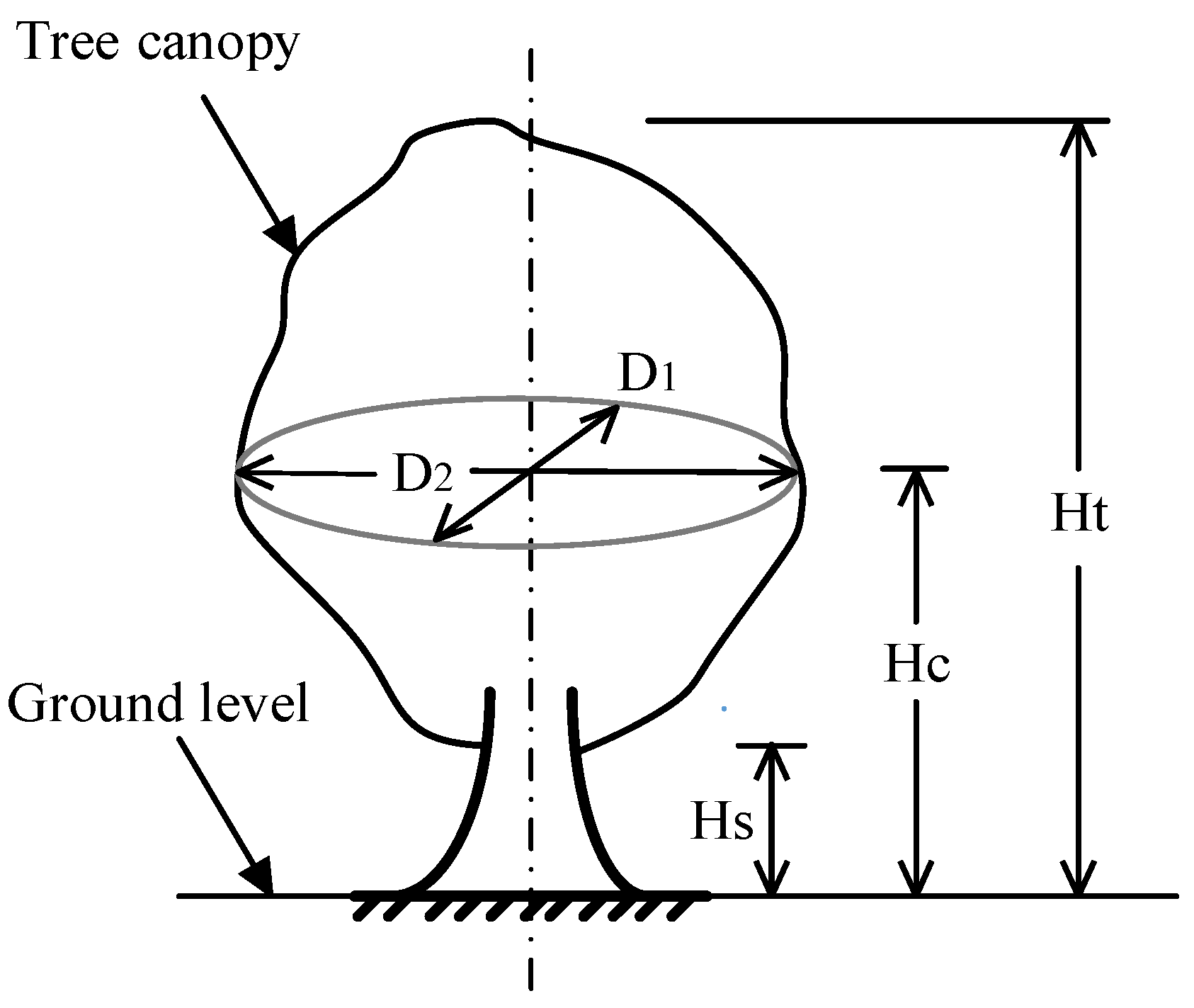

2.3. Tree Phenotype Parameter Extraction and Accuracy Evaluation

2.3.1. Manual Calculation Method

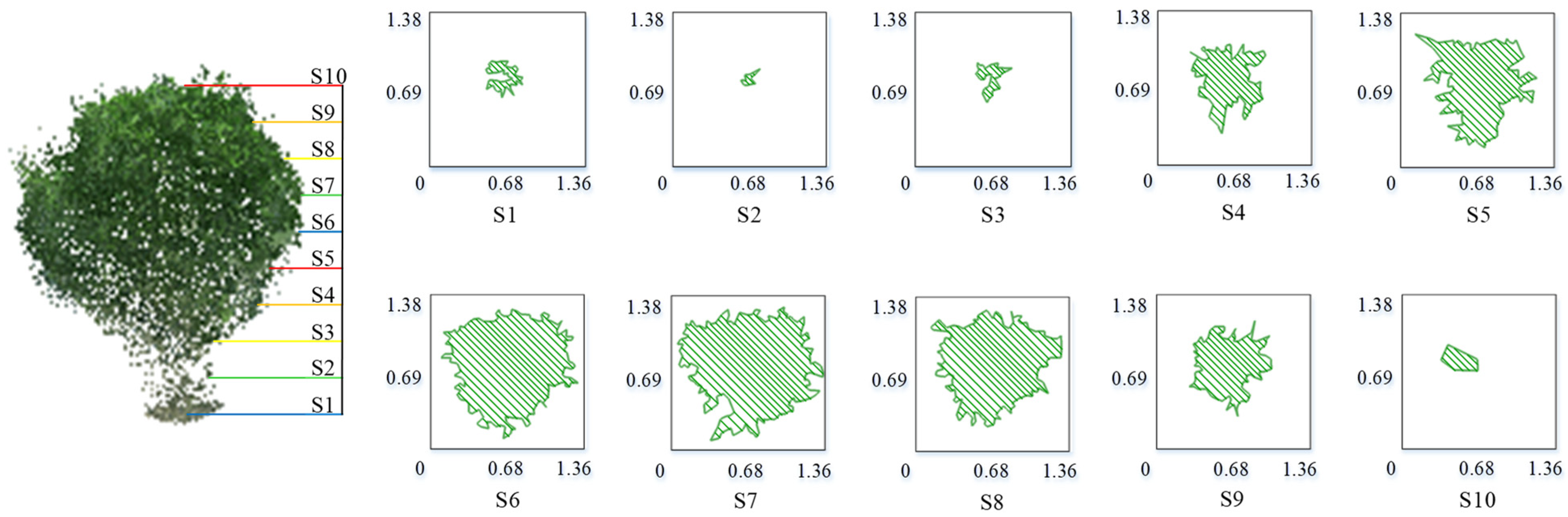

2.3.2. Phenotypic Parameters Obtained by the Algorithms

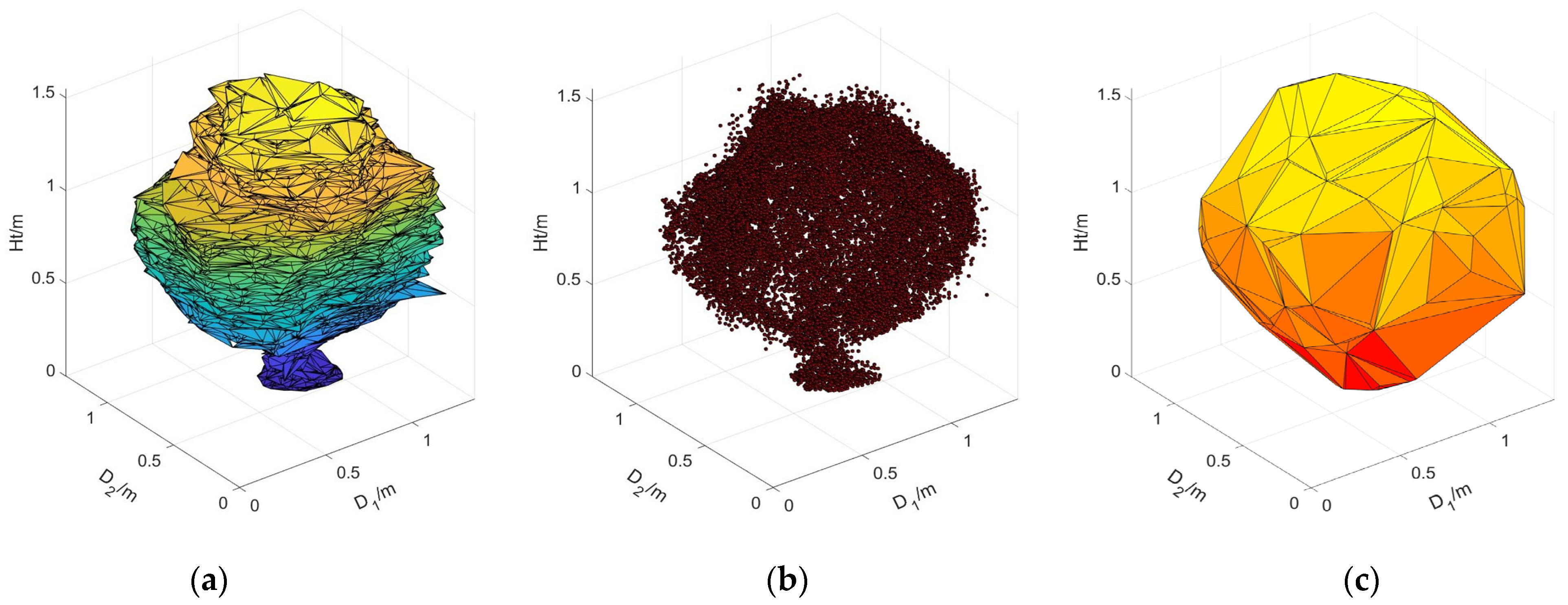

1. The point cloud of the Shatangju tree canopy was converted to .txt format. Six points of the point cloud (those holding the maximum and minimum values of each coordinate) were selected to build an irregular octahedron, which served as the initial convex hull. Some points still lay outside the octahedron; these candidate boundary points were divided into eight separate regions, one for each face of the octahedron. The points inside the initial convex hull were removed once the polyhedron was built.

2. Within each of the eight regions, the perpendicular distances of the points to the corresponding face were compared, and the point with the largest distance was selected. These newly selected points were merged with the vertices selected in step 1 to form new triangular faces and a new convex hull. Again, the points inside the new convex hull were deleted.

3. Step 2 was repeated: for each new triangular face, the point farthest from it was selected to extend the convex hull, and the points inside the hull were deleted, until no points remained outside the convex hull. The result was an n-faced convex polyhedron, and the volume of this 3D convex hull model was taken as the volume of the tree canopy.
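The steps above describe an incremental, farthest-point convex hull construction in the spirit of the QuickHull algorithm. The following is a minimal pure-Python sketch under stated assumptions, not the authors' implementation. It assumes the canopy points of one segmented tree are already available as (x, y, z) tuples (for example, parsed from the .txt export). It also deliberately departs from step 1: it seeds the hull with a non-degenerate tetrahedron of well-spread points instead of the six-extreme-point octahedron, because two of the six extremes can coincide (one point may hold both the largest x and the largest y), which would make the octahedron degenerate. The farthest-point growth and the deletion of interior points then follow steps 2 and 3. The function name `convex_hull_volume` and the tolerance `eps` are hypothetical.

```python
from itertools import combinations


def _sub(a, b):
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def _cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def _dot(a, b):
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def _norm2(v):
    return _dot(v, v)


def _plane_dist(pts, face, p):
    """Signed distance of p from the plane of an outward-oriented face (> 0: outside)."""
    a, b, c = (pts[i] for i in face)
    n = _cross(_sub(b, a), _sub(c, a))
    return _dot(n, _sub(p, a)) / _norm2(n) ** 0.5


def convex_hull_volume(points, eps=1e-9):
    """Volume of the 3D convex hull of `points`, grown by farthest-point insertion."""
    pts = [tuple(float(v) for v in p) for p in points]
    n = len(pts)
    if n < 4:
        raise ValueError("need at least 4 points")

    # Seed hull: a tetrahedron of well-spread points (an assumption; the text
    # above starts from an octahedron of the six coordinate extremes instead).
    i0 = min(range(n), key=lambda i: pts[i][0])
    i1 = max(range(n), key=lambda i: _norm2(_sub(pts[i], pts[i0])))
    ab = _sub(pts[i1], pts[i0])
    i2 = max(range(n), key=lambda i: _norm2(_cross(ab, _sub(pts[i], pts[i0]))))
    nrm = _cross(ab, _sub(pts[i2], pts[i0]))
    i3 = max(range(n), key=lambda i: abs(_dot(nrm, _sub(pts[i], pts[i0]))))
    if abs(_dot(nrm, _sub(pts[i3], pts[i0]))) <= eps:
        raise ValueError("points are coplanar; the hull has no volume")
    seed = (i0, i1, i2, i3)
    inner = tuple(sum(pts[i][k] for i in seed) / 4.0 for k in range(3))

    # Faces are index triples (a, b, c) whose normal (b - a) x (c - a) points
    # outward; outside[face] lists the points lying strictly above that face.
    outside = {}
    for f in combinations(seed, 3):
        if _plane_dist(pts, f, inner) > 0:
            f = (f[0], f[2], f[1])
        outside[f] = []

    def assign(i):
        # Attach point i to the first face it lies above; otherwise it is
        # inside the current hull and is dropped (the "deletion" step).
        for f in outside:
            if _plane_dist(pts, f, pts[i]) > eps:
                outside[f].append(i)
                return

    for i in range(n):
        if i not in seed:
            assign(i)

    while True:
        face = next((f for f, s in outside.items() if s), None)
        if face is None:
            break  # no point lies outside the hull any more
        # The farthest point above this face becomes a new hull vertex.
        eye = max(outside[face], key=lambda i: _plane_dist(pts, face, pts[i]))
        visible = [f for f in outside if _plane_dist(pts, f, pts[eye]) > eps]
        edges = {e for a, b, c in visible for e in ((a, b), (b, c), (c, a))}
        horizon = [(a, b) for a, b in edges if (b, a) not in edges]
        orphans = set()
        for f in visible:
            orphans.update(outside.pop(f))
        orphans.discard(eye)
        for a, b in horizon:
            outside[(a, b, eye)] = []  # new triangle joining a horizon edge to eye
        for i in orphans:
            assign(i)

    # Sum the signed volumes of tetrahedra (inner, a, b, c) over all hull faces.
    vol = 0.0
    for a, b, c in outside:
        pa, pb, pc = (_sub(pts[k], inner) for k in (a, b, c))
        vol += _dot(pa, _cross(pb, pc))
    return vol / 6.0
```

For coordinates in metres the result is in m³. For large point clouds, an established Qhull-based routine such as `scipy.spatial.ConvexHull(points).volume` is likely the more practical choice; the sketch is only meant to make the grow-and-delete loop explicit.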

2.3.3. Evaluation of Model Accuracy

3. Results

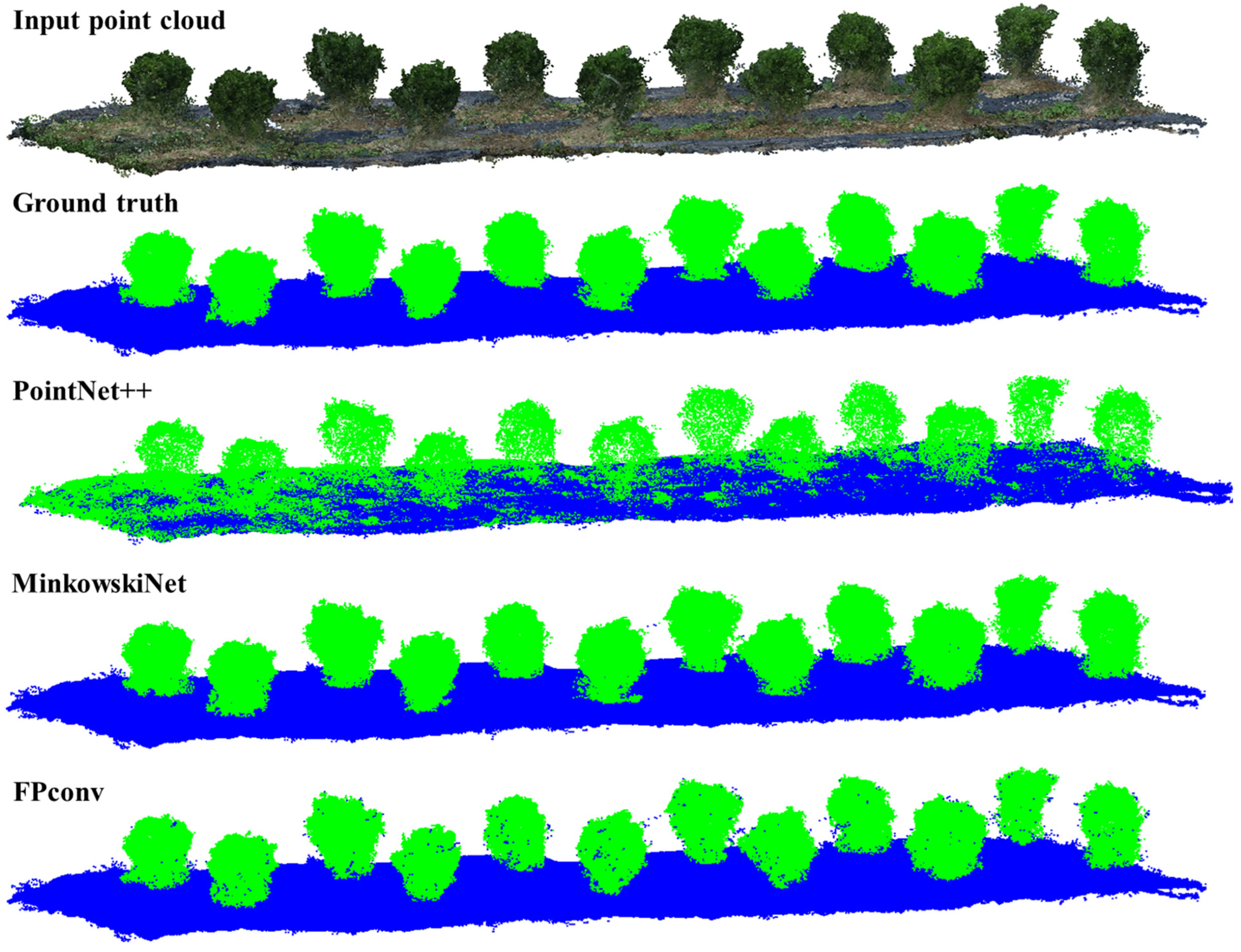

3.1. Segmentation Accuracy of Deep Learning

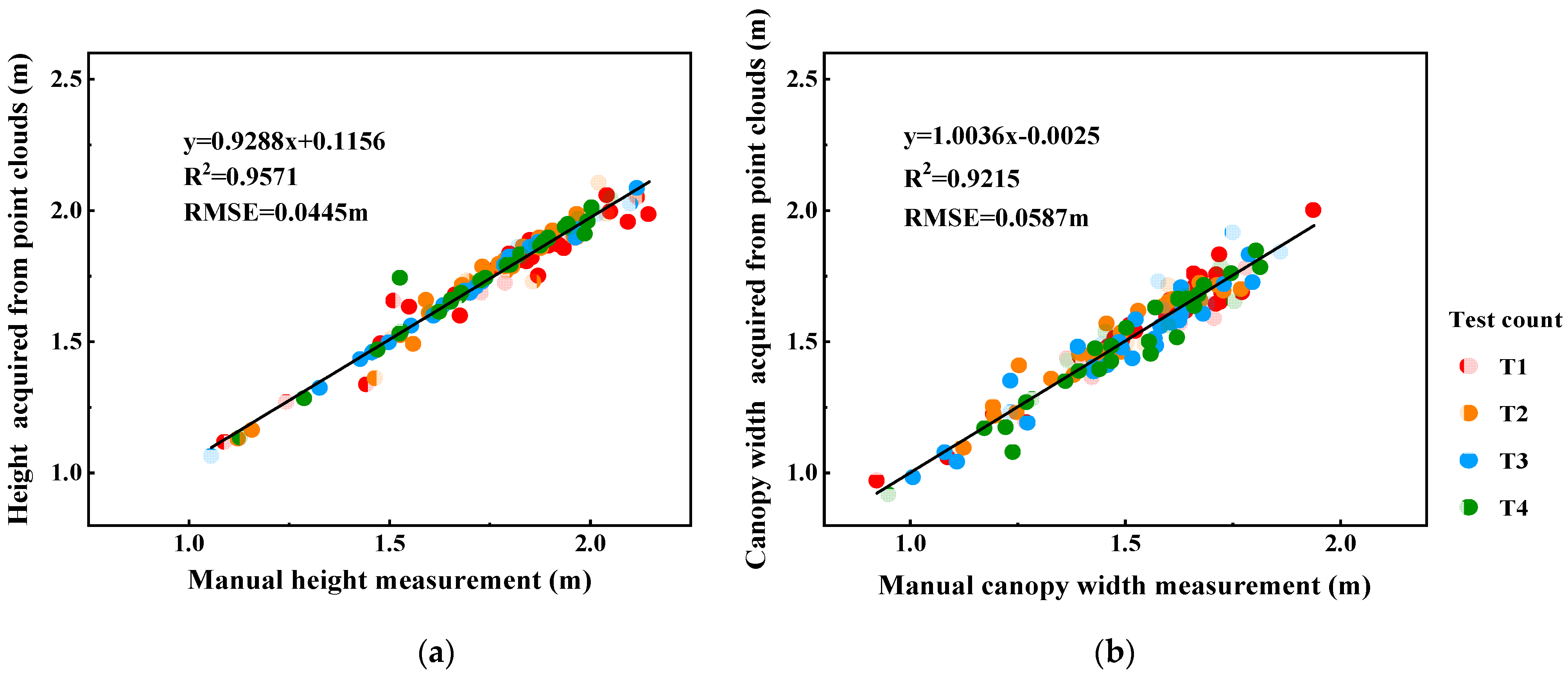

3.2. Accuracy of Phenotypic Parameters Acquisition Model

4. Discussion

5. Conclusions

Author Contributions

Funding

Conflicts of Interest


| Method | mIoU (%) | Segmentation Accuracy: Tree (%) | Segmentation Accuracy: Ground (%) |
|---|---|---|---|
| PointNet++ | 53.72 | 27.78 | 79.67 |
| MinkowskiNet | 94.57 | 90.82 | 98.32 |
| FPConv | 81.92 | 68.68 | 95.16 |
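In every row of the segmentation table, the mIoU equals the arithmetic mean of the tree and ground values (for example, (90.82 + 98.32) / 2 = 94.57 for MinkowskiNet), which suggests that the per-class columns are per-class intersection-over-union (IoU) scores. Assuming that interpretation, the sketch below computes per-class IoU = TP / (TP + FP + FN) from predicted and reference point labels, and the mIoU as their unweighted mean. The function names and the `"tree"`/`"ground"` label values are hypothetical.

```python
from typing import Dict, Hashable, Sequence


def per_class_iou(pred: Sequence[Hashable], ref: Sequence[Hashable],
                  classes: Sequence[Hashable]) -> Dict[Hashable, float]:
    """Per-class IoU = TP / (TP + FP + FN) over point-wise labels."""
    if len(pred) != len(ref):
        raise ValueError("pred and ref must label the same points")
    ious = {}
    for c in classes:
        tp = sum(1 for p, r in zip(pred, ref) if p == c and r == c)
        fp = sum(1 for p, r in zip(pred, ref) if p == c and r != c)
        fn = sum(1 for p, r in zip(pred, ref) if p != c and r == c)
        denom = tp + fp + fn
        # A class absent from both label sets has an undefined IoU.
        ious[c] = tp / denom if denom else float("nan")
    return ious


def mean_iou(ious: Dict[Hashable, float]) -> float:
    """mIoU: unweighted mean of the per-class IoU values."""
    return sum(ious.values()) / len(ious)


# Toy example: five points labelled by a model (pred) and by hand (ref).
pred = ["tree", "tree", "ground", "ground", "ground"]
ref = ["tree", "ground", "ground", "ground", "tree"]
ious = per_class_iou(pred, ref, classes=("tree", "ground"))
print(ious, mean_iou(ious))  # tree = 1/3, ground = 0.5, mIoU = 5/12
```

Because the mean is unweighted, a poorly segmented minority class (such as the tree class for PointNet++) pulls the mIoU down even when the dominant class is segmented well.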

| Tree Number | Height Ht (m) | Diameter D (m) | Volume M1 (m³) | Volume M2 (m³) | Volume M3 (m³) | Volume M4 (m³) |
|---|---|---|---|---|---|---|
| 1 | 1.83 | 1.61 | 2.29 | 2.46 | 1.29 | 2.05 |
| 2 | 1.51 | 1.09 | 0.92 | 0.96 | 0.75 | 0.75 |
| 3 | 1.85 | 1.71 | 2.63 | 2.71 | 1.56 | 2.21 |
| 4 | 2.05 | 1.78 | 3.66 | 3.52 | 1.95 | 2.91 |
| 5 | 1.90 | 1.60 | 2.61 | 2.59 | 1.66 | 2.25 |
| 6 | 1.79 | 1.72 | 3.28 | 3.16 | 1.85 | 2.55 |
| 7 | 1.83 | 1.37 | 2.01 | 1.71 | 1.24 | 1.45 |
| 8 | 1.81 | 1.61 | 2.75 | 2.67 | 1.77 | 2.42 |
| 9 | 2.00 | 1.64 | 2.60 | 3.15 | 2.05 | 2.46 |
| 10 | 1.95 | 1.71 | 3.72 | 3.46 | 2.37 | 3.06 |
| 11 | 1.77 | 1.66 | 2.86 | 2.85 | 1.98 | 2.26 |
| 12 | 1.73 | 1.63 | 2.11 | 2.01 | 1.91 | 2.09 |
| 13 | 2.05 | 1.94 | 4.28 | 4.64 | 2.90 | 3.98 |
| 14 | 2.10 | 1.77 | 3.28 | 4.08 | 2.53 | 3.57 |
| 15 | 1.86 | 1.51 | 2.33 | 2.66 | 1.81 | 2.28 |
| 16 | 1.95 | 1.68 | 3.04 | 3.80 | 2.28 | 3.15 |
| 17 | 2.10 | 1.72 | 3.07 | 3.24 | 1.75 | 3.19 |
| 18 | 1.90 | 1.71 | 2.63 | 3.13 | 1.63 | 2.78 |
| 19 | 1.95 | 1.49 | 2.40 | 2.70 | 1.59 | 2.41 |
| 20 | 2.20 | 1.73 | 3.05 | 3.61 | 2.20 | 3.18 |
| 21 | 1.98 | 1.66 | 2.68 | 2.71 | 1.64 | 2.38 |
| 22 | 1.87 | 1.48 | 2.29 | 2.35 | 1.51 | 1.96 |
| 23 | 1.90 | 1.60 | 2.47 | 2.69 | 1.60 | 2.28 |
| 24 | 1.46 | 1.27 | 0.88 | 1.25 | 1.05 | 1.18 |
| 25 | 1.53 | 1.46 | 1.90 | 1.74 | 1.21 | 1.70 |
| 26 | 1.11 | 0.92 | 0.57 | 0.59 | 0.40 | 0.48 |
| 27 | 1.85 | 1.52 | 2.24 | 2.32 | 1.59 | 2.17 |
| 28 | 1.39 | 1.19 | 0.99 | 1.11 | 0.79 | 1.03 |
| 29 | 1.60 | 1.44 | 1.56 | 2.02 | 1.24 | 1.71 |
| 30 | 1.73 | 1.40 | 1.75 | 2.06 | 1.53 | 1.91 |
| 31 | 1.79 | 1.42 | 1.69 | 2.02 | 1.35 | 1.71 |
| 32 | 1.93 | 1.46 | 2.05 | 2.25 | 1.66 | 2.21 |
| Max | 2.20 | 1.94 | 4.28 | 4.64 | 2.90 | 3.98 |
| Min | 1.11 | 0.92 | 0.88 | 0.59 | 0.40 | 0.48 |
| Mean | 1.78 | 1.55 | 2.39 | 2.57 | 1.64 | 2.25 |
| S.D. | 0.24 | 0.21 | 0.85 | 0.91 | 0.52 | 0.77 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Qi, Y.; Dong, X.; Chen, P.; Lee, K.-H.; Lan, Y.; Lu, X.; Jia, R.; Deng, J.; Zhang, Y. Canopy Volume Extraction of Citrus reticulate Blanco cv. Shatangju Trees Using UAV Image-Based Point Cloud Deep Learning. Remote Sens. 2021, 13, 3437. https://doi.org/10.3390/rs13173437


Chicago/Turabian Style: Qi, Yuan, Xuhua Dong, Pengchao Chen, Kyeong-Hwan Lee, Yubin Lan, Xiaoyang Lu, Ruichang Jia, Jizhong Deng, and Yali Zhang. 2021. "Canopy Volume Extraction of Citrus reticulate Blanco cv. Shatangju Trees Using UAV Image-Based Point Cloud Deep Learning" Remote Sensing 13, no. 17: 3437. https://doi.org/10.3390/rs13173437

APA Style: Qi, Y., Dong, X., Chen, P., Lee, K.-H., Lan, Y., Lu, X., Jia, R., Deng, J., & Zhang, Y. (2021). Canopy Volume Extraction of Citrus reticulate Blanco cv. Shatangju Trees Using UAV Image-Based Point Cloud Deep Learning. Remote Sensing, 13(17), 3437. https://doi.org/10.3390/rs13173437