Small-Sized Ship Detection Nearshore Based on Lightweight Active Learning Model with a Small Number of Labeled Data for SAR Imagery

Abstract

1. Introduction

- A boundary box distance is proposed to further optimize the candidate targets, making their boundaries more reasonable;

- In the training stage, the proposed method achieves better performance with a small number of labeled data;

- In the ship detection stage, the proposed method is suited to detecting small-sized ships nearshore.

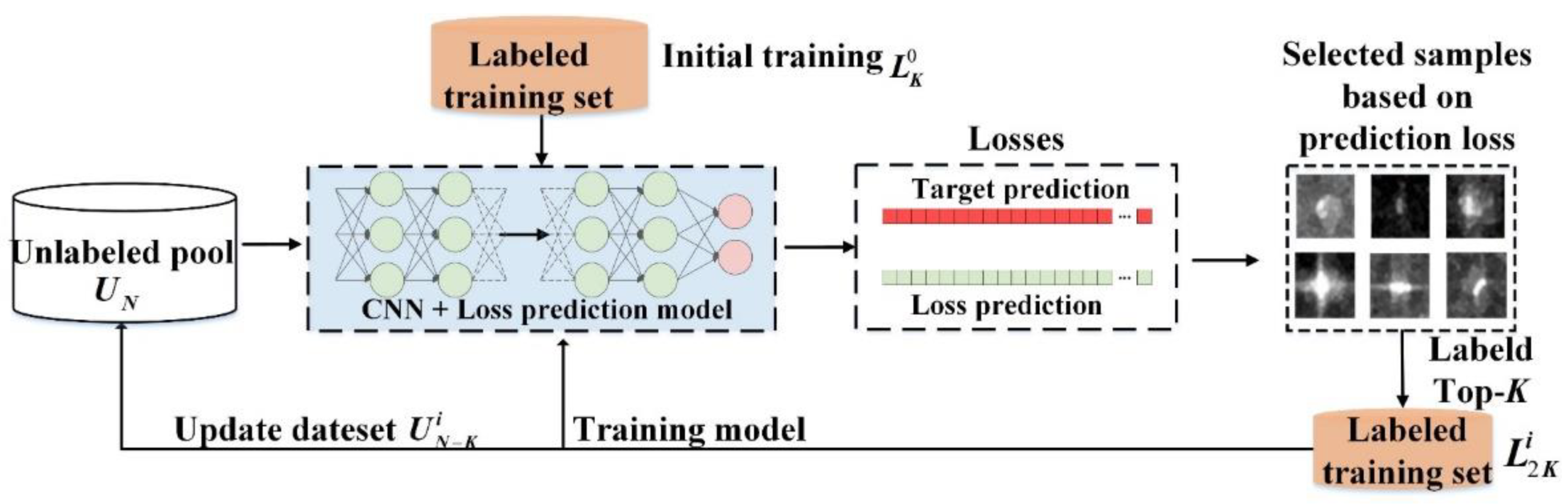

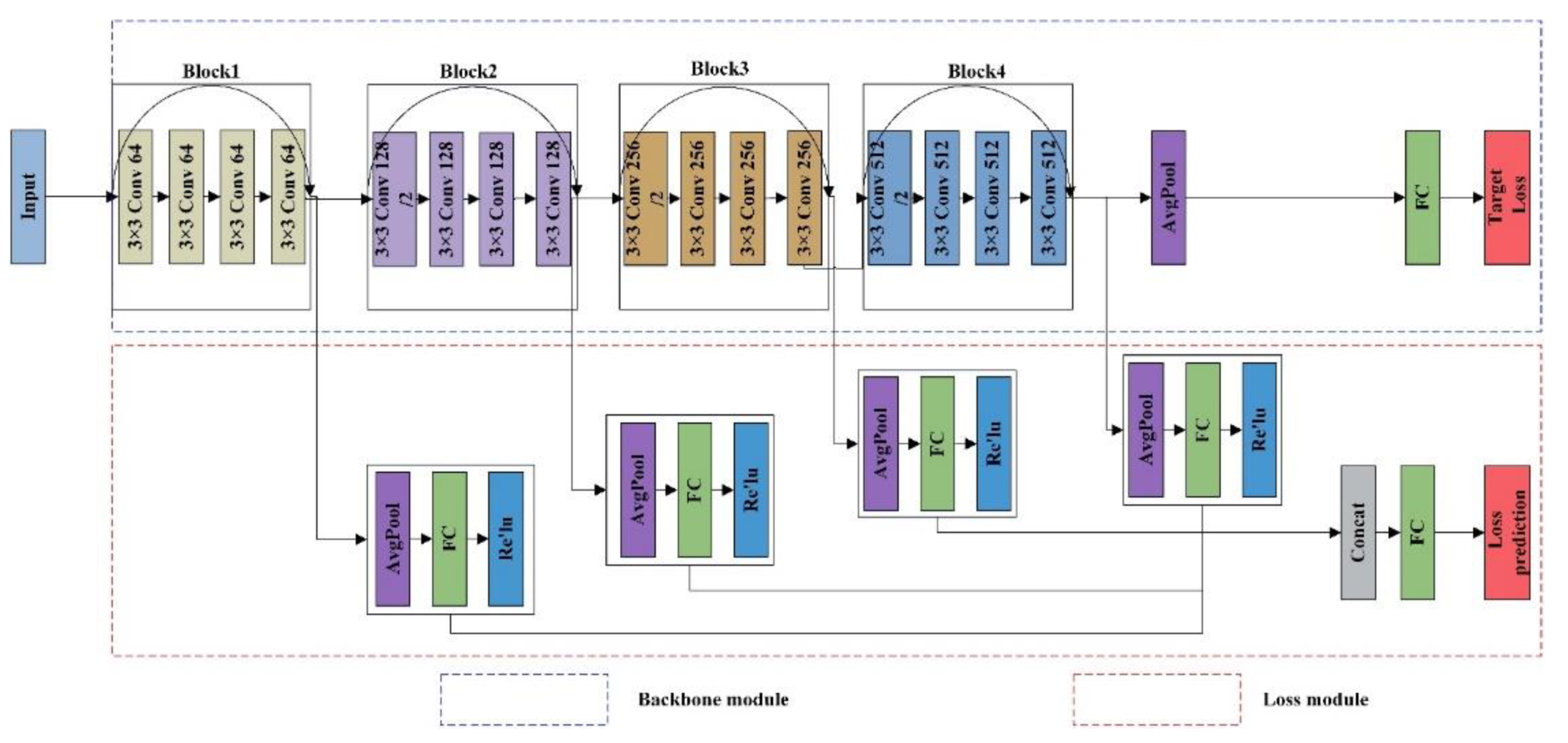

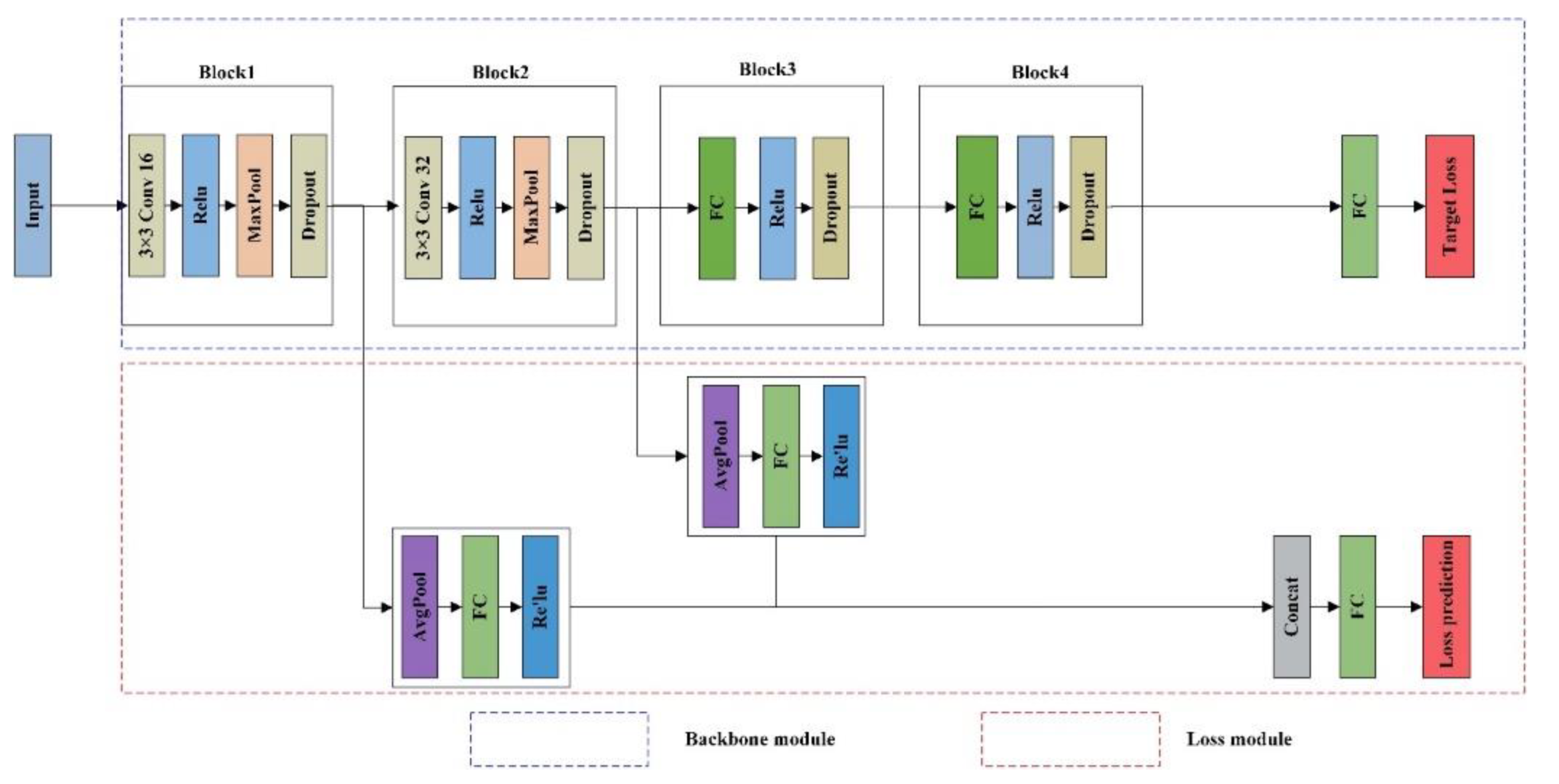

2. Methods

| Algorithm 1. Pretraining of the proposed learning model |
|---|
| for cycles do |
| for i epoch do |
| if cycles == 0 |
| Input: initial labeled data and unlabeled data |
| else |
| 1. Train the lightweight CNN with the embedded active learning scheme and optimize it by stochastic gradient descent. The loss is computed from the target loss and the predicted loss from the loss prediction module. |
| 2. Then, take the data samples with the highest predicted losses as the most uncertain ones. |
| 3. Update the labeled dataset and the unlabeled dataset, respectively. |
| end for |
| end for |
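
The outer loop of Algorithm 1 can be illustrated with a minimal sketch. It is not the authors' implementation: the function names (`select_most_uncertain`, `active_learning_cycles`, `dummy_train_and_predict`), the per-cycle budget `k`, and the representation of samples as integer ids are all assumptions made for illustration. The real training of the lightweight CNN and its loss prediction module is replaced by a placeholder that returns a predicted loss for each unlabeled sample.

```python
def select_most_uncertain(predicted_losses, k):
    """Return the ids of the k samples with the highest predicted loss."""
    ranked = sorted(predicted_losses, key=predicted_losses.get, reverse=True)
    return ranked[:k]


def active_learning_cycles(labeled, unlabeled, train_and_predict, cycles, k):
    """Run a pool-based active learning loop over sets of sample ids."""
    labeled, unlabeled = set(labeled), set(unlabeled)
    for _ in range(cycles):
        if not unlabeled:
            break
        # Steps 1-2: train on the labeled pool, then score the unlabeled pool.
        predicted_losses = train_and_predict(labeled, unlabeled)
        chosen = select_most_uncertain(predicted_losses, k)
        # Step 3: move the chosen samples from the unlabeled to the labeled pool
        # (in practice they would be annotated at this point).
        labeled.update(chosen)
        unlabeled.difference_update(chosen)
    return labeled, unlabeled


def dummy_train_and_predict(labeled, unlabeled):
    # Hypothetical stand-in for CNN training: larger ids get larger losses.
    return {sample_id: float(sample_id) for sample_id in unlabeled}


labeled, unlabeled = active_learning_cycles(
    range(5), range(5, 20), dummy_train_and_predict, cycles=3, k=4
)
print(len(labeled), len(unlabeled))  # 17 3
```

Ranking by predicted loss rather than by the true loss is what allows the selection to run on unlabeled data, since the true loss cannot be computed without labels.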

3. Results

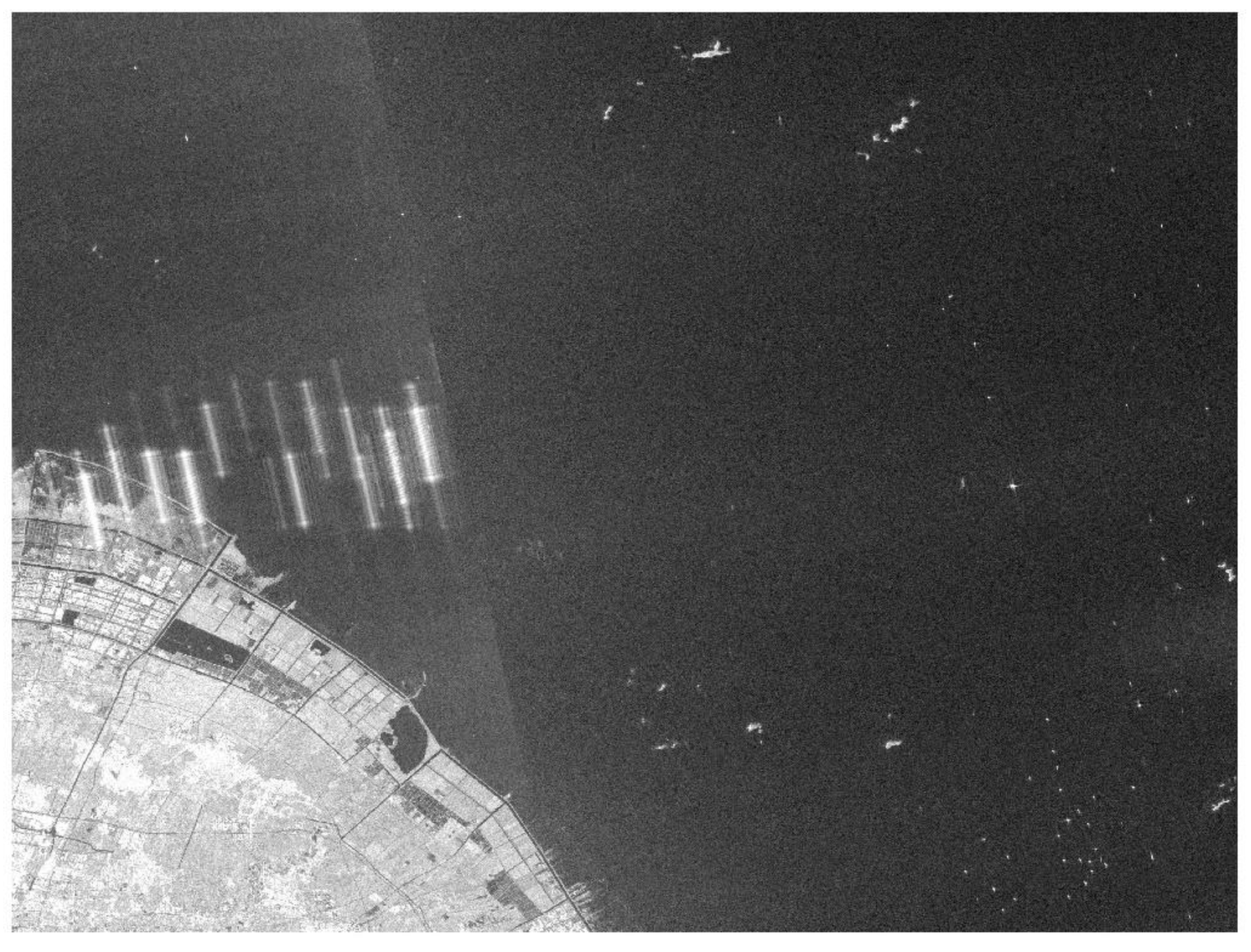

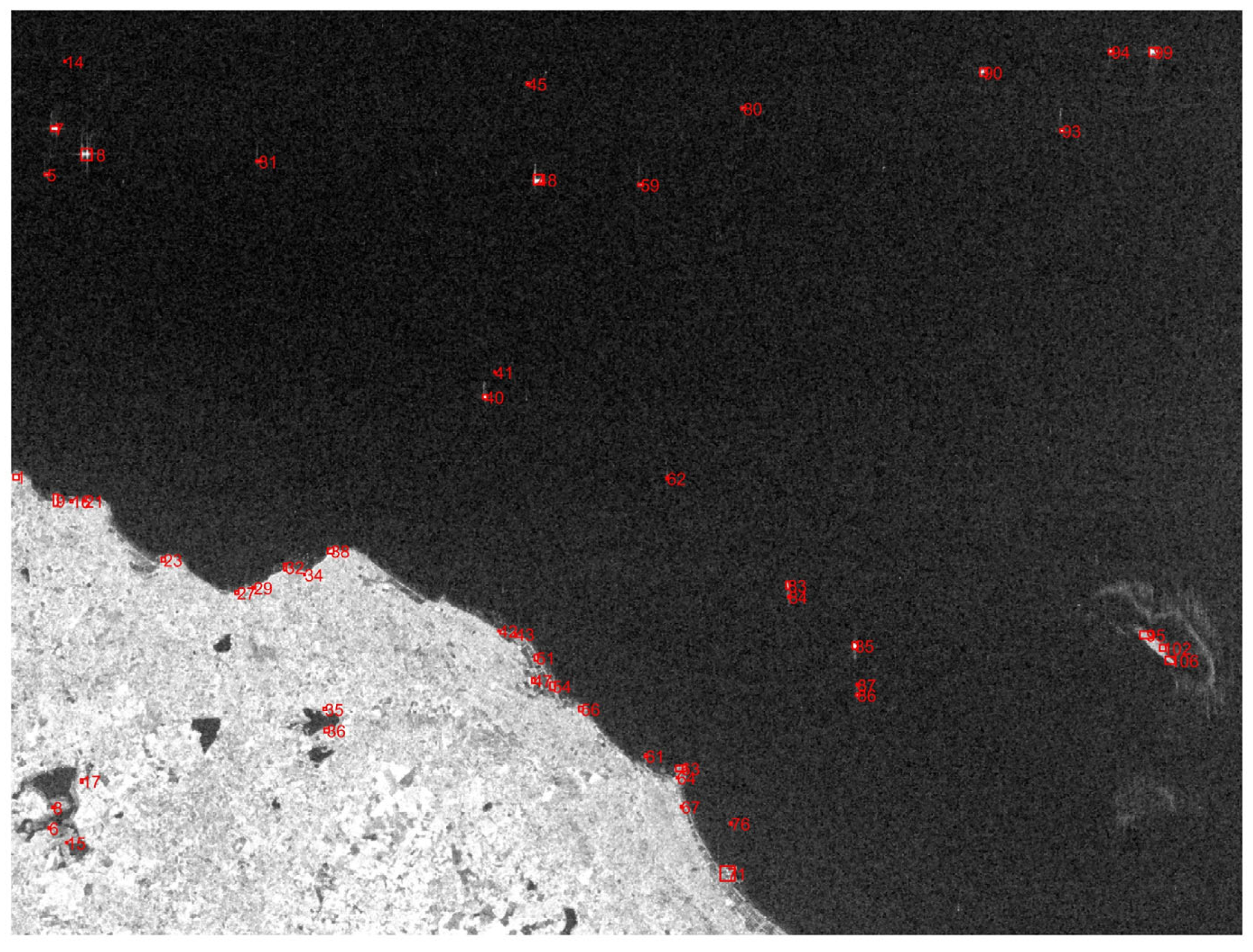

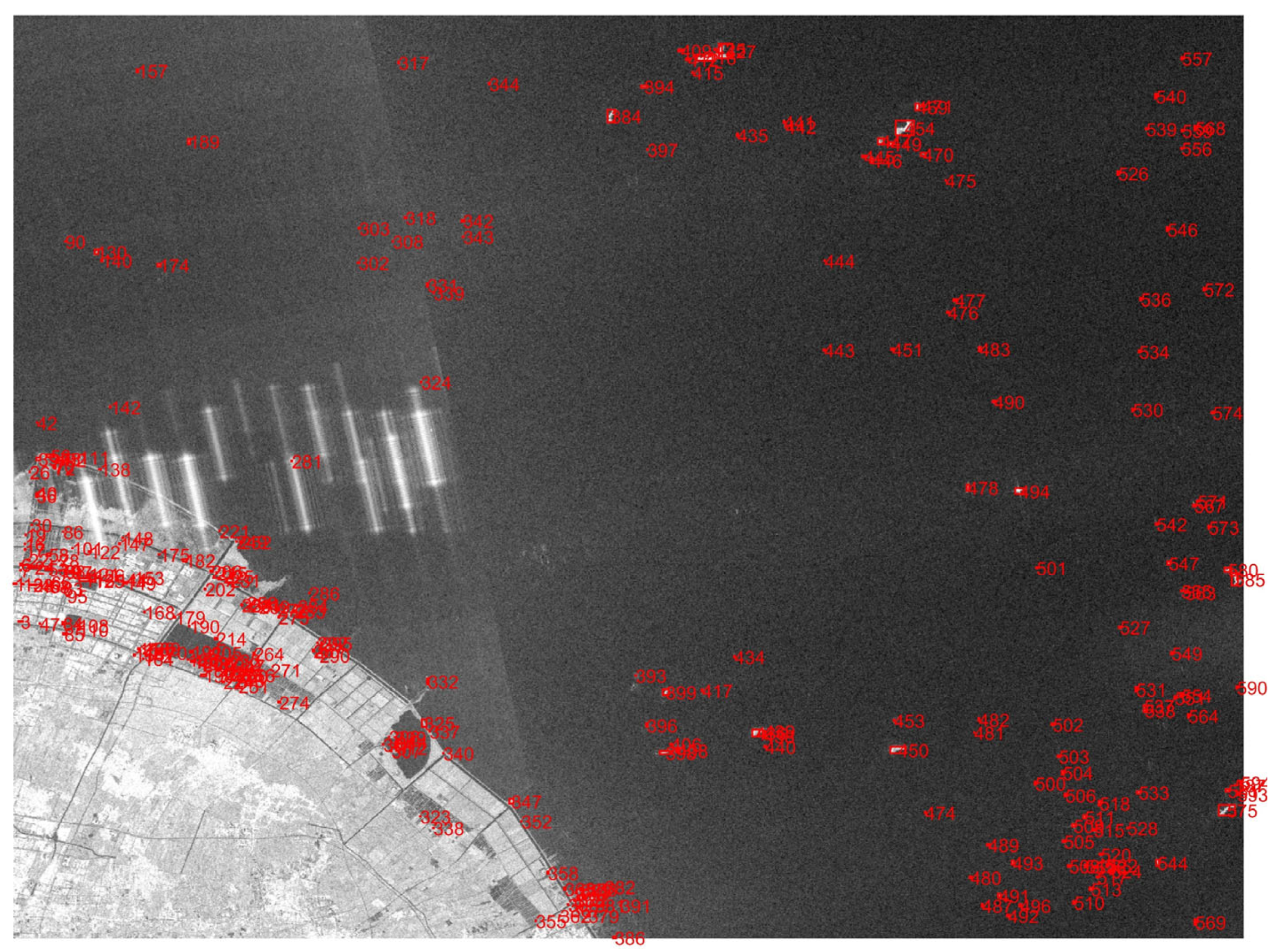

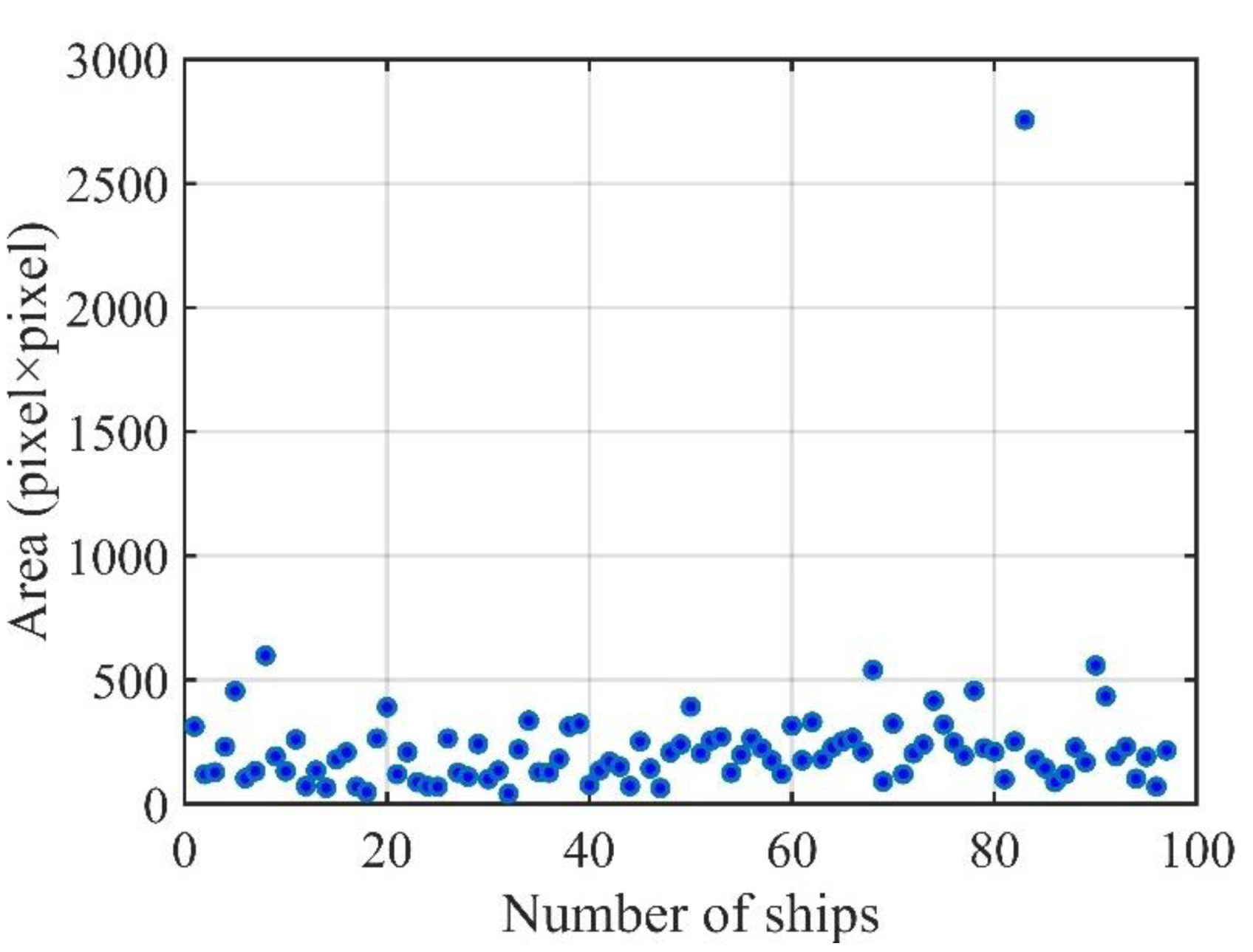

3.1. Dataset

3.2. Training Details

3.3. Evaluation Indexes

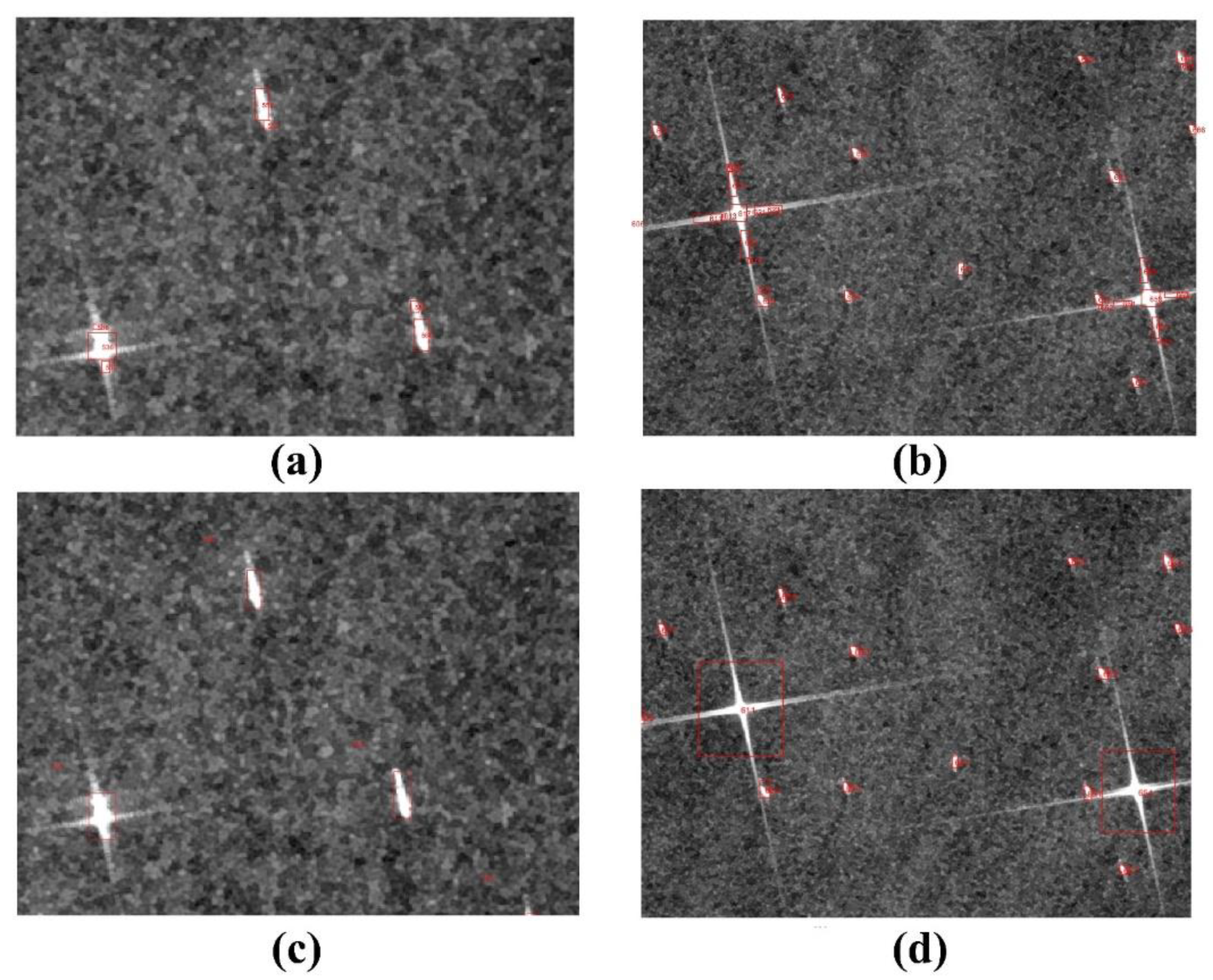

3.4. Candidate Detection

3.5. Boundary Box Optimization

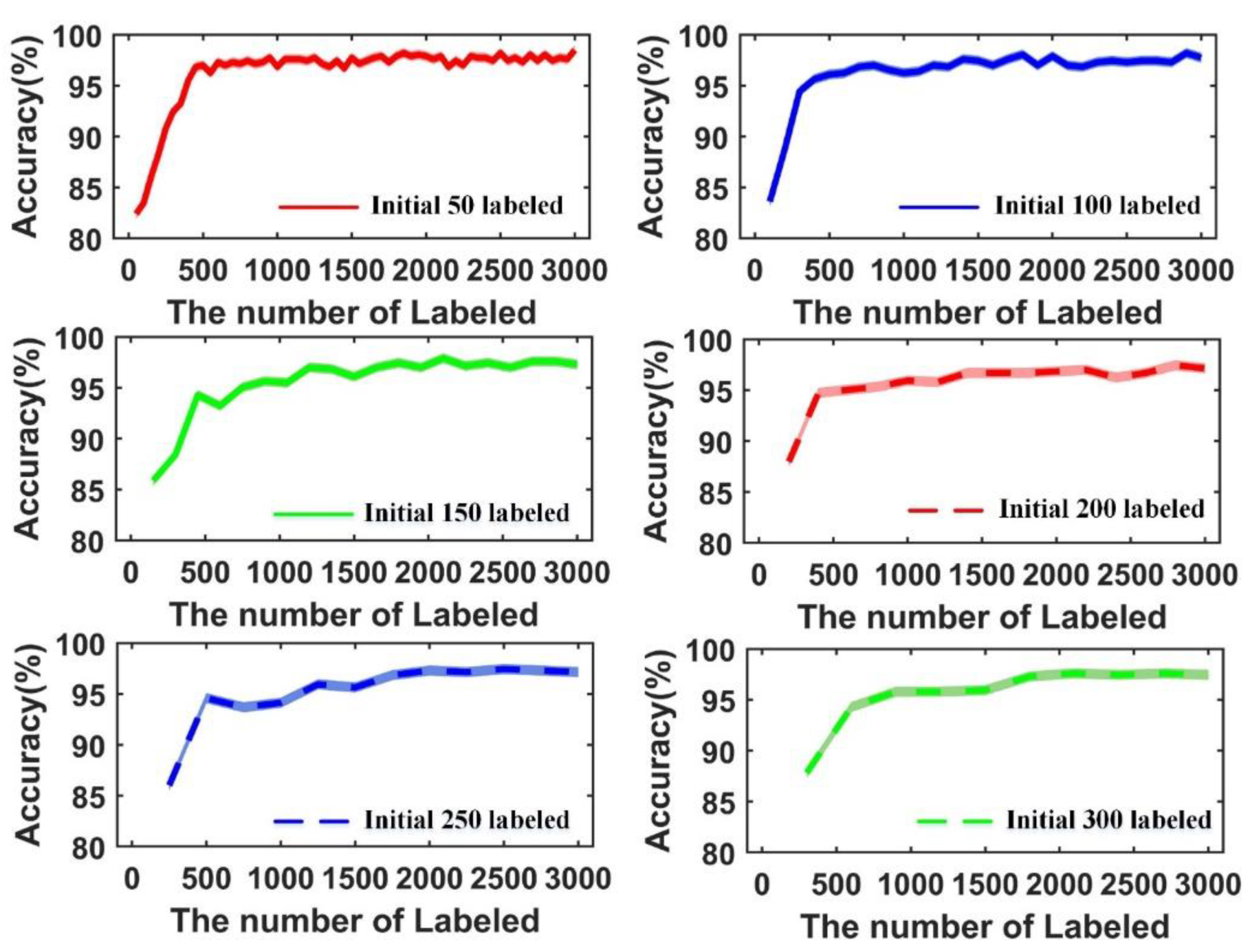

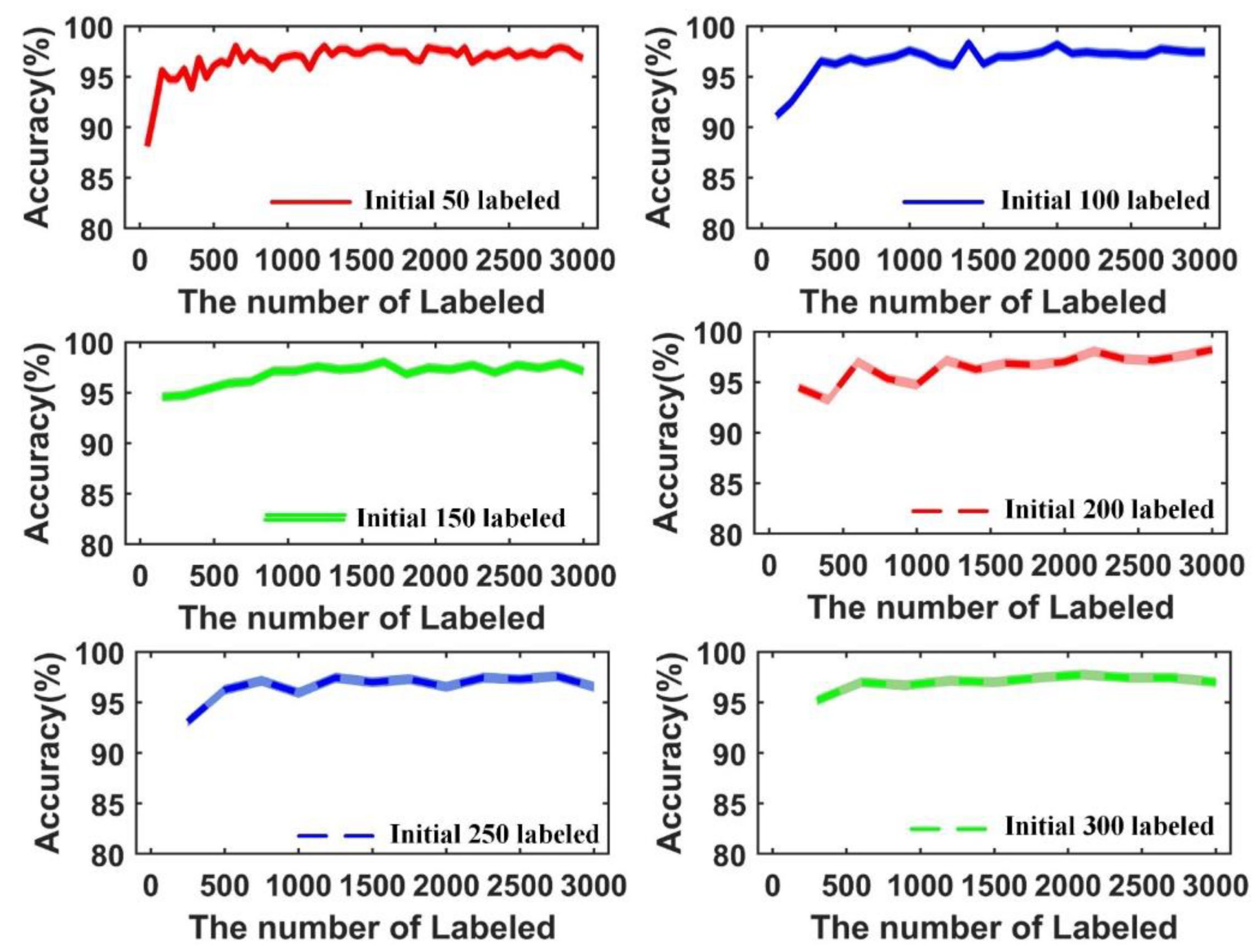

3.6. Effect of the Size of the Initial Labeled Training Set

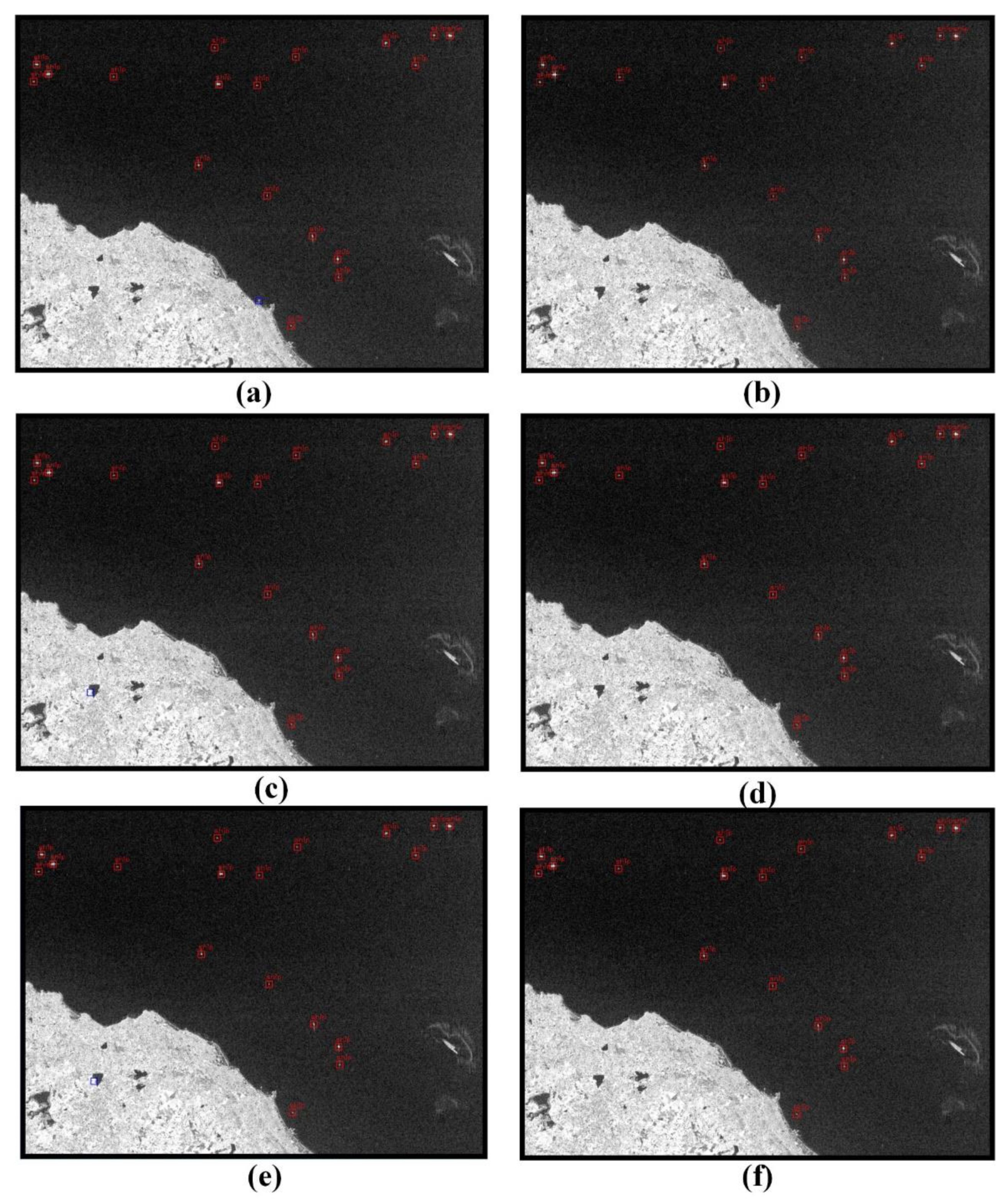

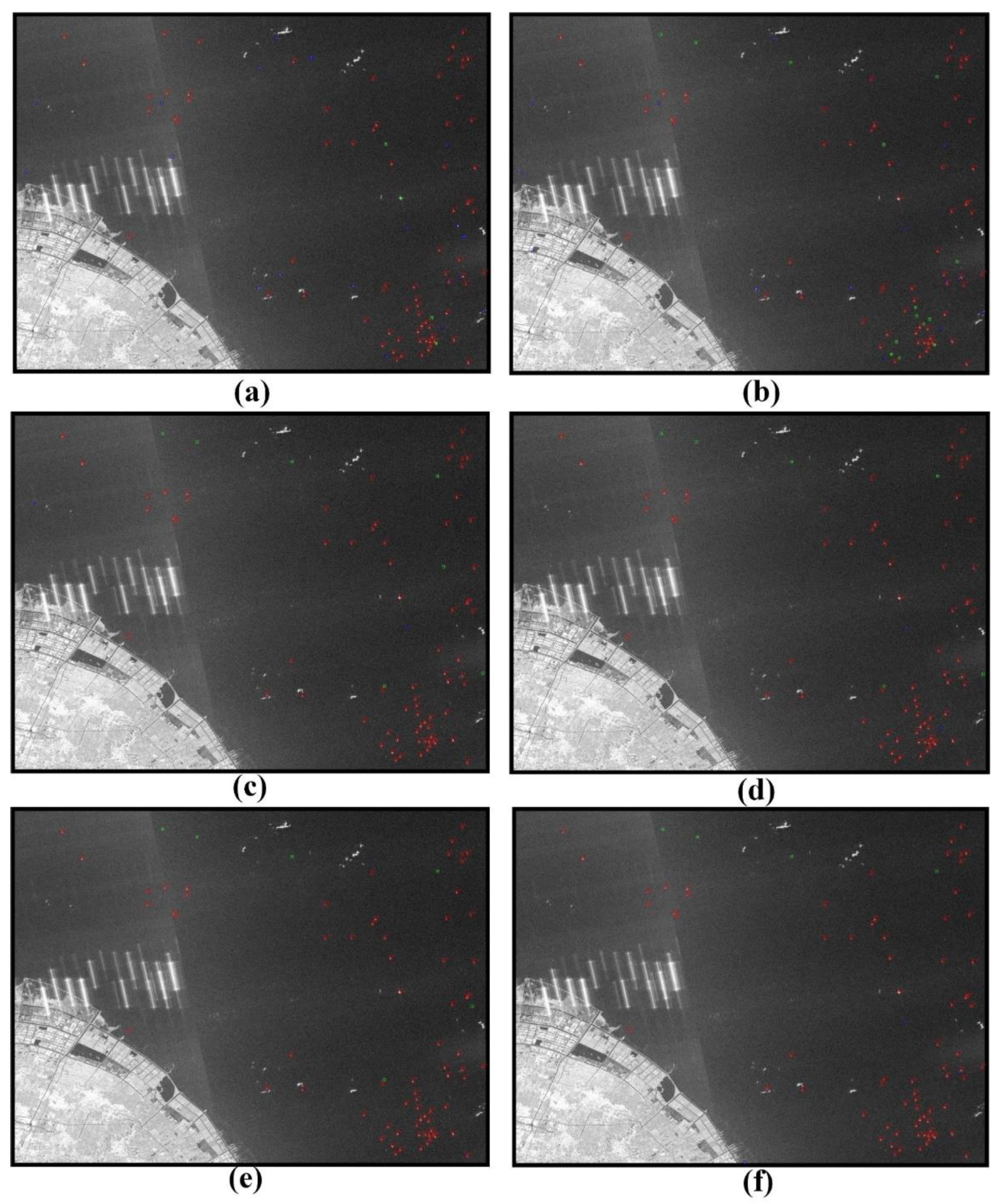

3.7. Comparison of the Results Derived by Different Methods

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Lee, J.-S.; Pottier, E. Polarimetric Radar Imaging: From Basics to Applications; CRC Press: Boca Raton, FL, USA, 2009.

- Geng, X.; Li, X.-M.; Velotto, D.; Chen, K.-S. Study of the polarimetric characteristics of mud flats in an intertidal zone using C- and X-band spaceborne SAR data. Remote Sens. Environ. 2016, 176, 56–68.

- Ai, J.; Qi, X.; Yu, W.; Deng, Y.; Liu, F.; Shi, L. A New CFAR Ship Detection Algorithm Based on 2-D Joint Log-Normal Distribution in SAR Images. IEEE Geosci. Remote Sens. Lett. 2010, 7, 806–810.

- An, W.; Xie, C.; Yuan, X. An Improved Iterative Censoring Scheme for CFAR Ship Detection With SAR Imagery. IEEE Trans. Geosci. Remote Sens. 2014, 52, 4585–4595.

- Leng, X.; Ji, K.; Yang, K.; Zou, H. A Bilateral CFAR Algorithm for Ship Detection in SAR Images. IEEE Geosci. Remote Sens. Lett. 2015, 12, 1536–1540.

- Hazra, A.; Choudhary, P.; Sheetal Singh, M. Recent Advances in Deep Learning Techniques and Its Applications: An Overview. Adv. Biomed. Eng. Technol. 2021, 103–122.

- Li, J.; Qu, C.; Shao, J. Ship detection in SAR images based on an improved faster R-CNN. In Proceedings of the 2017 SAR in Big Data Era: Models, Methods and Applications (BIGSARDATA), Beijing, China, 13–14 November 2017; pp. 1–6.

- Wang, Y.; Wang, C.; Zhang, H.; Dong, Y.; Wei, S. A SAR dataset of ship detection for deep learning under complex backgrounds. Remote Sens. 2019, 11, 765.

- Wang, Y.; Wang, C.; Zhang, H.; Dong, Y.; Wei, S. Automatic Ship Detection Based on RetinaNet Using Multi-Resolution Gaofen-3 Imagery. Remote Sens. 2019, 11, 531.

- Chen, C.; He, C.; Hu, C.; Pei, H.; Jiao, L. A deep neural network based on an attention mechanism for SAR ship detection in multiscale and complex scenarios. IEEE Access 2019, 7, 104848–104863.

- Cui, Z.; Li, Q.; Cao, Z.; Liu, N. Dense attention pyramid networks for multi-scale ship detection in SAR images. IEEE Trans. Geosci. Remote Sens. 2019, 57, 8983–8997.

- Zhao, Y.; Zhao, L.; Xiong, B.; Kuang, G. Attention receptive pyramid network for ship detection in SAR images. IEEE J. Sel. Top. Appl. Earth Observ. 2020, 13, 2738–2756.

- Dai, W.; Mao, Y.; Yuan, R.; Liu, Y.; Pu, X.; Li, C. A Novel Detector Based on Convolution Neural Networks for Multiscale SAR Ship Detection in Complex Background. Sensors 2020, 20, 2547.

- Wei, S.; Zeng, X.; Qu, Q.; Wang, M.; Su, H.; Shi, J. HRSID: A high-resolution SAR images dataset for ship detection and instance segmentation. IEEE Access 2020, 8, 120234–120254.

- Chen, P.; Li, Y.; Zhou, H.; Liu, B.; Liu, P. Detection of Small Ship Objects Using Anchor Boxes Cluster and Feature Pyramid Network Model for SAR Imagery. J. Mar. Sci. Eng. 2020, 8, 112.

- Fu, J.; Sun, X.; Wang, Z.; Fu, K. An anchor-free method based on feature balancing and refinement network for multiscale ship detection in SAR images. IEEE Trans. Geosci. Remote Sens. 2020, 59, 1331–1344.

- Zhang, G.; Li, Z.; Li, X.; Yin, C.; Shi, Z. A Novel Salient Feature Fusion Method for Ship Detection in Synthetic Aperture Radar Images. IEEE Access 2020, 8, 215904–215914.

- Xu, C.; Yin, C.; Wang, D.; Han, W. Fast ship detection combining visual saliency and a cascade CNN in SAR images. IET Radar Sonar Navig. 2020, 14, 1879–1887.

- Wang, Z.; Yang, T.; Zhang, H. Land contained sea area ship detection using spaceborne image. Pattern Recognit. Lett. 2020, 130, 125–131.

- Geng, X.; Shi, L.; Yang, J.; Li, P.; Zhao, L.; Sun, W.; Zhao, J. Ship Detection and Feature Visualization Analysis Based on Lightweight CNN in VH and VV Polarization Images. Remote Sens. 2021, 13, 1184.

- Liu, G.; Zhang, X.; Meng, J. A Small Ship Target Detection Method Based on Polarimetric SAR. Remote Sens. 2019, 11, 2938.

- Liu, A.K.; Peng, C.Y.; Weingartner, T.J. Ocean-ice interaction in the marginal ice zone. J. Geophys. Res. 1994, 99, 22391–22400.

- Villas Bôas, A.B.; Ardhuin, F.; Ayet, A.; Bourassa, M.A.; Brandt, P.; Chapron, B.; Cornuelle, B.D.; Farrar, J.T.; Fewings, M.R.; Fox-Kemper, B.; et al. Integrated Observations of Global Surface Winds, Currents, and Waves: Requirements and Challenges for the Next Decade. Front. Mar. Sci. 2019, 6, 425.

- Yoo, D.; Kweon, I.S. Learning loss for active learning. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 93–102.

- Settles, B. Active Learning Literature Survey; University of Wisconsin: Madison, WI, USA, 2009.

- Ren, P.; Xiao, Y.; Chang, X.; Huang, P.-Y.; Li, Z.; Chen, X.; Wang, X. A Survey of Deep Active Learning. arXiv 2020, arXiv:2009.00236.

- Lewis, D.D.; Catlett, J. Heterogeneous uncertainty sampling for supervised learning. In Machine Learning Proceedings 1994; Elsevier: Amsterdam, The Netherlands, 1994; pp. 148–156.

- Yang, L.; Zhang, Y.; Chen, J.; Zhang, S.; Chen, D.Z. Suggestive annotation: A deep active learning framework for biomedical image segmentation. In Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention, Quebec City, QC, Canada, 11–13 September 2017; pp. 399–407.

- Vezhnevets, A.; Buhmann, J.M.; Ferrari, V. Active learning for semantic segmentation with expected change. In Proceedings of the 2012 IEEE Conference on Computer Vision and Pattern Recognition, Providence, RI, USA, 16–21 June 2012; pp. 3162–3169.

- Siddiqui, Y.; Valentin, J.; Nießner, M. Viewal: Active learning with viewpoint entropy for semantic segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 9433–9443.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Pelich, R.; Longépé, N.; Mercier, G.; Hajduch, G.; Garello, R. Performance evaluation of Sentinel-1 data in SAR ship detection. In Proceedings of the 2015 IEEE International Geoscience and Remote Sensing Symposium (IGARSS), Milan, Italy, 26–31 July 2015; pp. 2103–2106.

- Leng, X.; Ji, K.; Zhou, S.; Xing, X.; Zou, H. Discriminating Ship From Radio Frequency Interference Based on Noncircularity and Non-Gaussianity in Sentinel-1 SAR Imagery. IEEE Trans. Geosci. Remote Sens. 2018, 57, 352–363.

- Sun, Y.; Li, X.M. Denoising Sentinel-1 Extra-Wide Mode Cross-Polarization Images Over Sea Ice. IEEE Trans. Geosci. Remote Sens. 2020, 59, 2116–2131.

- Zhu, X.; He, F.; Ye, F.; Dong, Z.; Wu, M. Sidelobe Suppression with Resolution Maintenance for SAR Images via Sparse Representation. Sensors 2018, 18, 1589.

- Stankwitz, H.C.; Dallaire, R.J.; Fienup, J.R. Nonlinear apodization for sidelobe control in SAR imagery. IEEE Trans. Aerosp. Electron. Syst. 1995, 31, 267–279.

- Tzutalin, D. LabelImg. GitHub Code. 2015. Available online: https://github.com/tzutalin/labelImg (accessed on 16 July 2021).

- Lin, T.-Y.; Maire, M.; Belongie, S.; Hays, J.; Perona, P.; Ramanan, D.; Dollár, P.; Zitnick, C.L. Microsoft COCO: Common objects in context. In Proceedings of the European Conference on Computer Vision-ECCV 2014, Zurich, Switzerland, 6–12 September 2014; pp. 740–755.

- Snapir, B.; Waine, T.W.; Biermann, L. Maritime Vessel Classification to Monitor Fisheries with SAR: Demonstration in the North Sea. Remote Sens. 2019, 11, 353.

- Ma, M.; Chen, J.; Liu, W.; Yang, W. Ship Classification and Detection Based on CNN Using GF-3 SAR Images. Remote Sens. 2018, 10, 2043.

- Wang, Y.; Li, W.; Li, X.; Sun, X. Ship Detection by Modified RetinaNet. In Proceedings of the 2018 10th IAPR Workshop on Pattern Recognition in Remote Sensing (PRRS) 2018, Beijing, China, 19–20 August 2018; pp. 1–5.

- Zhao, J.; Zhang, Z.; Yu, W.; Truong, T.-K. A Cascade Coupled Convolutional Neural Network Guided Visual Attention Method for Ship Detection From SAR Images. IEEE Access 2018, 6, 50693–50708.

- Chang, Y.-L.; Anagaw, A.; Chang, L.; Wang, Y.C.; Hsiao, C.-Y.; Lee, W.-H. Ship Detection Based on YOLOv2 for SAR Imagery. Remote Sens. 2019, 11, 786.

- Liu, Y.; Zhang, M.H.; Xu, P.; Guo, Z.W. SAR ship detection using sea-land segmentation-based convolutional neural network. In Proceedings of the 2017 International Workshop on Remote Sensing with Intelligent Processing (RSIP), Shanghai, China, 18–21 May 2017; pp. 1–4.

- He, K.; Girshick, R.; Dollar, P. Rethinking ImageNet Pre-Training. In Proceedings of the 2019 IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Korea, 27 October–2 November 2019; pp. 4917–4926.

- Tings, B.; Bentes, C.; Velotto, D.; Voinov, S. Modelling ship detectability depending on TerraSAR-X-derived metocean parameters. CEAS Space J. 2019, 11, 81–94.

- Zhang, T.; Zhang, X.; Ke, X.; Zhan, X.; Shi, J.; Wei, S.; Pan, D.; Li, J.; Su, H.; Zhou, Y.; et al. LS-SSDD-v1.0: A Deep Learning Dataset Dedicated to Small Ship Detection from Large-Scale Sentinel-1 SAR Images. Remote Sens. 2020, 12, 2997.

| Name | Count | Polarization |
|---|---|---|
| Ships | 1566 | VH |
| False alarms | 2099 | VH |

| No. | Time (UTC) | Polarization | Resolution (m) | Swath (km) |
|---|---|---|---|---|
| 1 | 12 January 2019, 09:53 | VH, VV | 10 | 250 |
| 2 | 7 December 2020, 10:48 | VH, VV | 10 | 250 |

| Index | Position | Distance |
|---|---|---|
| 1 | Top | |
| 2 | Bottom | |
| 3 | Left | |
| 4 | Right | |
| 5 | Top and Left | |
| 6 | Top and Right | |
| 7 | Bottom and Left | |
| 8 | Bottom and Right | |

| Strategy | Method | Initial Labeled Training Set Size | Min Accuracy (%) | Max Accuracy (%) | Average Accuracy (%) | Time |
|---|---|---|---|---|---|---|
| Active-learning | Improved M-LeNet | 50 | 82.41 | 98.50 | 96.36 | 2.32 h |
| Active-learning | Improved M-LeNet | 100 | 83.61 | 98.19 | 96.27 | 1.17 h |
| Active-learning | Improved M-LeNet | 150 | 85.86 | 97.90 | 95.56 | 47 min |
| Active-learning | Improved M-LeNet | 200 | 87.96 | 97.44 | 95.75 | 36 min |
| Active-learning | Improved M-LeNet | 250 | 86.01 | 97.44 | 95.26 | 29 min |
| Active-learning | Improved M-LeNet | 300 | 87.97 | 97.44 | 95.70 | 26 min |
| Active-learning | Improved ResNet18 | 50 | 96.54 | 98.50 | 96.04 | 2.87 h |
| Active-learning | Improved ResNet18 | 100 | 93.38 | 98.50 | 97.37 | 1.46 h |
| Active-learning | Improved ResNet18 | 150 | 96.69 | 98.65 | 97.81 | 59 min |
| Active-learning | Improved ResNet18 | 200 | 96.39 | 98.20 | 97.77 | 45 min |
| Active-learning | Improved ResNet18 | 250 | 97.14 | 98.50 | 97.79 | 36 min |
| Active-learning | Improved ResNet18 | 300 | 94.44 | 97.94 | 97.75 | 31 min |
| Supervised | M-LeNet | 2932 | 90.44 | 97.97 | 97.08 | 1.18 h |
| Supervised | ResNet18 | 2932 | 92.90 | 99.13 | 98.67 | 2.13 h |
| Supervised | RF | 2932 | - | - | 95.27 | - |
| Supervised | SVM | 2932 | - | - | 94.54 | - |
| Supervised | CNN [19] | 2932 | - | - | 97.20 | - |

| Strategy | Method | Accuracy (%) | Recall | Precision | F1-Score | Missed | False | Detected |
|---|---|---|---|---|---|---|---|---|
| Active-learning | M-LeNet-50 | 100 | 1.0 | 1.0 | 1.0 | 0 | 0 | 18 |
| Active-learning | M-LeNet-100 | 100 | 1.0 | 1.0 | 1.0 | 0 | 0 | 18 |
| Active-learning | M-LeNet-150 | 99.08 | 0.94 | 0.94 | 0.94 | 1 | 0 | 17 |
| Active-learning | M-LeNet-200 | 98.16 | 0.78 | 1.0 | 0.88 | 4 | 0 | 14 |
| Active-learning | M-LeNet-250 | 99.08 | 0.94 | 0.94 | 0.94 | 1 | 0 | 17 |
| Active-learning | M-LeNet-300 | 99.08 | 0.94 | 0.94 | 0.94 | 1 | 0 | 17 |
| Active-learning | ResNet-50 | 100 | 1.0 | 1.0 | 1.0 | 0 | 0 | 18 |
| Active-learning | ResNet-100 | 100 | 1.0 | 1.0 | 1.0 | 0 | 0 | 18 |
| Active-learning | ResNet-150 | 98.62 | 1.0 | 0.86 | 0.92 | 0 | 3 | 18 |
| Active-learning | ResNet-200 | 99.54 | 1.0 | 0.95 | 0.97 | 0 | 0 | 18 |
| Active-learning | ResNet-250 | 100 | 1.0 | 1.0 | 1.0 | 0 | 0 | 18 |
| Active-learning | ResNet-300 | 100 | 1.0 | 1.0 | 1.0 | 0 | 0 | 18 |
| Supervised | M-LeNet | 99.54 | 1.0 | 0.95 | 0.97 | 0 | 1 | 18 |
| Supervised | ResNet18 | 100 | 1.0 | 1.0 | 1.0 | 0 | 0 | 18 |
| Supervised | SVM | 99.54 | 1.0 | 0.95 | 0.97 | 0 | 1 | 18 |
| Supervised | RF | 100 | 1.0 | 1.0 | 1.0 | 0 | 0 | 18 |

| Strategy | Method | Accuracy (%) | Recall | Precision | F1-Score | Missed | False | Detected |
|---|---|---|---|---|---|---|---|---|
| Active-learning | M-LeNet-50 | 97.78 | 0.92 | 1.0 | 0.96 | 6 | 0 | 73 |
| Active-learning | M-LeNet-100 | 97.41 | 0.92 | 0.99 | 0.95 | 6 | 1 | 72 |
| Active-learning | M-LeNet-150 | 97.78 | 0.92 | 1.0 | 0.96 | 6 | 0 | 73 |
| Active-learning | M-LeNet-200 | 95.93 | 0.89 | 0.97 | 0.93 | 9 | 2 | 70 |
| Active-learning | M-LeNet-250 | 97.04 | 0.90 | 1.0 | 0.95 | 8 | 0 | 71 |
| Active-learning | M-LeNet-300 | 97.04 | 0.92 | 0.97 | 0.95 | 6 | 2 | 73 |
| Active-learning | ResNet-50 | 97.41 | 0.95 | 0.96 | 0.96 | 4 | 3 | 75 |
| Active-learning | ResNet-100 | 97.04 | 0.92 | 0.97 | 0.95 | 6 | 2 | 73 |
| Active-learning | ResNet-150 | 95.19 | 0.90 | 0.93 | 0.92 | 8 | 5 | 71 |
| Active-learning | ResNet-200 | 96.30 | 0.92 | 0.95 | 0.94 | 6 | 4 | 73 |
| Active-learning | ResNet-250 | 96.30 | 0.90 | 0.97 | 0.93 | 8 | 2 | 71 |
| Active-learning | ResNet-300 | 97.04 | 0.92 | 0.97 | 0.95 | 6 | 2 | 73 |
| Supervised | M-LeNet | 96.67 | 0.91 | 0.97 | 0.94 | 7 | 2 | 72 |
| Supervised | ResNet18 | 97.04 | 0.92 | 0.97 | 0.95 | 6 | 2 | 73 |
| Supervised | SVM | 90.74 | 0.95 | 0.78 | 0.86 | 4 | 21 | 75 |
| Supervised | RF | 89.63 | 0.82 | 0.82 | 0.82 | 14 | 14 | 65 |
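
The Recall, Precision, and F1-Score columns in the detection tables can be related to the Missed, False, and Detected counts with the standard definitions. The sketch below is illustrative only: it assumes that "Detected" counts correctly detected ships (true positives), "Missed" counts false negatives, and "False" counts false alarms (false positives). The function name `detection_scores` is hypothetical. Under this reading, the counts of the SVM row (75 detected, 4 missed, 21 false) reproduce the reported 0.95, 0.78, and 0.86.

```python
def detection_scores(detected, missed, false_alarms):
    """Compute recall, precision and F1 from detection counts.

    detected:     correctly detected ships (assumed true positives)
    missed:       ships that were not detected (false negatives)
    false_alarms: non-ship candidates reported as ships (false positives)
    """
    actual = detected + missed          # all ships present in the scene
    reported = detected + false_alarms  # all candidates reported as ships
    recall = detected / actual if actual else 0.0
    precision = detected / reported if reported else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return recall, precision, f1


recall, precision, f1 = detection_scores(detected=75, missed=4, false_alarms=21)
print(f"{recall:.2f} {precision:.2f} {f1:.2f}")  # 0.95 0.78 0.86
```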

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Geng, X.; Zhao, L.; Shi, L.; Yang, J.; Li, P.; Sun, W. Small-Sized Ship Detection Nearshore Based on Lightweight Active Learning Model with a Small Number of Labeled Data for SAR Imagery. Remote Sens. 2021, 13, 3400. https://doi.org/10.3390/rs13173400
