Abstract

Unmanned aerial vehicles-collected (UAVs) digital red–green–blue (RGB) images provided a cost-effective method for precision agriculture applications regarding yield prediction. This study aims to fully explore the potential of UAV-collected RGB images in yield prediction of winter wheat by comparing it to multi-source observations, including thermal, structure, volumetric metrics, and ground-observed leaf area index (LAI) and chlorophyll content under the same level or across different levels of nitrogen fertilization. Color indices are vegetation indices calculated by the vegetation reflectance at visible bands (i.e., red, green, and blue) derived from RGB images. The results showed that some of the color indices collected at the jointing, flowering, and early maturity stages had high correlation (R2 = 0.76–0.93) with wheat grain yield. They gave the highest prediction power (R2 = 0.92–0.93) under four levels of nitrogen fertilization at the flowering stage. In contrast, the other measurements including canopy temperature, volumetric metrics, and ground-observed chlorophyll content showed lower correlation (R2 = 0.52–0.85) to grain yield. In addition, thermal information as well as volumetric metrics generally had little contribution to the improvement of grain yield prediction when combining them with color indices derived from digital images. Especially, LAI had inferior performance to color indices in grain yield prediction within the same level of nitrogen fertilization at the flowering stage (R2 = 0.00–0.40 and R2 = 0.55–0.68), and color indices provided slightly better prediction of yield than LAI at the flowering stage (R2 = 0.93, RMSE = 32.18 g/m2 and R2 = 0.89, RMSE = 39.82 g/m2) under all levels of nitrogen fertilization. This study highlights the capabilities of color indices in wheat yield prediction across genotypes, which also indicates the potential of precision agriculture application using many other flexible, affordable, and easy-to-handle devices such as mobile phones and near surface digital cameras in the future.

1. Introduction

The promising trend of precision agriculture distinguishes itself from traditional agriculture by its advanced equipment and technologies to collect and analyze the field information to optimize the use of fertilizers, water, herbicides, and pesticides and improve crop production and quality. The International Society of Precision Agriculture (https://www.ispag.org/, accessed on 10 July 2021) defined precision agriculture in 2019 as a management strategy that gathers, processes, and analyzes temporal, spatial, and individual data and combines it with other information to support management decisions according to estimated variability for improved resource use efficiency, productivity, quality, profitability, and sustainability of agricultural production.

Timely and non-destructive yield prediction before harvest is important for crop management, policy-making, regional trade, and food security [1,2,3]. It is an essential issue related to precision agriculture [4]. Traditional yield prediction relied on ground-based field surveys, which are costly, subjective, and not applicable to large-scale prediction [3,5]; on the contrary, remote sensing technology show great potential in timely and non-destructive yield prediction at local to regional scales [1,4,6,7,8].

In terms of global food security, wheat is one of the key cereal crops, as well as the main nutritional bases of human worldwide. As a result, there have been numerous studies on grain yield prediction in wheat based on vegetation indices (VIs) derived from different types of remote sensing sensors. Xue et al. (2007) [9] found a strong relationship between green ratio vegetation index and grain yield based on a hand-held spectroradiometer. Becker-Reshef et al. (2010) [10] developed an empirical regression model to forecast wheat yield in Kansas and Ukraine using satellite imageries from moderate resolution imaging spectroradiometer (MODIS). Moreover, some researchers used the data derived from unmanned aerial vehicles (UAVs) to predict wheat yield based on leaf area index (LAI), chlorophyll [11], etc. Some researchers applied the machine learning method to predict wheat yield using UAVs-derived data [3,12] or satellite imageries [2,13].

However, satellite data often have limitations for high-resolution precision agricultural applications due to the trade-off between temporal resolution and spatial resolution of satellite data, as well as the frequent cloud cover [3,7,14]. Some advanced satellite or airborne sensors with high spatial resolution might be sufficient for parts of precision agriculture applications, but its high cost limits its application [14]. Remote sensing via UAVs equipped with multiple imaging sensors, autopilots, and GPS systems is becoming a very important and competitive tool for augmenting traditional spaceborne and airborne remote sensing techniques [14,15,16]. UAVs can provide high spatial, temporal, and spectral resolution imageries at low-cost in a flexible way, offering great possibilities for precision agriculture [17,18]. Many kinds of imaging sensors can be mounted on the UAVs, including digital cameras [19,20], multispectral [21], hyperspectral [22], Light Detection and Ranging (LiDAR) [23], and thermal sensors [24]. Each type of camera has the trade-offs among sensor cost, computation task, and operational flexibility [25,26]. Digital red–green–blue (RGB) cameras with ultra-high resolution and lightweight construction are cost effective and simple to be operated regardless of atmospheric conditions (sunny or cloudy) [17,26,27,28]. They are becoming an attractive alternative to the use of multispectral or hyperspectral information and increasingly used in precision agriculture [20,29].

Many efforts have been made on RGB-based agricultural applications, such as leaf chlorophyll content estimation [16], yield prediction [29], leaf area index (LAI) estimation [30], plant height and biomass estimation [18], nutrient deficiency diagnosis [17], plant growth monitoring [31], stress detection [32], plant diseases monitoring [20], crop genotyping [28], etc. Some studies showed that RGB-derived VIs had similar or even better performance than NIR-based VIs derived from multispectral cameras in applications such as grain yield prediction under diseases [20], different water status [19], or nutrition status [17,33]. A digital camera was suggested by many previous studies [17,33], while some others indicated the limitation of RGB cameras in their functionality due to their limited quantification information [28].

Color indices are VIs calculated by the vegetation reflectance at visible bands (i.e., red, green, and blue) [1]. There is limited reporting of the practical use of UAV-based color indices in wheat yield prediction. Fernandez-Gallego et al. (2019) [29] evaluate different color indices to predict durum wheat yield under different water regime conditions, and the results showed that color indices gave comparable performance with NDVI in durum wheat yield prediction. However, to our knowledge, little is known about wheat yield using UAV-based color indices under different nitrogen regime conditions and its response to nitrogen. Nitrogen is essential for optimal crop production. Nitrogen monitoring is important for many metabolic and structural processes, such as grain yield and health status [16]. Global nitrogen fertilizer use was expected to continue to increase at 1.8% per year [34]. However, excessive use of nitrogen fertilizer often leads to unwanted environmental pollution, which needs to be avoided [35]. Schirrmann et al. (2016) [16] performed three missions of UAV flighting between booting and maturing of wheat plants and reported low correlations between wheat nitrogen concentrations and image variables from digital camera. The possible reason was explained by the low nitrogen variability due to water deficit stress conditions [16]. Nevertheless, are the image variables from digital camera applicable to study wheat yield prediction and its response to nitrogen without water stress?

Many measurements of the crops, e.g., LAI, color indices, chlorophyll, and volume metrics, characterize the physiological state and biological productivity of plants during the remote investigation of fields [11,15,29]. Thus, the objective of this study was to (1) analyze the variations and evaluate the applicability of different color indices derived from the UAV-mounted digital cameras, ground-measured LAI, and chlorophyll content throughout the crop cycle for wheat yield prediction and their response to nitrogen without water stress; and (2) determine the best phenological stage for wheat yield prediction. Therefore, firstly, we assessed the relationship between grain yield and VIs derived from digital images as well as the relationship between LAI and VIs observed at a single stage for all the nitrogen treatments together. Then, the relationship between grain yield and thermal, structure, and volumetric metrics derived at one of the most important single stages, i.e., the flowering stage, was studied. Moreover, the variations in LAI, color indices, dry matter content, and chlorophyll content at different stages across nitrogen regimes and genotypes were further analyzed by comparing with the variations in final grain yield. Finally, the relationships between grain yield and multi-temporal color indices across nitrogen regimes and genotypes were analyzed.

2. Materials and Methods

2.1. Trial Site and Experimental Setup

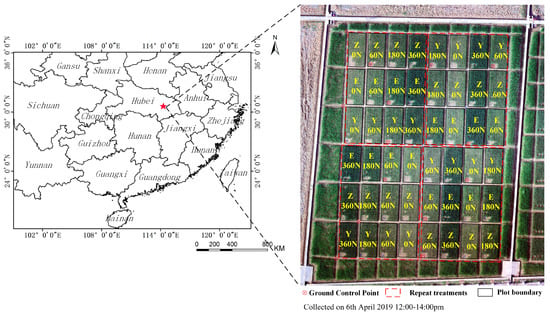

The trial site was set up at the Huazhong Agriculture University, Wuhan, China (Figure 1). It included 48 plots. Wuhan lies on the subtropical monsoon climate. The annual average temperature is 15–18 °C, and the annual precipitation is ≈1200 mm. This experiment utilized a complete block design with four replicate nitrogen applications at the rate of 0, 60, 180, and 360 kg ha−1 for 3 commonly planted wheat cultivars: “Zhengmai9023”, “Emai596”, and “Yangmai15”. Each plot was 3.5 m × 7 m in size. The fertilizers were divided into two equal parts. They were applied before planting and 10 days after the jointing stage, respectively. According to Lu et al. (2000) [36], we used the potassium dichromate colorimetric method, the Kjeldahl method, the sodium bicarbonate method, and the ammonium acetate method to measure organic matter, total nitrogen, available phosphorus, and available potassium in the topsoil (0–20 cm) of the experimental plots, respectively. Specifically, the organic matter, total nitrogen, available phosphorus, and available potassium in the topsoil (0–20 cm) of the experimental plots were 12.2 g kg−1, 1.0 g kg−1, 25.6 mg kg−1, and 89.8 mg kg−1, respectively. For phosphorus and potassium fertilizers, 120 kg P2O5 ha−1 and 120 kg K2O ha−1 were applied pre-sowing.

Figure 1.

Trial site in Huazhong Agriculture University, Wuhan, China. Z, E, and Y represent zhengmai9023, Emai596, and Yangmai15, respectively. 0 N, 60 N, 180 N, and 360 N represent 0 kg ha−1, 60 kg ha−1, 180 kg ha−1, and 360 kg ha−1 nitrogen fertilization, respectively.

All cultivars were planted at row spacing of 0.22 m. Considering that the agronomical or physiological interpretation of the relationship between grain yield and green biomass can vary with stress severity [16,19], we tried to avoid other stresses except nitrogen. Pre-emergence herbicide and post-emergence herbicide were applied to control weeds within the plots. All plots were irrigated when needed.

2.2. Field Data Collection

The field measurements including canopy height (CH), LAI, final yield, dry matter accumulation (DM), and chlorophyll (dry mass per fresh mass) were conducted at different growth stages, as shown in Table 1. CH was manually measured by averaging the 6 CH samples at each plot in 6 April 2019. LAI and DM measurements were collected at the time when the plot entered the four key phenological stages (Table 1). As the phenological dates for each plot were not the same, the observation time of LAI and DM for each plot varied from 3 to 6 days. LAI were measured as the sum area of green leaves cut from the plant samples by leaf scanner (LI-3100, LI-COR Inc., Lincoln, NE, USA). The measurements included 20 samples at each plot. The DM of leaves, stems, roots, and entire plants was measured simultaneously with LAI for each plot at the four key phenological stages. Nitrogen concentration was measured by a chlorophyll meter (Soil–Plant Analysis Development (SPAD) readings, SPAD-502, Minolta Company, Tokyo, Japan). SPAD measurements were performed on the top fully expanded leaf in/before jointing stage and performed on flag leaves after the flowering stage.

Table 1.

Field measurements conducted in different phenological stages.

2.3. Image Acquisition and Processing

2.3.1. Image Data Acquisition and Processing

Field images were acquired between 12:00 and 14:00 local time at the key growth period in 2019 (Table 2) by the AFD-6 UAV platform, which was developed by Beijing Afanda scientific and technical corporation. An RGB camera (Hasselblad L1D-20C) and thermal camera (DUO PRO R-640) with a 19 mm thermal lens were mounted on the six-axes gimbal of the UAV to collect RGB and thermal images with the image size of 6000 × 4000 and 640 × 512, respectively. The UAV platform was implemented with an autopilot system to execute a predefined flight plan that was created and uploaded with the Mission Planner ground control station. All flight missions were planned at the flight altitude and speed of 50 m and 2.5 m/s with the lateral and forward overlaps of 80%, respectively.

Table 2.

Acquisition of RGB and thermal images in 2019.

After data acquisition, image processing needs to be implemented to obtain a set of image data including RGB and thermal Infrared reflectance. For georeferencing the collected images, 15 Ground Control Points (GCPs, Figure 1) across the study area were set. The geo-locations of GCPs were measured by a cm-level Real-Time Kinematic (RTK) differential GNSS device (Huace Navigation Technology, LTD, Shanghai, China) with a horizontal error of 0.01 m and a vertical error of 0.02 m. Image mosaicking for RGB and thermal images were first performed using PhotoScan Software (Agisoft LLC, ST, Petersburg, Russia) and then saved in a Tagged Image Format (TIF) file. The geo-locations of GCPs were used during the ortho-mosaic process for image georeference. Then, image registration was conducted for the georeferenced images that were collected on different dates or from different sensors.

The digital number (DN) values recorded in the RGB images were then converted to reflectance data. A white board was used a reference panel to calibrate RGB images observed under different illumination conditions. Thermal sensors provide plant canopy temperature. The DN values obtained by thermal images were converted to canopy temperature (T) using the equation provided by the thermal sensor manufacture (). Then, the thermal data collected by UAV on 6 April were compared to the measured surface temperature collected on 6 April using an Apogee MI-210 infrared radiometer by sampling the surface temperature of water surface, bare soil, concrete surface, and vegetation canopy within the study area. The results showed that the UAV-acquired temperature measurement was reliable (not shown, R2 = 0.935, RMSE = 2.783 °C, slope = 1.10).

In order to calculate the plant height from UAV images, a digital elevation model (DEM) as well as a digital surface model (DSM) were generated from each experimental plot. A canopy height model (CHM) for each sampling day was generated by computing the pixel-wise difference between DSM and DEM, respectively [37]. Considering the limited penetration ability and uncertainty of photogrammetric point clouds derived from UAV-mounted digital cameras, the UAV-collected canopy height was compared to the plant height of winter wheat measured by steel tape for validation (not shown, R2 = 0.577, RMSE = 0.047 m).

A Canopy Volume Model (CVMH, Equation (1)) was calculated by accumulating pixel-wise canopy volume based on CHM. The pixel-wise canopy volume was approximated by the product of the area of the pixel and the plant height of the pixel derived from UAVs [37]. Considering that the biomass samples with same canopy volumes could have different weights due to the spatial differences in pigment concentration, canopy architecture, and species/genotypes, Maimaitijiang et al. (2019) [37] introduced a density factor and proposed Vegetation Index Weighted CVM (CVMVI, Equation (2)). CVMVI was calculated by accumulating pixel-wise canopy volume from the image-derived plant height and density factor. VIs can capture the differences in crop physiological state and was used as the density factor. So, CVMVI incorporates structural and spectral information. It is expressed as follows (Equation (2)):

where i represents the ith pixel in CHM, n is the total number of pixels in a plot, Ai represents the area of pixel i, and Hi represents the height of the pixel i. VIi is vegetation index as the density factor.

2.3.2. Data Analysis and Modelling Method

A set of common color indices for biomass and yield estimation were calculated (Table 3) from RGB Orth mosaics in this study to find the most suitable color index for further analysis hereafter. Soil background effects were removed based on Support Vector Machine (SVM) classifier. Then, the averaged indices of wheat canopy were calculated for each spot and each date by zonal statistics.

Table 3.

Definitions of color indices extracted from RGB images.

2.4. Model Evaluation

Linear regression was used to explore the relationship between image variables and crop parameters, including dry matters, LAI, and grain yield. To evaluate the model accuracy and robustness, the commonly used evaluation metrics, such as the root mean square error (RMSE), relative RMSE (RRMSE, Equation (3)), and coefficient of determination (R2) were employed in this study.

3. Results and Discussion

3.1. Relationships between Grain Yield and Color Indices at a Single Stage

The correlation coefficient between image-derived color indices of different growth stages and wheat yield were calculated as shown in Table 4. The color indices GRRI, GRVI, NDI, VARI, and EXR achieved the highest averaged correlation (R2 = 0.7). Generally, the color indices without including the blue band were significantly related to final yields and fertilizer responses before the maturity stage. Meanwhile, the color indices that include the blue band had significant lower correlation with final yield than those indices without including the blue band. It indicates that the blue band had no contribution and even provided noisy information to wheat final yield estimation. Including the blue band in the enhanced vegetation index (EVI) aimed at reducing noise and uncertainties associated with highly variable atmospheric aerosols, rather than providing additional biophysical information on vegetation properties [47]. Gracia-Romer et al. (2017) [17] and Buchaillot et al. (2019) [48] also found that red and green bands presented the best correlations with plant N concentrations, and the blue band was good to predict early season phosphorus stress.

Table 4.

Correlation coefficient (R2) between image-derived color indices and wheat yield.

The correlations between final yield and different color indices varied with growth stages from 0 to 0.94. The variables observed at the flowering stage or early maturity stage (when the senescence of leaves starts and the canopy color starts to shift from green to yellow) had higher correlation with grain yield than those observed on other dates. The highest averaged correlation of all the color indices was achieved using the images collected on 11 April when most of the plots were in flowering stages. The flowering stage represents the nutrient growth peak of the wheat plant, which can well reflect the maximal photosynthetic capacity and yield potential. The color indices indicate vegetation coverage, stature, and nitrogen concentration [49,50], all of which can be beneficial for grain yield. Therefore, strong correlations were expected at this stage. In addition, as stated by Bai et al. (2016) [51] and Fernandez-Gallego et al. (2019) [29], at the late season, the strong correlations seemed to be driven by wilted versus still healthy canopy in the plots. Higher greenness at this stage indicated a prolonged grain filling, which often increased the grain yield [51,52].

3.2. Relationship between LAI and Color Indices

LAI is an important parameter that indicates crop photosynthesis and growth status. It is of significance for crop yield prediction [1,53]. The situation issue associated with NDVI for dense vegetation has been widely discussed in previous studies [15,54,55]. Previous studies also evaluated the relationship between LAI and color indices derived from digital cameras. Some also found a considerable level of saturation significant relationship between color indices and LAI after LAI reaches high values in soybean and corn [14,56].

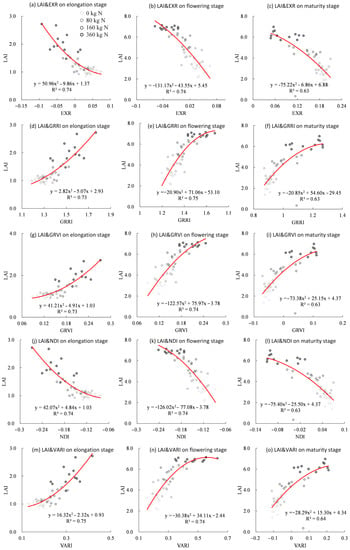

The color indices that had the highest correlation with grain yield in Table 4, i.e., EXR, GRRI, GRVI, NDI, and VARI, were further studied. The relationships between LAI and the color indices at different growth stages were analyzed. As shown in Figure 2, the five color indices all had a similar nonlinear relationship with LAI at different stages in terms of fitting coefficient and absolute change of slope between LAI and color indices. Similar to the previous study conducted by Zhou et al. (2017) [1] that found a significant correlation between LAI and VIs in the early and middle growth stages, the results in this study showed a good nonlinear relationship between LAI and all the five color indices during the jointing stage and flowering stage. Meanwhile, the color indices showed a lower correlation with LAI at the maturity stage.

Figure 2.

Scatter plot between LAI and image-derived EXR (a−c), GRRI (d−f), GRVI (g−i), NDI (j−l) and VARI (m−o) at three key stages for all the three genotypes under four levels of fertilization.

However, contrary to the study conducted by Zhou et al. (2017) [1] that found the color indices suffered from a saturation problem to different extend, this study showed that the five color indices collected on the three key phenological stages did not saturate. Instead, as shown in Figure 2, LAI collected at the flowering and maturity stages approached an asymptote and showed little sensitive mainly within the highest nitrogen level (360 kg ha−1) when LAI exceeded 6. LAI collected at the flowering stage also showed less sensitivity within low nitrogen levels (0 kg and 60 kg) when LAI was below 1.5. However, these color indices were still sensitive during the growth peak.

This is possibly explained by the different physiological importance between LAI and vegetation indices, as well as the different measuring method of LAI. In this study, LAI was measured by a leaf scanner collecting the absolute area of leaves by cutting off the leaves from the plants instead of the commonly used optical LAI instrument (e.g., LAI-2200C Plant Canopy Analyzer). The optical LAI instrument is based on the hypothesis that the amount of foliage in a vegetation canopy can be deduced from measurements of how quickly radiation is attenuated as it passes through the canopy. Its measurement reflects not only the absolute area of leaves but also the pigment and activation of the leaves. Meanwhile, the absolute areas of leaves measured in this study were not as sensitive as color indices in terms of predicting grain yield due to the species diversity, which was further analyzed in the next section. However, LAI is usually measured as the absolute area of leaves in agriculture applications or research. In addition, the relationship between LAI and VIs depends on the type of VIs, crops, the experimental conditions, and also many other factors such as soil background, canopy senescence, sun position, and plant architecture [56,57,58,59].

3.3. Relationships between Grain Yield and Thermal, Structure, and Volumetric Metrics at a Single Stage

In order to investigate the contribution of thermal, structure, and volumetric metrics in wheat yield predicting before harvest, the correlation coefficient was calculated between grain yield and canopy volume CVMH, canopy temperature, GRVI-weighted canopy volume CSMGRVI, and SPAD measurements on three key phenological stages, as shown in Table 5 and Table 6.

Table 5.

The coefficient of determination (R2) of yield to image-derived canopy temperature, structure metrics, and VI-weighted structure metrics.

Table 6.

The coefficient of determination (R2) of yield to ground measured chlorophyll.

GRVI was selected as a color index for further analysis considering that there was little difference among the correlation of the five selected color indices to LAI (Figure 2) and grain yield (Table 4). GRVI based on only visible spectral information had the highest correlation with the grain yield of wheat than other indices using thermal or/and structure metrics (Table 5). CSMGRVI incorporating structural and spectral information had an even slightly lower correlation with grain yield than GRVI itself. It indicates that UAV estimates of crop height had little contribution in grain yield prediction before harvest. On one hand, the correlation between final yield and CVMH was not high and lower than GRVI. On the other hand, the uncertainty in plant height due to the residual geometric deformation of UAV-derived images propagated into CSMGRVI.

Thermal data have been widely used for water stress monitoring and disease detection [60,61]. However, in this study, canopy temperature collected at the flowering stage could only explain 60% of the variability of grain yield, as the winter wheat was almost fully irrigated in this study, and there was almost no water stress throughout the growth cycle. Therefore, the thermal temperature was mainly driven by vegetation coverage, as the increase of vegetation coverage can lead to transpiration cooling, increased latent heat fluxes, deceased sensible heat fluxes, and consequently a reduced canopy temperature [62]. The reason might also include the fact that the canopy temperature dynamic is strongly affected by instantaneous environmental conditions that are not necessarily strongly corelated to grain yield [15,26], such as wind, cloud cover, humility, etc.

SPAD measurements gave the highest correlation to grain yield in the grain-filling stage (Table 6). However, it was still lower than that between GRVI and grain yield (R2 = 0.83 and R2 = 0.90). As SPAD measurements varied with varieties, while the varieties that tended to have higher SPAD readings did not necessarily have higher grain yield, which is further discussed in the next subsection.

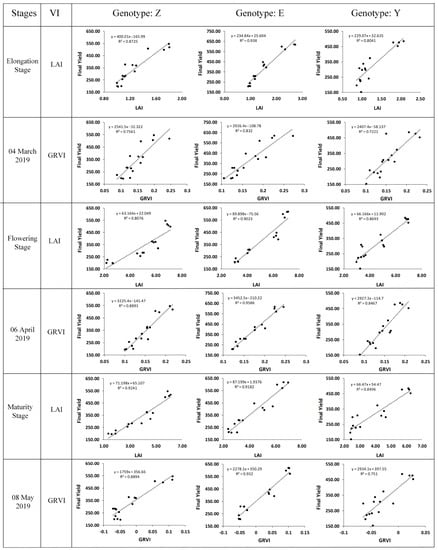

3.4. Grain Yield Estimation Based on LAI and GRVI

Yield is commonly the most important trait and the focus of breeding programs [51]. It is important to predict grain yield before harvest for crop management, policy making, regional trade, food security, as well as phenotyping and breeding. As shown in Figure 3, comparable correlations of grain yield to observed image-derived GRVI and ground measured LAI were observed under all levels of nitrogen fertilization for each genotype. A lower correlation of grain yield to both LAI and GRVI was observed for genotype Yangmai15 than genotype Zhengmai9023 and Emai596. In addition, the correlation under each nitrogen fertilization as shown in Figure 4 was significantly lower than that under all levels of nitrogen fertilization, because the change range of both GRVI/LAI and grain yield under each level of fertilization was much smaller than that of the observation under all the four levels of fertilization.

Figure 3.

Scatter plot between grain yield and image−derived GRVI or ground−measured LAI at three key stages for each genotype under four levels of fertilization (n = 16).

Figure 4.

Scatter plot between grain yield and image-derived GRVI or ground-measured LAI at three key stages for each level of fertilization under all the three genotypes (n = 12).

However, obvious differences between the correlation of grain yield to LAI and GRVI were observed under each nitrogen fertilization, as shown in Figure 4. The results show that LAI is good predictor in elongation stage while GRVI still has potential to be a good trail for grain yield prediction at the flowering stage and early maturity stage under the same level of nitrogen fertilization. GRVI had very weak correlation (R2 = 0.10–0.33) to grain yield at elongation stages under each level of fertilization, while LAI had relatively stronger correlation (R2 = 0.59–0.65) to grain yield at elongation stages under 180 kg and 360 kg nitrogen fertilization. However, LAI had very weak correlation (R2 = 0.00–0.03) to grain yield at flowering stages under each level of fertilization except 180 kg nitrogen (R2 = 0.40), while GRVI still had strong correlation (R2 = 0.55–0.68) to grain yield at flowering stages under each level of fertilization. In addition, GRVI had relatively stronger correlation (R2 = 0.54–0.59) to grain yield at early maturity stages under 180 kg and 360 kg nitrogen fertilization than LAI did (R2 = 0.12–0.43). The plants under low nitrogen fertilization (0 kg and 60 kg) tended to have a shorter crop cycle; they were already in the maturity stage and the leaves have already turned yellow due to leaf senescence on 8 May. As a result, GRVI was not able to differentiate the grain yield potential among the plots at this stage. While the plants under higher nitrogen fertilization (180 kg and 360 kg) partly headed into maturity, and some were still in the grain-filling stage. Higher color indices at the end of grain filling may indicate more green leaves in the plot, a longer grain-filling stage, and consequently higher final grain yield [19]. Nitrogen application can delay leaf senescence, sustain leaf photosynthesis during the grain filling period of wheat, and extend the duration of grain fill [63,64]. Meanwhile, the LAI in this study reflect the change of absolute leaf area rather than the activity of leaves or plants.

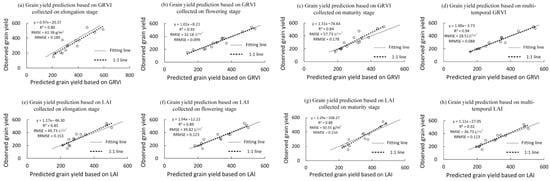

In order to further compare GRVI and LAI, yield prediction models were built using multiple regression based on LAI and GRVI collected on single stage and multiple stages, respectively. The dataset including the 48 plots was divided into two groups: calibration and validation datasets with a 70%/30% split into these groups using a simple random sampling. As shown in Figure 5, yield prediction models were validated using randomly selected 30% observations.

Figure 5.

Grain yield prediction based on single and multi-temporal GRVI (a–d) and LAI (e–h).

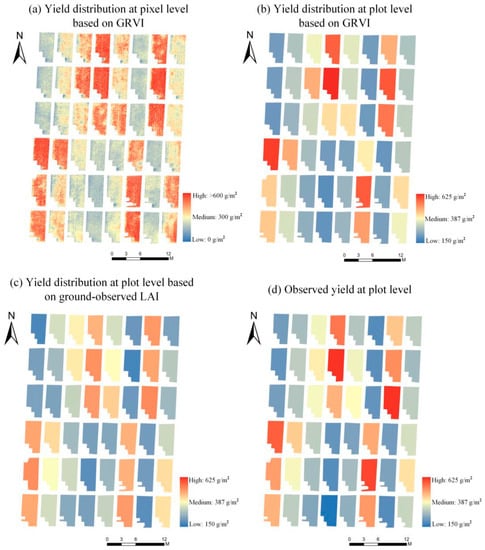

The result showed that the yield prediction models gave the highest accuracy at the flowering stage than other stages for both LAI and GRVI. The fitting lines were very close to the 1:1 line (Figure 5). The model based on GRVI gave slightly higher prediction accuracy at the flowering stage than that based on LAI (R2 = 0.93, RRMSE = 0.099, and R2 = 0.89, RRMSE = 0.123). As shown in Figure 6, the spatial distribution of observed final grain yield (Figure 6d) is more accordance with grain yield predicted by GRVI (Figure 6b) than grain yield predicted by ground-observed LAI (Figure 6c) at the flowering stage (6 April). The results predicted by ground-observed LAI tended to underestimate the plot with high grain yield. Yield prediction based on multi-temporal observations not only improved the accuracy over single-stage observations for both GRVI and LAI, but also yielded a more unbiased result with a nearly superposition of fitting line and 1:1 line, especially for GRVI (slope = 1.00, R2 = 0.94, RRMSE = 0.088).

Figure 6.

Grain yield distribution at pixel level based on GRVI observed at 6 April (a), grain yield distribution at plot level based on GRVI observed at 6 April (b), i.e., averaged yield per plot, grain yield distribution at plot level based on LAI observed at 6 April, (c) and observed final yield at plot level (d).

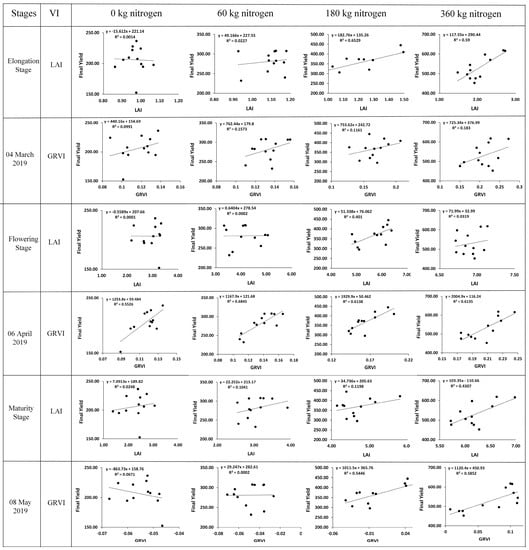

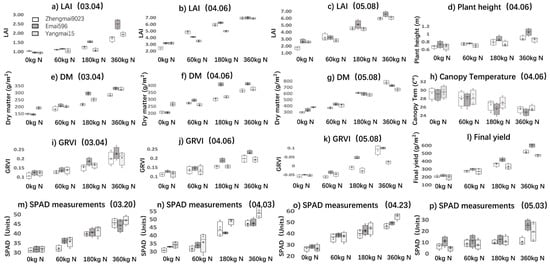

3.5. Relationship between Grain Yield and LAI, DM, Chlorophyll Content, and Color Indices under Different Genotypes and Nitrogen Fertilizations

To investigate the differences in correlation of grain yield to GRVI, LAI, ground observed plant height, and SPAD measurements, further analysis was conducted by comparing their variations among different genotypes under different levels of nitrogen fertilization, as shown in Figure 7. Under the same level of fertilization, obvious differences were observed in the variation of grain yield as well as other observed plant physiological parameters. For example, genotype Emai596 had higher final grain yield than the other two genotypes, especially under 180 kg and 360 kg nitrogen fertilization (Figure 7l). Similar to the results of some previous studies [63,64], DM showed inconsistent variation with grain yield, especially under 0 kg and 60 kg nitrogen fertilization at the flowering or maturity stage (Figure 7f,l), due to differences in the contribution of dry matter remobilization to grain yield. Nevertheless, the variations of GRVI among different genotypes under each level of nitrogen fertilization were generally in accordance with those of grain yield at the flowering stage (Figure 7j,l).

Figure 7.

Variation of LAI (a–c), plant height (d), DM (e–g), canopy temperature (h), GRVI (i–k), final grain yield (l), SPAD measurements (m–p) among different genotypes under four levels of nitrogen fertilization.

Ma et al. (2012) [65] also compared the LAI of three varieties under different levels of N nitrogen fertilization. Similar to the results of Ma et al. (2012) [65], the variations of LAI among cultivars were not necessarily the same with the final yield. For example, at the flowering stage, Zhengmai9023 had lowest and highest LAI under 0 kg and 60 kg nitrogen fertilization, respectively (Figure 7b), while Emai596 had highest averaged grain yield compared with the other two genotypes under both 0 kg and 60 kg nitrogen fertilization (Figure 7l). In addition, there was little difference among the LAI observations under 360 kg nitrogen fertilization at the flowering stage. However, Emai596 had higher grain yield under 360 kg nitrogen fertilization (Figure 7b). It is possibly explained by the saturation of leaf expansion and nonlinear increase of grain yield with further increase in LAI under high levels of nitrogen fertilization.

In addition, although several studies have shown that the SPAD readings correlate well with the leaf nitrogen content [66,67]. However, it is consistent with some previous studies [65,68,69,70] that the SPAD measurements of wheat also varied largely among cultivars in this study. In addition, their variations among cultivars were not necessarily the same with the final yield. For example, SPAD measurements of Yangmai15 tended to be higher before the maturity stage than the other two species, while it had the lowest final yield (Figure 7l–p).

Therefore, the inferior performance of LAI and SPAD measurements under the same level of nitrogen across the genotypes is possibly explained by the differences among the genotypes. The varieties that tended to have higher LAI readings did not necessarily have higher grain yield. Meanwhile, GRVI reflecting the color difference was effective at differentiating the nitrogen content of the leaves, the photosynthetic potential of the plant at the flowering and early maturity stages, and consequently, it was able to provide a reliable forecast of final yield across the genotypes and nitrogen fertilization levels.

4. Conclusions

UAVs have become an important platform in precision agriculture application [71,72]. The relationships between winter grain yield against ground-measured LAI and the color indices derived from digital images, structural, and thermal information were studied at three key growth stages in this study. The results showed that some color indices had high correlation with wheat grain yield at the elongation, flowering, and early maturity stages (R2 = 0.76–0.93). The flowering stage gave the highest prediction power (R2 = 0.88–0.93), while the other measurements including canopy temperature, volumetric metrics, and ground-observed chlorophyll content showed lower prediction power (R2 = 0.52–0.85) to grain yield. In addition, thermal information at the flowering stage as well as volumetric metrics generally had little contribution to the improvement of grain yield prediction when combining them with color indices from digital images.

Color index GRVI was also compared with ground-measured LAI. This study showed that LAI measured as the sum area of leaves cut from the plant samples had inferior performance than GRVI in grain yield prediction within the same level of nitrogen fertilization at the flowering stage (R2 = 0.00–0.40 and R2 = 0.55–0.68), and GRVI provided slightly better prediction of yield than LAI at the flowering stage (R2 = 0.93, RMSE = 32.18 g/m2 and R2 = 0.89, RMSE = 39.82 g/m2) under four levels of nitrogen fertilization. For one reason, as the canopy density increased, when LAI exceeded 6, LAI approached an asymptote within the highest nitrogen level (360 kg ha−1), but compared to LAI, some color indices collected at the three key phenological stages were not prone to saturation. For another reason, some physiological parameters, e.g., LAI and chlorophyll content, varied with varieties. Some varieties with higher LAI or SPAD readings might had lower final grain yield. As a result, lower yield prediction performance was observed across the genotypes.

This study highlights the capabilities of color indices in wheat yield prediction. Thermal and structural information provided little additional information for wheat yield prediction in this study. Considering the cost of multiple/hyper-spectral sensors, data integrations might not always be the optimal way for wheat yield prediction. Future work will test the model in wheat yield prediction with more genotypes and multi-year datasets to explore its suitability and reliability, as well as the potential of RGB images in other biochemical and biophysical plant parameters estimation. Moreover, many other flexible, affordable, and easy-to-handle devices such as mobile phones and Phenocam (http://phenocam.us/, accessed on 22 July 2021) provide alternatives as a precision agriculture platform.

Author Contributions

Conceptualization, L.Z. and R.M.; methodology, L.Z., R.M. and J.M.; software, G.P.; validation, R.M. and J.M.; formal analysis, G.P., W.L., investigation, L.Z., B.X., Z.L. and R.S.; resources, data curation, writing—original draft preparation, L.Z. and G.P.; writing—review and editing, R.M. and J.M.; supervision, R.M.; project administration, R.M.; funding acquisition, R.M. and J.M. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the National Nature Science Foundation of China program (Grant No. 41901353; No. 31601251), Fundamental Research Funds for the Central Universities (No. 2662019QD054; No.2662019PY057; 2662017QD038), the National Key Research and Development Program of China (Grant No. 2017YFC1502406-03), the Open Research Fund of Guangxi Key Laboratory of Water Engineering Materials and Structures, Guangxi Institute of water resources research (Grant No. GXHRI-WEMS-2019-03) and National Key Research and Development Program of Guangxi (Grant No. 2019AB20009).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Acknowledgments

The authors would like to thank the anonymous reviewers for their valuable comments to improve the quality of the paper. We thank Renjie Gao and Tingting Liu for data collections. We also thank Feng Zhao for discussion.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Zhou, X.; Zheng, H.B.; Xu, X.Q.; He, J.Y.; Ge, X.K.; Yao, X.; Cheng, T.; Zhu, Y.; Cao, W.X.; Tian, Y.C. Predicting grain yield in rice using multi-temporal vegetation indices from UAV-based multispectral and digital imagery. ISPRS J. Photogramm. Remote Sens. 2017, 130, 246–255. [Google Scholar] [CrossRef]

- Han, J.; Zhang, Z.; Cao, J.; Luo, Y.; Zhang, L.; Li, Z.; Zhang, J. Prediction of winter wheat yield based on multi-source data and machine learning in China. Remote Sens. 2020, 12, 236. [Google Scholar] [CrossRef] [Green Version]

- Yang, Q.; Shi, L.; Han, J.; Zha, Y.; Zhu, P. Deep convolutional neural networks for rice grain yield estimation at the ripening stage using UAV-based remotely sensed images—ScienceDirect. Field Crop. Res. 2019, 235, 142–153. [Google Scholar] [CrossRef]

- Wang, L.; Tian, Y.; Yao, X.; Zhu, Y.; Cao, W. Predicting grain yield and protein content in wheat by fusing multi-sensor and multi-temporal remote-sensing images. Field Crop. Res. 2014, 164, 178–188. [Google Scholar] [CrossRef]

- Reynolds, C.A.; Yitayew, M.; Slack, D.C.; Hutchinson, C.F.; Huete, A.; Petersen, M.S. Estimating crop yields and production by integrating the FAO Crop Specific Water Balance model with real-time satellite data and ground-based ancillary data. Int. J. Remote Sens. 2000, 21, 3487–3508. [Google Scholar] [CrossRef]

- Ren, J.; Chen, Z.; Zhou, Q.; Tang, H. Regional yield estimation for winter wheat with MODIS-NDVI data in Shandong, China. Int. J. Appl. Earth Obs. Geoinf. 2008, 10, 403–413. [Google Scholar] [CrossRef]

- Schut, A.G.T.; Traore, P.C.S.; Blaes, X.; De By, R.A. Assessing yield and fertilizer response in heterogeneous smallholder fields with UAVs and satellites. Field Crop. Res. 2018, 221, 98–107. [Google Scholar] [CrossRef]

- Sakamoto, T.; Gitelson, A.A.; Arkebauer, T.J. MODIS-based corn grain yield estimation model incorporating crop phenology information. Remote Sens. Environ. 2013, 131, 215–231. [Google Scholar] [CrossRef]

- Xue, L.H.; Cao, W.X.; Yang, L.Z. Predicting grain yield and protein content in winter wheat at different N supply levels using canopy reflectance spectra. Pedosphere 2007, 17, 646–653. [Google Scholar] [CrossRef]

- Becker-Reshef, I.; Vermote, E.; Lindeman, M.; Justice, C. A generalized regression-based model for forecasting winter wheat yields in Kansas and Ukraine using MODIS data. Remote Sens. Environ. 2010, 114, 1312–1323. [Google Scholar] [CrossRef]

- Kanning, M.; Kühling, I.; Trautz, D.; Jarmer, T. High-resolution UAV-based hyperspectral imagery for LAI and chlorophyll estimations from wheat for yield prediction. Remote Sens. 2018, 10, 2000. [Google Scholar] [CrossRef] [Green Version]

- Pantazi, X.E.; Moshou, D.; Alexandridis, T.; Whetton, R.L.; Mouazen, A.M. Wheat yield prediction using machine learning and advanced sensing techniques. Comput. Electron. Agric. 2016, 121, 57–65. [Google Scholar] [CrossRef]

- Cai, Y.; Guan, K.; Lobell, D.; Potgieter, A.B.; Wang, S.; Peng, J.; Xu, T.; Asseng, S.; Zhang, Y.; You, L.; et al. Integrating satellite and climate data to predict wheat yield in Australia using machine learning approaches. Agric. For. Meteorol. 2019, 274, 144–159. [Google Scholar] [CrossRef]

- Hunt, E.R.; Cavigelli, M.; Daughtry, C.S.T.; Mcmurtrey, J.E.; Walthall, C.L. Evaluation of digital photography from model aircraft for remote sensing of crop biomass and nitrogen status. Precis. Agric. 2005, 6, 359–378. [Google Scholar] [CrossRef]

- Maimaitijiang, M.; Ghulam, A.; Sidike, P.; Hartling, S.; Maimaitiyiming, M.; Peterson, K.; Shavers, E.; Fishman, J.; Peterson, J.; Kadam, S. Unmanned Aerial System (UAS)-based phenotyping of soybean using multi-sensor data fusion and extreme learning machine. ISPRS J. Photogramm. Remote Sens. 2017, 134, 43–58. [Google Scholar] [CrossRef]

- Schirrmann, M.; Giebel, A.; Gleiniger, F.; Pflanz, M.; Lentschke, J. Monitoring agronomic parameters of winter wheat crops with low-cost UAV imagery. Remote Sens. 2016, 8, 706. [Google Scholar] [CrossRef] [Green Version]

- Gracia-Romero, A.; Kefauver, S.; Vergara Diaz, O.; Zaman-Allah, M.; Prasanna, B.; Cairns, J.; Araus, J. comparative performance of ground vs. aerially assessed RGB and multispectral indices for early-growth evaluation of maize performance under phosphorus fertilization. Front. Plant Sci. 2017, 8. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Bendig, J.; Bolten, A.; Bennertz, S.; Broscheit, J.; Eichfuss, S.; Bareth, G. Estimating biomass of barley using crop surface models (CSMs) derived from UAV-based RGB imaging. Remote Sens. 2014, 6, 10395–10412. [Google Scholar] [CrossRef] [Green Version]

- Casadesús, J.; Kaya, Y.; Bort, J.; Nachit, M.; Araus, J.; Slama, A.; Ferrazzano, G.; Maalouf, F.; Maccaferri, M.; Martos, V.; et al. Using vegetation indices derived from conventional digital cameras as selection for wheat breeding in water-limited environments. Ann. Appl. Biol. 2007, 150, 227–236. [Google Scholar] [CrossRef]

- Zhou, B.; Elazab, A.; Bort, J.; Vergara, O.; Araus, J.L. Low-cost assessment of wheat resistance to yellow rust through conventional RGB images. Comput. Electron. Agric. 2015, 116, 20–29. [Google Scholar] [CrossRef]

- Deng, L.; Mao, Z.; Li, X.; Hu, Z.; Duan, F.; Yan, Y. UAV-based multispectral remote sensing for precision agriculture: A comparison between different cameras. ISPRS J. Photogramm. Remote Sens. 2018, 146, 124–136. [Google Scholar] [CrossRef]

- Adão, T.; Hruška, J.; Pádua, L.; Bessa, J.; Peres, E.; Morais, R.; Sousa, J.J. Hyperspectral imaging: A review on UAV-based sensors, data processing and applications for agriculture and forestry. Remote Sens. 2017, 9, 1110. [Google Scholar] [CrossRef] [Green Version]

- Harkel, J.T.; Bartholomeus, H.; Kooistra, L. Biomass and crop height estimation of different crops using UAV-based lidar. Remote Sens. 2019, 12, 17. [Google Scholar] [CrossRef] [Green Version]

- Raeva, P.L.; Šedina, J.; Dlesk, A. Monitoring of crop fields using multispectral and thermal imagery from UAV. Eur. J. Remote Sens. 2019, 52, 192–201. [Google Scholar] [CrossRef] [Green Version]

- Andrade-Sanchez, P.; Gore, M.A.; Heun, J.T.; Thorp, K.R.; Carmo-Silva, A.E.; French, A.N.; Salvucci, M.E.; White, J.W. Development and evaluation of a field-based high-throughput phenotyping platform. Funct. Plant Biol. 2013, 41, 68–79. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Sankaran, S.; Quirós, J.J.; Miklas, P.N. Unmanned aerial system and satellite-based high resolution imagery for high-throughput phenotyping in dry bean. Comput. Electron. Agric. 2019, 165, 104965. [Google Scholar] [CrossRef]

- Yan, G.; Li, L.; Coy, A.; Mu, X.; Zhou, H. Improving the estimation of fractional vegetation cover from UAV RGB imagery by colour unmixing. ISPRS J. Photogramm. Remote Sens. 2019, 158, 23–34. [Google Scholar] [CrossRef]

- Yang, G.; Liu, J.; Zhao, C.; Li, Z.; Huang, Y.; Yu, H.; Xu, B.; Yang, X.; Zhu, D.; Zhang, X. Unmanned aerial vehicle remote sensing for field-based crop phenotyping: Current status and perspectives. Front. Plant Sci. 2017, 8, 1111. [Google Scholar] [CrossRef] [PubMed]

- Fernandez-Gallego, J.A.; Kefauver, S.C.; Vatter, T.; Gutiérrez, N.A.; Nieto-Taladriz, M.T.; Araus, J.L. Low-cost assessment of grain yield in durum wheat using RGB images. Eur. J. Agron. 2019, 105, 146–156. [Google Scholar] [CrossRef]

- Córcoles, J.I.; Ortega, J.F.; Hernández, D.; Moreno, M.A. Estimation of leaf area index in onion (Allium cepa L.) using an unmanned aerial vehicle. Biosyst. Eng. 2013, 115, 31–42. [Google Scholar] [CrossRef]

- Kim, D.; Yun, H.; Jeong, S.; Kwon, Y.; Kim, S.; Lee, W.; Kim, H. Modeling and testing of growth status for Chinese cabbage and white radish with UAV-based RGB imagery. Remote Sens. 2018, 10, 563. [Google Scholar] [CrossRef] [Green Version]

- Su, J.; Coombes, M.; Liu, C.; Zhu, Y.; Chen, W.H. Machine learning based crop drought mapping system by UAV remote sensing RGB imagery. Unmanned Syst. 2020, 8, 71–83. [Google Scholar] [CrossRef]

- Vergara-Díaz, O.; Zaman-Allah, A.M.; Masuka, B.; Hornero, A.; Tejada-Pablo, Z.; Prasanna, M.B.; Cairns, E.J.; Araus, L.J. A novel remote sensing approach for prediction of maize yield under different conditions of nitrogen fertilization. Front. Plant Sci. 2016, 7, 666. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- FAO. The State of Food Insecurity in the World 2015. World’s Agriculture, Forestry and Fisheries: FAO News. 2015, Volume 316, pp. 3–8. Available online: http://www.fao.org/3/i4646e/i4646e.pdf (accessed on 10 July 2021).

- Bashir, M.T.; Ali, S.; Izni, A.; Harun, R.; Salmiaton, A. Impact of excessive nitrogen fertilizers on the environment and associated mitigation strategies. Asian J. Microbiol. Biotechnol. Environ. Sci. 2013, 15, 213–221. [Google Scholar]

- Lu, R.K. Analysis Methods of Soil Agro-Chemistrical; China Agriculture and Technology Press: Beijing, China, 2020; pp. 109, 147, 150, 180, 194. (In Chinese) [Google Scholar]

- Maimaitijiang, M.; Sagan, V.; Sidike, P.; Maimaitiyiming, M.; Hartling, S.; Peterson, K.T.; Maw, M.J.W.; Shakoor, N.; Mockler, T.; Fritschi, F.B. Vegetation Index Weighted Canopy Volume Model (CVM VI) for soybean biomass estimation from Unmanned Aerial System-based RGB imagery. ISPRS J. Photogramm. Remote Sens. 2019, 151, 27–41. [Google Scholar] [CrossRef]

- Tucker, C.J. Red and photographic infrared linear combinations for monitoring vegetation. Remote Sens. Environ. 1979, 8, 127–150. [Google Scholar] [CrossRef] [Green Version]

- Woebbecke, D.M.; Meyer, G.E.; Bargen, K.V.; Mortensen, D.A. Color indices for weed identification under various soil, residue, and lighting conditions. Trans. ASAE 1995, 38, 259–269. [Google Scholar] [CrossRef]

- Kawashima, S.; Nakatani, M. An algorithm for estimating chlorophyll content in leaves using a video camera. Ann. Bot. 1998, 81, 49–54. [Google Scholar] [CrossRef] [Green Version]

- Louhaichi, M.; Borman, M.M.; Johnson, D.E. Spatially located platform and aerial photography for documentation of grazing impacts on wheat. Geocarto Int. 2001, 16, 65–70. [Google Scholar] [CrossRef]

- Gitelson, A.A.; Kaufman, Y.J.; Stark, R.; Rundquist, D. Novel algorithms for remote estimation of vegetation fraction. Remote Sens. Environ. 2002, 80, 76–87. [Google Scholar] [CrossRef] [Green Version]

- Meyer, G.E.; Neto, J.O.C. Verification of color vegetation indices for automated crop imaging applications. Comput. Electron. Agric. 2008, 63, 282–293. [Google Scholar] [CrossRef]

- Mao, W.; Wang, Y.; Wang, Y. Real-time detection of between-row weeds using machine vision. In Proceedings of the 2003 ASAE Annual Meeting, Las Vegas, NV, USA, 27–30 July 2003. [Google Scholar]

- Saberioon, M.M.; Amin, M.S.M.; Anuar, A.R.; Gholizadeh, A.; Wayayok, A.; Khairunniza-Bejo, S. Assessment of rice leaf chlorophyll content using visible bands at different growth stages at both the leaf and canopy scale. Int. J. Appl. Earth Obs. Geoinf. 2014, 32, 35–45. [Google Scholar] [CrossRef]

- Kataoka, T.; Kaneko, T.; Okamoto, H.; Hata, S. Crop growth estimation system using machine vision. In Proceedings of the 2003 IEEE/ASME International Conference on Advanced Intelligent Mechatronics (AIM 2003), Kobe, Japan, 20–24 July 2003. [Google Scholar]

- Jiang, Z.; Huete, A.R.; Didan, K.; Miura, T. Development of a two-band enhanced vegetation index without a blue band. Remote Sens. Environ. 2008, 112, 3833–3845. [Google Scholar] [CrossRef]

- Buchaillot, M.; Romero, A.; Diaz, O.; Zaman-Allah, M.; Tarekegne, A.; Cairns, J.; Prasanna, B.; Araus, J.; Kefauver, S. Evaluating maize genotype performance under low nitrogen conditions using RGB UAV phenotyping techniques. Sensors 2019, 19, 1815. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Shi, P.; Wang, Y.; Xu, J.; Zhao, Y.; Yuan, Z.; Sun, Q. Rice nitrogen nutrition estimation with RGB images and machine learning methods—ScienceDirect. Comput. Electron. Agric. 2020, 180, 105860. [Google Scholar] [CrossRef]

- Guo, Z.C.; Wang, T.; Liu, S.L.; Kang, W.P.; Zhi, Y. Biomass and vegetation coverage survey in the Mu Us sandy land-based on unmanned aerial vehicle RGB images. Int. J. Appl. Earth Obs. Geoinf. 2021, 94, 102239. [Google Scholar] [CrossRef]

- Bai, G.; Ge, Y.; Hussain, W.; Baenziger, P.S.; Graef, G. A multi-sensor system for high throughput field phenotyping in soybean and wheat breeding. Comput. Electron. Agric. 2016, 128, 181–192. [Google Scholar] [CrossRef] [Green Version]

- Yang, W.; Peng, S.; Dionisio-Sese, M.L.; Laza, R.C.; Visperas, R.M. Grain filling duration, a crucial determinant of genotypic variation of grain yield in field-grown tropical irrigated rice. Field Crop. Res. 2008, 105, 221–227. [Google Scholar] [CrossRef]

- Verger, A.; Vigneau, N.; Chéron, C.; Gilliot, J.; Comar, A.; Baret, F. Green area index from an unmanned aerial system over wheat and rapeseed crops. Remote Sens. Environ. 2014, 152, 654–664. [Google Scholar] [CrossRef]

- Zhang, Q.; Schaaf, C.; Seto, K.C. The vegetation adjusted NTL urban index: A new approach to reduce saturation and increase variation in nighttime luminosity. Remote Sens. Environ. 2013, 129, 32–41. [Google Scholar] [CrossRef]

- Gitelson, A.A. Wide dynamic range vegetation index for remote quantification of biophysical characteristics of vegetation. J. Plant Physiol. 2004, 161, 165–173. [Google Scholar] [CrossRef] [Green Version]

- Jannoura, R.; Brinkmann, K.; Uteau, D.; Bruns, C.; Joergensen, R.G. Monitoring of crop biomass using true colour aerial photographs taken from a remote controlled hexacopter. Biosyst. Eng. 2015, 129, 341–351. [Google Scholar] [CrossRef]

- Gamon, J.A.; Field, C.B.; Goulden, M.L.; Griffin, K.L.; Hartley, A.E.; Joel, G.; Penuelas, J.; Valentini, R. Relationships between NDVI, canopy structure, and photosynthesis in three Californian vegetation types. Ecol. Appl. 1995, 5, 28–41. [Google Scholar] [CrossRef] [Green Version]

- Goel, N.S.; Qin, W. Influences of canopy architecture on relationships between various vegetation indices and LAI and Fpar: A computer simulation. Remote Sens. Rev. 1994, 10, 309–347. [Google Scholar] [CrossRef]

- Baret, F.; Guyot, G. Potentials and limits of vegetation indices for LAI and APAR assessment. Remote Sens. Environ. 1991, 35, 161–173. [Google Scholar] [CrossRef]

- Zarco-Tejada, P.J.; González-Dugo, V.; Berni, J.A.J. Fluorescence, temperature and narrow-band indices acquired from a UAV platform for water stress detection using a micro-hyperspectral imager and a thermal camera. Remote Sens. Environ. 2012, 117, 322–337. [Google Scholar] [CrossRef]

- Biju, S.; Fuentes, S.; Gupta, D. The use of infrared thermal imaging as a non-destructive screening tool for identifying drought-tolerant lentil genotypes. Plant Physiol. Biochem. 2018, 127, 11–24. [Google Scholar] [CrossRef]

- Monteith, J.; Unsworth, M. Principles of Environmental Physics, 4th ed.; Elsevier: Amsterdam, The Netherlands, 2014. [Google Scholar]

- Frederick, J.R.; Camberato, J.J. Water and nitrogen effects on winter wheat in the southeastern Coastal Plain: II. Physiological responses. Agron. J. 1995, 87, 527–533. [Google Scholar] [CrossRef]

- Khan, E.A. Wheat response to application methods and levels of nitrogen fertilizer: I. Phenology, growth indices and protein content. Pak. J. Nutr. 2013, 12, 365–370. [Google Scholar]

- Ma, B.L.; Biswas, D.K.; Zhou, Q.P.; Ren, C.Z. Comparisons among cultivars of wheat, hulled and hulless oats: Effects of N fertilization on growth and yield. Can. J. Plant Sci. 2012, 92, 1213–1222. [Google Scholar] [CrossRef]

- Yue, X.; Hu, Y.; Zhang, H.; Schmidhalter, U. Evaluation of both SPAD reading and SPAD index on estimating the plant nitrogen status of winter wheat. Int. J. Plant Prod. 2019, 14. [Google Scholar] [CrossRef]

- Dunn, B.L.; Singh, H.; Goad, C. Relationship between chlorophyll meter readings and nitrogen in poinsettia leaves. J. Plant Nutr. 2018, 1–10. [Google Scholar] [CrossRef]

- Ravier, C.; Quemada, M.; Jeuffroy, M. Use of a chlorophyll meter to assess nitrogen nutrition index during the growth cycle in winter wheat. Field Crop. Res. 2017, 214, 73–82. [Google Scholar] [CrossRef]

- Le Bail, M.; Jeuffroy, M.; Bouchard, C.; Barbottin, A. Is it possible to forecast the grain quality and yield of different varieties of winter wheat from Minolta SPAD meter measurements? Eur. J. Agron. 2005, 23, 379–391. [Google Scholar] [CrossRef]

- Monostori, I.; Árendás, T.; Hoffman, B.; Galiba, G.; Gierczik, K.; Szira, F.; Vágújfalvi, A. Relationship between SPAD value and grain yield can be affected by cultivar, environment and soil nitrogen content in wheat. Euphytica 2016, 211, 103–112. [Google Scholar] [CrossRef] [Green Version]

- Yang, D.; Meng, R.; Morrison, B.D.; McMahon, A.; Hantson, W.; Hayes, D.J.; Breen, A.L.; Salmon, V.G.; Serbin, S.P. A Multi-Sensor Unoccupied Aerial System Improves Characterization of Vegetation Composition and Canopy Properties in the Arctic Tundra. Remote Sens. 2020, 12, 2638. [Google Scholar] [CrossRef]

- Lv, Z.; Meng, R.; Man, J.; Zeng, L.; Wang, M.; Xu, B.; Gao, R.; Sun, R.; Zhao, F. Modeling of winter wheat fAPAR by integrating Unmanned Aircraft Vehicle-based optical, structural, and thermal measurement. Int. J. Appl. Earth Obs. Geoinf. 2021, 102, 102407. [Google Scholar] [CrossRef]

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations. |

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).