Deep Convolutional Neural Network for Large-Scale Date Palm Tree Mapping from UAV-Based Images

Abstract

1. Introduction

1.1. Background

1.2. Related Work

2. Study Area and Materials

2.1. Experimental Site

2.2. UAV Image Acquisition and Preprocessing

2.3. Labeled Data

3. Methodology

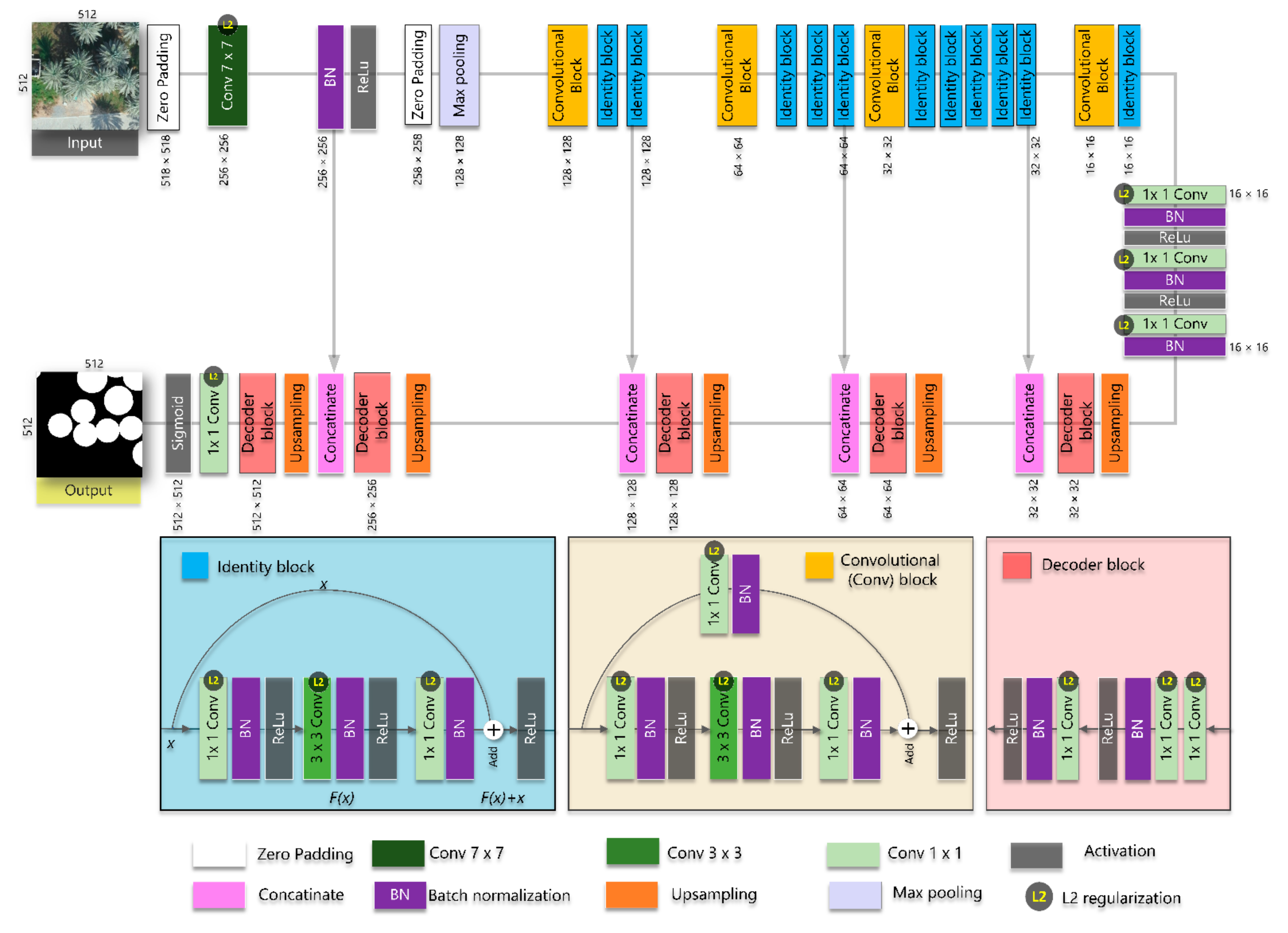

3.1. U-Net

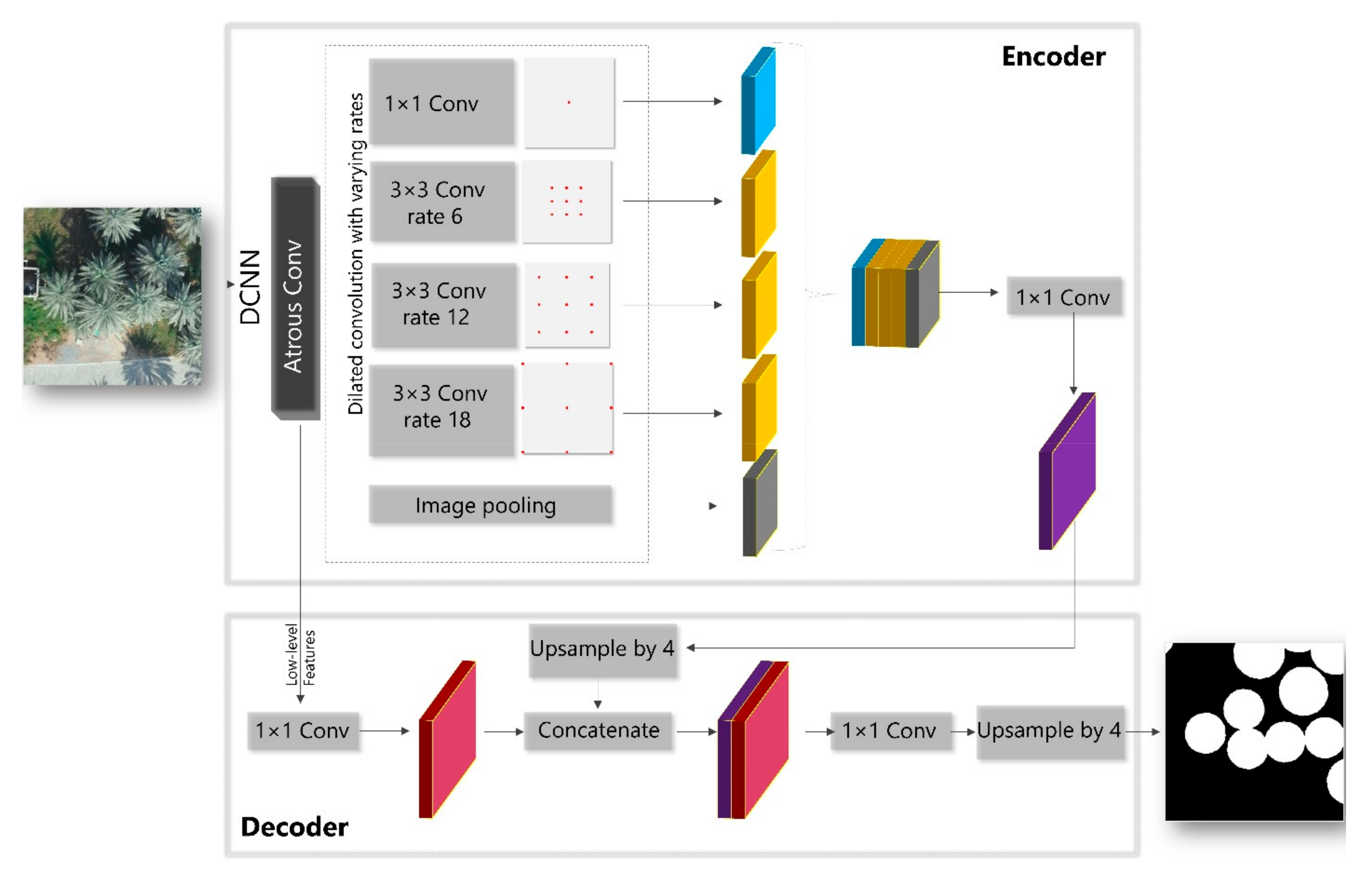

3.2. DeepLabV3+

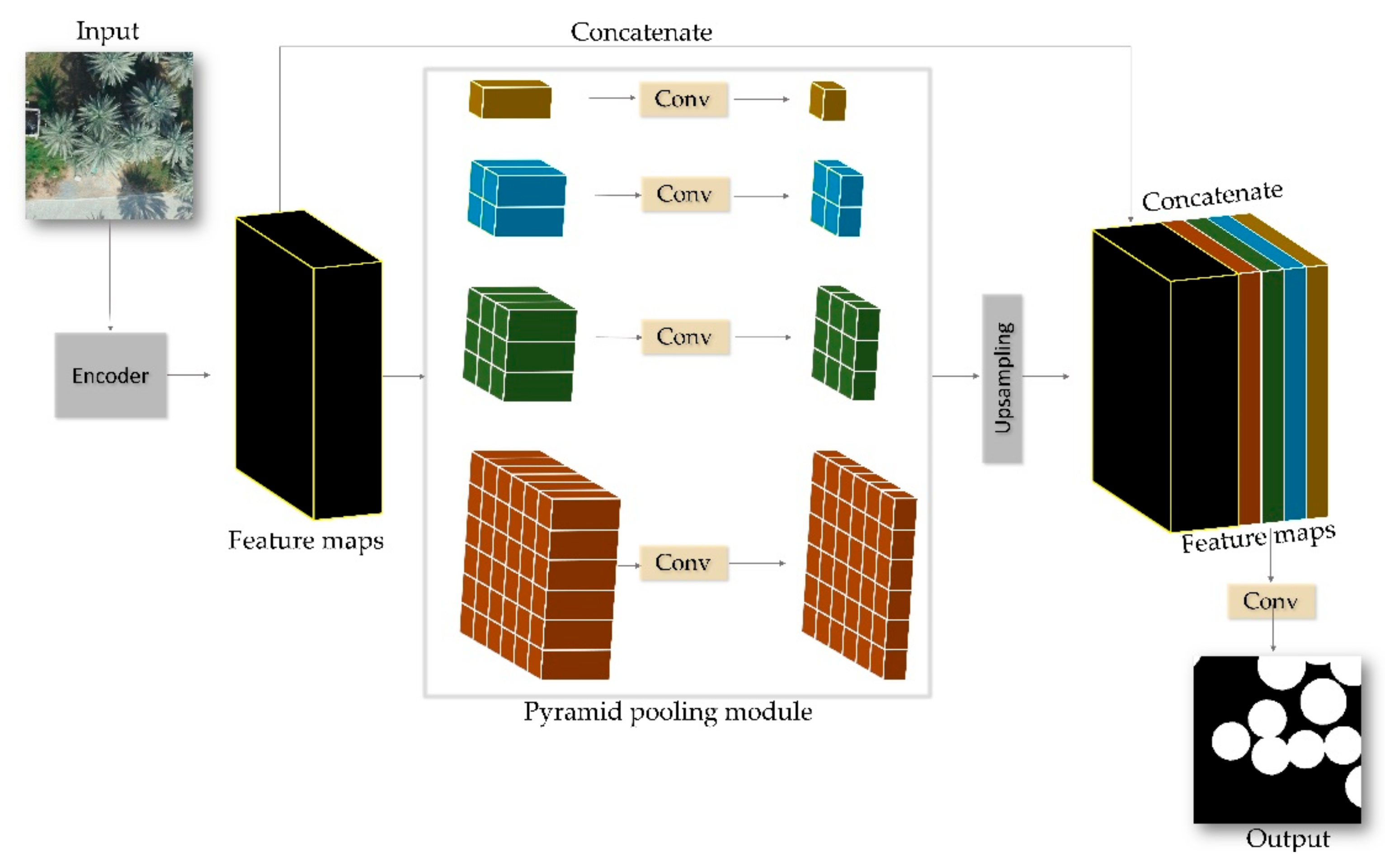

3.3. Pyramid Scene Parsing Network

3.4. Evaluation Metrics

3.5. Loss Function

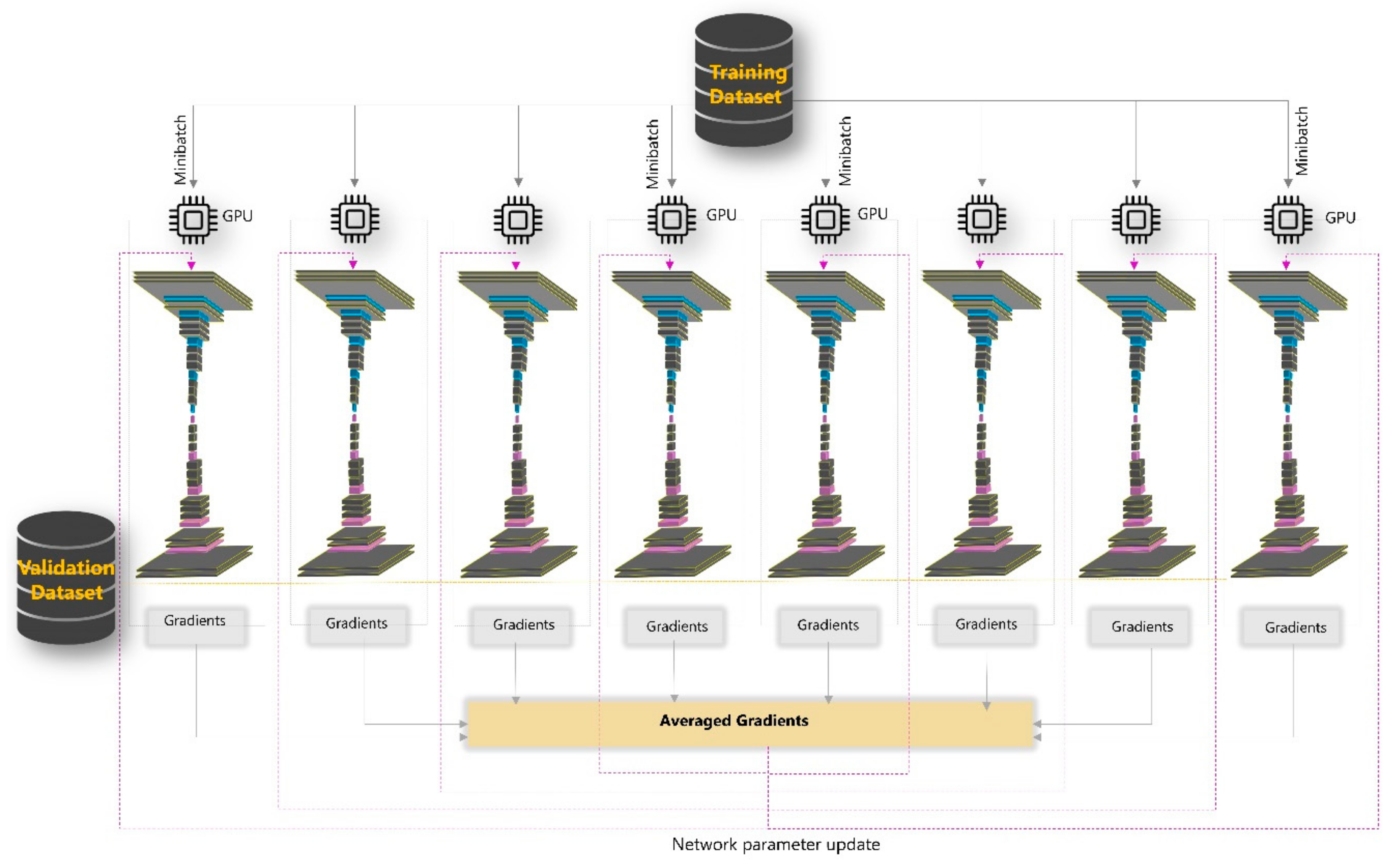

3.6. Experimental Setup

4. Results

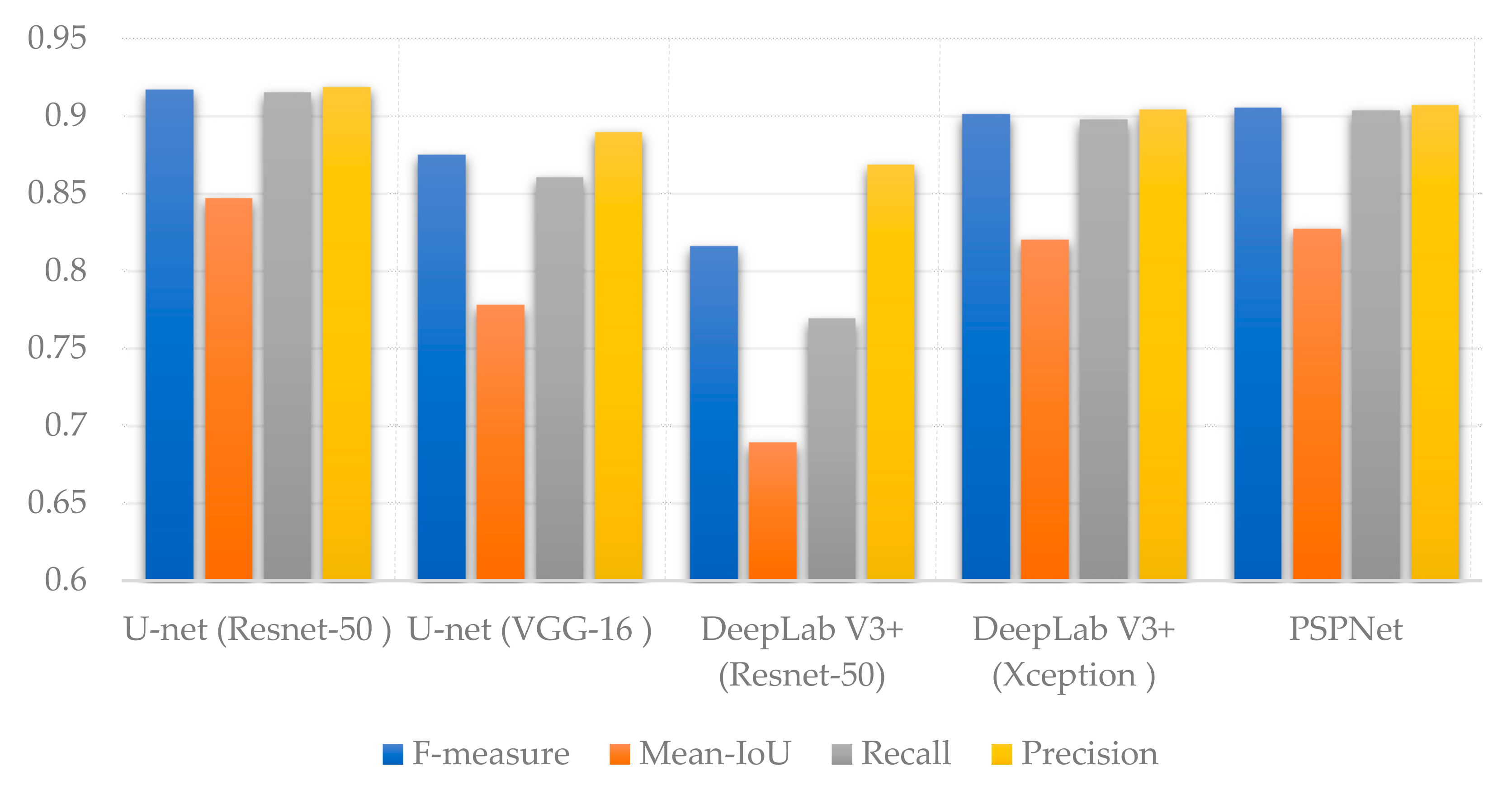

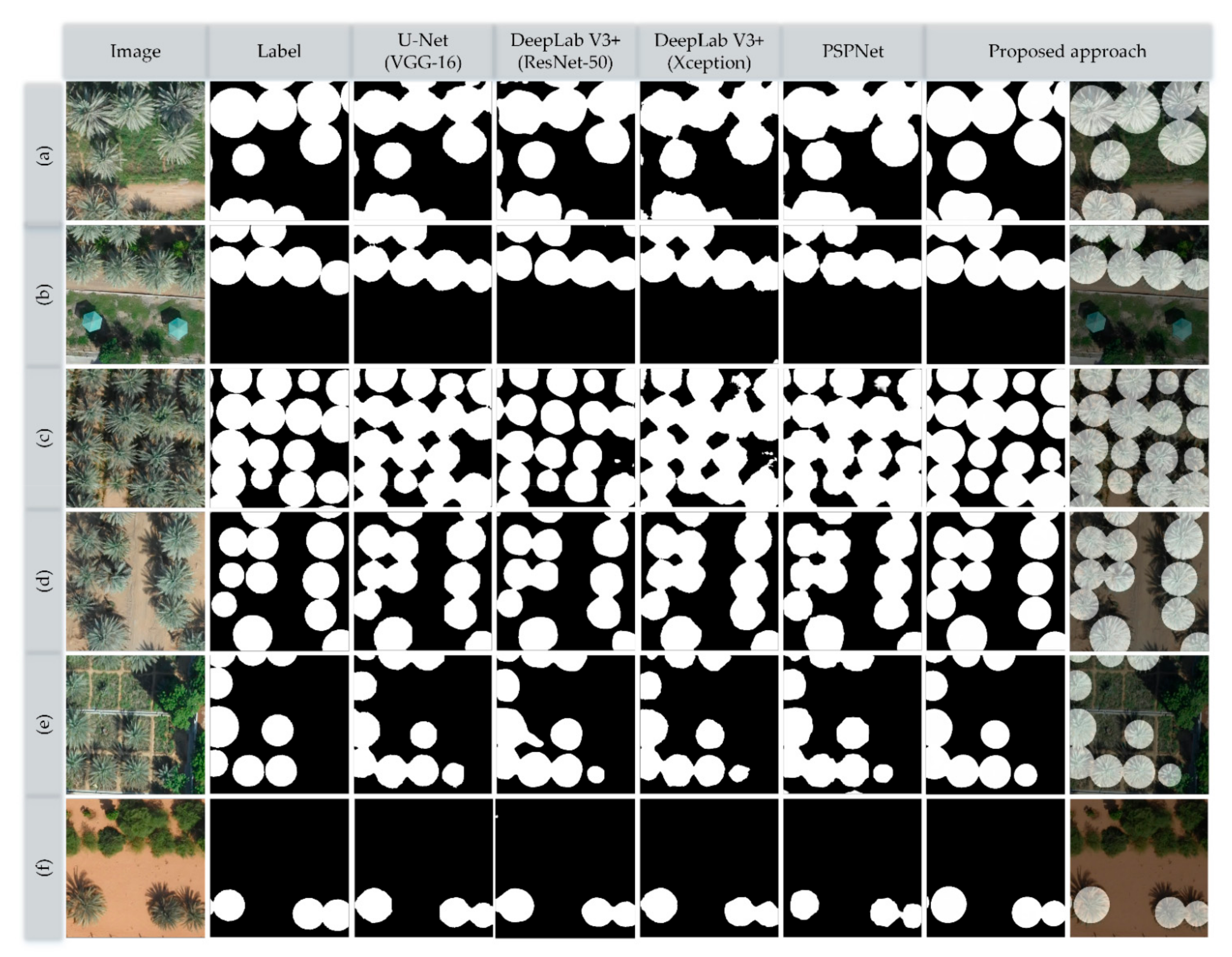

4.1. Evaluation of Segmentation Performance

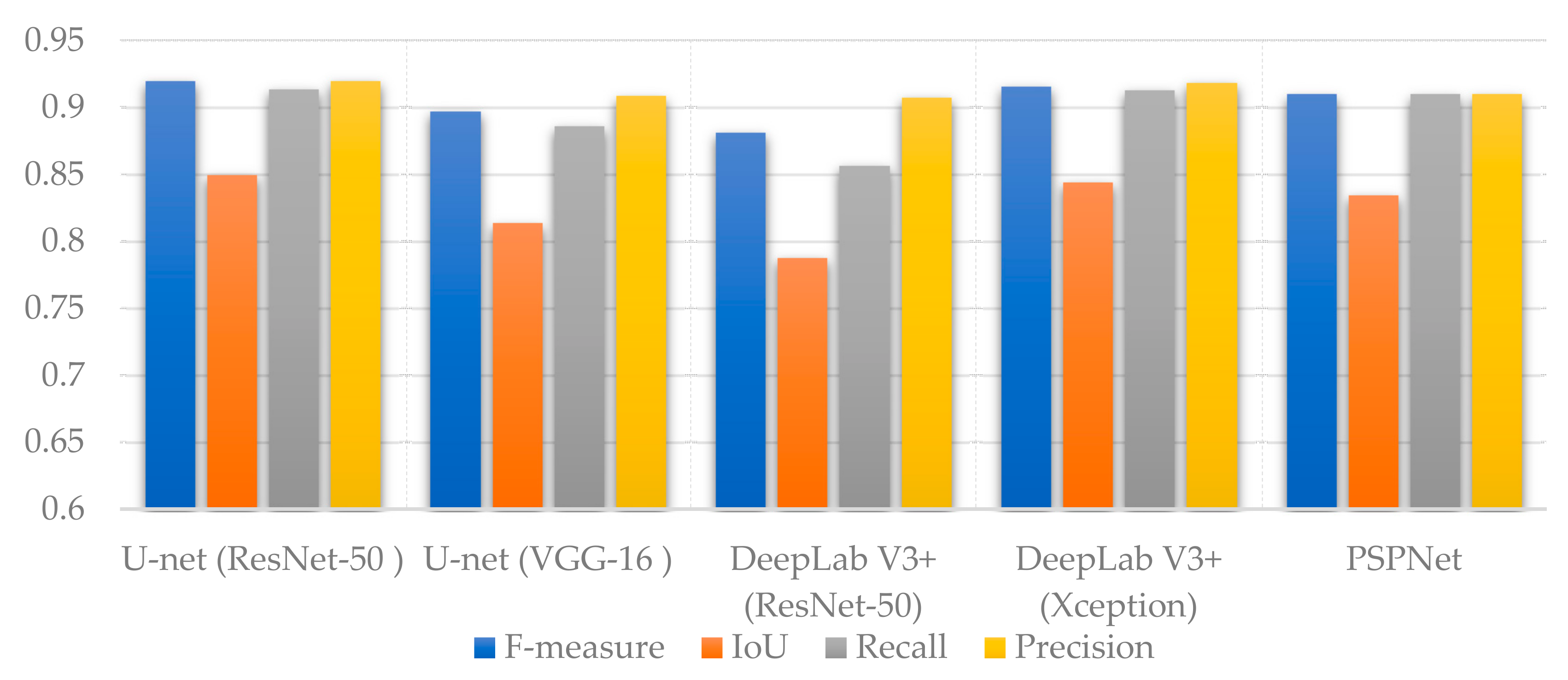

4.2. Generalizability Evaluation

5. Discussion

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Riad, M. The date palm sector in Egypt. CIHEAM Options Mediterr. 1996, 53, 45–53. [Google Scholar]

- Tengberg, M. Beginnings and early history of date palm garden cultivation in the Middle East. J. Arid Environ. 2012, 86, 139–147. [Google Scholar] [CrossRef]

- Zaid, A.; Wet, P.F. Chapter I: Botanical and Systematic Description of the Date Palm; FAO: Rome, Italy, 2002; Available online: http://www.fao.org/docrep/006.Y4360E/y4360e05.htm (accessed on 31 March 2018).

- Spennemann, D.H.R. Review of the vertebrate-mediated dispersal of the date palm, Phoenix dactylifera. Zool. Middle East. 2018, 64, 283–296. [Google Scholar] [CrossRef]

- Chao, C.C.T.; Krueger, R.R. The date palm (Phoenix dactylifera L.): Overview of biology, uses, and cultivation. HortScience 2007, 42, 1077–1082. [Google Scholar] [CrossRef] [Green Version]

- Kurup, S.S.; Hedar, Y.S.; Al Dhaheri, M.A.; El-heawiety, A.Y.; Aly, M.A.M.; Alhadrami, G. Morpho-physiological evaluation and RAPD markers-assisted characterization of date palm (Phoenix dactylifera L.) varieties for salinity tolerance Morpho-physiological evaluation and RAPD markers-assisted characterization of date palm (Phoenix dactylife). J. Food Agric. Environ. 2009, 7, 503–507. [Google Scholar]

- Al-Alawi, R.; Al-Mashiqri, J.H.; Al-Nadabi, J.S.M.; Al-Shihi, B.I.; Baqi, Y. Date palm tree (Phoenix dactylifera L.): Natural products and therapeutic options. Front. Plant Sci. 2017, 8, 845. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- FAOSTAT. Available online: http://www.fao.org/faostat/en/#data/QC (accessed on 9 March 2021).

- Culman, M.; Delalieux, S.; Van Tricht, K. Individual palm tree detection using deep learning on RGB imagery to support tree inventory. Remote Sens. 2020, 12, 3476. [Google Scholar] [CrossRef]

- Pei, F.; Wu, C.; Liu, X.; Li, X.; Yang, K.; Zhou, Y.; Wang, K.; Xu, L.; Xia, G. Monitoring the vegetation activity in China using vegetation health indices. Agric. For. Meteorol. 2018, 248, 215–227. [Google Scholar] [CrossRef]

- Xie, Y.; Sha, Z.; Yu, M. Remote sensing imagery in vegetation mapping: A review. J. Plant Ecol. 2008, 1, 9–23. [Google Scholar] [CrossRef]

- Malatesta, L.; Scholte, P.T.; Vitale, M. Vegetation mapping from high- resolution satellite images in the heterogeneous arid environments of Socotra Island (Yemen). J. Appl. Remote Sens. 2019. [Google Scholar] [CrossRef] [Green Version]

- Zhao, A.; Zhang, A.; Liu, J.; Feng, L.; Zhao, Y. Assessing the effects of drought and “Grain for Green” Program on vegetation dynamics in China’s Loess Plateau from 2000 to 2014. CATENA 2019, 175, 446–455. [Google Scholar] [CrossRef]

- Marston, C.; Aplin, P.; Wilkinson, D.; Field, R.; O’Regan, H. Scrubbing Up: Multi-scale investigation of woody encroachment in a Southern African savannah. Remote Sens. 2017, 9, 419. [Google Scholar] [CrossRef] [Green Version]

- Spiekermann, R.; Brandt, M.; Samimi, C. Woody vegetation and land cover changes in the Sahel of Mali (1967–2011). Int. J. Appl. Earth Obs. Geoinf. 2015, 34, 113–121. [Google Scholar] [CrossRef]

- Hilker, T.; Lyapustin, A.I.; Hall, F.G.; Myneni, R.; Knyazikhin, Y.; Wang, Y.; Tucker, C.J.; Sellers, P.J. On the measurability of change in Amazon vegetation from MODIS. Remote Sens. Environ. 2015, 166, 233–242. [Google Scholar] [CrossRef]

- Gärtner, P.; Förster, M.; Kurban, A.; Kleinschmit, B. Object based change detection of Central Asian Tugai vegetation with very high spatial resolution satellite imagery. Int. J. Appl. Earth Obs. Geoinf. 2014, 31, 110–121. [Google Scholar] [CrossRef]

- Kumagai, K. Verification of the analysis method for extracting the spatial continuity of the vegetation distribution on a regional scale. Comput. Environ. Urban. Syst. 2011, 35, 399–407. [Google Scholar] [CrossRef]

- Disney, M. Remote sensing of vegetation: Potentials, limitations, developments and applications. In Canopy Photosynthesis: From Basics to Applications; Springer: Dordrecht, The Netherlands, 2016; pp. 289–331. [Google Scholar]

- Senthilnath, J.; Kandukuri, M.; Dokania, A.; Ramesh, K.N. Application of UAV imaging platform for vegetation analysis based on spectral-spatial methods. Comput. Electron. Agric. 2017, 140, 8–24. [Google Scholar] [CrossRef]

- Nebiker, S.; Annen, A.; Scherrer, M.; Oesch, D. A light-weight multispectral sensor for micro UAV Opportunities for very high resolution airborne remote sensing. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2008, 37, 1193–1199. [Google Scholar]

- Candiago, S.; Remondino, F.; De Giglio, M.; Dubbini, M.; Gattelli, M. Evaluating multispectral images and vegetation indices for precision farming applications from UAV images. Remote Sens. 2015, 7, 4026–4047. [Google Scholar] [CrossRef] [Green Version]

- Xiang, H.; Tian, L. Development of a low-cost agricultural remote sensing system based on an autonomous unmanned aerial vehicle (UAV). Biosyst. Eng. 2011, 108, 174–190. [Google Scholar] [CrossRef]

- Komárek, J.; Klouček, T.; Prošek, J. The potential of unmanned aerial systems: A tool towards precision classification of hard-to-distinguish vegetation types? Int. J. Appl. Earth Obs. Geoinf. 2018, 71, 9–19. [Google Scholar] [CrossRef]

- Weil, G.; Lensky, I.; Resheff, Y.; Levin, N. Optimizing the timing of unmanned aerial vehicle image acquisition for applied mapping of woody vegetation species using feature selection. Remote Sens. 2017, 9, 1130. [Google Scholar] [CrossRef] [Green Version]

- Husson, E.; Reese, H.; Ecke, F. Combining spectral data and a DSM from UAS-images for improved classification of non-submerged aquatic vegetation. Remote Sens. 2017, 9, 247. [Google Scholar] [CrossRef] [Green Version]

- Michez, A.; Piégay, H.; Lisein, J.; Claessens, H.; Lejeune, P. Classification of riparian forest species and health condition using multi-temporal and hyperspatial imagery from unmanned aerial system. Environ. Monit. Assess. 2016, 188, 146. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Lisein, J.; Michez, A.; Claessens, H.; Lejeune, P. Discrimination of deciduous tree species from time series of unmanned aerial system imagery. PLoS ONE 2015, 10, e0141006. [Google Scholar] [CrossRef] [PubMed]

- Prošek, J.; Šímová, P. UAV for mapping shrubland vegetation: Does fusion of spectral and vertical information derived from a single sensor increase the classification accuracy? Int. J. Appl. Earth Obs. Geoinf. 2019, 75, 151–162. [Google Scholar] [CrossRef]

- Mishra, N.; Mainali, K.; Shrestha, B.; Radenz, J.; Karki, D. Species-level vegetation mapping in a Himalayan treeline ecotone using unmanned aerial system (UAS) imagery. ISPRS Int. J. Geo-Inf. 2018, 7, 445. [Google Scholar] [CrossRef] [Green Version]

- Ishida, T.; Kurihara, J.; Viray, F.A.; Namuco, S.B.; Paringit, E.C.; Perez, G.J.; Takahashi, Y.; Marciano, J.J. A novel approach for vegetation classification using UAV-based hyperspectral imaging. Comput. Electron. Agric. 2018, 144, 80–85. [Google Scholar] [CrossRef]

- Müllerová, J.; Brůna, J.; Bartaloš, T.; Dvořák, P.; Vítková, M.; Pyšek, P. Timing is important: Unmanned aircraft vs. satellite imagery in plant invasion monitoring. Front. Plant Sci. 2017, 8. [Google Scholar] [CrossRef] [Green Version]

- Abeysinghe, T.; Simic Milas, A.; Arend, K.; Hohman, B.; Reil, P.; Gregory, A.; Vázquez-Ortega, A. Mapping invasive Phragmites australis in the old woman creek estuary using UAV remote sensing and machine learning classifiers. Remote Sens. 2019, 11, 1380. [Google Scholar] [CrossRef] [Green Version]

- Gaston, K.J.; Gonzalez, F.; Mengersen, K.; Gaston, K.J. UAVs and machine learning revolutionising invasive grass and vegetation surveys in remote arid lands. Sensors 2018, 18, 605. [Google Scholar] [CrossRef] [Green Version]

- Calderón, R.; Navas-Cortés, J.A.; Lucena, C.; Zarco-Tejada, P.J. High-resolution airborne hyperspectral and thermal imagery for early detection of Verticillium wilt of olive using fluorescence, temperature and narrow-band spectral indices. Remote Sens. Environ. 2013, 139, 231–245. [Google Scholar] [CrossRef]

- Nishar, A.; Richards, S.; Breen, D.; Robertson, J.; Breen, B. Thermal infrared imaging of geothermal environments and by an unmanned aerial vehicle (UAV): A case study of the Wairakei—Tauhara geothermal field, Taupo, New Zealand. Renew. Energy 2016, 86, 1256–1264. [Google Scholar] [CrossRef]

- Pérez-Ortiz, M.; Peña, J.M.; Gutiérrez, P.A.; Torres-Sánchez, J.; Hervás-Martínez, C.; López-Granados, F. A semi-supervised system for weed mapping in sunflower crops using unmanned aerial vehicles and a crop row detection method. Appl. Soft Comput. 2015, 37, 533–544. [Google Scholar] [CrossRef]

- Wu, Z.; Ni, M.; Hu, Z.; Wang, J.; Li, Q.; Wu, G. Mapping invasive plant with UAV-derived 3D mesh model in mountain area—A case study in Shenzhen Coast, China. Int. J. Appl. Earth Obs. Geoinf. 2019, 77, 129–139. [Google Scholar] [CrossRef]

- Zhang, X.; Han, L.; Dong, Y.; Shi, Y.; Huang, W.; Han, L.; González-Moreno, P.; Ma, H.; Ye, H.; Sobeih, T. A deep learning-based approach for automated yellow rust disease detection from high-resolution hyperspectral UAV images. Remote Sens. 2019, 11, 1554. [Google Scholar] [CrossRef] [Green Version]

- Vanegas, F.; Bratanov, D.; Powell, K.; Weiss, J.; Gonzalez, F. A novel methodology for improving plant pest surveillance in vineyards and crops using UAV-based hyperspectral and spatial data. Sensors 2018, 18, 260. [Google Scholar] [CrossRef] [Green Version]

- Cummings, A.R.; Karale, Y.; Cummings, G.R.; Hamer, E.; Moses, P.; Norman, Z.; Captain, V. UAV-derived data for mapping change on a swidden agriculture plot: Preliminary results from a pilot study. Int. J. Remote Sens. 2017, 38, 2066–2082. [Google Scholar] [CrossRef]

- Liu, H.; Zhu, H.; Wang, P. Quantitative modelling for leaf nitrogen content of winter wheat using UAV-based hyperspectral data. Int. J. Remote Sens. 2017, 38, 2117–2134. [Google Scholar] [CrossRef]

- Mesas-Carrascosa, F.J.; Clavero Rumbao, I.; Torres-Sánchez, J.; García-Ferrer, A.; Peña, J.M.; López Granados, F. Accurate ortho-mosaicked six-band multispectral UAV images as affected by mission planning for precision agriculture proposes. Int. J. Remote Sens. 2017, 38, 2161–2176. [Google Scholar] [CrossRef]

- Yue, J.; Feng, H.; Jin, X.; Yuan, H.; Li, Z.; Zhou, C.; Yang, G.; Tian, Q. A comparison of crop parameters estimation using images from UAV-mounted snapshot hyperspectral sensor and high-definition digital camera. Remote Sens. 2018, 10, 1138. [Google Scholar] [CrossRef] [Green Version]

- Kanning, M.; Kühling, I.; Trautz, D.; Jarmer, T. High-resolution UAV-based hyperspectral imagery for LAI and chlorophyll estimations from wheat for yield prediction. Remote Sens. 2018, 10, 2000. [Google Scholar] [CrossRef] [Green Version]

- Wei, L.; Yu, M.; Zhong, Y.; Zhao, J.; Liang, Y.; Hu, X. Spatial-spectral fusion based on conditional random fields for the fine classification of crops in UAV-borne hyperspectral remote sensing imagery. Remote Sens. 2019, 11, 780. [Google Scholar] [CrossRef] [Green Version]

- Nevalainen, O.; Honkavaara, E.; Tuominen, S.; Viljanen, N.; Hakala, T.; Yu, X.; Hyyppä, J.; Saari, H.; Pölönen, I.; Imai, N.N.; et al. Individual tree detection and classification with UAV-Based photogrammetric point clouds and hyperspectral imaging. Remote Sens. 2017, 9, 185. [Google Scholar] [CrossRef] [Green Version]

- Dos Santos, A.A.; Marcato Junior, J.; Araújo, M.S.; Di Martini, D.R.; Tetila, E.C.; Siqueira, H.L.; Aoki, C.; Eltner, A.; Matsubara, E.T.; Pistori, H.; et al. Assessment of CNN-based methods for individual tree detection on images captured by RGB cameras attached to UAVs. Sensors 2019, 19, 3595. [Google Scholar] [CrossRef] [Green Version]

- Bakambekova, A.; James, A.P. Deep learning theory simplified. In Deep Learning Classifiers with Memristive Networks; Modeling and Optimization in Science and Technologies; Springer Nature Switzerland AG: Cham, Switzerland, 2020; Volume 14, pp. 41–55. [Google Scholar] [CrossRef]

- Zhang, L.; Zhang, L.; Du, B. Deep learning for remote sensing data: A technical tutorial on the state of the art. IEEE Geosci. Remote Sens. Mag. 2016, 4, 22–40. [Google Scholar] [CrossRef]

- Malambo, L.; Popescu, S.; Ku, N.; Rooney, W.; Zhou, T.; Moore, S. A deep learning semantic segmentation-based approach for field-level sorghum panicle counting. Remote Sens. 2019, 11, 2939. [Google Scholar] [CrossRef] [Green Version]

- Ampatzidis, Y.; Partel, V. UAV-based high throughput phenotyping in citrus utilizing multispectral imaging and artificial intelligence. Remote Sens. 2019, 11, 410. [Google Scholar] [CrossRef] [Green Version]

- Zhou, K.; Ming, D.; Lv, X.; Fang, J.; Wang, M. CNN-based land cover classification combining stratified segmentation and fusion of point cloud and very high-spatial resolution remote sensing image data. Remote Sens. 2019, 11, 2065. [Google Scholar] [CrossRef] [Green Version]

- Nevavuori, P.; Narra, N.; Lipping, T. Crop yield prediction with deep convolutional neural networks. Comput. Electron. Agric. 2019, 163, 104859. [Google Scholar] [CrossRef]

- Yalcin, H. Plant phenology recognition using deep learning: Deep-Pheno. In Proceedings of the 2017 6th International Conference on Agro-Geoinformatics, Fairfax, VA, USA, 7–10 August 2017; pp. 1–5. [Google Scholar]

- Ma, L.; Liu, Y.; Zhang, X.; Ye, Y.; Yin, G.; Johnson, B.A. Deep learning in remote sensing applications: A meta-analysis and review. ISPRS J. Photogramm. Remote Sens. 2019, 152, 166–177. [Google Scholar] [CrossRef]

- Ji, S.; Zhang, Z.; Zhang, C.; Wei, S.; Lu, M.; Duan, Y. Learning discriminative spatiotemporal features for precise crop classification from multi-temporal satellite images. Int. J. Remote Sens. 2020, 41, 3162–3174. [Google Scholar] [CrossRef]

- Bosilj, P.; Aptoula, E.; Duckett, T.; Cielniak, G. Transfer learning between crop types for semantic segmentation of crops versus weeds in precision agriculture. J. Field Robot. 2020, 37, 7–19. [Google Scholar] [CrossRef]

- Lv, Y.; Zhang, C.; Yun, W.; Gao, L.; Wang, H.; Ma, J.; Li, H.; Zhu, D. The delineation and grading of actual crop production units in modern smallholder areas using RS Data and Mask R-CNN. Remote Sens. 2020, 12, 1074. [Google Scholar] [CrossRef]

- Bah, M.D.; Dericquebourg, E.; Hafiane, A.; Canals, R. Deep learning based classification system for identifying weeds using high-resolution UAV imagery. In Proceedings of the Science and Information Conference; Springer: Cham, Switzerland, 2019; pp. 176–187. [Google Scholar]

- Huang, H.; Deng, J.; Lan, Y.; Yang, A.; Deng, X.; Zhang, L. A fully convolutional network for weed mapping of unmanned aerial vehicle (UAV) imagery. PLoS ONE 2018, 13, e0196302. [Google Scholar] [CrossRef] [Green Version]

- Hasan, A.S.M.M.; Sohel, F.; Diepeveen, D.; Laga, H.; Jones, M.G.K. A survey of deep learning techniques for weed detection from images. Comput. Electron. Agric. 2021, 184, 106067. [Google Scholar] [CrossRef]

- Mazzia, V.; Comba, L.; Khaliq, A.; Chiaberge, M.; Gay, P. UAV and machine learning based refinement of a satellite-driven vegetation index for precision agriculture. Sensors 2020, 20, 2530. [Google Scholar] [CrossRef] [PubMed]

- Sharpe, S.M.; Schumann, A.W.; Yu, J.; Boyd, N.S. Vegetation detection and discrimination within vegetable plasticulture row-middles using a convolutional neural network. Precis. Agric. 2019, 1–14. [Google Scholar] [CrossRef]

- Bayr, U.; Puschmann, O. Automatic detection of woody vegetation in repeat landscape photographs using a convolutional neural network. Ecol. Inform. 2019, 50, 220–233. [Google Scholar] [CrossRef]

- Ganchenko, V.; Doudkin, A. Agricultural vegetation monitoring based on aerial data using convolutional neural networks. Opt. Mem. Neural Netw. 2019, 28, 129–134. [Google Scholar] [CrossRef]

- Neupane, B.; Horanont, T.; Hung, N.D. Deep learning based banana plant detection and counting using high-resolution red-green-blue (RGB) images collected from unmanned aerial vehicle (UAV). PLoS ONE 2019, 14, e0223906. [Google Scholar] [CrossRef]

- Kattenborn, T.; Leitloff, J.; Schiefer, F.; Hinz, S. Review on Convolutional Neural Networks (CNN) in vegetation remote sensing. ISPRS J. Photogramm. Remote Sens. 2021, 173, 24–49. [Google Scholar] [CrossRef]

- Braga, J.R.G.; Peripato, V.; Dalagnol, R.; Ferreira, M.P.; Tarabalka, Y.; Aragão, L.E.O.C.; de Campos Velho, H.F.; Shiguemori, E.H.; Wagner, F.H. Tree crown delineation algorithm based on a convolutional neural network. Remote Sens. 2020, 12, 1288. [Google Scholar] [CrossRef] [Green Version]

- Ferreira, M.P.; de Almeida, D.R.A.; de Almeida Papa, D.; Minervino, J.B.S.; Veras, H.F.P.; Formighieri, A.; Santos, C.A.N.; Ferreira, M.A.D.; Figueiredo, E.O.; Ferreira, E.J.L. Individual tree detection and species classification of Amazonian palms using UAV images and deep learning. For. Ecol. Manag. 2020, 475, 118397. [Google Scholar] [CrossRef]

- Roslan, Z.; Long, Z.A.; Husen, M.N.; Ismail, R.; Hamzah, R. Deep learning for tree crown detection in tropical forest. In Proceedings of the 2020 14th International Conference on Ubiquitous Information Management and Communication (IMCOM), Taichung, Taiwan, 3–5 January 2020. [Google Scholar] [CrossRef]

- Weinstein, B.G.; Marconi, S.; Bohlman, S.; Zare, A.; White, E. Individual tree-crown detection in rgb imagery using semi-supervised deep learning neural networks. Remote Sens. 2019, 11, 1309. [Google Scholar] [CrossRef] [Green Version]

- Kerkech, M.; Hafiane, A.; Canals, R. Deep leaning approach with colorimetric spaces and vegetation indices for vine diseases detection in UAV images. Comput. Electron. Agric. 2018, 155, 237–243. [Google Scholar] [CrossRef]

- Dang, L.M.; Ibrahim Hassan, S.; Suhyeon, I.; Sangaiah, A.K.; Mehmood, I.; Rho, S.; Seo, S.; Moon, H. UAV based wilt detection system via convolutional neural networks. Sustain. Comput. Inform. Syst. 2018. [Google Scholar] [CrossRef] [Green Version]

- Hasan, M.; Tanawala, B.; Patel, K.J. Deep learning precision farming: Tomato leaf disease detection by transfer learning. SSRN Electron. J. 2019. [Google Scholar] [CrossRef]

- Castelao Tetila, E.; Brandoli Machado, B.; Menezes, G.K.; da Silva Oliveira, A.; Alvarez, M.; Amorim, W.P.; de Souza Belete, N.A.; da Silva, G.G.; Pistori, H. Automatic recognition of soybean leaf diseases using UAV images and deep convolutional neural networks. IEEE Geosci. Remote Sens. Lett. 2019, 1–5. [Google Scholar] [CrossRef]

- Bajpai, G.; Gupta, A.; Chauhan, N. Real time implementation of convolutional neural network to detect plant diseases using internet of things. In International Symposium on VLSI Design and Test; Springer: Singapore, 2019; pp. 510–522. [Google Scholar]

- Kattenborn, T.; Eichel, J.; Wiser, S.; Burrows, L.; Fassnacht, F.E.; Schmidtlein, S. Convolutional neural networks accurately predict cover fractions of plant species and communities in unmanned aerial vehicle imagery. Remote Sens. Ecol. Conserv. 2020, 1–15. [Google Scholar] [CrossRef] [Green Version]

- Hamylton, S.M.; Morris, R.H.; Carvalho, R.C.; Roder, N.; Barlow, P.; Mills, K.; Wang, L. Evaluating techniques for mapping island vegetation from unmanned aerial vehicle (UAV) images: Pixel classification, visual interpretation and machine learning approaches. Int. J. Appl. Earth Obs. Geoinf. 2020, 89, 102085. [Google Scholar] [CrossRef]

- Fan, Z.; Lu, J.; Gong, M.; Xie, H.; Goodman, E.D. Automatic tobacco plant detection in UAV images via deep neural networks. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2018, 11, 876–887. [Google Scholar] [CrossRef]

- Yang, Q.; Shi, L.; Han, J.; Yu, J.; Huang, K. A near real-time deep learning approach for detecting rice phenology based on UAV images. Agric. For. Meteorol. 2020, 287, 107938. [Google Scholar] [CrossRef]

- Nezami, S.; Khoramshahi, E.; Nevalainen, O.; Pölönen, I.; Honkavaara, E. Tree species classification of drone hyperspectral and RGB imagery with deep learning convolutional neural networks. Remote Sens. 2020, 12, 1070. [Google Scholar] [CrossRef] [Green Version]

- Qian, W.; Huang, Y.; Liu, Q.; Fan, W.; Sun, Z.; Dong, H.; Wan, F.; Qiao, X. UAV and a deep convolutional neural network for monitoring invasive alien plants in the wild. Comput. Electron. Agric. 2020, 174, 105519. [Google Scholar] [CrossRef]

- Bonet, I.; Caraffini, F.; Pena, A.; Puerta, A.; Gongora, M. Oil palm detection via deep transfer learning. In Proceedings of the 2020 IEEE Congress on Evolutionary Computation (CEC), Glasgow, UK, 19–24 July 2020. [Google Scholar] [CrossRef]

- Tao, H.; Li, C.; Zhao, D.; Deng, S.; Hu, H.; Xu, X.; Jing, W. Deep learning-based dead pine tree detection from unmanned aerial vehicle images. Int. J. Remote Sens. 2020, 41, 8238–8255. [Google Scholar] [CrossRef]

- Zhang, C.; Xia, K.; Feng, H.; Yang, Y.; Du, X. Tree species classification using deep learning and RGB optical images obtained by an unmanned aerial vehicle. J. For. Res. 2020. [Google Scholar] [CrossRef]

- Nguyen, H.T.; Caceres, M.L.L.; Moritake, K.; Kentsch, S.; Shu, H.; Diez, Y. Individual sick fir tree (Abies mariesii) identification in insect infested forests by means of UAV images and deep learning. Remote Sens. 2021, 13, 260. [Google Scholar] [CrossRef]

- Safonova, A.; Guirado, E.; Maglinets, Y.; Alcaraz-Segura, D.; Tabik, S. Olive tree biovolume from uav multi-resolution image segmentation with mask r-cnn. Sensors 2021, 21, 1617. [Google Scholar] [CrossRef] [PubMed]

- Fromm, M.; Schubert, M.; Castilla, G.; Linke, J.; McDermid, G. Automated detection of conifer seedlings in drone imagery using convolutional neural networks. Remote Sens. 2019, 11, 2585. [Google Scholar] [CrossRef] [Green Version]

- Ocer, N.E.; Kaplan, G.; Erdem, F.; Kucuk Matci, D.; Avdan, U. Tree extraction from multi-scale UAV images using Mask R-CNN with FPN. Remote Sens. Lett. 2020, 11, 847–856. [Google Scholar] [CrossRef]

- Pulido, D.; Salas, J.; Rös, M.; Puettmann, K.; Karaman, S. Assessment of tree detection methods in multispectral aerial images. Remote Sens. 2020, 12, 2379. [Google Scholar] [CrossRef]

- Liu, X.; Ghazali, K.H.; Han, F.; Mohamed, I.I. Automatic detection of oil palm tree from UAV images based on the deep learning method. Appl. Artif. Intell. 2021, 35, 13–24. [Google Scholar] [CrossRef]

- Zheng, J.; Fu, H.; Li, W.; Wu, W.; Yu, L.; Yuan, S.; Tao, W.Y.W.; Pang, T.K.; Kanniah, K.D. Growing status observation for oil palm trees using Unmanned Aerial Vehicle (UAV) images. ISPRS J. Photogramm. Remote Sens. 2021, 173, 95–121. [Google Scholar] [CrossRef]

- Barmpoutis, P.; Kamperidou, V.; Stathaki, T. Estimation of extent of trees and biomass infestation of the suburban forest of Thessaloniki (Seich Sou) using UAV imagery and combining R-CNNs and multichannel texture analysis. In Proceedings of the Twelfth International Conference on Machine Vision (ICMV 2019), Amsterdam, The Netherlands, 16–18 November 2019. [Google Scholar] [CrossRef]

- Weinstein, B.G.; Marconi, S.; Bohlman, S.A.; Zare, A.; White, E.P. Cross-site learning in deep learning RGB tree crown detection. Ecol. Inform. 2020, 56, 101061. [Google Scholar] [CrossRef]

- Liu, Y.; Cen, C.; Che, Y.; Ke, R.; Ma, Y.; Ma, Y. Detection of maize tassels from UAV RGB imagery with faster R-CNN. Remote Sens. 2020, 12, 338. [Google Scholar] [CrossRef] [Green Version]

- Osco, L.P.; dos Santos de Arruda, M.; Marcato Junior, J.; da Silva, N.B.; Ramos, A.P.M.; Moryia, É.A.S.; Imai, N.N.; Pereira, D.R.; Creste, J.E.; Matsubara, E.T.; et al. A convolutional neural network approach for counting and geolocating citrus-trees in UAV multispectral imagery. ISPRS J. Photogramm. Remote Sens. 2020, 160, 97–106. [Google Scholar] [CrossRef]

- Yang, M.-D.; Tseng, H.H.; Hsu, Y.C.; Tsai, H.P. Semantic segmentation using deep learning with vegetation indices for rice lodging identification in multi-date UAV visible images. Remote Sens. 2020, 12, 633. [Google Scholar] [CrossRef] [Green Version]

- Huang, H.; Lan, Y.; Yang, A.; Zhang, Y.; Wen, S.; Deng, J. Deep learning versus object-based image analysis (OBIA) in weed mapping of UAV imagery. Int. J. Remote Sens. 2020, 41, 3446–3479. [Google Scholar] [CrossRef]

- Torres, D.L.; Feitosa, R.Q.; Happ, P.N.; La Rosa, L.E.C.; Junior, J.M.; Martins, J.; Bressan, P.O.; Gonçalves, W.N.; Liesenberg, V. Applying fully convolutional architectures for semantic segmentation of a single tree species in urban environment on high resolution UAV optical imagery. Sensors 2020, 20, 563. [Google Scholar] [CrossRef] [Green Version]

- Kattenborn, T.; Eichel, J.; Fassnacht, F.E. Convolutional neural networks enable efficient, accurate and fine-grained segmentation of plant species and communities from high-resolution UAV imagery. Sci. Rep. 2019, 9, 17656. [Google Scholar] [CrossRef] [PubMed]

- Liu, C.; Li, H.; Su, A.; Chen, S.; Li, W. Identification and grading of maize drought on RGB images of UAV based on improved U-net. IEEE Geosci. Remote Sens. Lett. 2021, 18, 198–202. [Google Scholar] [CrossRef]

- Wu, J.; Yang, G.; Yang, H.; Zhu, Y.; Li, Z.; Lei, L.; Zhao, C. Extracting apple tree crown information from remote imagery using deep learning. Comput. Electron. Agric. 2020, 174, 105504. [Google Scholar] [CrossRef]

- Liu, T.; Abd-Elrahman, A.; Morton, J.; Wilhelm, V.L. Comparing fully convolutional networks, random forest, support vector machine, and patch-based deep convolutional neural networks for object-based wetland mapping using images from small unmanned aircraft system. GISci. Remote Sens. 2018, 55, 243–264. [Google Scholar] [CrossRef]

- Morales, G.; Kemper, G.; Sevillano, G.; Arteaga, D.; Ortega, I.; Telles, J. Automatic segmentation of Mauritia flexuosa in unmanned aerial vehicle (UAV) imagery using deep learning. Forests 2018, 9, 736. [Google Scholar] [CrossRef] [Green Version]

- Kentsch, S.; Caceres, M.L.L.; Serrano, D.; Roure, F.; Diez, Y. Computer vision and deep learning techniques for the analysis of drone-acquired forest images, a transfer learning study. Remote Sens. 2020, 12, 1287. [Google Scholar] [CrossRef] [Green Version]

- Tang, T.Y.; Fu, B.L.; Lou, P.Q.; Bi, L. Segnet-based extraction of wetland vegetation information from UAV images. ISPRS Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2020, 42, 375–380. [Google Scholar] [CrossRef] [Green Version]

- Al-Ruzouq, R.; Gibril, M.B.A.; Shanableh, A.; Kais, A.; Hamed, O.; Al-Mansoori, S.; Khalil, M.A. Sensors, features, and machine learning for oil spill detection and monitoring: A review. Remote Sens. 2020, 12, 3338. [Google Scholar] [CrossRef]

- Badrinarayanan, V.; Handa, A.; Cipolla, R. Segnet: A deep convolutional encoder-decoder architecture for robust semantic pixel-wise labelling. arXiv 2015, arXiv:1505.07293. [Google Scholar]

- Ronneberger, O.; Fischer, P.; Brox, T. U-net: Convolutional networks for biomedical image segmentation. In Lecture Notes in Computer Science (Including Subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics); Springer: Berlin/Heidelberg, Germany, 2015; Volume 9351, pp. 234–241. [Google Scholar]

- Chen, L.-C.; Papandreou, G.; Schroff, F.; Adam, H. Rethinking atrous convolution for semantic image segmentation. arXiv 2017, arXiv:1706.05587. [Google Scholar]

- Cao, K.; Zhang, X. An improved Res-UNet model for tree species classification using airborne high-resolution images. Remote Sens. 2020, 12, 1128. [Google Scholar] [CrossRef] [Green Version]

- Flood, N.; Watson, F.; Collett, L. Using a U-net convolutional neural network to map woody vegetation extent from high resolution satellite imagery across Queensland, Australia. Int. J. Appl. Earth Obs. Geoinf. 2019, 82, 101897. [Google Scholar] [CrossRef]

- Xiao, C.; Qin, R.; Huang, X. Treetop detection using convolutional neural networks trained through automatically generated pseudo labels. Int. J. Remote Sens. 2020, 41, 3010–3030. [Google Scholar] [CrossRef]

- Wagner, F.H.; Sanchez, A.; Tarabalka, Y.; Lotte, R.G.; Ferreira, M.P.; Aidar, M.P.M.; Gloor, E.; Phillips, O.L.; Aragão, L.E.O.C. Using the U-net convolutional network to map forest types and disturbance in the Atlantic rainforest with very high resolution images. Remote Sens. Ecol. Conserv. 2019, 5, 360–375. [Google Scholar] [CrossRef] [Green Version]

- Nogueira, K.; Santos, J.A.; Cancian, L.; Borges, B.D.; Silva, T.S.F.; Morellato, L.P.; Torres, R.S. Semantic segmentation of vegetation images acquired by unmanned aerial vehicles using an ensemble of ConvNets. In Proceedings of the 2017 IEEE International Geoscience and Remote Sensing Symposium (IGARSS), Fort Worth, TX, USA, 23–28 July 2017; Volume 2, pp. 3787–3790. [Google Scholar]

- Bhatnagar, S.; Gill, L.; Ghosh, B. Drone image segmentation using machine and deep learning for mapping raised bog vegetation communities. Remote Sens. 2020, 12, 2602. [Google Scholar] [CrossRef]

- Wagner, F.H.; Sanchez, A.; Aidar, M.P.M.; Rochelle, A.L.C.; Tarabalka, Y.; Fonseca, M.G.; Phillips, O.L.; Gloor, E.; Aragão, L.E.O.C. Mapping Atlantic rainforest degradation and regeneration history with indicator species using convolutional network. PLoS ONE 2020, 15, e0229448. [Google Scholar] [CrossRef] [Green Version]

- Liu, J.; Wang, X.; Wang, T. Classification of tree species and stock volume estimation in ground forest images using deep learning. Comput. Electron. Agric. 2019, 166, 105012. [Google Scholar] [CrossRef]

- Kentsch, S.; Karatsiolis, S.; Kamilaris, A.; Tomhave, L.; Lopez Caceres, M.L. Identification of tree species in Japanese forests based on aerial photography and deep learning. In Advances and New Trends in Environmental Informatics; Springer: Cham, Switzerland, 2020. [Google Scholar]

- Korznikov, K.A.; Kislov, D.E.; Altman, J.; Doležal, J.; Vozmishcheva, A.S.; Krestov, P.V. Using u-net-like deep convolutional neural networks for precise tree recognition in very high resolution rgb (Red, green, blue) satellite images. Forests 2021, 12, 66. [Google Scholar] [CrossRef]

- Ayhan, B.; Kwan, C. Tree, shrub, and grass classification using only RGB images. Remote Sens. 2020, 12, 1333. [Google Scholar] [CrossRef] [Green Version]

- Ayhan, B.; Kwan, C.; Budavari, B.; Kwan, L.; Lu, Y.; Perez, D.; Li, J.; Skarlatos, D.; Vlachos, M. Vegetation detection using deep learning and conventional methods. Remote Sens. 2020, 12, 2502. [Google Scholar] [CrossRef]

- Ayhan, B.; Kwan, C.; Larkin, J.; Kwan, L.M.; Skarlatos, D.P.; Vlachos, M. Deep learning models for accurate vegetation classification using RGB image only. In Proceedings of the SPIE Defense + Commercial Sensing, Online Only, 21 April 2020. [Google Scholar] [CrossRef]

- Wang, S.; Xu, Z.; Zhang, C.; Zhang, J.; Mu, Z.; Zhao, T.; Wang, Y.; Gao, S.; Yin, H.; Zhang, Z. Improved winter wheat spatial distribution extraction using a convolutional neural network and partly connected conditional random field. Remote Sens. 2020, 12, 821. [Google Scholar] [CrossRef] [Green Version]

- Lin, Z.; Guo, W. Sorghum panicle detection and counting using unmanned aerial system images and deep learning. Front. Plant Sci. 2020, 11, 1–13. [Google Scholar] [CrossRef] [PubMed]

- Du, L.; McCarty, G.W.; Zhang, X.; Lang, M.W.; Vanderhoof, M.K.; Li, X.; Huang, C.; Lee, S.; Zou, Z. Mapping forested wetland inundation in the Delmarva Peninsula, USA using deep convolutional neural networks. Remote Sens. 2020, 12, 644.

- Freudenberg, M.; Nölke, N.; Agostini, A.; Urban, K.; Wörgötter, F.; Kleinn, C. Large scale palm tree detection in high resolution satellite images using U-Net. Remote Sens. 2019, 11, 312.

- Dong, R.; Li, W.; Fu, H.; Gan, L.; Yu, L.; Zheng, J.; Xia, M. Oil palm plantation mapping from high-resolution remote sensing images using deep learning. Int. J. Remote Sens. 2020, 41, 2022–2046.

- Mihi, A.; Nacer, T.; Chenchouni, H. Monitoring Dynamics of Date Palm Plantations from 1984 to 2013 Using Landsat Time-Series in Sahara Desert Oases of Algeria; Springer: Berlin/Heidelberg, Germany, 2019; ISBN 9783030014407.

- Mulley, M.; Kooistra, L.; Bierens, L. High-resolution multisensor remote sensing to support date palm farm management. Agriculture 2019, 9, 26.

- Shareef, M.A. Estimation and mapping of dates palm using Landsat-8 images: A case study in Baghdad City. In Proceedings of the 2018 International Conference on Advanced Science and Engineering (ICOASE), Duhok, Iraq, 9–11 October 2018; pp. 425–430.

- Issa, S.; Dahy, B.; Saleous, N. Mapping and assessing above ground biomass (AGB) of date palm plantations using remote sensing and GIS: A case study from Abu Dhabi, United Arab Emirates. In Proceedings of the Remote Sensing for Agriculture, Ecosystems, and Hydrology XXI, Strasbourg, France, 9–11 September 2019.

- Mazloumzadeh, S.M.; Shamsi, M.; Nezamabadi-pour, H. Fuzzy logic to classify date palm trees based on some physical properties related to precision agriculture. Precis. Agric. 2010, 258–273.

- Al-Ruzouq, R.; Shanableh, A.; Gibril, M.B.A.; AL-Mansoori, S. Image segmentation parameter selection and ant colony optimization for date palm tree detection and mapping from very-high-spatial-resolution aerial imagery. Remote Sens. 2018, 10, 1413.

- Culman, M.; Delalieux, S.; Van Tricht, K. Palm tree inventory from aerial images using RetinaNet. In Proceedings of the 2020 Mediterranean and Middle-East Geoscience and Remote Sensing Symposium (M2GARSS), Tunis, Tunisia, 9–11 March 2020; pp. 314–317.

- Tadesse, W.; Halila, H.; Jamal, M.; Assefa, S.; Oweis, T.; Baum, M. Role of sustainable wheat production to ensure food security in the CWANA region. J. Exp. Biol. Agric. Sci. 2017, 5.

- Yilmaz, A.G.; Shanableh, A.; Merabtene, T.; Atabay, S.; Kayemah, N. Rainfall trends and intensity-frequency-duration relationships in Sharjah City, UAE. Int. J. Hydrol. Sci. Technol. 2020, 10, 487–503.

- Murad, A.A.; Nuaimi, H.; Hammadi, M. Comprehensive assessment of water resources in the United Arab Emirates (UAE). Water Resour. Manag. 2007, 21, 1449–1463.

- senseFly eMotion 3 User Manual; Revision 1.9; senseFly Parrot Group: Cheseaux-sur-Lausanne, Switzerland, 2018.

- Martins, G.B.; La Rosa, L.E.C.; Happ, P.N.; Filho, L.C.T.C.; Santos, C.J.F.; Feitosa, R.Q.; Ferreira, M.P. Deep learning-based tree species mapping in a highly diverse tropical urban setting. Urban For. Urban Green. 2021, 64, 127241.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. In Proceedings of the 3rd International Conference on Learning Representations, ICLR 2015—Conference Track Proceedings, San Diego, CA, USA, 7–9 May 2015.

- Jiang, Y.; Liu, W.; Wu, C.; Yao, H. Multi-scale and multi-branch convolutional neural network for retinal image segmentation. Symmetry 2021, 13, 365.

- Chen, L.C.; Papandreou, G.; Kokkinos, I.; Murphy, K.; Yuille, A.L. Semantic Image Segmentation with Deep Convolutional Nets and Fully Connected CRFs. arXiv 2014, arXiv:1412.7062.

- Chen, L.C.; Papandreou, G.; Kokkinos, I.; Murphy, K.; Yuille, A.L. DeepLab: Semantic image segmentation with deep convolutional nets, atrous convolution, and fully connected CRFs. IEEE Trans. Pattern Anal. Mach. Intell. 2018, 40, 834–848.

- Chen, L.C.; Zhu, Y.; Papandreou, G.; Schroff, F.; Adam, H. Encoder-decoder with atrous separable convolution for semantic image segmentation. In Lecture Notes in Computer Science (Including Subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics); Springer: Berlin/Heidelberg, Germany, 2018; Volume 11211 LNCS, pp. 833–851.

- Chollet, F. Xception: Deep Learning with Depthwise Separable Convolutions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017.

- Zhao, H.; Shi, J.; Qi, X.; Wang, X.; Jia, J. Pyramid Scene Parsing Network. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 6230–6239.

- Krestenitis, M.; Orfanidis, G.; Ioannidis, K.; Avgerinakis, K.; Vrochidis, S.; Kompatsiaris, I. Oil spill identification from satellite images using deep neural networks. Remote Sens. 2019, 11, 1762.

- Cui, B.; Fei, D.; Shao, G.; Lu, Y.; Chu, J. Extracting raft aquaculture areas from remote sensing images via an improved U-net with a PSE structure. Remote Sens. 2019, 11, 2053.

- Milletari, F.; Navab, N.; Ahmadi, S.A. V-Net: Fully convolutional neural networks for volumetric medical image segmentation. In Proceedings of the 2016 4th International Conference on 3D Vision, Stanford, CA, USA, 25–28 October 2016; pp. 565–571.

- Ma, J. Segmentation Loss Odyssey. arXiv 2020, arXiv:2005.13449.

- Lan, M.; Zhang, Y.; Zhang, L.; Du, B. Global context based automatic road segmentation via dilated convolutional neural network. Inf. Sci. 2020, 535, 156–171.

- Kingma, D.P.; Ba, J.L. Adam: A method for stochastic optimization. In Proceedings of the 3rd International Conference on Learning Representations, ICLR 2015—Conference Track Proceedings, San Diego, CA, USA, 7–9 May 2015; pp. 1–15.

- Paoletti, M.E.; Haut, J.M.; Plaza, J.; Plaza, A. Deep learning classifiers for hyperspectral imaging: A review. ISPRS J. Photogramm. Remote Sens. 2019, 158, 279–317.

| Path | Unit | Kernel Size (k), Feature Map (fm) | Output Size (Width × Height × Channels) |
|---|---|---|---|
| Input | | | 512 × 512 × 3 |
| Encoder | ZeroPadding2D | | 518 × 518 × 3 |
| | Conv2D | k = (7 × 7), fm = 64 | |
| | Batch normalization + ReLU | k = (3 × 3), fm = 64 | 256 × 256 × 64 |
| | ZeroPadding2D | fm = 64 | 258 × 258 × 64 |
| | MaxPooling2D | k = (3 × 3), fm = 64 | 128 × 128 × 64 |
| | Convolutional block + 2 identity blocks | | 128 × 128 × 256 |
| | Convolutional block + 3 identity blocks | | 64 × 64 × 512 |
| | Convolutional block + 5 identity blocks | | 32 × 32 × 1024 |
| | Convolutional block + identity blocks | | 16 × 16 × 2048 |
| Bottleneck | Conv2D | | 16 × 16 × 512 |
| | Batch normalization + ReLU | | 16 × 16 × 512 |
| | Conv2D | | 16 × 16 × 512 |
| | Batch normalization + ReLU | | 16 × 16 × 512 |
| | Conv2D | | 16 × 16 × 2048 |
| | Batch normalization | | 16 × 16 × 2048 |
| Decoder | Upsampling2D | | 32 × 32 × 2048 |
| | Decoder block | | 32 × 32 × 2048 |
| | Concatenate_1 | | 32 × 32 × 3072 |
| | Upsampling2D | | 64 × 64 × 3072 |
| | Decoder block | | 64 × 64 × 1024 |
| | Concatenate_2 | | 64 × 64 × 1536 |
| | Upsampling2D | | 128 × 128 × 1536 |
| | Decoder block | | 128 × 128 × 512 |
| | Concatenate_3 | | 128 × 128 × 768 |
| | Upsampling2D | | 256 × 256 × 768 |
| | Decoder block | | 256 × 256 × 256 |
| | Concatenate_4 | | 256 × 256 × 320 |
| | Upsampling2D | | 512 × 512 × 320 |
| | Decoder block | | 512 × 512 × 64 |
| Output | Conv2D + sigmoid | k = (1 × 1), fm = 1 | 512 × 512 × 1 |
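The spatial sizes in the encoder stem and the channel counts after each concatenation in the table above follow from simple arithmetic, which makes them easy to sanity-check. The plain-Python sketch below reproduces them. It assumes, since the table does not state strides, that the 7 × 7 convolution and the 3 × 3 max pooling use stride 2 with no padding beyond the explicit ZeroPadding2D layers (as in the standard ResNet-50 stem), and that each Upsampling2D doubles width and height; the function names are hypothetical helpers, not part of any library.

```python
from typing import List, Tuple


def conv_out(size: int, kernel: int, stride: int, pad: int = 0) -> int:
    """Width/height after a convolution or pooling window (floor division)."""
    return (size + 2 * pad - kernel) // stride + 1


def trace_encoder_stem(size: int = 512) -> List[Tuple[str, int]]:
    """Spatial size after each stem layer (stride 2 for conv and pool is assumed)."""
    steps = []
    size += 2 * 3  # ZeroPadding2D: 3 pixels on each side (512 -> 518)
    steps.append(("ZeroPadding2D", size))
    size = conv_out(size, kernel=7, stride=2)  # Conv2D, 7 x 7 kernel
    steps.append(("Conv2D", size))
    size += 2 * 1  # ZeroPadding2D: 1 pixel on each side
    steps.append(("ZeroPadding2D", size))
    size = conv_out(size, kernel=3, stride=2)  # MaxPooling2D, 3 x 3 window
    steps.append(("MaxPooling2D", size))
    return steps


def trace_decoder(bottleneck_size: int = 16) -> List[Tuple[int, int]]:
    """(spatial size, channels) after each Concatenate_i in the decoder.

    Channel pairs are read off the table: the decoder-block output channels
    plus the channels of the encoder feature map at the same resolution.
    """
    pairs = [(2048, 1024), (1024, 512), (512, 256), (256, 64)]
    size = bottleneck_size
    out = []
    for decoder_ch, skip_ch in pairs:
        size *= 2  # Upsampling2D doubles width and height
        out.append((size, decoder_ch + skip_ch))
    return out


if __name__ == "__main__":
    for name, s in trace_encoder_stem():
        print(f"{name}: {s} x {s}")
    for s, c in trace_decoder():
        print(f"Concatenate: {s} x {s} x {c}")
```

Running it prints the sizes listed in the table: 518, 256, 258, and 128 in the stem, and 3072, 1536, 768, and 320 channels after the four concatenations at 32, 64, 128, and 256 pixels.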

| Model | Backbone | Trainable Parameters (millions) | Training Time per Epoch (min) | Test Time per Image (s) |
|---|---|---|---|---|
| U-Net | ResNet-50 | ~157.280 | ~75 | ~0.17 |
| U-Net | VGG-16 | ~25.858 | ~43 | ~0.1 |
| DeepLabV3+ | ResNet-50 | ~17.795 | ~25 | ~0.07 |
| DeepLabV3+ | Xception | ~21.558 | ~29 | ~0.09 |
| PSPNet | ResNet-50 | ~46.631 | ~65 | ~0.14 |
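The per-image test times in the table translate into a rough inference budget for a large orthomosaic. The sketch below assumes the mosaic is cut into non-overlapping 512 × 512 tiles (the model input size) with partial edge tiles counted as full tiles, and that the reported times apply unchanged to every tile on the same hardware. The 20,000 × 20,000 pixel mosaic is a hypothetical example, not a dataset from this study, and I/O, tiling, and mosaicking overheads are ignored.

```python
import math

# Approximate test times per 512 x 512 image (seconds), copied from the table.
TEST_TIME_S = {
    ("U-Net", "ResNet-50"): 0.17,
    ("U-Net", "VGG-16"): 0.10,
    ("DeepLabV3+", "ResNet-50"): 0.07,
    ("DeepLabV3+", "Xception"): 0.09,
    ("PSPNet", "ResNet-50"): 0.14,
}


def tiles_needed(width_px: int, height_px: int, tile: int = 512) -> int:
    """Non-overlapping tiles covering the mosaic; partial edge tiles count as full."""
    return math.ceil(width_px / tile) * math.ceil(height_px / tile)


def inference_minutes(n_tiles: int, seconds_per_tile: float) -> float:
    """Total prediction time in minutes, ignoring I/O and tiling overhead."""
    return n_tiles * seconds_per_tile / 60.0


if __name__ == "__main__":
    n = tiles_needed(20_000, 20_000)  # hypothetical mosaic size
    for (model, backbone), t in sorted(TEST_TIME_S.items(), key=lambda kv: kv[1]):
        print(f"{model} ({backbone}): {inference_minutes(n, t):.1f} min for {n} tiles")
```

For the hypothetical mosaic (1,600 tiles), this gives roughly 1.9 min for DeepLabV3+ with ResNet-50 and about 4.5 min for U-Net with ResNet-50. Because the table values are rounded approximations, such estimates are only indicative.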

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Gibril, M.B.A.; Shafri, H.Z.M.; Shanableh, A.; Al-Ruzouq, R.; Wayayok, A.; Hashim, S.J. Deep Convolutional Neural Network for Large-Scale Date Palm Tree Mapping from UAV-Based Images. Remote Sens. 2021, 13, 2787. https://doi.org/10.3390/rs13142787

Gibril MBA, Shafri HZM, Shanableh A, Al-Ruzouq R, Wayayok A, Hashim SJ. Deep Convolutional Neural Network for Large-Scale Date Palm Tree Mapping from UAV-Based Images. Remote Sensing. 2021; 13(14):2787. https://doi.org/10.3390/rs13142787

Chicago/Turabian Style: Gibril, Mohamed Barakat A., Helmi Zulhaidi Mohd Shafri, Abdallah Shanableh, Rami Al-Ruzouq, Aimrun Wayayok, and Shaiful Jahari Hashim. 2021. "Deep Convolutional Neural Network for Large-Scale Date Palm Tree Mapping from UAV-Based Images" Remote Sensing 13, no. 14: 2787. https://doi.org/10.3390/rs13142787

APA Style: Gibril, M. B. A., Shafri, H. Z. M., Shanableh, A., Al-Ruzouq, R., Wayayok, A., & Hashim, S. J. (2021). Deep Convolutional Neural Network for Large-Scale Date Palm Tree Mapping from UAV-Based Images. Remote Sensing, 13(14), 2787. https://doi.org/10.3390/rs13142787