1. Introduction

Unmanned aerial vehicles (UAVs) have been widely used in agriculture, including crop monitoring [1,2], crop yield assessment [3,4], and plant protection [5,6,7]. Plant protection, especially pesticide spraying to control pests and diseases, is an important part of agricultural production. Compared with conventional ground-based plant protection equipment, plant protection UAVs offer clear advantages in terrain adaptation and efficiency [8].

However, obstacles in farmland pose serious challenges to the safe and autonomous flight of the plant protection UAV, and considerable research has addressed this problem [9]. With suitable sensors, reliable control algorithms, and pre-measured obstacle locations, autonomous flight can be realized to a certain extent [10,11]. The primary requirement for safe and autonomous flight is the ability to obtain environmental information and to actively perceive and understand the environment. Common sensors such as millimeter wave (MMV) radar [12], LIDAR [13], ultrasonic sensors [14], and infrared rangefinders [15] have been widely used to detect obstacles. With the development of image processing and airborne computing, monocular cameras [16] and binocular cameras [17] have been used on UAVs to help acquire environmental information. Convolutional neural networks [18] and other deep learning methods [19,20,21] have shown high performance in obstacle detection and obstacle avoidance path planning. For example, YOLO [22] and SSD [23] have been applied to target detection and classification, and single-stream recurrent convolutional neural networks (SSRCNN) and deep recurrent convolutional neural networks (DRCNN) have been used to detect and render salient objects in images [24]. To further improve the accuracy of environmental information acquisition, combinations of multiple sensors have been studied, with data fusion designed around the characteristics of each sensor [25,26].

After the environmental information has been obtained, the path planning problem for the plant protection UAV must also be solved. Many studies have addressed this issue, including sampling-based techniques for UAV path planning such as cell decomposition methods [27], roadmap methods [28], and potential field methods [29]. Artificial intelligence techniques can also be effective for UAV path planning [30,31].

In summary, a great deal of research has been carried out on UAV environment awareness, obstacle detection, and path planning. However, improving the obstacle avoidance capability of the plant protection UAV in unstructured farmland, and generating optimal obstacle avoidance paths that respect flight requirements, remains a major challenge. Therefore, we studied obstacle recognition based on the fusion of MMV radar and monocular camera data. On this basis, we developed an improved A* obstacle avoidance algorithm for the plant protection UAV that accounts for its flight requirements (high efficiency, smoothness, and continuity). The MMV radar measures distance accurately, and the monocular camera provides rich image information. Through data fusion, Canny edge detection, and morphological processing, the obstacle information (distance and contour) can be obtained. Based on this obstacle information and the flight requirements, the traditional A* algorithm was improved through a dynamic heuristic function, search point optimization, and inflection point optimization. This article aims to provide new ideas for further improving the safe and autonomous flight of the plant protection UAV in unstructured farmland and for promoting its application in a wider range of fields.

2. Materials and Methods

2.1. Experimental Platform

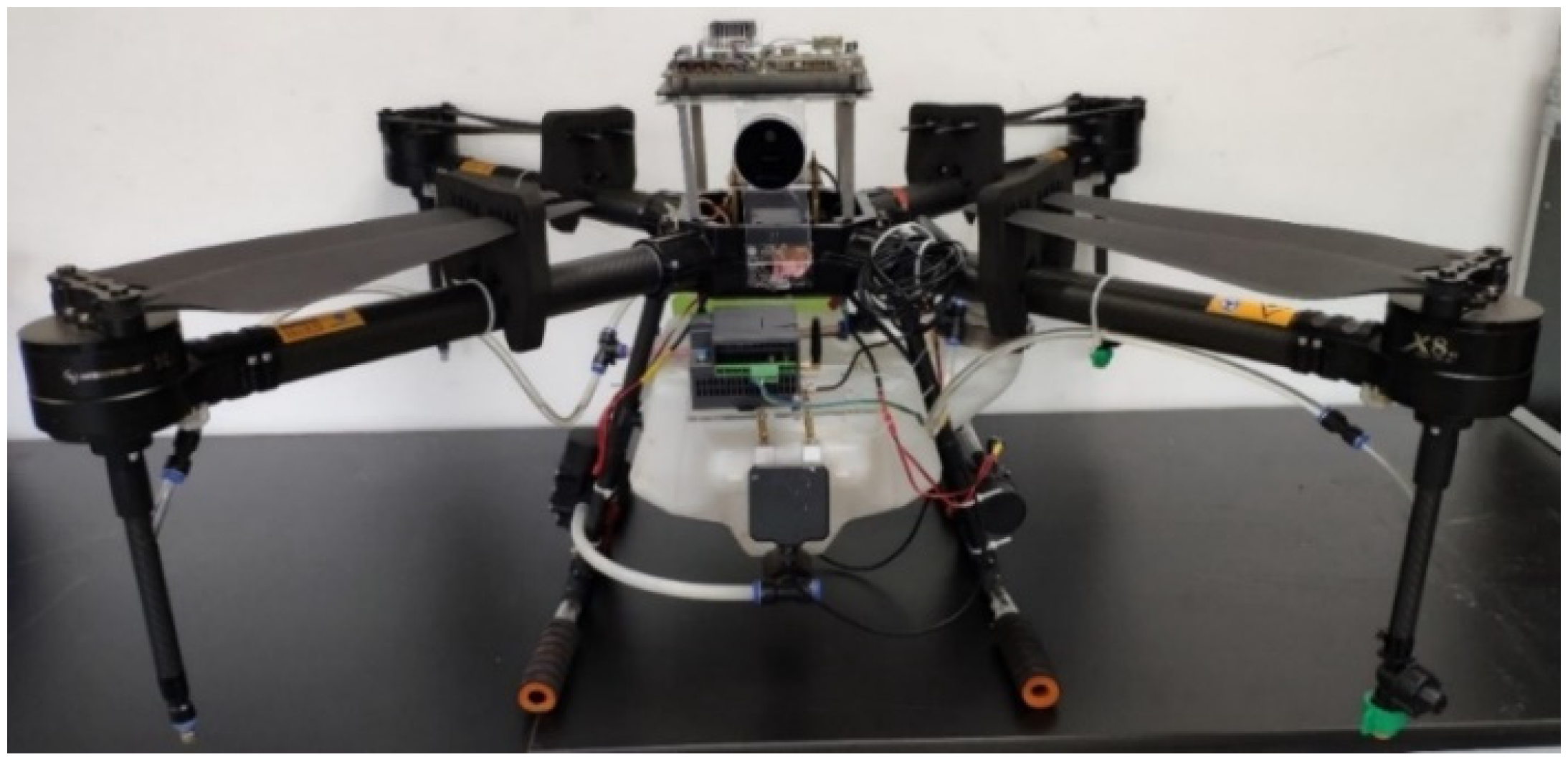

As shown in Figure 1, this study was conducted on an experimental platform composed of an NVIDIA Jetson TX2 airborne computer, an Intel RealSense L515 camera, an MMV radar, the plant protection UAV, a Pixhawk4 flight controller, and the QGroundControl ground station. The experimental platform parameters are shown in Table 1.

The experimental platform uses a two-tier flight control system, consisting of an onboard computer and a flight controller, which provides reliability, stability, and flexibility for expansion. The NVIDIA Jetson TX2 onboard computer serves as the main controller and offers extensive peripheral interfaces. It runs the high-level control programs, including MMV radar and monocular camera data fusion, obstacle information extraction, and the improved A* obstacle avoidance algorithm. The Pixhawk4 flight controller serves as the sub-controller. Owing to sustained contributions from the open source community, the Pixhawk4 has proved to be a reliable standalone flight control platform for developing and implementing customized UAV applications. Through the Pixhawk4 offboard interface and the MAVLink communication protocol, it receives obstacle avoidance paths directly from the NVIDIA Jetson TX2 and controls the plant protection UAV to execute the corresponding flight without any hardware compatibility modifications. The QGroundControl ground station was used to monitor flights and record flight logs in real time. The two-tier control system integrates the NVIDIA Jetson TX2, Pixhawk4 flight controller, Intel RealSense L515, MMV radar, and plant protection UAV into a single experimental platform.

2.2. MMV Radar and Monocular Camera Data Fusion

According to size, the obstacles in Chinese unstructured farmland can be classified as micro obstacles (e.g., inclined cables, power grids), small and medium obstacles (e.g., trees, wire poles), large obstacles (e.g., shelter forests, high-voltage towers), and non-fixed or visually distorting obstacles (e.g., birds, ponds) [32]. Most obstacles appearing in farmland are micro, small, and medium obstacles [31]. To obtain accurate obstacle information and improve the obstacle avoidance capability of the plant protection UAV, we studied a data fusion method for the MMV radar and monocular camera. Because the two sensors differ in installation position on the plant protection UAV and in sampling frequency, they must be fused in both space and time to match the same target and obtain accurate obstacle information.

In this research, a fusion model of the MMV radar and monocular camera was built. The spatial data fusion was realized according to the transformation relations between radar coordinate system, world coordinate system, camera coordinate system, image coordinate system, and pixel coordinate system. The time fusion was realized by the time synchronization method.

2.2.1. Spatial Fusion

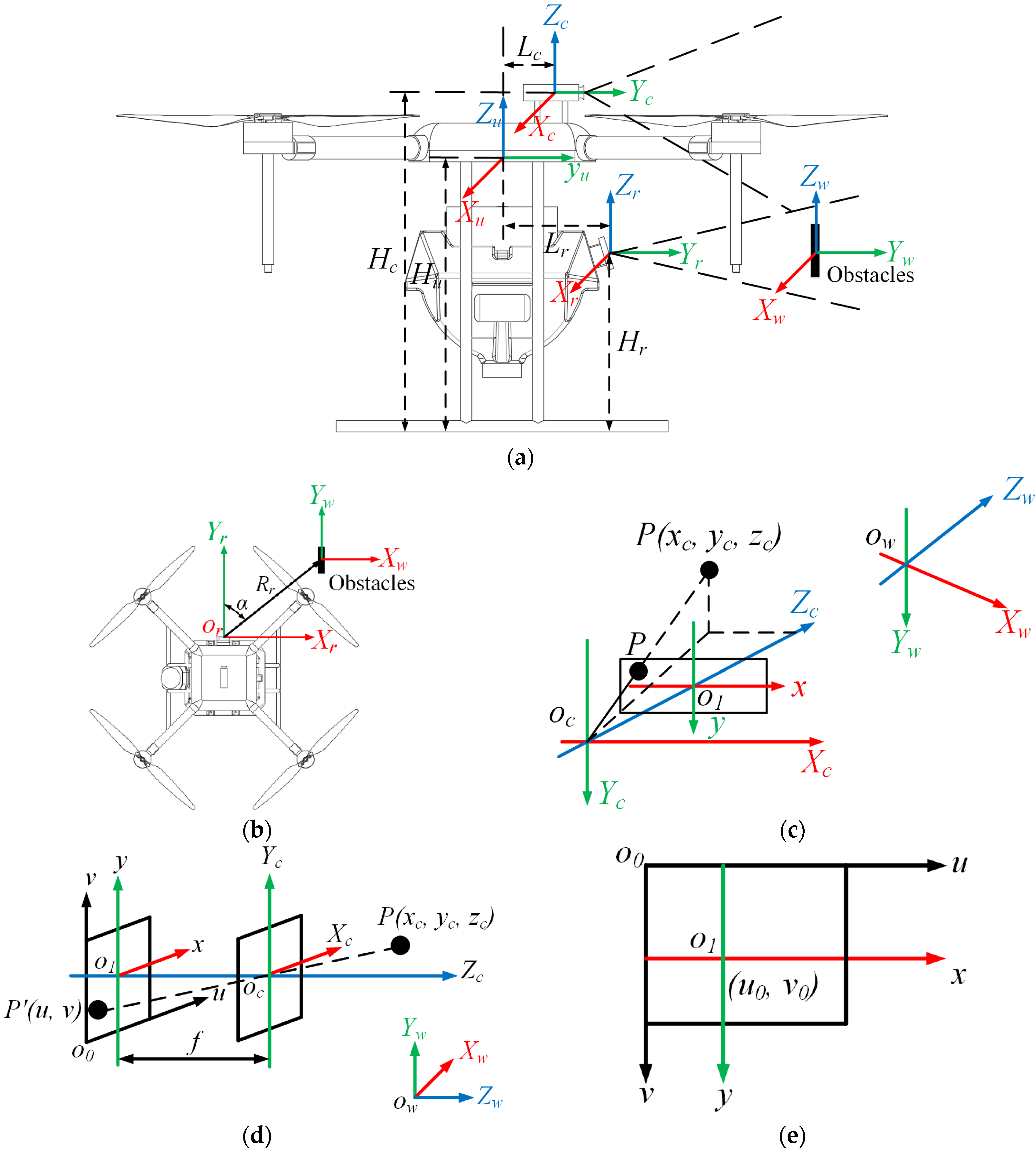

As shown in Figure 2, the MMV radar and monocular camera were mounted in different positions on the plant protection UAV, so the projections of the same target in the radar and camera coordinate systems were not consistent. A spatial fusion model of the MMV radar and monocular camera was therefore required to relate the two sensors and achieve spatial data fusion of the same target.

As shown in Figure 2a, (Xr, Yr, Zr), (Xu, Yu, Zu), (Xc, Yc, Zc), and (Xw, Yw, Zw) are the coordinate systems of the MMV radar, UAV, monocular camera, and world, respectively. In this article, the origin of the world coordinate system was placed at the center of the obstacle. The flight controller mounting point of the plant protection UAV was used as the origin of the UAV coordinate system; the nose direction is the positive Y-axis, the Z-axis points vertically upwards, and the X-axis follows from the right-hand rule. The MMV radar was mounted on the UAV tank, and the monocular camera was mounted above the UAV. The YOZ planes of the MMV radar, monocular camera, and UAV coordinate systems lie in the same plane. The height from the UAV coordinate system origin to the ground is Hu. The height from the MMV radar coordinate system origin to the ground is Hr, and its horizontal distance to the UAV coordinate system origin is Lr. The height from the monocular camera coordinate system origin to the ground is Hc, and its horizontal distance to the UAV coordinate system origin is Lc.

As shown in Figure 2b, when the MMV radar scans an obstacle, the conversion between the MMV radar coordinate system and the world coordinate system is given by Equation (1).

where α is the azimuth of the obstacle; Rr is the distance between the MMV radar and the obstacle; and xw, yw, and zw are the coordinates of the obstacle in the world coordinate system.

The relationship between the world coordinate system and the pixel coordinate system has been extensively researched [33,34,35] and is not repeated in this article. Combined with Figure 2, the transformation from the obstacle P(xw, yw, zw) in the world coordinate system to the projected point P’(u, v) in the pixel coordinate system is given by Equation (2).

where dx and dy are the physical sizes of each pixel along the x-axis and y-axis of the image coordinate system, respectively; (u0, v0) are the coordinates of the image coordinate system origin in the pixel coordinate system; f is the focal length of the monocular camera; R is a 3 × 3 orthonormal rotation matrix that describes the rotation of the coordinate system about the X, Y, and Z axes; and T is a translation vector that describes the translation between the coordinate system origins.
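For reference, the textbook pinhole projection that is consistent with the symbols defined above can be written as follows. This is the standard form and is given here as an assumption about the shape of Equation (2); the scale factor z_c (the depth of the point along the camera optical axis) is a symbol introduced only for this illustration.

```latex
z_c \begin{bmatrix} u \\ v \\ 1 \end{bmatrix}
=
\begin{bmatrix}
  \dfrac{1}{d_x} & 0 & u_0 \\
  0 & \dfrac{1}{d_y} & v_0 \\
  0 & 0 & 1
\end{bmatrix}
\begin{bmatrix}
  f & 0 & 0 & 0 \\
  0 & f & 0 & 0 \\
  0 & 0 & 1 & 0
\end{bmatrix}
\begin{bmatrix}
  R & T \\
  \mathbf{0}^{\mathsf{T}} & 1
\end{bmatrix}
\begin{bmatrix} x_w \\ y_w \\ z_w \\ 1 \end{bmatrix}
```

In this form, the product of the first two matrices depends only on the camera itself, while the third matrix depends only on how the camera is posed relative to the world coordinate system.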

Combining Equations (1) and (2), the obstacle coordinates under the data fusion of the MMV radar and monocular camera can be obtained according to Equation (3).

where M1 is the internal parameter matrix of the monocular camera; and M2 is the external parameter matrix of the monocular camera.
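The spatial fusion chain can be illustrated with a minimal Python sketch: a radar reading is converted to Cartesian coordinates and then projected into the image with the pinhole model. The function names, the azimuth convention (measured from the forward Y-axis, positive toward +X, target at the height of the sensor), and the example rotation matrix are assumptions made for illustration and are not taken from Equations (1) to (3).

```python
import math


def radar_to_cartesian(range_m, azimuth_rad):
    """Convert a radar range/azimuth reading to (x, y, z) in metres.

    Assumed convention (hypothetical): azimuth is measured from the forward
    Y-axis in the horizontal plane, positive toward +X, and z = 0.
    """
    x = range_m * math.sin(azimuth_rad)
    y = range_m * math.cos(azimuth_rad)
    return (x, y, 0.0)


def project_to_pixel(p_w, f, dx, dy, u0, v0, R, T):
    """Project a 3D point to pixel coordinates with the pinhole model.

    R (3x3 nested list) and T (length-3 list) are the external parameters;
    f, dx, dy, u0, v0 are the internal parameters.
    """
    # External parameters: reference frame -> camera frame.
    x_c, y_c, z_c = (
        sum(R[i][k] * p_w[k] for k in range(3)) + T[i] for i in range(3)
    )
    if z_c <= 0:
        raise ValueError("point is behind the camera")
    # Internal parameters: camera frame -> pixel coordinates.
    u = (f / dx) * x_c / z_c + u0
    v = (f / dy) * y_c / z_c + v0
    return (u, v)


# Example rotation (assumed): reference frame is x right, y forward, z up;
# camera frame is x right, y down, z forward.
R_EXAMPLE = [[1, 0, 0], [0, 0, -1], [0, 1, 0]]
T_EXAMPLE = [0.0, 0.0, 0.0]

if __name__ == "__main__":
    point = radar_to_cartesian(4.0, 0.0)  # obstacle 4 m straight ahead
    print(project_to_pixel(point, 0.004, 1e-5, 1e-5, 320, 240,
                           R_EXAMPLE, T_EXAMPLE))
```

An obstacle straight ahead of the camera projects to the principal point (u0, v0), which gives a quick sanity check on a calibrated set of parameters.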

To achieve spatial data fusion between the MMV radar and monocular camera, the camera must be calibrated. Among camera calibration methods, the checkerboard calibration method is widely used in computer vision because of its simple operation and high calibration accuracy [34]. This article uses the monocular camera calibration toolbox in MATLAB. Each square of the checkerboard used for calibration measures 20 mm × 20 mm.

With the above calibration method, the internal parameter M1 can be obtained. The relative positions of the MMV radar and monocular camera on the plant protection UAV must also be determined to complete the spatial data fusion. These relative positions can be determined from Equation (4).

where Tr is the translation vector of the MMV radar relative to the plant protection UAV coordinate system; and Tc is the translation vector of the monocular camera relative to the plant protection UAV coordinate system.

2.2.2. Time Fusion

Because the MMV radar and monocular camera have different sampling frequencies, the two sensors collect data at different times and their data are not synchronized. To ensure that the MMV radar and monocular camera capture the distance and image of the obstacle at the same moment and location, the two sensors need to be time-synchronized.

The sampling frequencies of the MMV radar and monocular camera were 90 Hz and 30 fps, respectively. The lower sampling frequency of the monocular camera was used as the reference for time synchronization and data fusion. As shown in Figure 3, the time fusion model consists mainly of an MMV radar data acquisition thread, a monocular camera data acquisition thread, and a data processing thread. The MMV radar and monocular camera are set to start working at the same time, and an event trigger with a frequency of 30 Hz reads the distance information from the MMV radar data stream. The distance information is used to determine whether there is an obstacle in front of the UAV. If an obstacle is present and the distance between the UAV and the obstacle is less than or equal to the set safe distance threshold, the monocular camera thread is triggered to acquire the current image. The distance and image data are then added to a buffer queue, from which the data processing thread reads them. The image data are used for obstacle contour detection. Finally, the distance and contour information of the obstacle is sent to the improved A* obstacle avoidance algorithm of the plant protection UAV, which is described in detail in Section 2.4. The time fusion model therefore operates at 30 Hz, which keeps the radar and camera data synchronized.
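The trigger logic of the time fusion model can be sketched in a few lines of Python. This is a simplified, single-threaded illustration under stated assumptions: the acquisition threads are replaced by a plain loop, `grab_frame` is a hypothetical callable standing in for the camera thread, and the 30 Hz trigger is modeled by taking every third sample of the 90 Hz radar stream.

```python
from collections import deque

RADAR_HZ = 90    # radar sampling frequency stated in the text
TRIGGER_HZ = 30  # event trigger frequency, matched to the camera


def fuse_in_time(radar_distances, grab_frame, safe_distance_m):
    """Pair radar distances with camera frames at the trigger frequency.

    radar_distances: distances (m) sampled at RADAR_HZ; None means no target.
    grab_frame: hypothetical callable(tick) returning the current image.
    Returns a buffer queue of (time_s, distance_m, image) tuples.
    """
    step = RADAR_HZ // TRIGGER_HZ  # read one radar sample out of every three
    buffer_queue = deque()
    for tick, index in enumerate(range(0, len(radar_distances), step)):
        distance = radar_distances[index]
        # Trigger the camera only when an obstacle is within the threshold.
        if distance is not None and distance <= safe_distance_m:
            buffer_queue.append((tick / TRIGGER_HZ, distance, grab_frame(tick)))
    return buffer_queue


if __name__ == "__main__":
    readings = [10, 9, 8, 5, 4, 3, 2.5, 2, 1]  # nine samples = 0.1 s of radar data
    queue = fuse_in_time(readings, lambda tick: f"frame-{tick}", 3.0)
    for item in queue:
        print(item)
```

In a threaded implementation, a thread-safe queue would take the place of the `deque` so that the data processing thread can consume entries while acquisition continues.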

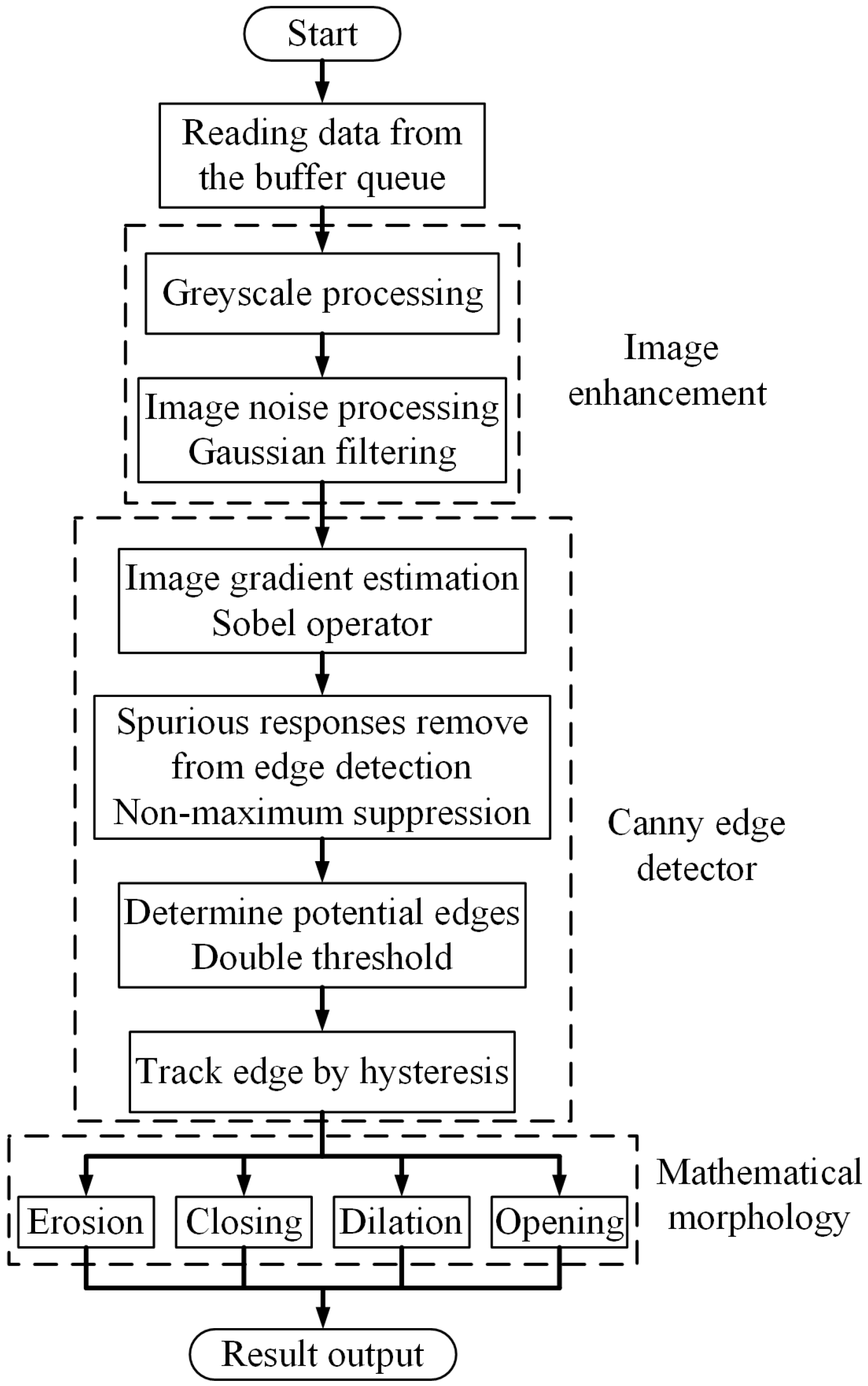

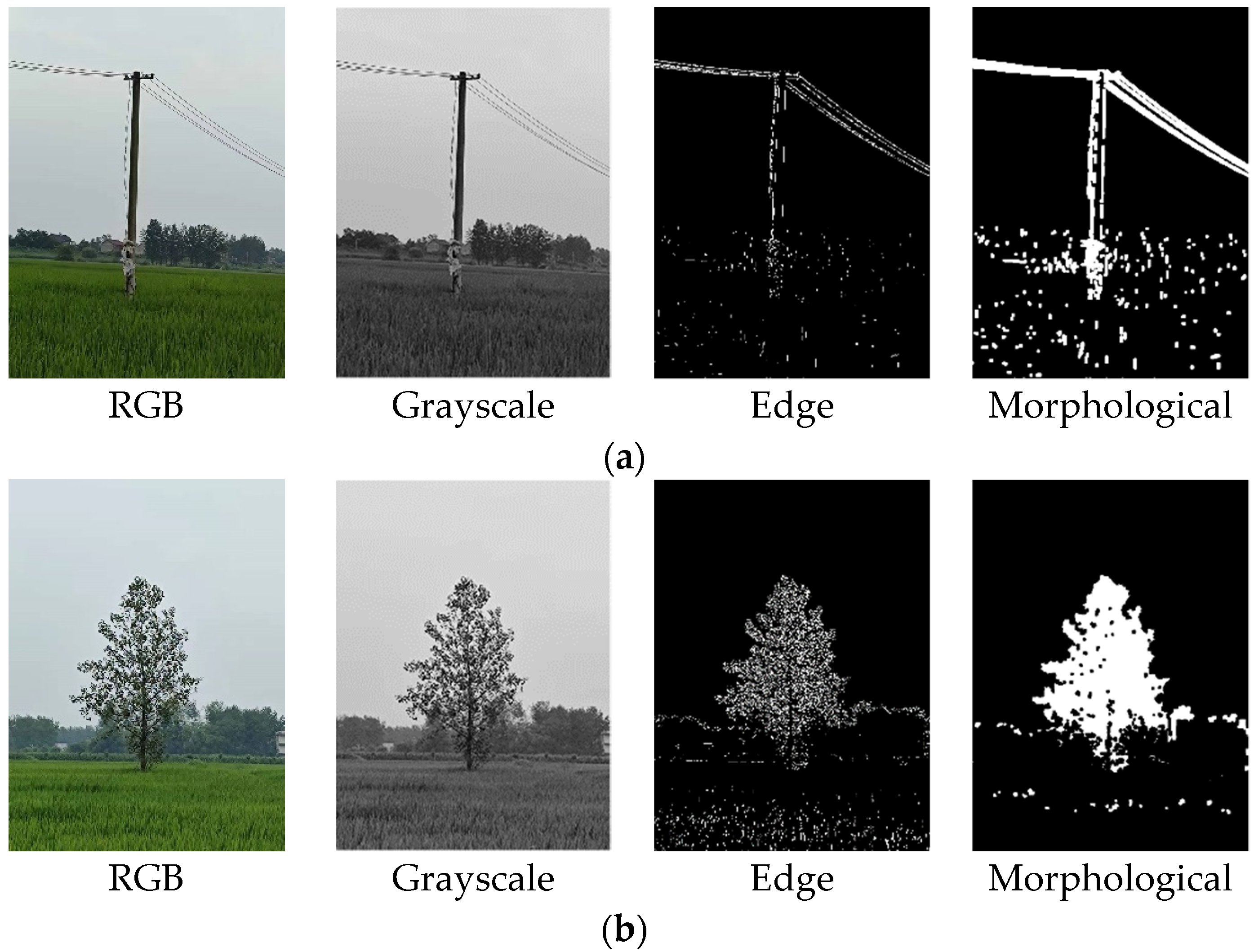

2.3. Obstacle Contour Extraction

As described above, the distance and image of the obstacle can be obtained from the spatial and time fusion of the MMV radar and monocular camera. Ideally, the obstacle captured by the monocular camera would be completely separable from its surroundings. In practice, the complexity of the scene and the interference in the captured images make it difficult to identify obstacle contours accurately. Therefore, this article uses the Canny edge detection algorithm [36,37,38] and mathematical morphological methods [39,40,41] to extract the contours of obstacles. The process is shown in Figure 4.

The Canny edge detection algorithm usually operates on grayscale images. The output of the monocular camera used in this article is an RGB image, so the image must first be converted to grayscale. As shown in Equation (5), a weighted sum was used for the grayscale conversion.

Gray(i, j) = αR(i, j) + βG(i, j) + γB(i, j) (5)

where R, G, and B are the red, green, and blue color channels, respectively; i and j give the pixel position; and α, β, and γ are the weights of the color channels, which satisfy α + β + γ = 1. In this research, weights derived from human visual perception were used [42,43], with α, β, and γ equal to 0.299, 0.587, and 0.114, respectively.
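The weighted grayscale conversion can be illustrated with a short Python sketch. The function name and the nested-list image representation are assumptions chosen to keep the example free of external libraries; only the three weights come from the text.

```python
# Weights for the red, green, and blue channels as given in the text.
ALPHA, BETA, GAMMA = 0.299, 0.587, 0.114


def to_gray(rgb_image, weights=(ALPHA, BETA, GAMMA)):
    """Convert an RGB image to grayscale by weighted summation.

    rgb_image: list of rows, each row a list of (R, G, B) tuples.
    Returns a list of rows of grayscale intensities.
    """
    alpha, beta, gamma = weights
    return [
        [alpha * r + beta * g + gamma * b for (r, g, b) in row]
        for row in rgb_image
    ]


if __name__ == "__main__":
    image = [[(255, 255, 255), (255, 0, 0)],
             [(0, 255, 0), (0, 0, 255)]]
    for row in to_gray(image):
        print([round(value, 3) for value in row])
```

Because the weights sum to 1, a white pixel keeps its full intensity, while pure green contributes far more to the gray level than pure blue.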

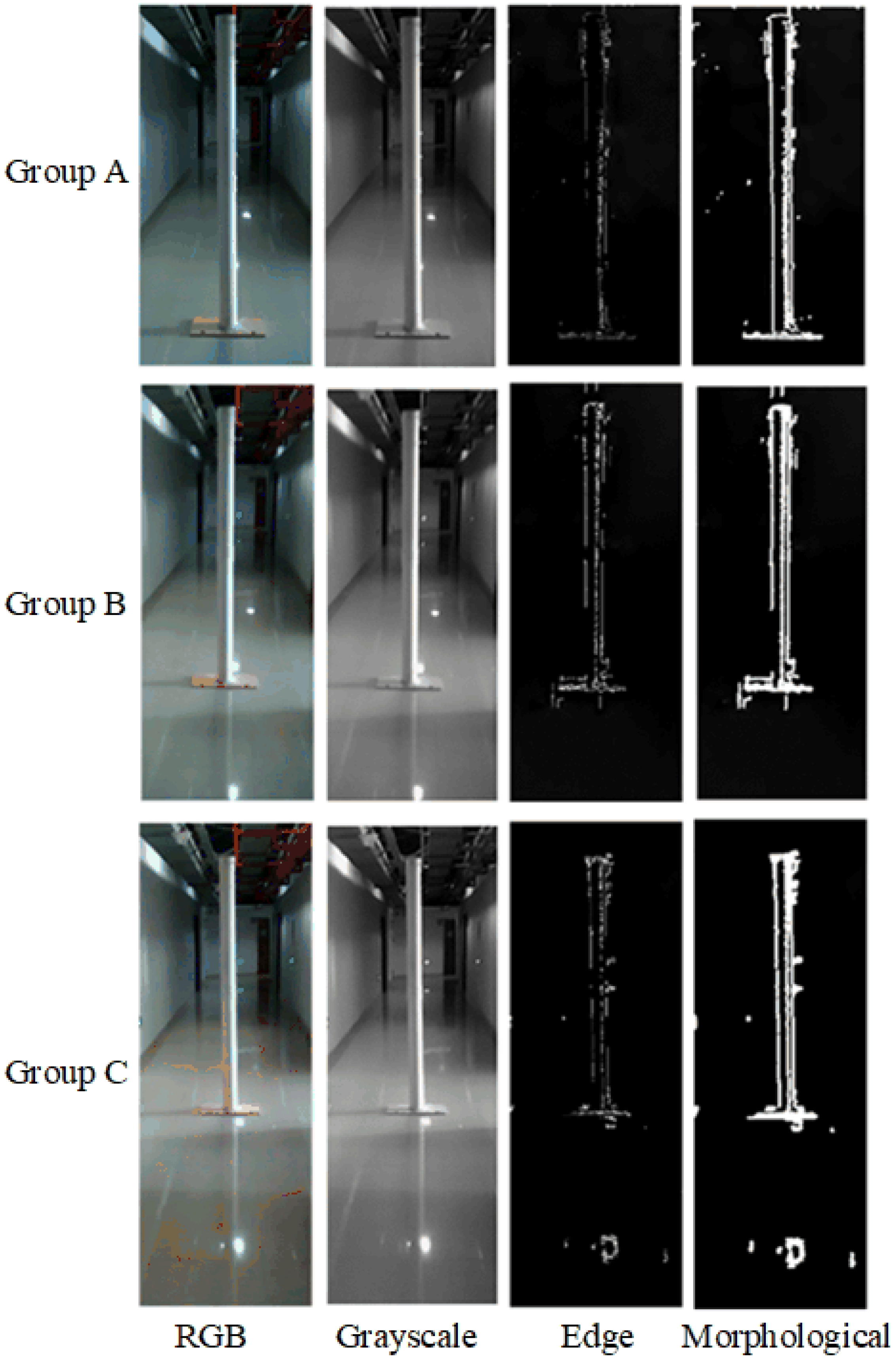

The above method removes interference from the obstacle image, enhances the obstacle information, and makes the obstacle contours more visible. Before obstacle information was extracted in the field, validation experiments were carried out on the accuracy of the MMV radar and monocular camera data fusion and of the obstacle contour extraction. The obstacle used for the validation experiments was a manually placed column with a diameter of 110 mm and a height of 2100 mm. Previous research shows that the distance between the plant protection UAV and an obstacle is usually 2–5 m [31,32], and the rotors extend some distance beyond the airframe. Therefore, during the validation experiments, the distance from the MMV radar and monocular camera to the obstacle was set to 3–5 m. The validation experiments were divided into three groups by distance: the distances from the UAV to the obstacle in Groups A, B, and C were 3 m, 4 m, and 5 m, respectively. The accuracy of the obstacle information extraction was evaluated from the measured distance, height, and diameter of the obstacle.

After the validation experiments, the type and distribution of obstacles in unstructured farmland had to be considered for the field obstacle information extraction. As described above, most obstacles in unstructured farmland are micro, small, and medium obstacles. Therefore, wire poles and trees were used as obstacles in this article. In line with field operation parameters, the flight altitude was 2 m and the distance from the UAV to the obstacle was 3 m. The MMV radar and monocular camera data fusion and the obstacle contour extraction method were then used to extract obstacle information in the field.

2.4. Improved A* Obstacle Avoidance Algorithm

Based on the obtained obstacle information, this article presents an improved A* obstacle avoidance algorithm for obstacle avoidance path planning of the plant protection UAV. The traditional A* algorithm is a heuristic direct search method for finding shortest paths [44]. However, it searches many nodes, which lowers its efficiency. In addition, to guarantee the shortest path, the algorithm tends to produce many inflection points [45,46]. Applying the traditional A* algorithm directly to the plant protection UAV would therefore cause unsmooth and unstable flight.

To meet the flight requirements of high efficiency, smoothness, and continuity for the plant protection UAV, an improved A* obstacle avoidance algorithm is proposed in this article. The algorithm improves on the traditional A* algorithm in three steps.

In the first step, a dynamic heuristic function adjusts the weight of the estimated cost according to the distance between the current point and the target point. In the second step, the search points are optimized to reduce the number of search nodes. In the third step, the inflection points are optimized to reduce the number of turns as far as possible without increasing the path length. After these three steps, efficient obstacle avoidance path planning can be realized, and the path includes only the starting point, the ending point, and the key turning points. This reduces the number of turns made by the UAV during obstacle avoidance and improves both avoidance efficiency and flight stability.

2.4.1. Dynamic Heuristic Function

The evaluation function of the traditional A* obstacle avoidance algorithm is shown in Equation (6). With this function, the algorithm traverses many unnecessary nodes during the path search, which reduces the efficiency of the search.

f(n) = g(n) + h(n) (6)

where f(n) is the estimated cost from the initial point through the current point to the target point; g(n) is the actual cost from the initial point to the current point; and h(n) is the estimated cost of the shortest path from the current point to the target point.

Whether the shortest path is found depends on h(n). Let d(n) denote the actual cost of the shortest path from the current point to the target point. Three situations can then arise.

When h(n) < d(n), more nodes are searched and the search is less efficient, but the shortest path can be found. When h(n) > d(n), fewer nodes are searched and the search is more efficient, but the shortest path cannot be guaranteed. When h(n) = d(n), the shortest path is found with high efficiency. Both h(n) and d(n) depend on the position of the current point; when the current point is farther from the target point, h(n) is smaller than d(n).

As shown in Equation (7), this article uses a dynamic heuristic function as the first step of improving the traditional A* obstacle avoidance algorithm, based on the relative positions of the current point and the target point. When the current point is far from the target point, the weight of h(n) is increased to improve efficiency. As the current point approaches the target point, the weight of h(n) is reduced to ensure that the shortest path can be found.

where R is the distance from the initial point to the target point; and r is the distance from the current point to the target point.
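The behavior described above can be illustrated with a small Python sketch. The weighting used here, 1 + r/R, is an assumed form chosen only because it matches the description (a larger weight far from the target, approaching 1 near the target); it is not necessarily the expression used in Equation (7). The function name and the Euclidean estimate for h(n) are likewise assumptions.

```python
import math


def dynamic_f(g, current, target, start):
    """Evaluate f(n) = g(n) + w * h(n) with a distance-dependent weight w.

    Assumed weighting (hypothetical): w = 1 + r / R, where R is the distance
    from the initial point to the target point and r is the distance from
    the current point to the target point.
    """
    R = math.hypot(target[0] - start[0], target[1] - start[1])
    r = math.hypot(target[0] - current[0], target[1] - current[1])
    h = r  # Euclidean estimate of the remaining cost
    weight = 1.0 + r / R if R > 0 else 1.0
    return g + weight * h


if __name__ == "__main__":
    start, target = (0, 0), (3, 4)
    print(dynamic_f(0.0, start, target, start))   # far from target: weight is 2
    print(dynamic_f(5.0, target, target, start))  # at the target: f equals g
```

With this weighting the search is greedier at the beginning of the path and reverts to the ordinary A* evaluation function as r approaches zero.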

2.4.2. Search Grid Optimization

As described in Section 2.4.1, the first improvement to the traditional A* algorithm is a dynamic heuristic function that increases the efficiency of path planning. However, the A* algorithm with the dynamic heuristic function still searches all eight neighboring grids of the current point during path planning. As shown in Figure 5a, the blue grid is the current point, and n1 to n8 are the eight directions of the search grid. Depending on the direction from the current point to the target point, some of these eight neighboring grids are unnecessary, which wastes computation time and storage space.

Therefore, to further improve search efficiency, this article proposes a search point optimization method built on the dynamic heuristic function. According to the relative position of the current point and the target point, only four search directions are retained. The line connecting the current point and the target point forms an angle α with n1, with the clockwise direction taken as positive. The correspondence between α and the retained search directions is shown in Table 2.
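The idea of retaining four of the eight search directions can be sketched as follows. Since the mapping of Table 2 is not reproduced here, this sketch uses an assumed rule: keep the four neighbor offsets whose directions are angularly closest to the line from the current point to the target point. The function name, the ordering of the neighbor offsets, and this selection rule are illustrative assumptions and may differ from Table 2, in particular when the target lies exactly along one of the eight directions (ties are then broken by list order).

```python
import math

# Eight neighbor offsets (dx, dy); the ordering is an assumption.
NEIGHBORS = [(0, 1), (1, 1), (1, 0), (1, -1),
             (0, -1), (-1, -1), (-1, 0), (-1, 1)]


def reserved_directions(current, target, keep=4):
    """Return the `keep` neighbor offsets best aligned with the target."""
    goal_angle = math.atan2(target[1] - current[1], target[0] - current[0])

    def angular_gap(offset):
        # Smallest absolute angle between this offset and the goal direction.
        angle = math.atan2(offset[1], offset[0])
        gap = abs(angle - goal_angle) % (2 * math.pi)
        return min(gap, 2 * math.pi - gap)

    return sorted(NEIGHBORS, key=angular_gap)[:keep]


if __name__ == "__main__":
    print(reserved_directions((0, 0), (10, 3)))
```

Restricting expansion to these offsets halves the number of neighbors evaluated per node, which is the source of the reduction in search nodes.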

An extreme case is shown in Figure 5b, where the blue grid is the current point, the red grids are obstacles, and the green grid is the target point. Here the obstacles partially surround the current point, and no feasible path lies within the four search directions retained by the search point optimization method. In this case, the improved A* algorithm with only the dynamic heuristic function is re-enabled for path planning. Once a path from the current point has been found, the search point optimization method is resumed.

2.4.3. Inflection Points Optimization

As pointed out above, the traditional A* algorithm produces many inflection points in order to achieve the shortest path. However, the more turns the plant protection UAV makes, the lower its flight stability, especially when there is liquid in the tank [47,48,49]. Path planning for the plant protection UAV should therefore reduce the number of turns to ensure smooth and stable flight.

Building on the dynamic heuristic function and the search point optimization method, this study proposes an inflection point optimization method that reduces the number of inflection points as far as possible without increasing the path length, so that the obstacle avoidance path includes only the starting point, the ending point, and the key turning points. This reduces the number of turns during obstacle avoidance and improves both efficiency and flight stability. The inflection point optimization method is as follows.

The improved A* obstacle avoidance algorithm described in this article divides the environment into grid points, which are classified as obstacle points, non-obstacle points, and special points (the starting and ending points). In path planning, the starting point is used as the first parent node, and non-obstacle points are expanded from it with the search point optimization method. If an expanded non-obstacle point meets the requirements, it is promoted to a candidate point and placed in the candidate point matrix. According to the dynamic heuristic function, the best point is selected from the candidate point matrix and promoted to the next parent node. After the expansion reaches the ending point, the planned path can be recovered and plotted by following the parent nodes.

When the best point is selected from the candidate point matrix, its direction information indicates which parent node it was expanded from. The direction information of that parent node can then be obtained and used to determine the position of the next step. For example, if the direction information of the parent node B of the best point A is "right", the parent node B was expanded from the point on the right side of the best point A. If a straight-line path is desired, the next point C is the point to the left of the parent node B. The position of the next point C is computed, and the algorithm checks whether it belongs to the candidate point matrix. If the next point C is in the candidate point matrix and its cost is the same as that of the original point, the next point C replaces the original point. Otherwise, the original point continues to be used.
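The two ideas in this subsection, preferring a straight continuation when it costs the same and keeping only the key turning points, can be sketched in Python. The function names and the dictionary used for the candidate point matrix are assumptions made for illustration, and the sketch is a simplified reading of the procedure rather than the exact implementation.

```python
def pick_next(parent, current, candidates):
    """Choose the next point, preferring a straight line on equal cost.

    candidates: dict mapping candidate points (x, y) to their cost.
    The straight continuation is the point reached by repeating the
    parent -> current step once more.
    """
    best = min(candidates, key=candidates.get)
    straight = (2 * current[0] - parent[0], 2 * current[1] - parent[1])
    if straight in candidates and candidates[straight] == candidates[best]:
        return straight  # same cost, no turn needed
    return best


def key_points(path):
    """Reduce a grid path to its start, end, and turning points."""
    if len(path) < 3:
        return list(path)
    kept = [path[0]]
    for prev, cur, nxt in zip(path, path[1:], path[2:]):
        step_in = (cur[0] - prev[0], cur[1] - prev[1])
        step_out = (nxt[0] - cur[0], nxt[1] - cur[1])
        if step_in != step_out:  # direction changes here
            kept.append(cur)
    kept.append(path[-1])
    return kept


if __name__ == "__main__":
    print(pick_next((1, 1), (2, 1), {(3, 2): 5.0, (3, 1): 5.0}))
    path = [(1, 1), (2, 2), (3, 3), (4, 3), (5, 3), (5, 4)]
    print(key_points(path))
```

The number of inflection points of a path is then `len(key_points(path)) - 2`, which is one way to compute the inflection point metric used in the simulations.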

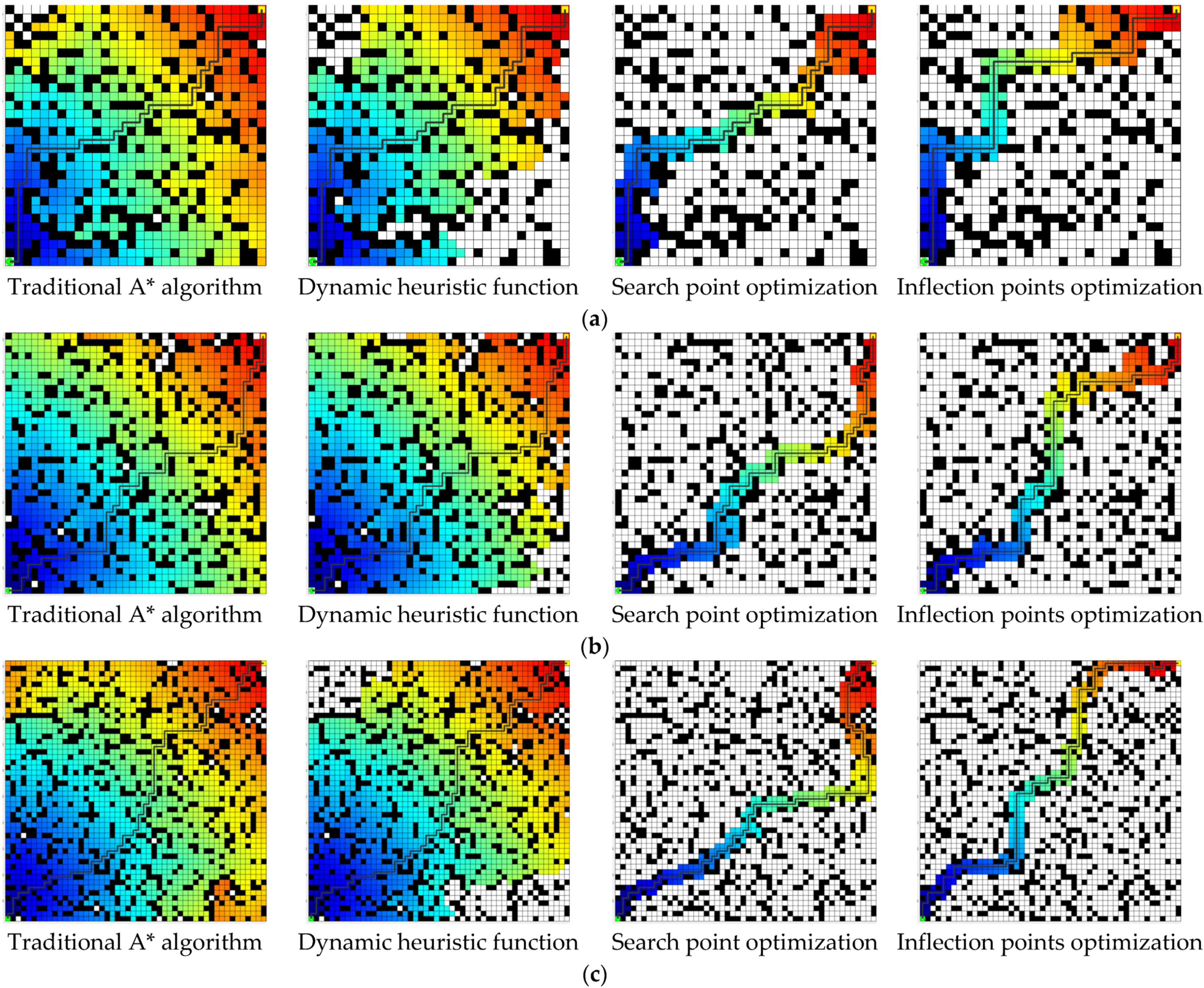

2.5. Improved A* Algorithm Performance Simulation

As described above, the improved A* obstacle avoidance algorithm improves on the traditional A* algorithm through the dynamic heuristic function, search point optimization, and inflection point optimization. Three simulation environments were created in MATLAB to compare the obstacle avoidance performance of the improved A* algorithm with that of the traditional A* algorithm.

Environments 1, 2, and 3 were 30 × 30, 40 × 40, and 50 × 50 grid maps, respectively. In each environment, the starting point was at coordinates (1, 1), the ending point was at the opposite corner, that is, (30, 30), (40, 40), and (50, 50), respectively, and obstacles were randomly distributed with a coverage of 30%. The data processing time, number of searched grids, path length, and number of inflection points were used as evaluation metrics for the performance of the different algorithms.
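A comparable test environment and a baseline search can be sketched in Python. This is not the MATLAB code used in the study: the function names, the random seed, the Euclidean heuristic, and the decision to allow diagonal moves between obstacles are all assumptions, and a random map generated this way is not guaranteed to contain a feasible path.

```python
import heapq
import math
import random


def make_obstacles(n, coverage=0.30, seed=0):
    """Randomly place obstacles on an n x n grid, keeping start and end free."""
    rng = random.Random(seed)
    free = [(x, y) for x in range(1, n + 1) for y in range(1, n + 1)
            if (x, y) not in ((1, 1), (n, n))]
    return set(rng.sample(free, round(coverage * n * n)))


def astar(n, obstacles, start, goal):
    """Traditional A* on an 8-neighborhood grid.

    Returns (path, searched), where path is None if no route exists and
    searched is the number of grid points expanded.
    """
    def h(p):
        return math.hypot(goal[0] - p[0], goal[1] - p[1])

    open_heap = [(h(start), 0.0, start)]
    g_cost = {start: 0.0}
    parent = {}
    closed = set()
    while open_heap:
        _, g_cur, cur = heapq.heappop(open_heap)
        if cur in closed:
            continue
        closed.add(cur)
        if cur == goal:
            path = [cur]
            while cur in parent:  # walk back through the parent nodes
                cur = parent[cur]
                path.append(cur)
            return path[::-1], len(closed)
        for dx in (-1, 0, 1):
            for dy in (-1, 0, 1):
                nxt = (cur[0] + dx, cur[1] + dy)
                if (dx, dy) == (0, 0) or nxt in obstacles:
                    continue
                if not (1 <= nxt[0] <= n and 1 <= nxt[1] <= n):
                    continue
                g_new = g_cur + math.hypot(dx, dy)
                if g_new < g_cost.get(nxt, math.inf):
                    g_cost[nxt] = g_new
                    parent[nxt] = cur
                    heapq.heappush(open_heap, (g_new + h(nxt), g_new, nxt))
    return None, len(closed)


def path_length(path):
    """Sum of Euclidean step lengths along the path."""
    return sum(math.hypot(b[0] - a[0], b[1] - a[1])
               for a, b in zip(path, path[1:]))


if __name__ == "__main__":
    for size in (30, 40, 50):
        obstacles = make_obstacles(size)
        path, searched = astar(size, obstacles, (1, 1), (size, size))
        length = path_length(path) if path else None
        print(size, len(obstacles), searched, length)
```

The returned `searched` count and `path_length` correspond to two of the four evaluation metrics; timing can be added by wrapping the call to `astar` with `time.perf_counter()`.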

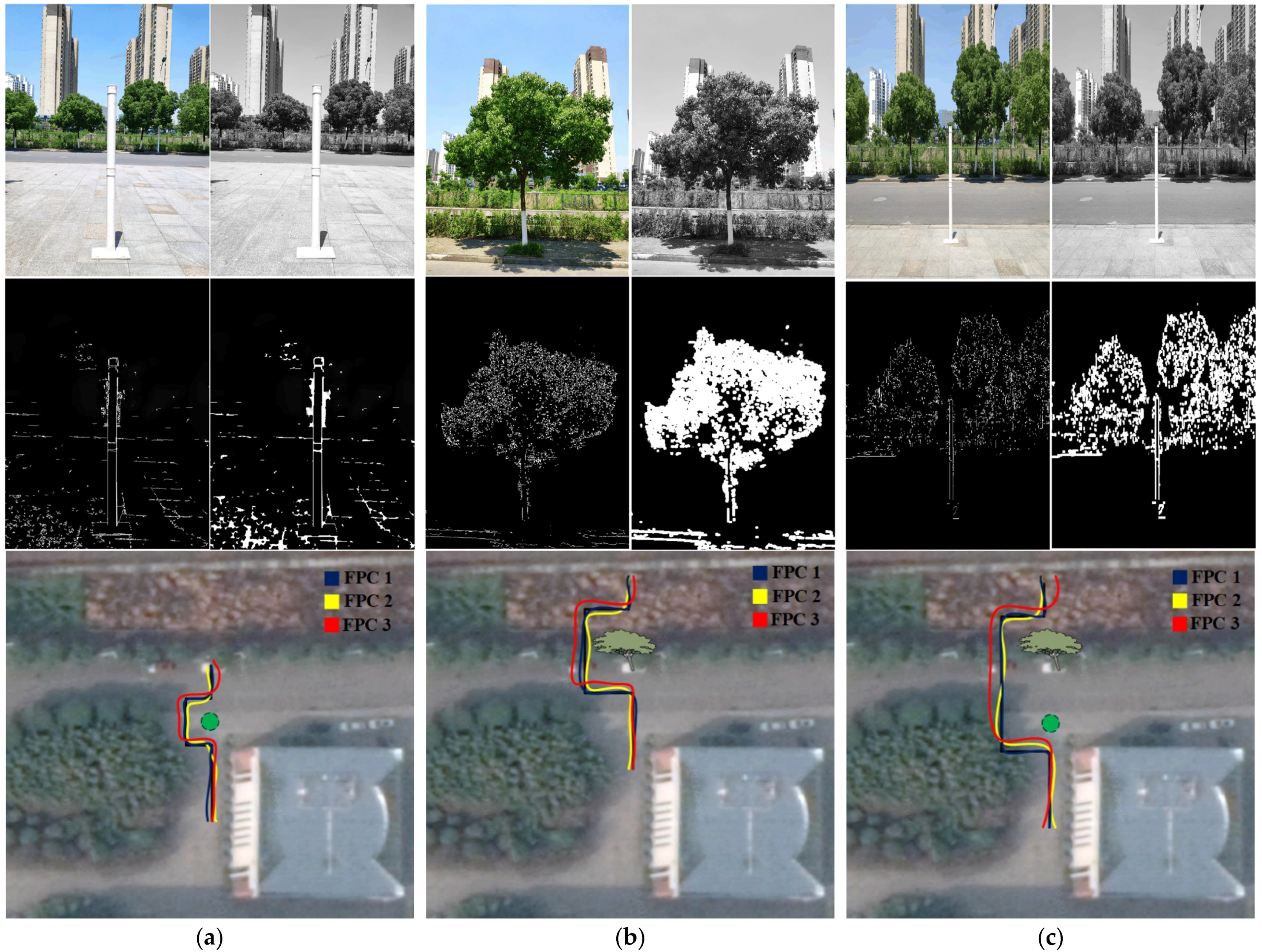

2.6. Obstacle Avoidance Flight Experiments

The experiments of the improved A* obstacle avoidance algorithm for the plant protection UAV based on MMV radar and monocular camera data fusion were conducted at Jiangsu University (32°11′57.1″ N, 119°30′07.9″ E).

As shown in Figure 6, the experimental area was a 100 m × 20 m open field oriented east–west and divided into a take-off area and an obstacle avoidance area. The take-off area was used for take-off and for the plant protection UAV to reach a stable flight speed. The obstacle avoidance area contained trees and manually arranged wire poles. To ensure safety, the plant protection UAV was equipped with an emergency control channel. If obstacle avoidance appeared likely to fail, the plant protection UAV could be switched to manual control mode through the emergency control channel. At the end of each obstacle avoidance run, the plant protection UAV was flown back to the take-off area and landed in manual control mode.

The experiment was divided into three scenarios according to the obstacle arrangement in the obstacle avoidance area. Scenario A contained only wire poles, Scenario B contained only trees, and Scenario C contained both wire poles and trees. In Scenario C, the distance between the wire poles and the trees was 9 m.

During the experiment, the take-off point of the plant protection UAV was 30 m from the obstacle, and the UAV flew from east to west. Studies have shown that the spray performance of the plant protection UAV (droplet deposition coverage, deposition distribution uniformity, etc.) is better when the flight speed is 1–3 m·s−1 and the flight altitude is 1–3 m during spraying [50,51,52]. Therefore, this article uses flight speeds of 1–3 m·s−1 and flight altitudes of 1–3 m as the flight parameters for the obstacle avoidance experiment. The experiments for each scenario were divided into three groups according to the flight parameter combination (FPC). FPC 1 has a flight speed of 1 m·s−1 and an altitude of 1 m, FPC 2 has a flight speed of 2 m·s−1 and an altitude of 2 m, and FPC 3 has a flight speed of 3 m·s−1 and an altitude of 3 m. For consistency, the obstacle avoidance warning distance of the plant protection UAV was set to 3 m in all cases. Each FPC in each scenario was repeated three times, and the GPS data of the plant protection UAV were recorded and averaged for obstacle avoidance trajectory analysis. The obstacle avoidance trajectory was taken from the obstacle avoidance warning point (starting point) to the point where obstacle avoidance ended (ending point). The obstacle avoidance path planned by the improved A* obstacle avoidance algorithm was compared with the actual obstacle avoidance trajectory during flight. The relative distance between the plant protection UAV and the obstacles during obstacle avoidance reflects the safety of the algorithm. Therefore, the minimum relative distance between the UAV and the obstacle during obstacle avoidance was measured from the GPS data for each scenario and FPC.
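The trajectory averaging and the minimum relative distance metric can be sketched as follows. The function names are hypothetical, and the sketch assumes that the GPS records have already been converted to local planar coordinates in meters and resampled so that the repeated runs contain the same number of points; neither step is described in the text.

```python
import math


def average_runs(runs):
    """Average repeated trajectories point by point.

    runs: list of trajectories, each a list of (x, y) positions in metres,
    all of the same length (assumed to be resampled beforehand).
    """
    count = len(runs)
    return [
        (sum(p[0] for p in points) / count, sum(p[1] for p in points) / count)
        for points in zip(*runs)
    ]


def min_relative_distance(trajectory, obstacles):
    """Smallest distance between any trajectory point and any obstacle."""
    return min(
        math.hypot(px - ox, py - oy)
        for (px, py) in trajectory
        for (ox, oy) in obstacles
    )


if __name__ == "__main__":
    runs = [
        [(0.0, 0.0), (1.0, 2.1), (2.0, 3.0), (3.0, 1.9), (4.0, 0.0)],
        [(0.0, 0.0), (1.0, 1.9), (2.0, 3.0), (3.0, 2.1), (4.0, 0.0)],
    ]
    mean_track = average_runs(runs)
    print(min_relative_distance(mean_track, [(2.0, 0.0)]))
```

Because the distance is evaluated only at the recorded points, the result is an upper bound on the true minimum clearance, and a denser GPS log gives a tighter estimate.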

3. Results

3.1. Data Fusion Results

As shown in Figure 7, the monocular camera was calibrated according to the method described in Section 2.2.

The results of the monocular camera calibration are shown in Table 3. The maximum error between the calibrated focal length and the nominal focal length was 1.0%. The mean error of the images used for calibration was 0.3 pixels.

In addition to the calibration of the monocular camera, the relative positions of the MMV radar and the monocular camera on the plant protection UAV must be determined to complete the data fusion. The plant protection UAV was placed on a horizontal surface, and the height Hu from the UAV coordinate system origin to the ground was 320 mm. The height Hr from the MMV radar coordinate system origin to the ground was 180 mm, and the horizontal distance Lr between the MMV radar coordinate system origin and the UAV coordinate system origin was 250 mm. The height Hc from the monocular camera coordinate system origin to the ground was 460 mm, and the horizontal distance Lc between the monocular camera coordinate system origin and the UAV coordinate system origin was 120 mm. From these measurements, the relative position of the MMV radar and monocular camera on the plant protection UAV was determined according to Equation (4). Combining the results of the monocular camera calibration and the time synchronization, the MMV radar and monocular camera data fusion was completed.

3.2. Obstacle Information Extraction Results

The validation experiments and the field obstacle information extraction were carried out with the MMV radar and monocular camera data fusion methods described above. The results of the validation experiments are shown in Figure 8 and Table 4, and the results of the field obstacle information extraction are shown in Figure 9.

The validation results show that the distance and contour of obstacles can be obtained effectively with the MMV radar and monocular camera data fusion and obstacle information extraction method. The distance measurement error increased with the measuring distance, with a maximum error of 8.2% within the set measuring range. The measured diameter and height of the obstacle decreased as the measuring distance increased; the maximum errors within the set measuring range were 27.3% for diameter and 18.5% for height. The validation results also show that the measured values were generally smaller than the true values and that the measurement accuracy depends heavily on the distance from the MMV radar and monocular camera to the obstacle.

The field results show that clear and smooth obstacle contours can be obtained with the MMV radar and monocular camera data fusion and obstacle information extraction method described in this article. These contours provide a basis for the obstacle avoidance decisions of the plant protection UAV.

3.3. Performance Simulation Results

As described in Section 2.4, the improved A* obstacle avoidance algorithm improves on the traditional A* algorithm in three steps: the dynamic heuristic function, search point optimization, and inflection point optimization. Three simulation environments were built in MATLAB to verify the algorithm performance, with the data processing time, number of searched grids, path length, and number of inflection points used as evaluation metrics.

The path planning trajectories of the different algorithms in the different environments are shown in Figure 10, and the performance results of the different algorithms are shown in Table 5.

Figure 10 shows that the traditional A* algorithm and the A* algorithm after each improvement step (first the dynamic heuristic function, second the search point optimization, and third the inflection point optimization) can all plan an obstacle avoidance path. The traditional A* obstacle avoidance algorithm searches the largest number of grids. The A* algorithm with the dynamic heuristic function reduces the number of searched grids to some extent. The A* algorithm with search point optimization and inflection point optimization searches more purposefully and significantly reduces the number of searched grids.

Further analysis based on Table 5 shows that the traditional A* obstacle avoidance algorithm has the shortest path length and the longest data processing time. Across the different environments, the path of the improved A* algorithm was at most 2.0% longer. However, the data processing time was significantly reduced, especially after the search point optimization and inflection point optimization. Compared to the traditional A* obstacle avoidance algorithm, the algorithm with the first step (dynamic heuristic function) reduces the data processing time by at least 7.5% and the number of searched grids by at least 6.2%. The algorithm with the second step (search point optimization) reduces the data processing time by at least 68.4% and the number of searched grids by at least 79.1%. The algorithm with the third step (inflection point optimization) reduces the data processing time by at least 68.4%, the number of searched grids by at least 74.9%, and the number of turning points by at least 20.7%.
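The percentages above are relative changes against the traditional A* baseline. As a small sketch of that arithmetic, the helper below computes a relative reduction; the function name `percent_reduction` and all input values are hypothetical and are not taken from Table 5.

```python
def percent_reduction(baseline: float, improved: float) -> float:
    """Relative reduction of `improved` against `baseline`, in percent.

    A negative result means the metric increased (e.g., a longer path).
    """
    if baseline <= 0:
        raise ValueError("baseline must be positive")
    return (baseline - improved) / baseline * 100.0


# Hypothetical metric values for one environment (NOT from Table 5).
baseline = {"time_ms": 200.0, "searched_grids": 1000, "path_length": 50.0}
improved = {"time_ms": 60.0, "searched_grids": 250, "path_length": 51.0}

changes = {k: percent_reduction(baseline[k], improved[k]) for k in baseline}
for name, value in changes.items():
    print(f"{name}: {value:+.1f}% reduction")
```

Reporting the smallest reduction over all environments gives an "at least" figure, and the largest path increase gives an "at most" figure, which matches how the results above are worded.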

According to the performance simulation results, the improved A* obstacle avoidance algorithm proposed in this article can significantly reduce the data processing time, the number of searched grids, and the number of turning points. It meets the requirements of the plant protection UAV for efficient, smooth, and continuous flight.
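To illustrate how turning points can be reduced on a grid path, the following Python sketch prunes waypoints with a generic line-of-sight check based on Bresenham's line algorithm. It is a common smoothing technique shown under stated assumptions and is not necessarily the inflection point optimization of Section 2.4. The names `cells_on_line` and `prune_path`, the grid, and the staircase path are illustrative.

```python
def cells_on_line(a, b):
    """Grid cells visited by Bresenham's line from a to b (inclusive)."""
    (r0, c0), (r1, c1) = a, b
    dr, dc = abs(r1 - r0), abs(c1 - c0)
    sr = 1 if r1 >= r0 else -1
    sc = 1 if c1 >= c0 else -1
    err = dr - dc
    r, c = r0, c0
    cells = [(r, c)]
    while (r, c) != (r1, c1):
        e2 = 2 * err
        if e2 > -dc:
            err -= dc
            r += sr
        if e2 < dr:
            err += dr
            c += sc
        cells.append((r, c))
    return cells


def prune_path(grid, path):
    """Greedily skip waypoints while the straight segment stays obstacle-free."""
    if len(path) < 3:
        return list(path)
    pruned = [path[0]]
    anchor = 0
    while anchor < len(path) - 1:
        nxt = anchor + 1  # the next waypoint is always reachable
        # Search from the far end for the farthest visible waypoint.
        for j in range(len(path) - 1, anchor, -1):
            line = cells_on_line(path[anchor], path[j])
            if all(grid[r][c] == 0 for r, c in line):
                nxt = j
                break
        pruned.append(path[nxt])
        anchor = nxt
    return pruned


# Illustrative environment with one obstacle cell at (2, 2).
grid = [
    [0, 0, 0, 0, 0],
    [0, 0, 0, 0, 0],
    [0, 0, 1, 0, 0],
    [0, 0, 0, 0, 0],
    [0, 0, 0, 0, 0],
]
# Hypothetical staircase path with five direction changes.
path = [(0, 0), (1, 0), (1, 1), (2, 1), (3, 1), (3, 2), (4, 2), (4, 3), (4, 4)]
pruned = prune_path(grid, path)
print(pruned)
```

The pruned path uses any-angle segments between the remaining waypoints, so it trades grid-aligned motion for fewer turns; whether the resulting segments are flyable depends on the vehicle dynamics and the safety margin around obstacles.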

3.4. Obstacle Avoidance Flight Results

Actual obstacle avoidance flight experiments were carried out at Jiangsu University (32°11′57.1″ N, 119°30′07.9″ E) based on the methods described in Section 2.4. During the experiments, the outdoor temperature was 28 °C, the ambient humidity was 21.5%, and the wind was from the east with an average speed of 0.36 m·s−1. The results are shown in Figure 11. In the obstacle avoidance trajectory plots, the obstacle size and flight path offset were deliberately magnified 10 times to make the trajectory clearer. The minimum relative distance between the plant protection UAV and the obstacle during obstacle avoidance under different scenarios and FPC is shown in Table 6.

The results show that, during actual obstacle avoidance flight under different scenarios and FPC, the MMV radar and monocular camera data fusion and obstacle information extraction method can obtain the distance and contour of the obstacle accurately and provide this information to the improved A* obstacle avoidance algorithm. Based on the obtained obstacle information, the improved algorithm can plan a path and control the plant protection UAV to perform autonomous obstacle avoidance flight. The actual flight trajectory of the plant protection UAV was consistent with the planned trajectory under different scenarios and FPC. Flight altitude had no significant effect on the trajectory offset or on the relative distance between the UAV and the obstacle. The trajectory offset increased with flight speed, with a maximum trajectory offset of 0.1 m at a flight speed of 1 m·s−1 and 1.4 m at 3 m·s−1. The minimum relative distance between the plant protection UAV and the obstacle decreased as flight speed increased: it was 2.8 m at 1 m·s−1 and 1.6 m at 3 m·s−1.

4. Discussion

To improve the environment perception and autonomous obstacle avoidance capability of the plant protection UAV in unstructured farmland, data fusion of MMV radar and monocular camera was implemented on the plant protection UAV. Considering the operating environment and flight requirements of the plant protection UAV, the traditional A* algorithm was improved through a dynamic heuristic function, search point optimization, and inflection point optimization based on the data fusion.

The data fusion method can effectively obtain the distance to the obstacle and trigger the monocular camera to capture the obstacle image according to that distance. A clear and smooth contour of the obstacle is then extracted from the image, which provides obstacle information for the improved A* obstacle avoidance algorithm of the plant protection UAV. The distance measurement error of the data fusion method increased in proportion to the measuring distance, with a maximum error of 8.2% within the set measurement range. The measured values of the obstacle’s width and height extracted from the monocular camera image decreased as the measuring distance increased. The maximum measurement error was 27.3% for width and 18.5% for height. The measured values were generally smaller than the true values, and the measurement accuracy depended heavily on the distance from the MMV radar and monocular camera to the obstacle.

Compared to the traditional A* obstacle avoidance algorithm, the improved A* obstacle avoidance algorithm increases the path length by at most 2.0% and reduces the data processing time by at least 68.4%, the number of searched grids by at least 74.9%, and the number of turning points by at least 20.7%. The flight trajectory offset increased with flight speed, with a maximum trajectory offset of 0.1 m at a flight speed of 1 m·s−1 and 1.4 m at 3 m·s−1. The minimum relative distance between the plant protection UAV and the obstacle decreased as flight speed increased: it was 2.8 m at 1 m·s−1 and 1.6 m at 3 m·s−1. Given the size of the UAV used in this article (wheelbase: 1200 mm, rotor: 736.6 mm), there would be a risk of a crash if the flight speed were increased further. Therefore, higher flight speeds were not tested for obstacle avoidance.
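The crash-risk argument can be made concrete with a rough clearance estimate. The Python sketch below rests on two assumptions that the text does not confirm: that "rotor: 736.6 mm" is the rotor diameter, and that the reported minimum relative distance is measured from the UAV center to the obstacle. The names `UAV_HALF_EXTENT_M` and `clearance_m` are illustrative.

```python
# Dimensions quoted in the article, converted to metres.
WHEELBASE_M = 1.2000        # 1200 mm
ROTOR_DIAMETER_M = 0.7366   # 736.6 mm, ASSUMED to be the rotor diameter

# Distance from the UAV center to the outermost rotor tip (assumed geometry:
# half the wheelbase to the motor axis plus one rotor radius).
UAV_HALF_EXTENT_M = WHEELBASE_M / 2 + ROTOR_DIAMETER_M / 2


def clearance_m(min_center_distance_m: float) -> float:
    """Free space between the rotor tips and the obstacle.

    ASSUMES the reported distance is measured from the UAV center.
    """
    return min_center_distance_m - UAV_HALF_EXTENT_M


# Minimum relative distances reported for the two flight speeds.
for speed, distance in [(1, 2.8), (3, 1.6)]:
    print(f"{speed} m/s: clearance ~ {clearance_m(distance):.2f} m")
```

Under these assumptions the remaining margin at 3 m·s−1 is well below one metre, which illustrates why higher speeds were considered unsafe; if the distance is measured differently, the margin changes accordingly.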

As can be seen from Figure 11, the trajectory offset was large at the path inflection points, and the minimum relative distance between the plant protection UAV and the obstacle usually occurred at the first inflection point of the obstacle avoidance path. A possible reason is that, although the obstacle avoidance algorithm issues an avoidance command, inertia makes the UAV slower to adjust its flight attitude at higher flight speeds. In addition, for the same data processing time and attitude adjustment time, a faster UAV travels farther. As a result, the faster the flight speed, the larger the trajectory offset and the smaller the distance to the obstacle.
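The reasoning in this paragraph can be written as a first-order relation. The symbols below (d_react, t_proc, t_adj) are introduced here for illustration and do not appear in the article; the model ignores deceleration and controller dynamics.

```latex
% Distance flown before the avoidance maneuver takes effect:
%   v       flight speed (m/s)
%   t_proc  data processing time (s)
%   t_adj   attitude adjustment time (s)
d_{\mathrm{react}} = v\,\bigl(t_{\mathrm{proc}} + t_{\mathrm{adj}}\bigr)

% With identical delays, tripling the speed triples the distance:
\frac{d_{\mathrm{react}}(3\ \mathrm{m\,s^{-1}})}{d_{\mathrm{react}}(1\ \mathrm{m\,s^{-1}})} = 3

% If inertia makes t_adj itself increase with v, the growth is faster than linear.
```

The last remark is in line with the measured offsets, which grew from 0.1 m to 1.4 m when the speed was tripled.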

In general, the results show that the approach in this article can improve the obstacle avoidance ability of the plant protection UAV in unstructured farmland. It may provide new ideas for enhancing safety and autonomous flight and promote wider application of the UAV. In future work, the obstacle avoidance algorithm will be further optimized to improve the speed and safety of obstacle avoidance. Different obstacle avoidance algorithms will also be compared using evaluation metrics such as obstacle avoidance speed, obstacle avoidance zone size, flight stability, computational cost, and economic cost, with the aim of finding the most suitable obstacle avoidance algorithm for the plant protection UAV.

5. Conclusions

This study presented an improved A* obstacle avoidance algorithm that allows the plant protection UAV to avoid obstacles in unstructured farmland. The algorithm uses MMV radar and monocular camera data fusion to obtain obstacle information and improves the traditional A* obstacle avoidance algorithm through a dynamic heuristic function, search point optimization, and inflection point optimization. Compared to the traditional A* algorithm, the improved algorithm increases the path length by at most 2.0% and reduces the data processing time by at least 68.4%, the number of searched grids by at least 74.9%, and the number of turning points by at least 20.7%. During the obstacle avoidance flight, the maximum offset between the actual and planned flight trajectories was 0.1 m at a flight speed of 1 m·s−1 and 1.4 m at 3 m·s−1. The minimum relative distance between the plant protection UAV and the obstacle was 1.6 m. Based on these results, the method presented in this article can enhance the obstacle avoidance capability of the plant protection UAV in unstructured farmland. It may provide a new solution for autonomous flight of the plant protection UAV.

Author Contributions

Conceptualization: B.Q. and X.H.; methodology: B.Q. and X.H.; software: X.H. and S.A.; formal analysis: X.H. and K.L.; data curation: J.M., S.A. and J.L.; investigation: X.H.; writing—original draft preparation: X.H.; writing—review and editing: B.Q., X.H. and X.D.; supervision: B.Q.; funding acquisition: B.Q. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Key Research and Development Program of China (no. 2017YFD0701005), the National Natural Science Foundation (no. 31971790), the Key Research and Development Program of Jiangsu province, China (no. BE2020328), and a project funded by the Priority Academic Program Development of Jiangsu Higher Education Institutions (no. PAPD-2018-87).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data presented in this study are available on request from the corresponding author. The data are not publicly available due to privacy and permissions restrictions.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Herwitz, S.; Johnson, L.; Dunagan, S.; Higgins, R.; Sullivan, D.; Zheng, J.; Lobitz, B.; Leung, J.; Gallmeyer, B.; Aoyagi, M.; et al. Imaging from an unmanned aerial vehicle: Agricultural surveillance and decision support. Comput. Electron. Agric. 2004, 44, 49–61.

- Mulla, D.J. Twenty five years of remote sensing in precision agriculture: Key advances and remaining knowledge gaps. Biosyst. Eng. 2013, 114, 358–371.

- Feng, A.; Zhou, J.; Vories, E.D.; Sudduth, K.A.; Zhang, M. Yield estimation in cotton using UAV-based multi-sensor imagery. Biosyst. Eng. 2020, 193, 101–114.

- Bendig, J.; Yu, K.; Aasen, H.; Bolten, A.; Bennertz, S.; Broscheit, J.; Gnyp, M.L.; Bareth, G. Combining UAV-based plant height from crop surface models, visible, and near infrared vegetation indices for biomass monitoring in barley. Int. J. Appl. Earth Obs. Geoinf. 2015, 39, 79–87.

- Lan, Y.; Thomson, S.J.; Huang, Y.; Hoffmann, W.C.; Zhang, H. Current status and future directions of precision aerial application for site-specific crop management in the USA. Comput. Electron. Agric. 2010, 74, 34–38.

- Liao, J.; Zang, Y.; Luo, X.; Zhou, Z.; Lan, Y.; Zang, Y.; Gu, X.; Xu, W.; Hewitt, A.J. Optimization of variables for maximizing efficacy and efficiency in aerial spray application to cotton using unmanned aerial systems. Int. J. Agric. Biol. Eng. 2019, 12, 10–17.

- Fritz, B.K.; Hoffmann, W.C.; Martin, D.E.; Thomson, S.J. Aerial Application Methods for Increasing Spray Deposition on Wheat Heads. Appl. Eng. Agric. 2007, 23, 709–715.

- Xue, X.; Lan, Y.; Sun, Z.; Chang, C.; Hoffmann, W.C. Develop an unmanned aerial vehicle based automatic aerial spraying system. Comput. Electron. Agric. 2016, 128, 58–66.

- Aggarwal, S.; Kumar, N. Path planning techniques for unmanned aerial vehicles: A review, solutions, and challenges. Comput. Commun. 2020, 149, 270–299.

- Hosseinpoor, H.R.; Samadzadegan, F.; DadrasJavan, F. PRICISE TARGET GEOLOCATION AND TRACKING BASED ON UAV VIDEO IMAGERY. ISPRS-Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2016, XLI-B6, 243–249.

- Huang, A.S.; Bachrach, A.; Henry, P.; Krainin, M.; Maturana, D.; Fox, D.; Roy, N. Visual Odometry and Mapping for Autonomous Flight Using an RGB-D Camera; Springer International Publishing: Cham, Switzerland, 2016; pp. 235–252.

- Wang, T.; Zheng, N.; Xin, J.; Ma, Z. Integrating Millimeter Wave Radar with a Monocular Vision Sensor for On-Road Obstacle Detection Applications. Sensors 2011, 11, 8992–9008.

- Rejas, J.-I.; Sanchez, A.; Glez-De-Rivera, G.; Prieto, M.; Garrido, J. Environment mapping using a 3D laser scanner for unmanned ground vehicles. Microprocess. Microsyst. 2015, 39, 939–949.

- Queirós, R.; Alegria, F.; Girão, P.S.; Serra, A. Cross-Correlation and Sine-Fitting Techniques for High-Resolution Ultrasonic Ranging. IEEE Trans. Instrum. Meas. 2010, 59, 3227–3236.

- Park, J.; Cho, N. Collision Avoidance of Hexacopter UAV Based on LiDAR Data in Dynamic Environment. Remote Sens. 2020, 12, 975.

- Mur-Artal, R.; Montiel, J.M.M.; Tardos, J. ORB-SLAM: A Versatile and Accurate Monocular SLAM System. IEEE Trans. Robot. 2015, 31, 1147–1163.

- Iacono, M.; Sgorbissa, A. Path following and obstacle avoidance for an autonomous UAV using a depth camera. Robot. Auton. Syst. 2018, 106, 38–46.

- Dai, X.; Mao, Y.; Huang, T.; Qin, N.; Huang, D.; Li, Y. Automatic obstacle avoidance of quadrotor UAV via CNN-based learning. Neurocomputing 2020, 402, 346–358.

- LeCun, Y.; Bengio, Y.; Hinton, G. Deep learning. Nature 2015, 521, 436–444.

- Singla, A.; Padakandla, S.; Bhatnagar, S. Memory-Based Deep Reinforcement Learning for Obstacle Avoidance in UAV With Limited Environment Knowledge. IEEE Trans. Intell. Transp. Syst. 2021, 22, 107–118.

- Yang, X.; Luo, H.; Wu, Y.; Gao, Y.; Liao, C.; Cheng, K.-T. Reactive obstacle avoidance of monocular quadrotors with online adapted depth prediction network. Neurocomputing 2019, 325, 142–158.

- Laroca, R.; Severo, E.; Zanlorensi, L.A.; Oliveira, L.S.; Goncalves, G.R.; Schwartz, W.R.; Menotti, D. A Robust Real-Time Automatic License Plate Recognition Based on the YOLO Detector. In Proceedings of the 2018 International Joint Conference on Neural Networks (IJCNN 2018), Rio de Janeiro, Brazil, 8–13 July 2018; pp. 2161–4407.

- Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.; Berg, A.C. SSD: Single Shot MultiBox Detector; Springer International Publishing: Cham, Switzerland, 2016.

- Liu, Z.; Shi, S.; Duan, Q.; Zhang, W.; Zhao, P. Salient object detection for RGB-D image by single stream recurrent convolution neural network. Neurocomputing 2019, 363, 46–57.

- García, J.; Molina, J.M.; Trincado, J. Real evaluation for designing sensor fusion in UAV platforms. Inf. Fusion 2020, 63, 136–152.

- Mac, T.T.; Copot, C.; De Keyser, R.; Ionescu, C.M. The development of an autonomous navigation system with optimal control of an UAV in partly unknown indoor environment. Mechatronics 2018, 49, 187–196.

- Duchoň, F.; Babinec, A.; Kajan, M.; Beňo, P.; Florek, M.; Fico, T.; Jurišica, L. Path Planning with Modified a Star Algorithm for a Mobile Robot. Procedia Eng. 2014, 96, 59–69.

- Kavraki, L.; Latombe, J.-C.; Motwani, R.; Raghavan, P. Randomized Query Processing in Robot Path Planning. J. Comput. Syst. Sci. 1998, 57, 50–60.

- Liu, H.; Liu, H.H.T.; Chi, C.; Zhai, Y.; Zhan, X. Navigation information augmented artificial potential field algorithm for collision avoidance in UAV formation flight. Aerosp. Syst. 2020, 3, 229–241.

- Petres, C.; Pailhas, Y.; Patron, P.; Petillot, Y.; Evans, J.; Lane, D. Path Planning for Autonomous Underwater Vehicles. IEEE Trans. Robot. 2007, 23, 331–341.

- Wang, D.; Li, W.; Liu, X.; Li, N.; Zhang, C. UAV environmental perception and autonomous obstacle avoidance: A deep learning and depth camera combined solution. Comput. Electron. Agric. 2020, 175, 105523.

- Wang, L.; Lan, Y.; Zhang, Y.; Zhang, H.; Tahir, M.N.; Ou, S.; Liu, X.; Chen, P. Applications and Prospects of Agricultural Unmanned Aerial Vehicle Obstacle Avoidance Technology in China. Sensors 2019, 19, 642.

- Steger, C.; Ulrich, M.; Weidemann, C. Machine Vision Algorithms and Applications; Wiley-VCH: Baden-Württemberg, Germany, 2018; p. 516.

- Zhang, Z. A flexible new technique for camera calibration. IEEE Trans. Pattern Anal. Mach. Intell. 2000, 22, 1330–1334.

- Gonzalez, R.C.; Woods, R.E. Digital Image Processing; Pearson: New York City, NY, USA, 2018; p. 1168.

- Heath, M.; Sarkar, S.; Sanocki, T.; Bowyer, K. A robust visual method for assessing the relative performance of edge-detection algorithms. IEEE Trans. Pattern Anal. Mach. Intell. 1997, 19, 1338–1359.

- Ding, L.; Goshtasby, A. On the Canny edge detector. Pattern Recognit. 2001, 34, 721–725.

- Bao, P.; Zhang, L.; Wu, X. Canny edge detection enhancement by scale multiplication. IEEE Trans. Pattern Anal. Mach. Intell. 2005, 27, 1485–1490.

- Serra, J. Introduction to mathematical morphology. Comput. Vision, Graph. Image Process. 1986, 35, 283–305.

- Soille, P.; Pesaresi, M. Advances in mathematical morphology applied to geoscience and remote sensing. IEEE Trans. Geosci. Remote Sens. 2002, 40, 2042–2055.

- Aptoula, E.; Lefèvre, S. A comparative study on multivariate mathematical morphology. Pattern Recognit. 2007, 40, 2914–2929.

- Grundland, M.; Dodgson, N. Decolorize: Fast, contrast enhancing, color to grayscale conversion. Pattern Recognit. 2007, 40, 2891–2896.

- Ebner, M. Color constancy based on local space average color. Mach. Vis. Appl. 2009, 20, 283–301.

- Hart, P.E.; Nilsson, N.J.; Raphael, B. A Formal Basis for the Heuristic Determination of Minimum Cost Paths. IEEE Trans. Syst. Sci. Cybern. 1968, 4, 100–107.

- Sang, H.; You, Y.; Sun, X.; Zhou, Y.; Liu, F. The hybrid path planning algorithm based on improved A* and artificial potential field for unmanned surface vehicle formations. Ocean Eng. 2021, 223, 108709.

- Hao, Y.; Agrawal, S.K. Planning and control of UGV formations in a dynamic environment: A practical framework with experiments. Robot. Auton. Syst. 2005, 51, 101–110.

- Ahmed, S.; Qiu, B.; Ahmad, F.; Kong, C.-W.; Xin, H. A State-of-the-Art Analysis of Obstacle Avoidance Methods from the Perspective of an Agricultural Sprayer UAV’s Operation Scenario. Agronomy 2021, 11, 1069.

- Zang, Y.; Zang, Y.; Zhou, Z.; Gu, X.; Jiang, R.; Kong, L.; He, X.; Luo, X.; Lan, Y. Design and anti-sway performance testing of pesticide tanks in spraying UAVs. Int. J. Agric. Biol. Eng. 2019, 12, 10–16.

- Li, X.; Zhang, J.X.; Qu, F.; Zhang, W.Q.; Wang, D.S.; Li, W. Optimal design of anti sway inner cavity structure of agricultural UAV pesticide tank. Trans. Chin. Soc. Agric. Eng. 2017, 33, 72–79.

- Wen, S.; Han, J.; Ning, Z.; Lan, Y.; Yin, X.; Zhang, J.; Ge, Y. Numerical analysis and validation of spray distributions disturbed by quad-rotor drone wake at different flight speeds. Comput. Electron. Agric. 2019, 166, 105036.

- Faiçal, B.S.; Freitas, H.; Gomes, P.H.; Mano, L.; Pessin, G.; de Carvalho, A.; Krishnamachari, B.; Ueyama, J. An adaptive approach for UAV-based pesticide spraying in dynamic environments. Comput. Electron. Agric. 2017, 138, 210–223.

- Fengbo, Y.; Xinyu, X.; Ling, Z.; Zhu, S. Numerical simulation and experimental verification on downwash air flow of six-rotor agricultural unmanned aerial vehicle in hover. Int. J. Agric. Biol. Eng. 2017, 10, 41–53.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).