1. Introduction

Seawater accounts for about 70% of the global area, and sea ice accounts for 5–8% of the global ocean area. Sea ice is the main cause of marine disasters in high-latitude regions, and it is also an important factor affecting fishery production and construction manufacturing in mid-high latitude regions [

1]. Therefore, sea ice detection has important research significance. As an important part of sea ice detection, sea ice image classification can extract the types of sea ice accurately and efficiently, which is of great significance in the assessment of sea ice conditions and the prediction of sea ice disasters [

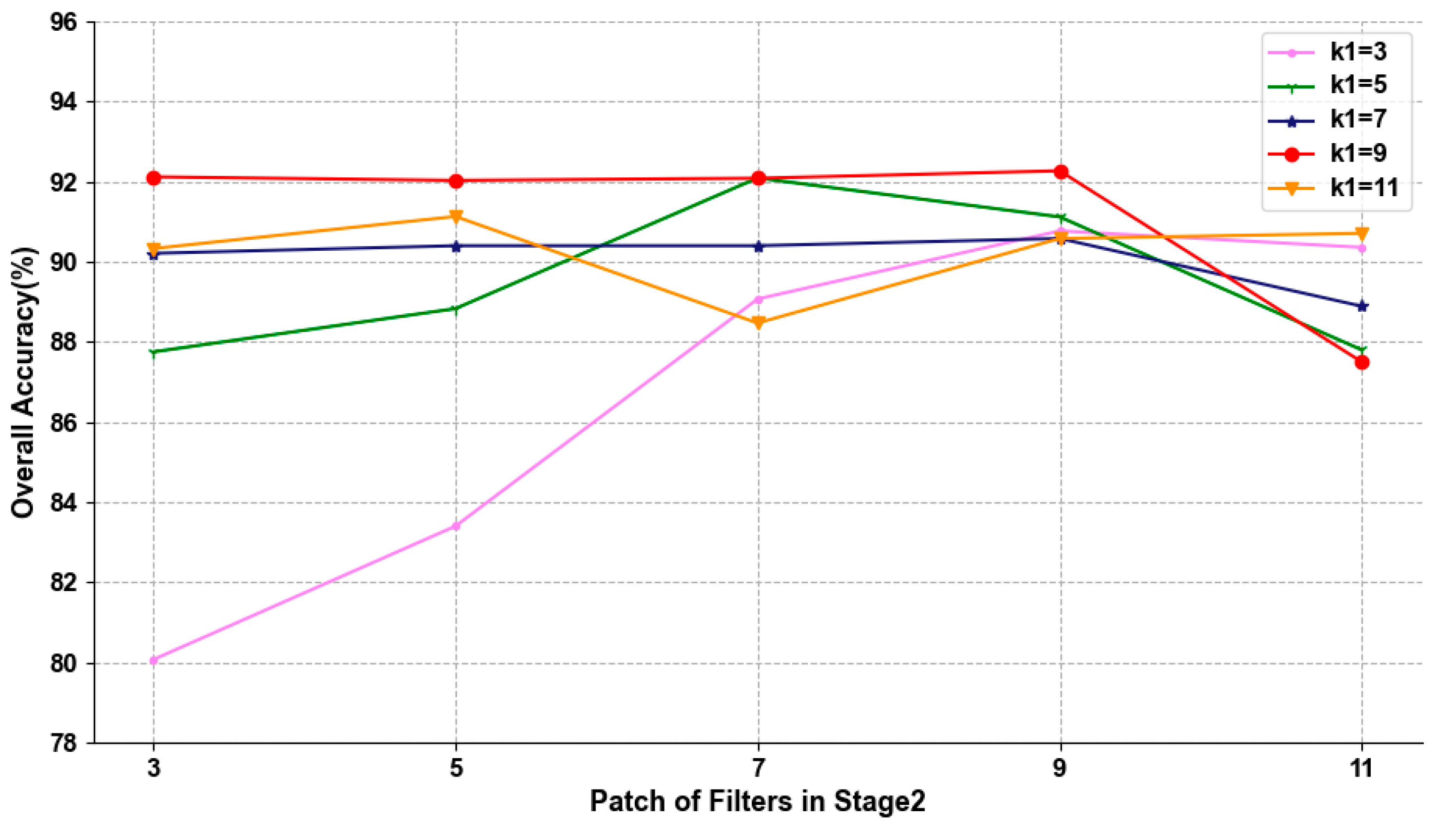

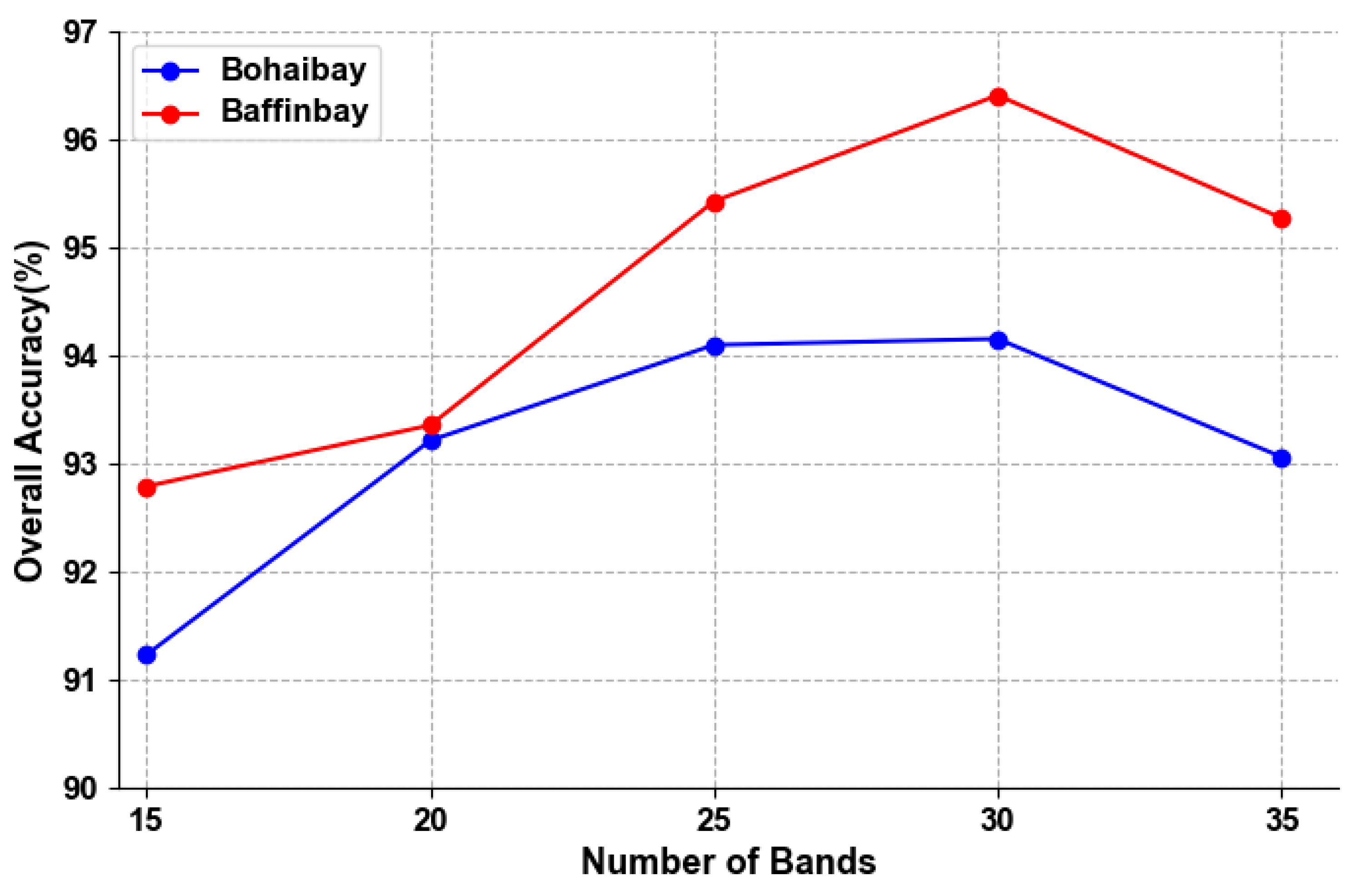

2].

In recent years, the continuous development of remote sensing technology has provided more data sources for sea ice detection. At present, common remote sensing data include synthetic aperture radar images [3], multispectral satellite images with medium and high spatial resolution, and hyperspectral images [4]. Of these, hyperspectral remote sensing data have the characteristics of wide coverage, high resolution, and multiple data sources. The data contain rich spectral and spatial information, which supports sea ice detection and classification [5]. More and more hyperspectral images are being used for sea ice classification, with good classification results [6]. However, the high dimensionality of hyperspectral data brings many computational problems, such as strongly correlated bands and data redundancy. In addition, due to the special environment of sea ice, it is difficult to obtain a sufficient number of sea ice samples. These problems pose great challenges for the classification of remote sensing sea ice images.

Traditional remote sensing image classification methods include maximum likelihood estimation, decision trees, and support vector machines (SVM) [7]. Most of these methods do not take full advantage of the spectral and spatial information in hyperspectral images, so it is difficult for them to handle the phenomenon of "different objects with the same spectral information". The introduction of effective spatial information can make up for the deficiency of using only spectral information. Common spatial feature extraction methods include the gray-level co-occurrence matrix (GLCM), the Gabor filter, and the morphological profile [8]. The GLCM is based on a statistical method and has the advantages of strong robustness and recognition ability. The Gabor filter is based on a signal processing method and has frequency and direction characteristics similar to those of the human visual system, so it is particularly suitable for textural representation and discrimination [9]. Zhang Ming et al. [10] used the GLCM to extract features and carried out classification research on sea ice through the SVM. Zheng Minwei et al. [11] used the GLCM to calculate the textural features of images and separated ice and water with the SVM. Yang Xiujie et al. [12] extracted Gabor spatial features from the principal component analysis (PCA) projection subspace to quantify the local direction and scale features and then combined these with the Gaussian mixture model for classification. For classification using the spectral-spatial-joint features of remote sensing images, there are two main approaches: pixel-based and object-based. Amin et al. [13] proposed a new semi-automatic framework for spectral-spatial classification of hyperspectral images, using pixel-based classification results as a pixel reference and finally performing decision-level classification with object-based classification results. Although the above studies have achieved a certain classification effect, their feature extraction relies on shallow, manually designed methods involving prior knowledge, and deeper feature information cannot be obtained, which limits the accuracy of hyperspectral image classification to a certain extent.

Some studies have shown that image classification methods based on deep learning can obtain better classification results than traditional methods [14]. Since hyperspectral image classification based on deep learning was first introduced to the field of remote sensing in 2014 [15], the simultaneous extraction of spectral and spatial features through deep learning has become a major technical hotspot in the classification of remote sensing images. These algorithms include the deep belief network and the convolutional neural network [16]. In particular, the deep convolutional neural network is a relatively mature method that can be used for extracting spectral and spatial features simultaneously. Unlike methods that rely on prior knowledge, a neural network can automatically learn high-level features, which reduces the large amount of repetitive and cumbersome data preprocessing work required by traditional algorithms such as SVMs [17]. Chen Sizhe et al. [18] used a fully convolutional neural network to classify sea ice images and achieved a significantly higher average accuracy than traditional algorithms. The convolutional neural network model established by Wang Lei et al. [19] can recognize the differences between subtle features, which is conducive to intra-class classification. However, training a neural network requires a wealth of data and involves a large number of parameters, which is a huge challenge for computational performance. In 2015, in order to avoid cumbersome parameter tuning, Chan et al. proposed a deep learning baseline network, PCANet [20], which reduces the computational overhead and achieved good results in tasks such as image classification and target detection. Wang Fan et al. [21] proposed an improved PCANet model, whose improvement lies in the input layer of PCANet, and applied it to the classification of hyperspectral images. The PCA network, as a competitive deep learning network, has the following advantages: on the one hand, it is easy to train, which allows it to perform well with small samples and shortens the training time; on the other hand, the cascaded mapping process of the network can be analyzed mathematically to justify its effectiveness, which is urgently needed in today's deep learning algorithms.

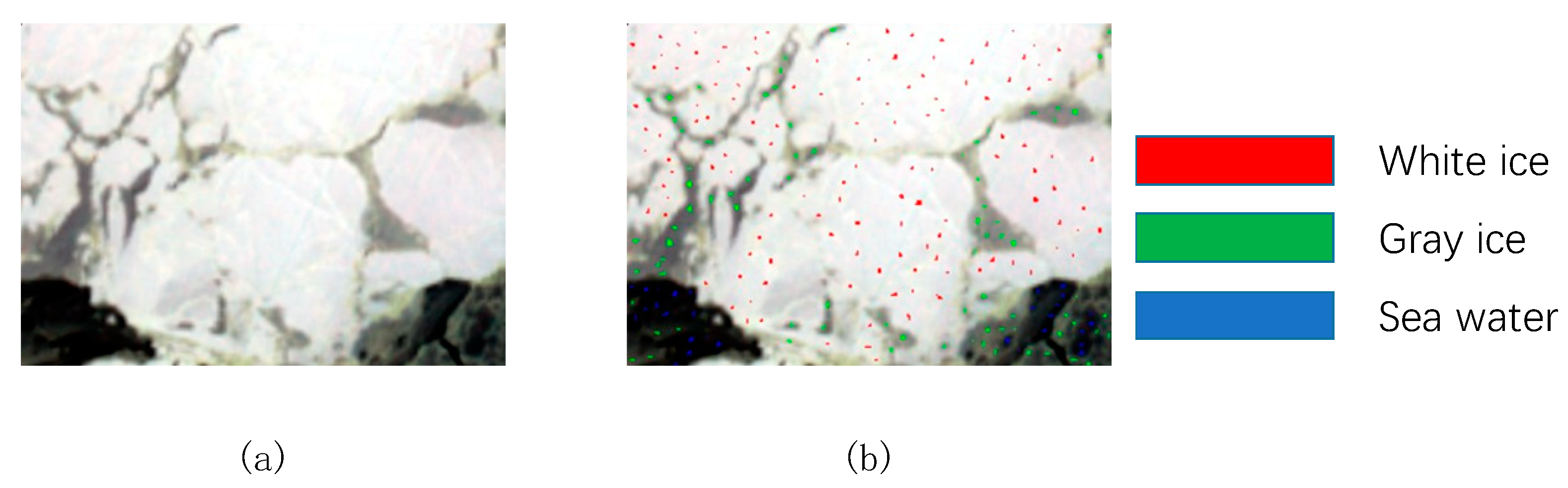

Based on the above research, this paper proposes a hyperspectral sea ice classification method based on a spectral-spatial-joint feature with PCANet. This method fully extracts spectral and spatial information from hyperspectral data and uses the PCANet deep learning network to obtain deep feature information from sea ice images. It further improves the sea ice classification accuracy and allows a highly efficient training process. In this method, the sea ice textural information is extracted by the GLCM, and the sea ice spatial information is extracted by Gabor filters. The influence of redundant bands is removed by correlation analysis, and the spectral and spatial features of sea ice images are mined by the PCANet network to improve the final classification accuracy. The method selects samples randomly for training and then inputs the resulting features into the SVM classifier for classification, which improves the sea ice classification when only a small number of samples is available.

The rest of this paper is organized as follows. Section 2 introduces the design framework and related algorithms in detail. The experimental data set and related experimental setups are described in Section 3, and the experimental results and model parameters are discussed and analyzed at the same time. Finally, we summarize the work of this paper in Section 4.

2. Proposed Method

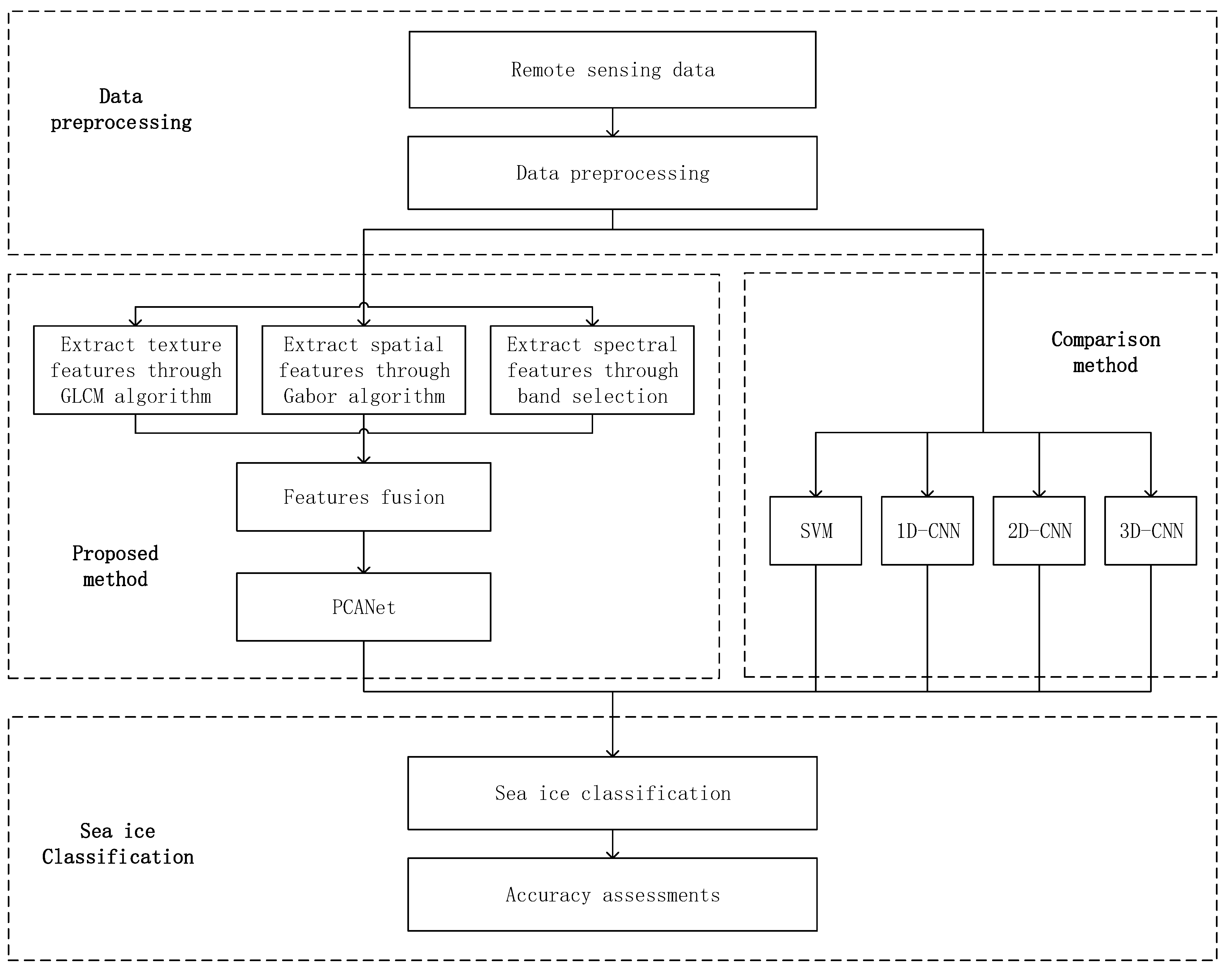

The proposed sea ice classification framework based on spectral-spatial-joint features is shown in Figure 1. It includes four main parts: the extraction of textural features based on GLCM, the extraction of spatial features based on Gabor filters, the selection of spectral features based on the band selection algorithm, and feature extraction and classification based on PCANet. Firstly, the principal component analysis is used to reduce the dimensionality, and then the GLCM and Gabor filter are used to extract textural and spatial features from hyperspectral images. The band selection algorithm and correlation analysis are used to extract the subsets of the main spectral bands from the original data. Then, the spatial and spectral feature information extracted from the two branches are fused as the input of PCANet and classified by the SVM. Finally, classification accuracy is evaluated. This algorithm is described in subsequent sections.

2.1. Texture Feature Extraction

Texture refers to small, semi-periodic, or regularly arranged patterns that exist in a certain range of images. It is one of the main features of image processing and pattern recognition. Texture is usually used to represent the uniformity, meticulousness, and roughness of images [10]. The textural features of an image reflect the attributes of the image itself and help to distinguish it.

Textural features refer to changes in the gray values of the image, which are related to spatial statistics. The GLCM is a method of extracting textural features based on statistics. It describes the gray-level relation matrix between a pixel and adjacent pixels within a certain distance in the local or overall area of the image [22]. It reflects the joint distribution probability of pixel pairs in the image, which can be expressed as follows:

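$$P(i, j, d, \theta) = \#\{[(x, y), (x + \Delta x, y + \Delta y)] \mid f(x, y) = i,\ f(x + \Delta x, y + \Delta y) = j;\ x = 0, 1, \ldots, N_x - 1;\ y = 0, 1, \ldots, N_y - 1\} \tag{1}$$

In Equation (1), $P(i, j, d, \theta)$ represents the number of times that pixel pairs appear along the direction angle $\theta$, where the gray values of the pixel pair are $i$ and $j$, respectively; $i, j \in \{0, 1, \ldots, N_g - 1\}$, where $N_g$ is the number of quantized gray levels; $(x, y)$ are the coordinates of a pixel in the image as a whole, with gray value $f(x, y)$; $\Delta x$ and $\Delta y$ are the horizontal and vertical offsets, respectively; $N_x$ and $N_y$ are the numbers of columns and rows of the image, respectively; $d$ is the distance between the pixels of a pair; and $\theta$ is the direction angle of the displacement, which is generally set as 0°, 45°, 135°, or 180° [22].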

After calculating the GLCM, some statistics are usually constructed as texture classification features instead of applying the matrix directly. In this paper, eight typical texture scalars are extracted, which are the mean, variance, homogeneity, contrast, dissimilarity, entropy, angular second moment, and correlation.
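As a rough illustration of the two steps above (counting co-occurrences, then reducing the matrix to scalar statistics), the following minimal sketch uses plain Python on a toy 4 × 4 image with four gray levels. The function names `glcm` and `texture_stats`, the toy image, and the choice of a non-symmetric count are assumptions made for the example; the homogeneity formula shown is one common variant, and only four of the eight statistics are included.

```python
import math

def glcm(image, dx, dy, levels):
    """Count co-occurrences of gray levels (i, j) at pixel offset (dx, dy)."""
    rows, cols = len(image), len(image[0])
    counts = [[0] * levels for _ in range(levels)]
    for y in range(rows):
        for x in range(cols):
            x2, y2 = x + dx, y + dy
            if 0 <= x2 < cols and 0 <= y2 < rows:
                counts[image[y][x]][image[y2][x2]] += 1
    return counts

def texture_stats(counts):
    """Normalize the GLCM to probabilities and derive four scalar statistics."""
    total = sum(map(sum, counts))
    p = [[c / total for c in row] for row in counts]
    cells = [(i, j, v) for i, row in enumerate(p) for j, v in enumerate(row)]
    return {
        "contrast": sum(v * (i - j) ** 2 for i, j, v in cells),
        "homogeneity": sum(v / (1 + (i - j) ** 2) for i, j, v in cells),
        "asm": sum(v * v for _, _, v in cells),  # angular second moment
        "entropy": -sum(v * math.log(v) for _, _, v in cells if v > 0),
    }

# Toy image already quantized to 4 gray levels (hypothetical data).
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 2, 2, 2],
    [2, 2, 3, 3],
]
counts = glcm(image, dx=1, dy=0, levels=4)  # distance 1, direction 0 degrees
stats = texture_stats(counts)
```

In a sliding-window setting, the same two functions would be applied to each window, and the resulting statistics assigned to the window's center pixel.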

2.2. Spatial Feature Extraction

The Gabor filters can effectively obtain representative frequencies and directions in the spatial domain [2]. Therefore, the establishment of a group of Gabor filters with different frequencies and directions is conducive to the accurate extraction of spatial information features. As a linear filter, the Gabor is suitable for the expression and separation of features. By extracting relevant features at different scales and in different directions in the frequency domain, it can achieve multi-scale and multi-direction features of images.

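Generally speaking, the Gabor filter is defined by the Gaussian kernel function, as shown in Equation (2). Its components are divided into a real part and an imaginary part. The real part can smooth the image, while the imaginary part can be used for edge detection, as shown in Equations (3) and (4).

$$g(x, y; \lambda, \theta, \psi, \sigma, \gamma) = \exp\left(-\frac{x'^2 + \gamma^2 y'^2}{2\sigma^2}\right) \exp\left(i\left(2\pi\frac{x'}{\lambda} + \psi\right)\right) \tag{2}$$

$$g_{\mathrm{real}}(x, y; \lambda, \theta, \psi, \sigma, \gamma) = \exp\left(-\frac{x'^2 + \gamma^2 y'^2}{2\sigma^2}\right) \cos\left(2\pi\frac{x'}{\lambda} + \psi\right) \tag{3}$$

$$g_{\mathrm{imag}}(x, y; \lambda, \theta, \psi, \sigma, \gamma) = \exp\left(-\frac{x'^2 + \gamma^2 y'^2}{2\sigma^2}\right) \sin\left(2\pi\frac{x'}{\lambda} + \psi\right) \tag{4}$$

In the above equations, $x' = x\cos\theta + y\sin\theta$ and $y' = -x\sin\theta + y\cos\theta$; $(x, y)$ is the pixel position relative to the center point of the Gaussian kernel; $\lambda$ is the wavelength, which is usually not greater than one-fifth of the size of the input image; $\theta$ is the direction, ranging from 0° to 360°; $\psi$ is the phase offset, which mainly accounts for possible rotation of the sample and increases robustness to rotation; $\sigma$ is the standard deviation of the Gaussian function; and $\gamma$ represents the spatial aspect ratio, which determines the ellipticity of the shape of the Gabor function.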

After principal component analysis of the original data, a set of Gabor filters with fixed parameters, chosen after adjustment and optimization, was applied to the resulting principal component images to extract spatial information.
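To make the filter bank concrete, the sketch below samples the real and imaginary parts of the standard Gabor function on a small grid and builds a bank over several wavelengths and directions. It is only an illustration: the function name `gabor_kernel`, the 7 × 7 kernel size, and all parameter values are assumptions chosen for the example, not the settings used in this paper.

```python
import math

def gabor_kernel(size, lam, theta, psi, sigma, gamma):
    """Return (real, imag) kernels of shape size x size (size must be odd)."""
    half = size // 2
    real, imag = [], []
    for y in range(-half, half + 1):
        real_row, imag_row = [], []
        for x in range(-half, half + 1):
            # Rotate coordinates by the filter direction theta.
            xr = x * math.cos(theta) + y * math.sin(theta)
            yr = -x * math.sin(theta) + y * math.cos(theta)
            envelope = math.exp(-(xr**2 + (gamma * yr) ** 2) / (2 * sigma**2))
            phase = 2 * math.pi * xr / lam + psi
            real_row.append(envelope * math.cos(phase))  # smoothing part
            imag_row.append(envelope * math.sin(phase))  # edge-sensitive part
        real.append(real_row)
        imag.append(imag_row)
    return real, imag

# Hypothetical bank: 2 wavelengths x 4 directions (0, 45, 90, 135 degrees).
bank = [
    gabor_kernel(size=7, lam=lam, theta=k * math.pi / 4,
                 psi=0.0, sigma=2.0, gamma=0.5)
    for lam in (3.0, 5.0)
    for k in range(4)
]
```

Each kernel in the bank would then be convolved with a principal component image, giving one spatial feature map per scale and direction.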

2.3. Band Selection

Generally speaking, a reduction in the dimensionality of hyperspectral data can be achieved by feature extraction or feature selection (band selection). Band selection can effectively reduce the amount of redundant information without affecting the original content by selecting a group of representative bands in the hyperspectral image [23]. This paper adopts a band selection algorithm based on a hybrid strategy. The purpose of this algorithm is to select the best band combinations that provide a large amount of information, less relevance, and strong category separability. The main idea is to find the best band division using the evaluation criterion function, and then evaluate all bands using the mixed strategy of clustering and sorting. Finally, the bands with the highest level in each division are used to form the final best band combination [24].

In this paper, two objective functions, the Normalized Cut and Top-Rank Cut, are used to divide bands. The smaller the value of the former, the higher the intra-group correlation and the lower the inter-group correlation of each band combination. The smaller the value of the latter, the lower the overall correlation. Both of these partitioning functions are beneficial for subsequent sorting selection. In the mixed strategy, we adopt two ranking-based methods, the maximum variance principal component analysis (MVPCA) and information entropy (IE), and one clustering-based method, the enhanced fast density-peak-based clustering (E-FDPC).
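The "partition, rank, then pick the top band of each group" idea can be sketched in a few lines. The example below is a deliberate simplification: it assumes the band partition is already given (rather than found with the Normalized Cut or Top-Rank Cut criteria) and ranks bands only by information entropy. The names `band_entropy` and `select_bands` and the toy data are hypothetical.

```python
import math
from collections import Counter

def band_entropy(values):
    """Shannon entropy (in bits) of the gray-level histogram of one band."""
    counts = Counter(values)
    n = len(values)
    return -sum(c / n * math.log2(c / n) for c in counts.values())

def select_bands(bands, groups):
    """Pick the highest-entropy band index from each group of band indices."""
    return [max(group, key=lambda b: band_entropy(bands[b])) for group in groups]

# Four toy bands, each flattened to a list of quantized pixel values.
bands = [
    [0, 0, 0, 0, 0, 0, 0, 1],  # nearly constant
    [0, 1, 2, 3, 0, 1, 2, 3],  # four equally likely levels: 2 bits
    [5, 5, 5, 5, 6, 6, 6, 6],  # two equally likely levels: 1 bit
    [7, 7, 7, 7, 7, 7, 7, 7],  # constant: 0 bits
]
groups = [[0, 1], [2, 3]]      # hypothetical partition into two groups
selected = select_bands(bands, groups)
```

Swapping the `key` function for a variance-based or density-peak-based score would give the other ranking criteria the same interface.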

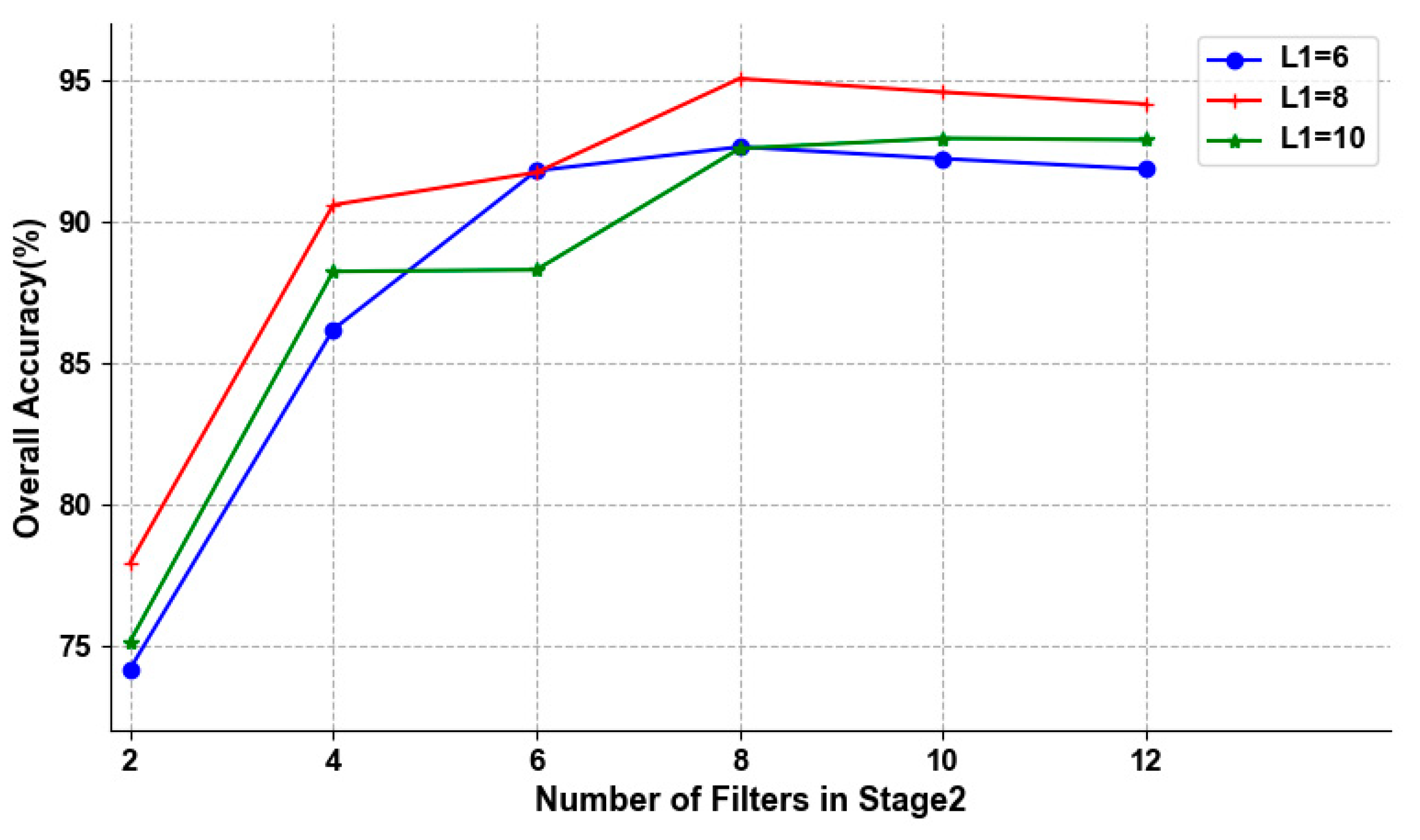

2.4. PCANet

Compared with conventional convolutional neural networks, the PCANet deep learning framework is highly competitive in terms of feature extraction. It trains a simple network to learn low-level features from the data. These low-level features constitute high-level features with abstract meaning and invariance, which is beneficial for image classification, target detection, and other tasks [25]. In hyperspectral image classification, PCANet uses adaptively learned principal component analysis filters as its convolution filter bank, while hash binarization mapping and the block histogram enhance feature separation and reduce the feature dimension, respectively [21]. At the same time, the spatial pyramid is used to extract the invariant features, and finally, the SVM classifier is used to output the classification results. The specific network structure includes PCA convolution layers, a nonlinear processing layer (NP layer), and a feature pooling layer (FP layer), as shown in Figure 2.

Assume that our input training set contains $N$ images $\{I_i\}_{i=1}^{N}$ of size $m \times n$ and that the filter size in all convolutional layers is $k_1 \times k_2$. We need to learn the principal component analysis filters from $\{I_i\}_{i=1}^{N}$, and the following text introduces the proposed architecture.

2.4.1. The First PCA Convolution Layer

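First, each pixel in the input image $I_i$ (of size $m \times n$) is sampled with a $k_1 \times k_2$ block, and these sample blocks are then vectorized and cascaded to get a $k_1 k_2 \times mn$ block. The sample blocks of image $I_i$ are expressed as $x_{i,1}, x_{i,2}, \ldots, x_{i,mn}$, where $x_{i,j} \in \mathbb{R}^{k_1 k_2}$, $j = 1, 2, \ldots, mn$. Then, the mean is removed from each sample block to obtain $\bar{X}_i = [\bar{x}_{i,1}, \bar{x}_{i,2}, \ldots, \bar{x}_{i,mn}]$, where $\bar{x}_{i,j}$ is a sample block with the average value removed. Then, the same processing is done on the rest of the images. Finally, the training sample matrix is obtained, as shown in Equation (5), where $N$ is the number of training images.

$$X = [\bar{X}_1, \bar{X}_2, \ldots, \bar{X}_N] \in \mathbb{R}^{k_1 k_2 \times Nmn} \tag{5}$$

The PCA algorithm minimizes the reconstruction error by looking for a set of orthonormal filters. We assume that the number of filters in the $i$-th layer is $L_i$, so the reconstruction error can be expressed by Equation (6), where $I_{L_1}$ is the identity matrix of size $L_1 \times L_1$.

$$\min_{V \in \mathbb{R}^{k_1 k_2 \times L_1}} \left\| X - V V^{T} X \right\|_F^2, \quad \text{s.t. } V^{T} V = I_{L_1} \tag{6}$$

Similarly to the classic principal component analysis algorithm, the first $L_1$ eigenvectors of the covariance matrix of matrix $X$ need to be obtained, so the representation of the corresponding filter is shown in Equation (7), where the $\mathrm{mat}_{k_1, k_2}(\cdot)$ function is used to transform the vector into a $k_1 \times k_2$ matrix, and $q_l(X X^{T})$ represents the $l$-th principal eigenvector of $X X^{T}$.

$$W_l^1 = \mathrm{mat}_{k_1, k_2}\left(q_l(X X^{T})\right) \in \mathbb{R}^{k_1 \times k_2}, \quad l = 1, 2, \ldots, L_1 \tag{7}$$

The output of the first layer is shown by Equation (8), where $*$ represents the convolution operation and $I_i$ is the $i$-th input image.

$$I_i^l = I_i * W_l^1, \quad i = 1, 2, \ldots, N \tag{8}$$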
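The filter-learning step of this layer (collect patches, remove each patch mean, take the leading eigenvectors, reshape them into filters) can be sketched with NumPy as follows. This is a minimal illustration under stated assumptions: odd filter sizes, zero padding so that every pixel yields one patch, and random toy images. The function name `learn_pca_filters` is hypothetical and not taken from the paper.

```python
import numpy as np

def learn_pca_filters(images, k1, k2, num_filters):
    """Learn num_filters PCA filters of size k1 x k2 from same-sized 2-D images."""
    columns = []
    for img in images:
        # Zero-pad so that every pixel is the center of one k1 x k2 patch.
        padded = np.pad(img, ((k1 // 2, k1 // 2), (k2 // 2, k2 // 2)))
        patches = np.lib.stride_tricks.sliding_window_view(padded, (k1, k2))
        patches = patches.reshape(-1, k1 * k2).T        # shape (k1*k2, m*n)
        columns.append(patches - patches.mean(axis=0))  # remove each patch mean
    X = np.hstack(columns)                              # shape (k1*k2, N*m*n)
    eigvals, eigvecs = np.linalg.eigh(X @ X.T)          # eigenvalues ascending
    top = eigvecs[:, ::-1][:, :num_filters]             # leading eigenvectors
    return top.T.reshape(num_filters, k1, k2)           # one filter per row

rng = np.random.default_rng(0)
images = [rng.random((8, 8)) for _ in range(5)]         # toy training images
filters = learn_pca_filters(images, k1=3, k2=3, num_filters=4)
```

Because the filters come from an eigendecomposition rather than gradient descent, no learning rate, initialization, or iteration count needs to be tuned, which is the source of the training efficiency discussed in the Introduction.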

2.4.2. The nth PCA Convolution Layer

The subsequent convolution layers follow the same process as the first layer. First, the output $I_i^l$ of the previous layer is calculated. Then, its edges are zero-padded to ensure that it is the same size as the original image. The output is subjected to operations such as block sampling, cascading, and mean removal. Similarly, the eigenvectors and output of the $n$-th layer are obtained. It is worth noting that since the first layer has $L_1$ filters, the first layer generates $L_1$ output matrices, and the second layer generates $L_2$ outputs for each output of the first layer, so the number of outputs accumulates layer by layer. For each sample, the two-layer PCANet generates $L_1 L_2$ output feature matrices, and the final output feature is expressed as shown in Equation (9), where $W_\ell^2$ is the $\ell$-th filter of the second layer.

$$O_i^l = \left\{ I_i^l * W_\ell^2 \right\}_{\ell=1}^{L_2}, \quad l = 1, 2, \ldots, L_1 \tag{9}$$

Thus, it can be seen that the individual convolutional layers of the PCANet are structurally similar, which facilitates the construction of the multi-layer deep network.
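The layer-by-layer growth in the number of outputs can be illustrated with a small dependency-free sketch. Here `convolve_same` is an assumed helper that performs zero-padded convolution with odd-sized kernels, and the "filters" are simple identity and shift kernels standing in for learned PCA filters; none of these names or values come from the paper.

```python
def convolve_same(image, kernel):
    """Zero-padded 2-D convolution that keeps the input size (odd kernels)."""
    rows, cols = len(image), len(image[0])
    kr, kc = len(kernel), len(kernel[0])
    out = [[0.0] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            acc = 0.0
            for i in range(kr):
                for j in range(kc):
                    rr, cc = r + kr // 2 - i, c + kc // 2 - j
                    if 0 <= rr < rows and 0 <= cc < cols:  # zeros outside
                        acc += kernel[i][j] * image[rr][cc]
            out[r][c] = acc
    return out

def two_stage_outputs(image, stage1, stage2):
    """Apply every stage-2 filter to every stage-1 output (L1 x L2 maps)."""
    first = [convolve_same(image, w) for w in stage1]
    return [[convolve_same(o, w) for w in stage2] for o in first]

identity = [[0, 0, 0], [0, 1, 0], [0, 0, 0]]
shift = [[0, 0, 0], [0, 0, 1], [0, 0, 0]]  # moves content one pixel right
image = [[1, 2, 3], [4, 5, 6], [7, 8, 9]]
outputs = two_stage_outputs(image, stage1=[identity, shift],
                            stage2=[identity, shift, identity])
```

With two stage-1 filters and three stage-2 filters, `outputs` holds 2 × 3 = 6 maps, all of the same size as the input.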

2.4.3. Nonlinear Processing Layer

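In the preceding steps, the features were obtained through the convolution layers; a hash algorithm is then used to perform nonlinear processing on the output of the last convolution layer. The specific operation used is as follows: binary hash encoding is carried out on each output to re-encode the pixel values and enhance the separation between different features. For each first-layer output $I_i^l$, the $L_2$ binarized images form, at every pixel, a binary vector of length $L_2$, and each vector is converted to a decimal value as the output of the nonlinear processing layer. The function is shown below:

$$T_i^l = \sum_{\ell=1}^{L_2} 2^{\ell - 1} H\left(I_i^l * W_\ell^2\right) \tag{10}$$

where $H(\cdot)$ is the unit step function, which equals 1 for positive inputs and 0 otherwise, and $W_\ell^2$ is the $\ell$-th filter of the second layer.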
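The binary hashing step can be illustrated on toy data. In this minimal sketch, three small real-valued maps stand for the second-layer outputs that belong to one first-layer output; each is binarized with a step function and weighted by a power of two. The function name `hash_encode` and the numbers are hypothetical.

```python
def hash_encode(maps):
    """Combine several real-valued maps into one integer map via binary hashing."""
    rows, cols = len(maps[0]), len(maps[0][0])
    encoded = [[0] * cols for _ in range(rows)]
    for bit, fmap in enumerate(maps):       # the first map gets weight 2**0
        for r in range(rows):
            for c in range(cols):
                if fmap[r][c] > 0:          # step function: 1 for positive values
                    encoded[r][c] += 1 << bit
    return encoded

maps = [
    [[0.5, -1.0], [2.0, -0.1]],   # weight 1
    [[-0.3, 0.7], [1.5, -2.0]],   # weight 2
    [[1.2, 0.4], [-0.6, -0.9]],   # weight 4
]
encoded = hash_encode(maps)       # values lie in the range 0 .. 2**3 - 1
```

For the top-left pixel, the first and third maps are positive, so the code is 1 + 4 = 5.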

2.4.4. Feature Pooling Layer

After the nonlinear processing layer, each output matrix $T_i^l$ is divided into $B$ blocks, and the histogram information of the decimal values in each block is calculated and cascaded into a vector $\mathrm{Bhist}(T_i^l)$ to obtain the final block expansion histogram features, as shown in Equation (11).

$$f_i = \left[\mathrm{Bhist}(T_i^1), \mathrm{Bhist}(T_i^2), \ldots, \mathrm{Bhist}(T_i^{L_1})\right]^{T} \in \mathbb{R}^{2^{L_2} L_1 B} \tag{11}$$

Histogram features add some stability to the variation in the features extracted by PCANet while reducing the feature dimension [26]. In order to improve the multi-scale property of the spatial features, a spatial pyramid module is added after the block histogram to give the features a better distinguishing ability. Finally, the SVM classifier is selected to get the final output.
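A block histogram over an integer-coded map can be sketched as follows. The example assumes non-overlapping square blocks for simplicity (overlapping blocks are also possible), and the function name `block_histogram` and the toy map are hypothetical.

```python
def block_histogram(encoded, block, num_bins):
    """Concatenate per-block histograms of an integer-coded map."""
    rows, cols = len(encoded), len(encoded[0])
    feature = []
    for r0 in range(0, rows, block):
        for c0 in range(0, cols, block):
            hist = [0] * num_bins
            for r in range(r0, min(r0 + block, rows)):
                for c in range(c0, min(c0 + block, cols)):
                    hist[encoded[r][c]] += 1
            feature.extend(hist)            # cascade block histograms
    return feature

# Toy hash-coded map with values in 0..3 (i.e., two binary maps combined).
encoded = [
    [0, 1, 3, 3],
    [1, 1, 2, 3],
    [0, 0, 2, 2],
    [0, 3, 2, 1],
]
feature = block_histogram(encoded, block=2, num_bins=4)
```

The feature length is the number of blocks times the number of bins (here 4 × 4 = 16), independent of where a value occurs inside a block, which is what gives the pooled feature some tolerance to small shifts.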

2.5. Algorithm Process Description

Following the framework described above, the specific implementation process of Algorithm 1 used in this paper is as follows:

Algorithm 1. The Proposed Algorithm Process.

Begin

Input: the labeled sample set with a size of $M \times N \times B$, where $M \times N$ is the size of the input image and $B$ is the number of bands.

A. Feature extraction and fusion

- (1) Based on the band selection algorithm, the original bands are screened to obtain the optimal band combination, and the spectral features Fs are extracted;

- (2) The principal component analysis algorithm is used to process the original data, and the first principal component PC is obtained;

- (3) Using the gray-level co-occurrence matrix algorithm, the gray-level co-occurrence matrix of the sliding window on the principal component PC is calculated in each direction at angle θ and step d, and the texture value of the center pixel is obtained;

- (4) Repeat (3) until the principal component area is completely covered by the sliding window;

- (5) The texture features at each direction angle θ are averaged to obtain the final texture feature matrix;

- (6) Repeat (5) until the five texture features are obtained and the extraction of texture features Fg1 has been completed;

- (7) According to the Gabor algorithm, set a group of filter banks with scale s and direction θ;

- (8) Based on the results of (2), the responses of the principal component PC to the Gabor filters at various scales and directions are calculated to obtain the final spatial feature information;

- (9) Repeat (8) until sixteen spatial features have been obtained to complete the extraction of spatial features Fg2;

- (10) Fuse the features of (1), (6), and (9) to complete the extraction and fusion of features.

B. PCANet

- (1) Randomly select training samples with a certain training-to-testing ratio and input them into the pre-established PCANet network;

- (2) The first principal component analysis filter is used to obtain the high-level abstract features, and the filter parameters are learned to calculate the first-layer convolution output;

- (3) The output in (2) is taken as the input and (2) is repeated until all PCA filter layers have been calculated, and the output of the last layer has been obtained;

- (4) The separation between features is enhanced by hashing binarization: the output of the last layer is hash-encoded and converted to a decimal value;

- (5) The feature dimension is reduced by the block histogram, and the statistical histogram information is cascaded to get the final block extended histogram feature fi, and then the spatial pyramid is used to generate multi-scale spatial features;

- (6) The SVM classifier is used for classification.

Output: confusion matrix, overall accuracy (OA), Kappa value.

End
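The two scalar outputs of the algorithm, overall accuracy and the Kappa value, follow directly from the confusion matrix. The sketch below uses the standard definitions (OA as the fraction of correctly classified samples, Cohen's kappa as agreement corrected for chance); the function name and the example confusion matrix are hypothetical and are not results from this paper.

```python
def overall_accuracy_and_kappa(confusion):
    """Compute OA and Cohen's kappa from a square confusion matrix."""
    total = sum(map(sum, confusion))
    observed = sum(confusion[i][i] for i in range(len(confusion))) / total
    row_sums = [sum(row) for row in confusion]
    col_sums = [sum(col) for col in zip(*confusion)]
    # Agreement expected by chance from the row and column marginals.
    expected = sum(r * c for r, c in zip(row_sums, col_sums)) / total**2
    kappa = (observed - expected) / (1 - expected)
    return observed, kappa

# Made-up 3-class confusion matrix (rows: reference, columns: prediction).
confusion = [
    [45, 5, 0],
    [4, 30, 6],
    [1, 5, 4],
]
oa, kappa = overall_accuracy_and_kappa(confusion)
```

Kappa is lower than OA here because part of the observed agreement would be expected by chance given how unevenly the classes are represented.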

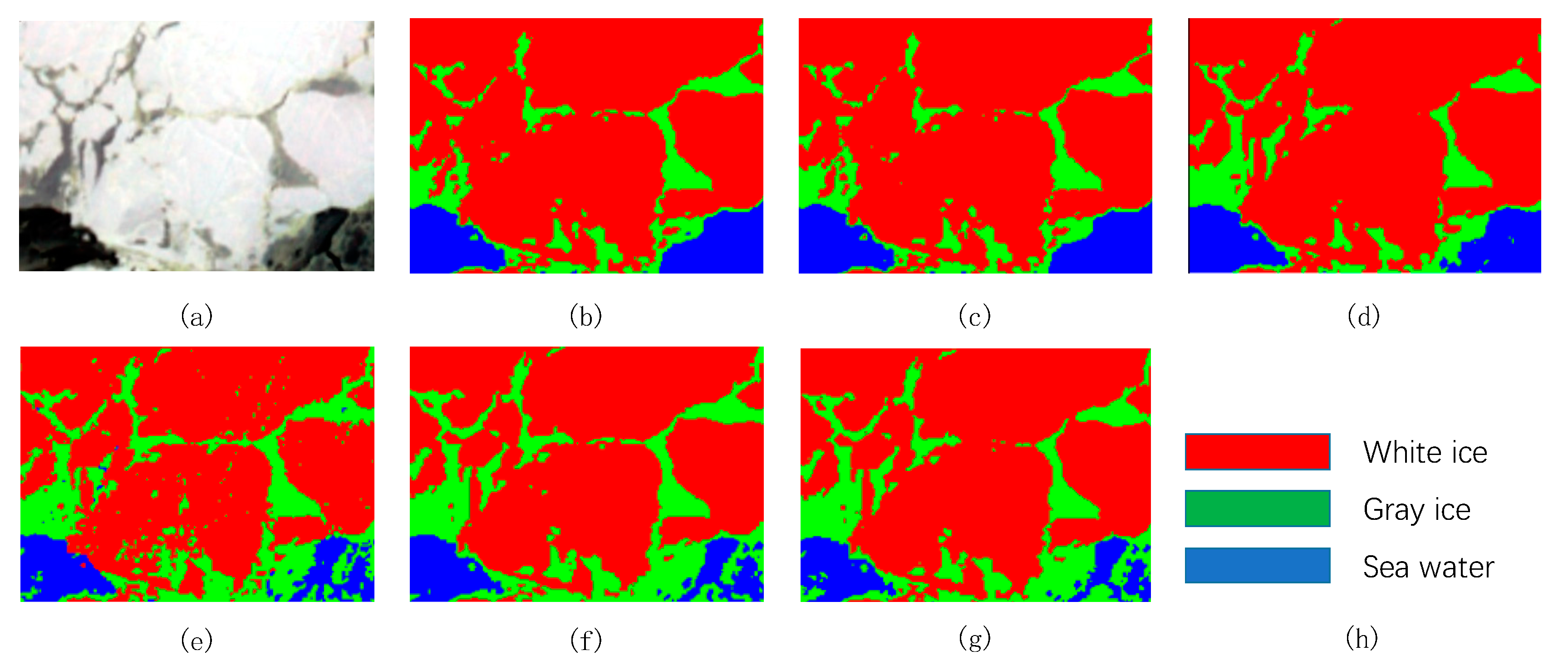

4. Conclusions

In the classification of hyperspectral remote sensing sea ice images, the cost of labeling samples is relatively high due to the limitation of environmental conditions. In addition, traditional sea ice classification methods mostly use a single feature and do not fully explore the deep spatial and spectral features hidden in the sea ice images, which limits the improvement of classification accuracy. To address these problems, this paper proposed a hyperspectral sea ice image classification method based on the spectral-spatial-joint feature with PCANet. The method uses fewer training samples to dig deeper into the textural, spatial, and spectral features implicit in hyperspectral data. The PCANet network was designed to produce improved sea ice image classification compared with other remote sensing image classification methods. The experimental results show that the proposed method can efficiently extract the deep features of remote sensing sea ice images with fewer training samples, and it has a better overall classification performance. Thus, it could be used as a new model for the classification of remote sensing sea ice images. The main conclusions are as follows:

- (1) Compared with sea ice image classification methods that only rely on spectral features, the proposed method, which is based on the GLCM and Gabor filter, can fully extract the spatial and texture features hidden in a sea ice image. It can enhance features, which makes it conducive to the classification of sea ice images.

- (2) The rich spectral information present in hyperspectral images provides data support for sea ice classification, but the high level of correlation between bands increases the computational cost, and the existence of the Hughes phenomenon also reduces the classification accuracy. In this paper, a band selection algorithm based on a hybrid strategy was adopted to remove redundant bands with a high level of correlation, and bands with a large amount of information and a low level of correlation were selected to extract deep spectral features, which improved the training efficiency.

- (3) Compared with other sea ice classification methods, the proposed method integrates the implicit spatial and spectral features in the sea ice image. The PCANet network uses adaptively learned principal component analysis filters as its convolution filter bank to mine the deep characteristics of sea ice. The hash binarization mapping and the block histogram enhance feature separation and reduce the feature dimension, respectively. The low-level features are further learned from the data and used to form high-level features with abstract significance and invariance for sea ice image classification. In this paper, it was shown that, using this method, a good sea ice classification effect can be achieved with fewer training samples and a relatively short training time.

The two datasets used in this paper are not covered by clouds. Generally, the influence of clouds is inevitable. Therefore, in future research, we will integrate microwave remote sensing data and make full use of the characteristics of synthetic aperture radar data that are not affected by clouds in order to enhance the extraction of sea ice features, and solve the problem of sea ice classification in complex environments such as those affected by clouds.