1. Introduction

Snow cover plays a fundamental role in mountainous regions, as it is a key factor for different processes, ranging from ecological processes to the energy balance of solar radiation. Moreover, it has a significant influence on hydrological regimes and affects tourism and other human activities related to the presence of snow. For these reasons, the study and monitoring of snow cover has relevant implications in the study of fauna and flora, for risk management, for the study of socio-economic models, and for basin-scale hydrological modelling [

1,

2,

3,

4,

5,

6,

7,

8,

9,

10]. Snow cover has also been indicated, by the Global Observing System for Climate (GCOS), as one of the 50 Essential Climate Variables (ECVs), as it is an important variable in monitoring climate change [

11]. In situ observations are not sufficient to characterize the properties and distribution of snow, particularly in mountainous areas that are subject to high variability and, often, inaccessibility. However, satellite observations can provide information on the different properties of snow and its distribution with high temporal and spatial continuity [

12,

13].

Snow detection through the use of optical sensors is related to the reflection/absorption properties of snow. This method suffers from limitations, due to cloud cover and solar illumination, but represents a consolidated field of study, in which detection algorithms have reached maturity [

14].

Most optical sensor snow detection algorithms are built using the normalized-difference snow index (NDSI), proposed by Dozier [

15], based on the spectral bands of the Landsat Thematic Mapper. This index exploits the spectral signature of snow in order to distinguish it from other land-use classes, which generally have a high reflectance in the visible spectral region, as well as distinguishing it from clouds, which (unlike snow) have a high reflectance in a portion of the shortwave infrared (SWIR) range. Different algorithms have been developed, based on this index, in order to derive many snow parameters, such as the snow cover binary product, the snow depth, the snow water equivalent, or the fractional snow cover [

14].

Most of the snow operational products utilize the imagery detected by the Moderate Resolution Imaging Spectroradiometer (MODIS) and the Advanced Very High Resolution Radiometer (AVHRR), which offer a daily temporal resolution, with a spatial resolution ranging between 250 m and 25 km for products at regional to global scale [

16,

17,

18,

19]. An algorithm that has been widely affirmed as suitable for use with different optical sensors is SCAmod, proposed by Metsämäki, based “on a semi-empirical model, where the at-satellite observed reflectance is expressed as a function of the fractional snow cover” [

19]. This model relies on a forest transmissivity map and, therefore, is particularly suitable for detecting snow in forested areas. At present, this method is implemented for Northern Hemisphere snow extent production in the Copernicus Cryoland project named GlobSnow, which exploits MODIS and Sentinel-3 data to produce daily snow cover extent maps at 500 m resolution. Another publicly available snow cover data set for the European Alps has been offered by EURAC [

16]. It provides two different daily products at 250 m resolution, based on MODIS data: EURAC_SNOW, where the algorithm performs the detection of snow cover in open areas based on the NDVI (normalized difference vegetation index), while in forested areas, it is based on a multi-temporal approach; and EURAC_SNOW_CLOUDREMOVAL, a nearly cloud-free version derived from the first product by means of a combination of temporal and spatial filters [

20].

The Landsat mission, on the other hand, offers high spatial resolution, but is limited by a review time of 16 days and further diminished by cloud cover, which is very common in temperate mountain areas [

21,

22,

23]. Therefore, it is inadequate for the monitoring of snowpack, which can undergo rapid changes. In a recent study, conducted in the same study area used in this paper, an NDSI-based algorithm for snow detection—namely, the Snow Observations from Space (SOfS) algorithm—was applied to combine cloud-free Landsat images stored in an Open Data Cube into monthly aggregates, which provide the products of the maximum snow cover area for a given month, in the period 1984–2018 [

24]. This multi-sensor and multi-temporal approach has proved useful for studying snow trends in time-series in the past; however, it is unsuitable for finer scale monitoring and the study of snow-influenced processes [

25]. Conversely, the Sentinel-2 mission provides systematic global acquisitions of high-resolution imagery of the Earth’s surface with a 5-day revisit time at the equator, allowing for outstanding multi-spectral observations and characterization of dynamic surface processes from local to global scales [

26]. Gascoin et al. [

27] developed a snow cover detection algorithm designed for Sentinel-2 images, based on the NDSI index in combination with the use of a digital elevation model, in order to define a snow altitude threshold, below which the presence of snow is excluded. This algorithm was adopted by the Copernicus Land Monitoring Service and is available at a pan-European scale under the name of High-Resolution Snow & Ice (HR-S & I) products. The validation of this algorithm has given excellent results, despite its tendency to underestimate the snow; however, it has been focused on clear-sky images and the challenge of reducing the misclassification between snow and cloud remains open [

27]. Previous studies have also based the validation of snow cover output maps on using reference higher resolution satellite images, field surveys, webcams, or meteorological data, assuming clear-sky conditions [

28,

29,

30,

31]. When these conditions are not verified, the main challenge of snow cover mapping through optical imagery is to avoid misclassifications between clouds and snow [

14,

27,

32].

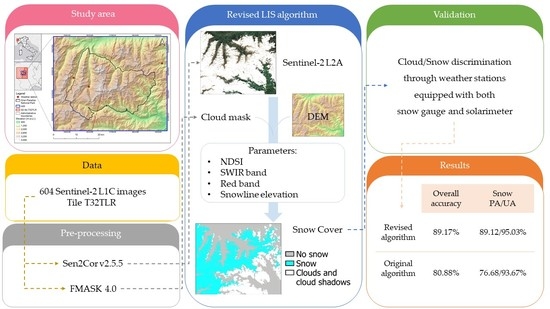

In this study, we revised the Let-It-Snow (LIS) algorithm in order to improve its discrimination between snow and cloud objects. In the pre-processing phase, while LIS adopts the MAJA algorithm [

32] for both atmospheric/topographic correction and cloud mask extraction, we introduced the Fmask algorithm [

33] and Sen2Cor [

34] processor. In the subsequent processing phase, the revised algorithm introduces a new parameter, based on the reflectance in the SWIR, to better discriminate snow from clouds. As a further improvement, the reduction of red-band noise is achieved by smoothing its values through a mean moving window, whereas the original algorithm adopts a band down-sampling technique. Finally, regardless of the amount of snow detected, the proposed algorithm produces an output map; that is, when restrictive threshold values are used for the NDSI, red band, and SWIR band, and a low amount of snow pixels are found (with respect to a defined quantitative threshold), the proposed algorithm provides an output snow cover map, whereas the original LIS algorithm produces no output in this case. This allows, in the case of very high cloud cover, for the detection of snow by applying only the most restrictive thresholds, thus reducing the occurrence of false positives. When the amount of snow pixels is greater than the aforementioned quantitative threshold, the algorithm adopts liberal thresholds for the NDSI, red band, and SWIR band, in order to improve the algorithm’s sensitivity for the detection of additional snow pixels. Another novelty is a contextual analysis, based on the introduction of a procedure that re-assigns clusters smaller than five pixels to the final label, based on neighboring pixels, in the final output.

We applied the revised algorithm to a mountainous study site located in the Italian north-western Alps, that is subject to frequent cloud cover. Finally, we used a new validation approach: investigating the classification accuracy, even in the case of cloudy observations, using ground data collected by a snow gauge and a solarimeter. The findings of the validation phase show that the introduction of the SWIR band can improve snow cover mapping in cloudy images.

The paper is structured as follows:

Section 2 describes the area of study, the data used, the revised algorithm, and its publication in an open framework, as well as the validation methodology. Validation was conducted using in situ data collected by a snow gauge and a solarimeter, the results of which are reported in

Section 3.

Section 4 discusses the improvements and limitations of this study. Finally,

Section 5 presents our conclusions and possible future developments.

2. Materials and Methods

2.1. Study Area and Data

2.1.1. Study Area

The Area of Interest (AOI) extends over 2065 km

2 between the Val d’Aosta and Piedmont regions in northern Italy, on the border with France (

Figure 1). This area includes the Gran Paradiso massif of the Alpi Graie, and has an elevation ranging from 400 m a.s.l., up to more than 4000 m a.s.l. at the mountain peaks. Glaciers and perennial snows dominate the landscape higher than 3000 m a.s.l. which, as in the rest of the Alpine chain, have been dramatically decreasing due to climate change.

The AOI is centered on Gran Paradiso National Park, the first National Park established in Italy, for the purpose of the conservation of the ibex (Capra ibex), which is also its symbol. The area is characterized by a continental climate, with severe winters lasting from November to April/May. Typically, towards the beginning of the snow season, ephemeral snowfall heralds the arrival of persistent snow, which forms a snowpack that can be greater than 1 m. The snow melting higher than 2000 m a.s.l. occurs, on average, in June. It is not unusual for snowfalls to occur out of season—even in the middle of summer—in which case, however, they have a duration limited to the meteorological event itself. Furthermore, given its topography, the area is often covered by clouds and changes in the weather can occur very quickly.

2.1.2. Data

The Sentinel-2 (S2) constellation is made up of twin satellites—S2A and S2B—each of which carries an optical imaging sensor multi-spectral instrument (MSI), which collects reflected radiance in 13 bands (from VIS to SWIR) at different resolutions, ranging from 10 to 60 m [

34]. The S2 satellites follow a sun-synchronous orbit at 786 km altitude, with a 290 km swath width. The combination of the two satellites allows for a revisit time of 5 days at the equator and an even shorter time in the mid-latitudes [

26]. The S2 images are freely available and are delivered in granules (called tiles) in the UTM/WGS84 projection, each with dimensions of 100 × 100 km

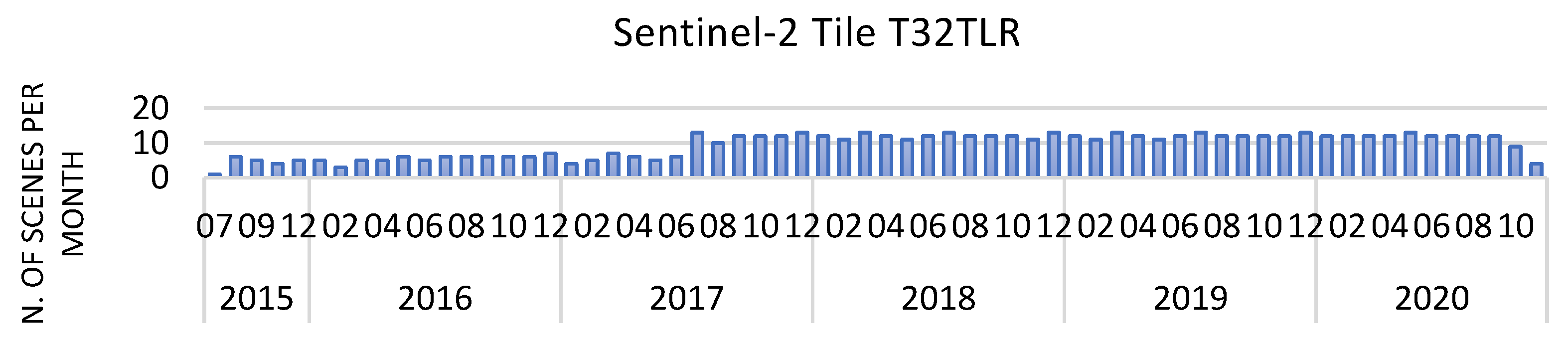

2. The AOI is entirely covered by the T32TLR tile. Thus, for this study, all the S2 L1C (top-of-atmosphere reflectance) data for the T32TLR tile available from the start of the mission (2015) to the start of the study (12 November 2020) were collected, for a total of 604 images (

Figure 2). The initial temporal coverage was 6 scenes per month, which has doubled since July 2017, when the twin satellite S2B became operational.

Given the complex topography of the territory, we decided to use a Digital Elevation Model (DEM) with high spatial resolution. A DEM was therefore developed using the reference system WGS84 UTM 32N at 6.6 m, by combining the following data:

Digital Terrain Model (DTM) at 2 m from the Val d’Aosta Region (DTM 2005/2008 aggregate);

DTM at 5 m from the Piedmont Region (RIPRESA AEREA ICE 2009–2011-DTM 5);

Copernicus DEM at 25 m (EU-DEM v1.1).

2.2. Workflow and Pre-Processing

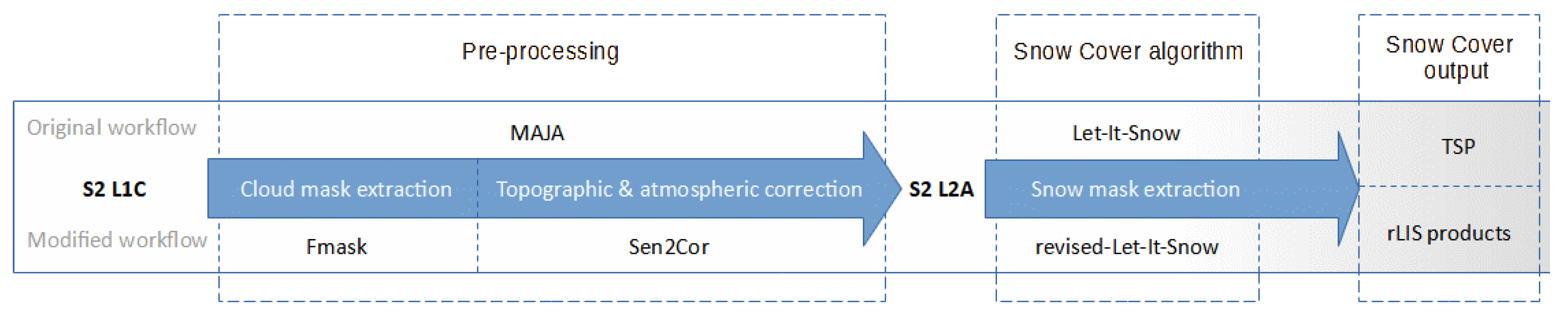

The original workflow, proposed by Gascoin et al. [

35], carried out S2 L1C pre-processing using the processor MAJA (MACCS-ATCOR Joint Algorithm), a multi-temporal cloud detection and topographic/atmospheric correction software package produced through the joint effort of CNES, CESBIO, and DLR [

32]. This workflow has been implemented in the MUSCATE scheduler, which manages the production and distribution of the Theia Snow Products [

27], a collection of ready-to-use snow cover products.

In this study, we used a different workflow (

Figure 3), which involves the use of two processes: one for cloud cover extraction and one for atmospheric and topographic correction. The cloud cover mask was extracted using Fmask v4.0 [

33] (function of mask), software for automated cloud, cloud shadow, snow, and water masking for Landsat 4–8 and Sentinel 2 images. The cloud probability threshold was set to 40%, and the dilation parameters for clouds, cloud shadows, and snow were set to 1, 1, and 0 pixels, respectively.

This choice was driven by the following concerns: previous studies have found that Fmask and MAJA performed similarly in cloud mapping [

36,

37]; however, MAJA performs at 240 m and, due to processing at a lower resolution, thin clouds with a size of about 100 m may be omitted in the MAJA cloud mask [

36]. Moreover, a pre-compiled Windows version of MAJA is not available, while Fmask has been distributed for both Windows and Linux, including standalone versions with or without a graphical user interface (GUI). The S2 L1C images were atmospherically and topographically corrected through Sen2Cor v2.5.5 [

34], using the high-resolution DEM described above as auxiliary data, in order to improve the correction performance [

38,

39], thus obtaining the S2 Level 2A images.

Revised Let-It-Snow Algorithm

To extract the snow cover, the Let-It-Snow (LIS) algorithm, developed by Gascoin et al. [

35] and based on the NDSI index [

15], was first implemented in the R programming language. We introduced some changes to the LIS algorithm, leading to the proposed algorithm, which was named revised Let-It-Snow (rLIS).

Here, we briefly describe the rLIS algorithm, focusing primarily on its differences from the original algorithm. For more details and a full description of the algorithm, please refer to the specific documentation [

35].

The rLIS algorithm requires three inputs:

An S2 L2A flattened surface bottom-of-atmosphere, in particular the green (band 3); red (band 4), and SWIR (band 11) bands (

Table 1);

The cloud cover mask generated by Fmask [

33];

A DEM.

First, the DEM is pre-processed to the same resolution as the S2 L2A 20 m bands. Then, the NDSI is calculated, from the atmospheric and topographically corrected green and SWIR bands at 20 m resolution, as:

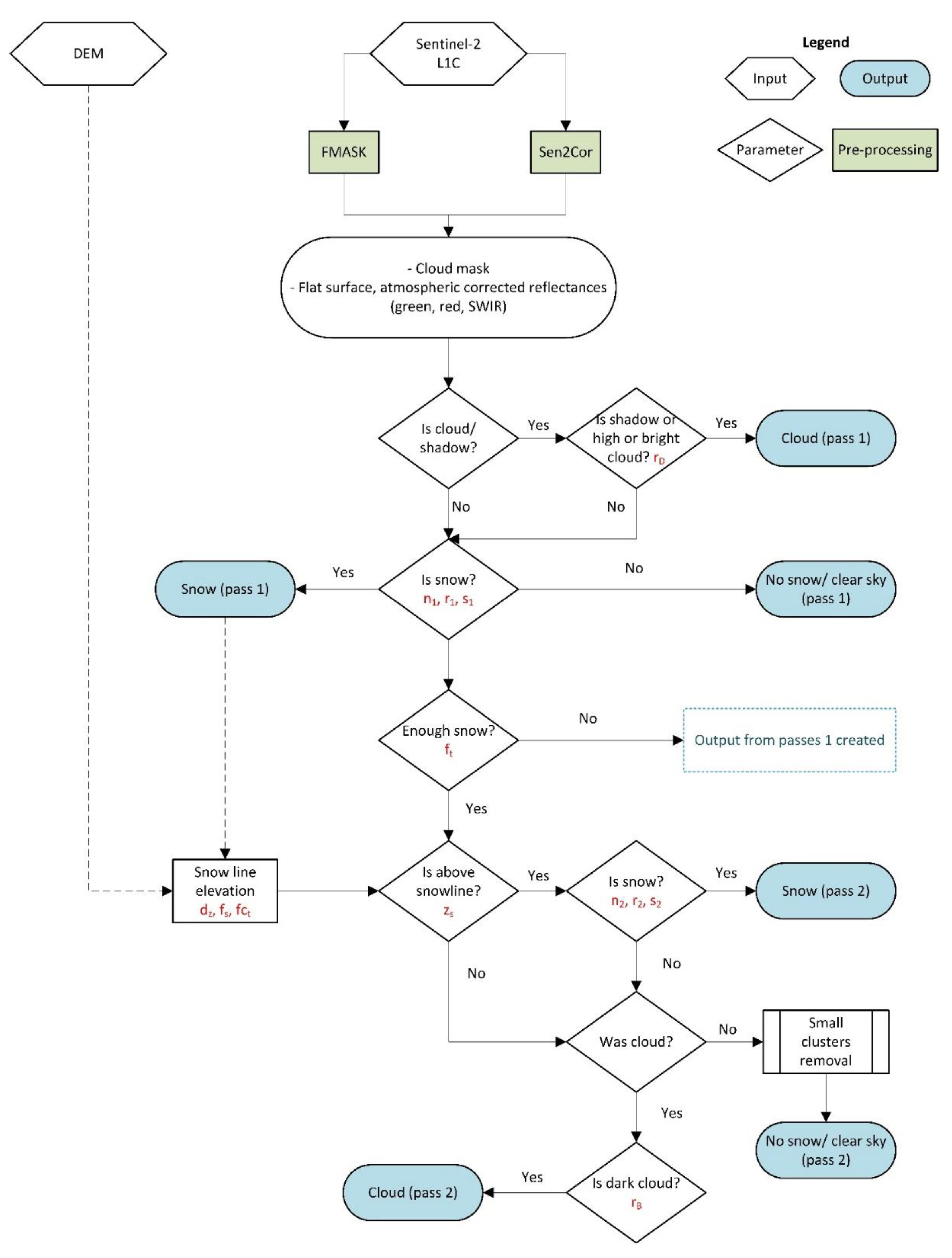

An important step involves searching for possible snow-covered areas under thin and transparent clouds, defined as “dark clouds”. Hence, in the “cloud pass 1” step, the dark clouds are searched for among all the pixels classified as clouds by Fmask, excluding only the pixels classified a priori as cloud shadows (i.e., 2,

Table 2). In the original algorithm, in addition to the cloud shadows, the high-altitude (or cirrus) clouds are excluded a priori from the computation. The “dark clouds” can be identified through use of a threshold, r

D, which is applied to the red band after reducing its noise through an average 3 × 3 moving window filter; in the original LIS process, the smoothing of anomalies is performed by down-sampling the red band by a factor of 12.

Therefore, the pixels flagged as clouds (i.e., 4,

Table 2) in the Fmask cloud mask that respond in the red band with a signal greater than r

D (

Table 3) are temporarily removed from the cloud mask, and are investigated for the possible presence of snow with the rest of the image. These pixels are identified as “dark cloud” pixels. The pixels of the cloud with the red band reflectance lower than r

D are excluded from further investigations.

In the following step, called “snow pass 1” (

Figure 4), the snow cover is identified by applying restrictive thresholds in order to minimize false snow detection. Thus, a pixel is classified as snow if the following conditions are fulfilled:

NDSI > n1;

red band > r1;

SWIR band < s1.

As turbid water and cold clouds have NDSI values similar to snow, the red reflectance is used to avoid the misclassification of turbid water surfaces, while the SWIR reflectance is used to avoid cold clouds from being confused with snow. The SWIR band thresholds used in the snow detection steps are a novel feature of rLIS, absent in the original algorithm (

Table 3). While the values of classification parameters based on fixed thresholds remained unchanged from those in the original algorithm, for comparison purposes, the new parameter values (i.e., those based on SWIR reflectance) were established using a two-step procedure. First, the distribution of the SWIR spectral response of homogeneous cloud areas was analyzed in order to determine a range of suitable values. Then, these values were tested, using a trial-and-error approach, to refine the selection until the final values were identified.

At this point, if the snow detected in the entire image is greater than the threshold f

t (see

Table 3), the algorithm proceeds with the calculation of the snowline elevation; otherwise, it stops. The output is produced by combining “cloud pass 1” and “snow pass 1” (

Figure 4). As the AOI is located in an alpine environment, the threshold f

t is almost always overcome, due to the presence of glaciers and perennial snow. When the image is almost or totally cloudy, f

t is not overcome and, therefore, the most restrictive step (i.e., “snow pass 1”) is maintained while the less restrictive one (i.e., “snow pass 2”) is skipped, in order to lower the likelihood of confusion between clouds and snow. In the LIS algorithm, on the other hand, if the total snow threshold f

t is not passed, the algorithm stops, but produces no output.

Thereafter, the snowline elevation is identified by calculating the percentage of snow present for each 100 m (dz) altitude band, with respect to the percentage of pixels free from clouds (fct), thus establishing the altitude limit below which the chance of snow is zero.

The snowline elevation (z

s) is defined as two altitudinal bands below the minimum identified band (d

min) in which the snow that is present is greater than the threshold f

s:

Then, the snow detection is performed a second time, using less restrictive thresholds and introducing the previously identified altitude limit, zs. In this step (called “snow pass 2”), a pixel is classified as snow if it meets the following conditions:

NDSI > n2;

red band > r2;

SWIR band < s2;

elevation > zs.

Hence, the previously identified dark cloud pixels that meet the conditions described above are now classified as snow. The remaining dark cloud pixels will return clouds (if they respond in the red band with a reflectance greater than the threshold r

B) or are classified as “No snow,” otherwise. The last step is the reclassification of the “No snow” pixel clusters smaller than 5 pixels (equal to 2000 m

2), based on the values of the neighboring cells, in order to eliminate any unreliable pixels from the final mask. This passage allows for the elimination of small gaps, which are present mainly in clouds and potentially subject to noisy reflectance and haze. The final output is a 20 m resolution raster mask (

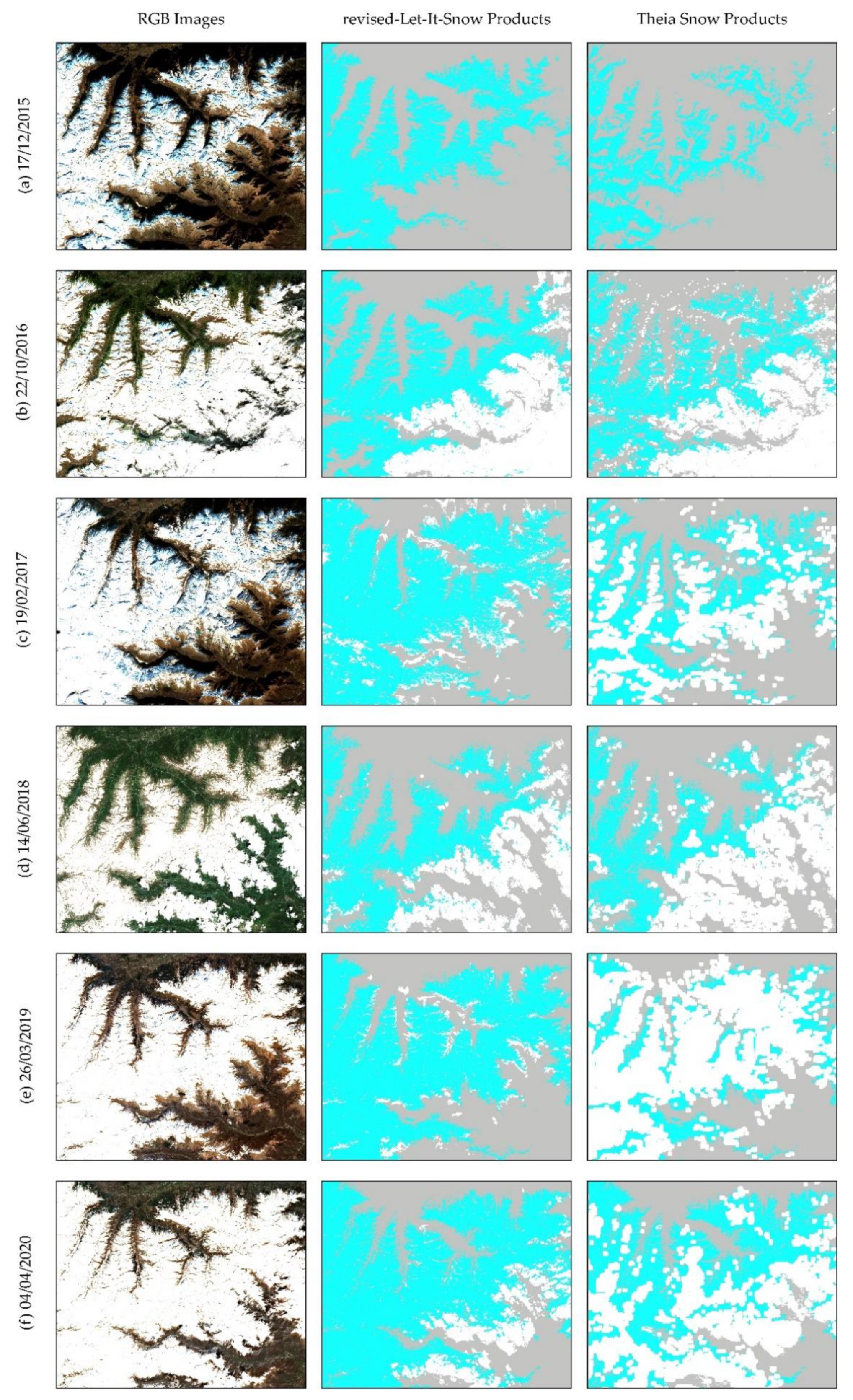

Figure 5) following the LIS classification: 0, No snow; 100, Snow; 205, Cloud; 254, No Data.

2.3. Validation

2.3.1. Comparison with In Situ Measurements from Weather Stations

Unlike previous studies based on optical data, which have focused on the ability of algorithms to discriminate only between snow and snow-free areas—and, therefore, excluding cloudy images from validation [

18,

24,

25]—we aimed to evaluate the ability of the proposed algorithm to discriminate snow cover from clouds, as well as from snow-free areas.

In order to achieve this goal, we collected measurements taken from weather stations located within the AOI, equipped with both snow gauges, which measure the amount of solid precipitation, and solarimeters, which measure the flow of solar radiation over a set period of time. These weather stations are owned and managed by the Valle d’Aosta Functional Center (Available online:

https://cf.regione.vda.it/home.php (accessed on 12 January 2021)), a service framed within the “Department of Civil Protection and Fire Brigade of the Presidency of the Executive” of the Italian state, which has the main objective of forecasting meteorological phenomena and related effects on the ground. All data collected by the network of meteorological stations are accessible through a dedicated portal (Available online:

https://presidi2.regione.vda.it/str_dataview (accessed on 12 January 2021)).

Within the AOI, there were only three stations equipped with both a snow gauge and solarimeter, all located in the Val d’Aosta Region: Rhêmes-Notre-Dame, Chaudanne (5,047,140 N, 352,873 E, 1794 m a.s.l.); Valsavarenche, Pont (5,043,251 N, 359,594 E, 1951 m a.s.l.); and Champorcher, Petit-Mont-Blanc (5,053,489 N, 391,685 E, 1640 m a.s.l.). These weather stations collected continuous punctual measurements, on a half-hourly basis from the snow gauge and averaged over the previous half hour for the solarimeter. We collected these data over the period from 30 July 2015 to 12 November 2020. We then selected the measurements corresponding to the dates and hours that were closest to the acquisition time of the S2 scenes. For example, for the S2 image acquired on 28 June 2020 at 10:25:59 UTC (HH:MM:SS), the validation data compared on the ground were the punctual data acquired at 10:30:00 UTC for the snow gauge, and the averaged data acquired between 10:00:00 and 10:30:00 UTC for the solarimeter. The values from the snow gauge were interpreted as snow in the case that the snow depth >0 cm, and no-snow otherwise. Furthermore, only the measurements with a solarimeter value equal to 30 min (i.e., 30 min out of 30 of sun) or 0 min (i.e., 0 min out of 30 of sun) were considered, in order to discriminate between situations of present or absent cloud cover with certainty.

The classification values of the rLIS algorithm were then extracted from the pixels at which the stations were located. No data values, due to the presence of partial scenes, were excluded. A total of 843 valid observations were found. Of these, in 111 cases the weather stations detected the simultaneous presence of both clouds and snow. These measurements were uncertain for comparison with the classification of the algorithm as, although it was designed with the aim of being able to identify the snow below the most transparent clouds, it is not possible to determine whether the algorithm had correctly identified snow or whether the classification was the result of a misclassification of clouds as snow. Excluding these observations, we took into consideration 732 valid observations for the analysis.

2.3.2. Comparison with Theia Snow Products (TSP)

The Theia Snow Products [

27] belong to a collection of ready-to-use snow cover products generated through the original LIS workflow. TSP provide the presence or absence of snow on the land surface every fifth day over selected regions, and is produced and distributed by the Theia Data and Services Centre [

40], as 20 m resolution raster and vector files. The workflow for the production of TSP, described in more detail in

Section 2.2, carries out the pre-processing of the optical images using the MAJA processor [

41], the outputs of which are the inputs to the LIS algorithm.

To evaluate the performance differences between the revised and the original algorithms, all S2 Theia Snow data for tile T32TLR, available from the beginning of the S2 mission up to 12 November 2020, were collected from the Theia Land Data Centre. A total of 484 products were collected. Similarly, the classification values for those pixels in which the weather stations were located were extracted from the TSP. These data were then combined with the in situ measurements from the three weather stations, as described above, into a confusion matrix. The same data set of meteorological observations was also used for the rLIS products, thus creating a third confusion matrix. No data values, due to the presence of partial scenes, were excluded from both data sets. Overall, a total of 591 valid observations were common to rLIS and TSP. Finally, both the revised and original classifiers were compared through contingency tables, for each class and for all classes.

2.3.3. Evaluation Metrics

The data measured by the weather stations were used as a reference data set, in order to build two multiclass confusion matrices, by crossing the reference data set with rLIS and TSP data. The class defined as “Clear/Snow” represents the observations in which the snow gauge has a recorded snow depth > 0 cm and the solarimeter has detected 30 min of sun over 30 min of measurement. The class defined “Clear/Snow-free” constitutes the observations with snow depth = 0 cm and 30 min of sun out of 30, representing the cloud- and snow-free areas. Finally, the “Cloudy/Snow-free” class indicates the observations with snow depth = 0 cm and 0 min of sun over 30 min detected. In order to evaluate the improvement in classification accuracy by the proposed algorithm, the following four parameters were computed: overall accuracy (OA), Kappa coefficient (K), user’s accuracy (UA), and producer’s accuracy (PA) [

42].

In addition, McNemar’s chi-squared (χ

2) test was used to evaluate the statistical significance of differences between the original and the revised algorithms [

43,

44,

45].

2.4. Publication of Revised Let-It-Snow (rLIS) Algorithm on VLab

The rLIS algorithm, as shown in

Figure 4, was published using the Virtual Earth Laboratory (VLab) framework [

46], facilitating its exploitability, interoperability between models, and reproducibility of the results.

VLab is a framework that implements all required orchestration functionalities to automate the technical tasks required to execute a model on different computing infrastructures, minimizing the possible interoperability requirements for both model developers and users. Through VLab, modelers can publish models developed in different programming languages and environments. Once a model is available on the VLab, users can request its execution and the framework can trigger its execution on a set of different computing platforms, including cloud platforms such as the European Open Science Cloud, the commercial Amazon Web Services (AWS) cloud, and some Copernicus Data and Information Access Services (DIAS) platforms (e.g., CREODIAS, ONDA, or Sobloo).

Specifically, three modules were published on VLab. Two modules implemented the pre-processing steps, while a third one carried out the snow cover processing: (i) the Fmask module, (ii) the Sen2Cor module, and (iii) the Snow Cover module.

The Fmask module implemented version 4.3 of the Function of Mask algorithm [

33], which is devoted to clouds, cloud shadows, snow, and water masking for both Landsat 4–8 and S2 images. The Fmask algorithm was provided by the Global Environmental Remote Sensing Laboratory (GERSL), University of Connecticut, and was developed in MATLAB. The module requires an S2 L1C product as input and optionally parameters, rather than the default ones. The output is a cloud cover mask. The Sen2Cor module was based on the Sen2Cor software [

34] provided by the European Space Agency (ESA). The inputs of the published module are an S2 L1C product and, optionally, a DEM, the ESACCI-LC data package [

47], and an area of interest. The output is an S2 L2A product. The Snow Cover module is implemented as the final step of the rLIS algorithm. This module was developed in R and has four inputs: an S2 L2A product, a DEM, the cloud cover mask computed by Fmask and, optionally, an area of interest. The output is a snow cover map.

For all the modules, the Sentinel product can be provided as the input to each model either as a link to a zip file (e.g., a product generated by the user or by another module), or as a valid identifier of an S2 product. In the second case, VLab implements all required functionalities to make the product available. After the execution of the module, the output is stored on the cloud platform by VLab and made available to users (for downloading and analysis) and to other modules (as input).

In terms of performance, while the computational complexity of the modules remains unaffected by their publication on VLab, the possibility to run the modules on cloud platforms provides advantages, in terms of data access and scalability. As far as data access is concerned, VLab allows for the utilization of data which are already present on the cloud platform where the module is executing; this allows users to avoid the time required to download the data (which, especially for satellite products, can be significant). As far as scalability is concerned, the possibility to run on cloud platforms enables on-demand scalability (both vertical and horizontal); that is, it is possible to use more powerful computational resources (vertical scalability) and/or a higher number of computational resources (horizontal scalability), in order to run the same module on different products at the same time. As an example, consider the Snow Cover module, whose execution takes about 4 min and 40 s when utilizing an AWS m3.large instance. If a user requires the execution of the module on, for example, three products, then VLab will launch three instances to execute the module and all three processes will complete in the same amount of time as for one single product.

4. Discussion

The Let-It-Snow (LIS) algorithm has been implemented in the Copernicus service High-Resolution Snow & Ice (HR-S & I) product, which, to the best of our knowledge, is the only S2-based high-resolution operational product available for snow cover. In this study, we improved this algorithm by introducing two innovations: the cloud mask generated by Fmask and a new parameter in the snow detection phase. Secondly, we tested the robustness of the revised algorithm for detecting snow and, in particular, in discriminating snow from clouds, which is still an open challenge for snow cover detection. We also compared its performance against that of the original algorithm.

The cloud cover used as input was produced by Fmask, while LIS used the mask generated by the MAJA processor, in the case of the Theia Snow Products (TSP) [

18]. In the “cloud pass 1” step, the dark clouds were searched for among all the pixels classified as clouds by Fmask, excluding only the pixels classified a priori as cloud shadows. In LIS, instead, in addition to the cloud shadows, the high-altitude clouds are also excluded a priori from the computation. The parameter added in the snow detection was a threshold in the SWIR band: s

1 for the first step (snow pass 1) and s

2 for the second (snow pass 2) (

Table 3). A further difference resulted from the different smoothing approach used for the red band: no down-sampling was performed, but anomaly smoothing was implemented by applying a 3 × 3 mean moving window. Finally, in the LIS algorithm, if the total snow detected in the snow pass 1 step is less than the threshold f

t, the algorithm stops and produces no output. In the revised Let-It-Snow (rLIS) algorithm, if this threshold is not reached, the algorithm still stops, but it produces a snow cover map by combining the pass 1 outputs (

Figure 4). This allows, in the case of very high cloud cover, for the detection of snow cover by applying only the most restrictive thresholds, skipping the more concessive ones of the second step and, therefore, reducing the possibility of misclassification between clouds and snow. The classification parameters are based on fixed thresholds; except for the snowline elevation, which is automatically extracted from the specific scene under analysis. The double snow detection approach, with restrictive thresholds in the first step and concessive thresholds in the second step, together with the adaptable snowline parameter, provides high flexibility. The proposed algorithm, therefore, shows promise to be applicable in different geographical contexts, and is particularly suitable for fine-resolution (e.g., Sentinel-2 or Landsat scale), rather than moderate resolution (e.g., MODIS) usage. In fact, the snowline threshold robustness is linked to the scale, as the snowline altitude is subject to a high spatial heterogeneity [

48,

49]. As has already been highlighted by Gascoin et al. [

27], the comparison between S2 and in situ data can lead to errors, due to inaccuracies in the geolocation of the S2 image and the fact that the punctual data may not be representative of the pixel. Nevertheless, the results showed high agreement between the in situ data and the outputs of the two algorithms. The evaluation of rLIS products using in situ data indicated excellent results (

Table 4), according to the OA and K values. In particular, the snow detection was very reliable, as indicated by the high UA and PA values.

As can be seen from the confusion matrix in

Table 4, rLIS incorrectly classified clouds as snow 4 times out of 111, equal to 3.6% of the cloudy observations. Conversely, snow was improperly classified as clouds only 15 times out of 214 in snowy ground observations, equal to 7% of the observations, proving the ability of the rLIS algorithm to discriminate between cloud cover and snow cover.

Comparing the revised and the original algorithms, rLIS achieved better accuracy and robustness than the original LIS algorithm, as demonstrated by the 8.29% and 0.13 higher OA and K coefficient, respectively. Both rLIS and TSP had low commission error for snow classification. Conversely, in accordance with the results obtained by Gascoin et al. [

27], TSP had a greater tendency to underestimate the snow cover, as can be seen from the PA values (89.12% for rLIS vs. 76.68% for TSP).

From the comparison of the rLIS and TSP collections with the in situ data set (

Table 5), it emerged that rLIS erroneously classifies clouds as snow 0 times out of 36 true cloud observations (0%), while the TSP, for the same data set, misclassified 1 time out of 36 (2.78%). However, snow was erroneously classified as cloud 14 times out of 193 true snowy observations (7.25%) in rLIS, and 36 times out of 193 (18.65%) for TSP. These data confirm that both algorithms were robust in discriminating clouds from snow, while the rLIS algorithm was more efficient in discriminating snow from clouds.

The statistically significant better performance of rLIS, in comparison with the LIS approach, was also confirmed by the McNemar chi-square statistic (

Table 6), except for the “Cloudy/snow-free” class. The differences in performance of the two algorithms may partially derive from the pre-processing of the input S2 top-of-atmosphere images. Theia Snow Products follow the MAJA–LIS workflow, with atmospheric and topographic correction performed using an auxiliary DEM at 20 m resolution, derived from the Shuttle Radar Topography Mission (SRTM, NASA) at 90 m. rLIS products follow the Sen2Cor–Fmask–rLIS workflow, performing the topographic and atmospheric correction using a DEM at 6.6 m, derived from high-resolution local DEMs. Given the complex topography of the territory, the different resolution of the auxiliary DEM could be significant for the correction performance [

38,

50]. Moreover, MAJA performed cloud detection at 240 m [

41], while Fmask performed it at 20 m, consistently with the snow detection resolution achieved with both rLIS and LIS. Furthermore, as reported by Tarrio et al. [

37], Fmask appears to be the most balanced algorithm, in terms of its commission and omission errors, in comparison to other algorithms such as MAJA and Sen2Cor. Nevertheless, the possible overestimation of cloud cover was not an issue, as the algorithm can reclassify the cloud pixels (identified through the cloud mask) into snow; while, conversely, it cannot reclassify the cloud pixels omitted by the cloud mask. The S2 level 2A data obtained by Sen2Cor includes aerosol optical thickness data, which can effectively determine the thin cloud. As suggested by Tarrio et al. [

38], a combination of cloud masking approaches could improve the cloud detection and, consequently, the snow mapping. This will be explored in further studies.

A consequence of the use of the Fmask cloud mask was the introduction of a new parameter used for snow detection. The LIS algorithm establishes that a cloud mask pixel classified as high-altitude cloud cannot be reclassified as snow, due to snow-like reflectance. However, the cloud mask produced by Fmask, which is used as an input to rLIS, distinguishes only clouds and cloud shadows (

Table 2), unlike the cloud masks produced by MAJA. Therefore, a new parameter was introduced, which was based on the SWIR reflectance, in order to exclude all clouds with a snow-like spectral signature, improving the robustness of the classification by decreasing the occurrence of false positives which, in TSP, are mainly due to the confusion between snow and clouds.

5. Conclusions

The Global Climate Observing System (GCOS) has identified snow cover as an Essential Climate Variable, as it plays an important role, both globally and regionally, in energy fluxes and water cycles. In mountain environments, snow plays a dominant role and the possibility of obtaining snow cover maps with high spatial and temporal resolution can be very useful for many applications, such as in ecological studies and for hydrological and socio-economic modelling.

In this context, we proposed a revision of the Let-It-Snow (LIS) algorithm, developed by Gascoin et al. [

27]. Similar to LIS, the revised algorithm performs snow cover detection based on the NDSI index at a resolution of 20 m using three inputs: a cloud cover mask, a DEM, and a terrain-corrected optical image. Compared to LIS, the main improvement of rLIS consists of the introduction of a new parameter for the snow cover detection—a threshold in the SWIR band—which leads to more efficient discrimination between snow and clouds, and increases the robustness of the algorithm. Unlike previous studies, we also investigated the algorithm’s ability to extract the snow cover, using not only clear-sky observations but those in all weather conditions, including cloudy observations. This allowed us to evaluate the efficiency of rLIS in discriminating snow from clouds, as well as to compare it with LIS.

As pointed out by Gascoin et al. [

27], the use of the snowline elevation as a parameter to determine snow cover makes the algorithm very efficient in mountainous areas. This altitudinal parameter, reinforced by parameters in the red and SWIR bands, allowed us to apply a slightly restrictive threshold on the NDSI index to detect the snow cover, which we deemed to make the algorithm robust enough to be applicable at the local scale in different geographical contexts [

51]. Therefore, in the future, it would be useful to investigate the efficiency of the algorithm in other study areas, assuming snow gauge and solarimeter data availability.

As with the LIS algorithm, rLIS is also applicable to Landsat images to extract the snow cover. Furthermore, rLIS can be applied in a straightforward way, as the United States Geological Survey’s (USGS) Landsat collection routinely uses the Fmask algorithm implemented in C (CFMask) to produce cloud masks, which are already available within the Landsat Collection 1 Level-1 Quality Assessment (QA) Band [

52].

The estimation of the snow detection accuracy in forested areas, especially under evergreen coniferous forests, which are very common in mountainous regions, still remains an open issue that was not investigated in this study. Further developments of the algorithm could take this aspect into consideration. Another desirable improvement could be the introduction of gap-filling techniques, in order to estimate the snow cover under thick clouds, which is useful for estimating another Essential Climate Variable: the snow cover duration.