1. Introduction

On-animal sensors capable of measuring individual animal behaviour and location have long been considered a potentially transformative technology for extensive livestock grazing enterprises. Such sensors have been proposed to alleviate many of the labour and cost challenges associated with monitoring livestock health [1]. One particular class of sensor, the accelerometer, offers the capability to monitor changes in physical behaviour purely on the basis of movement and orientation. Such information could be used to create animal health and welfare indicators on the basis of deviations in activity patterns from baseline levels [2,3]. The capability of accelerometers to measure posture and activity states has been well established in ruminants (cattle [4,5,6,7,8], goats [9,10,11] and sheep [3,12,13,14,15,16,17]).

In a commercial scenario, and especially under extensive grazing conditions, sensors must be able to monitor animal behaviour in near-real-time and more or less continuously (‘24/7’, or via a time-segmented duty cycle program) if they are to enable accurate and timely management decisions aimed at optimising animal performance and welfare [4]. The advantages and possible uses of a real-time behaviour classification system for extensive livestock production have been widely postulated [18]. However, research to date has focused on applying such systems to the commercial intensive dairy and beef feedlot sectors (for example, the IceTag3D™, REDI, SCR/Allflex and CowManager Sensor systems). Limited research has been conducted on the extensive sheep grazing industry, and little consideration has been given to optimising data processing and analysis protocols.

The task of developing a near-real-time classification model on the basis of live sensor data is inherently more difficult than developing an off-line classifier, which effectively post-processes historical data. Inherent constraints and challenges include: (i) correlated time-dependent measurements; (ii) transitional states; and (iii) the requirement for rapid signal processing with minimal computational energy and storage overhead [8]. Many studies have focused on the classification of data where the start and end times of each behaviour state are known, and the use of epochs, which group data over a predetermined time period, is a common analysis step [6,7,8,12,19].

These epoch values (derived from calculated metrics) are assumed to be representative of the estimated intensity of activities measured during the set time period [20]. Selecting a short epoch may be suitable if activity is accumulated in a number of short bouts, that is, if animals transition between activity states rapidly. A longer epoch has the advantage of offering data-smoothing through time averaging; the disadvantage is that a higher proportion of epochs may contain a mixture of two or more activities of varying intensity, with the resulting average reflecting an intermediate intensity [21].

Epochs which contain more than one behaviour are classed as transitional events and are often excluded from analysis, owing to their potential to be misclassified [6]. Previous studies have used training and validation datasets with the training dataset usually containing mutually exclusive behaviour epochs, though it is unclear whether the validation datasets include transitional behaviour epochs [6,12]. In a real-time classification system, segregation of these transitional epochs is not possible, as there is no prior knowledge of the animals’ current or future behaviour [7]. Therefore, analytics that can handle the transition between behaviour classes must be developed.
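The distinction between mutually exclusive and transitional epochs can be illustrated with a minimal sketch. It assumes per-sample behaviour annotations (available only in off-line analysis), non-overlapping epochs and a majority-vote epoch label; the function names and the example annotation sequence are hypothetical and are not taken from the cited studies.

```python
from collections import Counter


def split_into_epochs(labels, epoch_len):
    """Group per-sample labels into consecutive, non-overlapping epochs.

    Trailing samples that do not fill a whole epoch are dropped.
    """
    n_full = len(labels) // epoch_len
    return [labels[i * epoch_len:(i + 1) * epoch_len] for i in range(n_full)]


def label_epoch(epoch_labels):
    """Return (majority_label, is_transitional) for one epoch.

    An epoch is flagged as transitional when it contains more than one
    annotated behaviour.
    """
    counts = Counter(epoch_labels)
    majority = counts.most_common(1)[0][0]
    return majority, len(counts) > 1


# Hypothetical annotation at one label per second.
annotated = ["standing"] * 7 + ["walking"] * 8 + ["grazing"] * 5

for epoch in split_into_epochs(annotated, epoch_len=5):
    print(label_epoch(epoch))
```

In this example the second epoch spans the change from standing to walking and is flagged as transitional. A real-time system has no annotations with which to compute such a flag, which is why these epochs cannot simply be excluded.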

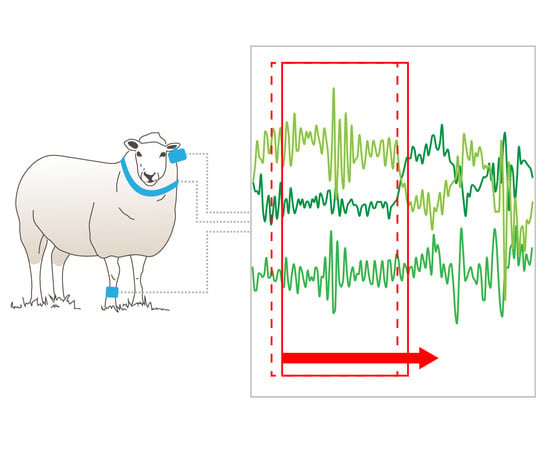

A simple approach using a moving window sampling technique with overlap between epochs has been proposed to alleviate some of the signal classification issues associated with the transition between behaviours [12,14,22,23]. For example, Nielsen et al. [24] used a moving average of 3 or 5 seconds of the motion index or step count values obtained from the IceTag3D™ device. If the moving average at a particular time was greater than a predetermined threshold value (the value separating behaviour classes), then it was classified as walking; otherwise it was classed as standing. This simple approach has obvious application when dealing with univariate feature data and a simple threshold model, but its application to classifying behaviour states in sheep using ear acceleration data with multiple-feature algorithms is yet to be evaluated.
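The moving-average threshold rule described above can be sketched as follows. This is an illustrative reading of the approach, not the implementation of Nielsen et al.: the trailing (backward-looking) window, the one-sample-per-second rate, the threshold of 3 and the motion index values are all assumptions made for the example.

```python
def moving_average(values, window):
    """Trailing moving average over the last `window` samples.

    At the start of the series, where fewer than `window` samples exist,
    the mean of the samples seen so far is used.
    """
    averages = []
    running = 0.0
    for i, value in enumerate(values):
        running += value
        if i >= window:
            running -= values[i - window]  # drop the sample leaving the window
        averages.append(running / min(i + 1, window))
    return averages


def classify_by_threshold(values, window, threshold):
    """Label each time step 'walking' if its moving average exceeds the threshold."""
    return [
        "walking" if mean > threshold else "standing"
        for mean in moving_average(values, window)
    ]


# Hypothetical motion index values, one per second.
motion_index = [0, 0, 0, 6, 6, 6, 0, 0]
print(classify_by_threshold(motion_index, window=3, threshold=3))
```

With these values the walking bout occupies seconds 3 to 5 but is predicted at seconds 4 to 6: the averaging that smooths the signal also delays the detected change by one sample.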

If accelerometers are to be utilised in commercial operations for remote livestock health and welfare monitoring, there is a need to develop a system capable of real-time classification of behaviour that is in sync with data acquisition. The accuracy with which accelerometers can classify livestock behaviours in near-real-time influences their utility for future research and application in livestock production systems.

The objective of this study was to apply a behaviour state classifier developed on “clean state” data to an example of live data, including transitional states, to replicate data acquisition in a commercial scenario. This was achieved using a moving window algorithm. The aim was to determine the performance of this moving window algorithm to classify continuous accelerometer signals from ear, leg and collar mounted devices into four mutually exclusive sheep behaviour states.
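The general shape of a moving window classifier for tri-axial data can be sketched as below. This is a minimal illustration only: the per-axis mean is used as a stand-in feature set, `toy_classifier` is a hypothetical placeholder for the study's trained model (which is not reproduced here), and the sample values are invented.

```python
def sliding_windows(samples, window, step=1):
    """Yield (end_index, window_samples) for every full window.

    Consecutive windows overlap whenever `step` is smaller than `window`.
    """
    for end in range(window, len(samples) + 1, step):
        yield end - 1, samples[end - window:end]


def mean_per_axis(window_samples):
    """Mean acceleration on each of the three axes within one window."""
    n = len(window_samples)
    return tuple(sum(s[axis] for s in window_samples) / n for axis in range(3))


def classify_stream(samples, window, classifier, step=1):
    """Apply `classifier` to the features of each window, in time order."""
    return [
        (end, classifier(mean_per_axis(w)))
        for end, w in sliding_windows(samples, window, step)
    ]


def toy_classifier(features):
    """Hypothetical stand-in for a trained model: thresholds the mean X value."""
    return "walking" if features[0] > 0.5 else "standing"


# Invented (x, y, z) samples: four 'still' readings, then four 'moving' readings.
samples = [(0.0, 0.0, 1.0)] * 4 + [(1.0, 0.0, 1.0)] * 4
print(classify_stream(samples, window=3, classifier=toy_classifier))
```

Because each prediction uses only the samples up to its own end index, the same loop could in principle run on live data, and every window that straddles a behaviour change is classified rather than discarded.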

3. Results and Discussion

There were no apparent adverse effects of sensor attachment on animal behaviour. A summary of the quantities of data collected for each state across the three deployment modes is given in Table 3. Observational periods were the same for all deployments as accelerometers were attached to the body of a given sheep in the three locations simultaneously. However, due to sensor malfunction, data quantities varied between deployments. In periods where limited data was collected because of sensor malfunction, a subsequent deployment was performed. Limited lying behaviour was recorded because of a lack of animal motivation to rest in a recumbent posture; therefore these results should be interpreted with caution.

In comparison with video observations, there were considerable differences in the classification performance across the four behaviours and between animals within each deployment (Table 4). Deployment mode also had an influence on the between-animal classification accuracies, with the leg and collar deployments showing a greater range in successful classification accuracies compared to the ear attachment, especially for standing behaviour. This is consistent with the original classification algorithm performance and the lower prediction accuracies for standing behaviour in these modes of attachment [3]. There were significant differences observed in the prediction accuracy of standing behaviour between the ear and leg deployments using a 10 second moving window (d.f. = 24, t.ratio = 2.515, P < 0.05) and for walking behaviour between the collar and leg (d.f. = 27, t.ratio = −2.559, P < 0.05) and collar and ear (d.f. = 27, t.ratio = −3.033, P < 0.05) deployments using the 3 second moving window classifier. There were no other significant (P > 0.05) differences observed for the interaction between window length and deployment mode for the remaining combinations.

The accuracies across the three moving window sizes varied little within each deployment mode. One of the main disadvantages of using a longer window is that the window may contain a mixture of two or more activities of varying intensity and the average data may reflect an intermediate intensity. For example, if a sheep’s activity abruptly changes from resting to walking, the intermediate moving average may be incorrectly ascribed to a period of eating. Additionally, if the activity bout is shorter than the window, the average value for that burst will differ from the actual activity intensity, again leading to misclassification. A longer window has the advantage of data-smoothing through time averaging, and this is evident in the classification of walking behaviour from the collar deployment, with the longer window length having higher classification accuracy (although this difference is not statistically significant: P > 0.05, d.f. = 27, t.ratio = −0.636). This is further supported by the longer window length producing fewer behaviour transitions than the 3 and 5 second window predicted data files.
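The intermediate-intensity effect can be made concrete with a short worked expression. The symbols are illustrative and are not taken from the study: suppose a window holds $w$ samples, the first $w-k$ of which were recorded at a resting intensity $a$ and the last $k$ at a walking intensity $b$. The window mean is then

$$
\bar{x} = \frac{(w-k)\,a + k\,b}{w} = a + \frac{k}{w}\,(b-a).
$$

With hypothetical values $a = 0.1$, $b = 0.9$, $w = 10$ and $k = 5$, the mean is $\bar{x} = 0.5$, a value matching neither behaviour and one that a classifier could plausibly assign to a third state of intermediate intensity. The same expression shows why a bout shorter than the window ($k < w$) never reaches its true intensity $b$ in the averaged signal.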

There was a much larger number of behaviour transitions in the predicted datasets than was apparent in the annotated data file (Table 5). This is a result of the moving window algorithm classification process, as each row of data could ultimately be given a different behaviour prediction than the preceding row. This presents a challenge for the current classification methodology because of the reasons outlined with varying epoch lengths. An approach worthy of future research may be to use exclusive epoch segments with no overlap, which will reduce the number of behaviour transitions within the predicted dataset, more closely aligning to that seen in video recordings. Consequently, and in accordance with Smith et al. [7], we found that window selection becomes a pragmatic trade-off to ensure the moving windows are sufficiently long to represent behaviours, while being short enough to reduce the likelihood of multiple behaviours being captured in the same window.

A behaviour transition is defined as the period in which the animal’s behaviour changes from one state to another, e.g., standing to walking. The number of behavioural transitions varied considerably between the annotated and predicted datasets. The number of transitions in the predicted datasets decreased as the moving window length increased, bringing the 10 second window predictions closest to the actual number of behaviour transitions in the annotated dataset. This may explain the slight improvement in classification success for the 10 second window.
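Counting transitions in a label sequence is straightforward, as the following sketch shows. It assumes one label per row of data; the two short sequences are hypothetical and are not drawn from Table 5.

```python
def count_transitions(labels):
    """Count positions where a label differs from the one before it."""
    return sum(1 for prev, cur in zip(labels, labels[1:]) if prev != cur)


# Hypothetical annotated and predicted label sequences for the same period.
annotated = ["standing"] * 4 + ["walking"] * 4
predicted = ["standing", "grazing", "standing", "standing",
             "walking", "standing", "walking", "walking"]

print(count_transitions(annotated))  # one true change of state
print(count_transitions(predicted))  # isolated misclassifications add transitions
```

Each isolated misclassified row contributes two transitions (into and out of the wrong state), so even a small per-row error rate can inflate the transition count of a predicted file well beyond that of the annotations.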

3.1. Leg

Window length had no significant (P > 0.05) effect on the prediction of any of the four behaviours recorded for the leg deployment. There was little variation across the three window lengths for the prediction of walking, with all three moving window lengths achieving greater than 90% accuracy for individual sheep. Similar results were reported by Nielsen et al. [24] in cattle, with an average misclassification rate of 10% in walking periods using the IceTag3D® device. Standing prediction accuracies were consistent across the three window lengths in this study; however, there was large variation in prediction accuracy between sheep (0% to 79%). During model development, standing behaviour was often misclassified as grazing, and this misclassification is also evident in the moving window classifier used in the current study.

In terms of limb movement, grazing is characterised by infrequent steps followed by periods of minimal leg movement. When an animal is grazing, a leg-attached accelerometer only detects the dynamic acceleration associated with the locomotive movements (i.e., steps taken in search of new pasture). The support phases of locomotion are extended during grazing activity and hence the accelerometer is often recording static acceleration. This results in similar acceleration signals between grazing and standing behaviour (created by the extended support phase). Therefore, standing events were often misclassified as grazing using the current QDA model [3]. This presents a challenge for the prediction of behaviours from leg-mounted sensors, particularly behaviours that share similar dynamic leg movement. Prediction success may differ in response to the animal’s grazing environment, as this can influence the speed at which animals search for new areas of available pasture; i.e., high levels of pasture may result in a higher level of misclassification of standing as grazing, because animals move at a slower rate than on short pastures, where they have a higher level of movement as they seek out pasture.

There was no significant (P > 0.05) difference between the three moving window lengths for the prediction of grazing behaviour. However, on average, grazing behaviour was best classified by the 10 second moving window with between-sheep values ranging from 73% to 90% (Table 4). Lying behaviour was well predicted for sheep A with the 3, 5 and 10 second windows recording accuracies of 95%, 95% and 94%, respectively. This reflects the clear differentiation between upright and lying postures based on the static acceleration values in relation to the gravitational field when the sensor is attached to the leg. Interestingly, sheep B and E had low prediction accuracy for grazing behaviour. This is likely associated with sensor movement on the leg for these two sheep, resulting in a change in axis orientation that affected the recorded acceleration for the Average X-axis feature used in the leg QDA algorithm. This highlights the importance of having metrics that use absolute acceleration, reducing the impact of sensor orientation on classification success.
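The sensitivity of a single-axis feature to sensor rotation, compared with an orientation-independent metric, can be illustrated with a short sketch. It assumes that "absolute acceleration" can be represented by the magnitude of the tri-axial acceleration vector, which is one common choice and not necessarily the metric intended here; the readings (in units of g) are hypothetical.

```python
import math


def vector_magnitude(x, y, z):
    """Euclidean magnitude of a tri-axial acceleration reading."""
    return math.sqrt(x * x + y * y + z * z)


# Hypothetical static readings from a stationary animal, in g.
# The second reading mimics the sensor having rotated 90 degrees on the leg,
# so gravity now falls on the Y axis instead of the X axis.
original = (1.0, 0.0, 0.0)
rotated = (0.0, 1.0, 0.0)

print(original[0], rotated[0])  # the X-axis value changes with rotation
print(vector_magnitude(*original), vector_magnitude(*rotated))  # magnitude does not
```

The trade-off is that the magnitude discards the orientation information that distinguishes postures such as lying from standing, so it is likely to complement rather than replace axis-specific features.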

3.2. Collar

Window length was found to have no significant (P > 0.05) effect on the accuracy of behaviour prediction for any of the four behaviours recorded for the collar deployment. The average classification accuracy for walking improved after lengthening the window from 3 to 10 seconds, although not significantly. However, there was substantial variation in classification success between sheep, with accuracies ranging from 61% to 96%. This between-animal variation with collar-deployed sensors has previously been described by Trotter et al. [8] and Hämäläinen et al. [26], and is due to variation in sensor placement between individuals. Collars can move freely around the animal’s neck, so the position of the accelerometer may not always be the same, which may give inconsistent readings despite the posture of an animal remaining exactly the same. It has been reported that even a slight deviation in sensor orientation can influence the classification results [27].

Classification success rate for the current study was higher than that reported for cattle by González et al. [4] (~60%). These authors found travelling events were commonly misclassified as foraging behaviour. This discrepancy may be due to differences in either the travelling speed of sheep and cattle or the dynamic motion of the collar. As sheep are physically smaller, their step length is shorter whilst step rate may be greater. Therefore, accelerations could be amplified due to greater dynamic sensor movement, resulting in stronger acceleration signals and better analytical performance. In the current study, standing was consistently classified across each moving window, again with substantial differences between sheep, with values ranging from 24% to 96%. Grazing behaviour was also consistently classified across the three moving windows with high sensitivity (88%). Lying behaviour was very poorly predicted from the collar data, with a sensitivity of 6% for all moving windows. The small amount of data used for this behaviour in model training more than likely contributed to this poor classification, along with similarities in the sensor output between lying and other behaviours. Further research is warranted to explore this issue of data imbalance.

3.3. Ear

Window length had no significant (P > 0.05) effect on the prediction of any of the three behaviours recorded in the ear deployment. The moving window length of 10 seconds yielded slightly better mean prediction accuracies than the 3 and 5 second window lengths, although this difference was not significant.

The mean prediction percentages for the ear were the highest of the three deployment modes, a function of the clear differentiation between raw acceleration signals across grazing, standing and walking behaviours. Between-sheep variation was evident in the prediction of standing and grazing behaviour and to a lesser extent in walking activity. Increasing the moving window from 3 to 10 seconds yielded a small improvement in classification agreement, particularly for grazing behaviour, which achieved an average 6% improvement with the longer window. Walking behaviour was consistently predicted, yielding a between-sheep accuracy range of only 15%. For the 10 second moving window, standing behaviour prediction accuracies ranged from 69% to 97%, walking prediction accuracies ranged from 85% to 100%, and grazing behaviour predictions ranged from 58% to 95%. For the 5 second moving window, standing behaviour prediction accuracies ranged from 70% to 97%, walking prediction accuracies ranged from 86% to 100%, and grazing behaviour predictions ranged from 62% to 92%. For the 3 second moving window, standing behaviour prediction accuracies ranged from 69% to 97%, walking prediction accuracies ranged from 86% to 100%, and grazing behaviour predictions ranged from 55% to 89%.

The larger variation in grazing predictions is also reflected in the original classification model, which showed misclassification with standing and walking behaviours. This can be explained by the static acceleration signatures produced within these two behaviours. As an animal’s head is lowered to the ground to graze, the sensor’s freedom to hang sees it maintain a similar orientation to that during standing and walking. This problem is relevant when using the values associated with a particular axis (e.g., average X or average Y), which are used in the clean state model tested here. The variation in sensor orientation between animals is an issue with the ear-tag form factor, arising from the susceptibility of the tag to rotate in the ear due to the ‘single pin’ method of fixation. Furthermore, the physical differences in ear structures between animals can influence the dynamic motion experienced by the sensor, affecting the acceleration signals recorded across behaviours.

4. General Discussion

For on-animal behaviour monitoring systems to function commercially, the data must be captured, processed and analysed in sync with data acquisition. This is inherently more challenging than offline classification because, operating forward in time, the behaviour state model can only look at historic data (for example, the previous 60 seconds) to make a behaviour prediction, and is therefore challenged by the transitions between behaviours. Models must therefore be capable of determining when the behaviour state changes across a string of signals containing multiple behaviours. Furthermore, models must be able to account for between-animal variations in signal output. This is shown in the present study by the substantial classification differences between animals within the same behaviour category, particularly for standing behaviour predicted from the collar and leg deployments. Similar results were found by Blomberg [28], who reported that accelerometers correctly classified behaviour in cattle with a relatively high rate of accuracy, but with large differences between individuals. While the current study did not investigate the specific causes of variability between sheep, it is speculated that this between-animal variation in signals arose primarily from two related causes: firstly, animal physical characteristics affecting how the sensor attaches to the animal, and secondly, the sensor motion during activity. Wolfger et al. [29] speculated that the inter-animal variation between estimates provided by observations and accelerometer recordings could be attributed to differences in ear movement between cattle. Similarly, differences between sheep in physical (skeletal/muscle/tendon) structure may influence their walking pattern (or gait) and thus sensor motion during measurement of activity. Consequently, the models’ ability to discriminate between behaviour states may be adversely affected. González et al. [4] also observed large differences between animals with collars for the fitted parameters of the probability density functions, threshold values and structure of the frequency distributions (e.g., overlap of populations). Further research should investigate the source of such variation to determine the proportion of observed variation between experimental animals that results from differences in animal movement patterns, sensor attachment and measurement between sensors [4], and whether or not it can be negated through the use of larger model training datasets or even the development of models that accommodate or adapt to individual animals. This has major implications for commercial adoption of this technology, as sensors will be deployed across a variety of animals of different age, breed, production status, and so on, and prediction algorithms need to account for this between-animal variation in their classification protocols.

A potential limitation of the current study is the small amount of data used for model development. It is important that enough data is recorded for the behaviour model to capture the variation between animals and also the variation within behaviour signals of an individual animal. A much larger cohort of test subjects is required for extensive validation of this classification approach. Furthermore, the data used for model development in Barwick et al. [3] was unbalanced, which can lead to the model overfitting certain behaviours, with small changes in the training dataset resulting in different behaviour predictions. To overcome this, up- or under-sampling can be used to equalise the data for each behaviour category in the training dataset. Sakai et al. [11] compared the precision and sensitivity of behaviour classification before and after balancing, reporting mixed results. A similar approach may be warranted with the current study to determine the effect of balancing data on the classification performance of the testing dataset.
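Random under-sampling, one of the balancing options mentioned above, can be sketched as follows. This is a minimal illustration under stated assumptions: rows are arbitrary records, `label_of` is a hypothetical accessor returning each row's behaviour, and the class sizes in the example are invented rather than taken from the study.

```python
import random
from collections import Counter, defaultdict


def undersample(rows, label_of, seed=0):
    """Randomly reduce every class to the size of the smallest class.

    `rows` must be non-empty. A fixed seed keeps the draw repeatable.
    """
    groups = defaultdict(list)
    for row in rows:
        groups[label_of(row)].append(row)

    n_min = min(len(members) for members in groups.values())
    rng = random.Random(seed)

    balanced = []
    for label in sorted(groups):
        # Sample without replacement so that no row is duplicated.
        balanced.extend(rng.sample(groups[label], n_min))
    return balanced


# Invented, deliberately unbalanced training rows: (behaviour, row_id).
rows = [("grazing", i) for i in range(50)] + [("lying", i) for i in range(5)]
balanced = undersample(rows, label_of=lambda row: row[0])
print(Counter(label for label, _ in balanced))
```

Under-sampling discards data from the common behaviours, which is costly when the dataset is already small; up-sampling the rare class avoids this loss but repeats the few available rows, so neither option substitutes for recording more of the under-represented behaviour.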