Pyramid Pooling Module-Based Semi-Siamese Network: A Benchmark Model for Assessing Building Damage from xBD Satellite Imagery Datasets

Abstract

1. Introduction

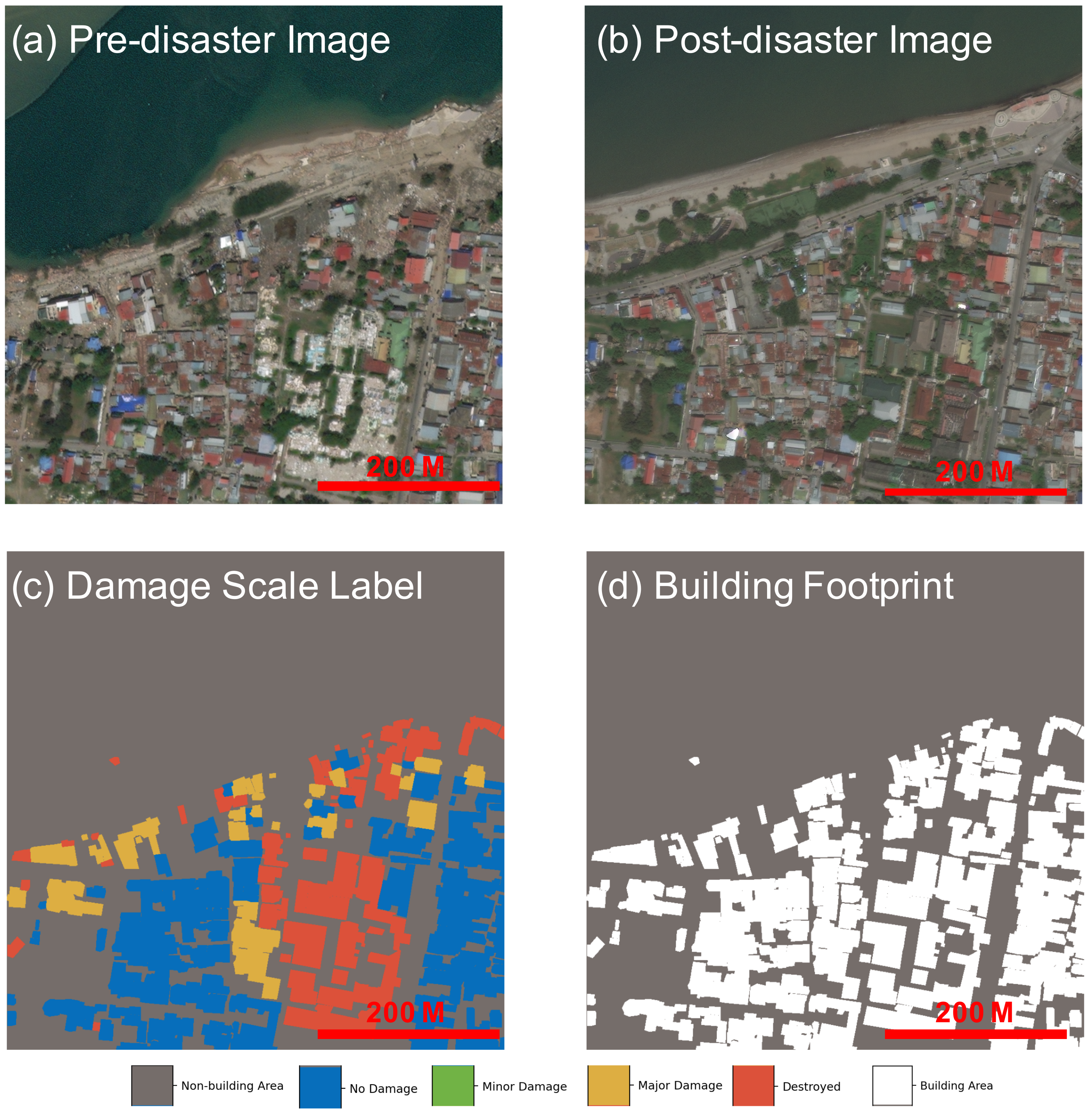

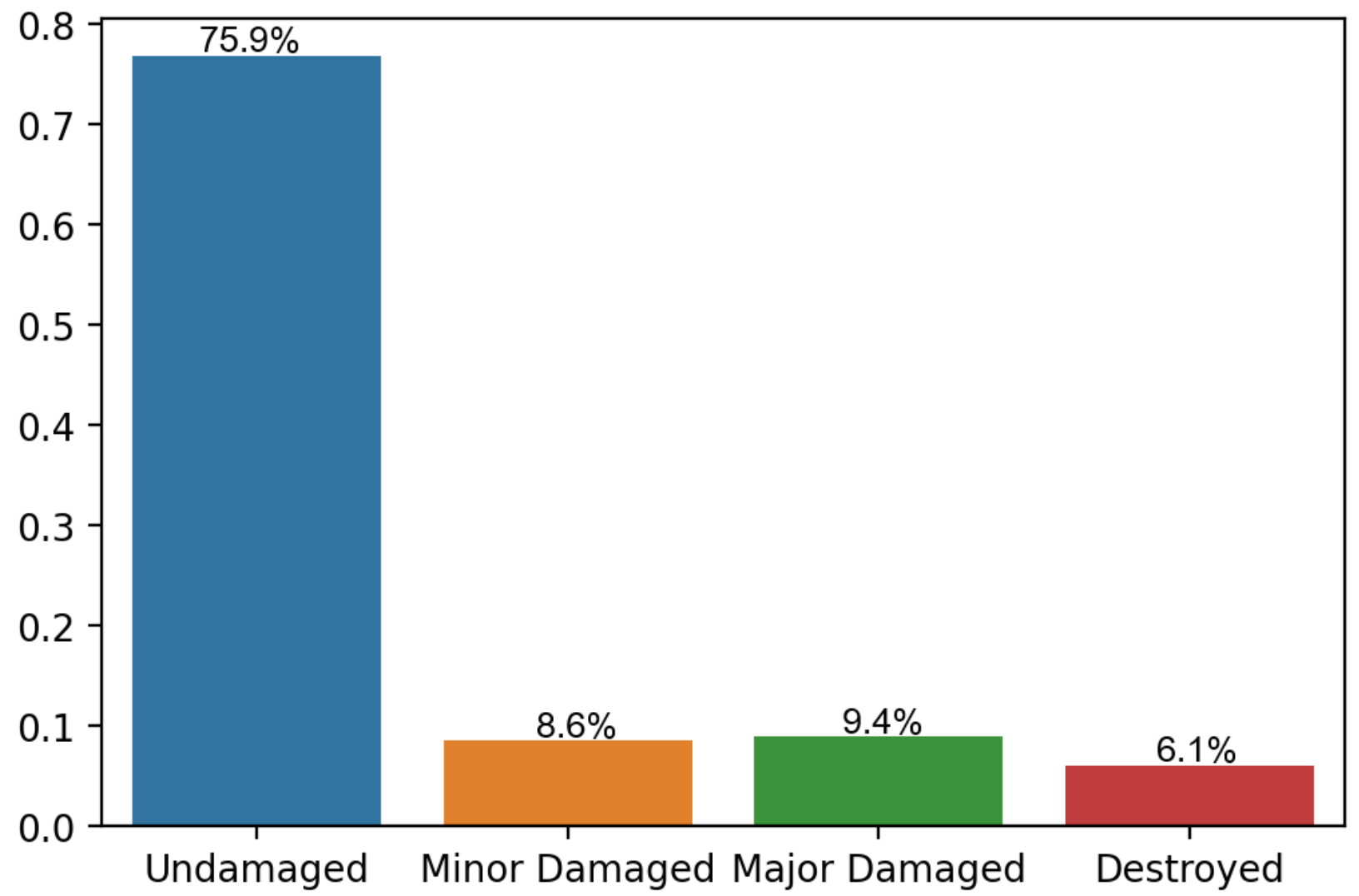

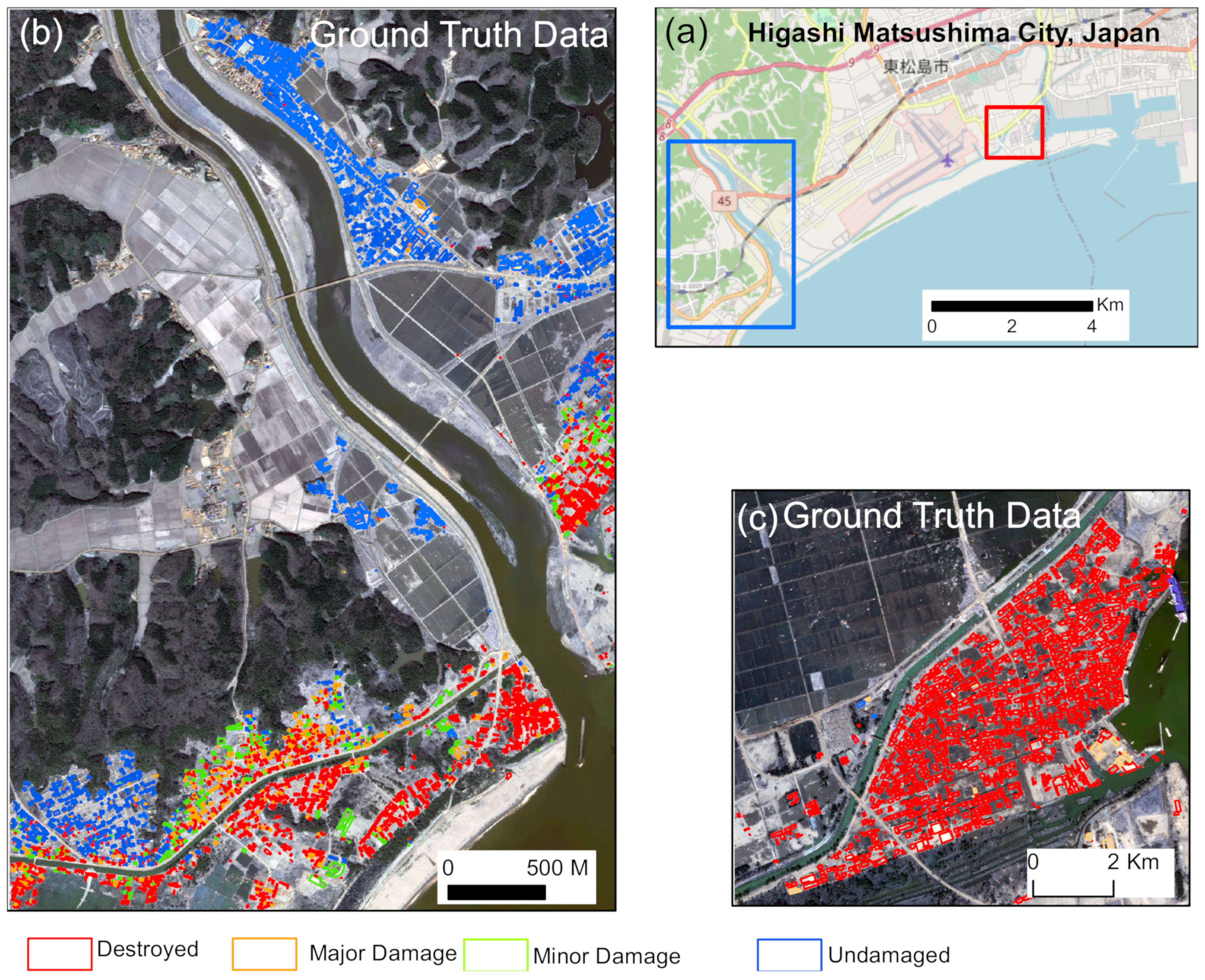

2. Data

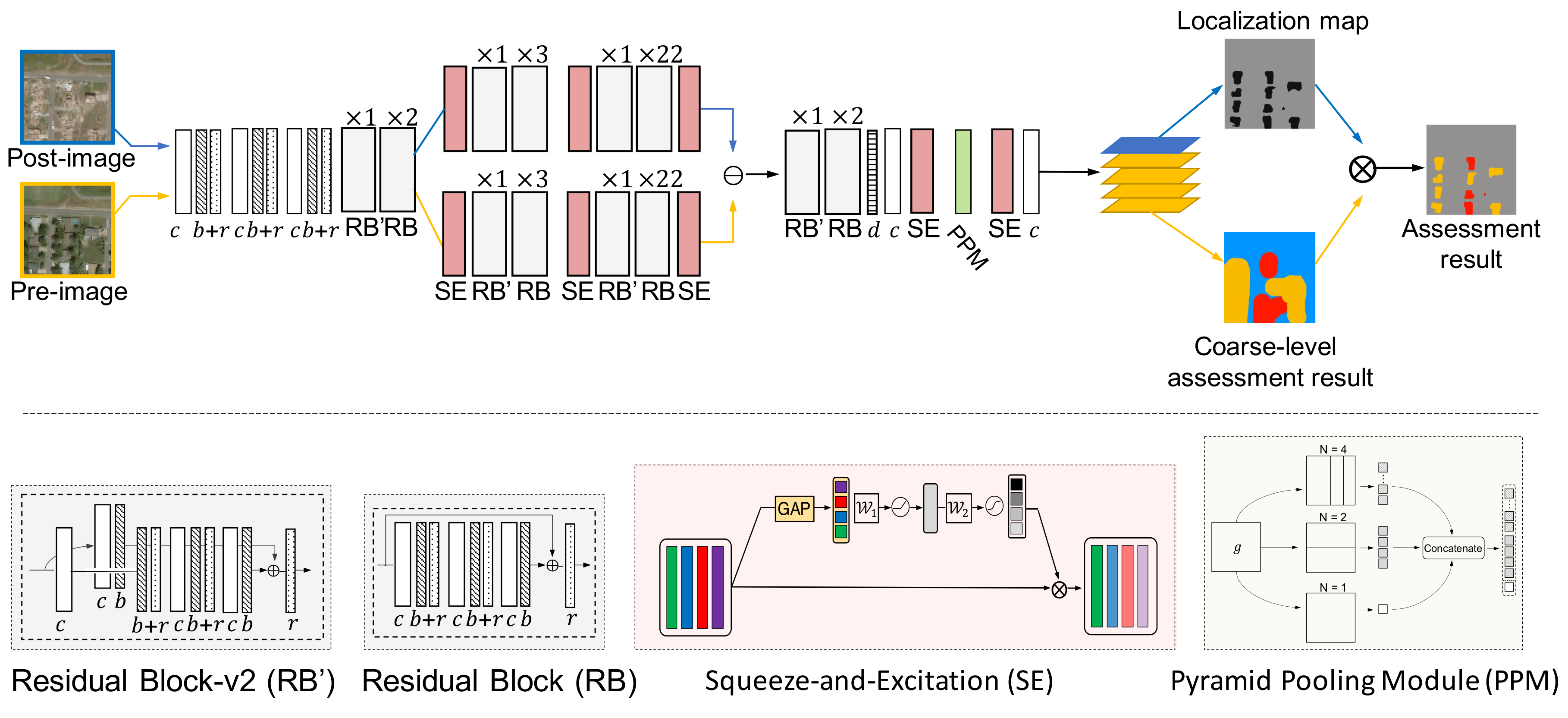

3. Methodology

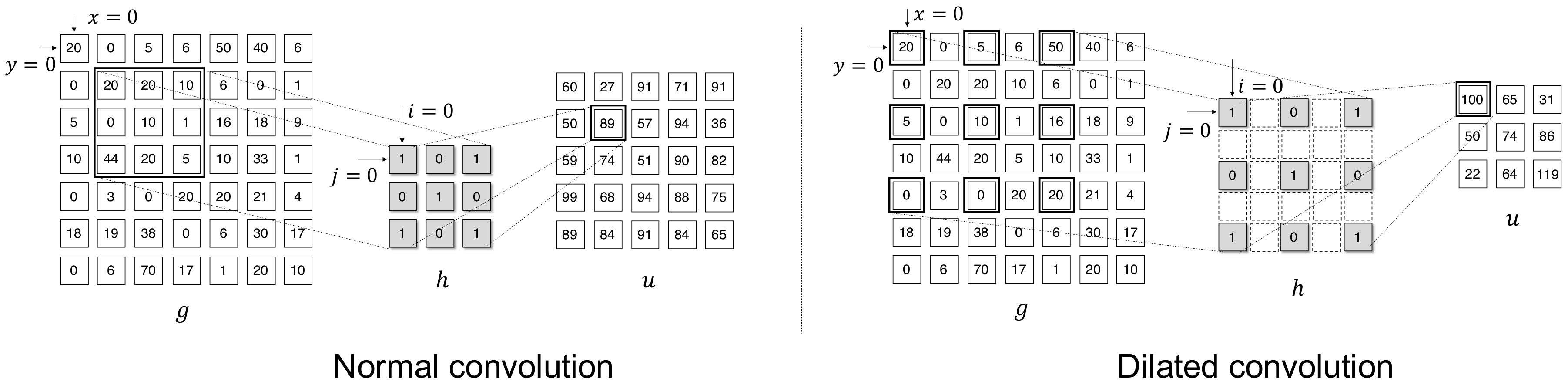

3.1. Dilated Convolution for Large Receptive Fields

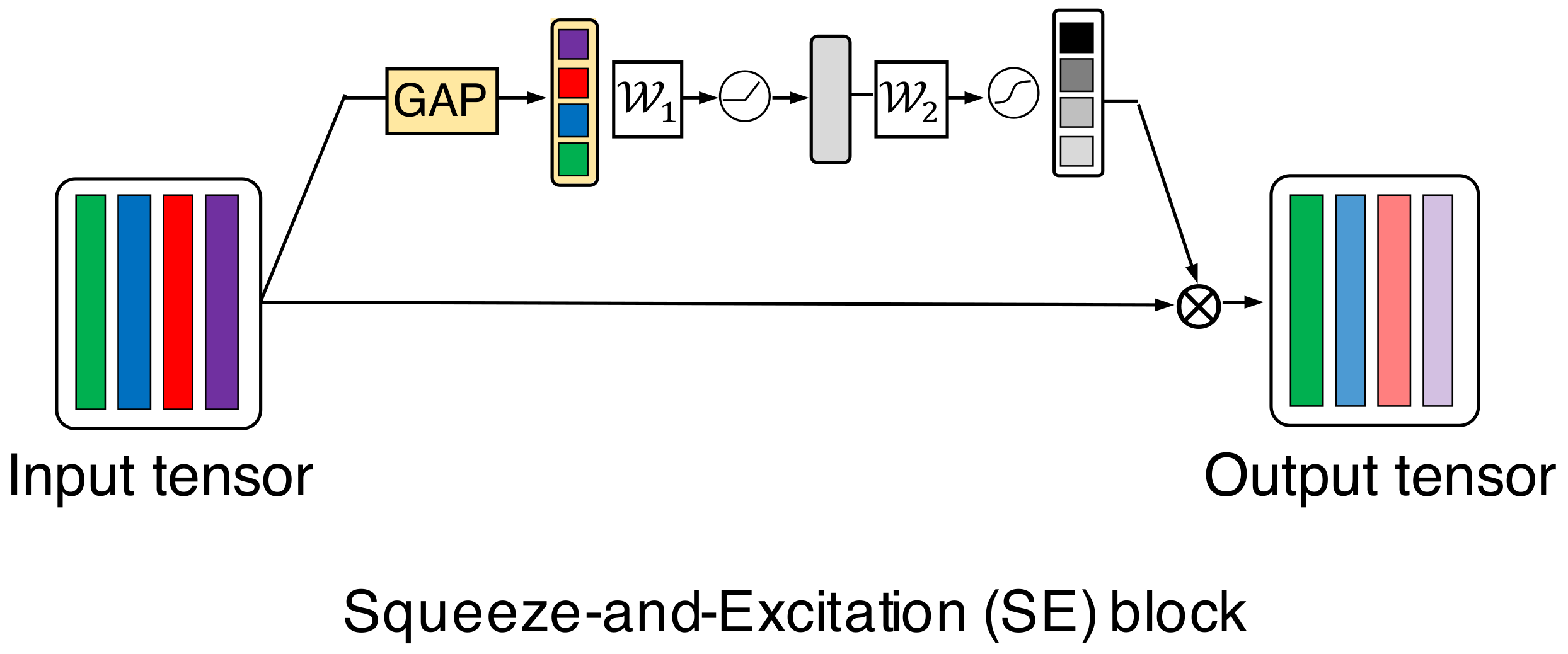
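As a worked illustration of why dilation enlarges the receptive field (the idea behind Yu and Koltun's multi-scale context aggregation), a k×k kernel with dilation rate d spans an effective extent of k + (k − 1)(d − 1) pixels. Stacking stride-1 layers widens the receptive field by (k_eff − 1) per layer. The minimal Python sketch below computes this. The dilation rates are hypothetical examples, not the rates used in PPM-SSNet.

```python
def effective_kernel_size(k: int, d: int) -> int:
    """Spatial extent covered by a k x k kernel with dilation rate d."""
    return k + (k - 1) * (d - 1)


def receptive_field(layers: list[tuple[int, int]]) -> int:
    """Receptive field of a stack of stride-1 convolutions.

    Each entry is (kernel_size, dilation). With stride 1 everywhere,
    every layer widens the receptive field by (k_eff - 1) pixels.
    """
    rf = 1
    for k, d in layers:
        rf += effective_kernel_size(k, d) - 1
    return rf


# Hypothetical comparison: three plain 3x3 convs vs. three dilated 3x3 convs.
plain = [(3, 1), (3, 1), (3, 1)]
dilated = [(3, 1), (3, 2), (3, 4)]
print(receptive_field(plain))    # 7
print(receptive_field(dilated))  # 15
```

Both stacks have the same number of weights. Only the sampling pattern differs, which is why dilation is a cheap way to widen spatial context.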

3.2. SE Mechanism for Attention

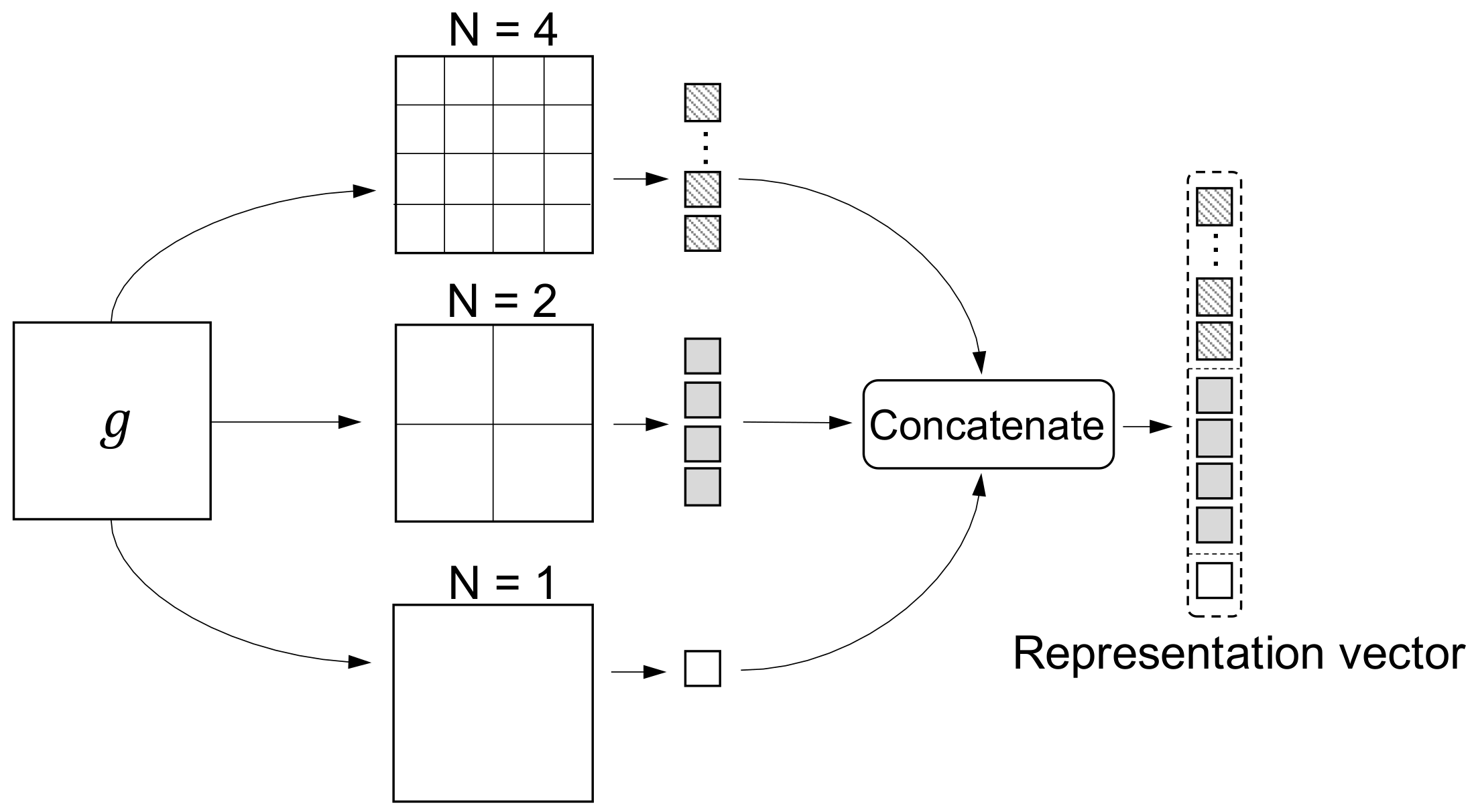
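The squeeze-and-excitation idea of Hu et al. can be sketched in a few lines. Global average pooling "squeezes" each channel to a scalar. A two-layer bottleneck with ReLU and a sigmoid then produces per-channel weights, and the input is rescaled channel-wise. This NumPy sketch is a generic forward pass with random weights, no biases and an assumed reduction ratio r = 4. It is not the trained module or the exact configuration used in PPM-SSNet.

```python
import numpy as np


def se_block(x: np.ndarray, w1: np.ndarray, w2: np.ndarray) -> np.ndarray:
    """Squeeze-and-excitation forward pass for a (C, H, W) feature map.

    w1 has shape (C // r, C) and w2 has shape (C, C // r); biases are
    omitted for brevity.
    """
    z = x.mean(axis=(1, 2))                 # squeeze: one value per channel, (C,)
    s = np.maximum(w1 @ z, 0.0)             # excitation: bottleneck + ReLU
    s = 1.0 / (1.0 + np.exp(-(w2 @ s)))     # sigmoid -> per-channel weights in (0, 1)
    return x * s[:, None, None]             # recalibrate each channel


rng = np.random.default_rng(0)
C, H, W, r = 16, 8, 8, 4                    # r is an assumed reduction ratio
x = rng.standard_normal((C, H, W))
w1 = rng.standard_normal((C // r, C)) * 0.1
w2 = rng.standard_normal((C, C // r)) * 0.1
y = se_block(x, w1, w2)
print(y.shape)  # (16, 8, 8)
```

Because every spatial position in a channel is multiplied by the same weight, the block reweights channels (feature types) rather than locations.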

3.3. PPM

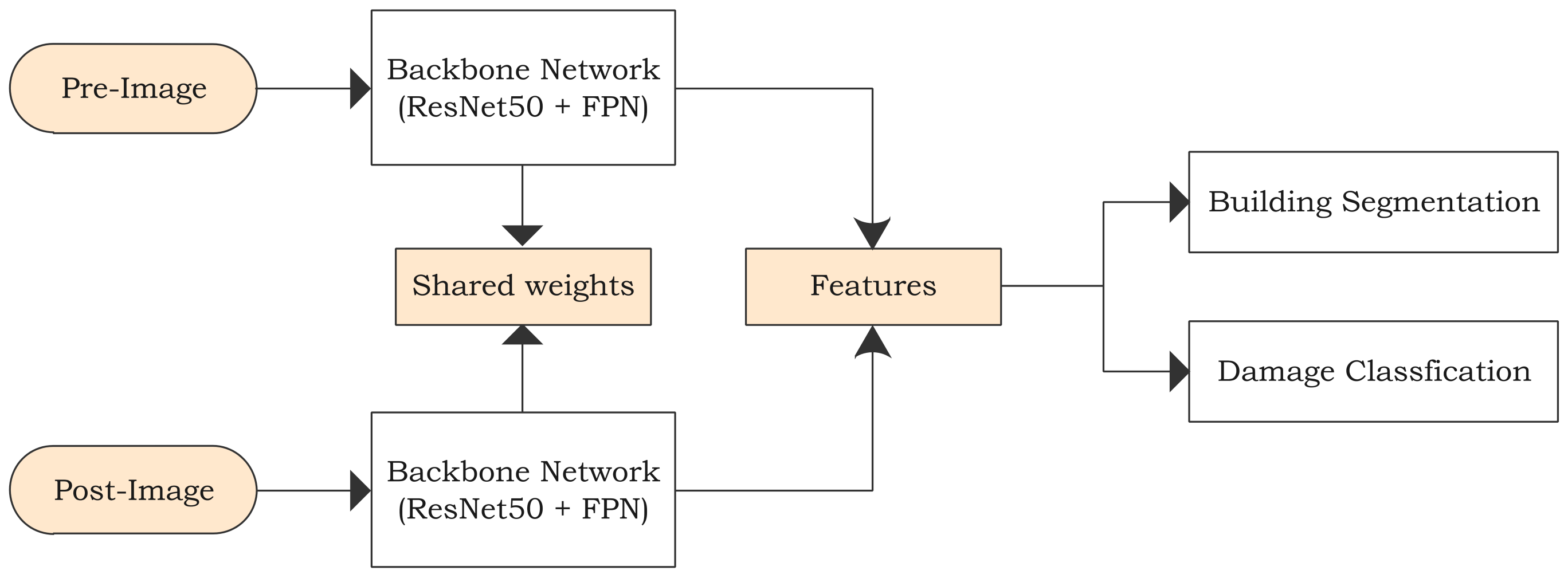

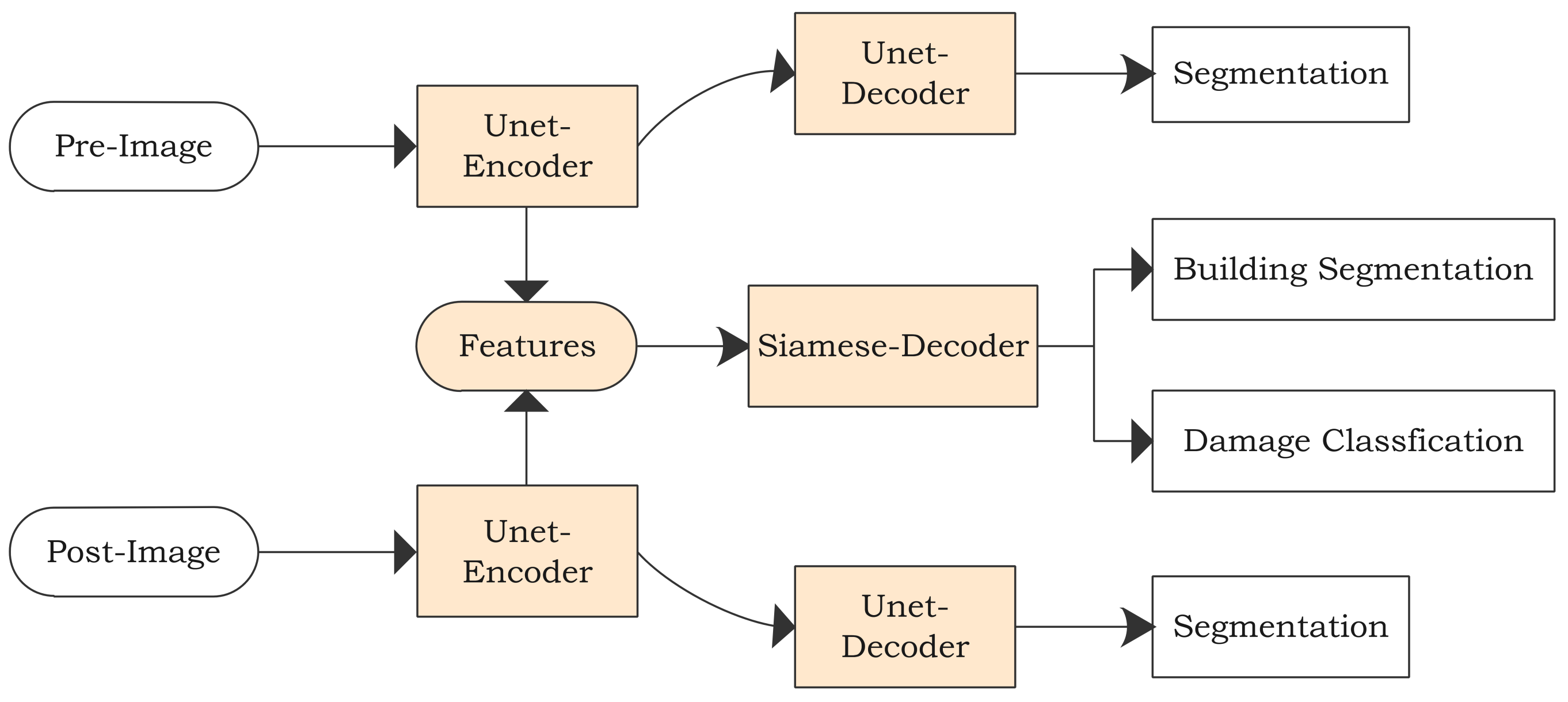
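A pyramid pooling module in the spirit of spatial pyramid pooling (He et al.) pools the feature map onto several grid sizes, upsamples each pooled map back to the input resolution and concatenates the results with the original features. The NumPy sketch below assumes bin sizes (1, 2, 3, 6), average pooling and nearest-neighbour upsampling, and it omits the 1×1 convolutions that usually follow each branch. These choices are illustrative assumptions, not the paper's configuration.

```python
import numpy as np


def adaptive_avg_pool(x: np.ndarray, bins: int) -> np.ndarray:
    """Average-pool a (C, H, W) map onto a bins x bins grid."""
    C, H, W = x.shape
    out = np.empty((C, bins, bins))
    for i in range(bins):
        h0, h1 = (i * H) // bins, ((i + 1) * H + bins - 1) // bins  # floor, ceil
        for j in range(bins):
            w0, w1 = (j * W) // bins, ((j + 1) * W + bins - 1) // bins
            out[:, i, j] = x[:, h0:h1, w0:w1].mean(axis=(1, 2))
    return out


def upsample_nearest(x: np.ndarray, H: int, W: int) -> np.ndarray:
    """Nearest-neighbour resize of a (C, h, w) map to (C, H, W)."""
    _, h, w = x.shape
    rows = (np.arange(H) * h) // H
    cols = (np.arange(W) * w) // W
    return x[:, rows[:, None], cols[None, :]]


def pyramid_pooling(x: np.ndarray, bin_sizes=(1, 2, 3, 6)) -> np.ndarray:
    """Concatenate x with upsampled pooled context at several scales."""
    C, H, W = x.shape
    branches = [x]
    for b in bin_sizes:
        branches.append(upsample_nearest(adaptive_avg_pool(x, b), H, W))
    return np.concatenate(branches, axis=0)


x = np.random.default_rng(0).standard_normal((8, 12, 12))
y = pyramid_pooling(x)
print(y.shape)  # (40, 12, 12)
```

The 1×1 branch carries image-wide context at every pixel, while the finer grids keep coarse spatial layout, which is how the module mixes global and regional information.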

3.4. Pyramid Pooling Module-Based Semi-Siamese Network (PPM-SSNet)

4. Experimental Analysis

4.1. Resampling

4.2. Data Augmentation

4.3. Assessment Metrics

4.4. Loss and Mask Dilation

5. Results and Discussion

5.1. Experimental Setting

5.2. Ablation Study

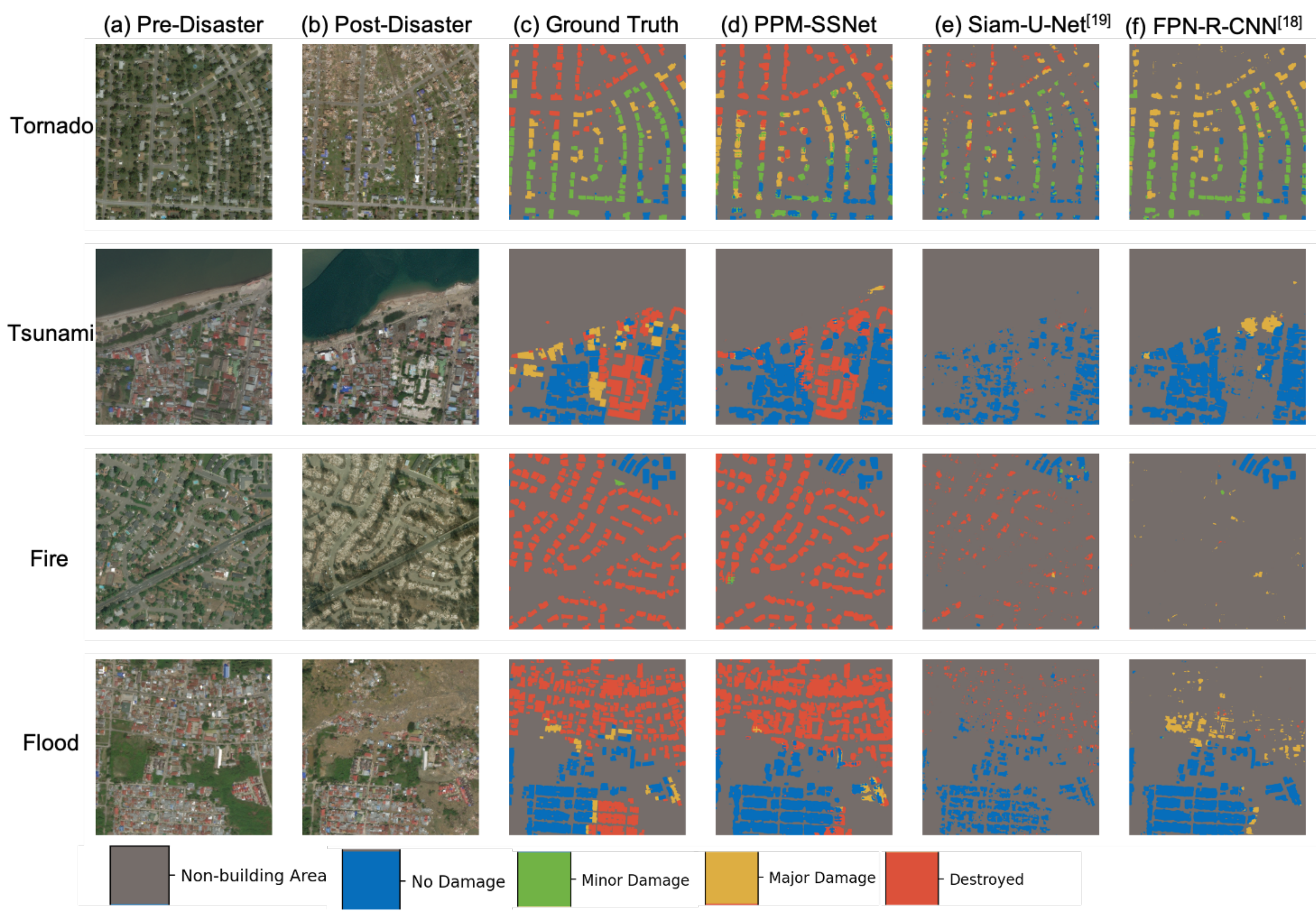

5.3. Comparisons with Other Methods

5.4. Robustness of the Method

6. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| PPM-SSNet | Pyramid Pooling Module-based Semi-Siamese Network |
| PPM | Pyramid Pooling Module |
| CNN | Convolutional Neural Network |
| IoU | Intersection over Union |
| SE | Squeeze-and-Excitation |
| RBs | Residual Blocks |

References

- Hillier, J.K.; Matthews, T.; Wilby, R.L.; Murphy, C. Multi-hazard dependencies can increase or decrease risk. Nat. Clim. Chang. 2020, 10, 595–598.

- Koshimura, S.; Shuto, N. Response to the 2011 great East Japan earthquake and tsunami disaster. Philos. Trans. R. Soc. A Math. Phys. Eng. Sci. 2015, 373, 20140373.

- Mascort-Albea, E.J.; Canivell, J.; Jaramillo-Morilla, A.; Romero-Hernández, R.; Ruiz-Jaramillo, J.; Soriano-Cuesta, C. Action protocols for seismic evaluation of structures and damage restoration of residential buildings in Andalusia (Spain): “IT-Sismo” APP. Buildings 2019, 9, 104.

- Mas, E.; Bricker, J.; Kure, S.; Adriano, B.; Yi, C.; Suppasri, A.; Koshimura, S. Field survey report and satellite image interpretation of the 2013 Super Typhoon Haiyan in the Philippines. Nat. Hazards Earth Syst. Sci. 2015, 15, 805–816.

- Suppasri, A.; Koshimura, S.; Matsuoka, M.; Gokon, H.; Kamthonkiat, D. Application of remote sensing for tsunami disaster. Remote Sens. Planet Earth 2012, 143–168.

- Bai, Y.; Adriano, B.; Mas, E.; Koshimura, S. Building damage assessment in the 2015 Gorkha, Nepal, earthquake using only post-event dual polarization synthetic aperture radar imagery. Earthq. Spectra 2017, 33, 185–195.

- Bai, Y.; Gao, C.; Singh, S.; Koch, M.; Adriano, B.; Mas, E.; Koshimura, S. A framework of rapid regional tsunami damage recognition from post-event TerraSAR-X imagery using deep neural networks. IEEE Geosci. Remote Sens. Lett. 2017, 15, 43–47.

- Moya, L.; Mas, E.; Koshimura, S. Learning from the 2018 Western Japan Heavy Rains to Detect Floods during the 2019 Hagibis Typhoon. Remote Sens. 2020, 12, 2244.

- Koshimura, S.; Moya, L.; Mas, E.; Bai, Y. Tsunami Damage Detection with Remote Sensing: A Review. Geosciences 2020, 10, 177.

- Bai, Y.; Mas, E.; Koshimura, S. Towards operational satellite-based damage-mapping using U-Net convolutional network: A case study of 2011 Tohoku earthquake-tsunami. Remote Sens. 2018, 10, 1626.

- Nex, F.; Duarte, D.; Tonolo, F.G.; Kerle, N. Structural building damage detection with deep learning: Assessment of a state-of-the-art CNN in operational conditions. Remote Sens. 2019, 11, 2765.

- Xu, J.Z.; Lu, W.; Li, Z.; Khaitan, P.; Zaytseva, V. Building damage detection in satellite imagery using convolutional neural networks. arXiv 2019, arXiv:1910.06444.

- Rudner, T.G.; Rußwurm, M.; Fil, J.; Pelich, R.; Bischke, B.; Kopačková, V.; Biliński, P. Multi3Net: Segmenting flooded buildings via fusion of multiresolution, multisensor, and multitemporal satellite imagery. In Proceedings of the AAAI Conference on Artificial Intelligence, Honolulu, HI, USA, 27 January–1 February 2019; Volume 33, pp. 702–709.

- Doshi, J.; Basu, S.; Pang, G. From satellite imagery to disaster insights. arXiv 2018, arXiv:1812.07033.

- Gupta, R.; Hosfelt, R.; Sajeev, S.; Patel, N.; Goodman, B.; Doshi, J.; Heim, E.; Choset, H.; Gaston, M. xBD: A dataset for assessing building damage from satellite imagery. arXiv 2019, arXiv:1911.09296.

- Gupta, R.; Goodman, B.; Patel, N.; Hosfelt, R.; Sajeev, S.; Heim, E.; Doshi, J.; Lucas, K.; Choset, H.; Gaston, M. Creating xBD: A Dataset for Assessing Building Damage from Satellite Imagery. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) Workshops, Long Beach, CA, USA, 16–20 June 2019.

- Gupta, R.; Shah, M. RescueNet: Joint Building Segmentation and Damage Assessment from Satellite Imagery. arXiv 2020, arXiv:2004.07312.

- Cooner, A.J.; Shao, Y.; Campbell, J.B. Detection of urban damage using remote sensing and machine learning algorithms: Revisiting the 2010 Haiti earthquake. Remote Sens. 2016, 8, 868.

- Weber, E.; Kané, H. Building Disaster Damage Assessment in Satellite Imagery with Multi-Temporal Fusion. arXiv 2020, arXiv:2004.05525.

- Hao, H.; Baireddy, S.; Bartusiak, E.R.; Konz, L.; LaTourette, K.; Gribbons, M.; Chan, M.; Comer, M.L.; Delp, E.J. An Attention-Based System for Damage Assessment Using Satellite Imagery. arXiv 2020, arXiv:2004.05525.

- Nia, K.R.; Mori, G. Building damage assessment using deep learning and ground-level image data. In Proceedings of the 2017 14th Conference on Computer and Robot Vision (CRV), Edmonton, AB, Canada, 16–19 May 2017; pp. 95–102.

- Valentijn, T.; Margutti, J.; van den Homberg, M.; Laaksonen, J. Multi-Hazard and Spatial Transferability of a CNN for Automated Building Damage Assessment. Remote Sens. 2020, 12, 2839.

- Harirchian, E.; Lahmer, T.; Kumari, V.; Jadhav, K. Application of Support Vector Machine Modeling for the Rapid Seismic Hazard Safety Evaluation of Existing Buildings. Energies 2020, 13, 3340.

- Zhuo, G.; Dai, K.; Huang, H.; Li, S.; Shi, X.; Feng, Y.; Li, T.; Dong, X.; Deng, J. Evaluating potential ground subsidence geo-hazard of Xiamen Xiang’an new airport on reclaimed land by SAR interferometry. Sustainability 2020, 12, 6991.

- Morfidis, K.E.; Kostinakis, K.G. Use of Artificial Neural Networks in the R/C Buildings’ Seismic Vulnerability Assessment: The Practical Point of View. In Proceedings of the 7th ECCOMAS Thematic Conference on Computational Methods in Structural Dynamics and Earthquake Engineering, Crete, Greece, 24–26 June 2019.

- Harirchian, E.; Lahmer, T.; Rasulzade, S. Earthquake Hazard Safety Assessment of Existing Buildings Using Optimized Multi-Layer Perceptron Neural Network. Energies 2020, 13, 2060.

- Morfidis, K.; Kostinakis, K. Seismic parameters’ combinations for the optimum prediction of the damage state of R/C buildings using neural networks. Adv. Eng. Softw. 2017, 106, 1–16.

- Takahashi, T.; Mori, N.; Yasuda, M.; Suzuki, S.; Azuma, K. The 2011 Tohoku Earthquake Tsunami Joint Survey (TTJS) Group. Available online: http://www.coastal.jp/tsunami2011 (accessed on 30 November 2020).

- Yu, F.; Koltun, V. Multi-scale context aggregation by dilated convolutions. arXiv 2015, arXiv:1511.07122.

- Zhang, H.; Patel, V.M. Densely connected pyramid dehazing network. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 3194–3203.

- Liu, X.; Suganuma, M.; Sun, Z.; Okatani, T. Dual residual networks leveraging the potential of paired operations for image restoration. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 16–20 June 2019; pp. 7007–7016.

- Li, Y.; Song, L.; Chen, Y.; Li, Z.; Zhang, X.; Wang, X.; Sun, J. Learning Dynamic Routing for Semantic Segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 16–28 June 2020; pp. 8553–8562.

- Hu, J.; Shen, L.; Sun, G. Squeeze-and-excitation networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 7132–7141.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Spatial pyramid pooling in deep convolutional networks for visual recognition. IEEE Trans. Pattern Anal. Mach. Intell. 2015, 37, 1904–1916.

- Yamazaki, F.; Iwasaki, Y.; Liu, W.; Nonaka, T.; Sasagawa, T. Detection of damage to building side-walls in the 2011 Tohoku, Japan earthquake using high-resolution TerraSAR-X images. In Proceedings of the Image and Signal Processing for Remote Sensing XIX, Dresden, Germany, 23–26 September 2013; Volume 8892, p. 889212.

- Duarte, D.; Nex, F.; Kerle, N.; Vosselman, G. Towards a more efficient detection of earthquake induced facade damages using oblique UAV imagery. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2017, 42, 93.

| Non-Building Area | Building Area |
|---|---|
| 96.97% | 3.03% |

| | Layer | Parameters | Number |
|---|---|---|---|
| | Conv. | | ×1 |
| Share | Conv. | | ×1 |
| | Conv. | | ×1 |
| Share | RB’ | | ×1 |
| | RB | | ×2 |
| | SE | | ×1 |
| | RB’ | | ×1 |
| | RB | | ×3 |
| Independent | SE | | ×1 |
| | RB’ | | ×1 |
| | RB | | ×22 |
| | SE | | ×1 |
| Single | RB’ | | ×1 |
| | RB | | ×2 |
| | Drop | − | ×1 |
| | Conv. | | ×1 |
| | SE | | ×1 |
| Single | PPM | | ×1 |
| | SE | | ×1 |
| | Conv. | | ×1 |

| Main Label | No Damage | Minor Damage | Major Damage | Destroyed |
|---|---|---|---|---|
| Repeated Times | 0 | 3 | 2 | 1 |
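One way to read the resampling table is as an oversampling rule. Each training image carries a main label (how it is derived is not stated in this section) and is appended that many extra times, so images dominated by rarer damage classes are seen more often during training. The Python sketch below assumes that "Repeated Times" counts additional copies on top of the original sample. The image IDs are hypothetical.

```python
from collections import Counter

# Extra copies per main label, read from the resampling table
# (assumption: the count is added on top of the original sample).
REPEATS = {"no-damage": 0, "minor-damage": 3, "major-damage": 2, "destroyed": 1}


def resample(samples: list[tuple[str, str]]) -> list[tuple[str, str]]:
    """Return the training list with each sample repeated per its main label.

    `samples` holds (image_id, main_label) pairs; every sample is kept once
    and then appended REPEATS[main_label] additional times.
    """
    out = []
    for image_id, label in samples:
        out.extend([(image_id, label)] * (1 + REPEATS[label]))
    return out


# Hypothetical image IDs, one per main label.
train = [("img_a", "no-damage"), ("img_b", "minor-damage"),
         ("img_c", "major-damage"), ("img_d", "destroyed")]
counts = Counter(label for _, label in resample(train))
print(counts["minor-damage"], counts["major-damage"])  # 4 3
```

Repeating whole images, rather than reweighting the loss, leaves the training loop unchanged and lets the random augmentations produce different views of each copy.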

| Method | Probability |
|---|---|
| Pre to Post | 0.015 |
| Flip | 0.5 |
| Rotate by 90 Degrees | 0.95 |
| Shift Pnt | 0.1 |
| Rotation | 0.1 |
| Scale | 0.7 |
| Color Shifts | 0.01 |
| Change HSV | 0.01 |
| CLAHE | 0.0001 |
| Blur | 0.0001 |
| Noise | 0.0001 |
| Saturation | 0.0001 |
| Brightness | 0.0001 |
| Contrast | 0.0001 |
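The augmentation probabilities suggest that each operation fires independently for every training sample. The Python/NumPy sketch below samples which operations to apply and implements only the two geometric ones, a horizontal flip and a rotation by multiples of 90 degrees. Both are applied identically to the pre-disaster image, the post-disaster image and the mask, so pixel alignment between them is preserved. The independent-Bernoulli reading, the flip axis, the random number of quarter turns and the identifier names are assumptions; the remaining operations are only named, not implemented.

```python
import numpy as np

# Per-sample probabilities from the augmentation table (names are hypothetical keys).
AUG_PROBS = {
    "pre_to_post": 0.015, "flip": 0.5, "rotate90": 0.95, "shift_pnt": 0.1,
    "rotation": 0.1, "scale": 0.7, "color_shifts": 0.01, "change_hsv": 0.01,
    "clahe": 1e-4, "blur": 1e-4, "noise": 1e-4, "saturation": 1e-4,
    "brightness": 1e-4, "contrast": 1e-4,
}


def sample_augmentations(rng: np.random.Generator) -> list[str]:
    """Draw one independent Bernoulli trial per augmentation."""
    return [name for name, p in AUG_PROBS.items() if rng.random() < p]


def apply_geometric(ops, rng, pre, post, mask):
    """Apply flip / 90-degree rotation identically to three (H, W, ...) arrays."""
    arrays = [pre, post, mask]
    if "flip" in ops:
        arrays = [np.flip(a, axis=1) for a in arrays]  # horizontal flip
    if "rotate90" in ops:
        k = int(rng.integers(1, 4))  # 1-3 quarter turns (assumed)
        arrays = [np.rot90(a, k, axes=(0, 1)) for a in arrays]
    return arrays


rng = np.random.default_rng(42)
pre = rng.random((4, 4, 3))
post = rng.random((4, 4, 3))
mask = np.arange(16).reshape(4, 4)
ops = sample_augmentations(rng)
pre_a, post_a, mask_a = apply_geometric(ops, rng, pre, post, mask)
```

Drawing the rotation count once and reusing it for all three arrays is the key design choice: a change detector compares pre and post pixels at the same location, so geometric transforms must never be sampled separately per image.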

| Model | | | | | | | | |
|---|---|---|---|---|---|---|---|---|
| Baseline Model | 94.91 | 52.57 | 73.74 | 54.70 | 75.27 | 63.36 | 95.14 | 56.07 |
| +Siamese | 96.98 | 66.07 | 81.53 | 73.93 | 82.42 | 77.95 | 95.98 | 61.97 |
| +Siamese + Attention | 96.60 | 65.45 | 81.03 | 64.98 | 87.26 | 74.49 | 96.15 | 60.90 |
| +Siamese + PPM + Attention | 97.00 | 67.33 | 82.17 | 71.15 | 85.58 | 77.70 | 95.95 | 66.40 |
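The columns of this ablation table are internally consistent with standard definitions. The third value is the mean of the first two (as a mean IoU over the non-building and building classes would be), and the sixth is the F1 score, i.e., the harmonic mean, of the fourth and fifth (a precision/recall pair). Reading the columns as IoU, precision and recall is an inference from this arithmetic, not a label from the original table. The Python sketch below defines IoU for binary masks and checks both relationships on every row, allowing for two-decimal rounding.

```python
def iou(pred: list[int], truth: list[int]) -> float:
    """Intersection over Union for two flattened binary masks (1.0 if both empty)."""
    inter = sum(p and t for p, t in zip(pred, truth))
    union = sum(p or t for p, t in zip(pred, truth))
    return inter / union if union else 1.0


def f1(a: float, b: float) -> float:
    """Harmonic mean of a precision/recall pair."""
    return 2 * a * b / (a + b)


# First six values of each row of the ablation table.
rows = {
    "Baseline Model": (94.91, 52.57, 73.74, 54.70, 75.27, 63.36),
    "+Siamese": (96.98, 66.07, 81.53, 73.93, 82.42, 77.95),
    "+Siamese + Attention": (96.60, 65.45, 81.03, 64.98, 87.26, 74.49),
    "+Siamese + PPM + Attention": (97.00, 67.33, 82.17, 71.15, 85.58, 77.70),
}
for name, (c1, c2, c3, c4, c5, c6) in rows.items():
    # Table values are rounded to two decimals, so allow a small tolerance.
    assert abs((c1 + c2) / 2 - c3) < 0.01, name
    assert abs(f1(c4, c5) - c6) < 0.02, name

print(iou([1, 1, 0, 0], [1, 0, 1, 0]))  # 0.3333333333333333
```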

| Model | | | | | | | | | | | | | |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Baseline Model | 87.22 | 93.04 | 90.04 | 54.64 | 26.20 | 35.43 | 48.14 | 56.41 | 51.95 | 85.41 | 45.02 | 58.96 | 52.95 |
| +Siamese | 90.19 | 79.10 | 84.28 | 22.59 | 55.14 | 32.05 | 67.24 | 65.25 | 66.23 | 92.07 | 55.73 | 69.44 | 55.12 |
| +Siamese + Attention | 91.35 | 77.26 | 83.72 | 22.52 | 56.60 | 32.22 | 61.73 | 66.64 | 64.10 | 83.07 | 62.31 | 71.21 | 55.08 |
| +Siamese + PPM + Attention | 90.64 | 89.07 | 89.85 | 35.51 | 49.50 | 41.36 | 65.80 | 64.93 | 65.36 | 87.08 | 57.89 | 69.55 | 61.55 |
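This table reads as four precision/recall/F1 triples, one per damage class, followed by a single damage score, and that last value matches the harmonic mean of the four per-class F1 scores. Combining it with the localization F1 from the preceding ablation table as 0.3 × localization F1 + 0.7 × damage F1 reproduces that table's final column for every model. The 0.3/0.7 weighting is the xView2 challenge convention and is an assumption here, since the weights are not stated in this section. The Python sketch below checks both relationships.

```python
from statistics import harmonic_mean

# Per-class F1 (third value of each triple) and the final damage score,
# copied from the damage-classification ablation table.
damage = {
    "Baseline Model": ((90.04, 35.43, 51.95, 58.96), 52.95),
    "+Siamese": ((84.28, 32.05, 66.23, 69.44), 55.12),
    "+Siamese + Attention": ((83.72, 32.22, 64.10, 71.21), 55.08),
    "+Siamese + PPM + Attention": ((89.85, 41.36, 65.36, 69.55), 61.55),
}

# Localization F1 and final score (6th and 8th values) from the localization table.
localization = {
    "Baseline Model": (63.36, 56.07),
    "+Siamese": (77.95, 61.97),
    "+Siamese + Attention": (74.49, 60.90),
    "+Siamese + PPM + Attention": (77.70, 66.40),
}


def overall_score(loc_f1: float, dmg_f1: float) -> float:
    """Weighted score; the 0.3 / 0.7 split follows the xView2 convention (assumed)."""
    return 0.3 * loc_f1 + 0.7 * dmg_f1


for name, (class_f1, dmg_f1) in damage.items():
    assert abs(harmonic_mean(class_f1) - dmg_f1) < 0.01, name
    loc_f1, score = localization[name]
    assert abs(overall_score(loc_f1, dmg_f1) - score) < 0.01, name

print(round(harmonic_mean(damage["+Siamese + PPM + Attention"][0]), 2))  # 61.55
```

The harmonic mean is dominated by the weakest class, so the gain from 52.95 to 61.55 largely reflects the improvement on minor damage (35.43 to 41.36) rather than on the already strong no-damage class.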

| Prediction (rows) / Ground Truth (columns) | Non-Building | No-Damage | Minor Damage | Major Damage | Destroyed |
|---|---|---|---|---|---|
| Non-building | | | | | |
| No-damage | | | | | |
| Minor damage | | | | | |
| Major damage | | | | | |
| Destroyed | | | | | |
| Total | | | | | |
| Accuracy (%) | 96.52 | 58.35 | 30.29 | 52.47 | 45.35 |

| Strategy | | | | | | | | | | |
|---|---|---|---|---|---|---|---|---|---|---|
| post-only | 91.88 | 47.32 | 69.60 | 56.94 | 58.16 | 82.84 | 38.16 | 63.23 | 71.10 | 58.69 |
| pre-and-post | 97.00 | 67.33 | 82.17 | 77.70 | 66.40 | 89.85 | 41.36 | 65.36 | 69.55 | 61.55 |

| Networks | Mean | Mean | | | | |
|---|---|---|---|---|---|---|
| Siam-U-Net-Diff | 96.50 | 44.57 | 70.54 | 52.75 | 90.75 | 66.72 |
| Weber et al. | 95.63 | 48.62 | 72.13 | 85.30 | 82.90 | 84.10 |
| PPM-SSNet | 97.00 | 67.33 | 82.17 | 71.15 | 85.58 | 77.70 |

| Networks | | | | | | | | | | | | | |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Siam-U-Net-Diff | 80.58 | 49.64 | 60.51 | 28.69 | 26.32 | 27.45 | 51.31 | 27.60 | 35.89 | 75.00 | 33.03 | 45.86 | 39.01 |
| Weber et al. | 94.80 | 56.90 | 71.10 | 58.90 | 22.00 | 32.00 | 70.10 | 38.00 | 49.30 | 89.50 | 40.03 | 60.71 | 48.73 |
| PPM-SSNet | 90.64 | 89.07 | 89.85 | 35.51 | 49.50 | 41.36 | 65.80 | 64.93 | 65.36 | 87.08 | 57.89 | 69.55 | 61.55 |

| Ground Truth (rows) / Prediction (columns) | Non-Building | No-Damage | Minor Damage | Major Damage | Destroyed |
|---|---|---|---|---|---|
| Non-building | 38,960,379 | 66,366 | 50,870 | 19,195 | 34,488 |
| No-damage | 215,480 | 368,283 | 862 | 1962 | 39,889 |
| Minor damage | 58,680 | 2841 | 34,629 | 1736 | 8293 |
| Major damage | 86,002 | 8 | 4331 | 43,611 | 3272 |
| Destroyed | 196,579 | 80,942 | 12,550 | 6839 | 314,583 |
| Total | 39,517,120 | 518,440 | 103,242 | 73,343 | 400,525 |
| Accuracy (%) | 98.59 | 71.04 | 33.54 | 59.46 | 78.54 |
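Each value in the accuracy row of this confusion matrix equals the diagonal count divided by the total of its column. Since columns are predictions here, this is the share of pixels predicted as a class that truly belong to it, i.e., per-class precision. The Python sketch below recomputes the column totals and the accuracy row directly from the cell counts.

```python
CLASSES = ["Non-Building", "No-Damage", "Minor Damage", "Major Damage", "Destroyed"]

# Rows: ground truth; columns: prediction (pixel counts from the confusion matrix).
CONFUSION = [
    [38_960_379, 66_366, 50_870, 19_195, 34_488],
    [215_480, 368_283, 862, 1_962, 39_889],
    [58_680, 2_841, 34_629, 1_736, 8_293],
    [86_002, 8, 4_331, 43_611, 3_272],
    [196_579, 80_942, 12_550, 6_839, 314_583],
]


def column_totals(m: list[list[int]]) -> list[int]:
    """Sum each column, i.e., the number of pixels predicted as each class."""
    return [sum(col) for col in zip(*m)]


def per_class_accuracy(m: list[list[int]]) -> list[float]:
    """Correct predictions of each class over all predictions of that class, in %."""
    totals = column_totals(m)
    return [100 * m[i][i] / totals[i] for i in range(len(m))]


for name, acc in zip(CLASSES, per_class_accuracy(CONFUSION)):
    print(f"{name}: {acc:.2f}%")
```

Dividing by row totals instead would give per-class recall; the counts show, for example, that 196,579 destroyed-building pixels were predicted as non-building, which lowers recall for that class without affecting the precision computed here.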

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Bai, Y.; Hu, J.; Su, J.; Liu, X.; Liu, H.; He, X.; Meng, S.; Mas, E.; Koshimura, S. Pyramid Pooling Module-Based Semi-Siamese Network: A Benchmark Model for Assessing Building Damage from xBD Satellite Imagery Datasets. Remote Sens. 2020, 12, 4055. https://doi.org/10.3390/rs12244055

Bai Y, Hu J, Su J, Liu X, Liu H, He X, Meng S, Mas E, Koshimura S. Pyramid Pooling Module-Based Semi-Siamese Network: A Benchmark Model for Assessing Building Damage from xBD Satellite Imagery Datasets. Remote Sensing. 2020; 12(24):4055. https://doi.org/10.3390/rs12244055

Chicago/Turabian Style: Bai, Yanbing, Junjie Hu, Jinhua Su, Xing Liu, Haoyu Liu, Xianwen He, Shengwang Meng, Erick Mas, and Shunichi Koshimura. 2020. "Pyramid Pooling Module-Based Semi-Siamese Network: A Benchmark Model for Assessing Building Damage from xBD Satellite Imagery Datasets" Remote Sensing 12, no. 24: 4055. https://doi.org/10.3390/rs12244055

APA Style: Bai, Y., Hu, J., Su, J., Liu, X., Liu, H., He, X., Meng, S., Mas, E., & Koshimura, S. (2020). Pyramid Pooling Module-Based Semi-Siamese Network: A Benchmark Model for Assessing Building Damage from xBD Satellite Imagery Datasets. Remote Sensing, 12(24), 4055. https://doi.org/10.3390/rs12244055