Abstract

The ability of the Canadian agriculture sector to make better decisions and to manage its operations more competitively in the long term is only as good as the information available to inform decision-making. At all levels of government, a reliable flow of information among scientists, practitioners, policy-makers, and commodity groups is critical for developing and supporting agricultural policies and programs. Given the vastness and complexity of Canada’s agricultural regions, space-based remote sensing is one of the most reliable approaches for obtaining detailed information on the evolving state of the country’s environment. Agriculture and Agri-Food Canada (AAFC), the Canadian federal department responsible for agriculture, produces the Annual Space-Based Crop Inventory (ACI) maps for Canada. These maps are valuable operational space-based remote sensing products that cover the agricultural land use and non-agricultural land cover found within Canada’s agricultural extent. Developing and implementing novel methods for improving these products is an ongoing priority of AAFC. Consequently, it is beneficial to apply advanced machine learning and big data processing methods, along with open-access satellite imagery, to produce accurate ACI maps efficiently. In this study, for the first time, the Google Earth Engine (GEE) cloud computing platform was used with an Artificial Neural Network (ANN) algorithm and Sentinel-1 and Sentinel-2 images to produce an object-based ACI map for 2018. The limitations of the proposed method are also discussed, and several suggestions are provided for future studies. The Overall Accuracy (OA) and Kappa Coefficient (KC) of the final 2018 ACI map produced with the proposed GEE cloud method were 77% and 0.74, respectively. Moreover, the average Producer Accuracy (PA) and User Accuracy (UA) for the 17 cropland classes were 79% and 77%, respectively.
Although these accuracies were slightly lower than those of AAFC’s ACI map, this study demonstrated that the proposed cloud computing method merits further investigation because it was more efficient in terms of cost, time, computation, and automation.

1. Introduction

Knowledge of the location, extent, and type of croplands is important for food security systems, poverty reduction, and water resource management [1]. Therefore, stakeholders need accurate cropland maps to coordinate management plans and policies for croplands. In this regard, remote sensing provides a valuable opportunity to classify and monitor agricultural areas in a cost- and time-efficient manner, especially over large areas [2].

Numerous studies have investigated the potential of remote sensing for cropland mapping worldwide, most of which were conducted over relatively small geographical areas. For instance, the study sites of the Group on Earth Observations Global Agricultural Monitoring (GEOGLAM) Joint Experiment for Crop Assessment and Monitoring (JECAM) are no larger than 25 × 25 km². Although such studies are valuable for demonstrating the capability of new methodologies and algorithms for crop mapping, whether they can be scaled up for operational application over much larger regions often remains unclear. One important issue in this regard is processing big geo data (e.g., thousands of satellite images, often comprising tens of terabytes of data) over a large area during classification [3]. This task is impractical with commonly used classification software packages. Thus, several platforms, such as Google Earth Engine (GEE), have been developed to process big geo data effectively and produce large-scale maps.

The GEE cloud computing platform contains numerous open-access remote sensing datasets and various algorithms (e.g., cloud masking functions and classifiers) that can be easily accessed for different applications, such as producing country-wide cropland inventories [4,5,6]. Several studies have already used GEE to produce large-scale cropland classifications. For example, Dong et al. [7] mapped paddy rice regions in northeast Asia using Landsat-8 images within GEE. Their results showed that GEE was highly useful for annual paddy rice mapping over large areas, with a Producer Accuracy (PA) and User Accuracy (UA) of 73% and 92%, respectively. Moreover, Xiong et al. [8] developed an automatic algorithm within GEE to classify croplands across the entire African continent using various in situ and remote sensing datasets. Massey et al. [9] also used Landsat imagery available in GEE to map agricultural areas in North America in 2010. For this purpose, they fused pixel-based Random Forest (RF) classification with an object-based segmentation algorithm called Recursive Hierarchical Segmentation (RHSeg). The Overall Accuracy (OA) of the final map was more than 90%, indicating the high potential of their method for continental cropland mapping. Finally, Xie et al. [10] classified irrigated croplands over the conterminous United States using 30 m optical Landsat imagery in GEE. They obtained an OA and Kappa Coefficient (KC) of 94% and 0.88, respectively, at the aquifer level in 2012.

Canada contains numerous agricultural areas, making the country one of the largest food producers and exporters in the world [7]. Given the vastness and complexity of Canada’s agricultural regions in terms of crop types, remote sensing is one of the most reliable methods for producing detailed information on the evolving state of the country’s environment. Sector-wide, there is a need for relevant, timely, and accurate spatially specific information on the state and trends of agricultural production, bio-physical resource use and mitigation, domestic and international market conditions, and how these conditions relate to global conditions. These needs coincide with a rapid expansion of data availability, driven by technological developments that have made geospatial data less expensive, more reliable, more available, and easier to integrate and disseminate. Over the past decade, various studies have explored the utility of remote sensing methods for agricultural mapping across Canada’s diverse and often complex agricultural landscape. For instance, Deschamps et al. [11] compared Decision Tree (DT) and RF algorithms for mapping Canadian agricultural areas using satellite imagery. They reported that RF outperformed DT by up to 5% in OA and up to 7% in class accuracies. They employed multi-temporal remote sensing images acquired between May and September and reported that the best time to distinguish different crops was from August to early September, at peak biomass and after the wheat harvest. Jiao et al. [12] also applied multi-temporal RADARSAT-2 images to an object-based classification algorithm to classify croplands in an agricultural area in northeastern Ontario, Canada. They classified five crop types with an OA of 95%. Finally, Liao et al. [13] used the RF classifier with multi-temporal RADARSAT-2 datasets to map croplands in southwestern Ontario, Canada.
Their results showed that the elements of the coherency matrix and the imagery acquired between June and November provided the highest classification accuracy (OA = 90%).

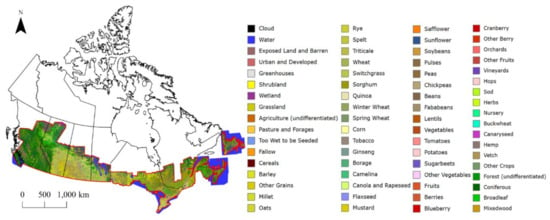

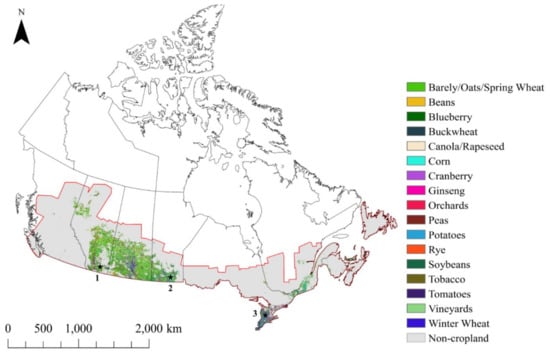

As mentioned above, most studies on cropland classification in Canada have been conducted over relatively small areas using relatively traditional methods, and only a small number of crop types were mapped. The only near-nationwide cropland classification is the Annual Space-Based Crop Inventory (ACI), produced by Agriculture and Agri-Food Canada (AAFC) at 30 m spatial resolution (Figure 1). The ACI is updated annually and is available free of charge via the Government of Canada’s Open Data Portal. It maps the agricultural land use and non-agricultural land cover found within Canada’s agricultural extent [14]. The ACI is produced using DT methodologies with optical (e.g., Landsat-8) and SAR (e.g., SGX dual-polarization RADARSAT-2 in Wide mode) imagery. Although these maps achieve an OA of 85% at the national scale, their accuracy can vary from crop to crop, from region to region, and from year to year, depending on satellite data availability and the distribution of training sites. Generally, the highest mapping accuracies (>90%) are found where crops display significantly different spectral characteristics at the time of data acquisition. Conversely, lower mapping accuracies (70% to 80%) occur where crops are spectrally similar [14].

Figure 1.

Annual Space-based Crop Inventory (ACI) produced by Agriculture and Agri-Food Canada (AAFC) in 2018.

Developing and implementing novel methods for improving the accuracy of the ACI is an ongoing priority of AAFC remote sensing science. Recent advances in machine learning and big data processing suggest that alternative approaches may exist for generating accurate agricultural maps of Canada more quickly. Consequently, the most recent machine learning algorithms and cloud computing methods should be investigated for producing an accurate ACI map in a more operational and automated way. Thus, this study aims, for the first time, to classify croplands over 10 provinces of Canada using GEE and an Artificial Neural Network (ANN). For this purpose, the Sentinel-1 Ground Range Detected (GRD) products and Sentinel-2 images acquired in 2018 were used to produce an object-based ACI map at 10 m spatial resolution.

2. Study Area and Datasets

2.1. Study Area

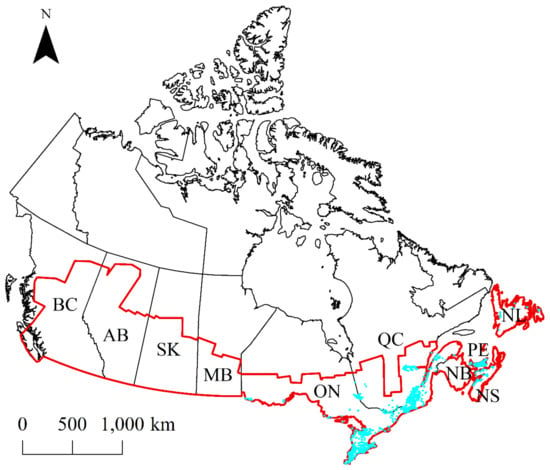

Figure 2 shows the study region (red boundary), which covers 2.803 million km², about 28% of Canada’s terrestrial landmass. This area includes the important agricultural areas of 10 Canadian provinces and is the region for which AAFC generates its ACI maps. Because of its large extent and geography, the study area contains a wide range of ecoregions and varies in ecological factors, including climate, vegetation, and water. Although land cover varies across the study area, it is dominated by forests, wetlands, grasslands, and croplands [3,15].

Figure 2.

The study area (red boundary) within Canada’s boundary (black boundary). The field samples are indicated in cyan. Note that ground observations for the western provinces are collected by provincial crop insurance companies and were therefore unavailable for this study. (NL: Newfoundland and Labrador, NB: New Brunswick, PE: Prince Edward Island, NS: Nova Scotia, QC: Quebec, ON: Ontario, MB: Manitoba, SK: Saskatchewan, AB: Alberta, BC: British Columbia).

2.2. Field Data

Field data for 2018 were provided by AAFC, which collects tens of thousands of crop type observations every year from crop insurance information and car-based “windshield surveys”. In the windshield surveys, primary sampling units are first randomly selected from existing databases (i.e., administrative regions) according to their cropland area [14]. Global Positioning System (GPS)-enabled tablets are then used to record the crop type along roads from a moving vehicle. This approach allows simple and rapid collection of numerous field samples from diverse crop types. In this study, the 2018 AAFC database, which contains the boundaries of the croplands mapped during the 2018 windshield surveys across the country, was used. The field boundary delineations were checked against existing high-resolution optical imagery from the ArcGIS basemap and Google Earth. Figure 2 shows the distribution of field samples across Canada, and Table 1 provides information about the field samples. Although AAFC distinguishes about 59 cropland classes (see Figure 1), only 17 cropland classes, the main classes found in Canada, were mapped in this study, mainly because the number of field samples for some classes was insufficient for training and validating the classification algorithm. Moreover, the Barley, Oats, and Spring Wheat classes were merged into a single class because they had similar spectral and backscattering characteristics in the satellite images and were highly confused in the classification.

Table 1.

The list of cropland classes along with their number of field samples (polygons) which were used in this study.

2.3. Satellite Data

A combination of multi-temporal SAR (Sentinel-1) and optical (Sentinel-2) imagery acquired in 2018 was used in this study. Each of these datasets is sensitive to different spectral and physical characteristics of crops, so combining them could compensate for the limitations of using a single type of imagery [16,17,18]. For instance, Davidson et al. [14] reported that experiments at Canadian research sites showed that combining Landsat and RADARSAT-2 data can improve cropland classification accuracy by up to 5–8% compared with using Landsat imagery alone. Additionally, many studies (e.g., [15,17,19]) have argued that multi-date satellite data are essential for highly accurate cropland classification, because crops differ in phenology and temporal patterns, which multi-temporal remote sensing images can capture.

Sentinel-1 acquires C-band data in several swath modes (e.g., Interferometric Wide swath (IW), Extra Wide swath (EW), and Strip Map (SM)) with either single polarization (HH: Horizontal transmit and Horizontal receive, or VV: Vertical transmit and Vertical receive) or dual polarization (HH/HV, where HV is Horizontal transmit and Vertical receive, or VV/VH, where VH is Vertical transmit and Horizontal receive). The primary conflict-free mode over land (excluding polar regions) is IW with VV+VH polarization [20]. In this study, GRD data collected in IW mode in ascending orbits, with 10 m spatial resolution, were used. Furthermore, since the HH and HH/HV polarizations are intended for polar regions [20], they are not available over all of Canada; thus, only the VV/VH dual polarization was used.

Sentinel-2 is a European multi-spectral mission that acquires imagery in 13 spectral bands, including visible (bands 2–4), Red Edge (RE, bands 5–7), Near Infrared (NIR, band 8), and Shortwave Infrared (SWIR, bands 11–12) bands with 10 m, 20 m, 10 m, and 20 m spatial resolutions, respectively. To reduce the computational cost of processing, only the Normalized Difference Vegetation Index (NDVI) and the Normalized Difference Water Index (NDWI) at 10 m spatial resolution were used in this study.
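Both indices are normalized differences of two bands. The sketch below computes them per pixel in plain Python. The NDVI band pair (B8 for NIR, B4 for Red) is standard for Sentinel-2, but the text does not state which NDWI formulation was used, so both common variants are shown and labeled as assumptions. Only the Green/NIR variant uses two 10 m bands; the NIR/SWIR variant relies on the 20 m band B11.

```python
def normalized_difference(a, b):
    """Generic normalized difference (a - b) / (a + b); None if the denominator is 0."""
    total = a + b
    if total == 0:
        return None
    return (a - b) / total


def ndvi(b8_nir, b4_red):
    # NDVI = (NIR - Red) / (NIR + Red) using the 10 m Sentinel-2 bands B8 and B4.
    return normalized_difference(b8_nir, b4_red)


def ndwi_green_nir(b3_green, b8_nir):
    # ASSUMPTION: Green/NIR (McFeeters) variant; both bands are 10 m.
    return normalized_difference(b3_green, b8_nir)


def ndwi_nir_swir(b8_nir, b11_swir):
    # ASSUMPTION: NIR/SWIR (Gao) variant, tied to canopy water content; B11 is a 20 m band.
    return normalized_difference(b8_nir, b11_swir)


# Hypothetical TOA reflectances (scaled by 10,000) for a vegetated pixel.
# The scale factor cancels in the ratio, so no rescaling is needed.
print(round(ndvi(3200, 400), 3))            # 0.778
print(round(ndwi_green_nir(600, 3200), 3))  # -0.684
```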

3. Methodology

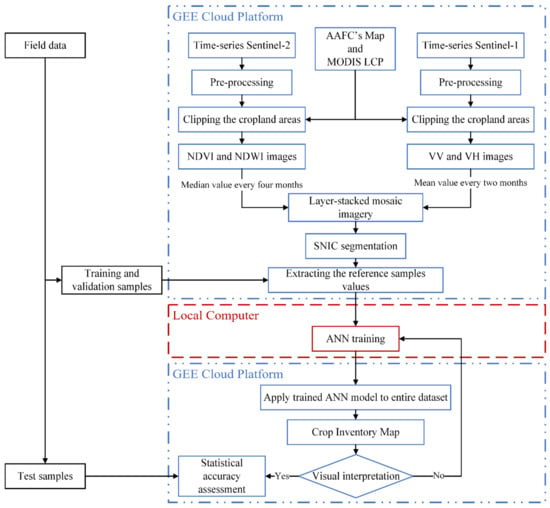

The flowchart of the method to produce the ACI map using the GEE platform and ANN algorithm is illustrated in Figure 3. The details of each step are also discussed in the following five subsections.

Figure 3.

Flowchart of the proposed method to produce the Annual Space-Based Crop Inventory (ACI) map using Google Earth Engine (GEE) and Artificial Neural Network (ANN) algorithm (AAFC: Agriculture and Agri-Food Canada, NDVI: Normalized Difference Vegetation Index, NDWI: Normalized Difference Water Index, SNIC: Simple Non-Iterative Clustering, MODIS LCP: Moderate Resolution Imaging Spectroradiometer Land Cover Product).

3.1. Satellite Data Pre-Processing

The Sentinel-1 Ground Range Detected (GRD) products acquired in ascending orbits and available within GEE (Image Collection ID: COPERNICUS/S1_GRD) were used in this study. These products have already been calibrated, ortho-rectified, and converted to the backscattering coefficient (σ°, dB) using the Sentinel-1 Toolbox [21]. Specifically, the GEE developers applied the following five pre-processing steps to derive the backscattering coefficient of each pixel, the details of which are discussed in [22]: (1) applying the orbit file, (2) GRD border noise removal, (3) thermal noise removal, (4) radiometric calibration, and (5) terrain correction. In addition to these steps, a foreshortening mask was applied to reduce the geometric distortions inherent in SAR imaging [23].
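For reference, the dB values relate to the linear backscattering coefficient through the standard logarithmic conversion:

```latex
\sigma^{\circ}_{\mathrm{dB}} = 10\,\log_{10}\!\left(\sigma^{\circ}_{\mathrm{lin}}\right)
\quad\Longleftrightarrow\quad
\sigma^{\circ}_{\mathrm{lin}} = 10^{\sigma^{\circ}_{\mathrm{dB}}/10}
```

For example, a linear value of 0.01 corresponds to -20 dB.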

The Level-1C Sentinel-2 images available within GEE (Image Collection ID: COPERNICUS/S2) were used in this study. These products provide Top of Atmosphere (TOA) reflectance values scaled by 10,000, obtained through radiometric calibration [24]. Because optical images require cloud masking, all scenes with more than 10% cloud cover were removed. Moreover, the quality band was used to remove invalid observations and to produce a cloud-free optical mosaic over the study area.
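The two filtering steps (scene-level and pixel-level) can be sketched per pixel as follows. This assumes that scene cloud cover comes from the CLOUDY_PIXEL_PERCENTAGE metadata property and that the quality band is QA60 with its opaque-cloud (bit 10) and cirrus (bit 11) flags; the text names neither, so both are assumptions. In GEE the same logic would be written with collection filters and bitwise image operations rather than Python lists.

```python
# Bit positions of the Sentinel-2 Level-1C QA60 band (as described in GEE's
# COPERNICUS/S2 catalog entry): bit 10 = opaque clouds, bit 11 = cirrus.
OPAQUE_CLOUD_BIT = 10
CIRRUS_BIT = 11
MAX_SCENE_CLOUD_PERCENT = 10  # scene-level threshold stated in the text


def keep_scene(cloudy_pixel_percentage):
    """Scene filter: keep scenes with at most 10% cloud cover."""
    return cloudy_pixel_percentage <= MAX_SCENE_CLOUD_PERCENT


def is_clear(qa60_value):
    """Pixel test: True when neither the opaque-cloud nor the cirrus bit is set."""
    cloud = (qa60_value >> OPAQUE_CLOUD_BIT) & 1
    cirrus = (qa60_value >> CIRRUS_BIT) & 1
    return cloud == 0 and cirrus == 0


def mask_pixels(values, qa60_values):
    """Replace flagged observations with None so later compositing skips them."""
    return [v if is_clear(q) else None for v, q in zip(values, qa60_values)]


print(keep_scene(7.5), keep_scene(23.0))                  # True False
print(mask_pixels([1200, 1500, 1800], [0, 1024, 2048]))  # [1200, None, None]
```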

Finally, the pre-processed Sentinel-1 and Sentinel-2 datasets were clipped to the cropland areas defined by the union of AAFC’s cropland inventory map (Figure 1) and the Moderate Resolution Imaging Spectroradiometer (MODIS) yearly land cover product at 500 m spatial resolution (Image Collection ID: MODIS/006/MCD12Q1) [25]. Thus, the final study area consisted of the cropland areas identified by either the AAFC or the MODIS land cover product in 2018.
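The union can be illustrated as a per-pixel logical OR. The text does not list which classes of either product were treated as cropland. The MODIS codes below (IGBP classes 12 and 14 of the LC_Type1 band) and the ACI codes in the usage line are assumptions chosen for illustration.

```python
# IGBP classes in the MODIS MCD12Q1 LC_Type1 band that denote cropland:
# 12 = Croplands, 14 = Cropland/Natural Vegetation Mosaics (assumed selection).
MODIS_CROP_CODES = {12, 14}


def cropland_union_mask(aafc_codes, modis_codes, aafc_crop_codes,
                        modis_crop_codes=MODIS_CROP_CODES):
    """A pixel is kept if EITHER product labels it as cropland.

    Both inputs are assumed to be co-registered on the same grid; resampling
    the 500 m MODIS product onto the finer grid is not shown.
    """
    return [a in aafc_crop_codes or m in modis_crop_codes
            for a, m in zip(aafc_codes, modis_codes)]


# Hypothetical ACI crop codes, for illustration only.
aafc_crop = {146, 153}
print(cropland_union_mask([146, 20, 200, 153], [10, 12, 1, 5], aafc_crop))
# [True, True, False, True]
```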

3.2. Feature Extraction

The performance of classification algorithms depends on the extraction of suitable features [26]. Therefore, in this section, the extracted features from optical and SAR datasets are discussed.

For the SAR features, the mean function was first applied to the pre-processed Sentinel-1 images to produce bi-monthly (two-month) VV and VH composites (see Table 2). This yielded 12 mosaic SAR images (6 VV + 6 VH) over the study area for the whole of 2018. Averaging also reduced the effects of speckle noise in the feature layers [27].
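The temporal compositing can be sketched per pixel as below. It assumes the intervals follow calendar months (January-February, March-April, and so on); Table 2 defines the actual intervals. Applying it to VV and VH gives the 12 SAR layers. The values are averaged in dB, as the text describes; averaging in linear power and converting back is a common alternative.

```python
from datetime import date
from statistics import mean


def bimonthly_index(d):
    """Two-month interval of a date: Jan-Feb -> 0, Mar-Apr -> 1, ..., Nov-Dec -> 5."""
    return (d.month - 1) // 2


def bimonthly_means(observations):
    """Average (date, sigma0_dB) observations of one pixel/polarization per interval.

    Returns six values; None marks an interval without acquisitions.
    """
    bins = [[] for _ in range(6)]
    for d, value in observations:
        bins[bimonthly_index(d)].append(value)
    return [mean(b) if b else None for b in bins]


vh = [(date(2018, 1, 5), -14.0), (date(2018, 2, 10), -16.0), (date(2018, 7, 3), -12.5)]
print(bimonthly_means(vh))  # [-15.0, None, None, -12.5, None, None]
```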

Table 2.

Number of the Sentinel-1 and Sentinel-2 images used for each time interval.

Spectral indices derived from remote sensing images have been widely employed for crop classification [28]. In particular, the Normalized Difference Vegetation Index (NDVI) has proven effective for crop classification [29,30]. Moreover, the Normalized Difference Water Index (NDWI), which is sensitive to plant water content, has been extensively used for crop mapping [31,32,33]. Combining these two indices has also been reported to provide complementary information about vegetation canopies and could thus improve crop classification accuracy [34]. Therefore, in this study, NDVI and NDWI images were calculated from the pre-processed Sentinel-2 images. A median function was applied to all NDVI and NDWI images within each four-month interval, resulting in three NDVI and three NDWI images for 2018 (see Table 2). This produced six homogeneous, cloud-free NDVI/NDWI images over the study area. The median also suppressed erroneous values caused by very bright, very dark, or noisy pixels [3,35]. Four-month intervals were used because four months was the shortest period that yielded cloud-free NDVI/NDWI mosaics over the study area, given the frequent cloud cover in Canada.
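Similarly, the four-month median compositing can be sketched per pixel. This assumes calendar intervals of January-April, May-August, and September-December (Table 2 gives the actual intervals) and that cloud-masked observations arrive as None. The example shows why the median suits this step: a single contaminated value does not shift the composite.

```python
from statistics import median


def four_month_index(month):
    """Jan-Apr -> 0, May-Aug -> 1, Sep-Dec -> 2."""
    return (month - 1) // 4


def four_month_medians(observations):
    """Median of (month, index value or None) observations of one pixel per interval.

    None marks a cloud-masked observation and is skipped.
    """
    bins = [[] for _ in range(3)]
    for month, value in observations:
        if value is not None:
            bins[four_month_index(month)].append(value)
    return [median(b) if b else None for b in bins]


# 0.05 mimics a residual cloud/shadow outlier; the median ignores it,
# whereas the mean of the three valid summer values would drop to about 0.46.
ndvi_obs = [(5, 0.62), (6, 0.71), (7, 0.05), (6, None)]
print(four_month_medians(ndvi_obs))  # [None, 0.62, None]
```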

Finally, the three NDVI, three NDWI, and 12 SAR images were stacked into a single 18-layer mosaic image over the study area. For this purpose, 3,828 Sentinel-1 and 35,945 Sentinel-2 images were processed within GEE (see Table 2). This final mosaic image was used as the input to the classification algorithm. Compositing these features over multi-month intervals also reduced the artifact lines between images acquired in different orbits.

3.3. Segmentation

Many studies have reported that object-based image analysis can improve crop type classification compared with pixel-based methods [36,37]. Therefore, the Simple Non-Iterative Clustering (SNIC) algorithm, a segmentation algorithm available in GEE, was applied to segment the final layer-stacked mosaic image. SNIC is an improved version of the Simple Linear Iterative Clustering (SLIC) segmentation algorithm that uses a non-iterative procedure and enforces connectivity from the start [38]. SNIC is initialized with a user-defined number of seed points spread on a regular grid in the image space. A distance measure and a 4- or 8-connectivity rule are then used to grow each seed point into a final segment. In this study, SNIC was applied to the single 18-layer mosaic image, and the values within each segment were averaged to reduce noise and increase the reliability of the samples used in subsequent steps. These segments were then used to perform an object-based classification to produce the ACI map. The SNIC implementation in GEE has five input parameters [39]: Size, Compactness, Connectivity, Neighborhood Size, and Seeds (optional). In this study, these parameters were set to 50, 1, 8, 100, and null, respectively, based on trial and error.
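To make the region-growing idea concrete, the following is a simplified, pure-Python sketch of SNIC followed by per-segment averaging. It is not GEE's implementation. The combined distance uses a SLIC-style weighting of spectral distance and spatial distance scaled by compactness and seed spacing (an assumption), and GEE's Neighborhood Size parameter, which handles tile boundaries, has no counterpart here. The defining SNIC property is kept: pixels are assigned in a single pass from a priority queue, and each cluster's centroid is updated online as pixels join it.

```python
import heapq
import math


def snic(image, size, compactness, connectivity=8):
    """Simplified SNIC on an image given as nested lists (rows x cols x bands)."""
    rows, cols, n_bands = len(image), len(image[0]), len(image[0][0])
    labels = [[-1] * cols for _ in range(rows)]
    half = size // 2
    # Seeds on a regular grid with spacing `size`
    seeds = [(r, c) for r in range(half, rows, size) for c in range(half, cols, size)]
    # Running sums per cluster: [sum_row, sum_col, sum_band_0, ..., count]
    sums = [[0.0] * (2 + n_bands) + [0] for _ in seeds]
    if connectivity == 8:
        offsets = [(dr, dc) for dr in (-1, 0, 1) for dc in (-1, 0, 1) if (dr, dc) != (0, 0)]
    else:
        offsets = [(-1, 0), (1, 0), (0, -1), (0, 1)]
    queue = [(0.0, r, c, k) for k, (r, c) in enumerate(seeds)]
    heapq.heapify(queue)
    while queue:
        _, r, c, k = heapq.heappop(queue)
        if labels[r][c] != -1:
            continue  # already claimed by a closer cluster
        labels[r][c] = k
        s = sums[k]
        s[0] += r
        s[1] += c
        for b in range(n_bands):
            s[2 + b] += image[r][c][b]
        s[-1] += 1
        n = s[-1]
        centroid = [v / n for v in s[:-1]]  # online update, no iterations
        for dr, dc in offsets:
            nr, nc = r + dr, c + dc
            if 0 <= nr < rows and 0 <= nc < cols and labels[nr][nc] == -1:
                spatial = math.hypot(nr - centroid[0], nc - centroid[1])
                spectral = math.sqrt(sum(
                    (image[nr][nc][b] - centroid[2 + b]) ** 2 for b in range(n_bands)))
                dist = math.sqrt(spectral ** 2 + (compactness * spatial / size) ** 2)
                heapq.heappush(queue, (dist, nr, nc, k))
    return labels


def segment_means(image, labels):
    """Average every band within each segment (the object-level features)."""
    totals, counts = {}, {}
    for r, row in enumerate(labels):
        for c, k in enumerate(row):
            t = totals.setdefault(k, [0.0] * len(image[r][c]))
            for b, v in enumerate(image[r][c]):
                t[b] += v
            counts[k] = counts.get(k, 0) + 1
    return {k: [v / counts[k] for v in t] for k, t in totals.items()}


# A 4 x 8 single-band image: left half 0.0, right half 1.0.
img = [[[0.0]] * 4 + [[1.0]] * 4 for _ in range(4)]
seg = snic(img, size=4, compactness=0.1)
print(seg[0])                   # [0, 0, 0, 0, 1, 1, 1, 1]
print(segment_means(img, seg))  # {0: [0.0], 1: [1.0]}
```

The toy parameters are chosen for a tiny image; they are not the values used in this study.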

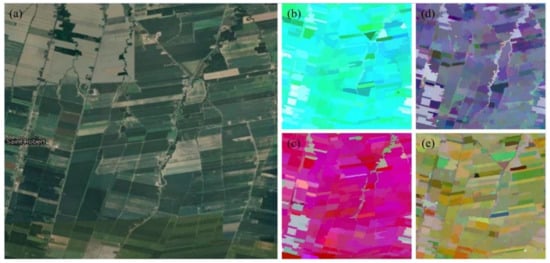

Figure 4 illustrates the results of SNIC segmentation over a sample region in the study area.

Figure 4.

Results of applying the Simple Non-Iterative Clustering (SNIC) algorithm and the calculated mean value of each segment for a sample region in the study area. (a) high-resolution image of the corresponding region. False RGB color composites of (b) NDVI-1, NDVI-2, and NDVI-3, (c) NDWI-1, NDWI-2, and NDWI-3, (d) VV-1, VV-2, and VV-3, and (e) VV-4, VV-5, and VV-6 (NDVI: Normalized Difference Vegetation Index, NDWI: Normalized Difference Water Index, VV: Vertical transmit and Vertical receive polarization).

3.4. Classification

Neural network and deep learning algorithms, such as ANNs, have been extensively employed for crop type classification [33,40,41,42]. ANNs are inspired by biological neural networks, simulating the recognition system of the human brain, and have a high capacity for non-linear classification [43].

In this study, the single 18-layer mosaic image generated within GEE was used to produce the ACI map with the ANN algorithm. To this end, the reference samples were first randomly divided into training (70%), validation (15%), and test (15%) sets. According to sampling theory, random sampling should provide independent information and avoid spatial bias [14]. The training, validation, and test samples were used, respectively, to train the ANN, tune the hyperparameters of its architecture, and assess the accuracy of the final ACI map. Despite its high computational performance, GEE has computational limitations when the number of reference samples or input features for classification is very large [3,6,35]. Therefore, the mean values of the training and validation samples were first extracted (for each segment) from the single 18-layer mosaic image in GEE and then transferred to a local computer to train a feedforward ANN. The training phase was conducted on a local computer with an Intel Core i7-5820K CPU (3.3 to 3.6 GHz).
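The random 70/15/15 split can be sketched as follows. The seed value is arbitrary, and the unit being split is an assumption: the text does not say whether polygons, segments, or pixels were shuffled. Passing field-polygon IDs would keep all pixels of one field in a single set, which limits optimistic bias from spatial autocorrelation between training and test data.

```python
import random


def split_samples(sample_ids, seed=2018):
    """Shuffle sample IDs and split them 70% / 15% / 15% into train/validation/test."""
    ids = list(sample_ids)
    random.Random(seed).shuffle(ids)  # fixed (arbitrary) seed for a reproducible split
    n = len(ids)
    n_train = n * 70 // 100           # integer arithmetic avoids float rounding
    n_val = n * 15 // 100
    train = ids[:n_train]
    val = ids[n_train:n_train + n_val]
    test = ids[n_train + n_val:]      # the remainder, about 15%
    return train, val, test


train, val, test = split_samples(range(1000))
print(len(train), len(val), len(test))  # 700 150 150
```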

Generally, four choices determine the architecture of an ANN model: the number of layers, the number of neurons, the type of activation function, and the learning algorithm. In this study, an ANN with two hidden layers of 40 and 30 neurons, respectively, was used. The numbers of neurons were selected by trial and error to find the best-performing values. The input and output layers had 18 and 17 neurons, equal to the number of input features and ACI classes, respectively. The activation function of all neurons was the tangent sigmoid. The minimum performance gradient was used as the stopping criterion, and the model met this criterion after 4,226 iterations. The Scaled Conjugate Gradient (SCG) method was employed as the back-propagation learning algorithm. SCG uses second-order information (approximations of the second derivatives) together with conjugate search directions to find a better local minimum at each step [44]. In contrast to algorithms that use the gradient direction as the search direction, SCG avoids zig-zag solutions [45]. Furthermore, SCG has relatively small storage requirements and fast convergence, which can be useful for large-scale studies [45,46].
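The architecture described above (18 inputs, hidden layers of 40 and 30 neurons, 17 outputs, tangent sigmoid throughout) can be written as a plain forward pass. The sketch uses random weights as a stand-in for the SCG-trained ones and does not reproduce the training. The tangent sigmoid is implemented with `math.tanh`, to which it is mathematically equivalent. The network has 18×40+40 + 40×30+30 + 30×17+17 = 2,517 trainable parameters.

```python
import math
import random

LAYER_SIZES = [18, 40, 30, 17]  # input features, two hidden layers, ACI classes


def init_network(sizes, seed=0):
    """Random weights and zero biases: a stand-in for the SCG-trained values."""
    rng = random.Random(seed)
    layers = []
    for n_in, n_out in zip(sizes[:-1], sizes[1:]):
        weights = [[rng.uniform(-0.1, 0.1) for _ in range(n_in)] for _ in range(n_out)]
        biases = [0.0] * n_out
        layers.append((weights, biases))
    return layers


def forward(layers, features):
    """Feedforward pass with the tangent sigmoid (tanh) in every layer."""
    activations = features
    for weights, biases in layers:
        activations = [
            math.tanh(sum(w * a for w, a in zip(row, activations)) + b)
            for row, b in zip(weights, biases)
        ]
    return activations


def predict_class(layers, features):
    """Index of the output neuron with the largest activation."""
    outputs = forward(layers, features)
    return max(range(len(outputs)), key=outputs.__getitem__)


net = init_network(LAYER_SIZES)
print(sum(len(w) * len(w[0]) + len(b) for w, b in net))  # 2517
print(0 <= predict_class(net, [0.5] * 18) < 17)          # True
```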

After training, the ANN model was transferred to GEE and applied to the entire study area to produce the ACI map. All weights and biases of the ANN model trained on the local computer were manually transferred into the GEE API.
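Because inference needs only the weight matrices and bias vectors, transferring the model amounts to moving those numbers. The text does not say how this was done, so this minimal sketch assumes JSON as the exchange format. The resulting text could be pasted into a GEE script as array literals, where the forward pass would be rebuilt from array and image operations; that GEE side is not shown.

```python
import json


def export_weights(layers):
    """Serialise [(weights, biases), ...] to JSON text for pasting into a script."""
    return json.dumps([{"weights": w, "biases": b} for w, b in layers])


def import_weights(text):
    """Parse the JSON text back into [(weights, biases), ...]."""
    return [(layer["weights"], layer["biases"]) for layer in json.loads(text)]


# A toy 2-input, 2-output layer (hypothetical values).
toy = [([[0.25, -1.5], [0.125, 3.0]], [0.1, -0.2])]
text = export_weights(toy)
print(import_weights(text) == toy)  # True: floats survive the JSON round trip
```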

3.5. Accuracy Assessment

It is necessary to evaluate the final ACI map to ensure the reliability of the proposed method. Therefore, its accuracy was assessed both visually and statistically. For the visual assessment, the high-resolution satellite imagery available in ArcGIS and Google Earth was used. For the statistical assessment, the confusion matrix of the classification was calculated within GEE using the independent test samples (15% of all samples). Metrics including OA, KC, PA, UA, Commission Error (CE), and Omission Error (OE) were then derived from the confusion matrix.
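All of these metrics follow directly from the confusion matrix. The sketch below assumes rows hold reference classes and columns hold predicted classes; the orientation of Table 4 is an assumption here. PA and UA divide the diagonal by row and column totals, OE and CE are their complements, and Kappa compares the observed agreement with the agreement expected by chance from the marginals. A class-averaged PA or UA can then be taken as the mean of the per-class values; an unweighted mean is assumed.

```python
def accuracy_metrics(matrix):
    """OA, Kappa, and per-class PA/UA/OE/CE from a square confusion matrix.

    Assumes rows = reference classes and columns = predicted classes, and that
    every class has at least one reference and one predicted sample.
    """
    k = len(matrix)
    total = sum(sum(row) for row in matrix)
    row_sums = [sum(row) for row in matrix]
    col_sums = [sum(matrix[i][j] for i in range(k)) for j in range(k)]
    diag = [matrix[i][i] for i in range(k)]
    oa = sum(diag) / total
    # Chance agreement from the row and column marginals
    pe = sum(r * c for r, c in zip(row_sums, col_sums)) / total ** 2
    kappa = (oa - pe) / (1 - pe)
    pa = [d / r for d, r in zip(diag, row_sums)]  # producer's accuracy
    ua = [d / c for d, c in zip(diag, col_sums)]  # user's accuracy
    return {"OA": oa, "KC": kappa, "PA": pa, "UA": ua,
            "OE": [1 - p for p in pa], "CE": [1 - u for u in ua]}


m = accuracy_metrics([[50, 10],
                      [5, 35]])
print(round(m["OA"], 3), round(m["KC"], 3))   # 0.85 0.694
print([round(p, 3) for p in m["PA"]])         # [0.833, 0.875]
print([round(u, 3) for u in m["UA"]])         # [0.909, 0.778]
print(round(sum(m["PA"]) / len(m["PA"]), 3))  # mean PA: 0.854
```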

4. Results

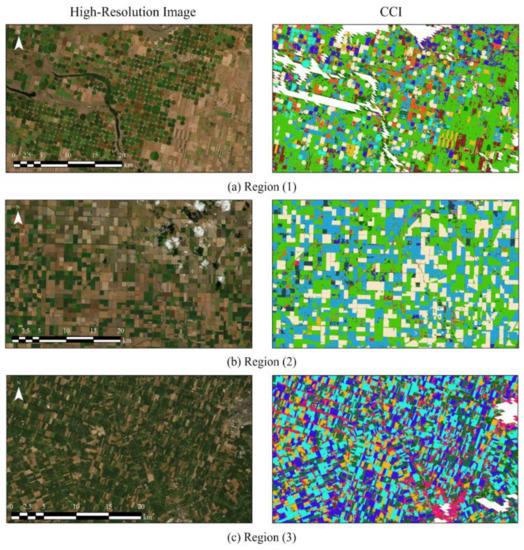

Figure 5 shows the object-based 2018 ACI map produced using the ANN algorithm and a combination of Sentinel-1 and -2 imagery within the GEE cloud computing platform. The resulting map is visually clean and largely free of salt-and-pepper noise because of the SNIC segmentation described in Section 3.3. Visual interpretation showed that the proposed method was reasonably able to detect and classify the cropland classes. To illustrate this, three zoomed regions are shown alongside the corresponding high-resolution images available in ArcGIS (see Figure 6).

Figure 5.

The object-based Annual Space-Based Crop Inventory (ACI) map produced using Sentinel-1 and -2 imagery, Google Earth Engine (GEE) cloud computing, and Artificial Neural Networks (ANN) algorithm. Three regions marked with black stars were selected for visual accuracy assessment of the produced ACI map.

Figure 6.

Three zoomed regions of the Annual Space-Based Crop Inventory (ACI) map along with the corresponding high-resolution satellite images used for visual assessment (see Figure 5 for the location of each region and the legend of the classes).

The first zoomed region (Figure 6a) is located in southern Alberta, where both circular (center-pivot irrigation) and rectangular croplands exist. The proposed approach successfully identified the crop types and excluded the non-cropland areas. Moreover, it delineated the cropland boundaries (circular patterns) well over this region. The dominant cropland class in this region was Barley/Oats/Spring Wheat. Likewise, the proposed method efficiently classified the croplands in southern Manitoba (Figure 6b), where the Potatoes and Canola/Rapeseed classes covered the largest cultivated area. Furthermore, Figure 6c shows the effectiveness of the proposed GEE workflow in classifying the croplands of southeastern Ontario, a region dominated by Corn and Winter Wheat.

Table 3 lists the area of each cropland type calculated from the produced ACI map. In total, 81,157,426 hectares of the study area were identified and classified as croplands. Overall, the Barley/Oats/Spring Wheat, Canola/Rapeseed, and Blueberry classes had the largest coverage over the study area, with areas of about 34, 12, and 5 million hectares, respectively. The smallest coverages were for the Tomatoes, Tobacco, and Ginseng classes, with areas of approximately 118, 124, and 129 thousand hectares, respectively.
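As a check on the units, each 10 m pixel covers 100 m², i.e., 0.01 ha, so the reported cropland total corresponds to roughly 8.1 billion pixels. The sketch assumes square pixels of constant ground area (an equal-area grid); in GEE, per-pixel areas are usually taken from `ee.Image.pixelArea()` to account for projection effects.

```python
def pixels_to_hectares(pixel_count, pixel_size_m=10):
    """Area of `pixel_count` square pixels in hectares (1 ha = 10,000 m^2)."""
    return pixel_count * pixel_size_m ** 2 / 10_000


def hectares_to_pixels(hectares, pixel_size_m=10):
    """Number of square pixels needed to cover `hectares`."""
    return round(hectares * 10_000 / pixel_size_m ** 2)


print(hectares_to_pixels(81_157_426))     # 8115742600
print(pixels_to_hectares(8_115_742_600))  # 81157426.0
```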

Table 3.

Area of different cropland classes over the study area calculated from the produced ACI map in this study.

Table 4 provides the confusion matrix of the classified ACI map, computed from over 10 million independent test samples (pixels). The OA and KC were 77% and 0.74, respectively, indicating the high potential of the proposed cloud computing method for ACI production. The classes had medium to high PA values, ranging from 63% to 96%. Cranberry and Canola/Rapeseed had the highest and second-highest PAs, respectively; these classes have distinct spectral (NDVI + NDWI) and backscattering (VV + VH) characteristics compared with the other cropland classes. Moreover, the ACI map achieved a satisfactory average UA of 77%. The Cranberry and Blueberry classes obtained the highest and second-highest UAs, respectively, while Rye and Soybeans had the lowest UA values. The Barley/Oats/Spring Wheat class showed the highest confusion with other cropland classes (six other classes), accounting for a combined PA loss of 34%. Furthermore, the largest reciprocal confusion was observed between Soybeans and Beans, which reduced their PAs by approximately 28% in total.

Table 4.

Confusion matrix of the produced Annual Space-Based Crop Inventory (ACI) map in terms of the number of pixels.

5. Discussion

This section discusses the limitations of the study related to the field data, the spectral similarity of croplands, and the discrimination of cropland from non-cropland areas. Several suggestions, such as including more satellite data, producing a Canada-wide cropland map, producing the ACI map, and change analysis, are also provided.

5.1. Field Data

As in most remote sensing studies, the quality and quantity of field data play an important role in classification accuracy. This problem is exacerbated here because of the small number of field samples collected relative to the very large geographical area mapped. In fact, the field data for several classes were insufficient for training and validating the classification algorithm, and these classes were therefore removed. Large differences in the number of reference samples among classes result in imbalanced reference sets, which affect classifier performance [47,48,49]. Thus, the 59 cropland classes used by AAFC were reduced to 17 cropland classes in this study. These 17 classes are the major croplands in Canada by seeded area. Another factor that made this study more sensitive to the amount of field data was the use of an ANN as the classification method, which was a further reason for removing the classes with few field samples.

Another field data issue that affected classification accuracy was that field data were available for only seven Canadian provinces. Provinces differ in climate and in land cover/land use. Thus, the lack of field data for some provinces reduced the accuracy and reliability of the final ACI map in several regions.

5.2. Spectral Similarity of Croplands

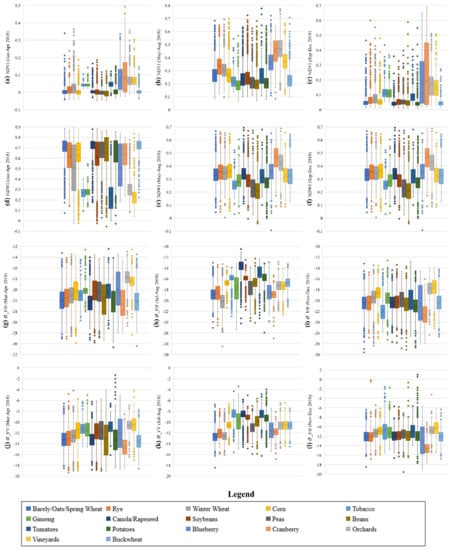

In this study, 17 classes were distinguished, some of which have similar spectral and physical characteristics and therefore similar pixel values in both optical and SAR imagery. Figure 7 shows box plots of the 17 cropland classes for the different spectral and SAR features in different time ranges of 2018. There is considerable overlap between the values of various croplands, which made discriminating these classes more challenging. This was also reflected in the confusion matrix (Table 4) and the final ACI map (Figure 5), where high confusion between some classes and several misclassifications were observed. Furthermore, using a given feature at different time ranges was beneficial for discriminating croplands. For example, the mosaic SAR images with VH polarization (i.e., Figure 7g–i) showed different box plot patterns, indicating that VH features generated in different seasons improved cropland discrimination. Finally, the confusion among cropland classes would likely have been much worse had the number of classes not been reduced to 17.

Figure 7.

Box plots of the cropland classes using (a–c) three mosaic Normalized Difference Vegetation Index (NDVI) features, (d–f) three mosaic Normalized Difference Water Index (NDWI) features, (g–i) three mosaic backscattering coefficients at the Vertical transmit and Horizontal receive (VH) polarization, (j–l) three mosaic backscattering coefficients at the Vertical transmit and Vertical receive (VV) polarization. The cross (×) mark indicates the mean value.
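
For reference, the two optical indices in Figure 7 follow standard definitions: NDVI = (NIR - Red)/(NIR + Red) and, following the Gao (1996) formulation listed in the references, NDWI = (NIR - SWIR)/(NIR + SWIR). The sketch below computes them per pixel and builds "mosaic" features as median composites over time windows. The Sentinel-2 band mapping (B4 red, B8 NIR, B11 SWIR), the use of the median, and the day-of-year windows are assumptions for illustration; the compositing rule and dates used in this study are not restated here. Within GEE, the same ratio is available as `ee.Image.normalizedDifference`.

```python
import statistics

def ndvi(nir, red):
    """NDVI = (NIR - Red) / (NIR + Red); for Sentinel-2, assumed NIR = B8, Red = B4."""
    return (nir - red) / (nir + red)

def ndwi_gao(nir, swir):
    """Gao-style NDWI = (NIR - SWIR) / (NIR + SWIR); for Sentinel-2, assumed SWIR = B11."""
    return (nir - swir) / (nir + swir)

def seasonal_composites(observations, periods):
    """Median of a per-pixel time series within each inclusive (start_doy, end_doy) window.

    observations: list of (day_of_year, value) pairs, e.g. cloud-free NDVI or VH values.
    periods: hypothetical compositing windows, one "mosaic" feature per window.
    Returns one value per window, or None if no observation falls inside it.
    """
    out = []
    for start, end in periods:
        vals = [v for doy, v in observations if start <= doy <= end]
        out.append(statistics.median(vals) if vals else None)
    return out
```

The same compositing function applies unchanged to VH or VV backscatter series, yielding the three time-range features per index shown in panels (a–l).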

5.3. Discrimination of Cropland and Non-Cropland Areas

Generally, cropland classification has two main steps: (1) discriminating cropland from non-cropland areas and (2) classifying crop types within the cropland area. As discussed in Section 3, the AAFC’s ACI map and the MODIS yearly land cover product were initially used to distinguish croplands from non-croplands. However, the accuracy of these products in identifying cropland, especially that of the MODIS product, is questionable in several regions. Thus, considerable error could have been introduced at this step. It is therefore recommended that a dedicated algorithm be developed to distinguish these two general categories (i.e., cropland and non-cropland) in order to produce a more accurate and reliable ACI map in the future.
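
The masking step described above can be sketched as follows. The sketch assumes both products have already been resampled to a common grid and that a boolean "is cropland" flag has been derived from the prior ACI map; the union/intersection choice and the function names are hypothetical, since the exact combination rule is not restated here. MODIS classes 12 (Croplands) and 14 (Cropland/Natural Vegetation Mosaics) are the cropland classes of the IGBP legend in the MCD12Q1 LC_Type1 layer. The disagreement rate offers one simple way to flag regions where the two products conflict and the mask is least trustworthy.

```python
# IGBP classes of the MODIS MCD12Q1 LC_Type1 layer that denote cropland.
MODIS_CROP_CLASSES = {12, 14}  # 12 = Croplands, 14 = Cropland/Natural Vegetation Mosaics

def cropland_mask(aci_is_crop, modis_class, require_agreement=False):
    """Per-pixel cropland mask from two co-registered prior products.

    aci_is_crop: booleans, True where a prior ACI map labels the pixel as cropland.
    modis_class: MODIS LC_Type1 class codes resampled to the same grid.
    require_agreement=False takes the union (fewer missed fields, more false cropland);
    True takes the intersection (stricter, but drops fields that either product misses).
    """
    if len(aci_is_crop) != len(modis_class):
        raise ValueError("inputs must cover the same pixels")
    mask = []
    for aci, modis in zip(aci_is_crop, modis_class):
        modis_crop = modis in MODIS_CROP_CLASSES
        mask.append((aci and modis_crop) if require_agreement else (aci or modis_crop))
    return mask

def disagreement_rate(aci_is_crop, modis_class):
    """Fraction of pixels where the two products disagree about cropland."""
    pairs = list(zip(aci_is_crop, modis_class))
    disagree = sum(1 for aci, modis in pairs if aci != (modis in MODIS_CROP_CLASSES))
    return disagree / len(pairs)
```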

5.4. Including More Satellite Data

One approach to improving classification accuracy is to use remote sensing datasets with different characteristics, which provide more information for discriminating croplands [50]. For instance, AAFC used data from three satellites, Landsat-8, Sentinel-2, and RADARSAT-2, to produce the 2018 ACI map at 30 m spatial resolution (Figure 1). In this study, however, the ACI map was produced using Sentinel-1 and Sentinel-2 datasets at 10 m spatial resolution. Several recently launched and older satellites could be included in ACI production to improve accuracy. For example, Canada launched the RADARSAT Constellation Mission (RCM) on June 12, 2019. RCM provides circular polarization data at high spatial resolution and, thus, could considerably improve the accuracy of future ACI maps.
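
In an object-based workflow, adding a sensor amounts to adding per-object features to each object's feature vector. The sketch below averages each sensor's pixels within an object and concatenates the results; the sensor names, the use of the mean, and the `None` placeholder for missing acquisitions are assumptions for illustration, not the processing used by AAFC or in this study. Because statistics are computed per object, sensors with different pixel sizes (e.g., 10 m and 30 m) can be combined without resampling, provided each sensor's pixels have been assigned to objects.

```python
import statistics

def object_features(sources, object_ids):
    """Concatenate per-object mean values from several sensors into one feature vector.

    sources: mapping sensor_name -> {object_id: [pixel values inside the object]}.
    Sensors are stacked in sorted name order so every vector has the same layout.
    A sensor with no pixels for an object contributes None (e.g., cloud or no acquisition).
    """
    names = sorted(sources)
    vectors = {}
    for oid in object_ids:
        vec = []
        for name in names:
            pixels = sources[name].get(oid, [])
            vec.append(statistics.fmean(pixels) if pixels else None)
        vectors[oid] = vec
    return names, vectors
```

A new source, such as a hypothetical `"RCM_RH"` entry, would simply extend every vector by one element; the classifier would then need a strategy (imputation or a separate model) for objects where that entry is `None`.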

5.5. Canada-Wide Cropland Inventory Map

As discussed, the area specified by AAFC, which covers approximately 28% of the country (Figure 2), was used in this study to produce the ACI map. Although this area contains most of Canada’s croplands, there are additional croplands outside this boundary, such as in Yukon. Consequently, future studies should aim to produce an ACI map for the entire country or extend the classification boundary until all cropland areas are included.

5.6. ACI Maps for Different Years and Change Analysis

To manage the amount of food produced and distributed in a country, it is important to track crop production across years. Moreover, to protect agricultural areas and other land covers, such as wetlands, it is imperative to detect which land covers have been converted to agricultural fields and vice versa. ACI maps produced for different years can answer these questions. The proposed GEE cloud computing method can be applied to produce such multi-year ACI maps and to assess changes more cost-efficiently than the methods currently used by AAFC.
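
A common way to compare two annual maps is post-classification change detection: count every (class in year 1, class in year 2) pair over co-registered pixels. The sketch below assumes both maps are flattened to equal-length label sequences on the same 10 m grid; the function names and the 10 m default are illustrative assumptions, not part of the proposed method.

```python
from collections import Counter

def transition_matrix(map_t1, map_t2):
    """Count pixels for each (class at t1, class at t2) pair of two co-registered maps."""
    if len(map_t1) != len(map_t2):
        raise ValueError("maps must cover the same pixels")
    return Counter(zip(map_t1, map_t2))

def converted_to(transitions, target):
    """Pixels that changed into `target` from any other class, keyed by the source class."""
    return {src: n for (src, dst), n in transitions.items() if dst == target and src != target}

def area_ha(pixel_count, pixel_size_m=10.0):
    """Convert a pixel count to hectares (1 ha = 10,000 m^2)."""
    return pixel_count * pixel_size_m ** 2 / 10_000
```

For example, `converted_to(transitions, "canola")` would list how many pixels of wetland, forest, or other classes became canola, and `area_ha` converts those counts to areas (each 10 m pixel is 0.01 ha).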

6. Conclusions

Although a vast portion of Canada is covered by different types of croplands, advanced machine learning and big geo data processing methods have not yet been investigated for producing ACI maps. Therefore, this study presented a cloud computing method to produce object-based ACI maps using a combination of multi-date Sentinel-1 and Sentinel-2 images acquired in 2018. The method was implemented within GEE, and an ANN was applied to delineate 17 cropland classes over 10 provinces of Canada. The overall classification accuracy was 77%, and the average PA and UA for the cropland classes were 79% and 77%, respectively. This level of accuracy is reasonable for a first effort to use GEE for ACI production. Using the GEE cloud platform and open-access satellite images (i.e., Sentinel-1/-2) instead of the local computers and costly images (e.g., RADARSAT-2) used by AAFC made the proposed method more efficient in terms of time, cost, and computation, and would facilitate automated production of ACI maps in the future. The proposed approach could also be applied to create Canada-wide cropland inventories and to support change detection and monitoring efforts. Future studies should consider the proposed method along with the following factors to produce more accurate and reliable ACI maps: (1) more field data should be used to discriminate more classes and improve class accuracies; (2) an efficient algorithm should be developed to discriminate cropland from non-cropland regions, which would improve the accuracy and reliability of the map and facilitate the production of Canada-wide ACI maps; (3) ACI maps should be produced for different years, and a change detection algorithm should be developed to assess the amount of change; and (4) additional satellite data, such as RCM and X-band SAR images, should be incorporated into the classification.
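
The OA, Kappa coefficient, PA, and UA reported above are standard statistics derived from a confusion matrix. The sketch below shows how they are computed, assuming rows hold the reference (field) classes and columns hold the classified map classes; conventions differ between studies, so swapping the axes swaps PA and UA. The "average" PA and UA would then be the mean of the per-class lists. Classes with no reference or no predicted samples would cause a division by zero and would need to be excluded first.

```python
def accuracy_metrics(cm):
    """Overall accuracy, Cohen's kappa, and per-class producer's/user's accuracies.

    cm[i][j] = number of validation samples of reference class i classified as class j
    (assumed convention: rows = reference, columns = classified map).
    """
    k = len(cm)
    total = sum(sum(row) for row in cm)
    diag = sum(cm[i][i] for i in range(k))
    row_tot = [sum(cm[i]) for i in range(k)]
    col_tot = [sum(cm[i][j] for i in range(k)) for j in range(k)]
    oa = diag / total
    # Expected chance agreement from the marginal totals.
    pe = sum(row_tot[i] * col_tot[i] for i in range(k)) / total ** 2
    kappa = (oa - pe) / (1 - pe)
    pa = [cm[i][i] / row_tot[i] for i in range(k)]  # producer's accuracy = 1 - omission error
    ua = [cm[j][j] / col_tot[j] for j in range(k)]  # user's accuracy = 1 - commission error
    return oa, kappa, pa, ua
```

For a two-class matrix `[[50, 10], [5, 35]]`, this gives OA = 0.85 and kappa = 0.34/0.49 ≈ 0.69.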

Author Contributions

Conceptualization, M.A. and M.K.; data curation, M.A., M.K., A.M. (Armin Moghimi), A.G., A.D., T.F., and P.R.; formal analysis, M.A., M.K., A.M. (Armin Moghimi), A.G., and S.M.; funding acquisition, M.A.; investigation, M.A., M.K., A.G., and T.F.; methodology, M.A. and M.K.; project administration, M.A.; resources, M.A., M.K., A.M. (Armin Moghimi), A.G., A.D., T.F., P.R., and B.B.; software, M.A. and M.K.; supervision, M.A.; validation, M.A., M.K., A.M. (Armin Moghimi), A.G., S.M. and T.F.; visualization, M.A., M.K., and A.G.; writing—original draft, M.A., M.K., A.G., B.R., and S.M.; writing—review and editing, M.A., M.K., A.M. (Armin Moghimi), A.G., B.R., S.M., A.D., T.F., P.R., B.B., and A.M. (Ali Mohammadzadeh). All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Acknowledgments

The authors thank AAFC for providing valuable field data.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Shelestov, A.; Lavreniuk, M.; Kussul, N.; Novikov, A.; Skakun, S. Exploring Google Earth Engine Platform for Big Data Processing: Classification of Multi-Temporal Satellite Imagery for Crop Mapping. Front. Earth Sci. 2017, 5.

- McNairn, H.; Brisco, B. The application of C-band polarimetric SAR for agriculture: A review. Can. J. Remote Sens. 2004, 30, 525–542.

- Amani, M.; Mahdavi, S.; Afshar, M.; Brisco, B.; Huang, W.; Mohammad Javad Mirzadeh, S.; White, L.; Banks, S.; Montgomery, J.; Hopkinson, C. Canadian Wetland Inventory using Google Earth Engine: The First Map and Preliminary Results. Remote Sens. 2019, 11, 842.

- Gorelick, N.; Hancher, M.; Dixon, M.; Ilyushchenko, S.; Thau, D.; Moore, R. Google Earth Engine: Planetary-scale geospatial analysis for everyone. Remote Sens. Environ. 2017, 202, 18–27.

- Amani, M.; Brisco, B.; Afshar, M.; Mirmazloumi, S.M.; Mahdavi, S.; Mirzadeh, S.M.J.; Huang, W.; Granger, J. A generalized supervised classification scheme to produce provincial wetland inventory maps: An application of Google Earth Engine for big geo data processing. Big Earth Data 2019, 3, 378–394.

- Amani, M.; Ghorbanian, A.; Ahmadi, S.A.; Kakooei, M.; Moghimi, A.; Mirmazloumi, S.M.; Alizadeh Moghaddam, S.H.; Mahdavi, S.; Ghahremanloo, M.; Parsian, S.; et al. Google Earth Engine Cloud Computing Platform for Remote Sensing Big Data Applications: A Comprehensive Review. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2020, 13.

- Dong, T.; Liu, J.; Shang, J.; Qian, B.; Huffman, T.; Zhang, Y.; Champagne, C.; Daneshfar, B. Assessing the Impact of Climate Variability on Cropland Productivity in the Canadian Prairies Using Time Series MODIS FAPAR. Remote Sens. 2016, 8, 281.

- Xiong, J.; Thenkabail, P.S.; Gumma, M.K.; Teluguntla, P.; Poehnelt, J.; Congalton, R.G.; Yadav, K.; Thau, D. Automated cropland mapping of continental Africa using Google Earth Engine cloud computing. ISPRS J. Photogramm. Remote Sens. 2017, 126, 225–244.

- Massey, R.; Sankey, T.T.; Yadav, K.; Congalton, R.G.; Tilton, J.C. Integrating cloud-based workflows in continental-scale cropland extent classification. Remote Sens. Environ. 2018, 219, 162–179.

- Xie, Y.; Lark, T.J.; Brown, J.F.; Gibbs, H.K. Mapping irrigated cropland extent across the conterminous United States at 30 m resolution using a semi-automatic training approach on Google Earth Engine. ISPRS J. Photogramm. Remote Sens. 2019, 155, 136–149.

- Deschamps, B.; McNairn, H.; Shang, J.; Jiao, X. Towards operational radar-only crop type classification: Comparison of a traditional decision tree with a random forest classifier. Can. J. Remote Sens. 2012, 38, 60–68.

- Jiao, X.; Kovacs, J.M.; Shang, J.; McNairn, H.; Walters, D.; Ma, B.; Geng, X. Object-oriented crop mapping and monitoring using multi-temporal polarimetric RADARSAT-2 data. ISPRS J. Photogramm. Remote Sens. 2014, 96, 38–46.

- Liao, C.; Wang, J.; Huang, X.; Shang, J. Contribution of Minimum Noise Fraction Transformation of Multi-temporal RADARSAT-2 Polarimetric SAR Data to Cropland Classification. Can. J. Remote Sens. 2018, 44, 215–231.

- Davidson, M.A.; Fisette, T.; McNairn, H.; Daneshfar, B. Detailed crop mapping using remote sensing data (Crop Data Layers). In Handbook on Remote Sensing for Agricultural Statistics; FAO: Rome, Italy, 2017; pp. 91–130.

- McNairn, H.; Champagne, C.; Shang, J.; Holmstrom, D.; Reichert, G. Integration of optical and Synthetic Aperture Radar (SAR) imagery for delivering operational annual crop inventories. ISPRS J. Photogramm. Remote Sens. 2009, 64, 434–449.

- Ban, Y. Synergy of multitemporal ERS-1 SAR and Landsat TM data for classification of agricultural crops. Can. J. Remote Sens. 2003, 29, 518–526.

- Veloso, A.; Mermoz, S.; Bouvet, A.; Le Toan, T.; Planells, M.; Dejoux, J.-F.; Ceschia, E. Understanding the temporal behavior of crops using Sentinel-1 and Sentinel-2-like data for agricultural applications. Remote Sens. Environ. 2017, 199, 415–426.

- Van Tricht, K.; Gobin, A.; Gilliams, S.; Piccard, I. Synergistic Use of Radar Sentinel-1 and Optical Sentinel-2 Imagery for Crop Mapping: A Case Study for Belgium. Remote Sens. 2018, 10, 1642.

- Agriculture and Agri-Food Canada. ISO 19131 Annual Crop Inventory–Data Product Specifications; Agriculture and Agri-Food Canada: Ottawa, ON, Canada, 2018.

- European Space Agency. Sentinel-1 Observation Scenario: Planned Acquisitions. Available online: https://sentinel.esa.int/web/sentinel/missions/sentinel-1/observation-scenario (accessed on 15 March 2020).

- Sentinel-1 Toolbox. Available online: https://sentinel.esa.int/web/sentinel/toolboxes/sentinel-1 (accessed on 10 March 2020).

- Sentinel-1 Algorithms. Available online: https://developers.google.com/earth-engine/sentinel1 (accessed on 20 March 2020).

- Kakooei, M.; Nascetti, A.; Ban, Y. Sentinel-1 Global Coverage Foreshortening Mask Extraction: An Open Source Implementation Based on Google Earth Engine. In Proceedings of the IGARSS 2018–2018 IEEE International Geoscience and Remote Sensing Symposium, Valencia, Spain, 22–27 July 2018; pp. 6836–6839.

- Sentinel-2 User Handbook. Available online: https://sentinel.esa.int/documents/247904/685211/Sentinel-2_User_Handbook (accessed on 5 April 2020).

- Friedl, M.; Sulla-Menashe, D. MCD12Q1 MODIS/Terra+Aqua Land Cover Type Yearly L3 Global 500 m SIN Grid V006. 2019, distributed by NASA EOSDIS Land Processes DAAC. Available online: https://lpdaac.usgs.gov/products/mcd12q1v006/ (accessed on 20 March 2020).

- Ghorbanian, A.; Mohammadzadeh, A. An unsupervised feature extraction method based on band correlation clustering for hyperspectral image classification using limited training samples. Remote Sens. Lett. 2018.

- Anchang, J.Y.; Prihodko, L.; Ji, W.; Kumar, S.S.; Ross, C.W.; Yu, Q.; Lind, B.; Sarr, M.A.; Diouf, A.A.; Hanan, N.P. Toward Operational Mapping of Woody Canopy Cover in Tropical Savannas Using Google Earth Engine. Front. Environ. Sci. 2020, 8, 4.

- Wang, L.; Dong, Q.; Yang, L.; Gao, J.; Liu, J. Crop classification based on a novel feature filtering and enhancement method. Remote Sens. 2019, 11, 455.

- Ashourloo, D.; Shahrabi, H.S.; Azadbakht, M.; Aghighi, H.; Nematollahi, H.; Alimohammadi, A.; Matkan, A.A. Automatic canola mapping using time series of sentinel 2 images. ISPRS J. Photogramm. Remote Sens. 2019, 156, 63–76.

- Dimitrov, P.; Dong, Q.; Eerens, H.; Gikov, A.; Filchev, L.; Roumenina, E.; Jelev, G. Sub-Pixel Crop Type Classification Using PROBA-V 100 m NDVI Time Series and Reference Data from Sentinel-2 Classifications. Remote Sens. 2019, 11, 1370.

- Gao, B.-C. NDWI—A normalized difference water index for remote sensing of vegetation liquid water from space. Remote Sens. Environ. 1996, 58, 257–266.

- Sitokonstantinou, V.; Papoutsis, I.; Kontoes, C.; Lafarga Arnal, A.; Armesto Andrés, A.P.; Garraza Zurbano, J.A. Scalable parcel-based crop identification scheme using sentinel-2 data time-series for the monitoring of the common agricultural policy. Remote Sens. 2018, 10, 911.

- Sun, C.; Bian, Y.; Zhou, T.; Pan, J. Using of multi-source and multi-temporal remote sensing data improves crop-type mapping in the subtropical agriculture region. Sensors 2019, 19, 2401.

- Champagne, C.; Shang, J.; McNairn, H.; Fisette, T. Exploiting spectral variation from crop phenology for agricultural land-use classification. In Proceedings of SPIE, Remote Sensing and Modeling of Ecosystems for Sustainability II, San Diego, CA, USA, 31 July–4 August 2005; SPIE: Bellingham, WA, USA, 2005; Volume 5884, p. 588405.

- Ghorbanian, A.; Kakooei, M.; Amani, M.; Mahdavi, S.; Mohammadzadeh, A.; Hasanlou, M. Improved land cover map of Iran using Sentinel imagery within Google Earth Engine and a novel automatic workflow for land cover classification using migrated training samples. ISPRS J. Photogramm. Remote Sens. 2020, 167, 276–288.

- Li, Q.; Wang, C.; Zhang, B.; Lu, L. Object-based crop classification with Landsat-MODIS enhanced time-series data. Remote Sens. 2015, 7, 16091–16107.

- Belgiu, M.; Csillik, O. Sentinel-2 cropland mapping using pixel-based and object-based time-weighted dynamic time warping analysis. Remote Sens. Environ. 2018, 204, 509–523.

- Achanta, R.; Susstrunk, S. Superpixels and polygons using simple non-iterative clustering. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 4651–4660.

- Google Earth Engine API. Available online: https://developers.google.com/earth-engine/api_docs (accessed on 8 March 2020).

- Murthy, C.S.; Raju, P.V.; Badrinath, K.V.S. Classification of wheat crop with multi-temporal images: Performance of maximum likelihood and artificial neural networks. Int. J. Remote Sens. 2003, 24, 4871–4890.

- Kumar, P.; Prasad, R.; Mishra, V.N.; Gupta, D.K.; Singh, S.K. Artificial neural network for crop classification using C-band RISAT-1 satellite datasets. Russ. Agric. Sci. 2016, 42, 281–284.

- Seydi, S.T.; Hasanlou, M.; Amani, M. A New End-to-End Multi-Dimensional CNN Framework for Land Cover/Land Use Change Detection in Multi-Source Remote Sensing Datasets. Remote Sens. 2020, 12, 2010.

- Erinjery, J.J.; Singh, M.; Kent, R. Mapping and assessment of vegetation types in the tropical rainforests of the Western Ghats using multispectral Sentinel-2 and SAR Sentinel-1 satellite imagery. Remote Sens. Environ. 2018, 216, 345–354.

- Møller, M.F. A scaled conjugate gradient algorithm for fast supervised learning. Neural Netw. 1993, 6, 525–533.

- Chen, C.; Ma, Y.; Ren, G. Hyperspectral Classification Using Deep Belief Networks Based on Conjugate Gradient Update and Pixel-Centric Spectral Block Features. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2020, 13, 4060–4069.

- Du, Y.-C.; Stephanus, A. Levenberg-Marquardt neural network algorithm for degree of arteriovenous fistula stenosis classification using a dual optical photoplethysmography sensor. Sensors 2018, 18, 2322.

- Maxwell, A.E.; Warner, T.A.; Fang, F. Implementation of machine-learning classification in remote sensing: An applied review. Int. J. Remote Sens. 2018, 39, 2784–2817.

- Naboureh, A.; Li, A.; Bian, J.; Lei, G.; Amani, M. A Hybrid Data Balancing Method for Classification of Imbalanced Training Data within Google Earth Engine: Case Studies from Mountainous Regions. Remote Sens. 2020, 12, 3301.

- Moghimi, A.; Mohammadzadeh, A.; Celik, T.; Amani, M. A Novel Radiometric Control Set Sample Selection Strategy for Relative Radiometric Normalization of Multitemporal Satellite Images. IEEE Trans. Geosci. Remote Sens. 2020, 1–17.

- Amani, M.; Mahdavi, S.; Berard, O. Supervised wetland classification using high spatial resolution optical, SAR, and LiDAR imagery. J. Appl. Remote Sens. 2020, 14, 1.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).