Leveraging Very-High Spatial Resolution Hyperspectral and Thermal UAV Imageries for Characterizing Diurnal Indicators of Grapevine Physiology

Abstract

1. Introduction

2. Materials and Methods

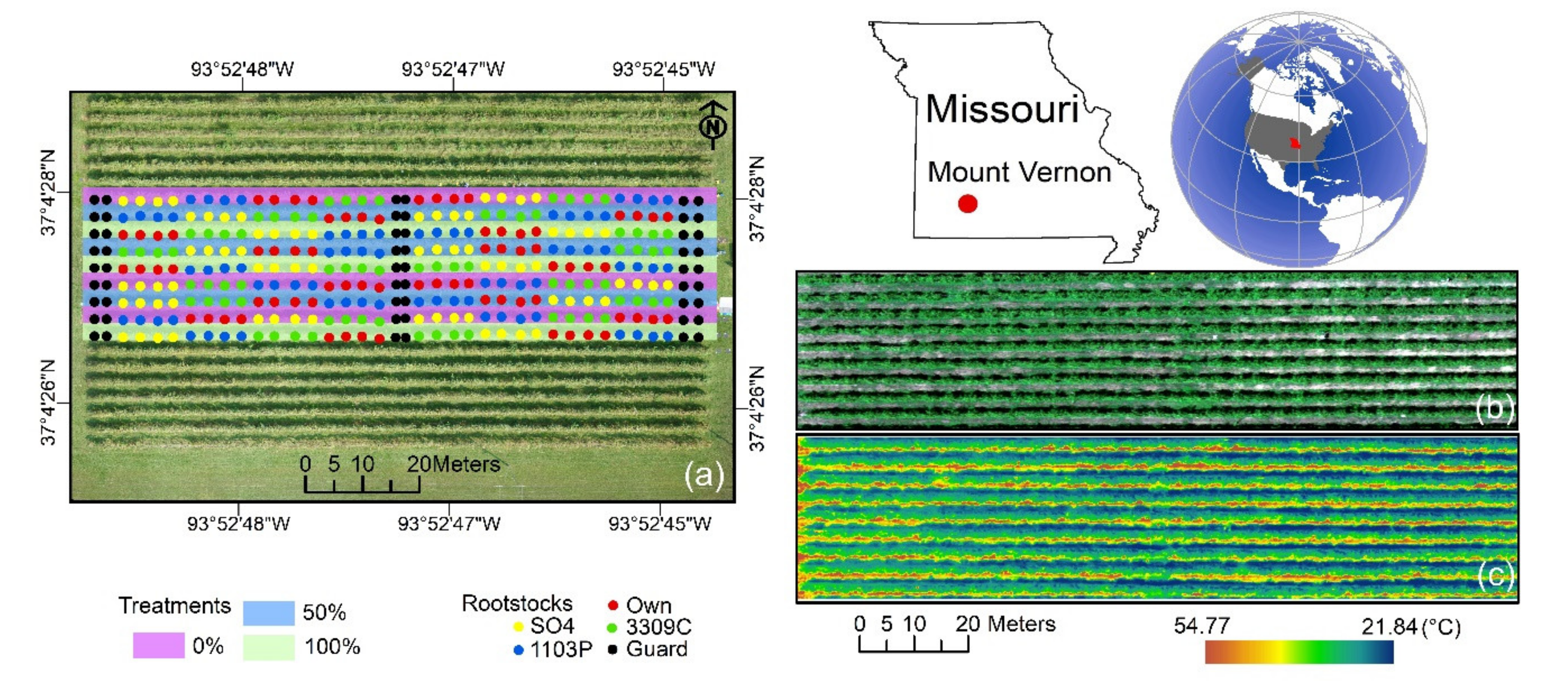

2.1. Experimental Site Description and Meteorological Measurements

2.2. Diurnal Physiological Measurements

2.3. Aerial Image Acquisition and Pre-Processing

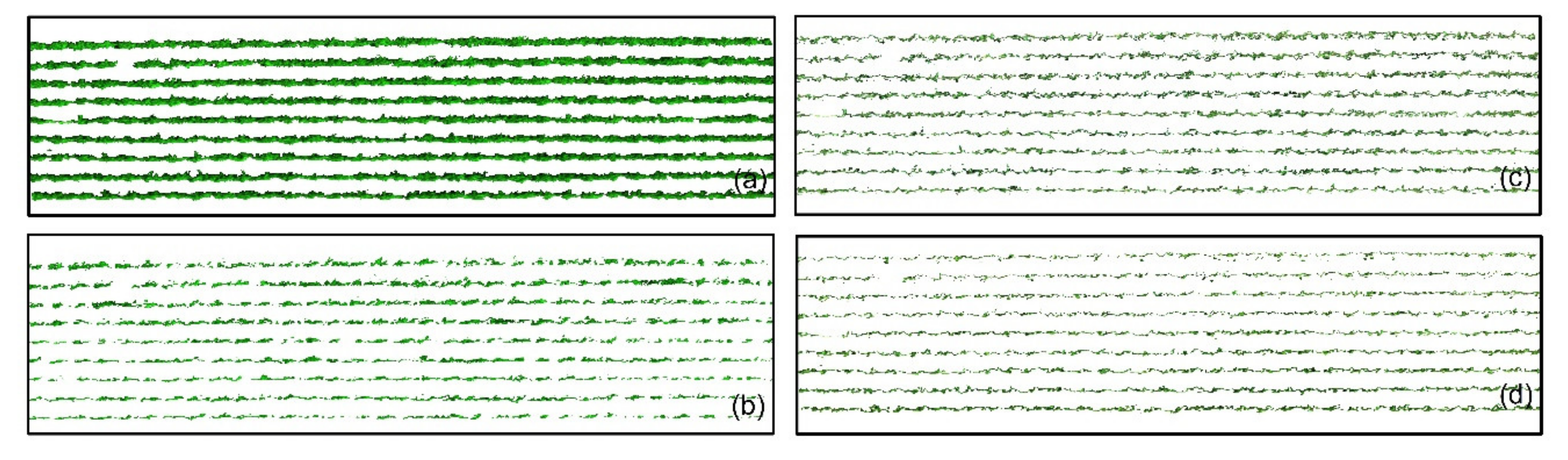

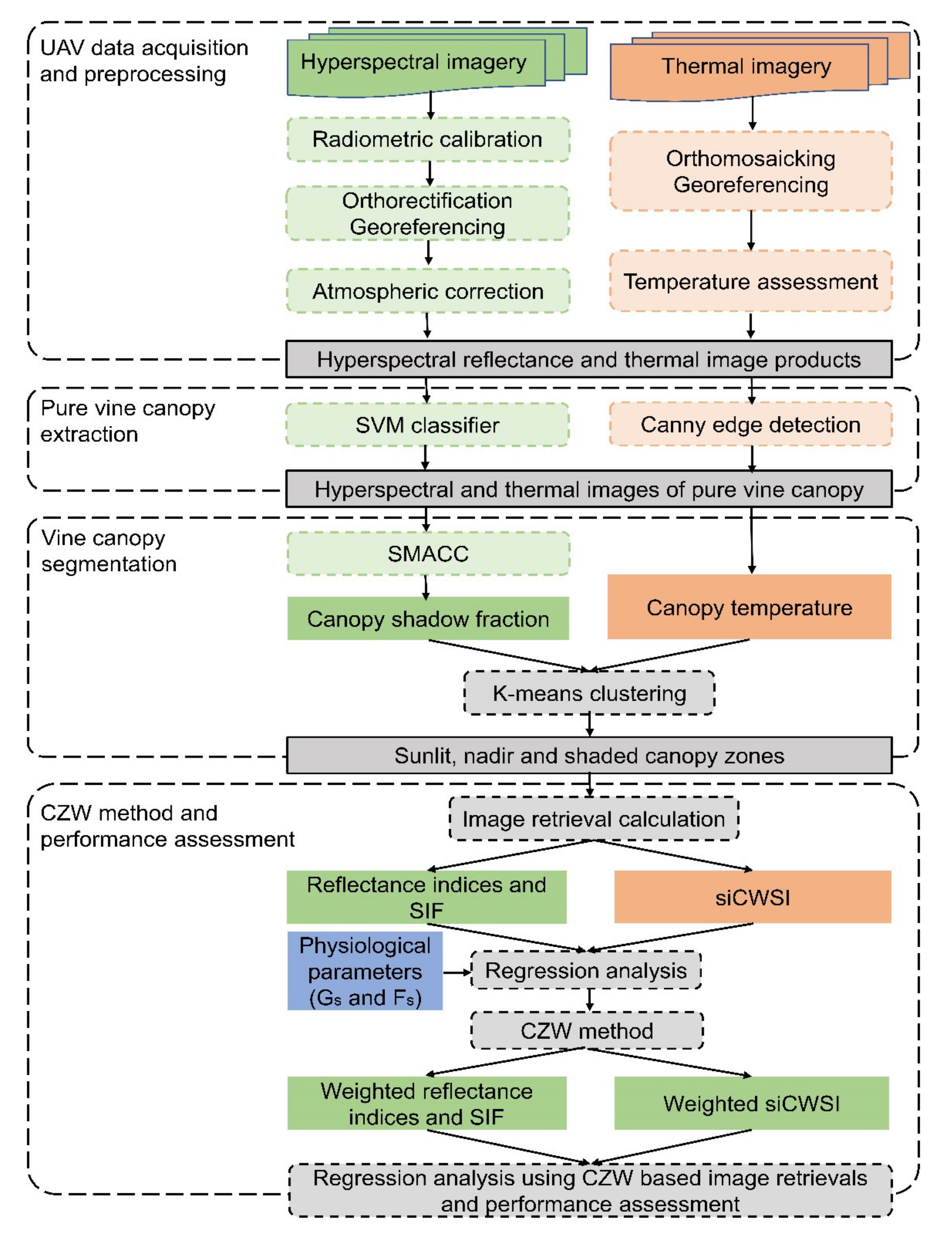

2.4. Extraction of Grapevine Canopy Row

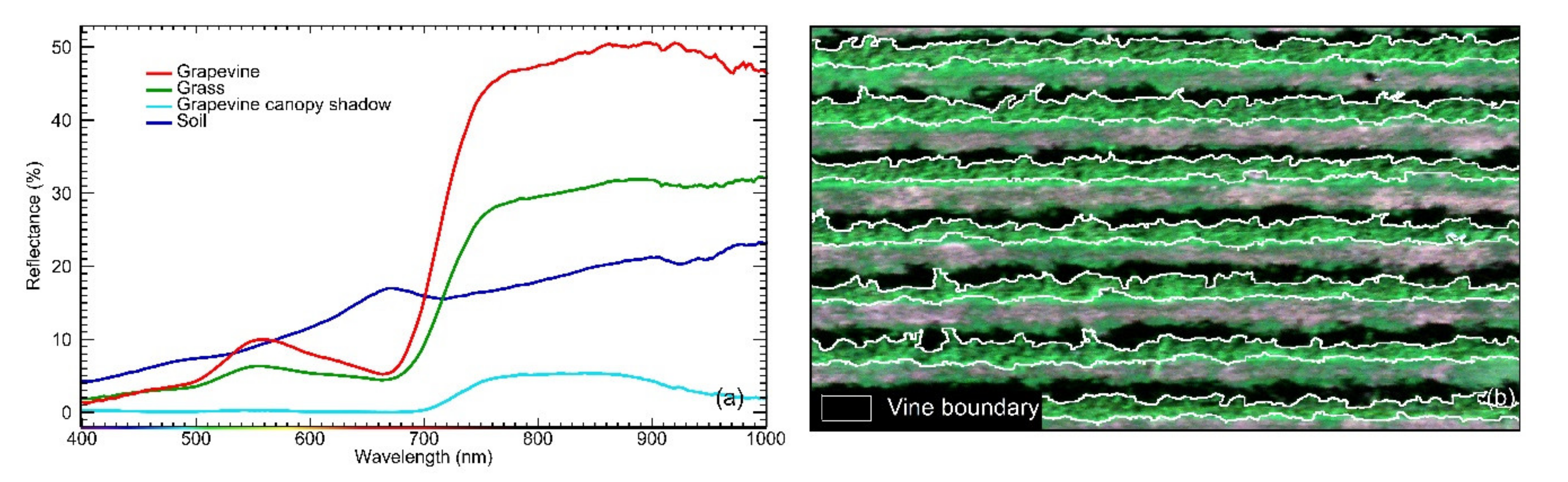

2.4.1. Canopy Row Extraction from Hyperspectral Images

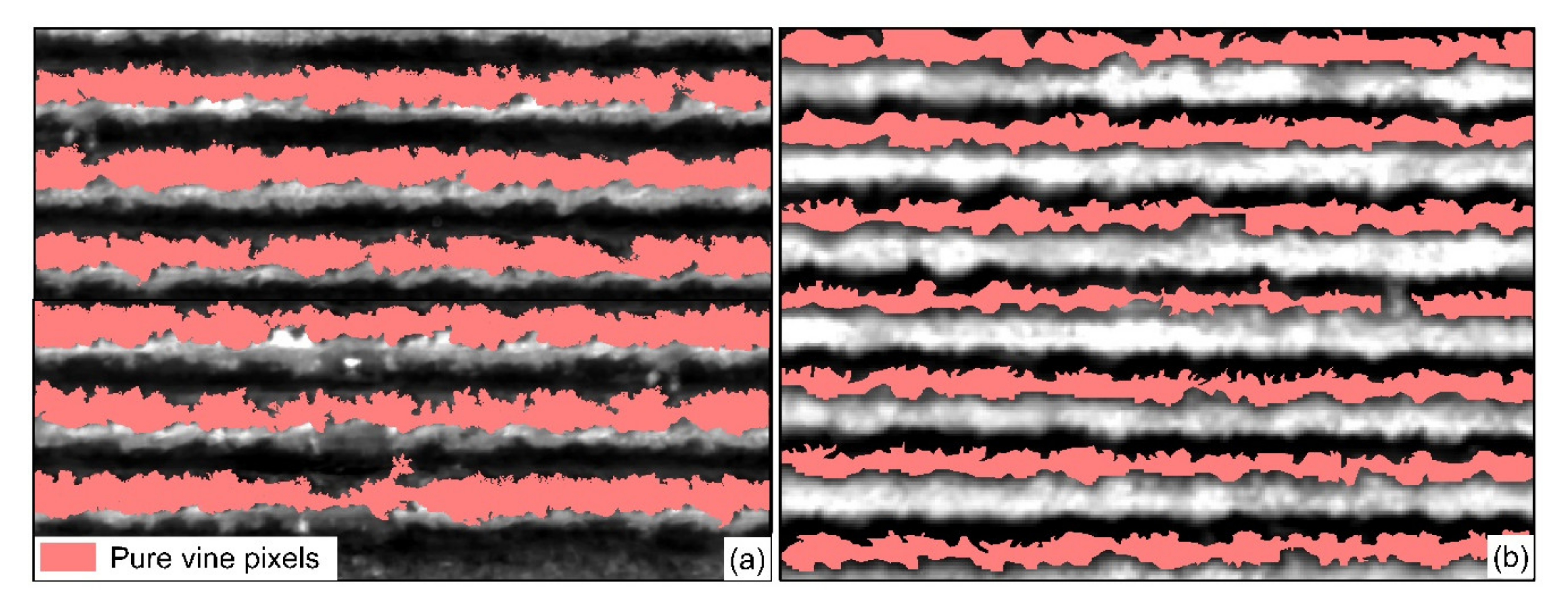

2.4.2. Canopy Row Extraction from Thermal Images

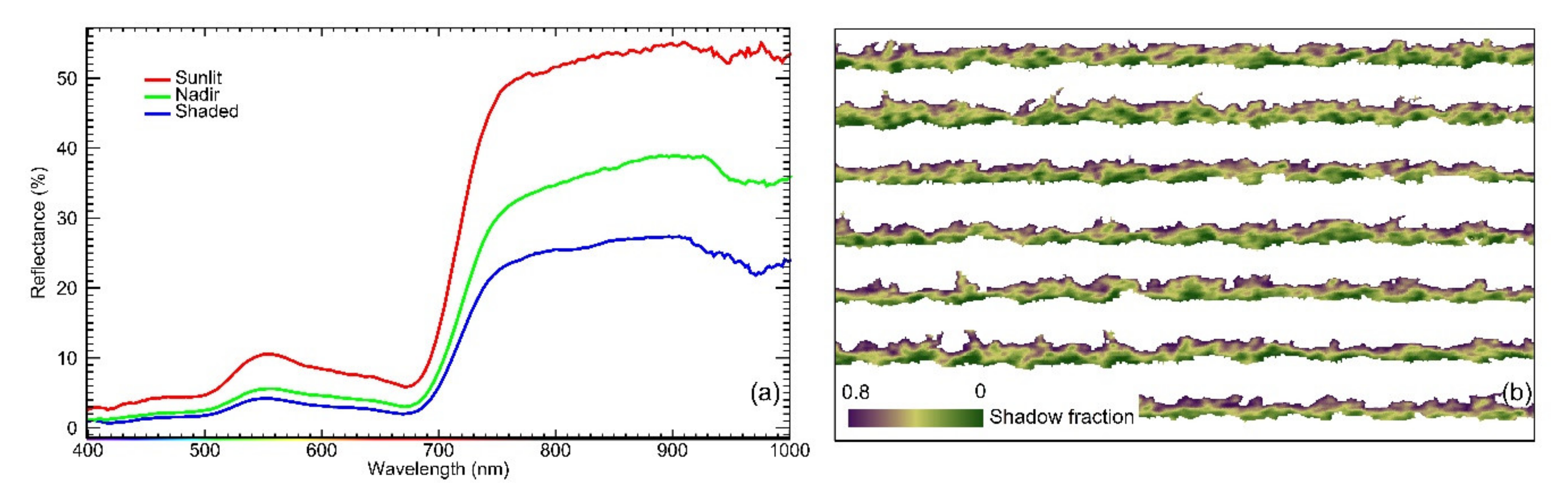

2.5. Estimation of Pixelwise Canopy Shadow Fraction

2.6. Grapevine Canopy Segmentation

2.7. Calculation of Spectral Indices and Features from Aerial Images

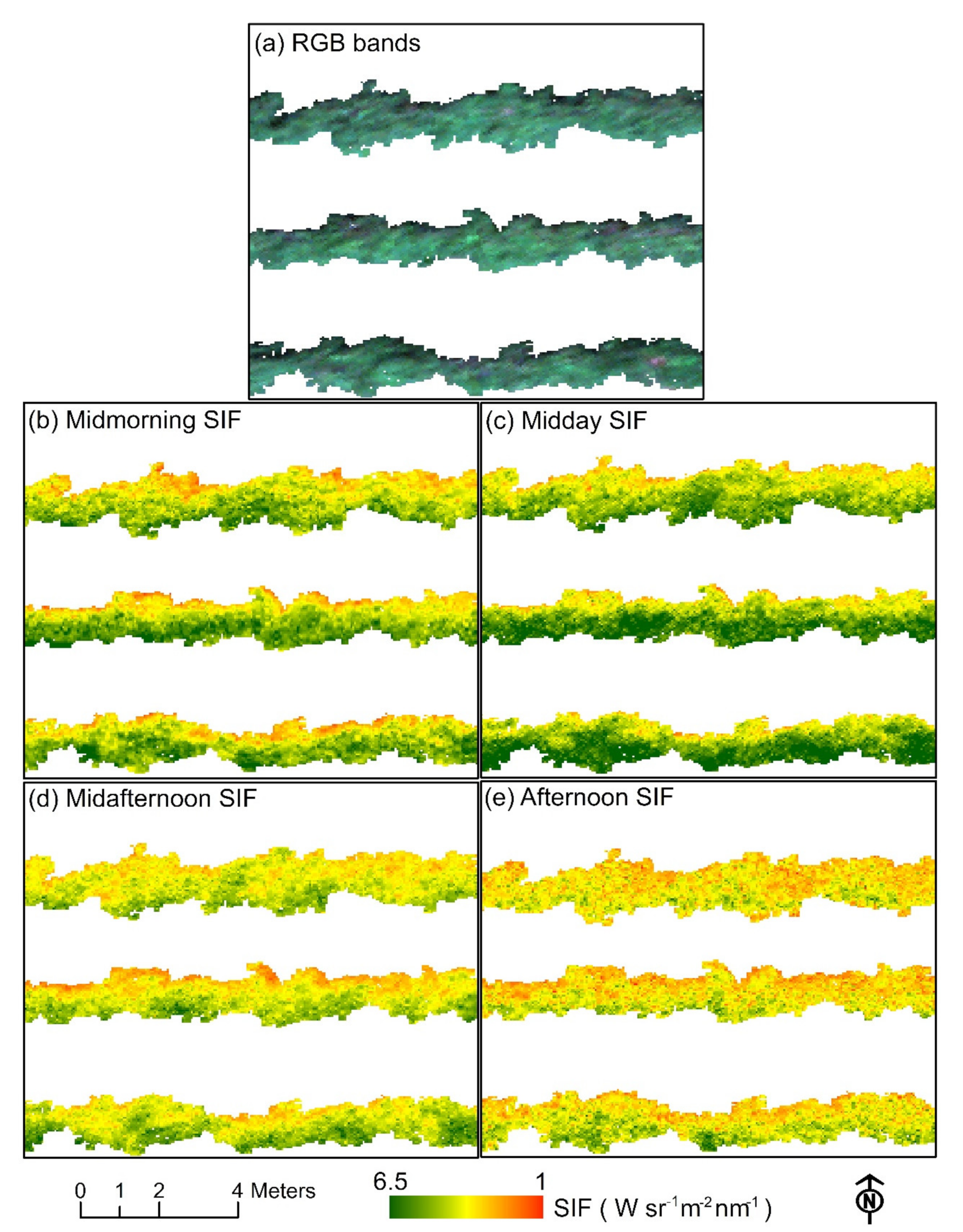

2.7.1. Hyperspectral Indices and SIF Retrieval

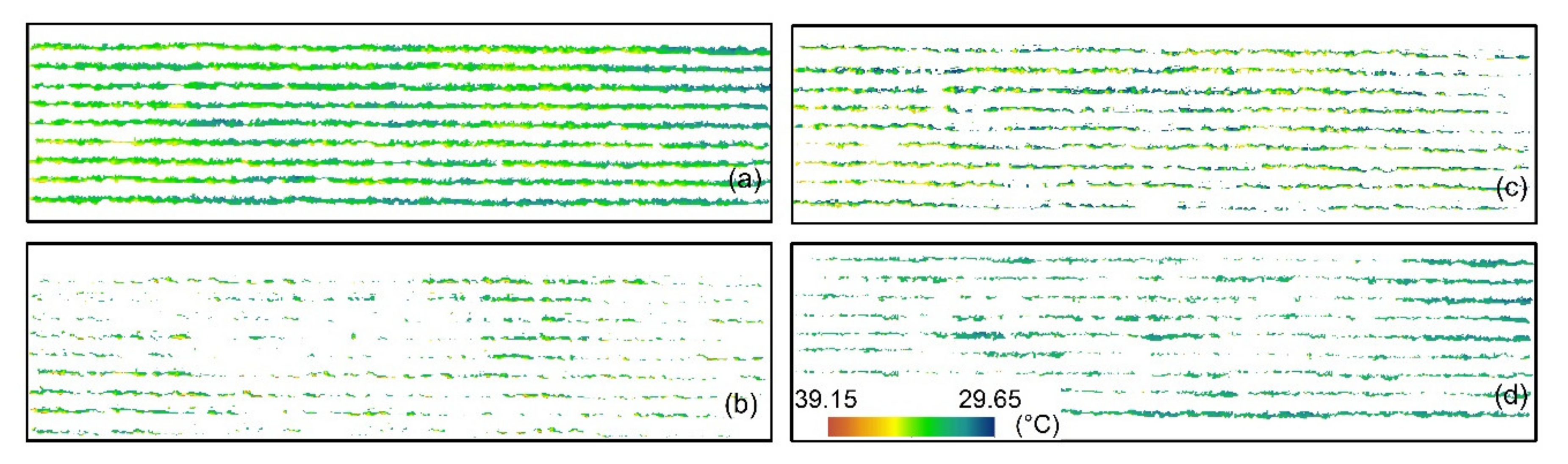

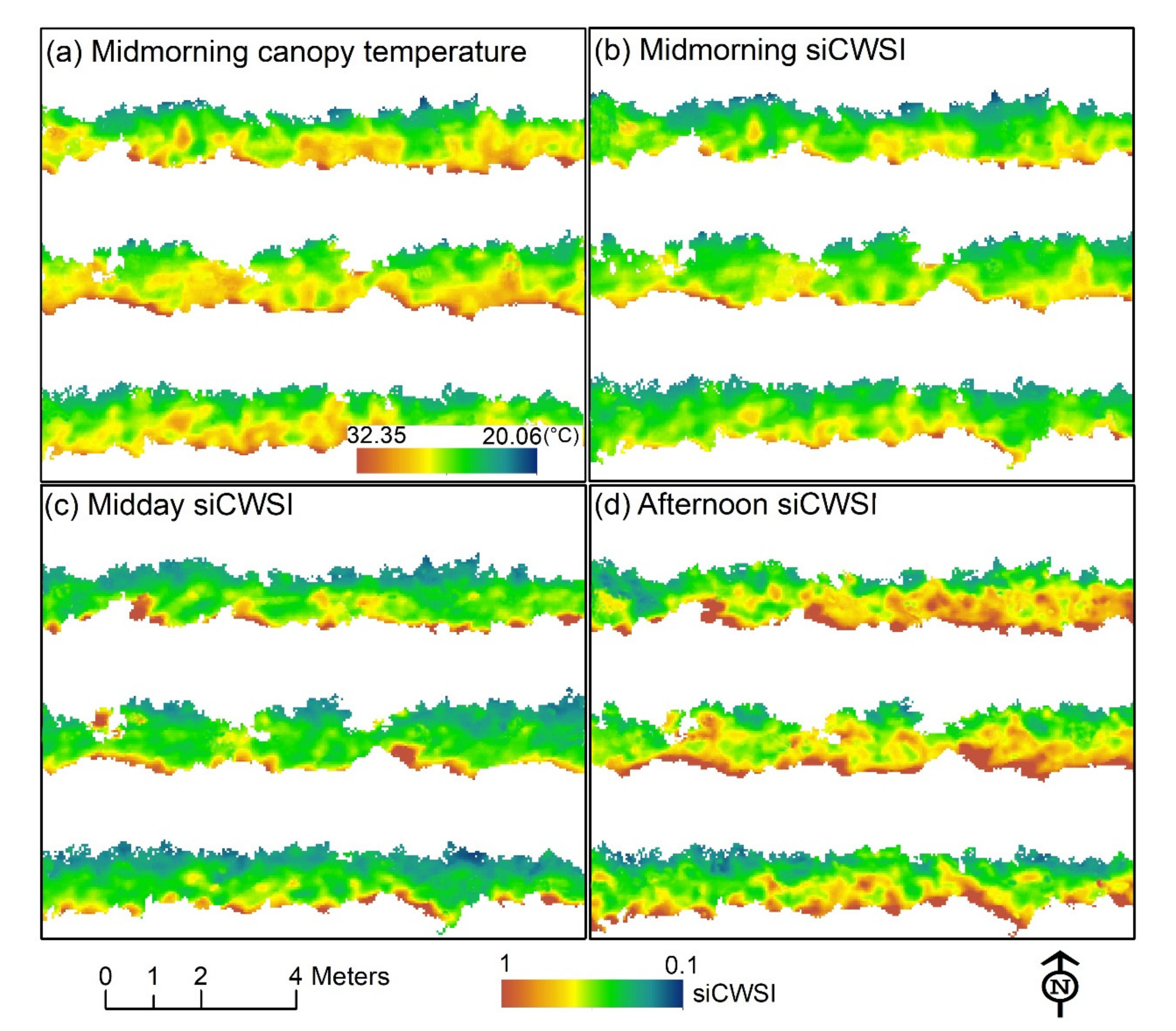

2.7.2. Simplified Canopy Water Stress Index (siCWSI)

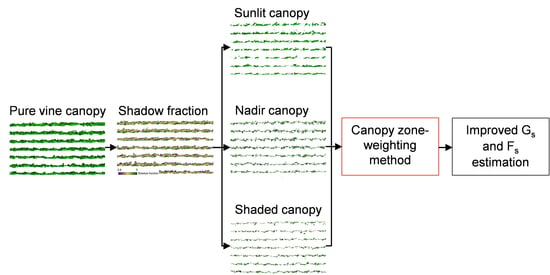

2.8. Proposed Canopy Zone-Weighting (CZW) Method

3. Results

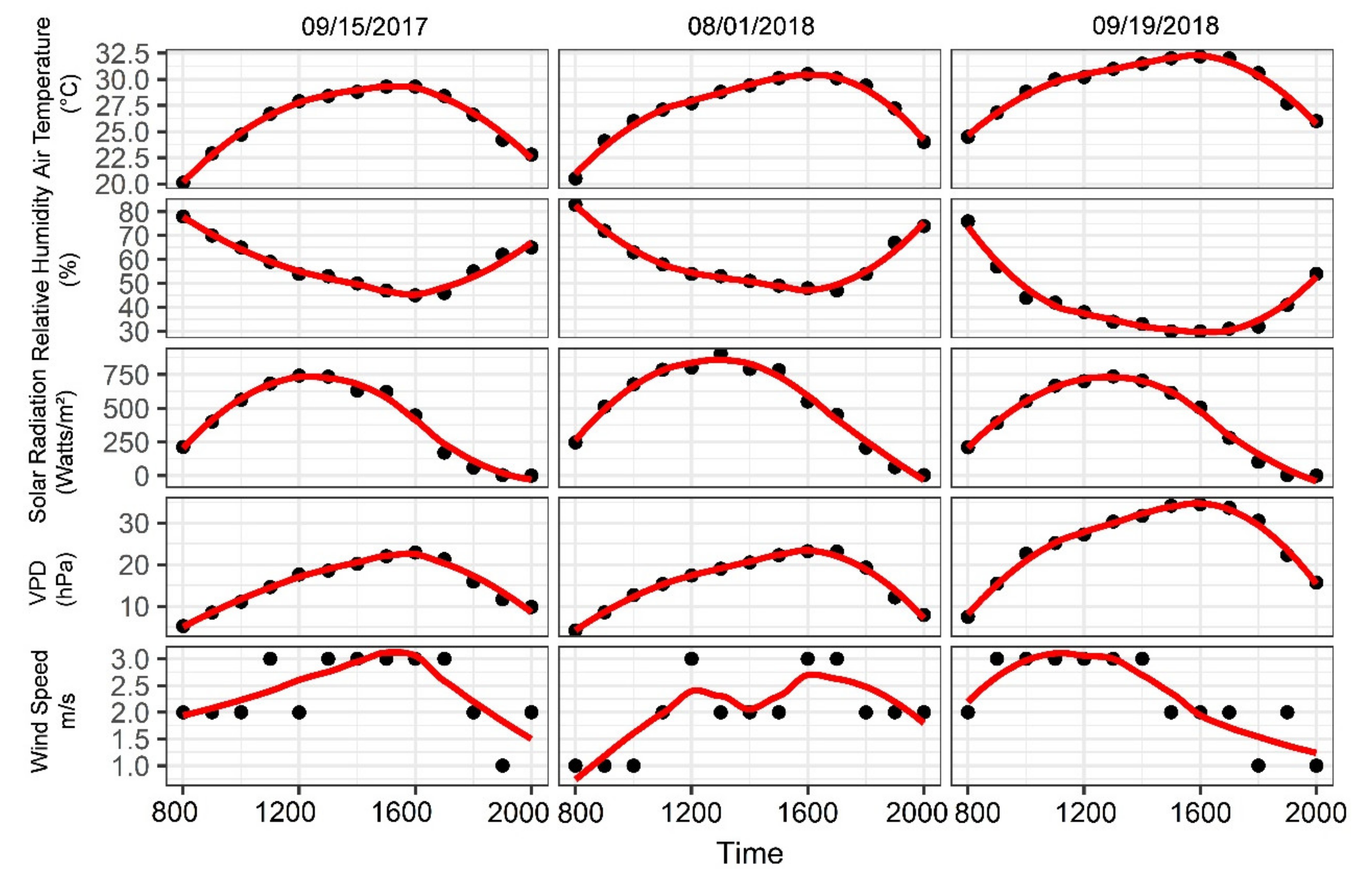

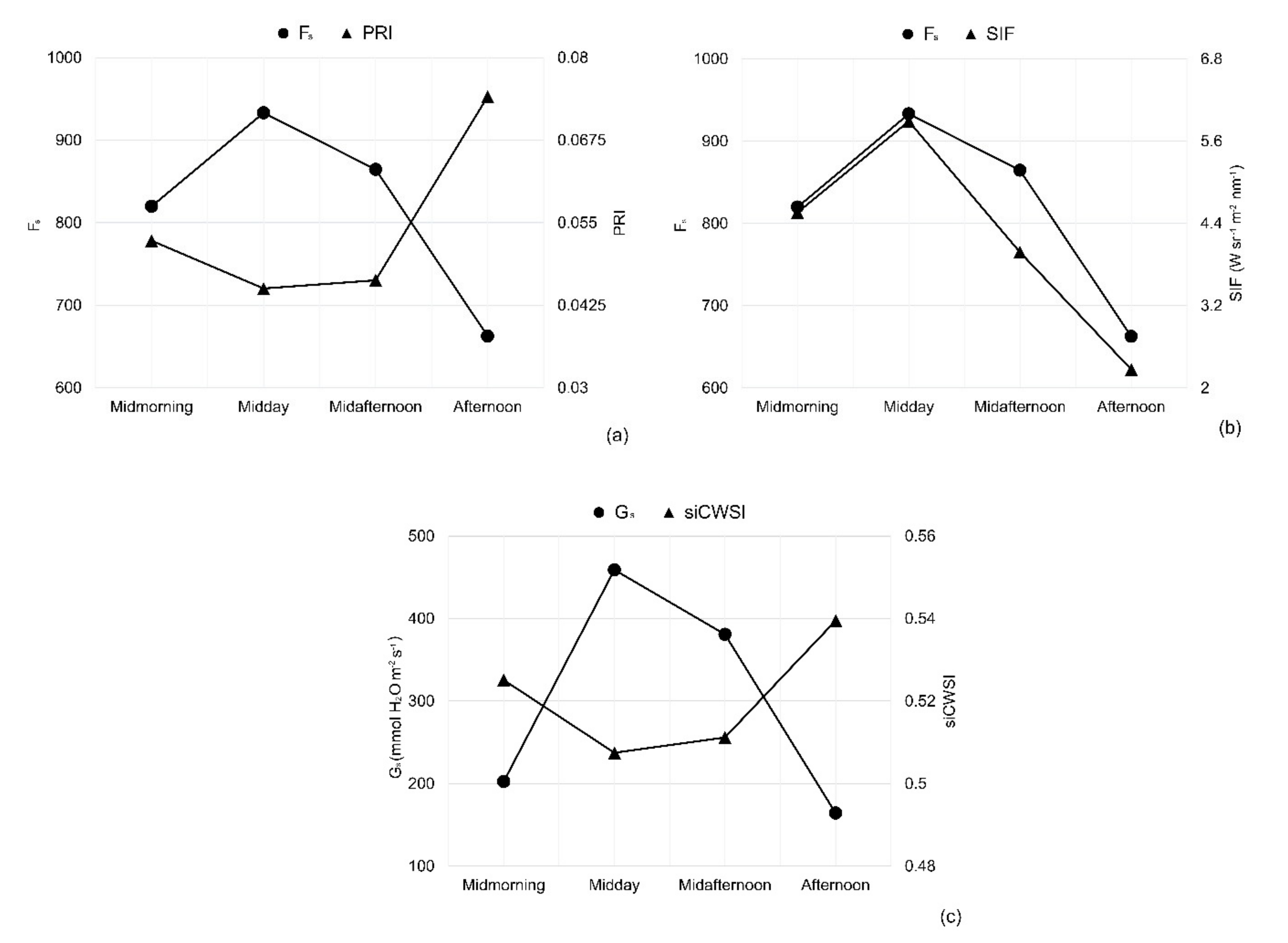

3.1. Environmental Conditions and Diurnal Physiological Indicators of Grapevine

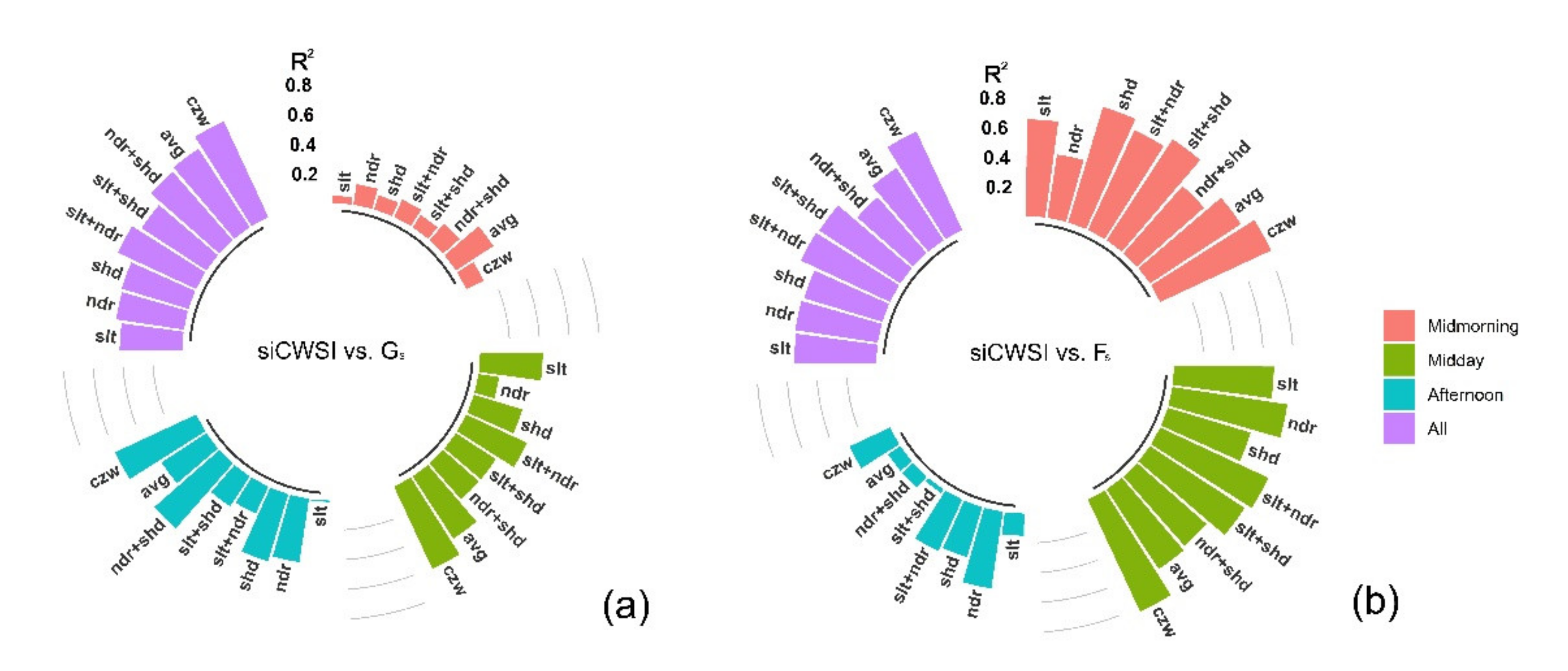

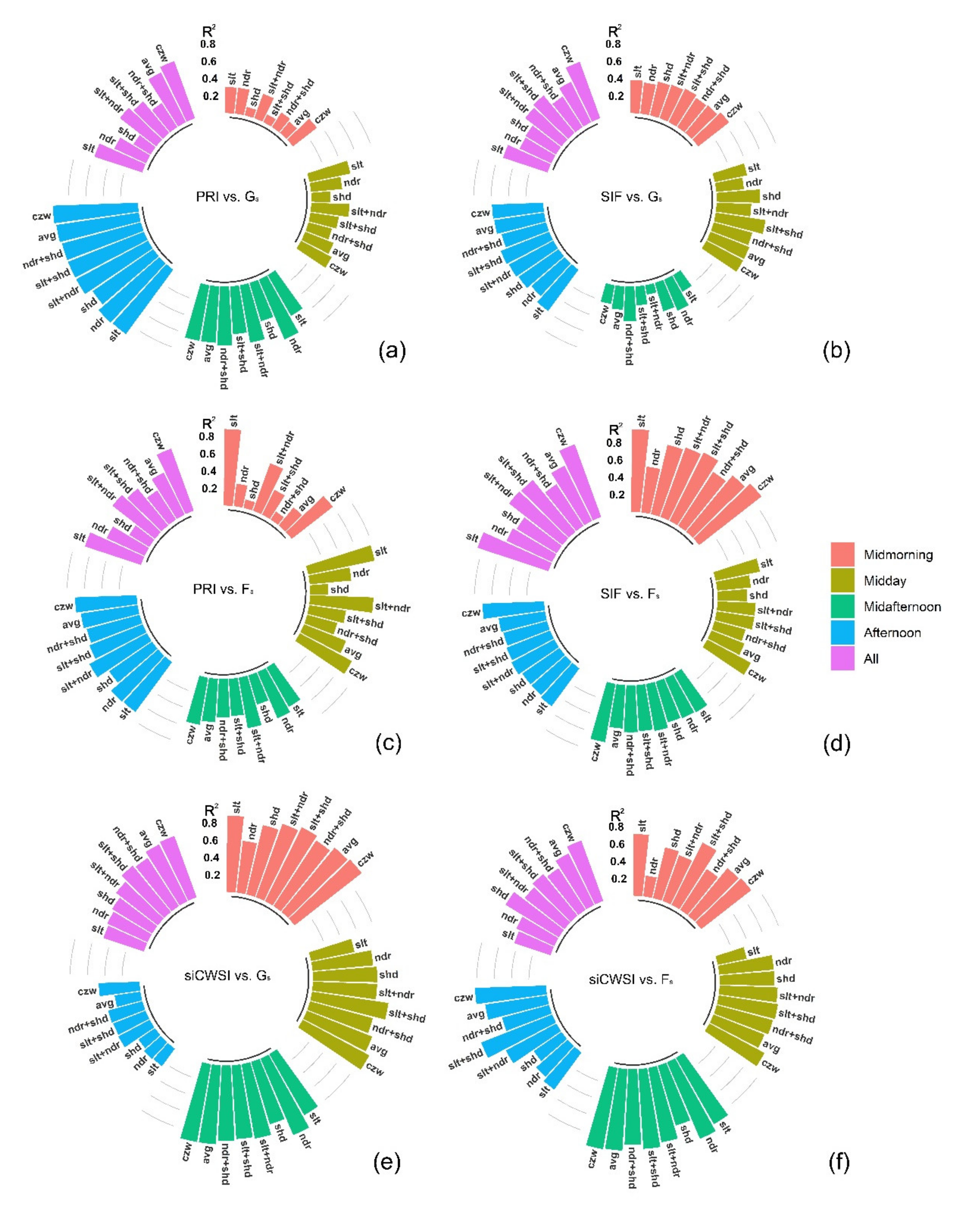

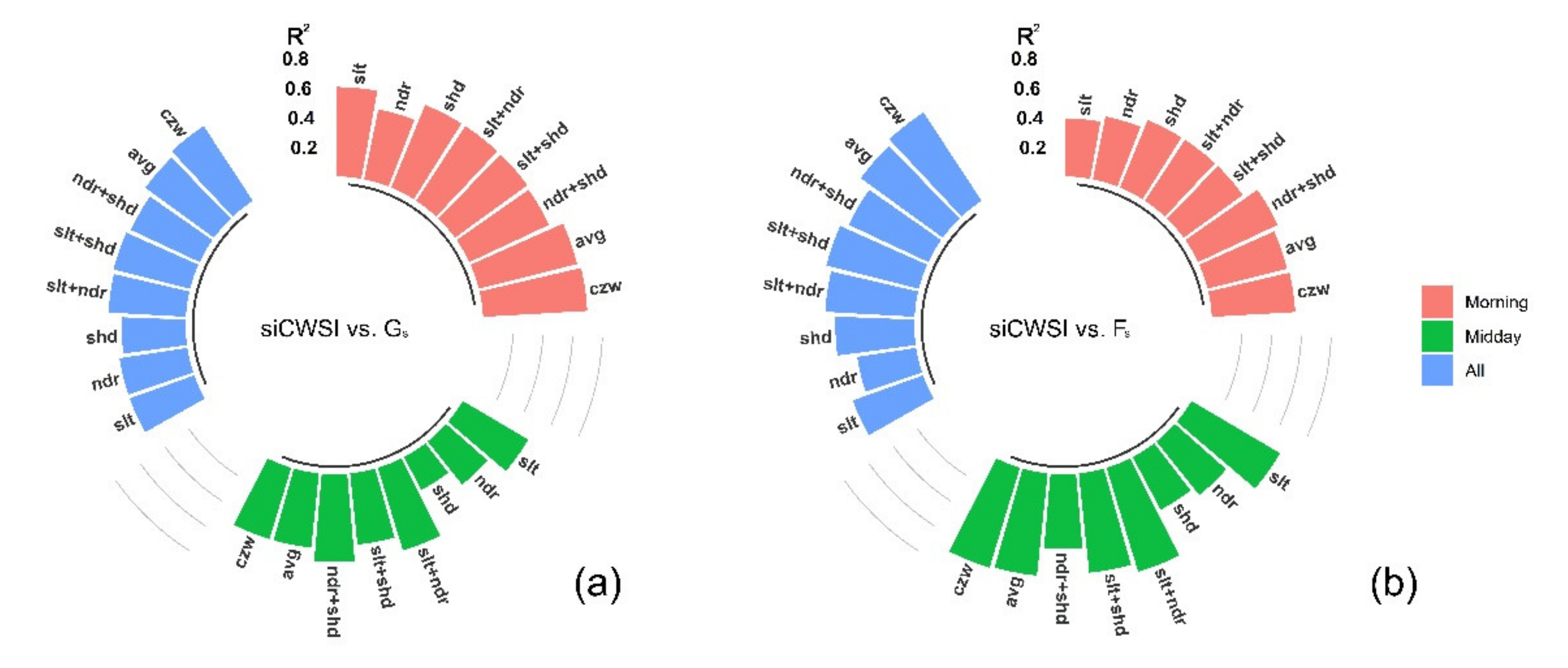

3.2. Relationship between the Aerial Image Retrievals and Grapevine Physiology

3.3. Diurnal Changes in Aerial Image Retrievals and Tracking Physiological Indicators

4. Discussion

4.1. Contribution of Different Canopy Zones to Grapevine Physiology: Hyperspectral Image Retrievals

4.2. Contribution of Different Canopy Zones to Grapevine Physiology: Thermal Image Retrieval

4.3. Canopy Zone-Weighting (CZW) Method Provided the Most Robust Estimates of Correlations between Aerial Image Retrievals and Grapevine Physiology

4.4. Outlook

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

- Vivier, M.A.; Pretorius, I.S. Genetically tailored grapevines for the wine industry. Trends Biotechnol. 2002, 20, 472–478. [Google Scholar] [CrossRef]

- Intrigliolo, D.S.; Castel, J.R. Interactive effects of deficit irrigation and shoot and cluster thinning on grapevine cv. Tempranillo. Water relations, vine performance and berry and wine composition. Irrig. Sci. 2011, 29, 443–454. [Google Scholar] [CrossRef]

- Intrigliolo, D.S.; Pérez, D.; Risco, D.; Yeves, A.; Castel, J.R. Yield components and grape composition responses to seasonal water deficits in Tempranillo grapevines. Irrig. Sci. 2012, 30, 339–349. [Google Scholar] [CrossRef]

- Mirás-Avalos, J.M.; Buesa, I.; Llacer, E.; Jiménez-Bello, M.A.; Risco, D.; Castel, J.R.; Intrigliolo, D.S. Water versus source–sink relationships in a semiarid Tempranillo vineyard: Vine performance and fruit composition. Am. J. Enol. Vitic. 2017, 68, 11–22. [Google Scholar] [CrossRef]

- Chaves, M.M.; Zarrouk, O.; Francisco, R.; Costa, J.M.; Santos, T.; Regalado, A.P.; Rodrigues, M.L.; Lopes, C.M. Grapevine under deficit irrigation: Hints from physiological and molecular data. Ann. Bot. 2010, 105, 661–676. [Google Scholar] [CrossRef]

- Chaves, M.M.; Santos, T.P.; Souza, C.D.; Ortuño, M.; Rodrigues, M.; Lopes, C.; Maroco, J.; Pereira, J.S. Deficit irrigation in grapevine improves water-use efficiency while controlling vigour and production quality. Ann. Appl. Biol. 2007, 150, 237–252. [Google Scholar] [CrossRef]

- Reynolds, A.G.; Wardle, D.A.; Naylor, A.P. Impact of training system, vine spacing, and basal leaf removal on Riesling. Vine performance, berry composition, canopy microclimate, and vineyard labor requirements. Am. J. Enol. Vitic. 1996, 47, 63–76. [Google Scholar]

- Ferrandino, A.; Lovisolo, C. Abiotic stress effects on grapevine (Vitis vinifera L.): Focus on abscisic acid-mediated consequences on secondary metabolism and berry quality. Environ. Exp. Bot. 2014, 103, 138–147. [Google Scholar] [CrossRef]

- Cifre, J.; Bota, J.; Escalona, J.M.; Medrano, H.; Flexas, J. Physiological tools for irrigation scheduling in grapevine (Vitis vinifera L.): An open gate to improve water-use efficiency? Agric. Ecosyst. Environ. 2005, 106, 159–170. [Google Scholar] [CrossRef]

- Hall, A.; Lamb, D.; Holzapfel, B.; Louis, J. Optical remote sensing applications in viticulture—A review. Aust. J. Grape Wine Res. 2002, 8, 36–47. [Google Scholar] [CrossRef]

- Rapaport, T.; Hochberg, U.; Shoshany, M.; Karnieli, A.; Rachmilevitch, S. Combining leaf physiology, hyperspectral imaging and partial least squares-regression (PLS-R) for grapevine water status assessment. ISPRS J. Photogramm. Remote Sens. 2015, 109, 88–97. [Google Scholar] [CrossRef]

- Trought, M.C.; Bramley, R.G. Vineyard variability in Marlborough, New Zealand: Characterising spatial and temporal changes in fruit composition and juice quality in the vineyard. Aust. J. Grape Wine Res. 2011, 17, 79–89. [Google Scholar] [CrossRef]

- Bramley, R. Understanding variability in winegrape production systems 2. Within vineyard variation in quality over several vintages. Aust. J. Grape Wine Res. 2005, 11, 33–42. [Google Scholar] [CrossRef]

- Guanter, L.; Alonso, L.; Gómez-Chova, L.; Amorós-López, J.; Vila, J.; Moreno, J. Estimation of solar-induced vegetation fluorescence from space measurements. Geophys. Res. Lett. 2007, 34. [Google Scholar] [CrossRef]

- Sepulcre-Cantó, G.; Zarco-Tejada, P.J.; Jiménez-Muñoz, J.; Sobrino, J.; Soriano, M.; Fereres, E.; Vega, V.; Pastor, M. Monitoring yield and fruit quality parameters in open-canopy tree crops under water stress. Implications for ASTER. Remote Sens. Environ. 2007, 107, 455–470. [Google Scholar] [CrossRef]

- Wendel, A.; Underwood, J. Illumination compensation in ground based hyperspectral imaging. ISPRS J. Photogramm. Remote Sens. 2017, 129, 162–178. [Google Scholar] [CrossRef]

- Hernández-Clemente, R.; North, P.R.J.; Hornero, A.; Zarco-Tejada, P.J. Assessing the effects of forest health on sun-induced chlorophyll fluorescence using the FluorFLIGHT 3-D radiative transfer model to account for forest structure. Remote Sens. Environ. 2017, 193, 165–179. [Google Scholar] [CrossRef]

- Aneece, I.; Thenkabail, P. Accuracies Achieved in Classifying Five Leading World Crop Types and their Growth Stages Using Optimal Earth Observing-1 Hyperion Hyperspectral Narrowbands on Google Earth Engine. Remote Sens. 2018, 10, 2027. [Google Scholar] [CrossRef]

- Mariotto, I.; Thenkabail, P.S.; Huete, A.; Slonecker, E.T.; Platonov, A. Hyperspectral versus multispectral crop-productivity modeling and type discrimination for the HyspIRI mission. Remote Sens. Environ. 2013, 139, 291–305. [Google Scholar] [CrossRef]

- Thenkabail, P.S.; Enclona, E.A.; Ashton, M.S.; Legg, C.; De Dieu, M.J. Hyperion, IKONOS, ALI, and ETM+ sensors in the study of African rainforests. Remote Sens. Environ. 2004, 90, 23–43. [Google Scholar] [CrossRef]

- Suárez, L.; Zarco-Tejada, P.J.; Berni, J.A.; González-Dugo, V.; Fereres, E. Modelling PRI for water stress detection using radiative transfer models. Remote Sens. Environ. 2009, 113, 730–744. [Google Scholar] [CrossRef]

- Zarco-Tejada, P.J.; González-Dugo, V.; Berni, J.A. Fluorescence, temperature and narrow-band indices acquired from a UAV platform for water stress detection using a micro-hyperspectral imager and a thermal camera. Remote Sens. Environ. 2012, 117, 322–337. [Google Scholar] [CrossRef]

- Atherton, J.; Nichol, C.J.; Porcar-Castell, A. Using spectral chlorophyll fluorescence and the photochemical reflectance index to predict physiological dynamics. Remote Sens. Environ. 2016, 176, 17–30. [Google Scholar] [CrossRef]

- Panigada, C.; Rossini, M.; Meroni, M.; Cilia, C.; Busetto, L.; Amaducci, S.; Boschetti, M.; Cogliati, S.; Picchi, V.; Pinto, F.; et al. Fluorescence, PRI and canopy temperature for water stress detection in cereal crops. Int. J. Appl. Earth Obs. Geoinf. 2014, 30, 167–178. [Google Scholar] [CrossRef]

- Gamon, J.; Penuelas, J.; Field, C. A narrow-waveband spectral index that tracks diurnal changes in photosynthetic efficiency. Remote Sens. Environ. 1992, 41, 35–44. [Google Scholar] [CrossRef]

- Krause, G.H.; Weis, E. Chlorophyll fluorescence as a tool in plant physiology. Photosynth. Res. 1984, 5, 139–157. [Google Scholar] [CrossRef] [PubMed]

- Guanter, L.; Rossini, M.; Colombo, R.; Meroni, M.; Frankenberg, C.; Lee, J.-E.; Joiner, J. Using field spectroscopy to assess the potential of statistical approaches for the retrieval of sun-induced chlorophyll fluorescence from ground and space. Remote Sens. Environ. 2013, 133, 52–61. [Google Scholar] [CrossRef]

- Meroni, M.; Rossini, M.; Guanter, L.; Alonso, L.; Rascher, U.; Colombo, R.; Moreno, J. Remote sensing of solar-induced chlorophyll fluorescence: Review of methods and applications. Remote Sens. Environ. 2009, 113, 2037–2051. [Google Scholar] [CrossRef]

- Moya, I.; Camenen, L.; Evain, S.; Goulas, Y.; Cerovic, Z.G.; Latouche, G.; Flexas, J.; Ounis, A. A new instrument for passive remote sensing: 1. Measurements of sunlight-induced chlorophyll fluorescence. Remote Sens. Environ. 2004, 91, 186–197. [Google Scholar] [CrossRef]

- Inoue, Y.; Kimball, B.A.; Jackson, R.D.; Pinter, P.J.; Reginato, R.J. Remote estimation of leaf transpiration rate and stomatal resistance based on infrared thermometry. Agric. For. Meteorol. 1990, 51, 21–33. [Google Scholar] [CrossRef]

- Idso, S.; Jackson, R.; Pinter, P., Jr.; Reginato, R.; Hatfield, J. Normalizing the stress-degree-day parameter for environmental variability. Agric. Meteorol. 1981, 24, 45–55. [Google Scholar] [CrossRef]

- Jackson, R.D.; Idso, S.; Reginato, R.; Pinter, P. Canopy temperature as a crop water stress indicator. Water Resour. Res. 1981, 17, 1133–1138. [Google Scholar] [CrossRef]

- Suárez, L.; Zarco-Tejada, P.J.; Sepulcre-Cantó, G.; Pérez-Priego, O.; Miller, J.R.; Jiménez-Muñoz, J.; Sobrino, J. Assessing canopy PRI for water stress detection with diurnal airborne imagery. Remote Sens. Environ. 2008, 112, 560–575. [Google Scholar] [CrossRef]

- Rossini, M.; Fava, F.; Cogliati, S.; Meroni, M.; Marchesi, A.; Panigada, C.; Giardino, C.; Busetto, L.; Migliavacca, M.; Amaducci, S.; et al. Assessing canopy PRI from airborne imagery to map water stress in maize. ISPRS J. Photogramm. Remote Sens. 2013, 86, 168–177. [Google Scholar] [CrossRef]

- Santesteban, L.G.; Di Gennaro, S.F.; Herrero-Langreo, A.; Miranda, C.; Royo, J.B.; Matese, A. High-resolution UAV-based thermal imaging to estimate the instantaneous and seasonal variability of plant water status within a vineyard. Agric. Water Manag. 2017, 183, 49–59. [Google Scholar] [CrossRef]

- Zarco-Tejada, P.J.; Suárez, L.; González-Dugo, V. Spatial resolution effects on chlorophyll fluorescence retrieval in a heterogeneous canopy using hyperspectral imagery and radiative transfer simulation. IEEE Geosci. Remote Sens. Lett. 2013, 10, 937–941. [Google Scholar] [CrossRef]

- Matese, A.; Di Gennaro, S. Practical Applications of a Multisensor UAV Platform Based on Multispectral, Thermal and RGB High Resolution Images in Precision Viticulture. Agriculture 2018, 8, 116. [Google Scholar] [CrossRef]

- Camino, C.; Zarco-Tejada, P.J.; Gonzalez-Dugo, V. Effects of Heterogeneity within Tree Crowns on Airborne-Quantified SIF and the CWSI as Indicators of Water Stress in the Context of Precision Agriculture. Remote Sens. 2018, 10, 604. [Google Scholar] [CrossRef]

- Chapman, S.; Merz, T.; Chan, A.; Jackway, P.; Hrabar, S.; Dreccer, M.; Holland, E.; Zheng, B.; Ling, T.; Jimenez-Berni, J. Pheno-Copter: A Low-Altitude, Autonomous Remote-Sensing Robotic Helicopter for High-Throughput Field-Based Phenotyping. Agronomy 2014, 4, 279. [Google Scholar] [CrossRef]

- Gevaert, C.M.; Suomalainen, J.; Tang, J.; Kooistra, L. Generation of Spectral–Temporal Response Surfaces by Combining Multispectral Satellite and Hyperspectral UAV Imagery for Precision Agriculture Applications. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2015, 8, 3140–3146. [Google Scholar] [CrossRef]

- Zaman-Allah, M.; Vergara, O.; Araus, J.L.; Tarekegne, A.; Magorokosho, C.; Zarco-Tejada, P.J.; Hornero, A.; Albà, A.H.; Das, B.; Craufurd, P.; et al. Unmanned aerial platform-based multi-spectral imaging for field phenotyping of maize. Plant Methods 2015, 11, 35. [Google Scholar] [CrossRef] [PubMed]

- Araus, J.L.; Cairns, J.E. Field high-throughput phenotyping: The new crop breeding frontier. Trends Plant Sci. 2014, 19, 52–61. [Google Scholar] [CrossRef]

- Hilker, T.; Gitelson, A.; Coops, N.C.; Hall, F.G.; Black, T.A. Tracking plant physiological properties from multi-angular tower-based remote sensing. Oecologia 2011, 165, 865–876. [Google Scholar] [CrossRef] [PubMed]

- Yang, G.; Liu, J.; Zhao, C.; Li, Z.; Huang, Y.; Yu, H.; Xu, B.; Yang, X.; Zhu, D.; Zhang, X.; et al. Unmanned Aerial Vehicle Remote Sensing for Field-Based Crop Phenotyping: Current Status and Perspectives. Front. Plant Sci. 2017, 8, 1111. [Google Scholar] [CrossRef] [PubMed]

- Gago, J.; Douthe, C.; Coopman, R.E.; Gallego, P.P.; Ribas-Carbo, M.; Flexas, J.; Escalona, J.; Medrano, H. UAVs challenge to assess water stress for sustainable agriculture. Agric. Water Manag. 2015, 153, 9–19. [Google Scholar] [CrossRef]

- Lelong, C.; Burger, P.; Jubelin, G.; Roux, B.; Labbé, S.; Baret, F. Assessment of Unmanned Aerial Vehicles Imagery for Quantitative Monitoring of Wheat Crop in Small Plots. Sensors 2008, 8, 3557. [Google Scholar] [CrossRef] [PubMed]

- Maes, W.H.; Steppe, K. Perspectives for Remote Sensing with Unmanned Aerial Vehicles in Precision Agriculture. Trends Plant Sci. 2018, 24, 152–164. [Google Scholar] [CrossRef] [PubMed]

- Shi, Y.; Thomasson, J.A.; Murray, S.C.; Pugh, N.A.; Rooney, W.L.; Shafian, S.; Rajan, N.; Rouze, G.; Morgan, C.L.S.; Neely, H.L.; et al. Unmanned Aerial Vehicles for High-Throughput Phenotyping and Agronomic Research. PLoS ONE 2016, 11, e0159781. [Google Scholar] [CrossRef]

- Barbedo, J.G.A. A Review on the Use of Unmanned Aerial Vehicles and Imaging Sensors for Monitoring and Assessing Plant Stresses. Drones 2019, 3, 40. [Google Scholar] [CrossRef]

- Jang, G.; Kim, J.; Yu, J.-K.; Kim, H.-J.; Kim, Y.; Kim, D.-W.; Kim, K.-H.; Lee, C.W.; Chung, Y.S. Review: Cost-Effective Unmanned Aerial Vehicle (UAV) Platform for Field Plant Breeding Application. Remote Sens. 2020, 12, 998. [Google Scholar] [CrossRef]

- Sankaran, S.; Khot, L.R.; Espinoza, C.Z.; Jarolmasjed, S.; Sathuvalli, V.R.; Vandemark, G.J.; Miklas, P.N.; Carter, A.H.; Pumphrey, M.O.; Knowles, N.R. Low-altitude, high-resolution aerial imaging systems for row and field crop phenotyping: A review. Eur. J. Agron. 2015, 70, 112–123. [Google Scholar] [CrossRef]

- Guan, S.; Fukami, K.; Matsunaka, H.; Okami, M.; Tanaka, R.; Nakano, H.; Sakai, T.; Nakano, K.; Ohdan, H.; Takahashi, K. Assessing Correlation of High-Resolution NDVI with Fertilizer Application Level and Yield of Rice and Wheat Crops Using Small UAVs. Remote Sens. 2019, 11, 112. [Google Scholar] [CrossRef]

- Yeom, J.; Jung, J.; Chang, A.; Maeda, M.; Landivar, J. Automated Open Cotton Boll Detection for Yield Estimation Using Unmanned Aircraft Vehicle (UAV) Data. Remote Sens. 2018, 10, 1895. [Google Scholar] [CrossRef]

- Rasmussen, J.; Nielsen, J.; Garcia-Ruiz, F.; Christensen, S.; Streibig, J.C. Potential uses of small unmanned aircraft systems (UAS) in weed research. Weed Res. 2013, 53, 242–248. [Google Scholar] [CrossRef]

- Gao, J.; Liao, W.; Nuyttens, D.; Lootens, P.; Vangeyte, J.; Pižurica, A.; He, Y.; Pieters, J.G. Fusion of pixel and object-based features for weed mapping using unmanned aerial vehicle imagery. Int. J. Appl. Earth Obs. Geoinf. 2018, 67, 43–53. [Google Scholar] [CrossRef]

- Jay, S.; Baret, F.; Dutartre, D.; Malatesta, G.; Héno, S.; Comar, A.; Weiss, M.; Maupas, F. Exploiting the centimeter resolution of UAV multispectral imagery to improve remote-sensing estimates of canopy structure and biochemistry in sugar beet crops. Remote Sens. Environ. 2019, 231, 110898. [Google Scholar] [CrossRef]

- Zarco-Tejada, P.J.; Guillén-Climent, M.L.; Hernández-Clemente, R.; Catalina, A.; González, M.R.; Martín, P. Estimating leaf carotenoid content in vineyards using high resolution hyperspectral imagery acquired from an unmanned aerial vehicle (UAV). Agric. For. Meteorol. 2013, 171–172, 281–294. [Google Scholar] [CrossRef]

- Berni, J.A.J.; Zarco-Tejada, P.J.; Sepulcre-Cantó, G.; Fereres, E.; Villalobos, F. Mapping canopy conductance and CWSI in olive orchards using high resolution thermal remote sensing imagery. Remote Sens. Environ. 2009, 113, 2380–2388. [Google Scholar] [CrossRef]

- Sepúlveda-Reyes, D.; Ingram, B.; Bardeen, M.; Zúñiga, M.; Ortega-Farías, S.; Poblete-Echeverría, C. Selecting canopy zones and thresholding approaches to assess grapevine water status by using aerial and ground-based thermal imaging. Remote Sens. 2016, 8, 822. [Google Scholar] [CrossRef]

- Reinert, S.; Bögelein, R.; Thomas, F.M. Use of thermal imaging to determine leaf conductance along a canopy gradient in European beech (Fagus sylvatica). Tree Physiol. 2012, 32, 294–302. [Google Scholar] [CrossRef]

- Pou, A.; Diago, M.P.; Medrano, H.; Baluja, J.; Tardaguila, J. Validation of thermal indices for water status identification in grapevine. Agric. Water Manag. 2014, 134, 60–72. [Google Scholar] [CrossRef]

- Baluja, J.; Diago, M.P.; Balda, P.; Zorer, R.; Meggio, F.; Morales, F.; Tardaguila, J. Assessment of vineyard water status variability by thermal and multispectral imagery using an unmanned aerial vehicle (UAV). Irrig. Sci. 2012, 30, 511–522. [Google Scholar] [CrossRef]

- Möller, M.; Alchanatis, V.; Cohen, Y.; Meron, M.; Tsipris, J.; Naor, A.; Ostrovsky, V.; Sprintsin, M.; Cohen, S. Use of thermal and visible imagery for estimating crop water status of irrigated grapevine. J. Exp. Bot. 2006, 58, 827–838. [Google Scholar] [CrossRef] [PubMed]

- Maimaitiyiming, M.; Ghulam, A.; Bozzolo, A.; Wilkins, J.L.; Kwasniewski, M.T. Early Detection of Plant Physiological Responses to Different Levels of Water Stress Using Reflectance Spectroscopy. Remote Sens. 2017, 9, 745. [Google Scholar] [CrossRef]

- Maimaitiyiming, M.; Sagan, V.; Sidike, P.; Kwasniewski, M.T. Dual Activation Function-Based Extreme Learning Machine (ELM) for Estimating Grapevine Berry Yield and Quality. Remote Sens. 2019, 11, 740. [Google Scholar] [CrossRef]

- Anderson, D.B. Relative humidity or vapor pressure deficit. Ecology 1936, 17, 277–282. [Google Scholar] [CrossRef]

- Struthers, R.; Ivanova, A.; Tits, L.; Swennen, R.; Coppin, P. Thermal infrared imaging of the temporal variability in stomatal conductance for fruit trees. Int. J. Appl. Earth Obs. Geoinf. 2015, 39, 9–17. [Google Scholar] [CrossRef]

- Flexas, J.; Briantais, J.-M.; Cerovic, Z.; Medrano, H.; Moya, I. Steady-State and Maximum Chlorophyll Fluorescence Responses to Water Stress in Grapevine Leaves: A New Remote Sensing System. Remote Sens. Environ. 2000, 73, 283–297. [Google Scholar] [CrossRef]

- Papageorgiou, G.C. Chlorophyll a Fluorescence: A Signature of Photosynthesis; Springer Science & Business Media: Berlin/Heidelberg, Germany, 2007; Volume 19. [Google Scholar]

- Zarco-Tejada, P.J.; Catalina, A.; González, M.; Martín, P. Relationships between net photosynthesis and steady-state chlorophyll fluorescence retrieved from airborne hyperspectral imagery. Remote Sens. Environ. 2013, 136, 247–258. [Google Scholar] [CrossRef]

- Rahmati, M.; Mirás-Avalos, J.M.; Valsesia, P.; Lescourret, F.; Génard, M.; Davarynejad, G.H.; Bannayan, M.; Azizi, M.; Vercambre, G. Disentangling the effects of water stress on carbon acquisition, vegetative growth, and fruit quality of peach trees by means of the QualiTree model. Front. Plant Sci. 2018, 9, 3. [Google Scholar] [CrossRef]

- Decagon Devices. Leaf Porometer—Operator’s Manual: Version: October 17, 2016; Decagon Devices: Pullman, WA, USA, 2016. [Google Scholar]

- Sagan, V.; Maimaitijiang, M.; Sidike, P.; Eblimit, K.; Peterson, K.T.; Hartling, S.; Esposito, F.; Khanal, K.; Newcomb, M.; Pauli, D.; et al. UAV-Based High Resolution Thermal Imaging for Vegetation Monitoring, and Plant Phenotyping Using ICI 8640 P, FLIR Vue Pro R 640, and thermoMap Cameras. Remote Sens. 2019, 11, 330. [Google Scholar] [CrossRef]

- Maimaitijiang, M.; Sagan, V.; Sidike, P.; Hartling, S.; Esposito, F.; Fritschi, F.B. Soybean yield prediction from UAV using multimodal data fusion and deep learning. Remote Sens. Environ. 2020, 237, 111599. [Google Scholar] [CrossRef]

- Conel, J.E.; Green, R.O.; Vane, G.; Bruegge, C.J.; Alley, R.E.; Curtiss, B.J. AIS-2 radiometry and a comparison of methods for the recovery of ground reflectance. In Proceedings of the Third Airborne Imaging Spectrometer Data Analysis Workshop, Pasadena, CA, USA, 8–10 April 1985. [Google Scholar]

- Raczko, E.; Zagajewski, B. Comparison of support vector machine, random forest and neural network classifiers for tree species classification on airborne hyperspectral APEX images. Eur. J. Remote Sens. 2017, 50, 144–154. [Google Scholar] [CrossRef]

- Nevalainen, O.; Honkavaara, E.; Tuominen, S.; Viljanen, N.; Hakala, T.; Yu, X.; Hyyppä, J.; Saari, H.; Pölönen, I.; Imai, N. Individual tree detection and classification with UAV-based photogrammetric point clouds and hyperspectral imaging. Remote Sens. 2017, 9, 185. [Google Scholar] [CrossRef]

- Sidike, P.; Sagan, V.; Maimaitijiang, M.; Maimaitiyiming, M.; Shakoor, N.; Burken, J.; Mockler, T.; Fritschi, F.B. dPEN: Deep Progressively Expanded Network for mapping heterogeneous agricultural landscape using WorldView-3 satellite imagery. Remote Sens. Environ. 2019, 221, 756–772. [Google Scholar] [CrossRef]

- Essa, A.; Sidike, P.; Asari, V. Volumetric Directional Pattern for Spatial Feature Extraction in Hyperspectral Imagery. IEEE Geosci. Remote Sens. Lett. 2017, 14, 1056–1060. [Google Scholar] [CrossRef]

- Sumsion, G.R.; Bradshaw, M.S.; Hill, K.T.; Pinto, L.D.G.; Piccolo, S.R. Remote sensing tree classification with a multilayer perceptron. PeerJ 2019, 7, e6101. [Google Scholar] [CrossRef]

- Canny, J. A computational approach to edge detection. IEEE Trans. Pattern Anal. Mach. Intell. 1986, 8, 679–698. [Google Scholar] [CrossRef]

- Bian, J.; Zhang, Z.; Chen, J.; Chen, H.; Cui, C.; Li, X.; Chen, S.; Fu, Q. Simplified Evaluation of Cotton Water Stress Using High Resolution Unmanned Aerial Vehicle Thermal Imagery. Remote Sens. 2019, 11, 267. [Google Scholar] [CrossRef]

- Park, S.; Ryu, D.; Fuentes, S.; Chung, H.; Hernández-Montes, E.; O’Connell, M. Adaptive Estimation of Crop Water Stress in Nectarine and Peach Orchards Using High-Resolution Imagery from an Unmanned Aerial Vehicle (UAV). Remote Sens. 2017, 9, 828. [Google Scholar] [CrossRef]

- Takala, T.L.H.; Mõttus, M. Spatial variation of canopy PRI with shadow fraction caused by leaf-level irradiation conditions. Remote Sens. Environ. 2016, 182, 99–112. [Google Scholar] [CrossRef]

- Gruninger, J.H.; Ratkowski, A.J.; Hoke, M.L. The sequential maximum angle convex cone (SMACC) endmember model. In Algorithms and Technologies for Multispectral, Hyperspectral, and Ultraspectral Imagery X, Proceedings of SPIE; Orlando, FL, USA, 12–15 April 2004, Shen, S.S., Lewis, P.E., Eds.; SPIE: Bellingham, WA, USA, 2004; Volume 5425, pp. 1–14. [Google Scholar]

- MacQueen, J. Some methods for classification and analysis of multivariate observations. In Proceedings of the Fifth Berkeley Symposium on Mathematical Statistics and Probability, Berkeley, CA, USA, 21 June–18 July 1967; Volume 1, pp. 281–297. [Google Scholar]

- Plascyk, J.A.; Gabriel, F.C. The Fraunhofer line discriminator MKII-an airborne instrument for precise and standardized ecological luminescence measurement. IEEE Trans. Instrum. Meas. 1975, 24, 306–313. [Google Scholar] [CrossRef]

- Camino, C.; Gonzalez-Dugo, V.; Hernandez, P.; Zarco-Tejada, P.J. Radiative transfer Vcmax estimation from hyperspectral imagery and SIF retrievals to assess photosynthetic performance in rainfed and irrigated plant phenotyping trials. Remote Sens. Environ. 2019, 111186. [Google Scholar] [CrossRef]

- Damm, A.; Erler, A.; Hillen, W.; Meroni, M.; Schaepman, M.E.; Verhoef, W.; Rascher, U. Modeling the impact of spectral sensor configurations on the FLD retrieval accuracy of sun-induced chlorophyll fluorescence. Remote Sens. Environ. 2011, 115, 1882–1892. [Google Scholar] [CrossRef]

- Zarco-Tejada, P.J.; Miller, J.R.; Noland, T.L.; Mohammed, G.H.; Sampson, P.H. Scaling-up and model inversion methods with narrowband optical indices for chlorophyll content estimation in closed forest canopies with hyperspectral data. IEEE Trans. Geosci. Remote Sens. 2001, 39, 1491–1507. [Google Scholar] [CrossRef]

- Rouse, J.W., Jr.; Haas, R.; Schell, J.; Deering, D. Monitoring vegetation systems in the Great Plains with ERTS. In Proceedings of the 3rd ERTS Symposium, Washington, DC, USA, 10–14 December 1973; Volume 1, pp. 309–317. [Google Scholar]

- Jordan, C.F. Derivation of Leaf-Area Index from Quality of Light on the Forest Floor. Ecology 1969, 50, 663–666. [Google Scholar] [CrossRef]

- Peñuelas, J.; Filella, I.; Biel, C.; Serrano, L.; Save, R. The reflectance at the 950–970 nm region as an indicator of plant water status. Int. J. Remote Sens. 1993, 14, 1887–1905. [Google Scholar] [CrossRef]

- Jones, H. Plants and Microclimate: A Quantitative Approach to Environmental Plant Physiology; Cambridge University Press: Cambridge, UK, 1992. [Google Scholar]

- Alchanatis, V.; Cohen, Y.; Cohen, S.; Moller, M.; Sprinstin, M.; Meron, M.; Tsipris, J.; Saranga, Y.; Sela, E. Evaluation of different approaches for estimating and mapping crop water status in cotton with thermal imaging. Precis. Agric. 2010, 11, 27–41. [Google Scholar] [CrossRef]

- Leinonen, I.; Jones, H.G. Combining thermal and visible imagery for estimating canopy temperature and identifying plant stress. J. Exp. Bot. 2004, 55, 1423–1431. [Google Scholar] [CrossRef]

- Romero-Trigueros, C.; Bayona Gambín, J.M.; Nortes Tortosa, P.A.; Alarcón Cabañero, J.J.; Nicolás Nicolás, E. Determination of Crop Water Stress Index by Infrared Thermometry in Grapefruit Trees Irrigated with Saline Reclaimed Water Combined with Deficit Irrigation. Remote Sens. 2019, 11, 757. [Google Scholar] [CrossRef]

- Meron, M.; Sprintsin, M.; Tsipris, J.; Alchanatis, V.; Cohen, Y. Foliage temperature extraction from thermal imagery for crop water stress determination. Precis. Agric. 2013, 14, 467–477. [Google Scholar] [CrossRef]

- Peterson, K.T.; Sagan, V.; Sidike, P.; Hasenmueller, E.A.; Sloan, J.J.; Knouft, J.H. Machine Learning-Based Ensemble Prediction of Water-Quality Variables Using Feature-Level and Decision-Level Fusion with Proximal Remote Sensing. Photogramm. Eng. Remote Sens. 2019, 85, 269–280. [Google Scholar] [CrossRef]

- Ghulam, A.; Kusky, T.M.; Teyip, T.; Qin, Q. Sub-canopy soil moisture modeling in n-dimensional spectral feature space. Photogramm. Eng. Remote Sens. 2011, 77, 149–156. [Google Scholar] [CrossRef]

- Zarco-Tejada, P.J.; Berni, J.A.; Suárez, L.; Sepulcre-Cantó, G.; Morales, F.; Miller, J. Imaging chlorophyll fluorescence with an airborne narrow-band multispectral camera for vegetation stress detection. Remote Sens. Environ. 2009, 113, 1262–1275. [Google Scholar] [CrossRef]

- Gerhards, M.; Schlerf, M.; Rascher, U.; Udelhoven, T.; Juszczak, R.; Alberti, G.; Miglietta, F.; Inoue, Y. Analysis of Airborne Optical and Thermal Imagery for Detection of Water Stress Symptoms. Remote Sens. 2018, 10, 1139. [Google Scholar] [CrossRef]

- Zarco-Tejada, P.J.; González-Dugo, V.; Williams, L.E.; Suárez, L.; Berni, J.A.J.; Goldhamer, D.; Fereres, E. A PRI-based water stress index combining structural and chlorophyll effects: Assessment using diurnal narrow-band airborne imagery and the CWSI thermal index. Remote Sens. Environ. 2013, 138, 38–50. [Google Scholar] [CrossRef]

- Hall, F.G.; Hilker, T.; Coops, N.C.; Lyapustin, A.; Huemmrich, K.F.; Middleton, E.; Margolis, H.; Drolet, G.; Black, T.A. Multi-angle remote sensing of forest light use efficiency by observing PRI variation with canopy shadow fraction. Remote Sens. Environ. 2008, 112, 3201–3211. [Google Scholar] [CrossRef]

- Hilker, T.; Hall, F.G.; Coops, N.C.; Lyapustin, A.; Wang, Y.; Nesic, Z.; Grant, N.; Black, T.A.; Wulder, M.A.; Kljun, N.; et al. Remote sensing of photosynthetic light-use efficiency across two forested biomes: Spatial scaling. Remote Sens. Environ. 2010, 114, 2863–2874. [Google Scholar] [CrossRef]

- Zhang, Q.; Chen, J.M.; Ju, W.; Wang, H.; Qiu, F.; Yang, F.; Fan, W.; Huang, Q.; Wang, Y.-P.; Feng, Y.; et al. Improving the ability of the photochemical reflectance index to track canopy light use efficiency through differentiating sunlit and shaded leaves. Remote Sens. Environ. 2017, 194, 1–15. [Google Scholar] [CrossRef]

- Sepulcre-Cantó, G.; Zarco-Tejada, P.; Jiménez-Muñoz, J.; Sobrino, J.; de Miguel, E.; Villalobos, F. Within-field thermal variability detection as function of water stress in Olea europaea L. orchards with high spatial remote sensing imagery. Agric. For. Meteorol. 2006, 136, 31–44. [Google Scholar] [CrossRef]

- Belfiore, N.; Vinti, R.; Lovat, L.; Chitarra, W.; Tomasi, D.; de Bei, R.; Meggio, F.; Gaiotti, F. Infrared Thermography to Estimate Vine Water Status: Optimizing Canopy Measurements and Thermal Indices for the Varieties Merlot and Moscato in Northern Italy. Agronomy 2019, 9, 821. [Google Scholar] [CrossRef]

- Bellvert, J.; Marsal, J.; Girona, J.; Zarco-Tejada, P.J. Seasonal evolution of crop water stress index in grapevine varieties determined with high-resolution remote sensing thermal imagery. Irrig. Sci. 2015, 33, 81–93. [Google Scholar] [CrossRef]

| Flight Dates | Flight Time | Aerial Platforms | Sensors | Ground Sampling Distance (cm) | Growing Stages | Field Sampling Instruments | Total Number of Vines Monitored |
|---|---|---|---|---|---|---|---|
| 09/15/2017 | Midmorning, midday, afternoon | DJI S1000 | ICI (thermal) | 4 | Preharvest | Fluorometer, porometer | 54 |
| 08/01/2018 | Midmorning, midday, midafternoon, afternoon | DJI M600 | FLIR (thermal), Headwall (hyperspectral) | 5 (hyperspectral), 9 (thermal) | Veraison | Li-6400XT | 48 |
| 09/19/2018 | Morning, midday | DJI S1000 | ICI (thermal) | 4 | Preharvest | Li-6400XT | 24 |

| Hyperspectral Image Retrievals | Acronym | Equation | References |
|---|---|---|---|
| Xanthophyll | | | |
| Photochemical reflectance index | PRI | (R570 − R531)/(R570 + R531) | [25] |
| Chlorophyll | | | |
| Red Edge ratio index | RE | R750/R710 | [90] |
| Structure | | | |
| Normalized difference vegetation index | NDVI | (R800 − R670)/(R800 + R670) | [91] |
| Simple Ratio | SR | R800/R670 | [92] |
| Water content | | | |
| Water index | WI | R900/R970 | [93] |
| Chlorophyll fluorescence | | | |
| Sun-induced chlorophyll fluorescence | SIF | | [87] |
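The reflectance-based index definitions in the table above can be sketched in Python as follows. This is a minimal illustration, not the authors' processing chain: it assumes a reflectance cube shaped (rows, columns, bands) with a matching list of band-centre wavelengths in nm, and it picks the band nearest to each nominal wavelength, which is an assumption the table does not state. The function names `nearest_band` and `spectral_indices` are hypothetical. SIF is omitted because the table gives no closed-form equation for it.

```python
import numpy as np


def nearest_band(cube, wavelengths, target_nm):
    """Return the band whose centre wavelength is closest to target_nm.

    Nearest-band selection is an assumption made for this sketch.
    """
    wl = np.asarray(wavelengths, dtype=float)
    idx = int(np.argmin(np.abs(wl - target_nm)))
    return np.asarray(cube, dtype=float)[..., idx]


def spectral_indices(cube, wavelengths):
    """Compute PRI, RE, NDVI, SR and WI per pixel from a reflectance cube."""
    def r(nm):
        return nearest_band(cube, wavelengths, nm)

    r531, r570 = r(531), r(570)
    r670, r800 = r(670), r(800)
    r710, r750 = r(710), r(750)
    r900, r970 = r(900), r(970)

    # Equations follow the table: Rxxx is reflectance at xxx nm.
    # Zero denominators are not handled here; mask such pixels beforehand.
    return {
        "PRI": (r570 - r531) / (r570 + r531),
        "RE": r750 / r710,
        "NDVI": (r800 - r670) / (r800 + r670),
        "SR": r800 / r670,
        "WI": r900 / r970,
    }
```

Each returned array has the spatial shape of the input cube, so the index maps can be masked or averaged per canopy zone afterwards.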

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Maimaitiyiming, M.; Sagan, V.; Sidike, P.; Maimaitijiang, M.; Miller, A.J.; Kwasniewski, M. Leveraging Very-High Spatial Resolution Hyperspectral and Thermal UAV Imageries for Characterizing Diurnal Indicators of Grapevine Physiology. Remote Sens. 2020, 12, 3216. https://doi.org/10.3390/rs12193216
